➢ induction

○ base case: prove H(1) directly

○ inductive step: for general k ≥ 2, show that if H(k-1) is true, then H(k) is true

○ the assumption that H(k-1) is true is the inductive hypothesis (IH)

○ example: to prove H(100), first use the base case to prove H(1); then, because H(1) is true, H(2) is true via the inductive step, and so on until H(100)

➢ selection sort

○ find the minimum of the unsorted part, swap it into place, and repeat on the rest; n-1 passes in total

○ $O(n^2)$

SelectionSort(A[1..n]):
    For j = 1,...,n-1:
        min_pos = j
        For k = j+1,...,n:
            If (A[k] < A[min_pos]):
                min_pos = k
        Swap A[min_pos] and A[j]
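A minimal Python sketch of the SelectionSort pseudocode above. It uses 0-based indices instead of the notes' 1-based A[1..n] and sorts the list in place; the name `selection_sort` is our own.

```python
def selection_sort(a: list) -> list:
    """Sort a in place by repeatedly swapping the minimum of a[j:] into position j."""
    n = len(a)
    for j in range(n - 1):          # n-1 passes
        min_pos = j
        for k in range(j + 1, n):   # scan the unsorted suffix for its minimum
            if a[k] < a[min_pos]:
                min_pos = k
        a[min_pos], a[j] = a[j], a[min_pos]
    return a
```

For example, `selection_sort([5, 2, 4, 1, 3])` returns `[1, 2, 3, 4, 5]`. The two nested loops always run in full, which is why the cost is $O(n^2)$ even on sorted input.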

➢ insertion sort

○ for j = 2,...,n, insert A[j] into place among A[1..j-1] so that A[1..j] is sorted; n-1 insertions in total

○ $O(n^2)$

InsertionSort(A[1..n]):
    For j = 2,...,n:
        For k = j-1 down to 1:
            If (A[k] > A[k+1]):
                Swap A[k] and A[k+1]
            Else: break from the inner for-loop
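A minimal Python sketch of the InsertionSort pseudocode, again 0-based and in place; `insertion_sort` is our own name.

```python
def insertion_sort(a: list) -> list:
    """Sort a in place; after processing index j, the prefix a[:j+1] is sorted."""
    for j in range(1, len(a)):
        # Swap the new element leftward until it is no smaller than its left neighbour.
        for k in range(j - 1, -1, -1):
            if a[k] > a[k + 1]:
                a[k], a[k + 1] = a[k + 1], a[k]
            else:
                break  # the rest of the prefix is already in order
    return a
```

The early `break` is what makes insertion sort run in $O(n)$ on already-sorted input, while the worst case (reverse-sorted input) stays $O(n^2)$.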

➢ divide and conquer algorithms

○ split problem into smaller subproblems

○ recursively solve each subproblem

○ combine the solutions to the subproblems

➢ mergesort

○ merge runs in O(n)

○ mergesort runs in $O(n \log n)$

Merge(L, R): // L and R are sorted
    Let n ← len(L) + len(R)
    Let A be an array of length n
    j ← 1, k ← 1
    For i = 1..n:
        If (j > len(L)):            // L is exhausted
            A[i] ← R[k], k ← k + 1
        ElseIf (k > len(R)):        // R is exhausted
            A[i] ← L[j], j ← j + 1
        ElseIf (L[j] <= R[k]):
            A[i] ← L[j], j ← j + 1
        Else:
            A[i] ← R[k], k ← k + 1
    Return A

MergeSort(A):
    If (len(A) ≤ 1): Return A
    Let n ← len(A), m ← ⌊n/2⌋
    Let L ← A[1:m], R ← A[m+1:n]
    Let L ← MergeSort(L)
    Let R ← MergeSort(R)
    Let A ← Merge(L, R)
    Return A
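A minimal Python sketch of Merge and MergeSort as written above, 0-based and returning new lists rather than filling a preallocated array; the names `merge` and `merge_sort` are our own.

```python
def merge(left: list, right: list) -> list:
    """Merge two sorted lists into one sorted list in O(len(left) + len(right))."""
    out = []
    j = k = 0
    while j < len(left) or k < len(right):
        if j == len(left):              # left is exhausted
            out.append(right[k])
            k += 1
        elif k == len(right):           # right is exhausted
            out.append(left[j])
            j += 1
        elif left[j] <= right[k]:       # <= keeps equal elements in their original order
            out.append(left[j])
            j += 1
        else:
            out.append(right[k])
            k += 1
    return out


def merge_sort(a: list) -> list:
    """Return a sorted copy of a; T(n) = 2T(n/2) + O(n) = O(n log n)."""
    if len(a) <= 1:
        return a
    m = len(a) // 2
    return merge(merge_sort(a[:m]), merge_sort(a[m:]))
```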

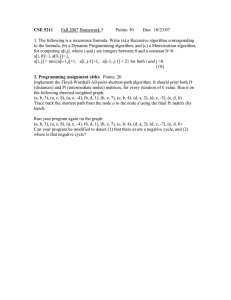

Level | # of subproblems
0     | 1
1     | 2
2     | 4
i     | 2^i

➢ “big-O” notation

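○ $f(n) = O(g(n))$ if there exist $c \in (0, \infty)$ and $n_0 \in \mathbb{N}$ such that $f(n) \le c \cdot g(n)$ for every $n \ge n_0$

○ equivalently, when $g(n) \ge 1$ for all $n$: there exist $a, b \ge 0$ such that $f(n) \le a \cdot g(n) + b$ for all $n \in \mathbb{N}$

○ if $\lim_{n \to \infty} f(n)/g(n)$ exists and is finite, then $f(n) = O(g(n))$

○ proving from first principles: find explicit $c$ and $n_0$

○ constant factors can be ignored

○ lower-order terms can be dropped

○ smaller exponents are big-O of larger exponents: $n^a = O(n^b)$ for $a \le b$

○ any logarithm is big-O of any polynomial: $\log n = O(n^{\varepsilon})$ for every $\varepsilon > 0$

○ any polynomial is big-O of any exponential: $n^d = O(r^n)$ for every $d$ and every $r > 1$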
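As a worked example of proving a bound from first principles (the function $3n^2 + 5n$ is our own illustration, not from the notes), one valid choice of constants is $c = 4$ and $n_0 = 5$:

```latex
\begin{aligned}
3n^2 + 5n &\le 3n^2 + n \cdot n && \text{since } 5 \le n \text{ for all } n \ge 5 \\
          &= 4n^2,
\end{aligned}
\qquad \text{so } 3n^2 + 5n = O(n^2) \text{ with } c = 4,\ n_0 = 5.
```

Any larger $c$ or $n_0$ also works; the definition only asks for one pair to exist.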

➢ big-omega notation

○ $f(n) = \Omega(g(n))$ if there exist $c \in (0, \infty)$ and $n_0 \in \mathbb{N}$ such that $f(n) \ge c \cdot g(n)$ for every $n \ge n_0$

➢ big-theta notation

○ $f(n) = \Theta(g(n))$ if there exist $c_1 \le c_2 \in (0, \infty)$ and $n_0 \in \mathbb{N}$ such that $c_1 \cdot g(n) \le f(n) \le c_2 \cdot g(n)$ for every $n \ge n_0$

Karatsuba(u, v, n):
    If (n = 1): Return u × v
    Let m ← ⌈n/2⌉
    Write u = 10^m × a + b, v = 10^m × c + d
    Let e ← Karatsuba(a, c, m)
        f ← Karatsuba(b, d, m)
        g ← Karatsuba(b-a, c-d, m)
    Return 10^(2m) × e + 10^m × (e + f + g) + f
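A minimal Python sketch of the Karatsuba pseudocode. Two assumptions differ from the notes: the digit count n is derived from the inputs instead of being passed in, and signs are stripped up front because the `b - a` and `c - d` calls can receive negative arguments. The name `karatsuba` is our own.

```python
def karatsuba(u: int, v: int) -> int:
    """Multiply two integers with three recursive half-size products."""
    sign = -1 if (u < 0) != (v < 0) else 1
    u, v = abs(u), abs(v)
    n = max(len(str(u)), len(str(v)))   # number of decimal digits
    if n == 1:
        return sign * u * v
    m = (n + 1) // 2                    # m = ceil(n/2)
    base = 10 ** m
    a, b = divmod(u, base)              # u = 10^m * a + b
    c, d = divmod(v, base)              # v = 10^m * c + d
    e = karatsuba(a, c)                 # ac
    f = karatsuba(b, d)                 # bd
    g = karatsuba(b - a, c - d)         # (b-a)(c-d) = bc + ad - ac - bd
    # e + f + g = ad + bc, the middle coefficient
    return sign * (base * base * e + base * (e + f + g) + f)
```

For example, `karatsuba(1234, 5678)` equals `1234 * 5678`. Three recursive calls on inputs of about half the digits give $T(n) = 3T(n/2) + O(n)$.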

➢ running time of karatsuba

○ $T(n) = 3T(n/2) + O(n)$, so $T(n) = O(n^{\log_2 3}) \approx O(n^{1.59})$

➢ solving recurrences

○ write the recurrence in a form that you can apply to different problem sizes

○ build the recursion tree and compare the work done at each level:

↳ same at each level → T(n) is work-per-level times number-of-levels

↳ decreasing → examine the nature of the series (geometric, arithmetic)

↳ increasing → examine the nature of the series, paying attention to the contribution of the leaves, which could dominate

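➢ master theorem

○ $T(n) = a \cdot T(n/b) + C n^d$

○ $a/b^d > 1$ → $T(n) = \Theta(n^{\log_b a})$

○ $a/b^d = 1$ → $T(n) = \Theta(n^d \log n)$

○ $a/b^d < 1$ → $T(n) = \Theta(n^d)$

○ general form $T(n) = a \cdot T(n/b) + f(n)$, for some constant $\varepsilon > 0$:

↳ $f(n) = O(n^{\log_b a - \varepsilon})$ → $T(n) = \Theta(n^{\log_b a})$

↳ $f(n) = \Theta(n^{\log_b a})$ → $T(n) = \Theta(f(n) \log n)$

↳ $f(n) = \Omega(n^{\log_b a + \varepsilon})$ and $a \cdot f(n/b) \le c \cdot f(n)$ for some constant $c < 1$ and all large $n$ → $T(n) = \Theta(f(n))$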
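A small hypothetical helper (not from the notes) that applies the three simplified cases for $T(n) = a \cdot T(n/b) + C n^d$, assuming $a \ge 1$, $b > 1$, $d \ge 0$. It compares $a/b^d$ with 1 using `math.isclose` rather than exact float equality and prints the exponent $\log_b a$ to three decimals.

```python
import math


def master_theorem(a: float, b: float, d: float) -> str:
    """Theta bound for T(n) = a*T(n/b) + C*n^d (simplified master theorem)."""
    ratio = a / b ** d
    if math.isclose(ratio, 1.0):        # a/b^d = 1: every level does the same work
        return f"Theta(n^{d} log n)"
    if ratio > 1:                       # a/b^d > 1: the leaves dominate
        return f"Theta(n^{math.log(a, b):.3f})"
    return f"Theta(n^{d})"              # a/b^d < 1: the root dominates
```

With this sketch, mergesort (`master_theorem(2, 2, 1)`) gives `"Theta(n^1 log n)"`, binary search (`master_theorem(1, 2, 0)`) gives `"Theta(n^0 log n)"`, and integer multiplication (`master_theorem(3, 2, 1)`) gives `"Theta(n^1.585)"`, matching $n^{\log_2 3}$.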

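(figure: recursion tree for $T(n) = T(n/5) + T(7n/10) + Cn$; work at level 0 is $Cn$, level 1 is $C(7n/10 + 2n/10) = C(9n/10)$, level 2 (subproblems $49n/100$, $14n/100$, $14n/100$, $4n/100$) is $C(81n/100)$, and level $i$ is $C(9/10)^i n$; the longest path, of size $(7/10)^i n$ at level $i$, has depth $\log_{10/7} n$)

Esha Pandya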

➢ orders of growth

○ $\lg n,\ n^{1/2},\ n,\ n \log n,\ n^2,\ n^3,\ 2^n,\ n!$ (from slowest- to fastest-growing)

➢ selection

○ take an element of the array as the pivot

○ partition the array into two parts around this element (compare each element of A to the pivot)

○ recurse in the appropriate part

○ with the median-of-medians pivot: $T(n) = O(n) + T(n/5) + T(7n/10)$, $T(1) = O(1)$

○ runtime → $O(n)$

MOMSelect(A[1:n], k):
    If (n ≤ 25): sort A and return A[k]
    Let p ← MOM(A)
    Partition A around the pivot p; let r be p's position afterwards, so p = A[r]
    If (k = r): return A[r]
    ElseIf (k < r): return MOMSelect(A[1:r-1], k)
    Else (k > r): return MOMSelect(A[r+1:n], k-r)

MOM(A[1:n]):
    Let m ← ⌈n/5⌉
    For i = 1,...,m:
        Meds[i] ← median{A[5i-4], A[5i-3], ..., A[min(5i, n)]}   // the last group may have fewer than 5 elements
    Let p ← MOMSelect(Meds[1:m], ⌈m/2⌉)
    Return p
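A minimal Python sketch of MOMSelect and MOM, keeping the notes' 1-indexed rank k. One deliberate swap: instead of an in-place partition with a pivot index r, it uses a three-way split into `less`, `equal`, `greater`, which also handles repeated values. The names `mom_select` and `median_of_medians` are our own.

```python
def mom_select(a: list, k: int):
    """Return the k-th smallest element of a (k is 1-indexed) in O(n) worst-case time."""
    if len(a) <= 25:
        return sorted(a)[k - 1]
    p = median_of_medians(a)
    # Three-way partition around the pivot (handles duplicates of p).
    less = [x for x in a if x < p]
    equal = [x for x in a if x == p]
    greater = [x for x in a if x > p]
    if k <= len(less):
        return mom_select(less, k)
    if k <= len(less) + len(equal):
        return p
    return mom_select(greater, k - len(less) - len(equal))


def median_of_medians(a: list):
    """MOM: median of the medians of groups of 5 (the last group may be smaller)."""
    groups = [sorted(a[i:i + 5]) for i in range(0, len(a), 5)]
    meds = [g[(len(g) - 1) // 2] for g in groups]
    return mom_select(meds, (len(meds) + 1) // 2)  # rank ceil(m/2)
```

Because the pivot is always an element of `a`, both recursive calls shrink the input, and the median-of-medians choice keeps each side at most about $7n/10$.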

➢ binary search

○ compare x with the middle element of the sorted array A; if it is not a match, recurse in the appropriate half

○ $T(n) = T(n/2) + 1$

○ $T(n) = \Theta(\log n)$
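A minimal recursive Python sketch of the binary search described above, assuming `a` is sorted in ascending order and returning `-1` when x is absent (that convention is our own).

```python
def binary_search(a: list, x) -> int:
    """Return an index of x in the sorted list a, or -1 if x is absent."""
    def search(lo: int, hi: int) -> int:    # search the half-open range a[lo:hi]
        if lo >= hi:
            return -1                       # empty range: not found
        mid = (lo + hi) // 2
        if a[mid] == x:
            return mid
        if x < a[mid]:
            return search(lo, mid)          # recurse in the left half
        return search(mid + 1, hi)          # recurse in the right half

    return search(0, len(a))
```

Each call does constant work and halves the range, which is exactly the recurrence $T(n) = T(n/2) + 1$.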

➢ mergesort

○ $T(n) = 2T(n/2) + n$

○ $T(n) = \Theta(n \log n)$

➢ integer multiplication

○ $T(n) = 3T(n/2) + n$

○ $T(n) = \Theta(n^{\log_2 3})$

➢ divide and conquer: general paradigm

○ prove correctness, often using induction

○ establish a recurrence relation for the running time

○ solve the recurrence using one of:

↳ master theorem

↳ recursion tree

↳ formulate a conjecture and prove it by induction

➢ memoization → the idea of storing the solutions to subproblems

➢ fibonacci numbers

➢ top down → recursive (make the initial recursive call for n and recurse down to the base cases) → O(n)

M ← empty array, M[1] ← 0, M[2] ← 1
FibII(n):
    If (M[n] is not empty): return M[n]
    Else:
        M[n] ← FibII(n-1) + FibII(n-2)
        return M[n]

➢ bottom up → iterative approach (removes the recursion) → O(n)

FibIII(n):
    M[1] ← 0, M[2] ← 1
    For i = 3,...,n:
        M[i] ← M[i-1] + M[i-2]
    return M[n]
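A minimal Python sketch of FibII (top down, memoized) and FibIII (bottom up), keeping the notes' indexing F(1) = 0, F(2) = 1. A dict stands in for the "empty array" M; the function names are our own.

```python
def fib_top_down(n: int) -> int:
    """FibII: memoized recursion with F(1) = 0, F(2) = 1."""
    memo = {1: 0, 2: 1}

    def fib(k: int) -> int:
        if k not in memo:                   # each subproblem is computed once
            memo[k] = fib(k - 1) + fib(k - 2)
        return memo[k]

    return fib(n)


def fib_bottom_up(n: int) -> int:
    """FibIII: fill the table upward from the base cases, no recursion."""
    M = [0] * (max(n, 2) + 1)
    M[1], M[2] = 0, 1
    for i in range(3, n + 1):
        M[i] = M[i - 1] + M[i - 2]
    return M[n]
```

Both run in $O(n)$ additions; the top-down version is limited by Python's recursion depth for large n, which is one practical reason to prefer the bottom-up form.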

➢ dynamic programming recipe

○ (1) identify a set of subproblems

○ (2) relate the subproblems via a recurrence

○ (3) find an efficient implementation of the recurrence (top down or bottom up)

○ (4) reconstruct the solution from the DP table

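➢ weighted interval scheduling

○ input: intervals 1,...,n with values $v_i$, sorted by finish time; $p(i)$ is the largest index $j < i$ such that interval $j$ is compatible with interval $i$ (ends before $i$ starts), or 0 if there is none

○ subproblems: let $O_i$ be the optimal schedule using only the intervals $\{1, \dots, i\}$

○ case 1: the final interval is not in $O_i$ ($i \notin O_i$) → $O_i = O_{i-1}$

○ case 2: the final interval is in $O_i$ ($i \in O_i$) → $O_i = \{i\} \cup O_{p(i)}$

○ $\mathrm{OPT}(i) = \max\{\mathrm{OPT}(i-1),\ v_i + \mathrm{OPT}(p(i))\}$

○ $\mathrm{OPT}(0) = 0$, $\mathrm{OPT}(1) = v_1$

○ space → $O(n)$

○ top down → $O(n)$, given the sorted intervals and all $p(i)$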

M ← empty array, M[0] ← 0, M[1] ← v_1
FindOPT(n):
    If (M[n] is not empty): return M[n]
    Else:
        M[n] ← max{FindOPT(n-1), v_n + FindOPT(p(n))}
        return M[n]

○ bottom up → O(n)

FindOPT(n):
    M[0] ← 0, M[1] ← v_1
    For (i = 2,...,n):
        M[i] ← max{M[i-1], v_i + M[p(i)]}
    return M[n]

○ optimal schedule → O(n)

FindSched(M, n):
    If (n = 0): return ∅
    ElseIf (n = 1): return {1}
    ElseIf (v_n + M[p(n)] > M[n-1]):
        return {n} + FindSched(M, p(n))
    Else:
        return FindSched(M, n-1)
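A minimal Python sketch of bottom-up FindOPT followed by an iterative FindSched. The input format is an assumption: a list of hypothetical `(start, finish, value)` tuples, where interval j is compatible with interval i when `finish_j <= start_i`. After sorting by finish time, p(i) is computed with the standard-library `bisect_right`.

```python
from bisect import bisect_right


def weighted_interval_scheduling(intervals: list):
    """Return (best total value, chosen intervals) for (start, finish, value) tuples."""
    jobs = sorted(intervals, key=lambda t: t[1])      # sort by finish time
    finishes = [f for _, f, _ in jobs]
    n = len(jobs)
    # p[i] (1-indexed): number of jobs finishing by job i's start = largest compatible j < i.
    p = [0] * (n + 1)
    for i in range(1, n + 1):
        p[i] = bisect_right(finishes, jobs[i - 1][0])
    # Bottom-up FindOPT: M[i] = max(M[i-1], v_i + M[p(i)]).
    M = [0] * (n + 1)
    for i in range(1, n + 1):
        M[i] = max(M[i - 1], jobs[i - 1][2] + M[p[i]])
    # FindSched, written as a loop instead of recursion.
    chosen, i = [], n
    while i > 0:
        if jobs[i - 1][2] + M[p[i]] > M[i - 1]:
            chosen.append(jobs[i - 1])
            i = p[i]
        else:
            i -= 1
    return M[n], chosen[::-1]
```

For example, with `[(0, 3, 5), (1, 4, 1), (3, 6, 5), (4, 7, 1)]` the sketch returns `(10, [(0, 3, 5), (3, 6, 5)])`. Sorting and the binary searches add $O(n \log n)$ on top of the $O(n)$ table fill.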

➢ longest common subsequence (LCS)

○ input: two strings $x \in \Sigma^n$, $y \in \Sigma^m$

○ $\mathrm{LCS}(i, j)$ = length of the LCS of $x_{1:i}$ and $y_{1:j}$

○ equal: if $x_i = y_j$, then $\mathrm{LCS}(i, j) = \mathrm{LCS}(i-1, j-1) + 1$

○ otherwise ($x_i \ne y_j$), at least one of $x_i$, $y_j$ is not in the LCS:

↳ case 1: $x_i$ is not in the LCS → $\mathrm{LCS}(i, j) = \mathrm{LCS}(i-1, j)$

↳ case 2: $y_j$ is not in the LCS → $\mathrm{LCS}(i, j) = \mathrm{LCS}(i, j-1)$

○ recurrence: $\mathrm{LCS}(i, j) =$

↳ $1 + \mathrm{LCS}(i-1, j-1)$ if $x_i = y_j$

↳ $\max(\mathrm{LCS}(i-1, j), \mathrm{LCS}(i, j-1))$ if $x_i \ne y_j$

○ base cases: $\mathrm{LCS}(0, j) = \mathrm{LCS}(i, 0) = 0$ for all $i, j$

○ bottom up → $O(nm)$

FindOPT(n, m):
    M[i, 0] ← 0, M[0, j] ← 0 for all i, j
    for (i = 1,...,n):
        for (j = 1,...,m):
            if (x_i = y_j):
                M[i, j] ← 1 + M[i-1, j-1]
            else:
                M[i, j] ← max{M[i-1, j], M[i, j-1]}
    return M[n, m]

○ space → $O(nm)$
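A minimal Python sketch of the bottom-up LCS table, plus a walk back through the table to recover one optimal subsequence. The walk-back step and the name `lcs` are our own additions; ties are broken toward dropping $x_i$, so other equally long answers are possible.

```python
def lcs(x: str, y: str) -> str:
    """Return one longest common subsequence of x and y in O(nm) time and space."""
    n, m = len(x), len(y)
    M = [[0] * (m + 1) for _ in range(n + 1)]   # row 0 and column 0 are the base cases
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            if x[i - 1] == y[j - 1]:
                M[i][j] = 1 + M[i - 1][j - 1]
            else:
                M[i][j] = max(M[i - 1][j], M[i][j - 1])
    # Reconstruct by retracing which case produced each entry.
    out, i, j = [], n, m
    while i > 0 and j > 0:
        if x[i - 1] == y[j - 1]:
            out.append(x[i - 1])
            i -= 1
            j -= 1
        elif M[i - 1][j] >= M[i][j - 1]:
            i -= 1          # case 1: x_i is not in the LCS
        else:
            j -= 1          # case 2: y_j is not in the LCS
    return "".join(reversed(out))
```

For example, `len(lcs("ABCBDAB", "BDCABA"))` is 4.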

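➢ the knapsack problem

○ want: $\arg\max_{S \subseteq \{1, \dots, n\}} V_S$ such that $W_S \le T$, where $V_S = \sum_{i \in S} v_i$ and $W_S = \sum_{i \in S} w_i$

○ SubsetSum: $v_i = w_i$

○ TugOfWar: $v_i = w_i$, $T = \frac{1}{2} \sum_i v_i$

○ let $O_n \subseteq \{1, \dots, n\}$ be the optimal subset of items, given only the first $n$ items

○ case 1: $n \notin O_n$ → $O_n = O_{n-1}$ with the same capacity

○ case 2: $n \in O_n$ → $O_n = \{n\} \cup O_{n-1}$, where $O_{n-1}$ is now optimal for capacity $T - w_n$

○ base cases: $\mathrm{OPT}(j, 0) = \mathrm{OPT}(0, S) = 0$

○ runtime → $O(nT)$, space → $O(nT)$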

FindOPT(n, T):
    M[0, S] ← 0, M[j, 0] ← 0
    for (j = 1,..,n):
        for (S = 1,..,T):
            if (w_j > S): M[j, S] ← M[j-1, S]
            else: M[j, S] ← max{M[j-1, S], v_j + M[j-1, S-w_j]}
    return M[n, T]

○ FindSol runtime → O(n)

// M[0:n, 0:T] contains solutions to subproblems
FindSol(M, n, T):
    if (n = 0 or T = 0): return ∅
    else:
        if (w_n > T): return FindSol(M, n-1, T)
        else:
            if (M[n-1, T] > v_n + M[n-1, T-w_n]):
                return FindSol(M, n-1, T)
            else:
                return {n} + FindSol(M, n-1, T-w_n)
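A minimal Python sketch of FindOPT and FindSol for 0/1 knapsack, assuming positive integer weights and an integer capacity T. Items are reported with the notes' 1-based indices; the name `knapsack` is our own.

```python
def knapsack(values: list, weights: list, T: int):
    """Return (best value, sorted 1-based item indices) with total weight <= T."""
    n = len(values)
    M = [[0] * (T + 1) for _ in range(n + 1)]   # M[0][S] = M[j][0] = 0
    for j in range(1, n + 1):
        v, w = values[j - 1], weights[j - 1]
        for S in range(1, T + 1):
            if w > S:                           # item j does not fit
                M[j][S] = M[j - 1][S]
            else:
                M[j][S] = max(M[j - 1][S], v + M[j - 1][S - w])
    # FindSol: take item j exactly when skipping it would not be strictly better.
    chosen, S = [], T
    for j in range(n, 0, -1):
        v, w = values[j - 1], weights[j - 1]
        if w <= S and M[j - 1][S] <= v + M[j - 1][S - w]:
            chosen.append(j)
            S -= w
    return M[n][T], sorted(chosen)
```

For example, `knapsack([60, 100, 120], [10, 20, 30], 50)` returns `(220, [2, 3])`. The table is $(n+1) \times (T+1)$, so the cost is pseudo-polynomial: it depends on the numeric value of T, not just on n.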

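➢ longest increasing subsequence (LIS)

○ let $\mathrm{LIS}(j)$ be the length of the longest increasing subsequence that ends with $x_j$

○ case $i$: the last two numbers of the subsequence are $x_i$ and $x_j$ (so $i < j$ and $x_i < x_j$)

○ recurrence: $\mathrm{LIS}(j) = 1 + \max_{1 \le i < j,\ x_i < x_j} \mathrm{LIS}(i)$, where the max over an empty set is 0

○ base case: $\mathrm{LIS}(1) = 1$

○ bottom up → $O(n^2)$

FindOPT(n):
    M[1] ← 1
    for (j = 2,...,n):
        M[j] ← 1 + max{M[i] : 1 ≤ i < j and x_i < x_j}   // max of an empty set is 0
    return max{M[j] : 1 ≤ j ≤ n}

○ $k = \arg\max_{1 \le i \le n} \mathrm{LIS}(i)$

↳ $x_k$ is the final symbol in the LIS

○ length of the LIS

↳ $\max_{1 \le j \le n} \mathrm{LIS}(j)$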
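A minimal Python sketch of the $O(n^2)$ LIS recurrence. It also stores a predecessor index per position (our own addition, not in the notes) so that, starting from $k = \arg\max$, the subsequence itself can be read back.

```python
def lis(x: list) -> list:
    """Return one longest strictly increasing subsequence of x."""
    n = len(x)
    if n == 0:
        return []
    M = [1] * n         # M[j]: length of the longest increasing subsequence ending at x[j]
    prev = [-1] * n     # prev[j]: index of the element before x[j] in that subsequence
    for j in range(1, n):
        for i in range(j):
            if x[i] < x[j] and M[i] + 1 > M[j]:
                M[j] = M[i] + 1
                prev[j] = i
    k = max(range(n), key=lambda j: M[j])   # x[k] is the final element of an LIS
    out = []
    while k != -1:
        out.append(x[k])
        k = prev[k]
    return out[::-1]
```

For example, `lis([10, 9, 2, 5, 3, 7, 101, 18])` returns `[2, 5, 7, 101]`.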

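➢ segmented least squares

○ input: n data points $P = \{(x_1, y_1), \dots, (x_n, y_n)\}$, cost parameter $C > 0$

○ output: a partition of P into contiguous segments, with a line for each segment, minimizing the total cost

○ let $L^*_{i,j}$ be the optimal line for $\{p_i, \dots, p_j\}$, and $\varepsilon_{i,j} = \mathrm{error}(L^*_{i,j}, \{p_i, \dots, p_j\})$

○ let $\mathrm{OPT}(j)$ be the value of the optimal solution for the points $\{p_1, \dots, p_j\}$

○ case $i$: the final segment is $\{p_i, \dots, p_j\}$

○ total cost is $\varepsilon_{i,j}$ (error for $[p_i, \dots, p_j]$) + $C$ (cost parameter for $[p_i, \dots, p_j]$) + $\mathrm{OPT}(i-1)$ (total cost of everything up to $p_{i-1}$, inclusive)

○ recurrence → $\mathrm{OPT}(j) = \min_{1 \le i \le j} \left(\varepsilon_{i,j} + C + \mathrm{OPT}(i-1)\right)$

○ base cases → $\mathrm{OPT}(0) = 0$, $\mathrm{OPT}(1) = \mathrm{OPT}(2) = C$ (one or two points lie exactly on a line)

○ top-down → $O(n^2)$, given all $\varepsilon_{i,j}$ precomputed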

M ← empty array, M[0] ← 0, M[1] ← C, M[2] ← C
FindOPT(n):
    If (M[n] is not empty): return M[n]
    Else:
        M[n] ← min{ε_{i,n} + C + FindOPT(i-1) : 1 ≤ i ≤ n}   // O(n) terms
        return M[n]

○ bottom-up → $O(n^2)$

FindOPT(n):
    M[0] ← 0, M[1] ← C, M[2] ← C
    For (j = 3,...,n):
        M[j] ← min{ε_{i,j} + C + M[i-1] : 1 ≤ i ≤ j}
    Return M[n]

○ finding segments → $O(n^2)$, space → $O(n^2)$

// M[0:n] contains solutions to subproblems
FindSol(M, n):
    If (n = 0): return ∅
    ElseIf (n = 1): return {1}
    ElseIf (n = 2): return {1, 2}
    Else:
        Let x ← argmin{ε_{i,n} + C + M[i-1] : 1 ≤ i ≤ n}
        Return {x,...,n} + FindSol(M, x-1)
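A minimal Python sketch of bottom-up segmented least squares with FindSol. Assumptions: points are `(x, y)` tuples sorted by x with distinct x values, and $\varepsilon_{i,j}$ is taken to be the sum of squared residuals of the ordinary least-squares line, computed naively in $O(n^3)$ total. The names `segment_error` and `segmented_least_squares` are hypothetical.

```python
def segment_error(pts: list) -> float:
    """Sum of squared residuals of the least-squares line through pts (epsilon_{i,j})."""
    k = len(pts)
    if k <= 2:
        return 0.0                      # one or two points lie exactly on a line
    sx = sum(x for x, _ in pts)
    sy = sum(y for _, y in pts)
    sxx = sum(x * x for x, _ in pts)
    sxy = sum(x * y for x, y in pts)
    slope = (k * sxy - sx * sy) / (k * sxx - sx * sx)   # assumes distinct x values
    intercept = (sy - slope * sx) / k
    return sum((y - slope * x - intercept) ** 2 for x, y in pts)


def segmented_least_squares(points: list, C: float):
    """Return (OPT(n), segments) where each segment (i, j) covers points p_i..p_j (1-based)."""
    n = len(points)
    eps = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(i, n + 1):
            eps[i][j] = segment_error(points[i - 1:j])
    M = [0.0] * (n + 1)
    for j in range(1, n + 1):           # M[1] and M[2] come out as C automatically
        M[j] = min(eps[i][j] + C + M[i - 1] for i in range(1, j + 1))
    # FindSol: retrace the argmin choices from the last point backward.
    segments, j = [], n
    while j > 0:
        x = min(range(1, j + 1), key=lambda i: eps[i][j] + C + M[i - 1])
        segments.append((x, j))
        j = x - 1
    return M[n], segments[::-1]
```

For example, for the points `(1, 0), (2, 0), (3, 0), (4, 40), (5, 50), (6, 60)` with `C = 1.0`, the sketch picks two zero-error segments, `[(1, 3), (4, 6)]`, at total cost 2.0.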

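➢ segmented least squares v.2

○ hard upper bound k on the number of segments (no per-segment cost C)

○ objective: minimize $\sum_{i=1}^{k} \mathrm{error}(L_i, S_i)$ over partitions into segments $S_1, \dots, S_k$ with lines $L_i$

○ let $\mathrm{OPT}(j, \ell)$ be the optimal solution for the points $\{p_1, \dots, p_j\}$ using at most $\ell$ segments

○ case $i$: the final segment is $\{p_i, \dots, p_j\}$

○ recurrence: $\mathrm{OPT}(j, \ell) = \min_{1 \le i \le j} \left(\varepsilon_{i,j} + \mathrm{OPT}(i-1, \ell-1)\right)$

○ base cases: $\mathrm{OPT}(0, \ell) = 0$ for all $\ell \ge 0$, $\mathrm{OPT}(j, 0) = \infty$ for all $j \ge 1$

○ top down → $O(n^2 k)$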

M ← empty array, M[0, 𝓁] ← 0, M[j, 0] ← ∞
FindOPT(n, k):
    if (M[n, k] is not empty): return M[n, k]
    else:
        M[n, k] ← min{ε_{i,n} + FindOPT(i-1, k-1) : 1 ≤ i ≤ n}
        return M[n, k]

○ bottom up → $O(n^2 k)$

FindOPT(n, k):
    M ← empty array, M[0, 𝓁] ← 0, M[j, 0] ← ∞
    for (𝓁 = 1,...,k):
        for (j = 1,...,n):
            M[j, 𝓁] ← min{ε_{i,j} + M[i-1, 𝓁-1] : 1 ≤ i ≤ j}
    return M[n, k]

○ finding segments → $O(nk)$, space → $O(n^2 + nk)$

FindSol(M, n, k):
    if (n = 0): return ∅
    elseif (n = 1): return {1}
    else:
        let x ← argmin{ε_{i,n} + M[i-1, k-1] : 1 ≤ i ≤ n}
        return {x,...,n} + FindSol(M, x-1, k-1)

↳ at most k recursive calls
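A minimal Python sketch of the bottom-up v.2 table with at most k segments, followed by FindSol. As before, the assumptions are points sorted by x with distinct x values and $\varepsilon_{i,j}$ taken as the least-squares sum of squared residuals; `segment_error` is repeated so the block stands alone, and the function names are hypothetical.

```python
import math


def segment_error(pts: list) -> float:
    """Sum of squared residuals of the least-squares line through pts (epsilon_{i,j})."""
    k = len(pts)
    if k <= 2:
        return 0.0
    sx = sum(x for x, _ in pts)
    sy = sum(y for _, y in pts)
    sxx = sum(x * x for x, _ in pts)
    sxy = sum(x * y for x, y in pts)
    slope = (k * sxy - sx * sy) / (k * sxx - sx * sx)   # assumes distinct x values
    intercept = (sy - slope * sx) / k
    return sum((y - slope * x - intercept) ** 2 for x, y in pts)


def k_segment_least_squares(points: list, k: int):
    """Return (OPT(n, k), segments) using at most k segments; segments are 1-based (i, j)."""
    n = len(points)
    if n > 0 and k < 1:
        raise ValueError("need at least one segment for a non-empty input")
    eps = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(i, n + 1):
            eps[i][j] = segment_error(points[i - 1:j])
    # Base cases: M[0][ell] = 0 for every ell; M[j][0] = inf for j >= 1.
    M = [[0.0] * (k + 1)] + [[math.inf] + [0.0] * k for _ in range(n)]
    for ell in range(1, k + 1):
        for j in range(1, n + 1):
            M[j][ell] = min(eps[i][j] + M[i - 1][ell - 1] for i in range(1, j + 1))
    # FindSol: each step uses one segment, so there are at most k steps.
    segments, j, ell = [], n, k
    while j > 0:
        x = min(range(1, j + 1), key=lambda i: eps[i][j] + M[i - 1][ell - 1])
        segments.append((x, j))
        j, ell = x - 1, ell - 1
    return M[n][k], segments[::-1]
```

With the same six points as in the previous sketch, `k_segment_least_squares(points, 2)` finds the zero-error split `[(1, 3), (4, 6)]`, while `k = 1` is forced to fit a single line through all six points.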