Data analysis is the process of evaluating large data sets with analytical and statistical tools in order to discover useful information and draw conclusions that support business decision-making.

Pandas (or Python Pandas) is Python's library for data analysis. Its name is derived from "panel data", an econometrics term for multi-dimensional, structured data sets.

Pandas is an open-source, BSD-licensed Python library providing high-performance, easy-to-use data structures and data analysis tools for the Python programming language. Python with Pandas is used in a wide range of academic and commercial fields, including finance, economics, statistics and analytics.

Pandas is the most popular library in the scientific Python ecosystem for doing data analysis.

Pandas is capable of many tasks, including:

• It can read or write data in many different formats and data types (integer, float, double, etc.).

• It can calculate in all the ways data is organized, i.e., across rows and down columns.

• It can easily select subsets of data from bulky data sets and even combine multiple data sets together.

• It has functionality to find and fill missing data.

• It allows you to apply operations to independent groups within the data.

• It supports reshaping of data into different forms.

• It supports advanced time-series functionality. (Time-series forecasting is the use of a model to predict future values based on previously observed values.)

• It supports visualization by integrating with libraries such as matplotlib and seaborn.

Key Features of Pandas

• Fast and efficient DataFrame object with default and customized indexing.

• Tools for loading data into in-memory data objects from different file formats.

• Data alignment and integrated handling of missing data.

• Reshaping and pivoting of data sets.

• Label-based slicing, indexing and subsetting of large data sets.

• Columns from a data structure can be deleted or inserted.

• Group by data for aggregation and transformations.

• High-performance merging and joining of data.

• Time series functionality.

In other words, Pandas is best at handling large tabular data sets made up of different data types. The library also covers everyday data tasks, from simply loading data to feature engineering on time-series data.

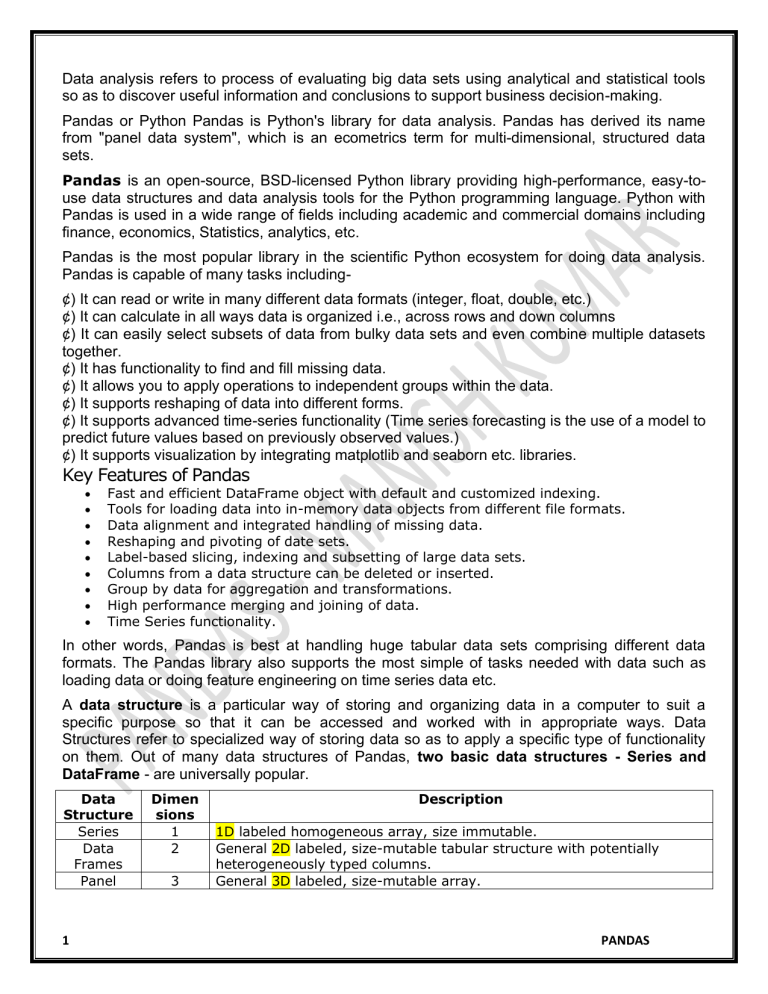

A data structure is a particular way of storing and organizing data in a computer so that it can be accessed and worked with in ways suited to a specific purpose. Of the many data structures Pandas offers, two basic ones, Series and DataFrame, are universally popular.

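| Data Structure | Dimensions | Description |
|---|---|---|
| Series | 1 | 1D labeled homogeneous array, size immutable. |
| DataFrame | 2 | General 2D labeled, size-mutable tabular structure with potentially heterogeneously typed columns. |
| Panel | 3 | General 3D labeled, size-mutable array. |

NOTE : Panel was deprecated and has been removed from pandas (version 0.25 onwards); Series and DataFrame are the structures in current use.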

PANDAS

Mutability - All Pandas data structures are value-mutable (their values can be changed), and all except Series are size-mutable. Series is size-immutable.

Series Data Structure - A Series is a Pandas data structure that represents a one-dimensional

array-like object containing an array of data (of any NumPy data type) and an associated array

of data labels, called its index.

Key Points : Homogeneous data, Size Immutable, Values of Data Mutable.

A Series type object has two main components: an array of actual data and an associated array of indexes or data labels. A Series type object can be created in many ways using the pandas library's Series() constructor.
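The two components described above can be inspected directly. The following is a minimal sketch; the student names and marks are made-up illustration data, not taken from the notes.

```python
import pandas as pd

# Hypothetical data: marks labeled by student name.
marks = pd.Series([78, 91, 64], index=["Asha", "Ravi", "Meena"])

print(marks.values)    # the array of actual data
print(marks.index)     # the associated array of labels
print(marks["Ravi"])   # look up one value through its label
```

The values attribute exposes the data array, while index exposes the labels that travel with it.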

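i. Create an empty Series object by calling Series() with no parameters. This gives a Series type object with no values and the default data type. To create an empty object, i.e., one having no values, use: <Series Object> = pandas.Series()

    import pandas as pd
    em = pd.Series()
    print(em)

output : Series([], dtype: float64)

NOTE : The default dtype of an empty Series depends on the pandas version. Older releases report float64 as shown above, while pandas 2.0 and later report dtype: object. Pass the dtype explicitly, e.g. pd.Series(dtype='float64'), if your code depends on it.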

ii. Creating non-empty Series objects - To create a non-empty Series object, specify arguments for the data and the indexes as per the following syntax:

<Series object> = pd.Series(data, index=idx)

where data is the data part of the Series object. It can be one of the following: 1) a sequence, 2) an ndarray, 3) a dictionary, or 4) a scalar value.

(1) Specify data as a Python sequence. Give a sequence of values as the argument to Series(), i.e., as: <Series Object> = pd.Series(<any Python sequence>)

obj1 = pd.Series(range(5))

print(obj1)

    0    0
    1    1
    2    2
    3    3
    4    4
    dtype: int64

(2) Specify data as an ndarray. The data argument can also be a NumPy ndarray.

    import numpy as np
    nda1 = np.arange(3, 13, 3.5)
    ser1 = pd.Series(nda1)
    print(ser1)

    0     3.0
    1     6.5
    2    10.0
    dtype: float64

(3) Specify data as a Python dictionary. The data can also be a dictionary; its keys become the indexes and its values become the data.

    obj5 = pd.Series({'Jan': 31, 'Feb': 28, 'Mar': 31})

One thing is noteworthy here: in older versions of Python and pandas, the indexes created from the dictionary keys were not guaranteed to appear in the order in which you typed them. With Python 3.7 or later and current pandas, the insertion order of the keys is preserved.

(4) Specify data as a scalar / single value. If data is a scalar value, then the index must be provided. There can be one or more entries in the index sequence. The scalar value (given as data) is repeated to match the length of the index.

    medalsWon = pd.Series(10, index=range(0, 1))
    medals2 = pd.Series(15, index=range(1, 6, 2))
    ser2 = pd.Series('Yet to start', index=['Indore', 'Delhi', 'Shimla'])


Specifying / Adding NaN values in a Series object - Sometimes a value is missing or unknown when you create a Series object. In such cases, you can fill the missing data with a NaN (Not a Number) value. NaN is defined in the NumPy module, so you can use np.nan to specify a missing value (the older alias np.NaN was removed in NumPy 2.0).

    obj3 = pd.Series([6.5, np.nan, 2.34])

(ii) Specify index(es) as well as data with Series(). While creating a Series type object, you can provide indexes along with the values. Both values and indexes are sequences. The syntax is:

<Series Object> = pandas.Series(data=None, index=None)

Both data and index have to be sequences; None is taken by default if you skip these parameters.

    arr = [31, 28, 31, 30]
    mon = ['Jan', 'Feb', 'Mar', 'Apr']
    obj3 = pd.Series(data=arr, index=mon)
    obj4 = pd.Series(data=[32, 34, 35], index=['A', 'B', 'C'])

You may also build the index sequence with a loop (here, a list comprehension), e.g.,

    s1 = pd.Series(range(1, 15, 3), index=[x for x in 'abcde'])
    print(s1)

output :

    a     1
    b     4
    c     7
    d    10
    e    13
    dtype: int64

(iii) Specify data type along with data and index. You can also specify the data type along with data and index in Series(), as per the following syntax:

<Series Object> = pandas.Series(data=None, index=None, dtype=None)

    obj4 = pd.Series(data=[32, 34, 35], index=['A', 'B', 'C'], dtype=float)
    print(obj4)

    A    32.0
    B    34.0
    C    35.0
    dtype: float64

NOTE : The indexes of a Series object do not necessarily run from 0 to n-1.

(iv) Using a mathematical function/expression to create the data array in Series(). Series() allows you to give a function or expression that calculates the values for the data sequence.

<Series Object> = pd.Series(index=None, data=<function / expression>)

NumPy array:

    a = np.arange(9, 13)
    obj7 = pd.Series(index=a, data=a * 2)
    print(obj7)

Python list:

    Lst = [9, 10, 11, 12]
    obj8 = pd.Series(data=(2 * Lst))
    print(obj8)

It is important to understand that if we apply the operation / expression on a NumPy array, then the given operation is carried out in a vectorized way, i.e., it is applied on each element of the NumPy array and the newly generated sequence is taken as the data array. BUT if you apply a similar operation on a Python list, the result will be entirely different: 2 * Lst repeats the list, so obj8 holds eight values (9, 10, 11, 12, 9, 10, 11, 12) instead of each element doubled.

NOTE : While creating a Series object, when you give the index array as a sequence, the indexes are not required to be unique. That is, you can have duplicate entries in the index array and pandas won't raise any error. This only causes an error if/when you perform an operation that requires unique indexes.
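A short sketch of the note above, using made-up values: duplicate labels are accepted at creation time, a lookup on a duplicated label returns every match, and an operation that needs unique labels (reindex() is used here as one such operation) raises an error.

```python
import pandas as pd

# Duplicate label "a" is accepted without complaint.
dup = pd.Series([10, 20, 30], index=["a", "b", "a"])
print(dup.index.is_unique)   # False

# Looking up a duplicated label returns a Series of all matching entries.
both_a = dup["a"]
print(both_a)

# reindex() requires unique labels, so it fails on this object.
try:
    dup.reindex(["a", "b", "c"])
    reindex_failed = False
except ValueError as err:
    reindex_failed = True
    print("reindex failed:", err)
```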

Common attributes of Series objects

| Attribute | Description |
|---|---|
| Series.index | The index (axis labels) of the Series. |
| Series.values | Returns the Series as an ndarray or ndarray-like, depending on the dtype. |
| Series.dtype | Returns the dtype object of the underlying data. |
| Series.shape | Returns a tuple of the shape of the underlying data. |
| Series.nbytes | Returns the number of bytes in the underlying data. |
| Series.ndim | Returns the number of dimensions of the underlying data. |
| Series.size | Returns the number of elements in the underlying data. |
| Series.itemsize | Returns the size of the dtype of an item of the underlying data, in bytes (removed in pandas 1.0; use Series.dtype.itemsize instead). |
| Series.hasnans | Returns True if there are any NaN values; otherwise returns False. |
| Series.empty | Returns True if the Series object is empty, False otherwise. |
| Series.head() | Returns the first n rows. |
| Series.tail() | Returns the last n rows. |
| Series.axes | Returns a list of the row axis labels. |

NOTE : If you use len( ) on a series object, then it returns total elements in it including NaNs but

<series>.count( ) returns only the count of non-NaN values in a Series object.
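The difference between len() and count() can be checked with a small sketch; the temperature values are made up for illustration.

```python
import numpy as np
import pandas as pd

# Four entries, two of them missing.
temps = pd.Series([21.5, np.nan, 19.0, np.nan])

total = len(temps)             # counts every element, NaN included
non_missing = temps.count()    # counts only the non-NaN values

print(total, non_missing)
```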

Accessing Individual Elements : To access an individual element of a Series object, give its index in square brackets after the object's name, as you have been doing with other Python sequences: <Series Object name>[<valid index>], e.g., obj5['Feb']. BUT if you give an index that is not a legal index (labels are case-sensitive, so 'feb' is not the same as 'Feb'), it will give you an error.

Extracting Slices from Series Object : Like other sequences, you can extract slices from a Series object too. Slicing is a powerful way to retrieve subsets of data from a pandas object. With integer positions, slicing takes place position-wise and not index-wise in a Series object.

All individual elements have position numbers starting from 0, i.e., 0 for the first element, 1 for the second element and so on.

A slice is created from a Series object using the syntax <Object>[start : end : step], where start and end signify the positions of elements, not the indexes. The slice of a Series object is also a pandas Series type object.

    import pandas as pd
    s = pd.Series([1, 2, 3, 4, 5], index=['a', 'b', 'c', 'd', 'e'])
    print(s[:3])

    a    1
    b    2
    c    3
    dtype: int64
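To make the position/label distinction explicit, pandas also provides the iloc indexer (always positional) and the loc indexer (always label based). The sketch below reuses the same five-element Series; note that a label slice includes its end label, while a position slice excludes its stop position.

```python
import pandas as pd

s = pd.Series([1, 2, 3, 4, 5], index=["a", "b", "c", "d", "e"])

# Positional slice: positions 1 and 2 (stop position 3 is excluded).
by_position = s.iloc[1:3]

# Label slice: labels 'b' through 'd' (end label is included).
by_label = s.loc["b":"d"]

print(by_position)
print(by_label)
```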

Operations on Series Object :

1. Modifying Elements of Series Object : The data values of a Series object can be easily modified through item assignment, i.e., <SeriesObject>[<index>] = <new_data_value>. This assignment changes the data value at the given index of the Series object. Assigning to a slice replaces all the values falling in that slice:

    obj4 = pd.Series([2, 4, 6, 8, 10])
    obj4[1:3] = -1
    print(obj4)

    0     2
    1    -1
    2    -1
    3     8
    4    10
    dtype: int64

Please note that a Series object's values can be modified but its size cannot. So you can say that Series objects are value-mutable but size-immutable objects.

2. The head() and tail() functions: The head() function fetches the first n rows from a pandas object and the tail() function returns the last n rows. If n is omitted, both default to 5 rows.

    obj4 = pd.Series([2, 4, 6, 8, 10])
    print(obj4.head(2))

    0    2
    1    4
    dtype: int64

    obj4 = pd.Series([2, 4, 6, 8, 10])
    print(obj4.tail(2))

    3     8
    4    10
    dtype: int64

3. Vector Operations on Series Object : Vector operations mean that if you apply a function or

expression then it is individually applied on each item of the object. Since Series objects are built

upon NumPy arrays (ndarrays), they also support vectorized operations, just like ndarrays.

obj4 = pd.Series( [2,4,6])

print(obj4 ** 2)

    0     4
    1    16
    2    36
    dtype: int64

4. Arithmetic on Series Objects : You can perform arithmetic such as addition, subtraction and division with two Series objects; the result is calculated on the corresponding items of the two objects given in the expression. BUT there is a caveat: the operation is performed only on the matching indexes. Also, if one of the two data items at a matching index is missing (NaN), the result for that index is NaN (Not a Number).

When you perform arithmetic operations on two Series type objects, the data is first aligned on the basis of matching indexes (this is called Data Alignment in pandas objects) and then the arithmetic is performed; for non-overlapping indexes, the result is NaN (Not a Number). You can store the result of object arithmetic in another object, which will also be a Series object : ob6 = ob1 + ob3
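Data alignment can be seen in a small sketch. The contents of ob1 and ob3 are assumed here purely for illustration, since the notes do not define them; the add() method with fill_value is shown as one way to treat a missing partner as 0 instead of producing NaN.

```python
import pandas as pd

# Assumed example objects: labels 'b' and 'c' overlap, 'a' and 'd' do not.
ob1 = pd.Series([10, 20, 30], index=["a", "b", "c"])
ob3 = pd.Series([1, 2, 3], index=["b", "c", "d"])

# Values are matched by label; non-overlapping labels give NaN.
ob6 = ob1 + ob3
print(ob6)

# add() with fill_value substitutes 0 for the missing side before adding.
filled = ob1.add(ob3, fill_value=0)
print(filled)
```

The result index is the union of both indexes, and its dtype becomes float64 because NaN cannot be stored in an integer Series.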

5. Filtering Entries : You can filter out entries from a Series object using expressions that are of Boolean type.
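A minimal sketch of Boolean filtering, with made-up values: a comparison on a Series yields a Boolean Series (a mask), and indexing with that mask keeps only the entries where it is True.

```python
import pandas as pd

obj = pd.Series([2, 15, 8, 21, 4], index=["a", "b", "c", "d", "e"])

# The comparison is applied element by element and gives True/False per entry.
mask = obj > 5
print(mask)

# Indexing with the mask keeps only the entries marked True.
filtered = obj[mask]
print(filtered)
```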

6. Re-indexing - Sometimes you need to create a similar object but with a different order of the same indexes. You can use reindexing for this purpose as per this syntax:

<Series Object> = <Object>.reindex(<sequence with new order of indexes>)

    obj4 = pd.Series([2, 4, 6], index=[0, 1, 2])
    obj4 = obj4.reindex([1, 2, 3])
    print(obj4)

    1    4.0
    2    6.0
    3    NaN
    dtype: float64

With this, the same data values and their indexes are stored in the new object in the order given to reindex(). An index that does not exist in the original object (3 in the example above) gets NaN, and an index that is left out (0 above) is dropped.
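The sketch below shows both uses of reindex() on made-up monthly figures: pure reordering, and adding a label that did not exist before. The fill_value parameter is used to supply a value other than NaN for the new label.

```python
import pandas as pd

sales = pd.Series([100, 200, 300], index=["Jan", "Feb", "Mar"])

# Same labels, new order: values move together with their labels.
reordered = sales.reindex(["Mar", "Jan", "Feb"])
print(reordered)

# A label absent from the original ('Apr') is filled with fill_value.
extended = sales.reindex(["Jan", "Feb", "Mar", "Apr"], fill_value=0)
print(extended)
```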

7. Dropping Entries from an Axis - Sometimes you do not need the data value at a particular index. You can remove that entry from a Series object using drop() as per this syntax:

<Series Object>.drop(<index to be removed>)

drop() returns a new object and leaves the original unchanged, so assign the result back if you want to keep it.

    obj4 = pd.Series([2, 4, 6], index=[0, 1, 2])
    obj4 = obj4.drop([1, 2])
    print(obj4)

    0    2
    dtype: int64

Difference between NumPy Arrays and Series Objects

(i) In the case of ndarrays, you can perform vectorized operations only if the shapes of the two ndarrays match (or can be broadcast to a common shape); otherwise an error is raised. But with Series objects, in the case of vectorized operations, the data of the two Series objects is aligned as per matching indexes and the operation is performed on them; for non-matching indexes, NaN is returned.

(ii) In ndarrays, the indexes are always numeric, starting from 0 onwards, BUT Series objects can have any type of indexes, including numbers (not necessarily starting from 0), letters, labels, strings etc.


Accessing Data from Series with Position

Example 1 - Retrieve the first element. As we already know, the counting starts from

zero for the array, which means the first element is stored at zeroth position and so on.

s = pd.Series([1,2,3,4,5],index =

['a','b','c','d','e'])

#retrieve the first element

print(s.iloc[0])  # iloc is explicitly positional; plain s[0] on a labeled Series is deprecated in pandas 2.x

1

Example 2 - Retrieve the first three elements in the Series. If a : is placed before a position, all items up to (but not including) that position are extracted; if it is placed after a position, all items from that position onwards are extracted. If two positions are used (with : between them), the items between the two positions (not including the stop position) are extracted.

s = pd.Series([1,2,3,4,5],index =

['a','b','c','d','e'])

#retrieve the first three element

print(s[:3])

a 1

b 2

c 3

dtype: int64

Example 3 - Retrieve the last three elements.

s = pd.Series([1,2,3,4,5],index =

['a','b','c','d','e'])

#retrieve the last three element

print(s[-3:])

c 3

d 4

e 5

dtype: int64

Retrieve Data Using Label (Index)

A Series is like a fixed-size dict in that you can get and set values by index label.

Example 1 - Retrieve a single element using index label value.

s = pd.Series([1,2,3,4,5],index =

['a','b','c','d','e'])

#retrieve a single element

print(s['a'])

1

Example 2 - Retrieve multiple elements using a list of index label values.

s = pd.Series([1,2,3,4,5],index =

['a','b','c','d','e'])

#retrieve multiple elements

print(s[['a','c','d']])

a 1

c 3

d 4

dtype: int64

Example 3 - If a label is not contained, an exception is raised.

s = pd.Series([1,2,3,4,5],index =

['a','b','c','d','e'])

#retrieve missing element

print(s['f'])

…

KeyError: 'f'

DataFrame Data Structure : A DataFrame is another pandas structure, which stores data in a two-dimensional way. It is a two-dimensional (tabular, spreadsheet-like) labeled array: an ordered collection of columns, where the columns may store different types of data, e.g., numeric, string, floating point or Boolean. A two-dimensional array is an array in which each element is itself an array.

Features of DataFrame

1. Potentially columns are of different types

2. Size – Mutable

3. Labeled axes (rows and columns)

4. Can Perform Arithmetic operations on rows and columns

Major characteristics of a DataFrame data structure can be listed as:

(i) It has two indexes or we can say that two axes - a row (axis = 0) and a column (axis= 1).

(ii) Conceptually it is like a spreadsheet where each value is identifiable with the combination of

row index and column index. The row index is known as index in general and the column

index is called the column-name.

(iii) The indexes can be of numbers or letters or strings.

(iv) There is no condition of having all data of same type across columns; its columns can have

data of different types.

(v) You can easily change its values, i.e., it is value-mutable.

(vi) You can add or delete rows/columns in a DataFrame. In other words, it is size-mutable.

NOTE : DataFrames are both, value-mutable and size-mutable, i.e., you can change both its

values and size.
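The characteristics listed above can be tried out in a few lines. This is a sketch on made-up data: it changes one value, then adds a column and a row, and prints the resulting shape and column types.

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Tom", "Jack"], "Age": [28, 34]})

# Value-mutable: change a single cell, addressed by row label and column name.
df.loc[0, "Age"] = 29

# Size-mutable: add a new column, then a new row with label 2.
df["City"] = ["Pune", "Delhi"]
df.loc[2] = ["Steve", 41, "Agra"]

print(df.shape)    # (rows, columns)
print(df.dtypes)   # each column keeps its own data type
print(df)
```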

Creating and Displaying a DataFrame : You can create a DataFrame object by passing data in

many different ways, such as:

(i) Two-dimensional dictionaries i.e., dictionaries having lists or dictionaries or ndarrays or Series

objects etc.

(ii) Two-dimensional ndarrays (NumPy array)

(iii) Series type object

(iv) Another DataFrame object

(i) Two-dimensional dictionaries i.e., dictionaries having lists or dictionaries or ndarrays or Series objects etc. A two-dimensional dictionary is a dictionary having items as (key : value) where the value part is a data structure of any type: another dictionary, an ndarray, a Series object, a list etc. But here the value parts of all the keys should have a similar structure and equal lengths.

(a) Creating a dataframe from a 2D dictionary having values as lists / ndarrays :

import pandas as pd

data = {'Name':['Tom', 'Jack', 'Steve',

'Ricky'],'Age':[28,34,29,42]}

df = pd.DataFrame(data)

print(df)

        Name  Age
    0    Tom   28
    1   Jack   34
    2  Steve   29
    3  Ricky   42

(Older pandas versions sorted the columns alphabetically, printing Age before Name.)

The keys of the 2D dictionary have become columns, and indexes have been generated from 0 onwards, like np.arange(n). You can specify your own indexes too, by passing a sequence as the index argument of the DataFrame() function:

import pandas as pd

data = {'Name':['Tom', 'Jack', 'Steve',

'Ricky'],'Age':[28,34,29,42]}

df = pd.DataFrame(data,

index=['rank1','rank2','rank3','rank4'])

    print(df)

            Name  Age
    rank1    Tom   28
    rank2   Jack   34
    rank3  Steve   29
    rank4  Ricky   42

(b) Creating a dataframe from a 2D dictionary having values as dictionary objects : A 2D dictionary can have values as dictionary objects too. You can also create a dataframe object using such a 2D dictionary object:

    import pandas as pd
    yr2015 = {'Qtr1': 34500, 'Qtr2': 56000, 'Qtr3': 47000, 'Qtr4': 49000}
    yr2016 = {'Qtr1': 44900, 'Qtr2': 46100, 'Qtr3': 57000, 'Qtr4': 59000}
    yr2017 = {'Qtr1': 54500, 'Qtr2': 51000, 'Qtr3': 57000, 'Qtr4': 58500}
    disales = {2015: yr2015, 2016: yr2016, 2017: yr2017}
    df1 = pd.DataFrame(disales)
    print(df1)

Its output is as follows:

           2015   2016   2017
    Qtr1  34500  44900  54500
    Qtr2  56000  46100  51000
    Qtr3  47000  57000  57000
    Qtr4  49000  59000  58500

NOTE : While creating a dataframe with a nested or 2D dictionary, pandas interprets the outer dict keys as the columns and the inner keys as the row indices.

Now, had there been a situation where the inner dictionaries had non-matching keys, pandas would have done the following:

(i) The total number of indexes would be equal to the number of unique inner keys across all the inner dictionaries.

(ii) For a key that has no matching key in another inner dictionary, the value NaN would be used to depict the missing value.

yr2015 = { 'Qtr1' : 34500, 'Qtr2' : 56000, 'Qtr3' : 47000, 'Qtr4' : 49000}

yr2016 = { 'Qtr1' : 44900, 'Qtr2' : 46100, 'Qtr3' : 57000, 'Qtr4' : 59000}

yr2017 = { 'Qtr1' : 54500, 'Qtr2' : 51000, 'Qtr3' : 57000}

diSales = { 2015 : yr2015, 2016: yr2016, 2017 : yr2017}

df3 = pd.DataFrame(diSales)

print(df3)

Its output is as follows:

           2015   2016     2017
    Qtr1  34500  44900  54500.0
    Qtr2  56000  46100  51000.0
    Qtr3  47000  57000  57000.0
    Qtr4  49000  59000      NaN

NOTE : The total number of indexes in a DataFrame object is equal to the total number of unique inner keys of the 2D dictionary passed to it, and NaN values are used to fill missing data, i.e., where the corresponding value for a key is missing in any inner dictionary.

(c) Create a DataFrame from List of Dicts - List of Dictionaries can be passed as input data to

create a DataFrame. The dictionary keys are by default taken as column names.

Example 1

    data = [{'a': 1, 'b': 2}, {'a': 5, 'b': 10, 'c': 20}]
    df = pd.DataFrame(data)
    print(df)

       a   b     c
    0  1   2   NaN
    1  5  10  20.0

Note − Observe, NaN (Not a Number) is appended in missing areas.

Example 2

data = [{'a': 1, 'b': 2},{'a': 5, 'b': 10,

'c': 20}]

df = pd.DataFrame(data, index=['first',

'second'])

print(df)

            a   b     c
    first   1   2   NaN
    second  5  10  20.0

Example 3

import pandas as pd

data = [{'a': 1, 'b': 2},{'a': 5, 'b': 10, 'c': 20}]

#With two column indices, values same as dictionary keys

df1 = pd.DataFrame(data, index=['first', 'second'], columns=['a', 'b'])

#With two column indices with one index with other name

df2 = pd.DataFrame(data, index=['first', 'second'], columns=['a', 'b1'])

print(df1)

print(df2)

Its output is as follows −

#df1 output

            a   b
    first   1   2
    second  5  10

#df2 output

            a   b1
    first   1  NaN
    second  5  NaN

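2. Creating a DataFrame Object from a 1-D or 2-D ndarray – You can also pass a one-dimensional or two-dimensional NumPy array (the latter having shape (<rows>, <columns>)) to DataFrame() to create a dataframe object. The examples below use plain Python lists, which DataFrame() accepts in the same way.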

Example 1

data = [1,2,3,4,5]

df = pd.DataFrame(data)

print(df)

       0
    0  1
    1  2
    2  3
    3  4
    4  5

Example 2

data = [['Alex',10],['Bob',12],['Clarke',13]]

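    df = pd.DataFrame(data, columns=['Name', 'Age'])
    print(df)

         Name  Age
    0    Alex   10
    1     Bob   12
    2  Clarke   13

Example 3

    data = [['Alex', 10], ['Bob', 12], ['Clarke', 13]]
    # Convert only the Age column to float. Passing dtype=float to DataFrame()
    # applies to every column and may raise an error in recent pandas versions,
    # because the Name column holds strings.
    df = pd.DataFrame(data, columns=['Name', 'Age']).astype({'Age': float})
    print(df)

         Name   Age
    0    Alex  10.0
    1     Bob  12.0
    2  Clarke  13.0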

NOTE : By giving an index sequence, you can specify your own index names or labels.

If, however, the rows differ in length, i.e., if the number of elements in each row differs, NumPy cannot build a regular two-dimensional array. Older NumPy versions silently created a one-dimensional array of list objects, from which pandas creates a dataframe with just a single column of type object. NumPy 1.24 and later raise a ValueError unless you ask for this explicitly with dtype=object:

    narr3 = np.array([[101.5, 201.2], [400, 50, 600, 700], [212.3, 301.5, 405.2]], dtype=object)
    dtf4 = pd.DataFrame(narr3)

3. Creating a DataFrame object from a 2D dictionary with values as Series object

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b', 'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd'])}

df = pd.DataFrame(d)

print(df)

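       one  two
    a  1.0    1
    b  2.0    2
    c  3.0    3
    d  NaN    4

NOTE : A column name can be any hashable Python value (most commonly a string), but only names that are valid Python identifiers can be accessed with the dot notation, e.g., df.one.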

4. Creating a DataFrame Object from another DataFrame Object

data = [['Alex',10],['Bob',12],['Clarke',13]]

    df = pd.DataFrame(data, columns=['Name', 'Age'])
    df1 = pd.DataFrame(df)
    print(df1)

         Name  Age
    0    Alex   10
    1     Bob   12
    2  Clarke   13

DataFrame Attributes:

Getting count of non-NA values in dataframe - Like Series, you can use count() with a dataframe too to get the count of non-NaN values, but count() with a dataframe is a little more elaborate:

(i) If you do not pass any argument or pass 0 (the default is 0), it returns the count of non-NA values for each column.

(ii) If you pass the argument as 1, it returns the count of non-NA values for each row.

(iii) To get the count of non-NA values from rows/columns, you can explicitly specify the argument to count() as axis='index' or axis='columns'.
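The three cases of count() can be illustrated with a small made-up dataframe containing a few NaN values. In this sketch, axis=0 (or 'index') gives one count per column and axis=1 (or 'columns') gives one count per row.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(
    {
        "one": [1.0, np.nan, np.nan],
        "two": [4, 5, 6],
        "three": [np.nan, 8.0, np.nan],
    },
    index=["a", "b", "c"],
)

per_column = df.count()                 # default axis=0: one count per column
per_row = df.count(axis=1)              # one count per row
per_row_named = df.count(axis="columns")  # same as axis=1, spelled out

print(per_column)
print(per_row)
```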

Numpy Representation of DataFrame - You can represent the values of a dataframe object in

numpy way using values attribute. E.g.

data = [['Alex',10],['Bob',12],['Clarke',13]]

df = pd.DataFrame(data,columns=['Name','Age'])

print(df.values)

[['Alex' 10]

['Bob' 12]

['Clarke' 13]]

Selecting / Accessing a Column –

<DataFrame object>[ <column name>] or

<Data Frame object>.<column name>

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b', 'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd'])}

df = pd.DataFrame(d)

print(df['one'])

# or

print(df.one)

    a    1.0
    b    2.0
    c    3.0
    d    NaN
    Name: one, dtype: float64

Column Addition

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b',

'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a',

'b', 'c', 'd'])}

df = pd.DataFrame(d)

print ("Adding a new column by passing as Series:")

df['three']=pd.Series([10,20,30],index=['a','b','c'])

print(df)

    print("Adding a new column using the existing columns in DataFrame:")
    df['four'] = df['one'] + df['three']
    print(df)

Its output is as follows:

    Adding a new column by passing as Series:
       one  two  three
    a  1.0    1   10.0
    b  2.0    2   20.0
    c  3.0    3   30.0
    d  NaN    4    NaN
    Adding a new column using the existing columns in DataFrame:
       one  two  three  four
    a  1.0    1   10.0  11.0
    b  2.0    2   20.0  22.0
    c  3.0    3   30.0  33.0
    d  NaN    4    NaN   NaN

Column Deletion : Example

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b',

'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a',

'b', 'c', 'd']),

'three' : pd.Series([10,20,30],

index=['a','b','c'])}

df = pd.DataFrame(d)

print ("Our dataframe is:")

print(df)

    print("Deleting the first column using the del statement:")
    del df['one']      # del removes the column in place
    print(df)
    print("Deleting another column using the pop() function:")
    df.pop('two')      # pop() removes the column and returns it
    print(df)

Its output is as follows:

    Our dataframe is:
       one  two  three
    a  1.0    1   10.0
    b  2.0    2   20.0
    c  3.0    3   30.0
    d  NaN    4    NaN
    Deleting the first column using the del statement:
       two  three
    a    1   10.0
    b    2   20.0
    c    3   30.0
    d    4    NaN
    Deleting another column using the pop() function:
       three
    a   10.0
    b   20.0
    c   30.0
    d    NaN

Row Selection, Addition, and Deletion

Selection by Label - Rows can be selected by passing a row label to the loc indexer.

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b', 'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd'])}

df = pd.DataFrame(d)

print(df.loc['b'])

one 2.0

two 2.0

Name: b, dtype: float64

The result is a series with labels as column names of the DataFrame. And, the

Name of the series is the label with which it is retrieved.

Selection by integer location - Rows can be selected by passing an integer location to the iloc indexer.

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b', 'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd'])}

df = pd.DataFrame(d)

print(df.iloc[2])

    one    3.0
    two    3.0
    Name: c, dtype: float64

Slice Rows – Multiple rows can be selected using ‘ : ’ operator.

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b', 'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd'])}

df = pd.DataFrame(d)

print(df[2:4])

       one  two
    c  3.0    3
    d  NaN    4

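Addition of Rows - The rows of another dataframe can be added at the end with pd.concat(). (Older code uses df.append(df2) for this, but DataFrame.append() was deprecated in pandas 1.4 and removed in pandas 2.0.)

    df = pd.DataFrame([[1, 2], [3, 4]], columns=['a', 'b'])
    df2 = pd.DataFrame([[5, 6], [7, 8]], columns=['a', 'b'])
    df = pd.concat([df, df2])
    print(df)

       a  b
    0  1  2
    1  3  4
    0  5  6
    1  7  8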
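Because DataFrame.append() is no longer available in pandas 2.0 and later, the following sketch uses pd.concat() on the same two dataframes. It also shows the ignore_index parameter, which renumbers the rows instead of keeping the duplicated labels 0 and 1.

```python
import pandas as pd

df = pd.DataFrame([[1, 2], [3, 4]], columns=["a", "b"])
df2 = pd.DataFrame([[5, 6], [7, 8]], columns=["a", "b"])

# Rows of df2 are placed after the rows of df; original labels are kept.
stacked = pd.concat([df, df2])
print(stacked)

# ignore_index=True discards the old labels and numbers the rows 0..n-1.
renumbered = pd.concat([df, df2], ignore_index=True)
print(renumbered)
```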

Deletion of Rows - Use an index label to delete or drop rows from a DataFrame. If the label is duplicated, then multiple rows will be dropped. If you observe, in the above example the labels are duplicated. Let us drop a label and see how many rows get dropped.

    df = pd.DataFrame([[1, 2], [3, 4]], columns=['a', 'b'])
    df2 = pd.DataFrame([[5, 6], [7, 8]], columns=['a', 'b'])
    df = pd.concat([df, df2])   # replaces the removed df.append(df2)
    df = df.drop(0)
    print(df)

       a  b
    1  3  4
    1  7  8

Both rows labeled 0 were dropped.

Selecting/ Accessing Multiple Columns –

<Data Frame object>[ [<column name>, <column name>, <column name>, ... ] ]

import pandas as pd

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]

df = pd.DataFrame(data,columns=['Name','Age','City'])

print(df[['Name','City']])

         Name    City
    0    Alex  Jaipur
    1     Bob    Kota
    2  Clarke   Ajmer

NOTE : Columns appear in the order of column names given in the list inside square brackets.

Selecting / Accessing a Subset from a Dataframe using Row / Column Names –

<DataFrameObject>.loc [ <startrow> : <endrow>, <startcolumn> : <endcolumn>]

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]

df = pd.DataFrame(data,columns=['Name','Age','City'])

print(df.loc[0:1, : ])

       Name  Age    City
    0  Alex   10  Jaipur
    1   Bob   12    Kota

To access single row: <DataFrameObject> . loc [ <row> ]

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    print(df.loc[0])

    Name      Alex
    Age         10
    City    Jaipur
    Name: 0, dtype: object

To access multiple rows: <DataFrameObject> . loc [ <startrow> : <endrow>, : ]

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    print(df.loc[0:1])

       Name  Age    City
    0  Alex   10  Jaipur
    1   Bob   12    Kota

To access selective columns, use : <DF object>.loc[ : , <startcolumn> : <endcolumn>]

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    print(df.loc[:, 'Name':'Age'])

         Name  Age
    0    Alex   10
    1     Bob   12
    2  Clarke   13

To access a range of columns from a range of rows, use:

<DF object>.loc[<startrow> : <endrow>, <startcolumn> : <endcolumn>]

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    print(df.loc[0:1, 'Name':'Age'])

       Name  Age
    0  Alex   10
    1   Bob   12

Obtaining a Subset/Slice from a Dataframe using Row/Column Numeric Index/Position:

You can extract subset from dataframe using the row and column numeric index/position, but

this time you will use iloc instead of loc.

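NOTE : iloc means integer location.

<DFobject>.iloc[<start row index> : <end row index>, <start col index> : <end column index>]

Unlike loc, iloc excludes the end position, so 0:2 selects positions 0 and 1.

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data)   # no column names given, so the columns are labeled 0, 1, 2
    print(df.iloc[0:2, 0:2])

          0   1
    0  Alex  10
    1   Bob  12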
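The difference between the two indexers is easiest to see side by side. The sketch below selects the same 2 x 2 block twice from the dataframe used in these notes: once by labels with loc (end label included) and once by positions with iloc (end position excluded).

```python
import pandas as pd

data = [["Alex", 10, "Jaipur"], ["Bob", 12, "Kota"], ["Clarke", 13, "Ajmer"]]
df = pd.DataFrame(data, columns=["Name", "Age", "City"])

# Label based: rows 0 through 1 and columns 'Name' through 'Age', ends included.
by_label = df.loc[0:1, "Name":"Age"]

# Position based: rows 0-1 and columns 0-1; the stop position 2 is excluded.
by_position = df.iloc[0:2, 0:2]

print(by_label)
print(by_position)
```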

Selecting/Accessing Individual Value

(i) Give either the name of the row or its numeric index in square brackets after the column, i.e., as:

<DF object>.<column>[<row name or row numeric index>]

A slice in the brackets returns several values of the column at once:

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    print(df.Age[0:2])

    0    10
    1    12
    Name: Age, dtype: int64

ii) You can use at or iat attribute with DF object as shown below :

<DF object>.at[ <row name> , <Column name> ]

Or

<DF object>. iat[ <numeric Row index>, <numeric Column index> ]

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]

df = pd.DataFrame(data, columns=['Name','Age','City'])

print(df.at[0, 'Age'])

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]

df = pd.DataFrame(data)

print(df.iat[0, 0])

10

Alex

Assigning/Modifying Data Values in Dataframes:

(a) To change or add a column, use syntax :

<DF object >.<column name> [ <row label>] = <new value>

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    df.loc[0, 'Age'] = 11   # same effect as df.Age[0] = 11, without chained assignment
    print(df)

         Name  Age    City
    0    Alex   11  Jaipur
    1     Bob   12    Kota
    2  Clarke   13   Ajmer

(b) Similarly, to change or add a row, use syntax :

<DF object>.at[<row name>, <column name>] = <new value>

Or

<DF object>.loc[<row name>, : ] = <new value>

If the row name does not exist yet, a new row is added, and any column that receives no value is filled with NaN. The examples below name the column explicitly, because an integer slice such as 0:1 is not a valid selector for columns labeled with strings.

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    df.at[3, 'Name'] = 'NONAME'
    print(df)

    data = [['Alex', 10, 'Jaipur'], ['Bob', 12, 'Kota'], ['Clarke', 13, 'Ajmer']]
    df = pd.DataFrame(data, columns=['Name', 'Age', 'City'])
    df.loc[3, 'Name'] = 'NONAME'
    print(df)

Both print the same output:

         Name   Age    City
    0    Alex  10.0  Jaipur
    1     Bob  12.0    Kota
    2  Clarke  13.0   Ajmer
    3  NONAME   NaN     NaN

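(c) To change or modify a single data value, use syntax :

<DF object>.at[ <row name/label>, <column name> ] = <value>

The chained form <DF>.<columnname>[ <row name/label> ] = <value> appears in older material, but recent pandas versions may warn about it or leave the dataframe unchanged, so at or loc is the safer choice.

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]
df = pd.DataFrame(data, columns=['Name','Age','City'])
df.at[0, 'Age'] = 11
print(df)

     Name  Age    City
0    Alex   11  Jaipur
1     Bob   12    Kota
2  Clarke   13   Ajmer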

Adding Columns in DataFrames

<DF object>[ <column name> ] = <values for column>

Or

<DF object>.loc[ : , <column name> ] = <values for column>

Or

<DF object> = <DF object>.assign( <column name> = <values for column> )

(Older material also shows <DF object>.at[ : , <column name> ] for this purpose. The at attribute is meant for a single row label and a single column label, and recent pandas versions reject a slice there, so it is not used here.)

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]
df = pd.DataFrame(data, columns=['Name','Age','City'])
df['State'] = ['Raj','Raj','Raj']
print(df)

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]
df = pd.DataFrame(data, columns=['Name','Age','City'])
df.loc[:,'State'] = ['Raj','Raj','Raj']
print(df)

data = [['Alex',10,'Jaipur'],['Bob',12,'Kota'],['Clarke',13,'Ajmer']]
df = pd.DataFrame(data, columns=['Name','Age','City'])
df = df.assign(State=['Raj','Raj','Raj'])
print(df)

All three programs print the same result:

     Name  Age    City State
0    Alex   10  Jaipur   Raj
1     Bob   12    Kota   Raj
2  Clarke   13   Ajmer   Raj

NOTE : When you assign something to a column of dataframe, then for existing column, it will

change the data values and for non-existing column, it will add a new column.

Deleting Columns in DataFrames

del <DF object>[ <column name> ]

Or

<DF object>.drop( <column name or sequence of column names>, axis = 1 )

import pandas as pd

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b', 'c']),
     'two' : pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd']),
     'three' : pd.Series([10,20,30], index=['a','b','c'])}

df = pd.DataFrame(d)
print("Our dataframe is:")
print(df)

print("Deleting the first column using DEL function:")
del df['one']                  # using the del statement
print(df)

print("Deleting another column using Drop function:")
df = df.drop('two', axis=1)    # using the drop() function
print(df)

Our dataframe is:
   one  two  three
a  1.0    1   10.0
b  2.0    2   20.0
c  3.0    3   30.0
d  NaN    4    NaN
Deleting the first column using DEL function:
   two  three
a    1   10.0
b    2   20.0
c    3   30.0
d    4    NaN
Deleting another column using Drop function:
   three
a   10.0
b   20.0
c   30.0
d    NaN

To delete rows from a dataframe, you can use <DF>.drop( <index or sequence of indexes> ); the default value of axis is 0, which refers to rows.
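Dropping rows can be sketched as follows. This is a small illustrative example rather than the original author's code; the dataframe contents and the variable names one_dropped and two_dropped are assumptions.

```python
import pandas as pd

df = pd.DataFrame({'two': [1, 2, 3, 4],
                   'three': [10.0, 20.0, 30.0, None]},
                  index=['a', 'b', 'c', 'd'])

# axis=0 is the default, so a row label is enough
one_dropped = df.drop('d')

# a list of labels drops several rows at once
two_dropped = df.drop(['a', 'c'])

print(one_dropped)
print(two_dropped)
print(df.shape)   # drop() returns a new object; df itself is unchanged
```

Because drop() returns a new dataframe, assign the result back (df = df.drop(...)) when the change should persist.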

Attribute/Method    Description

T                   Transposes rows and columns.
axes                Returns a list with the row axis labels and column axis labels as the only members.
dtypes              Returns the dtypes in this object.
empty               True if NDFrame is entirely empty [no items]; if any of the axes are of length 0.
ndim                Number of axes / array dimensions.
shape               Returns a tuple representing the dimensionality of the DataFrame.
size                Number of elements in the NDFrame.
values              Numpy representation of NDFrame.
head()              Returns the first n rows.
tail()              Returns last n rows.

Examples

import pandas as pd
import numpy as np

#Create a Dictionary of series
d = {'Name':pd.Series(['Tom','James','Ricky','Vin','Steve','Smith','Jack']),
     'Age':pd.Series([25,26,25,23,30,29,23]),
     'Rating':pd.Series([4.23,3.24,3.98,2.56,3.20,4.6,3.8])}

#Create a DataFrame
df = pd.DataFrame(d)
print("Our data series is:")
print(df)

Our data series is:
   Age   Name  Rating
0   25    Tom    4.23
1   26  James    3.24
2   25  Ricky    3.98
3   23    Vin    2.56
4   30  Steve    3.20
5   29  Smith    4.60
6   23   Jack    3.80

T (Transpose) - Returns the transpose of the DataFrame. The rows and columns will interchange.

print("The transpose of the data series is:")
print(df.T)

The transpose of the data series is:
           0      1      2     3      4      5     6
Age       25     26     25    23     30     29    23
Name     Tom  James  Ricky   Vin  Steve  Smith  Jack
Rating  4.23   3.24   3.98  2.56    3.2    4.6   3.8

Axes - Returns the list of row axis labels and column axis labels.

print("Row axis labels and column axis labels are:")
print(df.axes)

Row axis labels and column axis labels are:
[RangeIndex(start=0, stop=7, step=1), Index(['Age', 'Name', 'Rating'], dtype='object')]

dtypes - Returns the data type of each column.

print("The data types of each column are:")
print(df.dtypes)

The data types of each column are:
Age         int64
Name       object
Rating    float64
dtype: object

Empty - Returns the Boolean value saying whether the Object is empty or not; True

indicates that the object is empty.

print("Is the object empty?")

print(df.empty)

Is the object empty?

False

Ndim - Returns the number of dimensions of the object. By definition, DataFrame is a 2D

object.

print("The dimension of the object is:")

print(df.ndim)

The dimension of the object is:

2

Shape - Returns a tuple representing the dimensionality of the DataFrame. Tuple (a,b), where a represents the number of rows and b represents the number of columns.

print("The shape of the object is:")
print(df.shape)

The shape of the object is:
(7, 3)

Size - Returns the number of elements in the DataFrame.

print("The total number of elements in our object is:")
print(df.size)

The total number of elements in our object is:
21

Values - Returns the actual data in the DataFrame as a NumPy ndarray.

print("The actual data in our data frame is:")

print(df.values)

The actual data in our data frame is:

[[25 'Tom' 4.23]

[26 'James' 3.24]

[25 'Ricky' 3.98]

[23 'Vin' 2.56]

[30 'Steve' 3.2]

[29 'Smith' 4.6]

[23 'Jack' 3.8]]

Head & Tail - To view a small sample of a DataFrame object, use the head() and tail() methods. head() returns the first n rows (observe the index values). The default number of rows to display is five, but you may pass a custom number.

print("The first two rows of the data frame is:")
print(df.head(2))

The first two rows of the data frame is:
   Age   Name  Rating
0   25    Tom    4.23
1   26  James    3.24

tail() returns the last n rows (observe the index values). The default number of rows to display is five, but you may pass a custom number.

print("The last two rows of the data frame is:")
print(df.tail(2))

The last two rows of the data frame is:
   Age   Name  Rating
5   29  Smith     4.6
6   23   Jack     3.8

Iterating over a DataFrame

<DFobject>.iterrows( ) - The iterrows() method iterates over a dataframe row-wise, where each horizontal subset is in the form of (row-index, Series) and the Series contains all column values for that row-index.

<DFobject>.items( ) - The items() method iterates over a dataframe column-wise, where each vertical subset is in the form of (col-index, Series) and the Series contains all row values for that column-index. Older pandas versions called this method iteritems(); that name was removed in pandas 2.0.

NOTE : <DF>.items( ) iterates over vertical subsets in the form of (col-index, Series) pairs and <DF>.iterrows( ) iterates over horizontal subsets in the form of (row-index, Series) pairs.

for (row, rowSeries) in df1.iterrows():

Each row is taken one at a time in the form of (row, rowSeries), where row stores the row-index and rowSeries stores all the values of the row in the form of a Series object.

for (col, colSeries) in df1.items():

Each column is taken one at a time in the form of (col, colSeries), where col stores the column-index and colSeries stores all the values of the column in the form of a Series object. (In pandas versions before 2.0 this method was called iteritems().)

To iterate over the columns or rows of the DataFrame, we can use the following functions −

• items() − to iterate over the (key, value) pairs, one per column (iteritems() in pandas versions before 2.0)

• iterrows() − to iterate over the rows as (index, series) pairs

items() - Iterates over each column as a key, value pair with the label as key and the column values as a Series object.

df = pd.DataFrame(np.arange(1,13).reshape(4,3), columns=['col1','col2','col3'])

for key, value in df.items():    # iteritems() in pandas versions before 2.0
    print(key, value)

col1 0     1
1     4
2     7
3    10
Name: col1, dtype: int32
col2 0     2
1     5
2     8
3    11
Name: col2, dtype: int32
col3 0     3
1     6
2     9
3    12
Name: col3, dtype: int32

Observe, each column is iterated separately as a key-value pair in a Series.

iterrows() - iterrows() returns an iterator yielding each index value along with a Series containing the data in each row.

df = pd.DataFrame(np.arange(1,13).reshape(4,3), columns=['col1','col2','col3'])

for key, value in df.iterrows():
    print(key, value)

0 col1    1
col2    2
col3    3
Name: 0, dtype: int32
1 col1    4
col2    5
col3    6
Name: 1, dtype: int32
2 col1    7
col2    8
col3    9
Name: 2, dtype: int32
3 col1    10
col2    11
col3    12
Name: 3, dtype: int32

Note − Because iterrows() iterate over the rows, it doesn't preserve the data type across the

row. 0,1,2 are the row indices and col1,col2,col3 are column indices.

Note − Do not try to modify any object while iterating. Iterating is meant for reading, and the iterator returns a copy of the original data, so changes made to it will not be reflected in the original object.

df = pd.DataFrame(np.arange(1,13).reshape(4,3), columns=['col1','col2','col3'])

for index, row in df.iterrows():
    row['a'] = 10

print(df)

   col1  col2  col3
0     1     2     3
1     4     5     6
2     7     8     9
3    10    11    12
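Since changes made to the row copies are lost, a change is normally applied to the dataframe itself. The sketch below shows one possible way, under the assumption that the goal was to add a column 'a' with the value 10 and to change one cell; it is an illustration, not the original author's code.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.arange(1, 13).reshape(4, 3),
                  columns=['col1', 'col2', 'col3'])

# Assigning to a column works on the whole dataframe in one step
df['a'] = 10

# loc changes a single cell of the original dataframe
df.loc[0, 'col1'] = 100

print(df)
```

Unlike the loop above, both assignments act directly on df, so the printed dataframe shows the new column and the changed cell.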

Binary Operations in a DataFrame : Binary operations are operations that require two values, and these values are picked elementwise. In a binary operation, the data from the two dataframes are aligned on the basis of their row and column indexes; for each matching row and column index the given operation is performed, and for each non-matching row or column index a NaN value is stored in the result. So, as with Series objects, arithmetic on two dataframes first aligns the data on matching row and column indexes and yields NaN for non-overlapping indexes.

You can perform add binary operation on two dataframe objects using either + operator or using

add( ) as per syntax: <DF1>.add(<DF2>) which means <DF1>+<DF2> or by using radd( ) i.e.,

reverse add as per syntax : <DF1>.radd(<DF2>) which means <DF2>+<DF1>.

You can perform subtract binary operation on two dataframe objects using either - (minus) operator or using sub() as per syntax: <DF1>.sub(<DF2>) which means <DF1> - <DF2> or by using rsub( ) i.e., reverse subtract as per syntax : <DF1>.rsub(<DF2>) which means <DF2> - <DF1>.

You can perform multiply binary operation on two dataframe objects using either * operator or

using mul() as per syntax: <DF>.mul(<DF>).

You can perform division binary operation on two dataframe objects using either / operator or

using div( ) as per syntax : <DF>.div(<DF>).

NOTE : The default integer datatypes cannot store NaN values. To store a NaN value in a column, the datatype of the column is changed to a suitable non-integer type (usually float64).

NOTE : If you are performing subtraction on two dataframes, make sure the data types of values

are subtraction compatible (e.g., you cannot subtract two strings) otherwise Python will raise an

error.
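The alignment rule and the reverse methods described above can be sketched as follows. The two small dataframes and the variable names are assumptions made for this illustration; only one cell (row 1, column 'a') exists in both operands.

```python
import pandas as pd

df1 = pd.DataFrame({'a': [1, 2], 'b': [3, 4]}, index=[0, 1])
df2 = pd.DataFrame({'a': [10, 20], 'c': [30, 40]}, index=[1, 2])

total = df1.add(df2)     # same as df1 + df2
diff = df1.sub(df2)      # df1 - df2
rdiff = df1.rsub(df2)    # df2 - df1

print(total)             # NaN everywhere except row 1, column 'a'
print(diff.loc[1, 'a'], rdiff.loc[1, 'a'])
```

The result covers the union of the row and column labels; every position that is missing from either operand holds NaN, which is also why the result columns become floating-point.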

Some Other Essential Functions:

1. Inspection functions info( ) and describe( ) : To inspect broadly or to get basic information

about your dataframe object, you can use info() and describe( ) functions.

<DF>.info() - The info( ) gives following information for a dataframe object

¢) Its type. Obviously, it is an instance of a DataFrame.

¢) Index values. As each row of a dataframe object has an index, this information shows the assigned indexes.

¢) Number of rows in the dataframe object.

¢) Data columns and values in them. It lists number of columns and count of only non-NA

values in them.

¢) Datatypes of each column. The listed datatypes are not necessarily in the

corresponding order to the listed columns. You can however use the dtypes attribute to get

the datatype for each column.

¢) Memory_usage. Approximate amount of RAM used to hold the DataFrame.

<DF>.describe() - The describe( ) gives following information for a dataframe object having

numeric columns:

¢) Count. Count of non-NA values in a column

¢) Mean. Computed mean of values in a column

¢) Std. Standard deviation of values in a column

¢) Min. Minimum value in a column

¢) 25%, 50%, 75%. Percentiles of values in that column (how these percentiles are calculated is explained below).

¢) Max. Maximum value in a column

The information returned by describe( ) for string columns includes:

¢) Count -the number of non-NA entries in the column

¢) Unique -number of unique entries in the column


¢) Top - the most common entry in the column, i.e., the one with highest frequency. If,

however, multiple values have the same highest count, then the count and most common

(i.e., top) pair will be arbitrarily chosen from among those with the highest count.

¢) Freq - it is the frequency of the most common element displayed as top above.

The default behavior of describe( ) is to only provide a summary for the numerical columns. You

can give include= 'all' as argument to describe( ) to list summary for all columns.

d = {'Name':pd.Series(['Tom','James','Ricky','Vin']),
     'Age':pd.Series([25,26,25,23]),
     'Rating':pd.Series([2.98,4.80,4.10,3.65])}
df = pd.DataFrame(d)
print(df.describe())

             Age    Rating
count   4.000000  4.000000
mean   24.750000  3.882500
std     1.258306  0.765436
min    23.000000  2.980000
25%    24.500000  3.482500
50%    25.000000  3.875000
75%    25.250000  4.275000
max    26.000000  4.800000

d = {'Name':pd.Series(['Tom','James','Ricky','Vin']),
     'Age':pd.Series([25,26,25,23]),
     'Rating':pd.Series([2.98,4.80,4.10,3.65])}
df = pd.DataFrame(d)
print(df.describe(include='all'))

       Name        Age    Rating
count     4   4.000000  4.000000
unique    4        NaN       NaN
top     Tom        NaN       NaN
freq      1        NaN       NaN
mean    NaN  24.750000  3.882500
std     NaN   1.258306  0.765436
min     NaN  23.000000  2.980000
25%     NaN  24.500000  3.482500
50%     NaN  25.000000  3.875000
75%     NaN  25.250000  4.275000
max     NaN  26.000000  4.800000

d = {'Name':pd.Series(['Tom','James','Ricky','Vin']),
     'Age':pd.Series([25,26,25,23]),
     'Rating':pd.Series([2.98,4.80,4.10,3.65])}
df = pd.DataFrame(d)
print(df.info())

<class 'pandas.core.frame.DataFrame'>
RangeIndex: 4 entries, 0 to 3
Data columns (total 3 columns):
Name      4 non-null object
Age       4 non-null int64
Rating    4 non-null float64
dtypes: float64(1), int64(1), object(1)
memory usage: 120.0+ bytes
None

The describe() function gives the count, mean, std and quartile (IQR) values. By default it excludes the character columns and gives a summary of the numeric columns only. 'include' is the argument used to specify which columns are to be considered for summarizing. It takes a list of values; by default, 'number'.

• object − Summarizes String columns

• number − Summarizes Numeric columns

• all − Summarizes all columns together (pass it as the string 'all', not as a list value)
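The include options listed above might be used as in this sketch. The Name column is created with dtype=object explicitly, which is an assumption made so that the 'object' selector matches it regardless of how a given pandas version stores strings by default; the variable names are also illustrative.

```python
import pandas as pd

df = pd.DataFrame({
    'Name': pd.Series(['Tom', 'James', 'Ricky', 'Vin'], dtype=object),
    'Age': [25, 26, 25, 23],
    'Rating': [2.98, 4.80, 4.10, 3.65],
})

text_summary = df.describe(include=['object'])     # string columns only
number_summary = df.describe(include=['number'])   # numeric columns only

print(text_summary)
print(number_summary)
```

The string summary has the rows count, unique, top and freq, while the numeric summary has the rows count, mean, std, min, the percentiles and max.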

2. Retrieve head and tail rows using head( ) and tail( ) - You can use head() and tail() to

retrieve ‘N’ top or ‘N’ bottom rows respectively of a dataframe object. These functions are to be

used as : <DF>.head( [ n = 5 ] ) or

<DF>.tail( [ n = 5 ] )

You can give any value of ‘N’ as per your need (as many rows you want to list).

3. Cumulative Calculations Functions : You can use these functions for cumulative calculations on dataframe objects : cumsum( ) calculates the cumulative sum, i.e., in the output of this function, the value of each row is replaced by the sum of all prior rows including this row. String value rows use concatenation. It is used as : <DF>.cumsum( [axis = None] )

df = pd.DataFrame([[25,26],[25,23]])
print(df.cumsum())

    0   1
0  25  26
1  50  49

In the same manner you can use cumprod( ) to get cumulative product, cummax( ) to get

cumulative maximum and cummin( ) to get cumulative minimum value from a dataframe object.
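The other cumulative functions follow the same pattern as cumsum( ). A minimal sketch, with an assumed two-column dataframe and illustrative variable names:

```python
import pandas as pd

df = pd.DataFrame({'x': [2, 5, 3], 'y': [4, 1, 6]})

running_product = df.cumprod()     # x: 2, 10, 30   y: 4, 4, 24
running_max = df.cummax()          # x: 2, 5, 5     y: 4, 4, 6
running_min = df.cummin()          # x: 2, 2, 2     y: 4, 1, 1
row_wise_sum = df.cumsum(axis=1)   # accumulates across the columns of each row

print(running_product)
print(running_max)
print(running_min)
print(row_wise_sum)
```

With axis=1 the accumulation runs from left to right within each row instead of down each column.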

4. Index of Maximum and Minimum Values - You can get the index of maximum and minimum

value in columns using idxmax( ) and idxmin( ) functions. <DF>.idxmax() or <DF>.idxmin( )

df = pd.DataFrame([[52,26,54],[25,72,78],[25,2,82]])
print(df.idxmax())
print(df.idxmin())

0    0
1    1
2    2
dtype: int64
0    1
1    2
2    0
dtype: int64

Python Pandas – Sorting - There are two kinds of sorting available in Pandas. They are −

1. By label

2. By Actual Value

By Label - Using the sort_index() method, by passing the axis arguments and the

order of sorting, DataFrame can be sorted. By default, sorting is done on row labels

in ascending order.

df=pd.DataFrame([[34,23],[76,43],[76,34],[78,99]], index=[1,4,6,2], columns=['col2','col1'])
print(df)
sortdf=df.sort_index()
print(sortdf)

   col2  col1
1    34    23
4    76    43
6    76    34
2    78    99
   col2  col1
1    34    23
2    78    99
4    76    43
6    76    34

Order of Sorting - By passing the Boolean value to ascending parameter, the order of the

sorting can be controlled. Let us consider the following example to understand the same.

sortdf=df.sort_index(ascending=False)
print(sortdf)

   col2  col1
6    76    34
4    76    43
2    78    99
1    34    23

Sort the Columns - By passing the axis argument with the value 1, the sorting can be done on the column labels. By default, axis=0, which sorts by row labels. Let us consider the following example to understand the same.

sortdf=df.sort_index(axis=1)
print(sortdf)

   col1  col2
1    23    34
4    43    76
6    34    76
2    99    78

By Value - Like index sorting, sort_values() is the method for sorting by values. It accepts a 'by' argument, which takes the name of the column (or a list of column names) by which the values are to be sorted.

df=pd.DataFrame({'name':['aman','raman','lucky','pawan'],
                 'city':['ajmer','ludhiana','jaipur','jalandhar'],
                 'state':['raj','pb','raj','pb']})
print(df)
sortdf = df.sort_values(by='state')
print(sortdf)
sortdf = df.sort_values(by=['state','city'])
print(sortdf)

    name       city state
0   aman      ajmer   raj
1  raman   ludhiana    pb
2  lucky     jaipur   raj
3  pawan  jalandhar    pb
    name       city state
1  raman   ludhiana    pb
3  pawan  jalandhar    pb
0   aman      ajmer   raj
2  lucky     jaipur   raj
    name       city state
3  pawan  jalandhar    pb
1  raman   ludhiana    pb
0   aman      ajmer   raj
2  lucky     jaipur   raj

Matching and Broadcasting Operations - You have read earlier that when you perform arithmetic operations on two Series type objects, the data is aligned on the basis of matching indexes and then the arithmetic is performed; for non-overlapping indexes, the arithmetic operations result in NaN (Not a Number). This is called Data Alignment in pandas objects. While performing arithmetic operations on dataframes, the same thing happens, i.e., whenever you add two dataframes, data is aligned on the basis of matching indexes and then the arithmetic is performed; for non-overlapping indexes, the result is NaN. This default behaviour of data alignment on the basis of matching indexes is called MATCHING.

While performing arithmetic operations, enlarging the smaller object operand by replicating its elements so as to match the shape of the larger object operand is called BROADCASTING.

<DF>.add( <DF>, axis = 'rows' )

<DF>.div( <DF>, axis = 'rows' )

<DF>.rdiv( <DF>, axis = 'rows' )

<DF>.mul( <DF>, axis = 'rows' )

<DF>.rsub( <DF>, axis = 'rows' )

You can specify the matching axis for these operations (by default, i.e., when you do not give the axis argument, matching is on columns). The axis argument matters when the other operand is a Series, whose index is then matched against either the row labels or the column labels.
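The effect of the matching axis can be sketched with a dataframe and two Series. The labels, values and variable names below are assumptions for illustration, and the example writes the row axis as axis='index', the spelling used in the pandas documentation.

```python
import pandas as pd

df = pd.DataFrame({'a': [10, 20], 'b': [5, 15]}, index=['r1', 'r2'])
row_offsets = pd.Series([1, 2], index=['r1', 'r2'])
col_offsets = pd.Series([100, 200], index=['a', 'b'])

# Series index is matched against the row labels
by_rows = df.add(row_offsets, axis='index')

# Default: Series index is matched against the column labels
by_cols = df.add(col_offsets)

print(by_rows)
print(by_cols)
```

In by_rows each row receives its own offset (1 for r1, 2 for r2); in by_cols each column receives its own offset (100 for a, 200 for b).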

Broadcasting using a scalar value

s = pd.Series(np.arange(5))
print(s * 10)

0     0
1    10
2    20
3    30
4    40
dtype: int32

df = pd.DataFrame({'a':[10,20], 'b':[5,15]})
print(df*10)

     a    b
0  100   50
1  200  150

So what is technically happening here is that the scalar value has been broadcasted along the same

dimensions of the Series and DataFrame above.

Broadcasting using a 1-D array - Say we have a 2-D dataframe of shape 2x2 (2 rows, 2 columns); we can perform an operation along each row by using a 1-D Series whose length is the same as the row length:

df = pd.DataFrame( { 'a' : [ 10, 20 ], 'b' : [ 5, 15 ] } )
print(df)
print(df.iloc[0])
print(df + df.iloc[0])

Output:

    a   b
0  10   5
1  20  15
a    10
b     5
Name: 0, dtype: int64
    a   b
0  20  10
1  30  20

The general rule is this: In order to broadcast, the size of the trailing axes for both arrays in an operation

must either be the same size or one of them must be one.

So if I tried to add an object whose labels do not match, say a one-element dataframe, then unlike numpy, which raises a ValueError for incompatible shapes, Pandas returns a df full of NaN values:

dt = pd.DataFrame([1])
print(df+dt)

Output:

    a   b   0
0 NaN NaN NaN
1 NaN NaN NaN

Now, one of the great things about pandas is that it will try to align using existing column names and row labels, but this can get in the way of trying to perform a fancier broadcasting like this:

print(df[['a']] + df.iloc[0])

    a   b
0  20 NaN
1  30 NaN

In the above we can see a problem when trying to broadcast using the first row, as the column alignment only aligns on the first column. To get NumPy-style broadcasting here, we have to decompose to numpy arrays, which then become anonymous data:

print( df[['a']].values + df.iloc[0].values )

[[20 15]
 [30 25]]

Generally speaking the thing to remember is that aside from scalar values which are simple, for n-D

arrays the minor/trailing axes length must match or one of them must be 1.

Handling Missing Data : Missing values are values that cannot contribute to any computation, i.e., values that carry no computational significance. The Pandas library is designed to deal with huge amounts of data. In such volumes of data, some values may be NA values such as NULL, NaN or None. These values cannot participate in computation constructively and are known as missing values. You can handle missing data in many ways; the most common ones are:

(i) Dropping missing data

(ii) Filling missing data (Imputation)

You can use the isnull() and notnull() functions to detect missing values in a pandas object; they return True or False for each value, depending on whether it is a missing value or not. They can be used as :

<PandasObject>.isnull()

<PandasObject>.notnull()

<PandasObject> means it is applicable to both Series and Dataframe objects.

df = pd.DataFrame(np.arange(0,12).reshape(4,3), index=['a', 'c', 'e', 'f'], columns=['one', 'two', 'three'])
df = df.reindex(['a', 'b', 'c', 'd', 'e', 'f'])
print(df['one'].isnull())
print("-------------------")
print(df['one'].notnull())

a    False
b     True
c    False
d     True
e    False
f    False
Name: one, dtype: bool
-------------------
a     True
b    False
c     True
d    False
e     True
f     True
Name: one, dtype: bool

Calculations with Missing Data

• When summing data, NA will be treated as Zero

• If the data are all NA, then the result will be Zero

df = pd.DataFrame(np.arange(0,12).reshape(4,3), index=['a', 'c', 'e', 'f'], columns=['one', 'two', 'three'])
df = df.reindex(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h'])
print(df['one'].sum())

18.0

df = pd.DataFrame(index=[0,1,2,3,4,5], columns=['one','two'])
print(df['one'].sum())

0

Handling Missing Data : Dropping Missing Values – To drop missing values you can use dropna( ) in the following three ways :

(a) <PandasObject>.dropna( ). This will drop all the rows that have NaN values in them, even a row with a single NaN value in it.

(b) <PandasObject>.dropna( how='all' ). With argument how='all', it will drop only those rows that have all NaN values, i.e., no value is non-null in those rows.

(c) <PandasObject>.dropna( axis = 1 ). With argument axis=1, it will drop columns that have any NaN values in them. Using argument how='all' along with argument axis=1 will drop only the columns with all NaN values.

df = pd.DataFrame(np.arange(0,12).reshape(4,3), index=['a', 'c', 'e', 'f'], columns=['one', 'two', 'three'])
df = df.reindex(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h'])
print(df.dropna())

   one   two  three
a  0.0   1.0    2.0
c  3.0   4.0    5.0
e  6.0   7.0    8.0
f  9.0  10.0   11.0

df = pd.DataFrame(np.arange(0,12).reshape(4,3), index=['a', 'c', 'e', 'f'], columns=['one', 'two', 'three'])
df = df.reindex(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h'])
print(df.dropna(axis=1))

Empty DataFrame
Columns: []
Index: [a, b, c, d, e, f, g, h]
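The how='all' variants are not covered by the two programs above; a minimal sketch is given here. The dataframe is an assumption built so that exactly one row and exactly one column are entirely NaN, and the variable names are illustrative.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({'one': [1.0, np.nan, np.nan],
                   'two': [2.0, 5.0, np.nan],
                   'three': [np.nan, np.nan, np.nan]},
                  index=['a', 'b', 'c'])

# Drops only row 'c', the one row where every value is NaN
rows_all_nan_removed = df.dropna(how='all')

# Drops only column 'three', the one column where every value is NaN
cols_all_nan_removed = df.dropna(axis=1, how='all')

print(rows_all_nan_removed)
print(cols_all_nan_removed)
print(df.dropna().empty)   # every row has at least one NaN, so nothing is left
```

Compared with plain dropna( ), how='all' keeps rows or columns that are only partly missing, which usually loses far less data.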

Handling Missing Data : Filling Missing Values - Although dropna( ) removes the null values, you also lose other non-null data along with them. To avoid this, you may want to fill the missing data with some appropriate value of your choice. For this purpose you can use fillna( ) in the following ways:

(a) <PandaObject>.fillna(<n>). This will fill all NaN values with the given <n> value.

(b) Using dictionary with fillna() to specify fill values for each column separately. You can create

a dictionary that defines fill values for each of the columns. And then you can pass this dictionary

as an argument to fillna( ), Pandas will fill the specified value for each column defined in the

dictionary. It will leave those columns untouched or unchanged that are not in the dictionary. The

syntax of fillna( ) is : <DF>.fillna( <dictionary having fill values for columns> )

df = pd.DataFrame(np.arange(0,12).reshape(4,3), index=['a', 'c', 'e', 'f'], columns=['one', 'two', 'three'])
df = df.reindex(['a', 'b', 'c', 'd', 'e', 'f'])
print(df.fillna(-1))
print("--------------------")
print(df.fillna({'one':-1, 'two':-2,'three':-3}))

   one   two  three
a  0.0   1.0    2.0
b -1.0  -1.0   -1.0
c  3.0   4.0    5.0
d -1.0  -1.0   -1.0
e  6.0   7.0    8.0
f  9.0  10.0   11.0
--------------------
   one   two  three
a  0.0   1.0    2.0
b -1.0  -2.0   -3.0
c  3.0   4.0    5.0
d -1.0  -2.0   -3.0
e  6.0   7.0    8.0
f  9.0  10.0   11.0

Missing data / operations with fill values

In Series and DataFrame, the arithmetic functions have the option of inputting a fill_value, namely a value

to substitute when at most one of the values at a location are missing. For example, when adding two

DataFrame objects, you may wish to treat NaN as 0 unless both DataFrames are missing that value, in

which case the result will be NaN.

df1 = pd.DataFrame([[10,20,np.nan], [np.nan,30,40]], index=['A','B'], columns=['One', 'Two', 'Three'])
df2 = pd.DataFrame([[np.nan,20,30], [50,40,30]], index=['A','B'], columns=['One', 'Two', 'Three'])
print(df1)
print(df2)

    One  Two  Three
A  10.0   20    NaN
B   NaN   30   40.0
    One  Two  Three
A   NaN   20     30
B  50.0   40     30

print(df1+df2)
print(df1.add(df2, fill_value=0))

   One  Two  Three
A  NaN   40    NaN
B  NaN   70   70.0
    One  Two  Three
A  10.0   40   30.0
B  50.0   70   70.0

Flexible Comparisons

Series and DataFrame have the binary comparison methods eq, ne, lt, gt, le, and ge whose behavior is

analogous to the binary arithmetic operations described above:

print(df1.gt(df2))

     One    Two  Three
A  False  False  False
B  False  False   True

print(df2.ne(df1))

    One    Two  Three
A  True  False   True
B  True   True   True

NOTE : eq = equal to, ne = not equal, lt = less than, gt = greater than, le = less than equal to, ge = greater than equal to

Comparisons of Pandas Objects – Normal comparison operators ( == ) do not produce an accurate result when comparing two similar objects that contain NaN values, because NaN is never equal to NaN. To compare objects having NaN values, it is better to use equals( ), which treats two NaN values in the same location as equal:

<expression 1 yielding a Pandas object>.equals( <expression 2 yielding a Pandas object> )

NOTE : The equals( ) tests two objects for equality, with NaNs in corresponding locations treated as equal.

NOTE : Series or DataFrame indexes of two objects need to be the same and in the same order for equality to be True. Also, two pandas objects being compared should be of the same length. Trying to compare two dataframes with a different number of rows, or two series objects with different lengths, using == will result in a ValueError.
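The difference between == and equals( ) can be sketched as below. The dataframe and variable names are assumptions for illustration; df2 is an exact copy of df1, so any reported inequality comes only from the NaN value.

```python
import numpy as np
import pandas as pd

df1 = pd.DataFrame({'One': [10, np.nan], 'Two': [20, 30]})
df2 = df1.copy()

# Elementwise comparison: NaN == NaN evaluates to False
elementwise = (df1 == df2)
all_equal_operator = bool(elementwise.all().all())

# equals() treats NaNs in the same location as equal
all_equal_method = df1.equals(df2)

print(elementwise)
print(all_equal_operator, all_equal_method)
```

Here the operator-based check reports a difference even though the two objects hold identical data, while equals( ) reports them as equal.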

Boolean Reductions - Pandas offers Boolean reductions that summarize the Boolean result for an axis. That is, with Boolean reduction, you can get the overall result of a row or column with a single True or False. Boolean reductions are a way to summarize all comparison results of a dataframe's individual elements in the form of a single overall Boolean result per column or per row. For this purpose Pandas offers the following Boolean reduction functions or attributes.

¢) empty. This attribute indicates whether a DataFrame is empty. It is used as: <DF>.empty

¢) any( ). This function returns True if any element is True over the requested axis. By default, it checks each column (default axis is 0), i.e., if any of the values along the specified axis is True, it returns True. It is used as per following syntax : <DataFrame comparison result object>.any( axis = None )

¢) all( ). Unlike any( ), all( ) returns True only if all the values on an axis are True according to the given comparison, and False otherwise. It is used as per syntax :

<DataFrame comparison result object>.all( axis = None )

You can apply the reductions empty, any() and all() to summarize a boolean result (older pandas versions also provided bool()).

df1 = pd.DataFrame([[10,20,np.nan], [np.nan,30,40]], index=['A','B'], columns=['One', 'Two', 'Three'])
df2 = pd.DataFrame([[np.nan,20,30], [50,40,30]], index=['A','B'], columns=['One', 'Two', 'Three'])
print((df1 > 0).all())

One      False
Two       True
Three    False
dtype: bool

print((df1 > 0).any())

One      True
Two      True
Three    True
dtype: bool

You can reduce to a final boolean value

print((df1 > 0).any().any())

True

You can test if a pandas object is empty, via the empty property.

print(df1.empty)

False
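The same reductions can be taken per row by passing axis=1. The sketch below reuses the df1 values from the example above; the variable names are assumptions for illustration.

```python
import numpy as np
import pandas as pd

df1 = pd.DataFrame([[10, 20, np.nan], [np.nan, 30, 40]],
                   index=['A', 'B'], columns=['One', 'Two', 'Three'])

positive = df1 > 0                    # NaN > 0 evaluates to False

per_column_all = positive.all()       # axis=0: one result per column
per_row_any = positive.any(axis=1)    # axis=1: one result per row
per_row_all = positive.all(axis=1)

print(per_column_all)
print(per_row_any)
print(per_row_all)
```

Each row contains one NaN, so all(axis=1) is False for both rows, while any(axis=1) is True for both.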

Combining DataFrames

combine_first( ) - The combine_first( ) combines two dataframes by patching the data. Patching the data means that if a certain cell in a dataframe has missing data and the corresponding cell (the one with the same index and column id) in the other dataframe has some valid data, then this method will pick the valid data from the second dataframe and patch it into the first dataframe, so that it now also has valid data at that cell.

The combine_first() is used as per syntax :

<DF>.combine_first(<DF2>)

df1 = pd.DataFrame({'A': 10, 'B': 20, 'C':np.nan, 'D':np.nan}, index=[0])
df2 = pd.DataFrame({'B': 30, 'C': 40, 'D': 50}, index=[0])
df1=df1.combine_first(df2)
print("\n------------ combine_first ----------------\n")
print(df1)

------------ combine_first ----------------

    A   B     C     D
0  10  20  40.0  50.0

The concat( ) can concatenate two dataframes along the rows or along the columns. This method is useful if the two dataframes have similar structures. It is used as per following syntax :

pd.concat( [<df1>, <df2>] )

pd.concat( [<df1>, <df2>], ignore_index = True )

pd.concat( [<df1>, <df2>], axis = 1 )

If you skip the axis = 1 argument, it will join the two dataframes along the rows, i.e., the result will be the union of rows from both the dataframes, with each row keeping its original row index.

If you do not want the original row indexes to be kept and want new row indexes generated from 0 to n - 1, then you can give the argument ignore_index = True.

By default it concatenates along the rows; to concatenate along the columns, you can give the argument axis = 1.
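The three call forms can be compared on two small dataframes. This is an illustrative sketch; the data and the variable names stacked, renumbered and side_by_side are assumptions.

```python
import pandas as pd

df1 = pd.DataFrame({'Name': ['Alex', 'Amy'], 'Score': [98, 90]})
df2 = pd.DataFrame({'Name': ['Billy', 'Brian'], 'Score': [89, 80]})

stacked = pd.concat([df1, df2])                        # row indexes 0, 1, 0, 1
renumbered = pd.concat([df1, df2], ignore_index=True)  # row indexes 0, 1, 2, 3
side_by_side = pd.concat([df1, df2], axis=1)           # columns placed next to each other

print(stacked)
print(renumbered)
print(side_by_side)
```

Without ignore_index the row labels 0 and 1 each appear twice in the result, which is often the reason for asking for a fresh 0 to n - 1 index.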

Pandas provides various facilities for easily combining together Series and DataFrame objects.

pd.concat(objs, axis=0, join='outer', ignore_index=False)

• objs − This is a sequence or mapping of Series or DataFrame objects.

• axis − {0, 1}, default 0. This is the axis to concatenate along.

• join − {‘inner’, ‘outer’}, default ‘outer’. How to handle indexes on the other axis. Outer for union and inner for intersection.

• ignore_index − boolean, default False. If True, do not use the index values on the concatenation axis. The resulting axis will be labeled 0, ..., n - 1.

Older pandas versions also accepted Panel objects and a join_axes argument (a list of Index objects to use for the other axes instead of performing inner/outer set logic); both have been removed from recent versions.

Concatenating Objects - The concat function does all of the heavy lifting of performing

concatenation operations along an axis. Let us create different objects and do concatenation.

one = pd.DataFrame({'Name': ['Alex', 'Amy', 'Allen'],
                    'sub_id':['sub1','sub2','sub4'],
                    'Score':[98,90,87]}, index=[1,2,3])
two = pd.DataFrame({'Name': ['Billy', 'Brian', 'Bran'],
                    'sub_id':['sub2','sub4','sub3'],
                    'Score':[89,80,79]}, index=[1,2,3])
print(pd.concat([one,two]))

    Name  Score sub_id
1   Alex     98   sub1
2    Amy     90   sub2
3  Allen     87   sub4
1  Billy     89   sub2
2  Brian     80   sub4
3   Bran     79   sub3

Suppose we wanted to associate specific keys with each of the pieces of the

chopped up DataFrame. We can do this by using the keys argument −

one = pd.DataFrame({'Name': ['Alex', 'Amy', 'Allen'],
                    'sub_id':['sub1','sub2','sub4'],
                    'Score':[98,90,87]}, index=[1,2,3])
two = pd.DataFrame({'Name': ['Billy', 'Brian', 'Bran'],
                    'sub_id':['sub2','sub4','sub3'],
                    'Score':[89,80,79]}, index=[1,2,3])
print(pd.concat([one,two],keys=['x','y']))

x 1

2

3

y 1

2

3

Name

Alex

Amy

Allen

Billy

Brian

Bran