Operating System Assignment: Processes, Scheduling, and Types

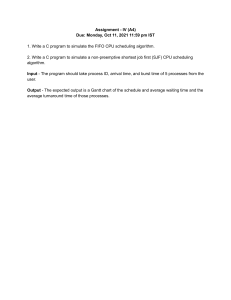

DILLA UNIVERSITY
COLLEGE OF ENGINEERING AND TECHNOLOGY
SCHOOL OF ELECTRICAL AND COMPUTER ENGINEERING
DEPARTMENT OF COMPUTER ENGINEERING

COURSE: OPERATING SYSTEM
INDIVIDUAL ASSIGNMENT

Name: Ayele Asmamaw
ID: 5275/19
Submitted date: 6/05/2023
Submitted to: Mr. Belay
Dilla, Ethiopia

Operating Systems Overview

1. What is the relationship between operating systems and computer hardware?

An operating system (OS) is a software program that manages computer hardware and provides a platform for other software to run on. The OS acts as a bridge between the computer hardware and the software that runs on it.

Computer hardware refers to the physical components of a computer system, such as the central processing unit (CPU), memory, storage devices, input/output (I/O) devices, and other components. The operating system interacts with these hardware components to manage their operation and provide an interface for software applications to use.

The operating system communicates with the hardware through device drivers, which are software programs that provide a standardized interface between the OS and the hardware. The device drivers allow the OS to interact with the hardware components in a consistent and predictable manner, regardless of the specific hardware configuration.

3. What are the primary differences between Network Operating System and Distributed Operating System?

A network operating system (NOS) and a distributed operating system (DOS) are both types of operating systems that are designed for use in networked environments. However, there are some key differences between the two:

Scope: A NOS is designed to manage and control a network of computers and devices, while a DOS is designed to manage a group of interconnected computers that work together as a single system.

Resource Management: A NOS typically manages network resources such as printers, servers, and storage devices, while a DOS manages both local and remote resources as a single unified system.

Communication: A NOS primarily provides communication services between different devices on a network, while a DOS provides communication services between different processes running on different computers.

Fault Tolerance: A DOS is designed to be fault-tolerant, meaning that it can continue to operate even if some of its nodes fail, while a NOS typically relies on redundancy and failover mechanisms to ensure availability.

Security: A NOS focuses more on network security and access control, while a DOS focuses more on distributed security and protecting resources from unauthorized access.

Operating Systems Process

1. What is the Difference between a Job and a Process?

In operating systems, a job and a process are related concepts, but they have some key differences:

Definition: A job is a unit of work that a user or a program requests to be executed by the operating system. A job can consist of one or more processes. A process, on the other hand, is an instance of a program that is being executed by the operating system.

Relationship: A job can consist of multiple processes that are related to each other and work together to achieve a common goal. A process, on the other hand, is an individual unit of execution that can exist independently or as part of a job.

Lifecycle: A job has a lifecycle that includes the submission, processing, and completion stages. A process also has a lifecycle that includes the creation, execution, and termination stages.

Resources: When a job is submitted, it may require a set of system resources such as memory, CPU time, and I/O devices. These resources are allocated to the job as a whole. When a process is created, it is allocated its own set of resources, which may include memory, CPU time, and I/O devices.

Scheduling: The operating system schedules jobs for execution based on various criteria such as priority, available resources, and user input. Processes, on the other hand, are scheduled for execution by the operating system based on their priority, the state of the system, and other factors.

Operating Systems Types

3. What are the advantages of Multiprocessing or Parallel System?

Multiprocessing or parallel systems are designed to execute multiple tasks or processes simultaneously on multiple processors or cores. There are several advantages to using multiprocessing or parallel systems:

Increased throughput: Multiprocessing systems can increase the overall throughput of the system by executing multiple tasks or processes simultaneously. This can result in faster response times and more efficient use of system resources.

Improved performance: Parallel systems can improve the performance of individual tasks or processes by dividing them into smaller sub-tasks that can be executed concurrently. This can reduce the time required to complete each task, resulting in faster overall performance.

Better resource utilization: Multiprocessing systems can better utilize system resources such as CPU, memory, and I/O devices by distributing workloads across multiple processors or cores. This can result in more efficient use of system resources and improved system performance.

Increased scalability: Parallel systems can often be scaled up to handle larger workloads by adding processors or cores. This can provide a cost-effective way to increase system capacity as the workload grows.

Improved fault tolerance: Multiprocessing systems can provide improved fault tolerance by allowing critical tasks or processes to be executed on multiple processors or cores. If one processor or core fails, the task or process can continue executing on another processor or core, reducing the impact of system failures.

1. What are the differences between Batch processing system and Real Time Processing System?

Batch processing systems and real-time processing systems are two different types of operating systems that are designed for different types of applications. Here are the main differences between them:

Nature of Processing: Batch processing systems are designed to process a large volume of data in batches, where data is collected first and processed at a later time. Real-time processing systems, on the other hand, process data as it is received and provide immediate feedback.

Response Time: Batch processing systems have a longer response time, because there can be a delay between data collection and processing. Real-time processing systems have a very short response time, because data is processed as soon as it is received.

Resource Utilization: Batch processing systems can utilize system resources more efficiently, as tasks can be scheduled and executed in an order that optimizes resource utilization. Real-time processing systems require a high level of resource availability to ensure that data is processed in time.

Application: Batch processing systems are suitable for applications that do not require an immediate response, such as generating reports, processing large volumes of data, and billing. Real-time processing systems are suitable for applications that require immediate processing and response, such as online transaction processing systems, real-time control systems, and monitoring systems.

Priority: Batch processing systems can prioritize tasks based on their importance and schedule them accordingly. Real-time processing systems prioritize incoming work by urgency and process the most urgent items immediately.

3. What are the differences between multiprocessing and multiprogramming?
Multiprocessing and multiprogramming are both techniques used in operating systems to maximize the utilization of system resources, but they differ in their approach and goals. Here are the main differences between multiprocessing and multiprogramming:

Definition: Multiprocessing refers to the use of multiple processors or cores to execute multiple tasks or processes simultaneously. Multiprogramming, on the other hand, refers to keeping several programs in memory at once and sharing a single processor or core among them, so that the processor switches to another program whenever the running one has to wait, for example for I/O.

Primary goal: The primary goal of multiprocessing is to increase the overall throughput and performance of the system by executing multiple tasks or processes simultaneously across multiple processors or cores. The primary goal of multiprogramming is to maximize the utilization of the processor by keeping it busy whenever at least one program is ready to run.

Resource allocation: In multiprocessing, tasks or processes are assigned to different processors or cores based on their availability and load. In multiprogramming, the single processor's time is divided among the programs in memory: one program runs until it blocks or is switched out, and then another program receives the CPU.

Context switching: Both techniques rely on context switching, in which the current program's context is saved and the next program's context is restored. In multiprocessing, each processor or core performs its own context switches, and because several cores are available, a ready process usually waits less for one. In multiprogramming, every change of program on the single processor requires a context switch, and this overhead can reduce performance.

Concurrent execution: In multiprocessing, multiple tasks or processes can execute truly in parallel on separate processors or cores, while in multiprogramming, programs are interleaved in time on a single processor or core, giving the appearance of concurrency.

Operating Systems Process Scheduling

1. What is a process scheduler? State the characteristics of a good process scheduler? OR What is scheduling? What criteria affect the scheduler’s performance?

A process scheduler is a component of an operating system that determines the order in which processes are executed on a CPU. The process scheduler determines which process should be executed next and allocates CPU time accordingly. The main goal of the process scheduler is to maximize the utilization of the CPU and ensure that all processes receive fair and efficient use of system resources.

Here are the characteristics of a good process scheduler:

Fairness: A good process scheduler should allocate CPU time fairly among all processes, ensuring that no process is starved of CPU time.

Efficiency: The scheduler should be efficient in allocating CPU time to processes, minimizing the time that processes spend waiting for CPU time.

Responsiveness: The scheduler should be able to respond quickly to changes in system load and adjust the scheduling policy accordingly.

Predictability: The scheduler should be predictable in its behavior, so that similar workloads are scheduled in a similar way over time.

Prioritization: The scheduler should be able to prioritize processes based on their importance or priority level, ensuring that critical processes receive sufficient CPU time.

The scheduling policy used by the scheduler can have a significant impact on its performance. The following criteria can affect the scheduler's performance:

CPU utilization: One measure of the scheduler's performance is the CPU utilization rate, which is the percentage of time that the CPU is busy executing processes. The scheduler should aim to maximize CPU utilization while avoiding resource starvation and ensuring fairness.

Response time: The response time is the time from the submission of a user request until the system produces its first response. The scheduler should aim to minimize response time by allocating CPU time efficiently and fairly.

Throughput: The throughput is the number of processes completed per unit of time. The scheduler should aim to maximize the system throughput by scheduling processes efficiently and fairly.

Fairness: The scheduler should aim to allocate CPU time fairly among all processes, ensuring that no process is starved of CPU time.

Priority: The scheduler should be able to prioritize processes based on their priority level or importance, ensuring that critical processes receive sufficient CPU time.

3. What is Shortest Remaining Time, SRT scheduling?

Shortest Remaining Time (SRT) scheduling is a CPU scheduling algorithm used in operating systems to schedule processes based on the amount of time remaining for each process to complete its execution. SRT is the preemptive version of Shortest Job First, which means that the currently executing process can be preempted when a process with less remaining time arrives in the ready queue.

In SRT scheduling, the process with the shortest remaining burst time is given the highest priority and is scheduled to run next. This means that if a new process arrives with a burst time shorter than the remaining time of the currently executing process, the new process will preempt the current process and start executing immediately.

5. What are the different principles which must be considered while selection of a scheduling algorithm?

There are several principles that must be considered while selecting a scheduling algorithm for an operating system. These principles include:

Process priorities: The scheduling algorithm should be able to prioritize processes based on their importance or priority level to ensure that critical processes receive sufficient CPU time.

CPU utilization: The scheduling algorithm should aim to maximize CPU utilization while avoiding resource starvation and ensuring fairness.

Response time: The scheduling algorithm should aim to minimize the response time by allocating CPU time efficiently and fairly.

Throughput: The scheduling algorithm should aim to maximize the system throughput by scheduling processes efficiently and fairly.

Fairness: The scheduling algorithm should aim to allocate CPU time fairly among all processes, ensuring that no process is starved of CPU time.

Preemption: The scheduling algorithm should support preemption to allow higher-priority processes to interrupt lower-priority processes and execute immediately.

Predictability: The scheduling algorithm should be predictable in its behavior, ensuring that the order in which processes are scheduled remains consistent over time.

Overhead: The scheduling algorithm should have minimal overhead in terms of processing time and memory usage.

Compatibility: The scheduling algorithm should be compatible with the system architecture and hardware, ensuring that it can effectively utilize available resources.

Scalability: The scheduling algorithm should be scalable to handle larger workloads and increasing numbers of processes or threads.
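
To make the Shortest Remaining Time policy and the selection criteria above more concrete, the following is a minimal Python sketch of an SRT simulation. It is an illustration under stated assumptions, not part of the original assignment: time advances in whole units, burst times are known in advance, context switches cost nothing, the clock starts at 0, and ties are broken in favor of the earlier arrival. The names `Process`, `simulate_srt`, and `summarize`, as well as the four-process workload, are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    arrival: int  # time unit at which the process enters the ready queue
    burst: int    # total CPU time the process needs (assumed known in advance)


def simulate_srt(processes):
    """Simulate SRT one time unit at a time; return completion time per process."""
    remaining = {p.name: p.burst for p in processes}
    completion = {}
    clock = 0
    while remaining:
        # Processes that have arrived and still need CPU time.
        ready = [p for p in processes
                 if p.name in remaining and p.arrival <= clock]
        if not ready:
            clock += 1  # CPU idles until the next arrival
            continue
        # Re-evaluating every time unit is what makes the policy preemptive:
        # a new arrival with less remaining time wins the next unit.
        current = min(ready, key=lambda p: (remaining[p.name], p.arrival))
        remaining[current.name] -= 1
        clock += 1
        if remaining[current.name] == 0:
            del remaining[current.name]
            completion[current.name] = clock
    return completion


def summarize(processes, completion):
    """Compute some of the scheduling criteria discussed above."""
    n = len(processes)
    turnaround = {p.name: completion[p.name] - p.arrival for p in processes}
    waiting = {p.name: turnaround[p.name] - p.burst for p in processes}
    makespan = max(completion.values())  # measured from time 0
    return {
        "avg_turnaround": sum(turnaround.values()) / n,
        "avg_waiting": sum(waiting.values()) / n,
        "throughput": n / makespan,
        "cpu_utilization": sum(p.burst for p in processes) / makespan,
    }


# Hypothetical workload, invented for illustration only.
workload = [
    Process("P1", arrival=0, burst=8),
    Process("P2", arrival=1, burst=4),
    Process("P3", arrival=2, burst=9),
    Process("P4", arrival=3, burst=5),
]
completion = simulate_srt(workload)
metrics = summarize(workload, completion)
print(completion)
print(metrics)
```

In this workload, P2 arrives at time 1 with a burst of 4, which is shorter than the 7 units P1 still needs, so P2 preempts P1. The processes finish at times 5 (P2), 10 (P4), 17 (P1), and 26 (P3), giving an average waiting time of 6.5 and an average turnaround time of 13 time units. The same `summarize` function could be applied to the output of another policy to compare algorithms against criteria such as throughput and CPU utilization.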