Introduction to Statistics and Data Analysis

Third Edition

Roxy Peck

California Polytechnic State University, San Luis Obispo

Chris Olsen

George Washington High School, Cedar Rapids, IA

Jay Devore

California Polytechnic State University, San Luis Obispo

Australia • Brazil • Canada • Mexico • Singapore • Spain • United Kingdom • United States

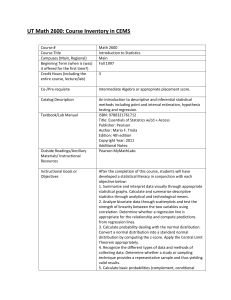

Introduction to Statistics and Data Analysis, Third Edition

Roxy Peck, Chris Olsen, Jay Devore

Acquisitions Editor: Carolyn Crockett

Development Editor: Danielle Derbenti

Assistant Editor: Beth Gershman

Editorial Assistant: Ashley Summers

Technology Project Manager: Colin Blake

Marketing Manager: Joe Rogove

Marketing Assistant: Jennifer Liang

Marketing Communications Manager: Jessica Perry

Project Manager, Editorial Production: Jennifer Risden

Creative Director: Rob Hugel

Art Director: Vernon Boes

Print Buyer: Karen Hunt

Permissions Editor: Isabel Alves

Production Service: Newgen–Austin

Text Designer: Stuart Paterson

Photo Researcher: Gretchen Miller

Copy Editor: Nancy Dickson

Illustrator: Jade Myers; Newgen–India

Cover Designer: Stuart Paterson

Cover Image: Paul Chesley/Getty Images

Cover Printer: Courier Corporation/Kendallville

Compositor: Newgen–India

Printer: Courier Corporation/Kendallville

© 2008, 2005 Duxbury, an imprint of Thomson Brooks/Cole, a part of The Thomson Corporation. Thomson, the Star logo, and Brooks/Cole are trademarks used herein under license.

Thomson Higher Education

10 Davis Drive

Belmont, CA 94002-3098

USA

ALL RIGHTS RESERVED. No part of this work covered by the copyright hereon may be reproduced or used in any form or by any means (graphic, electronic, or mechanical, including photocopying, recording, taping, Web distribution, information storage and retrieval systems, or in any other manner) without the written permission of the publisher.

Printed in the United States of America

1 2 3 4 5 6 7 11 10 09 08 07

ExamView® and ExamView Pro® are registered trademarks of FSCreations, Inc. Windows is a registered trademark of the Microsoft Corporation used herein under license. Macintosh and Power Macintosh are registered trademarks of Apple Computer, Inc. Used herein under license.

Library of Congress Control Number: 2006933904

Student Edition:

ISBN-13: 978-0-495-11873-2

ISBN-10: 0-495-11873-7

For more information about our products, contact us at:

Thomson Learning Academic Resource Center

1-800-423-0563

For permission to use material from this text or product, submit a request online at http://www.thomsonrights.com.

Any additional questions about permissions can be submitted by e-mail to thomsonrights@thomson.com.

To my nephews, Jesse and Luke Smidt, who bet I wouldn’t put their names in this book.

R. P.

To my wife, Sally, and my daughter, Anna

C. O.

To Carol, Allie, and Teri.

J. D.

About the Authors

ROXY PECK is Associate Dean of the College of Science and Mathematics and Professor of Statistics at California Polytechnic State University, San Luis Obispo. Roxy has been on the faculty at Cal Poly since 1979, serving for six years as Chair of the Statistics Department before becoming Associate Dean. She received an M.S. in Mathematics and a Ph.D. in Applied Statistics from the University of California, Riverside. Roxy is nationally known in the area of statistics education, and in 2003 she received the American Statistical Association’s Founder’s Award, recognizing her contributions to K–12 and undergraduate statistics education. She is a Fellow of the American Statistical Association and an elected member of the International Statistical Institute. Roxy has recently completed five years as the Chief Reader for the Advanced Placement Statistics Exam and currently chairs the American Statistical Association’s Joint Committee with the National Council of Teachers of Mathematics on Curriculum in Statistics and Probability for Grades K–12. In addition to her texts in introductory statistics, Roxy is also co-editor of Statistical Case Studies: A Collaboration Between Academe and Industry and a member of the editorial board for Statistics: A Guide to the Unknown, 4th edition. Outside the classroom and the office, Roxy likes to travel and spends her spare time reading mystery novels. She also collects Navajo rugs and heads to New Mexico whenever she can find the time.

CHRIS OLSEN has taught statistics at George Washington High School in Cedar Rapids, Iowa, for over 25 years. Chris is a past member of the Advanced Placement Statistics Test Development Committee and the author of the Teacher’s Guide for Advanced Placement Statistics. He has been a table leader at the AP Statistics reading for 6 years and since the summer of 1996 has been a consultant to the College Board. Chris leads workshops and institutes for AP Statistics teachers in the United States and internationally. Chris was the Iowa recipient of the Presidential Award for Excellence in Science and Mathematics Teaching in 1986. He was a regional winner of the IBM Computer Teacher of the Year award in 1988 and received the Siemens Award for Advanced Placement in mathematics in 1999. Chris is a frequent contributor to the AP Statistics Electronic Discussion Group and has reviewed materials for The Mathematics Teacher, the AP Central web site, The American Statistician, and the Journal of the American Statistical Association. He currently writes a column for Stats magazine. Chris graduated from Iowa State University with a major in mathematics and, while acquiring graduate degrees at the University of Iowa, concentrated on statistics, computer programming, psychometrics, and test development. Currently, he divides his duties between teaching and evaluation; in addition to teaching, he is the assessment facilitator for the Cedar Rapids, Iowa, Community Schools. In his spare time he enjoys reading and hiking. He and his wife have a daughter, Anna, who is a graduate student in Civil Engineering at Cal Tech.

JAY DEVORE earned his undergraduate degree in Engineering Science from the University of California at Berkeley, spent a year at the University of Sheffield in England, and finished his Ph.D. in statistics at Stanford University. He previously taught at the University of Florida and at Oberlin College and has had visiting appointments at Stanford, Harvard, the University of Washington, and New York University. From 1998 to 2006, Jay served as Chair of the Statistics Department at California Polytechnic State University, San Luis Obispo. The Statistics Department at Cal Poly has an international reputation for activities in statistics education. In addition to this book, Jay has written several widely used engineering statistics texts and is currently working on a book in applied mathematical statistics. He is the recipient of a distinguished teaching award from Cal Poly and is a Fellow of the American Statistical Association. In his spare time, he enjoys reading, cooking and eating good food, tennis, and travel to faraway places. He is especially proud of his wife, Carol, a retired elementary school teacher, his daughter Allison, who works for the Center for Women and Excellence in Boston, and his daughter Teri, who is finishing a graduate program in education at NYU.

Contents

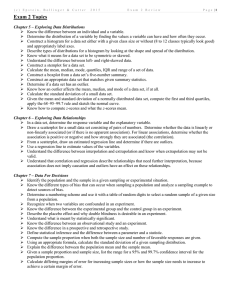

1 The Role of Statistics and the Data Analysis Process

1.1 Three Reasons to Study Statistics

1.2 The Nature and Role of Variability

1.3 Statistics and the Data Analysis Process

1.4 Types of Data and Some Simple Graphical Displays

Activity 1.1 Head Sizes: Understanding Variability

Activity 1.2 Estimating Sizes

Activity 1.3 A Meaningful Paragraph

2 Collecting Data Sensibly

2.1 Statistical Studies: Observation and Experimentation

2.2 Sampling

2.3 Simple Comparative Experiments

2.4 More on Experimental Design

2.5 More on Observational Studies: Designing Surveys (Optional)

2.6 Interpreting and Communicating the Results of Statistical Analyses

Activity 2.1 Designing a Sampling Plan

Activity 2.2 An Experiment to Test for the Stroop Effect

Activity 2.3 McDonald’s and the Next 100 Billion Burgers

Activity 2.4 Video Games and Pain Management

Graphing Calculator Explorations

3 Graphical Methods for Describing Data

3.1 Displaying Categorical Data: Comparative Bar Charts and Pie Charts

3.2 Displaying Numerical Data: Stem-and-Leaf Displays

3.3 Displaying Numerical Data: Frequency Distributions and Histograms

3.4 Displaying Bivariate Numerical Data

3.5 Interpreting and Communicating the Results of Statistical Analyses

Activity 3.1 Locating States

Activity 3.2 Bean Counters!

Graphing Calculator Explorations

4 Numerical Methods for Describing Data

4.1 Describing the Center of a Data Set

4.2 Describing Variability in a Data Set

4.3 Summarizing a Data Set: Boxplots

4.4 Interpreting Center and Variability: Chebyshev’s Rule, the Empirical Rule, and z Scores

4.5 Interpreting and Communicating the Results of Statistical Analyses

Activity 4.1 Collecting and Summarizing Numerical Data

Activity 4.2 Airline Passenger Weights

Activity 4.3 Boxplot Shapes

Graphing Calculator Explorations

5 Summarizing Bivariate Data

5.1 Correlation

5.2 Linear Regression: Fitting a Line to Bivariate Data

5.3 Assessing the Fit of a Line

5.4 Nonlinear Relationships and Transformations

5.5 Logistic Regression (Optional)

5.6 Interpreting and Communicating the Results of Statistical Analyses

Activity 5.1 Exploring Correlation and Regression

Activity 5.2 Age and Flexibility

Graphing Calculator Explorations

6 Probability

6.1 Chance Experiments and Events

6.2 Definition of Probability

6.3 Basic Properties of Probability

6.4 Conditional Probability

6.5 Independence

6.6 Some General Probability Rules

6.7 Estimating Probabilities Empirically Using Simulation

Activity 6.1 Kisses

Activity 6.2 A Crisis for European Sports Fans?

Activity 6.3 The “Hot Hand” in Basketball

Graphing Calculator Explorations

7 Random Variables and Probability Distributions

7.1 Random Variables

7.2 Probability Distributions for Discrete Random Variables

7.3 Probability Distributions for Continuous Random Variables

7.4 Mean and Standard Deviation of a Random Variable

7.5 Binomial and Geometric Distributions

7.6 Normal Distributions

7.7 Checking for Normality and Normalizing Transformations

7.8 Using the Normal Distribution to Approximate a Discrete Distribution

Activity 7.1 Rotten Eggs?

Graphing Calculator Explorations

8 Sampling Variability and Sampling Distributions

8.1 Statistics and Sampling Variability

8.2 The Sampling Distribution of a Sample Mean

8.3 The Sampling Distribution of a Sample Proportion

Activity 8.1 Do Students Who Take the SATs Multiple Times Have an Advantage in College Admissions?

Graphing Calculator Explorations

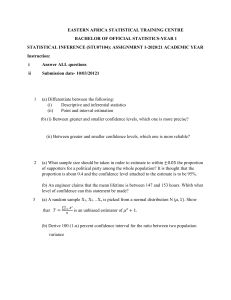

9 Estimation Using a Single Sample

9.1 Point Estimation

9.2 Large-Sample Confidence Interval for a Population Proportion

9.3 Confidence Interval for a Population Mean

9.4 Interpreting and Communicating the Results of Statistical Analyses

Activity 9.1 Getting a Feel for Confidence Level

Activity 9.2 An Alternative Confidence Interval for a Population Proportion

Activity 9.3 Verifying Signatures on a Recall Petition

Activity 9.4 A Meaningful Paragraph

Graphing Calculator Explorations

10 Hypothesis Testing Using a Single Sample

10.1 Hypotheses and Test Procedures

10.2 Errors in Hypothesis Testing

10.3 Large-Sample Hypothesis Tests for a Population Proportion

10.4 Hypothesis Tests for a Population Mean

10.5 Power and Probability of Type II Error

10.6 Interpreting and Communicating the Results of Statistical Analyses

Activity 10.1 Comparing the t and z Distributions

Activity 10.2 A Meaningful Paragraph

Graphing Calculator Explorations

11 Comparing Two Populations or Treatments

11.1 Inferences Concerning the Difference Between Two Population or Treatment Means Using Independent Samples

11.2 Inferences Concerning the Difference Between Two Population or Treatment Means Using Paired Samples

11.3 Large Sample Inferences Concerning a Difference Between Two Population or Treatment Proportions

11.4 Interpreting and Communicating the Results of Statistical Analyses

Activity 11.1 Helium-Filled Footballs

Activity 11.2 Thinking About Data Collection

Activity 11.3 A Meaningful Paragraph

Graphing Calculator Explorations

12 The Analysis of Categorical Data and Goodness-of-Fit Tests

12.1 Chi-Square Tests for Univariate Data

12.2 Tests for Homogeneity and Independence in a Two-way Table

12.3 Interpreting and Communicating the Results of Statistical Analyses

Activity 12.1 Pick a Number, Any Number . . .

Activity 12.2 Color and Perceived Taste

Graphing Calculator Explorations

13 Simple Linear Regression and Correlation: Inferential Methods

13.1 Simple Linear Regression Model

13.2 Inferences About the Slope of the Population Regression Line

13.3 Checking Model Adequacy

13.4 Inferences Based on the Estimated Regression Line (Optional)

13.5 Inferences About the Population Correlation Coefficient (Optional)

13.6 Interpreting and Communicating the Results of Statistical Analyses

Activity 13.1 Are Tall Women from “Big” Families?

Graphing Calculator Exploration

14 Multiple Regression Analysis

14.1 Multiple Regression Models

14.2 Fitting a Model and Assessing Its Utility

14.3 Inferences Based on an Estimated Model (online)

14.4 Other Issues in Multiple Regression (online)

14.5 Interpreting and Communicating the Results of Statistical Analyses (online)

Activity 14.1 Exploring the Relationship Between Number of Predictors and Sample Size

Sections marked (online) can be found at www.thomsonedu.com/statistics/peck

15 Analysis of Variance

15.1 Single-Factor ANOVA and the F Test

15.2 Multiple Comparisons

15.3 The F Test for a Randomized Block Experiment (online)

15.4 Two-Factor ANOVA (online)

15.5 Interpreting and Communicating the Results of Statistical Analyses (online)

Activity 15.1 Exploring Single-Factor ANOVA

Graphing Calculator Exploration

16 Nonparametric (Distribution-Free) Statistical Methods (online)

16.1 Distribution-Free Procedures for Inferences About a Difference Between Two Population or Treatment Means Using Independent Samples (Optional)

16.2 Distribution-Free Procedures for Inferences About a Difference Between Two Population or Treatment Means Using Paired Samples

16.3 Distribution-Free ANOVA

Appendix A: Statistical Tables

Appendix B: References

Answers to Selected Odd-Numbered Exercises

Index


Preface

In a nutshell, statistics is about understanding the role that variability plays in drawing conclusions based on data. Introduction to Statistics and Data Analysis, Third Edition develops this crucial understanding of variability through its focus on the data analysis process.

An Organization That Reflects the Data Analysis Process

Students are introduced early to the idea that data analysis is a process that begins with careful planning, followed by data collection, data description using graphical and numerical summaries, data analysis, and finally interpretation of results. This process is described in detail in Chapter 1, and the ordering of topics in the first ten chapters of the book mirrors this process: data collection, then data description, then statistical inference.

The logical order in the data analysis process can be pictured as shown in the following figure.

[Figure: three steps in sequence. Step 1: Acknowledging Variability (Collecting Data Sensibly). Step 2: Describing Variability in the Data (Descriptive Statistics). Step 3: Drawing Conclusions in a Way That Recognizes Variability in the Data.]

Unlike many introductory texts, Introduction to Statistics and Data Analysis, Third Edition is organized in a manner consistent with the natural order of the data analysis process:

[Figure: the same three steps mapped to chapters. Step 1: Acknowledging Variability (Collecting Data Sensibly), Chapters 1–2. Step 2: Describing Variability in the Data (Descriptive Statistics), Chapters 3–5. Probability Supports the Connection, Chapters 6–7. Step 3: Drawing Conclusions in a Way That Recognizes Variability in the Data, Chapters 8–15.]

The Importance of Context and Real Data

Statistics is not about numbers; it is about data: numbers in context. It is the context that makes a problem meaningful and something worth considering. For example, exercises that ask students to compute the mean of 10 numbers or to construct a dotplot or boxplot of 20 numbers without context are arithmetic and graphing exercises. They become statistics problems only when a context gives them meaning and allows for interpretation. While this makes for a text that may appear “wordy” when compared to traditional mathematics texts, it is a critical and necessary component of a modern statistics text.

Examples and exercises with overly simple settings do not allow students to practice interpreting results in authentic situations or give students the experience necessary to be able to use statistical methods in real settings. We believe that the exercises and examples are a particular strength of this text, and we invite you to compare the examples and exercises with those in other introductory statistics texts.

Many students are skeptical of the relevance and importance of statistics. Contrived problem situations and artificial data often reinforce this skepticism. A strategy that we have employed successfully to motivate students is to present examples and exercises that involve data extracted from journal articles, newspapers, and other published sources. Most examples and exercises in the book are of this nature; they cover a very wide range of disciplines and subject areas. These include, but are not limited to, health and fitness, consumer research, psychology and aging, environmental research, law and criminal justice, and entertainment.

A Focus on Interpretation and Communication

Most chapters include a section titled “Interpreting and Communicating the Results of Statistical Analyses.” These sections include advice on how to best communicate the results of a statistical analysis and also consider how to interpret statistical summaries found in journals and other published sources. A subsection titled “A Word to the Wise” reminds readers of things that must be considered in order to ensure that statistical methods are employed in reasonable and appropriate ways.

Consistent with Recommendations for the Introductory Statistics Course Endorsed by the American Statistical Association

In 2005, the American Statistical Association endorsed the report “College Guidelines in Assessment and Instruction for Statistics Education (GAISE Guidelines),” which included the following six recommendations for the introductory statistics course:

1. Emphasize statistical literacy and develop statistical thinking.

2. Use real data.

3. Stress conceptual understanding rather than mere knowledge of procedures.

4. Foster active learning in the classroom.

5. Use technology for developing conceptual understanding and analyzing data.

6. Use assessments to improve and evaluate student learning.

Introduction to Statistics and Data Analysis, Third Edition is consistent with these recommendations and supports the GAISE guidelines in the following ways:

1. Emphasize statistical literacy and develop statistical thinking.

Statistical literacy is promoted throughout the text in the many examples and exercises that are drawn from the popular press. In addition, a focus on the role of variability, consistent use of context, and an emphasis on interpreting and communicating results in context work together to help students develop skills in statistical thinking.

2. Use real data.

The examples and exercises from Introduction to Statistics and Data Analysis, Third Edition are context driven and reference sources that include the popular press as well as journal articles.

3. Stress conceptual understanding rather than mere knowledge of procedures.

Nearly all exercises in Introduction to Statistics and Data Analysis, Third Edition are multipart and ask students to go beyond just computation. They focus on interpretation and communication, not just in the chapter sections specifically devoted to this topic, but throughout the text. The examples and explanations are designed to promote conceptual understanding. Hands-on activities in each chapter are also constructed to strengthen conceptual understanding. Which brings us to . . .

4. Foster active learning in the classroom.

While this recommendation speaks more to pedagogy and classroom practice, Introduction to Statistics and Data Analysis, Third Edition provides 33 hands-on activities in the text and additional activities in the accompanying instructor resources that can be used in class or assigned to be completed outside of class. In addition, accompanying online materials allow students to assess their understanding and develop a personalized learning plan based on this assessment for each chapter.

5. Use technology for developing conceptual understanding and analyzing data.

The computer has brought incredible statistical power to the desktop of every investigator. The wide availability of statistical computer packages such as MINITAB, S-Plus, JMP, and SPSS, and the graphical capabilities of the modern microcomputer have transformed both the teaching and learning of statistics. To highlight the role of the computer in contemporary statistics, we have included sample output throughout the book. In addition, numerous exercises contain data that can easily be analyzed by computer, though our exposition firmly avoids a presupposition that students have access to a particular statistical package. Technology manuals for specific packages, such as MINITAB and SPSS, are available in the online materials that accompany this text.

The appearance of hand-held calculators with significant statistical and graphing capability has also changed statistics instruction in classrooms where access to computers is still limited. The computer revolution of a previous generation is now being writ small (or, possibly we should say, smaller) for the youngest generation of investigators. There is not, as we write, anything approaching universal or even wide agreement about the proper role for the graphing calculator in college statistics classes, where access to a computer is more common. At the same time, for tens of thousands of students in Advanced Placement Statistics in our high schools, the graphing calculator is the only dependable access to statistical technology.

This text allows the instructor to balance the use of computers and calculators in a manner consistent with his or her philosophy and presents the power of the calculator in a series of Graphing Calculator Explorations. These are placed at the end of each chapter, unobtrusive to those instructors whose technology preference is the computer while still accessible to those instructors and students comfortable with graphing calculator technology. As with computer packages, our exposition avoids assuming the use of a particular calculator and presents the calculator capabilities in a generic format; specifically, we do not teach particular keystroke sequences, believing that the best source for such specific information is the calculator manual. For those using a TI graphing calculator, there is a technology manual available in the online materials that accompany this text. As much as possible, the calculator explorations are independent of each other, allowing instructors to pick and choose calculator topics that are more relevant to their particular courses.

6. Use assessments to improve and evaluate student learning.

Assessment materials in the form of a test bank, quizzes, and chapter exams are available in the instructor resources that accompany this text. The items in the test bank reflect the data-in-context philosophy of the text’s exercises and examples.

Advanced Placement Statistics

We have designed this book with a particular eye toward the syllabus of the Advanced Placement Statistics course and the needs of high school teachers and students. Concerns expressed and questions asked in teacher workshops and on the AP Statistics Electronic Discussion Group have strongly influenced our exposition of certain topics, especially in the area of experimental design and probability. We have taken great care to provide precise definitions and clear examples of concepts that Advanced Placement Statistics instructors have acknowledged as difficult for their students. We have also expanded the variety of examples and exercises, recognizing the diverse potential futures envisioned by very capable students who have not yet focused on a college major.

Topic Coverage

Our book can be used in courses as short as one quarter or as long as one year in duration. Particularly in shorter courses, an instructor will need to be selective in deciding which topics to include and which to set aside. The book divides naturally into four major sections: collecting data and descriptive methods (Chapters 1–5), probability material (Chapters 6–8), the basic one- and two-sample inferential techniques (Chapters 9–12), and more advanced inferential methodology (Chapters 13–16). We include an early chapter (Chapter 5) on descriptive methods for bivariate numerical data. This early exposure raises questions and issues that should stimulate student interest in the subject; it is also advantageous for those teaching courses in which time constraints preclude covering advanced inferential material. However, this chapter can easily be postponed until the basics of inference have been covered, and then combined with Chapter 13 for a unified treatment of regression and correlation.

With the possible exception of Chapter 5, Chapters 1–10 should be covered in order. We anticipate that most instructors will then continue with two-sample inference (Chapter 11) and methods for categorical data analysis (Chapter 12), although regression could be covered before either of these topics. Optional portions of Chapter 14 (multiple regression) and Chapter 15 (analysis of variance), as well as Chapter 16 (nonparametric methods), are included in the online materials that accompany this text.

A Note on Probability

The content of the probability chapters is consistent with the Advanced Placement Statistics course description. It includes both a traditional treatment of probability and probability distributions at an introductory level, as well as a section on the use of simulation as a tool for estimating probabilities. For those who prefer a briefer and more informal treatment of probability, the book Statistics: The Exploration and Analysis of Data, by Roxy Peck and Jay Devore, may be a more appropriate choice. Except for the treatment of probability and the omission of the Graphing Calculator Explorations, it parallels the material in this text. Please contact your sales rep for more information about this alternative and other customized options available to you.

New to This Edition

There are a number of changes in the Third Edition, including the following:

■ More than 80 new examples and more than 180 new exercises that use data from current journals and newspapers are included. In addition, more of the exercises specifically ask students to write (for example, by requiring students to explain their reasoning, interpret results, and comment on important features of an analysis).

■ Examples and exercises that make use of data sets that can be accessed online from the text website are designated by an icon in the text, as are examples that are further illustrated in the technology manuals (MINITAB, SPSS, etc.) that are available in the online materials that accompany this text.

■ More than 90 exercises have video solutions, presented by Brian Kotz of Montgomery College, which can be viewed online or downloaded for viewing later. These exercises are designated by an icon in the text.

■ A number of new hands-on activities have been added to the end-of-chapter activities. These activities can be used as a chapter capstone or can be integrated at appropriate places as the chapter material is covered in class.

■ Students can now go online to test their understanding of the material covered in each chapter and develop a personalized learning plan to assist them in addressing any areas of weakness.

■ A detailed description of the data analysis process now appears in Chapter 1. Although the order of topics in the text generally mirrors the data analysis process, with methods of data collection covered first, two graphical displays (dotplots and bar charts) are covered in Chapter 1 so that these simple graphical analysis tools can be used in the conceptual development of experimental design and so that students have some tools for summarizing the data they collect through sampling and experimentation in the exercises, examples, and activities of Chapter 2.

■ A new optional section on logistic regression is now included in Chapter 5 for those who would like more complete coverage of data analysis techniques for categorical data.

■ Advanced topics that are often omitted in a one-quarter or one-semester course, such as inference and variable selection methods in multiple regression (Sections 14.3 and 14.4) and analysis of variance for randomized block and two-factor designs (Sections 15.3 and 15.4), have been moved to the online materials that accompany this text.

■ Coverage of distribution-free procedures for inferences about the difference between two population or treatment means using independent samples (formerly Section 11.4) has been moved to Chapter 16. This chapter, titled “Nonparametric (Distribution-Free) Statistical Methods,” also includes new material on inferences about the difference between two population or treatment means using paired samples and distribution-free analysis of variance, and is available in the online materials that accompany this text.

■ Updated materials for instructors. In addition to the usual instructor supplements such as a complete solutions manual and a test bank, the following are also available to instructors:

  ■ An Instructor’s Resource Binder, which contains additional examples that can be incorporated into classroom presentations and cross-references to resources such as Fathom, Workshop Statistics, and Against All Odds. Of particular interest to those teaching Advanced Placement Statistics, the binder also includes additional data analysis questions of the type encountered on the free response portion of the Advanced Placement exam, as well as a collection of model responses.

  ■ For those who use student response systems in class, a set of “clicker” questions (see JoinIn™ on TurningPoint® under Instructor Resources, Media) for assessing student understanding is available.

Student Resources

■

Available Online

If your text includes a printed access card, you will have instant access to the following resources referenced throughout your text:

■ ThomsonNOW™ (see below for a full description of this powerful study tool).

■ Complete step-by-step instructions for MINITAB, Excel, TI-83 Graphing Calculator, JMP, and SPSS indicated by the icon throughout the text.

■ Data sets formatted for MINITAB, Excel, SPSS, SAS, JMP, TI-83, Fathom, and ASCII indicated by the ● icon throughout the text.

■ Applets used in the Activities found in the text.

Print

Student Solutions Manual (ISBN 0-495-11876-1) by Mary Mortlock of California

Polytechnic State University, San Luis Obispo.

Check your work—and your understanding—with this manual, which provides

worked-out solutions to the odd-numbered problems in the text.


Activities Workbook (0-495-11883-4) by Roxy Peck.

Use this convenient workbook to take notes, record data, and cement your learning by

completing textbook and bonus activities for each chapter.

■

Media

ThomsonNOW™ Homework (0-495-39230-8)

Save time, learn more, and succeed in the course with this online suite of resources

(including an integrated eBook and Personalized Study plans) that give you the choices

and tools you need to study smarter and get the grade. Note: If your text did not include a printed access card for ThomsonNOW, it is available for purchase online at

http://www.thomsonedu.com.

Instructor Resources

■

Print

Annotated Instructor’s Edition (0-495-11888-5)

The Annotated Instructor’s Edition contains answers for all exercises, as well as an annotated table of contents with comments written by Roxy Peck.

Instructor’s Solutions Manual (0-495-11879-6) by Mary Mortlock of California

Polytechnic State University, San Luis Obispo.

This manual contains worked-out solutions to all of the problems in the text.

Instructor’s Resource Binder (0-495-11892-3) prepared by Chris Olsen.

Includes transparencies and Microsoft ® PowerPoint ® slides to make lecture and class

preparation quick and easy. New to this edition, we have added some Activities Worksheets authored by Carol Marchetti of Rochester Institute of Technology.

Test Bank (0-495-11880-X) by Josh Tabor of Wilson High School, Peter Flannagan-Hyde of Phoenix Country Day School, and Chris Olsen.

Includes test questions for each section of the book.

Activities Workbook (0-495-11883-4) by Roxy Peck.

Students can take notes, record data, and complete activities in this ready-to-use workbook, which includes activities from the textbook plus additional bonus activities for

each chapter.

■

Media

Enhanced WebAssign (ISBN 0-495-10963-0)

Enhanced WebAssign is the most widely used homework system in higher education. Available for this title, Enhanced WebAssign allows you to assign, collect, grade,

and record homework assignments via the web. This proven homework system has

been enhanced to include links to the textbook sections, video examples, and problem-specific tutorials. Enhanced WebAssign is more than a homework system—it is a complete learning system for students.

ThomsonNOW™ Homework (0-495-39230-8)

ThomsonNOW’s Personalized Study plans allow students to study smarter by diagnosing their weak areas, and helping them focus on what they need to learn. Based on

responses to chapter specific pre-tests, the plans suggest a course of study for students,


including many multimedia and interactive exercises to help students better learn the

material. After completing the study plan, they can take a post-test to measure their

progress and understanding.

ExamView ® Computerized Testing (0-495-11886-9)

Create, deliver, and customize tests and study guides (both print and online) in minutes with this easy-to-use assessment and tutorial system, which contains all questions

from the Test Bank in electronic format.

JoinIn™ on TurningPoint ® (0-495-11881-8)

The easiest student classroom response system to use, JoinIn features instant classroom assessment and learning.

Acknowledgments

We are grateful for the thoughtful feedback from the following reviewers that has

helped to shape this text over the last two editions:

■

Reviewers of the Third Edition

Arun K. Agarwal

Grambling State University

Marvin Creech

Chapman University

Jacob Amidon

Finger Lakes Community College

Ron Degges

North Dakota State University

Holly Ashton

Pikes Peak Community College

Hemangini Deshmukh

Mercyhurst College

Barb Barnet

University of Wisconsin at

Platteville

Ann Evans

University of Massachusetts at

Boston

Central Carolina Community College

Eddie Bevilacqua

State University of New York

College of Environmental Science

& Forestry

Piotr Bialas

Borough of Manhattan Community

College

Kelly Black

Union College

Gabriel Chandler

Connecticut College

Andy Chang

Youngstown State University

Jerry Chen

Suffolk Community College

Richard Chilcoat

Wartburg College

Guangxiong Fang

Daniel Webster College

Sharon B. Finger

Nicholls State University

Steven Garren

James Madison University

Tyler Haynes

Saginaw Valley State University

Sonja Hensler

St. Petersburg College

Trish Hutchinson

Angelo State University

Bessie Kirkwood

Sweet Briar College

Jeff Kollath

Oregon State University


Christopher Lacke

Rowan University

Michael I. Ratliff

Northern Arizona University

Michael Leitner

Louisiana State University

David R. Rauth

Duquesne University

Zia Mahmood

College of DuPage

Kevin J. Reeves

East Texas Baptist University

Art Mark

Georgia Military College

Robb Sinn

North Georgia College & State

University

David Mathiason

Rochester Institute of Technology

Bob Mattson

Eureka College

C. Mark Miller

York College

Megan Mocko

University of Florida

Kane Nashimoto

James Madison University

Helen Noble

San Diego State University

Broderick Oluyede

Georgia Southern University

Elaine Paris

Mercy College

Shelly Ray Parsons

Aims Community College

Greg Sliwa

Broome Community College

Angela Stabley

Portland Community College

Jeffery D. Sykes

Ouachita Baptist University

Yolande Tra

Rochester Institute of Technology

Nathan Wetzel

University of Wisconsin Stevens

Point

Dr. Mark Wilson

West Virginia University Institute

of Technology

Yong Yu

Ohio State University

Toshiyuki Yuasa

University of Houston

Judy Pennington-Price

Midway College

Hazard Community College

Jackson County High School

■

Reviewers for the Second Edition

Jim Bohan

Manheim Township High School

John Imbrie

University of Virginia

Pat Buchanan

Pennsylvania State University

Pam Martin

Northeast Louisiana University

Mary Christman

American University

Iowa State University

Paul Myers

Woodward Academy

Mark Glickman

Boston University

Deanna Payton

Oklahoma State University


Michael Phelan

Chapman University

Lawrence D. Ries

University of Missouri Columbia

Alan Polansky

Northern Illinois University

Joe Ward

Health Careers High School

Additionally, we would like to express our thanks and gratitude to all who helped to

make this book possible:

■ Carolyn Crockett, our editor and friend, for her unflagging support and thoughtful advice for more than a decade.

■ Danielle Derbenti, Beth Gershman, and Colin Blake at Thomson Brooks/Cole, for the development of all of the ancillary materials details and for keeping us on track.

■ Jennifer Risden, our project manager at Thomson Brooks/Cole, and Anne Seitz at Hearthside Publishing Services, for artfully managing the myriad of details associated with the production process.

■ Nancy Dickson for her careful copyediting.

■ Brian Kotz for all his hard work producing the video solutions.

■ Mary Mortlock for her diligence and care in producing the student and instructor solutions manuals for this book.

■ Josh Tabor and Peter Flannagan-Hyde for their contributions to the test bank that accompanies the book.

■ Beth Chance and Francisco Garcia for producing the applet used in the confidence interval activities.

■ Gary McClelland for producing the applets from Seeing Statistics used in the regression activities.

■ Bittner Development Group for checking the accuracy of the manuscript.

■ Rachel Dagdagan, a student at Cal Poly, for her help in the preparation of the manuscript.

And, as always, we thank our families, friends, and colleagues for their continued

support.

Roxy Peck

Chris Olsen

Jay Devore

Peck, Olsen, Devore’s

Introduction to Statistics and Data Analysis, Third Edition . . .

. . . Emphasizes Statistical Literacy and Statistical Thinking

Context Driven Applications

Real data examples and exercises throughout the text are drawn from the popular press, as well as journal articles.

■ Exercises 2.1–2.9

2.1 ▼ The article “Television’s Value to Kids: It’s All in How They Use It” (Seattle Times, July 6, 2005) described a study in which researchers analyzed standardized test results and television viewing habits of 1700 children. They found that children who averaged more than two hours of television viewing per day when they were younger than 3 tended to score lower on measures of reading ability and short term memory.

a. Is the study described an observational study or an experiment?

b. Is it reasonable to conclude that watching two or more hours of television is the cause of lower reading scores? Explain.

Page 31

Focus on Interpreting and Communicating

Chapter sections on interpreting and communicating results are designed to emphasize the importance of being able to interpret statistical output and communicate its meaning to nonstatisticians. A subsection entitled “A Word to the Wise” reminds students of things that must be considered in order to ensure that statistical methods are used in reasonable and appropriate ways.

Example 3.22 Education Level and Income—Stay in School!

The time-series plot shown in Figure 3.34 appears on the U.S. Census Bureau web site. It shows the average earnings of workers by educational level as a proportion of the average earnings of a high school graduate over time. For example, we can see from this plot that in 1993 the average earnings for people with bachelor’s degrees was about 1.5 times the average for high school graduates. In that same year, the average earnings for those who were not high school graduates was only about 75%

Page 123

4.5 Interpreting and Communicating the Results of Statistical Analyses

As was the case with the graphical displays of Chapter 3, the primary function of the descriptive tools introduced in this chapter is to help us better understand the variables under study. If we have collected data on the amount of money students spend on textbooks at a particular university, most likely we did so because we wanted to learn about the distribution of this variable (amount spent on textbooks) for the population of interest (in this case, students at the university). Numerical measures of center and spread and boxplots help to enlighten us, and they also allow us to communicate to others what we have learned from the data.

Page 186

A Word to the Wise: Cautions and Limitations

When computing or interpreting numerical descriptive measures, you need to keep in mind the following:

1. Measures of center don’t tell all. Although measures of center, such as the mean and the median, do give us a sense of what might be considered a typical value for a variable, this is only one characteristic of a data set. Without additional information about variability and distribution shape, we don’t really know much about the behavior of the variable.

2. Data distributions with different shapes can have the same mean and standard deviation. For example, consider the following two histograms:

Page 188

Peck, Olsen, Devore’s

Introduction to Statistics and Data Analysis, Third Edition . . .

. . . Encourages Conceptual Understanding and Active Learning

Hands-on Activities in Every Chapter

Thirty-three hands-on activities in the text, and additional activities in the accompanying instructor resources, can be used to encourage active learning inside or outside the classroom.

Activity 2.4 Video Games and Pain Management

Background: Video games have been used for pain management by doctors and therapists who believe that the

attention required to play a video game can distract the

player and thereby decrease the sensation of pain. The paper “Video Games and Health” (British Medical Journal

[2005]:122–123) states:

“However, there has been no long term follow-up and

no robust randomized controlled trials of such interventions. Whether patients eventually tire of such

games is also unclear. Furthermore, it is not known

whether any distracting effect depends simply on concentrating on an interactive task or whether the content of games is also an important factor as there have

been no controlled trials comparing video games with

other distracters. Further research should examine

factors within games such as novelty, users’ preferences, and relative levels of challenge and should

compare video games with other potentially distracting activities.”

1. Working with a partner, select one of the areas of potential research suggested in the passage from the paper

and formulate a specific question that could be addressed

by performing an experiment.

2. Propose an experiment that would provide data to address the question from Step 1. Be specific about how

subjects might be selected, what the experimental conditions (treatments) would be, and what response would be

measured.

3. At the end of Section 2.3 there are 10 questions that

can be used to evaluate an experimental design. Answer

these 10 questions for the design proposed in Step 2.

4. After evaluating your proposed design, are there

any changes you would like to make to your design?

Explain.

Page 65

Exploration 3.3 Scaling the Histogram

[Figure 3.43 and Figure 3.44: calculator screens showing the view-window settings and the redrawn histogram]

Page 144

When we constructed a histogram in the previous Exploration there were some numbers that we temporarily ignored in the view screen. We would like to return to those

numbers now because they can seriously affect the look of a histogram. When we left

the histogram the numbers in our view window were set as shown in Figure 3.43.

These settings place the view window over the calculator’s Cartesian system for effective viewing of the histogram from the data of Example 3.15.

We would now like to experiment a bit with the “Xscale.” In all statistical graphs produced by the calculator the Xscale and Yscale choices will control the placement of the little “tick” marks on the x- and y-axes. In Exploration 3.2, the Xscale and Yscale were set at 5 and 1, respectively. The little tick marks on the x-axis were at multiples of 5. (Because of the data, the x-axis tick marks were at multiples of 5 and the y-axis didn’t appear.) Change the Xscale value to 2 and redraw the histogram. You should see a graph similar to Figure 3.44. The x-axis tick marks now appear at multiples of 2, . . . .

Note that changing the Xscale has altered not only the tick marks but also the class

intervals for the histogram. The choice of class intervals can significantly change the

look and feel of the histogram. The choice of Xscale can affect judgments about the

shape of the histogram. Because of this possibility it is wise to look at a histogram

with varying choices of the Xscale value. If the shape appears very similar for different choices of Xscale, you can interpret and describe the shape with more confidence.

However, if different Xscale choices alter the look of the histogram you should probably be more tentative.
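The effect of class width on the look of a histogram can also be checked away from the calculator. The Python sketch below is illustrative only: it uses the 50 state percentages listed for Example 3.14 rather than the data of Example 3.15, the helper names class_counts and print_histogram are hypothetical, and the starting value of 40 and the class widths of 10 and 5 are assumptions chosen for the comparison.

```python
# Illustrative data: the 50 percentages listed for Example 3.14.
percent_public = [
    86, 96, 66, 86, 80, 78, 62, 81, 77, 81, 77, 76,
    73, 69, 76, 90, 78, 82, 70, 83, 46, 80, 78, 93,
    66, 91, 76, 86, 58, 81, 91, 57, 81, 88, 71, 86,
    84, 57, 44, 82, 79, 67, 86, 75, 55, 75, 80, 80,
    85, 69,
]


def class_counts(data, start, width):
    """Count observations in classes start to <start+width, and so on.

    Assumes integer data with every value at least `start`.
    """
    n_classes = (max(data) - start) // width + 1
    counts = [0] * n_classes
    for x in data:
        counts[(x - start) // width] += 1
    # Map each class's lower limit to its count.
    return {start + i * width: c for i, c in enumerate(counts)}


def print_histogram(data, start, width):
    """Print a text histogram with one row of asterisks per class."""
    for lower, count in class_counts(data, start, width).items():
        print(f"{lower:3d} to <{lower + width:3d} | {'*' * count}")


for width in (10, 5):  # assumed class widths, playing the role of Xscale
    print(f"Class width {width}:")
    print_histogram(percent_public, 40, width)
    print()
```

Comparing the two printouts shows whether the overall shape persists when the class width changes, which is the check recommended above before describing a histogram's shape with confidence.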

Graphing Calculator

Explorations

Found at the end of most chapters,

these explorations allow students to

actively experience technology

and promote statistical thinking.

. . . Uses Technology to Develop Conceptual Understanding

Applets Allow Students to See the Concepts

Within the Activities, applets are used to illustrate and promote a deeper understanding of the key statistical concepts.

Continue generating intervals until you have seen at least 1000 intervals, and then answer the following question:

a. How does the proportion of intervals constructed that contain μ = 100 compare to the stated confidence level of

Page 515

. . . And Analyze Data

Real Data Sets

Real data sets promote statistical analysis, as well as technology use. They are formatted for MINITAB, Excel, SPSS, SAS, JMP, TI-83, and ASCII and are indicated by the ● icon throughout the text.

27.0 would then fall in the class 27.0 to <27.5.

Example 3.14 Enrollments at Public Universities

● States differ widely in the percentage of college students who are enrolled in public institutions. The National Center for Education Statistics provided the accompanying data on this percentage for the 50 U.S. states for fall 2002.

Percentage of College Students Enrolled in Public Institutions

86 96 66 86 80 78 62 81 77 81 77 76
73 69 76 90 78 82 70 83 46 80 78 93
66 91 76 86 58 81 91 57 81 88 71 86
84 57 44 82 79 67 86 75 55 75 80 80
85 69

The smallest observation is 44 (Rhode Island) and the largest is 96 (Alaska). It is reasonable to start the first class interval at 40 and let each interval have a width

Page 101

■ Exercises 3.22–3.34

3.22 ● Medicare’s new medical plans offer a wide range of variations and choices for seniors when picking a drug plan (San Luis Obispo Tribune, November 25, 2005). The monthly cost for a stand-alone drug plan varies from plan to plan and from state to state. The accompanying table gives the premium for the plan with the lowest cost for each state.

State             Cost per Month (dollars)
Alabama           14.08
Alaska            20.05
Arizona            6.14
Arkansas          10.31
California         5.41
Colorado           8.62
Connecticut        7.32

State             Cost per Month (dollars)
New Hampshire     19.60
New Jersey         4.43
New Mexico        10.65
New York           4.10
North Carolina    13.27
North Dakota       1.87
Ohio              14.43
Oklahoma          10.07
Oregon             6.93
Pennsylvania      10.14
Rhode Island       7.32
South Carolina    16.57
South Dakota       1.87
Tennessee         14.08
Texas             10.31

Page 113

Step-by-Step Technology Instructions

Complete online step-by-step instructions for MINITAB, Excel, TI-83 Graphing Calculator, JMP, and SPSS are indicated by the icon throughout the text.

Example 1.9 Revisiting Motorcycle Helmets

Example 1.8 used data on helmet use from a sample of 1700 motorcyclists to construct a frequency distribution (Table 1.1). Figure 1.5 shows the bar chart corresponding to this frequency distribution.

[Figure 1.5: bar chart of the helmet-use frequency distribution; vertical axis: Frequency]

Page 17


Peck, Olsen, Devore’s

Introduction to Statistics and Data Analysis, Third Edition . . .

. . . Evaluates Students’

Understanding

▲

Evaluate as You Teach

Using Clickers

Using clicker content authored by Roxy

Peck, evaluate your students’ understanding

immediately—in class—after teaching a

concept. Whether it’s a quick quiz, a poll to

be used for in-class data, or just checking in

to see if it is time to move on, our quality,

tested content creates truly interactive

classrooms with students’ responses shaping

the lecture as you teach.

Get Feedback from Roxy Peck on What You Need to Learn

ThomsonNOW allows students to assess their understanding and develop a personalized learning plan based on this assessment for each chapter. Pre- and post-tests include feedback authored by Roxy Peck.

Video Solutions Motivate Student Understanding

More than 90 exercises will have video solutions, presented by Brian Kotz of Montgomery College, which can be viewed online or downloaded for later viewing. These exercises will be designated by the ▼ in the text.

3.25 ● ▼ USA Today (July 2, 2001) gave the following information regarding cell phone use for men and women:

Average Number of Minutes      Relative Frequency
Used per Month                 Men      Women
0 to <200                      .56      .61
200 to <400                    .18      .18
400 to <600                    .10      .13
600 to <800                    .16      .08

a. Construct a relative frequency histogram for average number of minutes used per month for men. How would you describe the shape of this histogram?

b. Construct a relative frequency histogram for average number of minutes used per month for women. Is the distribution for average number of minutes used per month similar for men and women? Explain.

c. What proportion of men average less than 400 minutes per month?

d. Estimate the proportion of men that average less than 500 minutes per month.

Page 114
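Parts (c) and (d) of Exercise 3.25 can be approached through cumulative relative frequencies. The Python sketch below is illustrative rather than an official solution: it assumes the relative frequencies .56, .18, .10, and .16 are the ones for men, and for part (d) it assumes observations are spread evenly within the class 400 to <600, so that half of that class lies below 500. The function name proportion_below is hypothetical.

```python
# Class limits (minutes per month) and relative frequencies,
# assumed here to be the column for men in Exercise 3.25.
classes = [(0, 200, 0.56), (200, 400, 0.18), (400, 600, 0.10), (600, 800, 0.16)]


def proportion_below(cutoff, classes):
    """Estimate the proportion of observations below `cutoff`.

    Classes that lie entirely below the cutoff count in full. A class that
    contains the cutoff contributes in proportion to the part of the class
    below it (linear interpolation, an assumption about how the values are
    spread within the class).
    """
    total = 0.0
    for lower, upper, rel_freq in classes:
        if cutoff >= upper:
            total += rel_freq
        elif cutoff > lower:
            total += rel_freq * (cutoff - lower) / (upper - lower)
    return total


print(round(proportion_below(400, classes), 2))  # part (c): exact class boundary
print(round(proportion_below(500, classes), 2))  # part (d): interpolated estimate
```

Because 400 is a class boundary, part (c) needs no interpolation; part (d) is only an estimate, since the table does not say how the values are distributed inside a class.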

Resources for Students*

AVAILABLE ONLINE

■ ThomsonNOW™ Personalized Study.

■ Step-by-step instructions for MINITAB, Excel, TI-83 Graphing Calculator, JMP, and SPSS.

■ Data sets formatted for MINITAB, Excel, SPSS, SAS, JMP, TI-83, and ASCII.

■ Video solutions.

■ Applets used in the Activities.

PRINT

Student Solutions Manual (0-495-11876-1)

Activities Workbook (0-495-11883-4)

MEDIA

ThomsonNOW™ Homework (0-495-39230-8)

Resources for Instructors*

PRINT

Annotated Instructor’s Edition (0-495-11888-5)

Instructor’s Solutions Manual (0-495-11879-6)

Instructor’s Resource Binder (0-495-11892-3)

Test Bank (0-495-11880-X)

Activities Workbook (0-495-11883-4)

MEDIA

Enhanced WebAssign (0-495-10963-0)

ThomsonNOW™ Homework (0-495-39230-8)

ExamView® Computerized Testing (0-495-11886-9)

JoinIn™ on TurningPoint® (0-495-11881-8)

* See the full preface for complete descriptions.

Chapter 1

The Role of Statistics and the Data Analysis Process

© 2006 Jupiterimages/Brand x Pictures/trbphoto

We encounter data and conclusions based on data every day. Statistics is the scientific discipline that provides methods to help us make sense of data. Some people are suspicious of conclusions based on statistical analyses. Extreme skeptics, usually speaking out of ignorance, characterize the discipline as a subcategory of lying—something used for deception rather than for positive ends. However, we believe that statistical methods, used intelligently, offer a set of powerful tools for gaining insight into the world around us. Statistical methods are used in business, medicine, agriculture, social sciences, natural sciences, and applied sciences, such as engineering. The widespread use of statistical analyses in diverse fields has led to increased recognition that statistical literacy—a familiarity with the goals and methods of statistics—should be a basic component of a well-rounded educational program.

The field of statistics teaches us how to make intelligent judgments and informed

decisions in the presence of uncertainty and variation. In this chapter, we consider the

nature and role of variability in statistical settings, introduce some basic terminology,

and look at some simple graphical displays for summarizing data.

1.1 Three Reasons to Study Statistics

Because statistical methods are used to organize, summarize, and draw conclusions from data, a familiarity with statistical techniques and statistical literacy is vital in today’s society. Everyone needs to have a basic understanding of statistics, and many college majors require at least one course in statistics. Statistical literacy is important for three reasons: (1) to be informed, (2) to understand issues and be able to make sound decisions based on data, and (3) to be able to evaluate decisions that affect your life. Let’s explore each reason in detail.

Improve your understanding and save time! Visit http://www.thomsonedu.com/login where you will find:

■ Step-by-step instructions for MINITAB, Excel, TI-83, SPSS, and JMP

■ Video solutions to selected exercises

■ Data sets available for selected examples and exercises

■ Exam-prep pre-tests that build a Personalized Learning Plan based on your results so that you know exactly what to study

■ Help from a live statistics tutor 24 hours a day

■ The First Reason: Being Informed

How do we decide whether claims based on numerical information are reasonable?

We are bombarded daily with numerical information in news, in advertisements, and

even in conversation. For example, here are a few of the items employing statistical

methods that were part of just two weeks’ news.

■ The increasing popularity of online shopping has many consumers using Internet access at work to browse and shop online. In fact, the Monday after Thanksgiving has been nicknamed “Cyber Monday” because of the large increase in online purchases that occurs on that day. Data from a large-scale survey conducted in early November 2005 by a market research firm were used to compute estimates of the percent of men and women who shop online while at work. The resulting estimates probably won’t make most employers happy—42% of the men and 32% of the women in the sample were shopping online at work! (Detroit Free Press and San Luis Obispo Tribune, November 26, 2005)

■ A story in the New York Times titled “Students Ace State Tests, but Earn D’s From U.S.” investigated discrepancies between state and federal standardized test results. When researchers compared state test results to the most recent results on the National Assessment of Educational Progress (NAEP), they found that large differences were common. For example, one state reported 89% of fourth graders were proficient in reading based on the state test, while only 18% of fourth graders in that state were considered proficient in reading on the federal test! An explanation of these large discrepancies and potential consequences was discussed. (New York Times, November 26, 2005)

■ Can dogs help patients with heart failure by reducing stress and anxiety? One of the first scientific studies of the effect of therapeutic dogs found that a measure of anxiety decreased by 24% for heart patients visited by a volunteer and dog, but only by 10% for patients visited by just the volunteer. Decreases were also noted in measures of stress and heart and lung pressure, leading researchers to conclude that the use of therapeutic dogs is beneficial in the treatment of heart patients. (San Luis Obispo Tribune, November 16, 2005)

■ Late in 2005, those eligible for Medicare had to decide which, if any, of the many complex new prescription medication plans was right for them. To assist with this decision, a program called PlanFinder that compares available options was made available online. But are seniors online? Based on a survey conducted by the Los Angeles Times, it was estimated that the percentage of senior citizens who go online is only between 23% and 30%, causing concern over whether providing only an online comparison is an effective way to assist seniors with this important decision. (Los Angeles Times, November 27, 2005)

■ Are kids ruder today than in the past? An article titled “Kids Gone Wild” summarized data from a survey conducted by the Associated Press. Nearly 70% of those who participated in the survey said that people were ruder now than 20 years ago, with kids being the biggest offenders. As evidence that this is a serious problem, the author of the article also referenced a 2004 study conducted by Public Agenda, a public opinion research group. That study indicated that more than one third of teachers had either seriously considered leaving teaching or knew a colleague who left because of intolerable student behavior. (New York Times, November 27, 2005)

■ When people take a vacation, do they really leave work behind? Data from a poll conducted by Travelocity led to the following estimates: Approximately 40% of travelers check work email while on vacation, about 33% take cell phones on vacation in order to stay connected with work, and about 25% bring a laptop computer on vacation. The travel industry is paying attention—hotels, resorts, and even cruise ships are now making it easier for “vacationers” to stay connected to work. (San Luis Obispo Tribune, December 1, 2005)

■ How common is domestic violence? Based on interviews with 24,000 women in 10 different countries, a study conducted by the World Health Organization found that the percentage of women who have been abused by a partner varied widely—from 15% of women in Japan to 71% of women in Ethiopia. Even though the domestic violence rate differed dramatically from country to country, in all of the countries studied women who were victims of domestic violence were about twice as likely as other women to be in poor health, even long after the violence had stopped. (San Francisco Chronicle, November 25, 2005)

■ Does it matter how long children are bottle-fed? Based on a study of 2121 children between the ages of 1 and 4, researchers at the Medical College of Wisconsin concluded that there was an association between iron deficiency and the length of time that a child is bottle-fed. They found that children who were bottle-fed between the ages of 2 and 4 were three times more likely to be iron deficient than those who stopped by the time they were 1 year old. (Milwaukee Journal Sentinel and San Luis Obispo Tribune, November 26, 2005)

■ Parental involvement in schools is often regarded as an important factor in student achievement. However, data from a study of low-income public schools in California led researchers to conclude that other factors, such as prioritizing student achievement, encouraging teacher collaboration and professional development, and using assessment data to improve instruction, had a much greater impact on the schools’ Academic Performance Index. (Washington Post and San Francisco Chronicle, November 26, 2005)

To be an informed consumer of reports such as those described above, you must be

able to do the following:

1. Extract information from tables, charts, and graphs.

2. Follow numerical arguments.

3. Understand the basics of how data should be gathered, summarized, and analyzed

to draw statistical conclusions.

Your statistics course will help prepare you to perform these tasks.

■ The Second Reason: Making Informed Judgments

Throughout your personal and professional life, you will need to understand statistical information and make informed decisions using this information. To make these

decisions, you must be able to do the following:

1. Decide whether existing information is adequate or whether additional information

is required.

2. If necessary, collect more information in a reasonable and thoughtful way.

3. Summarize the available data in a useful and informative manner.


4. Analyze the available data.

5. Draw conclusions, make decisions, and assess the risk of an incorrect decision.

People informally use these steps to make everyday decisions. Should you go out for

a sport that involves the risk of injury? Will your college club do better by trying to

raise funds with a benefit concert or with a direct appeal for donations? If you choose

a particular major, what are your chances of finding a job when you graduate? How

should you select a graduate program based on guidebook ratings that include information on percentage of applicants accepted, time to obtain a degree, and so on? The

study of statistics formalizes the process of making decisions based on data and provides the tools for accomplishing the steps listed.

■ The Third Reason: Evaluating Decisions That Affect Your Life

You will need to make informed decisions based on data, but other people will also

use statistical methods to make decisions that affect you as an individual. An understanding of statistical techniques will allow you to question and evaluate decisions that affect your well-being. Some examples are:

■ Many companies now require drug screening as a condition of employment. With these screening tests there is a risk of a false-positive reading (incorrectly indicating drug use) or a false-negative reading (failure to detect drug use). What are the consequences of a false result? Given the consequences, is the risk of a false result acceptable?

■ Medical researchers use statistical methods to make recommendations regarding the choice between surgical and nonsurgical treatment of such diseases as coronary heart disease and cancer. How do they weigh the risks and benefits to reach such a recommendation?

■ University financial aid offices survey students on the cost of going to school and collect data on family income, savings, and expenses. The resulting data are used to set criteria for deciding who receives financial aid. Are the estimates they use accurate?

■ Insurance companies use statistical techniques to set auto insurance rates, although some states restrict the use of these techniques. Data suggest that young drivers have more accidents than older ones. Should laws or regulations limit how much more young drivers pay for insurance? What about the common practice of charging higher rates for people who live in urban areas?

An understanding of elementary statistical methods can help you to evaluate

whether important decisions such as the ones just mentioned are being made in a reasonable way.

We hope that this textbook will help you to understand the logic behind statistical

reasoning, prepare you to apply statistical methods appropriately, and enable you to

recognize when statistical arguments are faulty.

1.2 The Nature and Role of Variability

Statistics is a science whose focus is on collecting, analyzing, and drawing conclusions

from data. If we lived in a world where all measurements were identical for every individual, all three of these tasks would be simple. Imagine a population consisting of

all students at a particular university. Suppose that every student took the same number of units, spent exactly the same amount of money on textbooks this semester, and

favored increasing student fees to support expanding library services. For this population, there is no variability in the number of units, amount spent on books, or student

opinion on the fee increase. A researcher studying a sample from this population to

draw conclusions about these three variables would have a particularly easy task. It

would not matter how many students the researcher included in the sample or how the

sampled students were selected. In fact, the researcher could collect information on

number of units, amount spent on books, and opinion on the fee increase by just stopping the next student who happened to walk by the library. Because there is no variability in the population, this one individual would provide complete and accurate information about the population, and the researcher could draw conclusions based on

the sample with no risk of error.
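The point of this thought experiment can be checked with a few lines of Python. The sketch below is only an illustration: both populations are hypothetical (the unit loads of 9, 12, 15, and 18 are invented for the example, not taken from any study), and the helper name one_student_errors is our own. For every possible one-student sample, it computes how far that student's value falls from the true population mean.

```python
import statistics

def one_student_errors(population):
    """Error made when the population mean is estimated from each
    possible sample consisting of a single student."""
    true_mean = statistics.mean(population)
    return [abs(value - true_mean) for value in population]

# Hypothetical population with no variability:
# every one of 1000 students takes exactly 15 units.
no_variability = [15] * 1000

# Hypothetical population with variability:
# unit loads of 9, 12, 15, and 18 are equally common.
with_variability = [9, 12, 15, 18] * 250

# Largest error any single-student sample could produce in each case.
print("No variability:  ", max(one_student_errors(no_variability)))
print("With variability:", max(one_student_errors(with_variability)))
```

In the first population, every one-student sample gives exactly the right answer, so the choice of student is irrelevant. In the second, the answer depends on which student happens to walk by, which is why sample size and the method of selection matter once variability is present.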

The situation just described is obviously unrealistic. Populations with no variability are exceedingly rare, and they are of little statistical interest because they present

no challenge! In fact, variability is almost universal. It is variability that makes life

(and the life of a statistician, in particular) interesting. We need to understand variability to be able to collect, analyze, and draw conclusions from data in a sensible way.

One of the primary uses of descriptive statistical methods is to increase our understanding of the nature of variability in a population.

Examples 1.1 and 1.2 illustrate how an understanding of variability is necessary

to draw conclusions based on data.

Example 1.1 If the Shoe Fits

The graphs in Figure 1.1 are examples of a type of graph called a histogram. (The construction and interpretation of such graphs is discussed in Chapter 3.) Figure 1.1(a) shows the distribution of the heights of female basketball players who played at a particular university between 1990 and 1998. The height of each bar in the graph indicates how many players’ heights were in the corresponding interval. For example, 40 basketball players had heights between 72 in. and 74 in., whereas only 2 players had heights between 66 in. and 68 in. Figure 1.1(b) shows the distribution of heights for members of the women’s gymnastics team over the same period. Both histograms are based on the heights of 100 women.

Figure 1.1 Histograms of heights (in inches) of female athletes: (a) basketball players; (b) gymnasts. (Both panels plot Frequency against Height, with the height axis running from 58 in. to 78 in.)

The first histogram shows that the heights of female basketball players varied,

with most heights falling between 68 in. and 76 in. In the second histogram we see

that the heights of female gymnasts also varied, with most heights in the range of

60 in. to 72 in. It is also clear that there is more variation in the heights of the gymnasts than in the heights of the basketball players, because the gymnast histogram

spreads out more about its center than does the basketball histogram.

Now suppose that a tall woman (5 ft 11 in.) tells you she is looking for her sister

who is practicing with her team at the gym. Would you direct her to where the basketball team is practicing or to where the gymnastics team is practicing? What reasoning would you use to decide? If you found a pair of size 6 shoes left in the locker

room, would you first try to return them by checking with members of the basketball

team or the gymnastics team?

You probably answered that you would send the woman looking for her sister

to the basketball practice and that you would try to return the shoes to a gymnastics

team member. To reach these conclusions, you informally used statistical reasoning

that combined your own knowledge of the relationship between heights of siblings

and between shoe size and height with the information about the distributions of

heights presented in Figure 1.1. You might have reasoned that heights of siblings

tend to be similar and that a height as great as 5 ft 11 in., although not impossible,

would be unusual for a gymnast. On the other hand, a height of 5 ft 11 in.

would be a common occurrence for a basketball player. Similarly, you might have

reasoned that tall people tend to have bigger feet and that short people tend to have

smaller feet. The shoes found were a small size, so it is more likely that they belong