MACHINE LEARNING AND DATA MINING

Final syllabus topics and solutions

https://drive.google.com/drive/folders/1CoNE3BkgOJNj6q9lhPhjcsqyK0fYDUAG?usp=sharing

TASNIM

C191267

Contents

1 Segment 4
1.1 Data Warehouse Modeling
1.1.1 Data Cube and OLAP
1.1.2 Data Warehouse Design and Usage
2 Classification
2.1.1 Basic Concepts
2.1.2 Decision Tree Induction
2.1.3 Bayes Classification Methods
2.1.4 Rule-Based Classification
2.1.5 Model Evaluation and Selection
3 Classification Advanced Topics
3.1.1 Techniques to Improve
3.1.2 Classification Accuracy
3.1.3 Ensemble Methods
3.1.4 Bayesian Belief Networks
3.1.5 Classification by Backpropagation
3.1.6 Support Vector Machines
3.1.7 Lazy Learners (or Learning from Your Neighbors)
3.1.8 Other Classification Methods
3.2 Cluster Analysis
3.2.1 Basic Concepts
3.2.2 Partitioning Methods
3.2.3 Hierarchical Methods
3.2.4 Density-Based Methods deletion
4 Segment 5
4.1 Outliers Detection and Analysis
4.2 Outliers Detection Methods
4.2.1 Mining Contextual and Collective
5 From class lecture
5.1 K-Nearest Neighbor (KNN) Algorithm for Machine Learning
5.2 Apriori vs FP-Growth in Market Basket Analysis – A Comparative Guide
5.3 Random Forest Algorithm
5.4 Regression Analysis in Machine learning
5.5 Confusion Matrix in Machine Learning
6 Exercise

1 Segment 4

1.1 Data Warehouse Modeling:

1.1.1 Data Cube and OLAP,

1.1.2 Data Warehouse Design and Usage

1. a) What do you mean by a data warehouse? If you have a proper database, do you still need a data warehouse? Justify your answer.

b) Consider Agora Super Shop. Based on "Agora", develop and draw a sample data cube.

c) Why do we need a data mart?

1. a) Draw the multi-tiered architecture of a data warehouse and explain briefly. [4]

b) Compare star and snowflake schemas with necessary figures. [3]

c) Explain the different choices for data cube materialization. [3]

What Is a Data Warehouse?

Tasnim (C191267)


Data warehousing provides architectures and tools for business executives to systematically organize, understand, and use their data to make

strategic decisions. Data warehouse systems are valuable tools in today’s competitive, fast-evolving world. In the last several years, many firms

have spent millions of dollars in building enterprise-wide data warehouses. Many people feel that with competition mounting in every industry,

data warehousing is the latest must-have marketing weapon—a way to retain customers by learning more about their needs.

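A data warehouse is commonly defined (following W. H. Inmon) as a subject-oriented, integrated, time-variant, and nonvolatile collection of data in support of management's decision-making process. Let’s take a closer look at each of these key features.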

• Subject-oriented: A data warehouse is organized around major subjects such as customer, supplier, product, and sales. Rather than concentrating on the day-to-day operations and transaction processing of an organization, a data warehouse focuses on the modeling and analysis of data for decision makers. Hence, data warehouses typically provide a simple and concise view of particular subject issues by excluding data that are not useful in the decision support process.

• Integrated: A data warehouse is usually constructed by integrating multiple heterogeneous sources, such as relational databases, flat files, and online transaction records. Data cleaning and data integration techniques are applied to ensure consistency in naming conventions, encoding structures, attribute measures, and so on.

• Time-variant: Data are stored to provide information from a historical perspective (e.g., the past 5–10 years). Every key structure in the data warehouse contains, either implicitly or explicitly, a time element.

• Nonvolatile: A data warehouse is always a physically separate store of data transformed from the application data found in the operational environment. Due to this separation, a data warehouse does not require transaction processing, recovery, and concurrency control mechanisms. It usually requires only two operations in data accessing: initial loading of data and access of data.

Differences between Operational Database Systems and Data Warehouses

Because most people are familiar with commercial relational database systems, it is easy to understand what a data warehouse is by comparing these two kinds of systems. The major task of online operational database systems is to perform online transaction and query processing. These systems are called online transaction processing (OLTP) systems. They cover most of the day-to-day operations of an organization, such as purchasing, inventory, manufacturing, banking, payroll, registration, and accounting. Data warehouse systems, on the other hand, serve users or knowledge workers in the role of data analysis and decision making. Such systems can organize and present data in various formats in order to accommodate the diverse needs of different users. These systems are known as online analytical processing (OLAP) systems.

The major distinguishing features of OLTP and OLAP are summarized as follows:

• Users and system orientation: An OLTP system is customer-oriented and is used for transaction and query processing by clerks, clients, and information technology professionals. An OLAP system is market-oriented and is used for data analysis by knowledge workers, including managers, executives, and analysts.

• Data contents: An OLTP system manages current data that, typically, are too detailed to be easily used for decision making. An OLAP system manages large amounts of historic data, provides facilities for summarization and aggregation, and stores and manages information at different levels of granularity. These features make the data easier to use for informed decision making.

But, Why Have a Separate Data Warehouse?

Reasons for having a separate data warehouse:

1. Performance: Operational databases are optimized for specific tasks, while data warehouse queries are more complex and

computationally intensive. Processing OLAP queries directly on operational databases would degrade performance for

operational tasks.

2. Concurrency: Operational databases support concurrent transactions and employ concurrency control mechanisms. Applying

these mechanisms to OLAP queries would hinder concurrent transactions and reduce OLTP throughput.

3. Data Structures and Uses: Operational databases store detailed raw data, while data warehouses require historic data for

decision support. Operational databases lack complete data for decision making, requiring consolidation from diverse sources

in data warehouses.

Although separate databases are necessary now, vendors are working to optimize operational databases for OLAP queries, potentially

reducing the separation between OLTP and OLAP systems.


Data Warehousing: A Multitiered Architecture

Data warehouses often adopt a three-tier architecture, as presented in Figure 4.1.

[Figure 4.1: Three-tier data warehousing architecture (one version from the slides, one from the book)]

The three-tier data warehousing architecture consists of a bottom tier with a warehouse database server, a middle tier with an OLAP server, and

a top tier with front-end client tools.

The bottom tier, implemented as a relational database system, handles data extraction, cleaning, transformation, and loading functions. It

collects data from operational databases or external sources, merges similar data, and updates the data warehouse. Gateways like ODBC, OLEDB,

and JDBC are used for data extraction, and a metadata repository stores information about the data warehouse.

The middle tier consists of an OLAP server, which can be based on a relational OLAP (ROLAP) or a multi-dimensional OLAP (MOLAP) model.

ROLAP maps multidimensional operations to relational operations, while MOLAP directly implements multidimensional data and operations. The

OLAP server is responsible for processing and analyzing the data stored in the data warehouse.

The top tier is the front-end client layer that provides tools for querying, reporting, analysis, and data mining. It includes query and reporting

tools, analysis tools, and data mining tools. These tools allow users to interact with the data warehouse, perform ad-hoc queries, generate

reports, and gain insights through analysis and data mining techniques.

Overall, the three-tier data warehousing architecture facilitates efficient data extraction, processing, and analysis, enabling organizations to make

informed decisions and leverage business intelligence.

Data Warehouse Models: Enterprise Warehouse, Data Mart, and Virtual Warehouse

The three data warehouse models are the enterprise warehouse, data mart, and virtual warehouse.

1. Enterprise Warehouse: An enterprise warehouse collects data from various sources across the entire organization. It provides

corporate-wide data integration and is cross-functional in scope. It contains detailed and summarized data, and its size can

range from gigabytes to terabytes or more. It requires extensive business modeling and may take a long time to design and

build. Examples include a data warehouse that integrates sales, marketing, finance, and inventory data from multiple

departments within a company.


2. Data Mart: A data mart is a subset of data from the enterprise warehouse that is tailored for a specific group of users or a

particular subject area. It focuses on selected subjects, such as sales, marketing, or customer data. Data marts usually contain

summarized data and are implemented on low-cost departmental servers. They have a shorter implementation cycle compared

to enterprise warehouses. Examples include a marketing data mart that provides data on customer demographics, purchase

history, and campaign performance for the marketing department.

3. Virtual Warehouse: A virtual warehouse is a set of views over operational databases. It provides efficient query processing by

materializing only selected summary views. Virtual warehouses are relatively easy to build but require excess capacity on

operational database servers. They can be used when real-time access to operational data is required, but with the benefits of a

data warehouse's querying capabilities.

Pros and Cons of Top-Down and Bottom-Up Approaches:

• Top-Down Approach: In the top-down approach, an enterprise warehouse is developed first, providing a systematic solution

and minimizing integration problems. However, it can be expensive, time-consuming, and lacks flexibility due to the difficulty

of achieving consensus on a common data model for the entire organization.

• Bottom-Up Approach: The bottom-up approach involves designing and deploying independent data marts, offering flexibility,

lower cost, and faster returns on investment. However, integrating these disparate data marts into a consistent enterprise data

warehouse can pose challenges.

It is recommended to develop data warehouse systems in an incremental and evolutionary manner. This involves defining a high-level

corporate data model first, which provides a consistent view of data across subjects and reduces future integration problems. Then,

independent data marts can be implemented in parallel with the enterprise warehouse, gradually expanding the data warehouse

ecosystem.


Data Cube: A Multidimensional Data Model:

What Are the Elements of a Data Cube?

Here is a summary of the data cube terminology, starting from the definition of a data cube itself:

• A data cube is a multi-dimensional data structure.

• A data cube is characterized by its dimensions (e.g., Products, States, Date).

• Each dimension has a set of members or values (for example, the members of the Products dimension are T-Shirt, Shirt, Jeans, and Jackets).

• The dimensions of a cube allow for a concept hierarchy (e.g., the T-shirt member of the Products dimension can have its own lower level, such as T-shirt brands).

• All dimensions intersect to identify a fact, the finest-grained part of the cube.

• A data cube, such as sales, allows data to be modeled and viewed in multiple dimensions.

• Dimension tables describe each dimension, such as item (item_name, brand, type) or time (day, week, month, quarter, year).

• The fact table contains measures (such as dollars_sold) and keys to each of the related dimension tables.

• In data warehousing literature, an n-D base cube is called a base cuboid. The topmost 0-D cuboid, which holds the highest level of summarization, is called the apex cuboid. The lattice of cuboids forms a data cube.
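The lattice of cuboids can be made concrete by enumerating every group-by subset of the dimensions. The sketch below assumes a cube with the four dimensions time, item, location, and supplier (a hypothetical choice for illustration) and no concept hierarchies, so it produces 2^n cuboids, from the 4-D base cuboid down to the 0-D apex cuboid.

```python
from itertools import combinations

def cuboid_lattice(dimensions):
    """Return every cuboid (group-by set) of an n-D cube, base cuboid first, apex cuboid last.

    Assumes no concept hierarchies, so an n-D cube has 2**n cuboids.
    """
    lattice = []
    for k in range(len(dimensions), -1, -1):   # k = number of dimensions kept in the cuboid
        for dims in combinations(dimensions, k):
            lattice.append(dims)
    return lattice

dims = ["time", "item", "location", "supplier"]  # hypothetical example dimensions
lattice = cuboid_lattice(dims)
print(len(lattice))   # 16
print(lattice[0])     # ('time', 'item', 'location', 'supplier')  (base cuboid)
print(lattice[-1])    # ()  (apex cuboid)
```

When dimensions do have concept hierarchies, the count grows to the product of (L_i + 1) over all dimensions, where L_i is the number of levels of dimension i; this is why fully materializing every cuboid quickly becomes expensive.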

What Are the Data Cube Operations?

Data cubes are a very convenient tool whenever one needs to build summaries or extract certain portions of the entire dataset. We will

cover the following:

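• Roll-up: aggregates data by climbing up a concept hierarchy (e.g., from city to country) or by removing a dimension.

• Drill-down: the reverse of roll-up; navigates from less detailed to more detailed data by stepping down a hierarchy (e.g., from quarter to month) or by adding a dimension.

• Slicing: selects a single value on one dimension, giving a subcube with one fewer dimension.

• Dicing: selects a subset of values on two or more dimensions.

• Pivoting: rotates the data axes to give an alternative presentation of the same data.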
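The operations can be tried on a toy cube. The sketch below stores a small, hypothetical super-shop sales cube as a Python dict keyed by (item, branch, quarter) with dollars_sold as the measure; the items, branch names, and figures are invented for illustration. Roll-up here is done by dimension reduction (summing a dimension away), not by climbing a concept hierarchy.

```python
from collections import defaultdict

# Hypothetical base cuboid for a small super shop: (item, branch, quarter) -> dollars_sold
DIMS = ("item", "branch", "quarter")
sales = {
    ("Rice", "BranchA", "Q1"): 120, ("Rice", "BranchA", "Q2"): 150,
    ("Rice", "BranchB", "Q1"): 90,  ("Rice", "BranchB", "Q2"): 110,
    ("Milk", "BranchA", "Q1"): 60,  ("Milk", "BranchA", "Q2"): 80,
    ("Milk", "BranchB", "Q1"): 70,  ("Milk", "BranchB", "Q2"): 40,
}

def roll_up(cube, keep):
    """Sum the measure over every dimension not listed in `keep` (roll-up by dimension reduction)."""
    idx = [DIMS.index(d) for d in keep]
    out = defaultdict(int)
    for key, value in cube.items():
        out[tuple(key[i] for i in idx)] += value
    return dict(out)

def slice_cube(cube, dim, value):
    """Fix one dimension to a single value; the resulting keys have one fewer dimension."""
    i = DIMS.index(dim)
    return {k[:i] + k[i + 1:]: v for k, v in cube.items() if k[i] == value}

def dice(cube, selections):
    """Keep only cells whose values fall in the chosen subset for each selected dimension."""
    return {k: v for k, v in cube.items()
            if all(k[DIMS.index(d)] in allowed for d, allowed in selections.items())}

print(roll_up(sales, ["item"]))                  # {('Rice',): 470, ('Milk',): 250}
print(slice_cube(sales, "quarter", "Q1"))
print(dice(sales, {"item": {"Rice"}, "branch": {"BranchA"}}))
```

Drill-down is simply going back from a rolled-up result (such as the per-item totals) to the finer cells, and pivoting only reorders the axes for display, so neither needs separate code in this sketch.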

Advantages of data cubes:

• Multi-dimensional analysis

• Interactivity

• Speed and efficiency

• Data aggregation

• Helps in giving a summarized view of data.

• Data cubes store large data in a simple way.

• Data cube operations provide quick and better analysis.

• Improved query performance, since precomputed aggregates can answer queries without scanning the detailed data.

Disadvantages of data cubes:

• Complexity

• Data size limitations

• Performance issues

• Data integrity

• Cost

• Inflexibility

Stars, Snowflakes, and Fact Constellations: Schemas for Multidimensional Data Models

A schema is a logical description of the entire database. It includes the name and description of records of all record types, including all associated data items and aggregates. Much like a database, a data warehouse also needs to maintain a schema. A database uses the relational model, while a data warehouse uses the Star, Snowflake, and Fact Constellation schemas. In this section, we discuss the schemas used in a data warehouse.

Star Schema:

• Each dimension in a star schema is represented with only one dimension table.

• This dimension table contains the set of attributes.

• The following diagram shows the sales data of a company with respect to the four dimensions, namely time, item, branch, and location.

• There is a fact table at the center. It contains the keys to each of the four dimensions.

• The fact table also contains the measures, namely dollars_sold and units_sold.
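A star schema query joins the central fact table to its dimension tables through the foreign keys and then aggregates a measure. The sketch below uses plain Python dicts and lists with hypothetical rows; only the table and attribute names (time, item, branch, location, dollars_sold, units_sold) follow the text, and only two of the four dimensions are joined to keep it short.

```python
from collections import defaultdict

# Dimension tables: key -> attributes (hypothetical rows)
item_dim = {1: {"item_name": "Shirt", "type": "Clothing"},
            2: {"item_name": "Jeans", "type": "Clothing"},
            3: {"item_name": "Rice", "type": "Grocery"}}
location_dim = {10: {"city": "Dhaka"}, 20: {"city": "Chittagong"}}

# Fact table: one foreign key per dimension plus the measures
sales_fact = [
    {"time_key": 1, "item_key": 1, "branch_key": 1, "location_key": 10, "dollars_sold": 40, "units_sold": 2},
    {"time_key": 1, "item_key": 3, "branch_key": 1, "location_key": 10, "dollars_sold": 15, "units_sold": 5},
    {"time_key": 2, "item_key": 2, "branch_key": 2, "location_key": 20, "dollars_sold": 60, "units_sold": 3},
]

def dollars_by(fact_rows, group_fn):
    """Look up each fact row's dimensions via group_fn and sum dollars_sold per resulting group."""
    totals = defaultdict(int)
    for row in fact_rows:
        totals[group_fn(row)] += row["dollars_sold"]
    return dict(totals)

# Total dollars sold per (item type, city): one key lookup per dimension, then aggregate
result = dollars_by(sales_fact,
                    lambda r: (item_dim[r["item_key"]]["type"], location_dim[r["location_key"]]["city"]))
print(result)  # {('Clothing', 'Dhaka'): 40, ('Grocery', 'Dhaka'): 15, ('Clothing', 'Chittagong'): 60}
```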

Snowflake Schema

• Some dimension tables in the Snowflake schema are normalized.

• The normalization splits up the data into additional tables.

• Unlike the Star schema, the dimension tables in a snowflake schema are normalized. For example, the item dimension table of the star schema is normalized and split into two dimension tables, namely the item and supplier tables.

• Now the item dimension table contains the attributes item_key, item_name, type, brand, and supplier_key.

• The supplier_key is linked to the supplier dimension table. The supplier dimension table contains the attributes supplier_key and supplier_type.
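In the snowflake version, a question about supplier_type needs one extra hop: from the fact table to item, then from item to supplier through supplier_key. The sketch below uses hypothetical rows, with Python dicts standing in for the normalized tables, to show that extra lookup.

```python
from collections import defaultdict

# Normalized dimension tables (hypothetical rows)
supplier_dim = {100: {"supplier_type": "Local"}, 200: {"supplier_type": "Import"}}
item_dim = {
    1: {"item_name": "Shirt", "type": "Clothing", "brand": "X", "supplier_key": 100},
    2: {"item_name": "Jeans", "type": "Clothing", "brand": "Y", "supplier_key": 200},
}
sales_fact = [
    {"item_key": 1, "dollars_sold": 40},
    {"item_key": 2, "dollars_sold": 60},
    {"item_key": 1, "dollars_sold": 25},
]

def dollars_by_supplier_type(facts):
    """Sum dollars_sold per supplier_type by following fact -> item -> supplier."""
    totals = defaultdict(int)
    for row in facts:
        item = item_dim[row["item_key"]]                # hop 1: fact table -> item table
        supplier = supplier_dim[item["supplier_key"]]   # hop 2: item table -> supplier table
        totals[supplier["supplier_type"]] += row["dollars_sold"]
    return dict(totals)

print(dollars_by_supplier_type(sales_fact))  # {'Local': 65, 'Import': 60}
```

In a star schema, supplier_type would be stored directly in the item table, saving the second lookup at the cost of repeating it on every item row; that trade-off (less redundancy versus more joins) is the core difference between the two schemas.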

Fact Constellation Schema

• A fact constellation has multiple fact tables. It is also known as a galaxy schema.

• The following diagram shows two fact tables, namely sales and shipping.

• The sales fact table is the same as that in the star schema.

• The shipping fact table has five dimensions, namely item_key, time_key, shipper_key, from_location, and to_location.

• The shipping fact table also contains two measures, namely dollars_cost and units_shipped.

• It is also possible to share dimension tables between fact tables. For example, the time, item, and location dimension tables are shared between the sales and shipping fact tables.

Extraction, Transformation, and Loading (ETL)

▪ Data extraction: get data from multiple, heterogeneous, and external sources

▪ Data cleaning: detect errors in the data and rectify them when possible

▪ Data transformation: convert data from legacy or host format to warehouse format

▪ Load: sort, summarize, consolidate, compute views, check integrity, and build indices and partitions

▪ Refresh: propagate the updates from the data sources to the warehouse
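The ETL steps can be illustrated with a toy pipeline. The sketch below assumes two hypothetical sources, a list of dicts standing in for an operational database and CSV text standing in for a flat file, whose field names and date formats differ (the CSV date is assumed to be dd/mm/yyyy). Each function maps to one step in the list above, and the "warehouse" is just an in-memory list.

```python
import csv
import io

# Hypothetical sources with inconsistent naming and formats
pos_db_rows = [{"ItemName": "rice ", "Amount": "120.5", "Date": "2023-01-05"},
               {"ItemName": "Milk", "Amount": "", "Date": "2023-01-06"}]
branch_csv = "item,amount_taka,day\nRice,80,05/01/2023\n"

def extract():
    """Extraction: pull rows from every source into one list with common field names."""
    rows = [dict(r, source="pos_db") for r in pos_db_rows]
    for r in csv.DictReader(io.StringIO(branch_csv)):
        rows.append({"ItemName": r["item"], "Amount": r["amount_taka"],
                     "Date": r["day"], "source": "branch_csv"})
    return rows

def clean(rows):
    """Cleaning: drop rows with a missing measure and normalize item names."""
    return [dict(r, ItemName=r["ItemName"].strip().title()) for r in rows if r["Amount"].strip()]

def transform(rows):
    """Transformation: convert to one warehouse format (numeric amount, ISO date)."""
    out = []
    for r in rows:
        date = r["Date"]
        if "/" in date:  # assumed dd/mm/yyyy -> yyyy-mm-dd
            d, m, y = date.split("/")
            date = f"{y}-{m}-{d}"
        out.append({"item": r["ItemName"], "amount": float(r["Amount"]), "date": date})
    return out

def load(warehouse, rows):
    """Loading: sort and append; a real load would also check integrity and build indices."""
    warehouse.extend(sorted(rows, key=lambda r: (r["date"], r["item"])))
    return warehouse

warehouse = load([], transform(clean(extract())))
print(warehouse)
```

Refresh is not shown; in this sketch it would amount to re-running the pipeline on new or changed source rows and appending the result.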

2 Classification:

2.1.1 Basic Concepts,

https://www.datacamp.com/blog/classification-machine-learning

2.1.2 Decision Tree Induction,

https://www.softwaretestinghelp.com/decision-tree-algorithm-examples-data-mining/

2.1.3 Bayes Classification Methods,

2.1.3.1 Naïve Bayes Classifier Algorithm

Naïve Bayes algorithm is a supervised learning algorithm, which is based on Bayes' theorem and used for solving classification problems.

It is mainly used in text classification, which involves high-dimensional training datasets.

Naïve Bayes Classifier is one of the simplest and most effective classification algorithms; it helps in building fast machine learning models that can make quick predictions.

It is a probabilistic classifier, which means it predicts on the basis of the probability of an object belonging to each class.

Some popular examples of Naïve Bayes Algorithm are spam filtering, sentiment analysis, and classifying articles.

Why is it called Naïve Bayes?

The name Naïve Bayes is made up of two words, Naïve and Bayes, which can be described as:


Naïve: It is called Naïve because it assumes that the occurrence of a certain feature is independent of the occurrence of other features. For example, if fruit is identified on the basis of color, shape, and taste, then a red, spherical, and sweet fruit is recognized as an apple. Hence each feature individually contributes to identifying it as an apple, without depending on the others.

Bayes: It is called Bayes because it depends on the principle of Bayes' Theorem.

Bayes' Theorem:

Bayes' theorem is also known as Bayes' Rule or Bayes' law, which is used to determine the probability of a hypothesis with prior

knowledge. It depends on the conditional probability.

The formula for Bayes' theorem is given as:

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$$

Where,

P(A|B) is Posterior probability: Probability of hypothesis A on the observed event B.

P(B|A) is Likelihood probability: Probability of the evidence given that the hypothesis is true.

P(A) is Prior Probability: Probability of hypothesis before observing the evidence.

P(B) is Marginal Probability: Probability of Evidence.

Working of Naïve Bayes' Classifier:

Working of Naïve Bayes' Classifier can be understood with the help of the below example:

Suppose we have a dataset of weather conditions and a corresponding target variable "Play". Using this dataset, we need to decide whether we should play or not on a particular day according to the weather conditions. To solve this problem, we follow these steps:

1. Convert the given dataset into frequency tables.

2. Generate a likelihood table by finding the probabilities of the given features.

3. Use Bayes' theorem to calculate the posterior probability.

Problem: If the weather is sunny, should the player play or not?

Solution: To solve this, first consider the following dataset:

No.   Outlook    Play
0     Rainy      Yes
1     Sunny      Yes
2     Overcast   Yes
3     Overcast   Yes
4     Sunny      No
5     Rainy      Yes
6     Sunny      Yes
7     Overcast   Yes
8     Rainy      No
9     Sunny      No
10    Sunny      Yes
11    Rainy      No
12    Overcast   Yes
13    Overcast   Yes

Frequency table for the weather conditions:

Weather    Yes   No
Overcast   5     0
Rainy      2     2
Sunny      3     2
Total      10    4

Likelihood table for the weather conditions:

Weather    No            Yes            P(Weather)
Overcast   0             5              5/14 = 0.36
Rainy      2             2              4/14 = 0.29
Sunny      2             3              5/14 = 0.36
All        4/14 = 0.29   10/14 = 0.71

Applying Bayes' theorem:

P(Yes|Sunny) = P(Sunny|Yes) * P(Yes) / P(Sunny)

P(Sunny|Yes) = 3/10 = 0.3

P(Sunny) = 5/14 = 0.36

P(Yes) = 10/14 = 0.71

So P(Yes|Sunny) = (3/10 * 10/14) / (5/14) = 3/5 = 0.60

P(No|Sunny) = P(Sunny|No) * P(No) / P(Sunny)

P(Sunny|No) = 2/4 = 0.5

P(No) = 4/14 = 0.29

P(Sunny) = 5/14 = 0.36

So P(No|Sunny) = (2/4 * 4/14) / (5/14) = 2/5 = 0.40

As we can see from the above calculation, P(Yes|Sunny) > P(No|Sunny).

Hence, on a sunny day, the player can play the game.
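The same calculation can be reproduced in a few lines. The sketch below hard-codes the 14 (Outlook, Play) records of the weather dataset and uses exact fractions, so the posteriors come out as 3/5 and 2/5 without any rounding error.

```python
from collections import Counter
from fractions import Fraction

# (Outlook, Play) records 0-13 of the weather dataset
data = [("Rainy", "Yes"), ("Sunny", "Yes"), ("Overcast", "Yes"), ("Overcast", "Yes"),
        ("Sunny", "No"), ("Rainy", "Yes"), ("Sunny", "Yes"), ("Overcast", "Yes"),
        ("Rainy", "No"), ("Sunny", "No"), ("Sunny", "Yes"), ("Rainy", "No"),
        ("Overcast", "Yes"), ("Overcast", "Yes")]

n = len(data)
class_counts = Counter(play for _, play in data)          # Yes: 10, No: 4
outlook_counts = Counter(outlook for outlook, _ in data)  # Sunny: 5, Rainy: 4, Overcast: 5
joint_counts = Counter(data)                              # frequency table (outlook, class)

def posterior(outlook, cls):
    """P(cls | outlook) = P(outlook | cls) * P(cls) / P(outlook)."""
    likelihood = Fraction(joint_counts[(outlook, cls)], class_counts[cls])
    prior = Fraction(class_counts[cls], n)
    evidence = Fraction(outlook_counts[outlook], n)
    return likelihood * prior / evidence

p_yes = posterior("Sunny", "Yes")
p_no = posterior("Sunny", "No")
print(p_yes, p_no)                                  # 3/5 2/5
print("Play" if p_yes > p_no else "Don't play")     # Play
```

With a single feature the "naïve" independence assumption never comes into play; with several features, the likelihood would be the product of the per-feature likelihoods for each class.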

Advantages of Naïve Bayes Classifier:

Naïve Bayes is one of the fastest and easiest ML algorithms for predicting the class of a dataset.

It can be used for binary as well as multi-class classification.

It performs well in multi-class predictions compared to many other algorithms.

It is a popular choice for text classification problems.

Disadvantages of Naïve Bayes Classifier:

Naive Bayes assumes that all features are independent or unrelated, so it cannot learn the relationship between features.

Applications of Naïve Bayes Classifier:

It is used for Credit Scoring.

It is used in medical data classification.

It can be used in real-time predictions because Naïve Bayes Classifier is an eager learner.

It is used in Text classification such as Spam filtering and Sentiment analysis.

Types of Naïve Bayes Model:

There are three types of Naive Bayes Model, which are given below:

Gaussian: The Gaussian model assumes that features follow a normal distribution. This means if predictors take continuous values

instead of discrete, then the model assumes that these values are sampled from the Gaussian distribution.

Multinomial: The Multinomial Naïve Bayes classifier is used when the data is multinomially distributed. It is primarily used for document classification problems, i.e., deciding which category a particular document belongs to, such as Sports, Politics, or Education. The classifier uses the frequency of words as the predictors.

Bernoulli: The Bernoulli classifier works similarly to the Multinomial classifier, but the predictor variables are independent Boolean variables, such as whether a particular word is present in a document or not. This model is also popular for document classification tasks.
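For the Gaussian model, the likelihood of a continuous feature value is read off a normal density fitted to each class. The sketch below estimates a per-class mean and variance from hypothetical temperature readings and compares the resulting class scores; it uses one feature and the population variance for simplicity, and it illustrates the likelihood step rather than serving as a full classifier.

```python
import math
from statistics import mean, pvariance

# Hypothetical training data: temperature readings per class
temps = {"Yes": [21.0, 23.0, 22.0, 24.0], "No": [30.0, 32.0, 31.0]}

def gaussian_pdf(x, mu, var):
    """Normal density N(x; mu, var), used as P(x | class) in Gaussian naive Bayes."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def class_scores(x):
    """Unnormalized posterior P(x | c) * P(c) for each class c."""
    total = sum(len(v) for v in temps.values())
    return {c: gaussian_pdf(x, mean(v), pvariance(v)) * len(v) / total
            for c, v in temps.items()}

scores = class_scores(25.0)
print(max(scores, key=scores.get))  # Yes
```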

2.1.4 Rule-Based Classification,

IF-THEN Rules

A rule-based classifier makes use of a set of IF-THEN rules for classification. We can express a rule in the following form:

IF condition THEN conclusion

Let us consider a rule R1,

R1: IF age = youth AND student = yes

THEN buy_computer = yes

Points to remember:

The IF part of the rule is called the rule antecedent or precondition.

The THEN part of the rule is called the rule consequent.

The antecedent part (the condition) consists of one or more attribute tests, and these tests are logically ANDed.

The consequent part consists of the class prediction.

Note: We can also write rule R1 as follows:

R1: (age = youth) ∧ (student = yes) ⇒ (buys_computer = yes)

If the condition holds true for a given tuple, then the antecedent is satisfied.

Rule Extraction

Here we will learn how to build a rule-based classifier by extracting IF-THEN rules from a decision tree.

Points to remember:

To extract a rule from a decision tree:

One rule is created for each path from the root to the leaf node.

To form a rule antecedent, each splitting criterion is logically ANDed.

The leaf node holds the class prediction, forming the rule consequent.
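Rule extraction is a depth-first walk over the tree that ANDs the tests along each root-to-leaf path. The sketch below encodes a small decision tree for buys_computer as nested Python dicts; the tree itself is a hypothetical example in the spirit of rule R1, not one learned from data.

```python
# Internal node: {"attr": name, "branches": {value: subtree}}; leaf: class label string.
tree = {
    "attr": "age",
    "branches": {
        "youth": {"attr": "student", "branches": {"yes": "yes", "no": "no"}},
        "middle_aged": "yes",
        "senior": {"attr": "credit_rating", "branches": {"fair": "yes", "excellent": "no"}},
    },
}

def extract_rules(node, conditions=()):
    """Return one IF-THEN rule per root-to-leaf path of the tree."""
    if isinstance(node, str):  # leaf: its class label becomes the rule consequent
        antecedent = " AND ".join(f"{a} = {v}" for a, v in conditions)
        return [f"IF {antecedent} THEN buys_computer = {node}"]
    rules = []
    for value, child in node["branches"].items():
        # each splitting criterion on the path becomes one ANDed test in the antecedent
        rules.extend(extract_rules(child, conditions + ((node["attr"], value),)))
    return rules

for rule in extract_rules(tree):
    print(rule)
```

The first rule printed is "IF age = youth AND student = yes THEN buys_computer = yes", which is exactly R1; the tree with five leaves yields five rules.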


2.1.5 Model Evaluation and Selection.

https://neptune.ai/blog/ml-model-evaluation-and-selection

3 Classification Advanced Topics:

3.1.1 Techniques to Improve,

3.1.2 classification Accuracy:

3.1.3 Ensemble Methods

Ensemble learning helps improve machine learning results by combining several models. This approach allows the production of

better predictive performance compared to a single model. The basic idea is to learn a set of classifiers (experts) and allow them to vote.

Advantage: Improvement in predictive accuracy.

Disadvantage: It is difficult to understand an ensemble of classifiers.

Why do ensembles work?

Dietterich (2002) showed that ensembles overcome three problems –

Statistical Problem –

The Statistical Problem arises when the hypothesis space is too large for the amount of available data. Hence, there are many

hypotheses with the same accuracy on the data and the learning algorithm chooses only one of them! There is a risk that the accuracy

of the chosen hypothesis is low on unseen data!

Computational Problem –

The Computational Problem arises when the learning algorithm cannot guarantee finding the best hypothesis.

Representational Problem –

The Representational Problem arises when the hypothesis space does not contain any good approximation of the target class(es).

Main Challenge for Developing Ensemble Models?

The main challenge is not to obtain highly accurate base models, but rather to obtain base models which make different kinds of

errors. For example, if ensembles are used for classification, high accuracies can be accomplished if different base models misclassify

different training examples, even if the base classifier accuracy is low.
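A short calculation shows why diversity of errors matters. Assuming n base classifiers for a two-class problem that are each correct with probability p and that make independent errors (an idealized assumption), a majority vote is correct whenever more than half of them are correct, which is a binomial tail sum:

```python
from math import comb

def majority_vote_accuracy(n, p):
    """Accuracy of a majority vote over n (odd) independent binary classifiers, each correct w.p. p."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))

print(round(majority_vote_accuracy(3, 0.7), 3))   # 0.784
print(round(majority_vote_accuracy(3, 0.4), 3))   # 0.352
```

Three independent 70%-accurate classifiers give 78.4% by voting, and adding more such classifiers keeps raising the figure. If the three always erred on the same examples, the vote would stay at 70%. The gain also assumes each base classifier is better than random guessing: with p = 0.4 the vote drops to 35.2%.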

Methods for Independently Constructing Ensembles –

Majority Vote

Bagging and Random Forest

Randomness Injection

Feature-Selection Ensembles

Error-Correcting Output Coding

Methods for Coordinated Construction of Ensembles –

Boosting

Stacking

Reliable Classification: Meta-Classifier Approach

Co-Training and Self-Training

Types of Ensemble Classifier –

Bagging:

Bagging (Bootstrap Aggregation) is used to reduce the variance of a decision tree. Given a set D of d tuples, at each iteration i a training set Di of d tuples is sampled with replacement from D (i.e., a bootstrap sample). Then a classifier model Mi is learned for each training set Di. Each classifier Mi returns its class prediction. The bagged classifier M* counts the votes and assigns the class with the most votes to X (the unknown sample).

Implementation steps of Bagging –

Multiple subsets, each with the same number of tuples as the original data set, are created by selecting observations with replacement.

A base model is created on each of these subsets.


The models are learned in parallel, each from its own training set and independently of the others.

The final predictions are determined by combining the predictions from all the models.
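The bagging steps above can be sketched in plain Python. This is a minimal illustration, not a library implementation. It assumes one numeric attribute per tuple and uses a one-level decision tree (a "decision stump") as the base learner. The helper names `fit_stump` and `bagging` are hypothetical.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a one-level decision tree on a single numeric attribute.

    Tries every observed value as a threshold and keeps the one with the fewest
    training errors. Returns a function that classifies a new value.
    """
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return lambda x: left_label if x <= t else right_label


def bagging(xs, ys, n_models=11, seed=0):
    """Train n_models stumps on bootstrap samples D_i and combine them by majority vote."""
    rng = random.Random(seed)
    d = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap: draw d tuples from D with replacement.
        idx = [rng.randrange(d) for _ in range(d)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        # M*: count the votes of all M_i and return the most frequent class.
        votes = Counter(m(x) for m in models)
        return votes.most_common(1)[0][0]

    return predict


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = ["no", "no", "no", "no", "yes", "yes", "yes", "yes"]
model = bagging(xs, ys)
print(model(1), model(8))
```

An odd number of models is used so that a two-class vote cannot tie.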

Random Forest:

Random Forest is an extension of bagging. Each classifier in the ensemble is a decision tree classifier, generated using a random selection of attributes at each node to determine the split. During classification, each tree votes and the most popular class is returned.

Implementation steps of Random Forest –

Multiple subsets are created from the original data set, selecting observations with replacement.

At each node, a subset of features is selected randomly, and whichever of these features gives the best split is used to split the node. This is repeated iteratively.

Each tree is grown to its largest extent (without pruning).

The above steps are repeated to build n trees, and the prediction is obtained by aggregating the predictions of the n trees (e.g., by majority vote for classification).
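The sketch below shows the node-splitting step that distinguishes a random forest from plain bagging. It is illustrative only. It assumes numeric attributes, binary threshold splits and Gini impurity as the split criterion; the text above does not fix a criterion. `best_split_random_features` is a hypothetical helper name.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split_random_features(rows, labels, m, rng):
    """Return (weighted_gini, feature, threshold) for the best split at one node,
    considering only m features drawn at random, or None if no split is possible."""
    candidates = rng.sample(range(len(rows[0])), m)  # random subset of attributes
    best = None
    for feat in candidates:
        for t in sorted({row[feat] for row in rows}):
            left = [lab for row, lab in zip(rows, labels) if row[feat] <= t]
            right = [lab for row, lab in zip(rows, labels) if row[feat] > t]
            if not left or not right:
                continue  # a split must send tuples both ways
            weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or weighted < best[0]:
                best = (weighted, feat, t)
    return best


rows = [(1, 5), (2, 3), (8, 4), (9, 6)]
labels = ["a", "a", "b", "b"]
print(best_split_random_features(rows, labels, m=2, rng=random.Random(42)))  # → (0.0, 0, 2)
```

Here m = 2 equals the number of features, so every feature is examined. With m = 1, the chosen split depends on which feature the random draw picks. A commonly used heuristic for classification is m ≈ √(number of features).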

3.1.4 Bayesian Belief Networks,

A Bayesian Belief Network is a graphical representation of the probabilistic relationships among a particular set of random variables. Unlike naive Bayes, it does not assume that all attributes are conditionally independent. Instead, it specifies conditional independencies between subsets of variables: each variable is conditionally independent of its non-descendants given its parents. Because of this, the joint probability in a Bayesian Belief Network is derived from conditional probabilities of the form P(attribute | parents), i.e., the probability of an attribute given its parent attributes.

(Note: A classifier assigns data in a collection to desired categories.)

Consider this example:

In the above figure, we have an alarm node ‘A’, installed in the house of a person ‘gfg’. The alarm may ring because of either of two events, burglary ‘B’ and fire ‘F’, which are the parent nodes of the alarm node. The alarm, in turn, is the parent node of two person nodes, ‘P1’ (P1 calls) and ‘P2’ (P2 calls).

When the alarm rings (due to a burglary or a fire), ‘P1’ and ‘P2’ are expected to call person ‘gfg’. However, there are a few drawbacks in this case. Sometimes ‘P1’ may forget to call ‘gfg’ even after hearing the alarm, as he tends to forget things quickly. Similarly, ‘P2’ sometimes fails to call ‘gfg’, as he can only hear the alarm from a certain distance.

Q) Find the probability that ‘P1’ is true (P1 has called ‘gfg’) and ‘P2’ is true (P2 has called ‘gfg’) when the alarm ‘A’ rang, but neither a burglary ‘B’ nor a fire ‘F’ has occurred.


=> P(P1, P2, A, ~B, ~F) [where P1, P2 and A are ‘true’ events, and ‘~B’ and ‘~F’ are ‘false’ events]

[Note: The values mentioned below are not calculated; they are observed values.]

Burglary ‘B’ –

• P (B=T) = 0.001 (‘B’ is true i.e burglary has occurred)

• P (B=F) = 0.999 (‘B’ is false i.e burglary has not occurred)

Fire ‘F’ –

• P (F=T) = 0.002 (‘F’ is true i.e fire has occurred)

• P (F=F) = 0.998 (‘F’ is false i.e fire has not occurred)

Alarm ‘A’ –

| B | F | P (A=T) | P (A=F) |
|---|---|---|---|
| T | T | 0.95 | 0.05 |
| T | F | 0.94 | 0.06 |
| F | T | 0.29 | 0.71 |
| F | F | 0.001 | 0.999 |

The alarm node ‘A’ can be ‘true’ or ‘false’ (i.e., it may or may not have rung). It has two parent nodes, burglary ‘B’ and fire ‘F’, which can each be ‘true’ or ‘false’ (i.e., may or may not have occurred). The table gives the probability of ‘A’ for each combination of their values.

Person ‘P1’ –

| A | P (P1=T) | P (P1=F) |
|---|---|---|
| T | 0.95 | 0.05 |
| F | 0.05 | 0.95 |

The person ‘P1’ node can be ‘true’ or ‘false’ (i.e., P1 may or may not have called the person ‘gfg’). It has one parent node, the alarm ‘A’, which can be ‘true’ or ‘false’ (i.e., may or may not have rung upon a burglary ‘B’ or fire ‘F’).

Person ‘P2’ –

| A | P (P2=T) | P (P2=F) |
|---|---|---|
| T | 0.80 | 0.20 |
| F | 0.01 | 0.99 |

The person ‘P2’ node can be ‘true’ or ‘false’ (i.e., P2 may or may not have called the person ‘gfg’). It has one parent node, the alarm ‘A’, which can be ‘true’ or ‘false’ (i.e., may or may not have rung upon a burglary ‘B’ or fire ‘F’).

Solution: Considering the observed probabilistic scan –

With respect to the question, P(P1, P2, A, ~B, ~F), we need the probability of ‘P1’, which we find with regard to its parent node, alarm ‘A’. Similarly, we find the probability of ‘P2’ with regard to its parent node, alarm ‘A’.

We find the probability of the alarm node ‘A’ with regard to ‘~B’ and ‘~F’, since burglary ‘B’ and fire ‘F’ are the parent nodes of alarm ‘A’.

From the observed probabilistic scan, we can deduce –

P(P1, P2, A, ~B, ~F)

= P(P1 | A) * P(P2 | A) * P(A | ~B, ~F) * P(~B) * P(~F)

= 0.95 * 0.80 * 0.001 * 0.999 * 0.998

≈ 0.000758
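The factorization used in the solution can be checked in a few lines of Python. The dictionaries simply encode the conditional probability tables given above; names such as `P_A_given_BF` and `joint` are illustrative. `joint` multiplies each node's probability given its parents, which is the chain-rule factorization that the network structure allows.

```python
# Priors of the root nodes (values from the tables above).
P_B = {True: 0.001, False: 0.999}
P_F = {True: 0.002, False: 0.998}
# P(A=True | B, F), keyed by (B, F).
P_A_given_BF = {(True, True): 0.95, (True, False): 0.94,
                (False, True): 0.29, (False, False): 0.001}
P_P1_given_A = {True: 0.95, False: 0.05}  # P(P1=True | A)
P_P2_given_A = {True: 0.80, False: 0.01}  # P(P2=True | A)


def bern(p_true, value):
    """Probability of a boolean node taking `value`, given P(node=True)."""
    return p_true if value else 1.0 - p_true


def joint(p1, p2, a, b, f):
    """P(P1=p1, P2=p2, A=a, B=b, F=f) as a product of each node given its parents."""
    return (bern(P_P1_given_A[a], p1)
            * bern(P_P2_given_A[a], p2)
            * bern(P_A_given_BF[(b, f)], a)
            * P_B[b]
            * P_F[f])


print(round(joint(True, True, True, False, False), 6))  # → 0.000758
```

Summing `joint` over all 32 true/false assignments gives 1, which is a quick sanity check that the tables are encoded consistently.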

3.1.5 Classification by Backpropagation,

Backpropagation:

Backpropagation is a widely used algorithm for training feedforward neural networks. It computes the gradient of the loss function with respect to the network weights, far more efficiently than naively computing the gradient with respect to each weight separately. This efficiency makes it possible to use gradient methods, such as gradient descent or stochastic gradient descent, to train multi-layer networks and update the weights to minimize the loss.

The backpropagation algorithm works by computing the gradient of the loss function with respect to each weight via the chain rule. It computes the gradient one layer at a time, iterating backward from the last layer to avoid redundant computation of intermediate terms in the chain rule.

Features of Backpropagation:

• It is the gradient descent method, as used in the simple perceptron network, applied to networks with differentiable units.

• It differs from other networks in the process by which the weights are calculated during the learning period of the network.

• Training is done in three stages:

  – the feed-forward of the input training pattern,

  – the calculation and backpropagation of the error,

  – the update of the weights.


Working of Backpropagation:

Neural networks use supervised learning to generate output vectors from the input vectors that the network operates on. The network compares the generated output with the desired output and computes an error if they do not match. It then adjusts the weights according to this error to bring the output closer to the desired output.

Backpropagation Algorithm:

Step 1: Inputs X arrive through the preconnected path.

Step 2: The input is modeled using weights W, which are usually chosen randomly.

Step 3: Calculate the output of each neuron, from the input layer through the hidden layer to the output layer.

Step 4: Calculate the error in the outputs:

Backpropagation Error = Actual Output – Desired Output

Step 5: From the output layer, go back to the hidden layer to adjust the weights to reduce the error.

Step 6: Repeat the process until the desired output is achieved.

Parameters :

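$x$ = input training vector, $x = (x_1, x_2, \dots, x_n)$.

$t$ = target vector, $t = (t_1, t_2, \dots, t_m)$.

$\delta_k$ = error information term at output unit $Y_k$.

$\delta_j$ = error information term at hidden unit $Z_j$.

$\alpha$ = learning rate.

$v_{0j}$ = bias of hidden unit $Z_j$.

$w_{0k}$ = bias of output unit $Y_k$.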

Training Algorithm :

Step 1: Initialize the weights to small random values.

Step 2: While the stopping condition is false, do Steps 3 to 9.

Step 3: For each training pair, do Steps 4 to 8.

Feed-forward:

Step 4: Each input unit receives the input signal $x_i$ and transmits it to all units in the hidden layer.

Step 5: Each hidden unit $Z_j$ ($j = 1$ to $a$) sums its weighted input signals to calculate its net input

$$z_{in,j} = v_{0j} + \sum_{i=1}^{n} x_i v_{ij}$$

applies its activation function, $z_j = f(z_{in,j})$, and sends this signal to all units in the layer above, i.e., the output units.

Each output unit $Y_k$ ($k = 1$ to $m$) sums its weighted input signals

$$y_{in,k} = w_{0k} + \sum_{j=1}^{a} z_j w_{jk}$$

and applies its activation function to calculate the output signal

$$y_k = f(y_{in,k})$$

Backpropagation Error :

Step 6: Each output unit $Y_k$ ($k = 1$ to $m$) receives the target pattern corresponding to the input pattern, and its error information term is calculated as:

$$\delta_k = (t_k - y_k)\, f'(y_{in,k})$$


Step 7: Each hidden unit $Z_j$ ($j = 1$ to $a$) sums its delta inputs from all units in the layer above:

$$\delta_{in,j} = \sum_{k=1}^{m} \delta_k w_{jk}$$

The error information term is calculated as:

$$\delta_j = \delta_{in,j}\, f'(z_{in,j})$$

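Update of weights and biases:

Step 8: Each output unit $Y_k$ ($k = 1$ to $m$) updates its bias and weights ($j = 1$ to $a$). The weight correction term is given by

$$\Delta w_{jk} = \alpha\, \delta_k\, z_j$$

and the bias correction term is given by $\Delta w_{0k} = \alpha\, \delta_k$. Therefore,

$$w_{jk}(\text{new}) = w_{jk}(\text{old}) + \Delta w_{jk}, \qquad w_{0k}(\text{new}) = w_{0k}(\text{old}) + \Delta w_{0k}$$

Each hidden unit $Z_j$ ($j = 1$ to $a$) updates its bias and weights ($i = 1$ to $n$). The weight correction term is

$$\Delta v_{ij} = \alpha\, \delta_j\, x_i$$

and the bias correction term is

$$\Delta v_{0j} = \alpha\, \delta_j$$

Therefore,

$$v_{ij}(\text{new}) = v_{ij}(\text{old}) + \Delta v_{ij}, \qquad v_{0j}(\text{new}) = v_{0j}(\text{old}) + \Delta v_{0j}$$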

Step 9: Test the stopping condition. For example, training can stop when the error falls below a chosen threshold or when a maximum number of epochs has been reached.
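The training algorithm above (Steps 1 to 9) can be turned into a small runnable sketch with one hidden layer. The text does not fix the following, so they are assumptions of this sketch:

- the activation f is the binary sigmoid, so f'(x) = f(x)(1 − f(x));
- weights are initialized uniformly in [−0.5, 0.5];
- the stopping condition is a fixed number of epochs.

Function names are illustrative.

```python
import math
import random


def f(x):
    """Binary sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-x))


def f_prime(fx):
    """Sigmoid derivative written in terms of its output: f'(x) = f(x) * (1 - f(x))."""
    return fx * (1.0 - fx)


def init_weights(n, a, m, rng):
    """Step 1: small random weights. v[i][j]: input i -> hidden j; w[j][k]: hidden j -> output k."""
    v = [[rng.uniform(-0.5, 0.5) for _ in range(a)] for _ in range(n)]
    v0 = [rng.uniform(-0.5, 0.5) for _ in range(a)]
    w = [[rng.uniform(-0.5, 0.5) for _ in range(m)] for _ in range(a)]
    w0 = [rng.uniform(-0.5, 0.5) for _ in range(m)]
    return v, v0, w, w0


def forward(x, v, v0, w, w0):
    """Steps 4-5: compute hidden outputs z_j and network outputs y_k."""
    a, m = len(v0), len(w0)
    z = [f(v0[j] + sum(x[i] * v[i][j] for i in range(len(x)))) for j in range(a)]
    y = [f(w0[k] + sum(z[j] * w[j][k] for j in range(a))) for k in range(m)]
    return z, y


def train_pair(x, t, v, v0, w, w0, alpha):
    """Steps 4-8 for one training pair; updates the weight lists in place."""
    n, a, m = len(x), len(v0), len(w0)
    z, y = forward(x, v, v0, w, w0)
    # Step 6: error information term at each output unit.
    delta_k = [(t[k] - y[k]) * f_prime(y[k]) for k in range(m)]
    # Step 7: error information term at each hidden unit (uses the old w_jk).
    delta_j = [sum(delta_k[k] * w[j][k] for k in range(m)) * f_prime(z[j]) for j in range(a)]
    # Step 8: weight and bias updates.
    for j in range(a):
        for k in range(m):
            w[j][k] += alpha * delta_k[k] * z[j]
    for k in range(m):
        w0[k] += alpha * delta_k[k]
    for i in range(n):
        for j in range(a):
            v[i][j] += alpha * delta_j[j] * x[i]
    for j in range(a):
        v0[j] += alpha * delta_j[j]


def total_error(data, v, v0, w, w0):
    """Sum over all pairs of 1/2 * sum_k (t_k - y_k)^2."""
    return sum(0.5 * sum((tk - yk) ** 2 for tk, yk in zip(t, forward(x, v, v0, w, w0)[1]))
               for x, t in data)


# Usage: learn logical OR with 2 inputs, 3 hidden units and 1 output unit.
data = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [1])]
v, v0, w, w0 = init_weights(2, 3, 1, random.Random(0))
before = total_error(data, v, v0, w, w0)
for epoch in range(2000):      # Step 9: stop after a fixed number of epochs
    for x, t in data:          # Step 3: loop over the training pairs
        train_pair(x, t, v, v0, w, w0, alpha=0.5)
after = total_error(data, v, v0, w, w0)
print(round(before, 4), round(after, 4))
```

The hidden-layer error terms are computed before any weight is changed, so Step 7 uses the same $w_{jk}$ values that produced the outputs in Step 5.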

Need for Backpropagation:

Backpropagation (“backpropagation of errors”) is very useful for training neural networks. It is fast, simple, and easy to implement. Apart from the number of inputs, it has few parameters that must be set. It is a flexible method because it does not require prior knowledge about the network.

Types of Backpropagation

There are two types of backpropagation networks.

Static backpropagation: Static backpropagation is used in networks designed to map static inputs to static outputs. These networks are capable of solving static classification problems such as OCR (Optical Character Recognition).

Recurrent backpropagation: Recurrent backpropagation is used for fixed-point learning. In recurrent backpropagation, activation is fed forward until a fixed value is reached. Static backpropagation provides an instant mapping, while recurrent backpropagation does not.

Advantages:

It is simple, fast, and easy to program.

It has few parameters to tune apart from the number of inputs.

It is flexible and efficient.

Users do not need to learn any special functions.

Disadvantages:

It is sensitive to noisy data and irregularities. Noisy data can lead to inaccurate results.

Performance is highly dependent on input data.

Training can take a long time.

The matrix-based approach is preferred over a mini-batch.

https://www.javatpoint.com/pytorch-backpropagation-process-in-deep-neural-network

3.1.6 Support Vector Machines,

https://www.geeksforgeeks.org/support-vector-machine-algorithm/

https://www.javatpoint.com/machine-learning-support-vector-machine-algorithm

3.1.7 Lazy Learners (or Learning from Your Neighbors):

KNN is often referred to as a lazy learner. This means that the algorithm does not use the training data points to make any generalizations; in other words, there is no explicit training phase. Because it does not generalize, KNN keeps all the training data. It is a non-parametric learning algorithm because it does not assume anything about the underlying data distribution. Lazy learning is also known as instance-based learning and memory-based learning. It postpones most of the processing and computation until a query or prediction request arrives.

Here, the algorithm stores the training data set in its original form without deriving general rules from it. When a new object arrives for processing, the algorithm searches the training data for the most similar objects and uses them to produce the output, as in the k-nearest neighbors (kNN) algorithm:


In the example above, kNN classifies an unknown point by checking its neighborhood when it arrives as the input.
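The lazy-learning behaviour can be seen in a minimal kNN classifier. In this sketch, "fitting" is just keeping the list of labeled points, and all the work happens when a query arrives. It assumes Euclidean distance (`math.dist`) and majority voting among the k neighbors. The function name and sample data are illustrative.

```python
import math
from collections import Counter


def knn_predict(training_data, query, k=3):
    """Classify `query` by majority vote among its k nearest stored examples.

    There is no training step: `training_data` (a list of (point, label) pairs)
    is simply kept, and all distance computations happen at query time.
    """
    neighbors = sorted(training_data, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]


training_data = [((1, 1), "red"), ((1, 2), "red"), ((2, 1), "red"),
                 ((8, 8), "blue"), ((8, 9), "blue"), ((9, 8), "blue")]
print(knn_predict(training_data, (2, 2)))  # → red
print(knn_predict(training_data, (7, 7)))  # → blue
```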

Difference between eager and lazy learners in data mining

https://www.baeldung.com/cs/lazy-vs-eager-learning

3.1.8 Other Classification Methods

4 Cluster Analysis:

4.1.1 Basic Concepts,

What Is Cluster Analysis?

Cluster analysis, or simply clustering, is the process of partitioning a set of data objects (or observations) into subsets. Each subset is a cluster, such that objects in a cluster are similar to one another, yet dissimilar to objects in other clusters. The set of clusters resulting from a cluster analysis can be referred to as a clustering. In this context, different clustering methods may generate different clusterings on the same data set. The partitioning is not performed by humans, but by the clustering algorithm. Hence, clustering is useful in that it can lead to the discovery of previously unknown groups within the data.

The basic concept of clustering in data mining is to group similar data objects together based on their characteristics or attributes.

However, there are several requirements that need to be addressed for effective clustering:

1. Scalability: Clustering algorithms should be able to handle large datasets containing millions or billions of objects. Scalable algorithms are necessary to avoid the biased results that may occur when clustering only a sample of the data.

2. Handling Different Types of Attributes: Clustering algorithms should be able to handle various types of data, including numeric, binary, nominal, and ordinal data, as well as complex data types such as graphs, sequences, images, and documents.

3. Discovery of Clusters with Arbitrary Shape: Algorithms should be capable of detecting clusters of any shape, not just spherical clusters. This is important in real-world scenarios, where clusters can have diverse and non-standard shapes.

4. Reduced Dependency on Domain Knowledge: Clustering algorithms should minimize the need for users to provide domain knowledge and input parameters. The quality of clustering should not rely heavily on user-defined parameters, which can be challenging to determine, especially for high-dimensional datasets.

5. Robustness to Noisy Data: Clustering algorithms should be robust to outliers, missing data, and errors commonly found in real-world datasets. Noise in the data should not significantly impact the quality of the resulting clusters.

6. Incremental Clustering and Insensitivity to Input Order: Algorithms should be capable of incorporating incremental updates and new data into existing clustering structures without requiring a complete recomputation. Additionally, the order in which data objects are presented should not drastically affect the resulting clustering.

7. Capability of Clustering High-Dimensional Data: Clustering algorithms should be able to handle datasets with a large number of dimensions or attributes, even when the data is sparse and highly skewed.

8. Constraint-Based Clustering: Clustering algorithms should be able to consider and satisfy various constraints imposed by real-world applications. Constraints may include spatial constraints, network constraints, or specific requirements related to the clustering task.

9. Interpretability and Usability: Clustering results should be interpretable, comprehensible, and usable. Clustering should be tied to specific semantic interpretations and application goals, allowing users to understand and apply the results effectively.

These requirements highlight the challenges and considerations involved in developing clustering algorithms that can effectively analyze and group data objects based on their similarities and characteristics.

4.1.2 Partitioning Methods,

The simplest and most fundamental version of cluster analysis is partitioning, which organizes the objects of a set into several exclusive groups or clusters. To keep the problem specification concise, we can assume that the number of clusters is given as background knowledge. This parameter is the starting point for partitioning methods. Formally, given a data set, D, of n objects, and k, the number of clusters to form, a partitioning algorithm organizes the objects into k partitions (k ≤ n), where each partition represents a cluster. The clusters are formed to optimize an objective partitioning criterion, such as a dissimilarity function based on distance, so that the objects within a cluster are “similar” to one another and “dissimilar” to objects in other clusters in terms of the data set attributes.

The most well-known and commonly used partitioning methods are:

▪ The k-Means Method

▪ The k-Medoids Method

Centroid-Based Technique: The k-Means Method

The k-means algorithm takes the input parameter, k, and partitions a set of n objects into k clusters so that the resulting intracluster similarity is high but the intercluster similarity is low. Cluster similarity is measured with regard to the mean value of the objects in a cluster, which can be viewed as the cluster’s centroid or center of gravity. The k-means algorithm proceeds as follows:

▪ First, it randomly selects k of the objects, each of which initially represents a cluster mean or center.

▪ Each of the remaining objects is assigned to the cluster to which it is the most similar, based on the distance between the object and the cluster mean.

▪ It then computes the new mean for each cluster.

▪ This process iterates until the criterion function converges.

Typically, the square-error criterion is used, defined as

$$E = \sum_{i=1}^{k} \sum_{p \in C_i} \lvert p - m_i \rvert^2$$

where E is the sum of the square error for all objects in the data set, p is the point in space representing a given object, and mi is the mean of cluster Ci.

The k-means partitioning algorithm: The k-means algorithm for partitioning, where each cluster’s center is represented by the mean

value of the objects in the cluster.

Clustering of a set of objects based on the k-means method
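The k-means steps above translate into a short sketch. It is illustrative, assuming points are tuples of numbers and Euclidean distance (`math.dist`). The function names and the `max_iter`/`seed` parameters are hypothetical. The returned `sse` is the square-error criterion E defined above.

```python
import math
import random


def assign(points, centers):
    """Assign each point to the cluster whose center is nearest (Euclidean distance)."""
    clusters = [[] for _ in centers]
    for p in points:
        nearest = min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
        clusters[nearest].append(p)
    return clusters


def mean(cluster):
    """Component-wise mean of a non-empty list of points."""
    return tuple(sum(coord) / len(cluster) for coord in zip(*cluster))


def kmeans(points, k, max_iter=100, seed=0):
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # randomly select k objects as the initial means
    for _ in range(max_iter):
        clusters = assign(points, centers)
        # Recompute each mean; keep the old center if a cluster became empty.
        new_centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
        if new_centers == centers:  # converged: the means no longer change
            break
        centers = new_centers
    clusters = assign(points, centers)
    sse = sum(math.dist(p, centers[i]) ** 2 for i, c in enumerate(clusters) for p in c)
    return centers, clusters, sse


points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centers, clusters, sse = kmeans(points, k=2)
print(sorted(len(c) for c in clusters), round(sse, 3))  # → [3, 3] 2.667
```

Because the result depends on the random initial means, k-means is often run several times with different seeds, keeping the run with the lowest E.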

The k-Medoids Method

The k-means algorithm is sensitive to outliers because an object with an extremely large value may substantially distort the distribution of data. This effect is particularly exacerbated by the use of the square-error function. Instead of taking the mean value of the objects in a cluster as a reference point, we can pick actual objects to represent the clusters, using one representative object per cluster. Each remaining object is clustered with the representative object to which it is the most similar. The partitioning method is then performed based on the principle of minimizing the sum of the dissimilarities between each object and its corresponding reference point. That is, an absolute-error criterion is used, defined as

$$E = \sum_{j=1}^{k} \sum_{p \in C_j} \lvert p - o_j \rvert$$

where E is the sum of the absolute error for all objects in the data set, p is the point in space representing a given object in cluster Cj, and oj is the representative object of Cj. The initial representative objects are chosen arbitrarily.

The iterative process of replacing representative objects by non-representative objects continues as long as the quality of the resulting clustering is improved. This quality is estimated using a cost function that measures the average dissimilarity between an object and the representative object of its cluster. To determine whether a non-representative object, o_random, is a good replacement for a current representative object, oj, the following four cases are examined for each of the non-representative objects, p.

Case 1: p currently belongs to representative object oj. If oj is replaced by o_random as a representative object and p is closest to one of the other representative objects, oi, i ≠ j, then p is reassigned to oi.

Case 2: p currently belongs to representative object oj. If oj is replaced by o_random as a representative object and p is closest to o_random, then p is reassigned to o_random.

Case 3: p currently belongs to representative object oi, i ≠ j. If oj is replaced by o_random as a representative object and p is still closest to oi, then the assignment does not change.

Case 4: p currently belongs to representative object oi, i ≠ j. If oj is replaced by o_random as a representative object and p is closest to o_random, then p is reassigned to o_random.

The k-Medoids Algorithm: The k-medoids algorithm for partitioning based on medoid or central objects.
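A simplified swap-based k-medoids can be sketched as follows. This is a greedy variant in the spirit of the procedure above, not a faithful reproduction of any specific published algorithm. It assumes Euclidean distance and takes the first k objects as the arbitrary initial medoids; the function names are illustrative. Recomputing E after each trial swap implicitly covers all four cases, because every object is reassigned to its nearest representative.

```python
import math


def total_cost(points, medoids):
    """Absolute-error criterion E: sum of distances from each object to its closest medoid."""
    return sum(min(math.dist(p, o) for o in medoids) for p in points)


def k_medoids(points, k):
    """Replace a medoid o_j by a non-medoid o_random whenever that lowers E."""
    medoids = list(points[:k])  # arbitrary initial representative objects
    best_cost = total_cost(points, medoids)
    improved = True
    while improved:
        improved = False
        for j in range(k):
            for o_random in points:
                if o_random in medoids:
                    continue
                candidate = medoids[:j] + [o_random] + medoids[j + 1:]
                cost = total_cost(points, candidate)
                if cost < best_cost:  # swap only if the clustering quality improves
                    medoids, best_cost, improved = candidate, cost, True
    clusters = {o: [] for o in medoids}
    for p in points:
        clusters[min(medoids, key=lambda o: math.dist(p, o))].append(p)
    return medoids, clusters, best_cost


points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
medoids, clusters, cost = k_medoids(points, 2)
print(sorted(medoids), cost)  # → [(1, 1), (8, 8)] 4.0
```

The loop terminates because E strictly decreases with every accepted swap and there are only finitely many sets of k medoids.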

https://educatech.in/classical-partitioning-methods-in-data-mining

4.1.3 Hierarchical Methods

https://www.saedsayad.com/clustering_hierarchical.htm

4.1.3.1 Hierarchical Clustering

Hierarchical clustering involves creating clusters that have a predetermined ordering from top to bottom. For example, all files and folders on the

hard disk are organized in a hierarchy. There are two types of hierarchical clustering, Divisive and Agglomerative.

Divisive method

In the divisive or top-down clustering method, we assign all of the observations to a single cluster and then partition the cluster into the two least similar clusters using a flat clustering method (e.g., K-Means). Finally, we proceed recursively on each cluster until there is one cluster for each observation. There is evidence that divisive algorithms produce more accurate hierarchies than agglomerative algorithms in some circumstances, but they are conceptually more complex.


Agglomerative method

In the agglomerative or bottom-up clustering method, we first assign each observation to its own cluster (step 1). Then, we compute the similarity (e.g., distance) between each pair of clusters (step 2) and join the two most similar clusters (step 3). Finally, steps 2 and 3 are repeated until only a single cluster is left. The related algorithm is shown below.

Before any clustering is performed, the proximity matrix containing the distance between each pair of points must be computed using a distance function. Then, after each merge, the matrix is updated to show the distance between each pair of clusters. The following three methods differ in how the distance between clusters is measured.

Single Linkage

In single linkage hierarchical clustering, the distance between two clusters is defined as the shortest distance between two points, one in each cluster. For example, the distance between clusters “r” and “s” to the left is equal to the length of the arrow between their two closest points.

Complete Linkage

In complete linkage hierarchical clustering, the distance between two clusters is defined as the longest distance between two points, one in each cluster. For example, the distance between clusters “r” and “s” to the left is equal to the length of the arrow between their two furthest points.

Average Linkage

In average linkage hierarchical clustering, the distance between two clusters is defined as the average distance from each point in one cluster to every point in the other cluster. For example, the distance between clusters “r” and “s” to the left is equal to the average length of the arrows connecting the points of one cluster to the other.
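The three linkage definitions and the agglomerative loop can be sketched as follows. The sketch assumes Euclidean distance and recomputes linkages from the raw points at each step, which is simple but less efficient than updating a proximity matrix. It is illustrative only, and the function names are hypothetical.

```python
import itertools
import math


def single_link(c1, c2):
    """Shortest distance between a point of c1 and a point of c2."""
    return min(math.dist(p, q) for p in c1 for q in c2)


def complete_link(c1, c2):
    """Longest distance between a point of c1 and a point of c2."""
    return max(math.dist(p, q) for p in c1 for q in c2)


def average_link(c1, c2):
    """Average distance over all pairs (point of c1, point of c2)."""
    return sum(math.dist(p, q) for p in c1 for q in c2) / (len(c1) * len(c2))


def agglomerative(points, linkage=average_link):
    """points: dict name -> coordinates. Returns the merge history [(names, distance)]."""
    clusters = {(name,): [p] for name, p in points.items()}  # step 1: one cluster each
    history = []
    while len(clusters) > 1:
        # Steps 2-3: find and join the two closest clusters.
        n1, n2 = min(itertools.combinations(clusters, 2),
                     key=lambda pair: linkage(clusters[pair[0]], clusters[pair[1]]))
        dist = linkage(clusters[n1], clusters[n2])
        clusters[n1 + n2] = clusters.pop(n1) + clusters.pop(n2)
        history.append((n1 + n2, round(dist, 2)))
    return history


pts = {"A": (0, 0), "B": (0, 1), "C": (5, 0), "D": (5, 2)}
for names, d in agglomerative(pts):
    print(names, d)
```

The first two merges ({A, B} at 1.0 and {C, D} at 2.0) are the same under all three linkages. Only the final merge distance differs: 5.0 for single, 5.39 for complete and 5.15 for average linkage.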


Example: Clustering the following 7 data points.

| | X1 | X2 |
|---|---|---|
| A | 10 | 5 |
| B | 1 | 4 |
| C | 5 | 8 |
| D | 9 | 2 |
| E | 12 | 10 |
| F | 15 | 8 |
| G | 7 | 7 |

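Step 1: Calculate distances between all data points using the Euclidean distance function. The shortest distance is between data points C and G.

| | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| B | 9.06 | | | | | |
| C | 5.83 | 5.66 | | | | |
| D | 3.16 | 8.25 | 7.21 | | | |
| E | 5.39 | 12.53 | 7.28 | 14.42 | | |
| F | 5.83 | 14.56 | 10.00 | 16.16 | 3.61 | |
| G | 3.61 | 6.71 | 2.24 | 8.60 | 5.83 | 8.06 |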

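Step 2: We use "Average Linkage" to measure the distance between the "C,G" cluster and other data points.

| | A | B | C,G | D | E |
|---|---|---|---|---|---|
| B | 9.06 | | | | |
| C,G | 4.72 | 6.10 | | | |
| D | 3.16 | 8.25 | 6.26 | | |
| E | 5.39 | 12.53 | 6.50 | 14.42 | |
| F | 5.83 | 14.56 | 9.01 | 16.16 | 3.61 |

Step 3: The closest pair, A and D (3.16), is merged into the cluster "A,D".

| | A,D | B | C,G | E |
|---|---|---|---|---|
| B | 8.51 | | | |
| C,G | 5.32 | 6.10 | | |
| E | 6.96 | 12.53 | 6.50 | |
| F | 7.11 | 14.56 | 9.01 | 3.61 |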

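Step 4: E and F (3.61) are merged into the cluster "E,F".

| | A,D | B | C,G |
|---|---|---|---|
| B | 8.51 | | |
| C,G | 5.32 | 6.10 | |
| E,F | 6.80 | 13.46 | 7.65 |

Step 5: "A,D" and "C,G" (5.32) are merged into the cluster "A,D,C,G".

| | A,D,C,G | B |
|---|---|---|
| B | 6.91 | |
| E,F | 6.73 | 13.46 |

Step 6: "A,D,C,G" and "E,F" (6.73) are merged into the cluster "A,D,C,G,E,F".

| | A,D,C,G,E,F |
|---|---|
| B | 9.07 |

Final dendrogram: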

4.1.4 Density-Based Methods deletion.

What is Density-based clustering?

Density-based clustering is one of the most popular unsupervised learning methodologies used in model building and machine learning. Clusters are regarded as dense regions of data points, and the data points in the low-density regions separating two clusters are considered noise. The region within a radius ε of a given object is known as the ε-neighborhood of the object. If the ε-neighborhood of an object contains at least a minimum number, MinPts, of objects, then the object is called a core object.
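The ε-neighborhood and core-object definitions can be written down directly. This sketch assumes Euclidean distance and counts the object itself as part of its own ε-neighborhood, which is one common convention. The function names and sample points are illustrative.

```python
import math


def eps_neighborhood(points, i, eps):
    """Indices of all objects within distance eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def core_objects(points, eps, min_pts):
    """Indices of objects whose eps-neighborhood contains at least min_pts objects."""
    return [i for i in range(len(points)) if len(eps_neighborhood(points, i, eps)) >= min_pts]


points = [(0, 0), (0, 1), (1, 0), (10, 10)]
print(core_objects(points, eps=1.5, min_pts=3))  # → [0, 1, 2]
```

The isolated point (10, 10) has only itself in its neighborhood, so it is not a core object. In a full density-based algorithm such as DBSCAN, it would end up as noise.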

https://www.javatpoint.com/density-based-clustering-in-data-mining

5 Segment 5

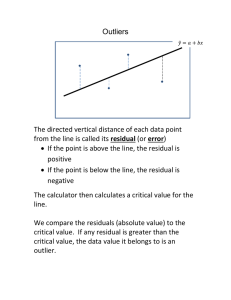

5.1 Outliers Detection and Analysis:

an outlier is an object that deviates significantly from the rest of the objects. They can be caused by measurement or execution

error. The analysis of outlier data is referred to as outlier analysis or outlier mining.

Why outlier analysis?

Most data mining methods discard outliers as noise or exceptions. However, in some applications, such as fraud detection, the rare events can be more interesting than the regularly occurring ones, and hence outlier analysis becomes important in such cases.

Why Should You Detect Outliers?

In the machine learning pipeline, data cleaning and preprocessing are important steps, as they help you better understand the data. During this stage, you deal with missing values, detect outliers, and more.

As outliers are very different values (abnormally low or abnormally high), their presence can often skew the results of statistical analyses on the dataset. This could lead to less effective and less useful models.

But dealing with outliers often requires domain expertise, and no outlier detection technique should be applied without understanding the data distribution and the use case.

For example, in a dataset of house prices, if you find a few houses priced at around $1.5 million, much higher than the median house price, they are likely outliers. However, if the dataset contains a significantly large number of houses priced at $1 million and above, they may be indicative of an increasing trend in house prices, so it would be incorrect to label them all as outliers. In this case, you need some knowledge of the real estate domain.

The goal of outlier detection is to remove the points that are truly outliers, so that you can build a model that performs well on unseen test data. We'll go over a few techniques that help us detect outliers in data.

How to Detect Outliers Using Standard Deviation

When the data, or certain features in the dataset, follow a normal distribution, you can use the standard deviation of the data, or the equivalent z-score, to detect outliers.

In statistics, the standard deviation measures the spread of data around the mean; in essence, it captures how far away from the mean the data points are.

For data that is normally distributed, around 68.2% of the data lies within one standard deviation of the mean. Close to 95.4% and 99.7% of the data lie within two and three standard deviations of the mean, respectively.

Let's denote the standard deviation of the distribution by σ, and the mean by μ.

One approach to outlier detection is to set the lower limit to three standard deviations below the mean (μ - 3*σ), and the upper limit to three standard deviations above the mean (μ + 3*σ). Any data point that falls outside this range is detected as an outlier.

As 99.7% of the data typically lies within three standard deviations, the number of outliers will be close to 0.3% of the size of the dataset.
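The μ ± 3σ rule can be sketched with Python's standard `statistics` module. This sketch uses the population standard deviation (`pstdev`); that choice, the function name and the sample data are assumptions of the example.

```python
import statistics


def three_sigma_outliers(values, k=3.0):
    """Return the values lying outside [mu - k*sigma, mu + k*sigma]."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    lower, upper = mu - k * sigma, mu + k * sigma
    return [x for x in values if x < lower or x > upper]


print(three_sigma_outliers([10] * 20 + [100]))  # → [100]
```

The outlier itself inflates σ. In small samples this can hide an outlier from the rule, so the method works best on reasonably large, roughly normal data.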

5.2 Outliers Detection Methods

Outlier detection methods can be categorized in two orthogonal ways: based on the availability of domain-expert-labeled data, and based on assumptions about normal objects versus outliers. Let's summarize each category and provide an example for each:

Categorization based on the availability of labeled data:


a. Supervised Methods: If expert-labeled examples of normal and/or outlier objects are available, supervised methods can be used. These methods model data normality and abnormality as a classification problem. For example, a domain expert labels a sample of data as either normal or outlier, and a classifier is trained to recognize outliers based on these labels.

Example: In credit card fraud detection, historical transactions are labeled as normal or fraudulent by domain experts. A supervised method can be trained to classify new transactions as either normal or fraudulent based on the labeled training data.

b. Unsupervised Methods: When labeled examples are not available, unsupervised methods are used. These methods assume that normal objects follow a pattern more frequently than outliers. The goal is to identify objects that deviate significantly from the expected patterns.

Example: In network intrusion detection, normal network traffic is expected to follow certain patterns. Unsupervised methods can analyze network traffic data and identify deviations from these patterns as potential intrusions.

c. Semi-Supervised Methods: In some cases, a small set of labeled data is available, but most of the data is unlabeled. Semi-supervised methods combine labeled and unlabeled data to build outlier detection models.

Example: In anomaly detection for manufacturing processes, some labeled normal samples may be available, along with a large amount of unlabeled data. Semi-supervised methods can leverage the labeled samples and neighboring unlabeled data to detect anomalies in the manufacturing process.

Categorization based on assumptions about normal objects versus outliers:

a. Statistical Methods: Statistical or model-based methods assume that normal data objects are generated by a statistical model, and objects not following the model are considered outliers. These methods estimate the likelihood of an object being generated by the model.

Example: Using a Gaussian distribution as a statistical model, objects falling into regions with low probability density can be considered outliers.

b. Proximity-Based Methods: Proximity-based methods identify outliers based on the proximity of an object to its neighbors in feature space. If the proximity of an object to its neighbors deviates significantly from the proximity of most other objects to their neighbors, it can be classified as an outlier.

Example: By considering the nearest neighbors of an object, if its proximity to these neighbors deviates significantly from the proximity of other objects to their own neighbors, it can be identified as an outlier.

c. Clustering-Based Methods: Clustering-based methods assume that normal data objects belong to large and dense clusters, while outliers belong to small or sparse clusters or do not belong to any cluster at all.

Example: If a clustering algorithm identifies a small cluster or data points that do not fit into any cluster, these points can be considered outliers.

These are general categories of outlier detection methods, and there are numerous specific algorithms and techniques within each category. The examples provided illustrate the application of each method in different domains, highlighting their use in outlier detection.
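One simple instance of the proximity-based idea scores each object by the distance to its k-th nearest neighbor: objects far from their neighbors get high scores. This is only one of several possible proximity scores. The sketch assumes Euclidean distance, and the value k = 2 and the function name are illustrative.

```python
import math


def knn_outlier_scores(points, k=2):
    """Proximity-based score: distance from each object to its k-th nearest neighbor."""
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores


points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10)]
scores = knn_outlier_scores(points)
print(scores.index(max(scores)))  # → 4, the isolated point (10, 10)
```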

5.2.1 Mining Contextual and Collective Outliers

Contextual outlier

The main concept of contextual outlier detection is to identify objects in a dataset that deviate significantly within a specific context. Contextual

attributes, such as spatial attributes, time, network locations, and structured attributes, define the context in which outliers are evaluated.

Behavioral attributes, on the other hand, determine the characteristics of an object and are used to assess its outlier status within its context.

There are two categories of methods for contextual outlier detection based on the identification of contexts:

1. Transforming Contextual Outlier Detection to Conventional Outlier Detection: In situations where contexts can be clearly identified, this

method involves transforming the contextual outlier detection problem into a standard outlier detection problem. The process involves

two steps. First, the context of an object is identified using contextual attributes. Then, the outlier score for the object within its context

is calculated using a conventional outlier detection method.

For example, in customer-relationship management, outlier customers can be detected within the context of customer groups. By grouping

customers based on contextual attributes like age group and postal code, comparisons can be made within the same group using conventional

outlier detection techniques.
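The first approach (transforming to conventional detection) can be sketched in two steps, following the description above. First, group the objects by a contextual attribute. Then apply a conventional detector inside each group. This sketch assumes a single contextual label per record and uses a z-score rule with a hypothetical threshold of k = 2 standard deviations as the conventional method. The data, names and threshold are illustrative.

```python
import statistics
from collections import defaultdict


def contextual_outliers(records, k=2.0):
    """records: list of (context, value). Flag values more than k population
    standard deviations away from the mean of their own context group."""
    groups = defaultdict(list)
    for ctx, val in records:                 # step 1: identify each object's context
        groups[ctx].append(val)
    stats = {ctx: (statistics.fmean(v), statistics.pstdev(v)) for ctx, v in groups.items()}
    flagged = []
    for ctx, val in records:                 # step 2: conventional detection within the context
        mu, sigma = stats[ctx]
        if sigma > 0 and abs(val - mu) > k * sigma:
            flagged.append((ctx, val))
    return flagged


young = [100, 102, 98, 101, 99, 100, 103, 97, 100, 400]
senior = [400, 405, 395, 410, 390, 400, 402, 398, 401, 399]
records = [("young", v) for v in young] + [("senior", v) for v in senior]
print(contextual_outliers(records))  # → [('young', 400)]
```

A value of 400 is typical for the whole dataset, where half the records are near 400. It stands out only within its own context group, which is the point of contextual detection.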

2. Modeling Normal Behavior with Respect to Contexts: When the data cannot easily be partitioned into explicit contexts, this method models the normal behavior of objects with respect to their contexts. A model is trained on a training dataset to predict the expected behavioral attribute values from the contextual attribute values. To detect contextual outliers, the model is applied to an object's contextual attributes; if the object's actual behavioral attribute values deviate significantly from the predicted values, the object is considered an outlier.

For instance, an online store that records customer browsing behavior may want to detect contextual outliers such as a customer who purchases a product unrelated to their recent browsing history. A prediction model can be trained to map the browsing context to the expected behavior, and significant deviations from the predicted behavior indicate contextual outliers.
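The first approach (identify the context, then run a conventional detector inside it) can be sketched in Python as below. It assumes customers are dicts with hypothetical fields `id`, `age_group`, `postal`, and `spend`. It uses a per-group z-score with an illustrative threshold of 2 as the "conventional" method. The data is made up.

```python
from collections import defaultdict
from statistics import mean, pstdev

def contextual_outliers(records, context_keys, behaviour_key, threshold=2.0):
    """Hypothetical helper: flag records whose behavioural value is extreme
    within their own context group."""
    # Step 1: identify each record's context from its contextual attributes.
    groups = defaultdict(list)
    for r in records:
        groups[tuple(r[k] for k in context_keys)].append(r)

    # Step 2: apply a conventional detector (z-score) inside each context.
    flagged = []
    for members in groups.values():
        values = [r[behaviour_key] for r in members]
        if len(values) < 2:
            continue  # a one-member context gives nothing to compare against
        mu, sigma = mean(values), pstdev(values)
        for r in members:
            if sigma > 0 and abs(r[behaviour_key] - mu) / sigma > threshold:
                flagged.append(r["id"])
    return flagged

spend_a = [100, 100, 100, 100, 100, 400]  # age group 18-25, postal code 1000
spend_b = [380, 400, 420, 390, 410, 400]  # age group 60+, postal code 2000
customers = (
    [{"id": f"a{i}", "age_group": "18-25", "postal": "1000", "spend": s}
     for i, s in enumerate(spend_a)]
    + [{"id": f"b{i}", "age_group": "60+", "postal": "2000", "spend": s}
       for i, s in enumerate(spend_b)]
)
print(contextual_outliers(customers, ["age_group", "postal"], "spend"))  # ['a5']
```

A spend of 400 is flagged for the customer in the first group but not for the customers in the second group, where it is typical. The same behavioral value is an outlier in one context and normal in another.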

In summary, contextual outlier detection extends conventional outlier detection by taking into account the context in which objects are evaluated. Incorporating contextual information makes it possible to find outliers that would otherwise go undetected and to reduce false alarms. Contextual attributes define the context. The choice between transforming the problem and modeling normal behavior depends on whether the contexts can be clearly identified.
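The second approach (modeling normal behavior with respect to contexts) can be sketched with a very simple model. The Python below assumes a hypothetical numeric context, minutes spent browsing a product category, and a numeric behavior, the amount later spent in that category. It fits a least-squares line on made-up training data. It flags a new observation whose deviation from the prediction exceeds 3 residual standard deviations. Real systems would use richer models; this only illustrates the idea.

```python
from statistics import mean, pstdev

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    return my - b * mx, b

# Hypothetical training data of normal sessions:
# context = minutes browsing a category, behaviour = amount spent there.
train_minutes = [2, 5, 8, 10, 15, 20, 25, 30]
train_spend = [5, 12, 18, 22, 31, 42, 50, 61]

a, b = fit_line(train_minutes, train_spend)
# The spread of the training residuals defines how much deviation is "normal".
resid_sd = pstdev([y - (a + b * x) for x, y in zip(train_minutes, train_spend)])

def is_contextual_outlier(minutes, spend, k=3.0):
    """True if the observed spend deviates from the context-based prediction
    by more than k residual standard deviations."""
    expected = a + b * minutes
    return abs(spend - expected) > k * resid_sd

print(is_contextual_outlier(2, 60))   # True: big purchase after almost no browsing
print(is_contextual_outlier(20, 41))  # False: consistent with its browsing context
```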

Collective outlier:

Collective outlier detection aims to identify groups of data objects that, as a whole, deviate significantly from the entire dataset, even though the individual objects in the group may not be outliers. It requires examining the structure of the dataset and the relationships among multiple data objects. There are two main approaches for collective outlier detection:


Reducing to Conventional Outlier Detection: This approach identifies structure units in the data, such as subsequences, local areas, or subgraphs. Each structure unit is treated as a data object, and features are extracted from it. The problem then becomes conventional outlier detection on the set of structure units: a structure unit is considered a collective outlier if it deviates significantly from the expected trend in the extracted features.
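A minimal Python sketch of this reduction for a numeric sequence follows. Each non-overlapping window of `width` values is treated as a structure unit. Its spread (population standard deviation) is the extracted feature. A z-score over those features is the conventional detector. The window width, the choice of feature, the threshold, and the series are illustrative assumptions.

```python
from statistics import mean, pstdev

def collective_outlier_windows(series, width, threshold=3.0):
    """Hypothetical helper: return start indices of windows whose feature
    deviates strongly from the features of the other windows."""
    starts = range(0, len(series) - width + 1, width)
    # Feature extracted from each structure unit: spread of its values.
    feats = [pstdev(series[s:s + width]) for s in starts]
    mu, sigma = mean(feats), pstdev(feats)
    if sigma == 0:
        return []  # all windows look alike
    # Conventional z-score detection on the set of structure units.
    return [s for s, f in zip(starts, feats) if abs(f - mu) / sigma > threshold]

series = [1, 3] * 10 + [2, 2, 2, 2] + [1, 3] * 10
print(collective_outlier_windows(series, width=4))  # [20]
```

The flat run of 2s is flagged even though every individual value lies within the normal range of 1 to 3. That is what makes it a collective outlier rather than a point outlier.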

Modeling Expected Behavior of Structure Units: This approach directly models the expected behavior of structure units. For example, a Markov model can be learned from temporal sequences, and subsequences that deviate significantly from the model are considered collective outliers.
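The Markov-model approach can be sketched as follows. It assumes a hypothetical alphabet of event symbols `A`, `B`, `C` and a first-order Markov chain with add-one (Laplace) smoothing. The score is the average log-likelihood per transition. The training sequence and the cut-off of -1.0 are made up for illustration.

```python
import math
from collections import Counter

def train_markov(sequences, alphabet, alpha=1.0):
    """Estimate first-order transition probabilities P(next | current)
    from training sequences, using add-alpha (Laplace) smoothing."""
    pair_counts, from_counts = Counter(), Counter()
    for seq in sequences:
        for cur, nxt in zip(seq, seq[1:]):
            pair_counts[(cur, nxt)] += 1
            from_counts[cur] += 1
    k = len(alphabet)
    return {(c, n): (pair_counts[(c, n)] + alpha) / (from_counts[c] + alpha * k)
            for c in alphabet for n in alphabet}

def avg_log_likelihood(subseq, probs):
    """Mean log-probability per transition; low values mean the
    subsequence is unlikely under the learned model."""
    pairs = list(zip(subseq, subseq[1:]))
    return sum(math.log(probs[p]) for p in pairs) / len(pairs)

# Normal behaviour in the training data: the cycle A -> B -> C -> A ...
probs = train_markov(["ABCABCABCABC"], alphabet="ABC")

for sub in ["ABCA", "ACBA"]:
    score = avg_log_likelihood(sub, probs)
    label = "collective outlier" if score < -1.0 else "normal"
    print(sub, round(score, 3), label)
```

Here `ABCA` follows the learned cycle and scores close to zero. `ACBA` uses only transitions never seen in training, so it falls below the cut-off.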

In collective outlier detection, the structures are often not explicitly defined and must be discovered as part of the detection process. This makes it more challenging than conventional and contextual outlier detection, because exploring the data structures relies on heuristics and can be application-dependent.

Overall, collective outlier detection is a complex task that requires further research and development.

Outlier detection process:

The outlier detection process identifies and flags data objects that deviate significantly from the expected patterns or behavior of the majority of the dataset. The exact steps vary with the approach or algorithm used, but the general process can be outlined as follows:

1. Data Preparation: Collect and prepare the dataset for outlier detection. This may include data cleaning, normalization, and handling missing values or outliers that are already known.

2. Feature Selection/Extraction: Select or extract the features (attributes) that represent the characteristics of the data objects. Choosing appropriate features is crucial for effective outlier detection.

3. Define the Expected Normal Behavior: Establish the expected normal behavior or patterns of the data objects through statistical analysis, domain knowledge, or learning from a training dataset.

4. Outlier Detection Algorithm/Application: Apply an outlier detection algorithm or technique to the dataset to identify potential outliers. Available approaches include statistical methods (e.g., z-score, boxplot), distance-based methods (e.g., k-nearest neighbors), density-based methods (e.g., DBSCAN), and machine-learning-based methods (e.g., isolation forest, one-class SVM).

5. Outlier Score/Threshold: Assign each data object an outlier score indicating its degree of deviation from the expected behavior. The score can be based on distance, density, probability, or other statistical measures. A threshold is then set to decide which objects are considered outliers.

6. Outlier Identification/Visualization: Objects whose outlier scores exceed the threshold are identified as outliers and can be flagged or labeled for further analysis. Visualization techniques such as scatter plots, heatmaps, or anomaly maps help explore and interpret the detected outliers.

7. Validation/Evaluation: Validate and evaluate the detected outliers to assess their significance and impact. This may involve domain experts reviewing the flagged outliers, further analysis, or assessing the outliers' impact on the overall system or process.

8. Iteration and Refinement: The process may need several iterations and refinements based on feedback, domain knowledge, or additional data. This allows continuous improvement and adaptation to changing data patterns or requirements.
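The steps above can be sketched end to end for a single numeric attribute. This is a minimal Python illustration, not a general framework, and it makes several assumptions. Missing readings (`None`) are dropped in the preparation step. The mean and population standard deviation stand in for "expected normal behavior". The absolute z-score is the outlier score. The threshold of 3 and the sensor readings are hypothetical.

```python
from statistics import mean, pstdev

def detect_outliers(raw, threshold=3.0):
    """Return positions in raw whose absolute z-score exceeds threshold."""
    # Step 1, data preparation: drop missing values but remember positions.
    clean = [(i, x) for i, x in enumerate(raw) if x is not None]
    values = [x for _, x in clean]
    # Step 3, expected normal behaviour: summarised by mean and std deviation.
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # all values identical: nothing deviates
    # Steps 4-5, outlier score: distance from the mean in standard deviations.
    scores = {i: abs(x - mu) / sigma for i, x in clean}
    # Step 6, identification: keep objects whose score exceeds the threshold.
    return [i for i, s in scores.items() if s > threshold]

# Hypothetical sensor readings; None marks a missing value.
readings = [20.1, 19.8, None, 20.3, 20.0, 35.0, 19.9,
            20.2, None, 20.1, 19.7, 20.4, 20.0]
print(detect_outliers(readings))  # [5]
```

One design caveat applies here. With n values, an absolute z-score computed from the population standard deviation can never exceed sqrt(n - 1). On small samples, a single extreme value therefore only barely crosses a threshold of 3 (here the score is about 3.16). Robust alternatives such as the median/MAD or the boxplot (IQR) rule are often preferred in that situation.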

It's important to note that outlier detection is a complex task. Its effectiveness depends on the quality of the data, the selection of appropriate features, and the choice of an outlier detection method suited to the specific dataset and application.

Why can outliers be more important than normal data?

Let's consider a dataset of monthly sales revenue for a retail company over one year. The dataset contains 12 data points, one for each month, shown below in a normal version and in a version with an outlier.

Month        Normal Data   Outlier Data
January      $10,000       $10,000
February     $12,000       $12,000
March        $11,000       $11,000
April        $10,500       $10,500
May          $10,200       $10,200
June         $10,300       $10,300
July         $10,400       $10,400
August       $10,100       $10,100
September    $10,200       $10,200
October      $10,100       $10,100
November     $10,000       $10,000
December     $10,300       $100,000

In this example, the normal data represents the company's regular monthly sales revenue. These values are consistent, stay within a narrow range, and can be considered the expected or typical sales figures.

In the outlier data, however, December's sales revenue is $100,000, far higher than any other month (the next highest is $12,000 in February). This could be due to a one-time large order from a major client, a seasonal spike in sales, or an error in data entry.
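To make "significantly higher" concrete, the boxplot (IQR) rule mentioned among the statistical methods can be applied to the outlier data above. This Python sketch assumes Tukey's conventional fences at 1.5 times the IQR. It also assumes the default ('exclusive') quartile method of `statistics.quantiles`. Other quartile conventions give slightly different fences.

```python
from statistics import quantiles

# Outlier data from the table above.
sales = {
    "January": 10_000, "February": 12_000, "March": 11_000, "April": 10_500,
    "May": 10_200, "June": 10_300, "July": 10_400, "August": 10_100,
    "September": 10_200, "October": 10_100, "November": 10_000,
    "December": 100_000,
}

q1, _, q3 = quantiles(sales.values(), n=4)  # quartiles, 'exclusive' method
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr  # Tukey's fences
flagged = [month for month, v in sales.items() if not low <= v <= high]
print(q1, q3, flagged)  # 10100.0 10875.0 ['December']
```

With these fences (8,937.5 to 12,037.5), only December falls outside. February's $12,000 lies just inside the upper fence.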

Now, let's analyze why this outlier can be more important than the normal data:

1. Financial Impact: The outlier value of $100,000 represents a substantial increase in sales revenue compared to the normal monthly figures. This can significantly affect the company's financial performance for the year, contributing to higher profits and improved cash flow and potentially influencing important financial decisions.

2. Decision Making: The outlier value can influence strategic decisions within the company. For example, if the company is considering expansion, investment in marketing campaigns, or resource allocation for the upcoming year, the exceptional December revenue would carry substantial weight in these decisions.

3. Performance Evaluation: The outlier value can significantly affect performance assessments and goal setting. For instance, if the sales team's monthly targets are based on the normal data, the December value might lead to adjusted expectations, incentives, or bonuses.

4. Anomaly Detection: Identifying and understanding the cause of the outlier value is crucial. It could indicate underlying factors that need attention, such as unusual market conditions, customer behavior, or operational inefficiencies. Addressing and managing these factors can help maintain or replicate the outlier's positive impact in future periods.


5. Industry Comparison: Outliers can also be important when comparing performance against industry benchmarks or competitors. If the company's December sales revenue significantly exceeds industry norms, it could signal a competitive advantage, market dominance, or differentiation within the industry.

In summary, the $100,000 December value is a significant deviation from the normal monthly sales revenue. It can substantially affect financial performance, decision making, goal setting, anomaly detection, and industry positioning. Understanding and leveraging the insight behind this outlier can be crucial for the company's success and growth.

OR,

Outliers can be more important than normal data in certain contexts for the following reasons:

Anomalies and Errors: Outliers often represent unusual or unexpected events, errors, or anomalies in the data. They can reveal data quality issues, measurement errors, or abnormal behavior that needs attention. Identifying and addressing these outliers can improve data integrity and the overall quality of analysis and decision-making.

Critical Events: Outliers may correspond to critical events or situations that have a significant impact on the system or process being