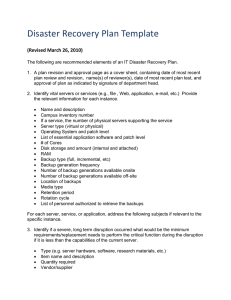

Lecture 14

Topics: Backup/Version Control, History of Git

Developers focus on version control; operations staff focus on backups.

Version control needs:
- big undo feature
- explore history for the 'why' (a replacement for comments)

Backup needs:
- undo/restore massive data changes
- Failure scenarios:
  - your flash drive fails (partially/totally)
  - a user deletes/trashes data by mistake
  - an outside attacker deletes/trashes data on purpose (ransomware attack)
  - or an insider attacker does the same thing
  - losing power (battery dies)

Annualized Failure Rate (AFR)
- probability that a drive will fail in a year of use
- "use" must be quantified
- a flash AFR will be 0.2%-2.5%

Strategies for backups:
- mirroring (simple)
  - have multiple drives with the same data (RAID 1)
  - reduced AFR:
    - not 0.1 * 0.1 = 0.01
    - failures are not independent, so we can't simply multiply
    - also, the system is more reliable than that, so the 0.01 is too pessimistic
      - you replace bad drives, and if you replace a bad drive within a day, the second drive has to fail within that day rather than within the year, which shrinks the 0.01 by a factor of about 365
    - recovery rate

What to back up
- mirroring means we don't lose a copy of today's data
- but if you delete the files, the mirror is also deleted
1. File contents
2. File system data (other than contents of files)
   a. file metadata (ownership, timestamps, etc.)
3. df -h
   a. lists all file systems mounted on the system
   b. file system organization
      i. partitions, mount points, etc.
4. Firmware
   a. when booting, the system runs code that lives in EEPROM, not on a flash drive

2 approaches to backup
1. Block level
   a. divide the device into equal-size blocks, and back up each of the blocks to a secondary device
2. File system level
   a. still have blocks, but divide into blocks based on knowledge of the file system
      i.
         i.e., you know why you're backing up a data block: because you updated the data, rather than just because the block was updated

Which changes to ignore:
- don't back up unused areas
- speed-up areas
  - caches of deducible data, which you can regenerate from existing data
  - skipping them speeds up backups, but slows recovery

When do you reclaim backup storage (when you run out of backup drives)?
- 1/day for the last 6 months
- 1/week for the last 6 years, etc.

How to back up cheaply
- do it less often
- back up to a cheaper device (hard disk, magnetic tape, optical)
- staging strategies, multilevel strategies
  - fast flash -> slow flash -> disk, etc.
- Remote backups
  - issue of privacy and trust
  - involves encryption
- incremental backups
  - only put changed blocks
  - how to express deltas (changes):
    - block-level backup
      - list of block indices with their new contents
    - text-file backups
      - edit script
        - delete lines 5-19
        - insert the following 3 lines at line 27
        - replace = delete + insert
      - there's a scripting language for incremental backups

Deduplication (at block level)
- lots of blocks will be identical
- you have a list of blocks
  - A B C D tagged with 1 2 3 4
  - then you have a list of 1, 2, 3, 4 which indicates the order in which the blocks are used
- errors:
  - more subject to problems if there are partial drive failures
  - the assumption is that this is very rare

How do you know the backups are working?
- test them as realistically as possible
- checksums:
  - instead of recovering the entire database and comparing, save checksums of what the data used to be, and check that they match when you pull the data back
  - this tests backups efficiently, which is cheaper

Versioning at file level (OS help)
approaches
1. files can have versions
   - $ ls -l --all-versions
     - this doesn't work, hypothetical
   - problems:
     - who decides when to increment versions?
       - usually under application control
     - will make the OS more complicated
     - what about pruning older versions?
     - a DBMS won't want versions, because they'll slow the database management system
2.
   Snapshots
   - applications don't decide when new versions of files are created
   - the system periodically takes snapshots of every file in the system
   - used in many modern file systems
   - cd .snapshot
     - then you can ls and view the snapshots
   - copy-on-write (CoW)
     - $ cp --reflink=always a b
     - when you copy file a to file b, the file system notices and points b's info at a's data
     - you are deferring the work until you change a or b
     - when you update b, you don't change a; b gets a new pointer to a fresh copy of just the data you changed

WAFL filesystem
- has a root directory with meta info
- meta info points to a block
- data in a directory can point to other directories with meta info and blocks, which might eventually point to files
- when you update a block, only the new block and its ancestors are rewritten; the rest stay the same
- a block's space is reclaimed only when no snapshot points to it

Version Control Systems (we assume files work):
- control source code, test cases, documentation, sales blurbs, list of blob names
- limits:
  - big binary blobs, but this can be fixed with a pointer to the blobs

What we want in version control systems:
1. histories (indefinite)
   a. snapshots only last around 2 weeks
   b. contain metadata and data
      i. file metadata + history metadata
         1. who made this change to this file (committer)
      ii. file renaming
         1. deleted A, created B
      iii. last 3 changes made to the system
2. atomic commits to multiple files
   a. atomic - everybody sees either the entire change or nothing at all
3. hooks
   a. places in the VCS where the user can execute arbitrary code
   b. git doesn't do exactly what you want, so hooks let you apply project-specific rules
4. security
   a. authenticating changes
5. format conversion
   a. CRLF vs LF -> Windows vs Mac
   b. UTF-8 vs others
6.
   navigation of complex history

History of Version Control:
- SCCS, Bell Labs, 1975 (single-file version control)
  - interleaved format
  - single pass through the repository file to build a version, saves RAM
  - problems:
    - O(size of repository) to retrieve
- RCS
  - single file only
  - latest version in the repository + deltas
  - you can grab the latest version really fast, and older versions are rebuilt from deltas
  - O(size of latest version)
- CVS
  - central repository
- Subversion
  - central repository, like CVS; adds atomic commits
- BitKeeper
  - proprietary, used for Linux kernel development
  - atomic commits, no central repository
- Git (2005)
  - like BitKeeper, but open source
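
The RCS idea above (latest version stored whole, older versions as deltas) can be sketched with the line-based edit scripts from the incremental backup notes. This is only a minimal illustration, not RCS's actual file format: `make_script`, `apply_script`, and `ReverseDeltaStore` are hypothetical names, and the deltas are computed with Python's `difflib`, which is an assumption of this sketch.

```python
from difflib import SequenceMatcher


def make_script(old, new):
    """Return an edit script that turns `old` into `new` (lists of lines).

    Ops use 1-based line numbers of `old`, as in the notes:
      ("delete", first, last)   delete lines first..last
      ("insert", after, lines)  insert `lines` after line `after` (0 = top)
    A replace is expressed as delete + insert.
    """
    script = []
    matcher = SequenceMatcher(a=old, b=new, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("delete", "replace"):
            script.append(("delete", i1 + 1, i2))
        if tag in ("insert", "replace"):
            script.append(("insert", i2, new[j1:j2]))
    return script


def apply_script(lines, script):
    """Apply an edit script bottom-up so earlier line numbers stay valid."""
    out = list(lines)
    for op in reversed(script):
        if op[0] == "delete":
            _, first, last = op
            del out[first - 1:last]
        else:
            _, after, new_lines = op
            out[after:after] = new_lines
    return out


class ReverseDeltaStore:
    """Hypothetical single-file store: latest version whole, older ones as deltas."""

    def __init__(self):
        self.latest = None
        # reverse_deltas[k] turns version k+1 back into version k
        self.reverse_deltas = []

    def commit(self, lines):
        if self.latest is not None:
            self.reverse_deltas.append(make_script(lines, self.latest))
        self.latest = list(lines)
        return len(self.reverse_deltas)  # 0-based version number

    def checkout(self, version=None):
        newest = len(self.reverse_deltas)
        if version is None:
            version = newest
        lines = list(self.latest)  # cost for the latest: O(size of latest)
        for k in range(newest - 1, version - 1, -1):
            lines = apply_script(lines, self.reverse_deltas[k])
        return lines


store = ReverseDeltaStore()
store.commit(["a", "b", "c"])
store.commit(["a", "B", "c", "d"])
print(store.checkout())   # latest version, no deltas applied
print(store.checkout(0))  # oldest version, rebuilt by applying one delta
```

Checking out the latest version only copies it, while each step back in history applies one more delta, which is the trade-off the notes describe for RCS.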
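
Going back to the backup notes on block-level deduplication and checksums, the two ideas fit together in a small sketch. It assumes fixed-size blocks and uses SHA-256 digests as the block tags (the notes use tags 1 2 3 4); `dedup_backup`, `restore`, `verify`, and the tiny `BLOCK_SIZE` are hypothetical choices made for illustration.

```python
import hashlib

BLOCK_SIZE = 4  # tiny for illustration only; an assumption of this sketch


def dedup_backup(data, store):
    """Store each distinct block once; return the list of tags in order of use."""
    recipe = []
    for i in range(0, len(data), BLOCK_SIZE):
        block = data[i:i + BLOCK_SIZE]
        tag = hashlib.sha256(block).hexdigest()
        store.setdefault(tag, block)  # identical blocks share one entry
        recipe.append(tag)
    return recipe


def restore(recipe, store):
    """Rebuild the original data by following the list of tags."""
    return b"".join(store[tag] for tag in recipe)


def verify(recipe, store):
    """Check stored blocks against their checksums without the original data."""
    return all(hashlib.sha256(store[tag]).hexdigest() == tag for tag in recipe)


store = {}
data = b"AAAABBBBAAAACCCCAAAA"
recipe = dedup_backup(data, store)
print(len(recipe), "blocks referenced,", len(store), "blocks stored")
print(restore(recipe, store) == data, verify(recipe, store))
```

Because one stored block can be referenced many times, damage to that single block affects every place it is used, which is the partial-drive-failure concern raised in the notes.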