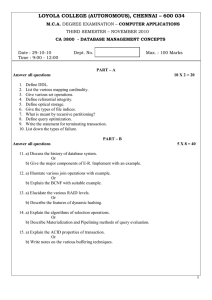

DBMS PYQs

2018 – 19

10.a) Define catalog management in distributed database.

Ans: Efficient catalog management in distributed databases is critical to ensure satisfactory performance related to site autonomy, view management, and data distribution and replication. Catalogs are databases themselves, containing metadata about the distributed database system.

Three popular management schemes for distributed catalogs are centralized catalogs, fully replicated catalogs, and partitioned (partially replicated) catalogs. The choice of scheme depends on the database itself as well as on the access patterns of the applications to the underlying data.

• Centralized Catalogs: In this scheme, the entire catalog is stored at one single site. Owing to its central nature, it is easy to implement. On the other hand, the advantages of reliability, availability, autonomy, and distribution of processing load are adversely impacted. For read operations from non-central sites, the requested catalog data is locked at the central site and is then sent to the requesting site. On completion of the read operation, an acknowledgement is sent to the central site, which in turn unlocks this data. All update operations must be processed through the central site. This can quickly become a performance bottleneck for write-intensive applications.

• Fully Replicated Catalogs: In this scheme, identical copies of the complete catalog are present at each site. This scheme facilitates faster reads by allowing them to be answered locally. However, all updates must be broadcast to all sites. Updates are treated as transactions, and a centralized two-phase commit scheme is employed to ensure catalog consistency. As with the centralized scheme, write-intensive applications may cause increased network traffic due to the broadcasts associated with the writes.

• Partially Replicated Catalogs: The centralized and fully replicated schemes restrict site autonomy, since they must ensure a consistent global view of the catalog. Under the partially replicated scheme, each site maintains complete catalog information on the data stored locally at that site. Each site is also permitted to cache entries retrieved from remote sites. However, there is no guarantee that these cached copies are the most recent. The system tracks catalog entries for the sites where the object was created and for the sites that contain copies of this object. Any changes to copies are propagated immediately to the original (birth) site. Retrieving updated copies to replace stale data may be delayed until an access to this data occurs. In general, fragments of relations across sites should be uniquely accessible. Also, to ensure data distribution transparency, users should be allowed to create synonyms for remote objects and use these synonyms for subsequent referrals.

10.b) Explain the disadvantages of Distributed DBMS.

Ans:

• Complexity: A distributed DBMS must hide the distribution of data from users. It therefore has to handle fragmentation, replication, distributed query processing, and distributed concurrency control and recovery, which makes it far more complex than a centralized DBMS.

• Cost: The added complexity raises the cost of software, hardware, network communication, and the skilled staff needed to manage and maintain the system.

• Security: Data is stored at several sites and travels over a network, so it is harder to protect than data kept at a single site.

• Integrity control is more difficult: Enforcing integrity constraints may require accessing data held at several sites, which adds communication and processing cost.

• Database design is more complex: Besides the usual design decisions, the designer must decide how to fragment the data, where to allocate the fragments, and whether to replicate them.

11. Short Notes:

a) Wait-Die and Wound-Wait:

Wait-Die Scheme

In this scheme, a transaction Ti may request a lock on a resource (data item) that is already held with a conflicting lock by another transaction Tj. When that happens, one of two things occurs −

• If TS(Ti) < TS(Tj), that is, Ti (the requester) is older than Tj, then Ti is allowed to wait until the data item is available.

• If TS(Ti) > TS(Tj), that is, Ti is younger than Tj, then Ti dies. Ti is restarted later with a random delay but with the same timestamp.

This scheme allows the older transaction to wait but kills the younger one.

Wound-Wait Scheme

In this scheme, a transaction Ti may request a lock on a resource (data item) that is already held with a conflicting lock by another transaction Tj. When that happens, one of two things occurs −

• If TS(Ti) < TS(Tj), then Ti forces Tj to be rolled back, that is, Ti wounds Tj. Tj is restarted later with a random delay but with the same timestamp.

• If TS(Ti) > TS(Tj), then Ti is forced to wait until the resource is available.

This scheme allows the younger transaction to wait. However, when an older transaction requests an item held by a younger one, the older transaction forces the younger one to abort and release the item.

In both cases, the transaction that entered the system later (the younger one) is the one that is aborted. A restarted transaction keeps its original timestamp, so it eventually becomes the oldest and cannot starve.
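The two decision rules above can be sketched in Python as follows. This is a minimal illustration under stated assumptions. Timestamps are plain integers, where smaller means older. The `Action` enum and both function names are hypothetical, not part of any DBMS API. Each function only decides what happens when Ti requests an item held by Tj; it does not perform the restart itself.

```python
from enum import Enum


class Action(Enum):
    WAIT = "requester waits"
    ABORT_REQUESTER = "requester is rolled back (dies)"
    ABORT_HOLDER = "holder is rolled back (is wounded)"


def wait_die(ts_requester: int, ts_holder: int) -> Action:
    """Wait-Die: an older requester waits; a younger requester dies."""
    # A smaller timestamp means the transaction entered the system earlier (is older).
    if ts_requester < ts_holder:
        return Action.WAIT
    return Action.ABORT_REQUESTER


def wound_wait(ts_requester: int, ts_holder: int) -> Action:
    """Wound-Wait: an older requester wounds the holder; a younger requester waits."""
    if ts_requester < ts_holder:
        return Action.ABORT_HOLDER
    return Action.WAIT


# Example: Ti has timestamp 5 (older), Tj has timestamp 10 (younger).
old_asks_young = (wait_die(5, 10), wound_wait(5, 10))
young_asks_old = (wait_die(10, 5), wound_wait(10, 5))
```

In every case where someone is rolled back, the victim is the transaction with the larger timestamp. This matches the observation that both schemes abort the younger transaction.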

b) View and Conflict serializability in DBMS:

| S.No. | Conflict Serializability | View Serializability |
|---|---|---|
| 1. | Two schedules are said to be conflict equivalent if all the conflicting operations in both schedules are executed in the same order. If a schedule is conflict equivalent to a serial schedule, it is called a Conflict Serializable Schedule. | Two schedules are said to be view equivalent if, in both schedules, the same transactions perform the initial reads, each read reads the value written by the same write, and the same transactions perform the final writes. If a schedule is view equivalent to a serial schedule, it is called a View Serializable Schedule. |
| 2. | If a schedule is view serializable, it may or may not be conflict serializable. | If a schedule is conflict serializable, it is also view serializable. |
| 3. | Conflict equivalence can be checked easily by reordering (swapping) the non-conflicting operations of the transactions; therefore, conflict serializability is easy to test. | View equivalence is rather difficult to establish, as every transaction must read and write the same values in the same way in both schedules. Thus, view serializability is difficult to test (the problem is NP-complete). |
| 4. | Suppose a transaction T1 writes a value of A that no one else reads, because later some other transaction, say T2, writes its own value of A. Then W(A) cannot be placed in positions where it is never read. | If a transaction T1 writes a value of A that no other transaction reads (because later some other transaction, say T2, writes its own value of A), then W(A) can be placed in positions of the schedule where it is never read. |
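Conflict serializability, compared in the table above, is usually checked with a precedence graph. The graph has an edge Ti → Tj whenever an operation of Ti conflicts with, and comes before, an operation of Tj. The schedule is conflict serializable exactly when this graph is acyclic, and a topological order of the graph gives an equivalent serial schedule. The Python sketch below assumes that a schedule is a list of (transaction, "R" or "W", item) tuples. The helper names and the three sample schedules are hypothetical.

```python
from typing import Optional

Schedule = list[tuple[str, str, str]]  # (transaction, "R" or "W", data item)


def precedence_graph(schedule: Schedule) -> dict[str, set[str]]:
    """Ti -> Tj if an operation of Ti conflicts with a later operation of Tj."""
    graph: dict[str, set[str]] = {t: set() for t, _, _ in schedule}
    for i, (ti, op_i, x_i) in enumerate(schedule):
        for tj, op_j, x_j in schedule[i + 1:]:
            # Operations conflict if they belong to different transactions,
            # access the same item, and at least one of them is a write.
            if ti != tj and x_i == x_j and "W" in (op_i, op_j):
                graph[ti].add(tj)
    return graph


def serial_order(graph: dict[str, set[str]]) -> Optional[list[str]]:
    """Topological sort (Kahn's algorithm); None means the graph has a cycle."""
    indegree = {t: 0 for t in graph}
    for successors in graph.values():
        for t in successors:
            indegree[t] += 1
    ready = sorted(t for t, d in indegree.items() if d == 0)
    order: list[str] = []
    while ready:
        t = ready.pop(0)
        order.append(t)
        for s in sorted(graph[t]):
            indegree[s] -= 1
            if indegree[s] == 0:
                ready.append(s)
    return order if len(order) == len(graph) else None


# S1 runs T1 then T2; S2 interleaves two read-modify-write transactions.
S1 = [("T1", "R", "A"), ("T1", "W", "A"), ("T2", "R", "A"), ("T2", "W", "A")]
S2 = [("T1", "R", "A"), ("T2", "R", "A"), ("T1", "W", "A"), ("T2", "W", "A")]
# S3 uses blind writes: view equivalent to T1, T2, T3 but not conflict serializable.
S3 = [("T1", "R", "A"), ("T2", "W", "A"), ("T1", "W", "A"), ("T3", "W", "A")]
```

S3 illustrates row 2. Its precedence graph has a cycle between T1 and T2, so it is not conflict serializable, yet it is view serializable. This sketch does not test view serializability.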

c) Tuple and Domain relational calculus:

| S.No. | Basis of Comparison | Tuple Relational Calculus (TRC) | Domain Relational Calculus (DRC) |
|---|---|---|---|
| 1. | Definition | TRC is used to select tuples from a relation. Tuples with specific range values, tuples with certain attribute values, and so on can be selected. | DRC employs a list of attributes from which to choose based on the condition. It is similar to TRC, but instead of selecting entire tuples, it selects attributes. |
| 2. | Representation of variables | In TRC, the variables represent tuples from specified relations. | In DRC, the variables represent values drawn from a specified domain. |
| 3. | Tuple/Domain | A tuple is a single element of a relation; in database terms, it is a row. | A domain is equivalent to a column data type plus any constraints on the values of the data. |
| 4. | Filtering | Filtering uses tuple variables that range over the tuples of relations. | Filtering is done based on the domains of the attributes. |
| 5. | Return value | The predicate (condition) of the TRC expression is used to test every row through a tuple variable, and the tuples that meet the condition are returned. | DRC uses domain variables and, based on the condition, returns the required attributes (columns) that satisfy it. |
| 6. | Membership condition | The query cannot be expressed using a membership condition. | The query can be expressed using a membership condition. |
| 7. | Query language | QUEL (Query Language) is the query language related to it. | QBE (Query-By-Example) is the query language related to it. |
| 8. | Similarity | It reflects traditional pre-relational file structures. | It is more similar to logic as a modeling language. |
| 9. | Syntax | {T \| P(T)} or {T \| Condition(T)} | {a1, a2, a3, …, an \| P(a1, a2, a3, …, an)} |
| 10. | Example | {T \| EMPLOYEE(T) AND T.DEPT_ID = 10} | {⟨e, n, d⟩ \| ⟨e, n, d⟩ ∈ EMPLOYEE AND d = 10}, assuming EMPLOYEE has three attributes and d stands for DEPT_ID |
| 11. | Focus | Focuses on selecting tuples from a relation. | Focuses on selecting values from a relation. |
| 12. | Variables | Uses tuple variables (e.g., t). | Uses scalar (domain) variables (e.g., a1, a2, …, an). |
| 13. | Expressiveness | Same expressive power as DRC: safe TRC expressions are equivalent to basic relational algebra. | Same expressive power as TRC: safe DRC expressions are equivalent to basic relational algebra. |
| 14. | Ease of use | Easier to use for simple queries. | More difficult to use for simple queries. |
| 15. | Use case | Useful for selecting tuples that satisfy a certain condition or for retrieving a subset of a relation. | Useful for selecting specific values or for constructing more complex queries that involve multiple relations. |
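The difference between tuple variables and domain variables can be seen in the Python comprehensions below. This is a loose analogy only, because comprehensions are not relational calculus. The EMPLOYEE relation, its three attributes and its rows are hypothetical sample data. The TRC line mirrors the example {T | EMPLOYEE(T) AND T.DEPT_ID = 10}.

```python
from typing import NamedTuple


class Employee(NamedTuple):  # hypothetical EMPLOYEE(EMP_ID, NAME, DEPT_ID)
    emp_id: int
    name: str
    dept_id: int


EMPLOYEE = [Employee(1, "Asha", 10), Employee(2, "Ravi", 20), Employee(3, "Meena", 10)]

# TRC style: {T | EMPLOYEE(T) AND T.DEPT_ID = 10}
# The single variable t ranges over whole tuples (rows) and whole rows are returned.
trc_result = [t for t in EMPLOYEE if t.dept_id == 10]

# DRC style: {<e, n> | there exists d such that <e, n, d> in EMPLOYEE AND d = 10}
# The variables e, n, d range over attribute values; only e and n are returned.
drc_result = [(e, n) for (e, n, d) in EMPLOYEE if d == 10]
```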

d) Checkpoint, Rollback and Commit:

Checkpoint: A checkpoint is a point at which the DBMS brings the database on disk in step with the log. At a checkpoint, the DBMS first writes all log records currently in main memory to stable storage. It then writes all modified buffer blocks to disk, including changes made by transactions that have not yet committed. Finally, it writes a checkpoint record to the log. Everything before the checkpoint is therefore already safely on disk. After a system failure or crash, recovery only needs the log from the last checkpoint onward, together with the transactions that were still active at that checkpoint, so the database can be recovered quickly.

Commit: COMMIT in SQL is a Transaction Control Language (TCL) command. It permanently saves the changes made by the current transaction in the tables/database. After COMMIT is executed, ROLLBACK can no longer return the database to its previous state.

Rollback: ROLLBACK in SQL is a Transaction Control Language (TCL) command. It undoes the changes of a transaction that have not yet been saved in the database. It can only undo changes made since the last COMMIT.

Difference between COMMIT and ROLLBACK

| COMMIT | ROLLBACK |
|---|---|
| 1. COMMIT permanently saves the changes made by the current transaction. | ROLLBACK undoes the changes made by the current transaction. |
| 2. The transaction cannot undo its changes after COMMIT is executed. | The transaction returns to its previous state after ROLLBACK. |
| 3. COMMIT is applied when the transaction is successful. | ROLLBACK occurs when the transaction is aborted, executes incorrectly, or a system failure occurs. |
| 4. The COMMIT statement permanently saves the state when all the statements have executed successfully without any error. | If any operation fails during a transaction, its changes cannot be saved permanently, and the ROLLBACK statement is used to undo them. |
| 5. Syntax: COMMIT; | Syntax: ROLLBACK; |
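The behaviour in the table can be tried with Python's built-in sqlite3 module, as sketched below. The account table and its rows are hypothetical. In sqlite3's default mode, a transaction opens implicitly before the first INSERT or UPDATE, and conn.commit() and conn.rollback() end it with COMMIT or ROLLBACK.

```python
import sqlite3


def transfer_demo() -> list[tuple[str, int]]:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE account (name TEXT PRIMARY KEY, balance INTEGER)")
    conn.execute("INSERT INTO account VALUES ('A', 100), ('B', 50)")
    conn.commit()    # COMMIT: the initial balances are now permanent

    # A transfer of 30 that is abandoned: both updates are undone together.
    conn.execute("UPDATE account SET balance = balance - 30 WHERE name = 'A'")
    conn.execute("UPDATE account SET balance = balance + 30 WHERE name = 'B'")
    conn.rollback()  # ROLLBACK: back to the state of the last COMMIT

    # A transfer of 20 that is kept.
    conn.execute("UPDATE account SET balance = balance - 20 WHERE name = 'A'")
    conn.execute("UPDATE account SET balance = balance + 20 WHERE name = 'B'")
    conn.commit()

    rows = conn.execute("SELECT name, balance FROM account ORDER BY name").fetchall()
    conn.close()
    return rows
```

Only the committed transfer survives, so A ends with 80 and B with 70.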

e) Reference architecture of distributed DBMS:

1. Data in a distributed system is usually fragmented and replicated. The reference architecture takes these fragmentation and replication issues into account.

2. The reference architecture of a distributed DBMS consists of the following schemas:

o A set of global external schemas.

o A global conceptual schema.

o A fragmentation schema and an allocation schema.

o A set of schemas for each local DBMS.

Global external schema- In a distributed system, user applications and user access to the distributed database are represented by a number of global external schemas. This is the topmost level in the reference architecture of a DDBMS. This level describes the part of the distributed database that is relevant to different users.

Global conceptual schema- The GCS represents the logical description of the entire database as if it were not distributed. This level contains the definitions of all entities, the relationships among entities, and the security and integrity information of the whole database stored at all sites of a distributed system.

Fragmentation schema and allocation schema- The fragmentation schema describes how the data is to be logically partitioned in a distributed database. The GCS consists of a set of global relations, and the mapping between the global relations and fragments is defined in the fragmentation schema.

The allocation schema is a description of where the data (fragments) are to be located, taking account of any replication. The type of mapping in the allocation schema determines whether the distributed database is redundant or non-redundant. In the case of redundant data distribution the mapping is one-to-many, whereas in the case of non-redundant data distribution it is one-to-one.

Local schemas- In a distributed database system, the physical data organization at each machine is probably different. Therefore each site requires an individual internal schema definition, called the local internal schema.

To handle fragmentation and replication issues, the logical organization of data at each site is described by a third layer in the architecture, called the local conceptual schema.

The GCS is the union of all local conceptual schemas; thus, the local conceptual schemas are mappings of the global schema onto each site. This mapping is done by local mapping schemas.

This architecture provides a very general conceptual framework for understanding distributed databases.
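The fragmentation and allocation schemas are essentially mappings, so the redundant/non-redundant distinction can be sketched with Python dictionaries. All relation, fragment and site names below are hypothetical. The sketch only classifies an allocation as one-to-one or one-to-many; it does not model a real DDBMS catalog.

```python
# Fragmentation schema: global relation -> its fragments (hypothetical names).
FRAGMENTATION: dict[str, list[str]] = {"STUDENT": ["STUDENT_CS", "STUDENT_EE"]}

# Allocation schemas: fragment -> set of sites that store a copy of it.
NON_REDUNDANT: dict[str, set[str]] = {"STUDENT_CS": {"Site1"}, "STUDENT_EE": {"Site2"}}
REDUNDANT: dict[str, set[str]] = {"STUDENT_CS": {"Site1", "Site3"}, "STUDENT_EE": {"Site2"}}


def is_redundant(allocation: dict[str, set[str]]) -> bool:
    """One-to-many mapping (some fragment stored at 2+ sites) means redundant allocation."""
    return any(len(sites) > 1 for sites in allocation.values())


def sites_holding(relation: str, fragmentation: dict[str, list[str]],
                  allocation: dict[str, set[str]]) -> set[str]:
    """All sites that store at least one fragment of a global relation."""
    return {site for frag in fragmentation[relation] for site in allocation[frag]}
```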

2017 – 18

2. What is Data Dictionary in DBMS?

Ans: A data dictionary contains metadata, i.e., data about the database. The data dictionary is very important, as it contains information such as what is in the database, who is allowed to access it, and where the database is physically stored. Users of the database normally do not interact with the data dictionary; it is handled only by the database administrators.

The data dictionary in general contains information about the following −

• Names of all the database tables and their schemas.

• Details about all the tables in the database, such as their owners, their security constraints, and when they were created.

• Physical information about the tables, such as where and how they are stored.

• Table constraints, such as primary key attributes and foreign key information.

• Information about the database views that are visible.

The following is a data dictionary describing a table that contains employee details.

| Field Name | Data Type | Field Size for display | Description | Example |
|---|---|---|---|---|
| EmployeeNumber | Integer | 10 | Unique ID of each employee | 1645000001 |
| Name | Text | 20 | Name of the employee | David Heston |
| Date of Birth | Date/Time | 10 | DOB of employee | 08/03/1995 |
| Phone Number | Integer | 10 | Phone number of employee | 6583648648 |
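Most DBMSs expose their data dictionary through system tables that can be queried like ordinary tables. As one concrete sketch, SQLite keeps its catalog in the sqlite_master table and reports column details through PRAGMA table_info. The employee table below is a hypothetical rendering of the dictionary above. Storing the phone number as TEXT is an assumption, made so that leading zeros are not lost.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employee (
        EmployeeNumber INTEGER PRIMARY KEY,
        Name           TEXT NOT NULL,
        DateOfBirth    DATE,
        PhoneNumber    TEXT
    )
""")

# sqlite_master is SQLite's catalog: one row per table, index, view and trigger.
tables = [name for (name,) in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'")]

# PRAGMA table_info returns (cid, name, type, notnull, dflt_value, pk) for each column.
columns = [(name, col_type, bool(pk))
           for _cid, name, col_type, _notnull, _default, pk
           in conn.execute("PRAGMA table_info(employee)")]
```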

3. Explain two phase locking protocol.

Ans:

2PL locking protocol

Every transaction locks and unlocks data items in two separate phases.

• Growing phase − The transaction may acquire (issue) locks but may not release any lock.

• Shrinking phase − The transaction may release locks but may not acquire any new lock.

The 2PL locking protocol is represented diagrammatically as follows −

In the growing phase, the transaction reaches a point where it has acquired all the locks it may need. This point is called the LOCK POINT.

After the lock point has been reached, the transaction enters the shrinking phase.

Two common variants of two-phase locking are −

• Strict two-phase locking protocol

A transaction may release a shared lock after the lock point, but it cannot release any exclusive lock until the transaction commits. This protocol produces cascadeless schedules.

• Rigorous two-phase locking protocol

A transaction cannot release any lock, either shared or exclusive, until it commits.
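The two-phase rule can be enforced per transaction with a small state flag, as in the Python sketch below. It is a hypothetical, single-transaction model. It checks only the growing/shrinking order and, optionally, the strict-2PL rule for exclusive locks. It does not detect conflicts between transactions or make anyone wait. Txn and TwoPhaseViolation are names introduced for this illustration.

```python
class TwoPhaseViolation(Exception):
    """Raised when a transaction breaks a two-phase locking rule."""


class Txn:
    def __init__(self, name: str, strict: bool = False):
        self.name = name
        self.strict = strict
        self.held: dict[str, str] = {}   # item -> "S" (shared) or "X" (exclusive)
        self.shrinking = False           # False = growing phase, True = shrinking phase

    def lock(self, item: str, mode: str) -> None:
        # Growing phase: locks may be acquired, none released yet.
        if self.shrinking:
            raise TwoPhaseViolation(f"{self.name} tried to lock {item} after releasing a lock")
        self.held[item] = mode

    def unlock(self, item: str) -> None:
        # Strict 2PL: exclusive locks are kept until commit.
        if self.strict and self.held.get(item) == "X":
            raise TwoPhaseViolation(f"{self.name} must hold the X-lock on {item} until commit")
        del self.held[item]
        self.shrinking = True            # the first release ends the growing phase

    def commit(self) -> None:
        # At commit every remaining lock, including X-locks, is released.
        self.held.clear()
        self.shrinking = True
```

The lock point in this model is simply the moment just before the first call to unlock.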

5. Discuss different levels of views.

Ans: There are mainly 3 levels of data abstraction:

Physical: This is the lowest level of data abstraction. It tells us how the data is actually stored in memory. Access methods such as sequential or random access, and file organization methods such as B+ trees and hashing, are used at this level. Usability, size of memory, and the number of times the records are accessed are factors that we need to know while designing the database. Suppose we need to store the details of an employee: the blocks of storage and the amount of memory used for this purpose are kept hidden from the user.

Logical: This level comprises the information that is actually stored in the database in the form of tables. It also stores the relationships among the data entities in relatively simple structures. At this level, the information available to the user at the view level is unknown. For example, we can store the various attributes of an employee, and relationships, e.g., with the manager, can also be stored.

View: This is the highest level of abstraction. Only a part of the actual database is viewed by the users. This level exists to ease the accessibility of the database for an individual user. Users view data in the form of rows and columns; tables and relations are used to store data. Multiple views of the same database may exist. Users can simply view the data and interact with the database, while storage and implementation details are hidden from them.

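6. What is weak entity set? Discuss with suitable example.

Ans: A weak entity set is an entity set that does not have enough attributes of its own to form a primary key. Its entities can be identified only by combining a partial key (discriminator) with the primary key of an owner (identifying) entity set. Weak entities are represented with a double rectangle in the ER diagram, and the identifying relationships are represented with a double diamond. Partial key attributes are underlined with a dashed (dotted) line.

Example-1:

In the ER diagram below, 'Payment' is the weak entity, 'Loan Payment' is the identifying relationship, and 'Payment Number' is the partial key. The primary key of Loan together with the partial key is used to identify the payment records.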
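In a relational schema, the weak entity Payment usually becomes a table whose primary key combines the owner's key with the partial key. The SQLite sketch below uses hypothetical column names (loan_number, payment_number, amounts). ON DELETE CASCADE expresses the existence dependency, so deleting a loan also deletes its payments.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")   # SQLite enforces foreign keys only when enabled
conn.executescript("""
    CREATE TABLE loan (
        loan_number INTEGER PRIMARY KEY,
        amount      INTEGER
    );
    -- Weak entity: payment_number is only a partial key (discriminator).
    CREATE TABLE payment (
        loan_number    INTEGER REFERENCES loan(loan_number) ON DELETE CASCADE,
        payment_number INTEGER,
        payment_amount INTEGER,
        PRIMARY KEY (loan_number, payment_number)
    );
    INSERT INTO loan VALUES (1, 5000), (2, 8000);
    -- Payment number 1 exists for both loans; only the pair identifies a payment.
    INSERT INTO payment VALUES (1, 1, 500), (1, 2, 500), (2, 1, 800);
""")

# Deleting the owner removes its dependent weak entities.
conn.execute("DELETE FROM loan WHERE loan_number = 1")
remaining = conn.execute("SELECT loan_number, payment_number FROM payment").fetchall()
```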

7. a) Why do distributed deadlocks occur?

Ans: Deadlock is a state of a database system with two or more transactions in which each transaction is waiting for a data item that is locked by some other transaction. A deadlock can be indicated by a cycle in the wait-for graph. This is a directed graph in which the vertices denote transactions and the edges denote waits for data items.

For example, in the following wait-for graph, transaction T1 is waiting for data item X, which is locked by T3. T3 is waiting for Y, which is locked by T2, and T2 is waiting for Z, which is locked by T1. Hence, a waiting cycle is formed, and none of the transactions can proceed.

In a distributed DBMS, a transaction may lock data items at several sites, so such a cycle can span sites. If X, Y and Z are stored at different sites, each site's local wait-for graph may contain no cycle even though the combined (global) wait-for graph does.

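7.b) What are distributed wait-for graph and local wait-for graph? How does a wait-for graph help in deadlock detection? Under what circumstances does global wait-for-graph-based deadlock handling lead to unnecessary rollback?

Ans:

Wait-for Graph

o This is a suitable method for deadlock detection. In this method, a graph is created based on the transactions and their locks. If the created graph has a cycle (closed loop), then there is a deadlock.

o The wait-for graph is maintained by the system for every transaction that is waiting for some data held by others. The system keeps checking whether there is any cycle in the graph.

The wait-for graph for the above scenario is shown below:

Local and global wait-for graphs

o Local wait-for graph: each site maintains a wait-for graph of the transactions, local or remote, that hold or request data items stored at that site.

o Global (distributed) wait-for graph: the union of all local wait-for graphs, usually built by a coordinator site. A deadlock exists if the global graph has a cycle, even when every local graph is acyclic.

Unnecessary rollbacks

o False cycles: messages from different sites arrive with delays. The coordinator may therefore receive an edge insertion from one site before the matching edge removal from another, and detect a cycle that never existed. The chosen victim is then rolled back unnecessarily.

o A real deadlock is detected and a victim is chosen, but meanwhile one transaction in the cycle has already been aborted for reasons unrelated to the deadlock. That abort had already broken the cycle, so the victim is rolled back for nothing.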
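Cycle detection on a wait-for graph, and the construction of a global graph as the union of local ones, can be sketched in Python as below. Each graph is a dict that maps a transaction to the transactions it waits for. The example splits the 7.a scenario across two sites, with X and Y at site S1 and Z at site S2. This placement is a hypothetical assumption, chosen to show a deadlock that no single local graph reveals.

```python
def has_cycle(graph: dict[str, set[str]]) -> bool:
    """Depth-first search for a cycle; an edge Ti -> Tj means Ti waits for Tj."""
    WHITE, GREY, BLACK = 0, 1, 2          # unvisited, on the current path, finished
    color = {t: WHITE for t in graph}
    for targets in graph.values():
        for t in targets:
            color.setdefault(t, WHITE)

    def visit(t: str) -> bool:
        color[t] = GREY
        for u in graph.get(t, set()):
            if color[u] == GREY:          # back edge: t can reach itself
                return True
            if color[u] == WHITE and visit(u):
                return True
        color[t] = BLACK
        return False

    return any(color[t] == WHITE and visit(t) for t in list(color))


def global_wfg(*local_graphs: dict[str, set[str]]) -> dict[str, set[str]]:
    """Union of local wait-for graphs, as a coordinator site would assemble it."""
    merged: dict[str, set[str]] = {}
    for graph in local_graphs:
        for t, targets in graph.items():
            merged.setdefault(t, set()).update(targets)
    return merged


# 7.a example: T1 waits for T3 (X), T3 waits for T2 (Y), T2 waits for T1 (Z).
# Hypothetical placement: X and Y are stored at site S1, Z at site S2.
site_s1 = {"T1": {"T3"}, "T3": {"T2"}}
site_s2 = {"T2": {"T1"}}
```

Neither site_s1 nor site_s2 has a cycle on its own. The union built by global_wfg does, which is why a distributed DBMS needs a global view to detect this deadlock.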

8.a) Define equivalence transformation.

Ans: An equivalence rule says that expressions of two forms are equivalent because both expressions produce the same output on every legal database instance. This means that we can replace an expression of the first form with one of the second form, and vice versa. The query optimizer uses such equivalence rules to transform expressions into logically equivalent ones when it builds query-evaluation plans.
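Two standard equivalence rules are the cascade of selections, σθ1∧θ2(E) = σθ1(σθ2(E)), and pushing a selection below a join when its condition uses only one operand's attributes. The Python sketch below evaluates both sides of each rule on one hypothetical instance, with EMP and DEPT stored as lists of dicts. Agreement on a single instance only illustrates a rule; the rules themselves hold on every legal instance.

```python
EMP = [
    {"eid": 1, "dept": 10, "salary": 900},
    {"eid": 2, "dept": 20, "salary": 1500},
    {"eid": 3, "dept": 10, "salary": 1800},
]
DEPT = [{"dept": 10, "dname": "Sales"}, {"dept": 20, "dname": "HR"}]


def select(pred, rel):
    """sigma_pred(rel): keep the rows that satisfy the predicate."""
    return [row for row in rel if pred(row)]


def natural_join(r, s):
    """r JOIN s on all attributes the two relations share."""
    common = set(r[0]) & set(s[0]) if r and s else set()
    return [{**a, **b} for a in r for b in s if all(a[c] == b[c] for c in common)]


def in_dept_10(row):
    return row["dept"] == 10


def well_paid(row):
    return row["salary"] > 1000


# Rule 1: sigma_{t1 AND t2}(E) = sigma_{t1}(sigma_{t2}(E))
lhs1 = select(lambda row: in_dept_10(row) and well_paid(row), EMP)
rhs1 = select(in_dept_10, select(well_paid, EMP))

# Rule 2: sigma_t(EMP JOIN DEPT) = sigma_t(EMP) JOIN DEPT, since t uses only EMP's attributes
lhs2 = select(well_paid, natural_join(EMP, DEPT))
rhs2 = natural_join(select(well_paid, EMP), DEPT)
```

An optimizer prefers the right-hand side of rule 2 because it filters EMP before the join, so fewer rows are joined.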

8.b) What is parametric query?

Ans: Parameterized SQL queries allow you to place parameters in an SQL query instead of constant values. A parameter takes a value only when the query is executed, which allows the query to be reused with different values and for different purposes. Parameterized SQL statements are available in some analysis clients, and are also available through the Historian SDK.

For example, you could create the following conditional SQL query, which contains a parameter (?) for the collector name:

SELECT * FROM ihtags WHERE collectorname = ? ORDER BY tagname
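Python's sqlite3 module supports the same ? placeholder style, so the query can be tried locally as sketched below. The ihtags table here is a hypothetical two-column stand-in, not the Historian schema. The value is bound at execution time, so the statement text is reused unchanged. A value that contains quotes is treated as data rather than as SQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ihtags (tagname TEXT, collectorname TEXT)")
conn.executemany("INSERT INTO ihtags VALUES (?, ?)",
                 [("T2", "C1"), ("T1", "C1"), ("T3", "C2")])

query = "SELECT * FROM ihtags WHERE collectorname = ? ORDER BY tagname"

# The same statement is reused; only the bound parameter value changes.
c1_tags = conn.execute(query, ("C1",)).fetchall()
c2_tags = conn.execute(query, ("C2",)).fetchall()

# An injection attempt is compared as a literal string and matches nothing.
injected = conn.execute(query, ("C1' OR '1'='1",)).fetchall()
```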

9.a) What do you mean by referential integrity?

Ans: The Referential Integrity Rule in DBMS is based on primary and foreign keys. The rule states that every foreign key value must match an existing primary key value in the referenced table (or be NULL). In other words, a reference from one table to another must be valid.

Referential Integrity Rule example −

Employee table: EMP_ID | EMP_NAME | DEPT_ID

Department table: DEPT_ID | DEPT_NAME | DEPT_ZONE

The rule states that every DEPT_ID in the Employee table must have a matching, valid DEPT_ID in the Department table.

To allow joins, the referential integrity rule also requires the primary key and the foreign key to have the same data type.
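SQLite enforces this rule once PRAGMA foreign_keys is switched on, so the rule can be seen in action there. The sketch below uses the Employee/Department example with hypothetical rows. try_execute is a helper name introduced only for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # foreign keys are off by default in SQLite
conn.execute("""CREATE TABLE Department (
    DEPT_ID INTEGER PRIMARY KEY, DEPT_NAME TEXT, DEPT_ZONE TEXT)""")
conn.execute("""CREATE TABLE Employee (
    EMP_ID INTEGER PRIMARY KEY, EMP_NAME TEXT,
    DEPT_ID INTEGER REFERENCES Department(DEPT_ID))""")
conn.execute("INSERT INTO Department VALUES (1, 'Sales', 'North')")
conn.execute("INSERT INTO Employee VALUES (100, 'Asha', 1)")  # valid: DEPT_ID 1 exists


def try_execute(sql: str) -> str:
    """Run a statement and report whether the integrity rule allowed it."""
    try:
        conn.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError:
        return "rejected"


bad_insert = try_execute("INSERT INTO Employee VALUES (101, 'Ravi', 99)")  # no DEPT_ID 99
bad_delete = try_execute("DELETE FROM Department WHERE DEPT_ID = 1")      # still referenced
```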

10.c) Write down the basic time stamp methods.

Ans: Every transaction is issued a timestamp based on when it enters the system. If an older transaction Ti has timestamp TS(Ti), a newer transaction Tj is assigned a timestamp TS(Tj) such that TS(Ti) < TS(Tj). The protocol manages concurrent execution so that the timestamps determine the serializability order. The timestamp ordering (TO) protocol ensures that any conflicting read and write operations are executed in timestamp order.

For every data item X, the protocol keeps two values. R_TS(X) is the largest timestamp of any transaction that has read X, and W_TS(X) is the largest timestamp of any transaction that has written X. Whenever a transaction T tries to issue an R_item(X) or a W_item(X), the Basic TO algorithm compares the timestamp of T with R_TS(X) and W_TS(X) to ensure that the timestamp order is not violated. The Basic TO protocol handles the following two cases.

1. Whenever a transaction T issues a W_item(X) operation, check the following conditions:

o If R_TS(X) > TS(T) or W_TS(X) > TS(T), then abort and roll back T and reject the operation; else,

o Execute the W_item(X) operation of T and set W_TS(X) to TS(T).

2. Whenever a transaction T issues an R_item(X) operation, check the following conditions:

o If W_TS(X) > TS(T), then abort and roll back T and reject the operation; else,

o If W_TS(X) <= TS(T), then execute the R_item(X) operation of T and set R_TS(X) to the larger of TS(T) and the current R_TS(X).
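The two cases translate almost line for line into code. The Python sketch below assumes integer timestamps greater than zero and treats a missing R_TS(X) or W_TS(X) as 0. BasicTO and Abort are hypothetical names. When Abort is raised, the caller is responsible for actually rolling back T.

```python
class Abort(Exception):
    """Raised when Basic TO rejects an operation; the transaction must be rolled back."""


class BasicTO:
    def __init__(self) -> None:
        self.read_ts: dict[str, int] = {}   # R_TS(X)
        self.write_ts: dict[str, int] = {}  # W_TS(X)

    def read(self, ts: int, item: str) -> None:
        # Case 2: a younger transaction has already written X, so T reads too late.
        if self.write_ts.get(item, 0) > ts:
            raise Abort(f"R_item({item}) by TS={ts} rejected")
        self.read_ts[item] = max(ts, self.read_ts.get(item, 0))

    def write(self, ts: int, item: str) -> None:
        # Case 1: a younger transaction has already read or written X.
        if self.read_ts.get(item, 0) > ts or self.write_ts.get(item, 0) > ts:
            raise Abort(f"W_item({item}) by TS={ts} rejected")
        self.write_ts[item] = ts
```

For example, after read(1, "X") and write(2, "X"), a further read or write of X by the transaction with timestamp 1 is rejected, while a read by timestamp 3 succeeds.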

11.a) Distribution Transparency:

Distribution transparency is the property of distributed databases by virtue of which the internal details of the distribution are hidden from the users. The DDBMS designer may choose to fragment tables, replicate the fragments, and store them at different sites.

The three dimensions of distribution transparency are −

o Location transparency

o Fragmentation transparency

o Replication transparency

Location Transparency

Location transparency ensures that the user can query any table(s) or fragment(s) of a table as if they were stored locally at the user's site. The end user should be completely unaware that the table or its fragments are stored at a remote site in the distributed database system. The addresses of the remote site(s) and the access mechanisms are completely hidden.

Fragmentation Transparency

Fragmentation transparency enables users to query any table as if it were unfragmented. Thus, it hides the fact that the table the user is querying is actually a fragment or a union of some fragments. It also conceals the fact that the fragments are located at diverse sites.

Replication Transparency

Replication transparency ensures that the replication of databases is hidden from the users. It enables users to query a table as if only a single copy of the table exists.

Replication transparency is associated with concurrency transparency and failure transparency. Whenever a user updates a data item, the update is reflected in all the copies of the table, but the user should not see this happening. This is concurrency transparency. Also, if a site fails, the user can still proceed with their queries using replicated copies without any knowledge of the failure. This is failure transparency.

11.b) Normalization

If a database design is not perfect, it may contain anomalies, which are like a bad dream for any database administrator. Managing a database with anomalies is next to impossible.

• Update anomalies − If data items are scattered and are not linked to each other properly, strange situations can arise. For example, suppose we update one data item whose copies are scattered over several places. A few instances get updated properly while a few others are left with old values. Such instances leave the database in an inconsistent state.

• Deletion anomalies − We try to delete a record, but parts of it are left undeleted because, unknown to us, the data is also saved somewhere else.

• Insert anomalies − We try to insert data into a record that does not exist at all.

Normalization is a method to remove all these anomalies and bring the database to a consistent state.

First Normal Form

First Normal Form is defined in the definition of relations (tables) itself. This rule defines that all the attributes in a relation must have atomic domains. The values in an atomic domain are indivisible units.

We re-arrange the relation (table) as below, to convert it to First Normal Form.

Each attribute must contain only a single value from its pre-defined domain.

Second Normal Form

Before we learn about the second normal form, we need to understand the following −

• Prime attribute − An attribute that is part of a candidate key is known as a prime attribute.

• Non-prime attribute − An attribute that is not part of any candidate key is said to be a non-prime attribute.

If we follow second normal form, then every non-prime attribute should be fully functionally dependent on the candidate key. That is, if X → A holds, where X is a candidate key and A is a non-prime attribute, then there should not be any proper subset Y of X for which Y → A also holds true.

We see here in the Student_Project relation that the prime key attributes are Stu_ID and Proj_ID. According to the rule, the non-key attributes, i.e. Stu_Name and Proj_Name, must depend on both and not on either of the prime key attributes individually. But we find that Stu_Name can be identified by Stu_ID alone and Proj_Name can be identified by Proj_ID alone. This is called partial dependency, which is not allowed in Second Normal Form.

We broke the relation in two as depicted in the above picture. So there exists no partial dependency.

Third Normal Form

For a relation to be in Third Normal Form, it must be in Second Normal Form and the following must be satisfied −

• No non-prime attribute is transitively dependent on the candidate key.

• For any non-trivial functional dependency X → A, either −

o X is a superkey, or

o A is a prime attribute.

We find that in the above Student_detail relation, Stu_ID is the key and the only prime key attribute. We find that City can be identified by Stu_ID as well as by Zip itself. Zip is not a superkey, nor is City a prime attribute. Additionally, Stu_ID → Zip → City, so there exists a transitive dependency.

To bring this relation into third normal form, we break the relation into two relations as follows −

Boyce-Codd Normal Form

Boyce-Codd Normal Form (BCNF) is a stricter version of Third Normal Form. BCNF states that −

• For any non-trivial functional dependency X → A, X must be a superkey.

In the above image, Stu_ID is the superkey in the relation Student_Detail and Zip is the superkey in the relation ZipCodes. So,

Stu_ID → Stu_Name, Zip

and

Zip → City

which confirms that both the relations are in BCNF.
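The BCNF test above (for every non-trivial X → A, X must be a superkey) can be checked mechanically with attribute closure. The Python sketch below assumes the dependencies Stu_ID → Stu_Name, Zip and Zip → City from the text. To keep it short, fds_within keeps only the dependencies whose attributes all lie in a relation. That works for this example but is a simplification of true FD projection. All helper names are hypothetical.

```python
FD = tuple[frozenset[str], frozenset[str]]


def fd(lhs: str, rhs: str) -> FD:
    """Build an FD from space-separated attribute names."""
    return frozenset(lhs.split()), frozenset(rhs.split())


def closure(attrs: frozenset[str], fds: list[FD]) -> set[str]:
    """Attribute closure X+: all attributes functionally determined by attrs."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def bcnf_violations(schema: set[str], fds: list[FD]) -> list[FD]:
    """Non-trivial FDs X -> A on the schema whose left side X is not a superkey."""
    return [(lhs, rhs) for lhs, rhs in fds
            if not rhs <= lhs and not closure(lhs, fds) >= schema]


def fds_within(schema: set[str], fds: list[FD]) -> list[FD]:
    """Simplification: keep the FDs whose attributes all belong to the schema."""
    return [(lhs, rhs) for lhs, rhs in fds if lhs | rhs <= schema]


FDS = [fd("Stu_ID", "Stu_Name Zip"), fd("Zip", "City")]
ORIGINAL = {"Stu_ID", "Stu_Name", "Zip", "City"}
STUDENT_DETAIL = {"Stu_ID", "Stu_Name", "Zip"}
ZIPCODES = {"Zip", "City"}
```

On the original relation, Zip → City is reported as a violation because Zip's closure is only {Zip, City}. After the split, neither Student_Detail nor ZipCodes has a violation.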

11.d) Fragmentation in DDBMS:

Fragmentation is the task of dividing a table into a set of smaller tables. The subsets of the table are called fragments. Fragmentation can be of three types: horizontal, vertical, and hybrid (a combination of horizontal and vertical). Horizontal fragmentation can further be classified into two techniques: primary horizontal fragmentation and derived horizontal fragmentation.

Fragmentation should be done in such a way that the original table can be reconstructed from the fragments whenever required. This requirement is called "reconstructiveness."

Advantages of Fragmentation

• Since data is stored close to the site of usage, the efficiency of the database system is increased.

• Local query optimization techniques are sufficient for most queries, since data is locally available.

• Since irrelevant data is not available at the sites, the security and privacy of the database system can be maintained.

Disadvantages of Fragmentation

• When data from different fragments is required, access speeds may be very low.

• In the case of recursive fragmentation, the job of reconstruction will need expensive techniques.

• Lack of back-up copies of data at different sites may render the database ineffective in case of failure of a site.

Vertical Fragmentation

In vertical fragmentation, the fields or columns of a table are grouped into fragments. In order to maintain reconstructiveness, each fragment should contain the primary key field(s) of the table. Vertical fragmentation can be used to enforce privacy of data.

For example, let us consider that a University database keeps records of all registered students in a Student table having the following schema.

STUDENT (Regd_No, Name, Course, Address, Semester, Fees, Marks)

Now, the fee details are maintained in the accounts section. In this case, the designer will fragment the database as follows −

CREATE TABLE STD_FEES AS
SELECT Regd_No, Fees
FROM STUDENT;

Horizontal Fragmentation

Horizontal fragmentation groups the tuples of a table according to the values of one or more fields. Horizontal fragmentation should also conform to the rule of reconstructiveness. Each horizontal fragment must have all the columns of the original base table.

For example, in the student schema, suppose the details of all students of the Computer Science course need to be maintained at the School of Computer Science. The designer will then horizontally fragment the database as follows −

CREATE TABLE COMP_STD AS
SELECT * FROM STUDENT
WHERE COURSE = 'Computer Science';

Hybrid Fragmentation

In hybrid fragmentation, a combination of horizontal and vertical fragmentation techniques is used. This is the most flexible fragmentation technique, since it generates fragments with minimal extraneous information. However, reconstruction of the original table is often an expensive task.

Hybrid fragmentation can be done in two alternative ways −

• First generate a set of horizontal fragments; then generate vertical fragments from one or more of the horizontal fragments.

• First generate a set of vertical fragments; then generate horizontal fragments from one or more of the vertical fragments.
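Reconstructiveness can be checked concretely. Join the vertical fragments on the primary key, take the union of the horizontal fragments, and compare each result with the original table. The SQLite sketch below uses the STUDENT schema from the text with hypothetical rows. STD_REST and OTHER_STD are hypothetical complementary fragments, added so that the original table can be rebuilt.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""CREATE TABLE STUDENT (Regd_No INTEGER PRIMARY KEY, Name TEXT,
                Course TEXT, Address TEXT, Semester INTEGER, Fees INTEGER, Marks INTEGER)""")
conn.executemany("INSERT INTO STUDENT VALUES (?, ?, ?, ?, ?, ?, ?)", [
    (1, "Asha", "Computer Science", "Pune", 3, 40000, 88),
    (2, "Ravi", "Physics", "Delhi", 1, 35000, 75),
    (3, "Meena", "Computer Science", "Agra", 5, 40000, 91),
])

# Vertical fragments: every fragment keeps the primary key Regd_No.
conn.execute("CREATE TABLE STD_FEES AS SELECT Regd_No, Fees FROM STUDENT")
conn.execute("""CREATE TABLE STD_REST AS
                SELECT Regd_No, Name, Course, Address, Semester, Marks FROM STUDENT""")

# Horizontal fragments: disjoint row subsets that keep all columns.
conn.execute("CREATE TABLE COMP_STD AS SELECT * FROM STUDENT WHERE Course = 'Computer Science'")
conn.execute("CREATE TABLE OTHER_STD AS SELECT * FROM STUDENT WHERE Course <> 'Computer Science'")

original = conn.execute("SELECT * FROM STUDENT ORDER BY Regd_No").fetchall()

# Reconstruction: join vertical fragments on the key, union horizontal fragments.
from_vertical = conn.execute("""
    SELECT r.Regd_No, r.Name, r.Course, r.Address, r.Semester, f.Fees, r.Marks
    FROM STD_REST AS r JOIN STD_FEES AS f ON r.Regd_No = f.Regd_No
    ORDER BY r.Regd_No""").fetchall()
from_horizontal = conn.execute("""
    SELECT * FROM COMP_STD UNION ALL SELECT * FROM OTHER_STD
    ORDER BY Regd_No""").fetchall()
```

The two horizontal predicates must together cover every row. A row with a NULL Course, for instance, would fall into neither fragment here.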

2016 – 17

7.b) Explain the advantages of Distributed DBMS.

Ans:

• The database is easier to expand, as it is already spread across multiple systems and it is not too complicated to add a system.

• The distributed database can have the data arranged according to different levels of transparency, i.e. data with different transparency levels can be stored at different locations.

• The database can be stored according to the departmental information in an organisation. In that case, organisational hierarchical access is easier.

• If there were a natural catastrophe such as a fire or an earthquake, all the data would not be destroyed, as it is stored at different locations.

• It is cheaper to create a network of systems containing a part of the database. This database can also be easily increased or decreased.

• Even if some of the data nodes go offline, the rest of the database can continue its normal functions.

9.a) What is Package in Oracle? What is its advantage.

Ans: A package is a schema object that groups logically related PL/SQL elements such as variables, constants, cursors, exceptions, procedures, and functions. A package usually has two parts: a specification, which declares the public interface, and a body, which implements it.

Advantage

• Helps in making the code modular.

• Provides security by hiding the implementation details in the package body.

• Adds functionality: public variables and cursors declared in a package persist for the duration of a session.

• Improves performance: the whole package is loaded into memory on its first call, so later calls to its subprograms need no further disk I/O.

• Simplifies authorization: privileges can be granted on the package as a whole instead of on each object in it.

2015 – 16

3. Describe about view in SQL.

Ans: Views in SQL are a kind of virtual table. A view has rows and columns just like a real table in the database. We can create a view by selecting fields from one or more tables present in the database. A view can contain either all the rows of a table or only the rows that satisfy a certain condition.

We can create a view using the CREATE VIEW statement. A view can be created from a single table or from multiple tables. Syntax:

CREATE VIEW view_name AS
SELECT column1, column2, ...
FROM table_name
WHERE condition;
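Here is a minimal sketch of a view, run through Python's sqlite3 module. The EMPLOYEE table, its rows and the view name HIGH_EARNERS are hypothetical. A view stores only its defining query, so changing the base table also changes what the view returns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE EMPLOYEE (EmpID INTEGER PRIMARY KEY, EName TEXT, Salary INTEGER);
INSERT INTO EMPLOYEE VALUES (1, 'Arun', 40000), (2, 'Bina', 65000), (3, 'Chen', 72000);

-- The view keeps only the query, not a copy of the rows.
CREATE VIEW HIGH_EARNERS AS
SELECT EmpID, EName
FROM EMPLOYEE
WHERE Salary > 60000;
""")

def high_earners():
    """Query the view exactly like a table."""
    return conn.execute("SELECT EName FROM HIGH_EARNERS ORDER BY EmpID").fetchall()

print(high_earners())   # [('Bina',), ('Chen',)]
conn.execute("UPDATE EMPLOYEE SET Salary = 61000 WHERE EmpID = 1")
print(high_earners())   # [('Arun',), ('Bina',), ('Chen',)]
```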

6. Define the concept of aggregation.

Ans: In aggregation, the relationship between two entities is treated as a single entity. The relationship, together with its participating entities, is aggregated into a higher-level entity.

For example, the relationship "Center offers Course" can be treated as a single entity. That aggregated entity in turn takes part in a relationship with another entity, Visitor. In the real world, a visitor to a coaching center never enquires only about the course or only about the center; he enquires about both together.

8.c) Write a short note on Trigger in Database.

Ans: A trigger is a stored procedure in a database that is invoked automatically whenever a specified event occurs in the database. For example, a trigger can be invoked when a row is inserted into a specified table or when certain table columns are updated.

Syntax:

create trigger [trigger_name]
[before | after]
{insert | update | delete}
on [table_name]
[for each row]
[trigger_body]

Explanation of syntax:

1. create trigger [trigger_name]: Creates a trigger with the name trigger_name. Some systems, such as Oracle, also accept CREATE OR REPLACE TRIGGER to replace an existing trigger.

2. [before | after]: This specifies when the trigger will be executed.

3. {insert | update | delete}: This specifies the DML operation.

4. on [table_name]: This specifies the name of the table associated with the trigger.

5. [for each row]: This specifies a row-level trigger, i.e., the trigger will be executed for each row being affected.

6. [trigger_body]: This provides the operation to be performed when the trigger is fired.

BEFORE and AFTER of Trigger:

BEFORE triggers run the trigger action before the triggering statement is run. AFTER triggers run the trigger action after the triggering statement is run.
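The sketch below shows one BEFORE and one AFTER row-level trigger in SQLite, driven from Python's sqlite3 module. The table names, columns and trigger names are hypothetical. SQLite writes the trigger body between BEGIN and END, and it uses its RAISE(ABORT, ...) function here to reject a row, so the exact syntax differs from Oracle or MySQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE EMPLOYEE (EmpID INTEGER PRIMARY KEY, EName TEXT, Salary INTEGER);
CREATE TABLE SALARY_AUDIT (EmpID INTEGER, OldSalary INTEGER, NewSalary INTEGER);

-- BEFORE trigger: runs before each inserted row is stored and can reject it.
CREATE TRIGGER reject_negative_salary
BEFORE INSERT ON EMPLOYEE
FOR EACH ROW
WHEN NEW.Salary < 0
BEGIN
    SELECT RAISE(ABORT, 'salary must be non-negative');
END;

-- AFTER trigger: runs once per updated row, after the update has happened.
CREATE TRIGGER log_salary_change
AFTER UPDATE OF Salary ON EMPLOYEE
FOR EACH ROW
BEGIN
    INSERT INTO SALARY_AUDIT VALUES (OLD.EmpID, OLD.Salary, NEW.Salary);
END;
""")

conn.execute("INSERT INTO EMPLOYEE VALUES (1, 'Arun', 40000)")
conn.execute("INSERT INTO EMPLOYEE VALUES (2, 'Bina', 50000)")
conn.execute("UPDATE EMPLOYEE SET Salary = Salary + 5000")   # fires the AFTER trigger twice

try:
    conn.execute("INSERT INTO EMPLOYEE VALUES (3, 'Chen', -1)")  # stopped by the BEFORE trigger
    rejected = False
except sqlite3.DatabaseError:
    rejected = True

audit = conn.execute("SELECT * FROM SALARY_AUDIT ORDER BY EmpID").fetchall()
print(audit)      # [(1, 40000, 45000), (2, 50000, 55000)]
print(rejected)   # True
```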

10.a) What is Query Optimization? Write the steps of Query Optimization.

Ans: Query optimization is of great importance for the performance of a relational database, especially for the execution of complex SQL statements. A query optimizer decides the best method for implementing each query.

The query optimizer selects, for instance, whether or not to use indexes for a given query, and which join methods to use when joining multiple tables. These decisions have a tremendous effect on SQL performance, and query optimization is a key technology for every application, from operational systems to data warehouse and analytical systems to content management systems.

The main principles of query optimization are as follows −

• Understand how your database is executing your query − The first phase of query optimization is understanding what the database is doing. Different databases have different commands for this. For example, in MySQL one can use “EXPLAIN [SQL Query]” to see the query plan. In Oracle, one can use “EXPLAIN PLAN FOR [SQL Query]” to generate the query plan.

• Retrieve as little data as possible − The more data a query returns, the more resources the database has to spend to process and store those records. For example, if only one column of a table is needed, do not use ‘SELECT *’.

• Store intermediate results − Sometimes the logic of a query can be quite complex. It is possible to produce the desired results through subqueries, inline views, and UNION-type statements. With those methods, the intermediate results are not saved in the database but are used directly within the query. This can lead to performance issues, particularly when the intermediate results have a huge number of rows.
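SQLite has its own counterpart of EXPLAIN, called EXPLAIN QUERY PLAN. The sketch below uses a hypothetical EMPLOYEE table and index name. It shows the plan changing from a full table scan to an index search once an index exists. The exact wording of the plan text varies between SQLite versions, so the checks only look for the word SCAN and the index name.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE EMPLOYEE (EmpID INTEGER PRIMARY KEY, EName TEXT, Salary INTEGER, DeptNo INTEGER)")

QUERY = "SELECT EmpID FROM EMPLOYEE WHERE EName = ?"

def plan(sql, params=()):
    """Return the 'detail' column (last column) of SQLite's EXPLAIN QUERY PLAN rows."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(QUERY, ("ArunKumar",))   # no index yet: the optimizer must scan the table
conn.execute("CREATE INDEX idx_employee_ename ON EMPLOYEE (EName)")
after = plan(QUERY, ("ArunKumar",))    # the optimizer can now search the index

print(before)
print(after)
```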

Steps of Query Optimization:

Query optimization involves three steps, namely query tree generation, plan generation, and query plan code generation.

Step 1 − Query Tree Generation

A query tree is a tree data structure representing a relational algebra expression. The tables of the query are represented as leaf nodes. The relational algebra operations are represented as the internal nodes. The root represents the query as a whole.

During execution, an internal node is executed whenever its operand tables are available. The node is then replaced by the result table. This process continues for all internal nodes until the root node is executed and replaced by the result table.

For example, let us consider the following schemas −

EMPLOYEE (EmpID, EName, Salary, DeptNo, DateOfJoining)

DEPARTMENT (DNo, DName, Location)

Example 1

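Let us consider the following query.

$$\pi_{EmpID}(\sigma_{EName = \text{"ArunKumar"}}(EMPLOYEE))$$

In the corresponding query tree, EMPLOYEE is the only leaf, the selection $\sigma_{EName = \text{"ArunKumar"}}$ is the internal node above it, and the projection $\pi_{EmpID}$ is the root.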

Example 2

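Let us consider another query involving a join.

$$\pi_{EName, Salary}\left(\sigma_{DName = \text{"Marketing"}}(DEPARTMENT) \bowtie_{DNo = DeptNo} EMPLOYEE\right)$$

In the query tree for this query, the root is $\pi_{EName, Salary}$ and its child is the join $\bowtie_{DNo = DeptNo}$. The join has two children: the selection $\sigma_{DName = \text{"Marketing"}}$, with the leaf DEPARTMENT below it, and the leaf EMPLOYEE.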
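The bottom-up evaluation of a query tree described in Step 1 can be sketched in Python with one class per node type. Each internal node first evaluates its children and then applies its own operation to their result tables. The sample EMPLOYEE and DEPARTMENT rows are hypothetical, and DateOfJoining and Location are partly omitted for brevity. The nested-loop join is simply the easiest choice to write; a real optimizer would not necessarily pick it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Union

Row = Dict[str, object]

@dataclass
class Leaf:                      # a base table (leaf node)
    rows: List[Row]
    def evaluate(self) -> List[Row]:
        return self.rows

@dataclass
class Select:                    # sigma: keep rows satisfying the predicate
    predicate: Callable[[Row], bool]
    child: "Node"
    def evaluate(self) -> List[Row]:
        return [r for r in self.child.evaluate() if self.predicate(r)]

@dataclass
class Project:                   # pi: keep columns, drop duplicate tuples
    columns: List[str]
    child: "Node"
    def evaluate(self) -> List[Row]:
        out, seen = [], set()
        for r in self.child.evaluate():
            values = tuple(r[c] for c in self.columns)
            if values not in seen:
                seen.add(values)
                out.append(dict(zip(self.columns, values)))
        return out

@dataclass
class Join:                      # join on left_col = right_col (nested loops)
    left: "Node"
    right: "Node"
    left_col: str
    right_col: str
    def evaluate(self) -> List[Row]:
        right_rows = self.right.evaluate()
        return [{**l, **r} for l in self.left.evaluate()
                for r in right_rows if l[self.left_col] == r[self.right_col]]

Node = Union[Leaf, Select, Project, Join]

EMPLOYEE = [
    {"EmpID": 101, "EName": "ArunKumar", "Salary": 40000, "DeptNo": 1},
    {"EmpID": 102, "EName": "Priya", "Salary": 55000, "DeptNo": 2},
    {"EmpID": 103, "EName": "Rahul", "Salary": 48000, "DeptNo": 1},
]
DEPARTMENT = [
    {"DNo": 1, "DName": "Marketing", "Location": "Delhi"},
    {"DNo": 2, "DName": "Finance", "Location": "Mumbai"},
]

# Example 1 and Example 2 as trees; evaluating the root evaluates the whole tree.
query1 = Project(["EmpID"], Select(lambda r: r["EName"] == "ArunKumar", Leaf(EMPLOYEE)))
query2 = Project(["EName", "Salary"],
                 Join(Select(lambda r: r["DName"] == "Marketing", Leaf(DEPARTMENT)),
                      Leaf(EMPLOYEE), "DNo", "DeptNo"))

print(query1.evaluate())  # [{'EmpID': 101}]
print(query2.evaluate())  # [{'EName': 'ArunKumar', 'Salary': 40000}, {'EName': 'Rahul', 'Salary': 48000}]
```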

Step 2 − Query Plan Generation

After the query tree is generated, a query plan is made. A query plan is an extended query tree that includes access paths for all operations in the query tree. Access paths specify how the relational operations in the tree should be performed. For example, a selection operation can have an access path that gives details about the use of a B+ tree index for the selection.

Besides, a query plan also states how the intermediate tables should be passed from one operator to the next, how temporary tables should be used and how operations should be pipelined/combined.

Step 3 − Code Generation

Code generation is the final step in query optimization. The generated code is the executable form of the query, whose form depends upon the type of the underlying operating system. Once the query code is generated, the Execution Manager runs it and produces the results.

Approaches to Query Optimization

Among the approaches for query optimization, exhaustive search and heuristics-based algorithms are the most widely used.

Exhaustive Search Optimization

In these techniques, all possible query plans for a query are initially generated and then the best plan is selected. Though these techniques provide the best solution, they have exponential time and space complexity owing to the large solution space. Dynamic programming is an example of this approach.
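This is a toy sketch comparing exhaustive search with dynamic programming for choosing a left-deep join order. The cost model is hypothetical: it is the sum of estimated intermediate result sizes, computed from assumed cardinalities and join selectivities. The PROJECT relation is also hypothetical, and real optimizers use far richer cost models. For every subset of relations the DP keeps only the cheapest plan, yet it finds the same minimum cost as trying every permutation.

```python
from itertools import combinations, permutations
from typing import Dict, FrozenSet, Tuple

def result_size(rels, card, sel) -> float:
    """Toy estimate: product of cardinalities times the selectivity of every join
    predicate whose two relations are both present (no predicate = cross product)."""
    size = 1.0
    for r in sorted(rels):
        size *= card[r]
    for (a, b), s in sel.items():
        if a in rels and b in rels:
            size *= s
    return size

def plan_cost(order, card, sel) -> float:
    """Cost of a left-deep plan = sum of the sizes of all its join results."""
    return sum(result_size(order[:k], card, sel) for k in range(2, len(order) + 1))

def exhaustive_plan(card, sel):
    """Exhaustive search: enumerate every join order and keep the cheapest."""
    return min((plan_cost(order, card, sel), order) for order in permutations(sorted(card)))

def dp_plan(card, sel):
    """Dynamic programming: the best plan for a set S is the best plan for
    S - {r} followed by a join with r, for the best choice of r."""
    rels = sorted(card)
    best: Dict[FrozenSet[str], Tuple[float, Tuple[str, ...]]] = {
        frozenset([r]): (0.0, (r,)) for r in rels}
    for k in range(2, len(rels) + 1):
        for subset in combinations(rels, k):
            s = frozenset(subset)
            size = result_size(s, card, sel)
            best[s] = min((best[s - {r}][0] + size, best[s - {r}][1] + (r,))
                          for r in subset)
    return best[frozenset(rels)]

# Hypothetical statistics: PROJECT is an extra relation added for illustration.
card = {"EMPLOYEE": 1000, "DEPARTMENT": 50, "PROJECT": 200}
sel = {("EMPLOYEE", "DEPARTMENT"): 1 / 50, ("EMPLOYEE", "PROJECT"): 1 / 200}

cost_dp, order_dp = dp_plan(card, sel)
cost_ex, order_ex = exhaustive_plan(card, sel)
print(order_dp, round(cost_dp))   # cost 2000
print(order_ex, round(cost_ex))   # cost 2000
```

Both functions should report the same minimum cost. The chosen order never begins with the DEPARTMENT × PROJECT cross product, whose estimated 10,000-row intermediate result would cost far more.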

Heuristic Based Optimization

Heuristic based optimization uses rule-based approaches for query optimization. These algorithms have polynomial time and space complexity, which is lower than the exponential complexity of exhaustive search-based algorithms. However, these algorithms do not necessarily produce the best query plan.

Some of the common heuristic rules are −

• Perform select and project operations before join operations. This is done by moving the select and project operations down the query tree. This reduces the number of tuples available for the join.

• Perform the most restrictive select/project operations first, before the other operations.

• Avoid cross-product operations, since they result in very large intermediate tables.
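The effect of the first heuristic (push selections below joins) can be measured on a toy version of Example 2. The data is generated and hypothetical. The "work" of each plan is approximated by the number of tuple pairs a nested-loop join compares.

```python
# Hypothetical data: 20 employees spread over 4 departments (DeptNo = i % 4).
EMPLOYEE = [{"EmpID": i, "EName": f"E{i}", "Salary": 30000 + 1000 * i, "DeptNo": i % 4}
            for i in range(1, 21)]
DEPARTMENT = [{"DNo": d, "DName": n}
              for d, n in enumerate(["Marketing", "Sales", "Finance", "HR"])]

def join(left, right, lcol, rcol, stats):
    """Nested-loop join; stats['pairs'] counts the tuple pairs compared."""
    stats["pairs"] += len(left) * len(right)
    return [{**l, **r} for l in left for r in right if l[lcol] == r[rcol]]

def project(rows, cols):
    """Projection with duplicates removed, returned in sorted order."""
    return sorted({tuple(r[c] for c in cols) for r in rows})

def is_marketing(row):
    return row["DName"] == "Marketing"

# Plan A: join first, select afterwards.
stats_a = {"pairs": 0}
joined = join(DEPARTMENT, EMPLOYEE, "DNo", "DeptNo", stats_a)
plan_a = project([r for r in joined if is_marketing(r)], ["EName", "Salary"])

# Plan B (heuristic): push the selection below the join.
stats_b = {"pairs": 0}
marketing = [d for d in DEPARTMENT if is_marketing(d)]
plan_b = project(join(marketing, EMPLOYEE, "DNo", "DeptNo", stats_b), ["EName", "Salary"])

print(plan_a == plan_b)                      # True: same answer
print(stats_a["pairs"], stats_b["pairs"])    # 80 20
```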

10.b) What is schedule and when it is called conflict serializable?

Ans: A schedule is a sequence of the operations of a set of concurrent transactions, in which the operations of each individual transaction appear in the same order as in that transaction.

A schedule is called conflict serializable if it can be transformed into a serial schedule by swapping adjacent non-conflicting operations.

Conflicting operations: Two operations are said to be conflicting if all of the following conditions hold:

• They belong to different transactions.

• They operate on the same data item.

• At least one of them is a write operation.

10.c) Describe briefly the process how one can test whether a non-serial-schedule is conflict serializable or not.

Ans: Consider the following schedule:

S1: R1(A), W1(A), R2(A), W2(A), R1(B), W1(B), R2(B), W2(B)

If Oi and Oj are two operations in a transaction and Oi < Oj (Oi is executed before Oj), the same order is followed in the schedule as well. Using this property, we can extract the two transactions of schedule S1:

T1: R1(A), W1(A), R1(B), W1(B)

T2: R2(A), W2(A), R2(B), W2(B)

Possible serial schedules are: T1->T2 or T2->T1

-> R1(B) works on item B, so it conflicts with neither R2(A) nor W2(A). Swapping it with these adjacent operations moves it ahead of them, and the schedule becomes,

S11: R1(A), W1(A), R1(B), R2(A), W2(A), W1(B), R2(B), W2(B)

-> Similarly, W1(B) conflicts with neither W2(A) nor R2(A). Swapping it past them in S11, the schedule becomes,

S12: R1(A), W1(A), R1(B), W1(B), R2(A), W2(A), R2(B), W2(B)

S12 is a serial schedule in which all operations of T1 are performed before any operation of T2 starts. S1 has been transformed into the serial schedule S12 by swapping only non-conflicting operations, so S1 is conflict serializable.

Let us take another schedule:

S2: R2(A), W2(A), R1(A), W1(A), R1(B), W1(B), R2(B), W2(B)

The two transactions will be:

T1: R1(A), W1(A), R1(B), W1(B)

T2: R2(A), W2(A), R2(B), W2(B)

Possible serial schedules are: T1->T2 or T2->T1

In S2, W2(A) comes before R1(A). These two operations conflict (same item A, different transactions, one of them is a write), so they can never be swapped. Therefore T2 must precede T1 in any equivalent serial schedule. On the other hand, W1(B) comes before R2(B). These also conflict, so T1 must precede T2. Both requirements cannot hold at once, so neither T1->T2 nor T2->T1 can be reached by swapping non-conflicting operations. Hence S2 is not conflict serializable.
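Swapping operations by hand works for short schedules, but the usual systematic test is the precedence (serialization) graph. Add an edge Ti → Tj whenever an operation of Ti conflicts with a later operation of Tj. The schedule is conflict serializable exactly when this graph has no cycle, and a topological order of the graph gives an equivalent serial schedule. The sketch below parses the R1(A)/W2(B) notation used above (the parser is an assumption about that notation) and applies the test to S1 and S2.

```python
from typing import List, Optional, Set, Tuple

Op = Tuple[str, int, str]   # (kind 'R' or 'W', transaction number, data item)

def parse(schedule: str) -> List[Op]:
    """Turn "R1(A), W1(A), ..." into [('R', 1, 'A'), ('W', 1, 'A'), ...]."""
    ops = []
    for token in schedule.split(","):
        token = token.strip()
        open_paren = token.index("(")
        ops.append((token[0], int(token[1:open_paren]), token[open_paren + 1:-1]))
    return ops

def conflict(a: Op, b: Op) -> bool:
    """Different transactions, same data item, at least one write."""
    return a[1] != b[1] and a[2] == b[2] and "W" in (a[0], b[0])

def precedence_edges(ops: List[Op]) -> Set[Tuple[int, int]]:
    """Edge Ti -> Tj when an operation of Ti conflicts with a later one of Tj."""
    return {(ops[i][1], ops[j][1])
            for i in range(len(ops)) for j in range(i + 1, len(ops))
            if conflict(ops[i], ops[j])}

def equivalent_serial_order(ops: List[Op]) -> Optional[List[int]]:
    """Topologically sort the precedence graph (Kahn's algorithm).
    None means the graph has a cycle, i.e. not conflict serializable."""
    txns = sorted({op[1] for op in ops})
    edges = precedence_edges(ops)
    indegree = {t: 0 for t in txns}
    for _, target in edges:
        indegree[target] += 1
    ready = [t for t in txns if indegree[t] == 0]
    order = []
    while ready:
        t = ready.pop(0)
        order.append(t)
        for source, target in sorted(edges):
            if source == t:
                indegree[target] -= 1
                if indegree[target] == 0:
                    ready.append(target)
    return order if len(order) == len(txns) else None

S1 = "R1(A), W1(A), R2(A), W2(A), R1(B), W1(B), R2(B), W2(B)"
S2 = "R2(A), W2(A), R1(A), W1(A), R1(B), W1(B), R2(B), W2(B)"
print(sorted(precedence_edges(parse(S1))), equivalent_serial_order(parse(S1)))  # [(1, 2)] [1, 2]
print(sorted(precedence_edges(parse(S2))), equivalent_serial_order(parse(S2)))  # [(1, 2), (2, 1)] None
```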

11. Short Notes.

a) Distributed Failures:

Designing a reliable system that can recover from failures requires identifying the types of failures with which the system has to deal. In a distributed database system, we need to deal with four types of failures: transaction failures (aborts), site (system) failures, media (disk) failures, and communication line failures. Some of these are due to hardware and others are due to software.

1. Transaction Failures: Transactions can fail for a number of reasons. Failure can be due to an error in the transaction caused by incorrect input data, or to the detection of a present or potential deadlock. Furthermore, some concurrency control algorithms do not permit a transaction to proceed, or even to wait, if the data that it attempts to access are currently being accessed by another transaction. This might also be considered a failure. The usual approach in cases of transaction failure is to abort the transaction, thus resetting the database to its state prior to the start of this transaction.

2. Site (System) Failures: The reasons for system failure can be traced back to a hardware or to a software failure. A system failure is always assumed to result in the loss of main memory contents. Therefore, any part of the database that was in main memory buffers is lost as a result of a system failure. However, the database that is stored in secondary storage is assumed to be safe and correct. In distributed database terminology, system failures are typically referred to as site failures, since they result in the failed site being unreachable from other sites in the distributed system. We typically differentiate between partial and total failures in a distributed system. Total failure refers to the simultaneous failure of all sites in the distributed system; partial failure indicates the failure of only some sites while the others remain operational.

3. Media Failures: Media failure refers to the failure of the secondary storage devices that store the database. Such failures may be due to operating system errors, as well as to hardware faults such as head crashes or controller failures. The important point is that all or part of the database that is on the secondary storage is considered to be destroyed and inaccessible. Duplexing of disk storage and maintaining archival copies of the database are common techniques that deal with this sort of catastrophic problem. Media failures are frequently treated as problems local to one site and therefore not specifically addressed in the reliability mechanisms of distributed DBMSs.

4. Communication Failures: There are a number of types of communication failures. The most common ones are errors in the messages, improperly ordered messages, lost messages, and communication line failures. The first two errors are the responsibility of the computer network; we will not consider them further. Therefore, in our discussions of distributed DBMS reliability, we expect the underlying computer network hardware and software to ensure that two messages sent from a process at some originating site to another process at some destination site are delivered without error and in the order in which they were sent. Lost or undeliverable messages are typically the consequence of communication line failures or (destination) site failures. If a communication line fails, in addition to losing the message(s) in transit, it may also divide the network into two or more disjoint groups. This is called network partitioning. If the network is partitioned, the sites in each partition may continue to operate. In this case, executing transactions that access data stored in multiple partitions becomes a major issue.

b) OODBMS and ORDBMS:

OODBMS

The object-oriented database system is an extension of an object-oriented programming language that includes DBMS functions such as persistent objects, integrity constraints, failure recovery, transaction management, and query processing. These systems feature an object description language (ODL) for database structure creation and an object query language (OQL) for database querying. Some examples of OODBMS are ObjectStore, Objectivity/DB, GemStone, db4o, Giga Base, and Zope object database.

ORDBMS

An object-relational database system is a relational database system that has been extended to incorporate object-oriented characteristics. Database schemas and the query language natively support objects, classes, and inheritance. Furthermore, it permits data model expansion with new data types and procedures, exactly like pure relational systems.

Oracle, DB2, Informix, PostgreSQL (UC Berkeley research project), etc. are some of the ORDBMSs.

Difference between OODBMS and ORDBMS:

| OODBMS | ORDBMS |
|---|---|
| It stands for Object Oriented Database Management System. | It stands for Object Relational Database Management System. |
| Object-oriented databases, like object-oriented programming, represent data in the form of objects and classes. | An object-relational database is one that is based on both the relational and object-oriented database models. |
| OODBMSs support ODL/OQL. | ORDBMS adds object-oriented functionalities to SQL. |
| Every object-oriented system has a different set of constraints that it can accommodate. | Keys, entity integrity, and referential integrity are constraints of an object-relational database. |
| The efficiency of query processing is low. | Processing of queries is quite effective. |

2015

2. Why is BCNF stronger than 3NF? “All candidate key(s) is / are super key(s), but all super key(s) is / are not candidate key(s)” - justify.

Ans:

1. BCNF (Boyce-Codd Normal Form) is a normal form used in database normalization.

2. 3NF is the third normal form used in database normalization.

3. BCNF is stricter than 3NF because every relation in BCNF is also in 3NF, but not every relation in 3NF is in BCNF.

4. BCNF requires that, for every non-trivial functional dependency X → Y, X is a superkey. 3NF relaxes this: X → Y is also allowed when every attribute of Y − X is a prime attribute (part of some candidate key).

5. Hence BCNF is stricter than 3NF.

All candidate key(s) is / are super key(s), but all super key(s) is / are not candidate key(s)

• A super key is a single attribute or a group of attributes that can uniquely identify tuples in a table.

• Super keys can contain redundant attributes that are not needed for identifying tuples.

• Super keys are a superset of candidate keys.

• Candidate keys are a subset of super keys. A candidate key is a minimal super key: it contains only those attributes which are required to identify tuples uniquely, so removing any attribute from it destroys uniqueness.

• All candidate keys are super keys, but the converse is not true: adding any extra attribute to a candidate key gives a super key that is not a candidate key.
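Both parts of the answer can be checked mechanically with attribute closures. The sketch below uses a hypothetical schema (Student, Course, Teacher) with {Student, Course} → Teacher and Teacher → Course. It has three superkeys but only two candidate keys. It is in 3NF but not in BCNF, because Teacher is not a superkey while Course is a prime attribute. Testing only the given FDs, rather than their full closure, is enough here because the whole schema is being tested.

```python
from itertools import combinations
from typing import Iterable, List, Set, Tuple

FD = Tuple[Tuple[str, ...], Tuple[str, ...]]   # (left side, right side)

def closure(attrs: Iterable[str], fds: List[FD]) -> Set[str]:
    """Attribute closure X+: every attribute that X determines under the FDs."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if set(lhs) <= result and not set(rhs) <= result:
                result |= set(rhs)
                changed = True
    return result

def superkeys(schema: Set[str], fds: List[FD]) -> List[Set[str]]:
    """All attribute sets whose closure is the whole schema."""
    return [set(c) for k in range(1, len(schema) + 1)
            for c in combinations(sorted(schema), k)
            if closure(c, fds) >= schema]

def candidate_keys(schema, fds):
    """Minimal superkeys: no proper subset is also a superkey."""
    sks = superkeys(schema, fds)
    return [k for k in sks if not any(other < k for other in sks)]

def is_bcnf(schema, fds) -> bool:
    # Every non-trivial X -> Y must have a superkey X.
    return all(set(rhs) <= set(lhs) or closure(lhs, fds) >= schema for lhs, rhs in fds)

def is_3nf(schema, fds) -> bool:
    # As BCNF, but X -> Y is also allowed when every attribute of Y - X is prime.
    prime = set().union(*candidate_keys(schema, fds))
    return all(set(rhs) <= set(lhs) or closure(lhs, fds) >= schema
               or (set(rhs) - set(lhs)) <= prime for lhs, rhs in fds)

schema = {"Student", "Course", "Teacher"}
fds = [(("Student", "Course"), ("Teacher",)), (("Teacher",), ("Course",))]

print(len(superkeys(schema, fds)))                              # 3
print(sorted(sorted(k) for k in candidate_keys(schema, fds)))   # [['Course', 'Student'], ['Student', 'Teacher']]
print(is_bcnf(schema, fds), is_3nf(schema, fds))                # False True
```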

3. What is functional dependency? Explain with an example.

Ans: A functional dependency X → Y between two sets of attributes X and Y of a relation means that the value of X determines the value of Y: any two tuples that agree on X must also agree on Y. With the help of functional dependencies, the quality of the data in the database can be maintained.

The symbol for representing functional dependency is -> (arrow).

Example of Functional Dependency

Consider the following table.

| Employee Number | Name | City | Salary |
|---|---|---|---|
| 1 | bob | Bangalore | 25000 |
| 2 | Lucky | Delhi | 40000 |

The name, salary and city of an employee are determined by the Employee Number (the id of the employee). So, the City, Salary and Name attributes are functionally dependent on the attribute Employee Number.

Example

SSN->ENAME is read as "ENAME is functionally dependent on SSN", or "SSN determines ENAME".

PNUMBER->{PNAME,PLOCATION} (PNUMBER determines PNAME and PLOCATION)

{SSN,PNUMBER}->HOURS (SSN and PNUMBER combined determine HOURS)
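A functional dependency is a statement about every legal state of the relation, so a single table can refute it but never prove it. The sketch below checks a dependency against one instance of the Employee table. The third row is hypothetical; it is added so that City → Salary is visibly violated.

```python
from typing import Dict, List, Sequence

Row = Dict[str, object]

def fd_holds(rows: List[Row], lhs: Sequence[str], rhs: Sequence[str]) -> bool:
    """True if no two rows agree on `lhs` but differ on `rhs` in this instance."""
    seen = {}
    for row in rows:
        key = tuple(row[a] for a in lhs)
        value = tuple(row[a] for a in rhs)
        if seen.setdefault(key, value) != value:   # same X, different Y
            return False
    return True

employees = [
    {"Employee Number": 1, "Name": "bob", "City": "Bangalore", "Salary": 25000},
    {"Employee Number": 2, "Name": "Lucky", "City": "Delhi", "Salary": 40000},
    {"Employee Number": 3, "Name": "Ravi", "City": "Delhi", "Salary": 30000},  # hypothetical row
]

print(fd_holds(employees, ["Employee Number"], ["Name", "City", "Salary"]))  # True
print(fd_holds(employees, ["City"], ["Salary"]))                              # False
```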

8.a) What are the criteria to be satisfied during fragmentation?

Ans: Fragmentation is the process of dividing a whole database into various sub-tables or sub-relations so that the data can be stored on different systems. The small pieces of sub-relations or sub-tables are called fragments. These fragments are logical data units and are stored at various sites. It must be made sure that the fragments can be used to reconstruct the original relation (i.e., there isn't any loss of data).

In the fragmentation process, suppose a table T is divided into a number of fragments T1, T2, T3, …, TN. The fragments must contain sufficient information to allow the restoration of the original table T. This restoration can be done by using UNION (for horizontal fragments) or JOIN (for vertical fragments) operations on the fragments. The fragments should also be independent, which means that one fragment cannot be derived from the others. Finally, users need not be logically concerned about fragmentation, i.e., they should not have to know that the data is fragmented; this is called fragmentation independence or fragmentation transparency.

In short, a fragmentation must satisfy three criteria:

• Completeness: every data item of T appears in at least one fragment.

• Reconstruction: T can be rebuilt from its fragments with relational operations (UNION or JOIN).

• Disjointness: no data item appears in more than one fragment (for vertical fragments, apart from the primary key columns repeated in each fragment).
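For horizontal fragments stored as sets of tuples, the three criteria can be checked directly. The STUDENT tuples and the fragmenting predicates below are hypothetical. Vertical fragments would need a JOIN-based check instead.

```python
from typing import Iterable, List, Set, Tuple

Row = Tuple   # one tuple of the relation

def is_complete(table: Iterable[Row], fragments: List[Set[Row]]) -> bool:
    """Completeness: every tuple of T appears in some fragment."""
    return set(table) <= set().union(*fragments)

def reconstructs(table: Iterable[Row], fragments: List[Set[Row]]) -> bool:
    """Reconstruction: the UNION of the horizontal fragments is exactly T."""
    return set().union(*fragments) == set(table)

def is_disjoint(fragments: List[Set[Row]]) -> bool:
    """Disjointness: no tuple is stored in two fragments."""
    seen: Set[Row] = set()
    for fragment in fragments:
        if seen & fragment:
            return False
        seen |= fragment
    return True

STUDENT = {(1, "Asha", "Computer Science"), (2, "Ravi", "Electronics"),
           (3, "Meena", "Computer Science"), (4, "Kiran", "Mechanical")}

def fragment_by(predicate):
    return {t for t in STUDENT if predicate(t)}

good = [fragment_by(lambda t: t[2] == "Computer Science"),
        fragment_by(lambda t: t[2] != "Computer Science")]
overlapping = [fragment_by(lambda t: t[2] == "Computer Science"),
               fragment_by(lambda t: t[0] >= 2)]          # tuple 3 lands in both
incomplete = [fragment_by(lambda t: t[2] == "Computer Science"),
              fragment_by(lambda t: t[2] == "Electronics")]  # tuple 4 is lost

for name, frags in [("good", good), ("overlapping", overlapping), ("incomplete", incomplete)]:
    print(name, is_complete(STUDENT, frags), reconstructs(STUDENT, frags), is_disjoint(frags))
# good True True True
# overlapping True True False
# incomplete False False True
```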

8.b) Describe how replication affects the implementation of distributed database.

Ans: Distributed database replication is the process of creating and maintaining multiple copies (redundancy) of data at different sites. Its main benefit is that read requests can be answered from a nearby copy, which makes retrieval faster. It also removes single points of failure: if one site fails to serve user requests, another site holding a copy can serve them. Replication therefore provides a fault-tolerant system.

However, replication also has some disadvantages. To ensure accurate and correct responses to user queries, the copies must be kept updated and synchronized at all times, which adds work to every update. Failure to do so creates inconsistencies in the data, which can hamper business goals and decisions for other teams.
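Here is a toy model of the trade-off described above. A read at another site is fast and still works when one site fails, but it can return stale data until the update is propagated. The class, the site names and the explicit synchronize() step are all hypothetical simplifications. Real systems propagate updates either synchronously (for example with two-phase commit) or asynchronously in the background.

```python
class ReplicatedStore:
    """Toy model: every site holds a full copy of the data. A write is applied
    at one site and reaches the others only when synchronize() is called."""

    def __init__(self, sites):
        self.copies = {site: {} for site in sites}
        self.pending = []          # (origin site, key, value) not yet propagated
        self.down = set()          # sites that have failed

    def write(self, site, key, value):
        self.copies[site][key] = value
        self.pending.append((site, key, value))

    def read(self, site, key):
        if site in self.down:
            raise ConnectionError(f"site {site} is unavailable")
        return self.copies[site].get(key)

    def synchronize(self):
        """Push every pending update to all the other copies."""
        for origin, key, value in self.pending:
            for site, copy in self.copies.items():
                if site != origin:
                    copy[key] = value
        self.pending.clear()

store = ReplicatedStore(["Delhi", "Mumbai"])
store.write("Delhi", "balance:42", 500)
stale = store.read("Mumbai", "balance:42")      # None: update not yet propagated
store.synchronize()
fresh = store.read("Mumbai", "balance:42")      # 500
store.down.add("Delhi")                          # the Delhi site fails...
survivor = store.read("Mumbai", "balance:42")   # ...but Mumbai still answers: 500
print(stale, fresh, survivor)                    # None 500 500
```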

2015 – 16 (9.a)

Give an example of a relation schema R and a set of dependencies such that R is in BCNF, but is not in 4NF.

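Ans: Consider the relation schema R = (A, B, C) with the multivalued dependency

A →→ B

and no non-trivial functional dependencies. By the complementation rule, A →→ B also gives A →→ C. For example, take R = (Course, Teacher, Book), where each course has a set of teachers and a set of books that are independent of each other.

Since no non-trivial FD holds, the only candidate key is {A, B, C}, so R is trivially in BCNF. However, A →→ B is a non-trivial MVD whose left side A is not a superkey, so R is not in 4NF. Decomposing R into (A, B) and (A, C) gives relations in 4NF.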
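The example can be checked on a hypothetical instance of (Course, Teacher, Book). On this instance the MVD Course →→ Teacher holds, Course does not functionally determine Teacher, and splitting the table into (Course, Teacher) and (Course, Book) loses nothing. One instance illustrates the dependencies but cannot prove that they hold for every state of R.

```python
from itertools import product

# Hypothetical instance of R = (Course, Teacher, Book).
R = {
    ("DBMS", "Smith", "Korth"), ("DBMS", "Smith", "Navathe"),
    ("DBMS", "Jones", "Korth"), ("DBMS", "Jones", "Navathe"),
    ("OS", "Smith", "Galvin"),
}

def mvd_holds(rows):
    """Check A ->> B on R(A, B, C): for each A value, the (B, C) pairs must be
    every combination of that A's B values with its C values."""
    groups = {}
    for a, b, c in rows:
        groups.setdefault(a, set()).add((b, c))
    for pairs in groups.values():
        bs = {b for b, _ in pairs}
        cs = {c for _, c in pairs}
        if pairs != set(product(bs, cs)):
            return False
    return True

def fd_holds(rows, lhs, rhs):
    """Check the FD lhs -> rhs, with attributes given as tuple positions."""
    seen = {}
    for row in rows:
        key = tuple(row[i] for i in lhs)
        val = tuple(row[i] for i in rhs)
        if seen.setdefault(key, val) != val:
            return False
    return True

# 4NF decomposition into (A, B) and (A, C), followed by a natural join on A.
R1 = {(a, b) for a, b, _ in R}
R2 = {(a, c) for a, _, c in R}
rejoined = {(a, b, c) for a, b in R1 for a2, c in R2 if a == a2}

print(mvd_holds(R))            # True
print(fd_holds(R, [0], [1]))   # False: Course does not determine Teacher
print(rejoined == R)           # True: the decomposition is lossless
```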

2016 – 17 (5)

What is indexing and what are the different types of indexing?

Ans:

• Indexing is used to optimize the performance of a database by minimizing the number of disk accesses required when a query is processed.

• The index is a type of data structure. It is used to locate and access the data in a database table quickly.

• Indexes can be created using one or more database columns.

• The first column of the index is the search key, which contains a copy of the primary key or candidate key of the table. These values are stored in sorted order so that the corresponding data can be accessed easily.

• The second column of the index is the data reference. It contains a set of pointers holding the address of the disk block where the value of the particular key can be found.

Common types of indexing are primary indexing, clustering indexing and secondary indexing. A primary index may be dense (one index entry per search-key value) or sparse (one entry per block), and large indexes are often organised as multilevel indexes.
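The sketch below models the two-column index described above, in a dense and a sparse variant, over a data file sorted on the search key. The block size, records and keys are hypothetical. A lookup binary-searches the index for the last entry whose key is not greater than the search key, then reads only the block that entry points to.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

BLOCK_SIZE = 3                 # hypothetical number of records per disk block
Record = Tuple[int, str]       # (search key, rest of the record)
Entry = Tuple[int, int]        # (search key, block number) = (key, data reference)

def make_blocks(records: List[Record]) -> List[List[Record]]:
    """Store the file sorted on the search key, BLOCK_SIZE records per block."""
    records = sorted(records)
    return [records[i:i + BLOCK_SIZE] for i in range(0, len(records), BLOCK_SIZE)]

def dense_index(blocks: List[List[Record]]) -> List[Entry]:
    """One index entry for every search-key value."""
    return [(key, b) for b, block in enumerate(blocks) for key, _ in block]

def sparse_index(blocks: List[List[Record]]) -> List[Entry]:
    """One index entry per block: the first key stored in that block."""
    return [(block[0][0], b) for b, block in enumerate(blocks)]

def lookup(index: List[Entry], blocks: List[List[Record]], key: int) -> Optional[str]:
    keys = [k for k, _ in index]
    pos = bisect_right(keys, key) - 1          # last entry whose key <= search key
    if pos < 0:
        return None
    for k, value in blocks[index[pos][1]]:     # read only the block the pointer leads to
        if k == key:
            return value
    return None

records = [(5, "Ravi"), (1, "Asha"), (9, "Kiran"), (3, "Meena"),
           (7, "Zoya"), (2, "Om"), (8, "Lina")]
blocks = make_blocks(records)
dense, sparse = dense_index(blocks), sparse_index(blocks)

print(len(dense), len(sparse))                                  # 7 3
print(lookup(dense, blocks, 7), lookup(sparse, blocks, 7))      # Zoya Zoya
print(lookup(sparse, blocks, 4))                                # None
```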

(Extra)

Recovery in DBMS.

Crash Recovery

DBMS is a highly complex system with hundreds of transactions being executed every second. The durability and robustness of a DBMS depend on its complex architecture and its underlying hardware and system software. If it fails or crashes amid transactions, the system is expected to follow some algorithm or technique to recover the lost data.

Failure Classification

To see where the problem has occurred, we generalize a failure into various categories, as follows −

Transaction failure

A transaction has to abort when it fails to execute or when it reaches a point from where it can't go any further. This is called transaction failure, where only a few transactions or processes are affected.

Reasons for a transaction failure could be −

• Logical errors − Where a transaction cannot complete because it has some code error or any internal error condition.

• System errors − Where the database system itself terminates an active transaction because the DBMS is not able to execute it, or it has to stop because of some system condition. For example, in case of deadlock or resource unavailability, the system aborts an active transaction.

System Crash

There are problems − external to the system − that may cause the system to stop abruptly and crash. For example, interruptions in the power supply may cause the failure of the underlying hardware or software. Other examples include operating system errors.

Disk Failure

In the early days of technology evolution, it was a common problem that hard-disk drives or storage drives failed frequently.

Disk failures include the formation of bad sectors, unreachability of the disk, a disk head crash, or any other failure that destroys all or part of the disk storage.

Storage Structure

We have already described the storage system. In brief, the storage structure can be divided into two categories −

• Volatile storage − As the name suggests, volatile storage cannot survive system crashes. Volatile storage devices are placed very close to the CPU; main memory and cache memory are examples of volatile storage. They are fast but can store only a small amount of information.

• Non-volatile storage − These memories are made to survive system crashes. They have a huge data storage capacity but are slower to access. Examples include hard disks, magnetic tapes, flash memory, and non-volatile (battery-backed) RAM.

Recovery and Atomicity

When a system crashes, it may have several transactions being executed and various files opened for them to modify the data items. Transactions are made of various operations, which are atomic in nature. But according to the ACID properties of DBMS, atomicity of transactions as a whole must be maintained, that is, either all the operations are executed or none.

When a DBMS recovers from a crash, it should maintain the following −

• It should check the states of all the transactions that were being executed.

• A transaction may be in the middle of some operation; the DBMS must ensure the atomicity of the transaction in this case.

• It should check whether the transaction can be completed now or needs to be rolled back.

• No transaction should be allowed to leave the DBMS in an inconsistent state.

There are two types of techniques that can help a DBMS recover while maintaining the atomicity of a transaction −

• Maintaining the logs of each transaction, and writing them onto some stable storage before actually modifying the database.

• Shadow paging, where the changes are written to fresh copies of the affected pages while the original (shadow) pages stay untouched; when the transaction commits, the database switches over to the updated pages.

Log-based Recovery

Log is a sequence of records, which maintains the records of actions performed by a transaction. It is important that the logs are written prior to the actual modification and stored on a stable, fail-safe storage medium.

Log-based recovery works as follows −

• The log file is kept on a stable storage medium.

• When a transaction enters the system and starts execution, it writes a log record about it:

<Tn, Start>

• When the transaction modifies an item X, it writes a log record as follows:

<Tn, X, V1, V2>

This records that Tn has changed the value of X from V1 to V2.

• When the transaction finishes, it logs:

<Tn, commit>

The database can be modified using two approaches −

• Deferred database modification − All log records are written to stable storage, and the database is updated only when the transaction commits.

• Immediate database modification − The database is modified immediately after every operation, each modification being made right after its log record has been written.

Recovery with Concurrent Transactions

When more than one transaction is being executed in parallel, the logs are interleaved. At the time of recovery, it would become hard for the recovery system to backtrack all logs and then start recovering. To ease this situation, most modern DBMSs use the concept of 'checkpoints'.

Checkpoint

Keeping and maintaining logs in real time and in a real environment may fill up all the memory space available in the system. As time passes, the log file may grow too big to be handled at all. A checkpoint is a mechanism where all the previous logs are removed from the system and stored permanently on a storage disk. A checkpoint declares a point before which the DBMS was in a consistent state and all the transactions were committed.

Recovery

When a system with concurrent transactions crashes and recovers, it behaves in the following manner −

• The recovery system reads the logs backwards from the end to the last checkpoint.

• It maintains two lists, an undo-list and a redo-list.

• If the recovery system sees a log with <Tn, Start> and <Tn, Commit> or just <Tn, Commit>, it puts the transaction in the redo-list.

• If the recovery system sees a log with <Tn, Start> but no commit or abort log is found, it puts the transaction in the undo-list.

All the transactions in the undo-list are then undone and their logs are removed. All the transactions in the redo-list are redone from their log records, and their logs are then saved.
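The recovery procedure above can be sketched over a list of log records. Several details are assumptions. The record layout is hypothetical: tuples such as ('update', T, X, old, new) stand for <T, X, V1, V2>. The checkpoint is assumed to be taken when no transaction is active, as the text describes. Aborted transactions are assumed to have been rolled back already. Undo runs backwards over the log and redo runs forwards.

```python
from typing import Dict, List, Tuple

def recover(log: List[tuple], db: Dict[str, int]) -> Tuple[set, set]:
    # 1. Only the part of the log after the last checkpoint is examined.
    start = 0
    for i in range(len(log) - 1, -1, -1):
        if log[i][0] == "checkpoint":
            start = i + 1
            break
    tail = log[start:]

    # 2. Read the log backwards and build the redo-list and undo-list.
    redo, undo, aborted = set(), set(), set()
    for record in reversed(tail):
        kind = record[0]
        if kind == "commit":
            redo.add(record[1])
        elif kind == "abort":
            aborted.add(record[1])          # assumed already rolled back
        elif kind == "start" and record[1] not in redo and record[1] not in aborted:
            undo.add(record[1])             # started, never committed or aborted

    # 3. Undo: scan backwards, restoring old values written by undo-list transactions.
    for record in reversed(tail):
        if record[0] == "update" and record[1] in undo:
            _, _, item, old, _ = record
            db[item] = old

    # 4. Redo: scan forwards, re-applying new values written by redo-list transactions.
    for record in tail:
        if record[0] == "update" and record[1] in redo:
            _, _, item, _, new = record
            db[item] = new
    return redo, undo

log = [
    ("start", "T0"), ("update", "T0", "A", 100, 90), ("commit", "T0"),
    ("checkpoint",),
    ("start", "T1"), ("update", "T1", "B", 200, 250),
    ("start", "T2"), ("update", "T2", "C", 300, 0),
    ("commit", "T1"),
]                                          # the system crashes here
db = {"A": 90, "B": 200, "C": 0}           # disk state: T1's write lost, T2's write present
redo, undo = recover(log, db)
print(redo, undo)   # {'T1'} {'T2'}
print(db)           # {'A': 90, 'B': 250, 'C': 300}
```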