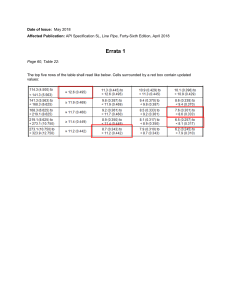

See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/344882855 Review on over-fitting and under-fitting problems in Machine Learning and solutions Article in International Journal of Advanced Research in Electrical Electronics and Instrumentation Engineering · September 2018 DOI: 10.15662/IJAREEIE.2018.0709015 CITATIONS READS 2 1,399 1 author: Swathi Pothuganti hindawistar 12 PUBLICATIONS 36 CITATIONS SEE PROFILE Some of the authors of this publication are also working on these related projects: machine learning View project All content following this page was uploaded by Swathi Pothuganti on 26 October 2020. The user has requested enhancement of the downloaded file. ISSN (Print) : 2320 – 3765 ISSN (Online): 2278 – 8875 International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering (A High Impact Factor, Monthly, Peer Reviewed Journal) Website: www.ijareeie.com Vol. 7, Issue 9, September 2018 Review on over-fitting and under-fitting problems in Machine Learning and solutions Swathi Pothuganti Lecturer, Computer science Engineering, Sreechaitanya degree college, Sathvahana University, Telangana, India ABSTRACT: Overfitting is a vital issue in supervised machine learning, which forestalls us from consummately summing up the models to very much fit watched information on preparing information, just as inconspicuous information on the testing set. In light of the presence of clamor, the restricted size of preparing the set, and the multifaceted nature of classifiers, overfitting occurs. This paper will discuss fitting from the points of view of causes and solutions.To reduce the impacts of overfitting, different procedures are proposed to deliver to these causes: (1) "early-stopping" system is acquainted with forestall overfitting by stopping preparing before the exhibition stops streamline; (2)"network-reduction" technique is utilized to prohibit the commotions in preparing set; (3) "dataexpansion" methodology is proposed for muddled models to calibrate the hyper-boundaries sets with many data; and (4) "regularization" technique is proposed to ensure models execution by and large while managing correct issues by highlight determination, and by separating more valuable and less helpful highlights. KEYWORDS: Overfitting, supervised machine learning, regularization, I. INTRODUCTION In supervised machine learning, there is an un-rerouting issue. The model does not sum up well from watched data to inconspicuous data, which is called overfitting. As a result of overfitting, the model performs consummately on the training set while fitting ineffectively on the testing set. This is because of that over-fitted model experiences issues adapting to bits of the data in the testing set, which might be unique about those in the training set. Then again, overfitted models will, in general, retain all the data, remembering unavoidable clamor for the training set, rather than learning the order taken cover behind the data[1].The causes of this phenomenon might be complicated. Generally, we can categorize them into three kinds: 1. Noise learning on the training set: when the training set is excessively small in size or has less agent information or such a large number of noises. This circumstance causes the noises to have extraordinary opportunities to be educated and later go about as a premise of predictions. 2. Hypothesiscomplexity: The compromise in unpredictability, a key idea in statistic and machining learning, is a tradeoff among Variance and Bias. It alludes to harmony among exactness and consistency. When the calculations have such a large number of the hypothesis (such a large number of data sources), the model becomes more exact on regular with lower consistency. Various correlations systems are omnipresent in induction algorithms, just as in other Artificial Intelligence (AI) algorithms. We generally look at numerous things dependent on scores from an assessment work and select the thing with the most significant score during these cycles. Notwithstanding, this cycle will likely pick a few things, which will not improve or even decrease classification accuracy [2].To decrease the impact of overfitting, numerous arrangements depend on various methodologies to restrain the various triggers. The more significant part of them performs ineffectively when managing correct issues in light of the hypothesis's great measure[3]. Nonetheless, none of the hypothesis sets can cover all the application fields. Copyright to IJAREEIE DOI:10.15662/IJAREEIE.2018.0709015 3692 ISSN (Print) : 2320 – 3765 ISSN (Online): 2278 – 8875 International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering (A High Impact Factor, Monthly, Peer Reviewed Journal) Website: www.ijareeie.com Vol. 7, Issue 9, September 2018 II. EARLY-STOPPING This system is utilized to keep away from the wonder "learning speed slow-down." This issue implies that the accuracy of algorithms quits improving after some point, or in any event, deteriorating due to commotion learning. The thought has a reasonably long history, which can be going back to the 1970s regarding the Landweber iteration. Likewise, it is generally utilized in iterative algorithms, particularly in neural networks beginning from the 1990s.Figure 1, where the horizontal axis is the epoch, and the vertical axis is a mistake, the blue line shows the training mistake, and the red line shows the approval blunder [4]. On the off chance that the model keeps learning after the point, the approval mistake will increment while the training blunder will keep diminishing. Fig 1:Validation error vs. testing error On the off chance that we quit learning before the fact, it is under-fitting. On the off chance that we stop after the point, we get over-fitting. So the aim is to locate the specific highlight of quit training. This way, we got an ideal fit between under-fitting and over-fitting[5]. For artificial neural nets, the learning cycle is to locate an ideal arrangement of loads and bias.The neurons learn at a rate controlled by the fractional subordinates of the cost-work: and So the speed of learning relies upon the estimations of those two incomplete subordinates, which can be separately portrayed with the accompanying recipes,where j is the th weight, is the bias, is the cost, jis the thinput, is the output, and is the output when data input is 1. = (1) To discover the highlight stop learning, the absolute path is to monitor accuracy on the test data as our network trains. In other words, we process the accuracy toward the finish of every age and stop training when the accuracy of test data stops improving.Essentially, to discover the highlight stop learning, the conspicuous path is to monitor accuracy on the test data as our network trains [6]. In other words, we process the accuracy toward the finish of every age and stop training when the accuracy of test data stops improving. By and large, we can follow the accuracy on the approval set rather than the test set to decide when to stop training. In other words, we use the approval set to sort out an ideal arrangement of qualities for the hyper-boundaries and later utilize the test set to do the last assessment of accuracy [7]. Network-reduction As we probably are aware, noise learning is one significant reason for overfitting. So sensibly, clamor decrease becomes one exploring course for over fittinghindrance [8]. In light of this reasoning, pruning is proposed to decrease the size of finial classifiers in social learning, particularly in choice tree learning. Pruning is a massive hypothesis used Copyright to IJAREEIE DOI:10.15662/IJAREEIE.2018.0709015 3693 ISSN (Print) : 2320 – 3765 ISSN (Online): 2278 – 8875 International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering (A High Impact Factor, Monthly, Peer Reviewed Journal) Website: www.ijareeie.com Vol. 7, Issue 9, September 2018 to diminish classification intricacy by taking out less critical or insignificant data, forestall overfitting, and improving classification accuracy. Pre-pruning and post-pruning are two standard methodologies used to managing commotion: Pre-pruning algorithms work during the learning cycle [9]. Ordinarily, they use stopping standards from deciding when to stop adding conditions to a standard or adding the rule to a model portrayal, for example[10], encoding length limitation dependent on the assessment of encoding cost; importance testing depends on huge contrasts between the conveyance of positive and negative models; cutoff stopping measure dependent on a predefined threshold [11]. Postpruning parts the training set into two subsets: developing set and pruning set. Contrasted with pre-learning, postpruning algorithms overlook overfitting issues during the learning cycle on developing set. Instead, they forestall overfitting through erasing conditions and rules from the model created during learning. III. EXPANSION OF THE TRAINING DATA In machine learning, the algorithm is not the primary key influencing the last classification accuracy. Its presentation can be essentially influenced by the amount and nature of the training dataset as a rule, particularly in the region of managed learning. Model training is a cycle of tuning its hyper-boundary. Very much tuned boundaries make a decent harmony between training accuracy and routineness, and afterward restrain the impact of overfitting, just as that of under-fitting [12]. To tune these boundaries, the model needs good examples for learning. The measure of tests is corresponding to the number of boundaries. Furthermore, the more convoluted model you have, the more boundaries should be tuned. In other words, an extended dataset can improve expectation accuracy by and large, particularly in convoluted models. That is why data expansion is broadly utilized and compelling as an overall procedure to improve models' speculation execution in numerous application zones, such as design acknowledgment and picture handling. Be that as it may, the enormous size of data will expand training time [13]. Moreover, training data can be costly or hard to obtain because it needs human intervention loads, such as labeling. Regularization To sort out the base of cost work, L1 regularization utilizes the Lasso Regression, one straight regression hypothesis. In this methodology, we utilize the supposed taxi separation, the entirety of supreme estimations of the apparent multitude of loads, as the term. (2) To limit the cost work, we have to set loads of specific highlights to be zero [14]. In other words, we eliminate a few highlights from our model and keep just those highlights more critical. Dropout Dropout is a mainstream and compelling technique against overfitting in neural networks. The underlying thought of dropout is to arbitrarily drop units and relevant associations from the neural networks during training. This keeps units from co-adjusting excessively. By briefly eliminating a few units from neural nets, dropout approximates the impact of averaging all these diminished networksexpectations [15]. This way, overfitting is restrained to a few degrees while consolidating the forecasts of various enormous neural nets at test time. Plus, dropout diminishes the measure of calculations altogether. This settles on it a viable decision for enormous or convoluted networks that need heaps of training data. IV. CONCLUSION Overfitting is a general issue in supervised machine learning, which cannot be dodged. It happens due to either the restrictions of training data, which can have a restricted measure or incorporate many commotions, or the constraints of algorithms, which are excessively confounded, and require such a large number of boundaries. Reacting to these causes, an assortment of algorithms is acquainted with diminishing the impact of overfitting. From one viewpoint, to Copyright to IJAREEIE DOI:10.15662/IJAREEIE.2018.0709015 3694 ISSN (Print) : 2320 – 3765 ISSN (Online): 2278 – 8875 International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering (A High Impact Factor, Monthly, Peer Reviewed Journal) Website: www.ijareeie.com Vol. 7, Issue 9, September 2018 manage commotions in the training set, algorithms dependent on the "early-stopping" technique help us to stop training before learning clamors; also, algorithms dependent on the "decrease the size of network" system give us a way to deal with lessen commotions in the training set. Then again, the "data-development" procedure is proposed for confounded models that require copious data to calibrate their hyper-boundaries. Additionally, "Regularization"- based algorithms help us recognize commotions, which means and futile highlights and allot various loads to them. To manage genuine issues, most models are convoluted, because by and large, thefinal output can be influenced by many or even several variables. A very much summed up model willbe inclined to contemplate the apparent multitude of expected highlights rather than subjectively disposing of the pointless like ones. The expansion of boundaries requests many training data to tune the hyper-boundaries set, for example, the loads. In this manner, data turns into a central issue in machine learning, mainly supervised machine learning. REFERENCES [1]K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition,” in IEEE Conf. Comput. Vis. Pattern Recognit. IEEE, 2016, pp. 770–778. [Online]. Available: https://doi.org/10.1109/CVPR.2016.90 [2]Ankit Narendrakumar Soni (2018). Image Segmentation Using Simultaneous Localization and Mapping Algorithm. International Journal of Scientific Research and Engineering Development, 1(2), 279-282. [3]Vishal Dineshkumar Soni. (2018). ROLE OF AI IN INDUSTRY IN EMERGENCY SERVICES. International Engineering Journal For Research & Development, 3(2), 6. https://doi.org/10.17605/OSF.IO/C67BM [4]C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper with convolutions,,” in Conference on Computer Vision and Pattern Recognition (CVPR), vol. 2015. IEEE, 2015, pp. 1–9. [5]Ketulkumar Govindbhai Chaudhari. (2018). Analysis on Transport Layer Protocols and Its Developments.International Journal of Innovative Research in Computer and Communication Engineering, 6(9), 7707-7712. DOI: 10.15680/IJIRCCE.2018. 0609046. [6]Ankit Narendrakumar Soni (2018). Data Center Monitoring using an Improved Faster Regional Convolutional Neural Network. International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering, 7(4), 1849-1853. doi:10.15662/IJAREEIE.2018.0704058. [7]Karunakar Pothuganti, Aredo Haile, Swathi Pothuganti,(2016)” A Comparative Study of Real Time Operating Systems for Embedded Systems” International Journal of Innovative Research in Computer and Communication Engineering, Vol. 4, Issue 6, June 2016. [8]Ketulkumar Govindbhai Chaudhari. (2018). Comparitive Analysis of CNN Models To Diagnose Breast Cancer. International Journal of Innovative Research in Science, Engineering and Technology, 7(10), 8180-8187. DOI:10.15680/IJIRSET.2018.0710074. [9]P. Luo, X. Wang, W. Shao, and Z. Peng, “Understanding Regularization in Batch Normalization,,”arXiv, 2018. [Online].Available:http://export.arxiv.org/abs/1809.00846 [10]Vishal Dineshkumar Soni (2018). Prediction of Stock Market Values using Artificial Intelligence. International Journal of Advanced Research in Electrical Electronics and Instrumentation Engineering, 7(4), 1844-1848. doi:10.15662/IJAREEIE.2018.0704057. [11]Y. Bengio, P. Simard, and P. Frasconi, “Learning long-term dependencies with gradient descent is difficult,” IEEE Transactions on Neural Networks, vol. 5, no. 2, pp. 157–166, 1994. [Online]. Available: https://doi.org/10.1109/72.279181 [12]Ankit Narendrakumar Soni (2018). Application and Analysis of Transfer Learning-Survey. International Journal of Scientific Research and Engineering Development, 1(2), 272-278. [13]T. N. Sainath, B. Kingsbury, G. Saon, H. Soltau, A. Mohamed, G. Dahl, and B. Ramabhadran, “Deep Convolutional Neural Networks for Large-scale Speech Tasks,” Neural Networks, vol. 64, pp. 39–48, 2015. [Online]. Available: https://doi.org/10.1016/J.NEUNET.2014.08.005 [14]Vishal Dineshkumar Soni .(2018). Internet of Things based Energy Efficient Home Automation System. International Journal of Innovative Research in Science Engineering and Technology, 7(3), 2924-2929. doi:10.15680/IJIRSET.2018.0703148. [15]Ketulkumar Govindbhai Chaudhari. (2018). E-voting System using Proof of Voting (PoV) Consensus Algorithm using Block Chain Technology. International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering, 7(11), 4051-4055. DOI:10.15662/IJAREEIE.2018.0711019. Copyright to IJAREEIE View publication stats DOI:10.15662/IJAREEIE.2018.0709015 3695