Gradient Descent

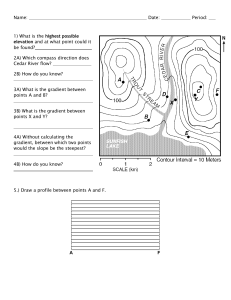

Review: Gradient Descent

• In step 3, we have to solve the following optimization

problem:

𝜃 ∗ = arg min 𝐿 𝜃

𝜃

L: loss function 𝜃: parameters

Suppose that θ has two variables {θ1, θ2}

0

𝜃

𝜕𝐿 𝜃1 Τ𝜕𝜃1

Randomly start at 𝜃 0 = 10

𝛻𝐿 𝜃 =

𝜃2

𝜕𝐿 𝜃2 Τ𝜕𝜃2

𝜃11

𝜃10

𝜕𝐿 𝜃10 Τ𝜕𝜃1

𝜃 1 = 𝜃 0 − 𝜂𝛻𝐿 𝜃 0

1 =

0 −𝜂

0 Τ

𝜃2

𝜃2

𝜕𝐿 𝜃2 𝜕𝜃2

𝜃12

𝜃11

𝜕𝐿 𝜃11 Τ𝜕𝜃1

1 −𝜂

2 =

𝜃2

𝜕𝐿 𝜃21 Τ𝜕𝜃2

𝜃2

𝜃 2 = 𝜃 1 − 𝜂𝛻𝐿 𝜃 1

Review: Gradient Descent

𝜃2

𝛻𝐿 𝜃 0

𝜃

Gradient: Loss 的等高線的法線方向

Start at position 𝜃 0

𝛻𝐿 𝜃 1

0

𝜃1

Gradient

Movement

Compute gradient at 𝜃 0

𝛻𝐿 𝜃 2

𝜃2

𝜃3

𝛻𝐿 𝜃 3

Move to 𝜃 1 = 𝜃 0 - η𝛻𝐿 𝜃 0

Compute gradient at 𝜃 1

Move to 𝜃 2 = 𝜃 1 – η𝛻𝐿 𝜃 1

……

𝜃1

Gradient Descent

Tip 1: Tuning your

learning rates

i

Learning Rate

If there are more than three

parameters, you cannot

visualize this.

i 1

L

i 1

Set the learning rate η carefully

Loss

Very Large

small

Large

Loss

Just make

No. of parameters updates

But you can always visualize this.

Adaptive Learning Rates

• Popular & Simple Idea: Reduce the learning rate by

some factor every few epochs.

• At the beginning, we are far from the destination, so we

use larger learning rate

• After several epochs, we are close to the destination, so

we reduce the learning rate

• E.g. 1/t decay: 𝜂 𝑡 = 𝜂 Τ 𝑡 + 1

• Learning rate cannot be one-size-fits-all

• Giving different parameters different learning

rates

Adagrad

𝜂𝑡 =

𝜂

𝑡+1

𝑡

𝜕𝐿

𝜃

𝑔𝑡 =

𝜕𝑤

• Divide the learning rate of each parameter by the

root mean square of its previous derivatives

Vanilla Gradient descent

𝑤 𝑡+1 ← 𝑤 𝑡 − 𝜂𝑡 𝑔𝑡

Adagrad

𝑤 𝑡+1

𝑡

𝜂

← 𝑤 𝑡 − 𝑡 𝑔𝑡

𝜎

w is one parameters

𝜎 𝑡 : root mean square of

the previous derivatives of

parameter w

Parameter dependent

𝜎 𝑡 : root mean square of

the previous derivatives of

parameter w

Adagrad

0

𝜂

𝑤 1 ← 𝑤 0 − 0 𝑔0

𝜎

1

𝜂

𝑤 2 ← 𝑤 1 − 1 𝑔1

𝜎

2

𝜂

𝑤 3 ← 𝑤 2 − 2 𝑔2

𝜎

𝜎0 =

2

1

1

𝑔0

2

2

+ 𝑔1

2

2

1

𝑔0

3

2

+ 𝑔1

2

𝜎 =

𝜎 =

……

𝑤 𝑡+1

𝑡

𝜂

← 𝑤 𝑡 − 𝑡 𝑔𝑡

𝜎

𝑔0

𝑡

𝜎𝑡

=

1

𝑔𝑖

𝑡+1

𝑖=0

2

+ 𝑔2

2

Adagrad

• Divide the learning rate of each parameter by the

root mean square of its previous derivatives

𝜂

𝑡

1/t decay

𝜂 =

𝑡

+

1

𝑡

𝜂𝜂

𝑡+1

𝑡

𝑤

← 𝑤 − 𝑡 𝑔𝑡

𝑡

𝜎

𝜎𝑡

1

𝑡

𝜎 =

𝑔𝑖 2

𝑡+1

𝑖=0

𝑤 𝑡+1

←

𝑤𝑡

−

𝜂

σ𝑡𝑖=0 𝑔𝑖

𝑔𝑡

2

Contradiction?

𝜂

𝜂𝑡 =

𝑡+1

𝑡

𝜕𝐿

𝜃

𝑔𝑡 =

𝜕𝑤

Vanilla Gradient descent

𝑤 𝑡+1

←

𝑤𝑡

Larger gradient,

larger step

− 𝜂 𝑡 𝑔𝑡

Adagrad

𝑤 𝑡+1

←

𝑤𝑡

−

𝜂

σ𝑡𝑖=0 𝑔𝑖

𝑔𝑡

Larger gradient,

larger step

2

Larger gradient,

smaller step

𝜂

𝑡

𝜕𝐶

𝜃

𝜂𝑡 =

𝑔𝑡 =

𝑡+1

𝜕𝑤

Intuitive Reason

• How surprise it is 反差

𝑤

特別大

g0

g1

g2

g3

g4

0.001 0.001 0.003 0.002 0.1

……

……

g0

10.8

……

……

𝑡+1

g1

20.9

𝑡

←𝑤 −

g2

31.7

g3

12.1

特別小

𝜂

σ𝑡𝑖=0 𝑔𝑖

g4

0.1

𝑔𝑡

2

造成反差的效果

Larger gradient, larger steps?

Best step:

𝑏

|𝑥0 +

|

2𝑎

1st

Larger order

derivative means far

from the minima

𝑦 = 𝑎𝑥 2 + 𝑏𝑥 + 𝑐

|2𝑎𝑥0 + 𝑏|

2𝑎

𝑥0

𝑏

−

2𝑎

|2𝑎𝑥0 + 𝑏|

𝜕𝑦

= |2𝑎𝑥 + 𝑏|

𝜕𝑥

𝑥0

Larger 1st order

derivative means far

from the minima

Do not cross parameters

Comparison between

different parameters

a

a>b

b

𝑤1

𝑤2

c

c>d

𝑤1

d

𝑤2

Second Derivative

𝑦 = 𝑎𝑥 2 + 𝑏𝑥 + 𝑐

𝜕𝑦

= |2𝑎𝑥 + 𝑏|

𝜕𝑥

𝜕2𝑦

= 2𝑎

2

𝜕𝑥

Best step:

𝑏

|𝑥0 +

|

2𝑎

|2𝑎𝑥0 + 𝑏|

2𝑎

𝑥0

𝑏

−

2𝑎

|2𝑎𝑥0 + 𝑏|

The best step is

𝑥0

|First derivative|

Second derivative

Larger 1st order

derivative means far

from the minima

Do not cross parameters

Comparison between

different parameters

The best step is

Larger

Second

|First derivative|

Second derivative

a

a>b

b

𝑤1

smaller second derivative

𝑤2

c

Smaller

Second

𝑤1

c>d

d

𝑤2

Larger second derivative

𝜂

𝑤 𝑡+1 ← 𝑤 𝑡 −

σ𝑡𝑖=0 𝑔𝑖

The best step is

𝑔𝑡

|First derivative|

2

?

Second derivative

Use first derivative to estimate second derivative

larger second

derivative

smaller second

derivative

𝑤1

first derivative

2

𝑤2

Gradient Descent

Tip 2: Stochastic

Gradient Descent

Make the training faster

Stochastic Gradient Descent

2

Loss is the summation over

all training examples

𝐿 = 𝑦ො 𝑛 − 𝑏 + 𝑤𝑖 𝑥𝑖𝑛

𝑛

Gradient Descent i i 1 L i 1

Stochastic Gradient Descent

Pick an example xn

𝐿𝑛 = 𝑦ො 𝑛 − 𝑏 + 𝑤𝑖 𝑥𝑖𝑛

Loss for only one example

2

Faster!

i i 1 Ln i 1

• Demo

Stochastic Gradient Descent

Stochastic Gradient Descent

Gradient Descent

Update after seeing all

examples

See all

examples

Update for each example

If there are 20 examples,

20 times faster.

See only one

example

See all

examples

Gradient Descent

Tip 3: Feature Scaling

Source of figure:

http://cs231n.github.io/neuralnetworks-2/

Feature Scaling

𝑦 = 𝑏 + 𝑤1 𝑥1 + 𝑤2 𝑥2

𝑥2

𝑥2

𝑥1

𝑥1

Make different features have the same scaling

𝑦 = 𝑏 + 𝑤1 𝑥1 + 𝑤2 𝑥2

Feature Scaling

1, 2 ……

100, 200 ……

w2

x1

w1

w2

x2

y

1, 2 ……

1, 2 ……

b

w2

Loss L

w1

x1

w1

w2

x2

y

b

Loss L

w1

Feature Scaling

𝑥1

𝑥11

𝑥21

𝑥2

𝑥12

𝑥22

𝑥𝑟

𝑥3

𝑥𝑅

For each

dimension i:

……

……

……

……

……

……

𝑟

𝑥

𝑖 − 𝑚𝑖

𝑟

𝑥𝑖 ←

𝜎𝑖

……

mean: 𝑚𝑖

standard

deviation: 𝜎𝑖

The means of all dimensions are 0,

and the variances are all 1

Gradient Descent

Theory

Question

• When solving:

𝜃 ∗ = arg min 𝐿 𝜃

𝜃

by gradient descent

• Each time we update the parameters, we obtain 𝜃

that makes 𝐿 𝜃 smaller.

𝐿 𝜃 0 > 𝐿 𝜃1 > 𝐿 𝜃 2 > ⋯

Is this statement correct?

Warning of Math

Formal Derivation

• Suppose that θ has two variables {θ1, θ2}

2

2

Given a point, we can

easily find the point

with the smallest value

nearby. How?

1

0

1

L(θ)

Taylor Series

• Taylor series: Let h(x) be any function infinitely

differentiable around x = x0.

h k x0

k

x x0

h x

k!

k 0

h x0

2

x x0

h x0 h x0 x x0

2!

When x is close to x0

h x h x0 h x0 x x0

E.g. Taylor series for h(x)=sin(x) around x0=π/4

sin(x)=

……

The approximation

is good around π/4.

Multivariable Taylor Series

h x0 , y0

h x0 , y0

x x0

y y0

h x, y h x0 , y0

x

y

+ something related to (x-x0)2 and (y-y0)2 + ……

When x and y is close to x0 and y0

h x0 , y0

h x0 , y0

x x0

y y0

h x, y h x0 , y0

x

y

Back to Formal Derivation

Based on Taylor Series:

If the red circle is small enough, in the red circle

La, b

La, b

1 a

2 b

L La, b

1

2

s La, b

La, b

La, b

u

,v

1

2

L(θ)

2

a, b

L

s u 1 a v 2 b

1

Back to Formal Derivation

constant

Based on Taylor Series:

If the red circle is small enough, in the red circle

L s u 1 a v 2 b

Find θ1 and θ2 in the red circle

minimizing L(θ)

1 a 2 b

2

2

s La, b

La, b

La, b

u

,v

1

2

d2

L(θ)

2

Simple, right?

a, b

d

1

Gradient descent – two variables

Red Circle: (If the radius is small)

L s u 1 a v 2 b

1

2 1, 2

Find θ1 and θ2 in the red circle

minimizing L(θ)

1 a 2 b

2

1

2

1, 2

d2

u, v

2

To minimize L(θ)

1

u

v

2

1 a

u

b v

2

Back to Formal Derivation

Based on Taylor Series:

If the red circle is small enough, in the red circle

L s u 1 a v 2 b

constant

s La, b

La, b

La, b

u

,v

1

2

Find 𝜃1 and 𝜃2 yielding the smallest value of 𝐿 𝜃 in the circle

La, b

1 a

u

a

This is gradient

1

b

v

b

La, b descent.

2

2

Not satisfied if the red circle (learning rate) is not small enough

You can consider the second order term, e.g. Newton’s method.

End of Warning

More Limitation

of Gradient Descent

Loss

Very slow at

𝐿

the plateau

Stuck at

saddle point

𝑤1

𝑤2

Stuck at local minima

𝜕𝐿 ∕ 𝜕𝑤

≈0

𝜕𝐿 ∕ 𝜕𝑤

=0

The value of the parameter w

𝜕𝐿 ∕ 𝜕𝑤

=0

Acknowledgement

• 感謝 Victor Chen 發現投影片上的打字錯誤