Building Material Characterization with Multispectral and LiDAR

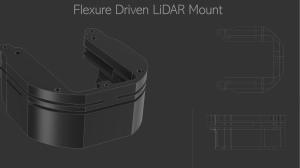

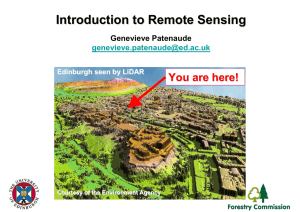

Journal of Building Engineering 44 (2021) 102603

Journal homepage: www.elsevier.com/locate/jobe

Characterizing building materials using multispectral imagery and LiDAR intensity data

Zohreh Zahiri a, Debra F. Laefer b,*, Aoife Gowen c

a Department of Physics, University of Antwerp, Universiteitsplein 1, B-2610, Antwerpen, Belgium
b Center for Urban Science and Progress and Department of Civil and Urban Engineering, Tandon School of Engineering, New York University, 370 Jay St., Brooklyn, NY, 11201, USA
c UCD School of Biosystems and Food Engineering, University College Dublin, Belfield, Dublin 4, Ireland

Keywords: Multispectral; Laser scanning; Building materials; Classification; Façades

ABSTRACT

This paper addresses the underlying bottleneck of unknown materials and material characteristics for assessing the life cycle of an existing structure or considering interventions. This is done by classifying and characterizing common building materials with two readily accessible, remote sensing technologies: multispectral imaging and Light Detection and Ranging (LiDAR). A total of 142 samples, including concrete of 3 different water/cement ratios, 2 mortar types, and 2 brick types (each type fired at 3 different temperatures) were scanned using a 5-band multispectral camera in the visible, RedEdge, and Near Infrared range and 2 laser scanners with different wavelengths. A Partial Least Squares Discriminant Analysis model was developed to classify the main materials and then the subcategories within each material type. With multispectral data, an 82.75% average correct classification rate was achieved (improving to 83.07% when combined with LiDAR intensity data), but the effect was not uniformly positive. This paper demonstrates the potential to identify building materials in a non-destructive, non-contact manner to aid in in-situ facade material labelling.

1.
Introduction

Presently, obtaining fast and cheap information about building geometries and materials at a city-scale plays an important role in many urban applications including asset management, computational modelling, and resiliency assessment. Despite significant research that has been undertaken for the automatic detection of the geometry of buildings (e.g. Refs. [1,2]) and individual features or defects [3,4], relatively little has been done to remotely identify component materials [5,6], especially characterizing differences within single classes of materials (e.g. weak stone versus strong stone). As noted by Dizhur et al. [7], “One of the primary needs when assessing a building for refurbishment and/or retrofit is to characterize the constituent material properties.” However, doing so without destructive testing is difficult to achieve, especially at scale [8]. With respect to asset management, knowing such differences can be critical in terms of long-term performance expectations, such as whether a mortar contains lime [9] or the in-situ strength of concrete [10]. While ultrasonic pulse tests have been effective in characterizing different clays and specific firing levels for structural clay blocks [11], such technology cannot be deployed at scale. Recent work by Chuta, Colin, and Jeong [12] on changed surface properties of concretes with different water-cement ratios provides a rational basis to investigate the use of non-contact remote sensing for automatic material determination.

If automatic detection of building materials and their differences could be achieved by remote sensing (RS) data, great improvements could be obtained for more automated documentation and life-cycle assessment of existing buildings. This paper considers the possible benefits of two RS techniques in detecting primary building materials and distinguishing differences within classes of materials.

2. Background

In recent decades, remote sensing data in different forms (e.g.
Light Detection And Ranging (LiDAR), multispectral imaging, hyperspectral imaging) have been widely used for classification purposes in many areas such as geological investigation [13,14], vegetation identification [15,16], cultural heritage monitoring, and damage detection [17,18]. Such datasets have even been used for estimation of water depth [19] and quantification of river channel bed morphology [20]. However, relatively little research has been undertaken to classify building materials and discern differences within a class of construction materials. Presently, the most common RS data types for material classification studies are spectral imaging data.

* Corresponding author. E-mail address: zohreh.zahiri@uantwerpen.be (D.F. Laefer).
Received 8 November 2020; Received in revised form 7 March 2021; Accepted 21 April 2021; Available online 27 April 2021
https://doi.org/10.1016/j.jobe.2021.102603
2352-7102/© 2021 Published by Elsevier Ltd.

Early applications of spectral imaging in building material characterization were conducted through reflected-light imagery in the visible and near-infrared (NIR) ranges with both unsupervised classification [21] and supervised classification approaches [22]. Lerma, Ruiz, and Buchón [23] combined spectral images [red, green, blue (RGB), and NIR] with three textural images (calculated from the Red band image) for classification of six different materials (cleaned cement mortar, dirty cement mortar, flaking, polished limestone, washed limestone, and dark limestone) from the façade of Santos Juanés Church in Valencia, Spain using a maximum likelihood classification approach. While combining the textural images and multispectral images qualitatively improved the classification images, no quantitative results were presented. Later, Lerma [24] investigated the advantages of using multi-band data in the identification of different facade materials (i.e., rock, wood, and various cement mortars).
Multi-band images were generated using a set of registered images related to the same object but taken at different times, from distinct positions, under various lighting conditions and with multiple sensors of different electromagnetic wavelengths (RGB and NIR). In that work, multi-band data combined with multi-spectral data obtained better classification results compared to multi-spectral classification under single lighting and atmospheric conditions. As that work was done on only a single facade with a limited number of materials tested, a universal solution has yet to be developed where a repeatable spectral signature is identifiable.

LiDAR is another RS technique. In that technology, a laser beam is used to collect range measurements from which 3D coordinates (x-y-z) are established. LiDAR systems usually operate at a monochromatic wavelength and record the strength of the reflected energy from the object encountered in this line-of-sight technology. The reflected energy is referred to as the intensity and is controlled by material surface properties and atmospheric factors, as well as equipment specific considerations [25]. Intensity values are the most common LiDAR outputs used for material classification. At a large scale, this has been successfully employed for distinguishing vegetation from non-vegetation [26], for identifying shadowed areas from non-shadowed ones [27], and for classifying different urban land covers [25,28].

Sithole [29] combined LiDAR data with hue, saturation, value triplets (obtained from converting RGB values) in a three-dimensional (3D) triangulation model to detect brick/stone blocks from surrounding mortar joints. Similarly, Hemmleb et al.
[30] deployed multispectral laser scanners to classify brick, mortar, and stone materials, as well as damage from moisture, biological agents, and salt blooming, and Morsy, Shaker, and El-Rabbany [31] combined three intensity images and a Digital Surface Model image (all created from multispectral LiDAR data) to classify buildings, trees, roads, and grass. While more versatile than single-channel laser scanners, multispectral laser scanners are still limited by their spectral sensitivity and have, therefore, only been able to distinguish a limited number of materials. More recently, Yuan, Guo, and Wang [32] used the reflectance, hue saturation values, and surface roughness extracted from LiDAR data as the material classification features. They concluded that the reflectance values deduced from LiDAR intensity values were more accurate than using the intensity values directly, as the latter varied with respect to the angle of incidence and distance from the scanner.

In contrast to LiDAR and multispectral technologies, hyperspectral cameras can collect spectral data from hundreds of continuous bands. Hyperspectral data in the range of 1300–2200 nm were used recently to classify concrete with different water/cement ratios [33], bricks of different clay and firing temperatures [34], and mortars with different binder types [35]. Although hyperspectral data provide more information than regular RGB images and multispectral images, the higher dimensionality of the data is significantly more complex to process due to the larger number of readings [36]. This makes estimation of different material classes difficult, especially if the training dataset is limited [37]. Another disadvantage is that hyperspectral cameras are relatively expensive and heavy, which complicates collecting façade data from multiple elevations.
Conversely, multispectral cameras are cheaper and lighter, which allows a wide range of options for unmanned aerial vehicle usage, as described by Chen, Laefer, and Mangina [38], and gives great flexibility in data collection. To date, the combination of RedEdge multispectral and LiDAR data sets for characterizing building materials has not been thoroughly explored. This paper contributes to this area.

3. Material and methods

In the study herein, data from a multispectral camera in the Near IR and RedEdge ranges (RedEdge MicaSense) were used to classify multiple building material samples. The classification process was repeated with the addition of two LiDAR scans taken with different laser wavelengths, and various data configurations (i.e. each technique separately or combined) were tested to optimise classification accuracy, as will be described in detail in this section.

3.1. Equipment

For the multispectral camera, a RedEdge MicaSense camera was used. The unit has five sensors, each collecting light intensity in a different band (Fig. 1): Blue (475 nm), Green (560 nm), Red (668 nm), NIR (840 nm), and Red edge (717 nm).

The LiDAR data were collected by two terrestrial laser scanners operating at different laser wavelengths (Table 1). The Trimble GS200 scanner had a green laser with a 532 nm wavelength (Fig. 2a), while the Leica scanner had a red laser with a 658 nm wavelength (Fig. 2b). Collected data were in the form of a point cloud with each point possessing an X-, Y-, and Z-coordinate and an intensity value.

3.2. Sample preparation

The experimental sample set comprised three common building materials: concrete, brick, and mortar with a range of compositions to cover some of the range of available material configurations. Additionally, each class contained sub-classes of materials. For the concrete, there were 54 samples, with 18 each of 3 different water cement ratios (50%, 65%, and 80%).
They were produced according to ASTM standard C 192-90a [39] and were wholly identical, except for the water to cement ratio (Table 2). The dimensions of each concrete sample were approximately 50 × 50 × 50 mm.

The brick samples were produced from two common types of clay: red and yellow firing clay. The bricks were machine pressed and delivered unfired by the Vandersanden brick factory of Bilzen, Belgium (Table 3). Prior to firing, the bricks were cut by the authors into small cubes (roughly 40 × 40 × 40 mm) providing a total of 117 samples across the 2 brick types (63 red brick samples and 54 yellow brick samples). The samples were divided into 3 groups, with each fired at a different temperature: 700 °C, 950 °C, and 1060 °C. For each firing level, there were 21 red brick samples and 18 yellow brick samples.

Fig. 1. RedEdge MicaSense Multispectral camera (support.micasense.com).

Fig. 2. Laser scanners used for collecting LiDAR data. a) Trimble laser scanner. b) Leica laser scanner.

Table 1. Laser scanner specifications.

| Specifications | Trimble GS200™ | Leica Scan Station P20 |
| --- | --- | --- |
| Laser colour | Green | Red |
| Laser wavelength | 532 nm | 658 nm |
| Point accuracy | 3 mm @ 100 m | 3 mm @ 50 m; 6 mm @ 100 m |
| Field of view | 360° × 60° | 360° × 60° |

Table 2. Characterization of concrete mixes.

| Water/Cement Ratio (%) | Density (kg/m³) | Compressive Strength (MPa)a | Slump (mm) |
| --- | --- | --- | --- |
| 50% | 2424.67 | 35.72 | 82 |
| 65% | 2365.83 | 26.78 | 117 |
| 80% | 2359.67 | 15.25 | 197 |

a Averaged from three cube 10 cm × 10 cm × 10 cm specimens according to BS 1881-116:2002.

Table 3. Component materials of the tested brick.

| Brick Type | Composition* | Size (mm) | Factory Code | Compressive Strength** (MPa) |
| --- | --- | --- | --- | --- |
| Red firing brick | Red firing local loam, white firing German clay | 190 × 90 × 50 | D24-S315077 | 11.40 |
| Yellow firing brick | Yellow firing local loam, red firing local loam, and chalk | 210 × 100 × 65 | D08-S315087 | 15.00 |

*As reported by the manufacturer. **Average from three samples when fired at 1060 °C.
A total of 42 mortar samples were used. Half were lime mortar and the other half Type S mortar [40], as described in Table 4. The lime mortar was made using a lime putty from Cornish Lime Co. Ltd, Bodmin, Cornwall, UK. As the material was already in a paste form, only a small amount of additional water was added to achieve good workability. For the mortar Type S, Portland cement was mixed with natural hydraulic lime (obtained from The Lime Store, Dublin, Ireland). The hydraulic lime, cement, and sand were mixed in a small counter-top mixer, and then the water was added gradually until the mix attained good workability. The mortar was cast in cubes of 40 × 40 × 40 mm and cured at room temperature.

The calibration and validation samples for the classification models were assembled as two separate, dry-laid block walls (Fig. 3). Rows 1–3 at the bottom of each wall were concrete samples, with each row a different water to cement (w/c) mix stacked from strongest to weakest from the bottom up. The samples in rows 4–9 (from the bottom) were brick samples comprised of 12 yellow and 14 red bricks in each row. Within each colour group (rows 4–6 and 7–9), the bricks were placed in clusters according to firing temperature from the least fired group (rows 4 and 7) to the most fired group (rows 6 and 9) (Fig. 3a). The top two rows of each wall were mortar samples, with the lime samples (row 10) beneath mortar Type S (row 11) (Fig. 3a). The validation wall was composed in the same order with the remaining samples (one third of the total samples) (Fig. 3b). The two walls were scanned with the multispectral camera and the two terrestrial laser scanners described in Table 1. The light illuminance at all corners of the two walls was measured with an ISO-TECH 1335 light meter registering 30 klux, thereby confirming uniform lighting (sunlight) across the samples.

Table 4. Mortar composition.
| Mortar Type | Sand | Water | Cement | Lime |
| --- | --- | --- | --- | --- |
| Type S | 3 | 0.8 | 1 | 0.5 |
| Lime | 2.5 | 0.15 | 0 | 1 |

4. Data processing

All data analysis was conducted using MATLAB (release R2014b) incorporating functions from the Image Processing and Statistics toolboxes and additional functions written in-house.

4.1. Multispectral integration

As previously mentioned, the MicaSense multispectral camera has 5 sensors, each of which collects reflected light intensity at a specific band, resulting in 5 different images. Due to small offsets of the sensors on the camera’s face, the position from which the data were taken differs slightly with respect to the angle of incidence. Hence, the images were registered and aligned using the “imregconfig” function, and later they were combined using the “cat” function in Matlab to create a cube of a fixed length and width for all images and at a fixed depth of five bands.

4.2. LiDAR and multispectral integration

The LiDAR point clouds from the two different terrestrial laser scanners were converted into a pair of two-dimensional planes with only x- and y-coordinates along with intensity data for each point (Fig. 4a). Then the planes were divided into grids such that the number of grids in the x- and y-directions were equal to the number of pixels in the length and width of the multispectral image (Fig. 4b). Finally, the mean intensity value of all the points confined in each grid was calculated and displayed as a pixel with spatial positions corresponding to the pixels in the multispectral image (Fig. 4c).

As building components may appear similar but be of different materials, the experiment was designed to exclude the geometry as that cannot be relied upon. For this, samples of identical shapes and surface properties were produced so that the only criteria by which they can be identified would be their electromagnetic behavior and not some geometrical characteristic which might appear in the field in the form of
variable z values. Additionally, the intensity data were not converted to reflectance data, because the scanning area was relatively small (less than 1 m wide) and little variance was seen. More information about the data integration is provided in Appendix A.

Fig. 3. Specimen input (213 samples in total).

Fig. 4. Converting the 3D point cloud of the Trimble scanner to a 2D intensity plane.

The intensity values of the LiDAR data (29 to 255 from the Trimble and −1140 to 1514 from the Leica) and multispectral data (7168 to 63,175) were all normalized individually between 0 and 1 (by subtracting the minimum value from all values and dividing them by the difference between the minimum and maximum values). Then the LiDAR image planes were combined with the five spectral image planes of the multispectral images using the “cat” function in Matlab, resulting in a cube of a fixed length and width and at a fixed depth of seven sensor data sets (instead of the previous five) (Fig. 5). With both multispectral and LiDAR technologies, the sensors measure the intensity of light reflected from the target object. Hence, to prevent confusion, the readings in all bands (multispectral and LiDAR) are referred to as “intensity” throughout this document.

Fig. 5. Combining multispectral and LiDAR data.

Each sample contained at least 200 pixels. For each sample in the wall, a region of 5 × 5 pixels (a total of 25 pixels) was selected from the sample’s centre. The mean, multi-sensor spectrum of these pixels was considered as the representative spectrum of that sample. This resulted in 213 spectra for the 213 samples. For further investigation of the created classification models, the models were applied to all pixels in the validation image. Standard Normal Variate (SNV) pre-treatment (as described in Ref. [41]) was applied to the multi-sensor data to attempt to correct for any surface induced variations in the measured signal.

4.3.
PLSDA classification models

In this study, Partial Least Squares Discriminant Analysis (PLSDA) [42] was used to classify the brick, concrete, and mortar samples. PLSDA is a supervised classification technique based on PLS regression (PLSR) to find the relation between two matrices (X and Y), in which X is the information measured for each sample (i.e. the spectra of the samples) and Y is a column vector defining the class membership for each sample in X. The Y variable is 1 for “in class” samples and 0 for “out of class” samples. For instance, if a PLSDA model is built to detect the brick class, the Y variable is 1 for brick samples and 0 for non-brick (i.e. concrete and mortar) classes. With this, five classification models were built (Fig. 6 and Table 5). The first model was to classify the three main building materials from each other. The other four models were generated to distinguish classes within each material group. Models were built on both raw and SNV processed data.

Fig. 6. Graphical description of different classification models.

Table 5. Sample quantities in the calibration and validation sets of each model.

| Model | Calibration Samples | Validation Samples |
| --- | --- | --- |
| 1 | 142 (36 concrete, 78 brick, and 28 mortar) | 71 (18 concrete, 39 brick, and 14 mortar) |
| 2 | 78 (36 yellow and 42 red) | 39 (18 yellow and 21 red) |
| 3 | 78 (26 for each firing level) | 39 (13 for each firing level) |
| 4 | 28 (14 for each mortar) | 14 (7 for each mortar) |
| 5 | 36 (12 for each w/c ratio) | 18 (6 for each w/c ratio) |

5. Results

5.1. Assessment of classification model performance

Table 6 summarizes the correct classification rates (CCRs) achieved for the validation samples across all models. For each classification model, four different variations of the data were considered: a) multispectral data without pre-treatment, b) multispectral with pre-treatment, c) multispectral and LiDAR without pre-treatment, and d) multispectral and LiDAR with pre-treatment. The main observation from Table 6 is that the use of SNV pre-treatment on the data (both multispectral data and the combined multispectral and LiDAR data) decreased the classification accuracy in Model 2 (brick type) and Model 3 (brick firing level) by at least 10%. In contrast, using the pre-treated data improved the classification of Model 5 (concrete with different w/c) and had little impact on the classification results of Model 1 and Model 4. In general, the SNV pre-treatment worsened the average results among the 5 models from 81.08% to 76.60% for multispectral data and from 80.85% to 78.18% for the combined LiDAR and multispectral data. This decrease in CCR after applying SNV might be due to the similarities of spectral shape among classes, as described in Appendix B.

Table 6 also shows that when LiDAR data were added and no pre-treatment occurred, the classification models improved for Model 2 (brick type) and Model 4 (mortar type) by 5% and 7%, respectively. However, including LiDAR data decreased the accuracy of Model 3 (brick firing levels) and Model 5 (concrete) by 5% and 10%, respectively. The average CCR of the 5 models with the addition of the LiDAR data improved only marginally from 82.76% to 83.07% (Table 6). A summary of the impact of the data sources and SNV pre-treatment is provided in Table 7, where a plus indicates an improvement, a minus sign a worsening in the results, and an equal sign no change.

5.2. Applying classification models to pixels in validation wall

The classification models were later applied to the entirety of the pixels in the validation set to visualise the performance of the models. In the making of the previous models, the mean spectrum of the 25 pixels in the centre of each sample was used for testing the models. However, in this instance, the models were applied to all the pixels, including the 25 pixels in the centre of the samples.
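The two kinds of model input described above (one mean spectrum per sample versus every pixel) can be sketched in a few lines. This is a minimal illustration in Python, not the MATLAB code used in the study. The function names, the nested-list cube layout, and the sample bounding box are hypothetical, the toy values are synthetic, and SNV is written here with the sample standard deviation, which is an assumption.

```python
from statistics import mean, stdev


def central_mean_spectrum(cube, rows, cols, size=5):
    """Average the spectra of the size x size pixel block at a sample's centre.

    cube[r][c] is the list of band intensities for pixel (r, c).
    rows and cols are (start, stop) index ranges covering one sample
    (a hypothetical bounding box; stop is exclusive).
    """
    r0 = (rows[0] + rows[1] - size) // 2
    c0 = (cols[0] + cols[1] - size) // 2
    block = [cube[r][c]
             for r in range(r0, r0 + size)
             for c in range(c0, c0 + size)]
    n_bands = len(block[0])
    return [mean(px[b] for px in block) for b in range(n_bands)]


def snv(spectrum):
    """Standard Normal Variate: centre and scale one spectrum by its own mean and std."""
    m = mean(spectrum)
    s = stdev(spectrum)  # sample standard deviation (assumption)
    return [(v - m) / s for v in spectrum]


# Toy sample of 9 x 9 pixels with 7 bands (5 multispectral + 2 LiDAR), synthetic values.
toy_cube = [[[float(r + c + b) for b in range(7)] for c in range(9)]
            for r in range(9)]

# Input used for the sample-level models: one mean spectrum per sample.
sample_spectrum = central_mean_spectrum(toy_cube, rows=(0, 9), cols=(0, 9))

# Input used for the pixel-level maps: every pixel spectrum of the sample.
pixel_spectra = [px for row in toy_cube for px in row]

print(sample_spectrum)
print(len(pixel_spectra))
```

Either input can be passed through `snv` before classification, which corresponds to the "with SNV" variants of the data.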
Using the larger data set produced the following results. For the first 3 classification models, better results were achieved when the LiDAR data were added to the multispectral images without applying SNV (Model 1c, 2c, and 3c in Fig. 7). Among these 3 models, the best result was obtained for Model 1 (85.63%), with nearly all bricks (99.31%), 85.92% of concrete, and 71.65% of the mortar properly classified (n.b. all mortar misclassifications appeared as concrete). However, the mortar samples were better classified with SNV pre-treatment (78.45%) (Model 1d in Fig. 7).

Table 6. Correct classification rate (CCR %) for both multispectral and combined validation sets before and after SNV pre-treatment with best results shown per model in bold.

| Data | Pre-treatment | Model 1 (3 materials) | Model 2 (Brick type) | Model 3 (Firing level) | Model 4 (Mortar type) | Model 5 (Concrete w/c) | Average |
| --- | --- | --- | --- | --- | --- | --- | --- |
| M* | No SNV^ | 95.77 | 92.31 | 87.18 | 85.71 | 44.44 | 81.08 |
| M* | With SNV | 98.59 | 76.92 | 71.79 | 85.71 | 50 | 76.60 |
| Average of best results from 5 classification models for multispectral data | | | | | | | 82.76 |
| M & L** | No SNV | 98.59 | 97.43 | 82.05 | 92.85 | 33.33 | 80.85 |
| M & L** | With SNV | 97.18 | 84.61 | 71.82 | 92.85 | 44.44 | 78.18 |
| Average of best results from 5 classification models for combined multispectral and LiDAR data | | | | | | | 83.07 |

*M: Multispectral **L: LiDAR ^SNV: Standard normal variate pre-treatment.

Table 7. Summary of the impact of the data sources and pre-treatment approaches on the CCRs.

| Classification models | a) Base Condition (multispectral) | b) No LiDAR with SNV | c) LiDAR without SNV | d) LiDAR with SNV |
| --- | --- | --- | --- | --- |
| Model 1 | 95.77% | + | + | + |
| Model 2 | 92.31% | − | + | − |
| Model 3 | 87.18% | − | − | − |
| Model 4 | 85.71% | = | + | + |
| Model 5 | 44.44% | + | − | = |

Fig. 7. Classification images of 5 models for different data sets with and without SNV with best results of each dataset (considering SNV effect) in bold text and the average of the best overall results presented for each technology (M and M&L). *M: Multispectral **L: LiDAR ^SNV: Standard Normal Variate pre-treatment.

The next best result was obtained for Model 2 when the LiDAR data were added (Model 2c in Fig. 7). Similarly, SNV pre-treatment reduced the overall CCR of Model 2 (Model 2b and Model 2d compared to Model 2a and Model 2c in Fig. 7). The improvement from adding the LiDAR data was especially notable in the red brick class, resulting in an improvement in CCR from 73.58% to 79.34% and with better segmentation for the top-most row in red bricks (Model 2c in Fig. 7).

In Model 3 for the brick firing level, the CCR varied significantly between the red and yellow bricks. However, similar to Models 1 and 2, the best overall CCR for this model (52.24%) was also obtained after adding the LiDAR data and without applying SNV pre-treatment. Clear segmentation can be observed for most samples, except for yellow brick samples fired at 700 °C (Model 3c in Fig. 7).

Model 4 for classifying mortar classes resulted in a slight reduction in the overall CCR after adding the LiDAR data (66.76% vs. 65.53%). Although the segmentation of lime mortar improved by 8% after adding the LiDAR data, the one for Type S mortar worsened (11% lower CCR, as shown in Model 4a vs. Model 4c in Fig. 7). With both data types, the SNV pre-treatment slightly reduced the overall CCR (Model 4b and Model 4d in Fig. 7).

The combined LiDAR and multispectral data set had a mixed impact on Model 5 for differentiating concrete classes. As in Models 1–3, the best result in Model 5 (44.27%) was obtained after adding LiDAR data, but in this case with SNV pre-treatment (Model 5d in Fig. 7). In this model (Model 5d), 56.82% of the concrete class 50% was classified correctly.
Notably, the results for concrete classes 65% and 80% were poorer (41.58% and 34.41% respectively). The average of the best results obtained with multispectral data (irrespective of processing approach) was 62.95%, which increased by more than 2% to 65.13% with the combined data. In general, the concrete classification model was the worst among the 5 models. Table 8 shows the approach that generated the best outputs when applied to the mean spectra of samples’ central regions and to the pixel data. In both methods, most of the models worked better when multispectral data were combined with the LiDAR data.

Table 8. Data type resulting in the best CCR for each of the 5 classification models.

| Best Results | Model 1 (3 materials) | Model 2 (Brick type) | Model 3 (Firing level) | Model 4 (Mortar type) | Model 5 (Concrete w/c) |
| --- | --- | --- | --- | --- | --- |
| Mean spectra of samples | M* with SNV^; M&L** | M&L | M | M&L | M with SNV |
| Pixel data | M&L | M&L | M&L | M | M&L with SNV |

*M: Multispectral **L: LiDAR ^SNV: Standard Normal Variate pre-treatment.

6. Discussion

The CCRs in this study varied among the different classification models by (1) using exclusively multispectral data, (2) combining them with LiDAR intensity data, and (3) applying SNV pre-treatment. However, overall, the combination of multispectral and LiDAR data resulted in the best CCR in most classification models (Table 8) and marginally improved the average CCR of all models (from 82.76% to 83.07%). Previous work for land cover classification has also shown that integration of LiDAR intensity data and multispectral data marginally increased the classification accuracy from 82.5% to 83.5% [43]. In that research, inclusion of LiDAR intensity improved the classification for water (from 90% to 100%) and pavement (from 68% to 88%) but significantly decreased classification accuracy of bare ground (from 80% to 52%). Similarly, in the study herein, the introduction of LiDAR intensity data improved classification of some materials but not uniformly. However, the often marginal and inconsistent results achieved with the introduction of the LiDAR intensity data indicate that further research is needed to determine whether the relative benefits outweigh the extra difficulties and costs associated with collecting data from multiple remote sensing devices and the subsequent storage and processing, as well as the most beneficial wavelength(s) for the LiDAR.

To determine whether the results could be improved through a different modelling approach, a support vector machine (SVM) classifier, which is commonly used for image classification, was built from the same training data and was applied to the test data for further analysis. In SVM, a hyperplane is used to linearly separate the higher dimensional data. Non-linear data in the original dimension are mapped to a linearly separable higher dimensional space [44]. SVM achieved 100% accuracy for all types and combinations of data in Model 1 (Table 6 vs. Table 9). In Model 2, for classifying brick types, SVM only slightly improved the results from multispectral data without pre-treatment but worsened or did not change the results for other types of data. The results of Model 3, Model 4, and Model 5 also worsened by using the SVM classifier for almost all data. In general, the results from SVM classification were worse for most of the models and showed that the PLSDA classifier, which was considered initially, works better (Table 9).

The bar chart in Fig. 8 compares the classification results from spectrometry data with more than 200 bands in short-wave Infrared (SWIR) [33–35], RedEdge multispectral data in Vis-NIR (5 bands), and combined multispectral and LiDAR intensity data (7 bands). These results demonstrate that while spectrometry data in SWIR with more than 200 bands obtained the highest performance, the multispectral data (either exclusively or combined with LiDAR intensity) perform similarly, except for concrete classification (Model 5). The poor results of the approach in concrete classification were also due to the fact that the multi-sensor data were mostly within the visible and NIR ranges, which are insensitive to water. In future, utilizing laser scanners with an Infrared laser (e.g. an ILRIS laser scanner) or including more SWIR wavelengths in the multispectral sensor might improve the classification results, especially when desiring to classify concrete based on water content.

Fig. 8. Comparing the PLSDA classification performance of different spectral data.

Although the overall results of most classification models improved slightly by adding LiDAR data (Table 8 and Fig. 7), the capability of the MicaSense multispectral data alone to detect differences in material samples was confirmed (Fig. 8). Hence, multispectral imaging with the MicaSense RedEdge camera presented a compelling approach to be the basis for material classification, especially given its limited spectral resolution, significantly easier set up compared to a laser scanner, and its rapid data capturing ability.

While these observations provide useful insights into possible equipment-based means to classify building materials in a non-destructive, non-contact manner, generalizing the observations more rigorously will require extensive additional investigations that combine close-range non-destructive testing and destructive chemical analysis (e.g. XRD, TGA), which are beyond the scope of this study.

7.
Conclusions Multispectral images were considered with and without intensity data from RedEdge multispectral camera and two LiDAR units operating at different emitting wavelengths in an attempt to classify different building materials (concrete, brick, and mortar). A PLS-DA approach applied to the data was capable to classify main material classes (con­ crete, brick, and mortar) and then differentiate distinctions within each certain material class (different brick, mortar, and concrete types). There were promising results in the models related to mortar type, brick Table 9 Correct classification rate (CCR %) results achieved by SVM classifier. Data M* M & L** No SNV^ With SNV No SNV With SNV Model 1 (3 materials) Model 2 (Brick type) Model 3 (Firing level) Model 4 (Mortar type) Model 5 (Concrete w/c) Average 100 100 100 100 94.87 82.05 92.31 84.62 71.79 69.23 82.05 64.10 57.14 57.14 57.14 57.14 33.33 33.33 33.33 33.33 71.43 68.35 72.97 67.84 *M: Multispectral **L: LiDAR ^SNV: Standard Normal Variate pre-treatment. 7 Z. Zahiri et al. clay type, and brick firing levels. Poorer results were obtained dis­ tinguishing concretes made with different water to cement ratios. The results also showed that in most cases, multispectral data (either exclusively or combined with LiDAR data) obtained exceed 80% for correct classification rates. This proves the potential for using RedEdge multispectral camera for detecting changes in building materials and that such technologies may be able to estimate mechanical properties of material via a non-destructive, non-contact technique. Such an ability would represent a major breakthrough in building conservation with respect to identifying and distinguishing in situ materials. 
To determine the full value of this type of work, future experimental efforts should be undertaken for brick with additional clay types and compositions, mortars of different aggregates and lime contents, and concretes with other cements and aggregate types, as well as with plasticizers and retarders and those with long-term exposure to pollutants. This technique should also be assessed under varying levels of sunlight, noting, however, that the multispectral camera used in this study requires direct sunlight for capturing proper images in the NIR and RedEdge bands.

Author statement

Zohreh Zahiri undertook the conceptualization, methodology, validation, formal analysis, investigation, writing, and visualization. Debra Laefer provided the conceptualization, resources, writing, visualization, supervision, project administration, and funding acquisition. Aoife Gowen contributed to the methodology, software, and reviewing.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The authors wish to thank Mr. Donal Lennon, Mr. Derek Holmes, and Mr. John Ryan from the UCD technician team, as well as Mr. Bert Neyens (Vandersanden Group), for their support and assistance in conducting the experimental parts of the study. This work was supported by New York University's Center for Urban Science and Progress and the European Research Council (ERC) [grant number ERC-2013-StG-335508—BioWater].

Appendix A. Concatenation

In this study, a multispectral image of the wall with fixed dimensions (x*y) was used, where x is the number of pixels in the x direction and y is the number of pixels in the y direction.
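The co-registration and concatenation procedure that this appendix describes can be sketched in code. The example below is a rough illustration in plain Python rather than the Matlab used in the study: it averages LiDAR point intensities into a grid with the same pixel dimensions as the image and then appends the resulting planes as extra bands, which is what cat(3, ...) does in Matlab. The function names (grid_mean_intensity, stack_bands), the wall extent, and all numeric values are hypothetical, and the points are assumed to have been projected onto the wall plane already.

```python
def grid_mean_intensity(points, nx, ny, x_range, y_range):
    """Average point intensities into an ny-by-nx grid (hypothetical helper).

    points: iterable of (x, y, intensity), already projected onto the wall plane.
    Cells that receive no points are returned as None (an assumption of this sketch).
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    sums = [[0.0] * nx for _ in range(ny)]
    counts = [[0] * nx for _ in range(ny)]
    for x, y, intensity in points:
        if not (x_min <= x <= x_max and y_min <= y <= y_max):
            continue  # ignore points outside the wall extent
        # Map coordinates to cell indices; the upper edge falls into the last cell.
        col = min(int((x - x_min) / (x_max - x_min) * nx), nx - 1)
        row = min(int((y - y_min) / (y_max - y_min) * ny), ny - 1)
        sums[row][col] += intensity
        counts[row][col] += 1
    return [[sums[r][c] / counts[r][c] if counts[r][c] else None
             for c in range(nx)] for r in range(ny)]


def stack_bands(image, *planes):
    """Append each 2-D plane as an extra band along the 3rd dimension."""
    ny, nx = len(image), len(image[0])
    for plane in planes:
        if len(plane) != ny or any(len(row) != nx for row in plane):
            raise ValueError("plane and image must share x and y dimensions")
    return [[list(image[r][c]) + [plane[r][c] for plane in planes]
             for c in range(nx)] for r in range(ny)]


# Toy 2 x 2 image with 5 bands per pixel (made-up values).
multispectral = [[[0.1 * b for b in range(1, 6)] for _ in range(2)] for _ in range(2)]
# Toy point clouds on a 0.6 m x 0.6 m wall: (x, y, intensity), made-up values.
leica_points = [(0.1, 0.1, 0.6), (0.2, 0.2, 0.8), (0.5, 0.1, 0.7),
                (0.1, 0.5, 0.5), (0.5, 0.5, 0.9)]
trimble_points = [(0.1, 0.1, 0.4), (0.4, 0.2, 0.3), (0.2, 0.4, 0.2), (0.4, 0.4, 0.1)]

leica = grid_mean_intensity(leica_points, 2, 2, (0.0, 0.6), (0.0, 0.6))
trimble = grid_mean_intensity(trimble_points, 2, 2, (0.0, 0.6), (0.0, 0.6))
mnl = stack_bands(multispectral, leica, trimble)
print(len(mnl), len(mnl[0]), len(mnl[0][0]))  # rows, columns, bands: 2 2 7
```

The stacked array keeps the image's x and y dimensions and gains one band per LiDAR plane, so 5 multispectral bands plus 2 LiDAR planes give 7 bands per pixel. How empty grid cells should be treated is left open here; the source does not say how they were handled.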
The data collected by laser scanners are not pixel-based but are, instead, point clouds with thousands of points, each carrying x, y, z coordinates and an intensity value. To be able to combine these two data sets (multispectral image and LiDAR point clouds), the three-dimensional point cloud data had to be projected into two-dimensional planes, as mentioned in the main text. This was done by making a mesh with exactly the same number of x and y pixels as the multispectral image and by computing the average intensity of all the points within every cell of this mesh. The two resulting planes therefore have the same dimensions as the multispectral image, which enables co-registration.

The cat function (short for concatenate) in Matlab was used to concatenate two matrices along a given dimension. For example, C = cat(dim,A,B) concatenates B to the end of A along dimension dim. The aim was to concatenate the multispectral matrix and the LiDAR planes not along the x or y dimensions, but along the 3rd dimension, which holds the intensity values [MnL = cat(3,Multispectral, LiDAR)]. For the multispectral image, there are 5 different intensity matrices (5 spectral bands), and for the LiDAR planes, there are 2 different intensity matrices (2 spectral bands from 2 different laser scanners). Hence, after concatenation, the MnL matrix retains the fixed x and y dimensions, while the 3rd dimension is now 7.

While the cat function does not itself introduce error, there is a marginal registration error in combining the two datasets, because they are completely different data types (one is a 2D image, while the other is a 3D point cloud). By checking the edges of the wall, the error was established to be less than 5 mm in length in both the calibration and validation walls (60 cm and 30 cm, respectively).

Appendix B. Additional Results

B1.1. Model 1 classification results (classifying main materials)

After scanning with both techniques (i.e. multispectral and LiDAR), the mean spectra for the brick, concrete, and mortar samples were calculated and plotted (Fig. B1). In general, the mean spectra of the three main material classes showed distinctive patterns in the multispectral bands, with the mortar and concrete spectra showing higher intensities (especially in the blue and green bands) compared to the brick spectra (Fig. B1a). The Near IR and RedEdge bands (intensities around 0.2) were less effective for separating the three materials, with little distinction apparent except for the mortar in the RedEdge band.

Fig. B1. Mean spectra of materials in Model 1.

Fig. B2. Validation results of Model 1 for classifying main materials (B: Brick, C: Concrete, M: Mortar).

Fig. B3. Mean spectra of yellow and red bricks.

Fig. B4. Validation results of Model 2 for classifying yellow and red bricks (Y: Yellow brick, R: Red brick).

Fig. B5. Mean spectra of bricks at different firing level.

After adding the LiDAR data, the brick and mortar exhibited similar intensity in the Leica (wavelength 658 nm) and Trimble (wavelength 532 nm) bands (around 0.65 and 0.8, respectively), but the concrete exhibited more distinguishable intensities (Fig. B1a). This further distinction in the concrete spectra with the LiDAR bands justifies the improvement in classification of concrete and mortar and a slight increase in the overall CCR by adding LiDAR data (from 95.77% to 98.59%). When SNV pre-treatment was applied to the multispectral data, the brick spectrum was distinctive among the materials (Fig. B1b). Specifically, better separation between spectra was observed in the Leica (L) and Trimble (T) data in Fig. B1b.
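For readers less familiar with SNV, the pre-treatment rescales each spectrum by subtracting its own mean and dividing by its own standard deviation, which removes additive offsets and multiplicative scaling between spectra. The sketch below is a minimal plain-Python illustration and not the authors' implementation. The function name snv, the choice of the sample standard deviation, and the 7-band toy spectra are assumptions made for this example.

```python
from statistics import mean, stdev


def snv(spectrum):
    """Standard Normal Variate: centre and scale one spectrum by its own statistics."""
    m = mean(spectrum)
    s = stdev(spectrum)  # sample standard deviation (assumed; some tools use population)
    if s == 0:
        raise ValueError("SNV is undefined for a flat spectrum")
    return [(v - m) / s for v in spectrum]


# Two made-up 7-band spectra with the same shape: b is a scaled and shifted copy of a.
a = [0.10, 0.15, 0.20, 0.22, 0.21, 0.65, 0.80]
b = [2.0 * v + 0.05 for v in a]

snv_a = snv(a)
snv_b = snv(b)
max_gap = max(abs(p - q) for p, q in zip(snv_a, snv_b))
print(max_gap < 1e-9)  # True: the offset and scale difference vanish after SNV
```

Because SNV discards overall brightness and keeps only spectral shape, classes that differ mainly by an intensity offset can become harder to separate after pre-treatment, while classes that differ in shape can become easier to separate.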
With Model 1, when only the multispectral data were used, 3 of the 71 samples were misclassified without SNV (Fig. B2a) and 1 with SNV (Fig. B2b). With the LiDAR data added, there was 1 misclassification both with and without the SNV pre-treatment (Fig. B2c and Fig. B2d). Interestingly, in all cases, the misclassification was only between the mortar and concrete, but in each instance different samples were misclassified.

B1.2. Model 2 classification results (classifying yellow and red bricks)

The mean spectra of each of the two brick classes (Model 2) are plotted in Fig. B3. Despite having similar patterns in the multispectral bands, the red bricks had lower intensities than the yellow bricks. However, the intensities of the yellow and red bricks became closer to each other in the Near IR and RedEdge bands (Fig. B3a). When the LiDAR data were added, distinctive behaviour was likewise observed in the spectra, with higher intensities for the yellow brick (Fig. B3a). When SNV was applied to the multispectral data, the offset between the spectra disappeared, and they became closer to each other, especially in the blue and red bands (Fig. B3b). Similarly, the SNV pre-treatment made the spectra of the two brick types very close to each other in the LiDAR bands (Fig. B3b).

When Model 2 was built on the multispectral data (Model 2a), all yellow bricks were classified correctly, but 3 of the 21 red bricks were misclassified as yellow bricks without SNV (Fig. B4a). Misclassification increased four-fold to 12 when SNV pre-treatment was applied (Fig. B4b). The addition of the LiDAR data reduced the misclassification to a single instance (Fig. B4c). With the combined data, SNV pre-treatment again had a worsening effect and resulted in 6 misclassified samples (Fig. B4d). So, while the LiDAR data improved the classification, the SNV did not. Notably, most misclassified bricks were the red ones fired at the highest temperature.
The RGB image displayed a yellowing of these samples, which may in part explain the misclassification.

B1.3. Model 3 classification results (classifying 3 levels of brick firing)

The mean spectra of bricks fired at different temperatures are plotted in Fig. B5. Although similar, the mean spectra were still distinguishable, especially in the Green, Red, and RedEdge bands (Fig. B5a). When the LiDAR data were added, bricks fired at 700 °C and 950 °C showed almost the same intensity in the Leica and Trimble bands (Fig. B5a). This might explain the reduction in CCR after adding LiDAR data. In general, the spectra of the bricks at the three firing levels were very similar in both the multispectral and combined data sets. After applying SNV to the multispectral and combined data sets, the spectra became even closer to each other and less distinguishable (Fig. B5a vs. Fig. B5b), thus accounting for the poorer results for Model 3 after applying SNV pre-treatment.

Fig. B6. Validation results of Model 3 for classifying brick firing level.

Fig. B7. Mean spectra of mortar classes.

Initially, for the 700 °C firing class, 9 of the 13 samples were correctly classified with just the multispectral data (Fig. B6a). This decreased to only 7 when the LiDAR data were added (Fig. B6c). No misclassification was observed for the bricks fired at 950 °C, and only 1 sample was misclassified for the bricks fired at 1060 °C with both the multispectral and combined datasets (Fig. B6a vs. Fig. B6c). When SNV pre-treatment was applied, the number of misclassified samples increased in the multispectral data (Model 3b) in both the 700 °C and 950 °C classes but remained unchanged in the 1060 °C class, with only 1 misclassified sample (Fig. B6b). The negative impact of SNV pre-treatment was even worse in Model 3d with the combined dataset (Fig. B6d).
While bricks fired at 700 °C were classified relatively better, the number of misclassified samples increased for bricks fired at higher temperatures (950 °C and 1060 °C).

B1.4. Model 4 classification results (classifying 2 mortar classes)

The Type S and lime mortars were similar in most multispectral bands, except in the Blue and Green bands, with the lime mortar having higher intensity (Fig. B7a). The addition of LiDAR data changed this, with higher intensity exhibited in the Leica and Trimble bands (Fig. B7a). This may explain the classification improvement (from 85.71% to 92.85%) with the introduction of the LiDAR data. No significant change was observed in the spectra of the mortar after applying SNV (Fig. B7a vs. Fig. B7b).

Fig. B8. Validation results of Model 4 for classification of mortar.

With the multispectral data, two lime mortar samples were misclassified as mortar Type S, both before and after pre-treatment (Fig. B8a vs. Fig. B8b). Incorporation of the LiDAR data corrected one of the misclassified samples (Fig. B8c). In contrast, application of SNV pre-treatment had no impact on the classification results (Fig. B8d).

B1.5. Model 5 classification results (classifying 3 concrete classes)

Fig. B9. Mean spectra of concrete.

Fig. B10. Validation results of Model 5 for concrete classification.

The concrete spectra behaved very similarly in the multispectral bands, except in the Blue and Green bands (Fig. B9a). The intensity of concrete class 50% was highest in the Green band, while in the Blue band it was almost the same as that of concrete class 80% and slightly higher than that of concrete class 65% (Fig. B9a). When the LiDAR data were added, the same trend appeared in the Trimble band (532 nm), with higher intensity values for concrete classes 50% and 80% (Fig. B9a). The application of SNV pre-treatment had no notable effect on the spectra in the multispectral bands but slightly
increased the intensity of concrete class 65% in the Leica band (658 nm) (Fig. B9b).

The initial PLS-DA model on multispectral data (before applying SNV) successfully classified all concrete class 65% samples (Fig. B10a) but only two samples from concrete class 50% and none from concrete class 80%. SNV pre-treatment doubled the correct classification rate of concrete class 50% and enabled 5 of the 6 samples from concrete class 80% to be predicted correctly but then failed with all concrete class 65% samples (Fig. B10b). When LiDAR data were added, all concrete samples were predicted as concrete class 65% (Fig. B10c). After applying SNV to the combined dataset, 5 samples from concrete class 50% and only 3 samples from concrete class 65% were classified correctly, with none from concrete class 80% (Fig. B10d). In summary, the results of the models were very mixed across the three concrete classes, with none working well across all samples. The inconsistent results from all versions of Model 5 might be related to the very similar spectra of these three concrete classes (Fig. B9) in the limited number of bands that were available.

References

[1] L.J. Sánchez-Aparicio, B. Riveiro, D. Gonzalez-Aguilera, L.F. Ramos, The combination of geomatic approaches and operational modal analysis to improve calibration of finite element models: a case of study in Saint Torcato Church (Guimarães, Portugal), Construct. Build. Mater. 70 (2014) 118–129.
[2] H. Aljumaily, D.F. Laefer, D. Cuadra, Big-Data approach for three-dimensional building extraction from aerial laser scanning, J. Comput. Civ. Eng. 30 (3) (2015), 04015049.
[3] S. Zolanvari, D.F. Laefer, Slicing method for building facade extraction from LiDAR point clouds, ISPRS J. Photogrammetry Remote Sens. 119 (2016) 334–346.
[4] M. Cabaleiro, J. Hermida, B. Riveiro, J.C. Caamaño, Automated processing of dense points clouds to automatically determine deformations in highly irregular timber structures, Construct. Build. Mater. 146 (2017) 393–402.
[5] S. Kotthaus, T.E. Smith, M.J. Wooster, C.S.B. Grimmond, Derivation of an urban materials spectral library through emittance and reflectance spectroscopy, ISPRS J. Photogrammetry Remote Sens. 94 (2014) 194–212.
[6] R. Ilehag, M. Weinmann, A. Schenk, S. Keller, B. Jutzi, S. Hinz, Revisiting existing classification approaches for building materials based on hyperspectral data, Int. Arch. Photogram. Rem. Sens. Spatial Inf. Sci. 42 (2017).
[7] D. Dizhur, M. Giaretton, I. Giongo, K.Q. Walsh, J.M. Ingham, Material property testing for the refurbishment of a historic URM building in Yangon, Myanmar, J. Build. Eng. 26 (2019) 100858.
[8] S. Chang, D. Castro-Lacouture, Y. Yamagata, Decision support for retrofitting building envelopes using multi-objective optimization under uncertainties, J. Build. Eng. (2020) 101413.
[9] I. Torres, G. Matias, P. Faria, Natural hydraulic lime mortars-the effect of ceramic residues on physical and mechanical behaviour, J. Build. Eng. (2020) 101747.
[10] D. Breysse, X. Romao, M. Alwash, Z.M. Sbartaï, V.A. Luprano, Risk evaluation on concrete strength assessment with NDT technique and conditional coring approach, J. Build. Eng. (2020) 101541.
[11] M.T. Marvila, A.R.G. Azevedo, J. Alexandre, E.B. Zanelato, N.G. Azeredo, N.T. Simonassi, S.N. Monteiro, Correlation between the properties of structural clay blocks obtained by destructive tests and ultrasonic pulse tests, J. Build. Eng. 26 (2019) 100869.
[12] E. Chuta, J. Colin, J. Jeong, The impact of the water-to-cement ratio on the surface morphology of cementitious materials, J. Build. Eng. (2020) 101716.
[13] T.H. Kurz, J.S. Buckley, J.A. Howell, Close-range hyperspectral imaging for geological field studies: workflow and methods, Int. J. Rem. Sens. 34 (5) (2013) 1798–1822.
[14] P. Hartzell, C. Glennie, K. Biber, S. Khan, Application of multispectral LiDAR to automated virtual outcrop geology, ISPRS J. Photogrammetry Remote Sens. 88 (2014) 147–155.
[15] E.W. Bork, J.G. Su, Integrating LIDAR data and multispectral imagery for enhanced classification of rangeland vegetation: a meta-analysis, Remote Sens. Environ. 111 (2007) 11–24.
[16] S. Yoga, J. Bégin, B. St-Onge, D. Gatziolis, Lidar and multispectral imagery classifications of balsam fir tree status for accurate predictions of merchantable volume, Forests 8 (7) (2017) 253.
[17] L. Palombi, D. Lognoli, V. Raimondi, G. Cecchi, J. Hällström, K. Barup, C. Conti, R. Grönlund, A. Johansson, S. Svanberg, Hyperspectral fluorescence LiDAR imaging at the Colosseum, Rome: elucidating past conservation interventions, Opt Express 16 (10) (2008) 6794–6808.
[18] V. Raimondi, G. Cecchi, D. Lognoli, L. Palombi, R. Grönlund, A. Johansson, S. Svanberg, K. Barup, J. Hällström, The fluorescence LiDAR technique for the remote sensing of photoautotrophic biodeteriogens in the outdoor cultural heritage: a decade of in situ experiments, Int. Biodeterior. Biodegrad. 63 (7) (2009) 823–835.
[19] R.P. Stumpf, K. Holderied, M. Sinclair, Determination of water depth with high-resolution satellite imagery over variable bottom types, Limnol. Oceanogr. 48 (1) (2003) 547–556.
[20] S.J. Winterbottom, D.J. Gilvear, Quantification of channel bed morphology in gravel-bed rivers using airborne multispectral imagery and aerial photography, River Res. Appl. 13 (6) (1997) 489–499.
[21] J.M. Grunicke, G. Sacher, B. Strackenbrock, Image processing for mapping damages to buildings, in: CIPA International Symposium, Cracow, Poland, 1990, pp. 257–263.
[22] J. Herráez, P. Navarro, J.L. Lerma, Integration of normal colour and colour Infrared emulsions for the identification of pathologies in architectural heritage using a digital photogrammetric system, Int. Arch. Photogram. Rem. Sens. XXXII(5C1B) (1997) 240–245.
[23] J.L. Lerma, L.Á. Ruiz, F. Buchón, Application of spectral and textural classifications to recognize materials and damages on historic building facades, Int. Arch. Photogram. Rem. Sens. XXXIII(B5) (2000) 480–484.
[24] J.L. Lerma, Multiband versus multispectral supervised classification of architectural images, Photogramm. Rec. 17 (97) (2001) 89–101.
[25] W.Y. Yan, A. Shaker, N. El-Ashmawy, Urban land cover classification using airborne LiDAR data: a review, Remote Sens. Environ. 158 (2015) 295–310.
[26] B. Höfle, M. Hollaus, J. Hagenauer, Urban vegetation detection using radiometrically calibrated small-footprint full-waveform airborne LiDAR data, ISPRS J. Photogrammetry Remote Sens. 67 (2012) 134–147.
[27] G. Tolt, M. Shimoni, J. Ahlberg, A shadow detection method for remote sensing images using VHR hyperspectral and LiDAR data, in: Proceedings of International Geoscience and Remote Sensing Symposium (IGARSS), Canada, Vancouver, 2011, pp. 4423–4426, 25-29 July.
[28] M. Idrees, H.Z.M. Shafri, V. Saeidi, Imaging spectroscopy and light detection and ranging data fusion for urban features extraction, Am. J. Appl. Sci. 10 (12) (2013) 1575–1585.
[29] G. Sithole, Detection of bricks in a masonry wall, Int. Arch. Photogram. Rem. Sens. Spatial Inf. Sci. XXXVII(B5) (2008) 567–571.
[30] M. Hemmleb, F. Weritz, A. Schiemenz, A. Grote, C. Maierhofer, Multispectral data acquisition and processing techniques for damage detection on building surfaces, Int. Arch. Photogram. Rem. Sens. Spatial Inf. Sci. 36 (B5) (2006) 6.
[31] S. Morsy, A. Shaker, A. El-Rabbany, Multispectral LiDAR data for land cover classification of urban areas, Sensors 17 (5) (2017) 958.
[32] L. Yuan, J. Guo, Q. Wang, Automatic classification of common building materials from 3D terrestrial laser scan data, Autom. Construct. 110 (2020) 103017.
[33] Z. Zahiri, D.F. Laefer, A. Gowen, The feasibility of short-wave infrared spectrometry in assessing water-to-cement ratio and density of hardened concrete, Construct. Build. Mater. 185 (2018) 661–669.
[34] D.F. Laefer, Z. Zohreh, A. Gowen, Using short-wave infrared range spectrometry data to determine brick characteristics, Int. J. Architect. Herit. (2018), https://doi.org/10.1080/15583058.2018.1503362.
[35] Z. Zahiri, D.F. Laefer, A. Gowen, Classification of Hardened Cement and Lime Mortar Using Short-Wave Infrared Spectrometry Data. Structural Analysis of Historical Constructions, Springer, Cham, 2019, pp. 437–446.
[36] W. Liao, A. Pizurica, P. Scheunders, W. Philips, Y. Pi, Semi supervised local discriminant analysis for feature extraction in hyperspectral images, IEEE Trans. Geosci. Rem. Sens. 51 (1) (2013) 184–198.
[37] D.L. Donoho, High-dimensional data analysis: the curses and blessing of dimensionality, in: AMS Math. Challenges 21st Century, 2000.
[38] S. Chen, D. Laefer, E. Mangina, State of technology review of civilian UAVs, Engineering Patent Journal, Bentham 10 (3) (2016) 160–174.
[39] ASTM C192-90a, Standard Practice for Making and Curing Concrete Test Specimens in the Laboratory, American Society Testing & Materials, USA, 1990.
[40] ASTM C270-14a, Standard Specification for Mortar for Unit Masonry, ASTM International, West Conshohocken, PA, 2014. www.astm.org.
[41] C. Esquerre, A.A. Gowen, J. Burger, G. Downey, C.P. O’Donnell, Suppressing sample morphology effects in near infrared spectral imaging using chemometric data pre-treatments, Chemometr. Intell. Lab. Syst. 117 (2012) 129–137.
[42] P. Geladi, B.R. Kowalski, Partial least-squares regression: a tutorial, Anal. Chim. Acta 185 (1986) 1–17.
[43] K.A. Hartfield, K.I. Landau, W.J.D. Van Leeuwen, Fusion of high resolution aerial Multispectral and LiDAR data: land cover in the context of urban mosquito habitat, Rem. Sens. 3 (2011) 2364–2383.
[44] V. Sowmya, K.P. Soman, M. Hassaballah, Hyperspectral image: fundamentals and advances, in: Recent Advances in Computer Vision, Springer, Cham, 2019, pp. 401–424.