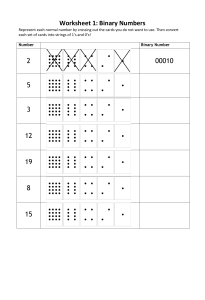

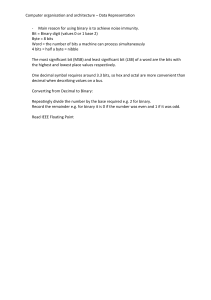

COMPUTER ARCHITECTURE Unit Code: CCS2224 Prerequisites: CCS2113 Computer Systems Organisation Lecturer: Mr Henry K. ___________________________________________________________________ Class 1 Computer organisation (preview) Computer organization is the physical and logical structure of a computer system and how its various components work together to perform tasks. It entails topics such as computer architecture, memory organization, input/output (I/O) systems, and processor design. In computer organization, the focus is on understanding how computers work at the hardware level, including the design of the central processing unit (CPU), the memory system, and the various peripherals. This knowledge is essential for computer hardware and software designers, as well as computer engineers and technicians, who need to understand how computer systems operate and are constructed. History of Computers (This is just additional information on the side) Computers can be traced back to ancient times with simple tools such as the abacus. In the 20th century, electronic computers emerged with the Atanasoff-Berry Computer (ABC) in 1937 and the Z3 in 1941. The ENIAC, the first general-purpose electronic computer, was built in 1944. In the 1970s, the development of microprocessors led to the creation of personal computers. In 1981, IBM introduced the first PC. The internet took off in the 1990s and has since led to the development of new technologies such as mobile computing and artificial intelligence. Today, computers play a crucial role in many aspects of our lives. Types of computers Computers come in a variety of forms and sizes, each designed to meet specific needs and perform specific tasks. There are computers designed for personal use, large organizations, and everything in between. The choice of which type of computer to use depends on the user's needs and requirements, such as size, portability, and performance. Whether it's a small tablet for personal use or a powerful workstation for scientific simulations, there is a type of computer designed to meet the needs of every user. Here are some of the most common types of computers: √ Personal Computers (PCs): A personal computer is designed for individual use. PCs are used for general-purpose tasks such as word processing, web browsing, and gaming. There are two main types of PCs: desktops and laptops. √ Mainframes: Mainframes are large, powerful computers used by large organizations for mission-critical applications, such as banking, airline reservation systems, and government systems. Mainframes are highly reliable and can handle a large number of users and transactions simultaneously. √ Servers: A server is a computer that provides shared resources and services to other computers on a network. Servers are used for web hosting, file sharing, and email. √ Workstations: A workstation is a high-performance computer designed for technical or scientific applications. Workstations are commonly used for 3D animation, CAD/CAM, and scientific simulations. √ Tablets: A tablet is a portable computer that is similar in functionality to a laptop but is designed to be more compact and portable. Tablets typically use a touch-screen interface and are popular for reading ebooks, browsing the web, and playing games. √ Smartphones: These are mobile phones that include advanced computing capabilities. Smartphones are used for making phone calls, sending text messages, and browsing the web. Characteristics of Modern Computers Modern computers have several key characteristics that differentiate them from early computers and allow them to perform a wide range of tasks efficiently and effectively. Some of the most important characteristics of modern computers include √ Speed: Modern computers can perform millions of calculations per second, allowing them to quickly and efficiently process large amounts of data. √ Storage capacity: Modern computers have large storage capacities, allowing users to store vast amounts of data, including documents, music, videos, and photos. √ Connectivity: Modern computers are connected to networks, allowing them to communicate and share data with other computers and devices. √ Portability: Many modern computers are designed to be portable, allowing users to take them with them wherever they go. This makes it easier to work, stay connected, and access information on the go. √ Multitasking: Modern computers are capable of performing multiple tasks simultaneously, allowing users to work on multiple projects at the same time. √ User-friendly interfaces: Modern computers typically use graphical user interfaces (GUIs), which make it easier for users to interact with and use computers. √ High performance: Modern computers are capable of running demanding applications, such as video editing software and 3D games, allowing users to perform complex tasks with ease. √ Increased security: Modern computers are equipped with various security features to protect against threats such as viruses, malware, and hacking. Class 2 Number Systems A number system is a method of representing numbers in a specific base, also known as radix. There are several number systems, but some of the most common are: √ Binary Number System: The binary number system uses only two digits, 0 and 1, to represent numbers. This system is used by computers because it is easy to implement with the use of electrical switches. √ Decimal Number System: The decimal number system, also known as the base-10 system, is the most commonly used system in everyday life. It uses ten digits, 0 to 9, to represent numbers. √ Octal Number System: The octal number system uses eight digits, 0 to 7, to represent numbers. It is used in some computer applications and can simplify certain calculations by reducing the number of digits needed to represent a number. √ Hexadecimal Number System: The hexadecimal number system uses sixteen digits, 0 to 9 and A to F, to represent numbers. It is widely used in computer programming as a shorthand representation of binary numbers.In general, the number of digits used in a number system determines its base. The value of a digit in a number system is determined by its position in the number and the base of the system. For example, in the decimal number system, the digit in the one's place has a value of 1, the digit in the tens place has a value of 10, and so on. It is also important to understand the concept of positional notation, which is the basis for all modern number systems. The positional notation means that the value of a digit in a number depends on its position in the number, not just its face value. This allows for efficient representation of large numbers with a small number of digits. Signed vs Unsigned Binary Numbers In computing, the concept of signed and unsigned binary numbers is an important one as it relates to how numbers are stored and processed in computer memory. The difference between these two types of binary numbers lies in their representation of positive and negative values, with signed binary numbers able to represent both positive and negative values while unsigned binary numbers can only represent positive values. The distinction between these two types of binary numbers has implications for how numbers are represented and used in various computer applications, including arithmetic operations, data storage, and communication between different systems. In arithmetic operations, using the correct representation of signed or unsigned numbers can lead to accurate results, while using the wrong representation can result in overflow or underflow errors. Underflow & Overflow errors? An overflow error occurs when the result of an arithmetic operation is too large to be represented within the specified number of bits in computer memory. For example, if an 8-bit signed binary number is used to represent values between -128 and 127, and an operation produces a result of 128, it would result in an overflow error. The result would not be accurate, and the error could cause further issues in the system. An underflow error, on the other hand, occurs when the result of an operation is too small to be represented by the specified number of bits. For example, if a floating-point number is used to represent small decimal values, and an operation produces a result that is too small to be represented by the floating-point representation, an underflow error would occur. In data storage, the choice of signed or unsigned representation affects the range of values that can be stored and the accuracy of the stored values. For example, if a large positive number is stored as an unsigned binary number, it can be represented accurately, but if the same number is stored as a signed binary number, the most significant bit would be interpreted as a sign bit, leading to incorrect results. In communication between different systems, it is important to agree on a common representation of signed and unsigned numbers to ensure that data is transmitted and received accurately. How is this achieved? To represent binary code as a signed number, a sign bit is used to indicate whether the number is positive or negative. This allows the same binary representation to be used for both positive and negative numbers, rather than using separate representations for positive and negative numbers. Typically, the left-most bit in the binary representation is chosen as the sign bit, with a value of 0 indicating a positive number and a value of 1 indicating a negative number. The remaining bits are used to represent the magnitude of the number(sign magnitude). For example, if an 8-bit signed binary number is used to represent values between -128 and 127, the left-most bit would be the sign bit, and the remaining 7 bits would be used to represent the magnitude of the number. Using a sign bit to represent signed binary numbers has several advantages: √ It allows the same binary representation to be used for both positive and negative numbers, making it easier to compare and perform arithmetic operations on signed binary numbers. √ It allows negative numbers to be represented using the same binary representation as positive numbers, reducing the complexity of computer algorithms and making it easier to write code that handles signed binary numbers. It is important to note that when performing arithmetic operations on signed binary numbers, special care must be taken to ensure that overflow and underflow errors do not occur, as these can lead to incorrect results. How are such kind of errors mitigated? Underflow and overflow errors in computer systems can be mitigated through a variety of methods, depending on the nature of the problem and the requirements of the application. Some common methods of mitigating these errors include: 1. Increasing the precision or range of the numerical representation This can be done by using a larger number of bits to represent numbers, or by using a different numerical representation that provides a wider range of values or higher precision. For example, using double-precision floating-point numbers instead of single- precision floating-point numbers can reduce the likelihood of underflow errors. 2. Scaling the input data - Scaling the input data can help prevent overflow errors by reducing the magnitude of the numbers involved in a calculation. For example, in a financial application, dividing all values by a factor of 1000 can prevent overflow errors. 3. Using error detection or error correction codes - Error detection or error correction codes can be used to detect and correct underflow or overflow errors. For example, in a digital communication system, a parity bit can be added to detect and correct errors in the transmitted data. 4. Implementing software safeguards - The software can be designed to detect and handle underflow or overflow errors. For example, a software routine can be used to check the result of a calculation for signs of underflow or overflow and to take appropriate action, such as rounding the result or reporting an error. 5. Using hardware features - Hardware features can be used to mitigate underflow or overflow errors. For example, hardware floating-point units (FPUs) can be used to perform floating-point calculations, which are more accurate and efficient than softwarebased calculations. Sign Magnitude Sign magnitude is a method of representing signed numbers in binary format. In this representation, the left-most bit is used to indicate the sign of the number, with a value of 0 indicating a positive number and a value of 1 indicating a negative number. The remaining bits are used to represent the magnitude of the number, with no additional processing required to determine the sign of the number. Sign magnitude is one of the simplest methods of representing signed numbers in binary format, but it has several disadvantages: √ The same binary representation can be used to represent two different numbers (e.g., both +5 and -5 can be represented as 0101), making it difficult to perform arithmetic operations on signed numbers using this representation. √ The representation is not symmetrical, as the magnitude of negative numbers is represented differently from the magnitude of positive numbers. What does this mean? In the sign-magnitude representation of signed numbers, the leftmost bit is used to indicate the sign of the number (0 for positive and 1 for negative), while the remaining bits represent the magnitude. This representation is not symmetrical as positive and negative numbers are represented differently, leading to difficulties when performing arithmetic operations such as adding a positive and a negative number - the result may be either positive or negative, depending on the specific binary representation of the numbers involved. Despite these disadvantages, sign magnitude is still used in some computer systems, particularly in older systems or in specialised applications where its simplicity outweighs the disadvantages of this representation. However, most modern computer systems use more sophisticated methods of representing signed numbers, such as two's complement representation or IEEE 754 floating-point representation, which provide more precise and efficient ways of representing and processing signed numbers. Two’s Complement Two's complement is a binary representation of signed numbers used in many computer systems. In two's complement, the leftmost bit represents the sign of the number (0 for positive and 1 for negative), and the remaining bits represent the magnitude. The magnitude of a negative number is calculated by taking the complement (flipping the bits) of its absolute value and adding 1. This representation provides a more symmetrical representation of positive and negative numbers compared to sign-magnitude representation and makes it easier to perform arithmetic operations on signed numbers. How can we find the two’s complements of a binary number? The decimal number 5 can be represented as 00000101 in 8-bit two's complement. The decimal number -5 can be represented as 11111011 in 8-bit two's complement. To get this representation, first, we take the complement of 5 (by flipping the bits 00000101 to 11111010), then add 1 to get 11111011. The two's complement representation has several benefits over signmagnitude representation: 1. Symmetry: In two's complement representation, both positive and negative numbers have the same number of representations, making it a symmetrical representation. This makes it easier to perform arithmetic operations such as addition and subtraction. 2. Ease of Comparison: comparisons between In two's positive complement and negative representation, numbers are straightforward, as the most significant bit is used to determine the sign. 3. Avoids ambiguous representation of zero: In sign-magnitude representation, there is an ambiguous representation of zero, as both +0 and -0 are represented by all bits being 0. This ambiguity is avoided in the two's complement representation, as only one representation of zero is used. 4. Efficient Hardware Implementation: Two's complement representation is more hardware-friendly, as hardware circuits can be used to perform arithmetic operations such as addition and subtraction with only minor modifications. Storage Definitions What is a bit? A bit (short for "binary digit") is the smallest unit of data in a computer, representing either a 0 or 1. These binary values are used to represent all the information processed by a computer, including instructions, text, images, and audio. What is a nIbble? A nibble is a unit of data in computing, consisting of 4 bits. A nibble is half of a byte, which typically consists of 8 bits. In some computer architectures and data transmission systems, nibbles are used to represent or process data, allowing for efficient processing and storage of information. For example, in certain memory systems, nibbles may be used to store the least significant or most significant bits of data, while in others they may be used to represent hexadecimal (base-16) numbers, with each nibble representing one hexadecimal digit. What is a word? A word is a unit of data in computing, typically consisting of a fixed number of bits, such as 16, 32, or 64 bits. A word is the basic building block for data storage and processing in computer systems. The size of a word can depend on the architecture of the system, and the size of a word determines the range of values that can be represented and processed. For example, a 16-bit word can represent a number from 0 to 65535, while a 32-bit word can represent a number from 0 to 4294967295. In general, larger words provide a wider range of representable values, but also require more memory to store and process. What is a qword? A qword is a unit of data in computing, which stands for "quadword." A qword is typically defined as 64 bits and is used to store and process large data values. The term is most commonly used in x86 architecture, where a qword is used to store a 64-bit value in a register or memory location. Qwords are often used in high-performance computing applications, where a large range of values is required and the processing of large data values is optimized. In addition, qwords are commonly used in cryptography and encryption, where large numbers are used in complex mathematical operations. What is a block? A block is a unit of data in computing, typically consisting of a fixed number of bytes or bits. A block can be used for various purposes, including data storage, data transmission, and data processing. In data storage systems, blocks are used to organize data into units that can be efficiently read from or written to storage devices such as hard drives or flash memory. In data transmission systems, blocks are used to transmit data over networks, often with error correction and compression techniques applied to ensure the integrity and efficiency of data transfer. In data processing, blocks can be used to divide data into smaller, manageable units for processing and analysis. The size of a block can vary, depending on the specific use case and system architecture. Decoding the Differences: Fixed-Point vs. Floating-Point Number Representation and Arithmetic Fixed-point and floating-point number representation are two methods used to represent real numbers in a computer system. The fixed-point representation uses a fixed number of bits to represent the fractional part of a number and another fixed number of bits to represent the whole part of a number. This type of representation is useful for applications that require a fixed number of decimal places and do not require a wide range of magnitudes. For example, if the fixed-point system uses 8 bits with 4 bits for the integer part and 4 bits for the fractional part, the fixed-point representation of 4.375 would be 100.1110, where 100 represents 4, 0.1110 represents 0.375, and the fractional part is rounded to the nearest value that can be represented using 4 bits. Note that the fixed-point representation of a number can vary depending on the number of bits used for the integer and fractional parts, so the representation of 4.375 in a different fixed-point system with different bit allocations might be different. Floating-point representation, on the other hand, uses a fixed number of bits to represent the exponent and another fixed number of bits to represent the mantissa. This type of representation is useful for applications that require a wide range of magnitudes and require a high level of precision. Binary floating-point numbers are represented in a standardized format, that defines how the sign, exponent, and mantissa parts of the number are stored in a computer's memory. A binary floating-point number has three parts: √ Sign: A single bit that indicates whether the number is positive or negative. √ Exponent: A field that represents the magnitude of the number. The exponent is stored as a binary number and is used to determine the power of 2 by which the mantissa should be multiplied. √ Mantissa: A fractional binary number that represents the precision of the number. The mantissa is stored as a normalization of the binary fraction between 1 and less than 2. Before diving into an example, there are two concepts we need to know: √ Normalization √ Biasing In the above picture, you can see that changing the decimal point brings about the exponent factor and its value will be equal to the number of places moved (-ve value when moved to the right & +ve value when moved to the left). However, there will be many representations of the same binary number depending on where the decimal place (radix) is placed, This brings about the need for normalisation There are two types of normalization: √ Implicit normalization - This involves moving the decimal point to the right-hand side of the most significant ‘1’. √ Explicit normalization - This involves moving the decimal point to the left-hand side of the most significant ‘1’. Normalization ensures that the most significant bit of the mantissa is always non-zero, to provide a unique representation for each number and prevent loss of precision. Biasing Refers to the process of adjusting the exponent field in a floating-point representation so that it represents the exponent value with respect to a fixed value, called the bias. This allows the representation to be more compact and efficient. For example, in a floating-point number representation, the actual exponent value is usually stored as an unsigned binary number. To allow negative exponent values, the actual exponent value is offset by a bias value, which is a fixed constant. The sum of the actual exponent value and the bias value is stored in the exponent field of the floating-point representation. The choice of the bias value affects the range and precision of the floating-point representation and is determined by the design of the computer system. In most cases, the bias value is chosen so that the exponent field can represent both positive and negative exponent values with maximum precision and efficiency. Example: For example, if a number is represented as 0.0101 in binary, it can be normalized to 0.101 x 2^-1 (explicit normalization) and if another is represented as 101.101 in binary, it can be implicitly normalized to 1.01101 x 2^2. Normalization ensures that numbers are stored and processed most efficiently, minimizing the risk of loss of precision or rounding errors. From the above results (0.101 x 2^-1 & 1.01101 x 2^2), the exponents are -1 and 2. Biasing is used to represent the exponent values in memory space as shown below, either 0 or 1 is used as the sign bit and the rest of the remaining memory space is used to store the mantissa Additionally, normalization allows for a consistent representation of numbers across different systems and can simplify arithmetic operations such as addition and multiplication. For example, the floating-point representation of the decimal number 4.375 would be stored as a 32-bit binary number with the first bit representing the sign (0 for positive), the next 8 bits representing the exponent with a bias of 127, and the remaining 23 bits representing the mantissa. The binary representation would look something like this: 0 10000001 100000000000000000001. Arithmetic operations on fixed-point numbers are straightforward and can be performed efficiently by the computer. However, precision can be lost during arithmetic operations if the numbers being operated on are too large or too small. Arithmetic operations on floating-point numbers can be more complex and may require special hardware or software to perform efficiently. However, floating-point numbers can represent a wider range of numbers with a high level of precision, making them ideal for scientific, engineering, and financial applications that require a high degree of accuracy. Next Classes: (Classes 3 - 4) Topics to cover include: √ Computer Block Diagram √ ALU, Register, Control Unit and Buses √ Von Neumann Architecture √ Parallel Architecture √ Microprocessor Pin Diagram √ 8086, 8088, 80x86, Pentium, Itanium