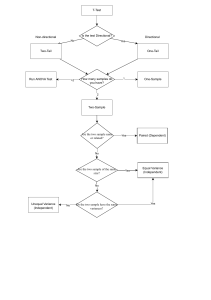

Setting the scene: what is ANOVA?

ANOVA is an acronym for Analysis of Variance. It covers a series of tests that explore differences between groups or across conditions. The type of ANOVA employed depends on a number of factors: how many independent variables there are; whether those independent variables are explored between- or within-groups; and the number of dependent variables being examined.

ANOVA explores the amount of variance that can be ‘explained’. For example, we could give a questionnaire to a group of people. Responses to the questions can be allocated scores according to how they have been answered. Because people are different, it is quite likely that the response scores will differ between them. To examine that, we find the overall (grand) mean score and see how much the scores vary either side of that. The amount that the scores vary is called the variance. When using ANOVA tests, we can partition that variance into separate pots. We call those pots the ‘sum of squares’. The overall variance is found in the ‘total sum of squares’: this illustrates how much the scores have varied overall in relation to that grand mean. Sometimes the scores will vary a lot, other times they will vary a little, and occasionally the scores will not vary at all.

When the scores vary, we need to investigate the cause of the variation. The scores may have varied because of some fundamental differences between the people answering the questions. For example, income may vary across a sample of people according to the level of education. If those differences account for most of the variation in the scores, we could say that we have ‘explained’ the variance. At the other extreme, the scores may have varied simply due to random or chance factors: this is the variance that we cannot explain. ANOVA tests seek to explore how much variance can be explained; this is found in the ‘model sum of squares’.
Any variance that cannot be explained is found in the ‘residual sum of squares’ (or error). We express the sum of squares in relation to the relevant degrees of freedom (we will see how later). This produces the model mean square and residual mean square. If we divide the model mean square by the residual mean square, we are left with something called an ‘F ratio’: this expresses how large the explained variance is in relation to the unexplained variance. The higher the F ratio, the more likely it will be that there is a statistically significant difference in mean scores between groups (or conditions for within-group ANOVAs).

What is independent one-way ANOVA?

An independent one-way ANOVA explores differences in mean scores from a single (parametric) dependent variable (usually) across three or more distinct groups of a categorical independent variable (the test can be used with two groups, but such analyses are usually undertaken with an independent t-test). We explored the requirements for determining whether data are parametric in Chapter 5, but we will revisit this a little later in this chapter. As we saw just now, the variance in dependent variable scores is examined according to how much of that can be explained (by the groups) in relation to how much cannot be explained (the error variance). The main outcome is illustrated by the ‘omnibus’ (overall) ANOVA test, but this will indicate only whether there is a difference in mean scores across the groups.

Research question for independent one-way ANOVA

We can illustrate independent one-way ANOVA by way of a research question. A group of higher education researchers, FUSS (Fellowship of University Student Surveys), would like to know whether contact time varies between university courses. To explore this they collect data from several universities and investigate how many hours are spent in lectures, according to three courses (law, psychology and media).
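To make the partitioning described above more concrete, here is a minimal Python sketch of how the total, model and residual sums of squares lead to the F ratio. The lecture-hour values are invented purely for illustration (they are not the data from Box 9.3), and the function name `one_way_anova` is a hypothetical helper rather than anything provided by SPSS.

```python
from statistics import mean

# Hypothetical lecture hours, ten students per course.
# These values are made up for illustration; they are NOT the Box 9.3 data.
hours = {
    "law":        [14, 11, 15, 12, 13, 16, 12, 14, 13, 10],
    "psychology": [17, 15, 18, 16, 19, 15, 17, 18, 16, 19],
    "media":      [11, 9, 12, 10, 13, 8, 11, 10, 12, 9],
}


def one_way_anova(groups):
    """Partition the variance for an independent one-way ANOVA."""
    all_scores = [x for scores in groups.values() for x in scores]
    grand_mean = mean(all_scores)
    group_means = {name: mean(scores) for name, scores in groups.items()}

    # Total sum of squares: every score compared with the grand mean.
    ss_total = sum((x - grand_mean) ** 2 for x in all_scores)

    # Model sum of squares: each group mean compared with the grand mean,
    # weighted by group size (the 'explained' variance).
    ss_model = sum(
        len(scores) * (group_means[name] - grand_mean) ** 2
        for name, scores in groups.items()
    )

    # Residual sum of squares: every score compared with its own group mean
    # (the 'unexplained' or error variance).
    ss_resid = sum(
        (x - group_means[name]) ** 2
        for name, scores in groups.items()
        for x in scores
    )

    # Degrees of freedom: groups - 1 for the model, scores - groups for the error.
    df_model = len(groups) - 1
    df_resid = len(all_scores) - len(groups)

    # Mean squares are sums of squares divided by their degrees of freedom.
    ms_model = ss_model / df_model
    ms_resid = ss_resid / df_resid

    return {
        "ss_total": ss_total,
        "ss_model": ss_model,
        "ss_resid": ss_resid,
        "df_model": df_model,
        "df_resid": df_resid,
        "f_ratio": ms_model / ms_resid,
    }


result = one_way_anova(hours)
print(result)
```

With three groups of ten, the degrees of freedom come out as 2 and 27, and the model and residual sums of squares add up to the total sum of squares. That additivity is a useful check on any manual calculation.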
FUSS expect that there is a difference but they have not predicted which group will spend more time attending lectures. In this example, the explained variance will be illustrated by how mean lecture hours vary across the student groups, in relation to the grand mean. For the record, all outcomes in this chapter are based on entirely fictitious data!

Theory and rationale

Identifying differences

Essentially, all of the ANOVA methods share the same approach: they assess explained (systematic) variance in relation to unexplained (unsystematic) variance (or ‘error’). With an independent one-way ANOVA, the explained variance is calculated from the group means in comparison to the grand mean (the overall average dependent variable score from the entire sample, regardless of group). The unexplained (error) variance is similar to the standard error that we encountered in Chapter 4. In our example, if there is a significant difference in the number of hours spent in lectures between the university groups, the explained variance must be sufficiently larger than the unexplained variance. We examine this by partitioning the variance into the model sum of squares and residual sum of squares. We can see how this is calculated in Box 9.3. It is strongly recommended that you try to work through this manual example as it will help you understand how variance is partitioned and how this relates to the statistical outcome in SPSS.

Post hoc tests

If no specific prediction has been made about differences between the groups, post hoc tests must be used to determine the source of difference. We can also choose to use post hoc tests in preference to non-orthogonal planned contrasts. However, there must be a significant ANOVA outcome in order for post hoc tests to be employed. If we try to run these tests on a nonsignificant ANOVA outcome it might be regarded as ‘fishing’. Also, we run post hoc tests only if there are three or more groups.
If there are two groups we can use the mean scores to indicate the source of difference. Post hoc tests explore each pair of groups to assess whether there is a significant difference between them (such as Group 1 vs. 2, Group 2 vs. 3 and Group 1 vs. 3). Most post hoc tests account for multiple comparisons automatically (so long as the appropriate type of test has been selected – see later).

How SPSS performs independent one-way ANOVA

We can perform an independent one-way ANOVA in SPSS. To illustrate, we will use the same data that we examined manually earlier. We are investigating whether there is a significant difference in the number of lecture hours attended according to student groups (law, psychology and media). There are ten students in each group. This means that we will not need to consult Brown–Forsythe F or Welch's F outcomes (see assumptions and restrictions). However, because we do not yet know whether we have homogeneity of variance between the groups, we still need to allow for that when choosing the post hoc test (so we should request the outcome for Games–Howell in addition to Tukey – see Box 9.8). We can be confident that a count of lecture hours attended represents interval data, so that parametric criterion is met. However, we do not know whether the data are normally distributed; we will look at that shortly.

Testing for normal distribution

To examine normal distribution, we start by running the Kolmogorov–Smirnov/Shapiro–Wilk tests (we saw how to do this for between-group studies in Chapter 7). If the outcome indicates that the data may not be normally distributed, we could additionally run z-score analyses of skew and kurtosis, or look to ‘transform’ the scores (see Chapter 3). We will not repeat the instruction for performing Kolmogorov–Smirnov/Shapiro–Wilk tests in SPSS (but refer to Chapter 3 for guidance). However, we should look at the outcome from those analyses (see Figure 9.3).
Because we have a sample size of ten (for each group), we should refer to the Shapiro–Wilk outcome. Figure 9.3 indicates that lecture hours appear to be normally distributed for all courses: Law, W (10) = .976, p = .943; Psychology, W (10) = .893, p = .184; and Media, W (10) = .976, p = .937. You will recall that KS and SW tests investigate whether the data are significantly different to a normal distribution, so we need the significance (‘Sig.’) to be greater than .05.

Running independent one-way ANOVA in SPSS

Now we can perform the main analysis. We explored the various post hoc options earlier. We know that we have equal group sizes (ten in each group), so we are pretty safe in selecting Tukey. However, we do not yet know whether we have equality of variances, so we should also select Games–Howell just in case.

Interpretation of output

Figure 9.8 shows that lecture hours appear to be higher for psychology than for the other two courses, and appear to be higher for law than media. However, we cannot make any inferences about that until we have assessed whether these differences are significant.

We need to check homogeneity of (between-group) variances. Figure 9.9 indicates that significance is greater than .05. Since the Levene statistic assesses whether variances are significantly different between the groups, this nonsignificant outcome suggests that we can assume the variances are equal.

Figure 9.10 indicates that there is a significant difference in mean lecture hours across the courses. We report this as follows: F (2, 27) = 12.687, p < .001. The between-groups line in SPSS is equivalent to the model sum of squares we calculated in Box 9.3. The within-groups line equates to the residual sum of squares (or error).

There are conventions for reporting statistical information in published reports, such as those suggested by the American Psychological Association (APA). The British Psychological Society also dictates that we should adhere to those conventions.
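The reported outcome, F (2, 27) = 12.687, can be checked by hand. The sketch below relies on a standard property of the F distribution rather than anything specific to SPSS: when the numerator degrees of freedom equal 2 (that is, when there are three groups), the upper-tail probability has the closed form (1 + 2F/df)^(−df/2), where df is the residual degrees of freedom. The function names are hypothetical, and the shortcut does not apply to designs with a different number of groups.

```python
def p_value_f_two_numerator_df(f_ratio, df_resid):
    """Upper-tail probability for F(2, df_resid).

    Valid ONLY when the numerator (between-groups) df is 2,
    i.e. for a one-way ANOVA with exactly three groups.
    """
    return (1 + 2 * f_ratio / df_resid) ** (-df_resid / 2)


def format_p(p):
    """Format p without a leading zero; very small values become 'p < .001'."""
    if p < 0.001:
        return "p < .001"
    return "p = " + f"{p:.3f}".lstrip("0")


# F ratio and degrees of freedom as reported from Figure 9.10.
f_ratio, df_model, df_resid = 12.687, 2, 27

p = p_value_f_two_numerator_df(f_ratio, df_resid)
report = f"F ({df_model}, {df_resid}) = {f_ratio:.3f}, {format_p(p)}"
print(p)
print(report)
```

The probability comes out at about .0001, which is smaller than .001 but not 0. This is why an SPSS ‘Sig.’ of .000 is written as p < .001.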
When reporting ANOVA outcomes, we state the degrees of freedom (df), which we present (in brackets) immediately after ‘F’. The first figure is the between groups (numerator) df; the second figure is the within groups (denominator) df. The ‘Sig’ column represents the significance (p). It is generally accepted that we should report the actual p value (e.g. p = .002, rather than p < .05). When a number cannot be greater than 1 (as is the case with probability values) we drop the ‘leading’ 0 (so we write ‘p = .002’, rather than ‘p = 0.002’). The only exception is when the significance is so small that SPSS reports it as .000. In this case we report the outcome as p < .001. We cannot say that p = 0, because it almost certainly is not (when we explored the ANOVA outcome manually earlier, we saw that p = .0001; this is less than .001, but it is not 0).

Welch/Brown–Forsythe adjustments to ANOVA outcome

If we find that homogeneity of variance has been violated, we should refer to the Welch/Brown–Forsythe statistics to make sure that the outcome has not been compromised. The tests adjust the degrees of freedom (df) and/or F ratio outcomes (shown under ‘Statistic’ in Figure 9.11). We do not need to consult this outcome, as we did satisfy the assumption of homogeneity of variance. However, it is useful to see what the output would look like if you did need it.

Post hoc outcome

As we stated earlier, if we have three or more groups (as we do), the ANOVA outcome tells us only that we have a difference; it does not indicate where the differences are. We need to refer to post hoc tests to help us with that.

Figure 9.12 shows two post hoc tests: one for Tukey and one for Games–Howell. We selected both because, at the time, we were not certain whether we had homogeneity of variances. Since we do, we can refer to the Tukey outcome. The first column confirms the post hoc test name. The second column is split into three blocks: Law, Psychology and Media (our groups).
The third column shows how each group compares to the two remaining groups (in respect of lecture hours). For example, Law vs. Psychology (row 1) and Law vs. Media (row 2). The fourth column shows the difference in the mean lecture hours between that pair of course groups. To assess the source of difference we need to find between-pair differences that are significant (as indicated by cases where ‘Sig.’ is less than .05).

Presenting data graphically

We can also draw a graph for this result. However, never just repeat data in a graph that has already been shown in tables. The illustration is shown in Figure 9.16, in case you ever need to produce the graph. We can use SPSS to ‘drag and drop’ variables into a graphic display with between-group studies (it is not so straightforward for within-group studies).
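Returning to the layout of the post hoc table described earlier, the following sketch shows how the pairwise rows and the mean-difference column can be built: each group is compared in turn with the two remaining groups. The lecture-hour values are again invented for illustration (they are not the chapter's data). The sketch computes only the mean differences; it does not attempt the significance values that Tukey or Games–Howell would provide.

```python
from itertools import permutations
from statistics import mean

# Hypothetical lecture hours, ten students per course (made-up values).
hours = {
    "law":        [14, 11, 15, 12, 13, 16, 12, 14, 13, 10],
    "psychology": [17, 15, 18, 16, 19, 15, 17, 18, 16, 19],
    "media":      [11, 9, 12, 10, 13, 8, 11, 10, 12, 9],
}

group_means = {name: mean(scores) for name, scores in hours.items()}

# One row per ordered pair: (group, comparison group, mean difference).
# Ordered pairs mirror the table layout, where each group has its own block.
rows = [
    (group, other, group_means[group] - group_means[other])
    for group, other in permutations(group_means, 2)
]

for group, other, diff in rows:
    print(f"{group:<12} vs. {other:<12} mean difference = {diff:+.2f}")
```

Each pair appears twice with opposite signs (for example, law vs. psychology and psychology vs. law), so three groups give six rows but only three distinct comparisons.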