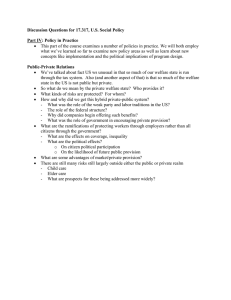

UNIVERSITY OF CALIFORNIA
Santa Barbara

A Psychological Calculus for Welfare Tradeoffs

A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Psychology

by

Andrew Wyatt Delton

Committee in charge:
Professor Leda Cosmides, Chair
Professor John Tooby
Professor Daphne B. Bugental
Professor Tamsin C. German
Professor Nancy L. Collins

September 2010

UMI Number: 3427833
Copyright 2010 by ProQuest LLC. All rights reserved.

The dissertation of Andrew Wyatt Delton is approved.

John Tooby
Daphne B. Bugental
Tamsin C. German
Nancy L. Collins
Leda Cosmides, Committee Chair

August 2010

A Psychological Calculus for Welfare Tradeoffs
Copyright © 2010 by Andrew Wyatt Delton

Acknowledgements

I dedicate this to my parents, Jim and Sharon Delton, for their years of love and support. Whether they know it or not, this is all their fault.

I would like to acknowledge my collaborators on much of this research, Daniel Sznycer and Julian Lim, whose contributions greatly improved the research and whose jokes kept me greatly entertained. Thanks also go to the members of the Center for Evolutionary Psychology for their valuable feedback throughout the years.
Daphne Bugental, Tamsin German, and Nancy Collins all graciously agreed to be committee members and, perhaps more importantly, generously shared their time and expertise during my entire graduate career. I would especially like to thank Daphne for her wonderful grant-writing advice that made the impossible possible.

Scott Grafton was instrumental in helping us to design the pilot fMRI experiment presented in the appendices. Many thanks to him for his collaboration on this project.

It’s difficult to express my appreciation for my advisors, Leda Cosmides and John Tooby, particularly given the circumstances under which this research was conducted. Despite numerous acts of god that threatened their health, home, and sanity, they remained insightful, supportive, and somehow—through a supreme act of biblical defiance—good-humored. Most people know their scientific heroes through works of history. I was lucky enough to be trained by mine.

Finally, I would like to thank my lifetime collaborator and the love of my life, Tess Robertson, for making happiness all the rage.

Vita of Andrew Wyatt Delton
August 2010

Research Interests

Evolutionary psychological approaches to cooperation and altruism, evolutionary game theory, social neuroscience, and neuroimaging. Supervised by Professors Leda Cosmides and John Tooby.

Education

BS in Psychology (2003), honors college graduate, with highest distinction (Summa Cum Laude), Arizona State University. Thesis: Memory for faces and evolved motivational states. Thesis Advisor: Dr. Douglas Kenrick

MA in Psychology (2006), University of California, Santa Barbara. Thesis: What counts as free riding? Using intentions—but not contribution level—to identify free riders. Thesis Advisor: Dr.
Leda Cosmides

PhD in Evolutionary and Developmental Psychology (2010), with an emphasis in Quantitative Methods for the Social Sciences, an emphasis in Cognitive Sciences, and an emphasis in Interdisciplinary Human Development, University of California, Santa Barbara. Dissertation Advisors: Dr. Leda Cosmides and Dr. John Tooby.

Publications

Cimino, A. & Delton, A. W. (2010). On the perception of newcomers: Toward an evolved psychology of intergenerational coalitions. Human Nature, 21, 186-202.

Delton, A. W. & Cimino, A. (2010). Exploring the NEWCOMER concept: Experimental tests of a cognitive model. Evolutionary Psychology, 8, 317-335.

Delton, A. W., Krasnow, M. M., Cosmides, L., & Tooby, J. (2010). Evolution of Fairness: Rereading the data. Science, 329 (5990), 389.

Delton, A. W., Robertson, T. E., & Kenrick, D. T. (2006). The mating game isn’t over: A reply to Buller’s critique of the evolutionary psychology of mating. Evolutionary Psychology, 4, 262-273.

Griskevicius, V., Delton, A. W., Robertson, T. E., & Tybur, J. M. (in press). Environmental contingency in life-history strategies: Influence of mortality and socioeconomic status on reproductive timing. Journal of Personality and Social Psychology.

Kenrick, D. T., Delton, A. W., Robertson, T. E., Becker, D. V., & Neuberg, S. L. (2007). How the mind warps. In J. P. Forgas, M. G. Haselton, & W. Von Hippel (Eds.), The Evolution of the social mind: Evolutionary psychology and social cognition (pp. 49-68). New York: Psychology Press.

Klein, S. B., Robertson, T. E., & Delton, A. W. (2010). Facing the future: Memory as an evolved system for planning future acts. Memory and Cognition, 38, 13-22.

Klein, S. B., Robertson, T. E., & Delton, A. W. (in press). The future-orientation of memory: Planning as a key component mediating the high levels of recall found with survival processing. Memory.

Maner, J. K., Kenrick, D. T., Becker, D. V., Delton, A. W., Hofer, B., Wilbur, C. J., & Neuberg, S. L. (2003).
Sexually selective cognition: Beauty captures the mind of the beholder. Journal of Personality and Social Psychology, 85, 1107-1120.

Maner, J. K., Kenrick, D. T., Becker, D. V., Robertson, T. E., Hofer, B., Neuberg, S. L., Delton, A. W., Butner, J., & Schaller, M. (2005). Functional projection: How fundamental social motives can bias interpersonal perception. Journal of Personality and Social Psychology, 88, 63-78.

Grants

Co-PI on The Hidden Correlates of Social Exclusion (NSF funded; $400,000). Grant investigates evolved, domain-specific responses to social exclusion and their hormonal correlates. With Co-PIs Theresa Robertson, Leda Cosmides, & John Tooby.

Invited Talks

Delton, A. W. (2009, March). How the mind makes welfare tradeoffs. Invited paper presentation at the 3UC Conference, San Luis Obispo, CA.

Delton, A. W. & Krasnow, M. M. (2008, February). A cue-theoretic approach to cooperation. Invited paper presentation at the Evolution and Sociality of Mind Conference, Santa Barbara, CA.

Published Conference Presentations

Petersen, M. B., Delton, A. W., Robertson, T. E., Tooby, J., & Cosmides, L. (2008, April). Politics in the Evolved Mind: Political Parties and Coalitional Reasoning. Paper presented at the Midwest Political Science Association 66th Annual National Conference, Chicago, IL. PDF available at http://www.allacademic.com/meta/p268315_index.html.

Professional Employment

Graduate Researcher (2006-2007, 2008-2010). Supported on project entitled: “NIH Director’s Pioneer Award for a Computational Approach to Motivation,” UC Santa Barbara, Principal Investigators: Leda Cosmides & John Tooby. This research breaks down problems of motivation into their elementary computational components using theories from evolutionary biology.

Student Researcher (2003). Supported on project entitled: “Social Motives and Cognition Grant,” Arizona State University, Principal Investigators: Douglas T. Kenrick, Steven L. Neuberg, & Mark Schaller.
This research examined how fundamental motivational states relevant to social relationships influence basic social cognition processes.

Assistant Textbook Researcher (2003) for a revision of Social Psychology: Unraveling the mystery by Douglas T. Kenrick, Steven L. Neuberg, and Robert B. Cialdini. Duties: Assisted in review of current social psychological literature.

Instructional Activities

Lecturer (Instructor of Record): Developmental Psychology (2008); Developmental Psychology (2006)
Laboratory Instructor: Advanced Research Methods (2005)
Discussion Leader: Introductory Statistics (Spring 2005, Summer 2005); Introductory Psychology (2004)
Teaching Assistant: Developmental Psychology (2004); Social Psychology (2004)

Fellowships, Scholarships, and Awards

New Investigator Award from the Human Behavior and Evolution Society (2009)
Fellowship for the Summer Institute in Cognitive Neuroscience: Social Neuroscience & Neuroeconomics, UC Santa Barbara (2007)
National Science Foundation Graduate Fellowship Honorable Mention (2005)
Winner, Special Competition of Time-sharing Experiments in the Social Sciences (TESS; an NSF funded research platform) for “Motivational Systems in Political Collective Action: Managing Free-Riders, Exiters, and Recruits.” Co-authored with Leda Cosmides (2005)
National Science Foundation Graduate Fellowship Honorable Mention (2004)
UCSB Chancellor’s Funds for Professional Activities (2004)
UCSB Chancellor’s Fellowship (2003)
Outstanding Undergraduate Research Paper in Psychology, ASU (2003)
National Dean’s List, ASU (2003)
ASU Dean’s Council Award for Psychology (2002)
National Merit Finalist Tuition Waiver (1999-2003)
National Merit Finalist Resident University Award (1999-2003)
National Merit Finalist University Award (1999-2003)
ASU President’s Scholarship (1999-2003)
ASU Dean’s List (1999-2003)

Abstract

A Psychological Calculus for Welfare Tradeoffs
by
Andrew Wyatt Delton

Members of social species routinely make decisions that involve
welfare allocations—decisions that impact the welfare of two or more parties. These decisions often involve welfare tradeoffs such that increasing one organism’s welfare comes at the expense of another organism’s welfare. The overarching goal of this dissertation is to understand how the human mind makes these tradeoffs. First, I review a number of theories from evolutionary biology that predict how and why welfare tradeoffs should be made. Collectively, these theories predict that organisms must make estimates of a number of features about themselves and others (for instance, degree of relatedness and quality as a reciprocation partner). To make effective welfare tradeoffs, however, these estimates must be combined into a summary variable—a welfare tradeoff ratio—that is used to regulate social decision-making. By consulting this variable, an organism can determine when it is and is not appropriate to cede personal welfare on behalf of another. Second, I provide experimental, cross-national evidence that welfare tradeoff ratios exist and regulate social decision-making in surprisingly precise ways. Demonstrating this requires developing a new quantitative measurement device and methods to analyze the resulting data. Third, in further experiments I show that the mind can also estimate welfare tradeoff ratios stored in the minds of others. Finally, I review the connections between welfare tradeoff theory and other approaches to social decision-making. The discovery of this neurocomputational element helps to explain how complex behaviors—such as cooperation, generosity, and aggression—can arise from a physical device such as the human brain.

Table of Contents

Chapter 1: Theoretical Overview of Welfare Tradeoff Theory
Computational Theories
Evolutionary Biological Theories of Valuation and Welfare Tradeoffs
Helping Through Recent Common Descent
Direct Reciprocity
Reputation & Indirect Reciprocity
Graph Theory
The Asymmetric War of Attrition
Multi-Level Selection Theory
Mutualism, Coordination, Pseudo-reciprocity, Markets, and Externalities
Summary
Computational Mechanisms that Compute and Instantiate Valuation
A Kinship Index
Indices Relevant to Reciprocity
A Formidability Index
Sexual Value Indices
The Problem of Integration and the Necessity of Welfare Tradeoff Ratios
Standards of Evidence for the Existence of Welfare Tradeoff Ratios
The Problem of Estimation
Standards of Evidence that the Mind Estimates Welfare Tradeoff Ratios
Summary of Predictions from Welfare Tradeoff Theory

Chapter 2: The Existence of Welfare Tradeoff Ratios
The Welfare Tradeoff Task
Theoretical Overview
The Task
Methods
General Design
Subjects and Specific Design
Dependent Measures
Results
Result 1: A Single Switch Point Predicts Decisions
Result 2: Consistency is Not Due to the Artificial Nature of the Task
Result 3: Subjects’ Decisions Represent Actual Valuation of Others; They Are Not “Cheap Talk”
Result 4: WTRs Are Person-Specific; They Carry More Information Than a Simple Other-Regarding Preference
Result 5: Individualized WTRs Are Correlated with Decisions Involving Only Third-Parties
Results 6 & 7: Welfare tradeoff ratios behave as other magnitudes, but are algorithmically separable from other magnitudes
Correlations of the WTR Measure with Other Measures
Summary of Chapter 2: The Existence of Welfare Tradeoff Ratios

Chapter 3: The Estimation of Welfare Tradeoff Ratios
The Logic of Welfare Tradeoff Ratio Estimation
Study 6: Variations in Cost Incurred
Methods
Results
Study 7: Varying Costs with Constant Intentions
Method
Results
Study 8: Manipulating the Target of Costs Incurred
Method
Results
Discussion
Studies 9 & 10: Varying Benefits Provided
Methods
Results & Discussion
Summary of Chapter 3: The Estimation of Welfare Tradeoff Ratios

Chapter 4: Discussion and Conclusions
Extensions and Future Directions
Ongoing Work on Welfare Tradeoff Theory
Studying Welfare Tradeoffs Without Numbers
Broadening the Breadth of Decisions
Comparisons to Other Research Programs
Affective Heuristic Approaches
The Elementary Forms of Human Interaction Approach
Other-Regarding Preferences Approaches
Interdependence Theory
Social Contract Theory
Parting Thoughts: Existentialism Meets Evolutionary Psychology

Appendix 1: Example Materials for Study 1
Appendix 2: Screen Shots of Computerized Tasks
Appendix 3: Materials for Study 3
Appendix 4: Material for Study 4
Appendix 5: Materials for Study 5
Appendix 6: Probing the Sensitivity of Welfare Tradeoff Computations
Appendix 7: Dissociating Welfare Tradeoff Computation from Other Intuitive Economic Computation: A Pilot Functional Magnetic Resonance Imaging Study
Appendix 8: Materials for Chapter 3
References

Chapter 1: Theoretical Overview of Welfare Tradeoff Theory

Chad sits alone in his dorm room with an important decision to make: Does he help tutor a friend who desperately needs to pass a calculus exam or does he go on a blind date as originally planned? Paola also has an important decision to make: She’s currently safe from the guerilla fighters as she hides in the forest just outside her small village, but her younger brother has yet to make it out. Does she go back to help him or does she remain where she is?

Whether considering mundane daily life or more serious circumstances, decisions involving welfare allocations—that is, decisions impacting the welfare of two or more parties—are a ubiquitous part of life for members of a social species. Often, welfare allocations involve welfare tradeoffs, as in the above examples. Such decisions involve a tradeoff between personal welfare and the welfare of others (Tooby & Cosmides, 1990; Tooby, Cosmides, Sell, Lieberman, & Sznycer, 2008).

The first goal of this dissertation is to elucidate the engineering complexities of making welfare allocations and tradeoffs—that is, to describe at least some of the representations and algorithms necessary for a machine like the human mind to successfully make welfare tradeoffs and to describe what would count as success. The second goal is to provide preliminary evidence for the existence of a critical component of this machinery, the welfare tradeoff ratio (WTR), a variable computed by the mind that specifies how much of one’s own welfare should be traded off to increase or decrease the welfare of another individual. The third goal is to provide preliminary evidence that the mind has the ability to estimate the welfare tradeoff ratios in the minds of others. Finally, I will compare welfare tradeoff ratio theory to relevant alternative approaches.
Computational Theories

A few pounds of proteinaceous fibers strung along a series of semi-rigid rods, all interconnected by miles of wire and plumbing, with a stretchable sack to hold it together. How does one get a machine made of meat to make welfare tradeoffs?

I approach the problem from a computational perspective (Marr, 1982). Such an approach focuses on formally characterizing the information to be processed (i.e. the representations the system operates on and the primitives from which they are built) and the transformations performed on these representations (i.e. the rules of the system). This style of analysis has led to numerous insights in linguistics (Jackendoff, 2002), vision (Marr, 1982), and in other domains of thought, including social thought (Jackendoff, 2006; Marr, 1982; Pinker, 2007).

As an example, consider that basic arithmetic is a simple computational system (see Marr, 1982, for an extended discussion). It has a set of primitives (the numerals “0” through “9”, “+”, “–”, and “=”); real world entities can be represented within the system (e.g., three socks can be represented by the primitive “3”); and larger representations can be built up (e.g., “3 + 4”) and transformed into new representations (e.g., via the rules of arithmetic “3 + 4” is transformed into “7”). As is always the case in computational systems, not all possible properties of the to-be-represented entity are actually represented (e.g., given a set of three socks, many things besides the size of the set could be represented, such as their softness); which features are represented is determined by the problem that is being solved and the representational system activated (e.g., the number of socks—not their shape, color, or texture—is the only feature relevant for arithmetical inference).
Finally, it is the logic that a notational system embodies—not its particular orthography—that matters (e.g., instead of Arabic numerals, we could use “I”, “V”, “X”, “L”, “C”, “D”, and “M”; although the mechanics of arithmetic are more difficult using Roman numerals, the underlying logic is identical.)

But how do we extrapolate from computational theories concerned with seemingly objective relationships, such as arithmetical inference, to devise theories of seemingly subjective phenomena, such as making “appropriate” welfare tradeoffs? Indeed, as a rough heuristic it is useful to draw a distinction between computation that generates representations about states of the world (loosely, what exists?) and computation that generates representations about valuation and motivation (loosely, what should be done?) because different approaches apply to each. Although one cannot go directly from facts of the world to statements about what one should do in the world, it is still possible to scientifically investigate the computations underlying motivation.

A familiar example of value computation with a long history in psychology is null-hypothesis significance testing. This approach divides the task of statistics into two. First, generate a statistical description of the world: the computation of means, standard deviations, t- or F-statistics, p values and so on. Second, generate a statistical inference by determining a significance threshold (e.g., p must be less than .05 to conclude or act as if there is a difference between groups). This latter task is ultimately a value judgment—as anyone familiar with the ease of calculating a mean versus the difficulty of deciding what result is truly “significant” can attest—but it can, in principle, be arrived at through means that quantify the likely costs of making various kinds of decisions (e.g., the cost of rejecting true hypotheses, the cost of allowing false hypotheses to be believed true).
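As a rough illustration of how a significance threshold could be derived from costs rather than from convention, the sketch below picks the threshold that minimizes expected error cost for a one-sided z-test. Everything in it is an assumption made for illustration: the function names, the prior probability that the null hypothesis is true, the effect size, the sample size, and the cost values. None of it comes from the studies reported here.

```python
from statistics import NormalDist


def expected_cost(alpha, cost_false_alarm, cost_miss,
                  p_null=0.5, effect=0.5, n=50):
    """Expected cost of using threshold `alpha` in a one-sided z-test.

    All default values are hypothetical and chosen only for illustration.
    """
    std_normal = NormalDist()
    # Test statistic needed to reach significance at this threshold.
    critical_z = std_normal.inv_cdf(1 - alpha)
    # Chance of missing a real effect (accepting a false null hypothesis).
    miss_rate = std_normal.cdf(critical_z - effect * n ** 0.5)
    # Weight each kind of error by how likely and how costly it is.
    return (p_null * alpha * cost_false_alarm
            + (1 - p_null) * miss_rate * cost_miss)


def cheapest_alpha(cost_false_alarm, cost_miss):
    """Search a grid of thresholds for the one with the lowest expected cost."""
    candidates = [k / 1000 for k in range(1, 500)]
    return min(candidates,
               key=lambda a: expected_cost(a, cost_false_alarm, cost_miss))


if __name__ == "__main__":
    print("equal costs:         ", cheapest_alpha(1.0, 1.0))
    print("costly false alarms: ", cheapest_alpha(10.0, 1.0))
```

Under these assumptions, making false alarms more expensive pushes the preferred threshold lower, which is the sense in which choosing a threshold is a computation over values and not only over facts.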
Of course, these computations were devised by human scientists and statisticians over the course of a few centuries. We are, however, concerned with computational devices crafted by natural selection over deep time, the more common focus of computational approaches. Marr’s (1982) pioneering work on applying a computational approach was targeted at the problem of vision. The assumption underlying this approach was that there really is a three-dimensional world out there, with surfaces, color, etc. This implies that the laws of physics, such as the laws of optics and the laws of motion, could be used to deduce what computational procedures the mind/brain would need to successfully create useful descriptions of the physical world (Leslie, 1994; Shepard, 1984).

But how do we develop theories of computation for valuation? Although physics may not be of much direct help here, we can instead turn to evolutionary biology. Over the past five decades, evolutionary biologists have developed a variety of theories for predicting when and why organisms should trade off their own welfare in favor of another organism.

Evolutionary Biological Theories of Valuation and Welfare Tradeoffs

Evolutionary biologists study how the process of natural selection crafts organisms over deep time. A central problem for early generations of biologists was the problem of altruism and cooperation. Basically, why ever be nice? It would seem that genes that created generous organisms would be at a disadvantage relative to genes that did not. All else equal, organisms—and hence the genes inside them—replicate faster when they have more resources. Why ever give any of these resources away? Despite this, we regularly see animals behaving generously to conspecifics. To understand the process by which natural selection creates generous behavior I review in some detail two theories for the evolution of generosity and review several others more synoptically.
The analyses discussed below are generally presented in the framework of evolutionary game theory as developed by Maynard Smith (1982), among others. Under this framework, one specifies (1) different heritable strategies that organisms can play (such as giving to others at a personal cost or aggressing against intruders in one’s territory), (2) the fitness consequences for those strategies when they meet themselves or other strategies (what are the fitness impacts for a strategy for giving when meeting another strategy for giving? when it meets a strategy that does not give?), and (3) the starting frequencies of various strategies (some strategies may outcompete other strategies only if already at high frequencies, but not if there are only a few copies in the population). One can then determine the long run outcome (does a strategy for giving outcompete and replace other strategies over evolutionarily-relevant timescales?). The ultimate goal of reviewing these theories is to illustrate how diverse causal processes all lead to the same underlying logic.

Helping Through Recent Common Descent

Early work by Williams & Williams (1957) and Hamilton (1964) developed one solution to this problem. Although indiscriminate generosity cannot evolve, one route for the evolution of discriminate generosity is to selectively give to those who are likely to share the same gene for giving by recent common descent. This is often summarized in a mathematical statement called Hamilton’s Rule, motivated below. Hamilton’s rule describes the logic under which an organism should, at a cost to the self, provide benefits to other organisms as a function of genetic relatedness by recent common descent. Indeed, Hamilton’s rule was one of the first formal treatments in a rigorous, modern evolutionary context that describes when and why organisms should give benefits to another.
To understand the logic of Hamilton’s Rule, assume that organisms have the possibility to give one another a benefit b at personal cost c. Assume also that benefits are greater than costs, b > c. Next, imagine that among the entire population a rare mutant has a novel design that causes it to actually perform this behavior of giving at personal cost. However, this rare mutant—call it ALLG for Always Give—gives at a personal cost to any organism it encounters. This, of course, contrasts with the majority of the population which plays a strategy of never giving—NEVERG. How do ALLG and NEVERG fare against each other? The vast majority of encounters ALLG has will be with NEVERG. ALLG’s fitness after these encounters will be reduced by c, whereas NEVERG’s will be increased by b. (When NEVERG encounters itself, it doesn’t give, so its fitness doesn’t change.) Clearly, ALLG is doing worse. NEVERG will reproduce at a faster rate and ALLG is likely to disappear from the population.

Do things get better if we assume that, somehow, ALLG has managed to become the dominant type in the population—that most of the population is ALLG with a few scattered NEVERG mutants? This does not solve the problem. Most of NEVERG’s encounters will be with ALLG. As before, in these encounters, NEVERG does better than ALLG, earning b compared to ALLG’s –c. Most of ALLG’s encounters will be with other copies of itself, earning it a net of b – c. Notice that NEVERG is usually earning b and ALLG is usually earning b – c. Obviously b > b – c. Thus, NEVERG is still doing better than ALLG. Even if ALLG starts out as the predominant strategy in the population, it will therefore eventually be overtaken by NEVERG.

Hamilton’s insight was that strategies for providing benefits to others at a personal cost can evolve in a population if they find ways to selectively transfer benefits only to copies of themselves.
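The comparison between ALLG and NEVERG can be checked numerically. The following is a minimal sketch, not a model used in this dissertation: it assumes random pairwise encounters, a hypothetical baseline fitness added to keep all fitness values positive, and a simple discrete update in which each strategy's share of the next generation is proportional to its fitness. The function names and parameter values are illustrative assumptions.

```python
def expected_payoffs(p_allg, b, c):
    """Average payoff per random encounter for ALLG and NEVERG.

    p_allg: fraction of the population playing ALLG (between 0 and 1).
    b, c:   benefit delivered and cost paid per act of giving (b > c > 0).
    """
    # ALLG always pays c and receives b only when its partner is also ALLG.
    allg = p_allg * b - c
    # NEVERG never pays and receives b whenever its partner is ALLG.
    neverg = p_allg * b
    return allg, neverg


def next_frequency(p_allg, b, c, baseline=10.0):
    """One generation of fitness-proportional updating (assumed dynamics)."""
    allg, neverg = expected_payoffs(p_allg, b, c)
    w_allg = baseline + allg      # baseline is a hypothetical constant
    w_neverg = baseline + neverg
    mean_fitness = p_allg * w_allg + (1 - p_allg) * w_neverg
    return p_allg * w_allg / mean_fitness


if __name__ == "__main__":
    p = 0.99  # ALLG starts as the predominant strategy
    for generation in range(500):
        p = next_frequency(p, b=3.0, c=1.0)
    print(f"ALLG frequency after 500 generations: {p:.6f}")
```

Whatever the starting frequency, NEVERG's average payoff exceeds ALLG's by exactly c, so under these assumptions ALLG declines even when it begins as the majority type. A giving strategy can only succeed by directing its benefits selectively toward copies of itself.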
One way to do this is for the strategy to selectively transfer benefits to organisms who have copies of it due to recent common descent. This is usually considered in the context of diploid organisms (e.g., mammals, birds, many insects) where each organism has two copies of each chromosome, one copy inherited maternally and one paternally.

Although the logic does not depend on this, it is easiest to see the logic of recent common descent by imagining that you have a rare mutation passed on to you by one of your parents (ignoring for now what that mutation does). This mutation is so rare that only one of your parents has it (i.e., only one of the chromosomes you inherited has this rare mutation and it could only have come from one of your parents). Ignoring some complications (such as genetic imprinting, Haig, 1998) and assuming simple Mendelian inheritance, you have no way to know which parent you inherited this rare gene from, so there is an even chance it comes from either parent. Now, if you have a sibling, what is the chance that they also have this rare gene? If your mother had the rare gene (50% chance of this), there is a 50% chance your sibling will inherit it from her. A 50% chance of your mother having it multiplied by a 50% chance of passing it on to a sibling leads to there being a 25% (= 50% * 50%) chance that your sibling will inherit through your mother the same rare gene you have. Similar considerations apply when considering inheritance through your father, meaning there is also a 25% chance that your sibling inherits the gene from your father. Adding these two probabilities reveals a 50% chance that your sibling will inherit the same rare gene that you have. Hamilton termed this probability the coefficient of relatedness, r. For a full sibling, r = 0.5.
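The sibling calculation above can be written out as a short enumeration of the inheritance paths. This is only a sketch of that arithmetic under the same simplifying assumptions (a rare allele and simple Mendelian inheritance). The function name and the `shared_parents` parameter are hypothetical, and the half-sibling case is included for comparison and is not taken from the text.

```python
from fractions import Fraction


def sibling_relatedness(shared_parents=2):
    """Chance that a sibling carries the same rare allele that you carry.

    shared_parents: 2 for full siblings, 1 for half siblings.
    """
    if shared_parents not in (1, 2):
        raise ValueError("siblings share one or two parents")
    half = Fraction(1, 2)
    # For each shared parent: the chance that you got the allele from that
    # parent (1/2) times the chance that the same parent also passed it to
    # your sibling (1/2).
    per_parent_path = half * half
    # The paths through different parents are mutually exclusive because
    # the allele is assumed rare enough that only one parent carries it.
    return shared_parents * per_parent_path


if __name__ == "__main__":
    print("full sibling:", sibling_relatedness(2))
    print("half sibling:", sibling_relatedness(1))
```

Exact fractions are used instead of floating point so that the result matches the hand calculation term by term.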
What Hamilton showed formally was that if this rare gene caused its carrier to help individuals who are likely to have the same gene due to recent common descent, the gene would spread through the population if its help only occurred when r * b > c. That is, genes that cause organisms to give to others at a personal cost can evolve, but only when it is sufficiently likely that the help will be targeted at other organisms who also have the gene for helping. Another way of stating this, useful for later discussions, is that the benefits that will be shared must first be discounted by a factor that tracks the selectivity of help (here, the coefficient of relatedness); only then can the (now discounted) benefits be compared to the costs. Yet another way of saying this, also useful for later, is that the focal organism must translate the fitness benefits that the other will receive into fitness benefits that the self will receive. Given that there is uncertainty about whether a full sibling would actually share this rare gene, the gene that causes an organism to give at a personal cost is not guaranteed to recoup what it gives; there is only a probability r that the gene will recoup what it gives. Fifty years later, this rule still holds: Although in modern formalizations the interpretations of the various symbols in the equation may be somewhat more complicated, the functional form of r * b > c is still there (Gardner, West, & Barton, 2007). Because this approach specifies how aid toward kin should evolve, it is often called kin selection theory.

Direct Reciprocity

However, many animals, including humans, provide costly benefits to individuals who are not closely related to them. What explains this type of cooperation? Trivers (1971) formalized one solution to this problem, now usually called direct reciprocity (Trivers preferred reciprocal altruism).
Although Trivers’ original description was largely verbal, I will frame this discussion based on the mathematical version developed by Axelrod & Hamilton (1981). Imagine that pairs of organisms have the opportunity to deliver benefits b to each other at a personal cost c, where benefits and costs are measured in fitness terms. If I deliver you a benefit but you do not give me one, then my fitness has been decreased by c and yours increased by b. If we both give each other benefits, then our net fitness change is b – c. If neither of us gives, then our fitness does not change. Formally, this arrangement of costs and benefits constitutes a prisoners’ dilemma (PD). If a PD is played only one time, then the fitness maximizing choice is to not give, that is, to defect. This is because regardless of what you do, I am always better off not giving: If you give and I do not, then I earn b compared to the smaller quantity if I do give of b – c. If you don’t give and I don’t give, I earn 0 compared to the negative quantity if I do give of –c. In fact, it turns out that if we can chain multiple PDs together in a row, it is still fitness maximizing to always defect, so long as the number of times we play the PD (i.e., the number of rounds) is fixed and finite. Although one could imagine some sort of system of threats that could keep organisms “honest” (strategies that specify giving so long as the other behaves in a certain way), these ultimately unravel. Consider that no matter how many rounds are played, there is always the last round, round t. Given that there will be no more rounds, this becomes a one-shot PD and the fitness maximizing choice is to defect no matter what. (The ability to modify behavior based on entering the last round would not require an explicit representation that round t is the final round. For instance, if an organism could count the number of rounds, then it could evolve decision rules that use the round number as input.)
Now, given that in round t both organisms are definitely defecting, what happens in round t – 1? This round becomes effectively the last round of interaction and therefore effectively a one-shot PD. Hence, the fitness maximizing choice here is to also defect. This logic continues on round after round, implying that the fitness maximizing strategy when the number of rounds is fixed and finite is to always defect, whether the number of rounds is 5 or 5 million. (Although this logic is reminiscent of what economists call backward induction, it is only fitness accounting, not the rational calculation implied by economic backward induction. Of course, the two processes would be much more similar if backward induction is taken to refer to an end-state produced by an unspecified process; in this case, backward induction would only metaphorically describe the logic leading to the endpoint, not the equilibration process itself.)

One insight of Axelrod & Hamilton (1981) was that if interactions had indefinite endpoints (an unknown number of rounds), then there is the possibility for mutual giving to evolve in and remain in the population. Given that humans are long-lived and have mobility, residence patterns, and life spans affected by a number of stochastic factors, this seems to be a fair characterization of human interactions at least. They considered how the strategy Tit-for-Tat (TFT) would perform against a strategy that Always Defects (ALLD) when interactions have indefinite endpoints. TFT cooperates on the first round of interactions and then copies whatever its partner did on the previous round. ALLD, as its name implies, defects no matter what. When playing a copy of itself, TFT will give at personal cost and receive a benefit each round until the interaction ends.
When playing ALLD, TFT will pay a one-time cost of c on the first round, ALLD will receive a one-time benefit of b on the first round, and thereafter neither will give and thus neither’s fitness will change. When playing itself, ALLD’s fitness does not change. By selectively choosing who it interacts with across multiple rounds, TFT earns large fitness benefits when it interacts with copies of itself and only pays a small cost when interacting with ALLD. Thus, although a specific organism playing TFT has lower fitness than its partner if its partner plays ALLD, across an entire population playing these strategies, where TFT will sometimes be playing itself, TFT will on average earn greater fitness than ALLD, allowing TFT to dominate the population. Axelrod & Hamilton show mathematically that TFT dominates the population when w * b > c, where w represents the probability that an interaction will continue from one round to the next. In words, this means that so long as the length of interactions is sufficiently long (i.e., w is sufficiently large) and the net within-round benefits are sufficiently large, then giving at a personal cost is evolutionarily stable. (Axelrod & Hamilton modeled this using the simplifying assumption that the population was literally infinite. Under this assumption, rare mutant organisms playing TFT cannot invade a population otherwise composed of ALLD without there being some sort of positive assortment; that is, unless TFT is more likely to play itself than random pairings would predict. However, Nowak, 2006, reviews analytic work showing that in finite populations with the possibility of genetic drift, even a single TFT mutant, with no possibility of assortment, can eventually lead to TFT invading the population.) Although it took a lot of work to get here, the upshot of all this is that Hamilton’s Rule, r * b > c, and Axelrod and Hamilton’s condition for the evolution of direct reciprocity, w * b > c, are surprisingly similar.
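The payoffs described above can be turned into a small numerical check of the w * b > c condition. This is a minimal sketch under stated assumptions, not Axelrod & Hamilton's own derivation: each round is followed by another with probability w (so a per-round payoff x sums to x / (1 - w) in expectation), the population is mostly TFT, and the question asked is whether a rare ALLD does worse against TFT than TFT does against itself. The function names and the example values are hypothetical.

```python
def expected_total(per_round, w):
    """Expected sum of a payoff earned every round, continuing with probability w < 1."""
    return per_round / (1.0 - w)


def payoff(strategy, partner, b, c, w):
    """Expected lifetime payoff of `strategy` when paired with `partner`."""
    if strategy == "TFT" and partner == "TFT":
        return expected_total(b - c, w)  # mutual giving every round
    if strategy == "TFT" and partner == "ALLD":
        return -c                        # gives once, then stops
    if strategy == "ALLD" and partner == "TFT":
        return b                         # exploits the first round only
    return 0.0                           # ALLD vs ALLD: nobody gives


def tft_resists_alld(b, c, w):
    """True if TFT earns more against TFT than a rare ALLD earns against TFT."""
    return payoff("TFT", "TFT", b, c, w) > payoff("ALLD", "TFT", b, c, w)


# Illustrative values: with b = 3 and c = 1 the threshold is w > c / b = 1/3.
b, c = 3.0, 1.0
for w in (0.2, 0.5, 0.9):
    print(f"w = {w}: TFT resists ALLD? {tft_resists_alld(b, c, w)}; "
          f"w * b > c? {w * b > c}")
```

Rearranging (b - c) / (1 - w) > b gives w * b > c, so the two printed answers agree for any w below 1.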
I next review more briefly several other theories that lead to other similar rules for making welfare tradeoffs.

Reputation & Indirect Reciprocity

Formalizations of direct reciprocity typically assume that agents learn about their partner’s strategy solely from personal interaction with their partner. However, a pre-theoretical observation, about humans at least, is that we learn a great deal about others’ cooperative tendencies by observing them in interactions and from hearing what others say about them (gossip, reputation, etc., Dunbar, 2004). This can form another basis for making welfare tradeoffs. How does reputation-based cooperation evolve? One simple way to formally model this would be to extend Axelrod & Hamilton’s model. Their model assumes that an organism interacts with one and only one agent and then dies immediately after reproducing according to its fitness in that one interaction. One could imagine, though, that organisms have multiple, serial interaction partners in their lifetime. If TFT begins its second interaction with knowledge of what its partner’s first round behavior was in its partner’s previous interaction, then TFT can avoid paying even the first round cost of providing benefits to ALLD by defecting immediately. After all, if the strategies under consideration are TFT and ALLD, then all organisms defecting on the first round of their first interaction will be ALLD. Thus, a variant of TFT that conditions its current behavior on its partner’s past behavior would do better than a variant of TFT that does not. Even if we make knowledge imperfect (sometimes TFT knows its partner’s past behavior, sometimes not), it’s hard to see how this could make cooperation less profitable than in the austere case considered by Axelrod & Hamilton. However, reputation is often modeled in a different way, through a paradigm known as indirect reciprocity (Nowak & Sigmund, 2005; Ohtsuki & Iwasa, 2006; Panchanathan & Boyd, 2003).
In indirect reciprocity, organisms are randomly paired with each other and only one member of the pair has an opportunity to give at a personal cost. After this one opportunity, these organisms can never interact again. Moreover, they can never end up in long distance loops; the possibility of A giving to B, B giving to C, and C giving to A is ruled out a priori (see Boyd & Richerson, 1989, for a model of network reciprocity like this). Given these constraints, organisms are paired with multiple partners across a number of rounds. Except for the first round, organisms who have the opportunity to give at a personal cost can do so contingent on whether their partner gave in a previous round. (Note that this requires organisms switching roles across rounds: sometimes giver, sometimes receiver.) Strategies that cause organisms to give to other organisms that also give can evolve when q * b > c, where q is the probability that the recipient’s past behavior is known (Nowak, 2006b; Nowak & Sigmund, 2005). Although there are a number of qualifications to this work (Leimar & Hammerstein, 2001; Ohtsuki & Iwasa, 2006; Panchanathan & Boyd, 2003), it’s striking how this model with its very different (and somewhat esoteric) assumptions leads to an equation with the same structural form as Hamilton’s rule and direct reciprocity.

Graph Theory

A more recent tool for modeling social evolution (and its attendant welfare tradeoffs) is graph theory (Nowak, 2006b). Most modeling approaches assume a population with no structure: Although there may be some type of assortment, agents are randomly mixed together with fixed probabilities. Graph theory goes beyond this assumption, allowing populations to have a “physical” geometry. This work imagines that agents are vertices on a lattice. If two vertices are connected by an edge, then those two organisms can interact; those organisms are neighbors. (Edges also determine where offspring end up.)
Consider a population containing cooperators who give to each neighbor a benefit b, with each act of giving incurring a personal cost c, and defectors who do not provide any help. Note that cooperators do not have a conditional strategy; they always cooperate with their neighbors no matter what. Given a number of simplifying assumptions, it can be shown that cooperators outcompete defectors when (1/k) * b > c, where k represents the number of neighbors each organism has; that this equation involves the reciprocal of k indicates that more neighbors makes it more difficult for cooperation to evolve. The intuition behind this result is that cooperators flourish if they are in clusters; the smaller the neighborhood, the less likely that cooperator–cooperator interactions will be swamped by cooperator–defector interactions. Despite a very different analysis, graph theory leads to a Hamilton’s rule-style equation for making welfare tradeoffs. Although in the abstract this rule doesn’t seem to imply any information processing, taking a larger view we can envision an organism whose social ecology includes many different “graphs,” where some graphs involve few neighbors and others involve many. Organisms in such an environment would need some way to discriminate different graphs with different neighbors.

The Asymmetric War of Attrition

The previous models investigated cases where there is some potential for the alignment of interests. In the PD, both players are better off if both give than if neither gives. However, animals routinely face situations of conflicting interests, such as situations of zero-sum resource divisions. Moreover, the previous models considered situations where organisms could deliver benefits to others. Here I consider a model of conflict where organisms can inflict costs on each other. Despite these differences, the functional form of the equation describing the selection pressure is surprisingly similar to those described above.
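The four rules reviewed so far (kin selection, direct reciprocity, indirect reciprocity, and graph structure) differ only in which discount factor multiplies the benefit. The sketch below makes that shared form explicit. The function name and every numerical value are hypothetical and chosen only for illustration, apart from r = 0.5 for a full sibling, which comes from the text.

```python
def favors_giving(discount, b, c):
    """Generic discounted-benefit rule: giving is favored when discount * b > c."""
    return discount * b > c


# Illustrative benefit and cost, in arbitrary fitness units.
b, c = 4.0, 1.0

# One discount factor per model; only the interpretation changes.
discounts = {
    "kin selection: r (full sibling)": 0.5,
    "direct reciprocity: w (chance of another round)": 0.9,
    "indirect reciprocity: q (chance reputation is known)": 0.2,
    "graph structure: 1/k (k = 8 neighbors)": 1 / 8,
}

results = {label: favors_giving(d, b, c) for label, d in discounts.items()}
for label, favored in results.items():
    print(f"{label}: giving favored? {favored}")
```

With these example numbers, giving is favored under the first two discount factors and not under the last two, even though b and c are held constant throughout.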
Hammerstein & Parker (1982) studied this under the rubric of the asymmetric war of attrition. For exposition, imagine two animals who come across an indivisible packet of food. The animals fight until one gives up and walks away, leaving the other with the food. What determines who gives up first? What determines this will be how the costs of fighting compare to the value of the food. So long as the food brings the animal more fitness than it loses through its cumulative time spent fighting, it should continue fighting. Once the cumulative costs of fighting are greater than the benefits provided by the food, the animal should walk away. Thus the war of attrition: One doesn’t “defeat” one’s opponents so much as wear them down. One component of the model is the value of the food, which may differ between animals. The first animal, for instance, may have just eaten and thus their internal valuation of the food may be relatively low, whereas the second animal may have not eaten for a number of days, and thus highly value the food. All else equal, the second animal in this example will fight longer for the food. The second component of the model is the relative abilities of the animals to inflict costs on each other and to bear costs, a feature of the animals called formidability. The first animal, for instance, may be physically stronger than the second animal. All else equal, the first animal in this example will fight longer for the food. Mathematically, an animal should walk away first when v_self < f * v_other, where v_i represents the value of the resources to animal i and f represents the relative formidability such that f is larger the greater the opponent’s formidability relative to one’s own. As before, the “benefits” received by another are discounted by a factor and compared to a “cost” to the self.
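The walk-away rule can be illustrated with the sated-but-strong and hungry-but-weak animals from the example above. This is a rough sketch, not Hammerstein & Parker's model: the function name and all numbers are hypothetical, and it additionally assumes that the relative formidability seen by one animal is the reciprocal of that seen by its opponent, which the text does not state.

```python
def walks_away_first(v_self, v_other, f):
    """Rule from the text: cede the resource when v_self < f * v_other.

    f is the opponent's formidability relative to one's own
    (larger f means a more formidable opponent).
    """
    return v_self < f * v_other


# Hypothetical valuations: the first animal just ate, the second is hungry.
v_first, v_second = 4.0, 10.0

# Equal formidability: the animal that values the food less gives up.
print(walks_away_first(v_first, v_second, f=1.0))    # first animal's decision
print(walks_away_first(v_second, v_first, f=1.0))    # second animal's decision

# Assumption: the first animal is much stronger, so it sees f = 0.3
# and its opponent sees the reciprocal, 1 / 0.3.
f_seen_by_first = 0.3
print(walks_away_first(v_first, v_second, f_seen_by_first))
print(walks_away_first(v_second, v_first, 1.0 / f_seen_by_first))
```

Under the reciprocal assumption the two decisions are mutually exclusive, so a large enough formidability advantage can outweigh a lower valuation of the food.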
(If animals can reliably judge their relative formidability, they can potentially avoid paying any costs by walking away before fighting begins; see Maynard Smith & Price, 1973.) As with the other models, this recovers an equation that looks strikingly similar to the form of Hamilton’s Rule.

Multi-Level Selection Theory

The functional forms of the equations described above seem to be a general principle of social evolution: other-benefiting, personally costly acts can evolve so long as the benefits given are greater than the costs, after the benefits have been discounted by a factor that (with the exception of the asymmetric war of attrition) represents positive assortment: the degree to which generous strategies are paired with other generous strategies. This can be seen by looking at the equations derived from multi-level selection theory (McElreath & Boyd, 2007; Price, 1970, 1972), a very general accounting system for tracking allele changes over time. To understand this theory, it is easiest to imagine (a) alleles contained within individual organisms and selection occurring between individuals (called selection within groups) while simultaneously viewing (b) these individual organisms as “contained” within groups and considering selection occurring between groups (called selection between groups). Will strategies that engage in beneficial, but personally costly behaviors spread? Yes, so long as σ * β_group > β_personal, where β_group represents the degree of positive effect that this strategy has on the group, β_personal represents the degree of negative effect that this strategy has on the individual, and σ represents the amount of variation that exists between groups, that is, the extent to which generous organisms are more likely than chance to be in groups with other generous organisms. (Cases of mutualism would have β_personal be not a cost but a benefit.
Under most realistic assumptions, for most species, σ is effectively zero so there is no possibility for between group selection.) Although the mathematics of multi-level selection are more challenging than simpler game theoretic approaches designed to deal with specific cases, multi-level selection in fact subsumes all these other approaches, such as kin selection and direct reciprocity (Gardner, et al., 2007; Henrich, 2004; D. S. Wilson & Sober, 1994). In other words, models of social evolution are generally all the same equation just expressed in different mathematical languages. In an insightful review of these issues, Henrich (2004) notes that “[a]ll genetic evolutionary explanations to the altruism dilemma are successful to the degree that they allow natural selection to operate on statistically reliable patterns or regularities in the environment” (p. 4) and that understanding what these regularities are in any particular case requires knowledge of “[t]he details of an organism’s social structure, physiology, genome, cognitive abilities, migration patterns or imitative abilities” (p. 6). Given that all explanations for generous but costly acts ultimately reduce to the same equation, the real scientific challenge will be to unpack the statistical regularities that allow the evolution of generosity and the psychology that operates over these regularities.

Mutualism, Coordination, Pseudo-reciprocity, Markets, and Externalities

Not all cases of non-kin generosity and cooperation represent cases where there is a possibility of cheating, that is, cases where non-cooperators can even in principle do better than cooperators. Consider a style of cooperation often called coordination or mutualism (Clutton-Brock, 2002, 2009). In situations of this structure, benefits are only available to those individuals who choose to cooperate; non-contributors receive no benefits.
Only if we both coordinate our actions can we successfully reap any benefits: One cannot defect; one can only make an error. Because there is no selectionist puzzle here, there has not been much mathematical modeling of this case (but see Pacheco, Santos, Souza, & Skyrms, 2009). Logically, however, there should be a general equation with a similar functional form, something along the lines of p * b > o, where p represents the probability that cooperation will be effective (e.g., as a function of each party’s skill at the task at hand), b represents the benefit obtained by mutual cooperation, and o represents the “outside option,” the benefit available by not trying to coordinate one’s actions with others. Other cases of non-kin cooperation and beneficence may be driven by the availability of positive externalities (Tooby & Cosmides, 1996); this style of cooperation has sometimes been termed pseudo-reciprocity (Connor, 1986). Externalities represent by-products of one’s actions that impact on others; importantly, they are not designed consequences, but are incidental outcomes. For instance, as many plants grow they provide increasing shade. The shade created is not a design feature of the plant; it is a by-product of increasing leaf size and number. Humans and other animals routinely take advantage of these externalities to avoid direct sunlight. Pseudo-reciprocity occurs when an organism is designed to increase the rate or quality of externalities another organism delivers. For instance, one could imagine an organism that depends on trees for shade and has adaptations to fertilize trees so as to create more shade. To the extent that the tree has no way to distinguish situations where other organisms “purposefully” fertilize it from situations where it was simply lucky enough to grow up in rich soil, the tree can do nothing to respond to the other organism’s actions as such. However, it will still grow bigger and provide greater shade.
Note that there is no possibility for the organism doing the fertilizing or the organism being fertilized to cheat each other in this situation. The tree cannot take fertilizer yet refuse to grow bigger; why would it want to? Although the other organism pays the cost of providing fertilizer, it necessarily gets more shade (and presumably finds the benefits of shade to outweigh the costs of fertilizer). Thus, an organism can engage in acts that are both other-benefiting and, ultimately, self-benefiting without the recipient of their largesse acting contingently. (It is a separate issue whether other organisms who could enjoy the shade could exploit the organism doing the fertilizing.) One upshot of these theories is that there becomes a market for interaction partners (Noe & Hammerstein, 1994, 1995). Even if cheating is not possible, there are still transaction costs. Imagine that an organism provides you with positive externalities. It takes you some time and energy to reap these externalities and to increase the rate at which they are delivered. Although you are getting a net fitness benefit by interacting with this organism, there may be other organisms who would provide you with even greater fitness benefits. Thus, your current interaction represents an opportunity cost. This suggests that there may be a number of dimensions that organisms evaluate each other along, such as foraging efficiency or sexual attractiveness, to choose interaction partners who can deliver the greatest benefits, even if this benefit-delivery provides no possibility of cheating.

Summary

Fifty years of theory have investigated the selection pressures that can cause organisms to trade off personal welfare to benefit others. Some of these selection pressures, such as kin selection or externality-driven altruism, lead to unilateral helping: The recipient need not respond or even know that aid was given; altruism is evolutionarily favored regardless.
Other selection pressures, such as direct reciprocity, require contingency: Aid that is given must be eventually returned. Although such a design might go into temporary fitness “debt,” it must recoup its losses (and make a profitable return) by the end of its life. Still other selection pressures, such as the asymmetric war of attrition, explore zero-sum situations where benefits are extracted through threat of force. Despite these large differences in the situations they apply to, all of these selection pressures lead to equations with remarkably similar functional forms: d * b > c; the benefits given must outweigh the costs incurred, after the benefits have been discounted. This discount factor generally represents the relationship between the donor and the receiver, such as how likely they are to be of the same type or how their relative formidabilities compare. What consequences do these theories of selection have for theories of computation and psychology?

Computational Mechanisms that Compute and Instantiate Valuation

The previous section discussed a number of selection pressures that lead one organism to trade off personal welfare in favor of another organism, each of which generally has the form d * b > c. However, selection pressures describe the abstract logic by which genes can increase or decrease in frequency in the population; they do not describe the causal routes through which information relevant to these selection pressures is detected and acted upon. Consider a hypothetical species of wasp where females lay their eggs inside figs. Females mate with only one male and only lay eggs in figs that no other wasps have already used. In this case, a newborn wasp will meet only other full siblings inside the fig; it does not need any psychological mechanisms that allow it to distinguish kin from non-kin.
Thus, even if kin selection was acting on this species to affect its behavior toward sibs inside a wasp’s natal fig, there would be no need for any machinery that could estimate kinship or act differentially toward kin and non-kin; the world, not the organism’s behavior, creates a statistical regularity that selection can act on (Tooby & Cosmides, 1989). This is not the case for humans, who are long-lived, mobile organisms that interact repeatedly with many individuals, some kin, some non-kin. If kin selection has crafted mechanisms in the human mind, one component would be the ability to estimate the kinship status of others vis-à-vis the self. The goal of this section is to review evidence for psychological adaptations in the human mind that instantiate the logic of kin selection and other theories. I review these proposals in some detail because (a) they illustrate how valuation can be studied computationally and (b) fleshing out these proposals reveals a more general problem that they collectively create.

A Kinship Index

The theory of inclusive fitness predicts that organisms should aid individuals who are likely related by recent common descent. This follows because recent common descent is likely to cause the strategy for aiding close kin to reside in both the body of the donor and the body of the recipient. Although humans preferentially shunt resources toward close kin over more distant relatives and non-kin (as one example among many, see Kaplan & Hill, 1985), seeming to support inclusive fitness theory, what are the psychological mechanisms that allow humans to detect kin, that is, to estimate r in r * b > c (Kurland & Gaulin, 2005)? Work spanning over one hundred years suggests that one available environmental cue that allows for sibling detection in particular is the length of co-residence during childhood: The longer you co-reside with someone, the more likely they are to be a close sibling.
Westermarck (1891) originally hypothesized this cue in the context of incest avoidance. Given, for instance, that close relatives often have the same deleterious recessives (rare alleles that cause serious developmental abnormalities or death when an organism has two copies), mating with close relatives has a much greater potential to create unfit offspring, creating opportunity costs for the parents. Thus, Westermarck proposed that to avoid this problem, humans are designed to develop strong sexual aversions to those who co-resided with us during childhood. (More recent research has quantitatively matched length of co-residence to the degree of disgust at sibling incest; Fessler & Navarrete, 2004; Lieberman, Tooby, & Cosmides, 2003.) Recent theory and data (Lieberman, Tooby, & Cosmides, 2007) suggest, however, that human kin detection may be more complex. Human foraging societies have a fission-fusion structure: Although nuclear families often aggregate into large groupings, nuclear families regularly migrate independently of one another. Thus, regular co-residence would be a valid cue of siblinghood. However, there may be a better cue available. First, this theory assumes that humans can know with great certainty who their biological mothers are, a reasonable assumption given the close and frequent interactions that human infants have with their mothers. Building on this, older siblings can observe a cue that Lieberman and colleagues call maternal perinatal association: the close interaction of one’s mother with a newborn infant. Just as knowledge of one’s mother’s identity is gained through close interaction with her during infancy, knowledge of one’s younger sibling’s identity is gained through observations of close interactions with one’s mother during a sib’s infancy. For a variety of reasons, maternal perinatal association is likely to be a stronger cue than co-residence (e.g., a cousin might be co-resident if orphaned).
Thus, Lieberman and colleagues predict and find evidence for the following: First, older siblings, with respect to their younger siblings, use maternal perinatal association when it is available as a cue and neglect co-residence in regulating altruism and incest. Second, because younger siblings do not have access to maternal perinatal association, they use co-residence to regulate altruism and incest. This evidence reveals mechanisms in the human mind for estimating r; Lieberman and colleagues call this estimate a psychological kinship index.

Indices Relevant to Reciprocity

The theory of direct reciprocity predicts that organisms should make short-term fitness sacrifices to aid another organism when there is a sufficient probability that there will be a long-term net benefit to making those sacrifices. One way to cause this is for interactions to be sufficiently long. Thus, human reciprocity should be conditioned on cues to the length of the remaining interaction. Consistent with this, a great deal of behavioral economic evidence reveals that reciprocity diminishes when people are informed that their experimental interactions will be short or ending soon (Camerer, 2003): As the final rounds of interaction approach, defection becomes more and more common. Interestingly, this is not an effect of rational updating in these games. First, although a group of experimental subjects interacting in a prisoners’ dilemma-style laboratory game will lower their levels of cooperation as the game approaches the final round, if they start a new game, even with the same partners, their cooperation will start out high again, only diminishing as they approach the final round again. Second, it is not the length of the games per se that leads to a drop in cooperation: Whether a game lasts 10 rounds or 100 rounds, it is only within approximately the last 5 to 10 rounds that levels of cooperation begin to drop.
A related line of research has investigated the effects of anonymity: the greater the anonymity, the less likely that the interaction can effectively continue into the future. Here, too, greater anonymity leads to lower levels of cooperation (Hoffman, McCabe, & Smith, 1996). Still other evidence, consistent with direct reciprocity and reputation-based theories, shows that the mind conditions its own cooperative behavior toward a partner on that partner’s behavior toward others (Milinski, Semmann, Bakker, & Krambeck, 2001). Ultimately, however, long interactions can only sustain direct reciprocity because they allow contingently cooperative strategies to outcompete cheaters: A cheater might receive a short-term benefit at the expense of a cooperator, but the contingent cooperator will quickly stop cooperating. When cooperator meets cooperator, they engage in cycles of mutual cooperation. The length of these cycles is important: Only if they are sufficiently long can the benefits gained by a contingently cooperative strategy, when averaged across multiple bodies, outweigh the average fitness gains made by a cheater strategy that exploits cooperators. Thus, the theory of direct reciprocity also requires that behavior be conditioned not just on the shadow of the future but on the behavior of one’s partner. Cosmides (1989; Cosmides & Tooby, 2005) presents evidence that humans have a complex psychology that allows for the contingent, mutual exchange of rationed benefits. This research shows that the mind contains mechanisms that embody a logic of social exchange that is not reducible to the content-free logics developed by logicians. One component of this psychology is an ability to detect cheaters on exchanges, a necessity if one is going to behave contingently in social exchanges. Thus, research also reveals evidence that the mind computes estimates of w and related factors, what might be called reciprocity indices.
A Formidability Index

The theory of the asymmetric war of attrition predicts that an organism will cede a resource to another organism as a function of their relative formidability. This implies that the mind has mechanisms for estimating the formidability of others—for estimating their ability to inflict costs. One component of men’s ability to inflict costs is strength, particularly upper body strength. Sell, Cosmides, and colleagues (2009) present evidence that humans can reliably estimate the strength of men from both men’s bodies and faces. Moreover, people’s judgments of men’s fighting abilities are essentially identical to their judgments of men’s strength. In other research, Sell, Tooby, & Cosmides (2009) show that men’s strength regulates how entitled they feel and their self-reported ability to prevail in conflicts. Aside from personal physical strength, another potential component of formidability is coalitional strength: the number and physical strength of one’s allies. Sell, Tooby, & Cosmides (2009) show that, among a society of hunter-horticulturalists, one’s number of allies regulates emotions related to entitlement. Taken together, this research suggests that the mind computes one or more formidability indices that are used to regulate who gets what during a resource conflict.

Sexual Value Indices

Although kinship, reciprocity, and threat of force are the most commonly studied routes through which organisms can provide benefits to one another, there are a number of other routes as well (see, e.g., above section on Mutualism). As an example of this, I develop the possibility of sexual value indices. Like other mammals, human females energetically invest a great deal in their offspring (e.g., through gestation, nursing). Moreover, human males, unlike many other mammals, also invest a great deal of energy provisioning their offspring (e.g., through hunting of calorically dense meat) (Kaplan, Hill, Lancaster, & Hurtado, 2000).
Given that so much is invested in the products of a sexual union, it should not be surprising that there is a complex psychology that determines sexual valuation. The complex psychology of human sexual valuation has been one of the most intensely studied topics within evolutionary psychology. Researchers in this domain have identified a number of cues that play a pivotal role in determining attractiveness. These include visual cues of youth and a relatively low waist-to-hip ratio (for men judging women), cues of status and ambition (for women judging men), and cues of kindness (especially towards oneself) and of shared beliefs and worldviews (true for either sex judging the other) (reviewed in Buss, Shackelford, Kirkpatrick, & Larsen, 2001; Sugiyama, 2005). Moreover, cues of close kinship can radically lower the sexual value of what might otherwise be an attractive partner (Fessler & Navarrete, 2004; Lieberman, et al., 2003, 2007). Excepting certain cases (e.g., potential sib–sib matings), those with greater sexual value are generally preferred as sexual partners. Those with high sexual value, moreover, are valued outside of explicit mateships (Sugiyama, 2005) and receive better treatment from others in a variety of ways (e.g., Budesheim & DePoala, 1994; Downs & Lyons, 1991; Stewart, 1985). Moreover, attractive women’s minds embody a recognition of their greater value to others—and hence attractive women get angrier more easily and feel more entitled (Sell, Tooby, et al., 2009). Although the lack of game theoretical challenges means there is little mathematical modeling regarding sexual value (outside of models of arbitrary signals (Grafen, 1990)), there is a rich psychology that determines the sexual value of others and uses it to determine behavior.
The Problem of Integration and the Necessity of Welfare Tradeoff Ratios

The previous section reviewed evidence that the mind has a number of specific dimensions that it evaluates others on and that it uses these dimensions of valuation to make decisions that affect both the self and the other. But this presents an important information processing problem: How are these various dimensions to be used to make any given decision? Although research often proceeds best when examining a single factor in isolation (e.g., examining only how variations in attractiveness affect treatment), things are not so simple in the real world. As an example, consider an interaction between Joe and Ken. Ken has no cues of being related to Joe, Ken sometimes returns favors (but sometimes does not), and Ken is very strong. If Joe has an opportunity to aid Ken, should he? According to theories of kinship, Joe should not: his kinship index toward Ken is vanishingly small. According to theories of reciprocity, Joe should be uncertain: his reciprocity index toward Ken is on a knife-edge that separates cooperation from non-cooperation. According to theories of conflict, Joe should help Ken: he has a high formidability index toward Ken. Joe, being a macroscopic physical object, cannot easily be in a superposition of states—he cannot simultaneously help Ken and not help Ken. Logically, the mind must have some way of integrating together all these various indices into a single summary index that can be used to make specific decisions in the here and now. We call this summary index a welfare tradeoff ratio (WTR). The crux of this dissertation is that WTRs enter into decisions through a process that is a generalization of the theories of valuation reviewed above: An organism should give to a specific other when WTR * b > c; that is, when benefits to the other, after being discounted by the self’s WTR toward the other, are greater than the costs the self pays to deliver the benefits.
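The decision rule WTR * b > c can be illustrated with a minimal sketch. The function name and the numerical values below are hypothetical and chosen only for illustration; they are not taken from the studies reported in this dissertation.

```python
def should_allocate(wtr: float, benefit_to_other: float, cost_to_self: float) -> bool:
    """Return True when the benefit to the other, discounted by the WTR,
    exceeds the cost the self pays to deliver it (WTR * b > c)."""
    return wtr * benefit_to_other > cost_to_self


# Hypothetical case: helping costs Joe 5 units and delivers 10 units to Ken.
print(should_allocate(0.6, 10, 5))  # True:  0.6 * 10 = 6 > 5
print(should_allocate(0.4, 10, 5))  # False: 0.4 * 10 = 4 < 5
```

Under this rule the same act of help is performed or withheld depending only on the single summary magnitude, whatever mixture of kinship, reciprocity, or formidability produced it.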
By hypothesis, welfare tradeoff ratios are computed by a welfare tradeoff function, a function that combines the various indices discussed above, other indices that remain to be discovered, as well as other situational factors. The goal of this dissertation is to provide preliminary evidence for the existence of welfare tradeoff ratios. In the next section, I outline a number of design features that WTRs are predicted to have.

Standards of Evidence for the Existence of Welfare Tradeoff Ratios

What would it take to show that such an internal regulatory variable exists, that the mind computes welfare tradeoff ratios? Here I provide several standards of evidence. First, if such a variable exists, then the precision of its operation should be evident in the ways that people make welfare tradeoffs regarding a specific other person. That is, if we observe a person making a number of welfare tradeoffs regarding a specific other, that person’s responses should be summarizable by a single number—the hypothesized WTR. (This assumes we hold constant situational factors, such as audience and type or amount of resource at stake.) If a person’s responses cannot be so summarized that would provide evidence against the view advanced here. I call this the criterion of consistency. Such consistency, while expected by welfare tradeoff theory, would be particularly surprising in light of the many inconsistencies and irrationalities shown in human judgment and decision making. For example, research shows that people do not have consistent revealed beliefs (Ellsberg, 1961), that independence of preferences is often violated (e.g., Oliver, 2003), that preferences can be reversed in the presence of irrelevant alternatives (e.g., Ohtsubo & Watanabe, 2003), that preferences are not always transitive (e.g., Tversky, 1969), and that moral preferences are changed by seemingly irrelevant factors (e.g., the side-effect effect, Leslie, Knobe, & Cohen, 2006).
The studies described below use controlled but artificial laboratory experiments to test whether people’s responses can be summarized by a single number. Even if there is substantial consistency in people’s responses, what does this imply? Just as human cognition often appears blinkered when analyzed under inappropriate assumptions or when tested with inappropriate methods in laboratory settings (see Gigerenzer, Hell, & Blank, 1988), could the artificiality of the lab lead to levels of consistency that would not be observed out in the real world? I call this the criterion of non-artificiality. Related to laboratory artificiality is the issue of “cheap talk.” The studies presented below use welfare tradeoff decisions that generally involve hypothetical sums of money. When real money is not at stake, it is always possible that subjects’ decisions do not represent their real valuation of the options, that they are engaging in cheap talk in the service of (e.g.) self-presentation. Thus, it is important to test whether the responses we assess in welfare tradeoff tasks involving hypotheticals do not differ from tasks involving real stakes. I call this the criterion of consequentiality. Welfare tradeoff ratios are hypothesized to be computed as target-specific magnitudes. Thus, the mind should assign different WTRs to different social agents in one’s environment. This follows because different people represent different levels of formidability, different levels of sexual value, different levels of kinship, etc. I call this the criterion of target-specificity. It is important to be clear that WTRs do not represent the sum total of the mind’s knowledge of a specific other. Thus, it is entirely possible that the mind could distinguish between two different social agents and still assign them approximately the same welfare tradeoff ratio.
Nonetheless, although it is logically possible that the various people in one’s social network all ultimately get assigned the same number, in practice the multitude of factors that should contribute to setting WTRs makes this seem unlikely. The theory predicts that at least some pairs of people are assigned different WTRs. The first-order problem solved by the existence of welfare tradeoff ratios is that of making tradeoffs involving the self and another. But a commonly faced second-order problem is making tradeoffs involving two other individuals. One’s WTR to person A should be a function of a number of upstream factors characterizing person A, and similarly for person B. How one makes tradeoffs between the welfare of A and B should be, in part, a function of the same upstream factors. Thus, one’s WTRs for A and B should predict how one makes tradeoffs between A and B. I call this the criterion of common effects. Tests that falsify this would provide serious obstacles to the view advanced here. WTRs are hypothesized to be internal magnitudes that are used to regulate behavior. They should therefore behave like other psychological magnitudes. I call this the criterion of magnitude isofunctionality. This can be tested by comparing the consistency of decisions that involve welfare tradeoffs to the consistency of decisions that necessarily involve the psychological manipulation of other types of magnitudes. However, WTRs are hypothesized to be magnitudes computed by specialized algorithms (where the specialization of the algorithms derives from the specialized tasks they are designed to accomplish). Thus, there should be ways in which computation involving WTRs differs from the use of other magnitudes. I call this the criterion of algorithmic separability.
The Problem of Estimation

I have outlined a number of reasons to think that the mind computes welfare tradeoff ratios that regulate one’s social decision making: The mind computes a number of specific indices, all of which follow the same abstract logic, but the mind must also integrate these specific indices into a downstream index to actually regulate behavior. If this is true, it implies that not only will the mind assess multiple factors about others and regulate behavior toward them as a function of these factors, but also that others will be doing the same. Thus, the WTRs that others compute will have consequences for both the self directly and, more generally, for the self’s social world. This implies that the mind may also contain mechanisms that can estimate the magnitude of WTRs in others’ minds. Such an ability would be beneficial because it would allow one to know who has a high WTR toward the self, a person worth allocating time and energy toward (Tooby & Cosmides, 1996), and who has a low WTR toward the self, a person who might profitably be ignored or even avoided. Moreover, knowing the WTRs of others can allow one to manipulate those WTRs. For instance, Sell, Tooby, & Cosmides (2009) provide evidence that the emotion of anger is designed to raise others’ WTRs when they are lower than what the mind calculates they should be. This necessarily involves an ability to estimate the welfare tradeoff ratios set by others toward the self.

Standards of Evidence that the Mind Estimates Welfare Tradeoff Ratios

The mind should allocate in favor of another when the benefit the other receives is greater than the cost the self incurs, but only after the benefit to the other has been discounted by the welfare tradeoff ratio toward the other. Mathematically, allocate to the other when WTR * b > c.
Although welfare tradeoff ratios are assumed to be purely internal magnitudes—there are no external physical objects or actions that directly correspond to a WTR—I assume that the delivery of benefits and the imposition of costs are (often) observable. That is, I assume that the mind contains procedures that can translate objects, actions, and effects in the real world (e.g., a bite of an apple, a punch in the solar plexus) into an internal numerical scale (Tooby, Cosmides, & Barrett, 2005). Such an ability would be necessary for even a solitary forager who must decide whether this or that patch is more profitable to forage in. (However, because it is beyond the scope of this investigation, I am ignoring the fact that what counts as an object, an action, etc. is not a feature of the objective world, but is a feature of the organism’s psychology.) Given this, the mind can infer bounds on another’s WTR by observing the benefits that agent delivers and consumes and the costs that agent incurs and inflicts. By rearranging the inequality WTR * b > c (for b > 0), we see that the mind should allocate to another when WTR > c / b. Thus, if a benefit is given at a personal cost, one can infer that the WTR of the agent delivering the benefit is at least as great as the cost–benefit ratio. Holding benefits constant, greater costs incurred imply greater WTRs. Thus the mind should infer differences in the lower bounds of welfare tradeoff ratios as a function of differences in costs incurred. This prediction is tested in Chapter 3. That chapter also conducts further experiments to distinguish predictions from welfare tradeoff theory from rational choice and payoff-based theories.

Summary of Predictions from Welfare Tradeoff Theory

According to welfare tradeoff theory, the mind needs a number of computational abilities. One is the ability to compute a downstream variable—a welfare tradeoff ratio—that determines how personal welfare is traded off against the welfare of another.
I have outlined multiple standards of evidence for demonstrating the existence of this variable and test for its existence in Chapter 2. A second ability is to estimate the welfare tradeoff ratios that exist in the minds of others. I have also outlined standards of evidence for this ability and test for it in Chapter 3. The two chapters use very different methods to provide convergent validity for the general concept of welfare tradeoff ratios. The confirmation of predictions in both chapters would provide strong support for welfare tradeoff theory.

Chapter 2: The Existence of Welfare Tradeoff Ratios¹

The Welfare Tradeoff Task

Theoretical Overview

The goal of this chapter is to provide evidence consistent with the hypothesis that the mind computes a magnitude used to regulate welfare allocations—a welfare tradeoff ratio. WTRs are predicted to be fairly precise magnitudes. Thus, to assess this prediction, we need an appropriate measurement device. This rules out many standard measurement instruments, such as arbitrary numbers attached to ad hoc rating scales. It’s difficult to see, moreover, how the basic hypothesis about the existence of welfare tradeoff ratios can be tested using standard social cognition methods such as reaction-time-based measures (e.g., the Implicit Association Test). Instead, this research borrows methods developed by cognitive, behavioral, and economic psychology to study other internal regulatory variables. Considering this research program in some detail will help draw out the logic and assumptions of the approach. Considerable effort in cognitive psychology has been devoted to understanding how the mind makes intertemporal tradeoffs (Green & Myerson, 2004). Typically, this research asks the question of whether a smaller, earlier benefit would be preferred over a larger, later benefit. (Often, the smaller benefit would be available immediately if chosen.)
¹ Theresa Robertson, Daniel Sznycer, and Julian Lim all collaborated on the research described in this chapter.

An assumption of this work is that, all else equal, the longer a benefit is delayed into the future, the less subjective value it will have (e.g., $5 today is worth more than $5 in ten years). This would happen, for example, if the mind embodies the fact that at greater and greater delays, the future is less and less likely to be reached (Williams, 1957; M. Wilson & Daly, 2004). Thus there is a tradeoff: Although the delayed benefit is absolutely larger than the immediate benefit, its delay lowers its value, potentially making it less valuable than the absolutely smaller, but immediate, benefit. A second assumption is that animal minds cannot determine which benefit to choose unless they can put the benefits on a common scale, a scale that incorporates both the amount of benefit available and the degree of delay. To do this, the mind must discount the value available in the future so that it can be stated in terms of present, immediately available benefits. This is accomplished by plugging future benefits into a discount function, which research often shows to have the form: computed present value = nominal value / (1 + k * delay), where k is an individual difference parameter that determines how much the future is discounted. (There is not yet widespread agreement for why future discounting takes this form, called a hyperbolic function, but see Green & Myerson (2004) and Killeen (2009) for possible rationalizations.) Consider the choice between $10 available immediately and $20 available in two weeks. One could imagine a mind that uses one or the other factor alone to make this choice. Whatever your preference, however, prior research indicates that it would change if either one of the amounts or the delay were sufficiently modified.
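The hyperbolic discount function can be made concrete with a short sketch applied to the $10-now versus $20-in-two-weeks choice. The values of k, and the decision to measure delay in days, are assumptions made only for illustration; they are not estimates from any data set.

```python
def present_value(nominal: float, delay: float, k: float) -> float:
    """Hyperbolic discounting: nominal value / (1 + k * delay)."""
    return nominal / (1 + k * delay)


def choose(immediate: float, delayed: float, delay: float, k: float) -> str:
    """Pick whichever option has the larger computed present value."""
    return "delayed" if present_value(delayed, delay, k) > immediate else "immediate"


# $10 now versus $20 in 14 days, for two hypothetical discounting parameters.
print(choose(10, 20, 14, k=0.01))  # delayed:   20 / 1.14 is about 17.54 > 10
print(choose(10, 20, 14, k=0.10))  # immediate: 20 / 2.40 is about 8.33 < 10
```

With these amounts the two options are equally valued when k = 1/14 per day, so a steeper discounter takes the $10 and a shallower discounter waits for the $20.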
Thus, the mind does not consider the absolute amounts or the delay alone; the decision must be made with some sort of process that combines both. One could imagine a heuristic process that evaluates one attribute first, only moving on to the other if the first evaluation fails (see Brandstatter, Gigerenzer, & Hertwig (2006) for such a proposal in the related domain of probability discounting). For instance, a simple heuristic might be “If one benefit is at least an order of magnitude larger than the other, choose that benefit. If not, then choose the benefit that has a shorter delay, so long as the delay is at least one month shorter. If this cannot be satisfied, then choose randomly.” Such a heuristic does not explicitly recognize that there is a tradeoff to be made between an earlier, small benefit and a later, large benefit: The same basic heuristic (with different content) could equally well be applied to a situation where (a) a set of cues are all positively correlated with some outcome and each other, (b) some cues have stronger predictive ability such that if you have these cues available there is nothing further to be gained by looking at additional cues, and (c) there is imprecision in estimates of all cues such that very close values cannot reasonably be distinguished from each other. In such a situation, the mind should start with the most predictive cue, discard it if it yields an ambiguous determination, move on to the next most predictive cue, and so on. Could a simple heuristic such as this be applied to temporal discounting? Probably not: the complexity and nuance of people’s intuitions regarding temporal discounting speak against such an account (Green & Myerson, 2004), as does recent neuroscience evidence (Kable & Glimcher, 2007; Preuschoff, Bossaerts, & Quartz, 2006).
Moreover, despite recent proposals, simple heuristics such as this do not seem to sufficiently account for people’s decisions on gambling-like choice tasks (tasks involving risk; see commentators on Brandstatter, Gigerenzer, & Hertwig (2006): (Birnbaum, 2008a; Johnson, Schulte-Mecklenbeck, & Willemsen, 2008; Rieger & Wang, 2008)). Thus, to make the above tradeoff, the mind must first convert $20 available in two weeks to an equivalent amount available now. It must then compare this computed amount to $10 available now and choose the larger. Whether the computed amount is greater or less than $10 will depend on how strongly the mind discounts the future. This style of analysis can also be applied to welfare allocations. First, is there a potential for welfare allocations to involve tradeoffs? One factor determining welfare allocations is the targets of the allocation: If all else is held equal (and notwithstanding a few important exceptions), $5 in my hands is more valuable to me than $5 in your hands. But another factor is the relative size of the allocations: Diminishing marginal returns ensure that many situations exist in which a large benefit can be delivered to another at only a small cost to oneself (e.g., a successful hunter who has eaten his fill could share leftover meat with a hunter who has not been successful). This means that decision-makers will sometimes find themselves facing a choice between allocating a smaller benefit to themselves or allocating a larger benefit to another. (In many cases, the “benefit” allocated to the self is avoiding the cost that would have been incurred to allocate a benefit to another.) Because the two factors point in opposite directions, there is a tradeoff—e.g., do I allocate $10 to myself or $20 to a friend? Second, is there reason to think that a mathematical function (as opposed to some sort of simpler heuristic) is necessary to solve this problem?
Suggestive evidence comes from an investigation of how (a) the potential cost of helping another and (b) the relationship of the potential helper to the recipient combine to determine the help given (Stewart-Williams, 2007). In this study, the benefits of helping were perfectly correlated with costs of helping; more help means more cost. For kin (siblings and cousins), subjects were increasingly likely to help as the benefits of help increased. For non-kin (friends and acquaintances), subjects were decreasingly likely to help as the benefits of help increased. This pattern could only occur if both kinship and cost of helping had simultaneous, functional consequences during at least some steps of the decision making process. Although the evidence here is consistent with the necessity of a mathematical function, the dependent measures were ad hoc rating scales asking about difficult-to-quantify behaviors. More direct evidence comes from research using methods from the discounting literature in cognitive psychology (Jones & Rachlin, 2006, 2009; Rachlin & Jones, 2008a, 2008b). These researchers analogized from the existence of temporal discounting to hypothesize that there exists social discounting. Just as one can use knowledge of temporal delay and a person’s temporal discounting parameter (k in the equation above) to compute the subjective value delayed benefits would have if they were instead available immediately, so, too, can one use knowledge of social distance (the analogue of temporal delay) and a person’s social discounting parameter (the analogue of k) to compute the subjective value that benefits accruing to another person would have if they instead accrued to the self. Thus, these researchers (Jones & Rachlin, 2006) asked subjects to “imagine that you have made a list of the 100 people closest to you in the world ranging from your dearest friend or relative at position #1 to a mere acquaintance at #100.
The person at number one would be someone you know well and is your closest friend or relative. The person at #100 might be someone you recognize and encounter but perhaps you may not even know their name. You do not have to physically create the list—just imagine that you have done so” (p. 284). The position of another person on this list represents social distance. The researchers next asked subjects to make a series of monetary decisions involving tradeoffs. In these decisions, subjects chose between Allocation A—allocating a large sum of money to themselves and nothing to a specific other—or Allocation B—allocating a smaller sum to themselves and a sum equal to this smaller sum to the specific other. All subjects answered regarding multiple specific others: the people occupying the positions 1, 2, 5, 10, 20, 50, & 100 on their lists. Note, however, that subjects did not actually generate any such list and were never asked to specify the specific people occupying even the few positions they were asked about. Thus, subjects were asked about “specific others” only in the sense of there being specific numbers, not in the sense of there being specific, identifiable people. The results showed a quite surprising effect: Subjects had no difficulty doing this task and the mathematical function best describing how they made interpersonal tradeoffs was the same form as the equation given above for intertemporal tradeoffs. This form, moreover, also describes how people make probabilistic tradeoffs (e.g., do you want $10 for sure or a 60% chance at earning $20?). (See Green & Myerson, 2004, on how intertemporal and probabilistic tradeoffs are instantiated by different mechanisms.) Despite this similarity, all three types of tradeoffs involve functions that are not economically rational—market forces should create exponential discounting functions, yet all three of these functions are hyperbolic discounting functions (Green & Myerson, 2004; Killeen, 2009).
This helps rule out the possibility that all of this is somehow the product of general rationality, instead of specialized computational systems. Altogether, these results are consistent with the need to model welfare allocations—and the resulting tradeoffs—as being the output of a psychological mechanism that mathematically integrates the amounts being allocated and the nature of the targets of the allocation. Although I will be using a task similar to the social discounting task of Jones & Rachlin (2006), it is important to examine the theoretical and methodological differences between our approaches. First, Jones & Rachlin suggest that there is a unitary dimension that determines choices—social distance—along which people can be arrayed in one’s mind. Although they do not commit themselves to a definition of social distance, they suggest that social distance may be a product of common or shared interests (p. 285). This is reflected in the instructions they use (“the 100 people closest to you”). Although welfare tradeoff theory also proposes that there is a single downstream variable (the welfare tradeoff ratio) that ultimately determines people’s choices, there are multiple, principled determinants of welfare tradeoff ratios. A common interest is not the only, or necessarily the primary, determinant: Some relationships may be regulated almost entirely by relative formidability, with a less formidable person assigning a more formidable person a very high WTR despite a complete lack of psychological closeness. Other WTRs may be mostly set by the ability to confer benefits, such as setting a high WTR toward a person who is attractive or has high status—in what sense is valuing someone due to their attractiveness or status synonymous with common interests or closeness?
Indeed, on welfare tradeoff theory it is difficult to create a linear ordering of targets because the welfare tradeoff function integrates multiple factors—which can “contradict” one another—and these factors are dynamically updated. (Even estimates of kinship can be updated into adulthood: E.g., if a man learns his wife is currently engaging in extra-pair mating, his mind may lower its estimate of his paternity for previous children she has borne.) Thus, welfare tradeoff theory provides a more nuanced view of the determinants of social discounting. Second, Jones & Rachlin’s assumption of a unitary dimension of social distance motivated their empirical strategy of asking about unspecified others defined by numbers on an imaginary list. Welfare tradeoff theory focuses on the person-specific determinants of welfare tradeoff ratios. Without specifying a specific other, it is unclear exactly what is being tapped by these decision questions—are subjects spontaneously filling in specific others, resorting to “default” settings, or something else? Indeed, Stewart-Williams’ (2007) data showing that friends are less likely to receive help as it becomes more costly, but kin more so, shows how important it is to take into account person-specific factors, instead of a general dimension of closeness. Nonetheless, Jones & Rachlin’s data provide converging evidence that the mind computes relatively precise internal magnitudes that regulate welfare allocations.

The Task

The welfare tradeoff task is designed to measure how much personal welfare the mind will trade off to enhance the welfare of a specific other. To do this, the task involves money. Using money has several benefits: First, it allows for easy and precise numerical quantification, allowing a very stringent test of the hypothesis that these tradeoffs are regulated by decision rules that consult a variable that has a magnitude.
Second, it allows for goods available to the self and for goods available to others to be in the same currency. Third, previous work shows that monetary tasks provide orderly and replicable results for domains such as temporal discounting (a domain which replicates with other species and currencies, such as pigeons and food). There are also drawbacks: Money is evolutionarily novel. Ancestrally, many acts of help and generosity—such as childcare or food sharing—involve currencies that are not fungible. Money, on the other hand, is completely fungible. (In Chapter 4 I outline one way to investigate welfare tradeoffs without directly using money.) Inspired both by temporal and social discounting tasks (Green & Myerson, 2004; Jones & Rachlin, 2006), the task I created requires subjects to decide whether (a) the other would receive a sum of money and the subject would receive nothing or (b) the subject would receive a different, usually smaller sum of money and the specific other nothing. A simple example: Will you allocate $5 to yourself or will you allocate $10 to your friend? The key to estimating WTRs lies in having subjects complete multiple decisions regarding a specific other where the ratio of the values is manipulated. Considering our simple example, if you prefer to allocate to your friend, that implies that $5 < WTR * $10. That is, even after discounting the $10 due to the fact your friend receives it instead of you, it is still a better outcome to you than directly receiving $5. Simple algebraic rearrangement implies that your WTR toward your friend is at least 0.5. What if we give you another decision, this time $7 for you or $10 for your friend, and you now choose to allocate to yourself? This implies that your WTR is no more than 0.7. By varying the ratios, we now have an upper and lower bound for your WTR toward your friend; simple arithmetic averaging estimates your WTR toward your friend as 0.6.
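The bounding logic of this two-decision example can be sketched as follows. The function names and the tuple layout are hypothetical conveniences for illustration, not part of the actual instrument.

```python
def wtr_bounds(decisions):
    """decisions: list of (amount_for_self, amount_for_other, chose_other).

    Choosing the other implies WTR is at least self/other; choosing the self
    implies WTR is at most self/other. Returns (lower, upper); a bound is
    None when no decision constrains it.
    """
    lowers = [s / o for s, o, chose_other in decisions if chose_other]
    uppers = [s / o for s, o, chose_other in decisions if not chose_other]
    lower = max(lowers) if lowers else None
    upper = min(uppers) if uppers else None
    return lower, upper


def midpoint_estimate(decisions):
    """Average the two bounds, as in the worked example in the text."""
    lower, upper = wtr_bounds(decisions)
    return (lower + upper) / 2


# $5 for self vs. $10 for friend (chose friend); $7 vs. $10 (chose self).
example = [(5, 10, True), (7, 10, False)]
print(wtr_bounds(example))         # (0.5, 0.7)
print(midpoint_estimate(example))  # about 0.6
```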
The actual instrument uses this strategy, but with a larger range of possible ratios, as well as multiple decisions with the same ratio. In the experiments presented below, for each specific other, a subject completes six anchored sets, each set with ten separate decisions. Thus, for a given target, subjects make sixty separate decisions. I call the sets “anchored” because within a set the amount that the other could be allocated is fixed—it provides an anchor for the variable sums that could be allocated to the self. This constant anchoring amount for the other was 19, 23, 37, 46, 68, or 75. The amount for the subject was determined by multiplying the anchor by different, evenly spaced ratios (-0.35, -0.15, 0.05, 0.25, 0.45, 0.65, 0.85, 1.05, 1.25, and 1.45) and then rounding to the nearest integer. In principle, each ratio represents the WTR of a subject who is perfectly indifferent between the two choices; the ratios are “indifference WTRs.” Figure 1 shows some of the decisions created by this algorithm. When making these decisions, subjects are asked to assume that each choice is made independently and not to let other choices affect their decisions. They are also asked to assume that the money cannot be shared between themselves and the targets of their choices (e.g., if they allocate to a friend, that friend cannot then give them a portion of that allocation). As an example, consider the decision between $75 for the other and $0 for the self or $0 for the other and $49 for the self; the ratio of these values is 49 / 75 = 0.65. If a subject were perfectly indifferent between these two options, then the subject’s WTR for the other is simply the ratio of the two sums, 0.65. People, however, are not indifferent and usually prefer one over the other. In this example, a subject may prefer to receive 49 instead of allocating 75 to the other. But the same subject may prefer to allocate 75 to the other instead of receiving 34 (where 34 = 0.45 * 75).
Thus, one can infer that the subject’s WTR with respect to the other is less than 0.65 but greater than 0.45. A numerical estimate can be obtained by averaging these two bounds together: (0.65 + 0.45)/2 = 0.55. One way of putting this is that by giving subjects a series of decisions one can look for a switch point. These switch points represent subjects’ WTRs. By giving subjects multiple anchored sets, the reliability of the measure is increased. However, subjects are not always perfectly consistent such that there is one and only one switch point (or no switch points) in a given question. Therefore, to estimate WTRs in the absence of perfect consistency, I employ a method developed by Kirby & Maraković (1996). In this method, each possible WTR is computed by averaging together each successive pair of the ratios listed above (-.35 to 1.45). This gives possible WTRs between -.25 and 1.35 inclusive, with increments of .2. For simplicity, I assume that if subjects always allocate to themselves in an anchored set then that reflects a WTR of -.45 (the average of -0.35 and the value -0.55 that would be present if the instrument continued downward in similar increments), and if subjects always allocate to the other, then that reflects a WTR of 1.55 (the average of 1.45 and 1.65 if the instrument continued upward in similar increments). This gives a total of 11 possible WTRs that could be assigned to a single anchored set. Within an anchored set, for each possible WTR, the number of choices consistent with that possible WTR is calculated. The possible WTR that has the greatest consistency is then assigned as the actual WTR for that anchored set. (It is the best-fitting WTR.) If two possible WTRs have equal consistency, then they are averaged together. Finally, the WTRs assigned to each anchored set are averaged together to give an estimated WTR for a participant.
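The scoring procedure just described can be sketched in a few lines of Python. This is an illustrative sketch only, not the code used in the studies: the names `self_amounts` and `score_set` are hypothetical, the decision rule (allocate to the other whenever the indifference ratio is below the candidate WTR) is my reading of the text, and Python's built-in `round` is assumed to match the rounding used to build the instrument.

```python
from statistics import mean

ANCHORS = [19, 23, 37, 46, 68, 75]  # fixed sums for the other
RATIOS = [-0.35, -0.15, 0.05, 0.25, 0.45, 0.65, 0.85, 1.05, 1.25, 1.45]
# The 11 candidate WTRs: midpoints of adjacent ratios plus the two
# extrapolated end values (-0.45 and 1.55) described in the text.
CANDIDATES = [round(-0.45 + 0.2 * k, 2) for k in range(11)]


def self_amounts(anchor):
    """Sums offered to the self within one anchored set (assumed rounding)."""
    return [round(anchor * r) for r in RATIOS]


def score_set(gave_to_other):
    """Score one anchored set.

    gave_to_other[i] is True if the subject allocated to the other on the
    decision whose indifference ratio is RATIOS[i].
    Returns (estimated WTR, consistency maximization score, perfectly consistent?).
    """
    # A subject with WTR w is predicted to give to the other whenever r < w.
    fits = [
        sum((r < w) == choice for r, choice in zip(RATIOS, gave_to_other))
        for w in CANDIDATES
    ]
    best = max(fits)
    # Ties between equally consistent candidates are averaged.
    wtr = mean(w for w, f in zip(CANDIDATES, fits) if f == best)
    return wtr, best / len(RATIOS), best == len(RATIOS)


# The example from the text: with an anchor of 75, the subject allocates to
# the other up to the 0.45 ratio and to the self from the 0.65 ratio onward.
choices = [True] * 5 + [False] * 5
wtr, max_score, perfect = score_set(choices)
```

Averaging the first element returned by `score_set` over the six anchored sets for a target would give that target's estimated WTR.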
[Figure 1: example decisions from the choice task. Each decision offers Option 1 (a sum for “You,” 0 for “Other”) or Option 2 (0 for “You,” the anchor sum for “Other”). Panel A: Ordered condition. Panel B: Random condition.]

Figure 1. Examples of the choice task. The left pane (A) shows an example of the Ordered condition and the right pane (B) shows an example of the Random condition in Studies 1 & 2.

I calculate two additional dependent measures from these data. The first is a perfect consistency score. For each anchored set, I determine whether there is one and only one switch point (or no switch points at all for the lowest and highest possible WTR values). Thus, for a specific target, subjects can have between 0 and 6 perfectly consistent anchored sets. The final perfect consistency score is this count divided by 6 to create a percentage (e.g., if a subject had 5 perfectly consistent anchored sets for a given target, her perfect consistency score would be 5/6 or 83.3%). Obviously, because the perfect consistency score requires perfect consistency, it is susceptible to even minor lapses in attention. The second additional measure, a less strict consistency measure, is a consistency maximization score. For a given anchored set, this is the percentage of decisions consistent with the WTR that is most consistent with the decisions in that set. Thus, if the best-fitting WTR has only 7 decisions consistent with it, then the consistency maximization score for that anchored set is 7 / 10 = 70%.
It is important to note that WTRs, perfect consistency scores, and consistency maximization scores can all be calculated separately for each anchored set. Thus, each of these dependent measures is computed six times for each target and, within a dependent measure, they can then be aggregated together in various ways depending on the purpose of the analysis.

Does human performance conform to the standards of evidence described above? Specifically, does performance conform to the criteria of:

1. Consistency: Are welfare tradeoffs made consistently? Are decisions summarizable by a single number?
2. Non-artificiality: If welfare tradeoffs are made consistently, is this due to the artificiality of the lab task or to real abilities of the mind?
3. Consequentiality: Are the revealed welfare tradeoff ratios due to cheap talk or real valuation?
4. Target-specificity: Are welfare tradeoff ratios target-specific?
5. Common effects: Do WTRs toward two others—which regulate how the self’s welfare is traded off to benefit those others—correlate with how tradeoffs involving only those two others are made?
6. Magnitude Isofunctionality: Do WTRs behave as other psychological magnitudes?
7. Algorithmic Separability: Are welfare tradeoff computations performed by specialized algorithms?

Because many of these criteria were tested across multiple studies, I first provide details of the methods of each study and then discuss the results organized by the hypotheses. In addition to the above criteria, which were designed specifically to test for the existence of an internal magnitude, I also test whether welfare tradeoff ratios correlate with standard self-report measures of valuation. This provides convergent evidence that the welfare tradeoff task is tapping what it is theoretically predicted to tap.
Methods

General Design

The general design of all studies in this chapter was relatively straightforward: With respect to one or more targets, subjects completed the 60 decisions that comprised the WTR task. For instance, in Studies 1 and 2, subjects completed the 60 decisions about a same-sex friend and also completed the 60 decisions about a same-sex acquaintance. After making these decisions, in most studies subjects next completed additional questions. In the remainder of this section I give the details of the specific studies.

Subjects and Specific Design

Study 1: Measuring WTRs in the United States. One hundred sixty-seven subjects (90 female) at the University of California, Santa Barbara, completed the welfare tradeoff ratio task as detailed above, once each for a close friend and for an acquaintance. The task was in pencil-and-paper format. The order of answering about the friend and acquaintance was randomized across subjects. Subjects were also randomly assigned to one of two between-subjects conditions. In the Ordered condition, the decisions were presented as in Figure 1’s left-hand side such that all decisions with the same anchored value for the other were grouped as a set and within each of these sets the value for the self was titrated up or down. In the Random condition, the same sixty decisions were presented in a random order (which was held constant across subjects). After making all of the welfare tradeoff decisions regarding both their friend and acquaintance, subjects then answered several additional dependent measures: the social value orientation, the communal strength scale, the inclusion of other in self scale, and a relationship quality index (the latter scale was an ad hoc scale developed specifically for this research). Appendix 1 presents the welfare tradeoff component of Study 1 in full, including all instructions. It also contains the relationship quality index.

Study 2: Measuring WTRs in Argentina.
The sample for Study 2 consisted of 125 students (78 female) from the University of Buenos Aires in Argentina. The details were otherwise identical to Study 1 except for the following: In this study, the instruments were translated into Spanish by a native Spanish speaker, one of the collaborators on this project (Daniel Sznycer). We did not employ any backtranslation or verification techniques.

Study 3: Measuring WTRs when real dollars are at stake. One hundred forty-seven subjects (93 female) from UCSB completed a computerized version of the WTR task as Study 3. The same set of decisions used in Studies 1 and 2 was employed. Also as in Studies 1 and 2, subjects responded about both a friend and an acquaintance; which came first was randomized. Because of the computerized format of Study 3, however, subjects only saw a single decision at a time and could not revisit or review a decision once it was made. Although the randomized version in Studies 1 & 2 was a single randomization used across all subjects, in Study 3 each subject experienced a unique randomization of all decisions within a target. Moreover, the computer displayed the friend’s or the acquaintance’s initials in place of “Other” as shown in the examples of the task in Figure 1. Screen shots of the computerized tasks used in Studies 3-5 are shown in Appendix 2. In Studies 1 and 2, decisions were purely hypothetical. In this study, however, half of the subjects could potentially earn real money for themselves or allocate real money to a friend or acquaintance based on their decisions on the WTR task. To do this, this study used an incentive compatible payoff mechanism. Subjects in this condition were told that after they completed the task they would have the opportunity to roll two dice. If they rolled double sixes, then two things would happen. First, the subject would receive an additional $30. Second, they could randomly select one of their decisions (i.e.
blind to the values or their choice on that decision) and that decision would be made real. If that decision gave money to the subject, they would receive a check in the mail for that sum plus $30. If that decision gave money to the friend or acquaintance, the subject would receive a $30 check in the mail and the friend or acquaintance would receive a check in the mail for the sum that the decision dictated be allocated to the friend or acquaintance. The initial sum of $30 was chosen so that no subject would pay out-of-pocket to participate in the experiment (there are decisions that could cause the subject to lose up to $27). After learning about the payoff mechanism and being thoroughly quizzed to ensure understanding, subjects then completed the WTR task for both a friend and an acquaintance. To avoid violations of the privacy of the friends and acquaintances, subjects were asked to select same-sex friends and acquaintances with the constraint that these people appeared in a public phone book that was provided. In practice, only 1 subject in this condition rolled double sixes and the choice dictated giving money to the subject. After completing their decisions, but before finishing with the payoff mechanism, subjects completed the same additional dependent measures as in Studies 1 & 2. In the other half of Study 3’s sample, subjects did not have the opportunity for their decisions to become real. However, they did have the opportunity to roll two dice at the end of the experiment. If they rolled double sixes, they would receive $30 in the mail. Subjects were also quizzed prior to engaging in the task to ensure they understood the payoff mechanism. All other aspects were identical between the two conditions. In both conditions, subjects chose their friend and acquaintance prior to learning the payoff mechanism; thus, whether money was at stake could not affect the targets subjects chose. In both conditions, all subjects received $10 in cash as a show-up fee.
The instructions given verbally by the experimenter to subjects are shown in Appendix 3. Once subjects began the computerized task, instructions were as in Studies 1 and 2.

Study 4: Decisions involving two other individuals. Study 4 consisted of 82 subjects (48 female) from UCSB, who were paid for their participation. Subjects again completed a computerized version as in Study 3. The program asked subjects to choose six individuals with whom they had a range of relationships. See Appendix 4 for complete instructions. The program then randomly selected two of these individuals. This was done to get a broader range of targets. At the end of the survey, subjects were asked to choose which of the two they felt closer to. To simplify exposition, in Study 4 I call the closer person “friend” and the more distant person “acquaintance.” When subjects were filling out the WTR tasks, these individuals were specified by their initials. Subjects completed the WTR task with four different types of content: (1) Tradeoffs involving themselves and their friend. (2) Tradeoffs involving themselves and their acquaintance. (3) Tradeoffs between their friend and acquaintance with acquaintance in the “anchored” role. (4) Tradeoffs between their friend and acquaintance with friend in the “anchored” role. The order in which subjects completed these four formats was randomized. (1) & (2) provide estimates of standard WTRs. (3) & (4) provide numerical estimates of the tradeoff between third-parties; these third-party tradeoffs should be a function of standard WTRs. (Subjects did not complete any additional dependent measures in this study.)

Study 5: Comparing logically identical tasks. Study 5 consisted of 112 subjects (78 female) from UCSB who were paid for their participation. Subjects in this sample completed the WTR task for a friend or an acquaintance, not both.
They also completed two versions of a task that asked them to compare sums of money expressed as dollars and Euros and determine which was larger. These tasks were computerized and randomized as in Studies 3 and 4. Instead of using the actual exchange rate, subjects were given a hypothetical exchange rate that allowed them to convert Euros to dollars. These hypothetical exchange rates were drawn from the empirically determined WTRs in Study 1. Thus, the exchange rate task had the same logical structure as the WTR task: compare two numbers after discounting one by a specific number. Each subject completed one version of the exchange task with the exchange rate presented as a decimal and another with a different exchange rate presented as a fraction. Whether the first exchange task presented the rate as a decimal or fraction was randomized across subjects. Full instructions are given in Appendix 5. Subjects did not complete the same additional dependent measures as in Studies 1-3, but did complete a different, smaller set of additional measures described in the next section.

Dependent Measures

Welfare tradeoff task. In all studies, subjects completed the welfare tradeoff task described above and illustrated in Figure 1. The full ordered version of the task as employed in Study 1 can be seen in Appendix 1.

Exchange rate tasks and supplemental questions. Each subject in Study 5 completed two tasks that were mathematically identical to the WTR task. Both tasks asked subjects to pick which of two sums of currency represented a larger value. One sum was expressed as US dollars, the other as Euros. Each task required subjects to make sixty decisions. The numbers used in these decisions were identical to the numbers used in the WTR task; the sums expressed in Euros corresponded to the anchored values in the anchored WTR sets.
Unlike the WTR task, the exchange rate tasks required us to give subjects a tradeoff value to use in their decisions—an exchange rate that allowed them to convert Euros into dollars. One version of the task gave subjects the exchange rate as a decimal; the other as a fraction. As exchange rates, I used the WTRs assigned to subjects in the unordered condition of Study 1. (Although subjects in Study 1 did not complete the computerized version of the task, the procedure was otherwise the most similar to Sample 5.) I used WTRs from a previous sample for two reasons. First, although subjects in Sample 5 will not be using self-generated numbers in the exchange rate task, they will still be using numbers that other subjects were able to use successfully in making decisions. Second, by using WTRs as exchange rates I can further reduce any differences between the exchange rate tasks and the WTR task. There were two exceptions to using Sample 1’s WTRs as exchange rates: First, I excluded negative WTRs because an exchange rate cannot be negative. Second, if the WTR from Sample 1 was between -.05 and 0 inclusive, I recoded the WTR as +.01. Given our scoring algorithm, -.05 is one possible WTR that could be assigned to an anchored set and, for consistency, we assigned that WTR to subjects with the appropriate switch point. However, it is more realistic to expect that subjects with this switch point simply put zero weight on the other person’s welfare. Because my goal with Sample 5 was to use estimated WTRs as exchange rates, I elected to convert these to slightly positive numbers. After making these exclusions and conversions, and rounding each revealed WTR to two decimal places, I then created two multinomial distributions, one composed of WTRs for friends and one of WTRs for acquaintances. The probability associated with each WTR in these distributions was the proportion of subjects having that WTR in Sample 1.
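As a rough illustration of the exclusion, recoding, and rounding steps above, the following Python sketch builds such a distribution from a list of WTRs. The function name `exchange_rate_distribution` and the input values are hypothetical and are not data from any sample; the sketch also assumes that proportions are computed over the WTRs that remain after exclusion, which the text does not state explicitly.

```python
from collections import Counter


def exchange_rate_distribution(wtrs, tol=1e-9):
    """Map a list of estimated WTRs to {exchange rate: probability}.

    Assumed reading of the text:
      * WTRs below -.05 are excluded (an exchange rate cannot be negative);
      * WTRs between -.05 and 0 inclusive are recoded as +.01;
      * remaining WTRs are rounded to two decimal places;
      * probabilities are proportions among the retained WTRs (assumption).
    """
    kept = []
    for w in wtrs:
        if w < -0.05 - tol:      # clearly negative: excluded
            continue
        if w <= 0:               # -.05 to 0 inclusive: slightly positive
            kept.append(0.01)
        else:
            kept.append(round(w, 2))
    counts = Counter(kept)
    total = len(kept)
    return {rate: n / total for rate, n in sorted(counts.items())}


# Hypothetical input, for illustration only.
example = [-0.25, -0.05, 0.0, 0.35, 0.35, 0.617]
dist = exchange_rate_distribution(example)
```

A small tolerance is used at the -.05 boundary because averaged WTRs stored as floating-point numbers may not equal -.05 exactly.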
For each subject in Sample 5, the computer randomly drew two numbers from the friend distribution or two numbers from the acquaintance distribution, consistent with the subject’s condition. One of these numbers was expressed as a two-digit decimal numeral; the other was expressed as a fraction. Because the same numbers were used in this task as in the WTR task and the logic of the tasks is identical, I can compute a “revealed” exchange rate for the exchange rate tasks in the same manner as an estimated WTR is computed in the WTR task. Thus, I compute a revealed exchange rate as if I had not given subjects an exchange rate and was attempting to determine what exchange rate they were in fact using. The consistency measures presented below are based on this revealed exchange rate. This avoids biasing the results such that WTR performance appears more consistent—after all, I do not have access to subjects’ actual WTRs, only their performance. Subjects’ responses and reaction times for each decision were recorded in Study 5. After completing all three tasks, subjects were also asked to rate the difficulty of each task on separate 7-point scales from 1 = “not at all difficult” to 7 = “very difficult.” Due to a computer error, this measure was not recorded for eleven subjects.

Additional measures. To assess whether there were predictable relationships between our assessment of subjects’ WTRs and psychological responses that should covary with or are instantiated by WTRs, we included additional measures in Studies 1, 2, & 3.

Social Value Orientation. The Social Value Orientation (SVO) measure is designed to assess general preferences for resource allocation and has been shown to be an ecologically valid measure of actual behavior (Van Lange, Otten, DeBruin, & Joireman, 1997). This measure consists of nine items. Each item has three choices. Each choice allocates a number of “points” to the subject and a number of “points” to the friend/acquaintance.
One choice is the prosocial choice. Subjects choosing this option reveal a preference that both they and the other receive equal amounts. Another choice is the individualistic choice. Subjects choosing this prefer to receive the most possible (relative to their payoffs under the other two choices), regardless of the other’s outcome. (In this case, the other receives less than the subject and less than in the prosocial choice.) The remaining choice is competitive. Subjects preferring this choice desire to maximize the difference between their allocation and the other’s allocation even though this makes the other worse off than in the prosocial and individualistic choices and makes the subject worse off than in the individualistic choice but identical to the prosocial choice. According to the standard scoring procedure (Van Lange, et al., 1997), a subject is categorized as prosocial, individualistic, or competitive if 6 out of the 9 choices are consistent with that orientation. Subjects who do not have 6 choices consistent with any of the orientations do not appear in any analyses involving SVO. In practice, very few subjects were classified as competitive. To simplify the analyses I combine the individualist and competitive categories into a single category (as is standard in this literature; Van Lange, 1999). If the WTR measure is actually measuring an internal magnitude that regulates welfare tradeoffs, then there should be a positive correlation between subjects’ SVO (prosocial dummy coded as 1; individualistic/competitive dummy coded as 0) and WTRs: Individuals with higher WTRs toward their friends/acquaintance should act in a more prosocial way toward them.

Communal Strength. The Communal Strength Scale (CSS) is designed to measure “the degree of responsibility a person feels for a particular communal partner’s welfare” (Mills, Clark, Ford, & Johnson, 2004, p. 213). It is a valid and reliable measure that predicts actual behavior (Mills, et al., 2004).
Sample items include “How large a cost would you incur to meet a need of your friend/acquaintance?” and “How much would you be willing to give up to benefit your friend/acquaintance?” Responses are made on 10-point scales with “not at all” and “extremely” as anchors. In many ways, the CSS is a face-valid rating-scale measure of the WTR construct (especially as applied to relationships based on “intrinsic” valuation). Reliability measures (Cronbach’s α) were high, ranging from .75 to .88. The final measure is created by averaging the items. CSS scores should positively correlate with WTR scores.

Inclusion of Other in Self. The Inclusion of Other in Self (IOS) scale is designed to measure the phenomenology of psychological closeness (Aron, Aron, & Smollan, 1992). The scale is a single, graphical item. It depicts seven sets of two circles that are labeled “self” and “other.” The pairs of the circles are depicted as having progressively more overlap. Subjects are asked to choose which degree of overlap best represents their relationship with the other. More overlap represents greater inclusion of the other in the self. The scale is coded 1 to 7, with higher values representing greater closeness. IOS scores should positively correlate with WTR scores.

Relationship Quality Items. I created six additional items to measure the quality of subjects’ relationships with their friend and acquaintance. Items were rated on a 7-point scale with anchors of “not at all,” “somewhat,” and “very” at 1, 4, and 7. The items were (1) how much the subjects liked their friend/acquaintance, (2) how close they felt to their friend/acquaintance, (3) how much they could rely on their friend/acquaintance, (4) how much their friend/acquaintance could rely on them, (5) how willing they would be to do their friend/acquaintance a favor, and (6) how willing their friend/acquaintance would be to do them a favor. Reliability measures (Cronbach’s α) were high, ranging from .88 to .92.
The final measure is created by averaging the items. The relationship quality items should be positively correlated with WTRs.

Results

To recap, for each subject I estimated (i) an average WTR across the six anchored sets, (ii) the percentage of anchored sets that are perfectly consistent (i.e. have one switch point), and (iii) the average percentage of choices within an anchored set that are consistent with the WTR assigned to that set by consistency maximization. Welfare tradeoff decisions were completed by subjects once for a close friend and once for an acquaintance (in Studies 1-4) or for only one of these categories (Study 5). All significance tests use two-tailed p-values. Standard Pearson correlations (r) are used as a measure of effect size. Unless otherwise noted, I test hypotheses using focused contrasts (Rosenthal, Rosnow, & Rubin, 2000). I examined every WTR measure across all five studies for sex differences, applying a two-tailed α of .005 to each comparison. (Given that many of the dependent measures are not independent, it is difficult to do standard corrections, such as the Bonferroni. An α of .005 applied to each comparison acts as if there were only two independent tests per study and a total α of .05, obviously a very liberal threshold). No differences passed this threshold. Sex differences do not appear to qualify my results.

Result 1: A Single Switch Point Predicts Decisions

Do subjects’ responses meet the criterion of consistency? Are their decisions summarizable by a single number? Yes. In the US, 83-85% of anchored sets were perfectly consistent with a single switch point; in Argentina, the percent was lower, but still remarkably high at 70% (see Table 1, leftmost data column). If subjects were responding randomly, the expected value is only 1.1% (relative to this null, all ps < 10^-43, effect sizes (r) range from .91 to .97).
Table 1
Means (Standard Deviations) of the Consistency Measures

Perfect Consistency

| Sample | % Sets Consistent Using Target Identity | % Sets Consistent As General Social Preference | Test of the Difference: r | Test of the Difference: p |
|---|---|---|---|---|
| Sample 1, Ordered | 88% (23%) | — | — | — |
| Sample 1, Unordered | 83% (24%) | — | — | — |
| Sample 1, Collapsed | 85% (23%) | 25% (32%) | .88 | 10^-54 |
| Sample 2, Ordered | 74% (32%) | — | — | — |
| Sample 2, Unordered | 65% (29%) | — | — | — |
| Sample 2, Collapsed | 70% (31%) | 16% (27%) | .86 | 10^-39 |
| Sample 3 (Unordered) | 85% (22%) | 32% (33%) | .86 | 10^-44 |
| Sample 4 (Unordered) | 83% (25%) | 32% (35%) | .84 | 10^-22 |

Consistency Maximization

| Sample | % Decisions Consistent Using Target Identity | % Decisions Consistent As General Social Preference | Test of the Difference: r | Test of the Difference: p |
|---|---|---|---|---|
| Sample 1, Ordered | 98% (6%) | — | — | — |
| Sample 1, Unordered | 97% (5%) | — | — | — |
| Sample 1, Collapsed | 97% (5%) | 90% (8%) | .76 | 10^-32 |
| Sample 2, Ordered | 95% (8%) | — | — | — |
| Sample 2, Unordered | 95% (7%) | — | — | — |
| Sample 2, Collapsed | 95% (8%) | 88% (9%) | .82 | 10^-31 |
| Sample 3 (Unordered) | 98% (4%) | 93% (6%) | .71 | 10^-23 |
| Sample 4 (Unordered) | 97% (5%) | 91% (10%) | .64 | 10^-10 |

Note. Results in the columns labeled “Using Target Identity” average across consistency scores assigned to tasks involving friends and acquaintances. “Collapsed” averages across data in the “Ordered” and “Unordered” rows.

As its name implies, the perfect consistency score requires perfect consistency. But inconsistencies due to lapses of attention or error are expected. If even one decision among 10 is not consistent with the switch point used for the other 9 decisions in an anchored set, that set will not count as perfectly consistent. The consistency maximization measure quantifies consistency without requiring perfection. By this alternative measure, 95-98% of choices were consistent with the welfare tradeoff ratio ultimately assigned to that ordered set; this is higher than the expected value of 70.84% if answers were random (relative to this null, all ps < 10^-59, effect sizes (r) range from .95 to .99).
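The two random-responding baselines quoted above (about 1.1% for perfect consistency and 70.84% for consistency maximization) can be checked by brute force. The sketch below is illustrative and rests on an assumption the text does not spell out: that "random" means each of the ten decisions in an anchored set is an independent, equiprobable choice between self and other. The helper name `best_fit` is hypothetical.

```python
from itertools import product

RATIOS = [-0.35, -0.15, 0.05, 0.25, 0.45, 0.65, 0.85, 1.05, 1.25, 1.45]
# The 11 candidate WTRs that can be assigned to an anchored set.
CANDIDATES = [round(-0.45 + 0.2 * k, 2) for k in range(11)]


def best_fit(choices):
    """Largest number of decisions consistent with any single candidate WTR.

    choices[i] is True if the subject allocated to the other at RATIOS[i];
    a candidate WTR w predicts allocating to the other whenever r < w.
    """
    return max(
        sum((r < w) == c for r, c in zip(RATIOS, choices))
        for w in CANDIDATES
    )


# Enumerate all 2**10 equally likely response patterns for one anchored set.
patterns = list(product([False, True], repeat=len(RATIOS)))
fits = [best_fit(p) for p in patterns]

n_perfect = sum(f == len(RATIOS) for f in fits)
p_perfect = n_perfect / len(patterns)                 # share of perfectly consistent sets
mean_max = sum(fits) / (len(RATIOS) * len(patterns))  # expected consistency maximization

print(f"perfect consistency under random responding: {p_perfect:.4f}")
print(f"consistency maximization under random responding: {mean_max:.4f}")
```

Under this assumption only 11 of the 1,024 possible patterns have a single switch point (or none), which is where the roughly 1.1% baseline comes from.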
By either measure, subjects’ decisions were highly consistent with a single switch point, suggesting that decision-making procedures are consulting a precise, internal magnitude for each social other.

Result 2: Consistency is Not Due to the Artificial Nature of the Task

Are subjects’ consistent responses due to the artificial nature of the task? To see how this could be the case, observe that, in Fig. 1A, the tradeoffs within an anchored set are ordered: the amount for the other—the anchor—stays the same, and the amount for the self decreases with each successive decision: for example, $39 for self or $37 for friend; $31 self or $37 friend; $24 self or $37 friend, and so on. Ordered sets invite consistency, especially when all tradeoffs are presented simultaneously on the same paper or screen: a person who is willing to forgo $31 (but not $39) to deliver $37 to a friend, can see, with no further computation, that all subsequent tradeoffs in the set allow them to deliver the same benefit to their friend ($37) with even less self-sacrifice (forgoing $24, $17, $9, etc). Consider, in contrast, Fig. 1B, where the order of presentation is randomized, not just within an anchored set but between them: $31 self or $37 friend could be flanked by $21 self or $46 friend, $71 self or $68 friend or indeed, by any tradeoff in the instrument. There is no simple way to extract a single switch point from this unordered task format; any attempt to achieve consistency through deliberation—by remembering and comparing one’s responses across tradeoffs—will impose a large load on working memory, especially when tradeoffs are presented one at a time on a computer screen. If the consistency observed results from deliberative processes that construct a switch point on the fly—that is, in response to the task instead of through computations based on stored magnitudes—then consistency will suffer when subjects are given the unordered, randomized format.
But if decisions are made by a system that consults internal magnitudes, the welfare tradeoff ratios, then consistency should be just as high for unordered as for ordered sets. Because each decision is standalone, it can be forgotten as soon as it is made with no loss in consistency. On this view, consistency is achieved by consulting an internal magnitude computed from stored, upstream values, not by deliberative processes that remember and make comparisons across decisions. As Table 1 shows, consistency is high regardless of task format: there were no significant differences in consistency between the ordered and unordered versions of the task in Samples 1 and 2, whether the standard was perfect consistency or consistency maximization (.08 ≤ ps ≤ .92, .01 ≤ rs ≤ .16). Indeed, consistency was just as high for unordered tasks of Samples 3 and 4, where tradeoffs were presented one at a time on a computer screen—a method that prevents subjects from revisiting any previous decision. High levels of consistency that are insensitive to large variations in task format are what one would expect if responses are made by consulting welfare tradeoff ratios rather than being computed online.

Result 3: Subjects’ Decisions Represent Actual Valuation of Others; They Are Not “Cheap Talk”

As seen in Table 2, subjects’ average welfare tradeoff ratios are well above zero. Is this apparent generosity a product of “cheap talk?” No: Hypothetical decisions and decisions with real monetary consequences produced similar estimated welfare tradeoff ratios and consistencies (Sample 3, Table 2). For WTRs for friends, the size of the difference between the two conditions was r = .14, p = .099. For WTRs for acquaintances, the size of the difference was even smaller, r = .05, p = .51. As shown in Fig. 2, the frequencies of estimated welfare tradeoff ratios for friends and acquaintances, while not identical, are extremely similar across the two conditions.
Regardless of the stakes, subjects’ responses appear to be based on an internal variable representing real valuation.

Result 4: WTRs Are Person-Specific; They Carry More Information Than a Simple Other-Regarding Preference

Result 4A: The mind assigns different WTRs to different individuals. Do subjects’ responses reveal target-specificity in their decision making? Or are friends and acquaintances treated identically, as if subjects had a single other-regarding preference? If the mind computes person-specific welfare tradeoff ratios as part of a system for making adaptive welfare allocations, then different individuals should be valued differently. To test this prediction in Studies 1-4, subjects completed the task twice, once for a friend and once for an acquaintance. As Table 2 shows, subjects’ revealed WTRs were higher for friends than for acquaintances.

Result 4B: Decisions regarding two individuals cannot be summarized by a single WTR. Result 1 showed that subjects’ responses for a given target could be summarized by a single switch point. But does that finding reflect the existence of a target-specific WTR, or is it due to a general (but consistent) social preference that is applied across targets? I recomputed consistency scores for each subject, collapsing across the 10 decisions they made for a friend and the 10 they made for an acquaintance in a given anchored set. If a subject’s decisions reflect nothing more than a general social preference, a single switch point should be found; that is, consistency scores should be as high as those found when the computation is done separately for friends and acquaintances. An examination of Table 1 shows that the identity of the target cannot be ignored. Whether looking at perfect consistency or consistency maximization, subjects’ responses are much less consistent if summarized by a single switch point.
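The logic of the two consistency measures, and of pooling friend and acquaintance decisions under one switch point, can be sketched in a few lines of Python. This is an illustrative reading of the measures, not the scoring code used in the studies: the function name `fit_switch_point`, the boolean encoding of choices (True = chose the option benefiting the other), and the two example response patterns are all assumptions made for this sketch.

```python
from typing import Sequence, Tuple


def fit_switch_point(choice_sets: Sequence[Sequence[bool]]) -> Tuple[int, float]:
    """Find the single switch point that best summarizes one or more anchored sets.

    Each inner sequence holds the decisions for one anchored set, ordered from the
    largest to the smallest amount for the self. True means the subject chose the
    option benefiting the other. A switch point k predicts "self" before position k
    and "other" from position k onward.

    Returns (best_k, proportion of decisions consistent with best_k). A proportion
    of 1.0 corresponds to perfect consistency; the proportion itself corresponds
    to the consistency maximization measure (as interpreted in this sketch).
    """
    n = len(choice_sets[0])
    total = n * len(choice_sets)
    best_k, best_hits = 0, -1
    for k in range(n + 1):
        hits = sum(
            (position >= k) == chose_other
            for choices in choice_sets
            for position, chose_other in enumerate(choices)
        )
        if hits > best_hits:
            best_k, best_hits = k, hits
    return best_k, best_hits / total


# Hypothetical subject: switches early for a friend, late for an acquaintance.
friend = [False] * 2 + [True] * 8
acquaintance = [False] * 6 + [True] * 4

print(fit_switch_point([friend]))                # each target alone is perfectly consistent
print(fit_switch_point([acquaintance]))
print(fit_switch_point([friend, acquaintance]))  # one shared switch point fits worse
```

In this made-up case each target's decisions fit a switch point perfectly, but no single switch point fits all 20 pooled decisions, which is the pattern Result 4B tests for.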
Effect sizes (r) when comparing target-specific consistency to general preference consistency were large, ranging from .84 to .88 for perfect consistency and .64 to .82 for consistency maximization.

Table 2
Welfare Tradeoff Ratio Means (Standard Deviations)

| Origin & Sample # | Friend Welfare Tradeoff Ratio | Acquaintance Welfare Tradeoff Ratio | Friend-Acq Difference: r | Friend-Acq Difference: p |
|---|---|---|---|---|
| United States, Sample 1 | .62 (.35) | .34 (.36) | .67 | \(10^{-22}\) |
| Argentina, Sample 2 | .75 (.37) | .50 (.37) | .66 | \(10^{-17}\) |
| United States, Sample 3: Pay | .43 (.40) | .30 (.41) | .56 | \(10^{-6}\) |
| United States, Sample 3: Hypothetical | .54 (.38) | .34 (.39) | .60 | \(10^{-8}\) |
| United States, Sample 3: Collapsed | .48 (.39) | .32 (.40) | .58 | \(10^{-13}\) |
| United States, Sample 4 | .44 (.45) | .27 (.40) | .41 | \(10^{-4}\) |

Note. Acq = acquaintance. “Collapsed” averages across data in the “Pay” and “Hypothetical” rows.

[Figure 2: two histograms, “WTR for Friend” and “WTR for Acquaintance”; x-axis: WTR Value (-0.35 to 1.45); y-axis: Number of Subjects; series: Hypothetical, Pay.]

Figure 2. Frequency distributions of welfare tradeoff ratios for friends and acquaintances when choices do and do not have monetary consequences. Although the size of the WTRs is reduced in the condition with monetary consequences, the majority of subjects in that condition still have greater-than-zero WTRs.

However, at least for the perfect consistency measure, there is a potential confound. For an anchored set to count as perfectly consistent when treating targets individually, a subject need only make ten decisions without error. However, to count a set as perfectly consistent when treating WTRs as a general social preference, a subject must make twenty decisions without error (i.e., the ten about a friend and the ten about an acquaintance for the same anchored value).
Because there are more possible ways to make an error, this could be artificially lowering the apparent consistency of subjects when their responses are evaluated as a general social preference. (Note that this objection cannot apply to the consistency maximization results and so cannot be used as a counter-hypothesis for this dependent variable. This is because the consistency of decisions regarding, e.g., a friend cannot affect the consistency of decisions regarding, e.g., an acquaintance for the consistency maximization measure; the consistent effects are purely additive. However, for the perfect consistency measure, if the decisions regarding a friend are not made perfectly consistently then, when analyzing as a general social preference, it will not matter whether the decisions involving an acquaintance were made perfectly consistently; regardless, the combined set will be counted as not perfectly consistent.)

To make sure this was not the case, I did the following. The probability of making no errors in a ten-item ordered set, call this P(correct|10 decisions), can be estimated by computing the average percentage of perfectly consistent anchored sets that a subject completes. Note that completing two anchored sets perfectly is required of subjects when consistency is evaluated as if WTRs were a general social preference. So, if subjects did have a general social preference, then the probability of completing the same anchored set for a friend and an acquaintance in a perfectly consistent fashion is the square of P(correct|10 decisions). For example, using Sample 1’s collapsed mean from Table 1, P(correct|10 decisions) = 85%. Squaring this value returns 72% as the predicted probability of making a perfectly consistent set of 20 decisions.
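Written out, the prediction under the general-preference hypothesis is the following. The only assumption beyond the text is the one the squaring already implies: that the two ten-decision sets are completed independently.

\[
P(\text{correct} \mid 20\ \text{decisions}) = P(\text{correct} \mid 10\ \text{decisions})^{2} = 0.85^{2} = 0.7225 \approx 72\%
\]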
However, attempting to summarize the 10 decisions regarding a friend and the 10 decisions regarding an acquaintance with only a single general social preference results in only 25% of sets being perfectly consistent, far below 72%. I computed this value for each subject and tested it against the percentage of consistent sets when acting as if WTRs were a general social preference. Relative to the effect sizes in Table 1, the effect sizes in these comparisons are barely reduced, .77 < r < .84, all ps < \(10^{-17}\). This analysis reaffirms the conclusion that subjects’ decisions regarding multiple others cannot be reduced to a general social preference.

Result 5: Individualized WTRs Are Correlated with Decisions Involving Only Third-Parties

Welfare tradeoff ratios are hypothesized to be part of an evolved welfare allocation system. If this is true, then aside from allowing direct tradeoffs between one’s own and another’s welfare, decision-making procedures will also consult WTRs, or the variables that determine WTRs, when making tradeoffs between two other individuals (the criterion of common effects). Consider, for example, decisions in which a parent must decide which of two children gets the last piece of food. Making such a decision requires integrating the mind’s separate valuations of each child. To test this, subjects in Study 4 completed the standard self–other tasks for a friend and an acquaintance (Fig. 1B), and also a version in which they made tradeoff decisions between the same friend and acquaintance. Just as the standard version allows the estimation of how much a subject will trade off personal welfare to benefit another, this new version allows the estimation of how much a subject will trade off a friend’s welfare to benefit an acquaintance.
If target-specific WTRs (or the factors that set target-specific WTRs) are used in making tradeoffs between two third-parties, then these two values, \(\text{WTR}_{\text{friend}}\) and \(\text{WTR}_{\text{acquaintance}}\), should predict friend-acquaintance tradeoffs in a multiple regression. The more one values a friend (that is, the greater one’s WTR for the friend), the less one will trade off that friend’s welfare to benefit an acquaintance. I first test this using as the dependent variable the version of the task involving trading off a friend’s welfare to benefit an acquaintance (i.e., where the acquaintance is in the “anchored” position). Results conformed to predictions: When \(\text{WTR}_{\text{friend}}\) and \(\text{WTR}_{\text{acquaintance}}\) were entered simultaneously in a multiple regression analysis, the unstandardized regression weight for \(\text{WTR}_{\text{friend}}\) as a predictor of friend-acquaintance tradeoffs was negative, b = -.32, p = .008. Similarly, the more one values an acquaintance (that is, the greater one’s WTR for the acquaintance), the more one will trade off a friend’s welfare to benefit that acquaintance. This too was supported: in the same regression analysis, the unstandardized regression weight for \(\text{WTR}_{\text{acquaintance}}\) was positive, b = .65, p = \(10^{-6}\). These results show that decisions involving two other individuals are partially a function of the target-specific welfare tradeoff ratios (or the variables that are ultimately used to compute WTRs), as predicted by the hypothesis that WTRs are important internal regulatory variables within an integrated social allocation system.

Like the self–other instrument, the friend–acquaintance instrument used above puts the individual likely to be less valued, the acquaintance, in the anchor position.
Although I also gave subjects a friend–acquaintance instrument in which the friend was in the anchor position, there are interpretational issues when the more highly valued person is in the anchor position (e.g., for the self-other instrument, putting self in the anchor role rather than where it is in Figure 1; or for the friend-acquaintance instrument, putting the friend into the anchor role). Consider a case where my \(\text{WTR}_{\text{friend}}\) is .5 and my \(\text{WTR}_{\text{acquaintance}}\) is .1. Hypothetically, I should give to the acquaintance if the following inequality is satisfied:

\[
\text{WTR}_{\text{friend}} \times \text{Benefit}_{\text{friend}} < \text{WTR}_{\text{acquaintance}} \times \text{Benefit}_{\text{acquaintance}}
\]

Rearranging:

\[
\frac{\text{WTR}_{\text{friend}}}{\text{WTR}_{\text{acquaintance}}} < \frac{\text{Benefit}_{\text{acquaintance}}}{\text{Benefit}_{\text{friend}}}
\]

Substituting:

\[
\frac{.5}{.1} = 5 < \frac{\text{Benefit}_{\text{acquaintance}}}{\text{Benefit}_{\text{friend}}}
\]

So \(\text{Benefit}_{\text{acquaintance}}\) would need to be more than five times \(\text{Benefit}_{\text{friend}}\) for me to even begin allocating to my acquaintance. That is, if my friend is in the anchor/“other” role in Fig. 1 and my acquaintance is in the “you” role, then I might not choose the option benefiting my acquaintance until the amount was more than 5 times higher than the amount available for my friend. Yet the instrument never had differences this large; the value for the acquaintance did not go above 1.55 times the amount for the friend. This means that no subject who values their friend more than 1.55 times as much as their acquaintance will make a decision favoring their acquaintance over their friend. As a result, these people will all be scored as having the highest friend-favoring ratio (i.e., the highest \(\text{WTR}_{\text{friend}}/\text{WTR}_{\text{acquaintance}}\) ratio) that the instrument allows (1.55), even though their actual friend-favoring ratio can have any value exceeding 1.55 (reciprocally, they will have the lowest acquaintance-favoring WTR allowed by the instrument, 1/1.55, producing a floor effect). This ceiling effect dramatically curtails the variance in measured WTRs, restricting the measure’s ability to correlate with other measures.
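The ceiling effect just described can be illustrated with a short Python sketch. The decision rule and the 1.55 limit come from the text; the function names and the three hypothetical subjects are assumptions made for illustration, and the clipping function is a simplification of how the instrument would score such subjects.

```python
MAX_INSTRUMENT_RATIO = 1.55  # largest acquaintance-to-friend payoff ratio offered (from the text)


def favors_acquaintance(wtr_friend, wtr_acq, benefit_friend, benefit_acq):
    """Decision rule from the text: allocate to the acquaintance only if
    WTR_friend * Benefit_friend < WTR_acquaintance * Benefit_acquaintance."""
    return wtr_friend * benefit_friend < wtr_acq * benefit_acq


def measured_friend_favoring_ratio(wtr_friend, wtr_acq, max_ratio=MAX_INSTRUMENT_RATIO):
    """Simplified scoring: true ratios above the instrument's limit are
    all recorded at the limit (the ceiling)."""
    return min(wtr_friend / wtr_acq, max_ratio)


# Hypothetical subjects with very different true friend-favoring ratios (2, 5, 8).
subjects = [(0.4, 0.2), (0.5, 0.1), (0.8, 0.1)]
for wtr_friend, wtr_acq in subjects:
    print(wtr_friend / wtr_acq, measured_friend_favoring_ratio(wtr_friend, wtr_acq))

# With WTRs of .5 and .1, even the most acquaintance-favoring payoff on the
# instrument (1.55 times the friend's amount) is not enough to favor the acquaintance.
print(favors_acquaintance(0.5, 0.1, benefit_friend=37, benefit_acq=37 * 1.55))
```

All three hypothetical subjects receive the same measured score despite differing true ratios, which is why the variance available for correlation shrinks.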
A ceiling effect was clearly present in the data: when friend was in the anchor position, the distribution of measured WTRs was strongly skewed, with the modal value being the highest possible score. (In contrast, WTRs were normally distributed when acquaintance was in the anchor position, just as they were when other was the anchor in the self-other instruments. The distribution of choice values was chosen on the assumption that the lower valued person would be in the anchor position.) Although this effect was anticipated, I decided to keep the task identical to the other samples to allow direct comparability.

Even though there was the expected ceiling effect when friend was in the anchor position, multiple regression showed that this measure of tradeoff between acquaintance and friend was positively related to \(\text{WTR}_{\text{friend}}\) (b = .38, p = .016), and negatively (though not significantly) related to \(\text{WTR}_{\text{acq}}\) (b = -.24, p = .16). That is, you are more willing to sacrifice the welfare of your acquaintance to help your friend the more highly you value your friend relative to yourself; and you are less willing to sacrifice the welfare of your acquaintance to help your friend when you value that acquaintance highly relative to yourself. The weaker effect sizes here are expected given the range restriction artificially imposed by my measure, but the direction of the effects was preserved.

Results 6 & 7: Welfare tradeoff ratios behave as other magnitudes, but are algorithmically separable from other magnitudes

Welfare tradeoff theory predicts that the mind contains an evolved internal variable designed to regulate welfare tradeoffs and that an instantiation of this variable is created for each social actor with whom the self interacts. Further, the existence of this variable would explain subjects’ ability to achieve high levels of consistency on the WTR task.
However, one may reasonably ask to what extent these effects are due to general rationality or mathematical abilities. To begin answering this question, note that the relevance of the consistency data to the hypothesis that there is an internal regulatory variable for making welfare tradeoffs is due to the switch point being self-generated. Subjects appear to create their own decision threshold and use that threshold to make an internally consistent series of decisions. Moreover, this decision threshold correlates with theoretically relevant measures (see Table 3 below) and is largely unaffected by whether decisions have monetary consequences (Figure 2 and Table 2). If the WTR were not a stable feature of the mind, then manipulations that make it less likely for subjects to create a single, coherent switch point, such as a random presentation of the choices, should have a considerable impact on subjects’ consistency. Yet they do not (compare the ordered and random conditions in Result 2).

The hypothesis is not that people, particularly college-educated subjects, would have difficulty making consistent tradeoffs if they were given a specified tradeoff ratio to use in their calculations. There is a great deal of evidence that the human mind contains evolved specializations for manipulating analog magnitudes and that numerals can be assigned to these magnitudes (e.g., Spelke, 2000). Moreover, members of industrialized societies have “culturally bootstrapped” systems of exact mathematics, such as conscious arithmetic skills (e.g., Dehaene, Izard, Spelke, & Pica, 2008). These abilities would allow subjects to easily complete the task if a tradeoff value were specified for them. The ability of subjects to complete the WTR task using a self-generated tradeoff value (i.e., the hypothesized WTR) is most likely partially dependent on the ability to represent and manipulate analog representations of quantity.
To choose between two sums for self and other expressed with numerals, the numerals would need to be mapped to internal magnitudes. The novel theoretical point advanced here is that the internal representations of the two external sums would then be input for a domain-specific algorithm that arrives at an adaptive decision using the WTR. Two potential ways this algorithm may work are by (a) discounting the internal value for the other by the WTR and then comparing it to the internal value for the self or (b) computing the ratio of the value for the self to the value for the other and then comparing this to the WTR.

Because a general mathematical ability account could not, in and of itself, explain the origin of welfare tradeoff ratios (why subjects spontaneously generate these numbers or any numbers at all), it is difficult to see how such an account could parsimoniously explain the fundamental result: the self-generation of a single, precise, target-specific WTR. Nonetheless, I would like to go further and examine the ease with which subjects completed our task. If there is domain-specific machinery for making welfare tradeoffs, subjects should find this an easy and intuitive task.

To examine this, I conducted Study 5, in which subjects again completed the WTR task (for one person only) and also completed two tasks that were mathematically identical to the WTR task. Both tasks asked subjects to decide whether X dollars or Y Euros was worth more. Subjects were given a hypothetical exchange rate that allowed them to convert Euros to dollars. One version of the task gave subjects a hypothetical exchange rate expressed as a decimal. The second version gave subjects a different hypothetical exchange rate expressed as a fraction. Subjects were not required to memorize these exchange rates; the computer displayed them throughout the task.
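The mathematical parallel between the WTR task and the exchange rate tasks can be sketched as follows. This is a minimal illustration, not a model of the hypothesized mental algorithm: the function names, the example WTR of .9, and the example exchange rate of .9 are assumptions chosen for this sketch; the dollar amounts are those used as examples for Fig. 1A.

```python
def chooses_other_by_discounting(self_amount, other_amount, wtr):
    """Rule (a): discount the other's amount by the WTR, then compare to the self's amount."""
    return wtr * other_amount > self_amount


def chooses_other_by_ratio(self_amount, other_amount, wtr):
    """Rule (b): compare the self/other ratio to the WTR (assumes other_amount > 0)."""
    return self_amount / other_amount < wtr


def prefers_euros(dollars, euros, dollars_per_euro):
    """Exchange task: convert Euros with the displayed rate, then compare.
    Structurally the same computation as rule (a), with the rate in place of the WTR."""
    return euros * dollars_per_euro > dollars


# A hypothetical subject with a WTR of .9 toward a friend, facing one anchored set ($37 for friend).
wtr = 0.9
for self_amount in (39, 31, 24, 17, 9):
    a = chooses_other_by_discounting(self_amount, 37, wtr)
    b = chooses_other_by_ratio(self_amount, 37, wtr)
    print(self_amount, a, b)  # the two rules agree on every decision in this set
```

For positive amounts the two rules are algebraically equivalent, so behavioral data of this kind cannot by themselves distinguish (a) from (b).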
Given the structure of this task, it can also answer questions about whether our results could plausibly be due to the operation of domain-general rationality. Welfare tradeoff theory proposes that the mind contains specialized information-processing machinery designed for making adaptive welfare tradeoffs and allocations. One might propose as an alternative hypothesis that the mind has a general mechanism for computing preferences for a wide variety of stimuli, social and non-social, and that domain-general rationality operates over the resulting preference function. Under this view, preferences would be assigned to the X dollars for self and the Y dollars for the other, or, just as easily, to hot dogs, investment rates into a personal retirement account, potential spouses, and so on. Then (for mutually exclusive choices) the various preferences would be compared and the most preferred would be chosen.

Given that different targets elicit different revealed WTRs, the function that maps objective dollars for the other person into a preference must necessarily include a person-specific component. I have been assuming that, at a minimum, it involves multiplying dollars for the other by a person-specific constant. Even if one considers this particular assumption questionable, the more general claim that decision-making must involve multiplication is not: all psychological theories of choices over subjective utilities necessitate that the mind be able to multiply (Birnbaum, 2008b). Thus, a logically prior ability for making choices over preferences is the ability of preference mechanisms to implicitly perform multiplication. Moreover, rational choice theories rely heavily on computations involving probabilities. Surely, by these accounts, such probabilities are represented by magnitudes that functionally have two decimals of precision, such as I use in the decimal exchange task to represent the exchange rate.
Thus, on a rational choice account, using a number with two decimal places should be unproblematic.

Altogether, this implies that the task demands of the WTR task and the exchange task are similar: both require computations that, at a minimum, involve the multiplication of two numbers and then the comparison of two numbers. Indeed, there are several ways in which the exchange task has fewer demands. First, there is no need to transform objective money into subjective preferences; one need only multiply one number by the exchange rate and then compare. Second, all information required to solve the exchange task is presented simultaneously and there is no need to access any stored preference information; for the WTR task not all information is presented, requiring some to be drawn from memory. Therefore, the alternative hypothesis that our results can be explained by general rationality predicts that the exchange task will be just as easy, if not easier, to solve.

Comparisons of the WTR task with the two versions of the exchange rate task can answer four questions: (1) Are there any differences on measures of consistency between the WTR task and the other two tasks? (2) Are there differences in the time it takes subjects to make their decisions depending on the task? Greater time for the exchange rate tasks would suggest those tasks are more difficult. (3) Are the exchange rate tasks experienced as being more difficult than the WTR task? (4) Do similar manipulations have similar effects on the WTR task and the exchange rate tasks? If not, this would suggest that they are being processed by distinct algorithms despite their mathematical similarity.

First, as expected, there were no differences on either measure of consistency.
To make the computation of consistency as similar as possible across the two task types, instead of comparing subjects’ decisions to the given exchange rate, I calculate consistency as for the WTR task and determine whether any switch point is consistent with subjects’ decisions in the exchange task. (Note that the mean absolute difference between the given and revealed exchange rate was .16 and that the median absolute difference was .08, both less than the resolution of the instrument.) The mean percentage of anchored sets that were perfectly consistent in the WTR task (M = 82%, SD = 25%) was not different from the mean percentage of sets that were perfectly consistent in the decimal exchange rate task (M = 87%, SD = 22%), t(111) = -1.48, p = .141 (two-tailed), r = -.14, or in the fractional exchange rate task (M = 82%, SD = 25%), t(111) = -.09, p = .932 (two-tailed), r = .01. Using the consistency maximization method, the percentage of choices consistent with the assigned tradeoff value in the WTR task (M = 98%, SD = 5%) was not different from the percentage of consistent choices in the decimal exchange rate task (M = 98%, SD = 4%), t(111) = 1.35, p = .180 (two-tailed), r = -.13, or in the fractional exchange task (M = 98%, SD = 4%), t(111) = -.25, p = .801 (two-tailed), r = -.02. These results show, as expected, that subjects were equally able to give consistent responses to all choice tasks. Note, moreover, that the switch points in the exchange rate tasks were given to the subjects and were always displayed on the computer screen, whereas subjects had to generate the switch point in the WTR task.

Second, subjects took approximately 50%-80% longer to complete exchange rate decisions relative to WTR decisions.
The time per decision in the WTR task (M = 1823 msec, SD = 654 msec) was significantly shorter than the time per decision in the decimal exchange rate task (M = 2939 msec, SD = 1629 msec), t(111) = 7.48, p = \(10^{-10}\), r = .58, and in the fractional exchange task (M = 3309 msec, SD = 2345 msec), t(111) = 6.45, p = \(10^{-8}\), r = .52. We can exclude the first 10 decisions for each task type to remove any effects that may be due to subjects becoming familiar with the mechanics of the task. Here as well, the time per decision in the WTR task (M = 1376 msec, SD = 614 msec) was significantly shorter than the time per decision in the decimal exchange rate task (M = 2378 msec, SD = 1374 msec), t(111) = 6.33, p = \(10^{-8}\), r = .52, and in the fractional exchange task (M = 2652 msec, SD = 1990 msec), t(111) = 5.43, p = \(10^{-6}\), r = .46. These results suggest that the exchange rate tasks were more difficult for subjects.

Third, subjects experienced the WTR task as significantly easier than the other two tasks. The rated difficulty of the WTR task (M = 2.42, SD = 1.49) was less than the rated difficulty of the decimal exchange task (M = 3.56, SD = 1.62), t(100) = 5.45, p = \(10^{-6}\), r = .48, and the fractional exchange task (M = 4.05, SD = 1.84), t(100) = 6.84, p = \(10^{-9}\), r = .56.

Finally, I can examine whether the same manipulation causes the same effects on responses to both types of task. Specifically, I examine how increasing the size of the anchored values in the anchored sets affects the switch point computed for each set. Consider the anchored set depicted in Figure 1A. If the ratios of the amounts in each decision are held constant but the anchored value (i.e., the value for the other) is increased, the absolute amounts involved are increased. Although in principle one might expect magnitude effects (as are observed for other types of discounting; Green & Myerson, 2004), I specifically chose anchored values to try to minimize them.
Nonetheless, across the first four samples, initial analyses suggested that there was a small, but statistically reliable, effect of magnitude. The effect was such that the greater the anchored value within a set, the smaller was the WTR assigned to that set: when more money was at stake, subjects were less generous. On average, the maximum difference between anchored sets was approximately 0.1, only half of the distance between successive WTRs that could actually be assigned to an anchored set.

If decisions on the WTR task and the exchange rate tasks are being generated by the same process, then one can expect that the revealed exchange rate in each anchored set should decrease as the absolute value increases. (A subject’s revealed exchange rate is potentially different from the exchange rate assigned to that subject by the experimenter; see Methods.) This was not the case: As the absolute amount involved increased, subjects’ WTRs decreased, but their revealed exchange rates increased (see Figure 3). A focused contrast (Rosenthal et al., 2000) revealed that the slope of the WTR task data was significantly different from the slope of the exchange task data: The linear contrast for the WTR task was significantly different from the average linear contrast of the exchange rate tasks, t(111) = 3.56, p = \(10^{-3}\), r = .32. The linear contrast for the WTR task shows a significantly negative slope (-0.48), t(111) = -2.60, p = .011, r = .24; as the amount at stake increases, subjects become less generous. The average linear contrast of the exchange rate tasks shows a significantly positive slope (0.29), t(111) = 2.74, p = .007, r = .25; in these tasks, subjects appear to assume that the greater the number in the anchored position, the greater its relative value. It is not just that the slopes differ in magnitude; they differ in direction as well. This supports the hypothesis that separate processes are involved in the WTR and exchange rate tasks.
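For readers unfamiliar with focused contrasts, the general shape of the analysis can be sketched in Python. This is a generic within-subject linear contrast, not a reconstruction of the exact analysis reported here: the contrast weights (anchor sizes centered on their mean) are an assumption, the three subjects are made-up numbers, and the helper names are hypothetical. The conversion from t to r uses the standard formula r = sqrt(t² / (t² + df)), which appears to reproduce the r values reported above.

```python
import math
import statistics

ANCHORS = [19, 23, 37, 46, 68, 75]  # anchor sizes shown in Figure 3

# Assumed linear contrast weights: anchor sizes centered on their mean, so they sum to zero.
_mean_anchor = sum(ANCHORS) / len(ANCHORS)
WEIGHTS = [a - _mean_anchor for a in ANCHORS]


def linear_contrast(switch_points):
    """Contrast score L = sum(w_j * y_j) over one subject's six revealed switch points.
    Negative L means switch points fall as the anchor grows."""
    return sum(w * y for w, y in zip(WEIGHTS, switch_points))


def one_sample_t(scores):
    """t statistic for the mean contrast score against zero (df = n - 1)."""
    n = len(scores)
    return statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))


def r_from_t(t, df):
    """Effect size r computed from a t statistic and its degrees of freedom."""
    return math.sqrt(t * t / (t * t + df))


# Made-up WTR switch points for three hypothetical subjects (not data from the studies).
hypothetical_wtr = [
    [0.50, 0.50, 0.48, 0.47, 0.45, 0.44],
    [0.62, 0.60, 0.61, 0.58, 0.57, 0.55],
    [0.30, 0.31, 0.29, 0.28, 0.27, 0.27],
]
scores = [linear_contrast(s) for s in hypothetical_wtr]
print(scores, one_sample_t(scores))

print(round(r_from_t(3.56, 111), 2))  # 0.32, matching the r reported for t(111) = 3.56
```

Comparing two tasks in this framework amounts to computing each subject's contrast score in both tasks and applying the same one-sample test to the per-subject differences.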
These data can also be used to further support Result 2: welfare tradeoff ratios are computed by specialized machinery, not constructed deliberatively in response to the task. As shown above, subjects’ WTRs as revealed in a given anchored set subtly decreased as the absolute magnitude involved increased, even when the decisions from all the anchored sets were randomly mixed together. Such subtle responses are expected from a system designed to make adaptive, context-sensitive social allocations. (See Appendix 6 for an additional experiment bearing on this point.) This pattern would make sense if, for example, the mind were attempting to minimize risk or opportunity cost: there is more to lose if one forgoes $75 relative to forgoing $19 (cf. Stewart-Williams, 2007). This pattern of responses is very unlikely if subjects are constructing an arbitrary threshold online and attempting to make decisions consistent with it. Why not simply use the same threshold at all times? Indeed, varying the threshold as a function of the absolute amounts involved greatly increases how challenging the task is. If subjects’ minds were not using a stored magnitude embedded within a context-sensitive social allocation system (for instance, if conscious deliberation were driving our results), it is difficult to see how or why such subtle and patterned responses would emerge.

[Figure 3: line plot; y-axis: Revealed Switch Points (+/- SEM), 0.3 to 0.7; x-axis: Anchor Size (19, 23, 37, 46, 68, 75); series: Decimal Exchange Task, Fraction Exchange Task, WTR Task.]

Figure 3. Revealed exchange rates and WTRs as a function of the value of the anchor in the anchored sets. As the anchored values increase, the amount at stake increases.

To make full use of the data, the above results were computed using a within-subjects approach. However, one could wonder whether contrast effects, order effects, or practice effects may have affected the results.
To address this, I also analyzed the data using a between-subjects approach, using only the first task each subject completed. The pattern of the results was identical. For tests of differences in consistency, there were again no differences, all ps > .45. All other tests revealed significant effects, all ps < .006.

These results show that the underlying processes giving rise to WTR task decisions and exchange rate task decisions are not identical. Although subjects are ultimately just as consistent on all types of tasks, they nonetheless take at a minimum 50% longer to complete the exchange rate tasks, they experience the exchange rate tasks as more difficult, and the same manipulation affects responses in the two tasks in opposite ways. If subjects’ performance was due simply to general rationality or mathematical abilities, all the tasks should have been just as difficult to complete. Indeed, on this alternative account the WTR task might be even more difficult. For the exchange rate tasks, subjects are given an exchange rate to use and are not required to memorize it. For the WTR task, however, subjects must both generate and remember their WTR if they are to perform consistently. Although some aspects of rationality and mathematical abilities may be necessary components for making welfare tradeoffs, they are not sufficient to explain them. Instead, the results are consistent with the existence of a domain-specific social allocation decision system. For further evidence regarding the specialized nature of welfare tradeoff computation, see Appendix 7.

Correlations of the WTR Measure with Other Measures

If the WTR task is actually measuring the output of an internal variable designed to regulate welfare tradeoffs (designed to index valuation of another individual), then it should show positive correlations with other measures designed to measure sharing, helping, relationship quality, and the phenomenology of interpersonal closeness.
As seen in Table 3, the four rating scale measures generally had large and significant correlations with the switch point calculated from the WTR task. This table also displays the psychometric reliability (Cronbach’s α) of the WTR task (α was calculated for the six separate series of decisions subjects completed for a single target); these values are uniformly very high.

Table 3
Reliability of the WTR Measure and Its Correlations with Other Scales

| Sample and Target | Reliability (Cronbach’s α) | Social Value Orientation | Communal Strength | Relationship Quality | Inclusion of Other in Self |
|---|---|---|---|---|---|
| United States, Sample 1: WTR for Friend | .92 | .44*** | .34*** | .32*** | .11 |
| United States, Sample 1: WTR for Acquaintance | .95 | .40*** | .41*** | .40*** | .25*** |
| Argentina, Sample 2: WTR for Friend | .89 | .31*** | .20* | .27** | .10 |
| Argentina, Sample 2: WTR for Acquaintance | .93 | .35*** | .21* | .14 | .25** |
| United States, Sample 3 (Pay/Hypothetical): WTR for Friend | .97 | .45*** | .30*** | .17* | .05 |
| United States, Sample 3 (Pay/Hypothetical): WTR for Acquaintance | .98 | .51*** | .45*** | .44*** | .27*** |

Note. See methods for a description of the measures. The reliabilities for Sample 4 (which did not include the other measures) ranged from .96 to .99. The lack of a correlation between the Friend WTR and the Inclusion of Other in Self (IOS) is possibly due to there being little variance in the IOS measure; most people rated their relationship with their friend very high on this measure. ***p ≤ .001, **p < .01, *p < .05.

Summary of Chapter 2: The Existence of Welfare Tradeoff Ratios

The five studies of this chapter provide evidence consistent with the hypothesis that the mind calculates an internal variable for regulating welfare tradeoffs: a welfare tradeoff ratio. These studies showed seven primary results:

1. Subjects’ welfare tradeoff decisions were summarizable by a single number; that is, their decisions were made consistently.
2. This consistency was not an artificial feature of the task. Subjects behaved just as consistently regardless of whether decisions were made in an ordered or random series.
3. Subjects’ WTRs were not the product of cheap talk: The magnitude of their WTRs was essentially unchanged regardless of whether real money was at stake.
4. Welfare tradeoff ratios were person-specific: Subjects had higher WTRs for their friends, relative to acquaintances, and decisions for both friends and acquaintances could not be summarized by a single number.
5. Subjects’ separate welfare tradeoff ratios toward a friend and an acquaintance correlated with welfare tradeoffs between the friend and acquaintance.
6. Results from a mathematically identical task show that the WTR behaves as other psychological magnitudes, allowing consistent decisions to be made.
7. Nonetheless, the pattern of responses to the WTR task (the ease and speed with which subjects complete it) shows that welfare tradeoff computations are handled by a specialized system.

Although the mind may calculate welfare tradeoff ratios toward others, does it estimate WTRs stored in others’ minds? I address this question in Chapter 3.

Chapter 3: The Estimation of Welfare Tradeoff Ratios²

The difference between a full and an empty stomach can be a matter of life and death. Not surprisingly, research in animal psychology and behavioral ecology reveals exquisitely crafted psychological machinery for estimating variables necessary to support efficient foraging (Gallistel, 1990; Stephens, Brown, & Ydenberg, 2007). Humans are no exception: experimental and ethnographic evidence reveals that the human mind also contains foraging specializations (Kameda & Tamura, 2007; New, Krasnow, Truxaw, & Gaulin, 2007; Silverman & Eals, 1992; Winterhalder & Smith, 2000). But are there other variables that need to be estimated, aside from rates of return, especially for a social animal such as humans? In this chapter, I investigate whether humans also estimate the welfare tradeoff ratios contained in the minds of others.
To provide evidence that converges with Chapter 2, this chapter makes substantial methodological changes. First, I examine whether WTR machinery can be applied to any agent, not just individual humans. If so, then it should be applicable to coalitions as well. Part of the evolution of multi-person cooperation may have involved the ability to conceptualize cooperative groups as agents (Tooby, Cosmides, & Price, 2006). Therefore, to establish the generality of WTR psychology, subjects in the experiments below were (implicitly) asked to infer the WTRs that individual humans have toward a coalition of humans.

² The research presented in this chapter was conducted in collaboration with Theresa Robertson.

Second, instead of explicitly asking subjects what kinds of tradeoffs other people would make, I used an implicit task to determine whether subjects viewed individuals with different profiles of costs or benefits (and presumably different WTRs) as belonging to separate categories. Although WTRs are conceptualized as continuous magnitudes, a great deal of evidence suggests that humans can flexibly turn dimensional distinctions into categorical distinctions (Hampton, 2007; Jackendoff, 1983). Thus, although the measure is ultimately categorical (either a person is or is not in a given category), it can still be used to probe an underlying continuous dimension. Only after the categorization measure does the subject answer explicit questions designed to test whether the categories are related to welfare tradeoff content.

The Logic of Welfare Tradeoff Ratio Estimation

Does the mind estimate the welfare tradeoff ratios of others? If so, what inputs would a system for WTR estimation use? Consider how standard WTR computation proceeds: If I can potentially provide my group a benefit $B_{\text{group}}$ at personal cost $C_{\text{me}}$, I will only do so in actuality when $B_{\text{group}} \times \mathrm{WTR}_{\text{me,group}} > C_{\text{me}}$, or, rearranging, when $\mathrm{WTR}_{\text{me,group}} > C_{\text{me}} / B_{\text{group}}$.
That is, the group will receive the benefit when my welfare tradeoff ratio for the group (my valuation of the group) is greater than the cost-benefit ratio. All else equal, as $C_{\text{me}}$ increases, so should one’s (lower bound) estimate of my WTR. Individuals willing to sacrifice more personal welfare will be inferred to have a higher WTR toward the group. This is tested in Studies 6-8.

An examination of the equation relating WTRs to costs and benefits suggests an additional hypothesis: As $B_{\text{group}}$ increases, the entire fraction becomes smaller, implying that smaller values of $B_{\text{group}}$ should be linked with larger inferred (lower bound) estimates of WTRs. Indeed, in other work the size of the benefits does matter in inferences about WTRs (Sell, 2005). Instead of testing this aspect of welfare tradeoff theory, however, I would like to examine variations in benefits for a slightly different purpose, using a somewhat different manipulation than would be required to test the more obvious prediction in this context.

Specifically, I would like to further test against rational choice and payoff-based approaches for understanding social perception in social foraging. From these perspectives, information regarding benefits provided should be used to make selective partner choices. However, the costs a person pays to provide these benefits are immaterial. After all, if you are designed to maximize your returns, why should you care how much my contributions cost me? Even if costs did have some information value, variations in benefits should still have a larger impact. From welfare tradeoff theory, however, benefits and costs are only inferentially powerful when they are revealing about underlying regulatory variables. Thus, when variations in benefits delivered cannot reveal anything about a person’s WTR, then they should not be encoded. This is tested in Studies 9 & 10.
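The decision rule and the lower-bound inference described above can be illustrated with a small Python sketch. The function names and the numerical values are hypothetical and chosen only for illustration; nothing here is part of the studies' materials.

```python
def will_provide(benefit_to_group, cost_to_self, wtr):
    """Decision rule from the text: provide the benefit when B * WTR > C."""
    return benefit_to_group * wtr > cost_to_self


def wtr_lower_bound(benefit_to_group, cost_to_self):
    """If an actor was observed paying C to deliver B, an observer can infer
    that the actor's WTR toward the recipient exceeds C / B."""
    if benefit_to_group <= 0:
        raise ValueError("benefit must be positive")
    return cost_to_self / benefit_to_group


# Hypothetical values: the same benefit delivered at a small or a large cost.
B = 10.0
small_cost_bound = wtr_lower_bound(B, 2.0)  # observer infers WTR > 0.2
large_cost_bound = wtr_lower_bound(B, 8.0)  # observer infers WTR > 0.8
```

Holding the benefit fixed, the larger cost yields the higher lower bound, which is the pattern tested in Studies 6-8. Holding the cost fixed and increasing the benefit would lower the bound.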
(Of course, it is likely that the size of the benefits will have some cue value, even if it is not as effective as variations in cost. Although I attempt to remove any explicit information about efficiency or ability in this chapter’s experiments, the mind may nonetheless assume that individuals who provide more benefits are somewhat more able and therefore more valuable.)

To see whether the mind distinguishes between individuals who pay small or large costs, or between individuals who provide large or small benefits, I use an implicit categorization task (Taylor, Fiske, Etcoff, & Ruderman, 1978). This task assumes that members of the same category are more likely to be confused with each other than with members of different categories. For example, a person paying a small cost should be confused with others who pay small costs, but not with others who pay large costs. Although WTRs are conceptualized as continuous magnitudes, a great deal of evidence suggests that humans can flexibly turn dimensional distinctions into categorical distinctions (Hampton, 2007; Jackendoff, 1983). Thus, a categorical measure can still be used to probe an underlying continuous dimension.

Although the categorization measure tells whether the mind makes certain distinctions, it does not reveal the content of the categories. Therefore, after the categorization task, subjects complete a number of rating scales to determine whether the categories have the social consequences predicted by welfare tradeoff theory.

Study 6: Variations in Cost Incurred

Methods

Subjects. Fifty-eight students (33 female) at the University of California, Santa Barbara (UCSB) participated in exchange for partial course credit.

Procedure and dependent variables. All materials and dependent variables for this study and the remaining studies in Chapter 3 are presented in Appendix 8.
Subjects were asked to form impressions of eight fictitious individuals (each represented by a facial photograph of a white man) whose plane had crashed on a deserted island. The target individuals’ survival required that they all work together and forage for food. For each of five “days” on the island, subjects read a sentence describing each target’s foraging experience (40 total sentences). On three days, each target found and shared a resource by paying a “default” cost (i.e., the time and energy necessary to walk and look for food); because these sentences do not discriminate between targets, I call them non-diagnostic sentences. For example, “With some passion fruit in hand, he walked over a few fallen logs and went back to camp.” On two additional days, half of the targets chose to pay an exceptionally large cost to forage and the other half paid a small, accidental cost; because these do discriminate, I call them diagnostic sentences. For example, “In order to catch some crabs, he waded into the shark-infested shallows off the bay” versus “While picking strawberries, he cracked and broke his watch against a rock.” The order of sentences and the pairings of sentences to targets were randomly determined for each subject with the constraints that all targets were paired with a non-diagnostic sentence on the first day and no target was paired with a diagnostic sentence two days in a row. After viewing the five foraging days, subjects completed a surprise memory test requiring them to match each event to the target who originally performed it.

Categorization is assessed using the memory confusion paradigm of Taylor and colleagues (1978). In this paradigm, the pattern of errors made by subjects in the surprise memory test is used to infer social categorization. (Correct responses are not analyzed because it is impossible to know if they are due to accurate memory, a memory confusion, or random responding.)
If subjects make more within- than between-category confusions, this suggests that the hypothesized categories are psychologically real to them. Within-category confusions occur when a subject misattributes an action by (e.g.) an individual who paid a large cost to another individual who paid a large cost. Between-category confusions occur when a subject misattributes an action by (e.g.) an individual who paid a large cost to an individual who paid a small cost. I compute a categorization score by subtracting between-category confusions from within-category confusions (after correcting for differing base rates by multiplying between-category confusions by ¾; without such a correction, random responding would appear as systematic misattribution to the opposite category). If categorization is occurring, categorization scores should be greater than zero.

Based on previous work (Delton, Cosmides, Guemo, Tooby, & Robertson, 2010), I expect categorization scores for the diagnostic sentences to show the strongest, most consistent evidence of categorization, with comparatively weaker effects for the non-diagnostic sentences. Thus, although I report results for both types of sentences, my interpretations are based only on results for the diagnostic sentences.

After completing the categorization measures, subjects rated the targets on a number of dimensions using 7-point scales with appropriate anchors at “1” and “7.” Brief descriptions are displayed in Tables 4 & 5. At no point during the memory test or the impression ratings did I provide subjects with information about targets’ behavior; only photographs were available.

Results

I examined every categorization and impression variable across all five studies in this chapter for sex differences with a two-tailed α of .001 (slightly less conservative than a Bonferroni correction for a total α of .05). No differences passed this threshold. Sex differences do not appear to qualify my results. All p-values are two-tailed.
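The categorization score described in the procedure can be sketched in Python as follows. This is a minimal illustration, assuming eight targets split evenly into two categories, so that each error has three possible within-category and four possible between-category wrong answers (hence the ¾ correction). The function name, data layout, and example errors are hypothetical.

```python
def categorization_score(errors, category_of, n_per_category=4):
    """Within-category confusions minus base-rate-corrected between-category
    confusions.

    errors: list of (true_target, chosen_target) pairs from the memory test.
    category_of: dict mapping each target to its category label.
    """
    within = 0
    between = 0
    for true_target, chosen_target in errors:
        if true_target == chosen_target:
            continue  # correct responses are not analyzed
        if category_of[true_target] == category_of[chosen_target]:
            within += 1
        else:
            between += 1
    # With 4 targets per category there are 3 within-category and 4
    # between-category wrong answers available, so between-category
    # confusions are scaled by 3/4.
    correction = (n_per_category - 1) / n_per_category
    return within - correction * between


# Hypothetical example: targets 0-3 paid large costs, targets 4-7 small costs.
category_of = {t: ("large" if t < 4 else "small") for t in range(8)}
random_like_errors = [(0, 1), (0, 2), (0, 3), (0, 4), (0, 5), (0, 6), (0, 7)]
score_random = categorization_score(random_like_errors, category_of)
```

In this example the errors are spread evenly over all seven wrong answers, as they would be on average under random responding, and the corrected score is zero. A subject who confuses targets mostly within categories obtains a positive score.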
Does categorization occur as a function of costs incurred? Yes, subjects categorized targets as a function of costs incurred: Categorization scores for the diagnostic sentences were reliably greater than zero, M = 2.73, SD = 3.50, t(57) = 5.94, $p = 10^{-6}$, r = .62. There was also a tendency for categorization scores for the non-diagnostic sentences to be greater than zero, M = 0.78, SD = 3.85, t(57) = 1.54, p = .128, r = .20.

Do impressions differ as a function of costs incurred? Yes, subjects viewed targets who incurred larger costs more positively on all items, all ps < .07 and all rs ≥ .24 (Tables 4 & 5).

Table 4
Effect sizes (r) of mean differences on the impression items

Studies 6-8: Benefiting group at large cost always viewed more positively. Studies 9 & 10: Providing large benefits always viewed more positively.

| Item | Study 6 | Study 7 | Study 8 | Study 9 | Study 10 |
|---|---|---|---|---|---|
| Feels altruistic toward group | .36** | .40** | .42*** | .22 | .08 |
| Cares about group | .58*** | .14 | .50*** | .15 | .18 |
| Desirable as a group member | .60*** | .41** | .49*** | .03 | .25† |
| Desirable for 1:1 cooperation | – | .45*** | .50*** | .30† | .28* |
| Willing to Contribute | – | .46*** | .48*** | .10 | .28* |
| Trustworthy | .45*** | .32* | .54*** | .09 | .26* |
| Deserves respect | .48*** | .43*** | .49*** | .19 | .28* |
| Likeable | .49*** | .18 | .45*** | .32* | .14 |
| Put in effort | .59*** | .54*** | .52*** | .07 | .24† |
| Competent | .61*** | .46*** | .49*** | .21 | .19 |
| Is not selfish | .24† | – | – | – | – |
| Intended to pay large cost | .48*** | – | – | – | – |
| Worthy of being leader | .57*** | – | – | – | – |
| Does not deserve punishment | .29* | – | – | – | – |
| Deserves reward | .50*** | – | – | – | – |

Note. Each effect size, r, represents the comparison of two means: One mean from targets who pay a large cost and one from targets who pay a small cost (Studies 6-8) or one mean from targets who provide a large benefit and one from targets who provide a small benefit (Studies 9 & 10). Comparisons were made using repeated measures t-tests. Interested readers can convert using $r = \sqrt{t^2 / (t^2 + df)}$; $t = \sqrt{(r^2 \times df) / (1 - r^2)}$; df = sample size minus 1.
“–” indicates that data on this item were not collected in a particular study. †p < .10, *p < .05, **p < .01, ***p < .001.

Table 5
Means (Standard Deviations) of Impression Items

| Item | Study 6: High WTR | Study 6: Low WTR | Study 7: High WTR | Study 7: Low WTR | Study 8: High WTR | Study 8: Low WTR | Study 9: Large Benefits | Study 9: Small Benefits | Study 10: Large Benefits | Study 10: Small Benefits |
|---|---|---|---|---|---|---|---|---|---|---|
| Feels altruistic toward group | 4.78 (0.94) | 4.48 (1.03) | 4.92 (0.86) | 4.60 (0.90) | 4.70 (1.22) | 4.13 (1.16) | 5.02 (1.27) | 4.84 (1.29) | 4.56 (1.17) | 4.46 (0.98) |
| Cares about group | 5.29 (0.84) | 4.75 (1.00) | 5.13 (0.96) | 5.01 (0.93) | 5.23 (0.92) | 4.51 (1.09) | 5.41 (0.92) | 5.29 (0.99) | 5.17 (1.17) | 4.96 (1.15) |
| Desirable as a group member | 5.38 (0.93) | 4.63 (1.20) | 5.14 (0.94) | 4.77 (0.94) | 4.99 (1.05) | 4.36 (0.94) | 5.07 (1.12) | 5.03 (0.80) | 4.75 (1.17) | 4.49 (1.04) |
| Desirable for 1:1 cooperation | – | – | 4.94 (1.02) | 4.49 (0.98) | 4.60 (1.09) | 3.89 (1.05) | 4.98 (1.11) | 4.66 (1.03) | 4.32 (1.16) | 4.05 (1.08) |
| Willing to Contribute | – | – | 5.57 (0.88) | 5.14 (0.93) | 5.24 (0.84) | 4.63 (1.05) | 5.51 (0.95) | 5.41 (0.89) | 5.07 (1.03) | 4.81 (1.08) |
| Trustworthy | 5.19 (0.86) | 4.73 (1.08) | 5.12 (0.94) | 4.86 (0.98) | 4.87 (0.91) | 4.23 (0.95) | 5.01 (0.96) | 4.91 (0.80) | 4.73 (1.13) | 4.46 (1.07) |
| Deserves respect | 5.09 (0.91) | 4.56 (1.05) | 5.23 (0.94) | 4.78 (0.95) | 4.93 (0.78) | 4.33 (0.84) | 5.16 (0.94) | 4.99 (1.07) | 4.67 (0.92) | 4.39 (0.95) |
| Likeable | 5.04 (0.82) | 4.56 (1.07) | 4.92 (0.95) | 4.76 (0.98) | 4.81 (0.81) | 4.24 (0.89) | 5.03 (0.96) | 4.70 (0.86) | 4.43 (0.96) | 4.31 (0.92) |
| Put in effort | 5.45 (0.85) | 4.73 (1.03) | 5.54 (0.85) | 4.99 (0.86) | 5.24 (0.89) | 4.54 (0.95) | 5.40 (1.03) | 5.33 (0.98) | 5.04 (1.02) | 4.80 (1.02) |
| Competent | 5.41 (0.82) | 4.78 (1.06) | 5.26 (0.97) | 4.82 (0.88) | 5.15 (0.99) | 4.63 (1.02) | 5.29 (0.97) | 5.12 (0.90) | 4.77 (1.07) | 4.58 (1.06) |
| Selfish | 2.72 (1.13) | 2.95 (1.06) | – | – | – | – | – | – | – | – |
| Intended to pay large cost | 4.39 (1.18) | 3.78 (1.20) | – | – | – | – | – | – | – | – |
| Worthy of being leader | 4.75 (1.07) | 3.91 (1.35) | – | – | – | – | – | – | – | – |
| Deserves punishment | 2.16 (1.07) | 2.43 (1.20) | – | – | – | – | – | – | – | – |
| Deserves reward | 5.36 (0.99) | 4.77 (1.27) | – | – | – | – | – | – | – | – |

Note.
“–” indicates that data on this item were not collected in a particular study. The median repeated measures correlation was .57 (first quartile = .47; third quartile = .61).

Study 7: Varying Costs with Constant Intentions

Study 6 is consistent with the hypothesis that larger incurred costs lead to greater estimated welfare tradeoff ratios. However, there is a potential confound in the stimuli: All targets necessarily paid the default costs of foraging, but only those who paid a large cost paid any additional cost intentionally; those who incurred a small, incidental cost did so accidentally. Could the effects in Study 6 be driven by whether an additional cost was paid intentionally, instead of the size of the cost? To rule this alternative explanation out, Study 7 replicated the first study but removed sentences involving incidental costs. Thus half the targets paid an exceptional cost on some occasions on top of the default costs of foraging whereas the other half only paid the default costs. In all cases, all costs were paid intentionally.

Method

Subjects. Fifty-nine students (31 female) at UCSB participated in exchange for partial course credit.

Procedure and dependent variables. The procedure was identical to Study 6 except that all sentences with small incidental costs were removed. This required having only four days of foraging such that there was no initial day during which only non-diagnostic sentences were shown. Note that only targets who paid large costs were paired with diagnostic sentences. I added two new questions to more directly assess the value of targets as cooperation partners. I also reduced the total length of the rating portion by removing a number of peripheral questions.

Results

Does categorization occur as a function of costs incurred?
Yes, subjects categorized targets as a function of costs incurred: Categorization scores for the diagnostic sentences (which in this study only existed for people paying large costs) were reliably greater than zero, M = 1.38, SD = 2.82, t(58) = 3.75, p < .0004, r = .44. There was no tendency for categorization scores for the non-diagnostic sentences to be greater than zero, M = -0.39, SD = 3.65, t(58) = -0.81, p = .210, r = .11.

Do impressions differ as a function of costs incurred? Yes, subjects viewed targets who incurred larger costs more positively on most items, most ps < .05 and rs ≥ .30 (Tables 4 & 5). Although in the predicted direction, the effects for likeability and caring about the group were somewhat small.

Study 8: Manipulating the Target of Costs Incurred

In Studies 6 & 7, subjects consistently perceived targets paying a larger cost as more competent. Is a perceived difference in ability to contribute, rather than a perceived difference in willingness, driving our results? To test against this possibility, in Study 8 half the targets incurred an exceptional cost to benefit the group and the other half benefited the group at the “default” cost and then incurred an exceptional cost to benefit themselves (thus equating ability to incur costs). Indeed, the latter targets actually found more food and so in an objective sense are more able.

The hypothesis that greater costs incurred lead to higher inferred WTRs assumes that the mind is attempting to estimate group-specific welfare tradeoff ratios. It thus predicts that the targets will be categorized as a function of who receives the food (the group vs. the target himself) and that targets who provision the group will be viewed more positively.

Method

Subjects. Seventy-six students (36 female) at UCSB participated in exchange for partial course credit.

Procedure and dependent variables.
The procedure was identical to Study 6 except that half the targets were paired with diagnostic sentences depicting them as paying a large cost to provision the group, whereas the other targets were paired with diagnostic sentences depicting them as paying default costs to provision the group and then paying a large cost to provision themselves. For example, “He exposed himself to the hazardous waves on the rocks of the bay so he could catch sea bass to bring to the group” versus “After providing some food for the group, he waded through a river full of deadly piranhas to hunt duck for himself.” The rating items were identical to Study 7. Arranging the stimuli in this manner works against the hypothesis that individuals incurring a large cost on behalf of the group will be viewed more positively: These individuals actually produce less than individuals provisioning both themselves and the group.

Results

Does categorization occur as a function of who received a benefit? Yes, subjects categorized as a function of whether large costs were incurred on behalf of the group or on behalf of the target himself: Categorization scores for the diagnostic sentences were reliably greater than zero, M = 2.25, SD = 3.90, t(75) = 5.04, $p = 10^{-5}$, r = .50. Categorization scores for the non-diagnostic sentences were not appreciably greater than zero, M = 0.40, SD = 3.65, t(75) = 0.96, p = .171, r = .11.

Do impressions differ as a function of who received a benefit? Yes, relative to targets benefiting themselves at a large cost, subjects viewed targets who provisioned the group at a large cost more positively on all items, all ps < .001 and all rs ≥ .42. As in the earlier studies, targets who incurred large costs on behalf of the group were viewed as more competent. This occurred despite the other targets actually finding more food, suggesting that social factors can influence perceptions of competence.
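The effect sizes reported with the categorization results of Studies 6-8 can be checked against their t statistics using the conversion given in the note to Table 4. The Python sketch below is only an illustrative check; the function names are hypothetical, and the inverse conversion is obtained by solving the same relation for t, which yields a square root.

```python
import math


def r_from_t(t, df):
    """Effect size r from a t statistic: r = sqrt(t^2 / (t^2 + df))."""
    return math.sqrt(t ** 2 / (t ** 2 + df))


def t_from_r(r, df):
    """Inverse conversion: t = sqrt(r^2 * df / (1 - r^2))."""
    return math.sqrt(r ** 2 * df / (1 - r ** 2))


# (t, df) for the diagnostic-sentence categorization scores, as reported.
reported = {
    "Study 6": (5.94, 57),
    "Study 7": (3.75, 58),
    "Study 8": (5.04, 75),
}
effect_sizes = {study: round(r_from_t(t, df), 2) for study, (t, df) in reported.items()}
```

Both functions return magnitudes only; the sign of an effect has to be carried over from the direction of the mean difference.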
Discussion

Studies 6-8 reveal that targets are categorized by the size of the costs they incur. Nonetheless, I note a potential caveat in interpreting these data. In these studies targets elicited significantly different ratings of competence as a function of the costs they incurred (a measure of efficiency or ability). Although it was not the goal of this chapter to investigate the effects of ability on person perception, it is possible that the stimuli inadvertently included subtle ability information. Although I tried to correct for this experimentally in Study 8 by having the targets who placed low value on the group be objectively more able, this study still showed differences in perceived competence such that targets who placed greater value on the group were seen as having greater competence. One interpretation is that this is due to a “halo” effect, stemming from the more direct inference regarding care and altruism. Another possibility, however, is that I was unable to remove inadvertent cues to ability.

Studies 9 & 10: Varying Benefits Provided

Studies 6-8 provided consistent evidence that targets willing to incur greater costs on behalf of the group were categorized separately from those who weren’t; moreover, they were viewed more positively, including on WTR-relevant dimensions (e.g. on items of care and altruistic feelings toward the group). That costs are encoded at all is not strongly consistent with rational choice or payoff-based approaches. (Indeed, these approaches might predict that people who pay more to deliver the same benefits, and thus have lower efficiency, might be viewed less positively.) What role do variations in benefits have? According to welfare tradeoff theory, variations in benefit delivery should not be encoded when they cannot reveal underlying differences in WTRs.
To test this, new diagnostic sentences were created: Half the targets in Studies 9 & 10 were twice depicted as paying default costs to provide a very small benefit (e.g. a small handful of nuts); the remainder were twice depicted as paying default costs to provide a very large benefit (e.g. a very large amount of fruit). These differences are not revealing of underlying WTRs because it is random with respect to effort whether a person will encounter a large or a small resource. All targets paid the default costs of searching the island for food; only chance determined what resources they would find.

Studies 9 & 10 used different sets of non-diagnostic sentences: In Study 9, on three further occasions all targets provided a medium sized benefit at default costs. In this study the environment is depicted as relatively resource rich: all targets always find at least something. If positive sentiment is preferentially directed at targets finding large benefits, it could be that subjects perceive targets finding small benefits as making poor decisions. Why settle for a handful of nuts when you can find a basket of fruit? To test against this, in Study 10, on the three further occasions, all the targets failed to find any food. Thus, resource acquisition was not certain.

Note that a manipulation check at the end of Study 9 showed that subjects perceived the large benefits as much more beneficial than the small benefits (r = .83, p < .001). Although subjects certainly noticed the size of the benefits, will this difference affect perceptions of the targets?

Methods

Subjects. Forty-three students (24 female; Study 9) and 57 students (29 female; Study 10) at UCSB participated in exchange for partial course credit.

Procedure and dependent variables. The procedure was identical to Study 6 with the following exceptions.
In Studies 9 & 10, half the diagnostic sentences depicted the targets finding a small benefit at a default cost; the remainder depicted the targets finding a large benefit at a default cost. For example, “He got food for the group by climbing one of the highest trees on the island to collect the few cashews he saw at the top” versus “He noticed a large patch of peaches in the grove at the top of a perilous cliff and climbed up to gather loads to bring back for the group.” In Study 9, the non-diagnostic sentences depicted targets as finding a medium benefit at a default cost as in previous experiments. In Study 10, the non-diagnostic sentences depicted targets as failing to find a benefit at a default cost. For example, “He searched all over the island but found no food to bring back.” The rating items were identical to Study 7.

Results & Discussion

Does categorization occur as a function of the size of the benefit? No, categorization scores for diagnostic sentences were not reliably different from zero (and were in the wrong direction) in Study 9 and Study 10, respectively: M = -0.15, SD = 2.44, t(42) = -0.41, p = .34, r = -.06; M = -0.29, SD = 2.25, t(56) = -0.96, p = .17, r = -.13. This was also true for non-diagnostic sentences, Study 9 and Study 10, respectively: M = 0.48, SD = 2.94, t(42) = 1.06, p = .15, r = .16; M = -0.07, SD = 2.77, t(56) = -0.18, p = .43, r = .02.

Do impressions differ as a function of the size of the benefit? There was some tendency for targets who provided larger benefits to be viewed more positively, although the size of the effects was relatively small and the effects were sometimes only marginally significant (Tables 4 & 5): Study 9, rs ranged from .03 to .32 and ps from .03 to .82; Study 10, rs ranged from .08 to .28 and ps from .03 to .53. Although all impression measures favored individuals providing large benefits, the effect sizes in Studies 9 & 10 were smaller than in Studies 6-8.
This is opposite the relative effect sizes that would be predicted by rational choice or payoff-based accounts.

Summary of Chapter 3: The Estimation of Welfare Tradeoff Ratios

Consistent with predictions, subjects categorized targets by the size of costs incurred and did not categorize targets based on unrevealing variations in benefits delivered. This is unpredicted by rational choice and payoff-based approaches to choosing cooperation partners. Under these perspectives, individuals are valuable as cooperation partners when they provide large benefits; the costs others pay are much less relevant. Altogether, the results of this chapter are consistent with the prediction from welfare tradeoff theory that the mind can estimate the welfare tradeoff ratios of others.

Chapter 4: Discussion and Conclusions

This research has explored the nature of a summary variable, the welfare tradeoff ratio, and shown (a) that the mind computes this variable and (b) that the mind can estimate this variable in the minds of others. The discovery of this neurocomputational element helps to explain how a physical device such as the human brain can engage in behavior as complex as cooperation, aggression, and altruism. The characterization of human social decision-making in precise, information processing terms allows researchers to build cumulative (and falsifiable) theories of human social behavior. By adopting the common language of information processing, researchers from the many disciplines interested in human behavior (anthropology, economics, neuroscience, political science, and psychology, among others) can begin to pool their knowledge and increase the rate at which human nature is mapped.

Extensions and Future Directions

Ongoing Work on Welfare Tradeoff Theory

Chapter 3 provided preliminary evidence that the mind can estimate welfare tradeoff ratios. In other work, Sznycer and colleagues (2010) have gone further.
In this experiment, pairs of friends were brought into the lab. Both members of the pair filled out the WTR decisions used in Chapter 2 with respect to their friend who came with them to the lab. Subjects also completed the scale as they believed their friend would toward them (i.e., toward the subject). Results showed that the expected WTR from the friend accurately tracked the friend’s actual WTR. Moreover, this accuracy was person-specific: Each subject also completed the task with respect to a third-party and completed the task as they believed the third-party would toward them. Controlling for either of these variables (which serve as measures of generalized generosity and generalized expectations of generosity) did not account for subjects’ accuracy regarding their friend.

Related to this, Lim and colleagues (2010) have shown that the mind can distinguish between multiple levels of WTRs expressed toward the self. In this experiment, subjects were paired with a (sham) partner. Through a computer, the subject observed this partner make a number of welfare tradeoff decisions that affected the subject and the partner, with the decisions all being consistent with a particular WTR toward the subject. Between subjects, this WTR was varied from zero to .9, with four intermediate values as well. Importantly, Lim et al. manipulated the numbers at stake such that regardless of the partner’s welfare tradeoff ratio toward the subject, the objective amount the subject received was in all cases identical. Consistent with welfare tradeoff theory, subjects’ WTRs toward the partner increased monotonically with the partner’s WTR toward the subject. Thus, subjects (a) extract relatively precise WTRs from observing the patterns of decisions of others and (b) sensitively regulate their behavior in response to differences in inferred WTR.

Although barely addressed in the present work, Lim et al. and Sznycer et al.
also explore the intimate links between welfare tradeoff ratios and emotional systems. For instance, Lim et al. show that decisions by the partner that are especially revealing of a high WTR toward the subject elicit gratitude; this is not true of decisions that simply involve large benefits accruing to the subject. In a similar fashion, decisions revealing an especially low WTR toward the subject elicit anger and annoyance; this is not true of decisions that simply involve small benefits accruing to the subject. Future work can benefit by further integrating welfare tradeoff ratio methodology and emotions.

Studying Welfare Tradeoffs Without Numbers

One limitation of the studies in Chapter 2 is that they involved explicitly presented numerals. Presumably, welfare tradeoff allocation systems evolved prior to the cultural invention of numerals and should thus be able to operate without them. (Although Chapter 3 did not use explicit numerals, it did not examine WTRs at a very high level of resolution. These studies were only able to discriminate “large” from “small” estimated WTRs.) It would provide strong converging evidence to show that WTRs affect decision-making even without using explicit numbers.

One way to do this would take advantage of the matching law (Herrnstein, Rachlin, & Laibson, 1997). When presented with two places to forage in, animals usually divide their time in proportion to the relative rates of benefit delivery at the two locations; this behavioral regularity is called the matching law. For example, if equal sized pellets of food are available at both locations, but are delivered to patch 1 at twice the rate as patch 2, then the animal will spend twice as much time at patch 1. (There are technical qualifications in exactly how the schedule of benefits must be designed to ensure matching (Herrnstein, et al., 1997); I do not go into these here.)

This regularity can be used to investigate welfare tradeoff psychology in the following way.
First, consider that in normal matching experiments, the benefits of choosing either foraging patch accrue to the chooser. Thus, if I had to choose between patch 1 and 2, I would choose patch 1 at twice the rate. Starting from this baseline, an experiment could manipulate whom the benefits of a foraging patch accrue to. Foraging patch 1 still accrues to me, but when I select patch 2, any benefits I find could accrue to my friend. Now, instead of dividing my time in a 2-to-1 ratio, I should divide my time in a 2-to-(1 × WTR) ratio. No explicit numerals are necessary. Instead, “pellets” of identical value appear at two different patches and the beneficiary of at least one patch is manipulated. Subjects’ behavior under these conditions could reveal exactly how precise the internal magnitudes used in welfare tradeoff calculations are.

Broadening the Breadth of Decisions

Chapter 2 mostly considered a relatively simple decision: Whether to allocate one benefit to oneself or a different sized benefit to another. According to welfare tradeoff theory, these decisions require computing whether $c_{\text{self}} < \mathrm{WTR} \times b_{\text{other}}$. Yet many decisions do not fall into this pattern. As a more general description of welfare allocation problems, consider the following framework. (This framework is presented as an “in principle” mathematical formalism; in practice processing constraints or other considerations may limit whether the mind could or would instantiate what is described here.)

First, assume there is a set S of mutually exclusive states of the world that the decision-maker could bring about, $s_1$ through $s_n$. (Although states are mutually exclusive with one another, they may contain shared sub-states.) Associated with each state is a welfare allocation from the set A, $a_1$ through $a_n$, such that the realization of (e.g.) $s_1$ causes $a_1$ to obtain. Each state and welfare allocation impacts a set of agents P, $p_1$ through $p_n$.
When state s_i is realized, leading to welfare allocation a_i, each agent p_1 through p_n has their welfare affected by an amount v_{i,1} through v_{i,n}, with the second subscript indexing the agent that the welfare change applies to. (Each a is a set of welfare changes, making A a set of sets. The welfare changes, v, can be any cognizable magnitude able to enter into these calculations, including presumably zero.) Finally, the decision-maker has a set of welfare tradeoff ratios, W, toward each agent, w_1 through w_n. One of the ps, p_self, represents the decision-maker; w_self should in theory equal 1. The total welfare gained by the decision-maker for a given s_i and a_i is the sum of all v_{i,k} * w_k, as k ranges from 1 to n. If the decision-maker is unilaterally able to determine which state is realized, this becomes a maximization problem: realize the state s_i that leads to the highest welfare gain for the decision-maker.

Each component of this mathematical formalism requires extensive processing. For instance, determining the set of welfare changes v is a substantial problem. Moreover, as the number of possible allocations and persons involved increases, a computational explosion results, making computations involving large numbers of allocations and persons impossible. Nonetheless, as illustrated by Study 4 in Chapter 2, the mind can successfully perform welfare tradeoff tasks involving two other people (aside from the self) and a quick reflection on everyday life suggests that people can perform welfare allocations involving small groups of people. Although an unbounded version of this extended formalism is surely not computationally feasible, the breadth of the mind’s ability to perform welfare allocations suggests that the machinery must allow more complex computations than those involving the self and one other and only two possible allocations.
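The maximization problem described in this section can be illustrated with a minimal sketch. It is only an “in principle” illustration of the formalism, not a claim about how the mind implements it. The function names, the agent labels, the state labels, and every WTR and payoff value below are hypothetical and chosen purely for the example; only the rule itself (sum each welfare change weighted by the WTR toward the affected agent, then pick the state with the largest sum) comes from the text.

```python
from typing import Mapping


def welfare_gain(allocation: Mapping[str, float], wtrs: Mapping[str, float]) -> float:
    """Sum of v_{i,k} * w_k over every agent k affected by one allocation a_i."""
    return sum(change * wtrs[agent] for agent, change in allocation.items())


def choose_state(states: Mapping[str, Mapping[str, float]],
                 wtrs: Mapping[str, float]) -> str:
    """Label of the state s_i whose allocation yields the highest welfare gain."""
    return max(states, key=lambda label: welfare_gain(states[label], wtrs))


# Hypothetical WTRs; w_self is fixed at 1, as the formalism assumes.
wtrs = {"self": 1.0, "friend": 0.5, "acquaintance": 0.25}

# Two-state case: this reduces to checking whether c_self < WTR * b_other.
two_option = {
    "keep": {"self": 9.0, "friend": 0.0},
    "give": {"self": 0.0, "friend": 20.0},
}
choice_two = choose_state(two_option, wtrs)  # 9 < 0.5 * 20, so "give"

# A richer case with two other agents and three mutually exclusive states.
three_party = {
    "keep": {"self": 12.0, "friend": 0.0, "acquaintance": 0.0},
    "give_friend": {"self": 0.0, "friend": 20.0, "acquaintance": 0.0},
    "split": {"self": 0.0, "friend": 15.0, "acquaintance": 30.0},
}
choice_three = choose_state(three_party, wtrs)  # 7.5 + 7.5 = 15 beats 12 and 10

print(choice_two, choice_three)
```

Because every state is scored exhaustively, the work grows with the number of states times the number of agents, which is one simple way to see why an unbounded version of the formalism would not be feasible.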
Comparisons to Other Research Programs

The previous chapters have developed reasons to believe that the mind estimates a number of indices about others in its social world and integrates these estimates into a summary representation, a person-specific welfare tradeoff ratio, to determine how to allocate welfare between different parties. What are some existing theoretical viewpoints that relate to, or perhaps contradict, this? I compare welfare tradeoff theory to several below. In most cases, WTR theory is not at odds with existing theory, but complements it by adding specificity to important aspects of welfare allocation.

Affective Heuristic Approaches

A common view among many branches of cognitive and social psychology is that judgment and decision making, when accomplished through means other than conscious manipulation of magnitudes, is accomplished by inexact, often affective means (Bechara, Damasio, & Damasio, 2000; Haidt, 2001). Consider how this view would interpret choices people make between gambles (e.g., would you rather have $50 for sure, a 50% chance of earning $120, or a 10% chance of earning $2000?). The affective heuristic approach would predict that the mind generates a rough, affective response to each option. Whichever option has the most positive affect (or least negative affect) would be chosen. According to this view, there is no need for precision computation; inexact, heuristic estimation satisfices. (Some proponents of this view also argue that there is a “rational” system at work as well, but that this does not often come into operation.)

The results of the present studies do not lend much support to this view. Although subjects in my studies do not consciously manipulate magnitudes when completing the welfare tradeoff task, their data nonetheless reveal extremely precise patterns of behavior.
Subjects’ informal descriptions of their decision process often reduce to “I gave to me when it felt appropriate, and the other when that felt appropriate.” Despite this vagueness in the phenomenology of their decision process, their data can neatly be summarized by a single number, a welfare tradeoff ratio, across a large number of randomly mixed decisions. This is not due, moreover, to subjects generally anchoring on switch points that would require little computation, such as zero or one (leading one to always allocate to the self (except when the amount is negative) or to always allocate the larger amount, respectively). An examination of Figure 2 shows that the majority of subjects use switch points other than these.

Of course, the present data do not directly bear on the type of data usually accounted for by affective heuristic approaches: choices over gambles. However, data directly investigating these domains often reveal surprisingly precise choice behavior of subjects, in ways that seem to require precise complex calculations (Birnbaum, 2008b; Rode, Cosmides, Hell, & Tooby, 1999). Preuschoff and colleagues (2006) conducted a neuroimaging study of gambling decisions. Instead of finding that the brain encodes rough, approximate magnitudes, they predicted and found that the brain encoded in a precise fashion the expected value and the variance of gambles. Moreover, these activations were found in brain areas typically thought to be involved in affective heuristic processing.

The Elementary Forms of Human Interaction Approach

In a wide-ranging research program, Fiske (1992) has amassed a great deal of evidence that, he argues, shows that human interaction is structured by four elementary types of prosocial relationships. One of the major components of these elementary relationships is that they determine how resources are to be shared. These elementary types of interaction and their associated sharing rules are:

1. Communal Sharing: For a specified set of people, for a specified purpose and resource, resources are pooled: Individuals contribute what they can and take what they need: “from each according to his abilities, to each according to his needs.”
2. Authority Ranking: Individuals are ranked, with higher ranked people being entitled to more and better resources. (On this view, however, higher ranked people are also expected to be beneficent toward their subordinates.)
3. Equality Matching: Everyone receives identical shares and contributes equally.
4. Market Pricing: Exchanges and allocations of resources are accomplished by implicit or explicit contracts. (See the section on social contract theory below for relevant comments on this sharing rule.)

Given the wide range of cultures and situations in which Fiske finds these four types of relationships, they appear to capture something real about human psychology. What is the relationship between these modes of interaction and welfare tradeoff theory? Although some relationships, such as nuclear families, may be structured by communal sharing, there is no specification of when a person’s “needs” become more than the rest of the set is willing to endure. Would members of a communal sharing set be willing to let an individual whose self-reported needs are essentially infinite take the vast majority of the resources created by the set? Welfare tradeoff theory can help explain how limits to communal sharing are instantiated: Although members of a communal sharing pool may all set high WTRs toward other members, their WTRs are not infinitely high; eventually, taking according to one’s need may come to be seen not as entitlement but as exploitation when such taking crosses WTR boundaries.

A similar issue arises in authority ranking. Individuals gain authority because of their ability to inflict costs and/or confer benefits.
In virtue of this, according to elementary relationship forms theory, lower ranked people allow them to take larger or better shares. But what counts as too much? A variety of anthropological evidence shows that lower ranked people will eventually rebel against a highly ranked person who has overstepped what the lower-ranked feel is appropriate (Boehm, 1993). (A similar phenomenon is even seen in capuchin monkeys (Gros-Louis, Perry, & Manson, 2003).) The hypothesis that the mind computes relatively precise WTRs with respect to higher and lower ranked people can explain why authority ranking does not allow infinite benefit taking. At some point the benefits taken by a higher ranked person, relative to the costs inflicted on lower ranked people, are too far from the welfare tradeoff ratios that lower ranked individuals set toward the higher ranked person.

Whereas systems for communal sharing and authority ranking may interact with welfare tradeoff computations, equality matching may simply apply to situations where nuanced WTR calculations are not possible. Such situations may include multiperson cooperative ventures where tracking the inputs of multiple contributors and apportioning benefits proportionate to inputs may be difficult or impossible (Tooby, et al., 2006). Here, there may not be a useful connection between the structures instantiating the relationship type and WTR computation.

Alternatively, people’s decisions to join a collective endeavor may depend on their WTRs toward other contributors. Consider that a person may perceive their personal benefits from a collective endeavor to be subjectively less than the costs if they contributed. Thus, on grounds of personal welfare alone, it would not be rational for them to contribute. However, if the person’s WTRs toward one or more of the other parties are sufficiently high, it nonetheless might be adaptive to contribute.
Altogether, welfare tradeoff theory and the elementary forms of interaction approach do not conflict. Instead, welfare tradeoff theory can supply boundary conditions to at least some of the forms.

Other-Regarding Preferences Approaches

Standard approaches in economic game theory, when applied to the behavior of individuals, attempted to explain behavior in terms of rational computations designed to maximize personal objective payoffs. (Although it is not, in principle, necessary for agents to attempt to maximize personal objective payoffs, this is what has been traditionally assumed.) Years of experimental evidence have falsified this assumption: Subjects in behavioral economic games do not behave as predicted if attempting to maximize personal payoff; instead, they often act in ways that benefit others even at a cost to the self (Fehr & Henrich, 2003). Because of this, various theories of other-regarding preferences have been developed to better explain subjects’ economic decision-making (Camerer & Fehr, 2006). According to Fehr (2009): “A ‘social preference’ is now considered to be a characteristic of an individual’s behavior or motives, indicating that the individual cares positively or negatively about others’ material payoff or well-being. Thus, a social preference means that the individual’s motives are other-regarding; that is, the individual takes the welfare of the other individuals into account” (p. 216).

Theories of other-regarding preferences potentially diverge from welfare tradeoff theory on a number of points. First, except for other-regarding preference theories explicitly drawing on theories of selection pressures (e.g., Henrich et al., 2005), these theories do not usually have a principled reason to motivate their functional form aside from intuition and the ability to account for data in the circumscribed settings of experimental economics games (Fehr & Schmidt, 1999; Rabin, 1993).
Second, models of other-regarding preferences usually require parameters to be estimated for each subject, but provide no principles to predict the values these parameters might take. Welfare tradeoff theory, in contrast, provides a number of principled predictions of individual differences in WTRs and related internal variables (e.g., Lieberman, et al., 2007; Sell, Tooby, et al., 2009). Third, and related to the first two points, because other-regarding preference theories do not usually look to the theoretical constraints provided by theories of selection pressures, there are an infinite number of possible other-regarding preference theories: Consider that other-regarding preference theories retain the basic structure of behavioral game theory: rational (but blinkered) computation operating over a preference structure (Camerer, 2003). Although preference structures specifying short-term self-interest (the usual assumption of past work) have been falsified, there are an infinite number of other possible preference structures the mind could, in principle, adopt. Without theoretical guidance and constraint, other-regarding preference theories are infinitely flexible to account for almost any data set. Finally, although other-regarding preference approaches allow for individual differences in preferences and strategy (Camerer & Fehr, 2006), it is not clear that the theory can be extended to relationship-specific valuation (at the very least, I have never encountered the approach applied in this way).

This last issue, even if not a true theoretical difference, arises out of the behavioral economics-centric approach of other-regarding preference theories.
In a recent review, Fehr (2009) makes strong statements about the appropriate methodological tools for studying other-regarding preferences (emphasis his): “Games in which an individual interacts repeatedly with the same partner, a repeated PD [prisoners’ dilemma] for example, are clearly inappropriate tools…[Moreover,] the game should NOT be a simultaneous move game (e.g., the simultaneous PD), but should be played sequentially with the target subject being the second-mover who is informed about the first mover’s choice” (p. 221). Because games cannot be repeated, this means also that the games must be anonymous (if this were not the case, the “game” could extend outside the laboratory). This rules out, by fiat, the possibility of studying valuation toward family and friends, or toward any individual the subject has knowledge of beyond the information directly given in a laboratory setting. Thus, even if other-regarding preference theories are capable of predicting target-specificity in valuation, the methodological approach of their practitioners prevents them from examining it. In contrast, in testing predictions of welfare tradeoff theory, I developed a novel procedure to allow real money to be at stake in a laboratory game where subjects answered about a friend and an acquaintance who were not present in the lab (see Study 4 in Chapter 2).

Interdependence Theory

Interdependence theory derives from decades of research in social psychology (Kelley et al., 2003; Thibaut & Kelley, 1959). This theory weds game theoretic notions, such as payoff matrices, with concepts from social psychology, such as affect, attitudes, and expectancies. A major component of interdependence theory is that it provides a theory of situations (Reis, 2008). It analyzes situations (using game theory-like matrices) along a number of dimensions.
The four most important dimensions are the following (Rusbult & Van Lange, 2008):

(1) The level of dependence: the extent of influence of one person’s actions on another’s outcomes.
(2) The mutuality of dependence: the degree to which the level of dependence is similar or different across interaction partners.
(3) The basis of dependence: the extent to which one person’s outcomes are influenced by personal choices or the choices of others.
(4) The covariation of interests: the extent to which outcomes correspond or conflict.

Where interdependence theory diverges from typical game theory approaches is that instead of considering only objective payoffs, outcomes include not only payoffs but also emotional effects and other subjective payoffs that bear no direct relation to the objective payoffs. (This contrasts with the other-regarding preferences approach which, although it looks at preferences beyond self-interest, still ultimately deals only with subjective valuations of distributions of objective payoffs.) Although this broadening is in some ways a virtue of the approach, as it has the potential to explain phenomena not explainable by standard game theory approaches alone, it is also a weakness, as it drastically increases theoretical free parameters. Predicting someone’s behavior becomes not just a matter of analyzing how actions influence objective payoffs, but to what extent one actor cares about another, how an actor’s emotional response to helping or exploiting another increases or decreases the subjective value they attach to their outcome, etc. Filling out these promissory notes requires more specific theories that make substantive predictions about cooperative behavior. Ultimately, interdependence theory may be more of a meta-theory, a language within which to talk about interdependent action, than a strongly predictive theory of behavior.
(Consider that to even apply notions of, e.g., the level of dependence requires knowledge of how people value different actions and outcomes. But, outside of intuition, these valuations cannot be known without a prior theory to predict and interpret them.) Welfare tradeoff theory can help fill in these gaps by articulating the various determinants of interpersonal valuation (e.g., indices of relatedness) and by providing a concrete description of how one person’s welfare is translated into units of personal welfare. Combining this approach with the game theory-like, but psychologically realistic, approach of interdependence theory may allow for more refined prediction.

Social Contract Theory

Multiple lines of evidence converge to show that the mind contains mechanisms that enable social exchange, the mutual and contingent provisioning of rationed benefit (reviewed in Cosmides & Tooby, 2005). Particular social exchanges can take many forms, such as “If you give me meat now, then I will give you meat later” or “If you give me stock options maturing within the next 10 years, then I will design the next-generation alternative to the iPad for you.” How does the logic of social exchange and the logic of welfare tradeoffs relate? I break this into two questions: Are WTRs centrally involved in creating well-formed, sincere social exchanges? Do WTRs play a more indirect role in bounding the scope of social exchange obligations?

Suppose that a social exchange involves two people, named “you” and “me.” A well-formed social exchange requires that I give you a benefit, b_you, if you meet a requirement, c_you. (Although the requirement may be costly to you, that is not necessary.) Moreover, it requires that you give me a benefit, b_me, if I meet a requirement, c_me.
If I assume that you are sincere in offering your side of the social contract, then I face a choice between being sincere also and following through on my side of the exchange, or attempting to cheat you by accepting a benefit from you without meeting the requirement you have set of me. Using welfare tradeoff theory, let’s determine what my WTR toward you would need to be to cause me to be sincere. The left side of the following inequality represents my payoffs if I am sincere; the right side represents my payoffs if I attempt to cheat you:

b_me - c_me + WTR * (b_you - c_you) > b_me - WTR * c_you

Algebraic manipulation (subtracting b_me from both sides and adding WTR * c_you to both sides) reduces this to:

WTR * b_you > c_me

Or, assuming b_you is positive:

WTR > c_me / b_you

What does this imply? In some cases, my WTR toward you does not need to be very high at all for me to be sincere in my offer. Consider the limiting case where your requirement of me is not actually a cost (i.e., c_me ≤ 0). If that is the case, then I should be sincere in my offer to you whenever my WTR is even somewhat greater than zero. But what happens if we consider a case where the requirement of me is quite high relative to the benefit to you? This would often be the case when transacting with large corporations: The subjective cost of purchasing a new Toyota is quite high to me, but the subjective benefits of a single additional sale are quite low to Toyota. In this case, my WTR would have to be quite large for me to be sincere in my offer. Yet, humans (modern ones, at least) often engage in exchanges that would seem to require extremely high WTRs to be sincere about them. This suggests that welfare tradeoff theory does not strictly apply to the domain of social exchanges. Indeed, the creation of a psychology that enabled economic exchange independent of WTR considerations may be a central element in allowing human-style trade.

Could welfare tradeoff considerations apply in a relatively indirect way to social exchanges, however?
One possible route would be through (usually implicit) side-constraints on the entitlements and obligations created by a social contract (Cosmides & Tooby, 2008). Assume that I have agreed to give you a ride to the airport in exchange for you cleaning my apartment. What happens if at the last minute my mother needs to be rushed to the hospital? Would you expect me to follow through on my obligation? To the extent that most people’s intuition would excuse me from my obligation (though also excusing you from yours), this suggests there are side-constraints: conditions under which my costs from providing you with a benefit are so great (that is, exceeding an implicit WTR) that you release me from our contract. It remains for future work to study whether such side-constraints exist and if they involve implicit WTRs.

Parting Thoughts: Existentialism Meets Evolutionary Psychology

Part of me hopes that this work frightens you. I know it frightens me. If the theory presented here is even broadly correct, then our devotion to our friends, our children, our spouses, and our families is ultimately the product of magnitudes represented in our heads. Love and affection dissolve into syntactic operations, necessarily conducted without thought or foresight or feeling. Such is the paradox of the modern science of the mind: Sometimes looking up at humanity’s highest peaks of warmth and compassion is no different than staring into the abyss.

Appendix 1: Example Materials for Study 1

Instructions

In the following questions, imagine you are given a decision between two options. Your choice determines how much money you and another person receive. If you choose the first option, a sum of money will be given to you and no money will be given to the other person. If you choose the second option, a sum of money will be given to the other person and no money will be given to you.
For example, consider the decisions on the right:

            You   Other
Option 1     25       0
Option 2      0      20

            You   Other
Option 1     15       0
Option 2      0      20

            You   Other
Option 1    -13       0
Option 2      0      20

In the first decision, you must choose between $25 for you or $20 for the other person. If you choose the first option, then you get $25 and the other person gets $0. If you choose the second option, you get $0 and the other person gets $20. In the third decision, you must choose between -$13 for you or $20 for the other person. If you choose the first option, then you would have to pay $13 and the other person gets zero. Choosing a negative value for yourself means you lose money. If you choose the second option then you get $0 and the other person gets $20.

As an example, consider the decisions made by a hypothetical decision-maker. They are shown on the right:

            You   Other
Option 1     25       0
Option 2      0      20

            You   Other
Option 1     15       0
Option 2      0      20

            You   Other
Option 1    -13       0
Option 2      0      20

Based on the choices in the example above, for the first decision the decision-maker would receive $25 and the other person $0. In the second decision the decision-maker would receive $0 and the other $20. In the third decision the decision-maker would have to pay $13 and the other would receive $0.

Please try to make each of your decisions independently of your other decisions. That is, try not to let any decision you make influence any of the other decisions you make. As you work through the decisions, assume that you cannot share any money you receive with the other person and that they cannot share with you. Also assume that neither the other person nor anyone else will know what choices you make. This survey is anonymous so even the experimenter will not be able to connect your decisions to your identity.

In the following pages, you will be faced with a series of such decisions. For each decision, please circle your preferred option. There are no “right” or “wrong” answers to the questions. Please respond based on what feels appropriate to you.
Please think of a same-sex acquaintance who is not a close friend, but is someone you see on a regular basis. Please write their initials in this space: _________________ Keep this person in mind as you answer the following questions.

Please answer the following questions with regard to the same-sex acquaintance who is not a close friend but you see regularly. Their initials: ______

As you work through the decisions, complete one column at a time.

[Each cell lists the amounts as You / Other.]

         Column 1               Column 2               Column 3
Row   Option 1   Option 2    Option 1   Option 2    Option 1   Option 2
1     54 / 0     0 / 37      -8 / 0     0 / 23      -26 / 0    0 / 75
2     46 / 0     0 / 37      -3 / 0     0 / 23      -11 / 0    0 / 75
3     39 / 0     0 / 37      1 / 0      0 / 23      4 / 0      0 / 75
4     31 / 0     0 / 37      6 / 0      0 / 23      19 / 0     0 / 75
5     24 / 0     0 / 37      10 / 0     0 / 23      34 / 0     0 / 75
6     17 / 0     0 / 37      15 / 0     0 / 23      49 / 0     0 / 75
7     9 / 0      0 / 37      20 / 0     0 / 23      64 / 0     0 / 75
8     2 / 0      0 / 37      24 / 0     0 / 23      79 / 0     0 / 75
9     -6 / 0     0 / 37      29 / 0     0 / 23      94 / 0     0 / 75
10    -13 / 0    0 / 37      33 / 0     0 / 23      109 / 0    0 / 75

Please answer the following questions with regard to the same-sex acquaintance who is not a close friend but you see regularly. Their initials: ______

As you work through the decisions, complete one column at a time.
[Each cell lists the amounts as You / Other.]

         Column 1               Column 2               Column 3
Row   Option 1   Option 2    Option 1   Option 2    Option 1   Option 2
1     54 / 0     0 / 37      -8 / 0     0 / 23      -26 / 0    0 / 75
2     46 / 0     0 / 37      -3 / 0     0 / 23      -11 / 0    0 / 75
3     39 / 0     0 / 37      1 / 0      0 / 23      4 / 0      0 / 75
4     31 / 0     0 / 37      6 / 0      0 / 23      19 / 0     0 / 75
5     24 / 0     0 / 37      10 / 0     0 / 23      34 / 0     0 / 75
6     17 / 0     0 / 37      15 / 0     0 / 23      49 / 0     0 / 75
7     9 / 0      0 / 37      20 / 0     0 / 23      64 / 0     0 / 75
8     2 / 0      0 / 37      24 / 0     0 / 23      79 / 0     0 / 75
9     -6 / 0     0 / 37      29 / 0     0 / 23      94 / 0     0 / 75
10    -13 / 0    0 / 37      33 / 0     0 / 23      109 / 0    0 / 75

Please think of your closest same-sex friend. Please write their initials in this space: _________________ Keep this person in mind as you answer the following questions.

Please answer the following questions with regard to your closest same-sex friend. Their initials: ______

As you work through the decisions, complete one column at a time.
[Each cell lists the amounts as You / Other.]

         Column 1               Column 2               Column 3
Row   Option 1   Option 2    Option 1   Option 2    Option 1   Option 2
1     54 / 0     0 / 37      -8 / 0     0 / 23      -26 / 0    0 / 75
2     46 / 0     0 / 37      -3 / 0     0 / 23      -11 / 0    0 / 75
3     39 / 0     0 / 37      1 / 0      0 / 23      4 / 0      0 / 75
4     31 / 0     0 / 37      6 / 0      0 / 23      19 / 0     0 / 75
5     24 / 0     0 / 37      10 / 0     0 / 23      34 / 0     0 / 75
6     17 / 0     0 / 37      15 / 0     0 / 23      49 / 0     0 / 75
7     9 / 0      0 / 37      20 / 0     0 / 23      64 / 0     0 / 75
8     2 / 0      0 / 37      24 / 0     0 / 23      79 / 0     0 / 75
9     -6 / 0     0 / 37      29 / 0     0 / 23      94 / 0     0 / 75
10    -13 / 0    0 / 37      33 / 0     0 / 23      109 / 0    0 / 75

Initials of closest same-sex friend: ______

[Each cell lists the amounts as You / Other.]

         Column 1               Column 2               Column 3
Row   Option 1   Option 2    Option 1   Option 2    Option 1   Option 2
1     28 / 0     0 / 19      -16 / 0    0 / 46      99 / 0     0 / 68
2     24 / 0     0 / 19      -7 / 0     0 / 46      85 / 0     0 / 68
3     20 / 0     0 / 19      2 / 0      0 / 46      71 / 0     0 / 68
4     16 / 0     0 / 19      12 / 0     0 / 46      58 / 0     0 / 68
5     12 / 0     0 / 19      21 / 0     0 / 46      44 / 0     0 / 68
6     9 / 0      0 / 19      30 / 0     0 / 46      31 / 0     0 / 68
7     5 / 0      0 / 19      39 / 0     0 / 46      17 / 0     0 / 68
8     1 / 0      0 / 19      48 / 0     0 / 46      3 / 0      0 / 68
9     -3 / 0     0 / 19      58 / 0     0 / 46      -10 / 0    0 / 68
10    -7 / 0     0 / 19      67 / 0     0 / 46      -24 / 0    0 / 68

Relationship Quality Index, Acquaintance Version

[Subjects did not see a title to this scale or any other scale]

Please answer the following questions with regard to the same-sex acquaintance you listed before. Their initials: ______

How much do you like this person?
1 (Not at All)   2   3   4 (Somewhat)   5   6   7 (Very Much)

How close do you feel towards this person?
1 (Not at All)   2   3   4 (Somewhat)   5   6   7 (Very)

How much could you rely on this person if you needed help?
1 (Not at All)   2   3   4 (Somewhat)   5   6   7 (Very Much)

How much could this person rely on you if they needed help?
1 (Not at All)   2   3   4 (Somewhat)   5   6   7 (Very Much)

How willing would you be to do this person a favor?
1 (Not at All)   2   3   4 (Somewhat)   5   6   7 (Very)

How willing would this person be to do a favor for you?
1 (Not at All)   2   3   4 (Somewhat)   5   6   7 (Very)

Appendix 2: Screen Shots of Computerized Tasks

Appendix 3: Materials for Study 3

Script for Payment Condition

Hi, thank you for coming today. My name is ______ and I’m going to be running today’s experiment. In today’s experiment, you’re going to be making a series of monetary decisions involving yourself and several other people and filling out some other survey questions. Before we begin, please read and sign this consent form.

The first thing we need to do is determine who you will be making decisions about. We need you to select your closest same-sex friend and a same-sex acquaintance. However, they need to be people who are listed in the student directory. Please follow the instructions on the screen to do this. As you look through the directory, please mark the pages that list your friend and acquaintance with a sticky note.
Raise your hand when you are done and I’ll come and introduce the next part of the experiment. (Go away and wait for them to raise their hand.)

Okay, now let me show you how a decision works. (Hold up a large example.) Each decision includes two options. In the first option, you will get a sum of money and the other person will receive no money. In the second option, the other person will get a sum of money and you will get no money. All you need to do is click the option you’d prefer. There is no right or wrong answer, just pick whatever feels appropriate to you. In some of the cases, the sum of money for you will be negative. (Hold up example.) This means that you would have to pay money if you choose option 1. So if you choose option 1, the other person would get no money and you would lose money. If you choose option 2, you get no money and the other person gets a sum.

You are going to be presented with many decisions like these. You, your friend, or your acquaintance may receive actual money (on top of your show-up fee) based on the decisions you make. Let me explain how payment works. Please follow along on the sheet. No matter what happens, you will receive $10 for coming today. After the experiment is over, you will roll these dice. (Show them the dice.) If you roll double 6’s, two things happen. First, you will receive 30 additional dollars to your show-up fee. Second, you will randomly select one of the decisions you made and we will make that one decision come true. Either you, your friend, or your acquaintance will receive money based on the decision you randomly select. Any gains or losses that you personally make will be added to or subtracted from the $30. Because of this, please take all the decisions seriously. Make them as if they were all real because you don’t know which one actually might be real.

Just to make sure we’re ready to begin, I need to ask you a few questions: (Show the first example): What happens if you choose option 1?
If you choose option 2? (Show the second example): What happens if you choose option 1? Option 2? Do any of your decisions actually affect you or others monetarily? What happens if you roll double 6s? What happens if you roll anything but double 6s?

Your participation is entirely voluntary and you are free to withdraw from the study at any time without losing your research credit or being penalized in any way. Your responses will not be associated with your identity. That is, you will remain anonymous. The computer will have all the instructions you need from this point on. If you have any questions as you go along, please let me know.

Script for No Payment Condition

Hi, thank you for coming today. My name is ______ and I’m going to be running today’s experiment. In today’s experiment, you’re going to be making a series of monetary decisions involving yourself and several other people and filling out some other survey questions. Before we begin, please read and sign this consent form.

The first thing we need to do is determine who you will be making decisions about. We need you to select your closest same-sex friend and a same-sex acquaintance. However, they need to be people who are listed in the student directory. Please follow the instructions on the screen to do this. As you look through the directory, please mark the pages that list your friend and acquaintance with a sticky note. Raise your hand when you are done and I’ll come and introduce the next part of the experiment. (Go away and wait for them to raise their hand.)

Okay, now let me show you how a decision works. (Hold up a large example.) Each decision includes two options. In the first option, you will get a sum of money and the other person will receive no money. In the second option, the other person will get a sum of money and you will get no money. All you need to do is click the option you’d prefer. There is no right or wrong answer, just pick whatever feels appropriate to you.
In some of the cases, the sum of money for you will be negative. (Hold up example.) This means that you would have to pay money if you choose option 1. So if you choose option 1, the other person would get no money and you would lose money. If you choose option 2, you get no money and the other person gets a sum.

You are going to be presented with many decisions like these. The decisions are hypothetical and you, your friend, and your acquaintance will not receive any money based on them. Let me explain how payment works. Please follow along on the sheet. No matter what happens, you will receive $10 for coming today. After the experiment is over, you will roll these dice. (Show them the dice.) If you roll double 6s, you will receive 30 additional dollars on top of your show-up fee. Although the decisions are hypothetical, please take them all seriously. Please try to make them as if they were all real because you don’t know which one actually might be real.

Just to make sure we’re ready to begin, I need to ask you a few questions: (Show the first example): What happens if you choose option 1? If you choose option 2? (Show the second example): What happens if you choose option 1? Option 2? Do any of your decisions actually affect you or others monetarily? What happens if you roll double 6s? What happens if you roll anything but double 6s?

Your participation is entirely voluntary and you are free to withdraw from the study at any time without losing your research credit or being penalized in any way. Your responses will not be associated with your identity. That is, you will remain anonymous. The computer will have all the instructions you need from this point on. If you have any questions as you go along, please let me know.

Examples

| Example | Option | You | Other |
|---|---|---|---|
| 1 | Option 1 | 25 | 0 |
| 1 | Option 2 | 0 | 20 |
| 2 | Option 1 | -13 | 0 |
| 2 | Option 2 | 0 | 20 |

Appendix 4: Material for Study 4

Verbal Script

Hi, thanks for coming today. My name is ________ and I’ll be running today’s study.
In today’s study we’re going to be asking you questions that involve yourself and several other people. These others might be friends, acquaintances, family members, or other people you know. Most of the questions you will be answering will involve hypothetical monetary decisions. Let me give you a couple examples. In this first example, the decision involves yourself and another person. Your task is to choose between two options. In this example, if you choose option 1, then you receive $25 and the other person receives nothing. But if you choose option 2, then you receive nothing and the other receives $20.

Let’s look at another example. Notice in this example, option 1 has a negative amount for you. If you choose option 1 you would lose money, $13, and the other person gets nothing. But if you choose option 2, you would get nothing and the other person would get $20. Do you guys have any questions at this point?

Let’s look at a third example. In this example, the decision involves two other people, but does not involve yourself. But still, your task is to choose the option that YOU prefer. Your task is NOT to guess what you think the other people would choose. Your task is to choose the decision that you find most desirable. So you might prefer option 1 which means that Other 1 gets $25 and Other 2 gets nothing. Or you might prefer option 2 which means that Other 2 gets $20 and Other 1 gets nothing. Does anyone have any questions about that? I just want to make sure it’s clear: when a decision involves two other people and not you, your task is still to choose the option that you find most desirable, not the option you think one of the other people would choose. In these questions as well, negative numbers will also appear. Here, too, they mean that a person would lose money.

As you work through the decisions, please keep something very important in mind. Do your best to make each decision independently of all the others.
Do not let previous decisions you’ve made influence any decisions you are thinking about. To make this easier, imagine that at the end of the experiment we randomly select just one of the decisions that you made and we make this one, single decision real. If only one decision can become real, you want to make each decision independently of all others because you just don’t know which decision might be real. In other words, make each decision as if it were the only decision you were making and you had to live with that decision alone. This is very important, so does anyone have any questions at this point?

Just a few more quick things. There are no right or wrong answers: please make whatever decisions feel appropriate to you. As you make your decisions, please assume there is no sharing allowed: you cannot give any money you would earn to the others and they cannot give any money they earn to you or to each other. The decisions are hypothetical, so the people you make decisions about will never know what those decisions were.

Computerized Instructions

For this experiment we'd like you to think of six different people that you know. Please try to think of a range of people, from family members, to close friends, to not-that-close friends, to acquaintances, even to people you mildly dislike. Try to think of a broad range. On the following six screens we'd like you to enter these people's initials. We'll be using the initials to refer to these people later, so please do NOT select people with the same initials. We want to make sure that you know exactly who we're asking about. Make sure to enter only ONE set of initials on each screen. We recommend typing initials in all caps so it's easier to read later.

Please enter the initials of ONE person that you know. Try to select from a broad range of people you know (family members, friends, acquaintances, even people you don't like that much).
Make sure that you do not use the same person twice or the same set of initials twice. We'll be using the initials to refer to the person later. We recommend typing the initials in all caps as it makes it easier to read later. Remember, enter the initials of only ONE person on this screen. You have already entered: <person2> <person3> <person4> <person5> <person6>

In the following questions, you are given a decision between two options. The decisions are all hypothetical, but please imagine that your choice determines how much money you and several other people will receive. Some of the decisions will involve yourself and one other person. If you choose one of the options, a sum of money will be given to you and no money will be given to the other person. If you choose the other option, a sum of money will be given to the other person and no money will be given to you. For example, consider the decisions below:

| Decision | Option | You | Other |
|---|---|---|---|
| 1 | Option 1 | 25 | 0 |
| 1 | Option 2 | 0 | 20 |
| 2 | Option 1 | 15 | 0 |
| 2 | Option 2 | 0 | 20 |
| 3 | Option 1 | -13 | 0 |
| 3 | Option 2 | 0 | 20 |

In the first decision, you must choose between $25 for you or $20 for the other person. If you choose the first option, then you get $25 and the other person gets $0. If you choose the second option, you get $0 and the other person gets $20. In the third decision, you must choose between -$13 for you or $20 for the other person. If you choose the first option, then you would have to PAY $13 and the other person gets zero. Choosing a negative value for yourself means you LOSE money. If you choose the second option then you get $0 and the other person gets $20.

As an example, consider these decisions made by a hypothetical decision-maker:

| Decision | Option | You | Other |
|---|---|---|---|
| 1 | Option 1 | 25 | 0 |
| 1 | Option 2 | 0 | 20 |
| 2 | Option 1 | 15 | 0 |
| 2 | Option 2 | 0 | 20 |
| 3 | Option 1 | -13 | 0 |
| 3 | Option 2 | 0 | 20 |

Based on the choices in the example above, for the first decision the decision-maker would receive $25 and the other person $0. In the second decision the decision-maker would receive $0 and the other $20.
In the third decision the decision-maker would have to PAY $13 and the other would receive $0.

Other decisions may involve two other people, but NOT you. In these decisions, we would still like you to pick the option that YOU would prefer. Do not worry about what the other people would prefer. For example, consider the decisions below:

| | Other 1 | Other 2 | Other 1 | Other 2 | Other 1 | Other 2 |
|---|---|---|---|---|---|---|
| Option 1 | 25 | 0 | 15 | 0 | -13 | 0 |
| Option 2 | 0 | 20 | 0 | 20 | 0 | 20 |

In these decisions, your task is to choose the option that YOU prefer. So in the first decision above, you may prefer to give Other 1 $25 and Other 2 $0. If so, you would select Option 1. Or you may prefer to give Other 2 $25 and Other 1 $0. If so, you would select Option 2. Remember, please do not answer based on what you think the other people would choose. Negative numbers work the same here as in decisions involving yourself. If you choose an option with a negative number, one person would lose money and the other person would receive nothing. Please make sure to pay attention because which option is on top and which is on the bottom will change throughout the experiment.

As you work through the decisions, keep the following in mind--it is very important: Please make all of your decisions independently of the others. DO NOT LET ANY DECISIONS INFLUENCE ANY OTHER DECISIONS. To help you do that, imagine that at the end of the experiment we randomly select JUST ONE of the decisions you made and we make only that ONE decision come true. Because only one of your decisions would become real in this scenario, please make each of your decisions independently of your other decisions. After all, if only one can come true, you should make each decision as if it were the only one you were making. Make every decision as if you would have to live with that decision made in isolation.

As you work through the decisions, assume that you CANNOT share any money you receive with other people and that they CANNOT share with you or with each other.
Also assume that neither the other people nor anyone else will know what choices you make. This survey is anonymous so even the experimenter will not be able to connect your decisions to your identity.

In the following screens, you will be faced with a series of such decisions. For each decision, please choose your preferred option. There are no "right" or "wrong" answers to the questions. Please respond based on what feels appropriate to you. Please make sure to pay attention because which option is on top and which is on the bottom will change throughout the experiment.

Appendix 5: Materials for Study 5

In the following questions, you are given a decision between two options. The decisions are all hypothetical, but please imagine that your choice determines how much money you and your [friendacq], [friacqInitials], will receive. The decisions will involve yourself and this person. If you choose one of the options, a sum of money will be given to you and no money will be given to the other person. If you choose the other option, a sum of money will be given to the other person and no money will be given to you. Press any key to continue.

(end screen)

For example, consider the three decisions below:

25 vs 20

15 vs 20

-13 vs 20

In the first decision, you must choose between $25 for you or $20 for the other person. If you choose the first option, then you get $25 and the other person gets $0. If you choose the second option, you get $0 and the other person gets $20. In the third decision, you must choose between -$13 for you or $20 for the other person. If you choose the first option, then you would have to PAY $13 and the other person gets zero. Choosing a negative value for yourself means you LOSE money. If you choose the second option then you get $0 and the other person gets $20.

(end screen)

As an example, consider these decisions made by a hypothetical decision-maker. The decision-maker's preferred choices have been emphasized.
25 vs 20

15 vs 20

-13 vs 20

Based on the choices in the example above, for the first decision the decision-maker would receive $25 and the other person $0. In the second decision the decision-maker would receive $0 and the other $20. In the third decision the decision-maker would have to PAY $13 and the other would receive $0.

(end screen)

As you work through the decisions, keep the following in mind--it is very important: Please make all of your decisions independently of the others. DO NOT LET ANY DECISIONS INFLUENCE ANY OTHER DECISIONS. To help you do that, imagine that at the end of the experiment we randomly select JUST ONE of the decisions you made and we make only that ONE decision come true. Because only one of your decisions would become real in this scenario, please make each of your decisions independently of your other decisions. After all, if only one can come true, you should make each decision as if it were the only one you were making. Make every decision as if you would have to live with that decision made in isolation.

(end screen)

As you work through the decisions, assume that you CANNOT share any money you receive with the other person and that they CANNOT share with you. Also assume that neither the other person nor anyone else will know what choices you make. This survey is anonymous so even the experimenter will not be able to connect your decisions to your identity.

(end screen)

In the following screens, you will be faced with a series of such decisions. For each decision, please choose your preferred option. There are no "right" or "wrong" answers to the questions. Please respond based on what feels appropriate to you. Please make sure to pay attention because which option is on top and which is on the bottom will change throughout the experiment. As you work through these questions, you may NOT write anything down.
(end screen)

You will now make decisions regarding yourself and the [friendacq], [friacqInitials], who came with you to the experiment.

(end screen)

In the following questions you will be asked to judge which of two sums of money is larger. However, the sums are not expressed in the same currency. One sum will be expressed in United States dollars, the other will be expressed in euros. (The euro is the unit of currency in many European countries.)

(end screen)

To help you determine which sum is larger, we will give you an exchange rate for the two currencies. An exchange rate allows you to convert one currency to another. We will always be giving you an exchange rate that allows you to convert euros to dollars. For example, assume that the exchange rate that converts euros to dollars is [oneHalf] (one half). (We are only using [oneHalf] as an example. When we ask you questions, it is very likely the exchange rate will be something other than [oneHalf].) If the two sums of money were 5 US dollars and 20 euros, you would determine which sum was greater as follows. First, multiply the number of euros by the exchange rate:

20 euros * [oneHalf] = 10 dollars

Then compare the number of US dollars (5) with the number of euros expressed as dollars (10). As you can see, the sum originally expressed as euros is a larger sum of money. So in this example you would select 20 euros as the larger.

(end screen)

Let’s do 3 more examples. In each case, your task is to select the option that has the larger sum of money.

20 vs 30

5 vs 14

-15 vs 22

In the first example, you must decide whether 20 dollars is greater or smaller than 30 euros. In the second example, you must decide if 5 dollars is greater or smaller than 14 euros. In the third example, you must decide if -15 dollars is greater or smaller than 22 euros. Be sure to notice when one of the numbers is NEGATIVE. You can think of -15 dollars as a loss of 15 dollars. Which options do you think have the largest sum of money?
(end screen)

We’ve highlighted the correct answers in red. The first example asks which is bigger, 20 US dollars or 30 euros? Our exchange rate is [oneHalf] (one half), so we multiply 30 euros by [oneHalf], which equals 15 US dollars. This means that 30 euros are worth 15 US dollars. The sum of 20 US dollars available in Option 1 is greater than 15, so in this example you would select Option 1 as having the greater amount of money. In the second example, we multiply 14 euros by [oneHalf], which equals 7 US dollars. 7 dollars is greater than 5 dollars, so in this example you would select Option 2 as having the greater amount of money. In the third example, we multiply 22 euros by [oneHalf], which equals 11 US dollars. 11 dollars is greater than -15 dollars, so in this example you would select Option 2 as having the greater amount of money. Be sure to notice whether a sum is negative. Although 15 dollars is greater than 11 dollars, NEGATIVE 15 dollars is less than 11 dollars.

(end screen)

Although there is an actual exchange rate in the real world that allows you to convert euros into dollars, we will not be using that exchange rate in this experiment. Instead, we will be giving you a hypothetical exchange rate. For you, the exchange rate will be: [exchangeRate] We will present the exchange rate along with every question, so you do not need to memorize it.

(end screen)

As you work through these questions, please DO NOT write anything down.

(end screen)

If you are not sure of the answer, please take your best guess. Please make sure to pay attention because which option is on top and which is on the bottom will change throughout the experiment.

(end screen)

We would now like you to answer another set of questions about which of two sums is larger. As before one sum is expressed as US dollars and the other as euros.
There are two changes that make this set of questions different from the first set: First, the exchange rate will be presented as a [secondRate] instead of as a [firstRate]. Second, we will be giving you a new exchange rate to use in the questions. Your new exchange rate is: [exchangeRate] Following this screen are all the original instructions, with the new [secondRate] format of the exchange rate used in the examples. Please review the instructions before you begin.

Appendix 6: Probing the Sensitivity of Welfare Tradeoff Computations

The experiments of Chapter 2 showed that welfare tradeoff decisions are made in a consistent fashion and presented evidence to rule out the possibility that WTR decisions were being implemented by conscious manipulation of numbers. For instance, despite decisions being randomly mixed together, Study 5 showed that subjects’ WTRs subtly decreased as the total amount at stake was increased. If WTR decisions were being produced by the conscious manipulation of numbers in an attempt to be consistent, why go through the trouble of systematically changing them as a function of different absolute magnitudes, especially when decisions of different magnitudes are all randomly mixed? The goal of the experiment presented in this appendix is to further probe the sensitivity of welfare tradeoff computations to apparently minor differences between different decisions, even when the different decisions are all randomly mixed.

In the original “standard” version of the WTR task (“Do you want $5 to be allocated to you or $10 to be allocated to your friend?”), both possible allocations involve one person staying at their baseline level of utility and the other person gaining utility.
Although this describes one type of social interaction, there may be fundamentally different types of social interactions, each one defined by how different actors are moved up or down from their baseline utility (see also the section “The Elementary Forms of Social Interaction” in Chapter 4). For example, imposing a cost brings a person’s utility below what it was before a decision, and therefore affects them in a way that failing to change their utility does not. The consequences of moving a person to a utility lower than in the absence of interacting with you may be more serious than not affecting them at all. Such a stance is consistent with optimal foraging theory (Rode, et al., 1999; Stephens, et al., 2007) and may explain why psychologists routinely find that losses and gains are treated differently (e.g., Tversky & Kahneman, 1992). Thus, imposing a cost on someone to benefit yourself might well lead to different inferences on the part of the other person, provoking different consequences (e.g., aggression), compared to failing to confer a benefit to gain a benefit (which leaves their utility unchanged), or even inflicting a cost to avoid a cost (since in this case there is no way to avoid a situation where someone’s utility will go below baseline).

Thus, as a very simple test of these ideas, I modified the standard version of the WTR task, preserving the sign and magnitude of the ratio between the allocations, but changing whether the allocations represented positive or negative deviations from a person’s baseline. An indifference to the alternatives in all four of these variations would imply identical WTRs. But would the mind actually treat these tasks identically? In the “loss” version of the WTR task, both deviations are negative (allocate a loss of $5 to yourself or a loss of $10 to your friend?). In this version, someone’s utility must be decreased, making it symmetric to the standard version, which requires that someone’s utility increase.
In the “entitlement” version, the deviation for the self is positive and the deviation for the other is negative (allocate a gain of $10 to yourself and simultaneously a loss of $10 to your friend, or leave both at baseline?). Causing another person to incur a loss has potentially more severe consequences (e.g., eliciting aggression) than forgoing an opportunity to allocate them a gain. This may lead the mind to be more generous (to express a higher WTR) in the entitlement version of the task. In the “help” version, the deviation for the self is negative and the deviation for the other is positive (allocate a loss of $5 to yourself and simultaneously a gain of $10 to your friend, or leave both of you at baseline?). The mirror image of the entitlement task, the help task asks subjects to actively move themselves below baseline to increase another’s utility. Because this is especially costly for the self, and forgoing it would leave the other’s utility unchanged, this may lead the mind to be more selfish (to express a lower WTR) in the help version of the task. Because the differences between formats might depend on the nature of the subjects’ relationships with the target, I also examined four different types of relationships.

To test these ideas, a group of 52 subjects at the University of California, Santa Barbara, completed the welfare tradeoff task used in Chapter 2, with several important differences. First, each of the specific 60 choices (e.g., $54 for the self or $37 for the other) was completed four times, once in each of the above formats. Importantly, the instructions for the task did not describe it as involving four types of decisions. Instead, subjects learned that they would be making a number of decisions and learned how the mechanics of gaining or losing money based on the sign of the number worked. Second, for a specific target, all of the choices across all the formats were randomly mixed together and presented one-by-one by a computer.
This means that each subject made 240 decisions about, e.g., their friend. Third, all subjects answered about an acquaintance, their best friend, and their mother (or “the female relative who raised” them, if they were not raised by their biological mother). Fourth, a subset of 20 subjects also completed the task regarding an enemy (described as “someone you do not like and feel is against you, a person you may even feel is an enemy”). The order of the first three targets was randomized. If a subject was asked about an enemy, this always came last. I included the enemy condition to further explore the boundaries of WTR computation. Unfortunately, because of the large number of decisions subjects were making, most did not have time within the allotted experimental session to complete the enemy portion. (Regardless, subjects’ WTRs toward an enemy were uniformly very low.)

Despite the four formats being randomly mixed together within a target, are there systematic differences between how subjects complete the standard, loss, help, and entitlement versions of the task? Yes, as can be seen in Figure 4, subjects clearly revealed different welfare tradeoff ratios not only as a function of target, but, importantly, as a function of the format. Subjects’ sensitivity to the format and the target was tested statistically by using repeated-measures ANOVAs with target and format as factors and WTR scores as the dependent variable. Using data from all subjects (hence excluding data about enemies), there was a main effect of target type (Wilks’ λ = .40, F(2,50) = 37.67, p = 10⁻⁹, partial η² = .60), a main effect of format (Wilks’ λ = .23, F(3,49) = 54.46, p = 10⁻¹⁴, partial η² = .77), and an interaction between the two (Wilks’ λ = .51, F(6,46) = 7.38, p = 10⁻⁴, partial η² = .49).
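As a quick consistency check on the effect sizes just reported, the partial η² values match 1 minus Wilks’ λ, which is the relationship that holds for a multivariate test with a single root. The Python sketch below assumes that identity is how the effect sizes were obtained (the text does not state this) and uses only the values reported above; the function name is illustrative.

```python
# Values copied from the all-subjects ANOVA reported above:
# effect -> (Wilks' lambda, reported partial eta squared)
reported = {
    "target": (0.40, 0.60),
    "format": (0.23, 0.77),
    "target x format": (0.51, 0.49),
}


def partial_eta_squared(wilks_lambda: float) -> float:
    """Assumed identity for a single-root multivariate test: 1 - lambda."""
    return 1.0 - wilks_lambda


for effect, (lam, eta_reported) in reported.items():
    eta = partial_eta_squared(lam)
    print(f"{effect}: 1 - {lam:.2f} = {eta:.2f} (reported {eta_reported:.2f})")
```

Read this way, the interaction accounts for roughly half of the multivariate variance even without the enemy data.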
This was also true using only subjects who also had data regarding enemies (hence including the enemy data): There was a main effect of target type (Wilks’ λ = .13, F(3,17) = 39.39, p = 10⁻⁷, partial η² = .87), a main effect of format (Wilks’ λ = .23, F(3,17) = 18.72, p = 10⁻⁴, partial η² = .77), and an interaction between the two (Wilks’ λ = .18, F(6,11) = 5.57, p = .005, partial η² = .82).

[Figure 4: WTR (vertical axis) by target (Enemy, Acq, Friend, Mom), with separate series for the Standard, Loss, Entitle, and Help formats.]

Figure 4. Revealed welfare tradeoff ratios as a function of target and format. Error bars represent ±SEM.

Put in words, subjects were most generous toward their mothers, moderately generous toward their friends, and the least generous toward their acquaintances. Moreover, subjects had negative WTRs toward their enemies, meaning (e.g.) that subjects would incur a cost to avoid allocating money to their enemy. The entitlement version elicited the most generosity: Subjects were relatively unwilling to reduce another person’s utility below baseline even if that would increase the subject’s utility. The help version elicited the least generosity: Subjects were relatively unwilling to reduce their own utility below baseline even if that would increase another’s utility. The standard and loss versions both revealed moderate and roughly equivalent levels of generosity. The interaction between format and target is largely driven by the help format: The relationship with the target (mom vs. friend vs. acquaintance) makes relatively little difference in expressed WTRs on the help task. For all other tasks, subjects’ expressed WTRs are strongly affected by the nature of the target.

That there was a significant interaction, even when the data for enemies are excluded, suggests that target and format are not simply additive effects. Instead, the system for computing welfare tradeoffs simultaneously integrates both pieces of information in its decision-making process.
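To make the contrast between additive and non-additive effects concrete, the following Python sketch compares two sets of cell means. All numbers are hypothetical and chosen only for illustration; they are not the data in Figure 4, and the function name is illustrative. Under purely additive effects, the gap between any two targets is the same in every format, so every difference of differences is zero; a non-zero value is the signature of an interaction.

```python
from itertools import combinations


def interaction_contrasts(cells):
    """Return the difference of differences for each pair of targets and formats.

    cells maps target -> {format: mean WTR}. Every contrast is zero when the
    target and format effects are purely additive.
    """
    targets = list(cells)
    formats = list(cells[targets[0]])
    contrasts = {}
    for t1, t2 in combinations(targets, 2):
        for f1, f2 in combinations(formats, 2):
            gap_in_f1 = cells[t1][f1] - cells[t2][f1]
            gap_in_f2 = cells[t1][f2] - cells[t2][f2]
            contrasts[(t1, t2, f1, f2)] = round(gap_in_f1 - gap_in_f2, 10)
    return contrasts


# Hypothetical additive pattern: each cell is a target effect plus a format effect.
additive = {
    "mom": {"standard": 0.8, "help": 0.5},
    "acquaintance": {"standard": 0.4, "help": 0.1},
}

# Hypothetical non-additive pattern: the target matters less in the help format.
non_additive = {
    "mom": {"standard": 0.8, "help": 0.3},
    "acquaintance": {"standard": 0.4, "help": 0.2},
}

print(interaction_contrasts(additive))
print(interaction_contrasts(non_additive))
```

The second pattern mirrors, in spirit only, the reported finding that the relationship with the target makes relatively little difference on the help task.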
This speaks to an important question: Does the mind really compute WTRs or does it only compute the upstream factors (such as kinship indices) and simply add or average these factors when making welfare tradeoffs? If the latter were true, then we would expect only additive effects of manipulating the format of the decisions. This would occur because the internal representations only add or subtract from one another to arrive at a final decision. By contrast, the mechanisms for making welfare tradeoffs are hypothesized to integrate multiple pieces of information, with some information potentially enhancing, moderating, or attenuating the effects of other information on the final output.

Subjects clearly distinguish different formats and targets from each other. Despite the randomization, if we examine their decisions within a given format and target, do they make their decisions consistently? Yes: Analyzing the consistency maximization scores shows that subjects are consistent in their responses (see Table 6). Relative to the random null of 70.84%, consistency maximization scores were always at least 93% (all rs relative to the random null > .93, all ps < 10⁻¹⁰). Analyzing the perfect consistency scores also shows that subjects are consistent in their responses (see Table 6). Relative to the random null of 1.1%, perfect consistency scores were always at least 57% (all rs relative to the random null > .84, all ps < 10⁻⁸).

Note that consistency scores, especially on the perfect consistency measure, were lower in this study than in the main studies. This may have occurred for at least two reasons: First, subjects answered so many more questions in this study than in the main studies that their attention may have wavered, leading to less consistency. Second, the welfare tradeoff system may not be designed to switch so quickly between decisions with different formats, leading to a decrement in performance.
Nonetheless, subjects clearly distinguished between the different formats despite the randomization within a target. The welfare tradeoff system appears sensitive to even apparently minor variations on the task.

Table 6
Consistency Score Means (Standard Deviations) as a Function of Target and Format

| Target | Standard | Loss | Entitlement | Help |
|---|---|---|---|---|
| Consistency Maximization | | | | |
| Mom | 94% (07%) | 96% (06%) | 95% (07%) | 95% (07%) |
| Friend | 94% (07%) | 94% (07%) | 93% (09%) | 95% (08%) |
| Acquaintance | 95% (07%) | 96% (06%) | 93% (07%) | 97% (06%) |
| Enemy | 96% (07%) | 97% (05%) | 95% (08%) | 98% (06%) |
| Perfect Consistency | | | | |
| Mom | 59% (32%) | 70% (30%) | 70% (36%) | 67% (31%) |
| Friend | 61% (34%) | 60% (31%) | 58% (38%) | 70% (35%) |
| Acquaintance | 70% (31%) | 75% (30%) | 57% (31%) | 80% (26%) |
| Enemy | 78% (30%) | 78% (27%) | 70% (29%) | 88% (23%) |

Appendix 7: Dissociating Welfare Tradeoff Computation from Other Intuitive Economic Computation: A Pilot Functional Magnetic Resonance Imaging Study [footnote 3]

The findings in Chapter 2 showed that the welfare tradeoff task dissociated from a logically identical task involving explicit conscious manipulation of magnitudes (the currency exchange task). But these data leave open an additional alternative to the hypothesis that the mind contains specialized mechanisms for computing welfare allocations and tradeoffs: Perhaps the mind has a general economic preference calculator. Although no one has proposed a computational model of a single mechanism that could adaptively compute all possible economic valuations, if such a mechanism did exist, it would operate over welfare tradeoffs. But it would also compute the valuation of hot dogs, of donating to charity, of investing in a dot-com, or of going Dutch on a date. That welfare tradeoff calculations are handled, in part, by a separate system could not necessarily be shown by the type of data (e.g., reaction time data) used in Chapter 2.
Even if there are numerous separate systems for computing different types of economic valuation, they may all operate with considerable speed and make all such calculations feel phenomenologically intuitive. Previous work using neuroimaging and behavioral measures has dissociated time preferences from risk preferences (Weber & Huettel, 2008) and work using only behavioral measures has dissociated WTR-like preferences from time preferences (Rachlin & Jones, 2008b), suggesting that separate systems are involved.

³ Scott Grafton collaborated in design of the study presented here. Any errors of analysis or interpretation are completely mine, however.

To directly address this issue, I instead turned to a very different approach from my previous studies: functional magnetic resonance imaging (fMRI). Briefly, fMRI measures changes in regional BOLD (blood-oxygenation-level dependent) signal in the brain. Greater BOLD signal implies that a population of neurons has been activated (Hashemi, Bradley, & Lisanti, 2004). For my purposes, I imaged subjects while they completed several experimental tasks. If a population of neurons shows significantly greater BOLD signal for one task relative to another, that would imply that the first task preferentially activates those neurons. This would demonstrate a neural dissociation between the two tasks.

In this study, I compared the welfare tradeoff task to two versions of a temporal discounting task (see Chapter 2 on temporal discounting; details of the method are given at the end of this appendix). The basics of the welfare tradeoff task were retained from previous studies. Temporal discounting is an appropriate comparison because it is also an economic preference and tradeoffs involving temporal discounting are made intuitively; i.e., subjects do not consult a conscious magnitude; they instead choose what “feels” right.
The first temporal discounting task asked subjects to choose between an earlier smaller benefit and a larger later benefit as they themselves would want (self temporal discounting). For instance, in the self temporal discounting task, subjects would need to decide whether they want $54 tomorrow or $98 in six months. The other temporal discounting task asked subjects to make the same choices except to complete them as they believe their closest friend would want (friend temporal discounting). For example, in the friend temporal discounting task, subjects would need to decide if their friend would want $54 tomorrow or $98 in six months. This latter task not only controls for the economic nature of the task, but also for the presence of another social agent. If a brain region is differentially active in the WTR task relative to both temporal discounting controls, then this would strongly suggest that the different tasks are handled by different neurocognitive systems.

Before proceeding to the data, I note a major caveat: This study is only a pilot study consisting of just eight subjects and its results must be treated with extreme caution and only interpreted as preliminary.

Despite this, do the data support a neurocognitive dissociation between welfare tradeoffs and temporal tradeoffs? First, note that reaction time data in this experiment did not reveal that the WTR task was either substantially easier or more difficult than the temporal discounting tasks. Subjects completed WTR decisions on average in 1.61sec (95% Confidence Interval: 1.22 to 2.00). Moreover, they completed the self temporal discounting task on average in 1.59sec (95% CI: 1.15 to 2.03) and the friend temporal discounting task on average in 1.71sec (95% CI: 1.22 to 2.19). (As a point of comparison, in Study 5 of Chapter 2, subjects completed the WTR in 1.8sec on average, but took about 3.1sec on average to complete the currency exchange tasks.)
Interestingly, a paired-samples t-test revealed the friend temporal discounting task to be significantly slower than the self version, t(7) = 2.94, p = .041. The differences between the WTR and self temporal discounting task and the WTR and friend temporal discounting task were not significant, ps > .38. Thus, as expected, the three tasks employed in this pilot imaging study are roughly equivalent in ease for the subjects. Moreover, the WTR task was neither completed the fastest nor the slowest.

Consistent with the hypothesis that there is a specialized system for computing welfare tradeoffs, a group-level analysis showed that the welfare tradeoff task was associated with significant activation in right dorsomedial prefrontal cortex (rDMPFC), Brodmann’s area (BA) 8, relative to both the self and friend temporal discounting tasks (see Figure 5 and the first rows of the top and bottom halves of Table 7). The peak activation for the cluster revealed in the self temporal discounting comparison was located at (23, 37, 44) and for the cluster revealed in the friend comparison at (21, 31, 49).

The WTR/self discounting and WTR/other discounting activations are roughly 8mm apart. Do they really represent activation in the “same” place? Although fMRI involves measuring activity at many thousands of voxels (cube-shaped “volume elements” representing tiny portions of the brain), voxels are not statistically independent. Instead, there are only a much smaller number of statistically independent regions within the brain, called resels (for “resolving elements”). (Resels are not actual physical regions of the brain; instead, they are a theoretical estimate of the number of independent comparisons.) If two points fall within the same resel, then they represent activation of the “same” region of the brain. For the self temporal discounting comparison, the size of a resel is 239 voxels, with each voxel being 2mm on a side.
If a resel were perfectly spherical, then two points could be at most 16mm apart. (A spherical shape works against concluding that two points belong to the same resel.) For the friend comparison, the size of a resel is 204 voxels, implying that two points could be at most 14mm apart. In either case, the two peak activations in the rDMPFC in the self and friend comparisons are closer than this, suggesting that they do lie within the “same” region of the brain and may represent identical activations. (The calculations about maximum distance used the native coordinates calculated by SPM—not the Talairach coordinates presented in the tables—because the SPM coordinates are the coordinates that the resels are defined within. In SPM’s native coordinates, the two activations are roughly 9mm apart.)

Consistent with the hypothesis that the self and friend temporal discounting tasks activate the same region, and this region differs from that activated by the WTR task, I found that the activation in the WTR–self temporal discounting comparison at (23, 37, 44) was in the “same” place as another activation in the WTR–friend temporal discounting comparison. This latter activation was located in right dorsomedial prefrontal cortex, BA 6, at (21, 31, 35). Both BA 6 and 8 have been implicated in various types of social reasoning tasks (Barbey, Krueger, & Grafman, 2009; Ermer, Guerin, Cosmides, Tooby, & Miller, 2006; Fiddick, Spampinato, & Grafman, 2005), including moral reasoning tasks that involve trading off welfare (Greene, Sommerville, Nystrom, Darley, & Cohen, 2001). Finally, there was an additional shared activation in left caudate, located at (1, 1, 12) for the WTR–self temporal discounting comparison and (-3, -1, 17) for the WTR–friend comparison.

For transparency, I also present significant activations comparing the two temporal discounting tasks to the WTR task (Table 8) and the two temporal discounting tasks to each other (Table 9).
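The distance and resel arithmetic in the preceding paragraphs can be checked with a short sketch. It is an illustration only, not the computation actually run for this appendix. It uses the Talairach peaks quoted in the text (the text notes that the original comparison was made in SPM's native coordinates, where the separation is roughly 9mm), and it assumes that the diameter of a spherical resel is rounded to a whole number of 2mm voxels. That rounding rule is a guess that happens to reproduce the 16mm and 14mm figures; without rounding, the diameters come out near 15.4mm and 14.6mm. The function names are hypothetical.

```python
import math

VOXEL_MM = 2.0  # functional voxels were resliced to 2 x 2 x 2 mm


def peak_distance(a, b):
    """Euclidean distance between two (x, y, z) peaks, in mm."""
    return math.dist(a, b)


def sphere_diameter_mm(resel_voxels, voxel_mm=VOXEL_MM):
    """Diameter of a sphere with the same volume as a resel.

    Assumption (not stated in the text): the diameter is computed in
    voxel units and rounded to a whole voxel before converting to mm.
    """
    diameter_voxels = 2.0 * (3.0 * resel_voxels / (4.0 * math.pi)) ** (1.0 / 3.0)
    return round(diameter_voxels) * voxel_mm


# Talairach peaks quoted in the text
self_ba8 = (23, 37, 44)    # WTR > self temporal discounting, BA 8
friend_ba8 = (21, 31, 49)  # WTR > friend temporal discounting, BA 8
friend_ba6 = (21, 31, 35)  # WTR > friend temporal discounting, BA 6

print(f"{peak_distance(self_ba8, friend_ba8):.1f}")  # 8.1
print(f"{peak_distance(self_ba8, friend_ba6):.1f}")  # 11.0
print(sphere_diameter_mm(239))  # 16.0 (self comparison)
print(sphere_diameter_mm(204))  # 14.0 (friend comparison)
```

Under these assumptions both pairs of peaks are closer together than the smaller of the two maximum distances, which agrees with the conclusion drawn in the text.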
The most direct comparison to previous neuroscientific literature on intertemporal choice would be the self temporal discounting–WTR comparison at the top of Table 8. Several of these activations, such as in the caudate and cingulate cortex, are consistent with past work (Kable & Glimcher, 2007; McClure, Laibson, Loewenstein, & Cohen, 2004; Weber & Huettel, 2008).

In sum, welfare tradeoff decisions dissociate from another intuitive economic preference, temporal discounting. This holds true relative to two control tasks, discounting as the self prefers and discounting as a friend prefers. This result is not predicted by an alternative account holding that welfare tradeoff computations are made by a general economic preference calculation system. It is, however, consistent with the hypothesis that the mind contains specialized neurocognitive mechanisms for computing welfare allocations and tradeoffs.

[Figure 5: two panels, “WTR > Self Temporal Discounting” and “WTR > Friend Temporal Discounting,” each with a color bar running from 0 to 10.]

Figure 5. Neural activations comparing the welfare tradeoff task to the two temporal discounting tasks. The figure for the self temporal discounting contrast is positioned at (17, 34, 46) in Talairach coordinates; the activation in rDMPFC at Brodmann’s area (BA) 8 can be seen. The figure for the friend temporal discounting contrast is positioned at (21, 35, 34) in Talairach coordinates; the activations in rDMPFC at BAs 6 & 8 can be seen. Color map represents t-values.
165 Table 7 Significant Activations of the Welfare Tradeoff Task Relative to the Temporal Discounting Tasks Brain Area WTR > Self Temporal Discounting Superior Frontal Gyrus Medial Frontal Gyrus Middle Frontal Gyrus Precentral Gyrus Postcentral Gyrus Caudate WTR > Friend Temporal Discounting Superior Frontal Gyrus Medial Frontal Gyrus Hemi BA Voxels x y z t-value R L L 8 8 10 106 23 22 -18 16 -10 37 37 53 44 47 -2 10.4 7.79 5.1 L 10 11 -16 47 10 5.68 R R L R L L 6 6 9 5 - 17 49 6 11 45 2 16 -40 17 16 2 -40 25 1 1 12 -8 13 42 25 39 71 12 7 3.97 4.71 4.33 4.8 5.35 4.05 R L 8 6 29 39 21 -2 31 27 49 59 5.07 5.38 R L L 6 9 10 198 15 10 21 -7 -5 31 53 61 35 38 21 7.51 4.24 4.03 Middle Temporal Gyrus R 22 110 53 -35 0 7.96 R 39 44 45 -60 21 5.79 L 21 11 -54 -4 -13 4.98 Cingulate Gyrus R 24 18 13 3 30 11.06 L 32 111 -20 12 39 7.72 Caudate R 19 23 -22 22 4.99 L 38 -3 -1 17 4.98 Claustrum L 37 -27 11 12 6.72 Note. Hemi = Hemisphere. BA = Brodmann area based on stereotaxic coordinates. Voxels = number of voxels in a cluster. (x,y,z) are in Talairach coordinates. 
166 Table 8 Significant Activations of the Temporal Discounting Tasks Relative to the Welfare Tradeoff Task Brain Area Self Temporal Discounting > WTR Caudate Caudate Tail Cerebellar Tonsil Cingulate Gyrus Culmen Cuneus Inferior Frontal Gyrus Lentiform Nucleus, Putamen Lingual Gyrus Lingual Gyrus Middle Occipital Gyrus Parahippocampal Gyrus Parahippocampal Gyrus, Hippocampus Posterior Cingulate Sub-Gyral, Hippocampus Superior Temporal Gyrus Friend Temporal Discounting > WTR Anterior Cingulate Culmen Cuneus Declive of Vermis Inferior Parietal Hemi BA Voxels x y z t-value R R L L R L R R 31 24 13 130 38 -29 83 23 -36 10 -25 -52 13 -16 -27 15 8 1 48 -1 -68 229 2 -92 14 34 9 -5 12 -41 47 37 -6 3 -14 7.56 6.46 5.15 6.71 4.74 4.25 6.61 4.84 R R R L 19 - 18 25 4 77 29 -10 62 25 -62 52 -16 -76 18 -9 1 -1 5.41 4.81 5.98 5.29 L 19 10 -27 -89 8 4.54 L R 30 30 203 -20 -47 10 16 -39 8 -3 5.09 4.23 R R 29 13 21 34 -13 4 -45 -18 7 4.41 4.36 R - 23 28 -41 3 9 R R R 42 38 38 19 13 10 62 -27 40 0 46 17 14 -17 -11 5.38 5.29 5.18 L L L R R L L 32 30 18 19 40 56 -1 11 27 83 -3 61 4 13 9 28 -3 13 -55 -6 -22 8 7 36 -10 24 7.69 4.35 5.94 4.78 4.51 7.13 4.17 167 46 -31 -74 -90 -86 -72 -43 Lobule Inferior Temporal Gyrus R 20 14 51 -49 -10 4.13 Lentiform Nucleus, Putamen R 19 31 -10 -7 6.22 Middle Occipital Gyrus R 18 106 32 -82 1 7.26 Paracentral Lobule R 5 21 8 -38 49 5.76 Parahippocampal Gyrus R 19 20 21 -54 0 6.11 Parahippocampal Gyrus, Amygdala R 20 21 -11 -13 4.93 Precentral Gyrus L 4 26 -28 -29 53 4.92 Precuneus R 7 13 15 -69 52 5.13 Superior Temporal Gyrus R 42 12 60 -29 12 5.15 Note. Hemi = Hemisphere. BA = Brodmann area based on stereotaxic coordinates. Voxels = number of voxels in a cluster. (x,y,z) are in Talairach coordinates. 
Table 9
Significant Activations of the Temporal Discounting Tasks Relative to Each Other

Brain Area Friend > Self Temporal Discounting Middle Frontal Gyrus Cingulate Gyrus Insula Self > Friend Temporal Discounting Caudate Tail Caudate Body Cingulate Gyrus Culmen Declive Inferior Parietal Lobule Insula Lentiform Nucleus, Putamen Lingual Gyrus Middle Frontal Gyrus Middle Temporal Gyrus Parahippocampal Gyrus, Hippocampus Postcentral Gyrus Posterior Cingulate Precentral Gyrus Superior Temporal Gyrus Thalamus Thalamus, VLN Transverse Temporal Gyrus Hemi BA Voxels x y R R L 6 31 13 12 43 -1 10 17 -22 13 -34 20 R R L L L L L 24 24 - 53 23 R R R 40 13 13 13 L L R R L z t-value 54 33 16 7.17 3.86 5.96 -37 -41 -3 -1 -6 -58 -79 16 5 20 38 29 2 -12 10.4 5.66 4 9.01 4.37 6.4 6.25 54 -42 34 -27 41 -14 44 10 11 4.8 5.37 5.11 18 19 19 9 28 -23 -7 -20 -76 15 25 -73 25 -63 17 -48 28 18 -7 -4 -1 30 5.12 4.86 4.96 3.66 5.11 L 37 18 -53 -66 10 8.68 R L R R R L 3 30 30 30 4 10 33 -61 2 23 4 13 42 -59 -11 -18 -53 -67 -65 -10 -14 34 7 12 11 31 4.93 4.08 4.41 5.92 5.18 6.78 R R L 38 - 12 31 42 6 19 -28 -18 -12 -23 0 12 4.92 5.57 5.1 R 41 66 43 -22 12 5.72 21 36 -12 291 -11 -3 35 -5 89 -16

Note. Hemi = Hemisphere. BA = Brodmann area based on stereotaxic coordinates. Voxels = number of voxels in a cluster. VLN = Ventral lateral nucleus. (x,y,z) are in Talairach coordinates.

Method

Subjects

Eight graduate and undergraduate students associated with the UCSB Psychology Department were recruited through email advertisements. Two subjects were left-handed; three were female. Subjects were compensated $30 for approximately 1.5 hours of their time. Their decisions were hypothetical; thus they received no additional compensation for their choices.

Tasks

Subjects completed three types of tasks: a welfare tradeoff task, a temporal discounting task according to their own preferences, and a temporal discounting task according to what they believed their closest friend’s preferences would be.
Subjects completed three versions of the welfare tradeoff task: one with respect to subjects’ closest same-sex friend, one with respect to a same-sex acquaintance, and one with respect to a same-sex “stranger,” defined as someone the subject saw in their day-to-day life (as opposed to through television or other media), but with whom the subject had never interacted (e.g., a person in the subject’s class the subject had never talked to). Subjects also completed three versions each of the temporal discounting tasks: one with the larger benefit available at 6 months, one at 8 months, and one at 12 months; in all versions the smaller benefit was available tomorrow. Prior to entering the scanner, subjects determined the targets of their welfare tradeoff decisions and completed practice trials of all three task types. (The practice trials used a different target than the main experimental trials and involved a delay of 10 months.)

Subjects were scanned as they completed the task in nine blocks. Each block consisted of 42 trials of the same type. Thus, for example, all and only trials of welfare tradeoffs involving an acquaintance were completed within a single block. Similarly, all and only trials of temporal discounting according to personal preferences with a delay of 6 months were completed within a single block. Between blocks, subjects were given as much rest as they desired. The order of the blocks was randomized across subjects.

Individual trials proceeded as follows. For the welfare tradeoff task, subjects first saw a fixation/instruction screen. This screen had (a) the word “Choose” centered near the top, (b) near the middle at the left the word “You,” and (c) near the middle at the right the initials of the target (e.g., the initials of the subject’s friend). This screen appeared for 250, 500, 750, 1000, 1250, or 1500msec, with the exact length randomly determined.
Next, specific values appeared below “You” and the initials; these represented the amounts that would be allocated to the subject or the target of their choices based on subjects’ decisions. Subjects responded with a fingerpress response box. Subjects had as long as they desired to make their decisions. As soon as they made their decision, the fixation/instruction screen reappeared. (This means that timing of subjects’ decisions was in no way linked to time points within the scanner’s repetition time.)

The temporal discounting tasks were similar. Instead of “You” and the initials, however, “Tomorrow” and the longer delay (e.g., “8 months”) appeared. Moreover, instead of “Choose,” the word “You” appeared if the task was to be completed according to the subject’s preferences and the friend’s initials appeared if it was to be completed according to the friend’s preferences.

The same 42 pairs of numbers (e.g., $54 tomorrow or $98 in 6 months) were used in all blocks. The specific pairs used were chosen to allow WTRs to range between 0 and 1. The lengths of delay (6, 8, and 10 months) were chosen so that the specific numbers used would also be roughly centered on the distribution of discounting parameters normally found in the literature (Kirby & Marakovic, 1996). The numbers are shown in Table 10. Note that subjects did not get paid based on their decisions; decisions were hypothetical and subjects were aware of this.

Table 10
Numbers Used in the WTR and Temporal Discounting Tasks

| You/Tomorrow | Other/Later | You/Tomorrow | Other/Later |
|---|---|---|---|
| 92 | 93 | 41 | 41 |
| 87 | 92 | 47 | 49 |
| 82 | 91 | 46 | 51 |
| 82 | 96 | 41 | 48 |
| 73 | 91 | 34 | 43 |
| 79 | 105 | 45 | 60 |
| 77 | 110 | 29 | 42 |
| 66 | 101 | 27 | 41 |
| 60 | 100 | 30 | 50 |
| 54 | 98 | 26 | 48 |
| 53 | 105 | 24 | 47 |
| 45 | 101 | 19 | 43 |
| 38 | 94 | 23 | 57 |
| 39 | 110 | 19 | 54 |
| 28 | 94 | 18 | 59 |
| 24 | 97 | 12 | 48 |
| 19 | 97 | 10 | 50 |
| 14 | 93 | 8 | 50 |
| 9 | 94 | 5 | 52 |
| 5 | 96 | 3 | 51 |
| 1 | 100 | 1 | 59 |

Imaging Methods and Analysis

Imaging Details

Images were acquired with a 3T Tim Siemens Magnetom Trio scanner with a 12-channel phased array head coil.
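Returning briefly to the choice pairs in Table 10, the sketch below illustrates how each pair can be read in the two tasks. It rests on two assumptions that are not spelled out in this appendix: that the WTR at which a subject is indifferent between keeping the smaller amount and allocating the larger amount to the other person is the ratio of the two amounts, and that discounting follows the hyperbolic form V = A / (1 + kD), with the "tomorrow" option treated as immediate. The function names and the choice of months as the delay unit are illustrative only.

```python
# The 42 pairs from Table 10: smaller amount (You/Tomorrow) and
# larger amount (Other/Later), in the order listed in the table.
SMALLER = [92, 87, 82, 82, 73, 79, 77, 66, 60, 54, 53, 45, 38, 39,
           28, 24, 19, 14, 9, 5, 1,
           41, 47, 46, 41, 34, 45, 29, 27, 30, 26, 24, 19, 23, 19,
           18, 12, 10, 8, 5, 3, 1]
LARGER = [93, 92, 91, 96, 91, 105, 110, 101, 100, 98, 105, 101, 94,
          110, 94, 97, 97, 93, 94, 96, 100,
          41, 49, 51, 48, 43, 60, 42, 41, 50, 48, 47, 43, 57, 54,
          59, 48, 50, 50, 52, 51, 59]


def indifference_wtr(self_amount, other_amount):
    """WTR at which the two options are valued equally.

    Assumed decision rule: allocate to the other person when
    WTR * other_amount exceeds self_amount.
    """
    return self_amount / other_amount


def hyperbolic_k(sooner, later, delay):
    """Discount rate k at indifference, assuming V = A / (1 + k * D)
    and treating the sooner amount as undelayed (a simplification)."""
    return (later / sooner - 1.0) / delay


ratios = [indifference_wtr(s, o) for s, o in zip(SMALLER, LARGER)]
print(len(ratios))               # 42
print(min(ratios), max(ratios))  # 0.01 1.0

# The example pair from the text: $54 tomorrow or $98 in 6 months
print(round(indifference_wtr(54, 98), 3))  # 0.551
print(round(hyperbolic_k(54, 98, 6), 3))   # 0.136 (per month)
```

Read this way, the implied ratios run from 0.01 to 1.0, in line with the statement that the pairs allow WTRs to range between 0 and 1.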
Functional runs were acquired with an echo planar gradient-echo imaging sequence sensitive to BOLD contrast, with a 2000msec repetition time (TR), 37 interleaved slices per TR (3mm thickness, 0.5mm gap), a 30msec echo time (TE), a flip angle of 90°, a field of view (FOV) of 192mm, and a 64 x 64 matrix. Prior to functional runs, a high-resolution T1-weighted MPRAGE image of the whole brain was acquired (TR = 2300msec, TE = 2.98msec, flip angle = 9°, 3D acquisition, FOV = 256mm, slice thickness = 1.1mm, 256 x 256 matrix).

Image Preprocessing

Images were preprocessed using SPM8 (www.fil.ion.ucl.ac.uk/spm). All subjects were checked for motion artifacts; none were found. Functional images were realigned and then co-registered with each subject’s anatomical image. (No slice timing correction was applied.) Subjects’ anatomical and functional images were normalized to the smoothed Montreal Averaged 152 atlas (template “T1” in SPM8), with anatomical images being resliced to 1 x 1 x 1mm voxels and functional images to 2 x 2 x 2mm voxels. Functional images were then smoothed with an 8 x 8 x 8mm FWHM Gaussian kernel filter.

Statistical Analysis

Images were analyzed using SPM8. I used a simple block design defined by three conditions: (1) the three blocks of the WTR task, (2) the three blocks of the personal temporal discounting task, and (3) the three blocks of the friend temporal discounting task. SPM’s canonical hemodynamic response function was used as a basis function (no derivatives were used). Classical estimation was used at both the individual and group level. Given that this was a pilot study with a limited number of subjects, I used a relatively liberal statistical threshold to define significant clusters at the group-level: p < .005 (uncorrected) with a cluster extent of 10. (No activations would be significant using either family-wise error correction or false discovery rate techniques.)
The stereotaxic locations of significant voxels were converted from SPM’s native MNI space to Talairach space using the Lancaster transform (icbm2tal), implemented in GingerALE (Laird et al., 2005). Anatomical regions and Brodmann’s areas were determined based on nearest gray matter using the Talairach Client program (Lancaster et al., 1997; Lancaster et al., 2000).

Appendix 8: Materials for Chapter 3

Cover Stories

Cover Story (Studies 6, 7, and 9)

In today's study you'll be learning about a group of people who were traveling together on a small chartered plane. While crossing the Pacific Ocean, the plane hit a violent storm and was forced very far off course. A bolt of lightning ripped through the plane and damaged the electrical system as well as one of the engines. With no radio and failing power, the pilot managed to crash land on a small island. The pilot died during the impact and many of the passengers were seriously injured. For two days the passengers waited by the plane for help. They eventually ate all the food they had. Realizing that they might be on the island for a while and that they needed supplies, those who were not injured decided to go out and collect food. They knew that they needed to work together and cooperate so that everyone, including those who were seriously injured, would survive. Everyone who was going out searching agreed that any and all food they found they would bring back and share with the entire group. Each person carried a bag to collect food as well as a spear made from wood found near the crash site. In order to cover more ground, each person went out on their own.

Cover Story (Study 8)

In today's study you'll be learning about a group of people who were traveling together on a small chartered plane. While crossing the Pacific Ocean, the plane hit a violent storm and was forced very far off course. A bolt of lightning ripped through the plane and damaged the electrical system as well as one of the engines.
With no radio and failing power, the pilot managed to crash land on a small island. The pilot died during the impact and many of the passengers were seriously injured. For two days the passengers waited by the plane for help. They eventually ate all the food they had. Realizing that they might be on the island for a while and that they needed supplies, those who were not injured decided to go out and collect food. They knew that they needed to work together and cooperate so that everyone, including those who were seriously injured, would survive. Everyone who was going out searching agreed that most of the food they found they would bring back and share with the entire group, although they could also keep some for themselves. Each person carried a bag to collect food as well as a spear made from wood found near the crash site. In order to cover more ground, each person went out on their own.

Cover Story (Study 10)

In today's study you'll be learning about a group of people who were traveling together on a small chartered plane. While crossing the Pacific Ocean, the plane hit a violent storm and was forced very far off course. A bolt of lightning ripped through the plane and damaged the electrical system as well as one of the engines. With no radio and failing power, the pilot managed to crash land on a small island. The pilot died during the impact and many of the passengers were seriously injured. For two days the passengers waited by the plane for help. They eventually ate all the food they had. Realizing that they might be on the island for a while and that they needed supplies, those who were not injured decided to go out and collect food. Food on the island was scarce and hard to come by. They knew that they needed to work together and cooperate so that everyone, including those who were seriously injured, would survive.
Everyone who was going out searching agreed that most of the food they found they would bring back and share with the entire group, although they could also keep some for themselves. Each person carried a bag to collect food as well as a spear made from wood found near the crash site. In order to cover more ground, each person went out on their own.

Sentences

Standard Background Sentences

Fruit
He watched a flock of birds fly overhead and then took the coconuts he’d found back to camp.
With some passion fruit in hand, he walked over a few fallen logs and went back to camp.
He whistled to himself while walking back to camp with some papaya fruit.
Monkeys chattered off in the distance as he walked back to camp carrying plums.
He slung his bag, now full of apricots, over his shoulder and made the trip back to camp.
While bringing some guava fruit back to camp, he felt the warm sun on the back of his neck.
Carrying cantaloupes to camp, he thought about warming his hands in the fire that night.
Before heading to camp with some honeydew melons, he drank from a spring.

Meat
He stopped to tie his shoe and then continued back to the camp with the oysters he’d collected.
The sun was setting as he made his way back to camp with the wild pig he’d caught.
While returning to camp with a deer he had caught, he looked at some interesting rocks.
He watched the white clouds overhead while taking the goose he had caught back to camp.
He wiped the sweat from his brow and walked back to camp with the rabbit he had trapped.
Brushing sand from his legs, he started the journey back to camp with the mussels he had found.
He listened to his own footsteps while taking the pheasant he’d caught to camp.
He could smell the salt in the air while taking the quail he’d caught back to camp.

Vegetable
He brushed some briars off of his clothes and took the lettuce he found to camp.
He rinsed his hands and set off back towards camp with the yams he had dug up.
He watched the ocean waves while walking back to camp with the zucchini he had found.
He heard the buzzing of insects while taking the carrots he had gotten back to camp.
Taking a path back to camp with the avocados he had picked, he heard a bird call high above.
Going back to camp with the squash he collected, he noticed the island’s humidity.
He saw some flowers starting to bloom as he took the spinach he’d collected back to camp.
While walking back to camp with tomatoes he’d found, he felt the wind blowing through the island.

High Cost (Studies 6 & 7)

Fruit
After the group ate dinner, he worked all night to gather peaches for everyone.
He risked going to a dangerous part of the island to collect the kiwi fruit that grew there.
After someone else dropped oranges into a cave, he ventured into the dark alone to get them back.
After cougars had been seen by the pineapple tree, he was the only one willing to get fruit from them today.

Meat
He caught a sea bass using pieces of his watch as lures knowing that it would destroy the watch.
He ruined his shirt by using it as a harness to climb a tree and collect the cashews.
In order to catch some crabs, he waded into the shark-infested shallows off the bay.
He risked drowning by holding his breath long enough to swim to the depth where shrimp lived.

Low Cost (Study 6)

Fruit
While he was taking bananas back to camp, the wind blew his hat away and he could not find it.
A few dollar bills fell out of a hole in his pocket while he carried pears he’d found back to camp.
While picking strawberries, he cracked and broke his watch against a rock.
After setting down what he was carrying in order to drink, he took the mangos but forgot his comb and couldn’t find it later.

Meat
While bringing a lobster back to camp, he dropped his Walkman and it broke into pieces.
While taking a duck back, a bat landed on his head knocking his sunglasses over the cliff.
Carrying some snapper back, he tripped on a buried root and crushed his camera.
While he was taking honey back to camp, his keys fell from his coat into a patch of ivy.

Big Cost, Benefit to Group (Study 8)

Fruit
He risked going to a dangerous part of the island to collect the kiwi fruit that grew there and then took them to the group.
After someone else dropped oranges into a cave, he ventured into the dark alone to get them and bring them to camp.
Even after cougars had been seen by the pineapple trees, he went and got fruit from them to take back to the group.
He climbed to the top of a perilous cliff in order to gather peaches from the grove there and then brought them to camp.

Meat
He exposed himself to the hazardous waves on the rocks of the bay so he could catch sea bass to bring to the group.
In order to catch some crabs to bring back to the group, he waded into the shark-infested shallows off the bay.
To get food for the group, he risked drowning by holding his breath long enough to swim to the depth where shrimp lived.
During a windstorm, he got food for the group by climbing the highest tree on the island to collect the cashews at the top.

Big Cost, Benefit to the Self (Study 8)

Fruit
When the group finished eating food he provided, he collected strawberries for himself in an area full of dangerous bears.
After he brought food to camp, he went through a rapidly flooding valley during a rainstorm to get himself some mangos.
He brought food to the camp and then collected some bananas for himself by swinging from tree-top to tree-top in the forest.
When he was finished collecting food for the group, he scaled a tall, vertical mountain to get pears for himself.

Meat
After bringing food to the group, he collected snapper for himself to eat at the very edge of a quickly moving waterfall.
After providing some food for the group, he waded through a river full of deadly piranhas to hunt duck for himself.
After getting food to bring to camp, he swam far, far out into treacherous open waters to collect lobster for himself.
Later, after bringing food to camp, he got honey for himself by carefully moving through a dense cloud of angry bees.

Big Benefit (Studies 9 & 10)

Fruit
He risked going to a dangerous part of the island to collect the ample, ripe kiwi fruit he knew grew there and then took them to the group.
After someone else dropped a great many juicy oranges into a cave, he ventured into the dark alone to get them and bring them to camp.
Even after cougars had been seen by the pineapple trees, he went and got for the group the copious armfuls of fruit he had seen there earlier.
He noticed a large patch of peaches in the grove at the top of a perilous cliff and climbed up to gather loads to bring back for the group.
Late in the day he brought back to camp the bags and bags worth of strawberries he collected in an area full of dangerous bears.
He went through a rapidly flooding valley during a rainstorm and gathered for the group the huge tasty mangos he had seen there.
Moving from precarious tree-top to precarious tree-top, he collected for the group many bunches of yellow bananas he had seen from the ground.
He scaled the sheer face of a tall vertical mountain and gathered for the group the tons of pears he saw growing there.

Meat
Seeing many huge sea bass, he exposed himself to the hazardous waves on the rocks of the bay so he could catch them to bring to the group.
To bring back to the group the large number of crabs he could see in the bay, he waded into the bay's shark-infested shallows.
To get food for the group, he risked drowning by holding his breath long enough to swim to the depth where he saw that an enormous group of shrimp lived.
He got food for the group by climbing one the highest trees on the island to collect the pounds and pounds of cashews he saw at the top.
At the very edge of a quickly moving waterfall, he speared the twenty or thirty snapper he saw there and took them to camp.
Moving carefully as he waded through a river full of deadly piranhas, he hunted a flock of ducks and took most to the group.
Seeing numerous large lobsters there, he swam far out into treacherous open waters and collected them to bring to camp.
He managed to get many cups worth of honey for the group by carefully moving through a dense cloud of angry bees.

Small Benefit (Studies 9 & 10)

Fruit
He risked going to a dangerous part of the island to collect the few kiwi fruit he knew grew there and then took them to the group.
After someone else dropped a pair of oranges into a cave, he ventured into the dark alone to get them and bring them to camp.
Even after cougars had been seen by the pineapple trees, he went and got for the group the handful of fruit he had seen there earlier.
He noticed a few peaches in the grove at the top of a perilous cliff and climbed up to gather them to bring back for the group.
Late in the day he brought back to camp the handful of strawberries he collected in an area full of dangerous bears.
He went through a rapidly flooding valley during a rainstorm and gathered for the group the little mangos he had seen there.
Moving from precarious tree-top to precarious tree-top, he collected for the group a pair of finger-sized bananas he had seen from the ground.
He scaled the sheer face of a tall vertical mountain and gathered for the group the two or three pears he saw growing there.

Meat
Seeing a small sea bass, he exposed himself to the hazardous waves on the rocks of the bay so he could catch it to bring to the group.
To bring back to the group the few crabs he could see in the bay, he waded into the bay's shark-infested shallows.
To get food for the group, he risked drowning by holding his breath long enough to swim to the depth where he saw that a small group of shrimp lived.
He got food for the group by climbing one of the highest trees on the island to collect the few cashews he saw at the top.
At the very edge of a quickly moving waterfall, he speared the single snapper he saw there and took it to camp.
Moving carefully as he waded through a river full of deadly piranhas, he hunted a lone duck and took it to the group.
Seeing a solitary lobster there, he swam far out into treacherous open waters and collected it to bring to camp.
He managed to get a few thimbles worth of honey for the group by carefully moving through a dense cloud of angry bees.

[Unlike in the other studies, subjects did not see all sentences in Studies 9 & 10. Whether a given resource was depicted as abundant or scarce was randomly determined for each subject. Thus, twice as many diagnostic sentences were created for these studies.]

Could not find food (Study 10)

He searched all over the island but found no food to bring back.
Everyone was hungry so he tried very hard to catch some food but did not manage to.
After he heard an animal in the forest, he tracked it for several hours but did not find it again.
He spent all day trying to get to fruit trees he saw off in the distance, but they turned out to just have flowers.
He worked hard to get a beehive out of a tree only to find it abandoned and without honey.
He quietly tracked an antelope all day, but someone walking loudly through the forest scared it off.
He spent a long time making a fishing net, but an unusually strong current tore it before he caught anything.
While climbing a tall palm to get coconuts, he slipped and twisted his ankle and couldn't search for food the rest of the day.
After searching the entire day all through the deep ravine, he still came back empty handed.
He scoured the underbrush of a large glen, hoping to find food, but found nothing instead.
A flock of birds made noise in a pond ahead, but flew away before he could catch one to bring back.
After a long walk, he came to a fruit grove, but the trees were long dead and had no fruit.
Although he thought that taking a less traveled route might lead to some food, he found nothing.
He combed through the tall grass for anything edible but found nothing to take back to camp.
He fished in the river all day, walking up and down it, but the fish were just not biting.
After he heard an animal in the forest, he tracked it for several hours but did not find it again.
He spent the day hiking to a place where someone else had seen fruit earlier, but it was gone.
Out of all the shells on the beach, he could find none that still had a crab in them.
Despite swimming all over the lagoon, he could not find any fish for the group.
No matter where he went, no matter where he looked, he could not find any food for the group.
He followed a winding stream, hoping to catch animals drinking from it, but none were there today.
He checked the trunks of trees looking for edible mushrooms; unfortunately, animals had already eaten them.
Someone thought edible tubers might be in the ground nearby, but no matter where he dug, he could not find any.
He eventually went back to camp empty-handed after many hours climbing trees looking for bananas.

Questions

Study 1

Does this person deserve to be punished for his actions on the island?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

Does this person deserve to be rewarded for his actions on the island?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

Would you be willing to have this person on your "team"?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

To what extent is this person trustworthy?
1 2 3 4 5 6 7 (1 = Not At All Trustworthy, 7 = Very Trustworthy)

To what extent is this person competent?
1 2 3 4 5 6 7 (1 = Not At All Competent, 7 = Very Competent)

To what extent is this person likeable?
1 2 3 4 5 6 7 (1 = Not At All Likeable, 7 = Very Likeable)

To what extent is this person altruistic?
1 2 3 4 5 6 7 (1 = Not At All Altruistic, 7 = Very Altruistic)

To what extent is this person selfish?
1 2 3 4 5 6 7 (1 = Not At All Selfish, 7 = Very Selfish)

How much do the other members of the group respect him?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

How much do the other members of the group want him to be a leader?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

How much does he care about the group?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

How much effort did this person put into getting food for the group?
1 2 3 4 5 6 7 (1 = Very Little, 7 = Very Much)

Sometimes when people went out searching for food, they ended up paying a cost such as putting themselves in danger or losing personal items. When this happened to the person above, to what extent did he end up paying the cost on purpose?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

Studies 2-5

How much does the person on the left care about the group as a whole? [pictures were shown]
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

How altruistic does the person on the left feel towards the group as a whole? [pictures were shown]
1 2 3 4 5 6 7 (1 = Not At All Altruistic, 7 = Very Altruistic)

Would you be willing to work with this person in a group?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

Would you be willing to work with this person one on one?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)

To what extent is this person trustworthy?
1 2 3 4 5 6 7 (1 = Not At All Trustworthy, 7 = Very Trustworthy)

To what extent is this person competent?
1 2 3 4 5 6 7 (1 = Not At All Competent, 7 = Very Competent)

To what extent is this person likeable?
1 2 3 4 5 6 7 (1 = Not At All Likeable, 7 = Very Likeable)

To what extent is this person willing to provide for the group?
1 2 3 4 5 6 7 (1 = Not At All Willing, 7 = Very Willing)

How much effort did this person put into getting food for the group?
1 2 3 4 5 6 7 (1 = Very Little, 7 = Very Much)

How much do the other members of the group respect him?
1 2 3 4 5 6 7 (1 = Not At All, 7 = Very Much)