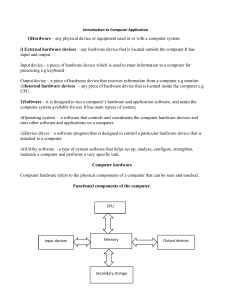

1/1 What is Operating System ? write advantages and disadvantage for the same ? 1/1 An Operating System (OS) is a software that manages computer hardware and software resources and provides common services for computer programs. It acts as an interface between the computer hardware and software, enabling applications to run on the computer. Advantages of Operating System: 1. Resource Management: The OS manages computer resources such as the CPU, memory, and storage devices, ensuring that each application receives the necessary resources to run efficiently. 2. Multi-tasking: The OS allows multiple applications to run simultaneously, allowing users to switch between programs and perform multiple tasks at once. 3. Security: The OS provides security features such as user authentication, access control, and encryption to protect sensitive data and prevent unauthorized access. 4. Device Drivers: The OS includes device drivers that enable the computer to communicate with hardware devices such as printers, scanners, and cameras. 5. User Interface: The OS provides a graphical user interface (GUI) that makes it easy for users to interact with the computer and its applications. Disadvantages of Operating System: 1. Cost: Operating systems can be expensive to purchase, especially if they are proprietary systems. 2. Complexity: Operating systems can be complex to use and configure, requiring users to have a certain level of technical knowledge. 3. Compatibility Issues: Applications may not be compatible with certain operating systems, requiring users to use different operating systems for different applications. 4. System Requirements: Some operating systems require powerful hardware to run efficiently, making them difficult to use on older computers. 5. Security Vulnerabilities: Operating systems can have security vulnerabilities that can be exploited by hackers to gain unauthorized access to computer systems or steal sensitive data. 1/1 Explain Computer System architecture. and refer a diagram link for the same 1/1 Computer system architecture refers to the structure or organization of a computer system, including its hardware components and how they are interconnected. It includes the design of the central processing unit (CPU), memory, input/output devices, and the communication channels that connect these components. A typical computer system architecture comprises several layers, including the following: 1. Hardware Layer: This layer includes the physical components of the computer system, such as the CPU, memory, input/output devices, and communication channels. 2. Operating System Layer: This layer includes the software that manages the computer system’s resources, such as the CPU, memory, and input/output devices. The operating system also provides a user interface for interacting with the computer system. 3. Application Layer: This layer includes the software applications that run on the computer system, such as word processors, web browsers, and media players. 4. User Layer: This layer includes the users who interact with the computer system, using the operating system and applications to perform tasks. Here’s a link to a diagram that illustrates a typical computer system architecture: https://en.wikipedia.org/wiki/File:Computer_System_Architecture.svg This diagram shows the various layers of a computer system architecture, including the hardware layer, operating system layer, application layer, and user layer. It also shows the communication channels that connect the various components of the computer system. 1/1 Explain Interrupt handling , Explain the general function of interrupt. 1/1 Interrupt handling is a process that occurs when a computer system receives an interrupt signal from a hardware device or a software program. An interrupt is a signal that temporarily stops the normal execution of a program and transfers control to a specific interrupt handler routine, which is designed to handle the interrupt request. The general function of an interrupt is to notify the computer system that an event has occurred that requires immediate attention, such as an input/output operation or a hardware malfunction. When an interrupt occurs, the computer system temporarily stops the current program’s execution and transfers control to the interrupt handler routine. The interrupt handler routine performs 1/1 next? 1/1 The interrupt handler routine performs specific actions to handle the interrupt request. The actions taken by the handler routine depend on the type of interrupt, the hardware device or software program generating the interrupt, and the specific requirements of the interrupt handler. For example, an interrupt generated by an input/output device may require the handler routine to read data from the device or write data to the device. An interrupt generated by a software program may require the handler routine to perform specific actions, such as updating the system clock or responding to user input. Once the interrupt handler routine has completed its task, control is returned to the original program, and it resumes execution from the point where it was interrupted. Interrupt handling is critical for real-time systems where immediate response to events is necessary. In summary, interrupt handling is a crucial mechanism in computer systems that allows hardware devices and software programs to communicate with the operating system and other applications efficiently. Interrupts provide a way for the computer system to handle events that require immediate attention, enabling the system to operate smoothly and efficiently. 1/1 Explain computer system organization in detail. 1/1 Computer system organization refers to the arrangement of components that make up a computer system and how they work together to perform various tasks. It includes hardware components such as the CPU, memory, input/output devices, and communication channels, as well as software components such as the operating system and applications. Here’s a detailed explanation of each component: 1. Central Processing Unit (CPU): The CPU is the “brain” of the computer system that performs arithmetic and logical operations and controls the flow of data between different parts of the system. It consists of two main components: the control unit and the arithmetic logic unit. 2. Memory: Memory is used to store data and instructions that are used by the CPU. There are two main types of memory: primary memory (or RAM) and secondary memory (or storage devices such as hard drives, solid-state drives, and optical drives). 3. Input/Output (I/O) Devices: I/O devices allow users to interact with the computer system by inputting data (such as a keyboard or mouse) or outputting data (such as a monitor or printer). I/O devices can be either internal (such as a hard drive) or external (such as a USB drive). 4. Communication Channels: Communication channels are used to transfer data and instructions between different parts of the computer system. These channels can be either wired (such as Ethernet or USB) or wireless (such as Wi-Fi or Bluetooth). 5. Operating System (OS): The OS manages the computer system’s resources, including the CPU, memory, and I/O devices. It also provides a user interface for interacting with the computer system and runs applications. 6. Applications: Applications are software programs that run on the computer system to perform specific tasks, such as word processing or web browsing. Overall, the organization of a computer system is critical to its efficient operation. The components must work together seamlessly to provide a fast, reliable, and user-friendly experience. Understanding the organization of a computer system is essential for system administrators, developers, and users to optimize its performance and troubleshoot any issues that may arise. 1/1 Explain the storage structure and represent the storage hierarchical structure. 1/1 The storage structure of a computer system refers to the various types of storage devices that are used to store data and program code. These storage devices are organized into a hierarchical structure based on their speed, capacity, and cost. The storage hierarchy can be divided into several levels, each of which has different characteristics and uses. The levels of the storage hierarchy are as follows: 1. Register: Registers are the fastest type of storage and are located inside the CPU. They are used to hold data and instructions that the CPU needs to access quickly. Registers are small in size and have a very limited capacity, typically a few bytes. 2. Cache: Cache is a type of memory that is used to store frequently used data and instructions. Cache is located close to the CPU and has a faster access time than RAM. There are typically several levels of cache, with each level increasing in size and decreasing in speed. 3. Random Access Memory (RAM): RAM is the main memory of a computer system and is used to store data and instructions that are currently being used by the CPU. RAM has a larger capacity than cache but is slower. 4. Solid-State Drives (SSDs): SSDs are a type of non-volatile storage device that is used to store data and program code. They are faster than traditional hard disk drives (HDDs) but have a smaller capacity and are more expensive. 5. Hard Disk Drives (HDDs): HDDs are a type of non-volatile storage device that is used to store data and program code. They have a larger capacity than SSDs but are slower and less reliable. 6. Optical Drives: Optical drives are used to read and write data to optical discs such as CDs, DVDs, and Blu-ray discs. They have a slower access time and lower capacity than SSDs and HDDs. 7. Magnetic Tape: Magnetic tape is a type of storage device that is used for long-term data storage. It has a very large capacity but is slow and has a low access speed. The storage hierarchy is organized in such a way that the fastest and most expensive storage devices are used for the most critical and frequently used data and instructions, while the slower and cheaper devices are used for less frequently accessed data and program code. Here’s a diagram that represents the storage hierarchical structure: markdown ___________________________________ | Register | |___________________________________| | Cache | |___________________________________| | RAM | |___________________________________| | SSD / HDD | |___________________________________| | Optical Drives | |___________________________________| | Magnetic Tape | |___________________________________| In summary, the storage structure of a computer system is organized into a hierarchical structure based on the speed, capacity, and cost of different storage devices. This hierarchy ensures that the most critical and frequently used data and instructions are stored in the fastest and most expensive storage devices, while less frequently accessed data and program code are stored in slower and cheaper devices. 1/1 Explain Bootstrap Programming. 1/1 Bootstrap programming is a process that is used to initialize a computer system when it is first turned on. It involves loading the operating system into memory and preparing the system to run applications. The bootstrap process typically involves several steps: 1. Power-On Self-Test (POST): When the computer is turned on, the BIOS (Basic Input/Output System) performs a self-test to ensure that all the hardware components are working correctly. If any issues are detected, an error message is displayed. 2. Boot Loader: After the POST is completed successfully, the BIOS looks for a boot loader program in the boot sector of the hard drive. The boot loader is responsible for loading the operating system into memory. 3. Operating System Initialization: Once the boot loader has loaded the operating system into memory, the operating system initializes the system components such as the CPU, memory, and I/O devices. The operating system also starts any necessary system services and loads device drivers for any hardware devices that are connected to the system. 4. User Login: Finally, the operating system presents the user with a login screen, allowing them to log in and begin using the system. The bootstrap process is critical to the operation of a computer system because it sets up the system for use by loading the operating system and initializing system components. Without the bootstrap process, the computer would not be able to run applications or perform any useful tasks. In summary, bootstrap programming is the process of initializing a computer system by loading the operating system into memory and preparing the system to run applications. This process is critical to the operation of a computer system and ensures that the system is ready for use by the user. 1/1 Explain the storage hierarchy and explain storage devices (Magnetic Disk / Optical disk /SDD/IO Structure, etc ) 1/1 The storage hierarchy is a structure that organizes various types of storage devices based on their speed, capacity, and cost. The hierarchy typically includes several levels, each with different characteristics and uses. Here is a brief explanation of each level of the storage hierarchy and the storage devices associated with them: 1. Registers: Registers are the fastest type of storage and are located inside the CPU. They are used to hold data and instructions that the CPU needs to access quickly. Registers are small in size and have a very limited capacity, typically a few bytes. 2. Cache: Cache is a type 1/1 next? 1/1 My apologies for the incomplete answer. Here is the complete answer: The storage hierarchy is a structure that organizes various types of storage devices based on their speed, capacity, and cost. The hierarchy typically includes several levels, each with different characteristics and uses. Here is a brief explanation of each level of the storage hierarchy and the storage devices associated with them: 1. Registers: Registers are the fastest type of storage and are located inside the CPU. They are used to hold data and instructions that the CPU needs to access quickly. Registers are small in size and have a very limited capacity, typically a few bytes. 2. Cache: Cache is a type of high-speed memory that is used to store frequently accessed data and instructions. It is located close to the CPU and is much faster than main memory. There are typically two levels of cache: L1 and L2. 3. Main Memory (RAM): Main memory, also known as Random Access Memory (RAM), is used to store data and instructions that the CPU is currently working with. It is relatively fast and has a higher capacity than registers and cache. RAM is volatile, which means that its contents are lost when the power is turned off. 4. Magnetic Disks: Magnetic disks, also known as hard disks or hard drives, are the most common type of secondary storage. They use magnetic storage to store data and are relatively inexpensive and offer large storage capacity. Magnetic disks are slower than main memory and have higher access times. 5. Optical Disks: Optical disks, such as CDs, DVDs, and Blu-ray discs, use optical storage to store data. They have a higher capacity than magnetic disks, but are slower and have a higher access time. 6. Solid State Drives (SSDs): SSDs are a type of storage that uses flash memory to store data. They are faster and more reliable than magnetic disks, but are more expensive and have a lower capacity. 7. Tape: Tape storage is a type of secondary storage that uses magnetic tape to store data. It is relatively inexpensive and has a very high storage capacity, but is slow and has a high access time. The choice of storage device depends on the application’s requirements for speed, capacity, and cost. For example, registers are used to hold frequently accessed data and instructions, while magnetic disks are used for storing large amounts of data that do not need to be accessed frequently. Optical disks and tape are commonly used for backup and archival purposes. The input/output (I/O) structure is the interface between the CPU and the storage devices. It includes the controller, which manages the communication between the CPU and the storage device, and the bus, which is used to transfer data between the CPU and the storage device. The I/O structure also includes device drivers, which are software programs that enable the operating system to communicate with the storage devices. The performance of the I/O structure is critical to the overall performance of the system, as slow or inefficient I/O can cause the CPU to wait for data and reduce system performance. 1/1 Explain the working of modern computers. 1/1 Modern computers are based on the von Neumann architecture, which is a theoretical design for a computer that was proposed by John von Neumann in the late 1940s. The von Neumann architecture consists of four main components: the CPU (Central Processing Unit), memory, input/output devices, and a bus that connects them. Here’s a brief overview of how modern computers work: 1. Input: The input devices, such as a keyboard, mouse, or microphone, are used to enter data into the computer. This data is converted into binary code, which can be understood by the computer. 2. Storage: The data entered by the user is temporarily stored in memory, which is also known as RAM (Random Access Memory). RAM is a volatile storage, meaning that its contents are lost when the power is turned off. For long-term storage, the data is saved to a non-volatile storage device, such as a hard drive or solid-state drive. 3. Processing: The CPU, which is often referred to as the “brain” of the computer, performs calculations and executes instructions. The CPU retrieves data from memory, performs operations on it, and then stores the results back in memory. 4. Output: The output devices, such as a monitor, printer, or speakers, are used to display or output the results of the processing. 5. Operating System: The operating system manages the resources of the computer and provides an interface between the user and the hardware. The operating system handles tasks such as memory management, file management, and process management. 6. Applications: Applications, such as word processors, web browsers, or video editors, are software programs that run on top of the operating system. These applications perform specific tasks or provide specific functionality to the user. The above process is known as the “fetch-decode-execute” cycle. The CPU retrieves an instruction from memory (fetch), decodes the instruction to determine what operation to perform (decode), performs the operation (execute), and then stores the result back in memory (writeback). This cycle is repeated for each instruction in the program until the program is completed. Overall, modern computers are highly complex machines that rely on a combination of hardware and software to perform a wide range of tasks. Advances in technology have led to smaller, faster, and more powerful computers that can handle increasingly complex tasks. 1/1 Explain the transition from user mode to kernel mode 1/1 In a computer system, the CPU operates in two modes: user mode and kernel mode. User mode is the mode in which most programs run, while kernel mode is the mode in which the operating system and device drivers run. When a program runs in user mode, it has limited access to system resources and cannot directly access hardware devices. If a program needs to access a system resource or hardware device, it must request the operating system to do so on its behalf. The operating system will then switch the CPU to kernel mode to perform the requested operation. The transition from user mode to kernel mode is initiated by an interrupt or a system call. An interrupt is a signal sent to the CPU by a hardware device, such as a keyboard or mouse, to request attention from the operating system. A system call is a request made by a program to the operating system for a service, such as file access or memory allocation. When an interrupt or system call occurs, the CPU saves the current state of the program, including the instruction pointer and the program stack, and switches to kernel mode. The operating system then takes over and performs the requested operation. Once the operation is completed, the CPU returns to user mode and resumes the program from where it left off. The transition from user mode to kernel mode is a critical operation that must be carefully controlled to ensure the security and stability of the system. If an unauthorized program is able to enter kernel mode, it could potentially access or modify sensitive system resources, leading to system instability or security breaches. To prevent unauthorized access to kernel mode, modern operating systems use a variety of security mechanisms, such as memory protection, privilege separation, and access control. 1/1 Explain the clustered system with the suitable diagrammatical representation. 1/1 A clustered system is a type of computer system in which multiple computers, called nodes, work together to perform a common task. The nodes are connected to each other through a high-speed network and share their resources, such as processors, memory, and storage, to provide a scalable and fault-tolerant computing environment. Here is a diagrammatic representation of a clustered system: lua +---------------------+ | Load | | Balancer | +-----------+---------+ | | +-------+-------+ | Node 1 | | | | +---------+ | | | Shared | | | | Storage | | | +---------+ | +-------+-------+ | | +-------+-------+ | Node 2 | | | | +---------+ | | | Shared | | | | Storage | | | +---------+ | +-------+-------+ | | ... ... | | +-------+-------+ | Node n | | | | +---------+ | | | Shared | | | | Storage | | | +---------+ | +---------------+ In a clustered system, the load balancer distributes the workload among the nodes, ensuring that each node receives an equal share of the workload. The load balancer monitors the performance of each node and redirects traffic to the most available and least busy node. Each node in the cluster contains its own set of processors, memory, and local storage. The nodes also share a common storage pool, called the shared storage, which is accessible by all nodes in the cluster. The shared storage can be implemented using a variety of technologies, such as a storage area network (SAN) or a network-attached storage (NAS). The nodes communicate with each other through a high-speed network, such as a local area network (LAN) or a fiber-optic network. The network provides a fast and reliable communication channel between the nodes, allowing them to exchange data and coordinate their activities. In a clustered system, if one node fails, the load balancer redirects the workload to the remaining nodes, ensuring that the system remains available and responsive. The use of redundant components, such as power supplies and network adapters, further enhances the fault tolerance of the system. Overall, a clustered system provides a highly scalable, fault-tolerant, and high-performance computing environment suitable for a wide range of applications, such as web hosting, data processing, and scientific simulations. 1/1 Explain the distributed computer system 1/1 A distributed computer system is a collection of independent computers that work together as a unified system. The computers in a distributed system communicate and coordinate their activities through a network, allowing them to share resources and collaborate on tasks. In a distributed system, the processing power, memory, and storage are distributed across multiple computers, known as nodes, which may be located in different geographical locations. The nodes communicate with each other through a communication network, such as a local area network (LAN) or the internet. A distributed system can be classified into two types: client-server and peer-to-peer. In a client-server system, one or more servers provide services to one or more clients. The server is responsible for providing resources or services, such as file storage or database management, to the clients, which are typically desktop or mobile devices. The clients request services from the server and receive the results back. The server may have a centralized database or file system, which is accessed by the clients through the network. In a peer-to-peer system, all nodes are equal and can act as both clients and servers. Each node can share its resources, such as processing power and storage, with other nodes in the network. The nodes in a peer-to-peer system communicate directly with each other without the need for a central server. Peer-to-peer systems are often used for file sharing and collaborative computing. Distributed systems have several advantages over centralized systems, including scalability, fault tolerance, and cost-effectiveness. Distributed systems can scale to handle large amounts of data and traffic by adding more nodes to the network. They are also faulttolerant, as the failure of one node does not necessarily affect the entire system. Finally, distributed systems can be more cost-effective than centralized systems, as they can use existing hardware and resources instead of requiring dedicated servers or mainframes. However, distributed systems also have some challenges, such as ensuring consistency and security across multiple nodes, managing data replication and synchronization, and dealing with network latency and bandwidth limitations. To overcome these challenges, distributed systems often use sophisticated algorithms and protocols, such as consensus algorithms, distributed locking, and replication techniques. 1/1 Explain the peer-to-peer and client-server computing environment . 1/1 A computing environment refers to the way in which multiple computers or devices are organized and work together to achieve a common goal. There are two common types of computing environments: peer-to-peer and client-server. In a peer-to-peer computing environment, all devices or nodes on the network are equal and can act as both clients and servers. Each device can share its resources, such as processing power, memory, and storage, with other devices in the network. The nodes in a peer-to-peer system communicate directly with each other without the need for a central server. Peer-to-peer systems are often used for file sharing, collaborative computing, and gaming. One advantage of a peer-to-peer environment is that it is easy to set up and does not require a central server. Each node can share its resources with other nodes on the network, which can lead to increased efficiency and performance. However, peer-to-peer systems can be more difficult to secure, as each node has access to the resources of other nodes on the network. Additionally, peer-to-peer systems can suffer from scalability issues, as the network can become slow and overloaded as more nodes are added. In a client-server computing environment, there is a central server that provides services or resources to one or more clients. The server is responsible for providing resources or services, such as file storage, database management, or application processing, to the clients, which are typically desktop or mobile devices. The clients request services from the server and receive the results back. The server may have a centralized database or file system, which is accessed by the clients through the network. One advantage of a client-server environment is that it is easier to manage and secure. The central server can control access to resources and data, and can enforce security policies and protocols. Additionally, client-server environments can be more scalable, as the server can handle requests from multiple clients simultaneously. However, client-server environments can be more expensive to set up and maintain, as they require dedicated hardware and software for the server. 1/1 Explain operating system Structure. 1/1 The structure of an operating system (OS) refers to the way in which the various components and modules of the OS are organized and interact with each other. There are several different structures for operating systems, but one common structure is the layered structure. The layered structure of an operating system is divided into several layers or levels, each of which is responsible for a different aspect of the system’s functionality. The layers are arranged in a hierarchical fashion, with the lower layers providing services to the higher layers. The following are the different layers in a typical layered operating system: 1. Hardware layer: This is the lowest layer in the system, and it is responsible for interacting directly with the computer hardware, including the CPU, memory, and input/output devices. 2. Kernel layer: This layer is responsible for managing the system’s resources, including memory, CPU, and input/output operations. The kernel also provides a bridge between the hardware layer and the other layers of the system. 3. Device driver layer: This layer provides the interface between the kernel layer and the various input/output devices, such as printers, keyboards, and mice. 4. System call layer: This layer provides an interface between user programs and the kernel layer. User programs can use system calls to request services from the kernel layer. 5. Library layer: This layer contains a set of libraries that are used by user programs to access system resources. 6. Application layer: This layer contains the user programs that interact with the system. Each layer in the system communicates with the layers above and below it, passing information and requests as needed. The layered structure of an operating system allows for a modular design, with each layer responsible for a specific set of tasks. This makes the system easier to maintain and modify, as changes can be made to individual layers without affecting the rest of the system. 1/1 Explain operating system operations 1/1 Operating systems perform a variety of operations to manage computer resources and provide a platform for running applications. The following are some of the key operations performed by operating systems: 1. Process Management: The operating system manages the execution of processes (programs in execution) by allocating system resources such as CPU time, memory, and I/O devices. It schedules processes, manages process communication and synchronization, and handles process termination. 2. Memory Management: The operating system manages the allocation and deallocation of memory to processes, manages virtual memory, and ensures that processes can access the memory they require. It also handles memory protection, which prevents one process from accessing or modifying the memory of another process. 3. File Management: The operating system manages the creation, deletion, and organization of files, as well as their access and modification by users and applications. It also handles file system security, ensuring that users and applications can only access files they are authorized to access. 4. Device Management: The operating system manages the allocation and deallocation of system resources, such as input/output (I/O) devices like printers and keyboards, and ensures that they are used efficiently by applications. 5. Security Management: The operating system provides security mechanisms to protect the system and its data from unauthorized access, viruses, and other threats. It enforces security policies and provides authentication and authorization mechanisms to control access to system resources. 6. Networking Management: The operating system manages network connections and communication between computers on a network. It provides protocols for data transfer, manages network resources, and ensures network security. 7. User Interface Management: The operating system provides a user interface for users to interact with the system and its applications. It manages the display of information, handles user input, and provides mechanisms for users to interact with running applications. Operating system operations are essential for managing computer resources and ensuring that applications can run efficiently and securely. By performing these operations, the operating system provides a stable and reliable platform for users and applications to operate on. 1/1 What is process management and explain the process management activities. 1/1 Process management is a key operation performed by operating systems, which involves managing the execution of processes or programs in a computer system. A process is an instance of a program in execution, and process management involves allocating system resources, scheduling processes, managing communication and synchronization between processes, and handling process termination. The following are the main activities involved in process management: 1. Process Creation: When a user starts a program, the operating system creates a process to execute that program. This involves allocating resources such as memory, CPU time, and I/O devices to the process. 2. Process Scheduling: The operating system schedules processes to run on the CPU, based on various scheduling algorithms. 1/1 next? 1/1 3. Process Communication and Synchronization: Processes may need to communicate with each other or synchronize their activities to ensure correct operation. The operating system provides mechanisms for inter-process communication (IPC) and synchronization, such as message passing, shared memory, semaphores, and mutexes. 4. Process Termination: When a process completes its execution or is terminated by the user or the operating system, the operating system releases the resources allocated to the process and removes it from the system. This involves deallocating memory, closing files, and releasing other resources held by the process. 5. Process State Management: The operating system tracks the state of each process, including its status (running, ready, blocked), its priority, and its resource usage. This information is used by the process scheduler to determine which processes to run on the CPU. 6. Process Control: The operating system provides mechanisms for controlling the behavior of processes, such as setting priorities, suspending or resuming processes, and limiting resource usage. 7. Process Monitoring and Debugging: The operating system provides tools for monitoring and debugging processes, such as system monitors, performance analyzers, and debuggers. Effective process management is critical for efficient use of system resources and for ensuring that applications operate correctly and securely. By providing mechanisms for process creation, scheduling, communication, synchronization, termination, state management, control, and monitoring, operating systems enable users and applications to run efficiently on computer systems. 1/1 Explain virtualization and services of virtualization 1/1 Virtualization is the creation of a virtual version of something, such as a computer hardware platform, an operating system, a storage device, or a network resource. It allows multiple virtual environments to run on a single physical computer system, enabling efficient use of resources, improved scalability, and better isolation of resources. The services of virtualization can be categorized into three main types: 1. Server Virtualization: Server virtualization involves creating multiple virtual machines (VMs) on a single physical server, each with its own operating system and applications. This enables efficient use of server resources, improves scalability, and provides high availability and disaster recovery capabilities. 2. Desktop Virtualization: Desktop virtualization allows multiple desktop environments to run on a single physical computer system, enabling users to access their desktops from anywhere and on any device. It provides better security, easier management, and improved flexibility and mobility for users. 3. Application Virtualization: Application virtualization involves creating a virtualized environment for individual applications, enabling them to run independently of the underlying operating system and hardware. This allows applications to be installed and run on any device without requiring specific dependencies or configurations. The benefits of virtualization include improved efficiency, scalability, flexibility, and costeffectiveness. It allows multiple operating systems and applications to run on a single physical system, reducing hardware costs, simplifying management, and improving resource utilization. It also provides better security and availability by enabling rapid deployment of backup and recovery solutions, and easier management of software updates and patches. 1/1 Explain the operating system services 1/1 Operating system services are the functionalities provided by the operating system to its users and applications. These services are essential for managing system resources, executing programs, and facilitating communication and data exchange between system components. The following are some of the key operating system services: 1. Process Management: The operating system provides services for creating, managing, and terminating processes. This includes managing process scheduling, synchronization, and communication between processes. 2. Memory Management: The operating system manages the allocation of memory resources to running processes, and ensures that each process has access to the memory it needs. This includes managing virtual memory, which allows programs to use more memory than is physically available. 3. File Management: The operating system provides services for managing files and directories 1/1 next? 1/1 4. Device Management: The operating system manages input/output (I/O) devices, such as keyboards, printers, and network adapters, and provides services for accessing and controlling these devices. 5. Security Management: The operating system provides services for managing system security, such as user authentication, access control, and data encryption. 6. Networking: The operating system provides services for managing network resources, such as network connections and protocols, and facilitates communication between systems. 7. System Monitoring and Management: The operating system provides services for monitoring system performance, managing system configurations, and diagnosing and resolving system errors and problems. 8. User Interface: The operating system provides a user interface for users and applications to interact with the system, including graphical user interfaces (GUIs), command-line interfaces (CLIs), and application programming interfaces (APIs). These services are essential for the effective and efficient operation of a computer system. By providing these services, the operating system enables users and applications to interact with the system, manage resources, and perform tasks. The specific services provided by an operating system can vary depending on the system architecture, hardware, and software requirements. 1/1 What is system call , Explain the types of system calls. 1/1 A system call is a mechanism provided by the operating system that enables applications to request services from the operating system kernel. These services may include accessing hardware devices, managing memory, managing files, and other operations that require privileged access to system resources. When an application makes a system call, it transfers control to the operating system kernel, which performs the requested operation and returns control back to the application. There are three main types of system calls: 1. Process Control System Calls: These system calls are used to create, manage, and terminate processes. Examples of process control system calls include fork(), exec(), wait(), and exit(). 2. File Management System Calls: These system calls are used to manage files and directories. Examples of file management system calls include open(), close(), read(), write(), and stat(). 3. Device Management System Calls: These system calls are used to manage input/output (I/O) devices, such as keyboards, printers, and network adapters. Examples of device management system calls include read(), write(), ioctl(), and select(). In addition to these main types, there are also other system calls that provide additional services, such as memory management, networking, and security. These system calls are essential for enabling applications to interact with the system and perform tasks that would not be possible without privileged access to system resources. 1/1 What are system program , Explain the same in detail. 1/1 System programs are software programs that are designed to interact with the operating system and provide services and functionalities to users and applications. These programs are an integral part of the operating system, and they are typically included with the operating system distribution. There are many different types of system programs, each with its own specific purpose and functionality. Some examples of system programs include: 1. Shell Programs: Shell programs are command-line interfaces that allow users to interact with the operating system by entering commands and receiving responses. Examples of shell programs include Bash, PowerShell, and Command Prompt. 2. Text Editors: Text editors are programs that allow users to create and modify text files. Examples of text editors include Vi, Emacs, and Notepad. 3. Compilers: Compilers are programs that translate high-level programming code into machine code that can be executed by the computer. Examples of compilers include GCC, Clang, and Visual Studio. 4. Debuggers: Debuggers are programs that allow developers to find and fix errors in their code. Examples of debuggers include gdb, lldb, and Visual Studio Debugger. 5. Device Drivers: Device drivers are programs that enable the operating system to communicate with hardware devices, such as printers, scanners, and network adapters. 6. System Utilities: System utilities are programs that perform system-level tasks, such as disk formatting, backup and recovery, and system optimization. Examples of system utilities include Disk Cleanup, Task Manager, and Disk Defragmenter. System programs are essential for the effective and efficient operation of a computer system. They provide users and applications with the tools and functionalities they need to interact with the system, manage resources, and perform tasks. The specific system programs included with an operating system can vary depending on the system architecture, hardware, and software requirements. 1/1 Explain the operating system structure (MS-DOS / Monolithic Structure / Traditional unix structure / Layered approach / Microkernel structure / Solar module appraoch) 1/1 There are several different operating system structures, each with its own advantages and disadvantages. Here are some of the most common operating system structures: 1. MS-DOS: MS-DOS is a classic example of a simple operating system structure. It uses a monolithic kernel, which means that all operating system functionality is contained in a single executable file. This approach makes the operating system relatively fast and efficient, but it also makes it difficult to modify or extend. 2. Monolithic Structure: The monolithic structure is similar to MS-DOS, but it separates the operating system into different modules that can be loaded and unloaded as needed. This approach allows for greater flexibility and easier modification, but it can also make the operating system slower and more complex. 3. Traditional Unix Structure: The traditional Unix structure uses a layered approach, with each layer providing a specific set of services and functionalities. The kernel provides the core operating system services, while other layers provide services such as device drivers, file systems, and networking protocols. This approach allows for greater modularity and flexibility, but it can also make the operating system more complex and difficult to manage. 4. Layered Approach: The layered approach is similar to the traditional Unix structure, but it uses a more strict layering model. Each layer provides a specific set of services, and higher layers can only access services provided by lower layers. This approach provides greater security and modularity, but it can also make the operating system slower and more complex. 5. Microkernel Structure: The microkernel structure separates the operating system into a small, highly-optimized kernel that provides only the most basic services, such as process scheduling and inter-process communication. Other services, such as file systems, networking, and device drivers, are implemented as separate processes that communicate with the kernel using standardized interfaces. This approach provides greater flexibility, security, and modularity, but it can also make the operating system slower and more complex. 6. Solar Module Approach: The Solar module approach is a variation of the microkernel structure that is used in the Solaris operating system. It separates the operating system into a small, highly-optimized kernel and a set of dynamically loadable modules that provide additional services and functionalities. This approach provides greater flexibility, modularity, and scalability, and it allows for easier customization and extension of the operating system. Overall, the choice of operating system structure depends on the specific requirements and constraints of the system being designed. Each structure has its own advantages and disadvantages, and designers must carefully consider these trade-offs when selecting an operating system structure. 1/1 draw diagram or provide diagram links for all structures 1/1 Here are some example diagrams for each of the operating system structures I mentioned: 1. MS-DOS: https://i.imgur.com/9YgDjyS.png 2. Monolithic Structure: https://i.imgur.com/flyhx2M.png 3. Traditional Unix Structure: https://i.imgur.com/XrFp1cx.png 4. Layered Approach: https://i.imgur.com/3chKLXg.png 5. Microkernel Structure: https://i.imgur.com/kzWXZnE.png 6. Solar Module Approach: https://i.imgur.com/ZZrGfoT.png Note that these are just example diagrams and the actual structure of an operating system can vary depending on the specific implementation. 1/1 Explain the difference between the threads and process 1/1 In an operating system, a process is an instance of a program that is being executed, while a thread is a subunit within a process that can be scheduled and executed independently. Here are some key differences between processes and threads: 1. Resource allocation: A process has its own memory space, file descriptors, and other resources allocated by the operating system, while threads share the same memory space and file descriptors as the parent process. 2. Communication: Inter-process communication (IPC) is required to communicate between different processes, while threads can communicate through shared memory or other shared resources. 3. Scheduling: Processes are scheduled independently by the operating system scheduler, while threads within a process share the same scheduling context and are scheduled by the thread scheduler. 4. Overhead: Creating a new process requires a lot of overhead in terms of memory and other resources, while creating a new thread is relatively lightweight and requires less overhead. 5. Parallelism: Multiple processes can run in parallel on a multi-core system, while threads within a single process can be run in parallel. Overall, processes and threads are both useful abstractions for organizing and executing code in an operating system, and the choice between them depends on the specific requirements of the application being developed.