A Beginning Course

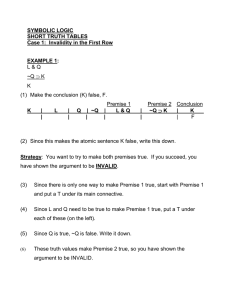

In Modern Logic

Michael B. Kac

University of Minnesota

Contents

A Note to the Student

Introduction

Part I Getting Ready

1 Knowledge and Reasoning

2 Statements, Truth and Validity

3 Logic and Language

Part II Sentential Logic

4 Negation and Conjunction

5 Arguments Involving Negation and Conjunction

6 Conditionals, Biconditionals and Disjunction

7 Deduction in Sentential Logic

8 Indirect Validation

9 Lemmas

10 Truth Tables

11 Validity Revisited

12 Logical Relations

13 Symbolization

14 Evaluation of Natural Arguments

Part III Predicate Logic

15 Individuals and Properties

16 Quantification in Predicate Logic


17 Deduction With Quantifiers

18 Relations and Polyadic Predication

Part IV Further Topics

19 Logic and Switching Circuits

20 Sets and Set Algebra

21 Sentential Logic as Set Algebra

22 Summation and Epilogue

Appendix 1 Rules and Principles of Deductive Logic

Appendix 2 Solutions to Starred Problems


Acknowledgement

I would like to express my thanks to Rocco Altier, Jan Binder, and Jeffrey Kempenich, all of

whom have helped to make this text better than it was before their intervention; whatever

shortcomings remain are my fault, not theirs.

A Note to the Student

This course is likely to be different in a number of ways from others you have taken. One major

difference is that whereas in many courses your main job is to memorize information and then

regurgitate it at exam time, in this one the principal emphasis is on analysis and reasoning. There

are, to be sure, certain things you’ll have to learn and keep in memory for future use but the

emphasis is elsewhere. You will be frequently called upon to apply what you’ve learned to

situations you have not previously encountered and to use your own ingenuity in coping with

them. In this respect, the study of logic is a lot like mathematics. But that’s no accident: logic is a

mathematical discipline. This is so in two senses: logic is the underpinning of all mathematical

thinking, and it also has a structure which can only be studied from a mathematical point of

view.

It goes without saying that there will be times when the going will be difficult. Bear in mind,

however, that thousands of students have mastered this subject, and that this is true even of

ones who didn’t think of themselves going in as much good at math. (I know what I’m talking

about, because I myself was one of those students.) This is one of those subjects which works on

what’s sometimes called the Lightning Principle: for a while it’s all dark and then there’s a flash

of light after which everything is clear. I can’t guarantee that you’ll have this experience, but

many people have had it who never thought they would.

Here are some suggestions that should prove helpful.

1. This course proceeds in cumulative fashion, everything building on what has come

before. For this reason IT IS ABSOLUTELY ESSENTIAL THAT YOU NOT FALL BEHIND. The loss of

even two days can be fatal.

2. Make sure you always have lots of paper on hand.

3. Always write in pencil rather than in ink. You’ll need to do a lot of erasing.


4. While it’s a good idea to take notes, primarily to assure that you’re paying attention to

what’s being said in the lectures, don’t get carried away. Your focus should always be on

understanding what’s being said, not on getting it down on paper.

5. If you’re having trouble, SEEK HELP IMMEDIATELY FROM THE INSTRUCTOR OR THE TEACHING ASSISTANT FOR THE COURSE. (This is especially important toward the beginning.) Many

failures that could have been avoided come about from letting things go until it’s too late.

The same is true about asking questions in class. The only stupid question is the one you

DON’T ask, and you can be sure that there are others who would like to ask the same one.

6. Practice makes perfect, so you should practice a lot. To help you do so, you can find

answers in Appendix 2 for all problems marked with an asterisk. There are also a number

of problems that are worked in the text itself; these are referred to as Demonstration

Problems. The idea is to do a problem and then check your answer against the one given.

But try to resist the temptation to look at the answer until you have one of your own and

are reasonably confident that it’s correct, or if you are truly, absolutely stumped. When a

problem gives you difficulty, a good strategy is to work through the solution and then go

back to the problem later and try it again on your own.

7. Study in a group if you can. Several heads are always better than one. The best approach

is for everyone in the group to work on the same problem and then compare answers —

and not to look at the answer (if there’s one given) until you’ve compared the ones

you’ve come up with on your own.

8. Be persistent. It’s often darkest just before the dawn.

There’s one more important thing to remember. In logic, things are always done the way they

are for a reason and the reasons ultimately can be traced back to a small number of basic

principles. After a while you’ll find the same ideas coming back again and again, the only

difference being that they’re being applied in new ways to new situations. When you reach the

point where you begin to see that happen, you’re well on your way.

Introduction

At the table next to you as you are having lunch you overhear Ms. Smith and Mr. Jones having a

conversation about computers, including the following exchange:

Ms. Smith: I bought a Newtown Pippin yesterday.*

Mr. Jones: Was it expensive?

Ms. Smith: All Newtown Pippins are expensive.

Note that Ms. Smith does not answer Mr. Jones’s question with a simple ‘yes’ or ‘no’. Ms. Smith

expects Mr. Jones to make an INFERENCE from what she’s said — specifically, to infer that the

answer to his question is affirmative. If Mr. Jones succeeds in doing this (as he no doubt will

unless he’s a complete dolt) we say that he has DEDUCED the CONCLUSION that Ms. Smith has

purchased an expensive computer from the PREMISES (a) that Ms. Smith has purchased a

Newtown Pippin computer, and (b) that all Newtown Pippin computers are expensive. The

conclusion is said to FOLLOW LOGICALLY FROM the premises, or, equivalently, the premises are

said to LOGICALLY ENTAIL (or to IMPLY) the conclusion. Mr. Jones’s inference consists in

recognizing that this is so.

We make inferences constantly in daily life, often without being aware that we are doing so.

(Chances are, for example, that you recognized without having to give it any prolonged thought

that in our little dialogue Ms. Smith was implying that the answer to Mr. Jones’s question is

‘yes’. If so, you were making an inference, possibly without knowing it.) In other situations,

often rather specialized, we may be called upon to make inferences that are more difficult and

require considerable thought. Solving mathematical and scientific problems, for example,

typically requires a highly developed inferential capability.

The process of making inferences is NOT a mechanical one. While certain parts of the process

can be carried out according to a prescribed method, there are many cases in which you have to

use your own imagination in deciding how to proceed. Indeed, part of the purpose of this course

is to develop your imagination in the required way.

* The Newtown Pippin is a variety of apple (the fruit, not the computer). To my knowledge, there is no actual make of computer so named.


Not all the inferences we make are correct. Furthermore, there are those who will try to dupe

us into making incorrect ones. A ploy much favored by such people is illustrated by the

following example. A television commercial tells us that all sorts of successful people drive

Whizbangs. The implication is that if you’re successful then you drive a Whizbang, and it’s the

advertiser’s hope that you will think ‘If I drive a Whizbang, it means I’m successful’. But the

inference you have made — if you’re gullible enough to have made it (and advertisers depend on

there being people who are that gullible) — is not a correct one. If, for example, you have just

lost all your money in the stock market you’re about as unsuccessful as it’s possible to be but

you won’t get your money back by going out and buying a Whizbang.

If the maker of the commercial wanted to play fair, the slogan would have to be ‘If you drive a

Whizbang then you’re successful’. If you then infer ‘Aha, if I go out and buy a Whizbang then

I’ll be successful too’ you would be correct in your inference. Unfortunately, the advertiser is

almost certainly lying to you: there are no doubt many unsuccessful people driving Whizbangs.

And since advertisers are not permitted to lie in this way, they try to capitalize on your lack of

sophistication in making inferences instead.
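The advertiser's trick can be checked mechanically. The short Python sketch below is an illustration, not part of the book's method. It reads 'if ... then' as the truth-functional conditional, which is an assumption at this stage because conditionals are only taken up properly in Chapter 6. It runs through every combination of truth values for 'you are successful' (s) and 'you drive a Whizbang' (w) and looks for a situation in which the advertised claim is true but the hoped-for conclusion is false. The names `implies`, `advertised` and `hoped_for` are illustrative.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Truth-functional 'if p then q' (an assumed reading of the conditional)."""
    return (not p) or q


def advertised(s: bool, w: bool) -> bool:
    # 'If you're successful then you drive a Whizbang.'
    return implies(s, w)


def hoped_for(s: bool, w: bool) -> bool:
    # 'If I drive a Whizbang, it means I'm successful.'
    return implies(w, s)


# Every situation in which the advertised claim holds but the hoped-for one fails.
counterexamples = [
    (s, w)
    for s, w in product([True, False], repeat=2)
    if advertised(s, w) and not hoped_for(s, w)
]
print(counterexamples)  # [(False, True)]: an unsuccessful Whizbang driver
```

The single row that survives is the case described above: someone who is not successful but drives a Whizbang anyway. One such case is enough to show that the inference is not a correct one.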

In the fourth century B.C. the Greek philosopher Aristotle turned his formidable intellect to

the task of elucidating the underlying principles of correct inference, thereby founding the

subject that, following the practice of the ancient Greeks, we call LOGIC. Why should anyone

study this subject? I can think of five main reasons:

1. As the Whizbang example shows, if you aren’t careful unscrupulous people can mislead

you by tempting you to reason incorrectly. The study of logic is a good corrective to the

tendency to do so.

2. Many intellectual disciplines require the ability to reason logically — mathematics, the

natural and social sciences and philosophy among them. The better you are at such

reasoning, the easier it will be to master those disciplines.

3. We live in a technological universe which would not be possible without logic — not

only because scientists and engineers engage in logical reasoning to do their jobs but also

because logic is built into the technology itself. For example, complex electronic circuitry

of the kind used in computers incorporates the principles of logic into its very design, and

computer programming is partly an exercise in logic.

4. Doing logic is good mental exercise, helping to develop precision and clarity of thought

as well as the ability to deal with abstractions and to undertake complex mental tasks.


5. The subject has an intrinsic beauty. Like music or architecture, it’s an art form.

Actually, I would personally add a sixth reason. It’s incumbent on an educated person to have

some knowledge of and appreciation for the major developments of human intellectual history.

Logic is one of these — perhaps the most important one. If you don’t know anything about it

then you’ve closed your mind off to any real understanding of what many people regard as the

most important single attribute of the human species: the ability to reason. If you’re willing to

grant that open minds are better than closed ones, then no further justification is needed.

New Terms

inference

deduction

premise

conclusion


Part I Getting Ready

1 Knowledge and Reasoning

We have three main ways of acquiring knowledge. One is by experience. For example, if you

want to know what steamed duck’s feet taste like, there is no sure way to find this out except to

eat some steamed duck’s feet.

Another way of acquiring knowledge is by reasoning. Mathematical knowledge is obtained

this way — when you try to solve a mathematical problem you do so by a process that goes on

entirely inside your own head. Suppose, for example, that your job is to solve for x in the

algebraic equation x + 3 = 5. You do it by applying a reasoning process which begins with the

observation that you can get x by itself on the left-hand side by subtracting 3 from both sides.

The next step is to actually do the subtraction, giving you x = 2.

The third and perhaps most common way to obtain knowledge is to combine the first two:

you learn certain things from experience but, once that knowledge is in hand, you make

inferences from it to learn other things. Here is a simple example. Suppose that you already

know that if steamed duck’s feet taste like swamp water then you won’t like them. Never having

tried them, however, you don’t know what they taste like. So you eat some and discover that they

do indeed taste like swamp water.* And from this you can infer that you don’t like them and,

armed with this new knowledge, you avoid them forever after.
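The swamp-water inference has a simple shape: from 'if P then Q' and 'P', conclude 'Q'. As a hedged illustration, again reading 'if ... then' truth-functionally (an assumption, since the book introduces conditionals only later), the Python sketch below tries every combination of truth values for P ('steamed duck's feet taste like swamp water') and Q ('you won't like them') and looks for one in which both premises are true and the conclusion false.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Truth-functional 'if p then q'."""
    return (not p) or q


# p: "steamed duck's feet taste like swamp water"
# q: "you won't like them"
bad_rows = [
    (p, q)
    for p, q in product([True, False], repeat=2)
    if implies(p, q) and p and not q  # both premises true, conclusion false
]
print(bad_rows)  # []
```

The list comes back empty: there is no way for both pieces of knowledge to be true while the conclusion is false.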

This is an example of a kind of inferential process that is carried out quite easily — indeed,

people often make inferences like the one just described without even being aware that they’re

doing so. But sometimes the process becomes more subtle and more complex. Here is an

example.

Before us are three caskets, one of which (but only one) contains a treasure. The caskets are

numbered I, II and III and on the lid of each is an inscription:

* At least that’s what they tasted like the one (and only) time I ever tried them.


I.

Lucky the one that chooseth me,

For here there doth a treasure be.

II.

Somewhere there is a treasure hid

But it is not beneath my lid.

III.

Somewhere beneath the shining sun

Is treasure found — but not in Casket Number One.


We are given one additional piece of information: at most one of the inscriptions is true. Is there

enough information here to enable us to find the treasure without actually opening the various

caskets and looking inside? Let’s see.

Before we undertake the task, let’s be sure that we understand exactly what we have been

told in advance. In particular, the statement that at most one of the inscriptions is true must be

clearly understood: it means that NO MORE THAN ONE IS TRUE, and IT IS ALSO POSSIBLE THAT ALL

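THREE ARE FALSE. But the second of these possibilities is ruled out here, because I and III contradict

each other: since the treasure is in one of the caskets, either it is in Casket I, in which case

inscription I is true, or it is not, in which case inscription III is true. So exactly one inscription is true.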

Although it isn’t explicitly stated, we also know one further fact: there are only three

possibilities as to where the treasure can be: it’s in Casket I, Casket II or Casket III. Let’s then

consider each of these in turn.

Possibility 1. If the treasure is in Casket I then the inscription on its lid is true, and so is

the one on the lid of Casket II. But we have been told in advance that no more than one

inscription is true, so this possibility is eliminated.

Possibility 2. If the treasure is in Casket II then the inscriptions on both this casket and

Casket I are false. This is consistent with everything we’ve been told.

Possibility 3. If the treasure is in Casket III then the inscriptions on Casket II and Casket III are

both true. This again is inconsistent with what we have been told in advance, so this

possibility is eliminated.

Since Possibility 2 is consistent with all the given information, and is the ONLY possibility

that is consistent with it, we can accordingly infer that Casket II contains the treasure.
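The case-by-case elimination above can be written out as a small Python program. This is a sketch that relies on two assumptions taken from the puzzle. First, the treasure is in exactly one of the three caskets, so the 'somewhere there is a treasure' parts of inscriptions II and III are automatically true. Second, at most one inscription is true. The function names are illustrative.

```python
CASKETS = ("I", "II", "III")


def inscriptions(treasure: str) -> dict[str, bool]:
    """Truth value of each lid, supposing the treasure is in `treasure`."""
    return {
        "I": treasure == "I",     # "here there doth a treasure be"
        "II": treasure != "II",   # "it is not beneath my lid"
        "III": treasure != "I",   # "but not in Casket Number One"
    }


def consistent(treasure: str) -> bool:
    """Does this hypothesis agree with 'at most one inscription is true'?"""
    return sum(inscriptions(treasure).values()) <= 1


for casket in CASKETS:
    print(casket, inscriptions(casket), consistent(casket))

survivors = [c for c in CASKETS if consistent(c)]
print(survivors)  # ['II']
```

Each pass through the loop is one piece of hypothetical reasoning. It supposes the treasure is in a given casket, works out which inscriptions would then be true, and checks the result against what we were told.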

Notice that consideration of each possibility requires making an inference. Furthermore, it

involves a special kind of reasoning called CONDITIONAL or HYPOTHETICAL reasoning. Reasoning

of this kind consists of saying ‘Suppose thus-and-such. If this is so then here are some other


things which must also be true.’ The key word here is suppose. We’re not saying that we know

for a fact that thus-and-such is indeed so. What we’re doing is saying that IF it’s so then so are

certain other things. IF the treasure is in Casket I, then more than one inscription is true; IF the

treasure is in Casket II then only the inscription on the lid of Casket III is true; and so on. Having

investigated the consequences of the various possible suppositions — or HYPOTHESES, or

ASSUMPTIONS, as suppositions are also often called — we then find that only the consequences of

the second hypothesis are consistent with everything else we know. Therefore we are compelled

to accept that as the only viable alternative.

Another very important word has appeared several times in this example and our discussion

of it: consistent. We said above that the consequences of the hypothesis that the treasure is in

Casket II are consistent with what we have been told in advance, and that the consequences of

the other two hypotheses are inconsistent with what we have been told. This then gives us a

general way of characterizing hypothetical reasoning: make an assumption and see if its

consequences are consistent with what you’ve been told previously. If not, then that assumption

is wrong. If the consequences are consistent with the previously given information, then the

assumption has a chance of being right. If it turns out to be the ONLY assumption consistent with

the previously given information and if that information is itself correct, then you must accept

the truth of the assumption. If more than one assumption turns out to be consistent with the

previously given information, and if that information is correct, then you will have to do

something more to decide between the remaining alternatives, though you may have narrowed

the number of available possibilities.

In the preceding paragraph we used the qualifying phrase if the (previously given)

information is correct. This is very important. Strictly speaking, we have no GUARANTEE that

there is a treasure in any of the caskets, let alone in Casket II. We could have been lied to about

that. Or we might have been deceived as to how many of the inscriptions are true. Perhaps the

treasure is in fact in Casket I, in which case two inscriptions are correct. In other words, we’re

back to hypothetical reasoning again: IF it’s truly the case that one of the caskets contains a

treasure and that at most one of the inscriptions is true, then we can find the treasure by the line

of reasoning that we’ve adopted. But since we have no assurance that these assumptions are true

the best we can do is to show that only one of the available possibilities is consistent with them.

This is in fact a very common situation. There are many times when we don’t know for sure

whether what we’ve been told is true. If we believe what we’ve been told, this may be nothing

more than an act of faith. Reasoning can’t justify that faith — all it can do is help us determine

what other things are consistent with what we’ve agreed, for whatever reason, to accept as true.


On the other hand, we can also learn that our faith was misplaced. Suppose we open Casket II

and find it empty. Then we know that we have been deceived.


The two ideas we have just discussed — hypothetical reasoning and consistency — are at the

heart of what we will be studying in this course. The goal is to see what things are (and aren’t)

consistent with prior assumptions; and sometimes it can be shown that there is something wrong

with the assumptions themselves because they are inconsistent with each other. Reasoning of this

general kind plays a role in many different situations and many different fields of study. But our

goal here is not to deal with the specifics of any one such — rather, it is to explore a kind of

intellectual process that plays a role in all of them regardless of the particular subject matter with

which they deal.

New Terms

conditional (hypothetical) reasoning

hypothesis

consistency

Problems

Here are four mathematical problems which can be solved even by people without much

mathematical background. Try to solve at least the first one. An answer for each problem is

given in the back of the book but try to avoid looking at it until you have made a serious effort

to solve the problem. The idea is not to test your problem-solving ability but to get your mind

working in the right way for this course.

*1.

Imagine that you have assembled, by the bank of a river, a wolf, a goat and a cabbage and

that you are to ferry them across the river in a boat so small that you can only take one at a

time. The difficulty is this: if you leave the wolf and the goat unattended, the wolf will eat

the goat; and if you leave the goat and the cabbage unattended, the goat will eat the

cabbage. How can you get all three to the other side of the river in such a way as to never

leave the wolf and the goat alone together, nor the goat and the cabbage, while still ferrying

only one across at a time? (You may make any number of trips back and forth.)

*2.

Suppose that you are given two containers, one with a capacity of 5 liters and another with

a capacity of 3 liters. There are no markings on either to indicate the point at which it

contains a specified amount less than its capacity. Show how, using just these containers,


to measure out exactly 4 liters of water. (You can empty and refill a container any number

of times, and you can pour the contents of one into the other.)

*3.

A certain square is such that the number of centimeters equal to its perimeter is the same as

the number of square centimeters equal to its area. What is the length of one side of this

square? (Assume that you are dealing exclusively with whole numbers.)

*4.

[CHALLENGE] A certain rectangle which is not a square is such that the number of

centimeters equal to its perimeter is the same as the number of square centimeters equal to

its area. What are the respective lengths of the base and the height of this rectangle?

(Again, assume that you are dealing with whole numbers.)


2 Statements, Truth and Validity

A STATEMENT (also known as a PROPOSITION,* ASSERTION or DECLARATIVE SENTENCE) is a linguistic expression which can be true or false. Some examples:

All Newtown Pippin computers are expensive.

When demand exceeds supply, prices tend to rise.

The angles of a triangle sum to a straight angle.

Some expressions which are not statements:

Are all Newtown Pippin computers expensive?

Run away!

the angles of a triangle

Consider now the following group of statements:

(1)

a. All Newtown Pippin computers are expensive.

b. The computer just purchased by Ms. Smith is a Newtown Pippin.

c. The computer just purchased by Ms. Smith is expensive.

* Some authors use this term to refer not to an actual statement but to its content. We will normally use the term statement here.


The third statement bears a certain relationship to the first two — namely that (1a-b) jointly

entail (1c). Put in a different way, IT IS IMPOSSIBLE FOR THE STATEMENTS (1a-b) TO BOTH BE TRUE

AND FOR (1c) TO BE FALSE.

The statements in (1) together form what in logic is called an ARGUMENT.* The statements

other than the last one are called the PREMISES of the argument (from Latin praemissum, meaning

‘that which is put before’), and the last statement the CONCLUSION. An argument is said to be

VALID if, but only if, the conclusion is entailed by the premises. The argument in (1) is thus one

example of a valid argument.

Although it’s probably obvious why it is impossible for the premises of this argument to be

true and the conclusion false, let us take a moment to consider just why this is so. First, look at

the diagram in Fig. 2.1:

Fig. 2.1

Diagrams of this kind are called VENN DIAGRAMS (after the nineteenth-century British logician

John Venn). The two overlapping circles represent sets: the left one represents the set of

Newtown Pippin computers, the right one the set of things that are expensive. The area of

overlap (the region numbered 2 in the diagram) represents the set of things which are BOTH

Newtown Pippin computers and expensive. The other parts of the diagram correspond to those

things which are Newtown Pippin computers and are not expensive (region 1) and those things

which are expensive but are not Newtown Pippin computers (region 3). Consider now the

* This word is used in ordinary conversation in a number of different ways. For example,

sometimes it is used to mean ‘dispute’, as in ‘Sandy and I had an argument yesterday’. When we

use the term in this course, it will always be in the sense just explained. This will be true of a

number of other terms as well, which have special meanings in the context of the study of logic.


statement (1a). What this statement says is that the set of non-expensive Newtown Pippin

computers has no members — is ‘empty’ or ‘null’, in the language of set theory. (We will have

more to say about this idea in Chapter 20.) We will indicate that a set is empty by shading the

relevant portion of the diagram, as shown below.

Fig. 2.2

Now consider the statement (1b). Since nothing is located in the shaded area, and since the

object purchased by Ms. Smith is a Newtown Pippin computer, this object must be located in the

area of overlap, as shown in Fig. 2.3.

Fig. 2.3

But since everything in the area of overlap is in the set of expensive things, that means that the

computer purchased by Ms. Smith is one of these things. Hence (1c) must be true if (1a) and (1b)

are.
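The diagram method can also be imitated by brute force. The Python sketch below is an illustration, not a procedure from the text. Each of the four regions of a two-circle diagram is written as a pair saying whether a thing is inside circle A and inside circle B, with (False, False) standing for outside both. Each region is treated as either empty or occupied, and the named individual is placed in one occupied region. An argument counts as valid when no such arrangement makes every premise true and the conclusion false. This encoding assumes, as in argument (1), that the statements concern only two sets and a single named individual. `worlds`, `valid` and the premise functions are hypothetical names.

```python
from itertools import product

# A region is a pair (inside circle A?, inside circle B?).
REGIONS = list(product([True, False], repeat=2))


def worlds():
    """Yield every (occupied regions, individual's region) arrangement."""
    for flags in product([True, False], repeat=len(REGIONS)):
        occupied = [r for r, f in zip(REGIONS, flags) if f]
        for where in occupied:  # the individual must sit in an occupied region
            yield occupied, where


def valid(premises, conclusion):
    """Valid iff no arrangement makes all premises true and the conclusion false."""
    return not any(
        all(p(occ, where) for p in premises) and not conclusion(occ, where)
        for occ, where in worlds()
    )


# Argument (1): A = Newtown Pippin computers, B = expensive things,
# individual = the computer Ms. Smith bought.
def all_a_are_b(occupied, where):
    return (True, False) not in occupied  # region 1 (A but not B) is shaded


def it_is_a(occupied, where):
    return where[0]


def it_is_b(occupied, where):
    return where[1]


print(valid([all_a_are_b, it_is_a], it_is_b))  # True
```

Shading region 1 corresponds to `all_a_are_b`, and locating the computer corresponds to choosing `where`. The search finds no arrangement that puts the computer outside the expensive circle.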


Here is a second way of demonstrating the validity of the argument. Suppose that we

consider (1b), the second premise, first. The associated diagram is as follows:

Fig. 2.4a

Here the branching (forked) arrow indicates that the computer is somewhere in the left-hand

circle, though we do not know in which of the two subregions: the statement (1b) speaks only to

the computer’s manufacturer, not its price. But now consider (1a) and recall that according to

this statement the extreme left area is empty. That means that the computer could not be in that

region of the diagram, which we indicate by placing an ‘X’ over the left fork of the arrow —

indicating that the associated possibility has been eliminated — as shown in Fig. 2.4b below.

Fig. 2.4b


Notice that while Fig. 2.4b looks a little different from Fig. 2.3, the two diagrams convey the

same information: that the region in which the computer purchased by Ms. Smith is located,

according to statements (1a-b), is the region of overlap.

Here now is another example of a valid argument:

(2)

a. No viral disease responds to antibiotics.

b. Gonorrhea responds to antibiotics.

c. Gonorrhea is not a viral disease.

To see that (2) is valid, we again resort to a Venn diagram:

Fig. 2.5

Alternatively, we could have begun with the second premise, which tells us that gonorrhea is

somewhere in the right-hand circle, either in the area of overlap or to the extreme right. The first

premise then eliminates the first of these possibilities, since it tells us that the area of overlap is empty.
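A brute-force check of the same kind handles a 'No A is B' premise. The only change is which region is shaded: here it is the area of overlap. As before, this is an illustrative Python sketch with hypothetical helper names. It assumes two sets and one named individual, with each region written as (inside A?, inside B?).

```python
from itertools import product

REGIONS = list(product([True, False], repeat=2))  # (inside A?, inside B?)


def worlds():
    """Yield every (occupied regions, individual's region) arrangement."""
    for flags in product([True, False], repeat=len(REGIONS)):
        occupied = [r for r, f in zip(REGIONS, flags) if f]
        for where in occupied:
            yield occupied, where


def valid(premises, conclusion):
    """Valid iff no arrangement makes all premises true and the conclusion false."""
    return not any(
        all(p(occ, where) for p in premises) and not conclusion(occ, where)
        for occ, where in worlds()
    )


# Argument (2): A = viral diseases, B = things that respond to antibiotics,
# individual = gonorrhea.
def no_a_is_b(occupied, where):
    return (True, True) not in occupied  # the area of overlap is shaded


def it_is_b(occupied, where):
    return where[1]


def it_is_not_a(occupied, where):
    return not where[0]


print(valid([no_a_is_b, it_is_b], it_is_not_a))  # True
```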

Consider now an example of an INVALID argument:

(3)

a. All Newtown Pippin computers are expensive.

b. The computer purchased by Ms. Smith is expensive.

c. The computer purchased by Ms. Smith is a Newtown Pippin.

The invalidity of this argument can be seen by consulting the diagram below.


Fig. 2.6

As in argument (1), the first premise tells us that the region consisting of non-expensive

Newtown Pippin computers is empty. The second premise, statement (3b), tells us that the

computer purchased by Ms. Smith is expensive, and therefore somewhere in the right-hand

circle. Hence, it’s possible that this computer is in the area of overlap (as indicated by arrow A),

and is thus a Newtown Pippin; but there is nothing in statement (3b) to assure us that this is the

ONLY possibility. For all we know, Ms. Smith has purchased an expensive computer from another

manufacturer (as indicated by the right tine of the fork, which is not X-ed out). So it’s possible

for the conclusion to be false even though the premises are true, which makes the argument

invalid.
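The same brute-force idea can be turned around to search for a counterexample: an arrangement in which the premises are true and the conclusion false. Finding one shows that the argument is invalid. The Python sketch below is hedged in the same way as the earlier ones. It uses the same assumed encoding, with regions as (inside A?, inside B?), and its names are hypothetical.

```python
from itertools import product

REGIONS = list(product([True, False], repeat=2))  # (inside A?, inside B?)


def worlds():
    """Yield every (occupied regions, individual's region) arrangement."""
    for flags in product([True, False], repeat=len(REGIONS)):
        occupied = [r for r, f in zip(REGIONS, flags) if f]
        for where in occupied:
            yield occupied, where


def counterexample(premises, conclusion):
    """Return the first arrangement with true premises and a false conclusion, or None."""
    for occ, where in worlds():
        if all(p(occ, where) for p in premises) and not conclusion(occ, where):
            return occ, where
    return None


# Argument (3): A = Newtown Pippin computers, B = expensive things.
def all_a_are_b(occupied, where):
    return (True, False) not in occupied


def it_is_a(occupied, where):
    return where[0]


def it_is_b(occupied, where):
    return where[1]


found = counterexample([all_a_are_b, it_is_b], it_is_a)
occupied, where = found
print(where)  # (False, True): expensive, but not a Newtown Pippin
```

The arrangement it finds matches the right tine of the fork in Fig. 2.6: Ms. Smith's computer is expensive but comes from another manufacturer.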

Let us now consider another example:

(4)

a. All national capitals are seaports.

b. Kansas City is a national capital.

c. Kansas City is a seaport.

Is this a valid argument? Yes, it is. This might at first seem like an odd claim, since both the

premises and the conclusion of this argument are false. Nonetheless, given the way we have

defined the term valid, (4) fits the definition. In case this is not immediately obvious, let’s

consider a little more closely just what the definition does — and does not — say. It says that in

a valid argument it’s impossible for the premises to all be true and the conclusion false. Notice

now that there is nothing in this statement which requires that any of the statements in the

argument — premises or conclusion — actually BE true. Rather, it says only that IF the premises

are all true then the conclusion must be as well. To put the matter in a slightly different way: in


an imagined alternative universe in which (4a-b) were true, (4c) would also have to be true.

What makes the argument valid is not that it tells us the truth about the world as it actually is, but

only that it tells us that were the world to be as depicted in (4a-b) then it would also have to be as

depicted in (4c). In other words we’re engaged here in hypothetical reasoning — just as we were

when we considered the various possibilities in the puzzle of the three caskets.

Another way of looking at the situation is to resort, as we have done previously, to diagrams.

Diagrammatically, if (4a) is presumed true then we have the situation shown below:

Fig. 2.7

and if (4b) is presumed true then we have the situation shown in the next diagram.

Fig. 2.8


But then (4c) would have to be true as well, since Fig. 2.8 shows Kansas City located in the

right circle, representing the set of seaports. The argument is accordingly valid for exactly the

same reason as (1), which, it will be noted, it closely resembles.

Here’s another way of making the point. First, let’s consider a consequence of the definition

of validity, to wit: IF AN ARGUMENT IS VALID AND ITS CONCLUSION FALSE, THEN AT LEAST ONE OF ITS

PREMISES IS ALSO FALSE. In fact, a little thought will reveal that this is really just another way of

saying that it’s impossible, in a valid argument, to have premises which are all true and a

conclusion which is false — a restatement, in other words, of the original definition of validity.

So the definition of validity really tells us two things:

1. A valid argument is one in which the truth of the premises guarantees the truth of the conclusion.

2. A valid argument is one in which the falsity of the conclusion guarantees the falsity of at least one of the premises.
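For readers who program, the claim that statements 1 and 2 say the same thing can be checked mechanically. The Python sketch below (the function names are made up for this illustration) lists every combination of truth values for two premises and a conclusion and asks which combinations each statement rules out. Both rule out exactly one combination: premises all true, conclusion false.

```python
from itertools import product

# Every combination of truth values for premise 1, premise 2 and the conclusion.
ROWS = list(product([True, False], repeat=3))


def allowed_by_statement_1(p1, p2, c):
    """Statement 1: if both premises are true, the conclusion must be true."""
    return c if (p1 and p2) else True


def allowed_by_statement_2(p1, p2, c):
    """Statement 2: if the conclusion is false, at least one premise must be false."""
    return (not p1 or not p2) if not c else True


excluded_1 = [row for row in ROWS if not allowed_by_statement_1(*row)]
excluded_2 = [row for row in ROWS if not allowed_by_statement_2(*row)]

print(excluded_1)  # [(True, True, False)]
print(excluded_2)  # [(True, True, False)]
```

A valid argument is then one for which no possible situation falls into that single excluded row.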

We can now use statement 2 as a way of showing the validity of (4) by reasoning as follows.

Since (4c) is false we can draw the following diagram:

Fig. 2.9

Both forks are X-ed, indicating that Kansas City is not anywhere in the right-hand circle. There

are now two possibilities, as shown below:


Fig. 2.10

If Kansas City is not anywhere in the right-hand circle then it is in the non-overlap part of the

left-hand one OR in NEITHER of the two circles (as indicated by the leftmost forking branch, which

points, as it were, to ‘thin air’). If the former, then it’s not a seaport, which is inconsistent with

(4a); and if the latter, then it isn’t a national capital, which is inconsistent with (4b). In other

words, the falsity of (4c), the conclusion of the argument, tells us that at least one of the premises

is false as well, so the argument is valid.*

Many beginning students of logic nonetheless find the point just made about validity

puzzling — even troubling. Nor is this feeling hard to understand. For even if you’re now

persuaded that (4) is valid, ACCORDING TO THE DEFINITION OF VALIDITY THAT WE HAVE GIVEN, that

leads only to another question, which is: why define validity this way? Doesn’t all of this simply

mean that there is something wrong with the definition? For surely if falsehood is bad and

validity good, then how can three bad things (like the false statements of which (4) is composed)

add up to one good one: a valid argument? Let’s see what we can do to try to clarify what’s

going on.

* In this argument, both of the premises are false but that isn’t necessary. If an argument is valid and its conclusion false, that tells us that the premises are not all true (in other words, at least one of the premises is false), but it’s perfectly possible for only one of them to be false. Constructing an example of such an argument is left as an exercise for you to try on your own.

First of all, there’s no question but that a person who concluded that (4c) is true on the basis

of a prior belief in the truth of (4a-b) would be making a serious mistake. But the error involved

isn’t one of REASONING — rather, it’s a lack of knowledge about certain facts pertaining to

geography. So the claim that (4) is a valid argument shouldn’t be taken as a claim to the effect

that there’s nothing whatsoever wrong with it — there’s just nothing LOGICALLY wrong with it.

The real issue is whether the premises would, IF TRUE, give enough information to justify

acceptance of the conclusion.

Now let’s come back to the definition of validity and consider what it’s supposed to

accomplish. Our interest is in justified inferences from premises. What makes such an inference

justified? Answer: THAT IN MAKING THE INFERENCE WE HAVE FOLLOWED A PROCEDURE WHICH, IF

APPLIED TO TRUE PREMISES, IS GUARANTEED TO YIELD A TRUE CONCLUSION: case in point, argument

(1). So the definition is in effect telling us that justified inferences ARE STRICTLY A MATTER OF

PROCEDURE. Since the procedure which gets us from the premises (4a-b) to the conclusion (4c) is

the same as the one which gets us from (1a-b) to (1c), there is nothing wrong with (4), at least

insofar as procedural issues are concerned, even though there might be plenty of other things

wrong with it.

Here is an analogy that may help in understanding this point. A housing inspector is

interested in determining whether a certain house is in compliance with the building code. This

requires an evaluation of such things as its construction, the current state of the materials, the

wiring, the plumbing and so on. A house which satisfies the requirements of the code in all

respects could nonetheless be deficient in others. It might, for example, be painted a garishly

unattractive color or decorated and furnished in appallingly bad taste. It might therefore be a

house that you wouldn’t want to live in, but it would at least be SAFE to live in. A valid argument

is rather like a house that is properly constructed. You may not like either the premises of the

argument or the conclusion, just as you might dislike the exterior color or the furnishings of a

house; but if the argument is valid, then it’s at least constructed in such a way that the truth of

the premises guarantees the truth of the conclusion, just as a house that is up to code will at least

not fall down around your ears. Like the housing inspector, who doesn’t care about

the furnishings or the décor but is interested only in the physical details, we’re interested in

whether an argument has been put together in the appropriate way regardless of whether or not

we agree with any of the statements of which it consists.


But we can go farther: for it turns out that if we were to disallow arguments containing false

statements, we would severely limit our ability to make justified inferences. Indeed, there’s a

powerful method of reasoning which depends on the possibility of a valid argument having false

premises. The general strategy is as follows. To show that a statement is false, assume for the

sake of argument that it’s true and show that this assumption entails a falsehood. Hence the

assumption that the original statement is true becomes untenable. Here’s an example. Two

students studying for a zoology exam have a difference of opinion as to whether whales are fish.

One student, Pat, maintains that they are and the other, Sandy, seeks to persuade Pat otherwise

by the following argument. Let’s suppose, says Sandy, that whales are fish, as you claim. Now,

fish have gills, right? (Pat agrees.) So if whales are fish, then they have gills, right? Again Pat

agrees but suddenly remembers that the textbook says that whales do NOT have gills and is forced

to concede that Sandy is right — whales are not fish after all. Here’s an outline of what’s just

happened.

At issue: Are whales fish?

Sandy’s opening move: Suppose that whales are fish.

Further premise (accepted by Pat): If whales are fish, then they have gills.

Valid argument:

If whales are fish, then they have gills. (Premise: agreed to by both Pat and Sandy)

Whales are fish. (Premise: assumed for sake of argument)

Whales have gills. (Conclusion: recognized by Pat to be false)

Since the conclusion of the argument is false, at least one of the premises must be false. The first

premise is agreed by both parties to be true, so the second must be false: whales are not fish. (You

may find it helpful to cast your mind back to the strategy used in the puzzle of the three caskets in

Chapter 1.)
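Sandy’s reasoning can be traced with a small search over truth values. The Python sketch below is a hypothetical encoding: F stands for ‘Whales are fish’ and G for ‘Whales have gills’, and ‘if ... then’ is assumed to be false only when its first part is true and its second part false. That reading of conditionals is an assumption made for this sketch; conditionals are treated properly later in the course.

```python
from itertools import product


def if_then(p, q):
    # Assumed reading of "if p then q": false only when p is true and q is false.
    return (not p) or q


# F: "Whales are fish."   G: "Whales have gills."
rows = []
for F, G in product([True, False], repeat=2):
    premise_1 = if_then(F, G)  # agreed to by both Pat and Sandy
    premise_2 = F              # assumed for the sake of argument
    conclusion = G
    # The argument is valid: no row makes both premises true and the conclusion false.
    assert not (premise_1 and premise_2 and not conclusion)
    rows.append((F, G, premise_1))

# Pat knows that G is false and accepts premise 1. Which values of F are still possible?
remaining = [F for F, G, p1 in rows if not G and p1]
print(remaining)  # [False]: whales are not fish
```

Once the false conclusion and the agreed premise are fixed, only one value remains for the assumed premise, which is exactly Pat’s concession.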

Later on we will see a variant of this strategy — called REDUCTIO AD ABSURDUM (Latin for

‘reduction to absurdity’) — which makes deliberate use of valid arguments with false premises

and false conclusions and which is one of the most common logical techniques used in

mathematical proof.

In the case of (4), we had a valid argument in which false premises entail a false conclusion.

But it is also possible for false premises to entail a true conclusion, as in

(5)

a. All national capitals are on the Missouri River.


b. Kansas City is a national capital.

c. Kansas City is on the Missouri River.

Here, as in (4), both premises are false, but this time the conclusion is true; furthermore, the

conclusion can be obtained from the premises by exactly the same procedure employed in regard

to (1) and (4), so this argument too is valid.

Another point of some importance is that in a valid argument with a false conclusion, not all

the premises need be false. Thus, compare

(6)

a. All cities on the Missouri River are national capitals.

b. Kansas City is on the Missouri River.

c. Kansas City is a national capital.

Here both the first premise and the conclusion are false, but the second premise is true.

An important point to bear in mind is that if valid reasoning is a matter of proper procedure,

then improper procedure will lead to invalidity EVEN IF THE PREMISES AND CONCLUSION OF AN

ARGUMENT ARE ALL TRUE. Thus, the following argument is invalid even though it contains only

true statements:

(7)

a. All state capitals are seats of government.

b. St. Paul, Minnesota is a seat of government.

c. St. Paul, Minnesota is a state capital.

This argument is the diametric opposite of (4): the geography is impeccable, but a person who

drew the conclusion (7c) on the basis of the premises (7a-b) would be committing a severe error

of reasoning. (Showing why this is so is left to you — see problem 10 at the end of this chapter.)

Let’s go back now to argument (1), which is valid, and note that it has the general structure

shown below.

a. All [A] are [B].

b. [C] is a(n) [A].

c. [C] is [B].

Each blank, shown here as a bracketed letter, is filled in by a grammatically appropriate word or

phrase, and the idea is that if two blanks carry the same letter then they must be filled by the SAME

word or phrase. Thus, if we fill the blanks labelled A by Newtown Pippin computers, the ones

labelled B by expensive and the ones labelled C by the computer purchased by Ms. Smith, then we obtain

argument (1). But we can show, by use of a Venn diagram, that ANY argument which conforms to

this general scheme is valid, no matter what choice is made for the fillers of the various blanks

(subject only to the requirement that they be grammatically appropriate). The diagram looks like

this:

Fig. 2.11

Now simply fill in for A, B and C whatever was filled into the corresponding blanks and you’ll

see that the result is always that statement (c) is true if (a) and (b) are.
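The claim that ANY argument fitting this scheme is valid can also be checked by brute force, in the spirit of the diagram. In the hypothetical Python sketch below, a ‘diagram’ records which of the four regions of a two-circle Venn diagram are nonempty and which region holds the individual named in the C blanks; the checker searches every such diagram for one that makes the premises true and the conclusion false. The names diagrams, all_are and find_counterexample are made up for this illustration.

```python
from itertools import product

# A region of a two-circle diagram is a pair (inside circle A?, inside circle B?).
# (False, False) is the area outside both circles.
REGIONS = list(product([False, True], repeat=2))
A, B = 0, 1  # positions of the two circles within a region tuple


def diagrams():
    """Yield every diagram: a set of nonempty regions together with the region
    holding the individual C (which must itself be nonempty)."""
    for flags in product([False, True], repeat=len(REGIONS)):
        nonempty = {r for r, flag in zip(REGIONS, flags) if flag}
        for where in nonempty:
            yield nonempty, where


def all_are(nonempty, x, y):
    """'All X are Y': the part of circle x lying outside circle y is empty."""
    return not any(r[x] and not r[y] for r in nonempty)


def find_counterexample(premises, conclusion):
    """Return a diagram making every premise true and the conclusion false,
    or None when no such diagram exists (the argument form is valid)."""
    for nonempty, where in diagrams():
        if all(p(nonempty, where) for p in premises) and not conclusion(nonempty, where):
            return nonempty, where
    return None


# The scheme of (1) and (4): All A are B; C is a(n) A; therefore C is B.
scheme = find_counterexample(
    [lambda ne, c: all_are(ne, A, B), lambda ne, c: c[A]],
    lambda ne, c: c[B],
)
print(scheme)  # None: no counterexample, so every instance is valid

# For contrast, drop premise (a): C is a(n) A; therefore C is B.
without_a = find_counterexample([lambda ne, c: c[A]], lambda ne, c: c[B])
print(without_a)  # a diagram with C inside circle A but outside circle B
```

Because the truth of each statement in the scheme depends only on which regions are empty and where the individual sits, searching these finitely many diagrams covers every case, just as Fig. 2.11 does.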

Now, just for practice, we are going to consider some further arguments of a more

complicated kind. Here is another example.

(8)

a. All poodles are dogs.

b. All dogs are canines.

c. All poodles are canines.


To show that this is a valid argument we will again resort to Venn diagrams — except that in this

case we must use more complex ones in which three overlapping circles are involved. The reason

is that whereas in the examples considered up to now we had to worry about only two sets, in

this example we have to deal with three: the set of poodles, the set of dogs and the set of canines.

The first premise concerns the relationship between the first and the second of these, the second

premise the relationship between the second and the third, and the conclusion the relationship

between the first and the third. So our initial setup looks like this:

Fig. 2.12

The sets represented by the various numbered regions are as follows:

1. canines that are neither poodles nor dogs

2. canines that are poodles but not dogs

3. canines that are both poodles and dogs

4. canines that are dogs but not poodles

5. poodles that are neither canines nor dogs

6. poodles that are dogs but not canines

7. dogs that are neither poodles nor canines

To validate the argument we follow steps much like the ones that we followed before. Thus,

from (8a) we obtain


Fig. 2.13

and from (8b) we obtain

Fig. 2.14

The one region of the circle representing the set of poodles that is left unshaded is region 3, so all

poodles are in this area. But region 3 happens to also be in the circle representing the set of

canines. Hence there are no noncanine poodles — that is, all poodles are canines.
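The shading procedure just carried out can be imitated step by step. In the Python sketch below (an illustration only; the names are made up), each region of the three-circle diagram is a triple recording whether its occupants are poodles, dogs and canines, and ‘shading’ a region simply removes it from the set of regions that may still have occupants.

```python
from itertools import product

# A region is a triple (is a poodle?, is a dog?, is a canine?). There are 2**3 = 8
# regions: the 7 numbered ones in Fig. 2.12 plus the area outside all three circles.
POODLE, DOG, CANINE = 0, 1, 2
regions = set(product([False, True], repeat=3))


def shade_all_are(unshaded, x, y):
    """Shade every region inside circle x but outside circle y, as a premise
    of the form 'All X are Y' tells us to do."""
    return {r for r in unshaded if not (r[x] and not r[y])}


unshaded = shade_all_are(regions, POODLE, DOG)   # premise (8a)
unshaded = shade_all_are(unshaded, DOG, CANINE)  # premise (8b)

# The parts of the poodle circle left unshaded:
poodle_regions = {r for r in unshaded if r[POODLE]}
print(poodle_regions)  # {(True, True, True)}: region 3 of Fig. 2.12

# Conclusion (8c) holds if every unshaded poodle region lies inside the canine circle.
print(all(r[CANINE] for r in poodle_regions))  # True
```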


For Future Reference: To evaluate an argument in which two sets are involved — such as

(1) — a 2-circle diagram is sufficient. To evaluate an argument like (8), in which three sets

are involved, a 3-circle diagram is necessary.

Here is a valid argument that requires yet another extension of our diagrammatic method.

(9)

a. Some dogs are not poodles.

b. All dogs are canines.

c. Some canines are not poodles.

Notice that the first premise of this argument contains a word we have not encountered before:

some. For reasons that will become apparent in a moment, it’s better to defer consideration of

premises containing this word until premises containing the word all have been considered.

Hence, we begin by considering the second premise and obtain

Fig. 2.15

The first premise of (9) tells us that a certain region of the diagram in Fig. 2.15 is nonempty —

has at least one occupant. Whenever we wish to indicate that a region is nonempty we do so by

marking it with a plus sign:


Fig. 2.16

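Note that the region marked lies inside the circle representing the set of canines: its occupants are

dogs that are canines but not poodles, and hence canines which are not poodles. So (9c) must be

true if (9a-b) are, and the argument is valid.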

Now, why did we consider the second premise first? Consider what would happen if we had

begun with the first premise. According to (9a), in the absence of the information provided by

(9b), we must consider three possibilities, as shown in the next figure:

Fig. 2.17 (Possibility 1, Possibility 2 and Possibility 3)

But the second premise tells us that there are no noncanine dogs, which rules out both the second

and the third possibilities. Now, there is nothing wrong in principle with proceeding in this way,

but it clearly makes things more complicated. So, a word to the wise: IF AN ARGUMENT CONTAINS

TWO PREMISES ONE OF WHICH INVOLVES all WHILE THE OTHER INVOLVES some, CONSIDER THE ONE

CONTAINING all FIRST.

Here now is an example of an invalid argument involving both all and some.

(10) a. Some dogs are poodles.

b. All dogs are canines.

c. All poodles are canines.

Again we take the second premise first, since it contains all.

Fig. 2.18

From the first premise, we obtain


Fig. 2.19

According to the conclusion, we should have the following situation:

Fig. 2.20

But this is not what Fig. 2.19 shows. Although part of the relevant area is shaded in Fig. 2.19,

part of it remains unshaded. Fig. 2.19 is thus insufficient to rule out the possibility of noncanine

poodles.
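Premises with some can be brought into the same brute-force approach by marking each region of the three-circle diagram as empty or nonempty (nonempty playing the role of the plus sign). The hypothetical Python sketch below searches all 256 such markings for one that makes the premises true and the conclusion false: it finds none for (9) and finds one for (10). The helper names are made up for illustration, and the search stands in for the diagrams rather than replacing them.

```python
from itertools import product

POODLE, DOG, CANINE = 0, 1, 2
REGIONS = list(product([False, True], repeat=3))  # the 8 regions of Fig. 2.12


def all_are(nonempty, x, y):
    # 'All X are Y': no nonempty region lies inside X but outside Y.
    return not any(r[x] and not r[y] for r in nonempty)


def some_are(nonempty, x, y):
    # 'Some X are Y': a nonempty ('+') region lies inside both X and Y.
    return any(r[x] and r[y] for r in nonempty)


def some_are_not(nonempty, x, y):
    # 'Some X are not Y': a nonempty ('+') region lies inside X but outside Y.
    return any(r[x] and not r[y] for r in nonempty)


def counterexample(premises, conclusion):
    """Try every empty/nonempty marking; return one making all premises true
    and the conclusion false, or None if there is none (valid)."""
    for flags in product([False, True], repeat=len(REGIONS)):
        nonempty = {r for r, f in zip(REGIONS, flags) if f}
        if all(p(nonempty) for p in premises) and not conclusion(nonempty):
            return nonempty
    return None


# (9) Some dogs are not poodles; all dogs are canines; so some canines are not poodles.
arg9 = counterexample(
    [lambda ne: some_are_not(ne, DOG, POODLE), lambda ne: all_are(ne, DOG, CANINE)],
    lambda ne: some_are_not(ne, CANINE, POODLE),
)
# (10) Some dogs are poodles; all dogs are canines; so all poodles are canines.
arg10 = counterexample(
    [lambda ne: some_are(ne, DOG, POODLE), lambda ne: all_are(ne, DOG, CANINE)],
    lambda ne: all_are(ne, POODLE, CANINE),
)
print(arg9)   # None: (9) is valid
print(arg10)  # a marking in which the noncanine, nondog poodle region is occupied
```

Any counterexample to (10) must occupy the region (True, False, False), poodles that are neither dogs nor canines, which is the unshaded part of Fig. 2.19 that the premises leave open.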


New Terms

validity

argument

statement

Venn diagram

Problems

1. Construct a valid argument on the model of (1) in which the first premise is false and the

second true. Then construct one in which the second premise is false and the first true.

*2. Draw Venn diagrams consistent with the following statements:

a. All those who bark are dogs.

b. Only dogs bark.

How do these diagrams differ?

*3. Is the following argument valid? Explain why or why not, using Venn diagrams to support

your answer.

a. Only dogs sing soprano.

b. Fido sings soprano.

c. Fido is a dog.

*4. Is the following argument valid? Explain why or why not, using Venn diagrams to support

your answer.

a. Only dogs sing soprano.

b. Fido is a dog.

c. Fido sings soprano.

5. Is the following argument valid? Explain why or why not, using Venn diagrams to support

your answer.

a. No cat barks.

b. Puff does not bark.

c. Puff is a cat.

6. Is the following argument valid? Explain why or why not, using Venn diagrams to support

your answer.

a. No dog sings soprano.

b. Fido is a dog.

c. Fido does not sing soprano.

7. The following argument is valid. Explain why, using Venn diagrams to support your answer.

a. Every politician is a crook.

b. Leo is not a crook.

c. Leo is not a politician.

8. The following argument is invalid. Explain why, using Venn diagrams to support your

answer.

a. Every politician is a crook.

b. Leo is not a politician.

c. Leo is not a crook.

*9. Here are two arguments:

Argument 1

a. Everything that is human and has a heartbeat is a person.

b. The fetus is human and has a heartbeat.

c. The fetus is a person.

Argument 2

a. Only something viable outside the womb is a person.

b. The fetus is not viable outside the womb.

c. The fetus is not a person.

There are four possibilities:

1. Both arguments are valid.

2. Both arguments are invalid.

3. Argument 1 is valid but not Argument 2.

4. Argument 2 is valid but not Argument 1.

Which of the foregoing is correct, and why?

10. Show the invalidity of argument (7) from this chapter. In doing so you might find it helpful

to consider the following equally invalid argument:

a. All state capitals are seats of government. (true)

b. Minneapolis, Minnesota is a seat of government. (true*)

c. Minneapolis, Minnesota is a state capital. (false)

* Minneapolis is the seat of Hennepin County.

*11. Is the following argument valid or invalid? Explain your answer.

a. All dogs are canine.

b. Some dogs are canine.

*12. Determine for each of the following whether it is valid or invalid, supporting your answer

by the use of Venn diagrams.

I. a. No dogs are felines.

b. Some mammals are dogs.

c. Some mammals are not felines.

II. a. All dogs are canines.

b. Some mammals are dogs.

c. Some mammals are canines.

III. a. All dogs are canines.

b. Some mammals are not dogs.

c. Some mammals are not canines.

13. Determine for each of the following whether it is valid or invalid, supporting your answer

by the use of Venn diagrams.

I. a. No dog is feline.

b. All poodles are dogs.

c. No poodle is feline.

II. a. All dogs are canines.

b. Some mammals are not canines.

c. Some mammals are not dogs.

3

Logic and Language

Reasoning has both a private and a public side. Insofar as it is a mental process, it is private,

something that only the person engaging in it has access to. And although we have no way of

knowing exactly what is going on inside the head of another person, we do have a device at our

disposal by which one person can become aware of at least some aspects of the mental states of

another. This device is called language.

The importance of language for logic is that language is the vehicle by which we convey to

others the elements of our reasoning. An argument consists of statements, and statements are

linguistic objects. For this reason the study of logic can’t be separated from consideration of

certain facts about language.

Languages like English, French and Chinese are what are called NATURAL languages. They

are the ones that we use in the usual conduct of our daily lives and that we learn in the course of

the normal socialization process. But there are ‘non-natural’ languages as well — such as the

ones that we use to program computers. The practice has also developed among logicians of

using a special language that is particularly adapted to their purposes. Learning logic consists in

part of learning how to use this language, so we shall say a few words about it early on.

Let’s begin with an obvious question. Why make up a whole new language just for the

purpose of studying logic? What’s wrong with continuing to use a natural language like English?

The answer is that there’s nothing wrong IN PRINCIPLE with using natural language but for

many of the logician’s purposes there are a lot of difficulties IN PRACTICE with doing so. Let’s

consider just a couple of them.

As we saw in the preceding chapter, what makes an argument valid is a matter of

procedure. Depending on what language the argument is constructed in, the exact way in which


you implement this procedure will have to be tailored to the particular language you choose. Not

only is the vocabulary different, but so is the grammatical structure. At a certain level, however,

the same principles apply regardless of what language you’re dealing with. So the choice was

made to create a new language, a sort of Esperanto, that would be common to all logicians

regardless of the natural language that they speak natively and that would make it possible to give

just one formulation of the procedures.

To see the second difficulty, consider first the following simple argument, with only one

premise.

(1)

a. Pat and Sandy left early.

b. Pat left early.

It’s easy to see that this argument is valid. Now consider another, closely similar, case:

(2)

a. Pat and Sandy or Lou left early.

b. Pat left early.

Is this a valid argument? It turns out that there’s no way to tell. The reason is that the premise,

(2a), is AMBIGUOUS — can be interpreted in more than one way. One possible way of interpreting

this statement is as saying that two people left early: Pat and another person who is either Sandy

or Lou, though we don’t know which. If we assume this interpretation, then if (2a) is true so is

(2b) and the argument is valid. But this is not the only way to interpret (2a), which could just as

well be understood as saying that there are two possibilities as to who left early: Pat and Sandy

on the one hand, Lou on the other. If the sentence is interpreted this way, then the argument is

not valid, for the premise gives us no way of knowing which of the two possibilities actually

occurred.
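The two readings of (2a) can be written out with explicit grouping, and each can then be tested for validity by checking every combination of who did and did not leave early. In the Python sketch below, P, S and L are hypothetical names for ‘Pat left early’, ‘Sandy left early’ and ‘Lou left early’, or is taken in the inclusive sense (at least one), and the parentheses make the grouping explicit.

```python
from itertools import product


def valid(premise, conclusion):
    """Valid: no assignment of truth values makes the premise true and the conclusion false."""
    return all(
        conclusion(P, S, L) or not premise(P, S, L)
        for P, S, L in product([True, False], repeat=3)
    )


def reading_1(P, S, L):
    # Pat left early, and so did Sandy or Lou.
    return P and (S or L)


def reading_2(P, S, L):
    # Either Pat and Sandy left early, or Lou did.
    return (P and S) or L


def pat_left(P, S, L):
    # Conclusion (2b)
    return P


print(valid(reading_1, pat_left))  # True
print(valid(reading_2, pat_left))  # False (e.g. only Lou left early)
```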

To a degree we can get around this difficulty — in writing, at any rate — by care in the use

of punctuation. So, for example, we could perhaps use commas to indicate which of the two

senses of (2a) we intend:

Pat, and Sandy or Lou, left early. (first interpretation)

Pat and Sandy, or Lou, left early. (second interpretation)


(I say ‘perhaps’ here because the punctuation intended to force the second interpretation seems

rather unnatural.) Unfortunately, there are cases where this option is not open to us. So consider

the argument

(3)

a. The older men and women left early.

b. The women left early.

Here again we have an ambiguous premise. One possible interpretation is that the older men and

ALL of the women left early, in which case (3b) is true. But there is another interpretation on

which it is the older men and also the older women who left early, in which case (3b) need not be

true since the premise tells us nothing about any of the other women. But there is no obvious

way to indicate the intended interpretation of (3a) by using punctuation.

Now, it needs to be pointed out that this situation presents us only with a complication, not

with an insuperable difficulty. For we could take examples like (2) and (3) as telling us that

arguments aren’t valid or invalid in any absolute sense, but valid or invalid relative to specific

interpretations assumed for the statements of which they consist. So, for example, we could say

that (3) is valid relative to the interpretation of (3a) according to which all the women left but not

relative to the other interpretation. But it would be simpler to just set things up so that we never

get ambiguity in the first place, and the special language used by logicians is set up to enable us

to do just that.

The device that we will use for avoiding ambiguities of the kind just illustrated is familiar

from another context, namely ordinary arithmetic — where problems of the same kind arise. For

example, suppose you are asked to compute the value of

3 + 5 × 9

Notice that there are two ways in which you could proceed. One is to first add 3 and 5 and then

multiply the result by 9, as shown in the diagram below.


Fig. 3.1

Suppose on the other hand that your first step is to multiply 5 by 9 and then to add 3 to the

result: in this case, your answer is different:

Fig. 3.2

Given the original expression, how are you to know in which order to carry out the addition and

multiplication operations? The answer is that you don’t know — the expression as given doesn’t

tell you. To pin it down precisely, you must use parentheses: if you write

(3 + 5) × 9

then this indicates that the operations are to be performed in the sequence shown in Fig. 3.1. If

you want the other sequence of operations, you must write

3 + (5 × 9)
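The two orders of operation can be made explicit as small trees, much like Figs. 3.1 and 3.2. In the Python sketch below, the tuple encoding and the function name evaluate are illustrative choices rather than any standard notation; each tree is either a number or a triple (operator, left, right).

```python
# The two groupings of 3 + 5 × 9 as parse trees.
add_first = ("×", ("+", 3, 5), 9)       # (3 + 5) × 9, as in Fig. 3.1
multiply_first = ("+", 3, ("×", 5, 9))  # 3 + (5 × 9), as in Fig. 3.2


def evaluate(tree):
    """Evaluate a tree bottom-up: compute both subtrees, then apply the operator."""
    if isinstance(tree, int):
        return tree
    op, left, right = tree
    a, b = evaluate(left), evaluate(right)
    return a + b if op == "+" else a * b


print(evaluate(add_first))       # 72
print(evaluate(multiply_first))  # 48

# Python does not leave the question open: it builds in a convention
# (multiplication before addition), so the unparenthesized form gives 48.
print(3 + 5 * 9)                 # 48
```

The two trees contain the same numbers and operators; only the grouping differs, and the grouping alone decides the answer.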

There is yet another reason for the adoption of an artificial language, and it has to do with the

notion of validity. Recall that whether an argument is valid or invalid depends not on the truth of

either the premises or of the conclusion but on whether the premises (true or not) entail the

conclusion (true or not). Another way of saying the same thing is that the CONTENT of the

statements of which an argument is composed is irrelevant — we care only about the FORM of the

various statements, and of the argument as a whole. Here is a simple illustration. Both of the

following arguments are valid:

(4) a. Pat left early.

b. Lou left early.

c. Pat left early and Lou left early.


(5) a. Roses are red.

b. Violets are blue.

c. Roses are red and violets are blue.

In fact, you can always construct a valid argument by taking any two statements you want as

premises and then stringing them together with the word and to form the conclusion. Here is a

somewhat different way of saying the same thing. Let S and T be statements. Then a valid

argument can always be formed by arranging them as shown below.

a. S

b. T

c. S and T
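The point that this argument is valid purely in virtue of its form can be illustrated by a check that never looks at what S and T say, only at their possible truth values. The Python function below, named is_valid purely for this illustration, tries every combination of truth values.

```python
from itertools import product


def is_valid(premises, conclusion, n_letters):
    """Check every assignment of truth values to n_letters statement letters:
    the argument is valid if none makes all premises true and the conclusion false."""
    for values in product([True, False], repeat=n_letters):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False
    return True


# a. S   b. T   c. S and T
print(is_valid([lambda S, T: S, lambda S, T: T], lambda S, T: S and T, 2))  # True

# For contrast, the conclusion "S and T" does not follow from S alone.
print(is_valid([lambda S, T: S], lambda S, T: S and T, 2))  # False
```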

In our artificially constructed language we will strip away all the nonessentials pertaining to the

content of statements, which means that in general we will not have any way of knowing

whether they are true or not. But that doesn’t matter as long as we have a way of knowing

when arguments in which these statements appear are valid or invalid.

The study of logic via the medium of artificially constructed languages is commonly termed

SYMBOLIC LOGIC, though for reasons which will become clear later on, a better term would be

SCHEMATIC LOGIC (or, perhaps, ABSTRACT LOGIC). The particular languages that we will be

introducing here are slight variants of the ones created for the same purposes by Alfred North

Whitehead and Bertrand Russell in their celebrated work Principia Mathematica (1910-1913). It

is perhaps also worth noting that there is now a computer language called Prolog which has wide

currency in the artificial intelligence research community and which very closely resembles the

second of the two languages that we will introduce in this course.

New Terms

natural language

ambiguity

symbolic logic


Problems

1. Suppose that I wish to prove to you that my attorney is also a dry cleaner. I reason as follows:

All attorneys press suits and everyone who presses suits is a dry cleaner. Therefore my

attorney is a dry cleaner. What (if anything) is wrong with this argument?

*2. The following English sentences are ambiguous. Describe the ambiguity in each case.

a. I believe you and Pat broke the window.

b. The woman who knows Pat believes Lou saw Sandy.

3. Here are two statements (slightly rewritten) that actually appeared in the news media:

a. The daughter of Queen Elizabeth and her horse finished third in the competition.

b. A convicted bank robber was recently sentenced to twenty years in Iowa.

Both of these sentences are ambiguous. Describe the ambiguity in each case.

4. The sentence All the people didn’t leave is ambiguous. Give paraphrases which indicate the

two meanings.

5. Give paraphrases that will show the two meanings of the sentence The logic final won’t be

difficult because the students are so brilliant.

6. Give paraphrases that show the two meanings of each of the following ambiguous sentences:

a. Mary is a friend of John and my sister.

b. Mary is a friend of John’s boss.

c. University regulations permit faculty only in the bar.

Part II

Sentential Logic

4

Negation and Conjunction

In Chapter 2, the validity or invalidity of the arguments presented always depended on words

like all, some and no. These words are called QUANTIFIERS and the associated principles of logical

inference make up a branch of the subject called (appropriately enough) QUANTIFICATIONAL

LOGIC. By contrast, the arguments (4) and (5) from Chapter 3 are examples of a type of argument

in which the conclusion consists of the two premises connected by the word and. The validity of

such an argument doesn’t depend on anything inside the two sentences (such as the presence of a

particular quantifier), but only on the two sentences TAKEN AS WHOLES being combined in a

certain way to form the conclusion. The branch of logic that deals with such arguments is called

SENTENTIAL LOGIC (sometimes PROPOSITIONAL LOGIC or STATEMENT LOGIC), which we shall

refer to by the abbreviation SL. In this chapter we begin introducing the special language which

will be used for SL and to which we shall give the name LSL — short for ‘language for

sentential logic’. We will return to quantificational logic in Part III.

There are two kinds of symbols in LSL: letters of the alphabet, such as S and T — called

LITERALS — and additional symbols, which we will introduce gradually, called SIGNS. Literals

represent statements whose specific content and internal structure we choose to ignore, while

combining literals with signs enables us to create larger statements out of smaller ones.*

Statements in this language are commonly referred to as FORMULAS. A formula consisting of just

a literal by itself is said to be SIMPLE; all other formulas are COMPOUND.

* In principle it is necessary to make provision for an infinite number of literals. We do this by allowing not only each letter of the alphabet to count as a literal but also any sequence of repeated letters. Thus not only is S a literal of LSL, so are SS, SSS, SSSS and so on.

The truth or falsity of a statement is called its TRUTH VALUE. If a statement is true, it is said to

have a truth value of 1, and if false to have a truth value of 0.

Pick a formula at random, say S. Then we can create a new (compound) formula in our

language by prefixing the symbol ¬ to it to form ¬ S. The symbol ¬ is called the NEGATION SIGN

or NEGATION OPERATOR and the formula ¬ S is called a NEGATION of S. The following rule tells us

how to interpret the negation sign:

a. If S is true (has a truth value of 1) then ¬ S is false (has a truth value of 0).

b. If S is false (has a truth value of 0) then ¬ S is true (has a truth value of 1).

English-speaking logicians often read the negation sign, for convenience, as the English word

not and ¬ S as ‘Not S’. Sometimes they will use the more prolix ‘It is not the case that S’.

Notice that our rule for forming negations says that we can do so by prefixing ¬ to any

formula whatsoever. Thus ¬¬S is also a formula/statement, as are ¬¬¬S, ¬¬¬¬S and so on. Nor

does the choice of literal matter: so ¬T, ¬¬T, ¬¬¬T and so on are also all statements in LSL.

Formulas containing large numbers of negation signs can be simplified by means of the

following rule: two successive occurrences of ¬ ‘cancel out’; for example, ¬¬S and S have the

same truth value. For suppose S is true; then ¬S is false and ¬¬S is accordingly true. Similarly, if

S is false, then ¬S is true and ¬¬S is false. So we can always replace ¬¬S by S (or vice-versa)

knowing that we have not changed anything crucial. We henceforth refer to this as the

CANCELLATION RULE (or CR).
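The Cancellation Rule can be checked mechanically. It also suggests a simple way of tidying up a run of negation signs: each adjacent pair cancels, so only whether the number of signs is odd or even matters. The sketch below is in Python. `cancel_negations` is a hypothetical helper of ours, and it assumes the formula is written with no spaces between its leading ¬ signs.

```python
def neg(value: int) -> int:
    """Truth value of ¬S, given the truth value of S (0 or 1)."""
    return 1 - value


def cancel_negations(formula: str) -> str:
    """Apply the Cancellation Rule to a leading run of ¬ signs.

    Adjacent pairs of ¬ cancel, so only the parity of the run matters:
    ¬¬¬S becomes ¬S and ¬¬¬¬T becomes T.
    """
    stripped = formula.lstrip("¬")            # drop every leading ¬
    count = len(formula) - len(stripped)      # how many were dropped
    return ("¬" if count % 2 else "") + stripped


# ¬¬S has the same truth value as S whether S is true or false.
for s in (0, 1):
    assert neg(neg(s)) == s

print(cancel_negations("¬¬¬S"))
print(cancel_negations("¬¬¬¬T"))
```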

Now pick two formulas, say S and T. Then we can create a new formula by connecting S and T via the sign ∧: S ∧ T. This compound formula is called a CONJUNCTION of S with T and is interpreted according to the following rule:

a. If S is false, S ∧ T is false;

b. if T is false, then S ∧ T is false;

c. otherwise, S ∧ T is true.

In other words, the conjunction of two statements is true if, BUT ONLY IF, both of the conjoined

statements (or CONJUNCTS, as they are commonly called) are true. Or, to put the same thing a

different way, a conjunction is false if, but only if, at least one of the conjuncts is false. That is, to say that S ∧ T is false is to say that:

a. S alone is false; or

b. T alone is false; or

c. both are false.


It accordingly follows that the conjunction of a false statement with any other statement is

always false.
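The three clauses of the conjunction rule can likewise be written as a function. The sketch below uses the hypothetical name `conj` and the 0/1 representation of truth values. It also checks that the two ways of stating the rule given above agree on all four combinations of values for S and T.

```python
from itertools import product


def conj(s: int, t: int) -> int:
    """Truth value of S ∧ T, following clauses a-c of the rule."""
    if s == 0:      # clause a: S is false
        return 0
    if t == 0:      # clause b: T is false
        return 0
    return 1        # clause c: otherwise


for s, t in product((0, 1), repeat=2):
    # "true if, but only if, both conjuncts are true"
    assert (conj(s, t) == 1) == (s == 1 and t == 1)
    # "false if, but only if, at least one conjunct is false"
    assert (conj(s, t) == 0) == (s == 0 or t == 0)
    print(f"S = {s}, T = {t}  ->  S ∧ T = {conj(s, t)}")
```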

The sign ∧ is called, not surprisingly, the CONJUNCTION SIGN (also, because of its shape, the INVERTED WEDGE). English-speaking logicians often read it, again for convenience, as ‘and’. The reason is that in English, when two statements are combined by ‘and’, as in

(1) Ms. Smith owns a Newtown Pippin computer and Mr. Jones owns one too.

the entire statement is true if, and only if, both of the statements joined by ‘and’ (‘Ms. Smith owns a Newtown Pippin computer’ and ‘Mr. Jones owns one too’) are true.

Deciding what truth value to associate with a literal is completely arbitrary. Once you have

made a decision, however, then certain other things follow. Suppose, for example, that we have

decided to associate the value 1 with the literal S and 0 with the literal T. Then the compound

formula S ∧ ¬T has the truth value 1. This is so because our rule for interpreting the negation sign tells us that if T is false then ¬T is true (which means that S ∧ ¬T is a conjunction of true statements), while our rule for interpreting the conjunction sign tells us that a conjunction of true statements is itself true. Diagrammatically:

Fig. 4.1

As in the examples involving arithmetic from Chapter 3, the diagram shows the order in which

we carry out the computations, except that now we’re computing the truth values of compound

statements based on the previously assumed truth values of their literals. The first step is to

compute the value of ¬ T, which is 1 since T has a truth value of 0. We then compute the value

of the entire statement, which is also 1 since two true statements are linked by the conjunction

sign.
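The computation diagrammed in Fig. 4.1 can be replayed step by step. This short sketch assumes the same assignment as the text: S has the value 1 and T has the value 0. The names `neg` and `conj` are illustrative helpers.

```python
def neg(value: int) -> int:
    """Truth value of a negation, given that of the negated formula."""
    return 1 - value


def conj(left: int, right: int) -> int:
    """Truth value of a conjunction: 1 only if both conjuncts are 1."""
    return 1 if left == 1 and right == 1 else 0


values = {"S": 1, "T": 0}            # the assignment assumed in the text

step1 = neg(values["T"])             # first step: ¬T, which is 1 since T is 0
step2 = conj(values["S"], step1)     # second step: S ∧ ¬T, both conjuncts 1
print("¬T =", step1)
print("S ∧ ¬T =", step2)
```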

In the example just given, there is no problem about determining the order in which the computations are to be performed. But now consider a formula like ¬S ∧ T. There are two possibilities: either first evaluate ¬S and then compute the value of the entire formula, or first compute the value of S ∧ T and then determine the effect of applying the negation sign to it. Which order we choose can make a difference. Suppose, for example, that S and T are both false. The results of the two different orders of evaluation are shown below.

Fig. 4.2

Fig. 4.3

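Note that the final truth values differ in the two cases. Evaluating ¬S first (Fig. 4.2) gives ¬S the value 1, and its conjunction with the false T has the value 0. Evaluating S ∧ T first (Fig. 4.3) gives that conjunction the value 0, and its negation has the value 1.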

We resolve this ambiguity by adopting the following convention: if the negation sign directly precedes a literal, then it applies ONLY to that literal. If we intend a negation sign to apply to a larger ‘chunk’ of material, we enclose the chunk in parentheses, as in ¬(S ∧ T). Hence, leaving our example formula without parentheses tells us to evaluate it in the manner shown in Fig. 4.2. Placing parentheses around the conjunction tells us to evaluate the conjunction first and then its negation, as shown in Fig. 4.3. In a more complex case, like ¬(S ∧ T) ∧ U, ¬ still applies only to S ∧ T. In other words, ¬ always applies to the SHORTEST applicable part of a formula.
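The scope convention can be made precise with a small evaluator that reads a formula from left to right. The Python sketch below is one way of doing this and is not a procedure given in the text. It assumes that literals are runs of a single repeated letter, as in the footnote on literals. It gives ¬ as its scope only the next literal, negated unit, or parenthesized formula. It evaluates unparenthesized chains of ∧ from left to right. The names `tokenize` and `evaluate` are hypothetical.

```python
def tokenize(formula: str) -> list[str]:
    """Split an LSL formula into literals, ¬, ∧ and parentheses."""
    tokens, i = [], 0
    while i < len(formula):
        ch = formula[i]
        if ch.isspace():
            i += 1
        elif ch in "¬∧()":
            tokens.append(ch)
            i += 1
        elif ch.isalpha():
            # A literal is one letter or a run of that same letter (S, SS, ...).
            j = i
            while j < len(formula) and formula[j] == ch:
                j += 1
            tokens.append(formula[i:j])
            i = j
        else:
            raise ValueError(f"unexpected character {ch!r}")
    return tokens


def evaluate(formula: str, values: dict[str, int]) -> int:
    """Truth value of an LSL formula under an assignment to its literals."""
    tokens = tokenize(formula)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def unit() -> int:
        # ¬ takes only the SHORTEST unit to its right as its scope:
        # a literal, another negated unit, or a parenthesized formula.
        nonlocal pos
        tok = peek()
        if tok == "¬":
            pos += 1
            return 1 - unit()
        if tok == "(":
            pos += 1
            value = conjunction()
            if peek() != ")":
                raise ValueError("missing ')'")
            pos += 1
            return value
        if tok is None or tok in ("∧", ")"):
            raise ValueError("expected a literal, ¬ or '('")
        pos += 1
        return values[tok]

    def conjunction() -> int:
        # Units joined by ∧, combined left to right: 1 only if every unit is 1.
        nonlocal pos
        value = unit()
        while peek() == "∧":
            pos += 1
            right = unit()
            value = 1 if value == 1 and right == 1 else 0
        return value

    result = conjunction()
    if pos != len(tokens):
        raise ValueError("unexpected material at end of formula")
    return result


both_false = {"S": 0, "T": 0}
print(evaluate("¬S ∧ T", both_false))     # order of Fig. 4.2
print(evaluate("¬(S ∧ T)", both_false))   # order of Fig. 4.3
```

With S and T both false, the first call returns 0 and the second returns 1, matching the two orders of evaluation. Reading ∧-chains from left to right is harmless only because conjunction is associative.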


The portion of a formula to which a given sign is intended to apply is called the SCOPE of the sign. In the formula ¬S ∧ T, the scope of the negation sign is the literal S, and the scope of the conjunction sign consists of ¬S on the left and T on the right. In the parenthesized version of the formula, ¬(S ∧ T), the scope of the conjunction sign consists of S on the left and T on the right, and that of the negation sign consists of the compound formula S ∧ T.

In some cases, parenthesization does not matter and can accordingly be omitted. For example, no matter how we parenthesize the formula S ∧ T ∧ U, we will always get the same result. This is so because, according to our rule for interpreting conjunctions, this formula is true if, but only if, all three literals are true. It thus doesn’t matter whether we start by computing the value of S ∧ T and then that of the entire formula, or whether we first compute the value of T ∧ U and then that of the entire formula. This fact about conjunction is called the LAW OF ASSOCIATIVITY (or the ASSOCIATIVE LAW) for conjunction.
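The Law of Associativity can be confirmed by brute force, because three literals admit only 2 × 2 × 2 = 8 combinations of truth values. In this sketch, `conj` is again an illustrative helper.

```python
from itertools import product


def conj(left: int, right: int) -> int:
    """Truth value of a conjunction: 1 only if both conjuncts are 1."""
    return 1 if left == 1 and right == 1 else 0


# Compare (S ∧ T) ∧ U with S ∧ (T ∧ U) under all 8 assignments.
for s, t, u in product((0, 1), repeat=3):
    left_first = conj(conj(s, t), u)
    right_first = conj(s, conj(t, u))
    assert left_first == right_first
    # Both groupings are true exactly when all three literals are true.
    assert left_first == (1 if s == t == u == 1 else 0)

print("(S ∧ T) ∧ U and S ∧ (T ∧ U) agree on all 8 assignments")
```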

Warning. Although there are cases where parenthesization does not matter, it is clear that there are many cases in which it does. Consequently, YOU MAY OMIT PARENTHESES ONLY IN CASES WHERE YOU HAVE BEEN EXPLICITLY TOLD THAT IT DOES NOT MATTER, AS IN THE CASE ABOVE. And if you are ever in doubt as to whether it matters or not, ALWAYS ASSUME THAT IT DOES. You can’t go wrong by putting parentheses in, but you CAN go wrong by leaving them out.

Although the truth value of a compound formula depends on the truth values assumed for its

literals, we noted earlier that truth values are assigned to literals in a completely arbitrary

fashion. That is, we may choose whatever truth value we wish for a given literal, subject only to

the restriction that if there are multiple occurrences of that literal in a formula then the literal has

the SAME truth value in ALL occurrences. So, for example, in the formula ¬(S ∧ T) ∧ ¬S, we are

free to select 0 or 1 as the truth value for S, although having selected one or the other we are

required to assume that this value applies in both places where the literal occurs. On the other

hand, the choice we make for T is completely independent of the choice we make for S: we

could, for example, assume S to be true and T false or vice-versa. There are, in fact, four

possibilities:

both S and T are false

S is true and T is false

S is false and T is true

both S and T are true


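What we do NOT allow is the assumption, say, that T is false and that S is true in its first occurrence in the formula but false in its second. So, for example, if we assume that S is true and T is false, then the computation of the truth value for the entire formula must proceed as follows. S ∧ T has the value 0, so ¬(S ∧ T) has the value 1. ¬S has the value 0, since S is true in both of its occurrences. The entire formula, a conjunction with a false conjunct, therefore has the value 0.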
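Because a literal must have the same truth value at every occurrence, an assignment can be modeled as a single table that maps each literal to one value, so every occurrence of S reads the same entry. The sketch below evaluates ¬(S ∧ T) ∧ ¬S under each of the four possibilities listed above. The helper names `neg`, `conj` and `formula` are hypothetical.

```python
from itertools import product


def neg(value: int) -> int:
    return 1 - value


def conj(left: int, right: int) -> int:
    return 1 if left == 1 and right == 1 else 0


def formula(v: dict[str, int]) -> int:
    """¬(S ∧ T) ∧ ¬S; both occurrences of S read the same entry v["S"]."""
    return conj(neg(conj(v["S"], v["T"])), neg(v["S"]))


# One value per literal per assignment: exactly the 2 x 2 combinations.
for s, t in product((0, 1), repeat=2):
    v = {"S": s, "T": t}
    print(f"S = {s}, T = {t}  ->  ¬(S ∧ T) ∧ ¬S = {formula(v)}")
```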

We can now describe some simple procedures for constructing valid arguments involving negations and conjunctions. Example: given S ∧ T as a premise, we can validly infer the conclusion S. For if S ∧ T is true, then neither S nor T is false; hence S must be true. In the next chapter we will look in more detail at arguments in LSL in which negation and conjunction are involved.
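The reasoning behind this inference can also be checked by going through every possibility. This sketch assumes, as a working test, that an argument of this kind is valid when no combination of truth values makes its premise true and its conclusion false. The helper `counterexamples` is hypothetical. For contrast, the sketch also tries the reverse inference, from S to S ∧ T, which fails when S is true and T is false.

```python
from itertools import product


def conj(left: int, right: int) -> int:
    return 1 if left == 1 and right == 1 else 0


def counterexamples(premise, conclusion):
    """Assignments (S, T) that make the premise true and the conclusion false."""
    return [
        (s, t)
        for s, t in product((0, 1), repeat=2)
        if premise(s, t) == 1 and conclusion(s, t) == 0
    ]


# From S ∧ T infer S: no counterexample, so the inference passes the test.
print(counterexamples(lambda s, t: conj(s, t), lambda s, t: s))
# From S infer S ∧ T: fails when S is true and T is false.
print(counterexamples(lambda s, t: s, lambda s, t: conj(s, t)))
```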

New Terms

literal

sign

formula

simple

compound


truth value


negation

conjunction

conjunct

scope

associativity

Problems

*1. Suppose that S and T are false and U is true. Compute the truth values for ¬(S ∧ T) ∧ U and for ¬S ∧ T ∧ U. Show the steps in the computations via diagrams like the ones used in the chapter.

2. Suppose that S is false and T and U are both true. Compute the truth values for each of the

following via diagrams like the ones used in the chapter.

a. ¬(S ∧ T) ∧ U

b. ¬S ∧ T ∧ U

c. S ∧ T ∧ ¬U

d. ¬S ∧ ¬T ∧ ¬U

e. ¬(S ∧ T ∧ U)

*3. For each of the formulas in the previous problem, find a combination of truth values for S, T and U that gives the formula as a whole the opposite of the truth value it has under the values assumed there.

4. Find a combination of truth values for S, T and U such that DIFFERENT truth values are

obtained for S ∧ ¬T ∧ U and for S ∧ ¬(T ∧ U). Then find a combination of truth values such

that the two formulas have the SAME truth value. Explain your answer, making use of

diagrams to show the steps in the various computations involved.


5. In the early years of the twentieth century, a group of Polish mathematicians developed an alternative symbolism for negation and conjunction that allows parentheses to be dispensed with. In this ‘Polish notation’, as it is commonly called, negations are formed the same way as in LSL, but conjunctions are formed differently: instead of writing S ∧ T, in Polish notation we would write ∧ST. The understanding is that the negation sign always takes THE SMALLEST COMPLETE FORMULA TO ITS IMMEDIATE RIGHT as its scope, while the conjunction sign takes the FIRST TWO SUCH FORMULAS TO ITS RIGHT as its scope. The differences that are represented in LSL by parenthesization are represented in Polish notation by the positions in which signs appear. Thus ¬∧ST corresponds to ¬(S ∧ T), while ∧¬ST corresponds to ¬S ∧ T.

Here now are some examples of how to translate between LSL and Polish notation. Suppose

first that we are given the LSL formula

S ∧ (¬T ∧ U)

The best way to do the translation is in a series of steps, beginning with the original and passing through some intermediate stages that mix the two systems. The original is the conjunction of S with ¬T ∧ U, so we start by pulling out the first conjunction sign:

∧S[¬T ∧ U]