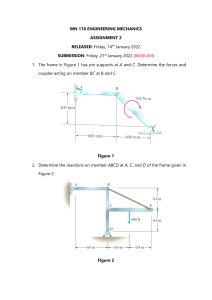

Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Statistical Learning Table of Contents 1 Introduction ............................................................................................................... 3 1.1 2 3 Review of Probability ................................................................................................. 3 2.1 Random Variables and Probability Distributions ............................................................. 3 2.2 Expected Values, Mean and Variance ............................................................................. 4 2.3 Two Random Variables .................................................................................................. 5 2.4 Different Types of Distributions ..................................................................................... 6 2.5 Random Sampling, Distribution of 𝒀 and Estimation ....................................................... 6 Review of Statistics .................................................................................................... 7 3.1 3.1.1 3.2 3.2.1 3.3 4 5 Hypothesis Tests ............................................................................................................ 7 Concerning the Population Mean ..................................................................................................... 7 Confidence Intervals ...................................................................................................... 9 Concerning the population mean ...................................................................................................... 9 Scatterplots, the Sample Covariance and the Sample Correlation .................................... 9 Linear Regression with One Regressor ...................................................................... 10 4.1 Linear Regression Model with a Single Regressor (population) ...................................... 10 4.2 Estimating the Coefficients of the Linear Regression Model .......................................... 10 4.3 Measures of Fit and Prediction Accuracy ...................................................................... 11 4.4 The Least Squares Assumptions (hypotheses) ............................................................... 11 4.5 The Sampling Distribution of the OLS Estimators .......................................................... 12 Regression with a Single Regressor: Hypothesis Tests and Confidence Intervals ........ 14 5.1 Testing Hypotheses About One of the Regression Coefficients ...................................... 14 5.2 Confidence Intervals for a Regression Coefficient ......................................................... 15 5.3 Regression When X Is a Binary Variable ........................................................................ 15 5.4 Heteroskedasticity and Homoskedasticity .................................................................... 15 5.4.1 6 Types of Data ................................................................................................................ 3 Mathematical Implications of Homoskedasticity ............................................................................ 16 5.5 The Theoretical Foundations of OLS ............................................................................. 16 5.6 Using the t-Statistic in Regression When the Sample Size Is Small ................................. 17 5.7 Summary and Assessment ........................................................................................... 18 Introduction to Data Mining ..................................................................................... 18 6.1 Data Mining - Concepts ................................................................................................ 18 6.2 Classification - Basic Concepts ...................................................................................... 20 6.3 Rule-Based Classifier: basic concepts ............................................................................ 21 1 Corentin Cossettini Semestre de printemps 2022 6.4 6.4.1 6.4.2 6.4.3 6.4.4 6.4.5 6.4.6 6.5 6.5.1 6.5.2 6.5.3 6.5.4 6.5.5 6.5.6 6.5.7 6.5.8 6.6 6.6.1 6.6.2 6.6.3 6.6.4 6.6.5 6.6.6 6.6.7 6.7 6.7.1 6.7.2 6.7.3 6.7.4 Université de Neuchâtel Bachelor en sciences économiques 2ème année Nearest Neighbors Classifier ........................................................................................ 24 Instance Based Classifiers................................................................................................................ 24 Basic Idea and concept .................................................................................................................... 24 Nearest Neighbor Classification ...................................................................................................... 24 Precisions ........................................................................................................................................ 25 Example: PEBLS (MVDM)................................................................................................................. 25 Proximity measures ......................................................................................................................... 25 Naïve Bayesian Classifier .............................................................................................. 26 Bayes Classifier ................................................................................................................................ 26 Example of Bayes Theorem ............................................................................................................. 27 Bayesian Classifiers ......................................................................................................................... 27 Naïve Bayes Classifier ...................................................................................................................... 27 Estimate Probabilities from Data .................................................................................................... 27 Examples of Naïve Bayes Classifier.................................................................................................. 28 Solution to the problem of a 𝒗𝒂𝒍𝒖𝒆 𝒐𝒇 𝒂𝒏 𝒂𝒕𝒕𝒓𝒊𝒃𝒖𝒕𝒆 = 𝟎 ......................................................... 28 Summary ......................................................................................................................................... 28 Decision Tree Classifier ................................................................................................ 29 Example ........................................................................................................................................... 29 Advantages of a Decision Tree Based Classification ........................................................................ 29 General Process to create a model ................................................................................................. 29 Hunt’s Algorithm: General Structure............................................................................................... 29 Tree Induction ................................................................................................................................. 30 Measures of impurity ...................................................................................................................... 31 Practical Issues of Classification ...................................................................................................... 33 Model Evaluation ........................................................................................................ 36 Metrics for Performance Evaluation ............................................................................................... 36 Methods for Performance Evaluation ............................................................................................. 38 Test of Significance (confidence intervals) ...................................................................................... 40 Comparing performance of 2 Algorithms........................................................................................ 42 2 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année General informations Textbook: Introduction to Econometrics, Stock & Watson chaps 2-7 Read and understand the material before the lesson at home Sum up the slides and ad material from the book Work on the exercises/notebooks before the class and finish them in class Sessions are organized in two parts: 1. Explanations of the chapter 2. Solving the notebooks Work on and sum up the material before class textbook + theory (repetition) Part I 1 Introduction Econometrics is the science and art of using economic theory and statistical techniques to analyze economic data. 1.1 Types of Data Cross-Sectional Data Consists of multiple entities observed at a single time period Time Series Data Consists of a single entity observed at multiple time periods Panel Data Consists of multiple entities observed at two or more time periods 2 Review of Probability 2.1 Random Variables and Probability Distributions Population Will consider populations as infinitely large Collection of all possible entities of interest Population distribution of Y Probability of different values of Y that occur in the population Random variable Y Numerical summary of a random outcome 2 types: o Discrete: takes a discrete set of values (0, 1, 2, …) o Continuous: takes a continuum of possible values Probability Distribution of a Discrete Random Variable List of all possible values of the variable and the probability that each value will occur. Probability of events: probability that an event occurs, comes from the probability distribution looks like an histogram because discrete variable Cumulative probability distribution: probability that the random variable is less than or equal to a particular value Fx Bernoulli distribution: the random discrete variable is binary so the outcome is 0 or 1. Probability Distribution of a Continuous Random Variable Cumulative Probability Distribution: probability that the random variable is less than or equal to a particular value Probability Density Function: the area under the probability density function between any two points is the probability that the random variable falls between those points. 3 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 2.2 Expected Values, Mean and Variance Expected Value of a Random Variable Expected Value: denoted 𝐸(𝑌), represents the long-run average value of the random variable over many repeated trials or occurrences. For a discrete random variable, it’s computed as a weighted average of the possible outcomes of that random variable, where the weights are the probabilities of that outcome. For a Bernoulli random variable: 𝐸(𝐺) = 𝑝 Expected Value for a continuous Random Variable It’s the probability-weighted average of the possible outcomes of the random variable. Standard deviation and Variance The Variance of the discrete random variable 𝑌 is: 𝑘 𝜎𝑌2 = 𝑣𝑎𝑟(𝑌) = 𝐸[(𝑌 − 𝜇𝑌 )2 ] = ∑(𝑦𝑖 − 𝑢𝑌 )2 𝑝𝑖 𝑖=1 For a Bernoulli random variable: 𝑣𝑎𝑟(𝐺) = 𝑝(1 − 𝑝) The Standard Deviation of the discrete random variable 𝑌 is: 𝜎𝑌 = √𝜎𝑌2 Other Measures of the Shape of a Distribution Mean and sd are important measures for a distribution. Two others exist: Skewness Kurtosis 𝐸[(𝑌 − 𝜇𝑌 )3 ] 𝐸[(𝑌 − 𝜇𝑌 )4 ] 𝑆𝑘𝑒𝑤𝑛𝑒𝑠𝑠 = 𝐾𝑢𝑟𝑡𝑜𝑠𝑖𝑠 = 𝜎𝑌4 𝜎𝑌3 Measures the lack of symmetry of a Measures how thick and heavy are the tails distribution. of a distribution. Changes the weight of the observations: the smaller disappears and the bigger gets extremely big. Symmetric/normal Distribution: 𝑆𝑘𝑒𝑤𝑛𝑒𝑠𝑠 = 0 Symmetric/normal Distribution: 𝐾𝑢𝑟𝑡𝑜𝑠𝑖𝑠 = 3 Distribution w/ long right tail: 𝑆𝑘𝑒𝑤𝑛𝑒𝑠𝑠 > 0 Distribution heavytailed: 𝐾𝑢𝑟𝑡𝑜𝑠𝑖𝑠 > 3 Distribution w/ long left tail: 𝑆𝑘𝑒𝑤𝑛𝑒𝑠𝑠 < 0 𝐾𝑢𝑟𝑡𝑜𝑠𝑖𝑠 ≥ 0 The greater the kurtosis, the more likely are outliers. 4 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 2.3 Two Random Variables Joint and Marginal Distributions Random variables X and Z have a joint distribution. It’s the probability that the random variables simultaneously take on certain values. The marginal probability distribution of a random variable Y is the same as the probability distribution. Conditional distribution Conditional distribution: it’s the distribution of a random variable Y conditional on another random variable X taking on a specific value. It gives the conditional probability that Y takes on the value y when X takes the value x. Pr(𝑋=𝑥,𝑌=𝑦) Pr(𝑌 = 𝑦|𝑋 = 𝑥) = Pr(𝑋=𝑥) Conditional expectation/conditional mean It’s the mean of the conditional distribution of Y given X. It’s the expected value of Y, computed using the conditional distribution of Y given X 𝐸(𝑌|𝑋 = 𝑥) = ∑𝑘𝑖=1 𝑦𝑖 Pr(𝑌 = 𝑦|𝑋 = 𝑥) Conditional variance It’s the variance of the conditional distribution of Y given X 𝑉𝑎𝑟(𝑌|𝑋 = 𝑥) = ∑𝑘𝑖=1[𝑦𝑖 − 𝐸(𝑌|𝑋 = 𝑥)]2 Pr(𝑌 = 𝑦𝑖 |𝑋 = 𝑥) Bayes rule Says that the conditional probability of Y given X is the conditional probability of X given Y times the relative marginal probabilities of Y and X Pr(𝑋 = 𝑥 |𝑌 = 𝑦)Pr(𝑌=𝑦) Pr(𝑌 = 𝑦|𝑋 = 𝑥) = Pr(𝑋=𝑥) Independence Two random variables X and Y are independently distributed (independent) if: 1) Knowing the value of one of them provides no information about the other. 2) The conditional distribution of Y given X equals the marginal distribution of Y 3) Pr(𝑌 = 𝑦|𝑋 = 𝑥) = Pr(𝑌 = 𝑦) Covariance Measures the dependance of two random variables (how they move together) Covariance between X and Y is the expected value of 𝐸[(𝑋 − 𝜇𝑋 )(𝑌 − 𝜇𝑌 )], where 𝜇𝑋 is the mean of X and 𝜇𝑌 is the mean of Y. 𝐶𝑜𝑣(𝑋, 𝑌) = 𝐸[(𝑋 − 𝜇𝑋 )(𝑌 − 𝜇𝑌 )] = 𝜎𝑋𝑌 Measure of the linear association of X and Y: its units are (units of X)x (units of Y). 𝐶𝑜𝑣(𝑋, 𝑌) > 0 means a positive relation between X and Y (vice-versa) If X and Y are independent: 𝐶𝑜𝑣(𝑋, 𝑌) = 0 𝐶𝑜𝑣(𝑋, 𝑋) = 𝐸[(𝑋 − 𝜇𝑋 )(𝑋 − 𝜇𝑋 )] = 𝜎𝑋2 Correlation Covariance does have units and it can be a problem. Correlation solves this problem. Measure of dependance between X and Y 𝑐𝑜𝑣(𝑋,𝑌) 𝜎 𝑐𝑜𝑟𝑟(𝑋, 𝑌) = √(𝑣𝑎𝑟(𝑋)𝑣𝑎𝑟(𝑌) = 𝜎 𝑋𝑌 𝜎 𝑥 𝑌 If 𝑐𝑜𝑟𝑟(𝑋, 𝑌) = 0: X and Y are uncorrelated/independant Correlation is always between -1 and 1 5 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 2.4 Different Types of Distributions The Normal Distribution Normal Distribution with mean 𝜇 and variance 𝜎 2 : 𝑁(𝜇, 𝜎 2 ) Standard Normal Distribution: 𝑁(0,1) Standardize Y by computing 𝑍 = (𝑌 − 𝜇)/𝜎 Other types: Chi-Squared, Student t and F Distributions ̅ and Estimation 2.5 Random Sampling, Distribution of 𝒀 If 𝑌1 , … , 𝑌𝑛 are i.i.d and 𝑌̅ is the estimator of the mean of the population (𝜇𝑌 ) ̅ Sampling Distribution of 𝒀 ̅ The properties of 𝑌 are determined by its sampling distribution The individuals in the sample are drawn randomly the values of (𝑌1 , . . . , 𝑌𝑛 ) are random functions of (𝑌1 , . . . , 𝑌𝑛 ), such as 𝑌̅, are random (had a different sample been drawn, they would have taken on a different value) Sampling distribution of 𝑌̅ = the distribution of 𝑌̅ over different possible samples of size n The mean and variance of 𝑌̅ are the mean and variance of its sampling distribution: o 𝐸(𝑌̅) = 𝜇𝑌 𝜎2 o 𝑣𝑎𝑟(𝑌̅) = 𝑌 = 𝐸[𝑌̅ − 𝐸(𝑌̅)]2 𝑛 As results of these two: 𝑌̅ unbiased estimator of 𝜇𝑌 𝑣𝑎𝑟(𝑌̅) inversely proportional to 𝑛 The concept of the sampling distribution underpins all of econometrics. When 𝑛 is large: If 𝑛 is small complicated If 𝑛 is large simple As 𝑛 increases, distribution of 𝑌̅ becomes more tightly centered around 𝜇𝑌 Distribution of 𝑌̅ − 𝜇𝑌 becomes normal (CLT) Example of the sampling distribution: Mean of 𝑌̅: If 𝐸(𝑌̅) = 𝑡𝑟𝑢𝑒 = 𝜇 = 0.78 𝑌̅ is an unbiased estimator of 𝜇 If 𝑌̅ becomes close to 𝜇 when 𝑛 large: Law of Large Number 𝑌̅ is a consistent estimator of 𝜇 The Law of Large Numbers Estimator Consistent if the Pr that it falls within an interval of the true pop value tends to 1 as the sample size increases If (𝑌1 , … , 𝑌𝑛 ) are i.i.d. and 𝜎𝑌2 < ∞, then 𝑌̅ is a consistent estimator of 𝜇𝑦 , that is: Pr[|𝑌̅ − 𝜇𝑌 | < 𝜀] → 1 𝑎𝑠 𝑛 → ∞, 𝜀 is an infinitesimal change, so basically zero 𝑝 𝑌̅ → 𝜇𝑌 (= lim 𝑌̅ = 𝜇𝑦 ) 𝑛→∞ 6 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Central Limit Theorem If (𝑌1 , … , 𝑌𝑛 ) are i.i.d. and 𝜎𝑌2 < ∞, then when 𝑛 is large, the distribution of 𝑌̅ is well approximated by a normal distribution. 𝜎2 𝑌̅~𝑁 (𝜇𝑌 , 𝑌 ) 𝑛 𝑌̅−𝐸(𝑌̅) √𝑣𝑎𝑟(𝑌̅) 𝑌̅−𝜇𝑌 𝑌 /√𝑛 Standardized, 𝑌̅ = The larger the n, the better the approximation =𝜎 is approximately 𝑁(0, 1) 3 Review of Statistics Estimators and Estimates Estimator: function of a sample of data to be drawn randomly from a population. Random variable Estimate: numerical value of the estimator when it is actually computed using data from a specific sample. Nonrandom number Bias, Consistency and Efficiency 𝑌̅ is an estimator of 𝜇𝑌 Bias: for 𝑌̅ it’s 𝐸(𝑌̅) − 𝜇𝑌 the difference between them! o 𝑌̅ is unbiased 𝐸(𝑌̅) = 𝜇𝑌 Consistency: when the sample size is large, the uncertainty of 𝜇𝑌 arising from random variations in the sample is very small. 𝑝 o 𝑌̅ is consistent 𝑌̅ → 𝜇𝑌 Efficiency: if an estimator has a smaller variance than another one, he’s more efficient. o 𝑌̅ is efficient 𝑣𝑎𝑟(𝑌̅) < 𝑣𝑎𝑟(𝑌̌) ̅ If 𝑌 is unbiased, 𝑌̅ is the Best Linear Unbiased Estimator (BLUE) and the most efficient. 3.1 Hypothesis Tests Method to choose between 2 hypotheses, in an uncertain context. 3.1.1 Concerning the Population Mean Null hypothesis: hypothesis to be tested 𝐻0 : 𝐸(𝑌) = 𝜇𝑌,0 Two-sided alternative hypothesis: holds if the null hypothesis does not 𝐻1 : 𝐸(𝑌) ≠ 𝜇𝑌,0 We use the evidence to decide whether to reject 𝐻0 or failing to do so (and accept 𝐻1 ) p-value Probability of drawing a statistic at least as adverse to the null as the value actually computed with your data, assuming that the null hypothesis is true. Significance level of a test: pre-specified probability of incorrectly rejecting the null, when the null is true. Some definitions: 𝜇𝑌,0 : specific value of the population mean under 𝐻0 𝑌̅: sample average 𝐸(𝑌): population mean, unknown 𝑌̅ 𝑎𝑐𝑡 : value of the sample average actually computed in the data set at hand To compute the p-value, it is necessary to know the sampling distribution of 𝑌̅ under 𝐻0 According to the CLT, the distribution is normal when the sample size (n) is large. 𝜎2 The sampling distribution of 𝑌̅ is 𝑁 (𝜇𝑌,0 , 𝑌 ) 𝑛 7 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Calculating the p-value when 𝝈𝒀 is unknown In practice, we have to estimate the standard deviation from the sample because we don’t have it from the population. 𝜎2 𝜎2 We have: 𝑌̅~𝑁 (𝜇𝑌,0 , 𝑌 ) where 𝑌 = 𝜎𝑌̅2 𝑛 𝑛 Standard deviation of the sampling distribution of 𝑌̅: 𝜎𝑌̅ = 𝜎𝑌 /√𝑛 #built from the pop but we don’t know its variance to build the variance of the sampling distribution so we have to find an estimator. Estimator of 𝜎𝑌̅ : 𝑆𝐸(𝑌̅) = 𝜎̂𝑌̅ = 𝑠𝑌 /√𝑛 #see how 𝑠𝑌 is computed below QUESTION: we divide 2 times by N ? Sample variance and sample standard deviation 𝑛 1 2 𝑠𝑌 = ∑(𝑌𝑖 − 𝑌̅)2 𝑛−1 𝑖=1 𝑠𝑌 = √𝑠𝑌2 #dividing by n-1 makes the estimator unbiased, we lost 1 degree of freedom when estimating the mean Calculating the p-value with 𝜎𝑌̅2 estimated and n large: 𝑌̅ − 𝜇𝑌,0 𝑌̅ 𝑎𝑐𝑡 − 𝜇𝑌,0 𝑌̅ 𝑎𝑐𝑡 − 𝜇𝑌,0 𝑝 − 𝑣𝑎𝑙𝑢𝑒 ≅ 𝑃𝑟𝐻0 [| 𝑠 |) |>| |] = 2𝜙 (− | 𝑠𝑌 𝑌 𝑆𝐸(𝑌̅) √𝑛 √𝑛 The t-statistic It’s also called the standardized sample average, we use it as a test statistic to perform hypothesis tests quite often: 𝑡= 𝑌̅−𝜇𝑌,0 𝑠𝑌 √𝑛 once computed: 𝑡 𝑎𝑐𝑡 = 𝑌̅ 𝑎𝑐𝑡 −𝜇𝑌,0 𝑠𝑌 √𝑛 We can rewrite the formula for the p-value by substituting the equ° of the t-statistic: 𝑝 − 𝑣𝑎𝑙𝑢𝑒 = 𝑃𝑟𝐻0 [|𝑡| > |𝑡 𝑎𝑐𝑡 |] = 2𝜙(−|𝑡 𝑎𝑐𝑡 |) Link p-value – significance level and results The significance level is prespecified. Example: significance level = 5% Reject 𝐻0 if |𝑡| ≥ 1.96 and reject 𝐻0 if 𝑝 ≤ 0.05 A small p-value means that 𝑌̅ 𝑎𝑐𝑡 or 𝑡 𝑎𝑐𝑡 is far away from the mean under 𝐻0 and that it is very unlikely that this sample would have been drawn if 𝐻0 is true, which means if the population mean is equal to the population mean under 𝐻0 . reject 𝐻0 A big p-value means that 𝑌̅ 𝑎𝑐𝑡 or 𝑡 𝑎𝑐𝑡 is close to the mean under 𝐻0 and that it is very likely that this sample would have been drawn if 𝐻0 is true, which means if the population mean is equal to the population mean under 𝐻0 . not reject 𝐻0 P-value: marginal significance level One-sided Alternatives 𝐻1 : 𝐸(𝑌) > 𝜇𝑌,0 The general approach is the same, with the modification that only large positive values of the t-statistic reject 𝐻0 right side of the normal distribution 𝑝 − 𝑣𝑎𝑙𝑢𝑒 = 𝑃𝑟𝐻0 (𝑍 > 𝑡 𝑎𝑐𝑡 ) = 1 − 𝜙(𝑡 𝑎𝑐𝑡 ) or 𝐻1 : 𝐸(𝑌) < 𝜇𝑌,0 left side of the normal distribution 𝑝 − 𝑣𝑎𝑙𝑢𝑒 = 𝑃𝑟𝐻0 (𝑍 < 𝑡 𝑎𝑐𝑡 ) = 1 + 𝜙(𝑡 𝑎𝑐𝑡 ) 8 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 3.2 Confidence Intervals Use data from a random sample to construct a set of values (confidence set) that contains the true population mean 𝜇𝑌 with a certain prespecified probability (confidence level). The upper and lower limits of the confidence set are an interval (confidence interval). 3.2.1 Concerning the population mean 95% CI for 𝜇𝑌 is an interval that contains the true value of 𝜇𝑌 in 95% of all possible samples. The CI is random because it will differ from one sample to the next. 𝜇𝑌 is NOT random, we just don’t know it. A 95% CI can be seen as the set of value of 𝜇𝑌 not rejected by a hypothesis test with a 5% significance level. For further informations, see “Résumé statistique inférentielle II » from the 3rd semester. 3.3 Scatterplots, the Sample Covariance and the Sample Correlation The 3 ways to summarize the relationship between variables! Scatterplots Sample Covariance and Correlation Estimators of the population covariance and correlation. Computed by replacing a population mean with a sample mean. They are, just as the sample variance, consisten. 1 Sample covariance: 𝑠𝑋𝑌 = 𝑛−1 ∑𝑛𝑖=1(𝑋𝑖 − 𝑋̅)(𝑌𝑖 − 𝑌̅) 𝑠 Sample correlation: 𝑟𝑋𝑌 = 𝑠 𝑋𝑌 𝑠 𝑋 𝑌 A high correlation (close to 1) means that the points in the scatter plot fall very close to a straight line ADD DIFFERENCE BETWEEN MEANS? NECESSARY? (chap. 3 SW). 9 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 4 Linear Regression with One Regressor Allows us to estimate, and make inferences, about population slope coefficients. Our purpose is to estimate the causal effect on Y of a unit change in X. relationship between X and Y Linear regression (statistical procedure) can be used for: Causal inference: using data to estimate the effect on an outcome of interest of an intervention that changes the value of another variable. Prediction: using the observed value of some variable to predict the value of another variable. 4.1 Linear Regression Model with a Single Regressor (population) The problems of statistical inference for linear regression are basically the same as for estimation of the mean or of the differences between two means. We want to figure out if there is a relationship between the two variables (hyp test). Ideally, 𝑛 is large because we work with the CLT. General Notation of the Population Regression Model 𝑋𝑖 : independent variable or regressor 𝑌𝑖 : dependant variable or regressand 𝛽0 : intercept coefficient/parameter, value of the regression line when 𝑋 = 0 𝛽1 : slope coefficient/parameter, difference in Y associated with a unit difference in X 𝑢𝑖 = 𝑌𝑖 − (𝛽0 + 𝛽1 𝑋𝑖 ): the error term, difference between 𝑌𝑖 and its predicted value according to the regression line. 𝑌𝑖 = 𝛽0 + 𝛽1 𝑋𝑖 : population regression line/function relationship that holds between X and Y, on average, over the population 𝑌𝑖 = 𝛽0 + 𝛽1 𝑋𝑖 + 𝑢𝑖 𝑓𝑜𝑟 𝑖 = 1, … , 𝑛 4.2 Estimating the Coefficients of the Linear Regression Model We don’t know the coefficients so we have to find estimators for them from the available data! learn about the population using a sample of data. these values are estimated from a sample to make an inference about the population. The Ordinary Least Squares Estimator (OLS) Intuitively, we want to fit a line through the data, the line that makes the least error or squares. The OLS estimator chooses the regression coefficients so that the line is as close as possible to the data, you find them by following: 1) Let 𝑏0 and 𝑏1 be some estimators for 𝛽0 and 𝛽1 2) Regression line based on these estimators: 𝑏0 + 𝑏1 𝑋𝑖 3) 𝑀𝑖𝑠𝑡𝑎𝑘𝑒 𝑚𝑎𝑑𝑒 𝑤ℎ𝑒𝑛 𝑝𝑟𝑒𝑑𝑖𝑐𝑡𝑖𝑛𝑔 = 𝑌𝑖 − (𝑏0 + 𝑏1 𝑋𝑖 ) = 𝑌𝑖 − 𝑏0 − 𝑏1 𝑋𝑖 4) Sum of all these squared predictions for 𝑛 observations: ∑𝑛𝑖=1(𝑌𝑖 − 𝑏0 − 𝑏1 𝑋𝑖 )2 5) There is a unique pair of estimators that minimize this expression the OLS estimators ̂0 and the OLS estimator of 𝛽1 is 𝛽 ̂1 OLS estimator of 𝛽0 is 𝛽 General Notation of the Sample Regression Line ̂1 = 𝑠𝑋𝑌 𝛽 𝑠2 𝑋 ̂0 = 𝑌̅ − 𝛽 ̂1 𝑋̅ 𝛽 𝑢̂𝑖 = 𝑌𝑖 − 𝑌̂𝑖 : residual for the 𝑖 𝑡ℎ observation, 𝑌̂𝑖 is the predicted value. ̂0 + 𝛽 ̂1 𝑋: OLS regression line/sample regression line/function 𝛽 ̂0 + 𝛽 ̂1 𝑋𝑖 ̂𝑖 = 𝛽 𝑌 𝑓𝑜𝑟 𝑖 = 1, … , 𝑛 10 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 4.3 Measures of Fit and Prediction Accuracy How well does the regression line fit the data? Is the regressor responsible for a little or big variation of the dependant variable? Are the observations tightly distributed around the regression line or spread out? The Regression 𝑹𝟐 Fraction of the sample variance of 𝑌 explained by 𝑋. 1) We have from above: 𝑢̂𝑖 = 𝑌𝑖 − 𝑌̂𝑖 ⟺ 𝑌𝑖 = 𝑌̂𝑖 + 𝑢̂𝑖 2) 𝑅 2 can be written as the ratio of the explained sum of squares to the total sum of squares: 2 𝐸𝑆𝑆 = ∑𝑛𝑖=1(𝑌̂𝑖 − 𝑌̅) #(sample predicted – sample average)^2 𝑇𝑆𝑆 = ∑𝑛𝑖=1(𝑌𝑖 − 𝑌̅)2 #(actual – sample average)^2 𝐸𝑆𝑆 𝑅2 = = 𝐶𝑜𝑟𝑟(𝑌, 𝑋)2 𝑇𝑆𝑆 3) 𝑅 2 can also be written in terms of the fraction of the variance of 𝑌𝑖 not explained by 𝑋𝑖 , aka the sum of squared residuals. 𝑛 𝑆𝑆𝑅 = ∑ 𝑢̂𝑖 2 𝑖=1 4) 𝑇𝑆𝑆 = 𝐸𝑆𝑆 + 𝑆𝑆𝑅 𝑅2 = 1 − 𝑆𝑆𝑅 𝑇𝑆𝑆 0 ≤ 𝑅2 ≤ 1 Complete with the exercises ! The Standard Error of the Regression (SER) Estimator of the standard deviation of the regression error 𝑢𝑖 𝑆𝐸𝑅 = 𝑠𝑢̂ = √𝑠𝑢̂2 1 𝑆𝑆𝑅 where 𝑠𝑢̂2 = 𝑛−2 ∑𝑛𝑖=1 û2𝑖 = 𝑛−2 #we divide by 𝑛 − 2 because two degrees of freedom were lost when estimating 𝛽0 and 𝛽1 . Predicting Using OLS in-sample prediction observation for which the prediction is made was also used to estimate the regression coefficients we are in the sample. out-of-sample prediction prediction for observations not in the estimation sample. The goal of prediction: provide accurate out-of-sample predictions 4.4 The Least Squares Assumptions (hypotheses) What, in a precise sense, are the properties of the OLS estimator? We would like it to be unbiased, and to have a small variance. Does it? Under what conditions is it an unbiased estimator of the true population parameters? To answer these questions, we need to make some assumptions about how Y and X are related to each other, and about how they are collected (the sampling scheme). These assumptions – there are three – are known as the Least Squares Assumptions. 𝑌𝑖 = 𝛽0 + 𝛽1 𝑋𝑖 + 𝑢𝑖 , 𝑖 = 1, … , 𝑛 11 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 1. The Conditional distribution of 𝒖𝒊 given 𝑿𝒊 has mean zero o That is, 𝐸(𝑢|𝑋 = 𝑥) = 0 ̂1 is unbiased o Implies that 𝛽 o Intuitively, consider an ideal randomized controlled experiment : 𝑋 is randomly assigned to people (ex : students randomly assigned to different size classes). Randomization is done by computer, using no information about the individual. Because 𝑋 is assigned randomly, all other individual characteristics (the things that make up 𝑢) are independently distributed of 𝑋. Thus, in an ideal randomized controlled experiment : 𝐸(𝑢|𝑋 = 𝑥) = 0 Recall: if 𝐸(𝑢|𝑋 = 𝑥) = 0, then 𝑢 and 𝑋 have 0 cov and are uncorrelated. In actual experiments, or with observational data, it’s not necessary true. 2. (𝑿𝒊 , 𝒀𝒊 ), 𝒊 = 𝟏, … , 𝒏 Are Independently and Identically Distributed o i.i.d arises automatically if sampled by simple random sampling: the entity is selected then, for that entity, X and Y are observed (recorded) o The main place we will encounter non-i.i.d. sampling is when data are recorded over time (“time series data”) extra complications 3. Large Outliers are Unlikely A large outlier can strongly influence the results! See this outlier of Y (red circle) that’s why it’s important to look at the data before doing any regression line. 4.5 The Sampling Distribution of the OLS Estimators ̂0 , 𝛽 ̂1 ) are computed from a randomly drawn sample random variables OLS Estimators (𝛽 with a sampling distribution that describes the values they could take over different possible random samples. ̂0 and 𝛽 ̂1 are 𝛽0 and 𝛽1 : The means of the sampling distributions of 𝛽 ̂ ̂ 𝐸(𝛽0 ) = 𝛽0 and 𝐸(𝛽1 ) = 𝛽1 unbiased estimators Normal Approximation to the Distribution of the OLS Estimators in Large Samples ̂ If the Least Squares Assumptions hold, then 𝛽̂ 0 , 𝛽1 have a jointly normal sampling distribution: 1 𝑣𝑎𝑟[(𝑋𝑖 −𝜇𝑋 )𝑢𝑖 ] 2 2 ̂1 ~𝑁 (𝛽1 , 𝜎̂ o 𝛽 ) where 𝜎𝛽̂ = 𝑛 [𝑣𝑎𝑟(𝑋 𝛽 )]2 1 o 1 𝑖 1 𝑣𝑎𝑟(𝐻𝑖 𝑢𝑖 ) 𝜇 2 2 ̂0 ~𝑁(𝛽0 , 𝜎̂ 𝛽 ) where 𝜎𝛽̂ =𝑛 where 𝐻𝑖 = 1 − [𝐸(𝑋𝑋2 ] 𝑋𝑖 𝛽 2 2 0 0 [𝐸(𝐻𝑖 )] 𝑖 ) Implications: o When 𝑛 is large, the distribution of the estimators will be tightly centred around their means. o Consistent estimators 2 o The larger the 𝑣𝑎𝑟(𝑋𝑖 ), the smaller 𝜎𝛽̂ 1 Intuitively, if there is more variation in 𝑋, then there is more information in the data that you can use to fit the regression line. easier to draw a regression line for the black dots (with 𝑣𝑎𝑟(𝑋𝑖 ) bigger!) 12 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année ########################################################################## 30.04.2022: bis jetzt We looked at probability: regression model 𝑌𝑖 = 𝛽0 + 𝛽1 𝑋𝑖 + 𝑢𝑖 𝛽0 + 𝛽1 𝑋𝑖 represents Z This model explains (X,Y) E[u|X]=0 o 𝐸[𝑢] = 0 o 𝐶𝑜𝑣(𝑋, 𝑌) = 0 Parameters of the model are B0 and B1 𝛽0 = 𝐸[𝑌] − 𝐵1 𝐸[𝑋] 𝐶𝑜𝑣(𝑋, 𝑌) 𝛽1 = 𝑉𝑎𝑟(𝑋) 𝑉𝑎𝑟(𝑌) = 𝑉𝑎𝑟(𝑍) + 𝑉𝑎𝑟(𝑢) 𝑉𝑎𝑟(𝑍) = [𝐶𝑜𝑟𝑟(𝑋, 𝑌)]2 𝑉𝑎𝑟(𝑌) All this probability tools are useful to do statistics, working with samples: We are going to try to estimate the parameters of the model and find how good the regression is. 𝑛 ̂ = 1 ∫ (𝑋𝑖 − 𝑋̅)2 𝑉𝑎𝑟(𝑋) 𝑛 𝑖=1 ̂ 𝑛 1̂ ̂ 𝐶𝑜𝑣(𝑋, 𝑌) = ∫ (𝑋𝑖 − 𝑋̅)(𝑌𝑖 − 𝑌̅) 𝑛 𝑖=1 ̂0 = 𝑌̅ − 𝐵 ̂1 𝑋̅ 𝛽0 𝐵 1 𝑛 ̅ )(𝑌𝑖 −𝑌̅) ∫ (𝑋̂ 𝑖 −𝑋 ̂1 = 𝑛 𝑖=1 𝛽1 𝐵 1 𝑛 ̅ 2 (𝑋𝑖 −𝑋) ∫ 𝑛 𝑖=1 We want to know the part of the regression that is good work with Z because the “u” is the error term 2 2 𝑉𝑎𝑟(𝑍) = (𝐵0 + 𝐵1 𝑋1 − 𝐸(𝑍)) + ⋯ + (𝐵0 + 𝐵1 𝑋𝑛 − 𝐸(𝑍)) 2 2 ̂ = (𝐵 ̂0 + 𝐵 ̂1 𝑋1 − 𝐸(𝑍)) + ⋯ + (𝐵 ̂0 + 𝐵 ̂1 𝑋𝑛 − 𝐸(𝑍)) 𝑉𝑎𝑟(𝑍) We still want to get rid of 𝐸(𝑍) ̂0 + 𝐵 ̂1 𝑋1 is named 𝑌̂1 in the book, 𝐵 ̂0 + 𝐵 ̂1 𝑋𝑛 is named 𝑌 ̂ 𝐵 𝑛 ̂ = 1 ∑(𝑌̂𝑖 − 𝑌̅̂) 𝑉𝑎𝑟(𝑍) 𝑛 2 ̂ 𝑉𝑎𝑟(𝑍) ̂ 𝑉𝑎𝑟(𝑌) = 2 1 ∑ (𝑌̂𝑖 −𝑌̅̂) 𝑛 1 𝑛 ∫ (𝑌 −𝑌̅)2 𝑛 𝑖=1 𝑖 = 𝑅2 SER: ̂𝑖 = 𝑌𝑖 − 𝐵 ̂0 − 𝐵 ̂1 𝑋𝑖 = 𝑢̂𝑖 it’s the estimate of the contribution of other terms 𝑌𝑖 − 𝑌 ̂ = 𝑠𝑞𝑟𝑡(𝑣𝑎𝑟(𝑢) ̂ ) 𝑆𝐸𝑅 = 𝑠𝑑(𝑢) ########################################################################## 13 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 5 Regression with a Single Regressor: Hypothesis Tests and Confidence Intervals 5.1 Testing Hypotheses About One of the Regression Coefficients The process is the same for 𝛽0 and 𝛽1 ! General form of the t-statistic 𝑒𝑠𝑡𝑖𝑚𝑎𝑡𝑜𝑟 − ℎ𝑦𝑝𝑜𝑡ℎ𝑒𝑠𝑖𝑧𝑒𝑑 𝑣𝑎𝑙𝑢𝑒 𝑡= 𝑠𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝑒𝑟𝑟𝑜𝑟 𝑜𝑓𝑡ℎ𝑒 𝑒𝑠𝑡𝑖𝑚𝑎𝑡𝑜𝑟 Two-Sided Hypotheses Concerning 𝜷𝟏 ̂1 also has a normal sampling distribution in large samples, hypotheses about the Because 𝛽 true value of the slope 𝛽1 can be tested using the same approach as for 𝑌̅. 𝐻0 : 𝛽1 = 𝛽1,0 𝐻1 : 𝛽1 ≠ 𝛽1,0 To test 𝐻0 against the Alternative 𝐻1 and just like for the population mean 𝑌, we have to follow 3 steps: ̂𝟏 1) Compute the standard error of 𝜷 2 ̂1 ) = √𝜎̂ 𝑆𝐸(𝛽 𝛽 1 Heteroskedasticity-robust standard errors 1 2 Where 𝜎̂𝛽̂ =𝑛 0 2 ̅ 1 𝑋 ∑𝑛 ̂𝑖2 𝑢 [1−( )𝑋 ] 𝑖 1 𝑛 𝑛−2 𝑖=1 ∑ 𝑋2 𝑛 𝑖=1 𝑖 2 2 ̅ 1 𝑛 𝑋 (𝑛 ∑𝑖=1[1−( 1 𝑛 )𝑋𝑖 ] ) ∑ 𝑋2 𝑛 𝑖=1 𝑖 2 𝜎̂𝛽̂ 1 ̂𝑖 = 1 − ( 1 𝐻 𝑛 𝑋̅ 2 ∑𝑛 𝑖=1 𝑋𝑖 ) 𝑋𝑖 1 𝑛 2 2 1 𝑛 − 2 ∑𝑖=1(𝑋𝑖 − 𝑋̅) 𝑢̂𝑖 = 2 𝑛 1 𝑛 [𝑛 ∑𝑖=1(𝑋𝑖 − 𝑋̅)2 ] 2) Compute the t-statistic 𝑡= ̂1 − 𝛽1,0 𝛽 ̂1 ) 𝑆𝐸(𝐵 3) Compute the p-value Rejecting the hypothesis at the 5% significance level if the p-value is less than 0.05 or, equivalently, if |𝑡 𝑎𝑐𝑡 | > 1.96. 𝑎𝑐𝑡 ̂ ̂1 − 𝛽1,0 𝛽 𝛽 − 𝛽1,0 𝑝 − 𝑣𝑎𝑙𝑢𝑒 = 𝑃𝑟𝐻0 [| |>| 1 |] = 2𝜙(−|𝑡 𝑎𝑐𝑡 |) ̂1 ) ̂1 ) 𝑆𝐸(𝛽 𝑆𝐸(𝛽 Note: a regression software computes automatically those values (see function “lmfit” in R) One-Sided Hypotheses Concerning 𝜷𝟏 𝐻0 : 𝛽1 = 𝛽1,0 𝐻1 : 𝛽1 < 𝑜𝑟 > 𝛽1,0 Because 𝐻0 is the same, the construction of the t-statistic is the same. The only difference between a one- and a two-sided hypothesis test is how you interpret the t-statistic: o For a left-tail test: 𝑝 − 𝑣𝑎𝑙𝑢𝑒 = 𝑃𝑟𝐻0 (𝑍 < 𝑡𝑎𝑐𝑡 ) = 1 + 𝜙(𝑡𝑎𝑐𝑡 ) o For a right-tail test: 𝑝 − 𝑣𝑎𝑙𝑢𝑒 = 𝑃𝑟𝐻0 (𝑍 > 𝑡𝑎𝑐𝑡 ) = 1 − 𝜙(𝑡𝑎𝑐𝑡 ) In practice, we use this kind of test only when there is a reason to do so 14 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 5.2 Confidence Intervals for a Regression Coefficient The process is the same for 𝛽0 and 𝛽1 ! Confidence Interval for 𝜷𝟏 2 definitions: 1) Set of value that cannot be rejected using a two-sided hypothesis test with a 5% significance level 2) Interval that has a 95% probability of containing the true value of 𝛽1 : in 95% of possible samples that might be drawn, the CI will contain the true value. Link with hypothesis test: a hyp test w/ 5% significance level will reject the value of 𝛽1 in only 5% of all possible samples in 95% of all possible samples, the true value of 𝛽1 won’t be rejected (2nd definition above) ̂1 − 1.96𝑆𝐸(𝛽 ̂1 ), 𝛽 ̂1 + 1.96𝑆𝐸(𝛽 ̂1 )] When sample size is large: 95% 𝐶𝐼 𝑓𝑜𝑟 𝛽1 = [𝛽 Confidence Intervals for predicted effects of changing 𝑿 ̂1 − 1.96𝑆𝐸(𝛽 ̂1 )) ∆𝑥, (𝛽 ̂1 + 1.96𝑆𝐸(𝛽 ̂1 )) ∆𝑥] 95% 𝐶𝐼 𝑓𝑜𝑟 𝛽1 ∆𝑥 = [(𝛽 5.3 Regression When X Is a Binary Variable It’s also possible to make a regression analysis when the regressor is binary (takes only 2 values: 0 or 1). Binary variable = indicator variable = dummy variable Interpretation of the Regression Coefficients The mechanics are the same as with a continuous regressor The interpretation is different and is equivalent to a difference of means analysis. 𝑌𝑖 = 𝛽0 + 𝛽1 𝐷𝑖 + 𝑢𝑖 What is 𝐷𝑖 ? o It’s the binary variable that can take only 2 values What is 𝛽1 ? o Called “Coefficient on 𝐷𝑖 ” o 2 possible cases: 𝑫𝒊 = 𝟎 𝑫𝒊 = 𝟏 𝑌𝑖 = 𝛽0 + 𝑢𝑖 𝑌𝑖 = 𝛽0 + 𝛽1 + 𝑢𝑖 We know: 𝐸(𝑢𝑖 |𝐷𝑖 ) = 0 → We know: 𝐸(𝑌𝑖 |𝐷𝑖 = 1) = 𝛽0 + 𝛽1 𝐸(𝑌𝑖 |𝐷𝑖 = 0) = 𝛽0 𝛽0 + 𝛽1 is the population mean 𝛽0 is the population mean value when value when 𝐷𝑖 = 1 𝐷𝑖 = 0 Thus, 𝛽1 = 𝐸(𝑌𝑖 |𝐷𝑖 = 1) − 𝐸(𝑌𝑖 |𝐷𝑖 = 0) difference between population means! Hypothesis Tests and Confidence Intervals If the 2 population means are the same 𝛽1 = 0 We can test this 𝐻0 : 𝛽1 = 0 against 𝐻1 : 𝛽1 ≠ 0 5.4 Heteroskedasticity and Homoskedasticity We bother about this because it influences our hypothesis tests (the SE is not the same!) Definitions The error term 𝑢𝑖 is: homoskedastic if the variance of the conditional distribution of 𝑢𝑖 given 𝑋𝑖 , 𝑣𝑎𝑟(𝑢𝑖 |𝑋𝑖 = 𝑥) is constant for 𝑖 = 1, … , 𝑛 and in particular does not depend on 𝑋𝑖 ; heteroskedastic if otherwise 15 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Homoskedastic data: particular case of heteroskedastic data where the variance of the ̂1 becomes simpler. estimator 𝛽 Visually Homoskedastic Heteroskedastic LSA 1 satisfied bc 𝐸(𝑢𝑖 |𝑋𝑖 = 𝑥) = 0 Variance of 𝑢 doesn’t depend on 𝑋 5.4.1 LSA 1 satisfied bc 𝐸(𝑢𝑖 |𝑋𝑖 = 𝑥) = 0 Variance of 𝑢 depends on 𝑋 Mathematical Implications of Homoskedasticity So far, we have allowed that the data is heteroskedastic. What if the error terms are in fact homoskedastic? Whether the errors are homoskedastic or heteroskedastic, the OLS estimator is unbiased, consistent and asymptotically normal. ̂1 and the OLS standard error simplifies The formula for the variance of 𝛽 Homoskedasticity-only Variance Formula 2 2 ̂1 ) = 𝜎𝑢2 ̂0 ) = 𝐸(𝑋𝑖2 ) 𝜎𝑢2 𝑣𝑎𝑟(𝛽 𝑣𝑎𝑟(𝛽 𝑛𝜎𝑋 𝑛𝜎𝑋 ̂1 ) is inversely proportional to 𝑣𝑎𝑟(𝑋): more spread in 𝑋 means more Note: we see that 𝑣𝑎𝑟(𝛽 information about 𝛽1 Homoskedasticity-only Standard Errors 1 1 2 ̂1 ) = √(𝜎̃̂ 𝑆𝐸(𝛽 =) 1 𝑛−2 𝑛 𝛽 1 ̂2 ∑𝑛 𝑖=1 𝑢𝑖 𝑛 ∑𝑖=1(𝑋𝑖 −𝑋̅)2 𝑛 1 2 2 ( ∑𝑛 𝑖=1 𝑋𝑖 )𝑠û 2 ̂0 ) = √(𝜎̃̂ 𝑆𝐸(𝛽 =) ∑𝑛𝑛 𝛽 0 ̅ 2 𝑖=1(𝑋𝑖 −𝑋) The usual standard errors, which we call heteroskedasticity – robust standard errors, are valid whether or not the errors are heteroskedastic Practical Implications In general, you get different standard errors using the different formulas. To be sure, always use the general formula (heteroskedastic-robust standard errors) because valid for the both cases. Warning: R uses the simpler formula If you don’t override the default and there is in fact heteroskedasticity, your standard errors (and wrong t-statistics and confidence intervals) will be wrong – typically, homoskedasticity-only SEs are too small. 5.5 The Theoretical Foundations of OLS What we already know: OLS is unbiased and consistent 16 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année A formula for heteroskedasticity-robust standard errors How to construct CI and test statistics We are using OLS is because it’s the language of the regression analysis! What we doubt: Is this really a good reason to use OLS? Other estimators with smaller variances are maybe better? What happened to the student t distribution? To answer these questions, we have to make stronger assumptions. The Extended Least Squares Assumptions 3 LSA + 2 others: 1) 𝐸(𝑢𝑖 |𝑋𝑖 = 𝑥) = 0 2) (𝑋𝑖 , 𝑌𝐼 ), 𝑖 = 1, … , 𝑛 are i.i.d. 3) Large outliers are rare More restrictive – apply in fewer cases. But lead 4) 𝑢 is homoskedastic to stronger results (under those assumptions) 5) 𝑢 is distributed 𝑁(0, 𝜎 2 ) because calculations simplify. Efficiency of OLS, part I: The Gauss-Markov Theorem ̂1 has the smallest variance among all linear estimators. Under the first 4 assumptions, 𝛽 “If the three least squares assumptions hold and if errors are homoskedastic, then the OLS ̂1 is the Best Linear conditionally Unbiased Estimator (BLUE).” estimator 𝛽 Efficiency of OLS, part II ̂1 has the smallest variance of all consistent estimators. Under the 5 assumptions, 𝛽 If the errors are homoskedastic and normally distributed + LSA 1-3, then OLS is a better choice than any other consistent estimator the best you can do! Some not-so-good thing about OLS Warning: that has important limitations! 1. Gauss-Markov Theorem has 2 limitations: Homoskedasticity is rare and often doesn’t hold Result only for linear estimators, which represent only a small subset of estimators 2. The strongest result (OLS is the best you can do, cf above “part II”) requires homoscedastic normal errors pretty rare in application 3. OLS is more sensitive to outliers than some other estimators. For example, in the case of the mean of the population with big outliers, we prefer the median. 5.6 Using the t-Statistic in Regression When the Sample Size Is Small 1) 2) 3) 4) 5) 𝐸(𝑢𝑖 |𝑋𝑖 = 𝑥) = 0 (𝑋𝑖 , 𝑌𝐼 ), 𝑖 = 1, … , 𝑛 are i.i.d. Large outliers are rare 𝑢 is homoskedastic 𝑢 is distributed 𝑁(0, 𝜎 2 ) ̂0 ,̂ If they all hold, 𝛽 𝛽1 are normally distributed and the t-statistic has 𝑛 − 2 degrees of freedom. Under those 5 assumptions and the null hypothesis, the t-statistic has a Student t distribution with 𝑛 − 2 degrees of freedom. 17 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Why 𝑛 − 2? Because we estimated 2 parameters For 𝑛 < 30, the t critical values can be a faire bit larger than the 𝑁(0,1) critical values For 𝑛 > 50, the difference in 𝑡𝑛−2 and 𝑁(0,1) distribution is negligible. Recall the student t table: Degrees of freedom 5% t-distribution critical value 10 2.23 20 2.09 30 2.04 60 2.00 1.96 ∞ Practical Implications If n < 50 and you really believe that, for your application, u is homoskedastic and normally distributed, then use the t n−2 instead of the N(0,1) critical values for hypothesis tests and confidence intervals. In most econometric applications, there is no reason to believe that u is homoskedastic and normal – usually, there is good reason to believe that neither assumption holds. Fortunately, in modern applications, n > 50, so we can rely on the large-n results presented earlier, based on the CLT, to perform hypothesis tests and construct confidence intervals using the large-n normal approximation. 5.7 Summary and Assessment The initial policy question: Suppose new teachers are hired so the student-teacher ratio falls by one student per class. What is the effect of this policy intervention (“treatment”) on test scores? Does our regression analysis answer this convincingly? o Not really: districts with low student to teacher ratio tend to be ones with lots of other resources and higher income families, which provide kids with more learning opportunities outside school...this suggests that corr(ui , STR i ) > 0, so E(ui |X i) ≠ 0 o So, we have omitted some factors, or variables, from our analysis, and this has biased our results. Part II Data mining: deviner la valeur d’un attribut (variable) en utilisant d’autres attributs. 6 Introduction to Data Mining 6.1 Data Mining - Concepts Definitions Science of discovering structure and making predictions in large samples. Exploration and analysis by automatic or semi-automatic means of large quantities of data in order to discover meaningful patterns. Objectives Discovering structures/patterns: explore and understand the data understanding the past! Making predictions: given measurements of variables, learn a model to predict their future values. Predict the future! Data: definition 18 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Collection of data objects and their attributes Attribute: property or characteristic of an object Object: described by a collection of attributes Attributes Types Distinctness: =, ≠ Nominal Ex: ID number, eye color, … Ordinal Ex: rankings, grades, height Interval Ex: dates, temperatures, … Ratio Ex: length, time, counts Description Properties Order: <,> Addition: +,Multiplication: *,/ The attribute provides only enough Distinctness information to distinguish one object from another The attribute provides enough info to Distinctness and order order objects The difference between values are Distinctness, meaningful addition order and Differences between the values and Distinctness, order, ratios are meaningful addition and multiplication Concepts Domain: set of objects from the real world about which knowledge is supposed to be delivered by data mining Target attribute: attribute to be predicted (‘YES’/’NO’) Input attributes: observable attributes that can be used for prediction Dataset: a subset of the domain, described by the set of available attributes (rows: instances; columns: attributes) Predictive model: chunks of knowledge about some domain of interest, that can be used to answer queries not just about instances from the data used for model creation, but also any other instances from the same domain Origins of Data Mining An analytic process that uses one or more available datasets from the same domain to create one or more models for the domain. The ultimate goal of data mining is delivering predictive models. Uses ideas from machine learning, stats and database systems Vocabulary differences between stats and machine learning: Inductive learning Most of data mining algorithms are using inductive learning approach: The algorithm (or learner) is provided with training information from which it has to derive knowledge via inductive inference. Any prediction uses knowledge to deduce the answer via deductive inference. 19 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Knowledge: expected result of the learning process Inductive inference: discovering patterns in training information and generalizing them appropriately (“specific to general”) Query: a new cas or observation with unknown aspects Deductive inference: supplying the values of unknown aspects based on a “general to specific process” The learner isn’t informed and has no possibility to verify with certainty which of the possible generalizations are correct complicated to check if the prediction is true or not. Use feedback to improve the quality of the model. Data Mining Tasks Regression Predict a value of a numeric target attribute based on the values of other input attributes, assuming a linear or nonlinear model of dependency. Applications: predicting sales amount of new product based on advertising expenditure Numeric variable is the only difference between regression and classification. Classification In a lot of cases the variable is binary. Predict a discrete target attribute based on the values of other input attributes. Applications: fraud detection, direct marketing... Clustering Predict the assignment of instances to a set of clusters, such that the instances in any one cluster are more similar to each other than to instances outside the cluster. Applications: market segmentation Association Rule Discovery Given a set of records each of which contains some number of items from a given collection. Application: identify items that are bought together by sufficiently many customers… 6.2 Classification - Basic Concepts Definition Given a collection of records (training set) o Each record is defined as a tuple (x,y) where x is a set of attributes, and y is a special attribute, denoted as the class label (also called target) Find a model for class attribute y as a function f of the values of attributes x o The function maps each attribute set x to one of the predefined class label y o The function is a classification model Goal: previously unseen records should be assigned a class as accurately as possible o A test set is used to determine the accuracy of the model. Usually, the given data set is divided into training and test sets, with training set used to build the model and test set used to validate it. 20 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Illustrating Classification Task Classification Techniques Neural networks Decision Tress based Methods are the most important! But others exist. 6.3 Rule-Based Classifier: basic concepts Classification model uses a collection of IF…THEN… rules o “𝐶𝑜𝑛𝑑𝑖𝑡𝑖𝑜𝑛 → 𝑦” o LHS: left hand side rule antecedent or condition, conjunction of tests related to attributes o RHS: right hand side rule consequent, class label (y) Rule A rule R covers an instance x if the attributes of the instance satisfy the condition of the rule Coverage of a rule: fraction of records that satisfy the antecedent of a rule all the instances covered by the rule (%) Accuracy of a rule: fraction of records that satisfy both the antecedent and consequent of a rule instances that satisfy the condition and look which ones are true and false. We don’t look at all the instances (only the ones that satisfy the rule)! If no rule applies, we give a default value Characteristics of Rule-Based Classifier Mutually exclusive rules Every possible record is covered by at most one rule Exhaustive rules Every possible record is covered by at least one rule We can mix them! (exhaustive +mutually exclusive, …) Rules Can Be Simplified Effects of a rule simplification Rules are no longer mutually exclusive A record may trigger more than one rule Solution: ordered set rule, unordered rule set- use voting scheme Rules are no longer exhaustive A record may not trigger any rules Solution: use a default class 21 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Ordered Rule Set Known as a decision list Give an order to the rule set (the 1st applies /…) When the test record is presented to the classifier, it is assigned to the class label of the highest ranked rule it has triggered (if no rule applies, assigned to the default class!) Rule Ordering Schemes Rule-based ordering: individual rules are ranked based on their quality o Question: how to define quality? Several possibilities… Class-based ordering: rules that belong to the same class appear together Building Classification Rules Direct method: extract rules directly from data example of sequential covering Indirect method: extract rules from other classification models STOPPING conditions if the quality of the rule is not met; if there is no more instance to put in the Aspects of Sequential Covering How to find rules, linked with the direct method 1. Rule Growing 2 common strategies: 1) Top-down: general-to-specific 2) Down-up: specific-to-general 2. Rule Evaluation Needed to determine which conjunct should be added or removed during the rulegrowing process Metrics: For one rule The value of accuracy isn’t correct or certain for the future data, bc based on past data improve this measure and develop Laplace or M-estimate 2 corrections 22 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Other measure: FOIL’s information gain Compare 2 rules (for a rule extension) but we had a measure to the second one 𝑚𝑐 mesure la précision de la ̅̅̅̅ 𝑚𝑐 +𝑚𝑐 deuxième règle, de même pour 𝑛𝑐 ̅̅̅𝑐̅ 𝑛𝑐 +𝑛 3. Rule Pruning Reduced Error Pruning remove one of the conjuncts in the rule comparer error rate on validation set before and after pruning. if error improves, prune the conjunct What is the validation set? Allows us to compute the accuracy after we created the rule in the training set. Training set rules verify with the validation set Laplace is also a way to evaluate the rules to choose which one on them is the best 4. Stopping criterion Compute the gain If gain is not significant, discard the new rule Direct method – summary 1. Grow a single rule 2. Remove instances from the rule 3. Prune the rule if necessary 4. Add rule to current rule set 5. Repeat Example of Direct method – RIPPER variant It’s a method (among others) that builds a Rule-Based Classifier. For a 2-class problem Choose one of the classes as positive class (the other is the negative class) o Learn rules for positive class o Negative class is the default class For a multi-class problem Order the classes according to increasing class prevalence (fraction of instances that belong to a particular class) o Learn the rule set for smallest class first, treat the rest as negative class o Repeat with next smallest class as positive class Growing a rule o Start form empty rule o Add conjuncts as long as they improve FOIL’s information gain o Stop when rule starts covering negative examples Prune the rule immediately using incremental reduced error pruning 23 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année o Measure for pruning: 𝑣 = (𝑝 − 𝑛)/(𝑝 + 𝑛) where 𝑝 is the number of positive examples covered by the rule in the validation set 𝑛 is the number of negative examples covered by the rule in the validation set o Pruning method: deletes any final sequence of conditions that maximizes 𝑣 Building a rule set o Use sequential covering algorithm Finds the best rule that covers the current set of positive examples Eliminate both positive and negative examples covered by the rule Stop adding a new rule if the error rate of the rule on the set exceeds 50% 6.4 Nearest Neighbors Classifier We don’t create a model this time, a contrario of the rule-based classifier. 6.4.1 6.4.2 Instance Based Classifiers We use instances no model Store the training records and use them to predict the class label of unseen cases. Examples: o Rote-learner: memorizes entire training data and performs classification only if attributes of record match one of the training examples EXACTLY, otherwise, doesn’t give results. o Nearest neighbor: uses 𝑘 closest points for performing classification. Basic Idea and concept Basic Idea: “If it walks like a duck, quacks like a duck, then it’s probably a duck.” 6.4.3 Nearest Neighbor Classification Requires 3 things: 1. Set of stored records 2. Distance metric to compute distance between records 3. Value of 𝑘, the number of nearest neighbors to retrieve To classify an unknown record: Distance: Compute distance to other training records o Distance between 2 points: Euclidian distance: 𝑑(𝑝, 𝑞) = √∑𝑖(𝑝𝑖 − 𝑞𝑖 )2 o Problem with Euclidian measure: high dimensional data curse of dimensionality If the number of points/objects is kept constant, higher the number of dimensions, larger the distance between points. Given a point, the ratio “distance to its nearest neighbor/distance to its farthest neighbor” tends to one for high dimensions. NN: Identify 𝑘 nearest neighbors o Choosing the value of 𝑘 o If 𝑘 too small sensitive to noise points o If 𝑘 too large neighborhood may include points from other classes 24 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Class: Use class labels of nearest neighbors to determine the class label of unknown record o Determine class from nearest neighbor list o Take the majority vote of class labels among the 𝑘 nearest neighbors o Weigh the vote according to distance 1 o Weight factor: 𝑤 = 𝑑 𝑘 nearest neighbors of a record 𝑥 are data points that have the 𝑘 smallest distance to 𝑥 (1-2-3 nearest neighbors) 6.4.4 Precisions Scaling issues: attributes may have to be scaled to prevent distance measures from being dominated by one of the attributes. 𝑘-NN classifiers are lazy learners : No model is built, takes time to compute everything every time. 6.4.5 Example: PEBLS (MVDM) Parallel Exemplar-Based Learning System Works with continuous (size in cm, …) and nominal (male/female, …) features o Nominal features: distance between 2 nominal values (𝑉1 and 𝑉2 ) is calculated using modified value difference metric (MVDM) 𝑚 𝑛1𝑖 𝑛2𝑖 𝑑(𝑉1 , 𝑉2 ) = ∑ | − | 𝑛1 𝑛2 𝑖=1 o o 𝑚 – number of classes 𝑛𝑗𝑖 – number of examples from class 𝑖 with attribute value 𝑉𝑗 , 𝑗 = 1,2 𝑛𝑗 - number of examples with attribute value 𝑉𝑗 , 𝑗 = 1, 2 Distance between 𝑋 and 𝑌: ∆(𝑋, 𝑌) = ∑𝑑𝑖=1 𝑑(𝑋𝑖 , 𝑌𝑖 )2 Number of nearest neighbors, 𝑘 = 1 Distance between nominal attribute values: 2 0 2 4 𝑑(𝑆𝑖𝑛𝑔𝑙𝑒, 𝑀𝑎𝑟𝑟𝑖𝑒𝑑) = | − | + | − | = 1 4 4 4 4 Single and yes 2/4 Married and yes 0/4 Single and no 2/4 Married and no 4/4 2 1 2 1 𝑑(𝑆𝑖𝑛𝑔𝑙𝑒, 𝐷𝑖𝑣𝑜𝑟𝑐𝑒𝑑) = | − | + | − | = 0 4 2 4 2 0 1 4 1 𝑑(𝑀𝑎𝑟𝑟𝑖𝑒𝑑, 𝐷𝑖𝑣𝑜𝑟𝑐𝑒𝑑) = | − | + | − | = 1 4 2 4 2 0 3 3 4 𝑑(𝑅𝑒𝑓𝑢𝑛𝑑 = 𝑌𝑒𝑠, 𝑅𝑒𝑓𝑢𝑛𝑑 = 𝑁𝑜) = | − | + | − | = 6/7 3 7 3 7 6.4.6 Proximity measures Proximity refers to these 2: Similarity o Numerical measure of how alike 2 data objects are o Higher when objects are more alike o Fall in the range [0,1] 25 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Dissimilarity o Numerical measure of how different are 2 data objects o Lower when objects are more alike o Min is 0, upper limit varies If Data: 1 p 2 q Distance Particular type if dissimilarity Euclidian Distance: 𝑑(𝑝, 𝑞) = √∑𝑖(𝑝𝑖 − 𝑞𝑖 )2 1 Minkowski Distance: 𝑑𝑚 (𝑝, 𝑞) = (∑𝑛𝑖=1|𝑝𝑖 − 𝑞𝑖 |𝑚 )𝑚 where 𝑚 = 1,2, … , ∞ o m = 1: Manhattant Distance/Taxicab distance o m = 2: Euclidian Distance o m = ∞: Supremum Distance 6.5 Naïve Bayesian Classifier Take the n attributes independently and try to look at them individually. “How often was it rainy when we go play? How often was it rainy when we go not play ?” Likelihood of going to play or not depending on each individual value. Then, just multiply all those values together to obtain a final likelihood value 6.5.1 Bayes Classifier Probabilistic framework for solving classification problems Conditional Probability 𝑃(𝐴, 𝐶) 𝑃(𝐶|𝐴) = 𝑃(𝐴) 𝑃(𝐴, 𝐶) 𝑃(𝐴|𝐶) = 𝑃(𝐶) Bayes Theorem 𝑷(𝑨|𝑪)𝑷(𝑪) 𝑃(𝑪|𝑨) = 𝑷(𝑨) 𝑃(𝐶) – prior probability of C 𝑃(𝐶|𝐴) – posterior (revised) probability of C, after observing a new event A 𝑃(𝐴|𝐶) – conditional probability of A, given C (likelihood) 𝑃(𝐴) – total probability of A (Probability to observe A independent of C) 26 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 6.5.2 Example of Bayes Theorem 6.5.3 Bayesian Classifiers Consider each attribute and class label as random variables Works only if the variables are independent. In general, no need to compute the denominator because it’s the same for all classes! 6.5.4 o Naïve Bayes Classifier “Naïve” because we assume that we are naïve to believe the attributes being independent we assume independence among attributes 𝐴𝑖 when class 𝐶 is given: 𝑃(𝐴1 = 𝑎1 , … , 𝐴𝑛 = 𝑎𝑛 |𝐶 = 𝑐𝑖 ) = 𝑃(𝐴1 = 𝑎1 |𝐶 = 𝑐𝑖 )𝑃(𝐴2 = 𝑎2|𝐶 = 𝑐𝑖 ) ∗ … ∗ 𝑃(𝐴𝑛 = 𝑎𝑛 |𝐶 = 𝑐𝑖 ) 6.5.5 Estimate Probabilities from Data For discrete attributes 𝑃(𝐶 = 𝑐𝑖 ) = 𝑁𝑐𝑖 /𝑁 𝑃(𝐴𝑗 = 𝑎|𝐶 = 𝑐𝑖 ) = 𝑁𝑗𝑖 /𝑁𝑐𝑖 𝑁𝑐𝑖 – number of instances from class 𝑐𝑖 𝑁 – total number of instances 𝑁𝑗𝑖 – number of instances having value a for attribute 𝐴𝑗 and belonging to class 𝑐𝑖 Examples For class 𝐶 “evade”: 4 𝑃(𝑆𝑡𝑎𝑡𝑢𝑠 = 𝑀𝑎𝑟𝑟𝑖𝑒𝑑|𝐶 = 𝑁𝑜) = 7 𝑃(𝑅𝑒𝑓𝑢𝑛𝑑 = 𝑌𝑒𝑠|𝐶 = 𝑌𝑒𝑠) = 0 𝑃(𝐶 = 𝑁𝑜) = 7/10 27 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 For continuous attributes 1. Discretize the range into bins o One ordinal attribute per bin o Violates independence assumption o Example: [60,75] cold; [76,80] mild; [81,90] hot 2. Probability density estimation o Assume attribute follows a normal distribution 2 𝑃(𝐴𝑗 = 𝑎|𝐶 = 𝑐𝑖 ) = o o o 6.5.6 − 1 (𝑎−𝜇𝑗𝑖 ) 2 2𝜎𝑗𝑖 𝑒 𝜎𝑗𝑖 √2𝜋 Use data to estimate parameters of distribution (mean, variance) Once probability distribution is known, can use it to estimate the conditional probability 𝑃(𝐴𝑖 = 𝑎|𝐶𝑗 ) Example: 𝐴𝑗 = 𝐼𝑛𝑐𝑜𝑚𝑒, 𝑐𝑖 = 𝑁𝑜 𝜇𝑗𝑖 ≈ 110 (sample mean of Income values for class No) 𝜎𝑗𝑖2 ≈ 2975 (sample var of Income value for class No) Probability Distribution! Estimate the conditional probability: (120−110)2 1 − 𝑃(𝐼𝑛𝑐𝑜𝑚𝑒 = 120|𝑁𝑜) = 𝑒 2(2975) = 0.0072 54.54√2𝜋 Examples of Naïve Bayes Classifier When multiplying numerical values, you should never have a zero. It makes the other attributes don’t count anymore problem with Naïve Bayes! But we have a solution… 6.5.7 Solution to the problem of a 𝒗𝒂𝒍𝒖𝒆 𝒐𝒇 𝒂𝒏 𝒂𝒕𝒕𝒓𝒊𝒃𝒖𝒕𝒆 = 𝟎 If one of the conditional probability is zero, then the entire expression becomes zero! we have to use other probability estimations (we already know them): 𝑁 The original (with the problem): 𝑃(𝐴𝑗 = 𝑎|𝐶 = 𝑐𝑖 ) = 𝑁𝑗𝑖 𝑁𝑗𝑖 +1 Laplace: 𝑃(𝐴𝑗 = 𝑎|𝐶 = 𝑐𝑖 ) = 𝑁 M-estimate: 𝑃(𝐴𝑗 = 𝑎|𝐶 = 𝑐𝑖 ) = 𝑁𝑐 : number of classes 𝑁𝐴𝑗 : number of values that the attribute takes 𝑝: prior probability 𝑚: parameter 6.5.8 𝑐𝑖 𝑐𝑖 +𝑁𝐴𝑗 𝑁𝑗𝑖 +𝑚𝑝 𝑁𝑐𝑖 +𝑚 Summary Robust to isolated noise points Handle missing values by ignoring the instance during probability estimate calculations Robust to irrelevant attributes 28 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Independence assumption may not hold for some attributes o Use other techniques such as Bayesian Belief Networks (BBN) Even if attributes aren’t often independent, we use NB bc it’s quick to compute and very simple! 6.6 Decision Tree Classifier 6.6.1 Solve a classification problem by asking a series of carefully crafted questions about the attributes of the test record; After each answer, a follow-up question is asked; Stop when reach a conclusion about the class label of the record. The questions and their possible answers can be organized in the form of a decision tree o Hierarchical structure consisting of nodes (noeuds) and directed edges. o Root node: has no incoming edges and zero or more outgoing edges. o Internal nodes: have exactly one incoming edge and two or more outgoing edges o Leaf node: have exactly one incoming edge and no outgoing edges. Example If Refund = Yes Cheat = No, that’s why we don’t continue further If married = no cheat=No, that’s why we don’t continue further 6.6.2 6.6.3 Advantages of a Decision Tree Based Classification Inexpensive to construct Extremely fast at classifying unknown records Easy to interpret for small-sized trees Accuracy is comparable to other classification techniques for many simple data sets General Process to create a model Training Set Learn Model (induction): algorithm Model: in this case, decision tree Apply Model (deduction) Test Set To create the tree several algorithms. (Hunt’s, CART, ID3, …) 6.6.4 Hunt’s Algorithm: General Structure 𝐷𝑡 : set of training records that reach a node 𝑡 General Procedure: o If 𝐷𝑡 contains records that belong the same class 𝑦𝑡 : 𝑡 is a leaf node labeled as 𝑦𝑡 o If 𝐷𝑡 is an empty set: 𝑡 is a leaf node labeled by the default class 𝑦𝑑 o If 𝐷𝑡 contains records that belong to more than one class, use an attribute test to split the data into smaller subsets. Recursively apply the procedure to each subset 29 Corentin Cossettini Semestre de printemps 2022 6.6.5 Université de Neuchâtel Bachelor en sciences économiques 2ème année Tree Induction How to choose the question to ask from each node (inkl. the root node)? Greedy Strategy: Split the records based on an attribute test that optimizes certain criterion. Issues we are facing when doing this: Determine how to split the records o How to specify the attribute test condition? o How to determine the best split? Determine when to stop splitting Specify Test Condition Depends on attribute types. Nominal, Ordinal, Continuous Depends on number of ways to split: 2-way split, multi-way split (1) Split for Nominal attributes (2) Split for Ordinal attributes Numerical values binary splits This split is also possible, even if the order isn’t fully respected. (3) Split for Continuous attributes Different ways of handling the thing: Discretization to form an ordinal categorical attribute Transferring continuous functions into discrete counterparts Binary Decision: 𝐴 < 𝑣 𝑜𝑟 𝐴 ≥ 𝑣 Find the best cut possible among all the splits! 30 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Determine the best split The data are the students: we create the tree to decide when receiving new data. Best variant: 2nd bc the data are the students and it helps classify them. The 3rd is too perfect bc every node is pure (one class is represented per node). Greedy Approach : nodes with homogeneous class distribution are preferred Non-homogeneous, high impurity Homogeneous, low impurity We need a measure of node impurity! How to Find the Best Split Compute M1 and M2, several ways to do it. After that, compare them with M0 to see if won or not (we should win). 6.6.6 Measures of impurity 1) GINI 𝟐 Gini Index for a given node 𝑡: 𝑮𝑰𝑵𝑰(𝒕) = 𝟏 − ∑𝒋(𝒑(𝒋|𝒕)) 𝑝(𝑗|𝑡): relative frequency of class 𝑗 at node 𝑡 1 𝑀𝑎𝑥. 𝑝𝑜𝑠𝑠𝑖𝑏𝑙𝑒 𝑣𝑎𝑙𝑢𝑒 = 1 − 𝑛𝑐 When records are equally distributed among all 𝑛𝑐 classes 𝑀𝑖𝑛. 𝑝𝑜𝑠𝑠𝑖𝑏𝑙𝑒 𝑣𝑎𝑙𝑢𝑒 = 0 When records belong to one class if it’s a pure node, GINI = 0 When a node t is split into k partitions (children), the aggregated GINI impurity measure 𝒏 for all children is computed as: 𝑮𝑰𝑵𝑰𝑺𝑷𝑳𝑰𝑻 = ∑𝒌𝒊=𝟏 𝒏𝒊 𝑮𝑰𝑵𝑰(𝒊) o 𝑛𝑖 : number of records at child i o 𝑛: number of records at note t Seek for the lowest GINIsplit the lowest impurity The quality of the split (GINI gain) is expressed as: 𝑮𝒂𝒊𝒏𝑮𝑰𝑵𝑰 = 𝑮𝑰𝑵𝑰(𝒕) − 𝑮𝑰𝑵𝑰𝑺𝑷𝑳𝑰𝑻 31 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Example Binary Attributes Categorical Attributes Continuous Attributes 2) Entropy (information gain) Entropy at a given node 𝑡 : 𝑬𝒏𝒕𝒓𝒐𝒑𝒚(𝒕) = − ∑ 𝒑(𝒋|𝒕) 𝐥𝐨𝐠 𝟐(𝒑(𝒋|𝒕)) 𝒋 Measures homogeneity of a node 𝑀𝑎𝑥. 𝑣𝑎𝑙𝑢𝑒 = log(𝑛𝑐 ) When records are equally distributed among all classes 𝑀𝑖𝑛. 𝑣𝑎𝑙𝑢𝑒 = 0 When all records belong to one class When a node t is split into k partitions (children), the aggregated entropy measure for 𝒏 all children nodes is computed as: 𝑬𝑵𝑻𝑹𝑶𝑷𝒀𝑺𝑷𝑳𝑰𝑻 = ∑𝒌𝒊=𝟏 𝒏𝒊 𝑬𝒏𝒕𝒓𝒐𝒑𝒚(𝒊) o 𝑛𝑖 : number of records at child i o 𝑛: number of records at note t The quality of the split (Information gain) is expressed as: 𝑮𝒂𝒊𝒏𝑬𝑵𝑻𝑹𝑶𝑷𝒀 = 𝑬𝑵𝑻𝑹𝑶𝑷𝒀(𝒕) − 𝑬𝑵𝑻𝑹𝑶𝑷𝒀𝑺𝑷𝑳𝑰𝑻 Measures reduction in entropy achieved because of the split. Choose the split that achieves most reduction! 3) Classification Error Classification error at node 𝑡 : 𝑬𝒓𝒓𝒐𝒓(𝒕) = 𝟏 − 𝒎𝒂𝒙𝒋 𝒑(𝒋|𝒕) Measures misclassification error made by a node 1 𝑀𝑎𝑥. 𝑣𝑎𝑙𝑢𝑒 = 1 − 𝑛 𝑐 When records are equally distributed among all classes 𝑀𝑖𝑛. 𝑣𝑎𝑙𝑢𝑒 = 0 When all records belong to one class The aggregated classification error for all children nodes: 32 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 𝒌 𝑬𝑹𝑹𝑶𝑹𝑺𝑷𝑳𝑰𝑻 = ∑ o o 6.6.7 𝒊=𝟏 𝒏𝒊 𝑬𝒓𝒓𝒐𝒓(𝒊) 𝒏 𝑛𝑖 : number of records at child i 𝑛: number of records at note t 𝑮𝒂𝒊𝒏𝑬𝑹𝑹𝑹𝑶𝑹 = 𝑬𝑹𝑹𝑶𝑹(𝒕) − 𝑬𝑹𝑹𝑶𝑹𝑺𝑷𝑳𝑰𝑻 Practical Issues of Classification 6.6.7.1 Underfitting and Overfitting Underfitting: the model is too simple Not yet learned the true structure of data Error rates on training set and on test set are large Solution: créer un modèle un peu plus complexe (réduire les conditions : ex : minsplit passe de 20 à 2) Overfitting: the model is more complex than necessary Si on a essayé de trop apprendre les données par cœur. Noise dans le data (valeurs qui ne nous aident pas) Error rate on training set is small, but the error rate on test data is large Training error no longer provides a good estimate of how well the tree will perform on previously unseen records Need new ways for estimating errors l’arbre devient trop large Solution: see below Underfitting : when model is too simple, both training and test errors are large Overfitting due to Noise Overfitting due to Insufficient Examples Lack of data points in the lower half of the diagram makes it difficult to predict correctly the class labels of that region Insufficient number of training records in the region causes the decision tree to predict the test examples using other training records that are irrelevant to the classification task Estimating Generalization Errors 33 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Reduced error pruning (REP), 3ème méthode: utiliser un jeu de donner séparé Occam’s Razor (principle) Given 2 models of similar generalization errors, one should prefer the simpler model over the more complex model For complex models, there is a greater chance that it was fitted accidentally by errors in data Therefore, one should include model complexity when evaluating a model Minimum Description Length (MDL) How to Adress Overfitting (solutions) 34 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 1. Pre-Pruning On ne laisse pas croître l’arbre, on s’arrête avant qu’il soit trop grand. Stop the algorithm before it becomes a fully-grown tree Typical stopping conditions for a node o Stop if all instances belong to the same class o Stop if all the attribute values are the same More restrictive conditions o Stop if number of instances is less than some user-specified threshold o Stop if class distribution of instances are independent of the available features (ex: using a 𝜒 2 test) o Stop if expanding the current node does not improve impurity measures (ex: Gini of Information Gain) 2. Post-Pruning Grow decision tree to its entirety Trim the nodes of the decision tree in a bottom-up fashion If generalization error improves after trimming, replace sub-tree by a leaf node Class label of leaf node is determined from majority class of instances in the subtree Can use MDL for post-pruning Example 6.6.7.2 Missing values Affect decision tree construction in 3 different ways 1. How impurity measures are computed 2. How to distribute instance with missing value to child nodes 3. How a test instance with missing value is classified 6.6.7.3 Data Fragmentation Number of instances gets smaller as you traverse down the tree Number of instances at the leaf nodes could be too small to make any statistically significant decision 6.6.7.4 Search Strategy Finding an optimal decision tree is NP-hard The algorithm presented so far uses a greedy, top-down, recursive partitioning strategy to induce a reasonable solution Other strategies? Bottom-up Bi-directional 6.6.7.5 Expressiveness Decision tree provides expressive representation for learning discrete-valued function 35 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année But they do not generalize well to certain types of Boolean functions o Example: parity function Class = 1 if there is an even number of Boolean Attributes with true value = True Class = 0 if there is an odd number of Boolean Attributes with true value = True o For accurate modeling, must have a complete tree Not expressive enough for modeling continuous variables o Particularly when test condition involves only a single attribute at-a-time Decision Boundary Border line between 2 neighboring regions of different classes is known as decision boundary Decision boundary is parallel to axes because test condition involves a single attribute at-a-time Oblique Decision Trees Test condition may involve multiple attributes More expressive representation Finding optimal test condition is computationally expensive 6.7 Model Evaluation 6.7.1 Metrics for Performance Evaluation: “How to evaluate the performance of a model?” Methods for Performance Evaluation: “How to obtain reliable estimates?” Methods for Model Comparison: “How to compare the relative performance among competing models?” Metrics for Performance Evaluation Focus on the predictive capability of a model Confusion matrix 36 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Metrics 𝑎+𝑑 𝑇𝑃+𝑇𝑁 𝐴𝑐𝑐𝑢𝑟𝑎𝑐𝑦 = 𝑎+𝑏+𝑐+𝑑 = 𝑇𝑃+𝑇𝑁+𝐹𝑃+𝐹𝑁 𝐸𝑟𝑟𝑜𝑟 𝑟𝑎𝑡𝑒 = 1 − 𝐴𝑐𝑐𝑢𝑟𝑎𝑐𝑦 = 𝑇𝑃+𝑇𝑁+𝐹𝑃+𝐹𝑁 𝑇𝑟𝑢𝑒 𝑃𝑜𝑠𝑖𝑡𝑖𝑣𝑒 𝑅𝑎𝑡𝑒 (𝑇𝑃𝑅)(𝑠𝑒𝑛𝑠𝑖𝑡𝑖𝑣𝑖𝑡𝑦) = 𝑇𝑃+𝐹𝑁 𝑇𝑟𝑢𝑒 𝑁𝑒𝑔𝑎𝑡𝑖𝑣𝑒 𝑅𝑎𝑡𝑒 (𝑇𝑁𝑅)(𝑠𝑝𝑒𝑐𝑖𝑓𝑖𝑐𝑖𝑡𝑦) = 𝑇𝑁+𝐹𝑃 𝐹𝑎𝑙𝑠𝑒 𝑃𝑜𝑠𝑖𝑡𝑖𝑣𝑒 𝑅𝑎𝑡𝑒 (𝐹𝑃𝑅) = 𝑇𝑁+𝐹𝑃 𝐹𝑎𝑙𝑠𝑒 𝑁𝑒𝑔𝑎𝑡𝑖𝑣𝑒 𝑅𝑎𝑡𝑒 (𝐹𝑁𝑅) = 𝑇𝑃+𝐹𝑁 𝐹𝑃+𝐹𝑁 𝑇𝑃 𝑇𝑁 𝐹𝑃 𝐹𝑁 Limitation of Accuracy Consider a 2-class problem o Number of Class 0 examples = 9990 o Number of Class 1 examples = 10 If model predicts everything to be class 0: 9990 o 𝐴𝑐𝑐𝑢𝑟𝑎𝑐𝑦 = 10000 = 99.9 Misleading because the model does not detect any class 1 example Cost Matrix Cost Matrix 𝐶(𝑖, 𝑗): cost of misclassifying class 𝑖 example as class 𝑗 𝐶𝑜𝑠𝑡 = 𝐶(𝑌𝑒𝑠, 𝑌𝑒𝑠)𝑎 + 𝐶(𝑌𝑒𝑠, 𝑁𝑜)𝑏 + 𝐶(𝑁𝑜, 𝑌𝑒𝑠)𝑐 + 𝐶(𝑁𝑜, 𝑁𝑜)𝑑 Example: 2 models with the same cost matrix Cost vs Accuracy 37 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 Cost-Sensitive Measures Mesures utilisées par Google dans son moteur de recherche Chercher quelque chose dans un grand nombre de documents 𝑎 𝑃𝑟𝑒𝑐𝑖𝑠𝑖𝑜𝑛(𝑝) = 𝑎+𝑐 𝑎 𝑅𝑒𝑐𝑎𝑙𝑙(𝑟) = 𝑎+𝑏 2𝑟𝑝 2𝑎 𝐹 − 𝑚𝑒𝑎𝑠𝑢𝑟𝑒(𝐹) = 𝑟+𝑝 = 2𝑎+𝑏+𝑐 𝑊𝑒𝑖𝑔ℎ𝑡𝑒𝑑 𝐴𝑐𝑐𝑢𝑟𝑎𝑐𝑦 = 𝑤 the bigger the f-measure, the better 𝑤1 𝑎+𝑤4 𝑑 1 𝑎+𝑤2 𝑏+𝑤3 𝑐+𝑤4 𝑑 Remarques Precision is biased towards 𝐶(𝑌𝑒𝑠, 𝑌𝑒𝑠) and 𝐶(𝑁𝑜, 𝑌𝑒𝑠) Recall is biased towards 𝐶(𝑌𝑒𝑠, 𝑌𝑒𝑠) and 𝐶(𝑌𝑒𝑠, 𝑁𝑜) F-measure is biased towards all except 𝐶(𝑁𝑜, 𝑁𝑜) o Combines precision and recall mean of the two 6.7.2 Methods for Performance Evaluation Comment obtenir une estimation de la performance ? Note : la performance du modèle peut dépendre d’autre facteurs que l’algorithme (class distribution (1%-99%) ; cost of misclassification ; size of training and test sets) Learning Curve Shows how accuracy changes with varying sample size Requires a sample schedule for creating one! /!/: ↓ 𝑠𝑎𝑚𝑝𝑙𝑒 𝑠𝑖𝑧𝑒 → 𝑒𝑟𝑟𝑜𝑟 𝑜𝑓 𝑡ℎ𝑒 𝑒𝑠𝑡𝑖𝑚𝑎𝑡𝑒 ↑ Methods of Estimation 38 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 1. Holdout Reserve 2/3 for training and 1/3 for testing 2. Repeated Holdout Do it 3 times to test every subset 3. Cross validation o Partition data into 𝑘 disjoint subsets o 𝑘-fold: train on 𝑘−1 partitions, test on the remaining one o Leave-one-out: 𝑘 = 𝑛 4. Repeated cross validation Do it 𝑘 times ROC (Receiver Operating Characteristic) Characterizes the trade-off between positive hits (TPR) and false alarms (FPR) ROC curve plots TPR (y-axis) against FPR (x-axis) Performance of each classifier represented as a point on the ROC curve o Changing the threshold 𝑡 (seuile) of algorithm, sample distribution or cost matrix changes the location of the point ROC Curve 1-dimensional data set containing 2 classes (positive and negative) Any points located at 𝑥 > 𝑡 is classified as positive At threshold 𝑡 ≈ 0.3: 𝑇𝑃𝑅 = 0.5 𝐹𝑃𝑅 = 0.12 𝑇𝑁𝑅 = 0.88 𝐹𝑁𝑅 = 0.5 (𝑇𝑃𝑅, 𝐹𝑃𝑅) (0,0): declare everything to be negative class (1,1): declare everything to be positive class (1,0): ideal We have to find the nearest point to (1,0) Diagonal line: Random guessing Below diagonal line: prediction is opposite of the true class ROC for Model Comparison 39 Université de Neuchâtel Bachelor en sciences économiques 2ème année Corentin Cossettini Semestre de printemps 2022 No model consistently outperforms the other: 𝑀1 is better for small FPR 𝑀2 is better for large FPR Area under the ROC curve; Ideal: 𝐴𝑟𝑒𝑎 = 1 Random guess: 𝐴𝑟𝑒𝑎 = 0.5 How to construct a ROC curve Use classifier that produces posterior probability for each test instance 𝑃(+|𝐴) Sort the instances according to 𝑃(+|𝐴) in decreasing order Apply threshold 𝑡 at each unique value of 𝑃(+|𝐴) Count the number of TP, FP, TN, FN at each threshold 𝑇𝑃 TP rate, 𝑇𝑃𝑅 = FP rate, 𝐹𝑃𝑅 = 𝑇𝑃+𝐹𝑁 𝐹𝑃 𝐹𝑃+𝑇𝑁 Si 𝑡 = 0.7 on envoie à 7 personnes 6.7.3 Test of Significance (confidence intervals) Given 2 models: o 𝑀1 : accuracy = 85%, tested on 30 instances o 𝑀2 : accuracy = 75%, tested on 5000 instances Can we say 𝑀1 is better than 𝑀2 ? o (1) What’s the confidence we can place on the accuracy of 𝑀1 and 𝑀2 ? o (2) Is the difference in performance measure explained as a result of random fluctuations in the test set? (1) CI for Accuracy 40 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année Accuracy is the mean Prediction is a Bernoulli trial o 2 possible outcomes: correct or wrong for example o All the Bernoulli trials represent a Binominal Distribution: 𝑋~𝐵𝑖𝑛(𝑁, 𝑝), with 𝑋 the number of correct predictions Example: toss a faire coin 50 times Expected number of heads: 𝐸(𝑋) = 𝑁𝑝 = 50 ∗ 0.5 = 5 Variance: 𝑉𝑎𝑟(𝑋) = 𝑁𝑝(1 − 𝑝) = 50 ∗ 0.5 ∗ 0.5 = 12.5 Probability the head show up 20 times: 50 𝑃(𝑋 = 20) = 0.520 (1 − 0.5)30 ≈ 0.04 20 Comes from the binomial distribution 𝑥 Given 𝑥 (# of correct predictions) and 𝑁 (# of test instances): a𝑐𝑐 = 𝑁 Acc sample // p population Is it possible to predict p, the true accuracy of the model (for the whole population)? For large test sets (𝑁 > 30), acc has a normal distribution (𝜇 = 𝑝, 𝜎 2 = 𝑃 𝑍𝛼 < 𝑎𝑐𝑐 − 𝑝 √𝑝(1 − 𝑝) ( 𝑁 Confidence Interval for 𝑝: 2 < 𝑍1−𝛼 𝑝(1−𝑝) ) 𝑁 =1−𝛼 2 ) 2𝑁(𝑎𝑐𝑐) + 𝑍𝛼2 ± √𝑍𝛼2 + 4𝑁𝑎𝑐𝑐 − 4𝑁(𝑎𝑐𝑐)2 2 1−𝛼 𝐶𝐼[𝑝] = 2 2 (𝑁 + 𝑍𝛼2 ) 2 Example: (2) Comparing Performance of 2 Models with 2 different sizes. Is the bigger one really statistically different from the smaller one ? Test the difference of the 2 models 𝑀1 and 𝑀2 Given 2 models 𝑀1 and 𝑀2 , which one is better? o 𝑀1 is tested on 𝐷1 (𝑠𝑖𝑧𝑒 = 𝑛1), 𝑓𝑜𝑢𝑛𝑑 𝑒𝑟𝑟𝑜𝑟 𝑟𝑎𝑡𝑒 = 𝑒1 o 𝑀2 is tested on 𝐷2 (𝑠𝑖𝑧𝑒 = 𝑛2 ), 𝑓𝑜𝑢𝑛𝑑 𝑒𝑟𝑟𝑜𝑟 𝑟𝑎𝑡𝑒 = 𝑒2 o Assume 𝐷1 and 𝐷2 are indepenant o Assume 𝑛1 and 𝑛2 are sufficiently large, then: 𝑒 (1−𝑒 ) 𝑒1 ~𝑁(𝜇1 , 𝜎1 ), 𝑒2 ~𝑁(𝜇2 , 𝜎2 ), with 𝜎̂𝑖 = 𝑖 𝑛 𝑖 𝑖 To test if performance difference is statistically significant: 𝑑 = 𝑒1 − 𝑒2 o 𝑑~𝑁(𝑑𝑡 , 𝜎𝑡 ) where 𝑑𝑡 is the true difference o Since 𝐷1 and 𝐷2 are independent, their variance adds up: 41 Corentin Cossettini Semestre de printemps 2022 Université de Neuchâtel Bachelor en sciences économiques 2ème année 𝑒1 (1 − 𝑒1 ) 𝑒2 (1 − 𝑒2 ) + 𝑛1 𝑛2 = 𝑑 ± 𝑍𝛼/2 𝜎̂𝑡 𝜎𝑡2 = 𝜎12 + 𝜎22 ≅ 𝜎̂12 + 𝜎̂22 = 1−𝛼 o At (1 − 𝛼) confidence level, 𝐶𝐼[𝑑 𝑡] Example: If zero is in the interval, not statistically significant If zero is not in the interval, statistically significant 6.7.4 Comparing performance of 2 Algorithms With different models! 42