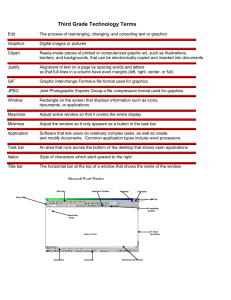

LIBRARY
A compendium of articles from Electronic Design
Copyright © 2019 by Informa. All rights reserved.
CONTENTS
Jon Peddie Biography
CHAPTER 1: NEC µPD7220 Graphics Display Controller: The first graphics processor chip
CHAPTER 2: EGA to VGA
CHAPTER 3: Intel's 82786: Intel's First Discrete Graphics Coprocessor
CHAPTER 4: Texas Instruments TMS34010 and VRAM
CHAPTER 5: IBM's professional graphics, the PGC and 8514/A
CHAPTER 6: IBM's VGA
Resources from our sister brands
Bill Wong, Editor, Senior Content Director
GRAPHICS CONTROLLERS have been an important part of computer systems almost since their inception, and they have steadily progressed from providing limited support for low-resolution displays to platforms that provide real-time ray tracing support. Early display controllers provided features like bit blitting and sprites. Many of these features started out on PCs to support gaming and other applications. These technologies migrated into cell phones that turned into the smartphones of today, which are essentially supercomputers-in-a-pocket.
Staying abreast of the latest and greatest technology, including computer graphics, is what we do at Electronic Design, but it is often interesting and useful to look back at what was and how we got to where we are now. Plus, old technologies and methodologies are often useful now. For example, 8-bit game programmers moved from PCs to cell phones as PCs moved on to higher-end graphics controllers, while the cell phones of the time were still more limited.
I worked with many of these early graphics chips even before I was the first Lab Director at PC Magazine's famous PC Labs many years ago. One name that stands out in the graphics world is Jon Peddie, who has been following and reporting on this technology area as long as I have. I have known Jon Peddie for many years. He has always been my go-to person when it comes to graphics technology.
Jon has been writing short articles about the graphics chips from a historical perspective. The articles can be found online, but we have collected them here so you can easily find them. This is just the beginning of the series, and it starts with the NEC µPD7220 Graphics Display Controller. We hope you enjoy this series of ebooks that delve into the history of graphics.
More Resources from Electronic Design
☞ LEARN MORE @ electronicdesign.com | 1
DR. JON PEDDIE is one of the pioneers of the graphics industry and formed Jon Peddie Research (JPR) to provide customer-intimate consulting and market forecasting services, where he explores the developments in computer graphics technology to advance economic inclusion and improve resource efficiency. Recently named one of the most influential analysts, Peddie regularly advises investors in the technology sector. He is an advisor to the U.N., to several companies in the computer graphics industry, and to the Siggraph Executive Committee, and in 2018 he was accepted as an ACM Distinguished Speaker. Peddie is a senior and lifetime member of IEEE, a former chair of the IEEE Super Computer Committee, and the former president of The Siggraph Pioneers. In 2015 he was given the Life Time Achievement award from the CAAD society.
Peddie lectures at numerous conferences and universities worldwide on topics pertaining to graphics technology and the emerging trends in digital media technology. He has appeared on CNN, TechTV, and Future Talk TV, and he is frequently quoted in trade and business publications. Dr. Peddie has published hundreds of papers and has authored and contributed to no fewer than thirteen books in his career, his most recent being Augmented Reality, where we all will live. He is a contributor to TechWatch, for which he writes a series of weekly articles on AR, VR, AI, GPUs, and computer gaming. He is also a regular contributor to IEEE, Computer Graphics World, and several other leading publications.
CHAPTER 1: NEC µPD7220 Graphics Display Controller: The first graphics processor chip
DR. JON PEDDIE
This is the first in a series of short articles about graphics chips, controllers, and processors that changed the course of the computer graphics (CG) industry. In buzzword talk, they were disruptive devices. In addition to changing how things were done, the application of those chips made a lot of companies successful. Ironically, all of them are gone now.
In 1982, NEC changed the landscape of the emerging computer graphics market just as the PC was being introduced. The PC would be the major change to the heretofore specialized and expensive computer graphics industry. NEC Information Systems, the US arm of the Nippon Electric Company (now NEC), introduced the µPD7220 Graphics Display Controller (GDC) (see the figure). The project was started in 1979, and NEC presented a paper on it at the IEEE International Solid-State Circuits Conference in February 1981.
NEC's µPD7220 was the first integrated graphics controller chip (Drahtlos, Wikipedia)
Prior to the PC, graphics display systems came in two classes. The first was high-end CAD systems hung on big IBM and DEC mainframes. The second was low-end microcomputer systems based on the fledgling Intel 4004 CPU, which were the precursors of the PC. VLSI technology was just getting rolling, and devices with astounding numbers of transistors, 30,000 to 40,000, were being built with NMOS, the precursor of CMOS. CMOS was expensive and had larger feature sizes. An NMOS chip could have gates as small as 5 microns, but designers paid for that with power dissipation challenges. The µPD7220, running on 5 V, drew 1.5 W and used a 40-pin ceramic package.
The chip incorporated all the CRT control functions (known as the CRTC) as well as graphics primitives for arcs, lines, circles, and special characters. The GDC's sophisticated instruction set, graphics figure drawing, and DMA transfer capabilities minimized processor software overhead. It supported a light pen and could drive up to four megabits of bit-mapped graphics memory, which was quite a lot for the time.
Prior to the µPD7220, every graphics device had its own library of drawing primitives, with IBM's 2250 (1974) and Tektronix's 4010 (1972) being the most popular. The µPD7220 established an easy-to-use, low-level set of instructions that application developers could embed in their programs and thereby speed up drawing time.
The chip quickly became popular and was the basis for several “dumb” terminals and a few graphics terminals. (A “dumb” terminal couldn't be programmed and just displayed images and/or text.) The controller could support 1024 by 1024 pixel resolution with four planes of color. Some systems employed multiple 7220s to get more color depth.
In June 1983, Intel brought out the 82720, a clone of the µPD7220. The µPD7220 was produced until 1987, when it was replaced by a newer, faster version, the µPD72120. Seeing its success, and the emerging market for computer graphics, Hitachi and TI also introduced graphics processors a few years later.
CHAPTER 2: EGA to VGA
The initiation of bit-mapped graphics and the chip clone wars
DR. JON PEDDIE
When IBM introduced the Intel 8088-based Personal Computer (PC) in 1981, it was equipped with an add-in board (AIB) called the Color Graphics Adapter (CGA) (Fig. 1). The CGA AIB had 16 Kbytes of video memory and could drive either an NTSC-TV monitor or a dedicated 4-bit RGB CRT monitor, such as the IBM 5153 color display. It didn't have a dedicated controller and was assembled using a half dozen LSI chips.
The large chip in the center is a CRT timing controller (CRTC), typically a Motorola MC6845. Those AIBs were over 33 cm (13 in) long and 10.7 cm (4.2 in) tall.
Fig 1. IBM's CGA add-in board had 16 Kbytes of video memory (hiteched)
IBM introduced the second-generation Enhanced Graphics Adapter (EGA) (Fig. 2) in 1984. It superseded and exceeded the capabilities of the CGA, and it was in turn superseded by the VGA standard in 1987. But the EGA established a new industry. It wasn't an integrated chip. However, its I/O was well documented, and it became one of the most copied ("cloned") AIBs in history.
Fig 2. IBM's EGA add-in board superseded the CGA. Notice the similarity in form factor and layout to the CGA. (Vlask - Own work, CC BY-SA 3.0)
A year after IBM introduced the EGA AIB, Chips and Technologies came out with a chip set that duplicated what the IBM AIB could do. Within a year the low-cost clones had captured over 40% of the market. Other chip companies such as ATI, NSI, Paradise, and Tseng Labs also produced EGA clone chips and fueled the explosion of clone-based boards (Fig. 3). By 1986 there were over two dozen such suppliers, and the list was growing. Even the clones got cloned: Everex took a license from C&T so it could manufacture an EGA chip for its PCs.
The EGA controller wasn't anything special, really. It offered 640 by 350 pixel resolution with 16 colors (from a 6-bit palette of 64 colors) and a pixel aspect ratio of 1:1.37. It could adjust the frame buffer's output aspect ratio by changing the resolution, which gave it three additional, hard-wired display modes:
• 640 by 350 pixel resolution with two colors and a 1:1.37 aspect ratio
• 640 by 200 pixel resolution with sixteen colors and a 1:2.4 aspect ratio
• 320 by 200 pixel resolution with sixteen colors and a 1:1.2 aspect ratio
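The pixel aspect ratios quoted above follow from simple arithmetic. Assume each mode is stretched over the same 4:3 visible area of the tube. A pixel's height divided by its width is then (3 / lines) / (4 / columns). The short Python sketch below checks the EGA figures and also decodes a 6-bit palette entry into red, green, and blue levels. The palette bit order is the commonly documented EGA layout and is an assumption here: bits 0 to 2 carry the primary B, G, R signals, and bits 3 to 5 carry the secondary, lower-intensity b, g, r signals. The function names are illustrative.

```python
"""EGA mode arithmetic: pixel aspect ratios and 6-bit palette decoding (a sketch)."""


def pixel_aspect(columns: int, lines: int,
                 screen_w: float = 4.0, screen_h: float = 3.0) -> float:
    """Return pixel height / pixel width for a mode filling a screen_w:screen_h area."""
    pixel_w = screen_w / columns  # physical width of one pixel
    pixel_h = screen_h / lines    # physical height of one pixel
    return pixel_h / pixel_w


def ega_palette_to_rgb(entry: int) -> tuple[int, int, int]:
    """Decode a 6-bit palette value into 8-bit (red, green, blue).

    Assumed bit order: bits 0-2 = primary B, G, R (two-thirds intensity),
    bits 3-5 = secondary b, g, r (one-third intensity).
    """

    def level(primary_bit: int, secondary_bit: int) -> int:
        primary = (entry >> primary_bit) & 1
        secondary = (entry >> secondary_bit) & 1
        return (2 * primary + secondary) * 0x55  # one of 0, 85, 170, 255

    return level(2, 5), level(1, 4), level(0, 3)


if __name__ == "__main__":
    modes = [(640, 350, 16), (640, 350, 2), (640, 200, 16), (320, 200, 16)]
    for columns, lines, colors in modes:
        print(f"{columns} x {lines}, {colors} colors: 1:{pixel_aspect(columns, lines):.2f}")
    print(ega_palette_to_rgb(0b010100))  # primary red + secondary green
```

Running the sketch prints 1:1.37, 1:1.37, 1:2.40, and 1:1.20 for the four modes. For the palette value 0b010100 it prints (170, 85, 0), a brown. Each channel has four levels, which is where the 64-color (4 × 4 × 4) palette comes from.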
Some EGA clones extended the EGA features to include 640 by 400, 640 by 480, and even 720 by 540 pixel resolutions. They also added hardware detection of the attached monitor and a special 400-line interlace mode for use with older CGA monitors.
The big breakthrough for the EGA, and the reason it attracted so many copiers, was that its graphics modes were bit-mapped planar. The previous-generation CGA and Hercules AIBs had used interleaved layouts instead. The EGA's video memory was divided into four pages, one for each component of the RGBI color space. (The 640 by 350 two-color mode was the exception, with two pages.) Each bit represented one pixel. If a bit in the red page was enabled, and none of the equivalent bits in the other pages were, a red pixel appeared in that location on-screen. If all the other bits for that pixel were also enabled, it would become white, and so forth.
Fig 3. With the advent of an integrated EGA controller, the AIBs started to get smaller (Old Computers)
The EGA moved us out of character-based graphics and into true bit-mapped graphics, based on a standard. Similar things had been accomplished with modifications to microcomputers such as the Commodore PET and Radio Shack TRS-80, and directly from manufacturers such as IMSI and Color Graphics, but they did not use an integrated VLSI chip. The EGA was the last AIB to have a digital output. With the VGA came analog signaling and a larger color palette.
EGA begets VGA to XGA
With the introduction of the IBM PC, personal/micro and even workstation-class graphics got a new segment or category: consumer/commercial. The users in the commercial segment were not too concerned with high resolution, and certainly not with graphics performance. Certain users of spreadsheets liked higher resolution, and a special class of desktop publishing had a demand for very high resolution. But the volume market was commercial and consumer.
Even that segment was subdivided. A certain class of consumers, gamers, did want high resolution and performance. However, they wouldn't pay the price that professional graphics (i.e., workstation) users were being charged.
PGA
The NEC 7220 and Hitachi 63484 ACRTC, discussed in previous Famous Graphics Chips articles, went to the professional market. IBM, the industry leader and standard setter, recognized this. In the same year it introduced the commercial/consumer-class EGA, it also introduced a professional graphics AIB, the PGA. The PGA offered a high resolution of 640 by 480 pixels with 256 colors out of a palette of 4,096 colors. The refresh rate was 60 Hz. Like the EGA, the PGA was not an integrated chip.
8514
IBM discontinued the PGA in 1987, replacing it with the much higher resolution 8514 and breaking with the acronym description of AIBs. The 8514 could generate 1024×768 pixels at 256 colors and 43.5 Hz interlaced. The 8514 was a significant development and IBM's first integrated high-resolution VLSI graphics chip. It will be discussed in a future article and is mentioned here for chronological reference.
VGA
IBM's Video Graphics Array was the most significant graphics chip ever produced in terms of volume and longevity. The VGA was introduced with the IBM PS/2 line of computers in 1987, along with the 8514. The two AIBs shared an output connector that became the industry standard for decades: the VGA connector. Among other things, the VGA connector was the catalyst that led to the formation of the Video Electronics Standards Association (VESA) in 1989. This too is a significant device and will be covered separately. It is listed here to show the complexity of the market at the time and how rapidly things were changing.
Summary
The EGA was really the foundation controller, and later the chip, of the commercial and consumer PC graphics market.
[Timeline, 1982 to 1990, with professional and commercial/consumer tracks: NEC7220, Hitachi 63484, TMS34010, Intel 82786, IBM 8514, XGA, EGA clones, VGA]
Fig 4. VLSI graphics chips started in 1982 with the NEC7220. By 1990, the XGA was top dog.
By 1984 the computer market had consolidated to two main platforms: PCs and workstations. Microcomputers had died off in the early 1980s due to the introduction of the PC. Gaming (also called video) consoles stayed as living-room, TV-based devices, and big machines called servers were replacing what had been mainframes. Supercomputers were still being produced at the rate of three or four a year. All of those machines used some type of graphics, and a few graphics terminals were still produced to serve the small but consistent high-end markets. However, by 1988 they all used standard graphics chips, sometimes several of them (Fig 4).
The EGA specification was the catalyst for the establishment of some companies and the increased success of others. One such company, AMD, is still with us, having acquired pioneer graphics company ATI (also an EGA clone maker).
CHAPTER 3: Intel's 82786
Intel's First Discrete Graphics Coprocessor
DR. JON PEDDIE
Intel saw the rise in discrete graphics controllers such as NEC's µPD7220 (which it even licensed), Hitachi's HD63484, and the several clones of IBM's EGA. It concluded that it was leaving a socket unfilled. Intel's intention always was, and still is, to provide every bit of silicon in a PC, and a graphics controller would be no exception. In 1986 the company introduced the 82786 (Fig. 1) as an intelligent graphics coprocessor. It would replace subsystems and boards that traditionally used discrete components and/or software for graphics functions.
It was designed to be used with any microprocessor, including Intel's 16-bit 80186 and 80286 and 32-bit 80386.(1) The company announced that the 82786 was available in a single 88-pin grid array or leaded carrier. According to Intel, the chip integrated three units:
• a graphics processor
• a display processor with a CRT controller
• a bus interface unit with a DRAM/VRAM controller supporting 4 Mbytes of memory, which could consist of both graphics and system memory
Intel was in the game.
Fig 1. The Intel 82786 was the first graphics chip to support multiple windows in hardware (Source: commons.wikimedia.org)
[Block diagram: M82786 with graphics processor, display processor, and bus interface unit (BIU) with DRAM/VRAM controller; CPU; system memory; graphics memory; transceiver; system address bus; system data bus; M82786 data bus; display]
Fig 2. Intel's 82786 graphics controller included a graphics processor and display processor (source: Intel)
The Graphics Processor (GP) and the Display Processor (DP) were independent processors in the 82786 (Fig. 2). The Bus Interface Unit (BIU), with its DRAM/VRAM controller, arbitrated bus requests between the Graphics Processor, the Display Processor, and the external CPU or bus master.
Intel argued that the integrated design of the 82786 would increase programming efficiency and overall performance. It would also decrease development and production time and costs for many microprocessor-based graphics applications, such as personal computers, engineering workstations, terminals, and laser printers. The company claimed that several factors would make programming the 82786 flexible and straightforward: compatibility with Intel microprocessors, the many device-independent standards, and the IBM Personal Computer bitmap memory format, combined with support for international character sets, multitasking, and an 8- or 16-bit host. The extensive features of the 82786, said Intel, would accommodate many designs.
The list below contains some of the main features of the 82786.
• Integrated drawing engine with a high-level computer graphics interface instruction set
• Supports multiple character sets (fonts) that can be used simultaneously for text display applications, rapid pattern fill, and international characters
• Hardware support for fast manipulation and display of multiple windows on the screen
• DRAM/VRAM controller supporting up to 4 Mbytes of graphics memory, shift registers, and a DMA channel; supports sequential-access DRAMs and dual-port video DRAMs (VRAMs)
• Fast bit-block transfers (bitblt) between system and graphics memory
• Supports up to 200 MHz CRTs or other video interfaces
• Up to 256 simultaneous colors per frame
• Programmable video timing
• IBM Personal Computer bitmap formats
Support for high-resolution displays using a 25 MHz pixel clock enabled the 82786 to display up to 256 colors simultaneously, which was a biggie at the time. According to Intel, systems designed with multiple 82786s, or with a single 82786 and VRAMs, could support virtually unlimited color and resolution.
[Figure: VRAMs read and write simultaneously; DRAMs read and write through a single port]
Key to the 82786 was its memory structure. It could access either of two kinds of memory: graphics memory directly supported by the integral DRAM/VRAM controller, or external system memory that resided on the CPU bus. When the 82786 accessed system memory, it controlled the bus and operated in Master Mode. The chip could also operate as a Slave, with the CPU accessing the 82786 graphics memory and the internal registers. From the software standpoint, the 82786 accessed graphics and external system memory in the same manner.
However, performance increased when the 82786 accessed its own graphics memory. The 82786 DRAM/VRAM controller accessed that memory directly, without contention from the CPU. Conversely, the CPU could access its own system memory more quickly than graphics memory, because there it did not encounter contention from the Display Processor or Graphics Processor.
Another feature of the 82786 was its bitmap organization. It replaced the traditional bit-plane memory model with sequential ordering (linear memory). This took advantage of the fast sequential access modes of DRAMs or dual-port video DRAMs (VRAMs) to gain performance (Fig. 3). Texas Instruments introduced the first commercial VRAM in 1983, three years before the 82786. Various graphics add-in board (AIB) suppliers adopted it, which was part of what Intel was reacting to.
Fig 3. DRAMs have a single data port while VRAMs are dual ported.
The 82786 supported a packed-pixel bitmap organization for color, in which all color bits for each pixel were stored in the same byte in memory. In the traditional bit-plane model, each plane defined separate color information. For example, a 4-plane bitmap described a bitmap with 16 colors (four bits per pixel), and each byte of a plane contained one bit of color information for each of eight pixels. In the 82786 packed-pixel model at four bits per pixel, each byte stored data for two pixels.
The GP drew all geometric objects and characters and moved images within and between bitmaps. It created and updated the bitmap, executed commands placed in memory by the host CPU, and updated the bitmap memory for the DP. The GP's high-level commands provided high-speed drawing of graphics objects and text. It performed all those functions independently of the DP.
The DP traversed the bitmaps generated by the GP or external CPU, organized the data, and displayed the bitmaps in the form of windows on the screen. The DP had a video shift register that could assemble several windows on the screen from different bitmaps in memory. It could also zoom any of the windows in the horizontal and/or vertical directions. When the DP detected a window edge, it automatically switched to the next bitmap to display the subsequent window. Microsoft's Windows 1 (Fig. 4) had come out, and versions 1.02 and 1.03 were released in 1986, so window management was now important.
Fig 4. The first version of Microsoft Windows took advantage of the latest graphics hardware. (source: Hoolooh)
Essentially, the DP operated as an address generator that accessed the appropriate portions of memory-resident bitmaps. The data fetched from bitmaps was passed to the DP CRT controller, which displayed the bitmap data on the screen. The DP CRT controller generated and synchronized the horizontal synchronization (HSync), vertical synchronization (VSync), and Blank signals. The DP performed all those functions independently of the GP.
The DP could operate as a Master or a Slave with respect to the HSync and VSync signals. The mode was selected with the S (Sync) bit in the CRT mode display control register. When the S bit was set to 1, the DP was a Slave, with the HSync and VSync signals as inputs. When the S bit was 0, the DP operated as a Master, with HSync and VSync as outputs.
The 82786 could address 4 Mbytes of memory. In those days, most systems divided memory into at least two segments. In the case of the 82786, these were graphics memory, which used the DRAM/VRAM controller, and external system memory. Dividing memory can enhance the performance of graphics applications. The DRAM/VRAM controller allowed faster access to graphics memory than to external system memory because it did not encounter contention from the CPU. The CPU accessed system memory and executed programs simultaneously, while the 82786 accessed graphics memory and executed its commands.
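The bit-plane and packed-pixel organizations described earlier in this chapter can be illustrated with a short Python sketch. So can the Display Processor's switch to a new bitmap at each window edge. The sketch assumes 4-bit pixels, with the left pixel of each packed pair in the high nibble and the leftmost pixel in bit 7 of each plane byte. The Strip structure is hypothetical. This is not Intel's descriptor format or hardware behavior, only a model of the ideas.

```python
from dataclasses import dataclass


def planar_pixel(planes: list[bytes], x: int) -> int:
    """Read pixel x from a row stored as bit planes (plane i supplies bit i).

    Each plane byte covers eight pixels; the leftmost pixel is bit 7.
    """
    value = 0
    for i, plane in enumerate(planes):
        bit = (plane[x // 8] >> (7 - x % 8)) & 1
        value |= bit << i
    return value


def packed_pixel(row: bytes, x: int) -> int:
    """Read 4-bit pixel x from a packed-pixel row (two pixels per byte).

    Assumption: the left pixel of each pair sits in the high nibble.
    """
    byte = row[x // 2]
    return byte >> 4 if x % 2 == 0 else byte & 0x0F


def planar_to_packed(planes: list[bytes], width: int) -> bytes:
    """Convert one four-plane row into the packed layout."""
    out = bytearray((width + 1) // 2)
    for x in range(width):
        shift = 4 if x % 2 == 0 else 0
        out[x // 2] |= planar_pixel(planes, x) << shift
    return bytes(out)


@dataclass
class Strip:
    """Hypothetical per-window slice of one scan line."""
    start: int      # first screen column covered by this window
    end: int        # one past the last screen column
    row: bytes      # packed-pixel source row from this window's bitmap
    src_x: int = 0  # source column shown at `start`
    zoom: int = 1   # horizontal zoom: each source pixel repeated `zoom` times


def assemble_scanline(strips: list[Strip], width: int, background: int = 0) -> list[int]:
    """Walk a scan line left to right, switching source bitmaps at each window edge."""
    line = [background] * width
    for strip in sorted(strips, key=lambda s: s.start):
        for sx in range(strip.start, min(strip.end, width)):
            src = strip.src_x + (sx - strip.start) // strip.zoom
            line[sx] = packed_pixel(strip.row, src)
    return line


if __name__ == "__main__":
    planes = [bytes([0x80]), bytes([0x00]), bytes([0x80]), bytes([0x00])]
    print(planar_pixel(planes, 0), planar_to_packed(planes, 2).hex())
    strips = [Strip(0, 2, bytes([0x12])), Strip(2, 6, bytes([0xAB]), zoom=2)]
    print(assemble_scanline(strips, 6))
```

With the sample data, the planar pixel reads as 5 and packs into the byte 0x50. On the scan line, the first two screen columns come from one window's bitmap and the next four come from a second window zoomed by two, giving [1, 2, 10, 10, 11, 11]. Vertical zoom would work the same way, reusing one source row for several scan lines.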
Alternatively, when performance was not critical, the 82786 and CPU could share the same memory, with the integral 82786 DRAM/VRAM controller managing memory accesses. With this configuration, target applications had to be able to tolerate the decreased bandwidth of system memory.
Intel sold the chip as a merchant part, and independent AIB suppliers built boards with it. In 1987 two companies were offering three AIBs using the 82786, and by 1988 ten companies were offering 15 AIBs using the chip. The chip wasn't very powerful compared to others that entered the market, the most noteworthy being Texas Instruments' TMS34010. Nor was it as popular as the IBM VGA and its many clones. Intel withdrew the chip with the introduction of the 80486 microprocessor in 1989.
Footnote:
1. Shires, Glen, "A New VLSI Graphics Coprocessor: The Intel 82786," IEEE Computer Graphics and Applications, Vol. 6, No. 10, October 1986, pp. 49-55, IEEE Computer Society Press, Los Alamitos, CA, USA.
CHAPTER 4: Texas Instruments TMS34010 and VRAM
The first programmable graphics processor chip
DR. JON PEDDIE
In 1984, Texas Instruments (TI) introduced its VRAM, the TMS4161. The TMS34010 (Fig. 1) and VRAMs are related, but not in the way one might think. Karl Guttag had started the definition of what became the TMS34010. He had previously worked on the TMS9918 "Sprite Chip" and two 16-bit CPUs, and he knew that memory bandwidth was a critical problem. The basic VRAM concept of putting a shift register on a DRAM was floating around TI, but the way it worked was impractical to use in a system. So Guttag's team worked out a deal with TI's MOS Memory group: Guttag's team would help define the architecture of the VRAM so that it would work in a system, if TI's memory division would build it.
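The bandwidth argument behind putting a shift register on a DRAM can be sketched with a toy model. The ToyVRAM class below is hypothetical and greatly simplified, with no timing, refresh, or split transfers. It only counts how many cycles the random-access port spends feeding one scan line to the display, first for a plain single-ported DRAM and then for a DRAM with a row-wide serial shift register. The 256-word row is an arbitrary illustration, not a TMS4161 parameter.

```python
class ToyVRAM:
    """Hypothetical model: a DRAM array plus one row-wide serial shift register."""

    def __init__(self, rows: int, cols: int) -> None:
        self.cells = [[0] * cols for _ in range(rows)]
        self.shift_register = [0] * cols
        self.tap = 0                 # next shift-register position to clock out
        self.random_port_cycles = 0  # cycles the host/drawing side cannot use

    def write(self, row: int, col: int, value: int) -> None:
        """Random-access port write, as used by the host or graphics processor."""
        self.cells[row][col] = value
        self.random_port_cycles += 1

    def read_transfer(self, row: int, start_col: int = 0) -> None:
        """A single random-port cycle copies a whole row into the shift register."""
        self.shift_register = list(self.cells[row])
        self.tap = start_col
        self.random_port_cycles += 1

    def shift_out(self) -> int:
        """Serial port: clock out the next word without touching the random port."""
        value = self.shift_register[self.tap]
        self.tap = (self.tap + 1) % len(self.shift_register)
        return value


def scanline_refresh_cycles(cols: int) -> tuple[int, int]:
    """Random-port cycles spent sending one row to the display: (DRAM, VRAM)."""
    dram_cycles = cols  # a single-ported DRAM must read every displayed word itself
    vram = ToyVRAM(rows=1, cols=cols)
    for col in range(cols):
        vram.write(0, col, col & 0xFF)  # fill the row with test data
    before = vram.random_port_cycles
    vram.read_transfer(0)
    pixels = [vram.shift_out() for _ in range(cols)]
    if pixels != vram.cells[0]:
        raise RuntimeError("serial port returned the wrong data")
    return dram_cycles, vram.random_port_cycles - before


if __name__ == "__main__":
    print(scanline_refresh_cycles(256))  # (256, 1)
```

In this model the display costs the random port one cycle per row instead of one per word. The remaining cycles are left free for the processor to draw, which is the point Guttag's team was after.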
Between the VRAM design and the release of the 34010, Guttag's team also developed the TMS34061, a simple VRAM controller that they could get out much faster than the 34010.
In 1986 TI introduced the TMS34010, the first programmable graphics processor integrated circuit. It was a full 32-bit processor that included graphics-oriented instructions, so it could serve as a combined CPU and GPU.
Fig 1. The Texas Instruments TMS34010 Graphics System Processor was the first programmable graphics chip.
[Block diagram: graphics system processor chip (graphics processor, host-graphics interface, DRAM refresh controller, screen refresh controller, CRT timing control); host bus to host processor; graphics memory bus; conventional DRAMs for program and data storage; video RAMs as frame buffer; output to CRT monitor]
Fig 2. The TMS34010 introduced a new software interface, the Texas Instruments Graphics Architecture (TIGA).
The design took place at Texas Instruments facilities in Bedford, UK, and Houston, Texas. First silicon was working in Houston in December 1985. The first shipment (a development board) was sent to IBM's workstation facility in Kingston, New York, in January 1986. Karl Guttag also personally showed a working 34010 to Steve Jobs at NeXT in January 1986.
The Intel 82786 was announced shortly after the TI TMS34010, in May 1986, and became available in the fourth quarter. It was a graphics controller capable of using either DRAM or VRAM, but it wasn't programmable like the 34010.
Along with the chip, TI introduced its new software interface, the Texas Instruments Graphics Architecture (TIGA). TI claimed the 34010 (Fig. 2) was faster as a general processor than the popular Intel 80286 in typical graphics applications. According to Guttag, the 34010 waited on the host 90% to 95% of the time. The cause was the way Microsoft Windows was structured, passing mostly very low-level commands.
TIGA was a graphics interface standard created by TI that defined the software interface to graphics processors, the API. Under this standard, any software written for TIGA should work correctly on a TIGA-compliant graphics interface card. The TIGA standard was independent of resolution and color depth, which provided a certain degree of future-proofing. This standard was designed for high-end graphics.
The chip had several dedicated graphics instructions. They were implemented in hardware and consisted of essential graphics functions, such as filling a pixel array, drawing a line, pixel block transfers, and comparing a point to a window. The chip supported Pixel Block Transfers, Pixel Transfers, Transparency, Plane Masking, Pixel Processing, Boolean Processing, Multiple-Bit Pixel Operations, and Window Checking.
There was also a graphics-oriented register-indirect x, y addressing mode. In this mode, a register held a pixel's address in x, y form, that is, the pixel's Cartesian coordinates on the screen. The mode relieved the software of the time-consuming job of working out the mapping of each pixel's memory address to its screen location.
The chip was built for graphics from the ground up. It had thirty 32-bit registers divided into an A bank and a B bank. The A bank registers were general-purpose; software could use them for temporary storage during computation. The B bank registers were specialized. They held information like the location and dimensions of the current clipping window or the current foreground and background colors. Most machines had 16 or fewer registers. The decision to go to thirty 32-bit registers was driven by the desire to make time-critical functions run faster and to ease assembly-level programming.
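The x, y addressing mode and the point-versus-window comparison described above save the programmer some per-pixel arithmetic. Below is a Python sketch of what software would otherwise compute for every pixel. It assumes a bit-addressed, row-major frame buffer with a fixed pitch. The FrameBuffer and Window structures, the dictionary standing in for memory, and the function names are all hypothetical. They do not describe TI's instruction semantics.

```python
from dataclasses import dataclass


@dataclass
class FrameBuffer:
    base: int            # bit address of pixel (0, 0); hypothetical value
    pitch_bits: int      # bits from the start of one scan line to the next
    bits_per_pixel: int


@dataclass
class Window:
    x_min: int
    y_min: int
    x_max: int  # inclusive
    y_max: int  # inclusive


def xy_to_address(fb: FrameBuffer, x: int, y: int) -> int:
    """Convert screen coordinates to a linear bit address (row-major layout)."""
    return fb.base + y * fb.pitch_bits + x * fb.bits_per_pixel


def inside(window: Window, x: int, y: int) -> bool:
    """Compare a point against a clipping window."""
    return window.x_min <= x <= window.x_max and window.y_min <= y <= window.y_max


def plot_clipped(memory: dict[int, int], fb: FrameBuffer, clip: Window,
                 x: int, y: int, color: int) -> bool:
    """Write one pixel only if it falls inside the clipping window."""
    if not inside(clip, x, y):
        return False
    memory[xy_to_address(fb, x, y)] = color
    return True


if __name__ == "__main__":
    fb = FrameBuffer(base=0, pitch_bits=1024 * 8, bits_per_pixel=8)
    print(xy_to_address(fb, 10, 2))  # 2 * 8192 + 10 * 8 = 16464
    memory: dict[int, int] = {}
    clip = Window(0, 0, 639, 479)
    print(plot_clipped(memory, fb, clip, 700, 5, 15))  # False: outside the window
```

Every plotted pixel costs one multiply-add and four comparisons in this software version. That per-pixel overhead is the kind of work the dedicated addressing mode and window-checking support moved off the programmer's plate.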
Register-to-register operations could be done in a single cycle when running out of cache. They could also occur in parallel with the memory controller's completion of previously started write cycles. This parallelism naturally occurs in routines in which the CPU computes values that are written to a series of memory locations. The example used as a model during the 34010's definition was an ellipse-drawing routine. In that routine, the address computations and data values are held in the register file, and the pixels to be written are sent to the memory controller. A large register file means that all the parameters for most time-critical functions can be kept inside the processor. This prevents the thrashing of parameters between the register file and memory.
The chip had a core clock frequency of 40 MHz, and later 50 MHz, which was pretty high for the time. Many OEMs overclocked the chip to gain a little performance differentiation.
[Block diagram: I/O registers, external interrupt requests, instruction cache, interrupt registers, instruction decode, reset, host interface bus and registers, sync and blanking, video timing registers, program counter, status register, ALU, microcontrol ROM, barrel shifter, register files A and B, stack pointer, execution unit, local memory control registers, local memory control logic and buffers, local memory interface bus, internal clock circuitry, clock input and output]
Fig 3. TMS34010 internal architecture highlights its microprogramming support.
Fig 4. NEC's MVA TI TMS34010-based AIB with a Paradise VGA (clone) chip on board and a Brooktree LUTDAC (source: Vlask)
Like all graphics controllers of the time, the chip required an external LUT-DAC to do color management and CRT control. The most popular LUT-DACs at the time were Brooktree's 478 and TI's 34075. The exception was Truevision, which used the TMS34010 with a true-color frame buffer.
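The LUT-DAC's role can be sketched in a few lines. The frame buffer stores small color indices, and the look-up table translates each index into separate red, green, and blue codes that the DAC turns into analog levels. The Python below models only the digital look-up step. The gray-ramp palette, the per-channel widths, and the function names are illustrative assumptions, not the specifications of the Brooktree or TI parts. With 4 bits per channel, a 12-bit entry can select from 4,096 colors, the palette size quoted for IBM's PGA in Chapter 2. Whether the PGA split its entries that way is an assumption. A true-color frame buffer like Truevision's would store the channel values directly and skip the table.

```python
def palette_size(dac_bits: int) -> int:
    """Distinct colors a LUT entry can choose from with dac_bits per channel."""
    return 2 ** (3 * dac_bits)


def build_gray_lut(entries: int = 256, dac_bits: int = 8) -> list[tuple[int, int, int]]:
    """Fill a look-up table with a gray ramp (an arbitrary example palette)."""
    max_level = (1 << dac_bits) - 1
    return [(round(i * max_level / (entries - 1)),) * 3 for i in range(entries)]


def scan_out(indices: list[int], lut: list[tuple[int, int, int]]) -> list[tuple[int, int, int]]:
    """Translate frame-buffer indices into per-channel DAC codes, pixel by pixel."""
    return [lut[i] for i in indices]


if __name__ == "__main__":
    print(palette_size(4))           # 4096: 4 bits per channel
    lut = build_gray_lut(256, dac_bits=4)
    lut[255] = (15, 0, 0)            # reprogram one entry: index 255 now shows red
    print(scan_out([0, 17, 255], lut))
```

Because the table sits between memory and the DAC, rewriting one entry recolors every pixel that uses that index without touching the frame buffer. That is the "color management" job the external part performed.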
TI had planned for the 34010 to be a stand-alone system processor capable of running DOS or another OS directly. Even so, the designers built in special provisions for working in a coprocessing environment as well, giving OEMs the most flexibility. Twenty-eight I/O registers mapped to high memory locations in the 34010's address range. Some of these registers were directly accessible by the host processor. Hardware designers were relieved to see this, because it made it easier for them to design a microcomputer-to-34010 interface. These I/O registers also made the programmer's job easier. The host microcomputer could read from and write to the coprocessor board's memory, halt the 34010, and restart it at a known address, thus maintaining state.
However, TIGA was not widely adopted. Instead, VESA and Super VGA became the de facto standards for PC graphics devices after the VGA. Several AIB builders added a VGA chip to their boards in order to be compatible with all apps.
Microsoft didn't initially support the 34010 in its Windows interface. It came around because the chip did an excellent job in display list processing and was easier to manage in Windows 2. Still, Windows at the time was passing mostly low-level commands with a lot of overhead. Windows was originally structured to have the host do all the drawing, and that technique worked OK with EGA and VGA. IBM's 8514/A driver did an excellent job at BLT'ing. However, it wasn't as good as the 34010 on line draws, which were critical to CAD users. Nonetheless, Microsoft criticized the 34010, saying that, given how Windows was structured, it didn't do as well as the 8514/A on 20 basic graphics functions. The company later discovered that the problem was the memory management of fonts on the host side. The AIB should be used for drawing and color expansion, while fonts should be cached in 34010 space.
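Color expansion, which Microsoft concluded belonged on the AIB, turns a one-bit-per-pixel font glyph into multi-bit pixels. The Python sketch below shows the idea. It also adds three of the pixel-processing options listed for the 34010 earlier in this chapter: a Boolean combining function, transparency, and a plane (write) mask. The function names, the 8-bit pixel size, and the write-mask polarity (set bits may change) are assumptions. This models the concept, not TI's pixel-transfer instructions or their exact rules.

```python
from typing import Callable

RasterOp = Callable[[int, int], int]  # (source pixel, destination pixel) -> result


def op_replace(src: int, dst: int) -> int:
    return src


def op_xor(src: int, dst: int) -> int:
    return src ^ dst


def op_or(src: int, dst: int) -> int:
    return src | dst


def expand_glyph_row(bits: int, width: int, dest: list[int], x0: int,
                     fg: int, bg: int, *, op: RasterOp = op_replace,
                     transparent: bool = False, write_mask: int = 0xFF) -> None:
    """Color-expand one row of a 1-bit-per-pixel glyph into 8-bit pixels in `dest`.

    bits         glyph row; the most significant of `width` bits is the leftmost pixel
    transparent  if True, 0 bits leave the destination pixel unchanged
    write_mask   only bits set here may change in the destination (assumed polarity)
    """
    for i in range(width):
        is_fg = (bits >> (width - 1 - i)) & 1
        if transparent and not is_fg:
            continue  # background shows through
        old = dest[x0 + i]
        new = op(fg if is_fg else bg, old) & 0xFF
        # Keep protected bits from the old pixel; take the rest from the new one.
        dest[x0 + i] = (old & ~write_mask & 0xFF) | (new & write_mask)


if __name__ == "__main__":
    row = [0] * 8
    expand_glyph_row(0b10100000, 8, row, 0, fg=15, bg=1)
    print(row)  # [15, 1, 15, 1, 1, 1, 1, 1]
    row = [7, 7, 7, 7]
    expand_glyph_row(0b1001, 4, row, 0, fg=2, bg=9, transparent=True)
    print(row)  # [2, 7, 7, 2]
```

The glyph itself stays one bit per pixel, which is why caching fonts in the coprocessor's memory and expanding them there saved so much host-to-board traffic compared with sending finished 8-bit pixels.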
Microsoft came to realize that AIBs needed two to three screens’ worth of memory to run Windows applications, and Presentation Manager (PM) needed a lot more. At the time, Windows also didn’t handle bitmaps very well. Windows 386 improved things by allowing applications to run in or out of Windows, and it allowed multiple bitmaps for various applications simultaneously. As a result, Microsoft became a supporter and announced that TI-based AIBs would be able to get new Windows and PM drivers via TIGA, as part of the TIGA package (Fig. 4). Windows, however, couldn’t benefit from a line-drawing engine, and at the time Microsoft advised customers not to use Windows with AutoCAD.

During those years there were three major market forces: high-end PCs, where the TMS34010 found many homes; consumer and commercial PCs, where VGA was the dominant standard; and a variety of game consoles and arcade machines. TI did well in the high end and in arcade and game machines, and the 34010 was also used in several special-purpose systems in scientific instruments, avionics, and process control.

In 1991, Guttag was made a Texas Instruments Fellow. He was also given the NCGA Award for Technical Excellence for his pioneering work on VRAM.

CHAPTER 5: IBM’s professional graphics, the PGC and 8514/A
DR. JON PEDDIE
The 8514/A was IBM’s first discrete graphics coprocessor

For a long time, IBM offered two levels of display capability: one for general-purpose business users doing word processing, database entry, and Lotus spreadsheets, and one for engineering users, the latter always having higher-resolution, more expensive monitors and controllers.
The IBM Professional Graphics Controller (PGC)
Before the 8514/A, in 1984, IBM introduced a multi-board AIB called the Professional Graphics Controller (PGC), often called the Professional Graphics Adapter and sometimes the Professional Graphics Array. The PGC consisted of three interconnected PCBs and contained a graphics processor and memory. Targeted at programs such as CAD and page layout, the PGC was, at the time of its release, the most advanced graphics board for the IBM XT. The PGC had a simple graphics controller chip and used an external DAC and discrete logic chips for look-up tables and many other functions (Fig. 1). The PGC supported 640 by 480 pixel graphics and could display 256 colors from a palette of 4,096. It had two modes of operation: CGA (320 by 200 pixels) and native. The PGC’s matching display was the IBM 5175, an analog RGB monitor that was unique to it and not compatible with any other graphics board (Fig. 2). Although the PGC was not widely used in commercial and consumer-class PCs, its $4,290 price compared favorably to a $50,000 dedicated CAD workstation of the time, even including the cost of a PC XT model 87 ($4,995). Today that AIB would cost $9,910, which makes today’s AIBs look cheap by comparison.

Fig 1. IBM’s Professional Graphics Controller (PGC) was a 3-board set. (John Elliot Vintage PCs)

In the late 1980s, the original IBM peripheral bus, known as ISA (Industry Standard Architecture), had evolved from a slow 4.77 MHz 8-bit bus to an 8 MHz 16-bit bus, which opened up a gateway for clone AIB manufacturers. The clone PCs and accessories were marginalizing IBM’s core PC business, and the company wanted to halt that. Departing from its openness and reasonable licensing policy, in 1987 the company introduced a new PC, the PS/2, with a proprietary OS (OS/2) and a new system bus, the Micro Channel, which was not backward compatible with ISA boards. The 8514/A high-resolution graphics adapter was the first AIB for the 10 MHz Micro Channel.

The IBM 8514/A
The 8514/A was introduced along with the IBM Personal System/2 computers in April 1987, although rumors of the 8514/A project began circulating as early as 1985. The chip was developed in Hursley, UK, not too far from the Texas Instruments Bedford development center that created the popular TMS34010, which I covered in my preceding article. The famous Video Graphics Array (VGA) and 8514/A chips and add-in boards (AIBs) were all designed at IBM Hursley, as were the monitors. The 8514/A was an optional upgrade to the Video Graphics Array (VGA) of the Micro Channel architecture-based PS/2 and was introduced within three months of the PS/2’s introduction (Fig. 2). The 8514/A was the first fixed-function graphics accelerator for PCs, supporting 1024 by 768 pixel resolution and up to 256 colors. The basic 8514/A with 512 Kbytes of VRAM supported only 16 colors; the 512-Kbyte memory expansion brought the total to 1 Mbyte of VRAM and supported 256 colors. Along with the 8514/A, IBM introduced a 16-in., 1024 by 768 pixel CRT monitor. The 8514 is the monitor; the 8514/A is the AIB, with the “/A” designating adapter, not version (there is no 8514/B). The 8514/A was only a PS/2 accessory, and IBM didn’t produce any ISA versions; however, several clone suppliers did. In 1990 it was replaced by XGA, which was like VGA and 8514/A rolled into one.

The 8514/A was the beginning of large-scale integration graphics chips, and compared to the graphics controller of the PGC (Fig. 1), the 8514 controller chip (Fig. 3) was huge. The 8514/A was capable of four new graphics modes not available in VGA, which IBM named the advanced function modes: one at 640 by 480 pixels and three others that went up to 1024 by 768 pixels. Somewhat ironically, it did not support the conventional alphanumeric or graphics modes of the other video standards, since it executed only in the advanced function modes. In a typical system, the VGA automatically took over when a standard mode was called for by an application or the OS. As a result, an interesting feature of the 8514/A was that a system containing it could operate with two monitors. In this case, the usual setup was to connect the 8514 monitor to the 8514/A and a standard monitor to the VGA (Fig. 4).

Although often cited as the first mass-market fixed-function PC accelerator, IBM’s 8514/A was not the first PC AIB to support hardware acceleration; that distinction goes to the NEC µPD7220. What made the 8514/A interesting was the accelerator, which was known as the draw(ing) engine.

Fig 2. IBM’s 8514/A AIB and memory board was a 2-board set.

Fig 3. IBM’s PGC supported 640 by 480 pixel graphics and could display 256 colors from a palette of 4,096. It had two modes: CGA (320 by 200 pixels) and native. (Block diagram: microprocessor, video control generator, display memory, lookup table and video output, timing and control, and graphics emulator sections.)

Fig 4. The VGA/8514/A could drive two monitors at a time. (Diagram: VGA controller, bit planes, and DAC driving a VGA monitor; 8514/A controller, bit planes, and DAC driving an 8514/A monitor; mode switch.)
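The 8514/A memory configurations follow from simple frame-buffer arithmetic: bytes = width × height × bits per pixel ÷ 8. This minimal Python check assumes a single displayed screen with no off-screen storage for fonts or bitmaps, so it gives a lower bound on the VRAM each mode needs.

```python
KB = 1024


def frame_bytes(width, height, bits_per_pixel):
    """Bytes needed to hold one full screen at the given depth."""
    return width * height * bits_per_pixel // 8


# 8514/A configurations: (installed VRAM in KB, bits per pixel)
for vram_kb, bpp in ((512, 4), (1024, 8)):
    need = frame_bytes(1024, 768, bpp)
    print(f"{2 ** bpp} colors at 1024x768: {need // KB} KB of {vram_kb} KB, "
          f"fits: {need <= vram_kb * KB}")

# Why 256 colors needed the expansion: one 8-bit screen exceeds the base 512 KB.
print(frame_bytes(1024, 768, 8) > 512 * KB)
```

A 16-color (4-bit) 1024 × 768 screen needs 384 KB, which fits in the base 512 KB; a 256-color (8-bit) screen needs 768 KB, which is why 256 colors required the expansion to 1 Mbyte.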
The 8514/A was the first widespread fixed-function accelerator; being fixed-function made it relatively inexpensive, and it was a fast accelerator for the time. Other graphics accelerators of the period used the Texas Instruments TMS34010/TMS34020 chips, RISC processors running at around 50 MHz. They were more flexible, but more complex to program, more expensive, and generally about as fast as the simpler 8514/A. Up until the 8514/A’s introduction, PC graphics acceleration was relegated to expensive, workstation-class graphics coprocessor boards. One could get coprocessor boards using special CPU or digital signal processor chips, which were programmable. Fixed-function accelerators such as the 8514/A, however, sacrificed programmability for a better cost/performance ratio. At the time, the IBM Display Adapter 8514/A sold for $1,290; today it would cost $2,785.

Those clones
In the late 1980s and early 1990s, several companies reverse-engineered the 8514/A and offered clone chips (i.e., software compatible) with ISA support. Prominent among those were the Paradise Systems (later acquired by Western Digital) PWGA-1 (also known as the WD9500), the Chips & Technologies 82C480, and ATI’s Mach 8 and later Mach 32 chips. In the early 1990s, compatible 8514 boards were also based on TI’s TMS34010 chip. All of the clones were faster, due in part to new higher-density VRAM chips, and as a result they pushed the display resolution up to 1280 × 1024 with 24-bit, 16 million colors: truly a workstation in a PC.

IBM had one more graphics controller effort, the XGA, which would be a superset of the VGA and 8514/A; I’ll cover that in a future edition. The next edition will be on the widely popular and industry-standard-setting Video Graphics Array, the ubiquitous VGA.

CHAPTER 6: IBM’s VGA
The VGA was the most popular graphics chip ever
DR. JON PEDDIE

It is said about airplanes that the DC-3 and 737 are the most popular planes ever built, and the 737, in particular, is the best-selling airplane ever. The same could be said for the ubiquitous VGA and its big brother, the XGA. The VGA, which can still be found buried in today’s modern GPUs and CPUs, set the foundation for a video standard and an application programming standard. XGA expanded the video standard to a higher resolution and more performance. On April 2, 1987, when IBM rolled out the PS/2 line of personal computers, one of the hardware announcements was the VGA display chip, a standard that has lasted for over 25 years. While the VGA was an incremental improvement over its predecessor, the EGA (1984), and remained backward compatible with the EGA as well as the earlier (1981) CGA and MDA, its forward compatibility is what gives it such historical recognition.

Integrated motherboard
The IBM PS/2 Model 80 was the first 386 computer from IBM and was used to introduce several new standards. Most notably, there was the onboard VGA graphics (Fig. 1) with 256 Kbytes of RAM, the 32-bit Micro Channel Architecture (MCA) bus, card identification and configuration by BIOS, and RGB video signal pass-through. The MCA could accommodate the previous-generation 8514/A graphics board, while the VGA chip was on the motherboard. One of the significant features of the VGA was the integration of the color look-up table (cLUT) and the digital-to-analog converter (DAC). Before the VGA, LUT-DACs, as they were called, were separate chips supplied by Brooktree, TI, and others. Those products were soon to be obsolete, but it didn’t happen overnight.

Fig 1. The VGA was the most popular graphics chip ever.

Fig 2. This VGA board had an EISA tab on top and an ISA tab on the bottom. There was a VGA connector on each end of the board. (Source EISA/Wikipedia)
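Functionally, the LUT-DAC that the VGA absorbed does two things for every pixel: it uses the pixel value as an index into a 256-entry palette, then converts the selected red, green, and blue values to analog levels. The Python model below is a sketch, assuming 6-bit palette entries (the VGA’s 64 levels per channel) and a nominal 0.7 V full-scale output; the class and constant names are hypothetical.

```python
from dataclasses import dataclass, field

FULL_SCALE_VOLTS = 0.7  # assumed nominal analog RGB level (0.7 V into 75 ohms)
LEVELS = 64             # 6 bits per channel


@dataclass
class LutDac:
    """Toy model of a LUT-DAC: 256-entry palette feeding three 6-bit DACs."""
    palette: list = field(default_factory=lambda: [(0, 0, 0)] * 256)

    def set_entry(self, index, r, g, b):
        if not 0 <= index < 256:
            raise IndexError("palette index must be 0-255")
        if not all(0 <= c < LEVELS for c in (r, g, b)):
            raise ValueError("each channel is 6 bits (0-63)")
        self.palette[index] = (r, g, b)

    def output(self, pixel):
        """Look up an 8-bit pixel value and return (R, G, B) in volts."""
        r, g, b = self.palette[pixel & 0xFF]
        return tuple(round(c / (LEVELS - 1) * FULL_SCALE_VOLTS, 4) for c in (r, g, b))


dac = LutDac()
dac.set_entry(1, 63, 0, 63)   # palette entry 1 = full red + full blue (magenta)
print(dac.output(1))
print(LEVELS ** 3)            # distinct colors the palette can draw from
```

Because each channel has 64 levels, the palette draws from 64³ = 262,144 possible colors, while any one frame can show at most 256 of them.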
The integrated logic of the VGA also contained the CRT controller and replaced five or more other chips; only external memory was needed. The VGA showed the path to future fully integrated devices. The VGA also sparked a new wave of cloning and made the fortunes of several companies, such as Cirrus Logic, S3, Chips & Technologies, and three dozen others. The IBM 5162, more commonly known as the IBM PC XT/286, was an extremely popular PC and used a 16-bit expansion bus, which allowed upgraded graphics boards to be plugged in to replace the IBM EGA board. Because the PS/2 used the MCA, some board manufacturers offered a board with two tabs, one for ISA and one for MCA. Shortly afterward, in 1988, the Extended Industry Standard Architecture (EISA) bus for IBM PC-compatible computers was introduced. It extended the ISA bus to 32 bits while remaining compatible with ISA boards. It was developed by a consortium of PC clone vendors (the “Gang of Nine”) as a counter to IBM’s use of its proprietary Micro Channel Architecture (MCA) in its PS/2 series, and boards appeared with tabs for both ISA and EISA sockets (Fig. 2).

The basic system video was generated by what IBM referred to as a Type 1 or Type 2 video subsystem, that is, VGA or XGA. The circuitry that provided the VGA function included a video buffer, a DAC, and test circuitry. Video memory was mapped as four planes of 64K by 8 bits (maps 0 through 3). The video DAC drove the analog output to the display connector. The test circuitry was used to determine the type of display attached, color or monochrome. The video subsystem controlled access to video memory from the system and the CRT controller. It also controlled the system addresses assigned to video memory; up to three different starting addresses could be programmed for compatibility with previous video adapters. In the graphics modes, the mode determined the way video information was formatted into memory and the way memory was organized.
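How the mode shapes memory can be made concrete with the two best-known VGA graphics layouts. This Python sketch assumes the 640 × 480 16-color planar layout (BIOS mode 12h), in which each of the four maps holds one bit of every pixel, and the 320 × 200 256-color packed-pixel layout (Mode 13h), in which each byte is one pixel. It computes offsets as the CPU sees them and ignores the sequencer, the graphics controller’s write modes, and the hardware’s interleaving of Mode 13h bytes across the maps.

```python
PLANES = 4
MAP_SIZE = 64 * 1024   # each memory map: 64K addresses of 8 bits


def planar_offset(x, y, width=640):
    """16-color planar layout: byte offset within each map, plus pixel bit mask."""
    bytes_per_line = width // 8          # each byte of a map covers 8 pixels
    offset = y * bytes_per_line + x // 8
    mask = 0x80 >> (x % 8)               # leftmost pixel is the most significant bit
    return offset, mask


def planar_write(maps, x, y, color):
    """Store a 4-bit color by putting bit n of the color into map n."""
    offset, mask = planar_offset(x, y)
    for n in range(PLANES):
        if (color >> n) & 1:
            maps[n][offset] |= mask
        else:
            maps[n][offset] &= ~mask & 0xFF


def planar_read(maps, x, y):
    """Reassemble the 4-bit color from one bit in each map."""
    offset, mask = planar_offset(x, y)
    return sum(1 << n for n in range(PLANES) if maps[n][offset] & mask)


def packed_offset(x, y, width=320):
    """256-color packed-pixel layout (Mode 13h): one byte per pixel, row-major."""
    return y * width + x


maps = [bytearray(MAP_SIZE) for _ in range(PLANES)]
planar_write(maps, 100, 50, 0b1010)                 # color 10
print(planar_offset(100, 50), planar_read(maps, 100, 50))
print(640 * 480 // 8, 320 * 200)                    # bytes per plane vs. bytes per packed frame
```

Both layouts fit the 64K-per-map budget: a 640 × 480 plane needs 38,400 bytes, and a 320 × 200 packed frame needs 64,000 bytes.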
In alphanumeric modes, the system wrote the ASCII character code and attribute data to video memory maps 0 and 1, respectively. Memory map 2 contained the character font loaded by BIOS during an alphanumeric mode set. The font was used by the character generator to create the character image on the display. Three fonts were contained in ROM: an 8-by-8 font, an 8-by-14 font, and an 8-by-16 font. Up to eight 256-character fonts could be loaded into video memory map 2; two of those fonts could be active at any one time, allowing a 512-character font. In those days characters were an important feature, not just another bitmap.

The video subsystem formatted the information in video memory and sent the output to the video DAC. For color displays, the video DAC sent three analog color signals (red, green, and blue) to the display connector. For monochrome displays, the BIOS translated the color information in the DAC, and the DAC drove the summed signal onto the green output. Thus, the green line became the default sync signal for monitors that still used BNC connectors.

The auxiliary video connector allowed video data to be passed between the video subsystem and an adapter plugged into the channel connector. This was a common technique carried up until the late 1990s: companies offering higher-resolution and/or 3D-capable graphics chips would not include a VGA controller, to save costs, and assumed a VGA controller would already be in the system as a default.

Fig 3. The VGA lacked the hardware sprite support found in earlier graphics controllers. (Block diagram: CRTC, sequencer, graphics controller, and attribute controller around four 8-bit memory maps of 64K addresses each, feeding a video DAC with red, green, and blue analog outputs to the display. Source: IBM)

IBM didn’t provide any high-resolution graphics drivers for the VGA. The original VGA specifications deviated from previous controllers by not offering hardware support for sprites (Fig. 3). The on-board specifications included 256 Kbytes of video RAM. (The very first systems could be ordered with 64 Kbytes or 128 Kbytes of RAM, at the cost of losing some or all of the high-resolution 16-color modes.) It supported 16-color and 256-color paletted display modes and a 262,144-color global palette (6 bits, and therefore 64 possible levels, for each of the red, green, and blue channels via the RAMDAC). The master pixel clock was selectable at 25.175 MHz or 28.322 MHz, but the usual line rate was fixed at 31.46875 kHz. The VGA specified a maximum of 800 horizontal pixels and 600 lines, which was greater than the 640 × 480 monitors that were being offered at the time. Refresh rates could be as high as 70 Hz, with a vertical blank interrupt (not all clone boards supported that, in order to cut costs). The chip supported a planar mode with up to 16 colors (four bit planes) and a packed-pixel mode with 256 colors (commonly referred to as Mode 13h). The chip did not have bit-BLT capability (i.e., a blitter), but it did support very fast data transfers via the “VGA latch” registers. There was some primitive raster-op support, a barrel shifter, and something IBM called hardware smooth scrolling support, which was just a bit of buffering. A barrel shifter is a digital circuit that can shift a data word by a specified number of bits in a single operation rather than one bit at a time. A common use of a barrel shifter is in the hardware implementation of floating-point arithmetic. Today’s GPUs contain thousands of 32-bit floating-point processors.

VGA Connector
The VGA specification included a resolution, a physical connector specification, and video signaling. The connector is still supported today: one can find projectors with VGA connectors (Fig. 4), which require an adapter cable when used with newer computers or graphics boards.

Fig 4. The VGA introduced the ubiquitous 15-pin VGA connector.

VGA clone suppliers
In addition to the clone chip suppliers, several other companies incorporated the VGA structure into their chips. Some of these suppliers include ATI/AMD, Chips and Technologies, Cirrus Logic, Cornerstone Imaging, Gemini, Genoa, Headland Technologies, Hercules, Hualon, IIT, Intergraph, LSI, Matrox, NEC, Oak Technology, Paradise Systems/Western Digital, Plantronics, Realtek, S3 Graphics, SiS, Tamarack, Texas Instruments, Trident Microsystems, Tseng Labs, Video 7, and Winbond.

No other chip has had as profound an impact on the computer business as the VGA, and the industry owes a great debt to IBM for developing it; sadly, IBM didn’t profit as much from its invention as other suppliers did.
ABOUT US
A trusted industry resource for more than 50 years, the Penton Electronics Group is the electronic design engineer’s source for design ideas and solutions, new technology information and engineering essentials. Individual brands in the group include Electronic Design, Microwaves & RF and Power Electronics. Also included in the group is a data product for engineers, SourceToday.com.