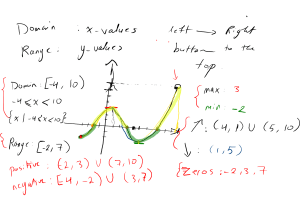

ENGLISH TO LUGANDA MACHINE TRANSLATION
FINAL TERM PAPER
KISEJJERE RASHID (BACHELOR OF SCIENCE IN SOFTWARE ENGINEERING)
MAKERERE UNIVERSITY
COURSE INSTRUCTOR: MR. GALIWANGO MARVIN
KAMPALA, UGANDA
rashidkisejjere0784@gmail.com
Abstract—This paper covers English to Luganda translation using different machine learning classification models and deep learning. There are many machine translation sources available today, but there is no public English to Luganda translation paper showing how the translation process works. Luganda is one of the most widely spoken languages in Uganda. It has a very large vocabulary, which means that working on it requires a very large dataset. In this paper, I go through different machine learning models that can be used to translate a given English text into Luganda. Because Luganda is a new field in machine translation, I experiment with multiple machine learning classification models, such as SVMs and logistic regression. I then move to deep learning models (RNNs and LSTMs), incorporating advanced mechanisms such as attention and Transformers, plus transfer learning.
Index Terms—Artificial Intelligence, machine translation, classification task, RNNs, transfer learning, LSTMs, attention
I. INTRODUCTION
Machine translation has long been an active research field, and researchers have proposed multiple approaches to it. These mainly include Rule-Based Machine Translation (RBMT), Statistical Machine Translation (SMT), and Neural Machine Translation (NMT). These approaches are explained in more detail in the next section. Machine translation is one of the major subfields of NLP, as it requires a proper understanding of two different languages.
This is always challenging because languages tend to have very large vocabularies. As a result, a lot of computing resources are needed for a machine translation system to be as accurate as possible. The training data also has to be very accurate, since errors in it reduce the accuracy of the resulting models, so building a highly accurate model is tricky. Throughout this paper, I explain how I built a couple of translation models using different machine learning strategies.
II. BACKGROUND AND MOTIVATION
Machine translation has developed mainly through three approaches: Rule-Based Machine Translation (RBMT), Statistical Machine Translation (SMT), and Neural Machine Translation (NMT).
A. Rule-Based Machine Translation (RBMT)
As the name states, rule-based machine translation relies on researchers writing rules that a text in a given language follows to produce its translation. It is the oldest machine translation technique and was in use in the 1970s.
B. Statistical Machine Translation (SMT)
Statistical machine translation is an older technique that uses a statistical model to represent the relationships between sentences, words, and phrases in a given text. This model is then applied to a second language to convert these elements into the new language. One of its main advantages is that it improves on rule-based MT, although it shares some of the same problems.
C. Neural Machine Translation (NMT)
Neural machine translation is built with deep learning techniques. It is generally faster and more accurate than the other methods, and it is rapidly becoming the standard in MT engine development. Translating text from one language into another can be framed as a classification problem, and this type of problem can only predict values within a known domain.
The domain in this case is either the set of characters that make up the vocabulary of a language or the set of words in that vocabulary. The classification model can therefore be either word-based or character-based. I elaborate on this in the following sections.
III. LITERATURE REVIEW
Translation is a crucial aspect of communication between people who speak different languages. With the advent of Artificial Intelligence (AI), translation has become more efficient and accurate, making it possible to communicate with people in other languages in real time. There are two major learning techniques that can be used: supervised learning and unsupervised learning.
Supervised learning is a type of machine learning where the model is trained on a labeled dataset and makes predictions based on the input data and the labeled output. Supervised learning algorithms have been used to train AI-powered English to Luganda translation systems. The model is trained on a large corpus of bilingual text, which helps it learn the relationships between English and Luganda words and phrases. This lets it predict the Luganda translation of an English text. Supervised learning is the most common type of machine learning, and it includes deep neural networks.
Unsupervised learning is a type of machine learning where the model is not trained on labeled data but instead learns patterns from the input data alone. Unsupervised learning algorithms can also be used to develop AI-powered English to Luganda translation systems. Such a model uses techniques such as clustering and dimensionality reduction to learn the relationships between English and Luganda words and phrases. It then uses these relationships to predict the Luganda translation of an English text.
In conclusion, AI-powered English to Luganda translation has the potential to greatly improve the speed and accuracy of translations.
IV. RESEARCH GAPS
Below are some of the major research gaps in the field of machine translation.
• Limited Training Data: The quality of AI-powered translations depends heavily on the amount and quality of the data used to train the model. Further research is needed to explore methods for obtaining high-quality training data.
• Lack of Cultural Sensitivity: AI-powered translation systems can produce translations that are grammatically correct but lack the cultural sensitivity of human translations. This can result in translations that are culturally inappropriate or that do not accurately convey the original message.
• Vulnerability to Errors: A machine learning system only understands what it has been trained on. When the input is not similar to its training data, it can easily produce undesired results.
V. CONTRIBUTIONS OF THIS PAPER
One of the major aims of this paper is to lay a foundation for further and more detailed research on translating large-vocabulary languages like Luganda. It does this by showing the different machine learning techniques that can be used to achieve this.
VI. METHODOLOGY
The problem investigated in this project is the development of an AI-powered English to Luganda translation system. The significance of this problem lies in the growing demand for high-quality, culturally sensitive translations. This demand is strongest in commerce and in communication between English- and Luganda-speaking communities. The scope of the project is to develop an AI system capable of accurately translating English text into Luganda text while preserving the meaning and cultural context of the original text. To address this problem, the proposed approach is to develop a neural machine translation (NMT) model.
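Because the NMT model treats translation as classification over a known domain of words or characters (Section II), the parallel text first has to be turned into class indices. The following is a minimal Python sketch, not the author's code, of the cleaning and vectorization steps described in Section VIII. It lowercases the text, strips punctuation, builds a word-level or character-level vocabulary, and pads each encoded sentence to a fixed length. The function names, the `<pad>`/`<unk>` tokens, the `max_len` value and the two English toy sentences are all illustrative assumptions. In practice `max_len` would be chosen from the sentence-length plots.

```python
import string

# Illustrative special tokens (assumption): index 0 pads, index 1 marks unknown tokens.
PAD, UNK = "<pad>", "<unk>"
# Translation table that deletes ASCII punctuation. Note that this also removes
# apostrophes, so check that this is acceptable for the language being cleaned.
_STRIP_PUNCT = str.maketrans("", "", string.punctuation)


def normalize(text):
    """Lowercase, remove ASCII punctuation and collapse repeated whitespace."""
    return " ".join(text.lower().translate(_STRIP_PUNCT).split())


def tokenize(text, level="word"):
    """Word level splits on whitespace; character level keeps every character."""
    return text.split() if level == "word" else list(text)


def build_vocab(sentences, level="word"):
    """Assign each distinct token an integer index; these indices are the classes."""
    vocab = {PAD: 0, UNK: 1}
    for sentence in sentences:
        for token in tokenize(sentence, level):
            vocab.setdefault(token, len(vocab))
    return vocab


def vectorize(sentence, vocab, max_len, level="word"):
    """Map tokens to indices, then truncate or pad with PAD to exactly max_len."""
    ids = [vocab.get(token, vocab[UNK]) for token in tokenize(sentence, level)]
    ids = ids[:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))


# Two hypothetical English sentences standing in for one side of the corpus.
corpus = [normalize(s) for s in ["The boy is reading.", "The girl is cooking!"]]
word_vocab = build_vocab(corpus, level="word")
char_vocab = build_vocab(corpus, level="char")

print(len(word_vocab), len(char_vocab))  # 8 19
print(vectorize(normalize("The dog is cooking."), word_vocab, max_len=6))
# [2, 1, 4, 7, 0, 0]
```

On a toy corpus like this, the character vocabulary can still be larger than the word vocabulary. On a real corpus, however, it stays bounded by the alphabet and punctuation, while the word vocabulary keeps growing with every new word. That trade-off between many output classes and much longer sequences is the word-based versus character-based choice discussed in Section II.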
The NMT model will be trained on the English-Luganda parallel corpus and will use this data to learn the relationship between the two languages. The AI process can be summarized as follows:
• Data Collection: Collect a large corpus of parallel text in English and Luganda.
• Pre-processing: Pre-process the data to remove irrelevant information and standardize the text.
• Model Selection: Choose the neural machine translation model best suited to the problem.
• Model Training: Train the NMT model on the pre-processed data.
• Model Evaluation: Evaluate the trained model on a held-out set of data to determine its performance.
• Deployment: Deploy the trained model for use in a real-world setting.
• Continuous Improvement: Continuously evaluate the model's performance and improve it as needed.
The evaluation framework used in this project relies mainly on the accuracy metric, which measures how correctly the model translates a given text. In conclusion, the proposed approach for this project is to develop a neural machine translation model that can accurately translate English text into Luganda text while preserving the meaning and cultural context of the original text.
VII. DATASET DESCRIPTION
The dataset [15] I used was created by Makerere University and contains approximately 15k English sentences with their respective Luganda translations. I chose this dataset for the following reasons:
• Scarcity of Luganda datasets: Luganda is not widely used worldwide; it is spoken mainly in Uganda, so this was the only major dataset I could find.
• Cost: The dataset is available for free for anyone to use and edit.
• Accuracy: The translations in the dataset are of good quality, so it is the best option available.
• Size: The dataset is relatively large and diverse enough to train a good model.
VIII. DATA PREPARATION AND EXPLORATORY DATA ANALYSIS
A. DATA PREPARATION
Data preparation refers to the steps taken to turn raw data into improved data that can be used to train a machine learning model. The data preparation process for my model was as follows:
• Removal of punctuation and unnecessary spaces, to prevent the model from training on a large amount of unnecessary data.
• Conversion of all words in the dataset to lowercase. Since Python string comparison is case-sensitive, a word like "Hello" is treated as different from "hello"; lowercasing avoids this problem.
• Vectorization of the dataset. Vectorization is the process of converting a given text into numerical indices. This is necessary because the machine learning pipeline can only be trained on numerical data.
• Removal of null values. All rows with null data were dropped, because for textual data it is very difficult to estimate the missing value.
These were the data preparation steps I used in the model creation process.
B. DATA ANALYSIS
Exploratory data analysis is the process of performing initial investigations on data to discover anomalies and patterns. It is mainly carried out through visualization of the data. Below are the visualizations and their meanings:
1) Word Cloud: A word cloud is a graphical representation of the words that are used most frequently in the dataset. This is important because it shows which words the model will depend on most heavily.
[Figure: word cloud for the Luganda sentences]
2) Correlation matrix: This is a matrix showing how strongly the different values correlate with each other. Plotting a 2D correlation matrix for the entire dataset is almost impossible, but it can be plotted for a particular sentence. The matrix below shows the correlation for a given sentence; the model will have to pay a lot of attention to the words that are highly correlated with each other.
[Figure panels: "For its Luganda sentence" and "For the respective Luganda sentence"]
3) Sentence Length plots: These plots help determine the length to which all sentences in the dataset should be padded, because during training all sentences must have the same length.
[Figure: maximum sentence lengths for the English and the Luganda sentences, respectively]
4) Box Plot: A box plot is a visual representation that can be used to show the major outliers in the dataset. Plotting a box plot for the entire dataset is also almost impossible, but it can be plotted for a particular sentence. This shows the possible outliers in the sentence, so during training the model ends up not paying much attention to those particular words.
[Figure: box plot for one of the sentences in the dataset]
In conclusion, data preparation and exploratory data analysis are key steps in creating an accurate model.
IX. AI MODEL SELECTION AND OPTIMIZATION
Throughout the project, I created three models: one with recurrent neural networks, one with the attention mechanism, and a third using transfer learning on a pre-trained Hugging Face transformer model.
• The recurrent neural network model was a simple model that uses RNNs to translate the text. Its accuracy was very poor because the vocabularies of the two languages are very large; simple RNNs like these work best with small vocabularies.
• The attention mechanism model performed much better than the RNN model. Attention is a mechanism used in deep neural networks that lets the model focus on the important parts of a given text by assigning them larger weights.
• The third model used transformers. Transformers are deep learning models built on top of attention layers, which makes them much more efficient for NLP tasks.
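The attention mechanism described above can be illustrated with a small, dependency-free Python sketch of scaled dot-product attention for a single decoding step. The paper does not state which scoring function its attention model used. The dot-product scoring here (the form used inside Transformers) is therefore an assumption. The two-dimensional encoder states and query are made-up numbers rather than values from the trained model.

```python
import math


def softmax(scores):
    """Numerically stable softmax: turns raw scores into weights that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, keys, values):
    """Scaled dot-product attention for one decoder step.

    query:  vector for the target word currently being generated (length d)
    keys:   one vector per source word, each of length d
    values: one vector per source word (often the same encoder states as keys)
    Returns the context vector and the attention weight of each source word.
    """
    d = len(query)
    # Similarity between the query and each source word, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Context vector: weighted sum of the value vectors.
    context = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return context, weights


# Hypothetical encoder states for a three-word English sentence (d = 2).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_query = [2.0, 0.0]
context, weights = dot_product_attention(decoder_query, encoder_states, encoder_states)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

Here the first and third source words score equally against the query. They receive the largest weights (about 0.446 each), while the second receives about 0.108. Repeating this for every generated Luganda word and stacking the `weights` lists row by row gives the kind of matrix an attention plot visualizes. Each row sums to 1, and more strongly coloured cells mark the source words the model relied on most.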
X. ACCOUNTABILITY
In the context of AI, "accountability" refers to the expectation that organizations or individuals will ensure the proper functioning of the AI systems that they design, develop, operate, or deploy, throughout their lifecycle. They do this in accordance with their roles and applicable regulatory frameworks, and they demonstrate it through their actions and decision-making process. Examples include providing documentation on key decisions throughout the AI system lifecycle, or conducting or allowing auditing where justified. AI accountability is very important because it safeguards against unintended uses. Most AI systems are designed for a specific use case; using them for a different use case would produce incorrect results. I also apply accountability to my own model. Since my model depends mainly on the dataset, the quality of the dataset should be constantly improved and filtered, because even slight changes in the spelling of words will decrease the model's accuracy.
XI. RESULTS AND DISCUSSION
I split the data into a training set and a validation set. Below are the results.
A. Validation and Accuracy plot
[Figure: training and validation accuracy plot. It is clear that the model is overfitting the dataset, but its accuracy is still fairly good.]
B. ATTENTION PLOT
An attention plot is a figure showing how the model predicted the given output.
[Figure: attention plot. Words that were predicted with a very high probability are more coloured.]
XII. CONCLUSION AND FUTURE WORKS
I hope this paper gives a basic understanding of the different machine learning methods that can be used to create a deep learning model capable of translating a given English text into Luganda. The same idea can be used to translate other languages. The model is currently overfitting the dataset. One way to overcome this is to increase the size of the data, because the dataset contains only about 15k sentences. Increasing it to about a million sentences should greatly improve the model's accuracy. The training accuracy is 92%.
Another direction is the use of other machine learning techniques, such as transformers. The model illustrated above was based on the attention mechanism of neural networks, and using transformers should improve its quality even more. Because transformers are usually complicated to train, I would instead recommend fine-tuning an already trained model, which is called transfer learning.
A. Dataset and python source code
LINK to the Final Python Source Code: https://colab.research.google.com/drive/1N sRAxdf tGIzqzeIM w3N F Y 9xClLk49f
LINK to the used dataset: https://zenodo.org/record/5855017
LINK to the YouTube Video: https://youtu.be/RLXfM0iLQag
XIII. ACKNOWLEDGMENT
Special thanks to Mr. Ggaliwango Marvin for his never-ending support of my research on this project. I also want to thank Dr. Rose Nakibuule for providing the foundational knowledge needed for this project.
REFERENCES
[1] M. Singh, R. Kumar, and I. Chana, "Neural-Based Machine Translation System Outperforming Statistical Phrase-Based Machine Translation for Low-Resource Languages," 2019 Twelfth International Conference on Contemporary Computing (IC3), 2019, pp. 1-7, doi: 10.1109/IC3.2019.8844915.
V. Bakarola and J. Nasriwala, "Attention based Neural Machine Translation with Sequence to Sequence Learning on Low Resourced Indic Languages," 2021 2nd International Conference on Advances in Computing, Communication, Embedded and Secure Systems (ACCESS), 2021, pp. 178-182, doi: 10.1109/ACCESS51619.2021.9563317.
[2] Academy, E. (2022) How to Write a Research Hypothesis | Enago Academy, Enago Academy. Available at: https://www.enago.com/academy/how-to-develop-a-good-research-hypothesis/ (Accessed: 17 November 2022).
What is the project scope? (2022). Available at: https://www.techtarget.com/searchcio/definition/project-scope (Accessed: 17 November 2022).
[3] Machine translation – Wikipedia (2022). Available at: https://en.wikipedia.org/wiki/Machine_translation (Accessed: 17 November 2022).
[4] K. Chen et al., "Towards More Diverse Input Representation for Neural Machine Translation," in IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 1586-1597, 2020, doi: 10.1109/TASLP.2020.2996077.
[5] O. Mekpiroon, P. Tammarattananont, N. Apitiwongmanit, N. Buasroung, T. Charoenporn and T. Supnithi, "Integrating Translation Feature Using Machine Translation in Open Source LMS," 2009 Ninth IEEE International Conference on Advanced Learning Technologies, 2009, pp. 403-404, doi: 10.1109/ICALT.2009.136.
[6] J.-W. Hung, J.-R. Lin and L.-Y. Zhuang, "The Evaluation Study of the Deep Learning Model Transformer in Speech Translation," 2021 7th International Conference on Applied System Innovation (ICASI), 2021, pp. 30-33, doi: 10.1109/ICASI52993.2021.9568450.
[7] V. Alves, J. Ribeiro, P. Faria and L. Romero, "Neural Machine Translation Approach in Automatic Translations between Portuguese Language and Portuguese Sign Language Glosses," 2022 17th Iberian Conference on Information Systems and Technologies (CISTI), 2022, pp. 1-7, doi: 10.23919/CISTI54924.2022.9820212.
[8] Machine Translation – Towards Data Science. (2022). Retrieved 24 November 2022, from https://towardsdatascience.com/tagged/machine-translation
[9] H. Sun, R. Wang, K. Chen, M. Utiyama, E. Sumita and T. Zhao, "Unsupervised Neural Machine Translation With Cross-Lingual Language Representation Agreement," in IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 1170-1182, 2020, doi: 10.1109/TASLP.2020.2982282.
[10] Y. Wu, "A Chinese-English Machine Translation Model Based on Deep Neural Network," 2020 International Conference on Intelligent Transportation, Big Data and Smart City (ICITBS), 2020, pp. 828-831, doi: 10.1109/ICITBS49701.2020.00182.
[11] L. Wang, "Adaptability of English Literature Translation from the Perspective of Machine Learning Linguistics," 2020 International Conference on Computers, Information Processing and Advanced Education (CIPAE), 2020, pp. 130-133, doi: 10.1109/CIPAE51077.2020.00042.
[12] S. P. Singh, H. Darbari, A. Kumar, S. Jain and A. Lohan, "Overview of Neural Machine Translation for English-Hindi," 2019 International Conference on Issues and Challenges in Intelligent Computing Techniques (ICICT), 2019, pp. 1-4, doi: 10.1109/ICICT46931.2019.8977715.
[13] R. F. Gibadullin, M. Y. Perukhin and A. V. Ilin, "Speech Recognition and Machine Translation Using Neural Networks," 2021 International Conference on Industrial Engineering, Applications and Manufacturing (ICIEAM), 2021, pp. 398-403, doi: 10.1109/ICIEAM51226.2021.9446474.
[14] How to Build Accountability into Your AI. (2021). Retrieved 24 November 2022, from https://hbr.org/2021/08/how-to-build-accountability-into-your-ai
[15] Mukiibi, J., Hussein, A., Meyer, J., Katumba, A., and Nakatumba-Nabende, J. (2022). The Makerere Radio Speech Corpus: A Luganda Radio Corpus for Automatic Speech Recognition. Retrieved 24 November 2022, from https://zenodo.org/record/5855017