HPE 3PAR StoreServ Management Console 3.4 Technical White Paper


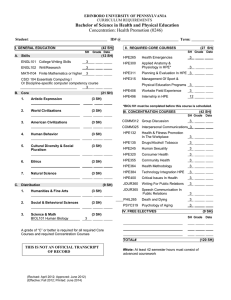

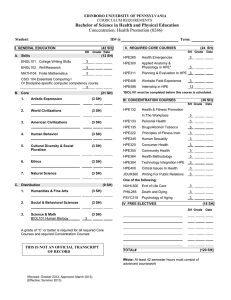

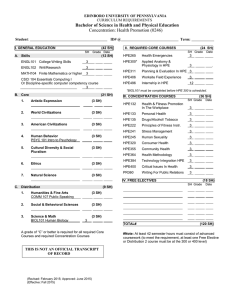

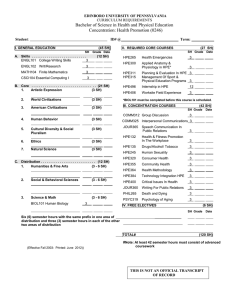

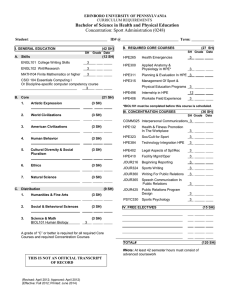

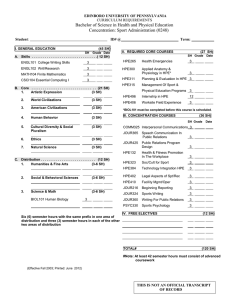

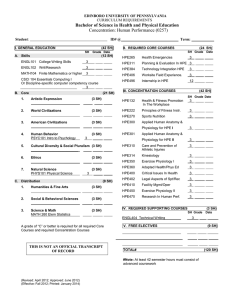

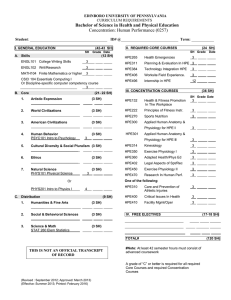

Technical white paper

HPE 3PAR StoreServ Management Console 3.4
Bringing artificial intelligence to HPE 3PAR storage management

Contents
Executive summary
What’s new in HPE StoreServ Management Console 3.4?
Usability and dashboard
New panels to support Analytics in HPE SSMC 3.4
Dashboard panels with an attitude
Performance & Saturation panel
Systems menu
New selections under system menu
System analytics
Saturation
Performance
Top 5 Volume Hotspots By Latency (ms)
Top 5 Volumes By Latency (ms)
HPE SSMC 3.4 deployment
config_appliance
VMware ESXi™ or VC installation
Networking options
HPE SSMC appliance migration
Upgrading the tool
Conclusion

Executive summary

Storage management requires a complex balance of capacity planning, storage distribution, and active monitoring, every day and every hour, to maintain a high standard of storage operations. Management of assets is paramount in any organization. Data center management is the bedrock of a successful IT organization and gives users nonstop access to stored data. Within the data center, storage management is an essential element of data availability and data management. HPE 3PAR is a leader in storage technology with its industry-leading all-flash array (AFA), and data center managers who run HPE 3PAR rely on effective storage management. The HPE 3PAR StoreServ Management Console (SSMC), now using artificial intelligence, is the next step toward meeting the demands of efficient storage management in today’s ever-changing environment.
HPE SSMC 3.4 introduces a new kind of storage management with adaptive analytic software that learns your storage operations and adapts its reporting of anomalies accordingly. This new approach continually learns from and monitors daily storage operations. Outliers, saturation levels, and advanced analytics are all part of the new approach to managing HPE 3PAR StoreServ arrays. Initially, Analytics supports only arrays configured entirely with solid-state drives (SSDs). HPE 3PAR has brought a new wave of storage management with the release of HPE SSMC 3.4.

What’s new in HPE StoreServ Management Console 3.4?

• The next generation of HPE SSMC demands greater functionality to meet the ever-changing demands of storage management.
• Building on years of HPE 3PAR performance data collection allows an advanced analytical approach to report generation.
• The compute power needed to sustain analytical reporting demands a different approach from traditional HPE SSMC.
• Beginning with HPE SSMC 3.4, the tool is deployed as a storage management appliance.
• Appliance analytics is now the norm for HPE 3PAR storage reporting, with the following feature advancements:
  – New panels added to the dashboard that directly reflect storage operations
  – An entirely new section (Analytics) added to the Storage section to assist with storage performance evaluation
  – A new section added to System Reporter, titled Advanced Analytics, which is dedicated to isolating poor performance behavior

Usability and dashboard

New panels to support Analytics in HPE SSMC 3.4

Beginning with HPE SSMC version 3.0, users have been able to create and manage individualized dashboard settings. Beginning with HPE SSMC 3.2, panels could be moved, removed, added, and rearranged to accommodate the needs of the user. HPE SSMC 3.4 continues to expand the usability of the dashboard by adding new features that support the new analytical reporting.
To support Analytics, HPE SSMC 3.4 adds five optional panels to the dashboard inventory. The additional panels are covered in detail in the following sections. A brief overview is as follows.

• Performance & Saturation panel: This panel shows performance and saturation scores for a single system at a 5-minute granularity over the past 24 hours or the user-selected period.
• Top Systems by Saturation panel: This panel lists the top storage systems ranked by their saturation levels over the past 24 hours or the user-selected period.
• Top Performance Outliers: This panel lists the top storage systems ranked according to their performance severity scores over the past 24 hours or the user-selected period.
• Top Volume Hotspots by IOPS: This panel lists volumes ranked by IOPS at each 5-minute granularity. Volumes are ranked by a weighted average based on the number of times they experience high IOPS. This average is calculated over the past 24-hour period or the user-selected period.
• Top Volume Hotspots by Latency: This panel lists volumes ranked by latency at each 5-minute granularity. Volumes are ranked by a weighted average based on the number of times they experience high latency. This average is calculated over the past 24-hour period or the user-selected period.

Dashboard panels with an attitude

New in HPE SSMC 3.4 are scoring and visual indicators related to performance, saturation levels, and volume metrics.

Figure 1. Color and scoring indicators

Figure 1 displays the color variants and scoring indexes that are part of the new performance and saturation scoring. The left of the figure shows an example of hotspots and the colors associated with them. Notice that the color scale increases along with the scoring scale.
The rule of thumb maintained throughout the tool is that the darker the shade of color, the poorer the performance score; this can be observed with both of the examples shown in Figure 2.

Figure 2. Saturation level colors

In Figure 2, the colors captured show a clear progression from green to yellow to a deep red when levels exceed expected levels. The user can also identify shades of orange at intermediate levels. Along both sides of the chart are numbers that represent either the scoring level or the saturation level. The chart in Figure 2 is double charted: the highly visible saturation line, which the user is drawn to first, gives a clear indication of how high the saturation level is. The less visible line at the bottom is the scoring level assessed against the array; it is harder to see because it blends in with the time axis at the bottom of the graph. The depiction of the line clearly highlights the difference in color levels. Each of the new panels in HPE SSMC 3.4 is covered in detail throughout the paper.

Finally, each panel contains an informational help window that corresponds to the panel currently viewed. The help window can be opened by clicking the information icon; an example can be seen in Figure 1 to the right of the header Top Volume Hotspots by Latency.

Performance & Saturation panel

The Performance & Saturation panel is available both on the dashboard and under the Systems heading in the mega menu. This panel contains two separate reporting metrics. Figure 3 is an example of the reporting panel.

Figure 3. Performance & Saturation reporting panel

Saturation

On the right side of the panel is the saturation level during the reporting period. The following metrics are part of calculating saturation.
Saturation: Saturation is measured against the number of IOPS (and/or throughput) the system can deliver while maintaining a reasonable response time. That number is termed preferred IOPS (and/or preferred throughput).

Preferred IOPS: Preferred IOPS (throughput) varies by the configuration of the array and by the type of workload it is subjected to. The graphed line is preferred IOPS.

Configuration: The configuration of the array is defined by the model, number of nodes, drive type, port type, number of chassis, and volume type (compression, dedupe, thin).

Workload: This is defined by the request size and the percentage of reads and writes that are serviced by the system.

Graphed ratio: The ratio percentage provided in the chart gives the user insight into:
• Saturation levels of the system at 5-minute granularity
• The time frames in which different saturation levels occur
• Periodicity in the change in saturation levels

Over 100%: There are times (as illustrated in Figure 3) when the saturation level exceeds 100%. This is possible because preferred IOPS (I/Os per second) is an indicator of the recommended threshold, not a hard limit. The threshold is based on analysis data from similar system configurations, observed through data collection and data modeling with the Performance Estimation Module (PEM) tool. Guidance from these toolsets was incorporated into HPE SSMC 3.4. When measured performance is below 100% saturation, the system is expected to perform optimally. When IOPS exceed the threshold (saturation above 100%), the system still delivers IOPS and may do so with little or no added latency. In general, however, saturation levels above 100% are expected to result in higher latency, which may mean slower response times. Identification of the contributing factors that lead to a high saturation level is discussed later in the paper.
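The relationship between actual IOPS, preferred IOPS, and the graphed ratio can be sketched as follows. This is a minimal illustration, assuming saturation is simply actual IOPS divided by preferred IOPS for each 5-minute sample; the `Sample` type, the function names, and the sample values are hypothetical and are not part of HPE SSMC.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    minute: int            # minutes since the start of the reporting period
    actual_iops: float     # IOPS serviced during the 5-minute interval
    preferred_iops: float  # recommended threshold for this configuration and workload


def saturation_pct(sample: Sample) -> float:
    """Return actual IOPS as a percentage of preferred IOPS (assumed formula)."""
    if sample.preferred_iops <= 0:
        raise ValueError("preferred_iops must be positive")
    return 100.0 * sample.actual_iops / sample.preferred_iops


def over_threshold(samples, limit=100.0):
    """Return the minute offsets of samples whose saturation exceeds `limit` percent."""
    return [s.minute for s in samples if saturation_pct(s) > limit]


# Illustrative values only, not measurements from a real array.
samples = [
    Sample(0, 40_000, 100_000),
    Sample(5, 95_000, 100_000),
    Sample(10, 120_000, 100_000),
]
print([round(saturation_pct(s), 1) for s in samples])  # [40.0, 95.0, 120.0]
print(over_threshold(samples))  # [10]
```

Because preferred IOPS is a recommended threshold rather than a hard limit, nothing in this calculation caps the result at 100%.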
Minor spikes such as those illustrated in Figure 3 should not alarm the user, as all systems experience short bursts of high I/O usage. Prolonged bursts of high saturation need to be investigated using the tools within HPE SSMC 3.4; examples of these tools are discussed later in the paper.

Score

Saturation is represented as a percentage value, as observed in Figure 3. The saturation score is a weighted mean of the saturation levels for the storage system over the past 24 hours. In the saturation chart, the scores are presented as a numerical value between 0 and 10, with 10 representing the least optimal value. The latency score takes the following into account:
• System configuration
• Workload characteristics
• Data collected from all like HPE 3PAR systems reporting back to HPE 3PAR Central

In Figure 4, the scoring values are shown on the left side of the chart and the observed weighted value is shown within the circle on the chart.

Note
The circle on the chart is only used in the figure to highlight the example.

Figure 4. Performance score

Outliers

Figure 5. Performance Outlier

Performance scores are representative of I/O response time, or latency. The response of the system can be reliably determined to a certain level of approximation if the parameters outlined previously that influence the metric are known. When metrics fall outside the defined boundaries, they are identified as outliers. Outliers are observed in the Performance & Saturation panel as displayed in Figure 4. Another optional panel that a user can add to the dashboard is titled Top Performance Outliers and is illustrated in Figure 5. The same color rule applies to performance outliers: the bolder the color, the higher the expected latency. As with all charted panels, the user can mouse over the graphed metric to view the charted value at the time of capture.
Top Systems by Saturation

One more panel can be added to the dashboard to highlight saturation level. The Top Systems By Saturation panel displays an average of the saturation level of the observed systems over the last 24-hour period or the user-selected period. Figure 6 illustrates the panel, which highlights saturation level not only by color but also by value. The user can change the reporting range of the panel to 1 day, 3 days, 1 week, or 2 weeks.

Figure 6. Saturation level averaged

Top hotspots

The last two panels that can be added identify hotspots by IOPS and hotspots by latency.

Figure 7. Hotspots

Each of the views shown in Figure 7 represents a different vantage point for data collection. The graph on the right charts the highest observed latency in a 5-minute period. The panel lists volumes ranked by latency. Each volume is ranked by a weighted average based on the number of times it experienced high latency. The base average is calculated over a 24-hour period. The text box shows three values: the weighted rank, with 10 representing the worst-performing volume; the latency calculated over the last 5-minute period; and the occurrence, which is the percentage of time this volume reported high latency.

Figure 8. Latency comparison

Figure 8 compares the top three volumes identified in the chart as latency hotspots. The first observation is that the graph represents both arrays from which data has been collected; therefore, the user must not assume that all graphed data represents just one array. The second observation is that the ranking is not based on latency alone. Comparing the boxes shows a difference in latency values, with the middle box having a higher latency value than either of the other two. What matters here is the number of occurrences of high latency.
The last observation concerns the volume itself; the pop-up box identifies which volume reported the latency. Further investigation of these values on the reported volume is necessary to pinpoint the time and the host system responsible for the I/O at the time of the occurrences.

Hotspots by IOPS is the other chart illustrated in Figure 7. This panel lists volumes ranked by IOPS. The volumes are ranked by a weighted average based on the number of times they experienced high IOPS. The user should note that although the basis of the weighting is the same for both charts, the top volumes are not necessarily the same. A volume could be at the top of the list on one chart and not even register on the other. Each charted volume should be viewed against the performance standard it is measured by.

Shortcuts

Not all of the charts shown on the dashboard contain shortcuts to defined areas. Those that do are listed here:
• Performance & Saturation: Each panel is associated with a single array. The link for the panel opens the Analytics panel associated with the specified HPE 3PAR array.
• Top Volume Hotspots by Latency: Links the user to the home page of the system associated with the hotspots. If only one system is reporting a hotspot, the link goes to that system. If multiple systems are reporting hotspots, all systems associated with hotspots are displayed.
• Underlined links: Under either hotspot panel, each volume that has reported a condition is linked to the volume identified.

Systems menu

The first notable difference is on the left-hand side of the display, which now shows a modified 24-hour saturation meter as illustrated in Figure 9.

Figure 9. Saturation meter

The meter reflects the saturation level that was observed on the dashboard. To the right of the meter is the saturation level averaged over the past 24 hours.
The color within the meter reflects the colors discussed earlier in the paper.

Note
The meter does not represent the current saturation level, only an average of the last 24 hours; the user should navigate to the right side of the screen for a thorough investigation of the array saturation level.

New selections under system menu

HPE InfoSight

There are two new additions to the menu items under the Systems heading. The first addition is subtle and is found under the heading Activity. When the user first opens this selection, HPE SSMC displays all the recent activities on the array. The addition to Activity is in the last column on the display. By default, this column is titled Any Origin; its drop-down exposes other options for Activity, and HPE InfoSight has been added to this list. HPE InfoSight offers cross-stack analytics for VMware® and a vast amount of telemetry data from the HPE 3PAR StoreServ array.

Figure 10. HPE InfoSight link under Activity

Part of the telemetry data provided by HPE InfoSight centers on circumstances that may prevent the array from performing at an optimal level. HPE 3PAR engineers continually evaluate the telemetry, and any new concern they discover results in the creation of a yellow box rule. If an array meets the criteria for the ruleset, a notification is sent to the user advising of the issue before the array develops it. The Activity drop-down in HPE SSMC is one more way in which the user is notified of potential issues.

System analytics

The major addition to the Systems screen is the Analytics menu selection. As highlighted previously, HPE SSMC 3.4 now features adaptive analytic software that learns your storage operations and adapts its reporting of anomalies accordingly. Figure 11 illustrates this software.

Figure 11. System analytics

Reporting in Analytics comprises four separate sections.

Saturation

The top section is a continuation of the saturation panel displayed on the dashboard. Before the scoring on the screen can be interpreted, a definition is needed. Computer saturation occurs when data throughput is pushed beyond its prescribed capacity. As a result, performance slows, latencies are observed, and data bandwidth is saturated. The bandwidth of a system is a measurement of the data that the system can transmit in a given time.

Figure 12. HPE 3PAR saturation

Within HPE 3PAR StoreServ, saturation is defined relative to the number of IOPS (I/Os per second) the system can deliver while maintaining a reasonable response time; this measurement is termed preferred IOPS. Preferred IOPS varies by the configuration of the array and by the type of workload the array is subjected to. The configuration of the array is defined by model, number of nodes, drive types, port speed and type, and volume type (compression, dedupe, thin, or thick). A workload is the amount of work handled by the array. It gives an estimate of the efficiency and performance of the array; the term refers to the array’s ability to handle and process I/O.

In Figure 12, the relevant data is collected at a 5-minute granularity, and the actual IOPS being serviced by the array are compared against the preferred IOPS. The resulting saturation ratio is represented as a percentage in the line chart. The objective of the chart is to:
• Show saturation levels of the system at 5-minute granularity
• Identify periods of high saturation levels
• Identify trends in how saturation levels change

Note
As noted earlier in the paper, the saturation level can at times be reported as over 100%.

Figure 13. Performance & Saturation

Performance

Performance is a scaled report as illustrated in Figure 13. The performance score is a number from 0 to 10 and is based on a number of different metrics. Two further figures follow: Figure 14 is a capture of a single 24-hour reporting period, and Figure 15 is the same 24-hour period expanded. To expand a time period, the user places the mouse over the start of the period of interest, left-clicks, and drags across the time frame. The resulting view offers greater granularity for the selected period.

Figure 14. Performance score

Figure 15. Isolated time: Performance score

Performance score

Performance is an indication of latency on the array. Latency is the response time taken to service an I/O. Latency is not a fixed metric but rather a result of contributing factors. Response time, or latency, can be reliably determined to a certain level of approximation if the parameters that influence the metric are known.

Metric variable

This is the response time reported by the system compared to the typical response time observed across similar systems in the HPE 3PAR install base. Similarity depends on the configuration of the array compared against other like or near-similar arrays. An example would be an HPE 3PAR StoreServ 8200 with 48 SSDs and like operating systems.

Outliers (performance score)

The measure of the performance outlier is what HPE terms the latency score. The latency score measures the relative severity of the latency reported by the system compared to what is typically reported by other like systems. The score ranges from 0 to 10, where 0 represents latency in line with the values observed on like systems and 10 indicates high latency compared to other systems with a given workload.
Typically, when the system is close to 100% saturation, the performance score should be higher than 0; however, there are times when this rule does not apply. Figure 16 illustrates a case where the saturation level is near 100% but the performance score is still 0. This can occur when the response times are similar to the statistical model for like workloads. These models were formulated from phone-home performance data collected and reported back to HPE 3PAR. Response times can vary, however, when the system is running a resource-intensive configuration, for example, too many compressed volumes. The telemetry collected during the reporting period may then reflect an outlier compared to the typical latency for a specific type of workload. In these scenarios, the performance score is likely to be a larger number, close to 10.

Figure 16. Outlier performance chart

In Figure 16, the saturation level is near 100% throughout the reporting period; however, there is no indication that performance (latency) was directly affected during that period. This can be further verified using Advanced Analytics. The upper right of the screen in Figure 16 shows a link to advanced performance reporting, Advanced Analytics. Used together with the Analytics displayed in Figure 16, Advanced Analytics gives the user valuable insight into the performance of the array. The Advanced Analytics page of HPE SSMC is discussed in depth later in the paper.

Figure 17. Advanced Analytics performance

Using Figure 17 in conjunction with Figure 16 shows that at the reported time of 02:10, the saturation level was near 95%, but the latency was near 2 ms for write data and less than 1 ms for read data. This supports the performance score of 0 during the time frame observed.
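The idea that the score depends on latency relative to like systems, rather than on saturation itself, can be illustrated with a small sketch. The paper does not publish the scoring formula, so everything here is an assumption made for illustration: the function name, the use of the median and standard deviation of peer latencies, and the choice of two points per standard deviation are all hypothetical.

```python
import statistics


def latency_score(observed_ms, peer_latencies_ms, max_score=10):
    """Hypothetical severity score from 0 (typical) to max_score (far above peers).

    Assumption: severity grows with the number of standard deviations the
    observed latency sits above the median latency of like systems.
    """
    typical = statistics.median(peer_latencies_ms)
    spread = statistics.pstdev(peer_latencies_ms)
    if spread == 0:
        # All peers report the same latency; anything above it is an outlier.
        return 0 if observed_ms <= typical else max_score
    deviations = (observed_ms - typical) / spread
    # Assumed scale: two points per standard deviation, clamped to 0..max_score.
    return int(min(max_score, max(0, round(2 * deviations))))


# Illustrative peer latencies (ms) for like systems under a similar workload.
peers = [0.8, 0.9, 1.0, 1.1, 1.2]
print(latency_score(1.0, peers))  # 0: in line with like systems
print(latency_score(5.0, peers))  # 10: far above like systems
```

Under this sketch, a heavily saturated array whose latency still matches its peers scores 0, which mirrors the situation shown in Figure 16.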
Top 5 Volume Hotspots By Latency (ms)

The next chart under System Analytics relates to observed hotspots. Hotspots are indications of I/O congestion on the array. Hotspot reporting tells the user which entity or object is responsible for saturating the resources on the system. Note that when referencing saturation and hotspots, the entities identified in Figure 17 are only one contributing factor to the saturation level; other factors must also be considered.

Figure 18. Latency hotspots

The chart shown in Figure 18 is a heat chart of the top 5 volume hotspots observed over the past 24-hour period. As mentioned throughout this document, the lighter the shading within the chart, the lower the reported effect on the measured metric. In the illustration, the guide bar in the lower right is a weighted scale identifying the relationship of color to latency in milliseconds (ms). The scale can change during the reporting period. It is displayed in five colored increments, and its upper value is set according to the highest observed latency. For example, if the highest recorded latency during a 24-hour period was 5.76 ms, the chart scale would be adjusted to 8, as illustrated in Figure 18. If the array were then to experience a burst of data that raised the latency to 10.05 ms, the scale would change to reflect a new value for the reported period.

The average reported values are shown on the right side of the charted area. Values are ranked with the highest 24-hour value first, followed by the lower observed values. To gain better insight into the reporting, the user can mouse over any time frame within the charted area to isolate the reported metric.
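The hotspot ranking described for these panels, where the number of high-latency occurrences matters more than a single peak, can be sketched as follows. This is only an illustration under stated assumptions: the paper does not give the weighting, so the sketch simply counts how often each volume is among the highest-latency volumes of a 5-minute sample. The function name, the volume names, and the data are hypothetical.

```python
from collections import Counter


def rank_hotspots(intervals, top_n=5):
    """Rank volumes by how often they are among the top_n by latency.

    intervals: list of dicts, one per 5-minute sample, mapping a volume
    name to its latency in ms. Returns (volume, occurrence_pct) pairs,
    most frequent first. The counting rule is an assumption.
    """
    if not intervals:
        return []
    hits = Counter()
    for sample in intervals:
        hottest = sorted(sample, key=sample.get, reverse=True)[:top_n]
        hits.update(hottest)
    total = len(intervals)
    return [(vol, 100.0 * count / total) for vol, count in hits.most_common()]


# Illustrative data: vol_b has the single highest latency, but vol_a is
# the hottest volume in two of the three samples.
intervals = [
    {"vol_a": 2.0, "vol_b": 9.0},
    {"vol_a": 3.0, "vol_b": 1.0},
    {"vol_a": 4.0, "vol_b": 1.5},
]
ranking = rank_hotspots(intervals, top_n=1)
print([vol for vol, _ in ranking])  # ['vol_a', 'vol_b']
```

With this counting rule, a volume with one large spike ranks below a volume that is repeatedly the slowest, which matches the observation made about Figure 8.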
In Figure 19, the two volumes that were affected by the higher latency are shown on the right with the corresponding color charting.

Figure 19. Isolating hotspots

Performance analytics

The Performance chart includes analytical insight into the factors that lead to performance degradation. A user can left-click any of the reported marks, and a pop-up box opens with insight into the contributing factors. Figure 20 illustrates the use of the tool.

Figure 20. Performance insight

Top 5 Volumes By Latency (ms)

The last chart displayed under the heading of Performance analytics is Top 5 Volumes By Latency. The charting is similar to Top 5 Volume Hotspots By Latency (ms), with two exceptions. The first difference is that this chart does not include the performance analytics pop-up box illustrated in Figure 20. The second difference is that the reported volumes may differ. The reporting of volume hotspots, as illustrated in Figure 20, identifies hotspots at the time of reporting. The illustration in Figure 21 displays the differences in reporting.

Figure 21. Top 5 Volumes By Latency

Throughput

Figure 22. Advanced Analytics throughput

This charted data was referred to previously when discussing how to isolate read/write throughput and outliers. As illustrated in Figure 22, when a user highlights a collection point with the mouse, the values for that collection point are displayed in the upper right section of the chart. Along with the throughput value is the score associated with that collection point. This is the outlier score, which helps isolate the troubled application.

Latency

The next section within the Advanced Analytics area is Latency. Throughout this tool, saturation observations have been associated with latency. Along with saturation, performance outliers are also associated with latency.
Figure 23 illustrates latency observations during the reporting period.

Figure 23. Latency observations

On closer examination, the chart correlates with the throughput chart in Figure 22. During the period of higher throughput, the reported throughput score was 7, and the latency value at that same time was 9.17 ms. This helps the user narrow down the area of concern.

Workload outliers

Throughout the paper, we have used the term outlier and identified areas that were considered outliers. In the previous two charts, we identified tendencies using both the analytics charts and the advanced analytical reporting, but we have not yet narrowed down the contributors that led to the anomaly. The last charted area within the tool is a unique, multilayered chart. Figure 24 illustrates the top layer of the chart. This chart centers on the hosts and the four categories of captured data.

Figure 24. Host Outliers

The four categories for hosts are as follows:
• Host Service Time Read
• Host Service Time Write
• Host Read
• Host Write

In the chart, each horizontal line represents one category. As with all the color schemes in the tool, the darker the color, the higher the observed value.

Figure 25. Expanded view of outliers

Figure 25 is an expanded view of Figure 24; it helps the reader examine the values collected at the time. Note that there is a 5-minute time difference between this screenshot and the other captures in this section. In the reported throughput values, a number appears in parentheses to the right of each value. This number represents the weighted value of the observed results.

Second screen

Using Figure 24 as the starting point, drill down to Host Service Time Read. Selecting a time frame on the horizontal line associated with Host Service Time Read repaints the charted area to reveal that five systems were affected.
This is illustrated in Figure 26.

Figure 26. Host Service Time Read isolation

The ordering on the right side of the illustration ranks the systems from most affected to least affected during the selected time frame.

Final screen

Once again, each horizontal line in Figure 26 is associated with the host listed in the right column. Selecting the horizontal line associated with WINATCBL2 displays the screen captured in Figure 27.

Figure 27. Host Service Time Read isolation: Volume Identification

The finding is that these two volumes contributed to the overall high read service times recorded in the previous screens. These two volumes, however, may not be the only places where latency has been observed. The user should also examine the other three categories listed in Host Workload Outliers to get a complete picture of the array.

HPE SSMC 3.4 deployment

As discussed earlier in the paper, HPE SSMC now deploys as an appliance. The appliance can be deployed on VMware ESX® systems running version 5.5, 6.0, or 6.5, or on Windows® Server 2016 Hyper-V servers. A minimum of 500 GB of disk space is required for installation. There are three deployment scenarios, which depend on the number of arrays and managed objects. The following are guidelines for deployment.

Small
• Small deployment option to manage up to 8 HPE 3PAR StoreServ arrays and 128K objects.
• This deployment requires 4 vCPUs and 16 GB of memory.

Medium
• Medium deployment option to manage up to 16 arrays and 256K objects.
• This deployment requires 8 vCPUs and 32 GB of memory.

Large
• Large deployment option to manage up to 32 arrays and 500K objects.
• This deployment requires 16 vCPUs and 32 GB of memory.
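As a rough aid, the sizing guidelines above can be expressed as a small lookup that returns the smallest deployment option covering a given environment. This is a sketch rather than an HPE-provided tool: the names `DeploymentSize`, `SIZES`, and `pick_deployment` are hypothetical, and "K" is assumed to mean 1,000 objects.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DeploymentSize:
    name: str
    max_arrays: int
    max_objects: int
    vcpus: int
    memory_gb: int


# Limits copied from the guidelines above, ordered smallest to largest.
# Assumption: "128K objects" means 128,000 objects, and so on.
SIZES = (
    DeploymentSize("Small", 8, 128_000, 4, 16),
    DeploymentSize("Medium", 16, 256_000, 8, 32),
    DeploymentSize("Large", 32, 500_000, 16, 32),
)


def pick_deployment(arrays: int, objects: int) -> Optional[DeploymentSize]:
    """Return the smallest size that covers both counts, or None if none does."""
    for size in SIZES:
        if arrays <= size.max_arrays and objects <= size.max_objects:
            return size
    return None
```

Both limits have to be satisfied, so an environment with only 4 arrays but 300,000 managed objects would still call for the Large option in this sketch.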
Currently, the number of objects for a deployed HPE SSMC instance can be found by inspecting the metrics.log file under /var/opt/hpe/logs.

config_appliance

After the appliance is installed, the user logs in to the virtual machine with the default user name ssmcadmin and password ssmcadmin. The user is immediately prompted to provide a new password for the ssmcadmin account. After the password is changed, the user enters the command config_appliance, and a Terminal User Interface (TUI) is displayed on the console. The TUI is used for all maintenance operations on the HPE SSMC appliance. Figure 28 illustrates the interface.

Figure 28. Setting up appliance

VMware ESXi™ or VC installation

For ESX, the appliance is packaged either as a stand-alone appliance or as part of VMware vCenter®. If the appliance is installed as a separate ESX or Hyper-V appliance, the user must assign network parameters through the TUI. If the appliance is installed as a VMware vCenter appliance, separate screens for network assignments are displayed during installation.

Networking options

If the appliance is installed with a single network interface, either an IPv4 or an IPv6 address is supported. If multihoming is required, two network interfaces should be configured for the appliance. Use Table 1 as a guide to supported network configurations.

Table 1. Networking options

| Number of network interfaces | IPv4 or IPv6 |
| --- | --- |
| 1 | IPv4 |
| 1 | IPv6 |
| 2 | IPv4, IPv4 |
| 2 | IPv4, IPv6 |

HPE SSMC appliance migration

After installation of the appliance, the user can deploy an additional tool to assist with migration from HPE SSMC 3.2 or HPE SSMC 3.3. The migration tool supports migrating HPE SSMC instances that run on Windows or Red Hat® Enterprise Linux®. The migration tool is deployed on the system where the existing HPE SSMC 3.2 or 3.3 instance is installed. On Windows, the user clicks the desktop icon to launch the tool.
The migration tool opens a separate window, and the user must supply the IP address of the new appliance along with the login credentials. The tool then migrates the following information:
• HPE SSMC administrator credentials
• HPE SSMC configured arrays and their credentials
• HPE SSMC user configuration and settings
• Certificates and Keystore information
• FIPS mode configuration
• HPE 3PAR RMC configurations
• System Reporter report configurations and reports

The migration tool will not migrate the following information:
• Previous HPE SSMC version log files
• HPE SSMC port configuration (8443 will be the default port on HPE SSMC appliance.)
• SR reports custom path information

Migration process

Migration is a two-phase process. The first phase migrates all HPE SSMC configurations and settings. Once the configurations are copied, the HPE SSMC appliance restarts to reflect the copied information. At this point, the user can log in to the new HPE SSMC on the appliance and verify that all configurations were copied successfully.

The second phase starts only after the migration of configurations and settings has completed. This phase migrates all of the System Reporter reports currently on HPE SSMC 3.2 or 3.3. It can take a long time to complete, depending on how many reports have been generated. If the second phase of the migration fails, the user can restart the migration and the tool will resume from the point where the migration failed.

Upgrading the tool

From time to time, it may be necessary to upgrade the tool. Instead of deploying the tool again from scratch, the user can upgrade it from the HPE SSMC administrator console. Under the Actions drop-down, an additional entry is added for Upgrade. The user selects Upgrade, and a pop-up box appears in the window, as illustrated in Figure 29.

Figure 29. HPE SSMC update

The user simply drops the file into the browser and updates the tool. The HPE SSMC appliance restarts once the tool is updated, and the user logs back in to resume operation.

Conclusion

HPE 3PAR has always been a leader in storage technology. The introduction of HPE StoreServ Management Console utilizing analytics is another example of the forward-looking vision HPE deploys in our technologies. HPE SSMC analytics gives users insight into their storage platform, clearly identifies troubled areas, and provides helpful solutions to ensure the array operates efficiently.

Learn more at hpe.com/storage/3par

© Copyright 2018 Hewlett Packard Enterprise Development LP. The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Windows is either a registered trademark or trademark of Microsoft Corporation in the United States and/or other countries. Red Hat is a registered trademark of Red Hat, Inc. in the United States and other countries. Linux is the registered trademark of Linus Torvalds in the U.S. and other countries. VMware, VMware vCenter, VMware ESX, and VMware ESXi are registered trademarks or trademarks of VMware, Inc. in the United States and/or other jurisdictions. All other third-party marks are property of their respective owners.

a00060582ENW, December 2018