University of Cape Town

Department of Statistical Sciences

Design of Experiments

Course Notes for STA2005S

J. Juritz, F. Little, B. Erni


August 31, 2022


sta2005s: design and analysis of experiments

2

Contents

1

Introduction

8

1.1 Observational and Experimental Studies

1.2 Definitions

8

9

1.3 Why do we need experimental design?

10

1.4 Replication, Randomisation and Blocking

1.5 Experimental Design

11

12

Treatment Structure

12

Blocking structure

14

Three basic designs

15

1.6 Design for Observational Studies / Sampling Designs

1.7 Methods of Randomisation

16

17

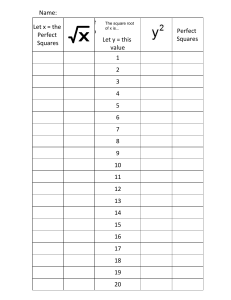

Using random numbers for a CRD (completely randomised design)

Using random numbers for a RBD

Randomising time or order

17

18

1.8 Summary: How to design an experiment

18

17

sta2005s: design and analysis of experiments

2

Single Factor Experiments: Three Basic Designs

2.1 The Completely Randomised Design (CRD)

2.2 Randomised Block Design (RBD)

2.3 The Latin Square Design

Randomisation

2.4 An Example

3

4

20

20

20

21

21

22

The Linear Model for Single-Factor Completely Randomized Design Experiments

3.1 The ANOVA linear model

24

3.2 Least Squares Parameter Estimates

Linear model in matrix form

Speed-reading Example

24

26

28

The effect of different constraints on the solution to the normal equations

Design matrices of less than full rank

32

Parameter estimates for the single-factor completely randomised design

3.3 Standard Errors and Confidence Intervals

38

Important estimates and their standard errors

Speed-reading Example

39

3.4 Analysis of Variance (ANOVA)

40

Decomposition of Sums of Squares

40

Distributions for Sums of Squares

41

F test of H0 : α1 = α2 = · · · = α a = 0

ANOVA table

Some Notes

44

44

Speed-reading Example

46

30

43

39

32

24

sta2005s: design and analysis of experiments

3.5 Randomization Test for H0 : α1 = . . . = α a = 0

47

3.6 A Likelihood Ratio Test for H0 : α1 = . . . = α a = 0

3.7 Kruskal-Wallis Test

4

50

53

Comparing Means: Contrasts and Multiple Comparisons

4.1 Contrasts

56

Point estimates of Contrasts and their variances

4.2 Orthogonal Contrasts

56

58

Calculating sums of squares for orthogonal contrasts

4.3 Multiple Comparisons: The Problem

60

63

4.4 To control or not to control the experiment-wise Type I error rate

Exploratory vs confirmatory studies

4.5 Bonferroni, Tukey and Scheffé

Bonferroni Correction

Tukey’s Method

Scheffé’s Method

65

67

67

69

70

Example: Strength of Welds

71

4.6 Multiple Comparison Procedures: The Practical Solution

4.7 Summary

77

4.8 Orthogonal Polynomials

4.9 References

5

77

81

Randomised Block and Latin Square Designs

Model

84

Sums of Squares and ANOVA

Example

55

88

86

83

75

64

5

sta2005s: design and analysis of experiments

5.1 The Analysis of the RBD

90

Estimation of µ, αi (i = 1, 2, . . . a) and β j (j = 1, 2 . . . b)

Analysis of Variance for the Randomised Block Design

Example: Timing of Nitrogen Fertilization for Wheat

5.2 Missing values – unbalanced data

Other uses of Latin Squares

Missing Values

104

105

106

Example: Rocket Propellant

106

Blocking for 3 factors - Graeco-Latin Squares

108

Power and Sample Size in Experimental Design

109

109

6.2 Two-way ANOVA model

Factorial Experiments

7.1 Introduction

99

103

Test of the hypothesis H0 : γ1 = γ2 = . . . = γ p = 0

7

95

102

Model for a Latin Square Design Experiment

6.1 Introduction

93

101

5.5 The Latin Square Design

6

91

96

5.3 Randomization Tests for Randomized Block Designs

5.4 Friedman Test

6

111

112

112

7.2 Basic Definitions

113

7.3 Design of Factorial Experiments

116

Example: Effect of selling price and type of promotional campaign on number of items sold

Interactions

Sums of Squares

117

119

116

sta2005s: design and analysis of experiments

7.4 The Design of Factorial Experiments

Replication and Randomisation

120

120

Why are factorial experiments better than experimenting with one factor at a time?

Performing Factorial Experiments

7.5 Interaction

122

123

7.6 Interpretation of results of factorial experiments

7.7 Analysis of a 2-factor experiment

7.8 Testing Hypotheses

124

126

128

7.9 Power analysis and sample size:

131

7.10 Multiple Comparisons for Factorial Experiments

7.11 Higher Way Layouts

7.12 Examples

8

132

132

133

Some other experimental designs and their models

8.1 Fixed and Random effects

137

8.2 The Random Effects Model

139

Testing H0 : σa2 = 0 versus Ha : σa2 > 0

Variance Components

An ice cream experiment

8.3 Nested Designs

142

143

144

Calculation of the ANOVA table

146

Estimates of parameters of the nested design

8.4 Repeated Measures

140

149

149

137

121

7

1 Introduction

1.1 Observational and Experimental Studies

There are two fundamental ways to obtain information in research:

by observation or by experimentation. In an observational study the

observer watches and records information about the subject of

interest. In an experiment the experimenter actively manipulates the

variables believed to affect the response. Contrast the two great

branches of science, Astronomy in which the universe is observed by

the astronomer, and Physics, where knowledge is gained through

the physicist changing the conditions under which the phenomena

are observed.

In the biological world, an ecologist may record the plant species

that grow in a certain area and also the rainfall and the soil type,

then relate the condition of the plants to the rainfall and soil type.

This is an observational study. Contrast this with an experimental

study in which the biologist grows the plants in a greenhouse in

various soils and with differing amounts of water. He decides on

the conditions under which the plants are grown and observes the

effect of this manipulation of conditions on the response.

Both observational and experimental studies give us information

about the world around us, but it is only by experimentation that

we can infer causality; at least it is a lot more difficult to infer causal

relationships with data from observational studies. In a carefully

planned experiment, if a change in variable A, say, results in a

change in the response Y, then we can be sure that A caused this

change, because all other factors were controlled and held constant.

In an observational study if we note that as variable A changes Y

changes, we can say that A is associated with a change in Y but we

cannot be certain that A itself was the cause of the change.

Both observational and experimental studies need careful planning to be effective. In this course we concentrate on the design of

experimental studies.

Clinical Trials¹: Historically, medical advances were based on anecdotal data; a doctor would examine six patients, write a paper from this and publish it. Medical practitioners became aware of the biases resulting from these kinds of anecdotal studies and started to develop the randomized double-blind clinical trial, which has become the gold standard for approval of any new product, medical device, or procedure.

¹ https://en.wikipedia.org/wiki/Clinical_trial

1.2 Definitions

For the most part the experiments we consider aim to compare the

effects of a number of treatments. The treatments are carefully chosen and controlled by the experimenter.

1. The factors/variables that are investigated, controlled and manipulated in the experiment are called treatment factors. Usually, for each treatment factor, the experimenter chooses some specific levels of interest, e.g. the factor ‘water level’ can have levels 1cm, 5cm, 10cm; the factor ‘background music’ can have levels ‘Classical’, ‘Jazz’, ‘Silence’.

2. In a single-factor experiment² the treatments will correspond to the levels of the treatment factor (e.g. for the water level experiment the treatments will be 1cm, 5cm, 10cm).

With more than one treatment factor, the treatments can be constructed by crossing all factors: every possible combination of the levels of factor A and the levels of factor B is a treatment. Experiments with crossed treatment factors are called factorial experiments³. More rarely in true experiments, factors can be nested (see Section 1.5).

3. Experimental unit: this is the entity to which a treatment is assigned. The experimental unit may differ from the observational

or sampling unit, which is the entity from which a measurement

is taken. For example, one may apply the treatment of ‘high

temperature and low water level’ to a pot of plants containing 5

individual plants. Then we measure the growth of each of these

plants. The experimental unit is the pot (this is where the treatment is applied), the observational units are the plants (this is

the unit on which the measurement is taken). This distinction

is very important because it is the experimental units which determine the (experimental) error variance, not the observational

units. This is because we are interested in what happens if we

independently repeat the treatment. For comparing treatments, we

obtain one (response) value per pot (average growth in the pot),

one value per experimental unit.

4. Experimental units which are roughly similar prior to the experiment are said to be homogeneous. The more homogeneous the experimental units are, the smaller the experimental error variance (variation between observations which have received the same treatment = variance that cannot be explained by known factors) will be. It is generally desirable to have homogeneous experimental units for experiments, because this allows us to detect the differences between treatments more clearly.

² Single-factor experiment: only a single treatment factor is under investigation.

³ Factorial experiments are experiments with at least two treatment factors, and the treatments are constructed as the different combinations of the levels of the individual treatment factors.

Figure 1.1: Example of a 2 × 2 × 2 = 2³ factorial experiment with three treatment factors (A, B and C), each with two levels (low and high, coded as - and +), resulting in eight treatments (vertices of cube) (Montgomery Chapter 6).

5. If the experimental units are not homogeneous, but heterogeneous, we can group sets of homogeneous experimental units

and thereby account for differences between these groups. This

is called blocking. For example, farms of similar size and in the

same region could be considered homogeneous / more similar

to each other than to farms in a different region. Farms (experimental units) in different regions will differ because of regional

factors such as vegetation and climate. If we suspect that these

differences will affect the response, we should block by region:

similar farms (experimental units) are grouped into blocks.

6. In the experiments we will consider, each experimental unit

receives only one treatment. But each treatment can consist of

a combination of factor levels, e.g. high temperature combined

with low water level can be one treatment, high temperature

with high water level another treatment.

7. The treatments are applied at random to the experimental units

in such a way that each unit is equally likely to receive a given

treatment. The process of assigning treatments to the experimental units in this way is called randomisation.

8. A plan for assigning treatments to experimental units is called an

experimental design.

9. If a treatment is applied independently to more than one experimental unit it is said to be replicated. Treatments must be replicated! Making more than one observation on the same experimental unit is not replication. If the measurements on the same experimental unit are taken over time, methods for repeated measures (longitudinal data) are needed; see Chapter 8. If the measurements are all taken at the same time, as in the pot with 5 plants example above, this is just pseudoreplication. Pseudoreplication is a common problem (Hurlbert 1984), and will invalidate the experiment.

10. We are mainly interested in the effects of the different treatments:

by how much does the response change with treatment i relative

to the overall mean response.
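The distinction in point 3 between experimental and observational units can be made concrete with a small sketch. The Python code below reduces plant-level measurements to one response value per pot before any treatments are compared. The growth values, the pot labels and the helper name `pot_means` are made up for illustration; they are not data from these notes.

```python
from statistics import mean

# Hypothetical growth measurements (cm): 2 treatments, 2 pots each, 5 plants per pot.
# The pot is the experimental unit; the plants are the observational units.
growth = {
    ("high temp, low water", "pot 1"): [4.1, 3.8, 4.4, 4.0, 3.7],
    ("high temp, low water", "pot 2"): [3.2, 3.5, 3.1, 3.6, 3.1],
    ("low temp, high water", "pot 3"): [5.0, 5.3, 4.9, 5.2, 5.1],
    ("low temp, high water", "pot 4"): [5.9, 6.1, 5.8, 6.0, 6.2],
}


def pot_means(data):
    """Return one response value (mean growth) per experimental unit (pot)."""
    return {unit: mean(values) for unit, values in data.items()}


responses = pot_means(growth)

# The number of replicates per treatment is the number of pots, not plants.
replicates = {}
for treatment, _pot in responses:
    replicates[treatment] = replicates.get(treatment, 0) + 1

for (treatment, pot), value in responses.items():
    print(f"{treatment:22s} {pot}: mean growth {value:.2f}")
print(replicates)
```

In this sketch each treatment has two replicates (two pots), even though ten plants were measured per treatment; treating the ten plants as ten replicates would be pseudoreplication.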

1.3 Why do we need experimental design?

An experiment is almost the only way in which one can control all factors to such an extent as to eliminate any other possible explanation for a change in response other than the treatment factor of

concern. This then allows one to infer causality. To achieve this,

experiments need to adhere to a few important principles discussed

in the next section.

Experiments are frequently used to find optimal levels of settings

(treatment factors) which will maximise (or minimise) the response

(especially in engineering). Such experiments can save enormous

amounts of time and money.

1.4 Replication, Randomisation and Blocking

There are three fundamental principles when planning experiments. These will help to ensure the validity of the analysis and to

increase power:

1. Replication: each treatment must be applied independently to

several experimental units. This ensures that we can separate

error variance from differences between treatments.

True, independent replication demands that the treatment is set up anew for each experimental unit; one should not set up the experiment for a specific treatment and then run all experimental units under that setting at the same time. This would result in pseudo-replication, where effectively the treatment is applied only once.

For example, if we were interested in the effect of temperature on the taste of bread, we should not bake all 180°C loaves in the same oven at the same time, but prepare and bake each loaf separately. Otherwise we would not be able to say whether the particular batch, the particular time of day, the oven setting, or the temperature was responsible for the improved taste.

2. Randomisation: a method for allocating treatments to experimental units which ensures that:

• there is no bias on the part of the experimenter, either conscious or unconscious, in the assignment of the treatments to

the experimental units;

• possible differences between experimental units are equally

distributed amongst the treatments, thereby reducing or eliminating confounding.

Randomisation helps to prevent confounding with underlying,

possibly unknown, variables (e.g. changes over time). Randomisation allows us to assume independence between observations.

Both the allocation of treatments to the experimental material

and the order in which the individual runs or trials of the experiment are to be performed must be randomly determined!

Confounding: When we cannot attribute the change in response to a specific factor, because several factors could have contributed to this change, we call this confounding.

3. Blocking refers to the grouping of experimental units into homogeneous sets, called blocks. This can reduce the unexplained (error) variance, resulting in increased power for comparing treatments. Variation in the response may be caused by variation in

the experimental units, or by external factors that might change

systematically over the course of the experiment (e.g. if the experiment is conducted on different days). Such nuisance factors

should be blocked for whenever possible (else randomised).

Examples of factors for which one would block: time, age, sex,

litter of animals, batch of material, spatial location, size of a city.

Blocking also offers the opportunity to test treatments over a

wider range of conditions, e.g. if I only use people of one age

(e.g. students) I cannot generalise my results to older people.

However if I use different blocks (each an age category) I will

be able to tell whether the treatments have similar effects in all

age groups or not. If there are known groups in the experimental

units, blocking guards against unfortunate randomisations.

Blocking aims to reduce (or control) any variation in the experimental material, where possible, with the intention to increase power (sensitivity)⁴.

Another way to reduce error variance is to keep all factors not

of interest as constant as possible. This principle will affect how

experimental material is chosen.

The three principles above are sometimes called the three R’s

of experimental design (randomisation, replication, reducing

unexplained variation).

1.5 Experimental Design

The design that will be chosen for a particular experiment depends

on the treatment structure (determined by the research question)

and the blocking structure (determined by the experimental units

available).

Treatment Structure

Single (treatment) factor experiments are fairly straightforward.

One needs to decide on which levels of the single treatment factor

to choose. If the treatment factor is continuous, e.g. temperature, it

may be wise to choose equally spaced levels, e.g. 50, 100, 150, 200.

This will simplify analysis when you want to fit a polynomial curve

through this, i.e. investigate the form of the relationship between

temperature and the response.

If there is more than one treatment factor, these can be crossed,

giving rise to a factorial experiment, or nested.
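Crossing factors amounts to taking every combination of their levels. A minimal Python sketch, assuming three hypothetical two-level treatment factors (the factor names, level names and the helper `cross` are illustrative, not taken from a particular experiment in these notes):

```python
from itertools import product

# Hypothetical treatment factors and their levels (illustrative only).
factors = {
    "temperature": ["low", "high"],
    "soil": ["sand", "clay"],
    "water": ["low", "high"],
}


def cross(factor_levels):
    """Cross all factors: every combination of levels is one treatment."""
    names = list(factor_levels)
    return [dict(zip(names, combo)) for combo in product(*factor_levels.values())]


treatments = cross(factors)
print(len(treatments))  # number of treatments in the 2 x 2 x 2 factorial
for treatment in treatments:
    print(treatment)
```

The number of treatments is the product of the numbers of levels, so it grows quickly as factors or levels are added.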

⁴ When we later fit our model (a special type of regression model), we will add the blocking variable and hope that it will explain some of the total variation in our response.

Factorial Experiments: In factorial experiments the total number of treatments (and experimental units required) increases rapidly, as each factor level combination is included. For example, if we have temperature, soil and water level, each with 2 levels, there are 2 × 2 × 2 = 8 combinations = 8 treatments.

Often, factorial experiments are illustrated by a graph such as shown in Figure 1.2. This quickly summarizes which factors, factor levels and which combinations are used in an experiment.

[Figure 1.2: One way to illustrate a 3 × 2 factorial experiment (Factor A: low, medium, high; Factor B: b1, b2). The three dots at each treatment illustrate three replicates per treatment.]

One important advantage of factorial experiments over one-factor-at-a-time experiments is that one can investigate interactions. If two factors interact, it means that the effect of the one depends on the level of the other factor, e.g. the change in response when changing from level a1 to a2 (of factor A) depends on what level of B is being used. Often, the interesting research questions are concerned with interaction effects. Interaction plots are very helpful when trying to understand interactions. As an example, the success of factor A (with levels a1 and a2) may depend on whether factor B is present (b2) or absent (b1) (RHS of Figure 1.3). On the LHS of this figure, the success of A does not depend on the level of B. We can only explore interactions if we explore both factors in the same experiment, i.e. use a factorial experiment.

[Figure 1.3: Two interaction plots of the response Y against factor A (levels a1, a2), with one line per level of factor B (b1, b2). On the left factors A and B do not interact (their effects are additive). On the right A and B interact: the effect of one depends on the level of the other factor. The dots represent the mean response at a certain treatment. The lines join treatments with the same level of factor B, for easier reference.]

Nested Factors: When factors are nested the levels of one factor, B, will not be identical across all levels of another factor A. Each level of factor A will contain different levels of factor B. These designs are common in observational studies; we will briefly look at their analysis in Chapter 8.

Example of nested factors: In an animal breeding study we could have two bulls (sires), and six cows (dams). Progeny (offspring) are nested within dams, and dams are nested within sires.

sire      sire 1                     sire 2
dam       dam 1    dam 2    dam 3    dam 4    dam 5    dam 6
progeny   1  2     3  4     5  6     7  8     9  10    11  12

Blinding, Placebos and Controls: A control treatment is often necessary as a benchmark to evaluate the effectiveness of the actual treatments. For example, how do two new drugs compare, but also are they any better than the current drug?

Placebo Effect: The physician’s belief in the treatment and the

patient’s faith in the physician exert a mutually reinforcing effect;

the result is a powerful remedy that is almost guaranteed to produce an improvement and sometimes a cure (Follies and Fallacies

in Medicine, Skrabanek & McCormick). The placebo effect is a measurable, observable or felt improvement in health or behaviour not

attributable to a medication or treatment.

A placebo is a control treatment that looks/tastes/feels exactly

like the real treatment (medical procedure or pill) but with the

active ingredient missing. The difference between the placebo and

treatment group is then only due to the active ingredient and not

affected by the placebo effect.

To measure the placebo effect one can use two control treatments: a placebo and a no-treatment control.

If humans are involved as experimental units or as observers,

psychological effects can creep into the results. In order to pre-empt

this, one should blind either or both observer and experimental unit

to the applied treatment (single- or double-blinded studies). The

experimental unit and / or the observer do not know which treatment was assigned to the experimental unit. Blinding the observer

prevents biased recording of results, because expectations could

consciously or unconsciously influence what is recorded.

Blocking structure

The most important aim of blocking is to reduce unexplained variation (error variance), and thereby to obtain more precise parameter

estimates. Here one should look at the experimental units available:

Are there any structures/differences that need to be blocked? Do

I want to include experimental units of different types to make the

results more general? How many experimental units are available

in each block? For the simplest designs covered in this course, the

number of experimental units in each block will correspond to the

total number of treatments. However, in practice this can often not

be achieved.

The grouping of the experimental units into homogeneous sets

called blocks and the subsequent randomisation of the treatments

to the units in a block form the basis of all experimental designs.

We will study three designs which form the basis of other more

complex designs. They are:


Three basic designs

1. Completely Randomised Design

This design is used when the experimental units are all homogeneous. The treatments are randomly assigned to the experimental units.

2. Randomised Block Design

This design is used when the experimental units are not all homogeneous but can be grouped into sets of homogeneous units

called blocks. The treatments are randomly assigned to the units

within each block.

3. Latin Square Design

This design allows blocking for two factors without increasing

the number of experimental units. Each treatment occurs only

once in every row block and once in every column block.

In all of these designs the treatment structure can be a single

factor or factorial (crossed factors).

Completely Randomised Design Example: Longevity of fruitflies depending on sexual activity and thorax length⁵

125 male fruitflies were divided randomly into 5 groups of 25

each. The response was the longevity of the fruitfly in days. One

group was kept solitary, while another was kept individually with

a virgin female each day. Another group was given 8 virgin females

per day. As an additional control the fourth and fifth groups were

kept with one or eight pregnant females per day. Pregnant fruitflies

will not mate. The thorax length of each male was measured as this

was known to affect longevity.

⁵ Sexual Activity and the Lifespan of Male Fruitflies. L. Partridge and M. Farquhar. Nature, 1981, 580-581. The data can be found in R package faraway (fruitfly).

Figure 1.4: The three basic designs: Completely Randomised Design (left), Randomised Block Design (middle), Latin Square Design (right). In each design each of five treatments (colours) is replicated 5 times. Note how the randomisation was done: CRD: complete randomisation, RBD: randomisation of treatments to experimental units in blocks, LSD: each treatment once in each column, once in each row. The latter two are forms of restricted randomisation (as opposed to complete randomisation).

Randomised Block Design Example: Executives and Risk

Executives were exposed to one of 3 methods of quantifying the maximum risk premium they would be willing to pay to avoid uncertainty in a business decision. The three methods are: 1) U: utility method, 2) W: worry method, 3) C: comparison method. After using the assigned method, the subjects were asked to state their degree of confidence in the method on a scale from 0 (no confidence) to 20 (highest confidence).

                              Experimental Unit
Block                         1     2     3
1 (oldest executives)         C     W     U
2                             C     U     W
3                             U     W     C
4                             W     U     C
5 (youngest executives)       W     C     U

Table 1.1: Layout and randomization for premium risk experiment.

The experimenters blocked for age of the executives. This is a

reasonable thing to do if they expected, for example, lower confidence in older executives, i.e. different response due to inherent

properties of the experimental units (which here are the executives). The blocking factor is age, the treatment factor is the method

of quantifying risk premium, the response is the confidence in

method. The executives in one block are of a similar age. The three

methods were randomly assigned to the three experimental units in

each block.

Latin Square Design Example: Traffic Light Signal Sequences

A traffic engineer conducted a study to compare the total unused

red light time for five different traffic light signal sequences. The

experiment was conducted with a Latin square design in which the

two blocking factors were (1) five randomly selected intersections

and (2) five time periods.
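The defining property of a Latin square, that each treatment occurs exactly once in every row and every column, can be checked mechanically. The Python sketch below does this for the layout and the unused red light times of this example (Table 1.2), and also computes the treatment means. The function names `is_latin_square` and `treatment_means` are illustrative choices, not part of the course material.

```python
from statistics import mean

# Signal sequences (A-E) and unused red light times (minutes) from the
# traffic light example: rows are intersections 1-5, columns are time periods 1-5.
layout = [
    "ABCDE",
    "BCDEA",
    "CDEAB",
    "DEABC",
    "EABCD",
]
times = [
    [15.2, 33.8, 13.5, 27.4, 29.1],
    [16.5, 26.5, 19.2, 25.8, 22.7],
    [12.1, 31.4, 17.0, 31.5, 30.2],
    [10.7, 34.2, 19.5, 27.2, 21.6],
    [14.6, 31.7, 16.7, 26.3, 23.8],
]


def is_latin_square(rows):
    """True if every treatment occurs exactly once in each row and each column."""
    n = len(rows)
    symbols = set(rows[0])
    if len(symbols) != n or any(len(row) != n for row in rows):
        return False
    columns = ["".join(row[j] for row in rows) for j in range(n)]
    return all(set(line) == symbols for line in list(rows) + columns)


def treatment_means(rows, values):
    """Mean response per treatment, pooling over rows and columns."""
    by_treatment = {}
    for row_letters, row_values in zip(rows, values):
        for letter, value in zip(row_letters, row_values):
            by_treatment.setdefault(letter, []).append(value)
    return {t: mean(v) for t, v in sorted(by_treatment.items())}


grand_mean = mean(value for row in times for value in row)
print(is_latin_square(layout))
print(round(grand_mean, 3))
print(treatment_means(layout, times))
```

Because every treatment appears once in each intersection and once in each time period, each treatment mean averages over all levels of both blocking factors.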

                           Time Period
Intersection   1          2          3          4          5          Mean
1              15.2 (A)   33.8 (B)   13.5 (C)   27.4 (D)   29.1 (E)   23.80
2              16.5 (B)   26.5 (C)   19.2 (D)   25.8 (E)   22.7 (A)   22.14
3              12.1 (C)   31.4 (D)   17.0 (E)   31.5 (A)   30.2 (B)   24.44
4              10.7 (D)   34.2 (E)   19.5 (A)   27.2 (B)   21.6 (C)   22.64
5              14.6 (E)   31.7 (A)   16.7 (B)   26.3 (C)   23.8 (D)   22.62
Mean           13.82      31.52      17.18      27.64      25.48      Ȳ... = 23.128

Table 1.2: Traffic light signal sequences. The five signal sequence treatments are shown in parentheses as A, B, C, D, E. The numerical values are the unused red light times in minutes.

1.6 Design for Observational Studies / Sampling Designs

In observational studies, design refers to how the sampling is done (on the explanatory variables), and is referred to as sampling design. The aim is, as in experimental studies, to achieve the best possible estimates of effects. The methods used to analyse data from observational or experimental studies are often the same. The conclusions will differ in that no causality can be inferred in observational studies.

1.7 Methods of Randomisation

Randomisation refers to the random allocation of treatments to the

experimental units. This can be done using random number tables or

using a computer or calculator to generate random numbers. When

assigning treatments to experimental units, each permutation must

be equally likely, i.e. each possible assignment of treatments to

experimental units must be equally likely.

Randomisation is crucial for conclusions drawn from the experiment to be correct, unambiguous and defensible!

For completely randomised designs the experimental units are

not blocked, so the treatments (and their replicates) are assigned

completely at random to all experimental units available (hence

completely randomised).

If there are blocks, the randomisation of treatments to experimental units occurs in each block.

In Practical 1 you will learn how to use R for randomisation.

Using random numbers for a CRD (completely randomised design)

This method requires a sequence of random numbers (from a calculator or computer; in the old days printed random number tables

were available). To randomly assign 2 treatments (A and B) to 12

experimental units, 6 experimental units per treatment, you can:

1. Decide to let odd numbers ≡ treatment A, and even numbers ≡ treatment B.

   Random number   79  76  49  31  93  54  17  36  91  50  11  38  87  79  97  69  22
   Treatment        A   B   A   A   A   B   A   B   A   B   -   B   -   -   -   -   B

   Numbers marked - are skipped: they are odd, and treatment A already has its 6 experimental units.

2. Or decide to assign treatment A for two-digit numbers 00 - 49, and treatment B for two-digit numbers 50 - 99.

   Random number   67  49  72  48  95  39  03  22  46  87  71  16  70
   Treatment        B   A   B   A   B   A   A   A   A   B   B   -   B

   The number 16 is skipped, because treatment A already has its 6 experimental units.

Using random numbers for a RBD

Say we wish to randomly assign 12 patients to 2 treatments in 3 blocks of 4 patients each. The different (distinct) orderings of four patients, two receiving treatment A, two receiving treatment B are:

   Ordering     To be chosen if random number between
   A A B B      01 - 10
   A B B A      11 - 20
   A B A B      21 - 30
   B A A B      31 - 40
   B A B A      41 - 50
   B B A A      51 - 60

Ignore numbers 61 to 99.

   Random number   96   09     58     89   23     71   38
   Ordering         -   AABB   BBAA    -   ABAB
   Block                1      2           3

The numbers 96 and 89 are ignored; the remaining numbers are not needed once all three blocks have been assigned.

Coins, cards, pieces of paper drawn from a bag can also be used

for randomisation.
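On a computer the same randomisations are usually obtained by shuffling the list of treatment labels rather than by reading off random digits. The sketch below is a Python illustration of this shuffle-based alternative (the course practicals use R); the function names `randomise_crd` and `randomise_rbd` and the seed are assumptions made for the example. A uniform shuffle makes every possible ordering of the labels equally likely, which is the requirement stated at the start of this section.

```python
import random


def randomise_crd(treatments, replicates, rng):
    """CRD: shuffle all treatment labels over all experimental units."""
    labels = [t for t in treatments for _ in range(replicates)]
    rng.shuffle(labels)
    return labels  # labels[i] is the treatment for experimental unit i + 1


def randomise_rbd(treatments, replicates_per_block, n_blocks, rng):
    """RBD: carry out a separate randomisation within each block."""
    return [
        randomise_crd(treatments, replicates_per_block, rng)
        for _ in range(n_blocks)
    ]


rng = random.Random(2005)  # arbitrary seed, used only to make the run repeatable

# 2 treatments, 12 experimental units, 6 units per treatment (CRD).
crd = randomise_crd(["A", "B"], replicates=6, rng=rng)
print("CRD:", " ".join(crd))

# 12 patients in 3 blocks of 4; within each block 2 receive A and 2 receive B (RBD).
rbd = randomise_rbd(["A", "B"], replicates_per_block=2, n_blocks=3, rng=rng)
for number, block in enumerate(rbd, start=1):
    print(f"block {number}:", "".join(block))
```

Unlike the odd/even scheme, the shuffle never needs to discard numbers, because the required number of units per treatment is fixed before the labels are permuted.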

Randomising time or order

To prevent systematic changes over time from influencing results

one must ensure that the order of the treatments over time is random. If a clear time effect is suspected, it might be best to block for

time. In any case, randomisation over time helps to ensure that the

time effect is approximately the same, on average, in each treatment

group, i.e. treatment effects are not confounded with time.

For the same reason one would block spatially arranged experimental units, or if this is not possible, randomise treatments in

space.

1.8 Summary: How to design an experiment

The best design depends on the given situation. To choose an appropriate design, we can start with the following questions:

1. Treatment Structure: What is the research question? What is the

response? What are the treatment factors? What levels for each

treatment factor should I choose? Do I need a control treatment?

Am I interested in interactions?

2. Experimental Units: How many replicates (per treatment) do I

need? How many experimental units can I get, afford?

3. Blocking: Do I need to block the experimental units? Do I need to control other unwanted sources of variation? Which factors should be kept constant? etc.

4. Other considerations: ethical, time, cost. Will I have enough

power to find the effects I am interested in?

The treatment structure, blocking factors, and number of replicates required are the most important determinants of the appropriate design. Lastly, we need to randomise treatments to experimental units according to this design.

2 Single Factor Experiments: Three Basic Designs

This chapter gives a brief overview of the three basic designs.

2.1 The Completely Randomised Design (CRD)

This design is used when the experimental units are homogeneous. The units will of course differ, but before the treatments are applied they cannot be split into clear groups, i.e. no blocks seem necessary. Each treatment is randomly assigned to r experimental units, and each unit is equally likely to receive any of the a treatments. There are N = r × a experimental units.
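The random assignment in a CRD can be sketched as follows. The function name `randomise_crd` and the seed are assumptions made for this illustration; any method that makes all allocations equally likely would do.

```python
import random
from collections import Counter

def randomise_crd(treatments, r, seed=None):
    """Return a dict unit -> treatment in which each treatment appears r times."""
    rng = random.Random(seed)
    labels = [t for t in treatments for _ in range(r)]  # a * r treatment labels
    rng.shuffle(labels)                                 # random allocation to units
    return {unit: trt for unit, trt in enumerate(labels, start=1)}

layout = randomise_crd(["A", "B", "C", "D"], r=3, seed=1)
counts = Counter(layout.values())
print(layout)   # unit number -> treatment
print(counts)   # each treatment is used exactly r = 3 times
```

With a = 4 treatments and r = 3 replicates this gives N = 12 units, as in the example layout of this section.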

Some advantages of completely randomized designs are:

1. Easy to lay out.

2. Simple analysis even when there are unequal numbers of replicates of some treatments.

3. Maximum degrees of freedom for error.

An example of a CRD with 4 treatments, A, B, C and D, randomly applied to 12 homogeneous experimental units:

Units        1   2   3   4   5   6   7   8   9   10  11  12
Treatments   B   C   A   A   C   A   B   D   C   D   B   D

2.2 Randomised Block Design (RBD)

This design is used if the experimental material is not homogeneous but can be divided into blocks of homogeneous material. Before the treatments are applied there are no known differences between the units within a block, but there may be very large differences between units from different blocks. Treatments are assigned at random to units within a block.

In a complete block design each treatment occurs at least once

in each block (randomised complete block design). If there are

not sufficient units within a block to allow all the treatments to be

applied an Incomplete Block Design can be used (not covered here,

see Hicks & Turner (1999) for details).

Randomised block designs are easy to design and analyse. Usually, the number of experimental units in each block is the same

as the number of treatments. Blocking allows more sensitive comparisons of treatment effects. On the other hand, missing data can

cause problems in the analysis.
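Randomisation for a randomised complete block design, where every treatment appears once in every block, might be sketched like this. The function name `randomise_rbd` and the seed are hypothetical.

```python
import random

def randomise_rbd(treatments, n_blocks, seed=None):
    """Return a dict block -> list of treatments in random order."""
    rng = random.Random(seed)
    layout = {}
    for b in range(1, n_blocks + 1):
        order = list(treatments)
        rng.shuffle(order)            # a separate randomisation within each block
        layout[f"Block {b}"] = order
    return layout

layout = randomise_rbd(["A", "B", "C", "D"], n_blocks=3, seed=7)
for block, order in layout.items():
    print(block, " ".join(order))
```

The key difference from the CRD is that the shuffle is restricted to the units of one block at a time, so block differences cannot be mixed up with treatment differences.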

Any known variability in the experimental procedure or the experimental units can be controlled for by blocking. A block could be:

• A day’s output of a machine.

• A litter of animals.

• A single subject.

• A single leaf on a plant.

• Time of day or weekday.

Table 2.1: Example of a randomised layout for 4 treatments applied in 3 blocks.

Block 1   C   A   B   D
Block 2   B   C   A   D
Block 3   D   B   C   A

2.3 The Latin Square Design

A Latin Square Design allows blocking for two sources of variation, without having to increase the number of experimental units. Call these sources row variation and column variation. The p² experimental units are grouped by their row and column position. The p treatments are assigned so that they occur exactly once in each row and in each column.

Table 2.2: A 4 × 4 Latin Square Design.

      C1   C2   C3   C4
R1    A    B    C    D
R2    B    C    D    A
R3    C    D    A    B
R4    D    A    B    C

Randomisation

The Latin Square is chosen at random from the set of standard

Latin squares of order p. Then a random permutation of rows is

chosen, a random permutation of columns is chosen, and finally,

the letters A, B, C, ..., are randomly assigned to the treatments.
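The randomisation steps can be sketched in Python. One simplification is assumed here: instead of drawing from the full set of standard Latin squares of order p, the sketch always starts from the cyclic standard square, then permutes rows, columns and letters as described. The function name `random_latin_square` is hypothetical.

```python
import random

def random_latin_square(treatments, seed=None):
    """Randomise a p x p Latin square starting from the cyclic standard square."""
    rng = random.Random(seed)
    p = len(treatments)
    # Cyclic standard square: entry (i, j) is (i + j) mod p.
    square = [[(i + j) % p for j in range(p)] for i in range(p)]
    rng.shuffle(square)                                   # random permutation of rows
    cols = list(range(p))
    rng.shuffle(cols)                                     # random permutation of columns
    square = [[row[c] for c in cols] for row in square]
    labels = list(treatments)
    rng.shuffle(labels)                                   # random assignment of letters
    return [[labels[k] for k in row] for row in square]

square = random_latin_square(["A", "B", "C", "D"], seed=3)
for row in square:
    print(" ".join(row))
```

Permuting rows and columns of a Latin square always gives another Latin square, so each treatment still occurs exactly once in every row and column.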

Latin square designs are efficient in the number of experimental units used when there are two blocking factors. However, the number of treatments must equal the number of row blocks and the number of column blocks. One experimental unit must be available at every combination of the two blocking factors. Also, the assumption of no interactions between treatment and blocking factors should hold.

Table 2.3: Latin square designs can be used to block for time periods and order of presentation of treatments.

            Order
Period 1    ABCD
Period 2    BCDA
Period 3    CDAB
Period 4    DABC

2.4 An Example

This example gives a brief overview of how the chosen design will

affect analysis, and conclusions. The ANOVA tables look similar to

the regression ANOVA tables you are used to, and are interpreted

in the same way. The only difference is that we have a row for each

treatment factor and for each blocking factor.

An experiment is conducted to compare 4 methods of treating motor car tyres. The treatments (methods), labelled A, B, C and D, are assigned to 16 tyres, four tyres receiving A, four others receiving B, etc. Four cars are available, treated tyres are placed on each car and the tread loss after 20 000 km is measured.

Consider design 1 in Table 2.4.

This design is terrible! Apparent treatment differences could also be car differences: treatment and car effects are confounded.

We could use a Completely Randomized Design (CRD). We would assign the treated tyres randomly to the cars, hoping that differences between the cars will average out. Table 2.5 is one such randomisation.

To test for differences between treatments, an analysis of variance (ANOVA) is used. We will present these tables here, but only

as a demonstration of what happens to the mean squared error

(MSE) when we change the design, or account for variation between

blocks.

Table 2.7 shows the ANOVA table for testing the hypothesis of no difference between treatments, $H_0: \mu_A = \mu_B = \mu_C = \mu_D$. There is no evidence for differences between the tyre brands.
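The sums of squares behind the CRD analysis can be reproduced from the tread losses grouped by treatment. The following Python sketch uses only the data of the example; the variable names are illustrative.

```python
# Tread loss grouped by treatment (car design 2, CRD).
tread_loss = {
    "A": [17, 14, 13, 13],
    "B": [14, 14, 13, 8],
    "C": [12, 12, 10, 9],
    "D": [13, 11, 10, 9],
}

all_obs = [y for ys in tread_loss.values() for y in ys]
N = len(all_obs)                  # 16 tyres
a = len(tread_loss)               # 4 treatments
grand_mean = sum(all_obs) / N

# Between-treatment and within-treatment (error) sums of squares.
ss_treat = sum(len(ys) * (sum(ys) / len(ys) - grand_mean) ** 2
               for ys in tread_loss.values())
ss_error = sum((y - sum(ys) / len(ys)) ** 2
               for ys in tread_loss.values() for y in ys)

ms_treat = ss_treat / (a - 1)
ms_error = ss_error / (N - a)
f_stat = ms_treat / ms_error

print(ss_treat, ss_error)             # 33.0 51.0
print(ms_treat, ms_error, round(f_stat, 2))
```

The error mean square of 4.25 is what the treatment mean square is compared against; it still contains the car-to-car variation.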

Is the Completely Randomised Design the best we can do? Note

that A is never used on Car 3, and B is never used on Car 1. Any

variation in A may reflect variation in Cars 1, 2 and 4. The same

remarks apply to B and Cars 2, 3 and 4. The error sum of squares

will contain this variation. Can we remove it? Yes - by blocking for

cars.

Even though we randomized, there is still a bit of confounding

(between cars and treatments) left. To remove this problem we

should block for car, and use every treatment once per car, i.e. use

a Randomised Block Design. Differences between the responses to

the treatments within a car will reflect the effect of the treatments.

Table 2.4: Car design 1.

Car 1   Car 2   Car 3   Car 4
A       B       C       D
A       B       C       D
A       B       C       D
A       B       C       D

Table 2.5: Car design 2: Completely Randomised Design. The numbers in brackets are the observed tread losses.

Car 1   Car 2   Car 3   Car 4
C(12)   A(14)   D(10)   A(13)
A(17)   A(13)   C(10)   D(9)
D(13)   B(14)   B(14)   B(8)
D(11)   C(12)   B(13)   C(9)

Table 2.6: Car design 2 (CRD), with rearranged observations.

Treatment   Tread loss
A           17   14   13   13
B           14   14   13   8
C           12   12   10   9
D           13   11   10   9

Table 2.7: ANOVA table for car design 2, CRD.

Source   df   SS   Mean Square   F stat
Brands   3    33   11.00         2.59
Error    12   51   4.25

Table 2.8: Car design 3: Randomised Block Design.

Car 1   Car 2   Car 3   Car 4
B(14)   D(11)   A(13)   C(9)
C(12)   C(12)   B(13)   D(9)
A(17)   B(14)   D(10)   B(8)
D(13)   A(14)   C(10)   A(13)

The treatment sum of squares from the RBD is the same as in

the CRD. The error sum of squares is reduced from 51 to 11.5 with

the loss of three degrees of freedom. The F-test for treatment effects

now shows evidence for differences between the tyre brands (Table

2.10).
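The effect of blocking on the error sum of squares can be checked numerically. The sketch below, in Python with illustrative variable names, splits the total sum of squares of the RBD data into treatment, car and error components.

```python
# Tread loss by treatment (rows) and car (columns 1-4), car design 3 (RBD).
tread_loss = {
    "A": [17, 14, 13, 13],
    "B": [14, 14, 13, 8],
    "C": [12, 12, 10, 9],
    "D": [13, 11, 10, 9],
}
a = len(tread_loss)            # number of treatments
b = len(tread_loss["A"])       # number of blocks (cars)
N = a * b
grand_mean = sum(sum(row) for row in tread_loss.values()) / N

ss_total = sum((y - grand_mean) ** 2 for row in tread_loss.values() for y in row)
ss_treat = b * sum((sum(row) / b - grand_mean) ** 2 for row in tread_loss.values())
car_means = [sum(row[j] for row in tread_loss.values()) / a for j in range(b)]
ss_cars = a * sum((m - grand_mean) ** 2 for m in car_means)
ss_error = ss_total - ss_treat - ss_cars   # what is left after treatments and cars

df_error = (a - 1) * (b - 1)
ms_error = ss_error / df_error
f_treat = (ss_treat / (a - 1)) / ms_error
f_cars = (ss_cars / (b - 1)) / ms_error

print(ss_treat, ss_cars, ss_error, df_error)   # 33.0 39.5 11.5 9
print(ms_error, f_treat, f_cars)
```

The treatment sum of squares is unchanged, but 39.5 of the former error sum of squares of 51 is now attributed to cars. The F statistics computed from the unrounded error mean square can differ slightly in the second decimal from hand calculations based on rounded values.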

Another source of variation would be from the wheels on which

a treated tyre was placed. To have a tyre of each type on each wheel

position on each car would mean that we would need 64 tyres for

the experiment, rather expensive! Using a Latin Square Design

makes it possible to put a treated tyre in each wheel position and

use all four treatments on each car (Table 2.11).

Within this arrangement A appears in each car and in each wheel

position, and the same applies to B and C and D, but we have not

had to increase the number of tyres needed.

Blocking for cars and wheel position has reduced the error sum

of squares to 6.00 with the loss of 3 degrees of freedom (Table 2.12).

The above example illustrates how the design can change results. In reality one cannot change the analysis after the experiment was run. The design determines the model, and all the above considerations (e.g. whether one should block by car and wheel position) have to be carefully thought through at the planning stage of the experiment.

Table 2.9: Rearranged data for car design 3, RBD.

            Tread loss
Treatment   Car 1   Car 2   Car 3   Car 4
A           17      14      13      13
B           14      14      13      8
C           12      12      10      9
D           13      11      10      9

Table 2.10: ANOVA table for car design 3, RBD.

Source   df   SS     Mean Square   F stat
Tyres    3    33     11.00         8.59
Cars     3    39.5   13.17         10.28
Error    9    11.5   1.28

Table 2.11: Car design 4: Latin Square Design.

Wheel position   Car 1   Car 2   Car 3   Car 4
1                A       B       C       D
2                B       C       D       A
3                C       D       A       B
4                D       A       B       C

Table 2.12: ANOVA table for car design 4, LSD.

Source   df   SS     Mean Square   F stat
Tyres    3    33     11.00         11.00
Cars     3    39.5   13.17         13.17
Wheels   3    5.5    1.83
Error    6    6.0    1.00

References

1. Hicks CR, Turner Jr KV. (1999). Fundamental Concepts in the Design of Experiments. 5th edition. Oxford University Press.

3 The Linear Model for Single-Factor Completely Randomized Design Experiments

3.1 The ANOVA linear model

A single-factor completely randomised design experiment results

in groups of observations, with (possibly) different means. In a

regression context, one could write a linear model for such data as

$$y_i = \beta_0 + \beta_1 L1_i + \beta_2 L2_i + \ldots + e_i$$

with L1, L2, etc. dummy variables indicating whether response i

belongs to group j or not.

However, when dealing with only categorical explanatory variables, as is typical in experimental data, it is more common to write

the above model in the following form:

$$Y_{ij} = \mu + \alpha_i + e_{ij} \tag{3.1}$$

The dummy variables are implicit but not written. The two models are equivalent in the sense that they make exactly the same

assumptions and describe exactly the same structure of the data.

Model 3.1 is sometimes referred to as an ANOVA model, as opposed to a regression model.

Both models can be written in matrix notation as Y = Xβ + e (see

next section).

3.2 Least Squares Parameter Estimates

Example: Three different methods of instruction in speed-reading

were to be compared. The comparison is made by testing the comprehension of a subject at the end of one week’s training in the


given method. Thirteen students volunteered to take part. Four

were randomly assigned to Method 1, 4 to Method 2 and 5 to

Method 3.

After one week’s training all students were asked to read an

identical passage on a film, which was delivered at a rate of 300

words per minute. Students were then asked to answer questions

on the passage read and their marks were recorded. They were as

follows:

            Marks                      Mean    Std. Dev.
Method 1    82   80   81   83          81.5    1.29
Method 2    71   79   78   74          75.5    3.70
Method 3    91   93   84   90   88     89.2    3.42

We want to know whether comprehension is higher for some

of these methods of speed-reading, and if so, which methods work

better.
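The group means and standard deviations of the speed-reading marks can be checked with Python's standard `statistics` module. This is a small sketch; the dictionary layout is just one convenient way to hold the data.

```python
from statistics import mean, stdev

# Comprehension marks for the three speed-reading methods.
marks = {
    "Method 1": [82, 80, 81, 83],
    "Method 2": [71, 79, 78, 74],
    "Method 3": [91, 93, 84, 90, 88],
}

# stdev() is the sample standard deviation (divisor n - 1).
summary = {m: (mean(ys), stdev(ys)) for m, ys in marks.items()}
for method, (m, s) in summary.items():
    print(f"{method}: mean = {m:.1f}, sd = {s:.2f}")
```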

When we have models where all explanatory variables (factors)

are categorical (as in experiments), it is common to write them as

follows. You will see later why this parameterisation is convenient

for such studies.

$$Y_{ij} = \mu + \alpha_i + e_{ij}$$

where

Yij is the jth observation with the ith method,

µ is the overall or general mean,

αi is the effect of the ith method / treatment.

Here αi = µi − µ, i.e. the change in mean response with treatment i relative to the overall mean. µi is the mean response with treatment i: µi = µ + αi.

By effect we mean here: the change in response with the particular

treatment compared to the overall mean. For categorical variables, effect

in general refers to a change in response relative to a baseline category or an overall mean. For continuous explanatory variables, e.g.

in regression models, we also talk about effects, and then mostly

mean the change in mean response per unit increase in x, the explanatory variable.

Note that we need 2 subscripts on the Y - one to identify the

group and the other to identify the subject within the group. Then:

$$Y_{1j} = \mu + \alpha_1 + e_{1j}$$

$$Y_{2j} = \mu + \alpha_2 + e_{2j}$$

$$Y_{3j} = \mu + \alpha_3 + e_{3j}$$

Note that there are 4 parameters, but only 3 groups or treatments.

Linear model in matrix form

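To put our model and data into matrix form we string out the data for the groups into an N × 1 vector, where the first n1 elements are the observations on Group 1, the next n2 elements the observations on Group 2, etc. Then the linear model, Y = Xβ + e, has the form

$$
Y =
\begin{bmatrix}
Y_{11} \\ Y_{12} \\ Y_{13} \\ Y_{14} \\ Y_{21} \\ Y_{22} \\ Y_{23} \\ Y_{24} \\ Y_{31} \\ Y_{32} \\ Y_{33} \\ Y_{34} \\ Y_{35}
\end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
+
\begin{bmatrix}
e_{11} \\ e_{12} \\ e_{13} \\ e_{14} \\ e_{21} \\ e_{22} \\ e_{23} \\ e_{24} \\ e_{31} \\ e_{32} \\ e_{33} \\ e_{34} \\ e_{35}
\end{bmatrix}
$$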

Note that

1. The entries of X are either 0 or 1 (because here all terms in the

structural part of the model are categorical). X is often called the

design matrix because it describes the design of the study, i.e. it

describes which of the factors in the model contributed to each

of the response values.

2. The last three columns of X add up to the first column. Thus X is a 13 × 4 matrix with column rank 3. The

matrix X′X will be a 4 × 4 matrix of rank 3.

3. From row 1: Y11 = µ + α1 + e11 . What is Y32 ?
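The design matrix for the speed-reading example, and the products X′X and X′Y that appear in the normal equations, can be built directly from the group sizes. The sketch below uses plain Python lists rather than a matrix library; the helper names are hypothetical.

```python
# Group sizes and marks for the speed-reading example.
n = [4, 4, 5]
y = [82, 80, 81, 83, 71, 79, 78, 74, 91, 93, 84, 90, 88]

# One row per observation: intercept column, then one dummy column per group.
X = []
for i, n_i in enumerate(n):
    for _ in range(n_i):
        X.append([1] + [1 if k == i else 0 for k in range(len(n))])

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

Xt = transpose(X)
XtX = matmul(Xt, X)                                      # 4 x 4 matrix
XtY = [sum(x * v for x, v in zip(row, y)) for row in Xt]

# In every row the three dummy columns add up to the intercept column.
dependent = all(row[0] == row[1] + row[2] + row[3] for row in X)

print(XtX)        # [[13, 4, 4, 5], [4, 4, 0, 0], [4, 0, 4, 0], [5, 0, 0, 5]]
print(XtY)        # [1074, 326, 302, 446]
print(dependent)  # True
```

X′X contains the group sizes and X′Y the overall and group totals, which is why the normal equations for this design are so easy to write down.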

To find estimates for the parameters we can use the method of

least squares or maximum likelihood, as for regression. The least

squares estimates minimise the error sum of squares:

$$SSE = (Y - X\beta)'(Y - X\beta) = \sum_i \sum_j (Y_{ij} - \mu - \alpha_i)^2$$

where β′ = (µ, α1, α2, α3), and the estimates are given by the solution to the normal equations

$$X'X\beta = X'Y$$

Since the sum of the last three columns of X is equal to the first

column there is a linear dependency between columns of X, and

X′X is a singular matrix, so we cannot write

$$\hat{\beta} = (X'X)^{-1}X'Y$$

The set of equations X′Xβ = X′Y is consistent, but has an infinite number of solutions. Note that we could have used only 3 parameters µ1, µ2, µ3, and we actually only have enough information to estimate these 3 parameters, because we only have 3 group means. Instead we have used 4 parameters, because the parametrization using the effects αi is more convenient in the analysis of variance, especially when calculating treatment sums of squares (see later). However, since µ is the overall mean, we also know that

$$N\mu = n_1\mu_1 + n_2\mu_2 + n_3\mu_3 = n_1\mu + n_1\alpha_1 + n_2\mu + n_2\alpha_2 + n_3\mu + n_3\alpha_3 .$$

The RHS becomes $(n_1 + n_2 + n_3)\mu + \sum_i n_i\alpha_i = N\mu + \sum_i n_i\alpha_i$. It follows that $\sum_i n_i\alpha_i = 0$. The normal equations don’t know this, so we add this additional equation (to calculate the fourth parameter from the other three) as a constraint in order to get the unique solution.

In other words, if we have ∑i ni αi = 0 then the αi ’s have exactly

the meaning intended above: they measure the difference in mean

response with treatment i compared to the overall mean; µi =

µ + αi .

We could define the αi ’s differently, by using a different constraint, e.g.

$$Y_{ij} = \mu + \alpha_i + e_{ij}, \qquad \alpha_1 = 0$$

Here the mean for treatment 1 is used as a reference category

and equals µ. Then α2 and α3 measure the difference in mean between group 2 and group 1 and between group 3 and group 1

respectively. This parametrization is the one most common in regression, e.g. when you add a categorical variable in a regression

model the β estimates are defined like this: as differences relative to

the first/baseline/reference category.

Now, back to a solution for the normal equations:


1. A constraint must be applied to obtain a particular solution β̂.

2. The constraint must remove the linear dependency, so it cannot

be any linear combination of the rows of X. Denote the constraint

by Cβ = 0.

3. The estimate of β subject to the given constraint is unique. For this reason the constraint should be specified as part of the model. So we write

$$Y_{ij} = \mu + \alpha_i + e_{ij}, \qquad \sum_i n_i\alpha_i = 0$$

or in matrix notation

$$Y = X\beta + e, \qquad C\beta = 0$$

where no linear combination of the rows of C is a linear combination of the rows of X.

4. Although the estimates of β depend on the constraints used, the

following quantities are unique.

(a) The fitted values Ŷ = X β̂.

(b) The Regression or Treatment Sum of Squares.

(c) The Error Sum of Squares, (Y − X β̂)′ (Y − X β̂).

(d) All linear combinations, ℓ′ β̂, where ℓ = L′ X (predictions fall

under this category).

These quantities are called estimable functions of the parameters.

Speed-reading Example

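$$
Y =
\begin{bmatrix}
82 \\ 80 \\ 81 \\ 83 \\ 71 \\ 79 \\ 78 \\ 74 \\ 91 \\ 93 \\ 84 \\ 90 \\ 88
\end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
+
\begin{bmatrix}
e_{11} \\ e_{12} \\ e_{13} \\ e_{14} \\ e_{21} \\ e_{22} \\ e_{23} \\ e_{24} \\ e_{31} \\ e_{32} \\ e_{33} \\ e_{34} \\ e_{35}
\end{bmatrix}
= X\beta + e
$$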

The normal equations are X′Xβ = X′Y, with X, β and Y as above and

$$
X' =
\begin{bmatrix}
1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 \\
1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & 1
\end{bmatrix}
$$

Multiplying out X′Xβ = X′Y gives

$$
\begin{bmatrix}
13 & 4 & 4 & 5 \\
4 & 4 & 0 & 0 \\
4 & 0 & 4 & 0 \\
5 & 0 & 0 & 5
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
=
\begin{bmatrix}
1074 \\ 326 \\ 302 \\ 446
\end{bmatrix}
=
\begin{bmatrix}
\sum_{ij} Y_{ij} \\ \sum_j Y_{1j} \\ \sum_j Y_{2j} \\ \sum_j Y_{3j}
\end{bmatrix}
$$

The sum of the last three columns of X′X equals column 1, hence the columns are linearly dependent.

So

• X′X is a 4 × 4 matrix with rank 3.

• X′X is singular.

• (X′X)⁻¹ does not exist.

• There are an infinite number of solutions that satisfy the equations! To find the particular solution we require, we add the constraint, which defines how the parameters are related to each other.

The effect of different constraints on the solution to the normal equations

We illustrate the effect of different sets of constraints on the least

squares estimates using the speed-reading example. The normal

equations are:

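$$
X'X\beta =
\begin{bmatrix}
13 & 4 & 4 & 5 \\
4 & 4 & 0 & 0 \\
4 & 0 & 4 & 0 \\
5 & 0 & 0 & 5
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
=
\begin{bmatrix}
1074 \\ 326 \\ 302 \\ 446
\end{bmatrix}
= X'Y
$$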

The sum-to-zero constraint ∑ ni αi = 0:

This constraint can be written as

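$$C\beta = 0\mu + 4\alpha_1 + 4\alpha_2 + 5\alpha_3 = 0$$

Using this constraint the normal equations become

$$
\begin{bmatrix}
13 & 0 & 0 & 0 \\
4 & 4 & 0 & 0 \\
4 & 0 & 4 & 0 \\
5 & 0 & 0 & 5
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
=
\begin{bmatrix}
1074 \\ 326 \\ 302 \\ 446
\end{bmatrix}
$$

and their solution is

$$\hat{\mu} = 82.62, \qquad \hat{\alpha}_1 = -1.12, \qquad \hat{\alpha}_2 = -7.12, \qquad \hat{\alpha}_3 = 6.58$$

$$
\hat{\beta}'X'Y =
\begin{bmatrix}
82.62 & -1.12 & -7.12 & 6.58
\end{bmatrix}
\begin{bmatrix}
1074 \\ 326 \\ 302 \\ 446
\end{bmatrix}
= 89153.2
$$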

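$$\hat{\beta}'X'Y = SS_{mean} + SS_{treatment} = \sum_i\sum_j (\bar{Y}_{\cdot\cdot} - 0)^2 + \sum_i\sum_j (\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot})^2 .$$

This assumes that the total sum of squares was calculated as the uncorrected sum $\sum_{ij} Y_{ij}^2 = Y'Y$. $\hat{\beta}'X'Y$ is used here because it is easier to calculate by hand than the usual treatment sum of squares $\hat{\beta}'X'Y - \frac{1}{N}Y'JY$, where J is the N × N matrix of ones, so that $\frac{1}{N}Y'JY = N\bar{Y}_{\cdot\cdot}^2$.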

The error sum of squares is (Y − X β̂)′ (Y − X β̂) = 92.8.

The fitted values are

Ŷ1j = µ̂ + α̂1 = 81.5 in Group 1

Ŷ2j = µ̂ + α̂2 = 75.5 in Group 2


Ŷ3j = µ̂ + α̂3 = 89.2 in Group 3

The corner-point constraint α1 = 0:

This constraint is important as it is the one used most frequently

for regression models with dummy or categorical variables, e.g.

regression models fitted in R.

Now

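$$
C\beta =
\begin{bmatrix}
0 & 1 & 0 & 0
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
= \alpha_1 = 0
$$

This constraint is equivalent to removing the α1 equation from the model, so we strike out the row and column of X′X corresponding to α1 and the normal equations become

$$
\begin{bmatrix}
13 & 4 & 5 \\
4 & 4 & 0 \\
5 & 0 & 5
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
=
\begin{bmatrix}
1074 \\ 302 \\ 446
\end{bmatrix}
$$

with solution

$$\hat{\mu} = 81.5, \qquad \hat{\alpha}_1 = 0, \qquad \hat{\alpha}_2 = -6.0, \qquad \hat{\alpha}_3 = 7.7$$

$$
\hat{\beta}'X'Y =
\begin{bmatrix}
81.5 & 0 & -6 & 7.7
\end{bmatrix}
\begin{bmatrix}
1074 \\ 326 \\ 302 \\ 446
\end{bmatrix}
= 89153.2
$$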

The error sum of squares is (Y − X β̂)′ (Y − X β̂) = 92.8

The fitted values are

Ŷ1j = µ̂ = 81.5 in Group 1

Ŷ2j = µ̂ + α̂2 = 75.5 in Group 2

Ŷ3j = µ̂ + α̂3 = 89.2 in Group 3

which are the same as previously. However, the interpretation

of the parameter estimates is different. µ is the mean of treatment

1, α2 is the difference in means between treatment 2 and treatment

1, etc.. Treatment 1 is the baseline or reference category. This is the

parametrization typically used when fitting regression models, e.g.

in R, which calls it ’treatment contrasts’.

The constraint µ = 0 will result in the cell means model: yij =

αi + eij or µi + eij .

We summarise the effects of using different constraints in the

table below:

Model     Constraint      µ̂      α̂1     α̂2     α̂3     Ŷ1j     Ŷ2j     Ŷ3j     β̂′X′Y      Error SS
µ + αi    ∑ ni αi = 0     82.6    -1.1    -7.1    6.6     81.5    75.5    89.2    89153.2    92.8
µ + αi    α1 = 0          81.5    0       -6      7.7     81.5    75.5    89.2    89153.2    92.8
αi        µ = 0           0       81.5    75.5    89.2    81.5    75.5    89.2    89153.2    92.8

We will be using almost exclusively the sum-to-zero constraint

as this has a convenient interpretation and connection to sums of

squares, and the analysis of variance.

Design matrices of less than full rank

If the design matrix X has rank r less than p (number of parameters), there is not a unique solution for β. There are three ways to

find a solution:

1. Reducing the model to one of full rank.

2. Finding a generalized inverse ( X ′ X )− .

3. Imposing identifiability constraints.

To reduce the model to one of full rank we would reduce the parameters to µ, α2, α3, . . ., with α1 implicitly set to zero (this is what R uses by default in its lm() function: the corner-point constraint).

We won’t deal with generalized inverses in this course.

To impose the identifiability constraints we write the constraint as Hβ = 0, and then solve the augmented normal equations X′Xβ = X′Y and H′Hβ = 0:

$$
\begin{bmatrix}
X'X \\ H'H
\end{bmatrix}
\beta = G\beta =
\begin{bmatrix}
X'Y \\ 0
\end{bmatrix}
$$

$$(X'X + H'H)\beta = X'Y$$

$$\hat{\beta} = (X'X + H'H)^{-1}X'Y$$
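The formula for the constrained estimate can be tried out on the speed-reading normal equations. The sketch below is a Python illustration under stated assumptions: it uses exact fractions and a small hand-written Gauss-Jordan solver (the helper names `solve` and `constrained_estimate` are hypothetical), and it takes H to be a single row vector for each of the three constraints discussed in this chapter.

```python
from fractions import Fraction

# X'X and X'Y for the speed-reading example.
XtX = [[13, 4, 4, 5], [4, 4, 0, 0], [4, 0, 4, 0], [5, 0, 0, 5]]
XtY = [1074, 326, 302, 446]

def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination; A must be nonsingular."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        piv = M[col][col]
        M[col] = [v / piv for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [v - factor * w for v, w in zip(M[r], M[col])]
    return [row[n] for row in M]

def constrained_estimate(h):
    """Solve (X'X + H'H) beta = X'Y for a single constraint row h."""
    HtH = [[hi * hj for hj in h] for hi in h]
    A = [[XtX[i][j] + HtH[i][j] for j in range(4)] for i in range(4)]
    return solve(A, XtY)

constraints = {
    "sum-to-zero": [0, 4, 4, 5],    # 4*a1 + 4*a2 + 5*a3 = 0
    "corner-point": [0, 1, 0, 0],   # a1 = 0
    "cell means": [1, 0, 0, 0],     # mu = 0
}
results = {}
for name, h in constraints.items():
    mu, a1, a2, a3 = constrained_estimate(h)
    fitted = [mu + a1, mu + a2, mu + a3]
    results[name] = ([mu, a1, a2, a3], fitted)
    print(name, [float(v) for v in (mu, a1, a2, a3)], [float(f) for f in fitted])
```

The parameter estimates differ between the three constraints, but the fitted values 81.5, 75.5 and 89.2 are the same each time, in line with the summary table of constraints.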

Parameter estimates for the single-factor completely randomised design

Suppose an experiment has been conducted as a completely randomised design: N subjects were randomly assigned to a treatments, where the ith treatment has ni subjects, with ∑ ni = N, and

Yij = jth observation in ith treatment group. The data have the form:

             Group
             I        II       ...    a
             Y11      Y21      ...    Ya1
             Y12      Y22      ...    Ya2
             ⋮        ⋮               ⋮
             Y1n1     Y2n2     ...    Yana
Means        Ȳ1·      Ȳ2·      ...    Ȳa·      Ȳ··
Totals       Y1·      Y2·      ...    Ya·      Y··
Variances    s1²      s2²      ...    sa²

The first subscript is for the treatment group, the second for

the replication. The group totals and means are expressed in the

following dot notation:

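$$\text{group total: } Y_{i\cdot} = \sum_{j=1}^{n_i} Y_{ij}, \qquad \text{group mean: } \bar{Y}_{i\cdot} = Y_{i\cdot}/n_i$$

and

$$\text{overall total: } Y_{\cdot\cdot} = \sum_i \sum_j Y_{ij}, \qquad \text{overall mean: } \bar{Y}_{\cdot\cdot} = Y_{\cdot\cdot}/N$$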

Let Yij = jth observation in ith group. The model is:

$$Y_{ij} = \mu + \alpha_i + e_{ij}, \qquad \sum_i n_i\alpha_i = 0$$

where

µ = general mean

αi = effect of the ith level of treatment factor A

eij = random error distributed as N(0, σ²).

The model can be written in matrix notation as

$$Y = X\beta + e, \qquad C\beta = 0$$

with

$$e \sim N(0, \sigma^2 I)$$

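where

$$
Y =
\begin{bmatrix}
Y_{11} \\ Y_{12} \\ \vdots \\ Y_{1n_1} \\ Y_{21} \\ Y_{22} \\ \vdots \\ Y_{2n_2} \\ \vdots \\ Y_{a1} \\ \vdots \\ Y_{an_a}
\end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 & \cdots & 0 \\
1 & 1 & 0 & \cdots & 0 \\
\vdots & \vdots & \vdots & & \vdots \\
1 & 1 & 0 & \cdots & 0 \\
1 & 0 & 1 & \cdots & 0 \\
1 & 0 & 1 & \cdots & 0 \\
\vdots & \vdots & \vdots & & \vdots \\
1 & 0 & 1 & \cdots & 0 \\
\vdots & \vdots & \vdots & & \vdots \\
1 & 0 & 0 & \cdots & 1 \\
\vdots & \vdots & \vdots & & \vdots \\
1 & 0 & 0 & \cdots & 1
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_a
\end{bmatrix}
+
\begin{bmatrix}
e_{11} \\ e_{12} \\ \vdots \\ e_{1n_1} \\ e_{21} \\ e_{22} \\ \vdots \\ e_{2n_2} \\ \vdots \\ e_{a1} \\ \vdots \\ e_{an_a}
\end{bmatrix}
= X\beta + e
$$

and the constraint as

$$
C\beta =
\begin{bmatrix}
0 & n_1 & n_2 & \cdots & n_a
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_a
\end{bmatrix}
= 0
$$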

There are a + 1 parameters subject to 1 constraint. To estimate

the parameters we minimize the residual/error sum of squares

$$S = (Y - X\beta)'(Y - X\beta) = \sum_{ij} (Y_{ij} - \mu - \alpha_i)^2$$

where ∑ij = ∑i ∑ j . Let’s put numbers to all of this and assume

a = 3, n1 = 4, n2 = 4 and n3 = 5. Then

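$$
\begin{bmatrix}
Y_{11} \\ Y_{12} \\ Y_{13} \\ Y_{14} \\ Y_{21} \\ Y_{22} \\ Y_{23} \\ Y_{24} \\ Y_{31} \\ Y_{32} \\ Y_{33} \\ Y_{34} \\ Y_{35}
\end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
+
\begin{bmatrix}
e_{11} \\ \vdots \\ e_{35}
\end{bmatrix}
$$

subject to Cβ = 0:

$$
C\beta =
\begin{bmatrix}
0 & 4 & 4 & 5
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
= 0
$$

With X′ the transpose of the design matrix above,

$$
X'X\beta =
\begin{bmatrix}
13 & 4 & 4 & 5 \\
4 & 4 & 0 & 0 \\
4 & 0 & 4 & 0 \\
5 & 0 & 0 & 5
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3
\end{bmatrix}
=
\begin{bmatrix}
13\mu + 4\alpha_1 + 4\alpha_2 + 5\alpha_3 \\
4\mu + 4\alpha_1 \\
4\mu + 4\alpha_2 \\
5\mu + 5\alpha_3
\end{bmatrix}
$$

and

$$
X'Y =
\begin{bmatrix}
\sum_{ij} Y_{ij} \\ \sum_j Y_{1j} \\ \sum_j Y_{2j} \\ \sum_j Y_{3j}
\end{bmatrix}
=
\begin{bmatrix}
N\bar{Y}_{\cdot\cdot} \\ n_1\bar{Y}_{1\cdot} \\ n_2\bar{Y}_{2\cdot} \\ n_3\bar{Y}_{3\cdot}
\end{bmatrix}
$$

which results in the normal equations:

$$
\begin{aligned}
13\mu + 4\alpha_1 + 4\alpha_2 + 5\alpha_3 &= 13\bar{Y}_{\cdot\cdot} \\
4\mu + 4\alpha_1 &= 4\bar{Y}_{1\cdot} \\
4\mu + 4\alpha_2 &= 4\bar{Y}_{2\cdot} \\
5\mu + 5\alpha_3 &= 5\bar{Y}_{3\cdot}
\end{aligned}
$$

The constraint says that:

$$0\mu + 4\alpha_1 + 4\alpha_2 + 5\alpha_3 = 0$$

Which implies that

$$13\mu = 13\bar{Y}_{\cdot\cdot} \implies \hat{\mu} = \bar{Y}_{\cdot\cdot}$$

And that

$$\hat{\alpha}_i = \bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot}$$

So to summarize, the normal equations are

$$X'X\beta = X'Y$$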

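where

$$
X'X\beta =
\begin{bmatrix}
N & n_1 & n_2 & \cdots & n_a \\
n_1 & n_1 & 0 & \cdots & 0 \\
n_2 & 0 & n_2 & \cdots & 0 \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
n_a & 0 & 0 & \cdots & n_a
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_a
\end{bmatrix}
= X'Y =
\begin{bmatrix}
N\bar{Y}_{\cdot\cdot} \\ n_1\bar{Y}_{1\cdot} \\ n_2\bar{Y}_{2\cdot} \\ \vdots \\ n_a\bar{Y}_{a\cdot}
\end{bmatrix}
$$

Using the constraint

$$
C\beta =
\begin{bmatrix}
0 & n_1 & n_2 & \cdots & n_a
\end{bmatrix}
\begin{bmatrix}
\mu \\ \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_a
\end{bmatrix}
= 0
$$

the normal equations are

$$
\begin{aligned}
N\mu &= N\bar{Y}_{\cdot\cdot} \\
n_1\mu + n_1\alpha_1 &= n_1\bar{Y}_{1\cdot} \\
&\;\;\vdots \\
n_a\mu + n_a\alpha_a &= n_a\bar{Y}_{a\cdot}
\end{aligned}
$$

Solving these equations gives the least squares estimators

$$\hat{\mu} = \bar{Y}_{\cdot\cdot}, \qquad \hat{\mu}_i = \bar{Y}_{i\cdot}$$

and

$$\hat{\alpha}_i = \bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot}$$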

for i = 1, . . . , a. Parameter estimation for many of the standard

experimental designs is straightforward! From general theory we

know that the above are unbiased estimators of µ and the αi ’s. An

unbiased estimator of σ2 is found by using the minimum value of

the residual sum of squares, SSE, and dividing by its degrees of

freedom.

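$$\min(SSE) = \sum_{ij} (Y_{ij} - \hat{\mu} - \hat{\alpha}_i)^2 = \sum_{ij} (Y_{ij} - \bar{Y}_{i\cdot})^2$$

For equal group sizes $n_i = n$ (so that $N = an$),

$$E(Y_{ij} - \bar{Y}_{i\cdot})^2 = Var(Y_{ij} - \bar{Y}_{i\cdot}) = \sigma^2\left(1 - \frac{1}{n}\right)$$

Hint: $Cov(Y_{ij}, \frac{1}{n}\sum_j Y_{ij}) = \frac{1}{n}\sigma^2$

Then

$$E[SSE] = E\left[\sum_i \sum_j (Y_{ij} - \bar{Y}_{i\cdot})^2\right] = an\sigma^2\left(1 - \frac{1}{n}\right) = a(n - 1)\sigma^2$$

$$E[MSE] = E\left[\frac{SSE}{N - a}\right] = \sigma^2$$

So

$$s^2 = \frac{1}{N - a}\sum_{ij} (Y_{ij} - \bar{Y}_{i\cdot})^2$$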

is an unbiased estimator for σ2 , with ( N − a) degrees of freedom

since we have N observations and (a + 1) parameters subject to 1

constraint. This quantity is also called the Mean Square for Error

or MSE.
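For the speed-reading data the least squares estimates and the mean square for error can be computed directly from these formulas. The following is a short Python sketch with illustrative variable names.

```python
# Speed-reading marks, one list per method.
marks = [
    [82, 80, 81, 83],
    [71, 79, 78, 74],
    [91, 93, 84, 90, 88],
]
N = sum(len(g) for g in marks)   # 13 observations
a = len(marks)                   # 3 treatments

mu_hat = sum(sum(g) for g in marks) / N              # overall mean
group_means = [sum(g) / len(g) for g in marks]       # estimates of mu_i
alpha_hat = [m - mu_hat for m in group_means]        # effects, sum-to-zero constraint

# Residual sum of squares about the group means, and its mean square.
sse = sum((y - m) ** 2 for g, m in zip(marks, group_means) for y in g)
mse = sse / (N - a)

# The estimated effects satisfy the constraint sum(n_i * alpha_i) = 0.
constraint = sum(len(g) * al for g, al in zip(marks, alpha_hat))

print(round(mu_hat, 2), [round(al, 2) for al in alpha_hat])
print(round(sse, 1), round(mse, 2), round(constraint, 10))
```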

3.3 Standard Errors and Confidence Intervals

Mostly, the estimates we are interested in are linear combinations

of treatment means. In such cases it is relatively straightforward to

calculate the corresponding variances (of the estimates):

$$
Var(\hat{\mu}) = Var(\bar{Y}_{\cdot\cdot}) = Var\Big(\sum_i \sum_j Y_{ij}/N\Big) = \frac{1}{N^2}\sum_i \sum_j Var(Y_{ij}) = \frac{1}{N^2}N\sigma^2 = \frac{\sigma^2}{N}
$$

The estimated variance is then $s^2/N$ where $s^2$ is the mean square for error (least squares estimate, see above).

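$$Var(\hat{\alpha}_i) = Var(\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot}) = Var(\bar{Y}_{i\cdot}) + Var(\bar{Y}_{\cdot\cdot}) - 2Cov(\bar{Y}_{i\cdot}, \bar{Y}_{\cdot\cdot})$$

Consider

$$Cov(\bar{Y}_{i\cdot}, \bar{Y}_{\cdot\cdot}) = Cov\Big(\bar{Y}_{i\cdot}, \sum_k \frac{n_k}{N}\bar{Y}_{k\cdot}\Big) = \sum_k \frac{n_k}{N} Cov(\bar{Y}_{i\cdot}, \bar{Y}_{k\cdot})$$

But since the groups are independent, $Cov(\bar{Y}_{i\cdot}, \bar{Y}_{k\cdot})$ is zero if $i \neq k$. If $i = k$ then $Cov(\bar{Y}_{i\cdot}, \bar{Y}_{k\cdot}) = Var(\bar{Y}_{i\cdot}) = \frac{\sigma^2}{n_i}$. Using this result and summing we find $Cov(\bar{Y}_{i\cdot}, \bar{Y}_{\cdot\cdot}) = \frac{\sigma^2}{N}$.

Hence

$$Var(\hat{\alpha}_i) = \frac{\sigma^2}{n_i} + \frac{\sigma^2}{N} - \frac{2\sigma^2}{N} = \frac{\sigma^2}{n_i} - \frac{\sigma^2}{N} = \frac{(N - n_i)\sigma^2}{n_i N}$$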

Important estimates and their standard errors

A standard error is the (usually estimated) standard deviation of

an estimated quantity. It is the square root of the variance of the

sampling distribution of this estimator and is an estimate of its

precision or uncertainty.

Parameter                                      Estimate                              Standard Error
Overall mean, µ                                Ȳ··                                   √(s²/N)
Experimental error variance, σ²                s² = (1/(N − a)) ∑ij (Yij − Ȳi·)²
Effect of the ith treatment, αi                Ȳi· − Ȳ··                             √((N − ni) s² / (ni N))
Difference between two treatments, α1 − α2     Ȳ1· − Ȳ2·                             √(s² (1/n1 + 1/n2)) = sed
Treatment mean, µi = µ + αi                    Ȳi·                                   √(s²/ni)

How do we estimate σ²?

$$
s^2 = \frac{1}{N - a}\sum_{ij} (Y_{ij} - \bar{Y}_{i\cdot})^2 = \text{Mean Square for Error} = MSE = \frac{\text{Within Sum of Squares}}{\text{Degrees of Freedom}} = \frac{SS_{residual}}{df_{residual}}
$$

Assuming (approximate) normality of an estimator, a confidence interval for the corresponding population parameter has the form

$$\text{estimate} \pm t_{\nu}^{\alpha/2} \times \text{standard error}$$

where $t_{\nu}^{\alpha/2}$ is the upper α/2 point, i.e. the 100(1 − α/2)th percentile, of Student’s t distribution with ν degrees of freedom. The degrees of freedom of t are the degrees of freedom of s².

Speed-reading Example

Estimates, standard errors and confidence intervals:

                 Estimate         Standard Error    95% Confidence Interval
Effect (αi)
  Method I       α̂1 = −1.12      1.27
  Method II      α̂2 = −7.12      1.27
  Method III     α̂3 = 6.58       1.07
Mean (µi)
  Method I       µ̂1 =
  Method II      µ̂2 =
  Method III     µ̂3 =
Overall mean     µ̂ = 82.62       0.85
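The standard errors for the speed-reading example follow from the formulas in the table of estimates, with s² = 92.8/10. The Python sketch below evaluates them and one confidence interval. The critical value 2.228 (upper 2.5% point of t with 10 degrees of freedom) is taken from standard t tables and hard-coded here as an assumption, since the standard library has no t quantile function.

```python
from math import sqrt

marks = [
    [82, 80, 81, 83],
    [71, 79, 78, 74],
    [91, 93, 84, 90, 88],
]
n = [len(g) for g in marks]
N, a = sum(n), len(marks)
group_means = [sum(g) / len(g) for g in marks]
mu_hat = sum(sum(g) for g in marks) / N

sse = sum((y - m) ** 2 for g, m in zip(marks, group_means) for y in g)
s2 = sse / (N - a)                                   # mean square for error

se_mu = sqrt(s2 / N)                                          # overall mean
se_alpha = [sqrt((N - ni) * s2 / (ni * N)) for ni in n]       # treatment effects
se_mean = [sqrt(s2 / ni) for ni in n]                         # treatment means
sed_12 = sqrt(s2 * (1 / n[0] + 1 / n[1]))                     # difference, methods 1 and 2

t_crit = 2.228   # assumed table value: t with N - a = 10 df, upper 2.5% point
ci_mu = (mu_hat - t_crit * se_mu, mu_hat + t_crit * se_mu)

print([round(se, 2) for se in se_alpha])
print(round(se_mu, 3), [round(se, 2) for se in se_mean], round(sed_12, 2))
print(tuple(round(v, 2) for v in ci_mu))
```

The same pattern, estimate plus or minus the critical value times the standard error, gives the intervals for the effects and the treatment means.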

3.4 Analysis of Variance (ANOVA)

The next step is testing the hypothesis of no treatment effect: $H_0: \alpha_1 = \alpha_2 = \ldots = \alpha_a = 0$. This is done by a method called Analysis of Variance, even though we are actually comparing means.

Note that so far we have not used the assumption of eij ∼

N (0, σ2 ). The least squares estimates do not require the assumption of normal errors! However, to construct a test for the above

hypothesis we need the normality assumption. In what follows,

we assume that the errors are identically and independently distributed as N (0, σ2 ). Consequently the observations are normally

distributed, though not identically. We must check this assumption

of independent, normally distributed errors, else our test of the

above hypothesis could give a very misleading result.

Decomposition of Sums of Squares

Let’s assume Yij are data obtained from a CRD, and we are assuming model 3.1:

$$Y_{ij} = \mu + \alpha_i + e_{ij}$$

In statistics, sums of squares refer to squared deviations (from a mean or expected value), e.g. the residual sum of squares is the sum of squared deviations of observed from fitted values. Let’s rewrite the above model by substituting observed values and rewriting the terms as deviations from means:

$$Y_{ij} - \bar{Y}_{\cdot\cdot} = (\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot}) + (Y_{ij} - \bar{Y}_{i\cdot})$$

Make sure you agree with the above. Now square both sides and sum over all N observations:

$$
\sum_i \sum_j (Y_{ij} - \bar{Y}_{\cdot\cdot})^2 = \sum_i \sum_j (Y_{ij} - \bar{Y}_{i\cdot})^2 + \sum_i \sum_j (\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot})^2 + 2\sum_i \sum_j (Y_{ij} - \bar{Y}_{i\cdot})(\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot})
$$

The crossproduct term is zero after summation over j since it can be written as

$$2\sum_i (\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot}) \sum_j (Y_{ij} - \bar{Y}_{i\cdot})$$

The second sum is the sum of the deviations of the observations in the ith group about their mean value, so it is zero for each i. Hence

$$
\sum_i \sum_j (Y_{ij} - \bar{Y}_{\cdot\cdot})^2 = \sum_i \sum_j (\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot})^2 + \sum_i \sum_j (Y_{ij} - \bar{Y}_{i\cdot})^2
$$

So the total sum of squares partitions into two components: (1)

squared deviations of the treatment means from the overall mean,

and (2) squared deviations of the observations from the treatment

means. The latter is the residual sum of squares (as in regression,

the treatment means are the fitted values). The first sum of squares

is the part of the variation that can be explained by deviations of

the treatment means from the overall mean. We can write this as

SStotal = SStreatment + SSerror

The analysis of variance is based on this identity. The total sum

of squares equals the sum of squares between groups plus the sum

of squares within groups.
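The identity can be verified numerically for the speed-reading data. This is a small Python check with illustrative variable names; it also confirms that the cross-product term vanishes.

```python
marks = [
    [82, 80, 81, 83],
    [71, 79, 78, 74],
    [91, 93, 84, 90, 88],
]
N = sum(len(g) for g in marks)
grand_mean = sum(sum(g) for g in marks) / N
group_means = [sum(g) / len(g) for g in marks]

ss_total = sum((y - grand_mean) ** 2 for g in marks for y in g)
ss_treatment = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(marks, group_means))
ss_error = sum((y - m) ** 2 for g, m in zip(marks, group_means) for y in g)

# Cross-product term: within each group the deviations about the group mean sum to zero.
cross = 2 * sum((m - grand_mean) * sum(y - m for y in g)
                for g, m in zip(marks, group_means))

print(round(ss_total, 2), round(ss_treatment, 2), round(ss_error, 2))
print(abs(ss_total - (ss_treatment + ss_error)) < 1e-9, abs(cross) < 1e-9)
```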

Distributions for Sums of Squares

Each of the sums of squares above can be written as a quadratic

form:

Source

treatment A

residual

total

SS

df

Y′ ( H

1

n J )Y

−

SSA =

SSE = Y ′ ( I − H )Y

SST = Y ′ ( I − n1 J )Y

a−1