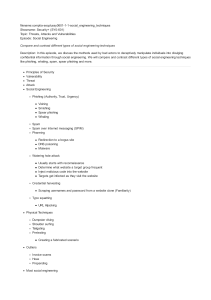

Abstract

Phishing is a method of impersonating websites in order to monitor users and obtain their confidential information online. Attackers deceive victims through social engineering channels such as SMS, phone calls, email, websites, and viruses. Several strategies have been proposed and put into practice to identify different phishing attacks, including the use of blacklists and whitelists. This document presents a desktop program named Phish Saver that focuses on the URLs and content of phishing web pages, and it emphasizes using Phish Saver to identify phishing websites. Phish Saver combines heuristic features with a blacklist to identify various phishing scams. For the blacklist it uses Google Safe Browsing, accessed through Google API services, because Google continually updates and monitors it.

Acknowledgments

Contents

Abstract
Acknowledgments
List of Figures
List of Tables
Chapter 1 Introduction
1.1 Introduction
1.2 Survey Outcomes
1.3 Problem Background
1.4 Finding Solutions
1.5 Anomalies across phishing websites are good indicators of phishing
1.6 Research Question
1.7 Research Motivation
1.8 Research Aim and Objectives
1.9 Heuristics Methodology for the Scenario
1.10 Rich picture of the proposed solution
1.11 Project scope
Chapter 2 Literature Review
2.1 Chapter overview
2.2 Conceptual taxonomy of the literature organization
2.3 Existing Systems / Frameworks / Designs
2.4 Technological Analysis
2.5 Reflection
Chapter 3 Methodology
3.1 Feasibility study
3.2 Operations Feasibility
3.3 Technology Feasibility
3.4 Research Approach
3.5 Requirement Specification
Chapter Overview
Questionnaires
3.6 Testing and results
Chapter 4 Results and Observations
4.1 Chapter Overview
4.2 Proposed Method
4.3 The Architecture
Algorithms
4.4 Implementation
4.5 Chapter Summary
Chapter 5 Conclusion
5.1 Chapter Overview
5.2 Accomplishment of the research objectives
5.3 Limitations of the research and problems encountered
5.4 Discussion and Future improvements/recommendations
5.5 Chapter Summary
References
Appendix A: Survey Total Results
Appendix B: Gantt Chart

List of Figures

Figure 1 Structure for the URL
Figure 2 Detection Method
Figure 3 Survey Result
Figure 4 Survey Result
Figure 5 Survey Result
Figure 6 Survey Result
Figure 7 Survey Result
Figure 8 Gantt Chart
Figure 9 Gantt Chart

List of Tables

Table 1 TP, TN, FP, FN Matrixes

Chapter 1 Introduction

1.1 Introduction

The revolution of the current technological era has given rise to a new type of cyber threat. Phishing websites have become a major cyber security issue for online websites, and especially for finance-related websites. Vulnerabilities present in websites are a main reason behind phishing attacks, because they leave the underlying web servers exposed. Phishers exploit these weaknesses to launch their attacks without the website owners noticing. This research discusses methods for detecting phishing websites and explains the background of phishing websites and the harm they cause. The primary goal of the research is to find a good method for recognizing phishing websites.

This study proposes a program called Phish Shield for spotting phishing URLs. The Phish Shield utility was built with Java and JavaScript: Java Spring Boot is used for back-end development, and the React framework is used for front-end development.
Google and PhishTank provide open APIs through which developers and academics can incorporate anti-phishing data. In this study, phishing URLs are detected using the PhishTank open API: the tool sends an HTTP GET request, and the API responds with the status of the URL in the PhishTank database.

Some phishers launch phishing website attacks by hosting new servers specifically for this purpose. Because of these attacks, many researchers are working on ways to prevent phishing, especially by detecting phishing websites. With the rise of cybercrime, phishers have also started registering their own phishing domains. A phishing website can be described as a cloned website that looks like a legitimate one and deceives users with fake content to fulfill various attacker goals. Financial websites and legitimate government websites are frequent targets. As technology has advanced, phishing websites and their operators have become more sophisticated and diverse than in the past. Phishing websites can cause various privacy breaches and can be used to exploit vulnerabilities for ransomware attacks. Therefore, this research examines the factors behind phishing websites and the ways they are detected.

1.2 Survey Outcomes

A survey was conducted to assess awareness of phishing detection among online users. The sample consisted of fifty-two students, and the survey was administered as an online questionnaire containing 12 multiple-choice questions and one open-ended question.

Survey Questions
1. What is your gender?
2. Please select your age range
3. What is your education level?
4. How is your daily usage of the internet?
5. What is your main purpose for using the internet?
6. Rate your basic computer knowledge
7. Do you have a virus protection program running on your computer?
8. Have you faced any internet fraud?
9. Do you know about online phishing attacks and phishing types?
10. Can you detect phishing emails or websites before scamming you? (Example of a phishing attack: updating NETFLIX payment details)
11. Do you have any knowledge about phishing prevention methods?
12. If someone scams you, what can you do?
13. Would you like us to introduce new ways to detect phishing websites?

According to the survey results, most participants know how to identify online phishing attacks and phishing types, but they have little knowledge of phishing prevention methods. 95% of participants use the internet frequently, and the majority answered "Yes" to Question 13 (Would you like us to introduce new ways to detect phishing websites?). 35% of participants rated their basic computer knowledge as "Excellent", and the majority answered "Yes" to Question 10 (Can you detect phishing emails or websites before scamming you?). The remaining participants, however, do not know how to detect phishing sites before being scammed. Therefore, this research examines the factors behind phishing websites and establishes an effective way to detect phishing attempts.

1.3 Problem Background

There are several reasons to research phishing website detection. With current advances in technology, using the internet and visiting websites has become essential, and as a result the number of crimes committed online is increasing. Phishing websites are a type of cybercrime that becomes more prevalent every day, and they pose a variety of risks to users. Phishing websites can cause financial losses for businesses, particularly finance-related ones, and they can damage the reputations of well-known companies.
Users frequently struggle to distinguish between legitimate websites and phishing scams. These factors make research on phishing website detection crucial in today's technology environment.

The shift in the contemporary technological era has led to the emergence of a new sort of cyber threat. Phishing websites have become a major problem for online websites and cyber security, and particularly for online financial businesses. Phishing attacks are enabled by vulnerabilities present on websites, which leave the web servers exposed and allow phishers to target their attacks without the website owners noticing. The background of phishing websites and the harm they cause has therefore become an important subject for discussion.

Some phishers redirect phishing attacks by running new servers specifically for this purpose. Because of these attacks, most academics are trying to find ways to stop phishing, particularly by identifying phishing websites. Phishers are also registering their own phishing domains as cybercrime grows. A phishing website is a copy of a legitimate website that deceives users with fake content in order to achieve the objectives of different types of attackers. Genuine government websites and financial websites are major targets for such imitation. Technological advances have made phishing websites and their operators more resilient and diverse than in the past, and phishing can lead to privacy violations and exploit flaws to launch ransomware attacks. As a result, this study examines the causes of phishing websites and how to spot them, exploring the various strategies for identifying them.
The research is all the more important because the number of harmful websites is growing, and so is their negative influence on users. This research aims to make it possible to identify suspicious websites before they contribute further to online crime. Detecting phishing websites also helps protect against other cyber-attacks linked to them, and it can shield both corporate and private data from phishing attacks.

1.4 Finding Solutions

There are several basic ways to detect phishing websites. By applying the following fundamental checks before trusting a website, users can avoid many phishing websites.

Ensure that the URL is legitimate
To recognize phishing websites, users should check the legitimacy of the web address. HTTPS:// web addresses provide more security than HTTP:// ones, and websites that use SSL encryption generally carry less risk, so the URL itself can help identify a phishing website. However, modern attackers also host phishing websites over HTTPS://, so a secure connection alone does not prove that a site is legitimate.

Verify the website's content quality
Standard, well-known websites usually contain well-written, high-quality material that is free of spelling, grammar, and punctuation errors. Phishers sometimes copy the entire content of a legitimate website identically; in such cases it is important to examine the quality of the visual media, because fake websites may use lower-resolution images.

Discover any missing content
To identify fake websites, look at the "Contact us" page, which is typically empty on fake websites.
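Parts of the URL check described above can be automated. The Java sketch below is illustrative only: it assumes a handful of widely used URL warning signs (a non-HTTPS scheme, a raw IP address as the host, an "@" symbol, an unusually long URL, many subdomains) rather than this study's actual rules, and the class name `UrlChecks` and both thresholds are hypothetical. As noted above, HTTPS alone does not prove legitimacy, so an empty result means only that none of these checks fired.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical URL warning checks; the rules and thresholds are illustrative assumptions.
class UrlChecks {
    // Host written as a dotted IPv4 address, e.g. 192.168.10.5
    private static final Pattern IPV4 = Pattern.compile("^\\d{1,3}(\\.\\d{1,3}){3}$");
    private static final int MAX_LENGTH = 75;   // assumed length threshold
    private static final int MAX_HOST_DOTS = 3; // assumed subdomain threshold

    /** Returns human-readable warnings; an empty list means none of these checks fired. */
    static List<String> warnings(String url) {
        List<String> found = new ArrayList<>();
        URI uri = URI.create(url); // throws IllegalArgumentException on malformed input
        String host = uri.getHost() == null ? "" : uri.getHost();

        if (!"https".equalsIgnoreCase(uri.getScheme())) {
            found.add("not served over HTTPS");
        }
        if (IPV4.matcher(host).matches()) {
            found.add("host is a raw IP address");
        }
        if (url.contains("@")) {
            // In http://bank.example@evil.example/ the real host is the part after '@'.
            found.add("contains '@', which can hide the real host");
        }
        if (url.length() > MAX_LENGTH) {
            found.add("unusually long URL");
        }
        // Many dots in the host often mean a brand name buried in subdomains.
        long dots = host.chars().filter(c -> c == '.').count();
        if (dots > MAX_HOST_DOTS) {
            found.add("many subdomains");
        }
        return found;
    }

    public static void main(String[] args) {
        System.out.println(warnings("https://www.example.com/login"));
        System.out.println(warnings("http://192.168.10.5/paypal.com/update"));
    }
}
```

Run as is, it prints `[]` for the first URL and `[not served over HTTPS, host is a raw IP address]` for the second. Each rule only adds a warning, so the checks stay independent and new ones can be appended without changing the others.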
Requesting personal information
Pop-up windows may appear in the web browser requesting personal information such as contact details, a home address, an email address, or bank information. If a pop-up message requests personal information, clicking on it may be dangerous.

Does the website lack security?
When users try to access a webpage, a safety warning such as "This connection is not secure" may appear. In this situation it is critical to know other ways of spotting phishing websites. First, click the padlock icon at the left edge of the address bar; this shows information about the site's security certificate and its cookies. A cookie is a small piece of data in which information about the user is stored and sent back to the website. Cookies generally provide a better user experience, but phishers frequently abuse this data.

Make Up a Password
Enter a deliberately incorrect password when a dubious website asks for one. If the site logs you in as though the correct password had been entered, the webpage is fake, because a phishing page usually cannot check the password against the real account and simply accepts whatever is typed. This approach can help you avoid such social engineering attempts.

Verify the Payment Technique
Be cautious if a webpage requests a direct cash deposit instead of prepaid debit cards, credit cards, or other payment methods such as PayPal. This may mean that no bank has authorized credit card processing under the website's name, and that the site may be engaged in illicit activity.

1.5 Anomalies across phishing websites are good indicators of phishing

Although phishing sites are typically cheap and simple to build, their webpages are often badly designed and coded, and they frequently fall short of established norms such as the World Wide Web Consortium (W3C) guidelines and Google's standards.
Their rank in Google's search index has been found to be extremely low or zero. In addition, phishing websites are extremely short-lived, with a median domain lifetime of three days, 31 minutes, and 8 seconds. Given such a short lifetime, it stands to reason that phishers prefer to spend their effort on more profitable activities rather than on improving the quality and appearance of their websites. These activities include sending large volumes of emails and webpages to potential victims, infecting victims' computers with viruses so that they can be used as proxy servers, and building a layered infrastructure of domains registered with many different registrars, so that traffic can be redirected to the remaining domains if most of them are taken down. Furthermore, phishing sites imitate legitimate websites and make false identity claims, which is hardly possible without introducing some anomalies. It is therefore possible to identify fraudulent activity from these anomalies, which motivates using the irregularities found in the URLs and DOM objects of websites to detect phishing.

1.6 Research Question

Phishing websites have become a serious problem on the Internet and in cyberspace, with many different harmful effects. These issues have drawn attention to phishing websites, so the purpose of the research is to identify and answer questions about them.

A consistent result of every phishing incident in history has been monetary loss. The first loss is direct: funds transferred by employees who were deceived by attackers. In addition, the cost of investigating the breach and compensating affected customers compounds the company's financial losses. In the event of a phishing attack, however, companies have more to fear than financial damage alone.
Losing customer information, trade secrets, research findings, and drafts is considered far more dangerous. For companies in the technology, pharmaceutical, or security sectors, a stolen patent can mean millions lost in research and development costs. Direct financial losses can be recovered fairly easily, but lost confidential business knowledge is much harder to replace.

Businesses often try to hide the phishing attacks they have experienced, mainly because of the reputational damage. Customers tend to buy from companies they believe to be trustworthy and reliable, and disclosing a breach not only damages the brand's reputation but also destroys that mutual trust. Regaining customers' trust is no easy task, and the value of a brand is directly related to the size of its customer base.

A company's reputation with investors can also suffer if a breach is made public. Because cyber security is vital throughout the project development process, investor confidence drops when a company suffers a data breach. A successful phishing attack that damages investor and consumer confidence at the same time can wipe out hundreds of millions of dollars of market capitalization.

1.7 Research Motivation

Phishing detection solutions are essential to prevent consumers from falling prey to internet scams, from giving their personally identifiable information to an attacker, and from other common uses of phishing as an attacker's tactic.
However, many current phishing detection technologies, especially those that depend on an established blacklist, suffer from flaws such as poor detection precision and high false positive rates. These issues are frequently caused by a delay in updating the blacklist, which depends on human verification of each classification, or, more rarely, by human classification mistakes that lead to incorrect labels. These significant hurdles have inspired many academics to create techniques that improve the effectiveness of phishing detection mechanisms and reduce the false positive rate. Because attackers' activity is sporadic and URL spoofing trends evolve continually, the detection model needs frequent updating, and an effective way of controlling retraining is required so that a machine learning algorithm can react to shifts in phishing patterns. The objective of this project is to recognize phishing scams by investigating improved detection methods and building a collection of classifiers.

1.8 Research Aim and Objectives

There are several reasons to investigate phishing website detection. With current advances in technology, using the internet and visiting websites has become essential, and the number of crimes committed online is increasing. Phishing websites are a type of cybercrime that becomes more prevalent every day; they pose a variety of risks to users, have many harmful consequences, and have become a serious problem on the Internet and in cyberspace. These issues have drawn attention to phishing websites.
Therefore, the aim of the investigation is to identify and answer questions about phishing websites. The aims and objectives of the research were developed with reference to current cases involving phishing websites. The main aim is to find a suitable way to detect phishing websites and thereby reduce their impact on online platforms.

1.9 Heuristics Methodology for the Scenario

Such techniques, although simple, can be used to determine whether a webpage is legitimate or a phishing scam. Unlike blacklist techniques, heuristic-based solutions can keep detecting phishing websites as new ones appear. The success of heuristic-based procedures, also known as feature-based techniques, depends on choosing discriminative features that help classify the type of website.

Rao R.S. and Ali S.T. [15] developed a desktop program named Phish Shield that focuses on the URLs and web content of phishing sites. Phish Shield takes a URL as input and indicates whether it is a legitimate website or a phishing website. The criteria used to spot phishing are footer links with null values, zero hyperlinks in the HTML body, copyright content, title content, and website identity. The software is faster than the visual assessment methods currently used against phishing, and it can detect zero-hour phishing attacks that blacklists are unable to detect.

To overcome the obstacles mentioned above, a heuristic method has also been presented that uses a twin support vector machine (TWSVM) classifier to detect malicious phishing web pages, including phishing websites hosted on compromised servers. This method compares the login page and the home page of the visited website to identify phishing sites hosted on compromised servers. Link-based and URL-based attributes are used to identify malicious fraud patterns.
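The link-based attributes mentioned in this section (null links, zero hyperlinks in the HTML body, and a website identity derived from links) can be approximated from the page source. The following Java sketch assumes hypothetical definitions: a "null" link is an empty href, `#`, or `javascript:void(0)`, and the "identity" is the host that most resolved links point to. It uses a regular expression for brevity, although a real tool would use an HTML parser. The class and method names are illustrative and are not taken from Phish Shield.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical link-based features; the regex only handles quoted href values.
class LinkFeatures {
    private static final Pattern HREF = Pattern.compile(
            "<a\\s[^>]*?href\\s*=\\s*[\"']([^\"']*)[\"']", Pattern.CASE_INSENSITIVE);

    /** A link that goes nowhere: empty, "#", or javascript:void(...). */
    private static boolean isNull(String href) {
        String h = href.trim().toLowerCase();
        return h.isEmpty() || h.equals("#") || h.startsWith("javascript:void");
    }

    /** Number of anchors with an href; zero links in the body is itself suspicious. */
    static int totalLinks(String html) {
        int count = 0;
        Matcher m = HREF.matcher(html);
        while (m.find()) {
            count++;
        }
        return count;
    }

    /** Number of anchors whose href is a null link. */
    static int nullLinks(String html) {
        int count = 0;
        Matcher m = HREF.matcher(html);
        while (m.find()) {
            if (isNull(m.group(1))) {
                count++;
            }
        }
        return count;
    }

    /** Host that most non-null links resolve to, or null if there are none. */
    static String mostFrequentHost(String pageUrl, String html) {
        URI base = URI.create(pageUrl);
        Map<String, Integer> counts = new HashMap<>();
        Matcher m = HREF.matcher(html);
        while (m.find()) {
            String href = m.group(1).trim();
            if (isNull(href)) {
                continue; // null links carry no identity information
            }
            try {
                // Relative links resolve to the page's own host.
                String host = base.resolve(href).getHost();
                if (host != null) {
                    counts.merge(host.toLowerCase(), 1, Integer::sum);
                }
            } catch (IllegalArgumentException e) {
                // skip hrefs that are not valid URIs
            }
        }
        String best = null;
        int bestCount = 0;
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() > bestCount) {
                best = e.getKey();
                bestCount = e.getValue();
            }
        }
        return best;
    }

    /** True when most links point to a host other than the page's own (a possible clone). */
    static boolean identityMismatch(String pageUrl, String html) {
        String linked = mostFrequentHost(pageUrl, html);
        String own = URI.create(pageUrl).getHost();
        return linked != null && own != null && !linked.equalsIgnoreCase(own);
    }
}
```

For a cloned login page hosted at `http://bank-login.example.net/verify` whose links mostly point to `www.bank.example`, `identityMismatch` returns `true`. Resolving relative links against the page URL keeps a legitimate page that mostly uses relative links from being flagged.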
Several variants of the support vector machine (SVM) are used to classify phishing web pages, and the twin support vector machine (TWSVM) classifier was found to outperform the other variants.

Another proposed approach combines feature selection with a meta-heuristic-based nonlinear regression algorithm to detect phishing webpages. To validate the approach, the researchers used a dataset of legitimate and phishing sites described by 20 features. To select the most suitable features, the work used two feature selection methods, decision trees and a wrapper method, and the latter raised the overall detection accuracy to 96.32%. Two algorithms, support vector machine (SVM) and harmony search (HS) combined with nonlinear regression, were then used to predict and classify phishing sites: the sites were classified with the nonlinear regression technique, and the HS method was used to determine the parameters of the proposed regression model. The proposed HS algorithm generates new harmonies using a dynamic pitch adjustment rate, and the analysis shows that HS-based nonlinear regression performs better than SVM.

A search-engine-based method has also been described that accurately detects phishing pages regardless of the textual language used on them. To determine whether a suspicious URL is legitimate, this strategy uses a simple, fast, accurate, and language-independent search engine lookup.
Because some newly created legitimate sites may not yet appear in the search index, the authors integrated 5 heuristics (source-code-based filtering, input tag verification, null and broken links, anchors, and fake login forms) with the search-engine-based tool to further improve detection accuracy. The approach can also correctly classify newly created legitimate websites that purely search-engine-based techniques fail to classify.

1.10 Rich picture of the proposed solution

This section outlines the architecture underlying the proposed solution, which combines a blacklist with several heuristic criteria to check the legitimacy of URLs. Google Safe Browsing, accessed through Google API services, is used as the blacklist because Google regularly updates and maintains it. The solution consists of five modules, which act as five levels of detection. When a URL is entered, Phish Saver shows whether the website is phishing, legitimate, or unknown. It identifies the website by the domain that occurs most frequently among the page's HTML links. The five modules of the software and their use are as follows.

Making use of blacklists
This first level of detection checks the URL's domain against a list of known websites to establish its legitimacy against the blacklist. The Google Safe Browsing blacklist is used because it is a reliable and frequently updated list of banned websites; specifically, the tool uses Google Safe Browsing API version 4. A URL can be compared with this list in two ways: by querying the service online, or by checking the URL locally against a downloaded copy of the list. This tool checks online, so an internet connection is required for this lookup.
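The online lookup described above can be sketched with the Safe Browsing API v4 `threatMatches:find` method and the JDK's `java.net.http` client (Java 11+). This is an assumption-laden sketch rather than Phish Saver's code: the client id, the `SAFE_BROWSING_API_KEY` environment variable, and the chosen threat types are placeholders, and error handling is minimal. The API answers with an empty JSON object when the URL is not listed and with a `matches` array when it is.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch of a Safe Browsing v4 Lookup API call; client id and key variable are placeholders.
class SafeBrowsingCheck {
    private static final String ENDPOINT =
            "https://safebrowsing.googleapis.com/v4/threatMatches:find?key=";

    /** Escapes quotes and backslashes; assumes the URL has no control characters. */
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\');
            }
            sb.append(c);
        }
        return sb.toString();
    }

    /** Builds the JSON body expected by threatMatches:find for a single URL. */
    static String requestBody(String clientId, String url) {
        return "{\"client\":{\"clientId\":\"" + jsonEscape(clientId) + "\",\"clientVersion\":\"1.0\"},"
                + "\"threatInfo\":{"
                + "\"threatTypes\":[\"MALWARE\",\"SOCIAL_ENGINEERING\",\"UNWANTED_SOFTWARE\"],"
                + "\"platformTypes\":[\"ANY_PLATFORM\"],"
                + "\"threatEntryTypes\":[\"URL\"],"
                + "\"threatEntries\":[{\"url\":\"" + jsonEscape(url) + "\"}]}}";
    }

    /** An empty object ({}) means no match; a "matches" array means the URL is listed. */
    static boolean isListed(String responseBody) {
        return responseBody.contains("\"matches\"");
    }

    /** Performs the online lookup; needs network access and a valid API key. */
    static boolean check(String apiKey, String url) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create(ENDPOINT + apiKey))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(requestBody("phish-saver-sketch", url)))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IllegalStateException("Safe Browsing returned HTTP " + response.statusCode());
        }
        return isListed(response.body());
    }

    public static void main(String[] args) throws Exception {
        String key = System.getenv("SAFE_BROWSING_API_KEY"); // hypothetical variable name
        if (key == null) {
            // Without a key, just show the request that would be sent.
            System.out.println(requestBody("phish-saver-sketch", "http://example.com/login"));
            return;
        }
        System.out.println(check(key, "http://example.com/login") ? "listed (unsafe)" : "not listed");
    }
}
```

A plain `contains` check is enough to tell the two documented response shapes apart; a production version would parse the JSON with a library and could batch several URLs into one `threatEntries` array.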
If the lookup finds a match, the website is identified as a phishing site and the procedure ends; otherwise, the algorithm moves on to the next module. Before continuing, the web page is parsed and stored as a DOM (Document Object Model) object.

1.11 Project scope

The proposed research on detecting phishing websites has benefits across various sectors, and the whole project scope concerns detecting phishing websites. The main beneficiaries are internet users: detecting phishing web pages helps users avoid falling victim to the many websites that operate as phishing sites, through which they may lose sensitive data, valuable information, and bank details. For these reasons, ordinary users stand to gain the most from the project.

Companies and organizational leaders can also benefit from this research, since it can help protect their businesses and organizations from phishing websites. Many companies suffer losses because of phishing attempts, often through lower-level employees who lack sufficient knowledge of phishing and social engineering. If this research succeeds, business leaders and organizational authorities can better protect their work.

There are several reasons to investigate phishing website detection. Using the internet and accessing websites has become necessary due to recent technological advances, and as a result more crimes are being committed online. Phishing websites are another form of cybercrime that grows every day, and users who visit them face several hazards. Phishing websites can have serious harmful effects.
Phishing detection technologies play a crucial part in keeping online activity secure, by preventing users from falling prey to online fraud, handing personal details to scammers, and suffering the other harms that phishing enables as an attacker's weapon. However, many current phishing detection technologies, especially those that depend on an existing blacklist, have flaws, including poor detection accuracy and high false positive rates. These issues are frequently caused by delays in updating the blacklist, which relies on human confirmation of each classification, or, more rarely, by human classification mistakes that lead to incorrect labels. These significant hurdles have inspired numerous researchers to create techniques that improve detection.

The prevalence of phishing websites on the Internet has increased significantly, and phishing websites have many distinct negative outcomes. People often visit phishing sites in response to apparent problems and demands. The purpose of this study is therefore to find phishing websites and provide information about them. Recent instances of phishing websites are used to determine the aims and objectives of the research, whose primary goal is to find an appropriate method of identifying phishing websites while lessening the impact on online platforms.

Chapter 2 Literature Review
2.1 Chapter overview
Phishing websites are a significant issue in the online world, and several research organizations are therefore studying how to identify them. Various contemporary methods exist for this purpose, and most researchers have built their solutions using machine learning and other algorithms. This chapter discusses the existing systems, frameworks, and designs.
Before discussing a methodology for detecting phishing websites, it is worth studying the existing ones. Some existing systems, frameworks, and designs have several disadvantages when applied in practice, and this chapter documents reviews of them.

2.2 Conceptual taxonomy of the literature organization
(Wenyin, 2005) Wenyin proposed a novel method for detecting phishing sites by visual similarity. The method uses visual cues to divide web pages into salient blocks, then compares two sites using three measures: block-level similarity, layout similarity, and overall style similarity. If any of these similarities to the genuine website exceeds a threshold, the page is flagged as suspected phishing. The evaluation used a test database of 328 suspicious websites; queries built from the six genuine websites being targeted retrieved the eight phishing websites in the test data. The results indicate that the method can identify phishing websites with very few false positives, and its performance is sufficient for practical use. The authors suggest that the method could form part of a corporate anti-phishing solution and could also be used by website owners to identify phishing websites. The strategy is not limited to this type of phishing attack: it could be used to identify other malicious activity, such as fake websites that closely imitate a company or person. The researchers also believe that the similarity measures proposed in the study could be applied in other domains, and they plan further research to investigate these possibilities.
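The decision rule in this approach can be sketched as follows. How the block-level, layout, and overall style similarities are computed is not reproduced here; the sketch assumes each score has already been normalized to [0, 1], and the class name and threshold parameter are illustrative rather than taken from the paper.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class VisualSimilarityCheck {
    // The three similarity measures between a suspect page and one genuine page,
    // each assumed to be normalised to [0, 1]. Computing them is out of scope here.
    static final class Scores {
        final double blockLevel;
        final double layout;
        final double overallStyle;

        Scores(double blockLevel, double layout, double overallStyle) {
            this.blockLevel = blockLevel;
            this.layout = layout;
            this.overallStyle = overallStyle;
        }
    }

    // A page is suspicious if ANY one of the three similarities exceeds the threshold.
    static boolean exceeds(Scores s, double threshold) {
        return s.blockLevel > threshold || s.layout > threshold || s.overallStyle > threshold;
    }

    // Returns the genuine sites that the suspect page resembles too closely.
    static List<String> suspectedTargets(Map<String, Scores> scoresByGenuineSite, double threshold) {
        List<String> targets = new ArrayList<>();
        for (Map.Entry<String, Scores> entry : scoresByGenuineSite.entrySet()) {
            if (exceeds(entry.getValue(), threshold)) {
                targets.add(entry.getKey());
            }
        }
        return targets;
    }
}
```

Returning the matched genuine sites, rather than a bare yes/no, also tells the analyst which brand the suspect page appears to imitate.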
(Kalaharsha, 2016) Kalaharsha proposed phishing website detection techniques based on machine learning classifiers with a wrapper feature selection approach to address these issues. The classification algorithms applied are artificial neural networks, random forests, and support vector machines. Dynamic features are gathered from the provided URL, and the trained model is then applied to identify phishing URLs. The first step is to obtain the raw dataset from the UCI and Kaggle repositories. The raw data is then pre-processed to make it more dependable and useful for the machine learning algorithms to learn from: data cleansing removes noisy, missing, or incorrect records that would make the algorithms' work more difficult, and better-managed data makes it easier for them to produce good results. ML models are then built with the three algorithms, each of which has its own benefits. Each model outputs a prediction of whether the given data shows traits of a legitimate website or a phishing website, and the most suitable algorithm is selected as the final model by evaluating these outputs. Dynamic feature extraction is applied to every URL newly entered by the user, so that the model classifies it from freshly recorded data, which improves reliability and effectiveness. In short, software that automates this procedure must be able to determine whether the URL the user is trying to reach is legitimate or phishing.

(Ubing, 2019) Ubing proposed another approach to phishing website detection.
From the 30 attributes of the initial dataset, the method selects those that have a significant impact on the prediction; apart from these few features, the remaining irrelevant variables have no effect on the model or on the reliability of its predictions. Several supervised learning techniques are then used to build prediction models, and multiple classifiers are combined so that the outcome does not depend on any single model. The outputs of all models are tabulated and a plurality vote is taken: the ensemble's final prediction labels a webpage as phishing if the majority of the models say it is. Because the final prediction follows the majority, the study improves reliability by combining feature selection with an ensemble-learning prediction model, and the most significant findings across all models are then reviewed. The authors verified accuracy by benchmarking several learning models in Azure Machine Learning Studio.

The study also summarizes a test run that starts from a list of 177 features, of which 38 are content-based and the remaining features are URL-based. Most content features are derived from the page's HTML and include both internal and external links. Other features include the number of IFRAME tags, blacklist and search-engine checks on whether the IFRAME source URLs exist, inspection of login forms, and how content is submitted to the host (for example, whether TLS is used and whether the password is sent with the GET or POST method).
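The plurality vote described above might look like this in Java. The models are represented as functions from a feature vector to a label so that any trained classifier could be plugged in; the class name and the rule that a tie counts as legitimate are assumptions for illustration.

```java
import java.util.List;
import java.util.function.Function;

// Plurality-vote ensemble over binary phishing classifiers.
class MajorityVoteEnsemble {
    enum Label { LEGITIMATE, PHISHING }

    private final List<Function<double[], Label>> models;

    MajorityVoteEnsemble(List<Function<double[], Label>> models) {
        if (models.isEmpty()) {
            throw new IllegalArgumentException("at least one model is required");
        }
        this.models = List.copyOf(models);
    }

    // Every model votes on the same feature vector; PHISHING needs a strict majority,
    // so a tie (only possible with an even number of models) falls back to LEGITIMATE.
    Label predict(double[] features) {
        int phishingVotes = 0;
        for (Function<double[], Label> model : models) {
            if (model.apply(features) == Label.PHISHING) {
                phishingVotes++;
            }
        }
        return 2 * phishingVotes > models.size() ? Label.PHISHING : Label.LEGITIMATE;
    }
}
```

In the studies reviewed here the voters would be trained classifiers such as a random forest, an SVM, and a decision tree; with three voters a tie cannot occur.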
(Aljofey, 2018) Aljofey addressed phishing attacks, which present a significant problem for researchers, particularly as they have been rising recently. Blacklisting and whitelisting are the conventional means of reducing such hazards, but these techniques fail to identify phishing sites that have not yet been listed (zero-day attacks). Machine learning methods are employed to improve detection and reduce the rate of misclassification. Nevertheless, some of these approaches depend on features obtained from third-party services, search engines, website traffic data, and similar sources, which are complex and difficult to use. In this paper, the authors present a fast and simple machine-learning-based method that recognizes phishing URLs accurately using only each webpage's URL and HTML. The method is a complete client-side solution with no reliance on external resources. It uses hyperlink features and URL attributes to capture automatically how a webpage's content and its URL relate to each other, and it additionally extracts character-level TF-IDF features from the noisy and plain-text parts of the page's HTML. Several classifiers are used, and a large dataset was built to evaluate how well the detection method works; the effectiveness of each subset of the proposed feature set is also assessed. Experimental results from the classifiers show that the XGBoost classifier, combining all types of features, performs best. On the authors' own dataset it achieved an overall accuracy of 96.76% with a false negative rate of 1.39%, and on a benchmark dataset it achieved 98.48% accuracy with a false positive rate of 2.09%.
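Character-level TF-IDF, one of the feature types mentioned above, can be illustrated with a small sketch. It uses the unsmoothed textbook weighting (term frequency multiplied by ln(N/df)) over overlapping character n-grams; the n-gram range, smoothing, and normalization used by Aljofey may differ, and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CharTfIdf {
    // Counts overlapping character n-grams in one text.
    static Map<String, Integer> ngramCounts(String text, int n) {
        Map<String, Integer> counts = new HashMap<>();
        for (int i = 0; i + n <= text.length(); i++) {
            counts.merge(text.substring(i, i + n), 1, Integer::sum);
        }
        return counts;
    }

    // Returns one TF-IDF weight map per document, using
    // tf = count / (n-grams in the document) and idf = ln(N / df), with no smoothing.
    static List<Map<String, Double>> tfIdf(List<String> docs, int n) {
        List<Map<String, Integer>> perDoc = new ArrayList<>();
        Map<String, Integer> docFreq = new HashMap<>();
        for (String doc : docs) {
            Map<String, Integer> counts = ngramCounts(doc.toLowerCase(), n);
            perDoc.add(counts);
            for (String gram : counts.keySet()) {
                docFreq.merge(gram, 1, Integer::sum);
            }
        }
        List<Map<String, Double>> weights = new ArrayList<>();
        for (Map<String, Integer> counts : perDoc) {
            int total = 0;
            for (int c : counts.values()) {
                total += c;
            }
            Map<String, Double> w = new HashMap<>();
            for (Map.Entry<String, Integer> e : counts.entrySet()) {
                double tf = (double) e.getValue() / total;
                double idf = Math.log((double) docs.size() / docFreq.get(e.getKey()));
                w.put(e.getKey(), tf * idf);
            }
            weights.add(w);
        }
        return weights;
    }
}
```

An n-gram that appears in every document (such as "log" in both "login" and "logout") receives weight zero, so the weights emphasize the fragments that distinguish one page's text from the rest.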
(Zhang, 2021) Zhang presents a phishing website detection system based on a CNN-BiLSTM model, which may address problems with current phishing-URL detection methods, such as manual or insufficient feature extraction and the inability to recognize new phishing webpages. To detect new phishing URLs, it uses the hybrid neural network to generate URL features automatically rather than relying on hand-crafted ones. The approach first splits the URL into words using sensitive-keyword segmentation, transforms the words into a vector matrix, extracts local features with the CNN, and captures long-distance dependency features with the BiLSTM. The resulting multi-level features are fed into a fully connected layer and classified with a softmax activation function. The results show that the proposed CNN-BiLSTM method performs well in accuracy, precision, and F1 score compared with character-level CNN, word-level CNN, and several other malicious-URL detection techniques. Future work will use a generative adversarial network to construct phishing URLs as input in order to assess the robustness of the framework. In summary, building on previous research, the mechanism combines a CNN with a bidirectional long short-term memory network (Bi-LSTM): the URL is segmented on sensitive words and transformed into a feature-vector matrix, and the Bi-LSTM, added on top of the convolutional model, captures long-distance dependencies in the URL. Experimental findings show that this approach achieves high F1 scores, recall, and accuracy.

(Marchal, 2019) Marchal examined how phishers might lower the likelihood of being detected by their algorithm.
The flexibility of DNS allows phishers to change the server hosting phishing content while keeping the same link, so relying on IP addresses instead of domain names takes away that ability. In addition, IP blacklisting is commonly used to block access to illegal content, which would cause phishers further problems. Another alternative strategy is to limit the amount of data on a page by using fewer external links, avoiding loading other material, and using shorter URLs [30]. The authors applied a few of these methods individually to the web pages of the two phishing data sources included in the evaluation. This had no impact on the classifier's effectiveness: even though certain features could no longer be computed, those based on the title, the starting URL, and the logged links still allowed accurate phishing identification. Applying several evasion methods could, however, compromise the classifier's performance. At the same time, such tricks would also reduce the phishing webpage's effectiveness and lower the number of victims.

One way to limit the textual content of a page is to present it as images. Although other aspects of the approach can still be used to identify such sites, recovering the text would make identification easier; one way to do so is to use OCR to extract the text from a screenshot of the webpage. Another potential evasion method is to use misspelled terms in the various data sources that are evaluated. The term-distribution measure would indicate no similarity when terms such as Paypal, paypaI, or paipal, which are distinct yet visually similar, appear across these sources, so the classifier would probably conclude that the website is legitimate. However, the true target would still become clear from any references to it, and the misspellings might themselves serve as clues to victims.
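The look-alike terms in this example (Paypal, paypaI, paipal) defeat exact term matching but are easy to catch with an edit-distance comparison after folding a few confusable characters. The sketch below illustrates that idea; it is not part of Marchal's method, and the confusable-character table is a small illustrative assumption rather than a complete homoglyph list.

```java
// Flags terms that imitate a brand name through look-alike characters or small typos.
class LookalikeTerms {
    // Maps a few confusable characters to the letter they imitate, then lower-cases.
    // Note: a genuine upper-case 'I' is also folded to 'l'; acceptable for a sketch.
    static String fold(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (char c : s.toCharArray()) {
            switch (c) {
                case 'I':
                case '1':
                    sb.append('l');
                    break;
                case '0':
                    sb.append('o');
                    break;
                default:
                    sb.append(Character.toLowerCase(c));
            }
        }
        return sb.toString();
    }

    // Levenshtein distance computed with two rolling rows.
    static int levenshtein(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] cur = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            cur[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                cur[j] = Math.min(Math.min(cur[j - 1] + 1, prev[j] + 1), prev[j - 1] + cost);
            }
            int[] tmp = prev;
            prev = cur;
            cur = tmp;
        }
        return prev[b.length()];
    }

    // True if the term is not the brand itself (ignoring case)
    // but lies within maxDistance edits of it after folding.
    static boolean imitates(String term, String brand, int maxDistance) {
        if (term.equalsIgnoreCase(brand)) {
            return false;
        }
        return levenshtein(fold(term), fold(brand)) <= maxDistance;
    }
}
```

With a maximum distance of 1, both "paypaI" (a capital I standing in for l) and "paipal" (one substituted letter) are reported as imitations of "paypal", while "PayPal" is accepted as the genuine name.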
Instead of disguising references to the target in this way, an alternative evasion tactic is to place the lures in the message that contains the hyperlink to the fake webpage. This has two major drawbacks, however: first, it makes the phishing page seem less trustworthy, and second, it exposes the phisher to detection methods that analyze content other than webpages.

(Abusaimeh, 2021) Abusaimeh examined the problem of phishing websites by combining multiple detectors (Random Forest, Decision Tree, and Support Vector Machine), and also applied each one individually for comparison with the combined framework. The proposal was implemented and evaluated on a dataset. The findings revealed that although the three methods produced different outcomes, each was less accurate than the proposed approach at identifying phishing sites overall. Classifiers were used for categorization in this study, and the predictor was Manuel. Results on an ARFF (Attribute-Relation File Format) dataset showed that the proposed model improves accuracy over the individual detectors by about 1.2. The phishing-site identification accuracy of the combined approach and of each method on its own were compared: the proposed model outperformed the decision tree classifier by 2.584%, the SVM model by 3.0996%, and the random forest model by 1.2%. The proposed methodology therefore proved quite successful at identifying phishing websites. Across the individual results, the proposed model had the best average accuracy (98.5256%), followed by random forest (97%), support vector machine (95%), and decision tree (95%).
The three classifiers were thus used to validate the proposed framework. The research also illustrated the drawbacks of relying on URL-based features to discover phishing websites: URL length is one example of a feature that currently helps identify phishing sites accurately but may stop doing so in the future. The approach could also be quite effective against sophisticated phishing designed to deceive experienced users.

(Basnet, 2021) Basnet proposed classifying phishing websites using features derived from modern browsers, reputation, and key-phrase frequency. The experiments showed that the proposed features are highly significant for the automated identification and classification of phishing URLs. The technique was assessed by comparing its results with those of many well-known supervised learning methods. According to the experimental results, the anti-phishing system identified phishing URLs with an accuracy greater than 99.4%, while keeping the false positive and false negative rates to 0.5%. The authors demonstrated that their initial prototype, once trained, could quickly and accurately determine whether a given URL was phishing. Except for the Naïve Bayes classifier, most classifiers showed broadly comparable performance, and the Random Forest (RF) classifier offered the best balance between classification performance and training and testing time for their problem. In most experiments, RF clearly outperformed all other classifiers. The authors also showed that choosing a relevant training model and continually updating it with new data are essential for adapting to the constantly changing stream of URLs and their features.

(Shareef, 2020) In this research, Shareef combines three detectors to address online phishing.
These are Decision Tree, SVM, and Random Forest, each also evaluated individually. The three detectors produced clearly different results, but each was less accurate than the combined model when applied to the phishing problem. The proposal was implemented and evaluated, with classification performed by the SVM multiclass classifier. Experiments on the ARFF dataset showed an accuracy improvement of roughly 1.2 over the individual detector: the Random Forest detector is 1.2% less accurate than the three-level model, the model's accuracy is 3.0996% higher than SVM alone, and it is 2.584% higher than the Decision Tree alone. The combined model is therefore very good at identifying phishing websites. The individual accuracies of Random Forest, SVM, and Decision Tree were 97.25%, 95.35%, and 95.87% respectively, while the ensemble achieved the highest accuracy of the group (98.52%). By exploiting the diversity of the three detectors, the ensemble has established its reliability. The study also emphasized the drawbacks of URL features such as URL length, which currently seem to improve accuracy but might not do so for long. Feature extraction and classification are very fast, demonstrating that the method can operate in real time, and it is likely to be effective against contemporary techniques such as sophisticated phishing aimed at highly experienced users.

2.3 Existing Systems / Frameworks / Designs
Phishing websites are a serious problem across cyberspace, and several research groups are therefore studying how to detect them.
There are different types of current solutions for detecting phishing websites, and most researchers have built theirs using machine learning and other algorithms. The approach in [1] uses visual cues to split web pages into relevant components and then compares the visual similarity of two sites using three criteria: block-level similarity, layout similarity, and overall style similarity. When any of these similarities to the genuine website exceeds a certain threshold, the website is flagged as potentially phishing. The system suggested in [3] addresses these challenges with machine learning classifiers combined with a wrapper feature selection approach. The classification techniques used include random forests, support vector machines, and artificial neural networks; dynamic features are collected from the given URL, after which the trained model identifies phishing URLs.

The solution in [4] is summarized through a trial run. The authors begin with a list of 177 features, of which 38 are content-based and the remaining features are URL-based. Most content features are derived from the page's HTML and include both internal and external links. Other features include the number of IFRAME tags, blacklist and search-engine checks on whether the IFRAME source URLs exist, inspection of the login pages, and the process used to send content to the host. The solution in [5] can also be considered an existing system.
It uses machine learning techniques to improve classification accuracy and limit false positives. Some existing approaches, however, depend on features from third-party suppliers, search engines, website traffic data, and similar sources, which are complicated to use. In contrast, [5] offers a quick and simple machine-learning-based solution that identifies fraudulent URLs accurately from each webpage's URL and HTML. The approach is a client-side-only option that does not rely on outside sources, and it uses hyperlink features and URL attributes to determine how a page's URL and content relate.

In [6], researchers describe a phishing website detection system based on a CNN-BiLSTM model that may address difficulties with current phishing-URL detection methods, such as manual or insufficient feature extraction and the inability to recognize new phishing webpages. It uses the hybrid neural network to construct URL features automatically so that new phishing URLs can be found. The method begins by parsing the words in the URL using sensitive-keyword segmentation.

[7] describes a phishing detection system and studies how it might be evaded. The research shows that identification based on the title, starting URL, and logged links can still succeed when some features cannot be computed. Applying various evasion techniques could impair the classifier's performance, although such tricks would also reduce the effectiveness of phishing websites and the number of victims. Text content on a page can be restricted by presenting it as images; one solution is to use OCR to extract the text from a screenshot of the webpage.
A final potential evasion strategy is to use misspelled terms across the various data sources that are evaluated.

The authors of [8] combined several detection techniques to investigate the problem of phishing websites, and also applied each technique separately for comparison with the combined framework. The proposal was implemented and evaluated on a dataset. The results showed that although each of the three techniques produced distinct results, all were less reliable than the proposed strategy for identifying phishing sites in general. In this work, categorization was carried out using classifiers, and Manuel served as the predictor.

In [9], researchers use reputation-based, key-phrase-frequency, and browser-derived features to classify phishing websites. The experiments revealed that the proposed features are crucial for the automated detection and classification of phishing URLs. The authors evaluated their strategy by comparing its results with those of many widely used supervised learning techniques. The proposed anti-phishing system detected phishing URLs with an accuracy above 99.4%, while limiting the false positive and false negative rates to 0.5% each. The authors showed that, once trained, their original model can rapidly and reliably assess whether a given URL is phishing.

In [10], three detectors (Random Forest, SVM, and Decision Tree) are combined to examine the problem of online phishing. The three detectors produced noticeably different responses, yet each was less accurate than the combined model when applied to the phishing problem. The approach was implemented and evaluated, and the SVM multiclass classifier is used in this work to categorize the data.
Experiments on the ARFF dataset showed an accuracy improvement of about 1.2 relative to the individual detector, and the three-level model was 1.2% more accurate than the Random Forest detector.

2.4 Technological Analysis
Phishing websites are a serious problem across cyberspace. There are different types of current solutions for detecting them, and most researchers have built their solutions using machine learning and other algorithms. One method compares a suspect site with the genuine site using block-level similarity, layout similarity, and overall style similarity; if any of these similarities exceeds a certain threshold, the website is flagged as potentially phishing. Other approaches detect phishing websites using machine learning classifiers with a wrapper feature selection approach, with random forests, support vector machines, and artificial neural networks as the classification techniques; dynamic features are collected from the given URL, after which the trained model identifies phishing URLs. The solution in [4] derives most of its content features from the page's HTML. To determine whether the IFRAME source URLs exist, it examines the number of IFRAME tags and checks blacklists and search engines; it also scrutinizes login pages and checks how content is sent to the host. Some existing approaches depend on features from third-party suppliers, search engines, web traffic data, and similar sources, which are complicated to use; another study instead offers a quick and simple machine-learning-based solution.
It identifies fraudulent URLs accurately from each webpage's URL and HTML, using machine learning to reduce the frequency of false positives.

In [6], researchers describe a phishing website detection system based on a CNN-BiLSTM model as a potential solution to problems with current phishing-URL detection methods, such as manual or insufficient feature extraction and the inability to recognize a large number of new phishing webpages. To find novel phishing URLs, the hybrid network constructs URL features automatically; the technique first segments the URL text on sensitive keywords and then parses it. Another study shows that phishing identification based on the title, starting URL, and logged links can still succeed under evasion, that text hidden in images can be recovered by applying OCR to a screenshot of the webpage, and that evasion tricks also reduce the effectiveness of phishing websites and the number of victims. In a further study, categorization was carried out using classifiers with Manuel as the predictor; although each of the three techniques produced distinct results, all were less reliable than the proposed combined strategy for identifying phishing sites in general. Other researchers use reputation-based, key-phrase-frequency, and browser-derived features to classify phishing websites. They showed that, once trained, their model can rapidly and reliably assess whether a given URL is phishing, detecting phishing URLs with an accuracy above 99.4% while limiting the false positive and false negative rates to 0.5%.
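The false positive and false negative rates quoted in these studies come from a confusion matrix in which phishing is the positive class. A minimal sketch of how such rates are computed (the class name is hypothetical):

```java
// Confusion-matrix metrics for a phishing detector, with "phishing" as the positive class.
class DetectionMetrics {
    final long truePositives;
    final long falsePositives;
    final long trueNegatives;
    final long falseNegatives;

    DetectionMetrics(long tp, long fp, long tn, long fn) {
        this.truePositives = tp;
        this.falsePositives = fp;
        this.trueNegatives = tn;
        this.falseNegatives = fn;
    }

    // Tallies the four outcomes from parallel arrays of true and predicted labels.
    static DetectionMetrics fromPredictions(boolean[] actualPhishing, boolean[] predictedPhishing) {
        if (actualPhishing.length != predictedPhishing.length) {
            throw new IllegalArgumentException("label arrays differ in length");
        }
        long tp = 0, fp = 0, tn = 0, fn = 0;
        for (int i = 0; i < actualPhishing.length; i++) {
            if (actualPhishing[i] && predictedPhishing[i]) tp++;
            else if (!actualPhishing[i] && predictedPhishing[i]) fp++;
            else if (!actualPhishing[i]) tn++;
            else fn++;
        }
        return new DetectionMetrics(tp, fp, tn, fn);
    }

    double accuracy() {
        return (double) (truePositives + trueNegatives)
                / (truePositives + falsePositives + trueNegatives + falseNegatives);
    }

    // Share of legitimate sites wrongly flagged as phishing (NaN if there are none).
    double falsePositiveRate() {
        return (double) falsePositives / (falsePositives + trueNegatives);
    }

    // Share of phishing sites wrongly passed as legitimate (NaN if there are none).
    double falseNegativeRate() {
        return (double) falseNegatives / (falseNegatives + truePositives);
    }
}
```

Reporting the two error rates separately matters because accuracy alone can hide them: if phishing pages are rare in a test set, a detector that misses most of them can still show very high accuracy while its false negative rate is large.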
Another study combines Random Forest, SVM, and Decision Tree to examine the problem of online phishing. Results on the ARFF dataset indicated an accuracy improvement of about 1.2% relative to the individual detector, with the three-level model 1.2% more accurate than the Random Forest detector.

2.5 Reflection
The following issues, identified in existing systems, need to be addressed to increase average accuracy.

1. Reduce false positives
Machine learning provides a model for the classification problem, but many classifiers have significant false positive rates: the domains are legitimate, yet the system identifies them as fraudulent websites, which prevents people from accessing those websites. If false positives are reduced, end users can access legitimate sites without trouble.

2. Eliminate false negatives
Classifiers also produce false negatives: the domains are fraudulent, but the models label them as genuine, which causes harm such as network infection and reputational damage.

3. Time required to analyze datasets
Datasets are crucial for supervised learning. A training set built from old data may not cover several phishing threat vectors, so the model might not predict accurately. The remedy is to use current, large datasets. With only a few data points the classifiers train quickly, so their training time on realistic data remains unknown; because modeling time changes with dataset size, it can only be measured accurately when such datasets are used.

4. Feature identification and application
A website can be characterized by a variety of aspects, including its URL, page content, resource activity, domain features, and source code.
Choosing which of these features to use in building a model that achieves better detection performance is challenging. Predictions may be imprecise when only a single feature is used for detection, whereas using many of a website's features provides more knowledge about the site, which aids detection.

5. Sensitive words
The presence of sensitive terms such as mail, bank, SMS, and similar words affects site prediction, since such terms can hint at a page's purpose.

6. Embedded objects
If a site contains embedded items such as iframes or Flash, a detection system that relies on the site's source code to make predictions may not be able to analyze them adequately.

Chapter 3 Methodology
3.1 Feasibility study
Online shopping and internet banking are popular ways to pay, and e-banking phishing is widespread. The system uses a heuristic algorithm to identify e-banking phishing websites: a phishing website for digital transactions can be identified from several key characteristics, including the URL, the domain identity, and security and encryption standards. In terms of financial feasibility, an e-commerce company could use such a system to secure every aspect of its transactions, which would improve the business's efficiency and revenue and thus provide financial advantages. This assessment covers the estimation and description of all anticipated benefits.

3.2 Operations Feasibility
The method is inexpensive, readily feasible, and dependable. These criteria were taken into account in planning and creating this work, and engineering and management activities were applied appropriately and on schedule throughout the program's design and development stages to achieve them.
3.3 Technology Feasibility
The design uses a Safe Browsing blacklist, a collection of URLs and descriptions of web pages that have been confirmed to host malware or phishing scams. The program requires a minimum set of hardware to run. The system was built in Java. An Internet connection is required for the application to perform online lookups.

3.4 Research Approach
Phishing identification using heuristics
Instead of relying on predefined lists, heuristic algorithms extract features from a web page to assess the site's legitimacy. Most of these features are taken from the target page's URL and its HTML document object model (DOM). The extracted attributes are compared with those gathered from legitimate and phishing websites to assess the site's legitimacy. Several of these methods use heuristics to compute a spoof score and authenticate a given URL.

The proposed architecture follows this approach: it combines a blacklist with a variety of heuristic criteria to confirm a URL's legitimacy. Because Google continuously updates and maintains its Safe Browsing blacklist, which is organized into five groups, Google API services are used for the blacklist. These five groups are treated as five detection stages. Phish Saver accepts a URL as input and displays the website's status as phishing, legitimate, or unidentified. It determines the site's identity from the domain that occurs most frequently among the page's HTML hyperlinks. The five modules of the program, and how they are used, are described below.

Blacklist usage
The first stage of identification checks the URL's domain against the blacklist to confirm its authenticity.
The Google Safe Browsing blacklist is used because it is a trustworthy, regularly updated list of banned websites, accessed through the Google Safe Browsing API version 4. The list can be consulted in two ways: by querying it online, or by downloading a local copy of the list and checking against it. Phish Saver queries it online, so an Internet connection is required. If the lookup finds a match, the process ends and the website is marked as phishing; otherwise, the algorithm moves on to the next module. Before continuing, the page is fetched and stored as a DOM (Document Object Model) object.

Login page detection
Phishers use phishing kits to build fake login forms and collect confidential data. It is widely accepted that Internet users commonly enter sensitive information on login pages, so restricting detection to pages that contain a login form is an effective filter. A page without a login form cannot be classified as a phishing site, because it gives the user no way to submit personal details. Phish Saver determines whether a login form is present by searching the page's HTML for an input element with type="password". If a password field is found, the analysis continues; otherwise it stops, because the user has no opportunity to enter sensitive data. This filtering lowers the application's error rate by avoiding phishing detection on ordinary pages without login fields.

Footer links leading to NULL
Null footer links are links with a missing or empty value, which therefore do not point to any other page.
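Both the login-page filter and the null-footer-link definition above can be checked directly against a page's HTML. The sketch below is a minimal, hypothetical illustration: it uses plain regular expressions instead of the JSoup DOM parsing the tool relies on, it assumes the footer region is marked up as a `<footer>` element, and the class and method names are invented for this example.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical sketch of two Phish Saver page checks. Regular expressions
 * stand in for DOM parsing, so unusual markup may be missed.
 */
final class PageHeuristics {

    // An <input> tag whose type attribute is "password" (quotes optional).
    private static final Pattern PASSWORD_INPUT = Pattern.compile(
            "<input\\b[^>]*\\btype\\s*=\\s*[\"']?password\\b[^>]*>",
            Pattern.CASE_INSENSITIVE);

    // Assumption: the footer region is marked up as a <footer> element.
    private static final Pattern FOOTER = Pattern.compile(
            "<footer\\b[^>]*>(.*?)</footer>",
            Pattern.CASE_INSENSITIVE | Pattern.DOTALL);

    // The quoted href value of each anchor tag.
    private static final Pattern ANCHOR_HREF = Pattern.compile(
            "<a\\b[^>]*\\bhref\\s*=\\s*[\"']([^\"']*)[\"']",
            Pattern.CASE_INSENSITIVE);

    private PageHeuristics() {
    }

    /** Login page filter: true if the HTML contains a password field. */
    static boolean hasPasswordField(String html) {
        return PASSWORD_INPUT.matcher(html).find();
    }

    /** A null link is empty or fragment-only, e.g. "#" or "#skipping". */
    static boolean isNullHref(String href) {
        String h = href.trim();
        return h.isEmpty() || h.startsWith("#");
    }

    /** True if any anchor inside a footer element links to null. */
    static boolean hasNullFooterLink(String html) {
        Matcher footer = FOOTER.matcher(html);
        while (footer.find()) {
            Matcher anchor = ANCHOR_HREF.matcher(footer.group(1));
            while (anchor.find()) {
                if (isNullHref(anchor.group(1))) {
                    return true;
                }
            }
        }
        return false;
    }
}
```

A page for which `hasPasswordField` returns false would end the analysis early, as described above. Null anchors outside the footer (such as a logo linking to `#`) are deliberately ignored, since only the footer region is scanned.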
A NULL anchor is an anchor tag whose link refers to a NULL value; such a hyperlink leads back to its own page. After the login check, identification reaches stage 3 of the heuristic, in which the page's footer links, especially those with null values, are examined. Phishers try to keep users on the login form, so they build pages with null footer links, which continually return users to the page containing the sign-up box. Consequently, many researchers have used the ratio of null links to all links to identify phishing sites. There is a catch, however: some legitimate websites also contain null links, such as a company logo linking to null. Yet none of the legitimate websites examined had null links in their footers. This finding led to heuristic factor H, which flags a page "if an anchor tag in the footer region links to null" or "if the anchor tag is empty", for example:

<a href="#">
<a href="#skipping">
<a href="#insight">

When this is the case, Phish Saver treats the URL as a phishing URL; otherwise, it moves on to the next stage of identification.

Using the title and copyright content
At this stage, the copyright section (in a <div> tag) and the <title> tag are extracted from the DOM to obtain domain-related data. This works because legitimate websites customarily include their domain name in the copyright notice and in the title. All copyright and title text is extracted and tokenized into terms, and each term is checked against the URL. A legitimate website can be identified by a match between the copyright text and the domain information.
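The title-and-copyright check can be sketched as tokenization followed by a substring test against the URL's host. This is an illustration under stated assumptions: the title and copyright text are assumed to be already extracted from the DOM; terms are split on non-letter characters and kept only if they have at least three characters (the rule given in the limitations section); the last host label (the TLD) is ignored so that a term such as "com" cannot match every site; and the small stop-word list is hypothetical.

```java
import java.net.URI;
import java.util.LinkedHashSet;
import java.util.Locale;
import java.util.Set;

/** Hypothetical sketch of the title and copyright stage. */
final class IdentityTextCheck {

    // Hypothetical stop words that occur in almost every copyright notice.
    private static final Set<String> STOP_WORDS =
            Set.of("all", "rights", "reserved", "copyright", "inc", "ltd");

    private IdentityTextCheck() {
    }

    /** Lower-case, split on non-letters, keep terms of 3+ characters. */
    static Set<String> tokenize(String text) {
        Set<String> terms = new LinkedHashSet<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("[^\\p{L}]+")) {
            if (t.length() >= 3 && !STOP_WORDS.contains(t)) {
                terms.add(t);
            }
        }
        return terms;
    }

    /** True if any title or copyright term occurs in the host (TLD excluded). */
    static boolean textMatchesDomain(String url, String title, String copyright) {
        String host = URI.create(url).getHost();
        if (host == null) {
            return false;
        }
        host = host.toLowerCase(Locale.ROOT);
        int lastDot = host.lastIndexOf('.');
        String withoutTld = lastDot > 0 ? host.substring(0, lastDot) : host;
        for (String term : tokenize(title + " " + copyright)) {
            if (withoutTld.contains(term)) {
                return true;
            }
        }
        return false;
    }
}
```

A false result corresponds to the "no match" case, in which the URL is flagged and the content is passed on to the next filter.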
If there is no match, the URL is flagged as phishing and the processed content is passed to the next filter, because a copyright notice that names a domain different from the URL's domain is suspicious. Regardless of the outcome of this module, the algorithm continues to the next one.

Website identity
The identity of a website is established from how often its hyperlinks point to each domain. On a legitimate website, links to its own domain are far more frequent than links to other domains. Because fraudsters try to imitate legitimate websites, phishing pages contain many links pointing to the imitated domain. The identity of the page at the supplied URL is therefore taken to be the domain with the highest link frequency. If the domain of the URL entered into Phish Saver does not match this most frequent domain (the web identity), the input URL is regarded as a phishing site impersonating that domain. Besides flagging malicious URLs, this filter therefore also identifies the domain being imitated. Even if an earlier filter has already flagged the page as phishing, the processed HTML is still passed through this filter so that the user can see which site is being targeted.

3.5 Requirement Specification
Chapter Overview
This section gives an overview of the requirement-gathering techniques and procedures. The survey results were helpful in gathering information for implementing the Phish Shield tool, a web application that verifies whether a URL is phishing or legitimate.

Questionnaires
According to the survey results, most participants know how to identify online phishing attacks and phishing types, but they have little knowledge of phishing prevention methods.
95% of participants use the Internet frequently, and the majority answered "Yes" to Question 13 ("Would you like us to introduce new ways to detect phishing websites?"). 35% of participants rated their basic computer knowledge as "Excellent", and the majority answered "Yes" to Question 10 ("Can you detect phishing emails or websites before they scam you?"). The remaining participants do not know how to detect phishing sites before being scammed. Therefore, this research examines the factors behind phishing websites and establishes an effective way to detect phishing attempts.

System Functional Design

Functional Requirements
Req. 1, Open the Phish Shield tool (Essential): The user must open the Phish Shield tool.
Req. 2, Paste the URL (Essential): When the user finds an unsafe URL, they can paste it and search.
Req. 3, View the result (Desirable): The user can see whether the searched URL is safe or unsafe.

Non-Functional Requirements
Efficiency
Maintainability
Usability
Response time
Availability
Portability
Reliability
Platform compatibility
User friendliness

Use case diagram

Design Architecture Diagram (the React JS frontend sends requests to, and receives responses from, the Java Spring Boot backend web service, which queries the Google Safe Browsing Lookup API (v4) and the PhishTank downloadable databases)

JavaScript and Java were used to develop this project: the React framework for the front end and Java Spring Boot for the back end. As the architecture diagram shows, the application takes a URL as input at the UI level, checks it against the Google Safe Browsing Lookup API (v4) and the PhishTank downloadable database, and displays whether the URL is safe. The application queries the Google Safe Browsing Lookup API first; if nothing is identified, it checks the PhishTank downloadable database. The following data can be displayed from the API and the downloadable database:
• Submission time
• Verified
• Verification time
• Online
• Target
• Threat type
• Platform type

UI
Main Interface
Phishing URL Detection
Safe URL Detection

3.6 Testing and results
Phish Saver uses the Google Safe Browsing blacklist API and the site's URL content, including null footer hyperlinks and copyright and title text. The tool was built with the NetBeans 8.2 IDE, the Java compiler, the JSoup API, and ChromeDriver. JSoup was used to retrieve HTML elements, including footer links, copyright, titles, and CSS, from the page's HTML code. ChromeDriver is a third-party utility that enables Java applications to launch the Chrome browser. As the main identification method for the blacklist stage, Phish Saver performs an online lookup with Google's Safe Browsing API. PhishTank was used as the source of phishing URLs for evaluation. PhishTank is an anti-phishing website where anyone can submit, verify, track, and share phishing data, and its phishing archive lists valid, invalid, online, and offline phishing sites. A collection of 250 URLs (legitimate, invalid, offline, and online phishing sites) was collected from it to assess how effectively Phish Saver identifies phishing websites.

Chapter 4 Results and Observations

4.1 Chapter Overview
This study introduced a heuristic phishing detection method that uses URL-based features. The method combines URL-based features from past research with new ones derived by examining the URLs of phishing websites. Several machine learning algorithms were used to build classifiers, and random forest proved to be the best model, achieving high accuracy (98%) and a low false positive rate.
The proposed method can detect new and short-lived phishing websites that evade conventional detection approaches, such as blacklist-based methods, and can therefore protect sensitive data and reduce the damage caused by phishing attacks. Future work will address a shortcoming of the current heuristic approach: with large amounts of data, it takes a long time to build classifiers and perform classification. Techniques to streamline the features and increase speed are therefore planned. Future work will also examine a phishing detection technique that improves performance by using JavaScript components, rather than HTML elements, in addition to URL-based features.

4.2 Proposed Method
URL Composition
A URL is the identifier used to locate a resource on a network. It consists of the protocol, the subdomain, the primary domain, the top-level domain (TLD), and the path [6].

Figure 1 Structure for the URL (http://drive.google.com/phishing: protocol http, subdomain drive, primary domain google, TLD com, path /phishing; the domain is drive.google.com)

In this research, the term "domain" refers to the combination of the primary domain, the TLD, and any subdomains. Figure 1 shows the parts of a URL individually. The protocol is a set of rules for communication between computers, such as HTTP, FTP, or HTTPS; different protocols may be used depending on the preferred transmission medium. A subdomain is an additional domain attached to the domain, and it takes various forms depending on the features the domain's pages offer. The domain name is mapped by the Domain Name System (DNS) to the site's Internet Protocol (IP) address. The most important component of the domain is the primary domain.
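The decomposition in Figure 1 can be reproduced with `java.net.URI`. This is a minimal sketch, assuming that the TLD is the last host label and the primary domain the label before it; multi-label public suffixes such as `.co.uk` would need the Public Suffix List, which this example does not use. The class name `UrlParts` is hypothetical.

```java
import java.net.URI;
import java.util.Arrays;

/**
 * Hypothetical holder for the URL parts shown in Figure 1.
 * Assumes a single-label TLD (no Public Suffix List handling).
 */
final class UrlParts {
    final String protocol;
    final String subdomain;
    final String primaryDomain;
    final String tld;
    final String path;

    private UrlParts(String protocol, String subdomain, String primaryDomain,
                     String tld, String path) {
        this.protocol = protocol;
        this.subdomain = subdomain;
        this.primaryDomain = primaryDomain;
        this.tld = tld;
        this.path = path;
    }

    static UrlParts parse(String url) {
        URI uri = URI.create(url);
        String host = uri.getHost();
        if (host == null) {
            throw new IllegalArgumentException("URL has no host: " + url);
        }
        String[] labels = host.split("\\.");
        int n = labels.length;
        String tld = labels[n - 1];
        String primary = n >= 2 ? labels[n - 2] : "";
        // Every label left of the primary domain belongs to the subdomain.
        String sub = n > 2 ? String.join(".", Arrays.copyOfRange(labels, 0, n - 2)) : "";
        String path = uri.getPath() == null ? "" : uri.getPath();
        return new UrlParts(uri.getScheme(), sub, primary, tld, path);
    }
}
```

For `http://drive.google.com/phishing` this yields protocol `http`, subdomain `drive`, primary domain `google`, TLD `com`, and path `/phishing`, matching Figure 1.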
The top-level domain (TLD), such as .net or .com, occupies the highest level of the domain name hierarchy [7]. The URL-based attributes used to identify phishing websites are defined below.

URL Specifications
• Google Suggest attribute: When a user types a phrase, Google offers suggested terms. The Google Suggest results were assessed by entering the URLs of legitimate and phishing sites. The input URL is suspicious when the search term is similar to, but not the same as, a suggested result, because the site may be copying the structure of the suggested one. To measure this, the Levenshtein distance between the two strings (the Google suggestion and the search query) is used. In addition, if a suggested result belongs to a domain on a known whitelist, the queried site may be impersonating that trusted website, so this feature can also help find malicious websites. Many URL-based features have been used in previous studies; to detect current phishing sites, two new features were developed here and combined with features from earlier studies.
• Subdomain length: This feature was constructed to detect newly created phishing sites. Phishing sites now frequently conceal the primary domain: many phishing URLs contain abnormally long subdomains that prevent users from noticing that the site is not authentic. A feature measuring subdomain length was therefore implemented to help assess whether a URL is phishing. Because phones have small screens on which the full URL can be hard to see, this feature also helps detect phishing websites that target mobile users.
• Phishing terms: This feature was updated to match contemporary phishing practices.
It uses eight terms designated as phishing terms and checks whether they appear in the URL of a request. Prior research showed that this feature performed well, but the terms used by phishers have since changed. A detection mechanism was therefore developed with eight new phishing terms discovered through experiments. As described above, the proposed approach uses novel features not applied in earlier studies, and it improves on earlier features to deliver better phishing detection accuracy.

4.3 The Architecture
The proposed phishing detection procedure, shown in Figure 2, consists of two phases: training and detection.

Figure 2 Detection Method (training phase: legitimate site URLs and phishing site URLs → feature extraction → classifier generator → classifier; detection phase: requested site URL → feature extraction → classifier → phishing or legitimate)

In the training phase, a classifier is built from URLs previously collected from legitimate and phishing websites. The feature extractor receives the collected URLs and extracts feature values using predefined URL-based methods. The classifier generator then builds a classifier from these features using a learning algorithm. In the detection phase, the classifier assesses whether a requested website is a phishing site. When a page is requested, the feature extractor receives its URL and extracts the feature values using the same predefined URL-based methods. These features are passed to the classifier, which decides, based on the knowledge acquired in training, whether the website is phishing. The user who requested the site is then informed of the result.
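The three URL-based features described in this section can be computed as a numeric vector, which is what the feature extractor hands to the classifier in both phases. The sketch is hypothetical throughout: the search query and the Google Suggest result are assumed to be supplied by the caller (no request to Google is made), subdomain length counts the characters of every host label before the primary domain and TLD, and the eight phishing terms listed are placeholders, since the eight terms found experimentally are not given in the text.

```java
import java.net.URI;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

/** Hypothetical sketch of the URL-based feature vector. */
final class UrlFeatureExtractor {

    // Placeholder terms: the study's eight experimentally found terms are not listed.
    static final List<String> PHISHING_TERMS = List.of(
            "login", "signin", "verify", "account",
            "update", "secure", "banking", "confirm");

    private UrlFeatureExtractor() {
    }

    /** Levenshtein edit distance computed with two rolling rows. */
    static int levenshtein(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] curr = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(Math.min(curr[j - 1] + 1, prev[j] + 1),
                        prev[j - 1] + cost);
            }
            int[] tmp = prev;
            prev = curr;
            curr = tmp;
        }
        return prev[b.length()];
    }

    /** Characters in the host labels before the primary domain and TLD. */
    static int subdomainLength(String url) {
        String host = URI.create(url).getHost();
        if (host == null) {
            return 0;
        }
        String[] labels = host.split("\\.");
        if (labels.length <= 2) {
            return 0;
        }
        return String.join(".", Arrays.copyOfRange(labels, 0, labels.length - 2)).length();
    }

    /** Number of distinct phishing terms that appear anywhere in the URL. */
    static int phishingTermCount(String url) {
        String lower = url.toLowerCase(Locale.ROOT);
        int count = 0;
        for (String term : PHISHING_TERMS) {
            if (lower.contains(term)) {
                count++;
            }
        }
        return count;
    }

    /** Feature vector: [suggestion distance, subdomain length, phishing-term count]. */
    static double[] extract(String url, String query, String suggestion) {
        return new double[] {
            levenshtein(query.toLowerCase(Locale.ROOT), suggestion.toLowerCase(Locale.ROOT)),
            subdomainLength(url),
            phishingTermCount(url)
        };
    }
}
```

For example, a typosquatted query such as `amaaon.com` is one edit away from the suggestion `amazon.com`, which is the kind of small but non-zero distance the Google Suggest feature looks for.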
Algorithms
Several machine learning methods were explored, including support vector machine (SVM), decision tree, naive Bayes, k-nearest neighbor (KNN), and random forest, to find the classifier with the best performance on URL-based features.
• SVM: Boser, Guyon, and Vapnik proposed the SVM classification technique in 1992. It is a statistical learning method that classifies data using support vectors, a subset of the training samples. Based on the principle of structural risk minimization, SVM finds a decision boundary that divides the data into two groups with the widest possible margin between them. SVM can learn in high-dimensional spaces from a small number of training examples.
• Decision tree: Quinlan presented the decision tree as a classification technique in 1992. It classifies data by building a tree: each internal node represents a feature, and the branches split the input according to that feature's values. Each leaf node corresponds to a decision region, and a sample is routed through the conditions until it reaches a leaf and is assigned to that leaf's decision region. Although a decision tree is fast and simple to use, it risks overfitting.
• Naive Bayes: Naive Bayes is a classifier that performs reasonably well on classification problems. It is based on Bayes' theorem and is among the most effective learning methods for text classification. Because of its conditional independence assumption, it can be trained efficiently in supervised learning, and it can learn good parameters from limited training sets.
• KNN: KNN is a non-parametric classification technique that has been used effectively for many data-mining problems. It evaluates an input by examining the k training samples most similar to it.
KNN measures the similarity between the input and the training data using a distance measure.

To assess effectiveness, URLs of legitimate and phishing sites were gathered as experimental data: 3,000 legitimate URLs from DMOZ and 3,000 phishing URLs from PhishTank. Results were evaluated with k-fold cross-validation, in which all data are divided into k parts; k-1 parts are used for training and the remaining part for validation. The procedure is repeated k times, once per part, so every sample is used for both training and validation. The technique is commonly used to evaluate classification performance on small datasets. This research used ten-fold cross-validation. The experiments were run in the free machine learning software WEKA, evaluating each method described in the previous subsection. Accuracy was determined from true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), arranged as shown in Table 1.

Table 1 TP, TN, FP, FN Matrix
                      Predicted phishing     Predicted legitimate
Actual phishing       True Positive (TP)     False Negative (FN)
Actual legitimate     False Positive (FP)    True Negative (TN)

TP indicates the likelihood that a phishing site is correctly classified as phishing, and FN the likelihood that a phishing site is classified as legitimate. FP indicates the likelihood that a legitimate site is classified as phishing, and TN the likelihood that a legitimate site is correctly classified as legitimate. The TN, FP, TP, and FN ratios for each machine learning algorithm are shown in Table 2.
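The bookkeeping behind ten-fold cross-validation and the Table 1 counts can be sketched as follows. The experiments themselves were run in WEKA; this Java fragment only illustrates the procedure, assuming samples are shuffled with a fixed seed and then assigned to folds round-robin (a stratified split, which keeps the phishing/legitimate ratio equal across folds, is omitted). Sensitivity is TP/(TP+FN), specificity is TN/(TN+FP), and accuracy is (TP+TN)/(TP+TN+FP+FN). The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Hypothetical helpers for k-fold cross-validation and the Table 1 metrics. */
final class Evaluation {

    private Evaluation() {
    }

    /** fold[i] is the fold (0..k-1) that sample i belongs to. */
    static int[] assignFolds(int n, int k, long seed) {
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            order.add(i);
        }
        Collections.shuffle(order, new Random(seed)); // random, unstratified order
        int[] fold = new int[n];
        for (int pos = 0; pos < n; pos++) {
            fold[order.get(pos)] = pos % k; // fold sizes differ by at most one
        }
        return fold;
    }

    /** Sensitivity (true positive rate) = TP / (TP + FN). */
    static double sensitivity(int tp, int fn) {
        return tp / (double) (tp + fn);
    }

    /** Specificity (true negative rate) = TN / (TN + FP). */
    static double specificity(int tn, int fp) {
        return tn / (double) (tn + fp);
    }

    /** Accuracy = (TP + TN) / (TP + TN + FP + FN). */
    static double accuracy(int tp, int tn, int fp, int fn) {
        return (tp + tn) / (double) (tp + tn + fp + fn);
    }
}
```

In each round f, the samples with `fold[i] == f` form the validation set and the rest the training set; summing TP, TN, FP, and FN over all ten rounds gives the totals from which the metrics are computed. With the 6,000 URLs used here, each fold holds 600 samples.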
The TP, TN, FP, and FN ratios from the tests were used to compute three metrics for evaluating each method. The first was specificity, the true negative rate. The second was sensitivity, the true positive rate. The third was accuracy, the overall proportion of correct predictions across legitimate and phishing sites.

4.4 Implementation
Tools and Technologies
Java and JavaScript were selected as the main development languages.

Software: Version
Windows OS: 10
Java Runtime Environment (JDK/JRE): 17.0.5
IDE - Visual Studio Code (for web interface development): 1.74.2
Framework (React): 18.2.0
IDE - IntelliJ IDEA (for web service development): 2022.3.1 (Community Edition)
Gradle build tool: 7.6

URL Detection component
Figure 3_URL Detection component
The Phish Shield home page is the main part of the application. On this page, the user enters a URL at the UI level, and the page displays whether the URL is safe.

User Interface codes
Figure 4_Forms.js Interface level
Figure 5_Form.js Results viewer

Backend Level
Google Safe Browsing and PhishTank provide open APIs and databases that developers and researchers can use to integrate anti-phishing data into their applications. The Google Safe Browsing Lookup API (v4) and the PhishTank downloadable database are used to identify phishing URLs.

Google Safe Browsing Lookup API (v4)
The Safe Browsing APIs (v4) check URLs against Google's constantly updated lists of unsafe web resources, such as social engineering sites (phishing and deceptive sites) and sites that host malware or unwanted software. Any URL found on a Safe Browsing list is considered unsafe.

Lookup API (v4)
The Lookup API sends URLs to the Google Safe Browsing server to check their status.
The API is simple and easy to use because it avoids the complexities of the Update API.

Checking URLs
To check whether a URL is on a Safe Browsing list, send an HTTP POST request to the threatMatches.find method:
• The HTTP POST request can include up to 500 URLs.
• The HTTP POST response returns the matching URLs along with the cache duration.

Method: threatMatches.find
Request header
The request header includes the request URL and the content type. Replace API_KEY in the URL with your API key.
Figure 6_Request header
Figure 7_API key and API URL

Request body
The request body includes the client information (ID and version) and the threat information (the list names and the URLs).
Figure 8_HTTP request
The threatType, platformType, and threatEntryType fields are combined to identify (name) Safe Browsing lists. In Figure 8, two lists are identified: MALWARE/WINDOWS/URL and SOCIAL_ENGINEERING/WINDOWS/URL. The threatEntries array contains the URLs to be checked against these two lists.

Response body
The response body includes the match information: the list names and the URLs found on those lists, the metadata (if available), and the cache durations.
Figure 9_Response body
The matches object lists the names of the Safe Browsing lists and the URLs, if there is a match. The threatEntryMetadata field is optional and provides additional information about the threat match. Currently, metadata is available for the MALWARE/WINDOWS/URL Safe Browsing list.

PhishTank downloadable database
PhishTank provides downloadable databases for phishing detection, available in multiple formats and updated hourly.
Figure 10_PhishTank downloadable database
The PhishingURLDetail and PhishingURLInput files are used to read details from the PhishTank downloadable database (URLs.json).
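The threatMatches:find request described above, followed by the PhishTank fallback that is used when Safe Browsing finds nothing, can be sketched with the JDK's `java.net.http` classes. This is a minimal illustration, not the project's Spring Boot code: the JSON body is built by hand rather than with a JSON library, the client ID and version are placeholders, the request is only built (sending it requires a real API key), and the PhishTank data is assumed to be already loaded into a set of URL strings.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

/** Hypothetical sketch of the Lookup API request and the PhishTank fallback. */
final class SafeBrowsingLookup {

    static final String ENDPOINT =
            "https://safebrowsing.googleapis.com/v4/threatMatches:find?key=";

    private SafeBrowsingLookup() {
    }

    /** Minimal JSON string escaping for backslashes and quotes. */
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    /** Body naming the MALWARE and SOCIAL_ENGINEERING lists for WINDOWS/URL. */
    static String requestBody(String clientId, String clientVersion, List<String> urls) {
        if (urls.size() > 500) {
            throw new IllegalArgumentException("A lookup request can include at most 500 URLs");
        }
        String entries = urls.stream()
                .map(u -> "{\"url\":\"" + escape(u) + "\"}")
                .collect(Collectors.joining(","));
        return "{\"client\":{\"clientId\":\"" + escape(clientId)
                + "\",\"clientVersion\":\"" + escape(clientVersion) + "\"},"
                + "\"threatInfo\":{\"threatTypes\":[\"MALWARE\",\"SOCIAL_ENGINEERING\"],"
                + "\"platformTypes\":[\"WINDOWS\"],"
                + "\"threatEntryTypes\":[\"URL\"],"
                + "\"threatEntries\":[" + entries + "]}}";
    }

    /** Builds, but does not send, the POST request to threatMatches:find. */
    static HttpRequest buildRequest(String apiKey, String body) {
        return HttpRequest.newBuilder(URI.create(ENDPOINT + apiKey))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    /** Fallback: exact match against URLs loaded from the PhishTank database. */
    static boolean inPhishTank(Set<String> phishTankUrls, String url) {
        return phishTankUrls.contains(url);
    }
}
```

Sending the request with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` returns an empty JSON object (`{}`) when none of the URLs match; only in that case would the PhishTank set be consulted.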
PhishingURLDetail
Figure 11_PhishingURLDetail
Data-PhishingURLInput
Figure 12_Data-PhishingURLInput

Column Definitions
phish_id: The ID number by which PhishTank refers to a phish submission.
phish_detail_url: The PhishTank detail URL for the phish, where you can view data about the phish, including a screenshot and the community votes.
url: The phishing URL. This is always a string, and in the XML feeds it may be a CDATA block.
submission_time: The date and time at which this phish was reported to PhishTank, as an ISO 8601 formatted date.
verified: Whether or not this phish has been verified by the community. In these data files, this is always the string 'yes', since only verified phishes are supplied.
verification_time: The date and time at which the phish was verified as valid by the community, as an ISO 8601 formatted date.
online: Whether or not the phish is online and operational. In these data files, this is always the string 'yes', since only online phishes are supplied.
target: The name of the company or brand the phish is impersonating, if it is known.

4.5 Chapter Summary
This research presented a heuristic phishing detection method that uses URL-based features. Through examining the URLs of phishing websites, the method combines URL-based features from earlier research with novel ones. Several machine learning methods were used to build classifiers, and random forest proved to be the best model. Accuracy was high (98%) and the false positive rate was low. By identifying new and short-lived phishing sites that evade traditional detection approaches, such as blacklist-based methods, the proposed method can protect sensitive data and reduce the harm done by phishing attacks. Future work will address a drawback of the current heuristic method.
With large amounts of data, this heuristic technique takes a long time to build classifiers and perform classification. Techniques to simplify the features and increase speed will therefore be employed. Future work will also examine a phishing detection method that improves efficiency by using JavaScript elements, rather than HTML elements, in addition to URL-based features.

Chapter 5 Conclusion

5.1 Chapter Overview
The shift in the contemporary technological era has given rise to new kinds of cyber threat. Phishing websites have become a significant cybersecurity issue and a concern across Internet domains, and a particular problem for online financial businesses. Vulnerabilities in websites make phishing attacks possible: flaws in websites leave web servers exposed, allowing phishers to mount attacks without alerting the website owners. This study reviews strategies for identifying phishing websites, as well as the history of phishing websites and their dangers. Technological advances have made phishing websites and their operators more resilient and diverse than in the past; phishing websites can lead to privacy violations and can exploit flaws to launch ransomware attacks. This research examines the causes of phishing websites and methods for identifying them, and discusses these methods and their effectiveness in reducing phishing websites.

5.2 Accomplishment of the research objectives
Heuristics for identifying phishing attacks
Heuristic algorithms extract features from a web page to evaluate the site's legitimacy rather than relying on predefined lists. Most of these features are derived from the URL and the HTML document object model (DOM) of the target page.
The extracted features are compared with those collected from legitimate and phishing websites to determine the site's legitimacy. Several of these techniques use heuristics to validate a given URL and compute a spoof score. The proposed architecture follows this approach, combining a blacklist with several heuristic criteria to validate a URL's legitimacy. Because Google constantly updates and maintains its Safe Browsing blacklist, which is organized into five categories, Google API services are used for the blacklist. These five categories are treated as five detection phases. When a URL is entered, Phish Saver reports the website's status as phishing, legitimate, or unidentified. It identifies the site using the domain name that occurs most frequently among the page's HTML hyperlinks.

The first level of identification checks the URL's domain against the blacklist to confirm its legitimacy. The Google Safe Browsing blacklist is used because it is a reliable, frequently updated list of banned websites, accessed through the Google Safe Browsing API version 4. The list can be consulted in two ways: by querying it online, or by downloading a local copy of the list and checking against it. Phish Saver queries it online, so an Internet connection is required. If the lookup finds a match, the procedure ends and the website is marked as phishing; otherwise, the algorithm moves on to the next module. Before continuing, the page is fetched and stored as a DOM (Document Object Model) object.
Phishers use phishing kits to create fake registration forms and gather private information. It is well accepted that online users regularly disclose sensitive information on login pages, so searching for websites with a login form is an effective technique for spotting phishing sites. A website without a login form cannot be classified as phishing, because users have no way to submit sensitive information there. Whether a login form is present is determined by searching the page's HTML for an input element with type="password". If a password field is present, Phish Saver continues; otherwise it stops, because the user has no chance to enter any sensitive information. This filter reduces the program's error rate by avoiding phishing detection on ordinary web pages without login forms.

Null footer links lack a value and therefore do not point to any other page. An anchor whose link refers to a NULL value is called a NULL anchor; such a link leads back to its own page. After the login check, identification reaches level 3 of the heuristic, in which links in the page footer, particularly those with null values, are examined. Phishers want to keep users on the sign-up form, so they build pages with null footer links that continually return users to the page with the sign-up box. Numerous researchers have therefore used the ratio of null links to all links to detect phishing websites. There is a catch, however: some legitimate websites also have null links, such as company logo links. Nevertheless, none of the legitimate websites examined had null links in their footers.
The observation that no legitimate footer contained null links prompted us to define heuristic factor H, "the anchor tag in the footer section links to null" or "the anchor tag is empty", which is used to flag phishing websites.

5.3 Limitations of the research and problems encountered
The method's primary strength, its language independence, is also its primary flaw. To avoid relying on any dictionary to obtain words, strings are split on every character that is not an English letter, and only terms of at least 3 characters are kept, which removes repeated fragments and short words with little significance. This caused some difficulties when comparing term distributions. Long subdomains, such as those containing sample elements and integer counters, are treated as complete original terms. Conversely, when a short domain string made up of letters separated by digits or punctuation (for example bk4y or go8h) was split, the resulting fragments were discarded as too short.

A further restriction is that some blank websites and parked domains are detected as phishing. The first case is explained by the lack of data on blank or inaccessible web pages: the header and body text are essentially empty, and there are few external or outbound links. Only a few resources (such as registration links) can be retrieved from such sites. Many parked domains are disguised registered FQDNs that have been exploited for harmful activities such as phishing to trick consumers. In addition, parked domain names use concealment techniques, including typosquatting and composition techniques, comparable to those used for phishing domains. Beyond this similarity in the structure of their domain names and links, parked domains and phishing domains share further similarities.
Parked domains participate in ad networks [28], and the advertisements served with their content are frequently related to the RDN (registered domain name) of the parked domain. For instance, Amazon Inc. advertisements are served on the RDN amaaon.com. From the perspective of the classification method, these parked pages share the same traits as phishing pages. This misclassification of unavailable and parked web addresses is not a serious problem, because these addresses serve little or no legitimate content, so blocking them does not deprive users of useful material. Moreover, Google regards domain parking as a spam-like practice that offers little original content. Dedicated techniques or a targeted recognition system could nevertheless be used to filter out such sites before phishing identification.

The final drawback is the prediction accuracy for phishing URLs that use an IP address instead of a domain name. Of 25 such URLs, only 19 were correctly identified, a detection rate of 0.76, compared with the system's overall rate (>0.95). This is because term distributions are computed from the FQDN, so for these URLs many features are null and therefore blank. Nevertheless, these URLs account for only 41% of the total URLs across all phishing databases, so they are not considered a significant restriction. Although this was not observed in any large dataset, a website whose URL is in one language but whose content is in another could be misclassified. So far, only websites in European languages have been evaluated.

Evasion Strategies
As shown above, using IP-based URLs is one way to avoid detection, since our system is less likely to catch them. However, relying on IP addresses rather than domain names sacrifices the flexibility that DNS offers to move the hosting location of phishing content while keeping the same link.
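The term-extraction rule and the IP-address limitation described above can be illustrated together. The sketch below makes three assumptions. It splits on any non-ASCII-letter character and keeps terms of at least 3 characters. It extracts terms from the URL's hostname (the FQDN). It flags hosts that parse as IPv4 or IPv6 addresses. An IP host yields no terms at all, which is why these URLs produce null features. The function names are hypothetical.

```python
import ipaddress
import re
from urllib.parse import urlparse


def extract_terms(text, min_len=3):
    """Split on runs of non-English-letter characters; keep terms of >= min_len chars."""
    return [t for t in re.split(r"[^a-z]+", text.lower()) if len(t) >= min_len]


def fqdn_terms(url):
    """Terms taken from the URL's hostname (empty for a missing host)."""
    return extract_terms(urlparse(url).hostname or "")


def is_ip_based_url(url):
    """True if the URL's host is a literal IPv4 or IPv6 address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def detection_rate(correct, total):
    """Fraction of samples correctly identified."""
    return correct / total


print(fqdn_terms("https://secure-login.paypal.com.bk4y.example/"))
# ['secure', 'login', 'paypal', 'com', 'example']  ("bk4y" -> "bk", "y", both dropped)
print(fqdn_terms("http://192.168.10.5/paypal/login.php"))   # [] : no FQDN terms
print(detection_rate(19, 25))                               # 0.76
```

This check only recognises literal addresses. Obfuscated forms such as a decimal-encoded IPv4 host are not detected by `ipaddress.ip_address` here.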
Phishers relying on IP addresses would also face other issues, because IP blacklisting is frequently used to block access to harmful hosts, which limits the stability and durability of their attacks. Another way to avoid detection is to limit the amount of text that can be found on a web page by using few external links, not loading external material, and using short URLs. Each of these tactics was observed being used on its own in web pages from every phishing database evaluated. They had no impact on the classifier's effectiveness: although they prevent certain features from being calculated, the remaining ones, such as those related to the title, the starting/landing URL, and linked URLs, still allow successful phishing identification. Using several evasion tactics concurrently may reduce the classifier's effectiveness. However, such deception would also make the phishing page less attractive and reduce the number of users it deceives.

The proposed anti-phishing method is implemented on a computer with a Core i7 CPU running at 3.4 GHz and 16 GB of RAM. It is implemented in the Python programming language, chosen for its fast development and the strong support provided by its libraries. The HTML of a given URL is parsed using the BeautifulSoup package. Detection time is the latency from entering the URL to generating the output. When a URL is supplied as input, the technique extracts all the features from the URL and the HTML code of the web page, as described in the feature extraction chapter, and then classifies the URL as benign or phishing based on the extracted feature values. Identifying a phishing website takes approximately 2 to 3 seconds, which is an acceptable amount of time in a practical setting.
Responsiveness depends on variables such as the amount of input, the Internet bandwidth, and the server configuration. The time required to train, run detection with, and test the proposed technique (with the possible feature set) when classifying web pages from the D1 dataset was also estimated.

5.4 Discussion and Future improvements/recommendations
The difficulty lies in distinguishing a phishing website from its legitimate, benign counterpart. Using previously unconsidered components (URL, hyperlink, and text features), this document presented a new strategy to combat phishing. The proposed strategy is an entirely client-side solution. When these features were used with several machine learning algorithms, XGBoost gave the best results. The key goal is a clear strategy with a high true-negative rate and a low false-positive rate. The findings show that the method effectively filtered out benign websites, with only a small percentage of benign pages mistakenly labelled as phishing. In future work, we would like to investigate how robust machine learning techniques for phishing detection are against new phishing attempts, and to build a real-time browser extension that alerts users whenever they visit suspicious websites. Although many remedies are available, the literature review finds that phishing attacks are growing more frequent day by day. Beyond phishing detection methods, informing and educating users also remains difficult.

5.5 Chapter Summary
Phishing websites have become a major cyber security issue. Vulnerabilities in websites have exposed web servers to attack, and phishers exploit these weaknesses to launch phishing attacks without the site owners noticing.
This research discussed methods for detecting phishing websites and explained the background of phishing sites and their harmfulness. A phishing website is a cloned site that imitates a legitimate website in order to deceive users. Such attacks frequently target financial websites or imitate legitimate government websites, and they can cause financial losses for businesses, particularly those handling financial transactions. Phishing websites are becoming more prevalent by the day, and users frequently struggle to distinguish legitimate websites from phishing scams, so research on phishing website detection is crucial in today's technology environment. The background and harmfulness of phishing websites are an increasingly important subject of discussion in the UK, and academics are working on ways to stop phishing attacks. With the growth of cybercrime, phishers have started registering their own phishing websites, and some run new servers specifically to host phishing attacks. This study examined the causes of phishing websites and how to spot them. As phishing websites grow in number, so does their negative impact on users, and this research can help identify phishing websites before they worsen online crime.

References
Wenyin, L., 2005. Phishing Web page detection.
[online] Available at: https://www.researchgate.net/publication/4214799_Phishing_Web_page_detection (Accessed 18 August 2022).
Kalaharsha, P., 2016. Detecting Phishing Sites - An Overview. [online] Jetir.org. Available at: https://www.jetir.org/papers/JETIR2006018.pdf (Accessed 18 August 2022).
Ubing, A., 2019. Phishing Website Detection: An Improved Accuracy through Feature Selection and Ensemble Learning. [online] Pdfs.semanticscholar.org. Available at: https://pdfs.semanticscholar.org/8b97/ae0ba551083056536445d8c2507bb94b959f.pdf?_ga=2.2686904.961605819.1658985707-1241532269.1656924723 (Accessed 18 August 2022).
Aljofey, A., 2018. An effective detection approach for phishing websites using URL and HTML features. [online] Nature.com. Available at: https://www.nature.com/articles/s41598-022-10841-5.pdf?origin=ppub (Accessed 18 August 2022).
Zhang, Q., 2021. Research on phishing webpage detection technology based on CNN-BiLSTM algorithm. [online] Iopscience.iop.org. Available at: https://iopscience.iop.org/article/10.1088/1742-6596/1738/1/012131/pdf (Accessed 18 August 2022).
Marchal, S., 2019. Know Your Phish: Novel Techniques for Detecting Phishing Sites and their Targets. [online] Arxiv.org. Available at: https://arxiv.org/pdf/1510.06501.pdf (Accessed 18 August 2022).
Abusaimeh, H., 2021. Detecting the Phishing Website with the Highest Accuracy. [online] Temjournal.com. Available at: https://www.temjournal.com/content/102/TEMJournalMay2021_947_953.pdf (Accessed 18 August 2022).
Basnet, R., 2021. Learning to Detect Phishing URLs. [online] Available at: https://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=5A68056E4782D3DCAEBC73F5E3D93110?doi=10.1.1.673.3391&rep=rep1&type=pdf (Accessed 18 August 2022).
Shareef, Y., 2020. How to Detect Phishing Websites Using ThreeModel Ensemble Classification. [online] Meu.edu.jo. Available at: https://meu.edu.jo/libraryTheses/How%20to%20Detect%20Phishing%20Website.pdf (Accessed 18 August 2022).
PhishTank. Developer information. [online] Available at: https://phishtank.org/developer_info.php
Google. Safe Browsing API v4. [online] Available at: https://developers.google.com/safe-browsing/v4

Appendix A: Survey Total Results
Figure 13 Survey Result
Figure 14 Survey Result
Figure 15 Survey Result
Figure 16 Survey Result
Figure 17 Survey Result

Appendix B: Gantt Chart
Figure 18 Gantt Chart
Figure 19 Gantt Chart