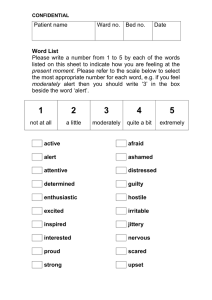

Lecture 1: Use Cases, Ideas and Vocabulary, Model Performance Evaluation, Algorithms and Ethics
EF308: Econometrics and Forecasting
Dr. Parvati Neelakantan

Introduction: AI, ML and Alert Models
• Artificial Intelligence (AI) is a concept that relates to machines which endeavour to simulate human thinking capability and, in particular, decision-making behaviour.
• Machine learning is an application of AI which allows machines (i.e., statistical learning models) to interpret (i.e., learn from) patterns in historical data without being explicitly programmed to do so.
• Machine learning algorithms learn iteratively from the data: they derive pragmatic rules from the data.
• This contrasts with the traditional approach, whereby analysts devise a rule from their experience, which is then programmed for implementation.

Introduction: AI vs Machine Learning
[Figure: Artificial Intelligence in Europe, Ernst & Young, 2019]

Machine learning vs traditional rule-based alert model approach
• Alert models generate a warning (‘a flag’) that a scenario of concern is likely to arise or to have arisen, e.g., an instance of fraud in banking.
• Machine learning alert models seek to systematically generate high-quality alerts.
• Traditional rule-based anti-fraud approach: an analyst determines, from experience, a rule which, when broken, generates an alert.
  • Example: if an account-level cash outflow occurs in a country other than the bank client's country of residence, and if it exceeds, say, two times the average daily outflow, generate an alert.
• Machine learning approach, same scenario: a statistical learning algorithm devises from the data (labelled fraud events and a vector of predictors) a rule which, when broken, generates an alert.
  • Example: a complex function of customer, account and transaction traits can arise which generates an alert whenever it takes a value within certain intervals.
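To make the contrast concrete, here is a minimal Python sketch of the traditional rule-based alert from the example above. The `Transaction` record, its field names and the `multiple` parameter are hypothetical, chosen only for illustration; a real rule would draw these values from account and transaction systems.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    # Hypothetical transaction record; field names are illustrative only.
    amount: float             # cash outflow amount
    country: str              # country where the outflow occurs
    residence_country: str    # client's country of residence
    avg_daily_outflow: float  # client's historical average daily outflow


def rule_based_alert(tx: Transaction, multiple: float = 2.0) -> bool:
    """Analyst-defined rule: flag a foreign outflow above `multiple` x the average daily outflow."""
    is_foreign = tx.country != tx.residence_country
    is_large = tx.amount > multiple * tx.avg_daily_outflow
    return is_foreign and is_large


if __name__ == "__main__":
    txs = [
        Transaction(500.0, "FR", "IE", 200.0),  # foreign and above 2x average: alert
        Transaction(500.0, "IE", "IE", 200.0),  # domestic: no alert
        Transaction(300.0, "FR", "IE", 200.0),  # foreign but only 1.5x average: no alert
    ]
    print([rule_based_alert(t) for t in txs])  # [True, False, False]
```

Under the machine learning approach, neither the inputs nor the threshold would be hard-coded like this; they would instead be inferred by an algorithm from labelled fraud events.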
Machine learning vs traditional rule-based alert model approach (continued)
• Notice that the latter rule for alert generation is devised by a machine learning algorithm from patterns in historical data, not determined directly by an analyst.
• Both approaches are automated for implementation, but the machine learning approach uses a rule which the algorithm infers from the data.

Machine Learning: Why now? Provenance
Three key new developments have enabled machine learning techniques to address a spate of important problems across industry sectors:
1. New insights in statistical modelling
2. Exponential rise in computer processing power and storage
3. Voluminous data (“datafication”)
McKinsey (2018), ‘An Executive's Guide to AI’, gives an interactive timeline of this confluence of trends.

Data produced in one day: World of data

Can AI really improve the decisions of experts?
Yes! AI can predict when you will die, but we have no idea how it works (New Scientist, Nov 2019).

Can AI really add value in a business setting?
Potential (and active) commercially viable applications across industry sectors:
• Customer focus
  • customer profiling
  • recommendation systems
  • dynamic pricing
  • product positioning
  • sales forecasting
• Employees
  • recruitment, promotion and cessation

Perspectives from Corporations

Ernst & Young: Finance sector is a frontrunner in AI applications

Importance in Banking and Finance? Investment Bank
‘How will Big Data and Machine Learning change the investment landscape? We think the change will be profound. ...the market will start reacting faster and will anticipate traditional data sources e.g. quarterly corporate earnings, low frequency macroeconomic data. This gives an edge to quant managers and those willing to adopt and learn new datasets and methods.
Eventually ‘old’ datasets will lose most predictive value and new datasets that capture ‘Big Data’ will increasingly become standardized.’ (JP Morgan, 2017)

How is machine learning evaluated by end users across the financial services sector?
BoE 2019: Prevalence of ML in Financial Services
BoE 2019: Pipeline for ML applications
BoE 2019: Anticipated ML applications

Ideas and Vocabulary
What should an AI process look like in a business setting?

How machine learning works
[Figure: Bank of England, Chapter 5, Machine Learning in UK Financial Services]

Training and Test Samples
• Training sample: a subset of the sample on which to train the model.
• Test sample: a subset of the sample on which to test the trained model.
Divide a single data set into training and test sub-samples. The test set should be large enough to yield statistically meaningful results, and it should be representative of the population if at all possible. The idea is that a model fit to the training sample should ideally generalize well to the test sample: the test set serves as a proxy for new data.

Technical and Pictorial Representations of Machine Learning Models
General functional form
Objective: understanding the data, i.e. eliciting from the data the data-generating process (the function $f$) that generated the data.
In this setting we denote the input variables (features/ traits/ predictors/ independent variables/ regressors) by $X$ and the output (predicted/ dependent/ regressand or response) variable by $Y$. In most cases, $X$ comprises several variables, observed in the cross-section or over time; hence we write $X_1, X_2, X_3$ and so on.

General functional form
Assume that we observe a quantitative response variable $Y$ and predictor variables $X_1, X_2, \ldots, X_p$. We assume the existence of some relation $f$, which can be written as
$$Y = f(X) + \epsilon,$$
where $X = (X_1, X_2, \ldots, X_p)$ and $\epsilon$ is a random error term. Understanding the data: we seek to estimate $f$.
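The ideas above can be sketched in a few lines of Python: simulate data from a known $f$ plus random noise, then divide the single data set into training and test sub-samples. The function names, the linear `true_f`, the input range and the 70/30 split are all assumptions made for illustration; in practice $f$ is unknown and must be estimated from the training sample.

```python
import random


def true_f(x):
    # Hypothetical "true" data-generating function; in practice f is unknown.
    return 20 + 3.0 * (x - 10)


def simulate_data(n, f, noise_sd, seed=0):
    """Draw n pairs (x, y) with y = f(x) + eps, where eps ~ N(0, noise_sd^2)."""
    rng = random.Random(seed)
    xs = [rng.uniform(10, 22) for _ in range(n)]  # e.g. years of education (assumed range)
    ys = [f(x) + rng.gauss(0.0, noise_sd) for x in xs]
    return list(zip(xs, ys))


def train_test_split(data, test_fraction=0.3, seed=0):
    """Shuffle a copy of the data, then split it into (training, test) subsets."""
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


if __name__ == "__main__":
    data = simulate_data(30, true_f, noise_sd=5.0)
    train, test = train_test_split(data, test_fraction=0.3)
    print(len(train), len(test))  # 21 9
```

A fixed `seed` makes the split reproducible, and shuffling before splitting helps keep the test set representative when the data arrive in some systematic order.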
General functional form: Prediction and Inference
The error term averages to 0; thus, we predict $Y$ using
$$\hat{Y} = \hat{f}(X),$$
where $\hat{Y}$ is the estimate (prediction) of $Y$ and $\hat{f}$ is the resulting estimate of $f$. $\hat{Y}$ can be a binary variable indicating whether or not an alert occurs. Our estimate of $f$, $\hat{f}$, can be provided by a range of algorithms, e.g. linear regression models, decision trees, neural nets, support vector machines, etc.

Can we envisage the functional form $f$ pictorially? Let's do this in income-education space.
The Income data set. Left: The red dots are the observed values of income (in tens of thousands of dollars) and years of education for 30 individuals. Right: The blue curve represents the true underlying relationship between income and years of education, which is generally unknown (but is known in this case because the data were simulated). The black lines represent the error associated with each observation. Note that some errors are positive (if an observation lies above the blue curve) and some are negative (if an observation lies below the curve). Overall, these errors have approximately mean zero.

Pictorial representation of $f$: a surface in 3-D space
The plot displays income as a function of years of education and seniority in the Income data set. The blue surface represents the true underlying relationship between income and years of education and seniority, which is known since the data are simulated. The red dots indicate the observed values of these quantities for 30 individuals. Black vertical lines represent errors.

What is this error term?
$\epsilon = Y - f(X)$ is the irreducible error: even if we knew $f(X)$, we would still make errors in prediction, since at each $X = x$ there is typically a distribution of possible $Y$ values.
$\epsilon$ contains:
• unmeasurable predictor variables (e.g., quality of management)
• unmeasured (i.e. omitted/ overlooked) predictor variables
• unspecified variation in observed predictor variables
• inappropriate functional form relating the predictors to the outcome.

Mean Squared Error criterion
Typically, algorithms seek to minimize the MSE. If $\hat{f}$ and $X$ are assumed to be fixed, then the expected value of the squared difference between the predicted and actual values of $Y$ is
$$E(Y - \hat{Y})^2 = E[f(X) + \epsilon - \hat{f}(X)]^2 = \underbrace{[f(X) - \hat{f}(X)]^2}_{\text{reducible}} + \underbrace{\mathrm{Var}(\epsilon)}_{\text{irreducible}},$$
where $\mathrm{Var}(\epsilon)$ is the variance of the error term $\epsilon$. $E(Y - \hat{Y})^2$ is the mean squared error (MSE); its sample average over the training observations is the training MSE.

Alert model error criterion: proportion of incorrect predictions
Model selection: a popular point of departure is to minimize the training MSE. Ultimately, we will see that this is a naive approach, in particular in the context of formulating predictions. In the context of a ‘binary classifier’, e.g. alert models, the proportion of incorrect predictions is a preferable error criterion, especially this proportion in a test sample.

Supervised v unsupervised learning
• Supervised learning is about (i) the prediction of a response variable or (ii) inference about the importance of a predictor for a response variable.
• The learning process of an algorithm is ‘supervised’ by the observed response variable.
• Illustrative application: an anti-fraud model learns by iteratively inferring the association between suspected fraud events and a set of features in the data.
• Indicative machine learning algorithms: decision trees, neural nets, support vector machines, etc.
For the most part, this course pertains to supervised learning techniques, with a focus on binary classifier type alert models.

Unsupervised learning
• Unsupervised learning involves no response variable.
• Cluster analysis is used to establish the relationship between the variables.
• Illustrative application: one might identify distinct groups of customers, even without seeing their spending patterns.
• Indicative machine learning algorithms: Principal Components Analysis, K-Means Clustering, etc.
• Technically, unsupervised learning addresses a high-dimensional scatter plot challenge.
• If there are $p$ variables, then there are $p(p-1)/2$ distinct scatter plots.
• As $p$ grows, visual inspection, while feasible, becomes challenging to interpret: what are the commonalities across variables?
• The criterion for success, unlike in supervised learning, is comparatively unclear.

Supervised learning: Regression vs Classification
Broadly speaking, problems with a quantitative (qualitative) response are regression (classification) problems:
• Quantitative response variable: a monetary loss due to an operational event such as an instance of fraud.
• Qualitative response variable: whether or not a fraudulent event is said to have occurred.

Regression versus Classification problems
• In practice, the distinction is more complex, as the examples below indicate. Nonetheless, the terminology on the previous slide is useful. Specifically, the same method can cater for both regression and classification problems:
  • K-nearest neighbour and gradient boosting methods can be used with either quantitative or qualitative response variables.
  • Logistic regressions and linear probability models have a qualitative response, but estimate via regression the probability of an observation being in each selected category.
• While this course will principally focus on binary classification methods, note that, on the whole, the discussion also relates to the regression setting.

Black box algorithms
Accountability in the context of a ‘black box’ algorithm:
1. Impact of model flexibility
2. Correlation v causality

Black box algorithms: Impact of Model Flexibility
Interpretability - Explainability - Predictive Accuracy

Model Flexibility and Interpretability
• Flexibility of an algorithm is related to the range of shapes with which it can estimate the true $f$ (section 2.3).
• A more flexible model has more candidate features and more complex candidate functional forms relating the features to the response.
• Technically: the flexibility of a curve is summarized by its number of degrees of freedom, i.e. the number of free parameters to be fit.
• Example: there are 2 coefficients (an intercept and a slope) to be fit in an inflexible simple regression model.
• Interpretability
  • Meaning: relates to our capacity to interpret, in intuitive and pragmatic (i.e. accurate) terms, how a model has produced its predictions.
  • Technical measurement rule: the capacity of a human (expert/ layman) to consistently predict an ML model's result. (As a proxy, a short tree can receive a higher interpretability score.)
  • Weakness of this rule: the choice of individual recruited for the assessment is arbitrary.

Interpretability-Flexibility ‘Trade-Off’
• How can flexibility impact interpretability?
• More flexible models tend to be more complex and, thus, more difficult to explain/ interpret.
• Examples of highly flexible and difficult-to-interpret models: neural nets, bagging, boosting, SVMs (with non-linear kernels) and nearest neighbour approaches.
A representation of the trade-off between flexibility and interpretability, using different statistical learning methods. In general, as the flexibility of a method increases, its interpretability decreases.

Importance of Interpretability
• Interpretability permits confidence that the predictive capacity of a model is reliable (accurate and equitable), and thus that the model is fit to be deployed.
• Interpretability poses little issue in low-risk scenarios, e.g. a model recommending movies to watch can be a low-risk task (for the recipient).
• If a model decides on a propensity to recidivism, whether a person has cancer, or whether money has been laundered, these are high-risk scenarios where interpretability is critical for engendering trust.
• As data scientists and financial institutions are held accountable for model mistakes, a necessary step will be to interpret and validate a model (and its training data) before it is deployed.
• Expectation: models will ultimately provide not only a prediction and performance metrics but also an interpretable account of a decision or indecision (e.g. self-driving cars, Alexa the personal assistant).

Model Explainability vs Model Interpretability
• Explainability, in contrast, is about what each and every aspect of a model represents (e.g. a node in a neural network) and its importance to the model's performance. (The term transparency is sometimes used instead of explainability.)
• Note: Christoph Molnar, in his book ‘Interpretable Machine Learning: A Guide for Making Black Box Models Explainable’, uses the terms interpretable and explainable interchangeably.

The Trade-Off Between Prediction Accuracy and Model Explainability
Sources: US Department of Defense's ‘Explainable AI’ and IIF report, Nov 2018, Explainability in Predictive Modeling.
Predictive accuracy: e.g. the proportion of correct predictions in the test (training) set.

Black box algorithm: Correlation v Causality
• Machine learning can play an integral role in tests for causality, but it pertains directly only to correlation.
• In causality tests, machine learning models can estimate a ‘counterfactual’ scenario.
• Machine learning requires expert business knowledge and insight, whether used in tests for correlation or causality.
• Commercially, the cost in time and resources of testing for causality is usually prohibitive.
• Key reference: Varian (2014), ‘Big Data: New Tricks for Econometrics’, Journal of Economic Perspectives.

Causality and Correlation
• Machine learning is principally about correlation and prediction; typically it has little to say directly about causality.
• Illustration of correlation vs causation:
  • If we analyse the historical data, for instance with extrapolating machine learning models, we find that the number of compliance officers deployed is strongly positively associated with rule violations.
  • But this doesn't (necessarily!) mean that assigning additional compliance officers causes more rule violations.

Is establishing causality unnecessary? Correlations often suffice.
• It is not necessary to understand why a mechanism works as it does: why is a customer loyal? why does an individual commit fraud?
• We might not know why an outcome arises, but it can still be highly valuable to know what happens next:
  • Amazon & Netflix: recommendation algorithms
  • Google Flu Trends: propagation of a virus
  • Electrocardiograms for premature baby and adult fatality predictions
  • Banking industry: machine learning-informed alert models
• Tests of causality are about understanding why a particular outcome takes place: why does a customer churn? why do sales figures increase?
• This can be useful for two main reasons:
1. To identify features for input to a machine learning model.
  • Machine learning builds on domain expertise: domain experts can suggest predictors, and machine learning can discern between these predictors and select useful functions of them for making predictions.
  • Machine learning can substitute, in significant part, for professional skills and years of experience. It can free up time for skilled personnel to engage with more challenging problems.
2. To estimate the causal impact of an intervention, which can be a highly pragmatic and commercially relevant data-related question. For instance:
  • Can the recruitment of compliance officers reduce rule violations?
  • Can an increased advertising budget increase sales?
• The case for an end to theory (i.e. subject experience and domain-area knowledge) is unpersuasive.

Performance Evaluation of Alert Models
A Manager's Guide to Alert Model Performance Evaluation
• This module focuses on binary classifiers, i.e. alert models.
• Our initial measures of accuracy are the training and test classification error rates.
• In the first instance, let's think of a good classifier as one which has a small test classification error rate.
• The bias-variance trade-off concept proves critical to selecting model flexibility in this setting.
• Finally, we turn to commercially sensitive performance measures, e.g. true positive and false positive rates as calculated from a confusion matrix.

Assessing Model Accuracy: Error Rates
Test (training) classification error rate: the proportion of incorrect predictions in the test (training) set.

Assessing Model Accuracy: Test Error Rates
• To quantify the accuracy of the estimate $\hat{f}$, we use the training classification error rate
$$\frac{1}{n}\sum_{i=1}^{n} I(y_i \neq \hat{y}_i),$$
where:
  • $\hat{y}_i$ is the predicted class label (using $\hat{f}$) for the $i$th observation in the training sample;
  • $y_i$ is the actual label observed in the data;
  • $I(y_i \neq \hat{y}_i)$ is an indicator variable that equals 1 if $y_i \neq \hat{y}_i$ and 0 if $y_i = \hat{y}_i$.
• The test classification error rate associated with a set of test observations of the form $(x_0, y_0)$ is given by $\mathrm{Ave}(I(y_0 \neq \hat{y}_0))$, where $\hat{y}_0$ is the predicted class label for the test observation with predictor $x_0$.

Illustrative classification algorithm: K-Nearest Neighbours (KNN)
• The KNN classifier estimates the conditional probability for class $j$ as the fraction of points in $N_0$ whose response values equal $j$, i.e. the fraction of observations in the ‘neighbourhood’ of a test observation $x_0$ with class $j$ outcome:
$$\Pr(Y = j \mid X = x_0) = \frac{1}{K}\sum_{i \in N_0} I(y_i = j),$$
where $N_0$ denotes the $K$ training points closest to $x_0$.
• The KNN algorithm then classifies the test observation as belonging to the most commonly occurring class.
Next slide: let's consider two classes of outcome, orange and blue circles.
Imagine that the axes (next slide) correspond to features (‘feature space’) and that there is a hypothetical point ‘x’ in this space to which we wish to ascribe a class of outcome. Let's use KNN with K = 3.

KNN Algorithm in action
The KNN approach, using K = 3, is illustrated in a simple situation with six blue observations and six orange observations. Left: a test observation at which a predicted class label is desired is shown as a black cross, x. The three closest points to the test observation are identified, and it is predicted that the test observation belongs to the most commonly occurring class, in this case blue. Right: the KNN decision boundary for this example is shown in black. The blue grid indicates the region in which a test observation will be assigned to the blue class, and the orange grid indicates the region in which it will be assigned to the orange class.

Performance of the KNN algorithm: in a simulation setting
• Let's examine the performance of the KNN algorithm, i.e. the training and test errors, as we vary model flexibility via K.
• The broken line (technically the so-called ‘Bayes decision boundary’) in feature space is analogous to the true model $f$; errors with respect to this boundary represent irreducible error.
A simulated data set consisting of 100 observations in each of two groups, indicated in blue and in orange. The purple dashed line represents the Bayes decision boundary. The orange background grid indicates the region in which a test observation will be assigned to the orange class, and the blue background grid indicates the region in which a test observation will be assigned to the blue class. The Bayes error rate is analogous to the irreducible error. The Bayes classifier is an optimal classifier, which is attainable here only because we know the true data-generating process.

The Classification Setting
The black curve indicates the KNN decision boundary on the data from Figure 2.13, using K = 10.
The Bayes decision boundary is shown as a purple dashed line. The KNN and Bayes decision boundaries are very similar.

The Classification Setting
A comparison of the KNN decision boundaries (solid black curves) obtained using K = 1 and K = 100 on the data from Figure 2.13. With K = 1, the decision boundary is overly flexible (low bias, high variance), while with K = 100 it is not sufficiently flexible (high bias, low variance). The Bayes decision boundary is shown as a purple dashed line.

The Classification Setting: A “U” shape in the test error curve
The KNN training error rate (blue, 200 observations) and test error rate (orange, 5,000 observations) on the data from Figure 2.13, as the level of flexibility (assessed using 1/K) increases, or equivalently as the number of neighbours K decreases. The black dashed line indicates the Bayes error rate. The jumpiness of the curves is due to the small size of the training data set.
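The KNN classifier and the training and test classification error rates discussed in this lecture can be sketched in plain Python as follows. This is an illustrative implementation, not the one used to produce the figures: the two-class Gaussian simulation, the class centres, the sample sizes and the function names are assumptions, and Euclidean distance is assumed as the measure of ‘closeness’.

```python
import math
import random
from collections import Counter


def knn_predict(train, x0, k):
    """Classify x0 by majority vote among its k nearest training points (Euclidean distance)."""
    # N0: the k training observations closest to x0
    neighbours = sorted(train, key=lambda obs: math.dist(obs[0], x0))[:k]
    votes = Counter(label for _, label in neighbours)
    # votes[j] / k estimates Pr(Y = j | X = x0); predict the most common class
    return votes.most_common(1)[0][0]


def error_rate(data, train, k):
    """Classification error rate: Ave(I(y != y_hat)) over the observations in `data`."""
    wrong = sum(1 for x, y in data if knn_predict(train, x, k) != y)
    return wrong / len(data)


def simulate(n_per_class, seed):
    """Hypothetical two-class simulation: 'blue' and 'orange' points around different centres."""
    rng = random.Random(seed)
    data = []
    for label, centre in (("blue", (0.0, 0.0)), ("orange", (1.0, 1.0))):
        for _ in range(n_per_class):
            x = (rng.gauss(centre[0], 1.0), rng.gauss(centre[1], 1.0))
            data.append((x, label))
    return data


if __name__ == "__main__":
    train = simulate(100, seed=1)  # 200 training observations
    test = simulate(500, seed=2)   # 1,000 test observations
    for k in (1, 10, 100):
        # Training error: each training point is scored using the training set itself
        print(k, error_rate(train, train, k), error_rate(test, train, k))
```

Because each training point is its own nearest neighbour, the training error rate at K = 1 is zero, which illustrates why training error is a poor guide to choosing flexibility. One would typically expect the test error rate to be lowest at some intermediate K, though the exact pattern depends on the simulated data.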