su(n) Lie Algebra: Theory and Representations


The su(n) Lie Algebra

Haonan Liu

December 2, 2022

Contents

1 The SU(n) Lie group
1.1 The SU(n) group
1.2 The SU(n) Lie group
2 The su(n) Lie algebra
2.1 Lie algebra
2.2 Basis, structure constants, and generators
2.2.1 Basis and generators of su(n)
2.2.2 Structure constants of su(n)
2.3 States and operators
2.3.1 General definition
2.3.2 Operators in Lie algebra
2.4 The Lie correspondence
3 Representations of su(n)
3.1 Representation theory of Lie algebras
3.2 Examples of reps
3.2.1 Trivial/zero rep
3.2.2 Defining/natural/standard/tautological rep
3.2.3 Adjoint/regular rep
3.3 Morphism between reps
3.3.1 Intertwining operator
3.3.2 Equivalent reps
3.3.3 Reps of su(n) and sl(n, C)
3.4 Operations on reps
4 Structure theory of sl(n, C) and its reps
4.1 Simple and semisimple Lie algebras
4.1.1 Ideals and commutativity
4.1.2 Simplicity and semisimplicity
4.1.3 Levi decomposition
4.2 Reducible and irreducible reps
4.2.1 Reducibility
4.2.2 Complete reducibility
4.3 Complete reducibility and symmetry
4.3.1 Schur's lemma
4.3.2 Hamiltonian & first definition of symmetry
4.3.3 Unitary rep & the second definition of symmetry
4.3.4 Multiplet
4.3.5 Characters
4.4 Reps of sl(n, C)
4.4.1 Reps of sl(2, C)
4.4.2 Cartan subalgebra
4.4.3 Root decomposition
4.4.4 Cartan-Weyl basis
4.4.5 Root system
4.4.6 Classification of semisimple Lie algebras
4.4.7 Weight decomposition
4.4.8 Reps of sl(n, C)
4.4.9 Casimir operators
4.5 Direct product to direct sum
4.5.1 Symmetric and antisymmetric tensors
4.5.2 Direct product into direct sum
4.5.3 The Clebsch-Gordan coefficients (CG coefficients)
4.6 Summary of properties of sl(n, C) and su(n)
5 The su(2) Lie Algebra and its reps
5.1 Generators and structure constants
5.2 The Lie correspondence between SU(2) and su(2)
5.2.1 From SU(2) to su(2)
5.2.2 From su(2) to SU(2)
5.3 Reps
5.3.1 Defining rep
5.3.2 Adjoint rep
5.3.3 Irrep
5.3.4 CG coefficients
5.4 Cartan subalgebra
5.4.1 Casimir operators
5.4.2 Fundamental rep
6 The su(3) Lie algebra and its reps
6.1 Generators and structure constants
6.2 Reps
6.2.1 Fundamental and antifundamental reps
6.2.2 Tensor product rep
6.2.3 Multiplets
6.2.4 Cartan subalgebra
7 The su(4) Lie algebra and its reps
8 Others
9 References

1 The SU(n) Lie group

1.1 The SU(n) group¹

Definition [U(n) group]: The unitary group of degree $n$, U(n), is the group of complex linear automorphisms of an $n$-dimensional complex vector space $\mathbb{C}^n$ that preserve a positive definite Hermitian inner product $H$ on $\mathbb{C}^n$.

In the definition of U(n) above, if the Hermitian form $H$ is "standard", meaning it is defined by the identity matrix $\mathbb{1}$ so that $H(v, w) = v^\dagger \cdot \mathbb{1} \cdot w$ (which is usually the case), then U(n) is just the group of $n \times n$ unitary matrices $U$, i.e., $U^\dagger = U^{-1}$. Taking the determinant of $U^\dagger U = \mathbb{1}$ gives $|\det(U)|^2 = 1$, so $|\det(U)| = 1$ for any $U \in \mathrm{U}(n)$.

Definition [SU(n) group]: The special unitary group SU(n) is the subgroup of U(n) consisting of the matrices with determinant 1.

It is easy to see that SU(m) is a subgroup of SU(n) for any $m < n$, via the block embedding $U \mapsto \mathrm{diag}(U, \mathbb{1}_{n-m})$. Since SU(1) contains only the $1 \times 1$ identity matrix, it is trivial and not interesting. Thus we always take $n > 1$ for SU(n) in this work.
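A quick numerical illustration of the two definitions, written as a sketch that assumes NumPy. The helper names random_unitary, to_special_unitary, and embed are our own and do not come from the notes. The code builds a random U(4) matrix from a QR decomposition, rescales it into SU(4), and checks the block embedding of SU(2) into SU(4). Each print should show True.

```python
import numpy as np

def random_unitary(n, rng):
    """Random n x n unitary matrix from the QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    q, r = np.linalg.qr(z)
    # Rescale column j of Q by the phase of R_jj (standard trick giving Haar measure on U(n)).
    d = np.diag(r)
    return q * (d / np.abs(d))

def to_special_unitary(u):
    """Divide out an n-th root of det(u); the result is unitary with determinant 1."""
    n = u.shape[0]
    return u / np.linalg.det(u) ** (1.0 / n)

def embed(u, n):
    """Block embedding U -> diag(U, 1_{n-m}) of SU(m) into SU(n), m < n."""
    m = u.shape[0]
    out = np.eye(n, dtype=complex)
    out[:m, :m] = u
    return out

rng = np.random.default_rng(0)
u = random_unitary(4, rng)
s = to_special_unitary(u)
print(np.allclose(u.conj().T @ u, np.eye(4)))        # U^dagger U = 1
print(np.isclose(abs(np.linalg.det(u)), 1.0))        # |det U| = 1 on U(n)
print(np.allclose(s.conj().T @ s, np.eye(4)), np.isclose(np.linalg.det(s), 1.0))  # s in SU(4)

a = to_special_unitary(random_unitary(2, rng))
b = to_special_unitary(random_unitary(2, rng))
# The embedding respects products and determinants, so SU(2) sits inside SU(4) as a subgroup.
print(np.allclose(embed(a @ b, 4), embed(a, 4) @ embed(b, 4)),
      np.isclose(np.linalg.det(embed(a, 4)), 1.0))
```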

1.2 The SU(n) Lie group

The SU(n) group is not just a group but also a Lie group.

Definition [Lie group]: A Lie group is a set endowed simultaneously with the compatible structures of a group and a $C^\infty$ (infinitely differentiable) manifold, such that both the multiplication and inverse operations of the group are smooth maps. A morphism between two Lie groups is a map that is both differentiable and a group homomorphism.

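We have already defined SU(n) as a group. It is also a smooth manifold: it is a closed subgroup of $\mathrm{GL}(n, \mathbb{C})$, cut out by the polynomial conditions $U^\dagger U = \mathbb{1}$ and $\det U = 1$, and by the closed-subgroup theorem every closed subgroup of a Lie group is an embedded submanifold. Multiplication is smooth because the matrix entries of the product $U_1 U_2$ are polynomials in the entries of $U_1, U_2 \in \mathrm{SU}(n)$, and inversion is smooth by Cramer's rule (or simply because $U^{-1} = U^\dagger$). Therefore SU(n) is a Lie group.² One can go further and show that the SU(n) Lie group is compact, simply connected, and nonabelian.³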

The benefit of understanding the Lie group nature of SU(n) becomes clear once we introduce the one-to-one correspondence between Lie groups and Lie algebras in the next section. With this correspondence, most of the properties of SU(n) that we will use for our physical problems can be derived from the structure theory of the su(n) or sl(n, C) Lie algebras.

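Some useful properties of SU(n):

1. The matrix elements of an $n \times n$ matrix $U \in \mathrm{SU}(n)$ are analytic functions of $d$ real parameters $x_1, x_2, \cdots, x_d$ (locally, in a coordinate chart), with $d = n^2 - 1$. This follows from the fact that SU(n) is a smooth manifold and that a unitary matrix with determinant 1 has $n^2 - 1$ real degrees of freedom: a complex $n \times n$ matrix has $2n^2$ real parameters, the condition $U^\dagger U = \mathbb{1}$ imposes $n^2$ real constraints, and $\det U = 1$ removes one more real parameter (the overall phase). Therefore we also call SU(n) a real Lie group of dimension $n^2 - 1$.

2. More will be added as we complete this note.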

2 The su(n) Lie algebra

2.1 Lie algebra

A Lie algebra⁴ is defined as follows.

¹ All the ??? notations in the work need to be settled. All the "it can be shown" statements need to be shown or cited.

² Generally, SU(n) ⊆ U(n) ⊆ GL(n, C) are all Lie groups and form smooth embeddings.

³ These properties are somewhat "nice" properties in representation theory.

⁴ Sometimes called a tangent space in the literature, because the Lie algebra of a Lie group can be identified with the tangent space of the group manifold at the identity.

Definition [Lie algebra]: A Lie algebra $\mathfrak{g}$ is a vector space together with a skew-symmetric bilinear map⁵ (called the Lie bracket)

$$ [\,\cdot\,, \cdot\,] : \mathfrak{g} \times \mathfrak{g} \to \mathfrak{g} \tag{1} $$

satisfying the Jacobi identity

$$ [a, [b, c]] + [b, [c, a]] + [c, [a, b]] = 0 \tag{2} $$

for all $a, b, c \in \mathfrak{g}$.
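As a sanity check of the definition, the matrix commutator used as the bracket for the matrix Lie algebras in this section is skew-symmetric, bilinear, and satisfies the Jacobi identity (2) up to rounding. This is a sketch that assumes NumPy and random 3×3 complex matrices. The names bracket and jacobi_residual are our own helpers. A numerical check is of course not a proof.

```python
import numpy as np

def bracket(a, b):
    """Matrix commutator [a, b] = ab - ba, used as the Lie bracket of matrix Lie algebras."""
    return a @ b - b @ a

def jacobi_residual(a, b, c):
    """Left-hand side of the Jacobi identity, Eq. (2); it should vanish up to rounding."""
    return bracket(a, bracket(b, c)) + bracket(b, bracket(c, a)) + bracket(c, bracket(a, b))

rng = np.random.default_rng(1)
a, b, c = (rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3)) for _ in range(3))

print(np.allclose(bracket(a, b), -bracket(b, a)))    # skew-symmetry
print(np.allclose(jacobi_residual(a, b, c), 0))      # Jacobi identity
# Bilinearity in the first slot (the second slot follows from skew-symmetry).
alpha, beta = 2.0 - 1.0j, 0.5
print(np.allclose(bracket(alpha * a + beta * b, c), alpha * bracket(a, c) + beta * bracket(b, c)))
```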

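Note that, in this work, whenever we mention a Lie algebra, we always assume that it is finite-dimensional over a field $\mathbb{F}$ that is either $\mathbb{C}$, which is algebraically closed⁶, or $\mathbb{R}$, which is not; both fields have characteristic⁷ 0. This holds unless explicitly noted. The algebraic closedness of $\mathbb{C}$ is one reason why the complex Lie algebra sl(n, C) will play a central role. We can then define the Lie algebra su(n) as follows.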

Definition [u(n), sl(n, C), and su(n) Lie algebras]: Let gl(n, C) be the Lie algebra consisting of all $n \times n$ complex matrices. The u(n) Lie algebra consists of all anti-Hermitian $n \times n$ complex matrices, i.e.,

$$ \mathfrak{u}(n) = \{ U \in \mathfrak{gl}(n, \mathbb{C}) \mid U^\dagger = -U \}. $$

The sl(n, C) Lie algebra consists of all traceless $n \times n$ complex matrices, i.e.,

$$ \mathfrak{sl}(n, \mathbb{C}) = \{ U \in \mathfrak{gl}(n, \mathbb{C}) \mid \mathrm{Tr}[U] = 0 \}. $$

The su(n) Lie algebra consists of all traceless anti-Hermitian $n \times n$ matrices, i.e.,

$$ \mathfrak{su}(n) = \{ U \in \mathfrak{gl}(n, \mathbb{C}) \mid \mathrm{Tr}[U] = 0 \text{ and } U^\dagger = -U \}. $$

For all these algebras, the skew-symmetric bilinear map is the commutator $[A, B] = AB - BA$.⁸
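The defining conditions are easy to test numerically. The following NumPy sketch uses hypothetical helpers is_u, is_sl, is_su, and random_su. It draws random elements of su(3) and confirms that their commutator and their real linear combinations stay in su(3). It also shows that multiplying by $i$ leads out of su(3), which is consistent with su(n) being a real Lie algebra.

```python
import numpy as np

def is_u(m, tol=1e-12):
    """Anti-Hermitian test: m^dagger = -m."""
    return np.allclose(m.conj().T, -m, atol=tol)

def is_sl(m, tol=1e-12):
    """Traceless test."""
    return abs(np.trace(m)) < tol

def is_su(m, tol=1e-12):
    return is_u(m, tol) and is_sl(m, tol)

def random_su(n, rng):
    """Random element of su(n): anti-Hermitian part of a Gaussian matrix with its trace removed."""
    z = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    a = (z - z.conj().T) / 2
    return a - np.trace(a) / n * np.eye(n)   # trace of a is imaginary, so this keeps a anti-Hermitian

rng = np.random.default_rng(2)
x, y = random_su(3, rng), random_su(3, rng)
comm = x @ y - y @ x
print(is_su(x), is_su(y), is_su(comm))   # closure under the commutator
print(is_su(2.5 * x - 0.3 * y))          # closure under real linear combinations
print(is_su(1j * x))                     # i*x is Hermitian, so it is not in su(3)
```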

Some comments on these algebras:

1. Notation. In this work, we write

$$ \mathfrak{gl}(n, \mathbb{C}) = \mathfrak{gl}(\mathbb{C}^n). \tag{3} $$

More generally, we write gl(V) for the set of endomorphisms of a vector space V, i.e., all linear maps from V to V (matrices, once a basis is chosen), not only the invertible ones.

2. Both u(n) and su(n) are real Lie algebras. "Real" means that the algebra is a vector space over the field $\mathbb{R}$, although the matrices themselves can have complex entries. On the other hand, sl(n, C) is a complex Lie algebra.

3. The Lie algebra su(n) is a subalgebra of u(n). Here, a subalgebra $\mathfrak{g}'$ of a Lie algebra $\mathfrak{g}$ is a subspace of $\mathfrak{g}$ that is closed under the bracket, so that its elements form a Lie algebra with the same bracket over the same field as $\mathfrak{g}$.

4. Complexification of a real Lie algebra. Every real Lie algebra $\mathfrak{g}$ has a complexification $\mathfrak{g} \otimes \mathbb{C}$, obtained by keeping the basis elements and replacing the field $\mathbb{R}$ of coefficients by $\mathbb{C}$. For a real Lie algebra of complex matrices, this complexification can be realized inside gl(n, C) as $\mathfrak{g} \oplus i\mathfrak{g}$, provided the basis elements stay linearly independent over $\mathbb{C}$. Both u(n) and su(n) satisfy this condition and can be complexified in this way. Specifically, we define

$$ \mathfrak{su}_{\mathbb{C}}(n) \equiv \mathfrak{su}(n) \otimes \mathbb{C} = \mathfrak{su}(n) \oplus i\,\mathfrak{su}(n). \tag{4} $$

Here $i\,\mathfrak{su}(n)$ is the real vector space of traceless Hermitian matrices. Since every traceless complex matrix $M$ can be written uniquely as the sum $M = \tfrac{1}{2}(M - M^\dagger) + \tfrac{1}{2}(M + M^\dagger)$ of a traceless anti-Hermitian and a traceless Hermitian matrix, we have

$$ \mathfrak{su}_{\mathbb{C}}(n) = \mathfrak{sl}(n, \mathbb{C}), \tag{5} $$

i.e., sl(n, C) is the complexification of su(n).

It turns out that sl(n, C) will be very important in the theory. In fact, many of the properties of su(n) will be derived from those of sl(n, C).

⁵ "Skew-symmetric", or antisymmetric, means $[X, Y] = -[Y, X]$.

⁶ A field $\mathbb{F}$ is algebraically closed if every nonconstant polynomial with coefficients in $\mathbb{F}$ has a root in $\mathbb{F}$.

⁷ A field has characteristic 0 if no finite sum $1 + 1 + \cdots + 1$ of its multiplicative identity equals its additive identity 0.

⁸ The definition of u(n), sl(n, C), and su(n) above can also be phrased using the notion of exponential maps: the anti-Hermitian and traceless nature of the matrices can be derived from the rules of exponential maps and the definition of the SU(n) Lie group. However, following the logic of this work, we define SU(n) and su(n) separately first, and introduce the Lie correspondence at the end together with the exponential map.

5. We mention Ado's theorem here.

Theorem 1 [Ado]: Any finite-dimensional Lie algebra over $\mathbb{F}$ (of characteristic 0) is isomorphic to a Lie subalgebra of $\mathfrak{gl}(n, \mathbb{F})$ for some $n$.

Ado's theorem tells us that, without loss of generality, Lie algebras can be studied with their elements realized as matrices.
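Eq. (5) can be made concrete. A traceless complex matrix $M$ splits as $M = A + iB$, with $A = (M - M^\dagger)/2$ and $B = (M + M^\dagger)/(2i)$, and both $A$ and $B$ lie in su(n). A NumPy sketch for $n = 3$ follows. The names split_sl and in_su are hypothetical helper names.

```python
import numpy as np

def split_sl(m):
    """Write a traceless complex matrix m as a + 1j*b with a, b in su(n), cf. Eq. (5)."""
    mh = m.conj().T
    a = (m - mh) / 2       # anti-Hermitian part
    b = (m + mh) / 2j      # Hermitian part divided by i, hence anti-Hermitian
    return a, b

def in_su(x):
    return np.allclose(x.conj().T, -x) and np.isclose(np.trace(x), 0)

rng = np.random.default_rng(3)
m = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
m -= np.trace(m) / 3 * np.eye(3)      # project onto sl(3, C)

a, b = split_sl(m)
print(in_su(a), in_su(b))             # both parts lie in su(3)
print(np.allclose(a + 1j * b, m))     # and together they reconstruct m
```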

The su(n) Lie algebra is finite-dimensional. Another example of a finite-dimensional Lie algebra is the Heisenberg algebra, which is spanned over $\mathbb{R}$ by the constant function 1 and the coordinate functions $q$ and $p$ on phase space, with the Poisson bracket as its Lie bracket. The Heisenberg algebra is useful in physics. See Miller or Woit for more.
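Ado's theorem also covers the Heisenberg algebra. One standard matrix realization, which is an illustration and is not taken from the source text, uses strictly upper-triangular 3×3 matrices. Set $Q = E_{12}$, $P = E_{23}$, and $Z = E_{13}$, where $E_{ij}$ is a matrix unit. The commutator then gives $[Q, P] = Z$, and $Z$ commutes with everything. These relations mirror the bracket of $q$ and $p$ equalling the central constant function 1. The names E, Q, P, and Z are our own, and the indices are 0-based in the code.

```python
import numpy as np

def E(i, j, n=3):
    """Matrix unit with a single 1 in row i, column j (0-based)."""
    m = np.zeros((n, n))
    m[i, j] = 1.0
    return m

def comm(a, b):
    return a @ b - b @ a

Q, P, Z = E(0, 1), E(1, 2), E(0, 2)   # stand-ins for q, p, and the constant 1

print(np.array_equal(comm(Q, P), Z))                                       # [Q, P] = Z
print(all(np.array_equal(comm(Z, x), np.zeros((3, 3))) for x in (Q, P)))  # Z is central
```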

2.2 Basis, structure constants, and generators

We first discuss the properties of general Lie algebras. For a Lie algebra of dimension $d$ over a field $\mathbb{F}$, we can always find a basis of $d$ elements, which we label $e_j$, $j = 1, 2, \cdots, d$. The Lie algebra is then denoted as

$$ \mathrm{span}_{\mathbb{F}}\{e_1, e_2, \cdots, e_d\} \equiv \{e_1, e_2, \cdots, e_d\}_{\mathbb{F}}. \tag{6} $$

Given a nonsingular matrix $R$, a new basis can be built as $e'_k = R_{kj} e_j$. Here, for simplicity, we use Einstein's summation convention and do not distinguish between upper and lower tensor indices unless otherwise noted. From now on, we will also use the basis notation (6) to label a Lie algebra. If the field $\mathbb{F}$ is clear from context ($\mathbb{R}$ for su(n), $\mathbb{C}$ for sl(n, C), etc.), we drop it as well.

Applying the skew-symmetric bilinear map to two basis elements yields another element of the Lie algebra, which can be expanded in the basis, i.e.,

$$ [e_j, e_k] = f_{jkl}\, e_l. \tag{7} $$

Here, the coefficients $f_{jkl}$ are called the structure constants relative to the chosen basis. Several properties of the structure constants are:

1. Given a basis, the structure constants determine the Lie algebra completely: by bilinearity, they fix the bracket of any two elements.

2. If the structure constants are all real, then the real span of the basis is closed under the bracket and forms a real Lie algebra.

3. Under a change of basis $e'_k = R_{kj} e_j$, the structure constants transform as $f'_{j'k'l'} = R_{j'j} R_{k'k} f_{jkl} (R^{-1})_{ll'}$.

4. The structure constants are antisymmetric in the first two indices, $f_{jkl} = -f_{kjl}$, as a result of the skew-symmetry of the Lie bracket.
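Property 3 can be checked numerically. The NumPy sketch below uses a hypothetical helper, structure_constants_lstsq, that extracts $f_{jkl}$ for any basis of matrices by solving Eq. (7) with least squares. The test case is the basis $e_j = -\tfrac{i}{2}\sigma_j$ of su(2) built from the Pauli matrices, for which $f_{jkl} = \epsilon_{jkl}$. The sketch then compares the constants of a randomly transformed basis $e'_k = R_{kj} e_j$ with the transformation rule.

```python
import numpy as np

def structure_constants_lstsq(basis):
    """Solve [e_j, e_k] = f_jkl e_l (Eq. (7)) for f by least squares on flattened matrices."""
    d = len(basis)
    mat = np.stack([e.ravel() for e in basis], axis=1)   # columns = flattened basis elements
    f = np.zeros((d, d, d), dtype=complex)
    for j in range(d):
        for k in range(d):
            comm = basis[j] @ basis[k] - basis[k] @ basis[j]
            coeffs, *_ = np.linalg.lstsq(mat, comm.ravel(), rcond=None)
            f[j, k] = coeffs
    return f

sigma = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]]),
         np.array([[1, 0], [0, -1]], dtype=complex)]
e = [-0.5j * s for s in sigma]                 # basis of su(2), cf. Eq. (15)
f = structure_constants_lstsq(e)

rng = np.random.default_rng(4)
R = rng.normal(size=(3, 3))                    # generic, hence nonsingular, real matrix
e_new = [sum(R[k, j] * e[j] for j in range(3)) for k in range(3)]   # e'_k = R_kj e_j
f_new = structure_constants_lstsq(e_new)

# Property 3: f'_{j'k'l'} = R_{j'j} R_{k'k} f_{jkl} (R^{-1})_{l l'}
f_rule = np.einsum("aj,bk,jkl,lc->abc", R, R, f, np.linalg.inv(R))
print(np.allclose(f_new, f_rule))
```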

We next focus on the su(n) algebra.

2.2.1 Basis and generators of su(n)

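By definition, the su(n) algebra consists of all the traceless anti-Hermitian $n \times n$ matrices. An anti-Hermitian matrix has $n$ purely imaginary diagonal entries and $n(n-1)/2$ independent complex entries above the diagonal, i.e., $n + n(n-1) = n^2$ real degrees of freedom, and the traceless condition removes one of them. Hence su(n), as a real vector space, has the finite dimension

$$ d = n^2 - 1. \tag{8} $$

Therefore, we can find a basis of $n^2 - 1$ elements for su(n), $n \geq 2$. The most common choice of basis in the literature can be constructed in the following way.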

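We first define a set $\{\lambda_i\}$ consisting of the following traceless Hermitian matrices (blank entries are zero): the $n - 1$ diagonal matrices

$$
\begin{pmatrix} 1 & & & \\ & -1 & & \\ & & 0 & \\ & & & \ddots \end{pmatrix},\quad
\frac{1}{\sqrt{3}}\begin{pmatrix} 1 & & & & \\ & 1 & & & \\ & & -2 & & \\ & & & 0 & \\ & & & & \ddots \end{pmatrix},\quad
\cdots,\quad
\sqrt{\frac{2}{n(n-1)}}\begin{pmatrix} 1 & & & \\ & \ddots & & \\ & & 1 & \\ & & & -(n-1) \end{pmatrix};
\tag{9}
$$

the $n(n-1)/2$ real symmetric matrices

$$
\begin{pmatrix} 0 & 1 & & \\ 1 & 0 & & \\ & & 0 & \\ & & & \ddots \end{pmatrix},\quad
\begin{pmatrix} 0 & 0 & 1 & \\ 0 & 0 & 0 & \\ 1 & 0 & 0 & \\ & & & \ddots \end{pmatrix},\quad
\cdots,\quad
\begin{pmatrix} \ddots & & \\ & 0 & 1 \\ & 1 & 0 \end{pmatrix};
\tag{10}
$$

and the $n(n-1)/2$ imaginary antisymmetric matrices

$$
\begin{pmatrix} 0 & -i & & \\ i & 0 & & \\ & & 0 & \\ & & & \ddots \end{pmatrix},\quad
\begin{pmatrix} 0 & 0 & -i & \\ 0 & 0 & 0 & \\ i & 0 & 0 & \\ & & & \ddots \end{pmatrix},\quad
\cdots,\quad
\begin{pmatrix} \ddots & & \\ & 0 & -i \\ & i & 0 \end{pmatrix}.
\tag{11}
$$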

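In more concise form, the set $\{\lambda_i\}$ consists of the following three classes of $n \times n$ matrices:

$$
\big[\lambda^{(1)}_k\big]_{\mu\nu} = \sqrt{\frac{2}{k(k+1)}}\ \delta_{\mu\nu} \times
\begin{cases} 1 & \text{for } \mu \leq k, \\ -k & \text{for } \mu = k+1, \\ 0 & \text{for } \mu > k+1, \end{cases}
\qquad k = 1, 2, \cdots, n-1;
\tag{12}
$$

$$
\big[\lambda^{(2)}_{jk}\big]_{\mu\nu} = \delta_{k\mu}\delta_{j\nu} + \delta_{k\nu}\delta_{j\mu}, \qquad j, k = 1, 2, \cdots, n, \text{ and } j < k;
\tag{13}
$$

$$
\big[\lambda^{(3)}_{jk}\big]_{\mu\nu} = -i\left(\delta_{j\mu}\delta_{k\nu} - \delta_{j\nu}\delta_{k\mu}\right), \qquad j, k = 1, 2, \cdots, n, \text{ and } j < k.
\tag{14}
$$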

Here the superscripts (1), (2), and (3) label the three classes. We can check that the total number of $\lambda_i$ matrices is $(n - 1) + \frac{n(n-1)}{2} + \frac{n(n-1)}{2} = n^2 - 1 = d$.

The matrices $\lambda_i$ are called the generators of su(n).⁹ A basis of su(n) is then given by the set of anti-Hermitian traceless matrices $\{e_j\}$ defined from $\{\lambda_j\}$ as

$$ e_j = -\frac{i}{2}\lambda_j, \qquad j = 1, 2, \cdots, d. \tag{15} $$

Multiplication by $i$ turns the Hermitian generators into anti-Hermitian basis elements. The coefficient $-\frac{1}{2}$ is chosen for historical reasons. As mentioned above, our choice of generators and basis is not unique, but it has proven convenient in the literature.
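The three classes translate directly into code. The NumPy sketch below uses a function name of our own, su_n_generators. It builds the $\lambda_i$ in the order of Eqs. (12)–(14) and forms $e_j$ via Eq. (15). It then checks the count $n^2 - 1$, hermiticity, tracelessness, and the normalization $\mathrm{Tr}[\lambda_j\lambda_k] = 2\delta_{jk}$ that appears as Eq. (17) in the next subsection. For $n = 2$ it returns $\sigma_3$, $\sigma_1$, and $\sigma_2$, in that order.

```python
import numpy as np

def su_n_generators(n):
    """Hermitian generators lambda_i of su(n), ordered as in Eqs. (12)-(14)."""
    gens = []
    for k in range(1, n):                                  # Eq. (12): diagonal class
        diag = np.array([1.0] * k + [-float(k)] + [0.0] * (n - k - 1))
        gens.append(np.sqrt(2.0 / (k * (k + 1))) * np.diag(diag).astype(complex))
    for j in range(n):                                     # Eq. (13): symmetric class
        for k in range(j + 1, n):
            m = np.zeros((n, n), dtype=complex)
            m[j, k] = m[k, j] = 1.0
            gens.append(m)
    for j in range(n):                                     # Eq. (14): antisymmetric class
        for k in range(j + 1, n):
            m = np.zeros((n, n), dtype=complex)
            m[j, k], m[k, j] = -1j, 1j
            gens.append(m)
    return gens

n = 3
lam = su_n_generators(n)
e = [-0.5j * g for g in lam]                               # basis of su(n), Eq. (15)

print(len(lam) == n**2 - 1)                                # d = n^2 - 1
print(all(np.allclose(g, g.conj().T) for g in lam))        # Hermitian generators
print(all(abs(np.trace(g)) < 1e-12 for g in lam))          # traceless
print(all(np.allclose(x.conj().T, -x) for x in e))         # e_j anti-Hermitian
gram = np.array([[np.trace(a @ b) for b in lam] for a in lam])
print(np.allclose(gram, 2 * np.eye(n**2 - 1)))             # Tr[lambda_j lambda_k] = 2 delta_jk
```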

⁹ Note that, as for the basis, the choice of generators is not unique. Our choice is only one of the fundamental representations??? of su(n).

2.2.2 Structure constants of su(n)

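By the definition of the structure constants in Eq. (7), using Eq. (15) (which gives $-\frac{1}{4}[\lambda_j, \lambda_k] = -\frac{i}{2} f_{jkl}\lambda_l$), we have

$$ [\lambda_j, \lambda_k] = 2i f_{jkl}\, \lambda_l. \tag{16} $$

With the choice of generators given by Eqs. (9)–(11) or Eqs. (12)–(14), we get a convenient normalization condition for the generators $\lambda_i$, i.e.,¹⁰

$$ \mathrm{Tr}[\lambda_j \lambda_k] = 2\delta_{jk}. \tag{17} $$

Now, using Eqs. (16) and (17) together, we get

$$ \mathrm{Tr}\big[[\lambda_j, \lambda_k]\lambda_l\big] = 2i f_{jkm}\,\mathrm{Tr}[\lambda_m \lambda_l] = 2i f_{jkm}\, 2\delta_{lm} = 4i f_{jkl}, \tag{18} $$

which gives the structure constants as

$$ f_{jkl} = \frac{1}{4i}\,\mathrm{Tr}\big[[\lambda_j, \lambda_k]\lambda_l\big]. \tag{19} $$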

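On the other hand, using the cyclic property of the trace, $\mathrm{Tr}[AB] = \mathrm{Tr}[BA]$, in Eq. (18), we get

$$ 4i f_{jkl} = \mathrm{Tr}\big[[\lambda_j, \lambda_k]\lambda_l\big] = \mathrm{Tr}[\lambda_j\lambda_k\lambda_l - \lambda_k\lambda_j\lambda_l] = -\mathrm{Tr}[\lambda_j\lambda_l\lambda_k - \lambda_l\lambda_j\lambda_k] = -\mathrm{Tr}\big[[\lambda_j, \lambda_l]\lambda_k\big] = -4i f_{jlk}. \tag{20} $$

Similarly, we can prove that every odd permutation of the indices of $f_{jkl}$ changes its sign.¹¹ In other words, $f_{jkl}$ is completely antisymmetric in all indices. Therefore all structure constants with two or more equal indices vanish. Given the complete antisymmetry, finding all the nonvanishing structure constants requires only

$$ \binom{n^2 - 1}{3} $$

independent calculations, one for each set of three distinct indices $j < k < l$.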
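Eq. (19) gives a direct recipe for computing the structure constants. The NumPy sketch below uses our own helper names, su_n_generators and structure_constants, and its generator code repeats Eqs. (12)–(14). It computes $f_{jkl}$ for su(3), verifies Eq. (16), and checks the complete antisymmetry. For su(2), with the generators ordered $\sigma_3, \sigma_1, \sigma_2$, it gives $f_{123} = 1$, i.e., $f_{jkl} = \epsilon_{jkl}$.

```python
import numpy as np

def su_n_generators(n):
    """Hermitian generators lambda_i of su(n), ordered as in Eqs. (12)-(14)."""
    gens = []
    for k in range(1, n):
        diag = np.array([1.0] * k + [-float(k)] + [0.0] * (n - k - 1))
        gens.append(np.sqrt(2.0 / (k * (k + 1))) * np.diag(diag).astype(complex))
    for j in range(n):
        for k in range(j + 1, n):
            m = np.zeros((n, n), dtype=complex)
            m[j, k] = m[k, j] = 1.0
            gens.append(m)
    for j in range(n):
        for k in range(j + 1, n):
            m = np.zeros((n, n), dtype=complex)
            m[j, k], m[k, j] = -1j, 1j
            gens.append(m)
    return gens

def structure_constants(lam):
    """f_jkl = Tr([lambda_j, lambda_k] lambda_l) / (4i), Eq. (19)."""
    d = len(lam)
    f = np.zeros((d, d, d))
    for j in range(d):
        for k in range(d):
            comm = lam[j] @ lam[k] - lam[k] @ lam[j]
            for l in range(d):
                f[j, k, l] = (np.trace(comm @ lam[l]) / 4j).real
    return f

lam = su_n_generators(3)
f = structure_constants(lam)
d = len(lam)

# Eq. (16): [lambda_j, lambda_k] = 2i f_jkl lambda_l
ok16 = all(np.allclose(lam[j] @ lam[k] - lam[k] @ lam[j],
                       2j * sum(f[j, k, l] * lam[l] for l in range(d)))
           for j in range(d) for k in range(d))
# Complete antisymmetry: swapping any two indices flips the sign.
ok_anti = (np.allclose(f, -f.transpose(1, 0, 2)) and np.allclose(f, -f.transpose(0, 2, 1))
           and np.allclose(f, -f.transpose(2, 1, 0)))
print(ok16, ok_anti)
print(structure_constants(su_n_generators(2))[0, 1, 2])   # f_123 for su(2)
```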

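For future use, we also record the anticommutation relations for the generators $\lambda_i$ of su(n):

$$ [\lambda_j, \lambda_k]_+ = \frac{4}{n}\,\delta_{jk}\,\mathbb{1}_{n\times n} + 2 f^{+}_{jkl}\,\lambda_l, \tag{21} $$

where

$$ f^{+}_{jkl} = \frac{1}{4}\,\mathrm{Tr}\big[[\lambda_j, \lambda_k]_+ \lambda_l\big]. \tag{22} $$

These follow because the identity and the $\lambda_l$ together span all $n \times n$ complex matrices: taking the trace of Eq. (21) and using Eq. (17) fixes the coefficient $\frac{4}{n}\delta_{jk}$, and multiplying by $\lambda_m$ before taking the trace gives Eq. (22).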
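Eqs. (21) and (22) can be checked the same way. The NumPy sketch below uses hypothetical helper names su_n_generators and anti, and its generator code repeats Eqs. (12)–(14). It computes $f^+_{jkl}$ for su(3) from Eq. (22), confirms Eq. (21), and checks that $f^+_{jkl}$ is symmetric in its indices. For su(2), all $f^+_{jkl}$ vanish, since the Pauli matrices anticommute to $2\delta_{jk}\mathbb{1}$.

```python
import numpy as np

def su_n_generators(n):
    """Hermitian generators lambda_i of su(n), ordered as in Eqs. (12)-(14)."""
    gens = []
    for k in range(1, n):
        diag = np.array([1.0] * k + [-float(k)] + [0.0] * (n - k - 1))
        gens.append(np.sqrt(2.0 / (k * (k + 1))) * np.diag(diag).astype(complex))
    for j in range(n):
        for k in range(j + 1, n):
            m = np.zeros((n, n), dtype=complex)
            m[j, k] = m[k, j] = 1.0
            gens.append(m)
    for j in range(n):
        for k in range(j + 1, n):
            m = np.zeros((n, n), dtype=complex)
            m[j, k], m[k, j] = -1j, 1j
            gens.append(m)
    return gens

def anti(a, b):
    """Anticommutator [a, b]_+ = ab + ba."""
    return a @ b + b @ a

n = 3
lam = su_n_generators(n)
d = len(lam)

# Eq. (22): f+_jkl = Tr([lambda_j, lambda_k]_+ lambda_l) / 4 (real, since all factors are Hermitian)
fplus = np.array([[[np.trace(anti(lam[j], lam[k]) @ lam[l]).real / 4 for l in range(d)]
                   for k in range(d)] for j in range(d)])

# Eq. (21): [lambda_j, lambda_k]_+ = (4/n) delta_jk 1 + 2 f+_jkl lambda_l
ok21 = all(np.allclose(anti(lam[j], lam[k]),
                       (4 / n) * (j == k) * np.eye(n) + 2 * sum(fplus[j, k, l] * lam[l] for l in range(d)))
           for j in range(d) for k in range(d))
print(ok21)
# f+ is symmetric under exchanging any two indices.
print(np.allclose(fplus, fplus.transpose(1, 0, 2)), np.allclose(fplus, fplus.transpose(0, 2, 1)))
```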

2.3 States and operators

2.3.1 General definition

We first discuss the concepts of states and linear operators in general. For an $m$-dimensional vector space over a field $\mathbb{F}$, we sometimes refer to the vectors as states or kets. The basis vectors¹² $\{\psi_1, \psi_2, \cdots, \psi_m\}$ can also be denoted as $\{|\psi_1\rangle, |\psi_2\rangle, \cdots, |\psi_m\rangle\}$. A linear operator is a morphism¹³ that maps states from one vector space to another. For an operator $\hat\Omega : V \to U$, with $\{v_j\}$ and $\{u_j\}$ being bases of $V$ and $U$, we can define the operator by

$$ \hat\Omega v_j = (\hat\Omega v_j)^k u_k, \tag{23} $$

where we have used the $\hat\cdot$ notation to label operators. We call $\Omega^k{}_j = (\hat\Omega v_j)^k$ the matrix of the operator $\hat\Omega$. Ignoring the distinction between upper and lower indices (assuming Euclidean metrics for $v$ and $u$), we get

$$ \hat\Omega v_j = \Omega_{kj}\, u_k, \tag{24} $$

where we have chosen the order of the lower indices on purpose. The reason will be explained shortly.

¹⁰ This is actually why we chose this basis in the beginning.

¹¹ For an arbitrary Lie algebra, $f_{jkl}$ is only antisymmetric in the first two indices. For compact semisimple Lie algebras such as su(n), $f_{jkl}$ is completely antisymmetric in a basis that is orthonormal with respect to an invariant inner product (here the one fixed by Eq. (17)), which is nice.

¹² In this work we follow the notation in Eq. (6), such that a set of basis vectors can mean either the basis or the vector space spanned by the basis. The exact meaning should be clear from context.

¹³ A morphism is a structure-preserving map, a notion used in category theory.

2.3.2 Operators in Lie algebra

Now we return to the discussion of Lie algebras. So far we have seen that the elements of the su(n) Lie algebra are matrices, and we know that matrices are morphisms acting on vector spaces. Therefore, we can think of a Lie algebra $\mathfrak{g}$ as a vector space consisting of linear operators that act on an $m$-dimensional vector space $V$. We require the operators that form the Lie algebra to be morphisms from $V$ to $V$. Let $\{v_1, v_2, \cdots, v_m\}$ be a basis of $V$ (to be chosen orthonormal below); then for $\hat\Omega \in \mathfrak{g}$, Eq. (24) gives

$$ \hat\Omega v_j = \Omega_{kj}\, v_k. \tag{25} $$

Furthermore, we require the vector space $V$ to be an inner product space. This allows us to define the inner product $\langle v_j | v_k \rangle$, where the bras $\langle v_j|$ are linear functionals on kets; they live in the dual vector space $V^*$ of $V$ and satisfy

$$ \langle v_j| = |v_j\rangle^\dagger. \tag{26} $$

In an inner product space, it is always possible to find an orthonormal basis of kets (e.g., by the Gram–Schmidt procedure) such that

$$ \langle v_j | v_k \rangle = \delta_{jk}, \qquad j, k \in \{1, 2, \cdots, m\}. \tag{27} $$

Therefore we will always assume our bases of $V$ and $V^*$ to be orthonormal from now on.

With an orthonormal basis chosen, we can immediately appreciate our choice of the order of indices in Eq. (24). Since swapping the order of indices amounts to a matrix transposition, our choice ensures that the matrix $\Omega_{kj}$ has the nice property

$$ \langle v_k|\hat\Omega|v_j\rangle \equiv \langle v_k|\hat\Omega v_j\rangle = \Omega_{lj}\langle v_k|v_l\rangle = \Omega_{kj}, \tag{28} $$

where we have defined a left action. In other words, the element $\Omega_{kj}$ of the matrix is obtained by sandwiching the operator $\hat\Omega$ between the bra $\langle v_k|$ on the left and the ket $|v_j\rangle$ on the right, following the left-action convention. Another nice property follows from inserting the completeness relation $\sum_l |v_l\rangle\langle v_l| = \mathbb{1}$:

$$ (\Omega\Omega')_{jk} = \langle v_j|\hat\Omega\hat\Omega'|v_k\rangle = \langle v_j|\hat\Omega\hat\Omega' v_k\rangle = \langle v_j|\hat\Omega v_l\rangle\langle v_l|\hat\Omega' v_k\rangle = \Omega_{jl}\Omega'_{lk}, \tag{29} $$

i.e., the matrices of operators indeed follow the usual convention of matrix multiplication, which assumes left action.
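The index conventions of Eqs. (24), (28), and (29) can be mirrored in NumPy, where column $j$ of a matrix holds the coefficients of $\hat\Omega v_j$. The sketch assumes $\mathbb{C}^3$ with its standard orthonormal basis. The example operators omega and omega_p and the helper matrix_of are hypothetical. Note that np.vdot conjugates its first argument, which plays the role of the bra.

```python
import numpy as np

m = 3
basis = [np.eye(m)[:, j] for j in range(m)]          # orthonormal basis v_1, ..., v_m

def omega(v):
    """Example linear operator: a cyclic shift plus a scaling."""
    return np.roll(v, 1) + 2.0 * v

def omega_p(v):
    """Second example operator, with complex weights."""
    return np.array([1j, 2.0, -1.0]) * v + v[::-1]

def matrix_of(op):
    """Omega_kj = <v_k | op v_j>, Eq. (28)."""
    return np.array([[np.vdot(basis[k], op(basis[j])) for j in range(m)] for k in range(m)])

Om, Omp = matrix_of(omega), matrix_of(omega_p)

# Eq. (24): op v_j = Omega_kj v_k, i.e. column j holds the coefficients of op v_j.
print(all(np.allclose(omega(basis[j]), sum(Om[k, j] * basis[k] for k in range(m))) for j in range(m)))
# Eq. (29): the matrix of the composition Omega Omega' is the matrix product.
print(np.allclose(matrix_of(lambda v: omega(omega_p(v))), Om @ Omp))
```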

From now on, we will always think of elements of a Lie algebra $\mathfrak{g}$ as linear operators that act on some inner product space $V$.

2.4 The Lie correspondence

It is no coincidence that the SU(n) Lie group and the su(n) Lie algebra have the same dimension $d = n^2 - 1$. It can be proved that the linear (matrix) Lie group SU(n) corresponds to the real Lie algebra su(n), meaning that SU(n) determines su(n) and, conversely, su(n) determines SU(n). In general, this is called the Lie correspondence, given by the three fundamental theorems of Lie theory, and the correspondence is one-to-one only for simply connected Lie groups, such as SU(n). Mathematically, the correspondence can be described by the following theorem.

Theorem 2 [Lie correspondence]: The categories of finite-dimensional real Lie algebras and connected, simply connected Lie groups are equivalent.¹⁴

Since SU(n) is connected and simply connected, it is the Lie group that corresponds to su(n) under this equivalence, i.e., SU(n) and su(n) correspond to each other.

The Lie correspondence between SU(n) and su(n) has the following consequences:

¹⁴ I do not fully understand the equivalence of categories and will not go into details, but this is the correct statement.

1. Given a matrix $U \in \mathrm{SU}(n)$ parameterized by a set of real parameters $\{x_j\}$, $j \in \{1, 2, \cdots, d\}$, with $U(0) = \mathbb{1}$ and a parameterization that is nondegenerate at the identity, a basis of the corresponding su(n) Lie algebra can be found by

$$ (e_l)_{jk} = \frac{\partial}{\partial x_l} U_{jk}(x_1, x_2, \cdots, x_d)\Big|_{x_1 = x_2 = \cdots = x_d = 0}. \tag{30} $$

This relation is local in the parameter space. It directly relates the $d = n^2 - 1$ degrees of freedom of SU(n) and su(n). From a geometric point of view, su(n) is the tangent space to the Lie group SU(n) at the identity.

2. Given a basis $\{e_j\}$ of $n \times n$ matrices of the su(n) Lie algebra, $j \in \{1, 2, \cdots, d\}$, any matrix $U \in \mathrm{SU}(n)$ can be written with parameters $\{x_j\}$, $j \in \{1, 2, \cdots, d\}$, as¹⁵

$$ U(x) = \exp(x_j e_j) = \exp\left(-\frac{i}{2} x_j \lambda_j\right). \tag{31} $$

This relation is nonlocal since it relies on the exponential map. Following Eq. (31), we can use the basis (or generators) of su(n) to reconstruct the group elements of SU(n). In other words, the generators of the su(n) Lie algebra are the infinitesimal generators of the SU(n) Lie group.
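Both directions of the correspondence can be checked numerically for su(2). The sketch below uses NumPy only, and expm_antihermitian, U, and the finite-difference step h are our own choices. It uses the basis $e_j = -\tfrac{i}{2}\sigma_j$ and exponentiates through the eigendecomposition of the Hermitian matrix $iX$. It checks that $U(x)$ from Eq. (31) lies in SU(2) and recovers $e_l$ from a central-difference version of Eq. (30). The last line illustrates the nonlocal nature of Eq. (31): the finite parameter $x = (0, 0, 2\pi)$ already reaches $-\mathbb{1}$.

```python
import numpy as np

sigma = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]]),
         np.array([[1, 0], [0, -1]], dtype=complex)]
e = [-0.5j * s for s in sigma]                        # basis of su(2): Eq. (15) with lambda_j = sigma_j

def expm_antihermitian(x):
    """exp(x) for anti-Hermitian x, via the eigendecomposition of the Hermitian matrix i*x."""
    w, v = np.linalg.eigh(1j * x)                      # i x = v diag(w) v^dagger
    return (v * np.exp(-1j * w)) @ v.conj().T          # exp(x) = v diag(exp(-i w)) v^dagger

def U(params):
    """Eq. (31): U(x) = exp(x_j e_j)."""
    return expm_antihermitian(sum(p * b for p, b in zip(params, e)))

u = U([0.3, -1.2, 2.0])
print(np.allclose(u.conj().T @ u, np.eye(2)), np.isclose(np.linalg.det(u), 1.0))

# Eq. (30): e_l = dU/dx_l at x = 0, estimated with a central finite difference.
h = 1e-6
for l in range(3):
    step = np.zeros(3)
    step[l] = h
    print(np.allclose((U(step) - U(-step)) / (2 * h), e[l], atol=1e-8))

# Far from the identity: x = (0, 0, 2*pi) already reaches -1.
print(np.allclose(U([0, 0, 2 * np.pi]), -np.eye(2)))
```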

In the next section we introduce the representation theory of Lie algebras. Due to the Lie correspondence, we will mainly focus on the su(n) Lie algebra. However, we will also introduce the representation theory of Lie groups when needed.

3 Representations of su(n)

3.1 Representation theory of Lie algebras

Definition [representation of Lie algebras]: A representation (or rep) of a Lie algebra $\mathfrak{g}$ over $\mathbb{F}$ on a vector space $V$ (of dimension $m$) is a Lie algebra homomorphism $\rho : \mathfrak{g} \to \mathfrak{gl}(V) = \mathrm{End}(V)$.

This definition has the following consequences:

1. Notation. In many books, the reps are defined directly as the vector spaces they act on. In our notation, we refer to $\rho$ as the rep, and to the vector space $V$ as the $\mathfrak{g}$-module associated with the rep $\rho$ of the Lie algebra $\mathfrak{g}$.

2. The rep $\rho$ is linear (as a homomorphism), i.e., for $\alpha, \beta \in \mathbb{F}$ and $a, b \in \mathfrak{g}$,

$$ \rho(\alpha a + \beta b) = \alpha\rho(a) + \beta\rho(b). \tag{32} $$

3. The rep $\rho$ preserves the Lie brackets (as a homomorphism), i.e.,

$$ \rho([a, b]) = [\rho(a), \rho(b)]. \tag{33} $$

4. Once a basis of $V$ is chosen, so that the elements of $V$ are written as $m$-dimensional vectors, $\rho$ can be written as $m \times m$ matrices. We then say that the rep $\rho$ is $m$-dimensional.

5. Due to linearity, it suffices to specify the matrices assigned to the basis elements of a Lie algebra to define a specific rep, provided these matrices satisfy Eq. (33) for every pair of basis elements.

¹⁵ The exponential map from su(n) to SU(n) is surjective; the proof is not shown.

6. The operators we constructed in Sec. 2.3.2 are just another way to define reps. We will unify the

concepts of operators and reps from now on. A Lie algebra g contains elements that satisfy the

Jacobi identity. Once we say these elements are also linear operators acting on a vector space V , that

automatically means that there is a homomorphism that maps these elements to the endomorphisms

on V .

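As an add-on to our last point above, we now show that the set of operator matrices defined in Sec. 2.3.2 ($\Omega_{jk}$, etc.) is a rep of the Lie algebra $\mathfrak{g} = \{\hat\Omega, \cdots\}$.

Proof. Linearity follows from Eq. (28), since matrix elements are linear in the operator. The commutator-preserving property can be shown as follows. Let $\hat A, \hat B \in \mathfrak{g}$ be two operators of the Lie algebra, with operator matrices $A_{jk}$ and $B_{jk}$. Then, by Eq. (29),

$$ \langle v_j|[\hat A, \hat B]|v_k\rangle = \langle v_j|\hat A\hat B|v_k\rangle - \langle v_j|\hat B\hat A|v_k\rangle = A_{jl}B_{lk} - B_{jl}A_{lk} = ([A, B])_{jk}, $$

i.e., the matrix of the commutator is the commutator of the matrices.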

What we have done above is more of a consistency check. The upshot is that, for a Lie algebra composed of operators acting on the inner product space $V$, the corresponding operator matrices always form a rep of the Lie algebra. For different choices of the inner product space, the operator matrices take different forms, i.e., the Lie algebra has different reps. From now on, for simplicity, we drop the $\hat\cdot$ operator notation. All the Lie algebra elements in this work will be taken as linear operators acting on the same vector space $V$, which is the $\mathfrak{g}$-module of the rep that maps these Lie algebra elements to the operators.

Some other useful definitions regarding reps of Lie algebras:

1. Consider a rep $\rho : \mathfrak{g} \to \mathfrak{gl}(V)$ of a Lie algebra $\mathfrak{g}$. A subspace $U \subset V$ is invariant (or stable) if $\rho(a)u \in U$ for all $u \in U$ and all $a \in \mathfrak{g}$. Then the restriction $\rho : \mathfrak{g} \to \mathfrak{gl}(U)$ is called a subrepresentation (subrep).

2. A rep is faithful if it is injective.

The rep of a Lie group is defined as follows.

Definition [representation of Lie groups]: A rep of a Lie group $G$ over $\mathbb{F}$ on a vector space $V$ (of dimension $m$) is a smooth group homomorphism $\rho_G : G \to \mathrm{GL}(V) = \mathrm{Aut}(V)$.

Here $\mathrm{GL}(V)$ is the group of all invertible linear maps of the $m$-dimensional vector space $V$, i.e., all $m \times m$ invertible matrices once a basis is chosen.

In general, representations allow us to realize elements of the algebra or group as matrices acting on different spaces. In the cases of SU(n) and su(n), their reps naturally inherit the one-to-one Lie correspondence between the group and the algebra. Therefore we will still focus on the reps of su(n), with comments on SU(n) reps when necessary.

We next look at some examples of reps.¹⁶

3.2 Examples of reps

3.2.1 Trivial/zero rep

The trivial/zero rep of a Lie algebra $\mathfrak{g}$ is the rep that takes all elements of $\mathfrak{g}$ to the zero linear map, i.e.,

$$ \rho : \mathfrak{g} \to \mathfrak{gl}(V) \tag{34} $$

such that for arbitrary $V$ and all $a \in \mathfrak{g}$,

$$ \rho(a) = 0. \tag{35} $$

Similarly, the trivial rep of a Lie group sends every group element to the identity matrix, and is therefore also called the identity rep.

¹⁶ I was overwhelmed by all these different concepts, so I made sure to include all the names I could find. These concepts are not mutually exclusive.

3.2.2 Defining/natural/standard/tautological rep

The defining/natural/standard/tautological rep is the rep in terms of which the Lie algebra is defined. For example, we have defined the su(n) Lie algebra as a set of matrices acting on $\mathbb{C}^n$ in the definition of u(n), sl(n, C), and su(n) in Sec. 2.1. Therefore, the defining rep is

$$ \rho : \mathfrak{su}(n) \to \mathfrak{gl}(\mathbb{C}^n) \tag{36} $$

such that

$$ \rho(e_j) = (e_j)_{kl} \tag{37} $$

for $j \in \{1, 2, \cdots, d\}$ and $k, l \in \{1, 2, \cdots, n\}$, where the $e_j$ are the basis elements of su(n) and the $(e_j)_{kl}$ are their matrix forms on $\mathbb{C}^n$ given by Eqs. (12)–(14) and (15).

The defining/natural/standard/tautological rep of a Lie group is defined likewise. For finite groups such as $S_3$, the defining rep usually also has a natural geometric interpretation.

3.2.3 Adjoint/regular rep

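The adjoint/regular rep of a Lie algebra $\mathfrak{g}$ of dimension $d$ is the rep whose $\mathfrak{g}$-module is $\mathfrak{g}$ itself, i.e.,

$$ \mathrm{ad} : \mathfrak{g} \to \mathfrak{gl}(\mathfrak{g}) \tag{38} $$

such that for all $a, b \in \mathfrak{g}$,

$$ \mathrm{ad}(a)b = [a, b]. \tag{39} $$

Clearly, the effect of the adjoint rep is to take the Lie bracket.

We can define the adjoint operator of $a \in \mathfrak{g}$ as $\mathrm{ad}(a)$. Writing $b = b_k e_k$ and following the index convention of Eq. (24),

$$ \mathrm{ad}(a)b = [a, b] = \mathrm{ad}(a)_{lk}\, b_k\, e_l, \tag{40} $$

where $l, k \in \{1, 2, \cdots, d\}$, since by definition the adjoint matrices are $d \times d$. Comparing Eqs. (7) and (40) with $a = e_j$ and $b = e_k$, we find that the adjoint matrices of the basis elements are connected to the structure constants by

$$ \mathrm{ad}(e_j)_{lk}\, e_l = f_{jkl}\, e_l, \quad \text{i.e.,} \quad \mathrm{ad}(e_j)_{lk} = f_{jkl}. \tag{41} $$

This is also the explicit form of the adjoint rep in the basis $\{e_l\}$.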

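The fact that the adjoint rep is a rep is equivalent to the Jacobi identity (2).

Proof. For all a, b ∈ g, the following statements are equivalent:

$$\begin{aligned}
&\mathrm{ad}([a, b]) = \mathrm{ad}(a)\mathrm{ad}(b) - \mathrm{ad}(b)\mathrm{ad}(a) \\
\Leftrightarrow\ &\mathrm{ad}([a, b])c = \mathrm{ad}(a)\mathrm{ad}(b)c - \mathrm{ad}(b)\mathrm{ad}(a)c \quad \forall c \in \mathfrak{g} \\
\Leftrightarrow\ &[[a, b], c] = [a, [b, c]] - [b, [a, c]] \quad \forall c \in \mathfrak{g} \\
\Leftrightarrow\ &[a, [b, c]] + [b, [c, a]] + [c, [a, b]] = 0 \quad \forall c \in \mathfrak{g}.
\end{aligned} \tag{42}$$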
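The following sketch checks Eqs. (41) and (42) numerically, assuming NumPy. It uses the su(2) basis e_j = −iσ_j/2, chosen for illustration, so it need not match Eqs. (12)–(15). The script extracts the structure constants of Eq. (7) by least squares and builds the adjoint matrices from Eq. (41). It then verifies ad([e_j, e_k]) = [ad(e_j), ad(e_k)], which by Eq. (42) is the Jacobi identity written in terms of structure constants. The helper names are hypothetical.

```python
import numpy as np

# Assumed basis of su(2): e_j = -i sigma_j / 2, so that [e_j, e_k] = eps_jkl e_l.
sx = np.array([[0, 1], [1, 0]], dtype=complex)
sy = np.array([[0, -1j], [1j, 0]], dtype=complex)
sz = np.array([[1, 0], [0, -1]], dtype=complex)
E = [-0.5j * s for s in (sx, sy, sz)]
d = len(E)


def comm(a, b):
    return a @ b - b @ a


def coords(x, basis):
    """Real coordinates of x in the given basis (least squares over R)."""
    A = np.array([np.concatenate([b.real.ravel(), b.imag.ravel()]) for b in basis]).T
    y = np.concatenate([x.real.ravel(), x.imag.ravel()])
    c, *_ = np.linalg.lstsq(A, y, rcond=None)
    return c


# Structure constants f[j, k, l] defined by [e_j, e_k] = f_jkl e_l, Eq. (7).
f = np.array([[coords(comm(E[j], E[k]), E) for k in range(d)] for j in range(d)])

# Adjoint matrices from Eq. (41): ad(e_j)_{lk} = f_jkl (row l, column k).
ad = [f[j].T for j in range(d)]

# ad is a homomorphism: ad([e_j, e_k]) = sum_l f_jkl ad(e_l) equals [ad(e_j), ad(e_k)].
ok = all(
    np.allclose(sum(f[j, k, l] * ad[l] for l in range(d)), comm(ad[j], ad[k]))
    for j in range(d) for k in range(d)
)
print(ok)
```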

For a compact Lie group G and its corresponding Lie algebra g, the adjoint rep of the Lie group G is the homomorphism Ad : G → GL(g) such that for b ∈ g and A ∈ G,

$$\mathrm{Ad}(A)b = AbA^{-1} \in \mathfrak{g}, \tag{43}$$

i.e., the adjoint operation is given by the conjugation of b by A. Let A = e^{xa} for x ∈ F and a ∈ g. Then by BCH we have

$$AbA^{-1} = e^{xa}\, b\, e^{-xa} = b + [a, b]x + O(x^2). \tag{44}$$


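Therefore, taking the term linear in x and using the Lie correspondence, we have

$$\mathrm{ad}(a)b = \left.\frac{d}{dx}\,\mathrm{Ad}(e^{xa})b\right|_{x=0} = [a, b], \tag{45}$$

which is exactly Eq. (39).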
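Eq. (44) can be checked numerically. The sketch below, assuming NumPy, conjugates a random b ∈ su(3) by A = e^{xa} for a small x. It confirms that the result is still in su(3) and that its first-order term matches [a, b]. The helpers `expm_antihermitian` and `random_su` are hypothetical names introduced here. The exponential is computed from the eigendecomposition of the Hermitian matrix i·a rather than from a library matrix exponential.

```python
import numpy as np

rng = np.random.default_rng(0)


def expm_antihermitian(a):
    """exp(a) for anti-Hermitian a, using the eigendecomposition of the Hermitian i*a."""
    w, V = np.linalg.eigh(1j * a)            # i*a = V diag(w) V^dagger
    return V @ np.diag(np.exp(-1j * w)) @ V.conj().T


def random_su(n):
    """A random element of su(n): anti-Hermitian and traceless."""
    z = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    a = z - z.conj().T                        # anti-Hermitian
    return a - np.trace(a) / n * np.eye(n)    # remove the (imaginary) trace


n = 3
a, b = random_su(n), random_su(n)
x = 1e-6
A = expm_antihermitian(x * a)                 # A = e^{xa} in SU(n)
Ad_b = A @ b @ np.linalg.inv(A)               # Ad(A)b = A b A^{-1}, Eq. (43)

# Ad(A)b stays in su(n) ...
stays_in_su = bool(np.allclose(Ad_b.conj().T, -Ad_b) and abs(np.trace(Ad_b)) < 1e-10)
# ... and its term linear in x is [a, b], Eqs. (44)-(45).
err = np.max(np.abs((Ad_b - b) / x - (a @ b - b @ a)))
print(stays_in_su, err < 1e-3)
```

The step size x trades truncation error, which is O(x), against floating-point cancellation in (Ad_b − b)/x. A value around 10⁻⁶ keeps both well below the tolerance used here.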

The adjoint rep is useful in physics since it is closely related to invariant theory, which can be seen from

its form in Eq. (43). 17 In Sec. 4.3.2 we will see how we can relate adjoint reps to symmetry through this

reasoning.

3.2.3.1 Killing form

Another useful tool constructed from the adjoint rep is the Killing form K(a, b).

Given a rep ρ of g on V, a bilinear form 18 B on V is invariant under the action of g if

$$B(\rho(a)v, w) + B(v, \rho(a)w) = 0 \tag{46}$$

for v, w ∈ V and a ∈ g. Now let ρ be the adjoint rep with V = g. Then we say a bilinear form B on g is invariant if

$$B(\mathrm{ad}(a)b, c) + B(b, \mathrm{ad}(a)c) = 0, \tag{47}$$

or

$$B([a, b], c) + B(b, [a, c]) = 0 \tag{48}$$

for a, b, c ∈ g.

One important example of such invariant bilinear forms on g is the Killing form K(a, b), defined as

$$K(a, b) = \mathrm{Tr}[\mathrm{ad}(a)\mathrm{ad}(b)] \tag{49}$$

for a, b ∈ g. The Killing form is also symmetric, since Tr[XY] = Tr[YX]. For this reason, if the Killing form is also nondegenerate (invertible), it can be interpreted as a metric tensor on g. This property will be used in Sec. 4.4.

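The Killing form of two basis elements of g, following Eq. (41), can be given by the structure constants, i.e.,

$$K(e_j, e_k) = f_{jlm} f_{kml}. \tag{50}$$

Specifically for the su(n) Lie algebra, the structure constants are real and totally antisymmetric in a basis that is orthonormal (up to a common factor) with respect to the trace form. The Killing form can then be further simplified and yields

$$K(e_j, e_k) = f_{jlm} f_{kml} = -f_{jlm} f_{klm}. \tag{51}$$

Therefore the diagonal elements are

$$K(e_j, e_j) = -f_{jlm} f_{jlm} \le 0. \tag{52}$$

More generally, for x = x_j e_j with real x_j, Eq. (51) gives K(x, x) = −Σ_{l,m} (x_j f_jlm)². This vanishes only if ad(x) = 0, i.e., only if x lies in the center of su(n), which is {0}. Hence the Killing form on su(n) is negative definite.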

We say a Lie algebra is compact if its Killing form is negative definite. 19 A compact Lie algebra is then the compact real form of its complexification. In our case, su(n) is the compact real form of sl(n, C).

We can also directly calculate the Killing form of su(n) using its defining rep. For x, y ∈ su(n), the Killing form is given by

$$K(x, y) = 2n\,\mathrm{Tr}[xy]. \tag{53}$$

Equation (53) also holds for sl(n, C), although technically we have not yet given its defining rep (see Sec. 4.4.8).

Killing forms will be useful in the structure theory of Lie algebras in Sec. 4.
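As a sketch assuming NumPy, the Killing form can be computed directly from Eq. (49) by writing ad(x) as an n² × n² matrix acting on vectorized matrices. We use the su(2) basis e_j = −iσ_j/2 for illustration, for which the Gram matrix comes out as −2 times the identity. We also check Eq. (53) on random elements of su(3). Taking the trace over all of gl(n) rather than over su(n) is a shortcut. It works because ad(x) maps gl(n) into the traceless matrices and annihilates the identity matrix. The function names are hypothetical.

```python
import numpy as np


def ad_on_gl(x):
    """Matrix of ad(x): y -> xy - yx on gl(n), acting on the row-major vec(y)."""
    n = x.shape[0]
    I = np.eye(n)
    return np.kron(x, I) - np.kron(I, x.T)


def killing(x, y):
    """K(x, y) = Tr[ad(x) ad(y)], Eq. (49), with the trace taken over gl(n).

    For traceless x, y this agrees with the trace over sl(n, C) (and hence su(n)):
    ad(x) maps gl(n) into traceless matrices and kills the identity matrix.
    """
    return np.trace(ad_on_gl(x) @ ad_on_gl(y))


# Assumed su(2) basis for illustration: e_j = -i sigma_j / 2.
sx = np.array([[0, 1], [1, 0]], dtype=complex)
sy = np.array([[0, -1j], [1j, 0]], dtype=complex)
sz = np.array([[1, 0], [0, -1]], dtype=complex)
E = [-0.5j * s for s in (sx, sy, sz)]
K = np.array([[killing(a, b) for b in E] for a in E])
neg_def = bool(np.all(np.linalg.eigvalsh(K.real) < 0))      # negative definite, Eq. (52)

rng = np.random.default_rng(1)


def random_su(n):
    """A random element of su(n): anti-Hermitian and traceless."""
    z = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    a = z - z.conj().T
    return a - np.trace(a) / n * np.eye(n)


n = 3
x, y = random_su(n), random_su(n)
eq53 = bool(np.isclose(killing(x, y), 2 * n * np.trace(x @ y)))    # Eq. (53)
print(neg_def, eq53)
```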

17 Is this correct???

18 A bilinear form B(x, y) has a coordinate representation B(x, y) = x^T A y, where A is the matrix of the bilinear form. This footnote is a self-reminder and has nothing to do with the context.

19 There is some ambiguity here regarding whether the definition of a compact Lie algebra should correspond to a Killing form being negative definite or negative semidefinite. But su(n)'s Killing form is negative definite anyway, so we do not care.


3.3 Morphism between reps

In this section we discuss morphisms between reps.

3.3.1 Intertwining operator

Definition 0 [intertwining operator]: An intertwining operator between two reps ρ_V and ρ_W of g (respectively, G) is a morphism f : V → W that commutes with the action of g (respectively, G), i.e.,

$$f\big(\rho_V(a)v\big) = \rho_W(a)f(v) \tag{54}$$

for a ∈ g (respectively, G) and v ∈ V.

Notice that here we follow the convention that intertwining operators map one g-module to another g-module, instead of mapping rep ρ_V to rep ρ_W. The space of all g-morphisms between V and W is denoted as

$$\mathrm{Hom}(V, W). \tag{55}$$

3.3.2 Equivalent reps

If f is bijective (an isomorphism), then V and W are equivalent, and the two reps ρ_V and ρ_W are called equivalent reps. When two reps are equivalent, we say they are the same rep up to isomorphism, denoted by

$$\rho_V \cong \rho_W. \tag{56}$$

Equivalent reps can be transformed into one another by similarity transformations. By Eq. (54), ρ_W(a) = f ρ_V(a) f^{-1}. 20

3.3.3 Reps of su(n) and sl(n, C)

Theorem 3: Let g and g_C be a real Lie algebra and its complexification. Then the categories of complex reps of g and g_C are equivalent.

Theorem 3 means that any complex rep of g has a unique corresponding rep of g_C, and Hom(V, W)_g = Hom(V, W)_{g_C}. In other words, if we classify the reps of g_C, the reps of g are also classified.

In our case, Thm. 3 tells us that the categories of complex reps of su(n) and sl(n, C) are equivalent. In fact, considering Thm. 2, the categories of finite-dimensional reps of SL(2, C), SU(2), sl(2, C), and su(2) are all equivalent. 21

3.4 Operations on reps

Theorem 4: Let V and W be g-modules associated with reps ρ_V and ρ_W, respectively. Then there is a canonical structure 22 of a rep on V ⊕ W, V ⊗ W, and V*. Specifically, the actions of g on these spaces are:

1. Action of g on V ⊕ W is given by ρ_{V⊕W} : g → gl(V ⊕ W) such that for a ∈ g, v ∈ V, and w ∈ W,

$$\rho_{V\oplus W}(a)(v \oplus w) = \rho_V(a)v \oplus \rho_W(a)w. \tag{57}$$

2. Action of g on V ⊗ W is given by ρ_{V⊗W} : g → gl(V ⊗ W) such that for a ∈ g, v ∈ V, and w ∈ W,

$$\rho_{V\otimes W}(a)(v \otimes w) = \rho_V(a)v \otimes w + v \otimes \rho_W(a)w. \tag{58}$$

3. Action of g on V* is given by ρ_{V*} : g → gl(V*) such that for a ∈ g and v* ∈ V*,

$$\rho_{V^*}(a)v^* = -\rho_V^*(a)v^*, \tag{59}$$

where ρ*_V(a) is the adjoint operator of ρ_V(a).

20 A similarity transformation is a diagonalization if the transformed matrix becomes diagonal.

21 This is true for n right???

22 I think this means we can define a rep following the definition???
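Thm. 4 can be checked with a short numerical sketch, assuming NumPy. The helper names are illustrative, and the su(2) basis e_j = −iσ_j/2 is an assumption. The sketch checks three things:

* The direct sum acts block-diagonally, as in Eq. (57).
* The tensor product acts by ρ_V(a) ⊗ 1 + 1 ⊗ ρ_W(a), as in Eq. (58).
* The naive rule ρ_V(a) ⊗ ρ_W(a), borrowed from the group action (61), is not a Lie algebra rep.

Finally, exponentiating the algebra action on V ⊗ V reproduces the group action e^x ⊗ e^x.

```python
import numpy as np


def comm(a, b):
    return a @ b - b @ a


def direct_sum(A, B):
    """Action on V ⊕ W, Eq. (57): the block-diagonal matrix diag(A, B)."""
    p, q = A.shape[0], B.shape[0]
    out = np.zeros((p + q, p + q), dtype=complex)
    out[:p, :p] = A
    out[p:, p:] = B
    return out


def tensor(A, B):
    """Action on V ⊗ W, Eq. (58): A ⊗ 1_W + 1_V ⊗ B."""
    return np.kron(A, np.eye(B.shape[0])) + np.kron(np.eye(A.shape[0]), B)


def expm_antihermitian(a):
    """exp(a) for anti-Hermitian a, using the eigendecomposition of the Hermitian i*a."""
    w, V = np.linalg.eigh(1j * a)
    return V @ np.diag(np.exp(-1j * w)) @ V.conj().T


# Assumed basis of su(2) in its defining rep on C^2: e_j = -i sigma_j / 2.
sx = np.array([[0, 1], [1, 0]], dtype=complex)
sy = np.array([[0, -1j], [1j, 0]], dtype=complex)
sz = np.array([[1, 0], [0, -1]], dtype=complex)
E = [-0.5j * s for s in (sx, sy, sz)]


def is_rep(rho):
    """Check rho([e_j, e_k]) == [rho(e_j), rho(e_k)] for all pairs of basis elements."""
    return all(np.allclose(rho(comm(a, b)), comm(rho(a), rho(b))) for a in E for b in E)


def rho_sum(x):        # V ⊕ V, Eq. (57)
    return direct_sum(x, x)


def rho_tensor(x):     # V ⊗ V, Eq. (58)
    return tensor(x, x)


def rho_naive(x):      # x ⊗ x: the group-level rule (61) misapplied to the algebra
    return np.kron(x, x)


# Group level: exponentiating the algebra action on V ⊗ V gives e^x ⊗ e^x, Eq. (61).
x = 0.7 * E[0] + 0.3 * E[2]
group_ok = bool(np.allclose(expm_antihermitian(tensor(x, x)),
                            np.kron(expm_antihermitian(x), expm_antihermitian(x))))
print(is_rep(rho_sum), is_rep(rho_tensor), is_rep(rho_naive), group_ok)
```

The exponential check works because x ⊗ 1 and 1 ⊗ x commute, so the exponential of their sum factorizes.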

Comments on Thm. 4:

1. The corresponding Lie group actions on V ⊕ W, V ⊗ W, and V* for A ∈ G are given by

$$\rho_{V\oplus W}(A)(v \oplus w) = \rho_V(A)v \oplus \rho_W(A)w, \tag{60}$$

$$\rho_{V\otimes W}(A)(v \otimes w) = \rho_V(A)v \otimes \rho_W(A)w, \tag{61}$$

$$\rho_{V^*}(A)v^* = \rho_V^*(A^{-1})v^*. \tag{62}$$

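2. The definition of ρ_{V⊗W} is a natural result of linearity and the Lie correspondence. Setting A = e^{ta} in Eq. (61) and differentiating at t = 0 with the product (Leibniz) rule gives ρ_V(a)v ⊗ w + v ⊗ ρ_W(a)w, which is Eq. (58).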

3. The definition of ρ_{V*} can be understood as follows. Consider the bilinear form ⟨·, ·⟩ given by

$$\langle\cdot,\cdot\rangle : V \times V^* \to \mathbb{C}, \tag{63}$$

where V* is the dual space of V. By the universal property of the tensor product, 23 a bilinear map on V × V* is the same as a linear map on V ⊗ V*. We can therefore regard ⟨·, ·⟩ as a linear map V ⊗ V* → C and understand it as an intertwining operator between V ⊗ V* and C (trivial rep). Since the trivial rep acts as zero, the definition of the intertwining operator and Eq. (58) give

$$\langle \rho_V(a)v, v^*\rangle + \langle v, \rho_{V^*}(a)v^*\rangle = 0. \tag{64}$$

Here the adjoint operator is the Hermitian adjoint, or Hermitian conjugate, operator in physics (usually labeled with "†"). In Dirac notation, Eq. (64) is just saying

$$\langle v|\rho_{V^*}(a)u\rangle = -\langle \rho_V(a)v|u\rangle = -\langle v|\rho_V^\dagger(a)u\rangle. \tag{65}$$

Thus we get Eq. (59).

4. Observing Eqs. (78) and (59), we see that ρV is a unitary rep if ρV = ρV ∗ .

5. As a direct result of Thm. 4, the following vector spaces are all g-modules if V and W are g-modules:

(a) any tensor space $V^{\otimes j} \otimes (V^*)^{\otimes k}$ for j, k ∈ N.

(b) Hom(V, W), with the action given by

$$\rho(a)h = \rho_W(a)h - h\rho_V(a) \tag{66}$$

for h ∈ Hom(V, W).

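Specifically, when W = V, Hom(V, V) = End(V) ≅ V ⊗ V*, i.e., we now have

$$\rho : \mathfrak{g} \to \mathrm{End}(\mathrm{End}(V)) = \mathfrak{gl}(\mathfrak{gl}(V)) = \mathfrak{gl}(V \otimes V^*) \tag{67}$$

with

$$\rho(a)h = \rho_V(a)h - h\rho_V(a) = [\rho_V(a), h], \tag{68}$$

where in Dirac notation h is a linear combination of operators |v⟩⟨u| for |v⟩ ∈ V and ⟨u| ∈ V*. But Eq. (68) has exactly the form of Eq. (39)! In particular, when ρ_V is the defining rep, so that g ⊂ gl(V), restricting h to g gives ρ(a)h = [a, h]. So we have recovered the adjoint rep.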
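The identification End(V) ≅ V ⊗ V* can be checked numerically with NumPy. This sketch uses the bilinear-pairing convention ⟨v, φ⟩ = φ^T v. In that convention the adjoint operator in Eq. (59) is the transpose, so ρ_{V*}(a) = −ρ_V(a)^T. For a unitary rep (ρ_V(a)† = −ρ_V(a)), this equals the complex conjugate of ρ_V(a). A random complex matrix stands in for ρ_V(a); this is an assumption made only for illustration. With h vectorized row by row, the tensor-product action of Eq. (58) on V ⊗ V* coincides with the commutator action (68).

```python
import numpy as np

rng = np.random.default_rng(2)
n = 3
# A stands in for rho_V(a); h is an arbitrary element of Hom(V, V) = End(V).
A = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
h = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
v = rng.normal(size=n) + 1j * rng.normal(size=n)      # v in V
phi = rng.normal(size=n) + 1j * rng.normal(size=n)    # phi in V*, paired as phi^T v

# Dual rep in the bilinear-pairing convention: rho_{V*}(a) = -A^T.
A_dual = -A.T
# Invariance of the pairing, Eq. (64): <A v, phi> + <v, A_dual phi> = 0.
pairing_ok = bool(np.isclose(phi @ (A @ v) + (A_dual @ phi) @ v, 0))

# Tensor-product action on V ⊗ V*, Eq. (58), acting on the row-major vec(h) ...
I = np.eye(n)
rho_V_Vdual = np.kron(A, I) + np.kron(I, A_dual)
# ... coincides with the commutator action on End(V), Eq. (68).
lhs = rho_V_Vdual @ h.reshape(-1)
rhs = (A @ h - h @ A).reshape(-1)
print(pairing_ok, np.allclose(lhs, rhs))
```

The check relies on the row-major identity vec(XhY) = (X ⊗ Y^T) vec(h), so hA corresponds to 1 ⊗ A^T = −(1 ⊗ A_dual).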

23 See category theory.


4 Structure theory of sl(n, C) and its reps

We study the structure theory of Lie algebras with sl(n, C) as an example. With sl(n, C) being the

complexification of su(n), all of the useful results regarding su(n) can be derived from those regarding

sl(n, C). Mathematically, as we have mentioned, this means that categories of complex finite-dimensional

reps of su(n) and sl(n, C) are equivalent. Therefore, with su(n) as our target, we investigate the classification

theory of sl(n, C).

We start by formalizing the simplicity of Lie algebras.

4.1 Simple and semisimple Lie algebras

4.1.1 Ideals and commutativity

The first property of the structure of a Lie algebra we discuss is its commutativity (or abelianity), i.e.,

how close a Lie algebra is to an abelian Lie algebra. The tool we use to study commutativity is called the

ideal of a Lie algebra. A subalgebra g0 is invariant if [a, b] ∈ g0 , ∀a ∈ g0 and ∀b ∈ g. Then we define ideals

as follows.

Definition 0 [ideal]: An ideal i of a Lie algebra g is an invariant subalgebra of g, i.e., [a, b] ∈ i for all a ∈ i and b ∈ g. 24

Obviously, {0} and g are both ideals of g. Also, given ideals i_1 and i_2 of g, i_1 ∩ i_2, i_1 + i_2, and [i_1, i_2] are all ideals, where

$$[\mathfrak{i}_1, \mathfrak{i}_2] = \mathrm{span}\{[a, b] \mid a \in \mathfrak{i}_1,\ b \in \mathfrak{i}_2\}. \tag{69}$$

With the ideal defined, we now examine the commutativity of a Lie algebra. Specifically, we make the

following definitions given a Lie algebra g:

1. The commutant of g is the ideal [g, g]. The smaller the commutant is, the closer g is to being abelian. We say that a Lie algebra g is abelian if [a, b] = 0, ∀a, b ∈ g. Thus g is abelian iff [g, g] = 0.

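2. Lie algebra g is solvable if D^n g = 0 for some n ∈ N, where

$$\begin{cases} D^{j+1}\mathfrak{g} = [D^j\mathfrak{g}, D^j\mathfrak{g}], \\ D^0\mathfrak{g} = [\mathfrak{g}, \mathfrak{g}]. \end{cases} \tag{70}$$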

3. The radical rad(g) is the unique solvable ideal of g that contains every other solvable ideal of g.

4. Lie algebra g is nilpotent if D_n g = 0 for some n ∈ N, where

$$\begin{cases} D_{j+1}\mathfrak{g} = [\mathfrak{g}, D_j\mathfrak{g}], \\ D_0\mathfrak{g} = \mathfrak{g}. \end{cases} \tag{71}$$

Both nilpotent and solvable Lie algebras are "almost abelian" algebras, meaning that they can be built by successive extensions of abelian algebras. As an example, let b ⊂ gl(n, F) be the subalgebra of upper triangular matrices and n ⊂ gl(n, F) be the subalgebra of all strictly upper triangular matrices. Then b is solvable, and n is nilpotent. We mention here that there is also a Cartan criterion for solvability: g is solvable iff K([g, g], g) = 0.
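To make definitions (70) and (71) concrete, the sketch below computes the dimensions of the derived series and the lower central series for b and n inside gl(3), assuming NumPy. Here b is the upper triangular algebra and n the strictly upper triangular one. The derived series follows the convention D^0 g = [g, g] of Eq. (70). Spans are tracked numerically with an SVD rank tolerance, which is adequate for these small integer matrices. `bracket_space` and the other helpers are illustrative names.

```python
import numpy as np


def bracket_space(U, W, tol=1e-10):
    """Basis (list of n x n matrices) of span{[u, w] : u in U, w in W}."""
    brackets = [u @ w - w @ u for u in U for w in W]
    if not brackets:
        return []
    n = brackets[0].shape[0]
    M = np.array([m.ravel() for m in brackets])
    _, s, Vh = np.linalg.svd(M)
    r = int(np.sum(s > tol))                  # numerical rank = dimension of the span
    return [Vh[i].reshape(n, n) for i in range(r)]


def unit(n, i, j):
    """Matrix unit E_ij in gl(n)."""
    m = np.zeros((n, n))
    m[i, j] = 1.0
    return m


def derived_dims(g, steps=5):
    """dim D^j g for j = 0..steps, with D^0 g = [g, g] as in Eq. (70)."""
    D = bracket_space(g, g)
    dims = [len(D)]
    for _ in range(steps):
        D = bracket_space(D, D)               # D^{j+1} g = [D^j g, D^j g]
        dims.append(len(D))
    return dims


def lower_central_dims(g, steps=5):
    """dim D_j g for j = 0..steps, with D_0 g = g as in Eq. (71)."""
    D = list(g)
    dims = [len(D)]
    for _ in range(steps):
        D = bracket_space(g, D)               # D_{j+1} g = [g, D_j g]
        dims.append(len(D))
    return dims


n = 3
b = [unit(n, i, j) for i in range(n) for j in range(i, n)]           # upper triangular
nil = [unit(n, i, j) for i in range(n) for j in range(i + 1, n)]     # strictly upper triangular

print(derived_dims(b))          # [3, 1, 0, 0, 0, 0]  -> b is solvable
print(lower_central_dims(b))    # [6, 3, 3, 3, 3, 3]  -> b is not nilpotent
print(lower_central_dims(nil))  # [3, 1, 0, 0, 0, 0]  -> n is nilpotent
```

The middle line shows why solvability is weaker than nilpotency. [b, n] = n for the upper triangular algebra, so its lower central series never reaches zero.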

24 Since the commutator is skew-symmetric, the left ideal and right ideal are the same.


4.1.2 Simplicity and semisimplicity

We next introduce semisimplicity and simplicity to describe how far a Lie algebra is from being abelian.

Definition 0 [semisimplicity of Lie algebras]: A Lie algebra g is semisimple if any of the following equivalent

statements is true:

1. Lie algebra g does not have a nonzero abelian ideal.

2. Lie algebra g does not have a nonzero solvable ideal.

3. The radical rad(g) = 0.

4. (Cartan criterion) The Killing form K(a, b) for a, b ∈ g is nondegenerate, i.e., det[K(e_j, e_k)] ≠ 0 for a basis {e_j} of g. 25

Definition 0 [simplicity of Lie algebras]: A Lie algebra g is simple if it is not abelian and does not possess

an ideal other than {0} and g.

Here we give several useful criteria for semisimplicity of a Lie algebra:

1. A Lie algebra is semisimple iff it is a direct sum of simple Lie algebras.

2. Any compact Lie algebra is semisimple.

3. If a Lie algebra is simple, then it is semisimple.

4. gC is semisimple iff g is semisimple, where g and gC are a Lie algebra and its complexification.

We will see in Sec. 4.2 that finite-dimensional reps of semisimple Lie algebras are completely reducible, which is a highly desirable property in physics. Semisimplicity means being far from commutative: if a Lie algebra g is semisimple, then its commutant [g, g] = g.

All of the classical Lie algebras (also the most useful Lie algebras in physics) [see Sec. 4.4.6] except for D_1 and D_2 are simple. 26 Since sl(n, C) (n ≥ 2) is a classical Lie algebra, it is simple, thus semisimple. Therefore, by criterion 4 above, su(n) is semisimple. One counterexample is the Heisenberg algebra {X, P, 1}, with [X, P] proportional to 1, which is neither simple nor semisimple: the center spanned by 1 is a nonzero abelian ideal.
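The Cartan criterion (item 4) can be tried out directly from structure constants via Eq. (50). This NumPy sketch compares two algebras. The first is sl(2, C) with the relations of Eq. (91). The second is the Heisenberg algebra {X, P, 1}, taking [X, P] = 1 as the only nonzero bracket; that normalization is chosen for illustration. The helper name is hypothetical.

```python
import numpy as np


def killing_from_structure_constants(f):
    """K_jk = sum_{l,m} f_jlm f_kml, Eq. (50), for f[j, k, l] with [e_j, e_k] = f_jkl e_l."""
    return np.einsum("jlm,kml->jk", f, f)


# sl(2, C) in the basis (e, f, h): [h, e] = 2e, [h, f] = -2f, [e, f] = h, Eq. (91).
e_i, f_i, h_i = 0, 1, 2
f_sl2 = np.zeros((3, 3, 3))
f_sl2[h_i, e_i, e_i], f_sl2[e_i, h_i, e_i] = 2, -2
f_sl2[h_i, f_i, f_i], f_sl2[f_i, h_i, f_i] = -2, 2
f_sl2[e_i, f_i, h_i], f_sl2[f_i, e_i, h_i] = 1, -1

# Heisenberg algebra in the basis (X, P, 1): [X, P] = 1, all other brackets vanish.
X, P, ONE = 0, 1, 2
f_heis = np.zeros((3, 3, 3))
f_heis[X, P, ONE], f_heis[P, X, ONE] = 1, -1

K_sl2 = killing_from_structure_constants(f_sl2)
K_heis = killing_from_structure_constants(f_heis)
print(K_sl2)                     # K(e, f) = 4, K(h, h) = 8, all other entries 0
print(np.linalg.det(K_sl2))      # nonzero: sl(2, C) is semisimple
print(np.linalg.det(K_heis))     # zero: the Heisenberg algebra is not semisimple
```

For the Heisenberg algebra every ad(x) is nilpotent, so its whole Killing form vanishes, not just its determinant.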

4.1.3 Levi decomposition

Given the tools to describe a Lie algebra’s commutativity, we have the Levi theorem.

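Theorem 5 [Levi decomposition]: Any finite-dimensional (real or complex) Lie algebra g can be written as a direct sum of vector spaces

$$\mathfrak{g} = \mathrm{rad}(\mathfrak{g}) \oplus \mathfrak{g}_{ss}, \tag{72}$$

where g_ss is a semisimple subalgebra (not necessarily an ideal), so that in general the sum is a semidirect sum of Lie algebras.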

The Levi theorem states that any Lie algebra can be written as the sum of a solvable part (the radical) and a semisimple part.

We can add more structure to the solvable part. If rad(g) = z(g), where z(g) = {a ∈ g | [a, b] = 0, ∀b ∈ g} is the center of g, then we say g is reductive. 27 Any semisimple (in particular, any simple) Lie algebra is reductive, since then rad(g) = z(g) = 0.

25 Recall that a symmetric bilinear form K on a finite-dimensional vector space V is nondegenerate/invertible if for all a ≠ 0 there exists b such that K(a, b) ≠ 0.

26 What is D_1???

27 Reductivity is a nice property for Lie algebras since it ensures [g, rad(g)] = 0, i.e., elements in an irrep will act as zero only

when they are zeros.


4.2 Reducible and irreducible reps

4.2.1 Reducibility

We now turn from Lie algebras to their reps. We make the following definitions.

Definition 0 [reducibility of reps]: A nontrivial rep ρ : g → gl(V) of a Lie algebra g is irreducible if it has no subreps other than the zero subrep and itself, i.e., if V has no g-invariant subspaces other than {0} and V. A rep is reducible if it is not irreducible.

For convenience, we say V is an irreducible g-module, or V is irreducible, if the rep ρ : g → gl(V) is irreducible.

As an example, we have the following theorem.

Theorem 6: The rep ρ : sl(n, C) → gl(Cn ) is irreducible.

Theorem 6 also means that the defining reps of sl(n, C) are irreducible. Following Thm. 6, we clarify the difference between the direct sum of vector spaces C^l and C^m and the direct sum of g-modules C^l and C^m. For arbitrary vector spaces C^l and C^m, we have C^{l+m} = C^l ⊕ C^m. However, for vector spaces C^l and C^m acted upon by reps of g, we have

$$\mathbb{C}^{l+m} \neq \mathbb{C}^l \oplus \mathbb{C}^m \quad (\mathfrak{g}\text{-modules}). \tag{73}$$

This is because for a rep ρ_{C^{l+m}} : g → gl(C^{l+m}), we only require an action

$$\rho_{\mathbb{C}^{l+m}}(a)\begin{pmatrix} u \\ v \end{pmatrix}$$

with a ∈ g, u ∈ C^l, and v ∈ C^m, which may mix u and v. However, for reps ρ_{C^l} : g → gl(C^l) and ρ_{C^m} : g → gl(C^m), following Eq. (57), the rep of their direct sum acts as

$$\rho_{\mathbb{C}^l\oplus\mathbb{C}^m}(a)\begin{pmatrix} u \\ v \end{pmatrix} = \begin{pmatrix} \rho_{\mathbb{C}^l}(a)u \\ 0 \end{pmatrix} + \begin{pmatrix} 0 \\ \rho_{\mathbb{C}^m}(a)v \end{pmatrix}.$$

Clearly, by definition ρ_{C^l⊕C^m} is reducible, since C^l and C^m are invariant subspaces, while ρ_{C^{l+m}} can be irreducible. In this sense, we have Eq. (73). Therefore Thm. 6 tells us that the defining rep of sl(n, C) on C^n is irreducible for n ∈ Z and n ≥ 2. Nevertheless, any rep of sl(n, C) on the g-module C^m ⊕ C^{n−m} is obviously reducible, for m, n − m ∈ Z+.

4.2.2 Complete reducibility

Irreducible reps, or irreps, of semisimple Lie algebras have the nice property that they can be classified completely, as we will see later. In fact, one of the most commonly used techniques in physics is to decompose a tensor product of irreps into a direct sum of irreps, which is then useful because of its block diagonal form. We formalize this process by introducing the complete reducibility of reps.

Definition 0 [complete reducibility of reps]: A rep of g acting on V is completely reducible if it is isomorphic to a direct sum of irreps, i.e., $V \cong \bigoplus_j n_j V_j$ for n_j ∈ N. Here the n_j's are called multiplicities, and the V_j's are g-modules of pairwise nonisomorphic irreps.

For convenience, we say V is a completely reducible g-module, or V is completely reducible, if the rep

on V is completely reducible.

Not every rep of a Lie algebra is completely reducible. Mathematically, we want to answer the following

three questions:

1. For what Lie algebras are all the reps completely reducible?

2. For a given completely reducible rep of a Lie algebra, what is the decomposition into irreps?

3. For a given Lie algebra, classify all irreps.

In this section, we answer the first question. The second question will be answered theoretically using characters in Sec. 4.3.5, but a practical method using weight decomposition will be given in Sec. 4.4. The third question will be briefly answered in Sec. 4.4.6.

The answer to the first question is the following theorem.


Theorem 7: Any complex finite-dimensional rep of a semisimple Lie algebra is completely reducible.

Since sl(n, C) is semisimple, any complex finite-dimensional rep of sl(n, C) is completely reducible, and by Thm. 3 so is any complex finite-dimensional rep of su(n). In Sec. 4.4, we will focus on the decomposition of reps of semisimple Lie algebras, with sl(n, C) as an example.

In the next section, we examine other theorems that are related to complete reducibility. This is a

deviation from purely Lie algebra structure theory since we discuss compact Lie groups and their associated

real Lie algebras such as SU(n) and su(n). However, the contents are very important in physics.

4.3 Complete reducibility and symmetry

4.3.1 Schur's lemma

One useful tool to decompose completely reducible reps into irreps is the use of intertwining operators.

Specifically, there is Schur’s lemma.

Theorem 8 [Schur's lemma]: Let ρ_V and ρ_W be complex irreps of g (respectively, G).

1. If ρ_V ≅ ρ_W (identify W = V), then Hom(V, W) = Hom(V, V) = C1.

2. If ρ_V ≇ ρ_W, then Hom(V, W) = 0.

Schur's lemma tells us how inequivalent irreps are fundamentally different from each other. Part 1 says that an irrep can be intertwined with itself only by multiples of the identity, i.e., equivalent irreps differ only by coefficients. Part 2 says that inequivalent irreps cannot be intertwined except by the zero map.

The following theorems are direct results of Schur’s lemma.

Theorem 9: If g (respectively, G) is commutative, then any complex irrep of g (respectively, G) is one-dimensional.

Theorem 10: Let ρ_V be a completely reducible rep of g (respectively, G).

1. If $V = \bigoplus_j V_j$, with ρ_{V_j} irreducible and pairwise nonisomorphic, then any intertwining operator Φ : V → V is of the form $\Phi = \bigoplus_j \lambda_j 1_{V_j}$.

2. If $V = \bigoplus_j n_j V_j = \bigoplus_j \mathbb{C}^{n_j} \otimes V_j$, with ρ_{V_j} irreducible and pairwise nonisomorphic, then any intertwining operator Φ : V → V is of the form $\Phi = \bigoplus_j A_j \otimes 1_{V_j}$, for A_j ∈ End(C^{n_j}).

Theorem 10 gives us a very effective way to analyze intertwining operators. Specifically, it is extremely useful in physics, since a Hamiltonian that commutes with the action of a symmetry group is an intertwining operator (see Sec. 4.3.2).
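Theorem 10 can be illustrated by counting intertwiners. The sketch below, assuming NumPy, computes the dimension of the space of Φ that commute with all generators of an su(2) rep. It does this by finding the null space of the linear map Φ ↦ Φρ(e_j) − ρ(e_j)Φ. The expected dimensions are:

* the defining irrep: 1 (Schur);
* two copies of the defining irrep: 2² = 4, since A_j ∈ End(C²);
* the defining irrep plus the trivial rep: 1 + 1 = 2.

The helper names and the basis e_j = −iσ_j/2 are assumptions for illustration.

```python
import numpy as np


def commutant_dim(gens, tol=1e-10):
    """Dimension of {Phi : Phi A = A Phi for every generator A}."""
    n = gens[0].shape[0]
    I = np.eye(n)
    # Row-major vec: vec(Phi A - A Phi) = (I ⊗ A^T - A ⊗ I) vec(Phi).
    M = np.vstack([np.kron(I, A.T) - np.kron(A, I) for A in gens])
    s = np.linalg.svd(M, compute_uv=False)
    return n * n - int(np.sum(s > tol))       # nullity = n^2 - rank


def block_diag(A, B):
    """diag(A, B): the action on a direct sum, Eq. (57)."""
    p, q = A.shape[0], B.shape[0]
    out = np.zeros((p + q, p + q), dtype=complex)
    out[:p, :p] = A
    out[p:, p:] = B
    return out


# Assumed su(2) generators in the defining rep: e_j = -i sigma_j / 2.
sx = np.array([[0, 1], [1, 0]], dtype=complex)
sy = np.array([[0, -1j], [1j, 0]], dtype=complex)
sz = np.array([[1, 0], [0, -1]], dtype=complex)
E = [-0.5j * s for s in (sx, sy, sz)]

irrep = E                                                  # C^2, irreducible
two_copies = [block_diag(e, e) for e in E]                 # 2 V with V = C^2
with_trivial = [block_diag(e, np.zeros((1, 1))) for e in E]  # C^2 ⊕ C (trivial rep)
print(commutant_dim(irrep), commutant_dim(two_copies), commutant_dim(with_trivial))  # 1 4 2
```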

4.3.2 Hamiltonian & first definition of symmetry

Consider a Hilbert space V, which is a complex vector space, and a Hamiltonian operator H : V → V. Then the following statements are equivalent:

1. There is a symmetry described by a Lie group G.

2. There is an action of G on V that leaves H invariant.

3. For all A ∈ G,

$$AHA^{-1} = H. \tag{74}$$

4. H is an intertwining operator that commutes with actions of G.

The above statements can be taken as a first definition of symmetry. Specifically, they lead to a very nice physics picture, as illustrated below.

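Given H as an intertwining operator H : V → V, suppose the Lie group G has a completely reducible rep on V such that $V = \bigoplus_j n_j V_j$ is the G-module. Then immediately from Thm. 10 we see that H has the form

$$H = \bigoplus_j A_j \otimes 1_{V_j} = \begin{pmatrix} \ddots & & \\ & \begin{matrix} c_{11} 1_{V_j} & \cdots & c_{1n_j} 1_{V_j} \\ \vdots & \ddots & \vdots \\ c_{n_j 1} 1_{V_j} & \cdots & c_{n_j n_j} 1_{V_j} \end{matrix} & \\ & & \ddots \end{pmatrix}. \tag{75}$$

If all the n_j's are 1, then

$$H = \bigoplus_j E_j 1_{V_j} = \begin{pmatrix} \ddots & & \\ & E_j 1_{V_j} & \\ & & \ddots \end{pmatrix}. \tag{76}$$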

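Since H has a block diagonal form, the following statements are true:

1. If H commutes with a rep of G, then each energy eigenspace is invariant under G. When all n_j = 1 and the E_j are distinct, each energy eigenspace is the module of an irrep. Group actions of G on an energy eigenstate will not change its energy.

2. When n_j = 1, a state that lives in the vector space of an irrep will stay there under time evolution. In general, time evolution keeps it within the block n_j V_j of copies of the same irrep. 28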

All of this holds only if G has a completely reducible rep on V.
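As a small physics illustration (a standard example, not taken from the text), consider two spin-1/2 particles with the exchange Hamiltonian H = S_1 · S_2, with the coupling set to 1. The sketch below, assuming NumPy, checks that H commutes with the total-spin generators of the tensor-product rep (58). These generators are written in the Hermitian physics convention, i.e., as i times anti-Hermitian generators up to sign. The sketch also checks that the energy levels have degeneracies 1 and 3, the dimensions of the two irreps in C² ⊗ C², as statement 1 above predicts.

```python
import numpy as np

sx = np.array([[0, 1], [1, 0]], dtype=complex)
sy = np.array([[0, -1j], [1j, 0]], dtype=complex)
sz = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2)

spin = [s / 2 for s in (sx, sy, sz)]                        # spin-1/2 operators
# Total spin: generators of the tensor-product rep on C^2 ⊗ C^2, Eq. (58).
S_total = [np.kron(s, I2) + np.kron(I2, s) for s in spin]
# Exchange Hamiltonian H = S1 · S2 (coupling 1, an illustrative choice).
H = sum(np.kron(s, s) for s in spin)

commutes = all(np.allclose(H @ S - S @ H, 0) for S in S_total)
energies = np.round(np.linalg.eigvalsh(H), 10)
levels, degeneracy = np.unique(energies, return_counts=True)
print(commutes, levels, degeneracy)   # singlet at -3/4 (x1), triplet at 1/4 (x3)
```

Here each n_j = 1 and the two energies differ, so each energy eigenspace is exactly one irrep.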

4.3.3 Unitary rep & the second definition of symmetry

It turns out that in our case G does have a completely reducible rep. To see this we first introduce the

notion of unitary reps.

Definition 0 [unitary rep]: A complex rep ρ : g → gl(V) of a real Lie algebra g is unitary if there is an inner product that is g-invariant, i.e., for all a ∈ g and v, w ∈ V,

$$(\rho(a)v, w) + (v, \rho(a)w) = 0, \tag{77}$$

or equivalently, if ρ(a) ∈ u(V), i.e.,

$$\rho(a)^\dagger = -\rho(a). \tag{78}$$

A complex rep ρ_G : G → GL(V) of a real Lie group G is unitary if there is an inner product that is G-invariant, i.e., for all A ∈ G and v, w ∈ V,

$$(\rho_G(A)v, \rho_G(A)w) = (v, w), \tag{79}$$

or equivalently, if ρ_G(A) ∈ U(V), i.e.,

$$\rho_G(A)^\dagger = \rho_G(A)^{-1}. \tag{80}$$

Unitary reps are nice because they are completely reducible.

Theorem 11: Every unitary rep is completely reducible.

Therefore, if we can show that the reps of the Lie groups we care about are unitary, then we are done. This is given by the following theorem.

28 We will discuss this vector space, the multiplet, in Sec. 4.3.4.


Theorem 12 [Peter-Weyl, Part II]: Any finite-dimensional rep of a compact Lie group G (respectively, g)

is unitary and thus completely reducible.

Notice that Thm. 12 discusses a narrower class of Lie algebras than Thm. 7, since compact Lie algebras

[e.g., su(n)] are the compact real forms of the corresponding complex semisimple Lie algebras [e.g., sl(n, C)].

However, Thm. 12 does provide the unitarity property that Thm. 7 does not guarantee.

From Thm. 12 we get the following statement.

Theorem 13: Any finite-dimensional rep of SU(n) and su(n) is unitary and completely reducible.

We can now collect our thoughts. Given a compact Lie group G with its associated real Lie algebra g and

the complexification gC , we know that any finite-dimensional rep of G, g, and gC is completely reducible.

Therefore, given a Hamiltonian operator, which is also an intertwining operator H : V → V , all the results

from Sec. 4.3.2 will stand.

This is nice, but where did the unitarity of reps of G and g play a role?

Recall that in Eq. (43) we introduced the adjoint rep of a Lie group, which maps the Lie algebra into itself, i.e., for a ∈ g and A ∈ G,

$$AaA^{-1} \in \mathfrak{g}. \tag{81}$$

Now that we know from Thm. 12 that any finite-dimensional rep of G or g is unitary, we can define the unitary reps ρ_G : G → U(V) and ρ : g → u(V). Then for A ∈ G and a ∈ g we have

$$\rho_G(A)\rho(a)\rho_G(A)^{-1} \in \mathfrak{u}(V). \tag{82}$$

Let us look at Eq. (82) and think about symmetry again. If states in a Hilbert space transform under a Lie group action that is unitary, then we say the group is a group of symmetry of the Hilbert space, since the group action preserves the inner product of states. Observables, as linear operators on the Hilbert space, are then well-defined if they transform together with the states. Transforming the observables and the states simultaneously should leave every measurable quantity (every expectation value) unchanged. Since states transform with ρ_G(A), the observables should transform by conjugation with these unitary reps, i.e., exactly as in Eq. (82). Since observables are Hermitian, a Hermitian operator (observable) acting on V can be constructed as iρ(a); these are the generators of the Lie algebra in the physics convention. By Eq. (82), the observables remain Hermitian after transformation under the group action.

In this sense, we give the second definition of symmetry in quantum mechanics.

Definition 0 [symmetry]: We say that there is a symmetry when there is a unitary rep of a Lie group acting

on the Hilbert space.

The Lie algebra generators both generate the unitary Lie group rep and transform under it as observables via conjugation. In general, a generator transforms into a linear combination of generators of the Lie algebra. Specifically, when the observable is the Hamiltonian H and the group action leaves H invariant, we recover the first definition of symmetry in Sec. 4.3.2.

4.3.4 Multiplet

In Sec. 4.3.2 we saw that for a completely reducible rep, its g-module can be written as $V = \bigoplus_j n_j V_j$. A state that lives in one of the g-modules V_j of the irreps remains in V_j when acted on by the intertwining operator H, provided n_j = 1. These g-modules of the irreps are called multiplets in physics.

Definition 0 [multiplet]: The g-module of an irrep ρ of Lie algebra g is called the multiplet of ρ.

The concept of multiplets appears everywhere in quantum physics. Here are some important properties:

1. Stability. Let ρ be a rep. If ρ is irreducible, then by definition states transform in the same multiplet under Lie algebra elements. If ρ is completely reducible, then due to the block diagonal form, states that are in a certain multiplet will transform within the same multiplet under actions of Lie algebra elements.

2. State multiplets. We refer to the multiplet expressed in a specific state basis {vj } as the state multiplet

associated with the basis.

3. The su(n) and sl(n, C) Lie algebras have the same multiplets, as a result of Thm. 3.

Next we define the fundamental multiplets of the su(n) algebras. 29

Definition 0 [fundamental multiplet]: If the sl(n, C)-module is Cn , then we call this sl(n, C)-module the

fundamental multiplet of sl(n, C). Similarly for su(n).

By Thm. 6, the fundamental multiplet of sl(n, C) is well-defined. The fundamental multiplet of sl(n, C) is the defining multiplet of sl(n, C). It is also a multiplet of lowest dimension among the nontrivial irreps of sl(n, C).

4.3.5 Characters

So far we have seen that a large class of reps are completely reducible. However, we have not talked

about the decomposition of completely reducible reps or the multiplicity yet. In this section we introduce

the concept of characters.

Definition 0 [characters of real compact Lie groups and algebras]: Let G and g be a real compact Lie group and its associated Lie algebra.

The character X_V of a finite-dimensional rep ρ_G : G → GL(V) is the function on G defined by

$$X_V(A) = \mathrm{Tr}[\rho_G(A)]. \tag{83}$$

The character χ_V of a finite-dimensional rep ρ : g → gl(V) is the function on g defined by

$$\chi_V(a) = \mathrm{Tr}\left[e^{\rho(a)}\right]. \tag{84}$$

Definition 0 has the following consequences:

1. Characters are basis-independent.

2. The characters are connected by

$$\chi_V(a) = X_V(e^a). \tag{85}$$

Since the characters of G and g are defined to be equal for corresponding elements, below we only discuss X_V.

3. If ρ_G is the trivial rep on a one-dimensional V, then X_V(A) ≡ 1. More generally, the trivial rep on V gives X_V(A) ≡ dim V.

4. For A, B ∈ G,

$$X_{V\oplus W} = X_V + X_W, \tag{86}$$

$$X_{V\otimes W} = X_V X_W, \tag{87}$$

$$X_{V^*} = X_V^*, \tag{88}$$

$$X_V(ABA^{-1}) = X_V(B). \tag{89}$$

29 I do not know if this definition is useful at all. See Greiner for more on multiplets.

Characters offer a theoretical way to determine the multiplicities through an inner product on C^∞(G, C) given by

$$(f_1, f_2) = \int_G f_1(A) f_2^*(A)\, dA, \tag{90}$$

where dA is the Haar measure, normalized so that ∫_G dA = 1. From Schur's lemma in Thm. 8, we can prove

Theorem 14: Let ρV and ρW be nonisomorphic complex irreps of a compact Lie group G. Then

1. (XV , XW ) = 0, which means the characters XV and XW are orthogonal with respect to the inner

product.

2. (XV , XV ) = 1.

This implies the following theorem.

Theorem 15: Let ρ_V be a complex rep of a compact real Lie group G. Then

1. ρ_V is an irrep iff (X_V, X_V) = 1.

2. V can be uniquely written in the form $V = \bigoplus_j n_j V_j$, where the V_j's are G-modules of pairwise nonisomorphic irreps and the multiplicities n_j are given by $n_j = (X_V, X_{V_j})$.

Theorem 15 gives a way to calculate n_j. In practice, it is usable only for finite groups and special cases of Lie groups, because the inner product (90) is difficult to calculate. In Sec. 4.4 we will develop a much more practical method using weight decomposition.
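SU(2) is one of the special cases where Eq. (90) is tractable, because characters are class functions. The NumPy sketch below relies on two standard facts that are assumed rather than derived in the text:

* Every A ∈ SU(2) is conjugate to diag(e^{it}, e^{−it}) with 0 ≤ t ≤ π.
* For class functions, the normalized Haar integral reduces to (2/π)∫₀^π f_1(t) f_2*(t) sin²t dt (the Weyl integration formula for SU(2)).

With these, the sketch checks Thm. 14 for the irreps labeled by highest weight n as in Thm. 17. It then uses Thm. 15 to decompose V_1 ⊗ V_1.

```python
import numpy as np

# Assumptions (standard facts, not derived in the text): A in SU(2) is conjugate to
# diag(e^{it}, e^{-it}), 0 <= t <= pi, and for class functions the normalized Haar
# integral (90) reduces to (2/pi) * integral_0^pi f1(t) f2*(t) sin^2(t) dt.
N = 2000
t = (np.arange(N) + 0.5) * np.pi / N      # midpoint grid on (0, pi), avoids sin(t) = 0
dt = np.pi / N


def chi(n):
    """Character of the (n+1)-dim irrep: sum_k e^{i(n-2k)t} = sin((n+1)t) / sin(t)."""
    return np.sin((n + 1) * t) / np.sin(t)


def inner(f1, f2):
    """Inner product (90) restricted to class functions on SU(2)."""
    return 2 / np.pi * np.sum(f1 * np.conj(f2) * np.sin(t) ** 2) * dt


# Theorem 14: irreducible characters are orthonormal.
gram = np.array([[inner(chi(m), chi(n)) for n in range(4)] for m in range(4)])

# Theorem 15: multiplicities in V_1 ⊗ V_1, whose character is chi_1^2 by Eq. (87).
chi_prod = chi(1) ** 2
mult = [int(round(float(inner(chi_prod, chi(n)).real))) for n in range(4)]
print(np.allclose(gram, np.eye(4)), mult)   # True [1, 0, 1, 0]
```

The result [1, 0, 1, 0] says V_1 ⊗ V_1 ≅ V_0 ⊕ V_2, i.e., two spin-1/2 particles combine into a singlet and a triplet. The midpoint rule is exact here up to rounding, because the integrands are low-degree trigonometric polynomials.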

4.4 Reps of sl(n, C)

In this section we answer the second question we asked in Sec. 4.2, i.e., how to find the decomposition

of a completely reducible rep. We will assume g to be a finite-dimensional complex semisimple

Lie algebra for the remainder of Sec. 4. Specifically, we use sl(n, C) as an example, which is also our

primary goal. For simplicity, whenever we specify the g-module, we will write ρ(a)v = av for a ∈ g, i.e., we

treat a not only as the Lie algebra element, but also as an operator. This notation is closer to the one used

in physics.

We start from the simplest case of sl(n, C), the sl(2, C) Lie algebra.

4.4.1 Reps of sl(2, C)

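The most commonly used basis of sl(2, C) is {e, f, h}, which satisfies

$$[h, e] = 2e, \quad [h, f] = -2f, \quad [e, f] = h. \tag{91}$$

In the defining rep, which is on C^2, these basis elements are commonly chosen as

$$e = \sigma_+ = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \quad f = \sigma_- = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}, \quad h = \sigma_z = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}. \tag{92}$$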

Notice that h is diagonal and has eigenvalues ±1.

More generally, in an arbitrary finite-dimensional rep, h has complex eigenvalues by the fundamental theorem of algebra, and it can in fact be diagonalized. This is formalized as the weight decomposition.


Definition 0 [weight, sl(2, C)]: Let V be an sl(2, C)-module. A vector v ∈ V is called a vector of weight λ, λ ∈ C, if it is an eigenvector of h with eigenvalue λ, i.e.,

$$hv = \lambda v, \quad v \in V_\lambda, \tag{93}$$

where the subspace of vectors with eigenvalue λ is denoted as V_λ ⊂ V.

Then the actions of e and f on V_λ can be derived from the commutation relations (91). For v ∈ V_λ, h(ev) = [h, e]v + e(hv) = (λ + 2)ev and h(fv) = [h, f]v + f(hv) = (λ − 2)fv, yielding

$$ev \in V_{\lambda+2}, \tag{94}$$

$$fv \in V_{\lambda-2}. \tag{95}$$

Since e brings a vector from V_λ to V_{λ+2}, operator e is usually called the raising operator. Similarly, since f brings a vector from V_λ to V_{λ−2}, operator f is usually called the lowering operator.

We can thus apply the weight decomposition to reps of sl(2, C).

Theorem 16 [weight decomposition, sl(2, C)]: Every finite-dimensional rep of sl(2, C) has a weight decomposition, i.e.,

$$V = \bigoplus_\lambda V_\lambda. \tag{96}$$

By Thm. 7, any complex finite-dimensional rep of sl(2, C) is completely reducible. Therefore, it suffices

to discuss only the weight decomposition of irreps. Specifically, we define the highest weight of V .

Definition 0 [highest weight]: Let V be irreducible. A weight λ of V (V_λ ≠ 0) is the highest weight if for every weight λ′ of V we have

$$\mathrm{Re}\,\lambda \ge \mathrm{Re}\,\lambda'. \tag{97}$$

We can now write down the classification of irreps of sl(2, C).

Theorem 17 [classification, irreps of sl(2, C)]: Let {h, e, f} be the basis of sl(2, C).

1. For any n ∈ N, let V_n be an (n + 1)-dimensional vector space with basis {v_0, v_1, · · · , v_n}. Define the action of sl(2, C) by

$$hv_k = (n - 2k)v_k, \tag{98}$$

$$fv_k = (k + 1)v_{k+1}, \quad k < n; \qquad fv_n = 0, \tag{99}$$

$$ev_k = (n + 1 - k)v_{k-1}, \quad k > 0; \qquad ev_0 = 0. \tag{100}$$

Then ρ_{V_n} : sl(2, C) → gl(V_n) is an irrep. We call this irrep the irrep with highest weight n.

2. For n ≠ m, reps ρ_{V_n} and ρ_{V_m} are nonisomorphic.

3. Every finite-dimensional irrep of sl(2, C) is isomorphic to one of the reps ρVn .

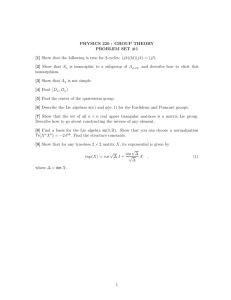

See Fig. 1 for a graphical presentation of Thm. 17.
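The following NumPy sketch builds the matrices of Thm. 17 and checks them. The function name `irrep_sl2` is our own illustrative choice. The sketch verifies the commutation relations (91) for several n and confirms that n = 1 reproduces the matrices of Eq. (92). It also shows that the diagonal of h lists the weights n, n − 2, · · · , −n, consistent with the raising and lowering behavior of Eqs. (94)–(95).

```python
import numpy as np


def irrep_sl2(n):
    """Matrices of h, e, f on V_n in the basis {v_0, ..., v_n}, following Eqs. (98)-(100).

    Column k of each matrix holds the image of v_k.
    """
    dim = n + 1
    h = np.diag([float(n - 2 * k) for k in range(dim)])   # h v_k = (n - 2k) v_k, Eq. (98)
    e = np.zeros((dim, dim))
    f = np.zeros((dim, dim))
    for k in range(dim):
        if k < n:
            f[k + 1, k] = k + 1          # f v_k = (k+1) v_{k+1}, Eq. (99)
        if k > 0:
            e[k - 1, k] = n + 1 - k      # e v_k = (n+1-k) v_{k-1}, Eq. (100)
    return h, e, f


def comm(a, b):
    return a @ b - b @ a


def satisfies_sl2_relations(h, e, f):
    """Check the commutation relations (91): [h,e] = 2e, [h,f] = -2f, [e,f] = h."""
    return bool(np.allclose(comm(h, e), 2 * e)
                and np.allclose(comm(h, f), -2 * f)
                and np.allclose(comm(e, f), h))


print(all(satisfies_sl2_relations(*irrep_sl2(n)) for n in range(8)))  # True

h1, e1, f1 = irrep_sl2(1)         # n = 1 reproduces sigma_z, sigma_+, sigma_- of Eq. (92)
print(h1, e1, f1, sep="\n")
print(np.diag(irrep_sl2(3)[0]))   # weights of V_3: 3, 1, -1, -3
```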

The classification of irreps of sl(2, C) provides the fundamental intuition for the classification of irreps

of semisimple Lie algebras. To generalize this weight decomposition to sl(n, C) and other semisimple Lie

algebras, we require the concept of the Cartan subalgebra.


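[Figure 1 diagram: chain of basis vectors v_0, v_1, · · · , v_k, · · · , v_{n−1}, v_n. The self-loops are labeled n, n − 2, · · · , n − 2k, · · · , −n + 2, −n. The top arrows (v_k → v_{k−1}) are labeled n, n − 1, · · · , n + 1 − k, · · · , 1. The bottom arrows (v_k → v_{k+1}) are labeled 1, 2, · · · , k + 1, · · · , n.]

Figure 1: Action of sl(2, C) on V_n in the irrep ρ_{V_n}. Operator h sends a vector v_k back to itself via the self-loop arrows. Operators e and f act as the top and bottom arrows, respectively.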

4.4.2 Cartan subalgebra

For a finite-dimensional complex semisimple Lie algebra, the Cartan subalgebra can be defined as follows.

Definition 0 [Cartan subalgebra of complex semisimple Lie algebras]: A Cartan subalgebra h is a maximal abelian subalgebra of the Lie algebra g consisting of semisimple elements. Semisimple elements are elements x ∈ g such that the adjoint operator ad(x) : g → g is semisimple (diagonalizable).

In short, a Cartan subalgebra is a maximal abelian subalgebra whose elements are diagonalizable in the adjoint rep. Every complex semisimple Lie algebra has a Cartan subalgebra, unique up to conjugation (an inner automorphism of g); in particular, all Cartan subalgebras are isomorphic. Their common dimension l is called the rank of the Lie algebra.

Definition 0 [rank]: The rank l of g is the dimension of the Cartan subalgebra h, i.e.,

$$l = \dim \mathfrak{h}. \tag{101}$$

For sl(n, C),

$$\mathfrak{h} = \left\{ \mathrm{diag}\{c_1, c_2, \cdots, c_n\} \;\middle|\; c_j \in \mathbb{C},\ \sum_j c_j = 0 \right\} \tag{102}$$

is a Cartan subalgebra. This will be the most important Cartan subalgebra we use in this work.
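Here is a NumPy sketch of Eq. (102) for n = 4; the random choice of the c_j is only for illustration. It checks three properties:

* traceless diagonal matrices commute;
* ad(h) acts diagonally on the matrix units E_ij with eigenvalues c_i − c_j, so h consists of semisimple elements;
* the space has dimension n − 1.

Maximality of h is not checked here.

```python
import numpy as np

n = 4
rng = np.random.default_rng(3)


def unit(i, j):
    """Matrix unit E_ij in gl(n)."""
    m = np.zeros((n, n), dtype=complex)
    m[i, j] = 1.0
    return m


c = rng.normal(size=n) + 1j * rng.normal(size=n)
c -= c.mean()                         # traceless: sum_j c_j = 0, Eq. (102)
h = np.diag(c)

# ad(h) E_ij = [h, E_ij] = (c_i - c_j) E_ij: the E_ij diagonalize ad(h).
diag_ok = all(
    np.allclose(h @ unit(i, j) - unit(i, j) @ h, (c[i] - c[j]) * unit(i, j))
    for i in range(n) for j in range(n)
)

# Any two elements of h commute, so h is abelian.
c2 = rng.normal(size=n)
c2 -= c2.mean()
h2 = np.diag(c2)
abelian = bool(np.allclose(h @ h2, h2 @ h))

# Rank l = dim h = n - 1: the matrices E_jj - E_{j+1,j+1} form a basis of h.
basis = [unit(j, j) - unit(j + 1, j + 1) for j in range(n - 1)]
rank = int(np.linalg.matrix_rank(np.array([b.diagonal() for b in basis])))
print(diag_ok, abelian, rank)   # True True 3
```

Because the E_ij form a basis of gl(n) and each one is an eigenvector of ad(h), ad(h) is diagonalizable on gl(n). It is therefore also diagonalizable on the invariant subspace sl(n, C).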