Chapter 3

Process Concepts


This study source was downloaded by 100000843328917 from CourseHero.com on 11-23-2022 12:56:52 GMT -06:00


Exercise Solutions

3.1 Give several definitions of process.

Ans:

See Section 3.1.1, Definition of Process.

Why, do you suppose, is there no universally accepted definition?

Ans: Probably because a process is an abstraction that is simply hard to describe, and because its definition is sensitive to particular environments. If the students have difficulty buying this, ask them to define “life.”

3.2 Sometimes the terms user and process are used interchangeably. Define each of these terms.

Ans: User: The person who uses the computer. Inside the computer, the user is often manifested as a process or a group of processes working together on the user’s behalf.

Process: see Section 3.1.1, Definition of Process.

In what circumstances do they have similar meanings?

Ans: Trivially, in the case of a single-user, single-tasking personal computer system, these terms are often considered to be identical.

3.3 Why does it not make sense to maintain the blocked list in priority order?

Ans: Because processes do not unblock in priority order; rather, they unblock unpredictably, in the order in which their I/O completions or event completions occur.
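To make the point concrete, here is a minimal C sketch, assuming a hypothetical, simplified `struct pcb` that records the event each blocked process waits for. The blocked list is kept in arrival order, and a completion removes whichever process waits on that event, wherever it sits; the `priority` field plays no role at removal time, so keeping the list sorted by it would buy nothing.

```c
#include <stddef.h>

/* Hypothetical, simplified PCB: only the fields this sketch needs. */
struct pcb {
    int pid;
    int priority;      /* irrelevant when a process unblocks */
    int event_id;      /* I/O or event completion the process waits for */
    struct pcb *next;
};

/* Append a newly blocked process at the tail of the blocked list
   (a real kernel would keep a tail pointer instead of walking). */
void block_process(struct pcb **blocked, struct pcb *p)
{
    p->next = NULL;
    while (*blocked != NULL)
        blocked = &(*blocked)->next;
    *blocked = p;
}

/* Called when an event completes: unlink and return the first process
   waiting on that event, or NULL if no process was waiting for it. */
struct pcb *unblock_on_event(struct pcb **blocked, int event_id)
{
    for (; *blocked != NULL; blocked = &(*blocked)->next) {
        if ((*blocked)->event_id == event_id) {
            struct pcb *p = *blocked;
            *blocked = p->next;
            p->next = NULL;
            return p;
        }
    }
    return NULL;
}
```

In the usage below, the low-priority process (priority 1) unblocks first simply because its event completed first.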

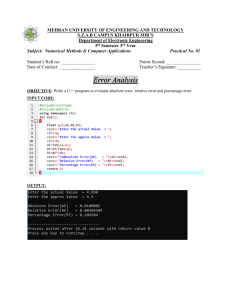

3.4 The ability of one process to spawn a new process is an important capability, but it is not without its dangers. Consider the consequences of allowing a user to run the process in Fig. 3.11. Assume that fork() is a system call that spawns a child process.

a. Assuming that a system allowed such a process to run, what would the consequences be?

Ans:

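The program in Fig. 3.11 could quickly saturate the system with new processes. Every process it creates runs the same loop, so parents and children alike keep forking, and the number of processes roughly doubles with each iteration until the process table or memory is exhausted. This is not all that different from what happened in the now legendary Internet Worm incident, which occurred in November of 1988. Worms are discussed in Chapter 19, Security.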

b. Suppose that you as an operating systems designer have been asked to build in safeguards against such processes. We know (from the “Halting Problem” of computability theory) that it is impossible, in the general case, to predict the path of execution a program will take. What are the consequences of this fundamental result from computer science on your ability to prevent processes like the above from running?

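#include <stdbool.h>   /* true */
#include <unistd.h>    /* fork() */

int main() {
    while( true ) {
        fork();   /* parent and every child keep looping and forking */
    }
}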

Figure 3.11 | Code for Exercise 3.4.


Ans:

Quite simply, we cannot write a program that predicts, in the general case, the path of execution another program will take. Therefore, we could never be assured of automatically detecting such programs. One could certainly, however, write a program to detect specific program segments that could cause devastation (antivirus software, covered in Section 19.6.3, Antivirus Software, does something similar). This approach could take substantial processor time.

c. Suppose you decide that it is inappropriate to reject certain processes, and that the best approach is to place certain runtime controls on them. What controls might the operating system use to detect processes like the above at runtime?

Ans:

We know we cannot detect such programs in the general case. We might choose to limit the number of processes each process can spawn, either in total or in a period of time. If the limit is exceeded, the system could terminate the process or notify the system administrator.
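A minimal sketch of such a runtime control, with hypothetical names (`spawn_budget`, `may_spawn`) and illustrative limits: each process may create at most `max_total` children over its lifetime and at most `max_per_window` within any fixed `window_secs`-second window. Where an operating system would actually call this check, and what it does on refusal, are assumptions left to the designer.

```c
#include <stdbool.h>
#include <time.h>

/* Hypothetical per-process accounting kept alongside the PCB. */
struct spawn_budget {
    unsigned max_total;      /* lifetime limit on children */
    unsigned max_per_window; /* limit within one time window */
    time_t   window_secs;    /* length of a window */
    unsigned total;          /* children spawned so far */
    unsigned in_window;      /* children spawned in the current window */
    time_t   window_start;   /* when the current window began */
};

/* Returns true and charges the budget if another child is allowed at
   time `now`; returns false if either limit would be exceeded, at which
   point the OS could terminate the process or alert the administrator. */
bool may_spawn(struct spawn_budget *b, time_t now)
{
    if (now - b->window_start >= b->window_secs) {
        b->window_start = now;   /* begin a fresh window */
        b->in_window = 0;
    }
    if (b->total >= b->max_total || b->in_window >= b->max_per_window)
        return false;
    b->total++;
    b->in_window++;
    return true;
}
```

A fixed window is the simplest choice; a sliding window would smooth out bursts at window boundaries at the cost of more bookkeeping.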

d. Would the controls you propose hinder a process’s ability to spawn new processes?

Ans:

Yes. We might require that processes wishing to spawn large numbers of processes notify the operating system or the system administrator in advance and receive permission to do so.

e. How would the implementation of the controls you propose affect the design of the system’s process handling mechanisms?

Ans:

Clearly, the process-handling mechanisms would need to be able to determine whether process spawning is occurring within the parameters of the proposed mechanism. Some operating systems already impose limits of this kind, because their per-user process tables are not designed to grow indefinitely. These systems return an error code or throw an exception to the process attempting to spawn the new process. To see how Linux addresses this problem, see Section 20.5.2, Process Scheduling.
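On POSIX systems, the error-code route looks like this: `fork()` returns -1 and sets `errno`, to `EAGAIN` when a system or per-user process limit would be exceeded, or to `ENOMEM` when memory is insufficient. A short sketch of a spawner that reports the refusal instead of retrying blindly; the helper name `spawn_child` is hypothetical.

```c
#include <errno.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Forks a child that exits immediately with `code`. Returns the child's
   PID, or -1 if the operating system refused to create the process. */
pid_t spawn_child(int code)
{
    pid_t pid = fork();
    if (pid == -1) {
        /* EAGAIN: a process limit was reached; ENOMEM: out of memory. */
        fprintf(stderr, "fork failed: %s\n", strerror(errno));
        return -1;
    }
    if (pid == 0)
        _exit(code);   /* child: leave without flushing the parent's stdio buffers */
    return pid;        /* parent */
}
```

The caller decides what a refusal means: back off and retry later, shed work, or report the condition to the user.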

3.5 In single-user dedicated systems, it is generally obvious when a program goes into an infinite loop. But in multiuser systems running large numbers of processes, it cannot easily be determined that an individual process is not progressing.

a. Can the operating system determine that a process is in an infinite loop?

Ans:

Perhaps in some obvious cases. But again, the Halting Problem of computability theory tells us that we cannot predict in the general case the path of execution a program will take, so we can never build a program to answer this question automatically for all possible programs. Also, just because a program has been looping for a long time does not necessarily imply that it is indeed in an infinite loop.

b. What reasonable safeguards might be built into an operating system to prevent processes in infinite loops from running indefinitely?

Ans:

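Operating systems typically handle this by using timeout mechanisms, such as a limit on the processor time a process may consume; when the limit expires, the operating system can terminate the process or notify its owner or the system administrator.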
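One such timeout on UNIX-like systems is the CPU-time resource limit. After `setrlimit(RLIMIT_CPU, ...)`, the kernel sends `SIGXCPU` once the process has used its soft limit of processor seconds, and the default action for that signal terminates the process. In the sketch below a child deliberately loops forever under a 1-second limit; the helper name `run_looping_child` is hypothetical, and the SIGKILL-at-hard-limit behavior noted in the comment is Linux's.

```c
#include <signal.h>
#include <sys/resource.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Runs a child that loops forever under a CPU-time limit and returns the
   number of the signal that ended it (0 if it exited normally, -1 on error). */
int run_looping_child(void)
{
    pid_t pid = fork();
    if (pid == -1)
        return -1;
    if (pid == 0) {
        /* Soft limit 1 s of CPU -> SIGXCPU; hard limit 2 s (Linux then sends SIGKILL). */
        struct rlimit lim = { .rlim_cur = 1, .rlim_max = 2 };
        if (setrlimit(RLIMIT_CPU, &lim) != 0)
            _exit(127);
        volatile unsigned long spins = 0;
        for (;;)
            spins++;   /* the runaway loop */
    }
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    return WIFSIGNALED(status) ? WTERMSIG(status) : 0;
}
```

Note that the limit cannot tell a genuinely infinite loop from a long but legitimate computation, which is exactly the caveat raised in part (a).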

3.6 Choosing the correct quantum size is important to the effective operation of an operating system. Later in the text we will consider the issue of quantum determination in depth. For now, let us anticipate some of the problems.

Consider a single-processor timesharing system that supports a large number of interactive users. Each time a process gets the processor, the interrupting clock is set to interrupt after the quantum expires. This allows the operating system to prevent any single process from monopolizing the processor and to provide rapid responses to interactive processes. Assume a single quantum for all processes on the system.


a. What would be the effect of setting the quantum to an extremely large value, say 10 minutes?

Ans:

The obvious problem would be that a processor-bound program would monopolize the system during each quantum it received, thus preventing rapid responses to the interactive processes.

b. What if the quantum were set to an extremely small value, say a few processor cycles?

Ans:

The problem here would be that the operating system would be doing so much context switching that the entire system would become sluggish, and few, if any, processes, whether processor-bound or I/O-bound, would be making efficient progress.

c. Obviously, an appropriate quantum must be between the values in (a) and (b). Suppose you could turn a dial and vary the quantum, starting with a small value and gradually increasing it. How would you know when you had chosen the “right” value?

Ans:

Ideally, when all the I/O-bound and processor-bound processes were progressing most efficiently: interactive responses would be fast, while processor-bound jobs would still be progressing well.
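A back-of-the-envelope model makes the dial concrete. Assume, purely for illustration, that every context switch costs a fixed time s and that n processor-bound processes share the processor round-robin with quantum q. Then the fraction of processor time spent on useful work is q/(q+s), and one full round-robin cycle, from the start of a process's quantum to the start of its next one, takes n(q+s). The function names below are hypothetical.

```c
#include <stdio.h>

/* Fraction of processor time spent running processes rather than
   switching between them, for quantum q and switch cost s. */
double useful_fraction(double q, double s)
{
    return q / (q + s);
}

/* Time from the start of one quantum of a process to the start of its
   next quantum: n quanta plus n context switches. */
double cycle_time(int n, double q, double s)
{
    return n * (q + s);
}

/* "Turn the dial": print both measures for a range of quanta
   (times in milliseconds; n and s are illustrative inputs). */
void turn_dial(int n, double s)
{
    static const double quanta[] = { 0.01, 0.1, 1.0, 10.0, 100.0, 1000.0 };
    for (size_t i = 0; i < sizeof quanta / sizeof quanta[0]; i++)
        printf("q = %8.2f ms  useful = %6.2f%%  cycle = %10.2f ms\n",
               quanta[i], 100.0 * useful_fraction(quanta[i], s),
               cycle_time(n, quanta[i], s));
}
```

For example, `turn_dial(50, 0.1)` (50 processes and a 0.1 ms switch cost, both invented numbers) shows that at q = 0.01 ms only about 9% of the processor does useful work, as in part (b), while at q = 1000 ms about 99.99% does but a full cycle exceeds 50 seconds, as in part (a). The "right" setting lies where both numbers are acceptable.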

d. What factors make this value right from the user’s standpoint?

Ans:

The user wants fast interactive response times and fast turnaround on jobs.

e. What factors make it right from the system’s standpoint?

Ans:

The system wants to minimize overhead, maximize resource utilization, and deliver most of the computing resources to users.

3.7 In a block/wakeup mechanism, a process blocks itself to wait for an event to occur. Another process must detect that the event has occurred and wake up the blocked process. It is possible for a process to block itself to wait for an event that will never occur.

a. Can the operating system detect that a blocked process is waiting for an event that will never occur?

Ans:

Again, in some specific cases, yes, but in the general case, no. Many events are such that they may indeed never occur, or they may not occur for a long time. In Chapter 7, Deadlock and Indefinite Postponement, we consider both deadlock and indefinite postponement, and we see that indefinite postponement can be indistinguishable from deadlock to a user who cannot tell whether an event will merely take a long time to happen or will never happen.

b. What reasonable safeguards might be built into an operating system to prevent processes from waiting indefinitely for an event?

Ans:

One common approach is to use nonblocking waits, so that a process may go about its business and be notified asynchronously if and when the event occurs (see Chapter 5, Asynchronous Concurrent Execution). Another technique is to associate a timer with the blocking mechanism so that, if the event does not occur within the designated amount of time, the process regains control and continues about its business, possibly retrying the portion of the program that issued the event wait.
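The timer-based technique can be sketched with POSIX `poll()`, whose last argument is a timeout in milliseconds. Here the awaited "event" is data arriving on a pipe that nobody ever writes to; the wait gives up after 100 ms and the caller regains control. Both helper names are hypothetical.

```c
#include <poll.h>
#include <unistd.h>

/* Waits up to timeout_ms for fd to become readable.
   Returns 1 if data arrived, 0 on timeout, -1 on error. */
int wait_with_timeout(int fd, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int n = poll(&pfd, 1, timeout_ms);
    if (n < 0)
        return -1;                 /* e.g. interrupted by a signal */
    if (n == 0)
        return 0;                  /* timer expired: regain control */
    return (pfd.revents & POLLIN) ? 1 : -1;
}

/* Waits on a pipe whose write end stays open but unused: the event never
   occurs, yet the caller gets control back after 100 ms. */
int demo_event_never_happens(void)
{
    int fds[2];
    if (pipe(fds) != 0)
        return -1;
    int r = wait_with_timeout(fds[0], 100);
    close(fds[0]);
    close(fds[1]);
    return r;
}
```

On a timeout the program can retry the wait, report an error, or fall back to other work, as the answer suggests.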

3.8 One reason for using a quantum to interrupt a running process after a “reasonable” period is to allow the operating system to regain the processor and dispatch the next process. Suppose that a system does not have an interrupting clock, and the only way a process can lose the processor is to relinquish it voluntarily. Suppose also that no dispatching mechanism is provided in the operating system.

a. Describe how a group of user processes could cooperate among themselves to effect a user-controlled dispatching mechanism.

Ans:

Each user could use a reasonable amount of processor time, then pass control to the next user. This could be accomplished by hardcoded transfers or system calls.
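A sketch of such cooperation, under the assumption that each "process" is written as a step function that does a bounded amount of work and then returns; returning is its voluntary surrender of the processor. The names (`task`, `step_fn`, `run_cooperatively`, `countdown`) are hypothetical. The loop has no way to take the processor back from a step that never returns, which is exactly the danger raised in part (b).

```c
#include <stdbool.h>
#include <stddef.h>

/* One unit of work; returns true while the task still has work left.
   Returning at all is the task's voluntary yield. */
typedef bool (*step_fn)(void *state);

struct task {
    step_fn step;
    void   *state;
    bool    done;
};

/* Round-robin among cooperating tasks until all have finished.
   Returns the number of steps dispatched. */
size_t run_cooperatively(struct task *tasks, size_t n)
{
    size_t steps = 0, remaining = 0;
    for (size_t i = 0; i < n; i++)
        if (!tasks[i].done)
            remaining++;
    while (remaining > 0) {
        for (size_t i = 0; i < n; i++) {
            if (tasks[i].done)
                continue;
            steps++;
            if (!tasks[i].step(tasks[i].state)) {
                tasks[i].done = true;
                remaining--;
            }
        }
    }
    return steps;
}

/* Example task: counts down from its initial value, one per turn. */
bool countdown(void *state)
{
    int *left = state;
    return --*left > 0;
}
```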

b. What potential dangers are inherent in this scheme?

Ans:

Certainly the scheme is vulnerable to either accidental or malicious monopolization of the processor. A program that enters an infinite loop could bring down the system, as could a program that simply decides to continue using the processor rather than passing control to the next user.

c. What are the advantages to the users over a system-controlled dispatching mechanism?

Ans:

The problem with a system-controlled dispatching mechanism is that individual users could suffer in the dispatching that is occurring “for the greater good” of all the users on the system. The kind of group-cooperative system proposed here might be more effective for situations in which a group of processes needs to have more precise control over its operation. As we will see in Chapter 8, Processor Scheduling, various “fair-share” scheduling schemes can guarantee that groups of processes receive designated portions of the processor resource.

3.9 In some systems, a spawned process is destroyed automatically when its parent is destroyed; in other systems, spawned processes proceed independently of their parents, and the destruction of a parent has no effect on its children.

a. Discuss the advantages and disadvantages of each approach.

Ans:

The answer depends on the nature of the application. Suppose that the parent truly is a boss process, responsible in some hierarchical sense for controlling all its child processes; the parent represents the activity as a whole. If the parent spawns many child processes and the master activity it represents is terminated, then it would be appropriate simply to terminate the parent process and have all its child processes terminated automatically. The next answer gives a situation in which this kind of automatic termination is not desirable.

b. Give an example of a situation in which destroying a parent should specifically not result in the destruction of its children.

Ans:

When a parent process terminates, one of its child processes may be modifying data in a file system. If that child is terminated prematurely, the data stored in the file system may be left in an inconsistent (i.e., invalid) state. Depending on when the child process is terminated, this could result in lost or inconsistent data. In this case it is better to allow the child process to continue to execute until file-system data integrity is assured.

3.10 When interrupts are disabled on most devices, they remain pending until they can be processed when interrupts are again enabled. No further interrupts are allowed. The functioning of the devices themselves is temporarily halted. But in real-time systems, the environment that generates the interrupts is often disassociated from the computer system. When interrupts are disabled on the computer system, the environment keeps on generating interrupts anyway. These interrupts can be lost.


a. Discuss the consequences of lost interrupts.

Ans:

A good answer for many of the questions in the text is: “It depends.” A real-time system could be monitoring an industrial process control application. The scheme could be one in which interrupts are generated many times per second to report the temperature in a vat or the pressure in a line. These interrupts provide values that keep the monitoring process up to date. If a value or two is lost, it is normally of little consequence. There are, however, situations in which losing an interrupt could be devastating, such as in a nuclear power plant where an interrupt is generated to indicate that unless the temperature is immediately lowered there will be an explosion.

b. In a real-time system, is it better to lose occasional interrupts or to halt the system temporarily until interrupts are again enabled?

Ans:

The real problem here is that it may simply not be feasible to halt the system. For example, in an air traffic control system we certainly could not cause the planes to stop flying; in a nuclear reactor we could not alter the reaction for the convenience of the computer system that monitors it. So, in these cases we might simply lose some interrupts when the system is preoccupied. Another reason that losing interrupts might be acceptable is that one interrupt might provide information that updates information provided by another interrupt. In this case, it is acceptable if occasional interrupts are lost, because the next interrupt will quickly “bring the system up to date.”
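A small simulation illustrates the "bring the system up to date" argument. It assumes a hypothetical device line with a single pending-interrupt bit and a data register that always holds the latest reading (a common design, but real interrupt controllers vary). Interrupts raised while interrupts are disabled coalesce into that one pending bit, so all but one are lost, yet the handler still sees the newest value. All names are invented for this sketch.

```c
#include <stdbool.h>

/* Hypothetical model of one interrupt line. */
struct device_line {
    bool pending;   /* only one interrupt can be remembered */
    int  latest;    /* newest reading; each interrupt overwrites it */
    int  raised;    /* interrupts generated by the environment */
    int  serviced;  /* interrupts actually handled */
    int  seen;      /* last value the handler observed */
};

static void service(struct device_line *d)
{
    d->pending = false;
    d->serviced++;
    d->seen = d->latest;
}

/* The environment raises an interrupt carrying a new reading. */
void raise_irq(struct device_line *d, int reading, bool enabled)
{
    d->raised++;
    d->latest = reading;
    d->pending = true;
    if (enabled)
        service(d);            /* handled immediately */
}

/* Re-enabling interrupts services whatever is still pending. */
void enable_irqs(struct device_line *d)
{
    if (d->pending)
        service(d);
}
```

Raising three interrupts while disabled and then re-enabling yields `raised == 3` but `serviced == 1`; because `seen` holds the third reading, a monitor that needs only the current temperature loses nothing essential, whereas a system that must act on every individual interrupt would.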

3.11 As we will see repeatedly throughout this text, management of waiting is an essential part of every operating system. In this chapter we have seen several waiting states, namely ready, blocked, suspendedready and suspendedblocked. For each of these states, discuss how a process might get into the state, what the process is waiting for, and the likelihood that the process could get “lost” waiting in the state indefinitely.

Ans: A process becomes ready when it is capable of using the processor as soon as one becomes available; it is waiting for the processor, and it will surely get the processor in the near future. In any responsible system, processes that wait for a long time often receive a priority boost from the operating system.

A process becomes blocked when it requests input/output or performs an event wait; it is waiting for the I/O completion or event completion. I/Os normally complete unless the device goes down, while events could be of any arbitrary type, some of which may never occur or may not occur for a long time.

A process becomes suspendedready if it is either running or ready and is then suspended; it is waiting to be resumed. These resumptions normally come within an intermediate period of time, such as several minutes.

A process becomes suspendedblocked if it is in the blocked state and is then suspended; it is waiting for either an I/O completion, in which case it becomes suspendedready, or a resume, in which case it becomes blocked. The I/O completion is typically far more likely to occur before the resumption.
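The transitions described in this answer can be collected into a small state machine. In this C sketch the enum and event names are hypothetical; it models the suspend and resume transitions above plus the basic dispatch, timer-runout, block and wakeup transitions, and rejects every other combination.

```c
enum pstate { PS_READY, PS_RUNNING, PS_BLOCKED,
              PS_SUSPENDED_READY, PS_SUSPENDED_BLOCKED, PS_INVALID };
enum pevent { EV_DISPATCH, EV_TIMER_RUNOUT, EV_BLOCK,
              EV_WAKEUP, EV_SUSPEND, EV_RESUME };

/* Returns the next state, or PS_INVALID if the event does not apply. */
enum pstate transition(enum pstate s, enum pevent e)
{
    switch (s) {
    case PS_READY:
        if (e == EV_DISPATCH) return PS_RUNNING;
        if (e == EV_SUSPEND)  return PS_SUSPENDED_READY;
        break;
    case PS_RUNNING:
        if (e == EV_TIMER_RUNOUT) return PS_READY;
        if (e == EV_BLOCK)        return PS_BLOCKED;
        if (e == EV_SUSPEND)      return PS_SUSPENDED_READY;
        break;
    case PS_BLOCKED:
        if (e == EV_WAKEUP)  return PS_READY;            /* I/O or event completes */
        if (e == EV_SUSPEND) return PS_SUSPENDED_BLOCKED;
        break;
    case PS_SUSPENDED_READY:
        if (e == EV_RESUME) return PS_READY;
        break;
    case PS_SUSPENDED_BLOCKED:
        if (e == EV_WAKEUP) return PS_SUSPENDED_READY;   /* completion while suspended */
        if (e == EV_RESUME) return PS_BLOCKED;
        break;
    default:
        break;
    }
    return PS_INVALID;
}
```

Note that a suspendedready process cannot be dispatched directly; it must first be resumed.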

What features should operating systems incorporate to deal with the possibility that processes could start to wait for an event that might never happen?

Ans: Since we cannot know the future, there is no way to tell in the general case whether an event will ever happen. There are two general approaches to dealing with this kind of situation. One is to use a timeout mechanism: when a process blocks pending an event completion, the process specifies a timeout value, and if the event does not happen before the timeout, the process regains control. Another approach is to use a nonblocking wait: when the process issues the I/O request or event wait, it asks to be notified asynchronously of the event completion (a topic we will discuss in depth in Chapter 5, Asynchronous Concurrent Execution) and simply goes about its business.

3.12 Waiting processes do consume various system resources. Could someone sabotage a system by repeatedly creating processes and making them wait for events that will never happen?

Ans:

This depends on the system architecture. Certainly, creating a large number of processes that do almost anything could potentially bring down a system. An example of this is discussed in Chapter 19, Security, when we study the Sapphire/Slammer Worm that was released in January of 2003 (see www.caida.org/outreach/papers/2003/sapphire/).

What safeguards could be imposed?

Ans: Such situations are normally dealt with by using various “reasonableness criteria.” The system might set some threshold value below which it continues to allow processes to be created, and above which it notifies a system administrator or simply refuses to create any more processes.
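Many UNIX-like systems expose such a threshold as a resource limit. On Linux and the BSDs (including macOS), `RLIMIT_NPROC` caps the number of processes a single user may have, and `fork()` fails with `EAGAIN` once it is reached. It is not part of POSIX, and privileged processes may be exempt. A sketch that reads the current threshold; the helper name `show_nproc_limit` is hypothetical.

```c
#include <stdio.h>
#include <sys/resource.h>

/* Prints one limit value, treating RLIM_INFINITY specially. */
static void print_limit(const char *label, rlim_t v)
{
    if (v == RLIM_INFINITY)
        printf("%s: unlimited\n", label);
    else
        printf("%s: %llu processes\n", label, (unsigned long long)v);
}

/* Prints the soft and hard per-user process limits.
   Returns 0 on success, -1 if the limit is unavailable. */
int show_nproc_limit(void)
{
#ifdef RLIMIT_NPROC
    struct rlimit lim;
    if (getrlimit(RLIMIT_NPROC, &lim) != 0)
        return -1;
    print_limit("soft limit", lim.rlim_cur);
    print_limit("hard limit", lim.rlim_max);
    return 0;
#else
    return -1;   /* RLIMIT_NPROC is not available on this platform */
#endif
}
```

An administrator would typically lower the soft limit for ordinary users, leaving room below the system-wide maximum for administrative work.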

3.13 Can a single-processor system have no processes ready and no process running?

Ans:

In theory, we might have a single blocked process waiting for an I/O completion; when the completion occurs, the blocked process proceeds to the ready state (or even directly to the running state). In practice, however, most architectures require that the processor always have something valid to execute, so many operating systems create a kernel process (often called the idle process) that runs whenever no other process is ready.
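A sketch of that dispatching policy, assuming a hypothetical fixed-size FIFO ready queue of process IDs and a reserved idle-process ID (`IDLE_PID`, chosen arbitrarily here): whenever the queue is empty, the dispatcher falls back to the idle process rather than coming up empty-handed.

```c
#include <stddef.h>

#define IDLE_PID 0   /* hypothetical ID of the kernel's idle process */
#define QCAP 64      /* hypothetical ready-queue capacity */

/* Hypothetical circular ready queue of process IDs. */
struct ready_queue {
    int pids[QCAP];
    size_t head, count;
};

/* Adds a process to the tail of the queue; returns -1 if full. */
int enqueue_ready(struct ready_queue *q, int pid)
{
    if (q->count == QCAP)
        return -1;
    q->pids[(q->head + q->count) % QCAP] = pid;
    q->count++;
    return 0;
}

/* Picks the next process to run, falling back to the idle process so
   the processor always has something valid to execute. */
int pick_next(struct ready_queue *q)
{
    if (q->count == 0)
        return IDLE_PID;
    int pid = q->pids[q->head];
    q->head = (q->head + 1) % QCAP;
    q->count--;
    return pid;
}
```

When the I/O completion later moves the blocked process back to the ready queue, the next call to `pick_next` returns it instead of the idle process.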

Is this a “dead” system? Explain your answer.

Ans: Although this system may appear not to be doing anything, it is not dead in the sense that as soon as the I/O completion interrupt arrives, the system will indeed resume operation.

3.14 Why might it be useful to add a dead state to the state-transition diagram?

Ans: A parent process might want to retrieve some data (for example, an exit status) from a process that has finished executing, before the operating system discards that process. The process may also hold modifications to a file that has been temporarily placed in memory; those modifications must be applied to the copy of the file on secondary storage before the process’s memory can be freed.
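UNIX implements essentially this idea: a terminated child remains a zombie, dead but not yet removed, until its parent collects its exit status with `wait()` or `waitpid()`. A minimal POSIX sketch in which a child reports a small result through its exit status; the helper name `child_result` is hypothetical.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Forks a child that "computes" a small result and exits with it.
   Until waitpid() runs, the exited child is a zombie whose entry keeps
   the status available. Returns the child's result, or -1 on error. */
int child_result(int input)
{
    pid_t pid = fork();
    if (pid == -1)
        return -1;
    if (pid == 0)
        _exit((input * 2) & 0xFF);   /* only the low 8 bits of the status survive */
    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);      /* reaping releases the zombie's entry */
}
```

The 8-bit exit status is a narrow channel; larger results would travel through a pipe, shared memory or a file, as the second half of the answer implies.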

3.15 System A runs exactly one process per user. System B can support many processes per user. Discuss the organizational differences between operating systems A and B with regard to support of processes.

Ans:

System A simplifies many process-management concerns. For example, a process’s identifier could simply be the ID of the user who runs it. Moreover, the operating system does not need to track information such as the parent and child processes of each process, and when a process terminates, it need not worry about how to manage that process’s children. In System B, the operating system must assign each process (regardless of the user who created it) a unique process identifier. It must also record the relationships between processes (e.g., parent and child) for each user.
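For System B, a minimal sketch of the extra bookkeeping, using hypothetical names and a fixed-size table with no locking: each new process receives a fresh identifier and records its parent and owning user, so the operating system can find a terminating process's children.

```c
#include <stddef.h>

#define MAX_PROCS 128   /* hypothetical table size */

/* Hypothetical process-table entry for System B. */
struct proc_entry {
    int pid;      /* unique across all users */
    int ppid;     /* parent's pid, 0 for none */
    int uid;      /* owning user */
    int in_use;
};

static struct proc_entry proc_table[MAX_PROCS];
static int next_pid = 1;

/* Creates an entry for a new process; returns its pid or -1 if full. */
int create_process(int ppid, int uid)
{
    for (size_t i = 0; i < MAX_PROCS; i++) {
        if (!proc_table[i].in_use) {
            proc_table[i] = (struct proc_entry){ next_pid, ppid, uid, 1 };
            return next_pid++;
        }
    }
    return -1;
}

/* Counts the live children of pid, e.g. before deciding what to do
   with them when pid terminates. */
int count_children(int pid)
{
    int n = 0;
    for (size_t i = 0; i < MAX_PROCS; i++)
        if (proc_table[i].in_use && proc_table[i].ppid == pid)
            n++;
    return n;
}
```

In System A none of this is needed: the user ID can serve as the process ID, and there are no children to find.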

3.16 Compare and contrast IPC using signals and message passing.

Ans:

Signals are a simple and efficient way to notify another process that an event has occurred. However, because a traditional signal carries no data beyond its signal number, the information it can convey to another process is very limited. Traditionally, signals are also limited to IPC within a single operating system. Message passing allows a process to send data to another process. However, message passing can incur substantial overhead compared to signals, because the system must allocate memory for the messages in addition to naming and locating senders and receivers.

3.17 As discussed in Section 3.6, Case Study: UNIX Processes, UNIX processes may change their priority using the nice system call. What restrictions might UNIX impose on using this system call, and why?

Ans:

UNIX systems allow any process to decrease its own scheduling priority; doing so does not negatively impact the level of service given to other processes in the system. However, if a process were allowed to boost its scheduling priority, it would receive higher levels of service, possibly to the detriment of other processes in the system. Therefore, UNIX systems prevent a process from increasing its scheduling priority unless the process runs with the privileges of the system administrator (also called the superuser).
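A POSIX sketch of the asymmetry: a positive increment to `nice()` (a higher nice value, hence lower priority) is always permitted, while a negative increment fails with `EPERM` unless the process is privileged. Because `nice()` can legitimately return -1, `errno` is cleared before the call and checked afterward. The helper name `change_niceness` is hypothetical.

```c
#include <errno.h>
#include <unistd.h>

/* Changes this process's nice value by inc and stores the new value in
   *now. Returns 0 on success or the errno value on failure (EPERM when
   inc < 0 and the process lacks privilege). */
int change_niceness(int inc, int *now)
{
    errno = 0;
    int v = nice(inc);
    if (v == -1 && errno != 0)
        return errno;
    *now = v;
    return 0;
}
```

Calling `change_niceness(1, &v)` succeeds for any process; calling `change_niceness(-1, &v)` typically returns `EPERM` for an ordinary user and succeeds for the superuser.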

Suggested Projects

3.18 Compare and contrast what information is stored in PCBs in Linux, Microsoft’s Windows XP, and Apple’s OS X. What process states are defined by each of these operating systems?

3.19 Research the improvements made to context switching over the years. How has the amount of time processors spend on context switches improved? How has hardware helped to make context switching faster?

3.20 The Intel Itanium line of processors, which are designed for high-performance computing, are implemented according to the IA-64 (64-bit) specification. Compare and contrast the IA-32 architecture’s method of interrupt processing discussed in this chapter with that of the IA-64 architecture (see developer.intel.com/design/itanium/manuals/245318.pdf).

3.21 Discuss an interrupt scheme other than that described in this chapter. Compare the two schemes.
