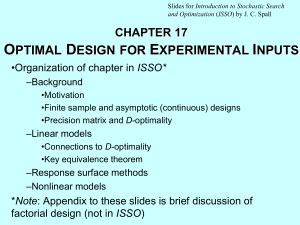

Slides for Introduction to Stochastic Search and Optimization (ISSO) by J. C. Spall

CHAPTER 17
OPTIMAL DESIGN FOR EXPERIMENTAL INPUTS

• Organization of chapter in ISSO*
  – Background
    • Motivation
    • Finite sample and asymptotic (continuous) designs
    • Precision matrix and D-optimality
  – Linear models
    • Connections to D-optimality
    • Key equivalence theorem
  – Response surface methods
  – Nonlinear models
*Note: Appendix to these slides is a brief discussion of factorial design (not in ISSO)

Optimal Design in Simulation
• Two roles for experimental design in simulation
  – Building approximation to existing large-scale simulation via “metamodel”
  – Building simulation model itself
• Metamodels are “curve fits” that approximate simulation input/output
  – Usual form is low-order polynomial in the inputs; linear in parameters
  – Linear design theory useful
• Building simulation model
  – Typically need nonlinear design theory
• Some terminology distinctions:
  – “Factors” (statistics term) ↔ “Inputs” (modeling and simulation terms)
  – “Levels” ↔ “Values”
  – “Treatments” ↔ “Runs”

Unique Advantages of Design in Simulation
• Simulation experiments may be considered special case of general experiments
• Some unique benefits occur due to simulation structure
• Can control factors not generally controllable (e.g., arrival rates into network)
• Direct repeatability due to deterministic nature of random number generators
  – Variance reduction (common random numbers (CRNs), etc.) may be helpful
• Not necessary to randomize runs to avoid systematic variation due to inherent conditions
  – E.g., randomization in run order and input levels in biological experiment to reduce effects of change in ambient humidity in laboratory
  – In simulation, systematic effects can be eliminated since analyst controls nature

Design of Computer Experiments in Statistics
• There exists significant activity among statisticians for experimental design based on computer experiments
  – T. J. Santner et al. (2003), The Design and Analysis of Computer Experiments, Springer-Verlag
  – J. Sacks et al. (1989), “Design and Analysis of Computer Experiments (with discussion),” Statistical Science, 409–435
  – Etc.
• Above statistical work differs from experimental design with Monte Carlo simulations
  – Above work assumes deterministic function evaluations via computer (e.g., solution to complicated ODE)
• One implication of deterministic function evaluations: no need to replicate experiments for given set of inputs
• Contrasts with Monte Carlo, where replication provides variance reduction

General Optimal Design Formulation (Simulation or Non-Simulation)
• Assume model $z = h(\theta, x) + v$, where x is an input we are trying to pick optimally
• Experimental design $\xi$ consists of N specific input values $x = \xi_i$ and proportions (weights) $w_i$ assigned to these input values:
  $\xi = \left\{\begin{array}{cccc} \xi_1 & \xi_2 & \cdots & \xi_N \\ w_1 & w_2 & \cdots & w_N \end{array}\right\}$
• Finite-sample design allocates $n \ge N$ available measurements exactly; asymptotic (continuous) design allocates based on $n \to \infty$

D-Optimal Criterion
• Picking optimal design requires criterion for optimization
• Most popular criterion is D-optimal measure
• Let $M(\xi, \theta)$ denote the “precision matrix” for an estimate of $\theta$ based on a design $\xi$
  – $M(\xi, \theta)$ is inverse of covariance matrix for estimate and/or
  – $M(\xi, \theta)$ is Fisher information matrix for estimate
• D-optimal solution is $\xi^* = \arg\max_{\xi} \det M(\xi, \theta)$

Equivalence Theorem
• Consider linear model $z_k = h_k^T \theta + v_k$, $k = 1, 2, \ldots, n$
• Prediction based on parameter estimate $\hat\theta_n$ and “future” measurement vector $h^T$ is $\hat z = h^T \hat\theta_n$
• Kiefer-Wolfowitz equivalence theorem states: D-optimal solution for determining $\xi$ to be used in forming $\hat\theta_n$ is the same $\xi$ that minimizes the maximum (over the input region) variance of predictor $\hat z$
• Useful in practical determination of optimal $\xi$

[Figure: Variance function as it depends on input: optimal asymptotic design for Example 17.6 in ISSO]

Orthogonal Designs
• With linear models, usually more than one solution is D-optimal
• Orthogonality is a means of reducing number of solutions
• Orthogonality also introduces desirable secondary properties
  – Separates effects of input factors (avoids “aliasing”)
  – Makes estimates for elements of $\theta$ uncorrelated
• Orthogonal designs are not generally D-optimal; D-optimal designs are not generally orthogonal
  – However, some designs are both
• Classical factorial (“cubic”) designs are orthogonal (and often D-optimal)

Example Orthogonal Designs, r = 2 Factors
[Figure: cube ($2^r$-point) and star ($2r$-point) designs in the $(x_{k1}, x_{k2})$ plane]

Example Orthogonal Designs, r = 3 Factors
[Figure: cube ($2^r$-point) and star ($2r$-point) designs in $(x_{k1}, x_{k2}, x_{k3})$ space]

Response Surface Methodology (RSM)
• Suppose we want to determine inputs x that minimize the mean response $E(z)$ of some process
  – There are also other (nonoptimization) uses for RSM
• RSM can be used to build local models with the aim of finding the optimal x
  – Based on building a sequence of local models as one moves through factor (x) space
• Each response surface is typically a simple regression polynomial
• Experimental design can be used to determine input values for building response surfaces

Steps of RSM for Optimizing x
Step 0 (Initialization) Initial guess at optimal value of x.
Step 1 (Collect data) Collect responses z from several x values in neighborhood of current estimate of best x value (can use experimental design).
Step 2 (Fit model) From the (x, z) pairs in step 1, fit regression model in region around current best estimate of optimal x.
Step 3 (Identify steepest descent path) Based on response surface in step 2, estimate path of steepest descent in factor space.
Step 4 (Follow steepest descent path) Perform series of experiments at x values along path of steepest descent until no additional improvement in z response is obtained. This x value represents new estimate of best vector of factor levels.
Step 5 (Stop or return) Go to step 1 and repeat process until final best factor level is obtained.
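The RSM steps above can be sketched as a loop. This is a minimal illustration under stated assumptions, not the procedure from ISSO or Montgomery: the process is a hypothetical noisy quadratic (its mean is minimized at (3, −2)), Step 1 uses a replicated $2^2$ cube design, Step 2 fits a first-order model, and Step 4 moves in fixed increments along the estimated steepest-descent path until the averaged response stops improving. All function names and tuning values (`delta`, `step`, `max_cycles`) are hypothetical.

```python
import random

# Sketch of RSM Steps 0-5 under ASSUMPTIONS: the "process" below is a
# hypothetical noisy quadratic whose mean E(z) is minimized at (3, -2); each
# local model is first order, fitted from a replicated 2^2 (cube) design.


def simulate(x, rng):
    """Hypothetical noisy process output z at input x = (x1, x2)."""
    return (x[0] - 3.0) ** 2 + 2.0 * (x[1] + 2.0) ** 2 + rng.gauss(0.0, 0.1)


def mean_response(x, rng, reps=4):
    """Average of replicated runs at x, used to judge improvement in Step 4."""
    return sum(simulate(x, rng) for _ in range(reps)) / reps


def fit_first_order(center, delta, rng, reps=2):
    """Steps 1-2: run a 2^2 design at center +/- delta, fit z ~ b0 + b1*u1 + b2*u2.

    u1, u2 are coded levels (-1/+1); because the design is orthogonal, the
    least-squares slopes reduce to b_j = mean(u_j * z).
    """
    runs = [(-1, -1), (1, -1), (-1, 1), (1, 1)] * reps
    b1 = b2 = 0.0
    for u1, u2 in runs:
        z = simulate((center[0] + delta * u1, center[1] + delta * u2), rng)
        b1 += u1 * z
        b2 += u2 * z
    return b1 / len(runs), b2 / len(runs)


def rsm(x0, delta=0.5, step=0.25, max_cycles=10, seed=1):
    rng = random.Random(seed)
    x = list(x0)                                  # Step 0: initial guess
    for _ in range(max_cycles):                   # Step 5: repeat
        b1, b2 = fit_first_order(x, delta, rng)   # Steps 1-2
        norm = (b1 * b1 + b2 * b2) ** 0.5
        if norm < 1e-3:                           # locally flat model: stop
            break
        d = (-b1 / norm, -b2 / norm)              # Step 3: steepest-descent direction
        best = mean_response(x, rng)
        while True:                               # Step 4: walk until no improvement
            cand = [x[0] + step * d[0], x[1] + step * d[1]]
            z = mean_response(cand, rng)
            if z >= best:
                break
            x, best = cand, z
    return x


print(rsm([0.0, 0.0]))
```

Because both inputs share the same half-width `delta`, the steepest-descent direction in coded units is also the direction in the original units; with unequal scalings the slopes would need rescaling before Step 3.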
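The D-optimal criterion and the Kiefer-Wolfowitz equivalence theorem from the linear-model slides can be checked numerically. The sketch below assumes a quadratic model in one input on [−1, 1] (an illustrative choice, not an example taken from ISSO) with unit noise variance. It computes $\det M(\xi)$ for two candidate continuous designs and evaluates, on a grid, the maximum of the standardized prediction variance $d(x) = h(x)^T M(\xi)^{-1} h(x)$. The function names are hypothetical.

```python
# Sketch: D-optimality and the Kiefer-Wolfowitz equivalence theorem for an
# ASSUMED quadratic regression z = θ0 + θ1 x + θ2 x^2 + v on x in [-1, 1].
# Noise variance is taken as 1, so M(ξ) = Σ_i w_i h(ξ_i) h(ξ_i)^T.


def h(x):
    """Regressor vector h(x) = [1, x, x^2] for the assumed quadratic model."""
    return [1.0, x, x * x]


def precision_matrix(points, weights):
    """Precision (information) matrix M(ξ) of a continuous design ξ."""
    p = len(h(0.0))
    m = [[0.0] * p for _ in range(p)]
    for x, w in zip(points, weights):
        hx = h(x)
        for i in range(p):
            for j in range(p):
                m[i][j] += w * hx[i] * hx[j]
    return m


def solve(a, b):
    """Solve a y = b by Gaussian elimination with partial pivoting.

    Returns (y, det(a)); assumes a is nonsingular.
    """
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented copy
    det = 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if piv != col:
            aug[col], aug[piv] = aug[piv], aug[col]
            det = -det  # a row swap flips the sign of the determinant
        det *= aug[col][col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    y = [0.0] * n
    for r in range(n - 1, -1, -1):
        y[r] = (aug[r][n] - sum(aug[r][c] * y[c] for c in range(r + 1, n))) / aug[r][r]
    return y, det


def d_criterion(points, weights):
    """det M(ξ): the quantity a D-optimal design maximizes."""
    m = precision_matrix(points, weights)
    return solve(m, [0.0] * len(m))[1]


def max_prediction_variance(points, weights, grid_size=201):
    """Max over a grid on [-1, 1] of d(x) = h(x)^T M^{-1} h(x)."""
    m = precision_matrix(points, weights)
    best = float("-inf")
    for k in range(grid_size):
        x = -1.0 + 2.0 * k / (grid_size - 1)
        hx = h(x)
        y, _ = solve(m, hx)  # y = M^{-1} h(x)
        best = max(best, sum(hi * yi for hi, yi in zip(hx, y)))
    return best


designs = {
    "3-point {-1, 0, 1}, weights 1/3": ([-1.0, 0.0, 1.0], [1 / 3] * 3),
    "5-point equispaced, weights 1/5": ([-1.0, -0.5, 0.0, 0.5, 1.0], [0.2] * 5),
}
for name, (pts, wts) in designs.items():
    print(f"{name}: det M = {d_criterion(pts, wts):.4f}, "
          f"max d(x) = {max_prediction_variance(pts, wts):.4f}")
```

For this model there are p = 3 parameters. The three-point design gives $\det M = 4/27 \approx 0.148$ and $\max_x d(x) = 3 = p$, the equivalence-theorem signature of a D-optimal design (the maximum is attained at the support points). The equispaced five-point design has a smaller determinant (0.0875) and a larger worst-case prediction variance ($31/7 \approx 4.43$).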
[Figure: Conceptual illustration of RSM for two variables in x; shows more refined experimental design near solution. Adapted from: Montgomery (2005), Design and Analysis of Experiments, Fig. 11-3]

Nonlinear Design
• Assume model $z = h(\theta, x) + v$, where $\theta$ enters nonlinearly and x is r-dimensional input vector
• D-optimality remains dominant measure
  – Maximization of determinant of Fisher information matrix (from Chapter 13 of ISSO: $F_n(\theta, X)$ is Fisher information matrix based on n inputs in $n \times r$ matrix X)
• Fundamental distinction from linear case is that D-optimal criterion depends on $\theta$
• Leads to conundrum: choosing X to best estimate $\theta$, yet need to know $\theta$ to determine X

Strategies for Coping with Dependence on $\theta$
• Assume nominal value of $\theta$ and develop an optimal design based on this fixed value
• Sequential design strategy based on an iterated design and model fitting process
• Bayesian strategy where a prior distribution is assigned to $\theta$, reflecting uncertainty in the knowledge of the true value of $\theta$

Sequential Approach for Parameter Estimation and Optimal Design
Step 0 (Initialization) Make initial guess at $\theta$, $\hat\theta_0$. Allocate $n_0$ measurements to initial design. Set k = 0 and n = 0.
Step 1 (D-optimal maximization) Given $X_n$, choose the $n_k$ inputs in $X = X_{n_k}$ to maximize $\det[F_n(\hat\theta_n, X_n) + F_{n_k}(\hat\theta_n, X)]$.
Step 2 (Update estimate) Collect $n_k$ measurements based on inputs from step 1. Use measurements to update from $\hat\theta_n$ to $\hat\theta_{n+n_k}$.
Step 3 (Stop or return) Stop if the value of $\hat\theta_{n+n_k}$ in step 2 is satisfactory. Else return to step 1 with the new k set to the former k + 1 and the new n set to the former $n + n_k$ (updated $X_n$ now includes inputs from step 1).
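The dependence of the nonlinear D-optimal design on $\theta$ shows up clearly in a small example of the nominal-value strategy. Assume, for illustration only, $h(\theta, x) = \theta_1 e^{-\theta_2 x}$ with $x \in [0, 5]$, unit-variance noise, and two measurements. Then $\det F(\theta, X) = \theta_1^2 (x_2 - x_1)^2 e^{-2\theta_2 (x_1 + x_2)}$, which is maximized at $x_1 = 0$, $x_2 = 1/\theta_2$. The grid search below (helper names hypothetical) recovers this, and the optimal second input moves as the nominal $\theta_2$ changes. In the sequential approach, the current estimate $\hat\theta_n$ would take the place of the nominal value.

```python
import math

# Sketch of the "nominal theta" strategy for an ASSUMED nonlinear model
# h(θ, x) = θ1 * exp(-θ2 * x) with unit-variance additive noise, so the
# Fisher information for inputs X is F(θ, X) = Σ_k g(θ, x_k) g(θ, x_k)^T,
# where g = ∂h/∂θ.


def grad_h(theta, x):
    """g(θ, x) = ∂h/∂θ = [exp(-θ2 x), -θ1 x exp(-θ2 x)]."""
    t1, t2 = theta
    e = math.exp(-t2 * x)
    return e, -t1 * x * e


def det_fisher(theta, xs):
    """det F(θ, X) for the 2-parameter model, accumulating the 2x2 entries."""
    a = b = c = 0.0
    for x in xs:
        g1, g2 = grad_h(theta, x)
        a += g1 * g1
        b += g1 * g2
        c += g2 * g2
    return a * c - b * b


def nominal_two_point_design(theta, x_max=5.0, n_grid=251):
    """Grid search over pairs x1 < x2 in [0, x_max] maximizing det F(θ, X)."""
    grid = [x_max * k / (n_grid - 1) for k in range(n_grid)]
    pairs = ((x1, x2) for i, x1 in enumerate(grid) for x2 in grid[i + 1:])
    return max(pairs, key=lambda xs: det_fisher(theta, xs))


for theta in [(1.0, 0.5), (1.0, 1.0), (1.0, 2.0)]:
    print(theta, nominal_two_point_design(theta))
```

Note that $\theta_1$ only scales the determinant, so the optimal inputs depend on $\theta_2$ alone; a poor nominal guess for $\theta_2$ places the second input in the wrong spot, which is what motivates the sequential and Bayesian alternatives.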
Comments on Sequential Design
• Note two optimization problems being solved: one for $\theta$, one for $\xi$
• Determine next $n_k$ input values (step 1) conditioned on current value of $\theta$
  – Each step analogous to nonlinear design with fixed (nominal) value of $\theta$
• “Full sequential” mode ($n_k = 1$) updates $\theta$ based on each new input-output pair $(x_k, z_k)$
• Can use stochastic approximation to update $\theta$:
  $\hat\theta_{n+1} = \hat\theta_n - a_n Y_n(\hat\theta_n \mid z_{n+1}, x_{n+1})$, where $Y_n(\theta \mid z_{n+1}, x_{n+1}) = \frac{\partial}{\partial \theta}\left\{\tfrac{1}{2}\left[z_{n+1} - h(\theta, x_{n+1})\right]^2\right\}$

Bayesian Design Strategy
• Assume prior distribution (density) for $\theta$, $p(\theta)$, reflecting uncertainty in the knowledge of the true value of $\theta$
• There exist multiple versions of D-optimal criterion
• One possible D-optimal criterion:
  $E[\log \det F_n(\theta, X)] = \int \log \det F_n(\theta, X)\, p(\theta)\, d\theta$
• Above criterion related to Shannon information
• While log transform makes no difference with fixed $\theta$ (log is monotonic), it does affect integral-based solution
• To simplify integral, may be useful to choose discrete prior $p(\theta)$

Appendix to Slides for Chapter 17: Factorial Design (not in ISSO; see ref. [1] below)
• Classical experimental design deals with linear models
• Factorial design is most popular classical method
  – All r inputs (“factors”) changed at one time (note: ref. [1] uses notation m instead of r)
• Factorial design provides two key advantages over one-at-a-time changes:
  1. Greater efficiency in extracting information from given number of experiments
  2. Ability to determine if there are interaction effects
• Standard method is $2^r$ factorial; “2” comes about by looking at each input at two levels: low (−) and high (+)
  – E.g., if r = 3, then have $2^3 = 8$ input combinations: (− − −), (+ − −), (− + −), (− − +), (+ + −), (+ − +), (− + +), (+ + +)
[1] Spall, J. C. (2010), “Factorial Design for Choosing Input Values in Experimentation: Generating Informative Data for System Identification,” IEEE Control Systems Magazine, vol. 30(5), pp. 38–53.
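A $2^r$ factorial design is every combination of the two coded levels, so it can be enumerated directly. A minimal sketch, assuming coded levels −1 (low) and +1 (high); the helper name is hypothetical.

```python
from itertools import product

# Sketch: enumerate a 2^r factorial design in coded units, -1 = low, +1 = high.
# itertools.product lists runs in lexicographic order, not the order on the
# slide; the set of runs is the same, only the listing order differs.


def two_level_factorial(r):
    """Return all 2^r combinations of low/high levels for r inputs, one tuple per run."""
    return list(product((-1, 1), repeat=r))


design = two_level_factorial(3)
print(len(design))  # 8 runs, i.e., 2^3
for run in design:
    print("(" + " ".join("+" if u > 0 else "-" for u in run) + ")")
```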
Appendix to Slides (cont’d): Factorial Design with 3 Inputs
• Consider r = 3 linear model
  $z_k = \theta_0 + \theta_1 x_{k1} + \theta_2 x_{k2} + \theta_3 x_{k3} + \theta_4 x_{k1} x_{k2} + \theta_5 x_{k1} x_{k3} + \theta_6 x_{k2} x_{k3} + \theta_7 x_{k1} x_{k2} x_{k3} + \text{noise}$,
  where $\theta = [\theta_0, \theta_1, \ldots, \theta_7]^T$ represents vector of (unknown) parameters and $x_{ki}$ represents i-th term in input vector $x_k$
• $2^3$ factorial design allows for efficient estimation of all parameters in $\theta$
• In contrast, one-at-a-time provides no information for estimating $\theta_4$ to $\theta_7$
• However, $2^3$ factorial design must be augmented in some way if we wish to add quadratic (e.g., $x_{k1}^2$) or other higher-order polynomial terms to model

Appendix to Slides (cont’d): Illustration of Interaction with 2 Inputs
• Example responses for r = 2: no interaction and interaction between input variables
• Left plot (no interaction) shows that change in $z_k$ with change in $x_{k2}$ does not depend on $x_{k1}$; right plot (interaction) shows change in $z_k$ does depend on $x_{k1}$
[Figure: two panels, “No interaction” and “Interaction”, plotting $z_k$ against $x_{k2}$ for $x_{k1}$ = low and $x_{k1}$ = high at design points (− −), (− +), (+ −), (+ +)]

Appendix to Slides (cont’d): Efficiency of Factorial Design for Main Effects
• Factorial design estimates “main effects” (non-interaction) with greater efficiency than one-at-a-time changes
• Plot below based on same accuracy in estimation for the two methods
[Figure: ratio of number of runs needed (one-at-a-time / factorial) versus input dimension r]
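The efficiency of the $2^3$ design for the eight-parameter model with interactions comes from orthogonality of the regressor columns. The sketch below builds the $8 \times 8$ regression matrix H for the full factorial, confirms $H^T H = 8I$, and recovers $\theta$ from noise-free responses. The "true" $\theta$ values and helper names are hypothetical, chosen only for illustration.

```python
from itertools import product

# Sketch: with the full 2^3 design, the eight regressor columns of the r = 3
# interaction model are mutually orthogonal, so H^T H = 8 I and the
# least-squares estimate reduces to theta_hat = H^T z / 8.


def regressors(x):
    """h_k = [1, x1, x2, x3, x1x2, x1x3, x2x3, x1x2x3] for coded inputs x = (x1, x2, x3)."""
    x1, x2, x3 = x
    return [1, x1, x2, x3, x1 * x2, x1 * x3, x2 * x3, x1 * x2 * x3]


RUNS = list(product((-1, 1), repeat=3))  # the 2^3 = 8 factorial runs
H = [regressors(run) for run in RUNS]    # 8 x 8 regression matrix


def gram(rows):
    """H^T H, computed with integer arithmetic."""
    p = len(rows[0])
    return [[sum(row[i] * row[j] for row in rows) for j in range(p)] for i in range(p)]


def estimate(z):
    """Least-squares theta_hat for the full factorial, using H^T H = 8 I."""
    n = len(H)
    return [sum(row[j] * zk for row, zk in zip(H, z)) / n for j in range(len(H[0]))]


theta_true = [5.0, 1.0, -2.0, 0.5, 0.8, 0.0, -0.3, 0.2]  # hypothetical values
z = [sum(t * hk for t, hk in zip(theta_true, row)) for row in H]

print(gram(H) == [[8 if i == j else 0 for j in range(8)] for i in range(8)])  # True
print([round(t, 6) for t in estimate(z)])
```

With noisy responses of variance $\sigma^2$, the same formula gives the least-squares estimates, each with variance $\sigma^2/8$ and mutually uncorrelated. A one-at-a-time design with a baseline run and one change per input uses only four distinct input combinations, too few to identify eight parameters, which is why it cannot separate $\theta_4$ to $\theta_7$.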