This article was downloaded by: [130.113.86.233] On: 23 February 2015, At: 06:45

Publisher: Institute for Operations Research and the Management Sciences (INFORMS)

INFORMS is located in Maryland, USA

Management Science

Publication details, including instructions for authors and subscription information:

http://pubsonline.informs.org

Data Shuffling—A New Masking Approach for Numerical Data

Krishnamurty Muralidhar, Rathindra Sarathy

To cite this article:

Krishnamurty Muralidhar, Rathindra Sarathy (2006) Data Shuffling—A New Masking Approach for Numerical Data. Management Science 52(5):658-670. http://dx.doi.org/10.1287/mnsc.1050.0503

Full terms and conditions of use: http://pubsonline.informs.org/page/terms-and-conditions

This article may be used only for the purposes of research, teaching, and/or private study. Commercial use or systematic downloading (by robots or other automatic processes) is prohibited without explicit Publisher approval, unless otherwise noted. For more information, contact permissions@informs.org.

The Publisher does not warrant or guarantee the article’s accuracy, completeness, merchantability, fitness for a particular purpose, or non-infringement. Descriptions of, or references to, products or publications, or inclusion of an advertisement in this article, neither constitutes nor implies a guarantee, endorsement, or support of claims made of that product, publication, or service.

Copyright © 2006, INFORMS


MANAGEMENT SCIENCE


Downloaded from informs.org by [130.113.86.233] on 23 February 2015, at 06:45 . For personal use only, all rights reserved.

Vol. 52, No. 5, May 2006, pp. 658–670

issn 0025-1909 eissn 1526-5501 06 5205 0658


doi 10.1287/mnsc.1050.0503

© 2006 INFORMS

Data Shuffling—A New Masking Approach for Numerical Data

Krishnamurty Muralidhar

Gatton College of Business and Economics, University of Kentucky, Lexington, Kentucky 40506, krishm@uky.edu

Rathindra Sarathy

Spears School of Business, Oklahoma State University, Stillwater, Oklahoma 74078, sarathy@okstate.edu

This study discusses a new procedure for masking confidential numerical data, called data shuffling, in which the values of the confidential variables are “shuffled” among observations. The shuffled data provides a high level of data utility and minimizes the risk of disclosure. From a practical perspective, data shuffling overcomes reservations about using perturbed or modified confidential data because it retains all the desirable properties of perturbation methods and performs better than other masking techniques in both data utility and disclosure risk. In addition, data shuffling can be implemented using only rank-order data, and thus provides a nonparametric method for masking. We illustrate the applicability of data shuffling for small and large data sets.

Key words: camouflage; confidentiality; data masking; data swapping; obfuscation; privacy; perturbation

History: Accepted by Ramayya Krishnan, information systems; received August 26, 2004. This paper was with the authors 3 months for 1 revision.

1.

Introduction

Organizations derive many benefits from gathering, analyzing, and disseminating data regarding customers, suppliers, and other entities. Simultaneously, these activities raise issues of privacy and confidentiality of sensitive information. Unrestricted analysis, dissemination, and sharing of sensitive data could lead to disclosure of confidential information, so organizations need analytically valid data that do not disclose confidential information. Until recently, this problem was important only for a few governmental agencies (such as the Census Bureau) that released specialized data sets for sophisticated users. Recently, however, the scope of the problem has expanded to cover practically all organizations.

Research in statistical disclosure limitation techniques has led to the development of several tools and techniques that enable disseminated data to be analyzed while protecting individual privacy and confidentiality (Willenborg and de Waal 2001). We shall refer to these broadly as data masking techniques. Most of these techniques were developed in the context of data dissemination by a governmental agency. One such technique that enables data to be analyzed while preserving a high level of confidentiality is data perturbation. Perturbation techniques rely on “perturbing” or changing the original values in a manner that preserves analytical usability without compromising confidentiality. Unfortunately, many users look unfavorably (or possibly suspiciously) on values that have been “modified” (Wall Street Journal 2001). This is likely to be particularly true of a typical user in commercial organizations who may not have the statistical sophistication of users of governmental data. Thus, techniques are needed that will foster greater acceptance of masked data among the common user.

One data masking approach with the potential to satisfy this requirement is data swapping. As the name implies, data swapping exchanges values of confidential variables between records (Dalenius and Reiss 1982) without modifying the original values of the confidential variables. Data swapping has the intuitive appeal and ease of explanation that is not available with other masking techniques, but compared with perturbation methods, existing data swapping methods for numerical variables have low data utility or high disclosure risk (or both). Fienberg (2002) observes that other techniques have surpassed data swapping because it has performed poorly. However, given its potential for greater user acceptance, we believe that data swapping techniques must be brought to perform on par with other data masking techniques. Hence our objective in this paper is to develop a technique that provides data utility and disclosure risk similar to those of perturbed data, without modifying the original values of confidential variables. In this study, we develop such a new procedure and discuss its underlying theory. Referred to as data shuffling, this procedure will allow organizations to disseminate and share data for analysis, with minimal disclosure risk.

2.

Data Utility, Disclosure Risk, and the Conditional Distribution Approach

Important in the development of new masking techniques is an evaluation of the associated level of

data utility and disclosure risk. In this section, we

formally define measures of data utility and disclosure risk. Similar to Duncan and Pearson (1991), we

assume that users must be provided access to microdata (values of variables at the record level) without

access restrictions. Data masking techniques attempt

to provide users with access to microdata that provide analytical validity but prevent disclosure of sensitive information.¹

Analytical validity or data utility represents the

extent to which analyzing the masked data provides

similar results to those obtained by analyzing the

original data. A masking technique provides the highest possible level of data utility if, for any type of analysis, the results using the original and masked data

are identical. In practice, achieving this ideal is difficult. Instead, masking techniques are evaluated based

on how closely they maintain the joint distribution

of the confidential variables and nonconfidential variables. From a statistical perspective, it is important

that inferences reached using the masked data

be the same as those using the original data.

In terms of disclosure risk, while all masking techniques prevent exact disclosure of values, they are

susceptible to inferential disclosure. Inferential disclosure risk (or simply disclosure risk) is measured by the

ability of an intruder or snooper to infer the identity of an entity to whom a particular record belongs

and/or the value of a confidential variable for a particular record. Inferential disclosure will occur even

without access to masked microdata (using nonconfidential microdata and aggregate information) and

cannot be prevented unless access to the entire data

set is prevented. Hence, Dalenius (1977) and Duncan

and Lambert (1986) define disclosure as the improvement in an intruder’s predictive ability when access is

provided to the masked microdata. This improvement

in predictive ability represents the disclosure risk that

can be attributed exclusively to the masking procedure. By this definition, disclosure risk occurs when

(1) the probability of correctly matching a particular deidentified record to an individual is higher with access to the masked microdata than without it, and/or (2) the error bounds for the estimated value of a confidential variable are smaller with access to the masked microdata than without it.

1

The term “disclosure” is used to refer to disclosure of sensitive information to authorized users who use their legitimate access to compromise confidentiality. It does not refer to unauthorized users (or hackers).

2.1.

The Conditional Distribution Approach for Generating Masked Data

Muralidhar and Sarathy (2003a, b) have shown that in

order to satisfy the above requirements, the masked

variables must be generated in a specific manner.

Consider a data set with $i = 1, \dots, N$ observations, consisting of a set of $j = 1, \dots, M$ confidential variables X and a set of $k = 1, \dots, L$ nonconfidential variables S.² It is assumed that the variables X and S have a known pdf $f(X, S)$. Let Y represent the masked variables. Using $f(X, S)$, if the individual values in Y are generated from the conditional density of X given S, that is,

$$y_i \sim f_{X\mid S}(X \mid S = s_i), \tag{1}$$

then Y will be an independent realization from the conditional distribution $f_{X\mid S}(X \mid S)$. In other words, X and Y are conditionally independent given S. From Equation (1), we can show

$$f_{Y,S}(Y, S) = f_{X,S}(X, S), \tag{2}$$

thereby maintaining ideal data utility, and that

$$f_{X\mid S,Y}(X \mid S, Y) = f_{X\mid S}(X \mid S), \tag{3}$$

thereby minimizing disclosure risk (Muralidhar and Sarathy 2003a). In other words, S provides the same information about X as S and Y together. Hence, any procedure that generates masked values based on the conditional distribution in Equation (1) provides the lowest possible level of disclosure risk, since access to microdata with the masked variables Y provides the intruder with no additional information (Dalenius 1977, Duncan and Lambert 1986). We will refer to masking procedures that satisfy Equation (1) as being based on the conditional distribution approach. Using the conditional distribution $f_{X\mid S}(X \mid S)$ for generating masked values has been suggested by Fienberg et al. (1998a), Fuller (1993), Little (1993), and Rubin (1993).

2

For the remainder of this manuscript, we will use the following notation. Let $x_{ij}$ and $x_{(i)j}$ represent the ith (unordered) and ith-ordered observation for the jth confidential variable (i.e., $\mathrm{rank}(x_{(i)j}) = i$). Similarly, let $s_{ik}$ and $s_{(i)k}$ represent the ith (unordered) and ith-ordered observation of the kth nonconfidential variable. Let $Y^P$ represent the perturbed set of $j = 1, \dots, M$ confidential variables and Y represent the shuffled variables. As with X, $y^P_{ij}$ and $y_{ij}$ represent the ith observations of the jth perturbed and shuffled variables, respectively, and $y^P_{(i)j}$ and $y_{(i)j}$ the ordered observations. Let $x_i$, $s_i$, $y^P_i$, and $y_i$ represent the $1 \times M$, $1 \times L$, $1 \times M$, and $1 \times M$ single-observation vectors from X, S, $Y^P$, and Y, respectively. Let $X_j$, $S_k$, $Y^P_j$, and $Y_j$ represent the $N \times 1$ vector of a single variable in X, S, $Y^P$, and Y, respectively, and let $X_{(j)}$, $S_{(k)}$, $Y^P_{(j)}$, and $Y_{(j)}$ represent the rank-ordered $N \times 1$ vector of a single variable. Let f and F represent the probability density and cumulative distribution functions, respectively.

Our objective is to present a data swapping methodology based on the conditional distribution approach

(albeit with certain assumptions). Prior to describing

our new approach, we briefly describe existing methods for swapping numerical data.

2.2.

Issues in Implementing the Conditional Distribution Approach

There are several issues in implementing the conditional distribution approach in practice. The first issue is that the joint distribution $f(X, S)$ is almost never known. Second, even if $f(X, S)$ is known, deriving the conditional density $f_{X\mid S}(X \mid S)$ may not be possible except in a few cases such as the multivariate normal. It is common to assume a joint density

for the original data and generate the perturbed values (Fienberg et al. 1998a, Muralidhar et al. 1999,

Sarathy et al. 2002, Raghunathan et al. 2003, Burridge

2003). Hence the implementation of almost all masking approaches is heuristic, relying on estimates of

the joint and/or conditional distribution of the variables. However, in these cases, we cannot truly claim

that the resulting perturbed values strictly satisfy

all the data utility requirements specified earlier. We

can ensure only that the masked data maintain certain properties based on the underlying assumptions

made in generating the masked values.

Note, however, that whether Y is generated from the true, estimated, or assumed conditional distribution, as long as it is of the form

$$y_i = g(S = s_i, \varepsilon_i), \tag{4}$$

where $g(\cdot)$ is a function that represents the empirical conditional density $f_{X\mid S}(X \mid S)$ estimated from the data and the noise term $\varepsilon$ is independent of X and S, we can show that the resulting Y satisfies ideal disclosure risk requirements similar to Equation (3). In other words, Equation (4) ensures that X and Y are conditionally independent given S, since the values of Y are generated as a function of S and an independent noise term $\varepsilon$. The data shuffling procedure that we describe in the following sections satisfies this requirement.
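To illustrate why Equation (4) yields conditional independence, the following Python sketch (our illustration, not part of the original procedure) assumes one confidential variable X and one nonconfidential variable S that are jointly standard normal with an assumed correlation `RHO`. Y is generated as a function of S plus fresh noise, so X and Y remain correlated through S, while their partial correlation given S should be close to zero. The helpers `pearson` and `partial_corr` are hypothetical names.

```python
import math
import random

def pearson(a, b):
    """Sample product moment correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(va * vb)

def partial_corr(x, y, s):
    """Correlation of x and y after removing the linear effect of s."""
    rxy, rxs, rys = pearson(x, y), pearson(x, s), pearson(y, s)
    return (rxy - rxs * rys) / math.sqrt((1 - rxs ** 2) * (1 - rys ** 2))

RHO, N = 0.8, 20000                    # assumed correlation of X and S; sample size
rng = random.Random(42)
noise_sd = math.sqrt(1 - RHO ** 2)
s = [rng.gauss(0.0, 1.0) for _ in range(N)]
x = [RHO * v + noise_sd * rng.gauss(0.0, 1.0) for v in s]  # original confidential values
# Equation (4): y_i = g(s_i, eps_i), with eps drawn independently of X and S
y = [RHO * v + noise_sd * rng.gauss(0.0, 1.0) for v in s]

print(f"corr(X, Y)     = {pearson(x, y):.3f}")          # about RHO**2
print(f"corr(X, Y | S) = {partial_corr(x, y, s):.3f}")  # about 0
```

In this Gaussian case g is the exact conditional distribution, so Y also reproduces the joint distribution of (X, S) as in Equation (2); with an estimated g, only the disclosure risk property is guaranteed.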

Another important consideration is the added variability because of random sampling from the conditional distribution and its impact on statistical

inference. Even if the true underlying distribution is

known, the random generation of the perturbed values results in variability that must be accounted for.

This variability approaches 0 as $n \to \infty$ and is relatively small for large n. Regardless of the size of the data set, the impact of the added variability on statistical inferences reached using the masked data (compared to that using the original data) must be addressed.

Only a few techniques address this issue directly

(Rubin 1993, Fienberg et al. 1998a, Burridge 2003).

3.

Existing Methods for Swapping Numerical Data

Dalenius and Reiss (1982) originally proposed data

swapping for masking confidential variables. Fienberg

and McIntyre (2005) comprehensively discuss data

swapping and its relationship to other methods. In this

section, we focus on methods for swapping numerical variables. Reiss et al. (1982) made the first attempt,

swapping the data using an optimization approach to

maintain the first- and second-order moments. This

approach is not based on the conditional distribution

approach. It is also known to be computationally difficult and its disclosure risk remains to be evaluated.

Moore (1996) describes the best-known data swapping procedure based on the data swapping algorithm Brian Greenberg proposes in an unpublished

manuscript. The rank-based proximity swap (hereafter referred to simply as data swapping) for numerical variables can be described as follows:

Sort the data by confidential variable j. Set the value of $y_{(k)j} = x_{(i)j}$ and $y_{(i)j} = x_{(k)j}$. Repeat the process for every i to result in $Y_j$, and repeat the process for every j to result in Y.

Moore (1996, p. 6) uses a masking parameter called the

“swapping distance” parameter, defined as follows:

Determine a value $P(a)$, with $0 \le P(a) \le 100$. The intent of the procedure is to swap the value of $a_i$ with that of $a_j$, so that the percentage difference of the indices, i and j, is less than $P(a)$ of N. That is, $|i - j| < P(a) \cdot N/100$.
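A simplified Python sketch of the rank-based proximity swap for one confidential variable is given below. It is our reading of the description above, not Moore's (1996) or Greenberg's code: the partner-selection rule (each not-yet-swapped rank i is paired with a randomly chosen not-yet-swapped rank k satisfying $0 < k - i < P(a) \cdot N/100$) and the function name `rank_proximity_swap` are assumptions.

```python
import math
import random

def rank_proximity_swap(values, p_a, seed=None):
    """Simplified rank-based proximity swap for ONE confidential variable.

    p_a is the swapping distance P(a) in percent (0 to 100). A value at rank i
    is exchanged with a randomly chosen, not-yet-swapped value at rank k with
    0 < k - i < p_a * N / 100. Records without a partner keep their value.
    (Hypothetical helper; the pairing rule is an assumption.)
    """
    rng = random.Random(seed)
    n = len(values)
    max_dist = p_a * n / 100.0
    by_rank = sorted(range(n), key=lambda r: values[r])  # record index at each rank
    used = [False] * n                                    # indexed by rank position
    masked = list(values)
    for i in range(n):
        if used[i]:
            continue
        upper = min(n, math.ceil(i + max_dist))           # so every k satisfies k < i + max_dist
        partners = [k for k in range(i + 1, upper) if not used[k]]
        if not partners:
            continue
        k = rng.choice(partners)
        a, b = by_rank[i], by_rank[k]
        masked[a], masked[b] = values[b], values[a]       # y_(k) = x_(i) and y_(i) = x_(k)
        used[i] = used[k] = True
    return masked

# Usage: a toy column whose ranks equal its values
column = [float(v) for v in range(20)]
print(rank_proximity_swap(column, 10, seed=1))
```

Applying the function to each confidential column in turn gives Y. Because values are only exchanged, the marginal distribution of each variable is unchanged; a larger $P(a)$ lets values move farther in rank order.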

The larger the value of $P(a)$, the larger the value

of $|i - k|$, and the greater the distance between the

swapped values, and vice versa. Data swapping’s

biggest advantage is that the marginal distributions

of the individual confidential variables are identical to

those of the original variables. Assuming a uniform

distribution, Moore (1996) also shows an inverse relationship between swapping distance and data utility,

and a direct relationship between swapping distance

and disclosure risk, resulting in a trade-off.

Recently, Carlson and Salabasis (2002) (hereafter

referred to as C&S) describe a new method for

data swapping. For this procedure, first consider a

database D consisting of a set of variables S and X.

Assume that the database is randomly divided into

two (or even more) data sets D1 and D2 containing

$(S_1, X_1)$ and $(S_2, X_2)$, respectively. C&S (2002) suggest

ranking both databases with respect to variable Xj .


Since the two databases are independent samples, the ordered values of $X_{j1}$ should be approximately equal to those of $X_{j2}$, and the values of the masked variable $Y_{j1}$ are created by replacing the ordered values of $X_{j1}$ by those of $X_{j2}$. Similarly, another set of masked variables $Y_{j2}$ may be created by replacing the ordered values of $X_{j2}$ by those of $X_{j1}$. The process is then repeated for all j to result in $Y_1$ and $Y_2$. Two new databases, $D_1^*$ and $D_2^*$, are then created by combining $(S_1, Y_1)$ and $(S_2, Y_2)$ (Fienberg 2002). The two databases can then be potentially recombined to form $D^*$. The authors also provide a proof for this procedure as well as simulation results demonstrating its asymptotic properties.
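The rank-ordered replacement behind the C&S (2002) method can be sketched in Python as follows. This is an illustrative reading of the description above, not the authors' code; it assumes an even number of records split at random into two equal halves, and `rank_replace` and `cs_swap` are hypothetical names.

```python
import random

def rank_replace(target, donor):
    """Replace the i-th smallest value of `target` by the i-th smallest value
    of `donor`, keeping each new value in the record of the target value."""
    if len(target) != len(donor):
        raise ValueError("target and donor must have the same length")
    result = [None] * len(target)
    positions_by_rank = sorted(range(len(target)), key=lambda r: target[r])
    for pos, donor_value in zip(positions_by_rank, sorted(donor)):
        result[pos] = donor_value
    return result

def cs_swap(columns, seed=None):
    """Sketch of the C&S (2002) swap for a list of confidential columns.

    Records are split at random into halves D1 and D2 (even N assumed); in each
    column the ordered values of D1 are replaced by the ordered values of D2 and
    vice versa. The same split is reused for every column.
    """
    n = len(columns[0])
    if n % 2:
        raise ValueError("this sketch assumes an even number of records")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    d1, d2 = idx[: n // 2], idx[n // 2:]
    masked_columns = []
    for column in columns:
        x1 = [column[r] for r in d1]
        x2 = [column[r] for r in d2]
        masked = [None] * n
        for r, v in zip(d1, rank_replace(x1, x2)):  # Y_j1: ranks of X_j1, values of X_j2
            masked[r] = v
        for r, v in zip(d2, rank_replace(x2, x1)):  # Y_j2: ranks of X_j2, values of X_j1
            masked[r] = v
        masked_columns.append(masked)
    return masked_columns

print(rank_replace([3.0, 1.0, 2.0], [10.0, 30.0, 20.0]))  # [30.0, 10.0, 20.0]
```

Note that nothing in this procedure depends on S: each masked value keeps the rank of the value it replaces, which is why the masked values stay closely tied to the originals.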

The C&S (2002) method, while innovative, focuses

almost exclusively on data utility and does not

directly address disclosure risk. The authors acknowledge that “the swap may not provide an acceptable

level of perturbation and effectively mask the data.”

Beyond this broad statement, no specific disclosure

risk measures were evaluated. As we show in §5, this

procedure provides practically no protection from either

identity or value disclosure.

Liew et al. (1985) proposed a method closely resembling the C&S (2002) method. They suggest that the

univariate marginal distribution of each confidential

variable be empirically identified. Using the respective distributions, n observations are generated for

each confidential variable. The rank-ordered original

values are then replaced by the rank-ordered generated values for each variable. The Liew et al. (1985)

procedure also focuses on data utility without appropriate consideration of disclosure risk. Consequently,

it has been shown to have very high disclosure risk

(Adam and Wortmann 1989, Muralidhar et al. 1999).

The C&S (2002) method has two major advantages

compared with the Liew et al. (1985) procedure. First,

the Liew et al. (1985) procedure does not use the

original values in the database, but instead replaces

the values by randomly generated values. Second, the

Liew et al. (1985) procedure requires the identification of the underlying univariate distribution of each

confidential variable. The C&S (2002) method overcomes both these problems by dividing the database

and using the rank-ordered values within the subsets.

Dandekar et al. (2002) proposed a method called

Latin Hypercube sampling for data swapping. This

method attempts to recreate an independent data set

with the same marginal characteristics and the same

rank-order correlation as the original data set. Unfortunately, this procedure can be used only when all

the variables in the database are confidential and

must be swapped. When nonconfidential variables are

present, this procedure would require either swapping the nonconfidential variables also, or swapping

the confidential variables independently of the nonconfidential variables. Hence, this limits the method’s

applicability.


Thus the literature on data swapping reveals that

current procedures for swapping numerical variables

do not simultaneously address data utility and disclosure risk, because they are not based on the

conditional distribution approach. The conditional

distribution approach provides significant advantages

that outweigh the disadvantages. Fienberg et al.

(1998b) argue that this approach is preferable to using

ad hoc procedures, even with certain assumptions.

Our objective in this paper is to develop a data swapping procedure based on the conditional distribution

approach—a procedure that provides utility comparable with existing swapping methods without the

adverse disclosure risks.

4.

The Data Shuffling Procedure

The data shuffling procedure is based on the conditional distribution approach and can be described as

follows:

Step 1. Generate an observation $y_i^P$ from the conditional distribution $f_{X\mid S}(X \mid S = s_i)$ such that, given $S = s_i$, $Y^P$ is independent of X.

Step 2. Repeat the process for every observation in the data set to obtain $Y^P$.

Step 3. Replace $y^P_{(i)j}$ with $x_{(i)j}$, $i = 1, \dots, N$, $j = 1, \dots, M$, to obtain Y. That is, perform the reverse mapping $Y_{(j)} = Y^P_{(j)} \leftarrow X_{(j)}$.

Step 4. Release the reordered (or shuffled) data set.

Steps 1 and 2 of the shuffling procedure are similar to

perturbation and “generate” a new set of values for

the confidential variables using the conditional density $f_{X\mid S}(X \mid S)$. However, $y^P_{ij}$ values generated in this manner will, in general, be different from the observations in X. This “shortcoming” can be addressed by taking advantage of the fact that $F_{Y^P}(Y_j^P) = F_X(X_j)$, $j = 1, \dots, M$. Hence, in Step 3, for each confidential

variable, we replace the ordered values of YjP with

the ordered original values of Xj , ensuring that the

shuffled data consists of the original values. Since

both YjP and Xj are independent realizations from the

same distribution, both have the same characteristics

in terms of both data utility and disclosure risk (C&S

2002, p. 38). In other words, the reverse mapping does

not alter the disclosure risk characteristics of the perturbed data. Thus, theoretically, the data shuffling procedure satisfies the ideal data utility and disclosure

risk requirements. However, as discussed earlier, the

joint distribution of X and S is unknowable in practice. Hence, heuristic approaches are needed to implement data shuffling.
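Step 3, the reverse mapping, needs only the ranks of the perturbed values. A minimal Python sketch, assuming the confidential data are held column-wise as lists (`reverse_map` is a hypothetical name, and ties are broken by record order):

```python
def reverse_map(x_columns, yp_columns):
    """Step 3 of data shuffling for column-wise data.

    For each confidential variable j, the record holding the i-th smallest
    perturbed value y^P_(i)j receives the i-th smallest original value x_(i)j,
    so the shuffled column contains exactly the original values of X_j.
    """
    shuffled = []
    for x_col, yp_col in zip(x_columns, yp_columns):
        positions_by_rank = sorted(range(len(yp_col)), key=lambda r: yp_col[r])
        y_col = [None] * len(yp_col)
        for pos, x_value in zip(positions_by_rank, sorted(x_col)):
            y_col[pos] = x_value
        shuffled.append(y_col)
    return shuffled

# Usage: one confidential variable with three records
print(reverse_map([[5.0, 1.0, 3.0]], [[0.2, -1.0, 0.9]]))  # [[3.0, 1.0, 5.0]]
```

The perturbed values themselves are discarded; only their ordering is carried over, which is what allows the released data to consist solely of original values.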

4.1. Implementation of Data Shuffling

We propose a heuristic implementation of data

shuffling based on the perturbation method proposed


by Sarathy et al. (2002) using the multivariate copula

to model the joint density of a data set, where the

variables have arbitrary marginal distributions and

specified dependence characteristics (Nelsen 1999).

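Let R represent the rank-order correlation matrix of (X, S). Define variables $X^*$ and $S^*$ as follows:

$$x^*_{ij} = \Phi^{-1}\big(F_{X_j}(x_{ij})\big), \quad j = 1, \dots, M,\ i = 1, \dots, N, \text{ and}$$

$$s^*_{ik} = \Phi^{-1}\big(F_{S_k}(s_{ik})\big), \quad k = 1, \dots, L,\ i = 1, \dots, N, \tag{5}$$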

where $F_{X_j}$ and $F_{S_k}$ represent the cumulative distribution functions of variables $X_j$ and $S_k$, respectively, and $\Phi$ and $\Phi^{-1}$ represent the standard normal cumulative distribution function and its inverse. The joint density of $X^*$ and $S^*$ is described by a multivariate standard normal distribution with correlation matrix $\Sigma$, and the relationship between R and $\Sigma$ can be described by (Clemen and Reilly 1999)

$$\rho_{ij} = 2\sin\left(\frac{\pi r_{ij}}{6}\right), \tag{6}$$

where $r_{ij}$ and $\rho_{ij}$ are the elements of R and $\Sigma$, respectively. Since $X^*$ and $S^*$ have a joint multivariate normal distribution, it is now possible to generate the perturbed values $Y^*$ as

$$y_i^* = \Sigma_{X^*S^*}\Sigma_{S^*S^*}^{-1} s_i^* + e_i, \tag{7}$$

where $e_i \sim \mathrm{MVN}\big(0,\ \Sigma_{X^*X^*} - \Sigma_{X^*S^*}\Sigma_{S^*S^*}^{-1}\Sigma_{S^*X^*}\big)$. The values of $Y^*$ can then be retransformed back to the original marginal distributions to obtain $Y^P$:

$$y^P_{ij} = F_{X_j}^{-1}\big(\Phi(y^*_{ij})\big), \quad j = 1, \dots, M,\ i = 1, \dots, N. \tag{8}$$
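For one confidential and one nonconfidential variable (M = L = 1), Equations (5) to (8) reduce to scalar operations: $\Sigma_{X^*S^*}\Sigma_{S^*S^*}^{-1}$ is simply $\rho$ and the noise variance is $1 - \rho^2$. The Python sketch below assumes that the marginal CDF of S and the inverse CDF of X are known and passed in as functions, and it uses the standard library's `statistics.NormalDist` for $\Phi$ and $\Phi^{-1}$. The function names are hypothetical, and this is an illustration rather than the implementation of Sarathy et al. (2002).

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # .cdf is Phi, .inv_cdf is Phi^{-1}

def spearman_to_normal_corr(r):
    """Equation (6): correlation of the normal scores implied by rank correlation r."""
    return 2.0 * math.sin(math.pi * r / 6.0)

def copula_perturb(s_values, r_xs, cdf_s, inv_cdf_x, seed=None):
    """Equations (5), (7), and (8) for M = L = 1 with KNOWN marginals (an assumption).

    cdf_s     : marginal CDF of the nonconfidential variable S
    inv_cdf_x : inverse marginal CDF of the confidential variable X
    """
    rng = random.Random(seed)
    rho = spearman_to_normal_corr(r_xs)
    noise_sd = math.sqrt(max(0.0, 1.0 - rho * rho))  # scalar form of the MVN noise variance
    perturbed = []
    for s in s_values:
        s_star = STD_NORMAL.inv_cdf(cdf_s(s))                # Equation (5)
        y_star = rho * s_star + rng.gauss(0.0, noise_sd)     # Equation (7), scalar case
        perturbed.append(inv_cdf_x(STD_NORMAL.cdf(y_star)))  # Equation (8)
    return perturbed

# Usage: S standard normal, X assumed exponential with rate 1
rng = random.Random(5)
s = [rng.gauss(0.0, 1.0) for _ in range(500)]
y_p = copula_perturb(s, 0.7, STD_NORMAL.cdf, lambda u: -math.log(1.0 - u), seed=9)
```

The need to supply `cdf_s` and `inv_cdf_x` is exactly the requirement that the marginal distributions be known, which the rank-based simplification avoids.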

The copula-based perturbation approach requires that, for a given data set (X, S) with rank-order correlation matrix R, the marginal distributions of X and S be known, so that a random observation can be generated from the specified marginal distribution. This step is

needed to generate a new value for the perturbed variable using Equation (8). However, in the data shuffling approach, we do not need to generate a new

value; we only need the rank of the perturbed value.

Hence, this procedure can be simplified further by

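using the following transformation in place of the one used in Equation (5):

$$s^*_{ik} = \Phi^{-1}\left(\frac{(i) - 0.5}{N}\right), \quad k = 1, \dots, L,\ i = 1, \dots, N, \tag{9}$$

where $(i)$ represents the rank order of $s_{ik}$. The values of $y_i^*$ are generated from

$$y_i^* = \Sigma_{X^*S^*}\Sigma_{S^*S^*}^{-1} s_i^* + e_i, \tag{10}$$

where $e_i \sim \mathrm{MVN}\big(0,\ \Sigma_{X^*X^*} - \Sigma_{X^*S^*}\Sigma_{S^*S^*}^{-1}\Sigma_{S^*X^*}\big)$. Since the rank of $y^*_{(i)j}$ equals the rank of $y^P_{(i)j}$ (the transformation in Equation (8) is monotonically increasing), replacing $y^*_{(i)j}$ with $x_{(i)j}$, $j = 1, \dots, M$, $i = 1, \dots, N$, results in Y. Note that Equation (10) maintains conditional independence, ensuring that, given S, X and Y are independent. Thus the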

reverse-mapped values retain the same data utility

and disclosure risk as the original perturbed values

(C&S 2002). If all the variables in the data set are confidential, an independent, multivariate normal data

set with correlation matrix $\Sigma$ is generated, and reverse

mapping is performed on this data set. The simulation experiments to assess the disclosure risk characteristics of the data shuffling procedure (§§5.1 and 5.2)

provide empirical verification of this result.
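The rank-based version in Equations (9) and (10), followed by the reverse mapping, can be sketched in Python for one confidential variable X and one nonconfidential variable S. It is a minimal illustration under stated assumptions (M = L = 1, no ties), not the authors' implementation, and all function names are hypothetical. The split into two functions mirrors the point made next in the text: `perturbed_normal_scores` uses only the rank-order correlation and the ranks of S, so it could be run by a third party, while `reverse_map_one` is the only step that touches actual confidential values.

```python
import math
import random
from statistics import NormalDist

def ranks(values):
    """1-based ranks; assumes no ties (continuous data)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result

def spearman(a, b):
    """Rank-order correlation of two equal-length lists without ties."""
    ra, rb = ranks(a), ranks(b)
    mean = (len(a) + 1) / 2.0
    cov = sum((p - mean) * (q - mean) for p, q in zip(ra, rb))
    var = sum((p - mean) ** 2 for p in ra)
    return cov / var

def perturbed_normal_scores(s, r_xs, seed=None):
    """Equations (9) and (10) for M = L = 1: uses only the ranks of S and r_xs."""
    rng = random.Random(seed)
    n = len(s)
    rho = 2.0 * math.sin(math.pi * r_xs / 6.0)  # Equation (6)
    noise_sd = math.sqrt(max(0.0, 1.0 - rho * rho))
    phi_inv = NormalDist().inv_cdf
    return [rho * phi_inv((rank - 0.5) / n)     # Equation (9)
            + rng.gauss(0.0, noise_sd)          # Equation (10), scalar case
            for rank in ranks(s)]

def reverse_map_one(x, y_star):
    """Give the record holding the i-th smallest y* the i-th smallest x."""
    y = [None] * len(x)
    by_rank = sorted(range(len(y_star)), key=lambda i: y_star[i])
    for pos, value in zip(by_rank, sorted(x)):
        y[pos] = value
    return y

# Usage: the data owner supplies r_xs; the scores need no confidential values
rng = random.Random(7)
s = [rng.gauss(0.0, 1.0) for _ in range(2000)]
x = [0.8 * v + 0.6 * rng.gauss(0.0, 1.0) for v in s]
y = reverse_map_one(x, perturbed_normal_scores(s, spearman(x, s), seed=11))
```

In this example the shuffled Y contains exactly the original values of X, and its rank-order correlation with S should be close to that of X with S.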

The data shuffling approach does not require the

marginal distributions of X and S to be identified, nor

does it require any of the actual values in the data set

to be used in the process of masking. The only data

that is required is the rank-order correlation matrix of

the original variables and the ranks of the values of

the nonconfidential variables $s_{(i)k}$. In many cases, the

owners of the data set may not have the necessary

expertise to perform the shuffling. The nonparametric

data shuffling approach provides a methodology by

which the shuffling can be performed securely using a

third party that would never have access to any actual

confidential data values.

4.2.

Impact of Sample Size and Sampling Error on Data Shuffling

As with other techniques, data shuffling adds

variability to parameter estimates, potentially affecting statistical inferences. This variability arises from

the noise term used to generate perturbed values

from the conditional distribution fXS X S and the

reverse mapping process. The variability is likely very

small for large data sets, but may be significant for

small data sets. The extent of this variability is difficult to predict theoretically. We provide an empirical evaluation in the next section. Just as perturbation

procedures have been modified to account for such

variability (Rubin 1993, Fienberg et al. 1998a, Burridge

2003), modifications may be developed for the data

shuffling procedure as well.

In summary, we view the data shuffling procedure presented in this study to be part of the evolution of data swapping. Data perturbation approaches

have also evolved from simple noise addition to

current approaches. As Fienberg (2002) suggests, an

important step in this evolution is developing a data

swapping technique with underlying principles that

are the same as perturbation techniques. Data shuffling combines the most recent (rank-based) copula

perturbation approach with the most recent swapping

approach (C&S 2002 method).³

3

Although we originally proposed data shuffling independently

of C&S (2002), we believe that the rank-ordered swapping of the

marginal represents the common link between the two methods.


5.

An Empirical Comparison of Data Swapping Methods

In this section, we describe simulation experiments

to evaluate the relative performances of data swapping, the C&S (2002) method, and data shuffling. Data

swapping requires specifying a masking (or proximity) parameter. Three different values of the proximity parameter were used ($P(a)$ = 10%, 50%, and 100%),

hereafter referred to as DS10%, DS50%, and DS100%.

Two sets of experiments were conducted to evaluate

data utility and disclosure risk.

5.1.

Impact of Data Set Size on Utility of Masked Data

The objective of the first experiment was to investigate the impact of the size of the data set on

the product moment correlation of the masked

data compared to the original data (similar to the

experiment in C&S 2002, §5.4, p. 48). In the experiment, a data set of size $n \in \{30, 100, 300, 1000\}$

was generated from a bivariate normal distribution with a specified population correlation coefficient $\rho \in \{0.0, 0.2, 0.4, 0.6, 0.8, 0.95\}$. It was assumed

that both variables in the data set were to be masked.

Five different masked data sets (C&S 2002 method,

data shuffling, and three different swaps) were generated. In implementing the C&S (2002) method, in

addition to the generated data set, it was assumed

that a second independent data set of the same size

was available (and generated for this purpose). The

rank-ordered values of both variables in the first set

were swapped for the rank-ordered values of the

respective variables in the second set. We chose this

implementation because C&S (2002) show that this

procedure results in lower bias than the swapping

from two different data sets. As in the C&S study,

we measured both the bias (which results in attenuation in correlation) and standard error (SE) of bias

using the sample correlation coefficient as the benchmark (see C&S 2002, p. 49). We replicated each combination of data set size and correlation coefficient

10,000 times, and computed the average bias and SE

of the sample correlation coefficient from the 10,000

replications.
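The structure of this experiment can be sketched in Python for any masking function that is applied to each variable separately. The example plugs in a completely random permutation of each column, which approximates DS100% (values swapped essentially at random); the other methods would be substituted for `random_swap`. Taking the SE to be the standard deviation of the bias across replications is our assumption, the replication count in the usage line is far smaller than the 10,000 used in the study, and all function names are hypothetical.

```python
import math
import random

def pearson(a, b):
    """Sample product moment correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(va * vb)

def random_swap(column, rng):
    """Mask one variable by randomly permuting its values (approximates DS100%)."""
    masked = list(column)
    rng.shuffle(masked)
    return masked

def correlation_bias(mask, rho, n, reps, seed=0):
    """Mean bias and SE of the masked correlation, benchmarked on the sample correlation."""
    rng = random.Random(seed)
    biases = []
    for _ in range(reps):
        x1 = [rng.gauss(0.0, 1.0) for _ in range(n)]
        x2 = [rho * a + math.sqrt(1.0 - rho ** 2) * rng.gauss(0.0, 1.0) for a in x1]
        y1, y2 = mask(x1, rng), mask(x2, rng)             # both variables are masked
        biases.append(pearson(y1, y2) - pearson(x1, x2))  # attenuation relative to the sample
    mean_bias = sum(biases) / reps
    se = math.sqrt(sum((b - mean_bias) ** 2 for b in biases) / (reps - 1))
    return mean_bias, se

# Usage: one cell of the design (rho = 0.8, n = 100)
mean_bias, se = correlation_bias(random_swap, 0.8, 100, 200, seed=1)
```

With a random permutation the masked correlation is centered near zero, so the bias is close to the negative of the specified correlation, consistent with the behavior described for DS100%.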

Table 1 shows the results of the simulation experiment, which are almost identical to the results provided by C&S (2002, Tables 2–5, pp. 50–51). In terms

of data utility, both the C&S (2002) method and data

shuffling show attenuation in sample correlation coefficient for small n that quickly approaches zero as

n increases. For all three swapping methods, the

attenuation is higher and does not approach zero as

n increases. The attenuation is highest (and almost

equal to the specified correlation) for DS100%, followed by DS50%, and DS10%. This is to be expected

since DS100% represents the case where the values are


swapped randomly and almost completely destroys

any relationships that might have existed.

The results are similar when we evaluate SE. For small n, the SE is rather high even for the C&S (2002) method and data shuffling; Fienberg (2002) observes the same for the C&S (2002) method. This indicates that even though both the C&S method and data shuffling maintain correlations between variables asymptotically, the correlation attenuation in the masked data could be significant for small data sets. Hence, for small data sets, these methods must be used with caution. The SEs of DS10%, DS50%, and DS100% are higher than those observed for the C&S (2002) method and data shuffling. Thus, purely from the perspective of data utility as measured by the attenuation in correlation and the SE, it is hard to distinguish between data shuffling and the C&S (2002) method. In terms of relative performance, data shuffling and the C&S (2002) method perform best, followed by DS10%, DS50%, and DS100%.

The similarity in performance of the C&S (2002) method and data shuffling for the multivariate normal case is not surprising, because the shuffled values are generated from the true conditional distribution. In general, the C&S (2002) method makes no assumptions regarding the joint distribution of X and S, so it may provide better data utility than the data shuffling procedure. However, in data shuffling, the values of the confidential variables are perturbed prior to the rank-ordered replacement; this is not the case for the C&S (2002) method. As the following discussion shows, this difference has a major impact on the disclosure risk of the two methods.
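The two steps contrasted above (perturb first, then perform the rank-ordered replacement) can be illustrated with the sketch below. It assumes a normal copula and is not the authors' exact specification: Spearman correlations are mapped to normal-copula correlations with 2 sin(πr/6), the nonconfidential variables are converted to normal scores through average ranks, perturbed scores are drawn from the conditional normal distribution given those scores, and the original confidential values are then assigned by the ranks of the perturbed scores. The names `average_ranks` and `data_shuffle`, the rank-to-score mapping, and the tie handling are all illustrative assumptions.

```python
import numpy as np
from statistics import NormalDist

def average_ranks(v: np.ndarray) -> np.ndarray:
    """1-based ranks of a 1-D array; tied values share the average of their ranks."""
    _, inverse, counts = np.unique(v, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)              # highest rank within each tie group
    starts = ends - counts + 1            # lowest rank within each tie group
    return ((starts + ends) / 2.0)[inverse]

def data_shuffle(x: np.ndarray, s: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Sketch: x is n x p (confidential), s is n x q (nonconfidential, q may be 0)."""
    n, p = x.shape
    data = np.hstack([x, s])
    ranks = np.column_stack([average_ranks(data[:, j]) for j in range(data.shape[1])])
    spearman = np.atleast_2d(np.corrcoef(ranks, rowvar=False))
    r = 2.0 * np.sin(np.pi * spearman / 6.0)        # assumed Spearman -> normal-copula mapping
    r_xx, r_xs, r_ss = r[:p, :p], r[:p, p:], r[p:, p:]
    if s.shape[1] > 0:
        inv_cdf = np.vectorize(NormalDist().inv_cdf)
        s_star = inv_cdf((ranks[:, p:] - 0.5) / n)  # normal scores of the nonconfidential variables
        b = np.linalg.solve(r_ss, r_xs.T).T         # coefficients of E[X* | S*]
        cond_mean = s_star @ b.T
        cond_cov = r_xx - b @ r_xs.T
    else:                                           # S null: X* is generated independently
        cond_mean = np.zeros((n, p))
        cond_cov = r_xx
    cond_cov = (cond_cov + cond_cov.T) / 2.0        # guard against rounding asymmetry
    # Step 1: perturb, i.e., draw normal scores Y* from the conditional distribution of X* given S*.
    y_star = cond_mean + rng.multivariate_normal(np.zeros(p), cond_cov, size=n)
    # Step 2: rank-ordered replacement; the record with the r-th smallest Y*_j receives
    # the r-th smallest original x_j, so every column is a permutation of the original values.
    y = np.empty((n, p))
    for j in range(p):
        y[np.argsort(y_star[:, j]), j] = np.sort(x[:, j])
    return y
```

The released column is always a permutation of the original values, so the marginal distribution is unchanged. Because Y* is drawn independently of X given S, the masked value carries no information about the original value beyond what S already provides.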

5.2. Assessing Risk of Value Disclosure

In this experiment, as a surrogate measure of value disclosure, we computed the proportion of variability in each confidential variable that is explained by the corresponding masked variable ($R^2_{X_1 \cdot Y_1}$ and $R^2_{X_2 \cdot Y_2}$) for all five masking methods (Fuller 1993). To satisfy Equation (3), it is necessary that $R^2_{X \cdot S,Y} = R^2_{X \cdot S}$. In this case, since S is null, minimizing disclosure risk requires $R^2_{X_1 \cdot Y_1} = R^2_{X_2 \cdot Y_2} = 0$. Table 2 provides the average $R^2_{X_1 \cdot Y_1}$ and $R^2_{X_2 \cdot Y_2}$ resulting from each combination of correlation coefficient and data set size for all five masking methods.
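For a single masked variable, the proportion of variability explained is the R² of a simple linear regression of the confidential variable on the masked variable, which equals the squared Pearson correlation. The sketch below computes it both ways to show the equivalence; the function names are hypothetical, and the random permutation used in the example is only a loose stand-in for completely random swapping, not the DS100% procedure itself.

```python
import numpy as np

def r2_corr(x: np.ndarray, y: np.ndarray) -> float:
    """R^2 of x regressed on y in a simple linear regression = squared Pearson correlation."""
    r = np.corrcoef(x, y)[0, 1]
    return float(r * r)

def r2_regression(x: np.ndarray, y: np.ndarray) -> float:
    """Same quantity computed directly as 1 - SSE/SST from a least-squares fit of x on y."""
    slope, intercept = np.polyfit(y, x, 1)
    resid = x - (intercept + slope * y)
    centered = x - x.mean()
    return float(1.0 - resid @ resid / (centered @ centered))
```

With `x = rng.standard_normal(1000)`, `r2_corr(x, x)` is 1 (no masking), while `r2_corr(x, rng.permutation(x))` is close to 0, which is the value required when S is null.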

Table 1

Bias and Standard Error Resulting from Simulation Experiment

| Data set size | ρ | C&S (2002) Bias | C&S (2002) SE | Data shuffling Bias | Data shuffling SE | Data swapping (10%) Bias | Data swapping (10%) SE | Data swapping (50%) Bias | Data swapping (50%) SE | Data swapping (100%) Bias | Data swapping (100%) SE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 30 | 0.00 | −0.000641 | 0.066915 | −0.001352 | 0.068458 | 0.000551 | 0.108844 | −0.001045 | 0.233072 | −0.000950 | 0.263763 |
| | 0.20 | −0.013962 | 0.066355 | −0.013758 | 0.067250 | −0.032443 | 0.107363 | −0.157984 | 0.225624 | −0.200020 | 0.259527 |
| | 0.40 | −0.027262 | 0.063791 | −0.026777 | 0.063063 | −0.065604 | 0.101812 | −0.307165 | 0.217107 | −0.396881 | 0.245209 |
| | 0.60 | −0.036869 | 0.057457 | −0.037706 | 0.057452 | −0.095700 | 0.093522 | −0.460840 | 0.202012 | −0.594337 | 0.224294 |
| | 0.80 | −0.043728 | 0.046396 | −0.043730 | 0.046919 | −0.118208 | 0.079000 | −0.610875 | 0.186656 | −0.793003 | 0.201219 |
| | 0.95 | −0.034909 | 0.030778 | −0.035300 | 0.031542 | −0.129913 | 0.062850 | −0.723215 | 0.177532 | −0.946191 | 0.189804 |
| 100 | 0.00 | −0.000107 | 0.022496 | −0.000260 | 0.022872 | −0.000989 | 0.050805 | −0.001064 | 0.124219 | −0.000050 | 0.142618 |
| | 0.20 | −0.005065 | 0.022104 | −0.005090 | 0.022358 | −0.025164 | 0.050074 | −0.149044 | 0.121077 | −0.198753 | 0.140435 |
| | 0.40 | −0.010030 | 0.021204 | −0.009880 | 0.021248 | −0.049637 | 0.047616 | −0.296934 | 0.116631 | −0.397648 | 0.133167 |
| | 0.60 | −0.014155 | 0.019108 | −0.014178 | 0.019010 | −0.071887 | 0.042576 | −0.441791 | 0.106818 | −0.597483 | 0.120205 |
| | 0.80 | −0.016616 | 0.015279 | −0.016775 | 0.015314 | −0.090536 | 0.036116 | −0.582097 | 0.096673 | −0.797881 | 0.106751 |
| | 0.95 | −0.013024 | 0.008828 | −0.013062 | 0.009120 | −0.099643 | 0.028103 | −0.684039 | 0.089905 | −0.950754 | 0.102096 |
| 300 | 0.00 | −0.000018 | 0.007890 | 0.000340 | 0.007869 | 0.000105 | 0.027860 | 0.001819 | 0.069805 | 0.001969 | 0.082571 |
| | 0.20 | −0.002102 | 0.007752 | −0.001658 | 0.007840 | −0.022744 | 0.027705 | −0.146747 | 0.067926 | −0.199422 | 0.080740 |
| | 0.40 | −0.004086 | 0.007396 | −0.003557 | 0.007498 | −0.045264 | 0.026525 | −0.291108 | 0.066483 | −0.398856 | 0.075039 |
| | 0.60 | −0.005704 | 0.006826 | −0.005220 | 0.006721 | −0.065764 | 0.023619 | −0.434570 | 0.059363 | −0.599187 | 0.070232 |
| | 0.80 | −0.006736 | 0.005494 | −0.006326 | 0.005365 | −0.082758 | 0.019968 | −0.575702 | 0.054769 | −0.800379 | 0.061630 |
| | 0.95 | −0.005026 | 0.002974 | −0.004868 | 0.002844 | −0.091490 | 0.014765 | −0.674581 | 0.051489 | −0.948211 | 0.058183 |
| 1,000 | 0.00 | −0.000077 | 0.002642 | 0.000031 | 0.002622 | 0.000070 | 0.015727 | −0.001482 | 0.038503 | 0.000286 | 0.042941 |
| | 0.20 | −0.000823 | 0.002587 | −0.000745 | 0.002598 | −0.022539 | 0.014937 | −0.147750 | 0.038802 | −0.200895 | 0.042614 |
| | 0.40 | −0.001332 | 0.000006 | −0.001518 | 0.000006 | −0.043130 | 0.000196 | −0.292616 | 0.001266 | −0.402256 | 0.001493 |
| | 0.60 | −0.001884 | 0.002223 | −0.001976 | 0.002264 | −0.064120 | 0.012245 | −0.435326 | 0.032524 | −0.600270 | 0.035648 |
| | 0.80 | −0.002268 | 0.001793 | −0.002264 | 0.001676 | −0.079229 | 0.010176 | −0.572165 | 0.030401 | −0.799787 | 0.032682 |
| | 0.95 | −0.001740 | 0.000916 | −0.001675 | 0.000884 | −0.087542 | 0.007910 | −0.670493 | 0.027450 | −0.949409 | 0.031424 |

Table 2

Value Disclosure Results from Simulation Experiment (proportion of variability in $X_i$ explained by $Y_i$)

| Data set size | ρ | C&S (2002) X1, Y1 | C&S (2002) X2, Y2 | Data shuffling X1, Y1 | Data shuffling X2, Y2 | Data swapping (10%) X1, Y1 | Data swapping (10%) X2, Y2 | Data swapping (50%) X1, Y1 | Data swapping (50%) X2, Y2 | Data swapping (100%) X1, Y1 | Data swapping (100%) X2, Y2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 30 | 0.00 | 0.934529 | 0.934854 | 0.000000 | 0.000001 | 0.828411 | 0.829045 | 0.215126 | 0.218040 | 0.001025 | 0.001145 |
| | 0.20 | 0.934529 | 0.934612 | 0.000000 | 0.000005 | 0.828411 | 0.829571 | 0.215138 | 0.217683 | 0.001025 | 0.001127 |
| | 0.40 | 0.934529 | 0.934200 | 0.000000 | 0.000000 | 0.828411 | 0.829046 | 0.215148 | 0.217049 | 0.001025 | 0.001121 |
| | 0.60 | 0.935262 | 0.935353 | 0.000003 | 0.000024 | 0.827240 | 0.828568 | 0.215452 | 0.216955 | 0.001467 | 0.001129 |
| | 0.80 | 0.935456 | 0.935104 | 0.000001 | 0.000001 | 0.829357 | 0.829117 | 0.215700 | 0.217574 | 0.001096 | 0.000835 |
| | 0.95 | 0.934534 | 0.934669 | 0.000003 | 0.000000 | 0.828431 | 0.828306 | 0.214481 | 0.217094 | 0.000955 | 0.001187 |
| 100 | 0.00 | 0.975144 | 0.974946 | 0.000000 | 0.000017 | 0.871473 | 0.871500 | 0.256386 | 0.257803 | 0.000108 | 0.000097 |
| | 0.20 | 0.975167 | 0.974961 | 0.000002 | 0.000001 | 0.871176 | 0.871048 | 0.256094 | 0.257500 | 0.000107 | 0.000121 |
| | 0.40 | 0.975144 | 0.974972 | 0.000001 | 0.000000 | 0.871473 | 0.871071 | 0.256386 | 0.257524 | 0.000108 | 0.000102 |
| | 0.60 | 0.975167 | 0.974993 | 0.000000 | 0.000005 | 0.871176 | 0.871274 | 0.256094 | 0.257529 | 0.000107 | 0.000126 |
| | 0.80 | 0.975139 | 0.974975 | 0.000000 | 0.000001 | 0.871086 | 0.871613 | 0.255945 | 0.257399 | 0.000113 | 0.000101 |
| | 0.95 | 0.975131 | 0.975073 | 0.000000 | 0.000000 | 0.871382 | 0.871811 | 0.256096 | 0.257585 | 0.000112 | 0.000100 |
| 300 | 0.00 | 0.990210 | 0.990387 | 0.000001 | 0.000000 | 0.882647 | 0.883192 | 0.266160 | 0.267111 | 0.000012 | 0.000006 |
| | 0.20 | 0.990230 | 0.990459 | 0.000004 | 0.000003 | 0.882726 | 0.883334 | 0.266230 | 0.267310 | 0.000012 | 0.000006 |
| | 0.40 | 0.990233 | 0.990371 | 0.000004 | 0.000001 | 0.882849 | 0.883464 | 0.266252 | 0.267737 | 0.000014 | 0.000006 |
| | 0.60 | 0.990213 | 0.990316 | 0.000006 | 0.000005 | 0.882670 | 0.883645 | 0.266144 | 0.267684 | 0.000013 | 0.000006 |
| | 0.80 | 0.990236 | 0.990177 | 0.000000 | 0.000002 | 0.882766 | 0.883278 | 0.266151 | 0.267329 | 0.000012 | 0.000006 |
| | 0.95 | 0.990222 | 0.990117 | 0.000000 | 0.000001 | 0.882731 | 0.882752 | 0.266178 | 0.267108 | 0.000013 | 0.000005 |
| 1,000 | 0.00 | 0.996733 | 0.996673 | 0.000000 | 0.000000 | 0.887913 | 0.886925 | 0.272723 | 0.271392 | 0.000000 | 0.000033 |
| | 0.20 | 0.996668 | 0.996644 | 0.000001 | 0.000009 | 0.887913 | 0.886911 | 0.272723 | 0.271262 | 0.000000 | 0.000032 |
| | 0.40 | 0.996650 | 0.996619 | 0.000000 | 0.000005 | 0.887913 | 0.887015 | 0.272723 | 0.271304 | 0.000000 | 0.000031 |
| | 0.60 | 0.996651 | 0.996584 | 0.000000 | 0.000000 | 0.887935 | 0.887288 | 0.272724 | 0.271375 | 0.000000 | 0.000032 |
| | 0.80 | 0.996690 | 0.996558 | 0.000000 | 0.000001 | 0.887943 | 0.887658 | 0.272744 | 0.271563 | 0.000000 | 0.000030 |
| | 0.95 | 0.996739 | 0.996637 | 0.000001 | 0.000000 | 0.887913 | 0.887864 | 0.272723 | 0.271674 | 0.000000 | 0.000030 |

From Table 2 it is evident that the C&S (2002) method provides practically no security against risk of value disclosure. Even for a data set of size 30, the proportion of variability in the confidential variable explained by the masked variable is more than 93% in all cases. When the data set size is 1,000, this proportion is more than 99.6% in all cases. The poor disclosure risk performance of the C&S (2002) method is not surprising. C&S (2002, p. 38) observe, "Furthermore, since $E(X_{(r),1}) = E(X_{(r),2})$, one would expect $X_{(r),1}$ to be approximately equal to $X_{(r),2}$ for large $n$." Conversely, this statement also means that the masked value would be approximately equal to the original value. As the results in Table 2 show, for large n the level of masking is negligible, resulting in high risk of value disclosure. By contrast, Table 2 shows that data shuffling provides good security against risk of value disclosure. In almost all cases, the proportion of variability in the confidential variable explained by the masked variable is close to 0. The performance of DS100% is comparable to that of data shuffling, with R² close to 0 in all cases. Thus, data shuffling and DS100% almost completely eliminate risk of value disclosure. DS50% and DS10% provide some information to the intruder (the proportion of variability explained is greater than 0), but perform better than the C&S (2002) method.
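The C&S (2002) observation quoted above can be checked numerically. In the sketch below, each original value is replaced by the same-rank order statistic of an independent sample from the same distribution; as n grows, matching order statistics converge, so the R² between original and masked values approaches 1, the same pattern seen in the C&S columns of Table 2. This is a minimal sketch assuming a single standard normal variable; the function name is hypothetical.

```python
import numpy as np

def cs_value_disclosure(n: int, reps: int = 200, seed: int = 1) -> float:
    """Average R^2 between an original variable and its C&S-style masked version."""
    rng = np.random.default_rng(seed)
    r2 = np.empty(reps)
    for i in range(reps):
        x = rng.standard_normal(n)            # original confidential values
        z = rng.standard_normal(n)            # independent second sample, same distribution
        y = np.empty(n)
        y[np.argsort(x)] = np.sort(z)         # r-th order statistic of x replaced by r-th of z
        r2[i] = np.corrcoef(x, y)[0, 1] ** 2
    return float(r2.mean())
```

Calling `cs_value_disclosure(n)` for n = 30, 100, 300, and 1,000 shows the average R² rising toward 1, which is why the masking becomes negligible for large data sets.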

5.3. Assessing Risk of Identity Disclosure

We conducted an additional simulation experiment to assess the risk of identity disclosure. In this simulation, a multivariate normal data set with k variables and n observations was generated with a mean vector of 0 and an identity covariance matrix. As before, the data were masked using the five masking methods. For each observation, we assumed that the intruder has the vector of original (unmasked) values. Using this information, the intruder attempts to match the true value vector to the released masked data (see Fuller 1993, p. 388, Equation 11). The procedure is then repeated for each observation, and the percentage of successful matches for each masking technique is recorded. The entire procedure was replicated 1,000 times. The simulation experiment was conducted for n = 30, 100, 300, and 1,000 and for k = 2 to 6. This assessment of disclosure risk is similar to those used by Fuller (1993) and Winkler (1998).
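The matching step can be sketched with a simple nearest-neighbor rule. This is an assumption for illustration, not necessarily the exact criterion of Fuller (1993, Equation 11): each original vector is linked to the released record at the smallest Euclidean distance, and a match counts as successful when that record is its own. With the identity covariance matrix used in this design, Mahalanobis distance reduces to Euclidean distance. The function names are hypothetical, and `random_swap_all` is only a stand-in for fully random swapping.

```python
import numpy as np

def reidentification_rate(x: np.ndarray, y: np.ndarray) -> float:
    """Share of records i whose original vector x[i] is closest to released record y[i]."""
    d2 = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=2)   # d2[i, m] = ||x_i - y_m||^2
    return float(np.mean(d2.argmin(axis=1) == np.arange(x.shape[0])))

def random_swap_all(x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical stand-in for fully random swapping: permute each column independently."""
    return np.column_stack([rng.permutation(x[:, j]) for j in range(x.shape[1])])
```

Releasing the original data gives a rate of 1, lightly perturbed data gives a rate near 1, and independently permuted columns give a rate near 1/n, the chance level used as the benchmark below.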

The results of the simulation experiment, provided in Table 3, clearly show that the C&S (2002) method performs poorly in preventing identity disclosure. Even with just two variables, an intruder could reidentify more than 60% of all observations in the data set. With five or more variables, the percentage of observations reidentified exceeds 99% for all data sets. In these cases, the C&S (2002) method provides practically no security. By contrast, data shuffling provides excellent security against risk of identity disclosure. In almost all cases, the percentage of observations reidentified is close to 1/n, the probability of reidentification by chance alone. For the results of data shuffling, we performed a hypothesis test with the null hypothesis that the proportion of reidentified observations equals 1/n versus the alternative hypothesis that it is greater than 1/n. In all cases, we were unable to reject the null hypothesis, indicating that the proportion of reidentified observations is not significantly different from 1/n.

The reidentification results for the three data swapping techniques follow the expected pattern. DS100% provides the best results, almost comparable to those of data shuffling, followed by DS50% and, finally, DS10%. However, all three data swapping procedures provide higher security than the C&S (2002) method.

An intruder could use more sophisticated techniques for reidentification, such as those described in Winkler (1995a, b) and Fienberg et al. (1997). However, given that data shuffling and DS100% outperform the other methods by a significant margin, our overall conclusion is unlikely to change.

Table 3

Identity Disclosure Results (all entries are percentages of observations reidentified)

| Data set size | Number of variables | C&S (2002) method | Data shuffle | Data swapping (10%) | Data swapping (50%) | Data swapping (100%) |
|---|---|---|---|---|---|---|
| 30 | 2 | 72.42 | 3.55 | 60.04 | 26.61 | 4.29 |
| | 3 | 93.66 | 3.98 | 82.43 | 45.75 | 3.98 |
| | 4 | 98.57 | 4.20 | 91.05 | 56.06 | 4.03 |
| | 5 | 99.73 | 4.25 | 94.83 | 61.02 | 4.10 |
| | 6 | 99.97 | 3.89 | 96.66 | 61.82 | 4.10 |
| 100 | 2 | 66.46 | 1.03 | 55.32 | 10.37 | 1.14 |
| | 3 | 93.85 | 1.03 | 87.15 | 26.95 | 1.14 |
| | 4 | 99.01 | 1.06 | 96.56 | 50.32 | 1.21 |
| | 5 | 99.86 | 1.11 | 99.11 | 70.98 | 1.24 |
| | 6 | 99.96 | 1.01 | 99.61 | 84.31 | 1.21 |
| 300 | 2 | 62.62 | 0.32 | 48.68 | 3.90 | 0.38 |
| | 3 | 95.13 | 0.34 | 89.51 | 11.55 | 0.38 |
| | 4 | 99.44 | 0.35 | 98.33 | 27.26 | 0.47 |
| | 5 | 99.93 | 0.35 | 99.74 | 50.55 | 0.40 |
| | 6 | 99.99 | 0.32 | 99.94 | 72.40 | 0.42 |
| 1,000 | 2 | 61.18 | 0.11 | 37.01 | 1.33 | 0.11 |
| | 3 | 96.93 | 0.09 | 88.97 | 4.43 | 0.12 |
| | 4 | 99.66 | 0.10 | 98.63 | 11.72 | 0.12 |
| | 5 | 99.97 | 0.10 | 99.88 | 25.50 | 0.12 |
| | 6 | 100.00 | 0.12 | 99.99 | 46.07 | 0.13 |

The results in Tables 2 and 3 also confirm another important aspect of data shuffling. A key aspect of perturbation based on the conditional distribution approach is the conditional independence of X and Y given S. The results in Tables 2 and 3 confirm empirically that the conditional independence assumption holds well even for small data sets. Hence, data shuffling provides very high security against risk of both value and identity disclosure.

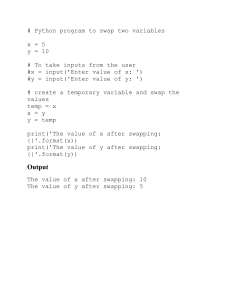

We also constructed the R-U confidentiality map (Duncan et al. 2001; see Figure 1) to illustrate the relative performance of the different methods for n = 100 and a specified population correlation coefficient ρ = 0.4. As a surrogate measure of data utility, we used the percentage absolute bias, |Bias|/0.4. We used value disclosure as the measure of disclosure risk. The R-U confidentiality map clearly shows that data shuffling is superior to the other approaches. For the data swapping methods, a trade-off appears between data utility and disclosure risk. The C&S (2002) method provides high data utility but also high disclosure risk. Data shuffling is the only method that simultaneously provides the highest data utility and the lowest disclosure risk. Although we present only one graph, it can easily be verified that other combinations of n and ρ would provide similar results. In conclusion, data shuffling is preferable to the other techniques used in this study.
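The two coordinates of the R-U map can be recomputed from the Table 1 and Table 2 entries for n = 100 and ρ = 0.4. The snippet below only transcribes those published values and does arithmetic on them. How the two R² values (for X1 and X2) were combined in the figure is not stated, so averaging them is an assumption of this sketch, and the dominance check is just one illustrative way of reading the map; `ru_point` and `dominated_by` are hypothetical names.

```python
# Values transcribed from Tables 1 and 2 (n = 100, rho = 0.4).
BIAS = {
    "C&S (2002)": -0.010030,
    "Data shuffling": -0.009880,
    "Data swap 10%": -0.049637,
    "Data swap 50%": -0.296934,
    "Data swap 100%": -0.397648,
}
R2 = {  # (X1, Y1), (X2, Y2)
    "C&S (2002)": (0.975144, 0.974972),
    "Data shuffling": (0.000001, 0.000000),
    "Data swap 10%": (0.871473, 0.871071),
    "Data swap 50%": (0.256386, 0.257524),
    "Data swap 100%": (0.000108, 0.000102),
}

def ru_point(method: str, rho: float = 0.4) -> tuple[float, float]:
    """(percentage absolute bias, value disclosure risk in %); averaging R^2 is assumed."""
    utility_loss = abs(BIAS[method]) / rho * 100.0
    risk = sum(R2[method]) / len(R2[method]) * 100.0
    return utility_loss, risk

def dominated_by(method: str) -> list[str]:
    """Methods at least as good on both axes and strictly better on at least one."""
    u, r = ru_point(method)
    better = []
    for other in BIAS:
        if other == method:
            continue
        ou, orisk = ru_point(other)
        if ou <= u and orisk <= r and (ou < u or orisk < r):
            better.append(other)
    return better

for m in BIAS:
    u, r = ru_point(m)
    print(f"{m:15s} |bias|/rho = {u:6.2f}%   mean R^2 = {r:6.2f}%")
```

On these numbers no method dominates data shuffling, while data shuffling dominates every other method, including the C&S (2002) method, whose percentage absolute bias is only slightly larger but whose disclosure risk is near 100%.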

Figure 1

R-U Confidentiality Map for n = 100 and ρ = 0.40 (horizontal axis: absolute bias; vertical axis: value disclosure risk; plotted methods: C&S (2002) method, data swap 10%, data swap 50%, data swap 100%, and data shuffling)

6. Sensitivity of Data Shuffling to Data Set Characteristics

We conducted extensive simulation experiments to investigate the sensitivity of data shuffling to the number of nonconfidential variables in the data set and to the underlying distribution of the confidential variables. Note that data shuffling was implemented using only the values of the nonconfidential variables and the rank-order correlations among the variables. No prior information regarding the confidential variables was assumed, and no attempt was made to estimate the marginal distribution or other characteristics of any of the variables. In the simulation experiments, the number of nonconfidential variables was set to 2, 4, or 6. All nonconfidential variables were assumed to be binary key variables and to be independent of one another. The number of confidential variables was specified as two. Several distributions were used to generate the confidential variables (normal, gamma, lognormal, and Weibull). Three levels of correlation (0.0, 0.20, and 0.30) were specified for the relationships between the confidential variables and between the nonconfidential and confidential variables. The rank-order correlations between nonconfidential variables were specified as 0.0. Four different sample sizes were used (30, 100, 300, and 1,000). The data were generated using the procedure suggested by Clemen and Reilly (1999). Note that it is not possible to generate data sets for all correlation values with 4 and 6 categorical variables. For each generated data set, the rank-order correlation between each nonconfidential and confidential variable was computed for the original and masked data, and the difference between the two was recorded. The entire experiment was repeated 10,000 times. Because the objective was to investigate the sensitivity of data shuffling, only this procedure was implemented.
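Data of the kind described above can be generated with a normal copula in the spirit of Clemen and Reilly (1999). The sketch below is an assumption-laden illustration, not the authors' generator: it draws correlated standard normals, dichotomizes two of them at 0 to obtain independent binary keys with P(S = 1) = 0.5, and maps the other two through inverse CDFs to a lognormal and a Weibull variable. Target Spearman correlations are converted with 2 sin(πr/6), which is exact only for pairs of continuous variables; the rank correlations involving the binary keys will not equal their targets. The Weibull shape and scale values and the names `spearman_to_normal` and `generate` are hypothetical.

```python
import numpy as np
from statistics import NormalDist

_PHI = np.vectorize(NormalDist().cdf)

def spearman_to_normal(r_s: np.ndarray) -> np.ndarray:
    """Normal-copula correlation that yields Spearman r_s for continuous margins."""
    return 2.0 * np.sin(np.pi * r_s / 6.0)

def generate(n: int, r_s: np.ndarray, rng: np.random.Generator,
             weibull_shape: float = 1.5, weibull_scale: float = 1.0):
    """Columns of the latent normal vector: S1, S2 (binary keys), X1 (lognormal), X2 (Weibull)."""
    z = rng.multivariate_normal(np.zeros(4), spearman_to_normal(r_s), size=n)
    s = (z[:, :2] > 0.0).astype(int)           # binary keys, P(S = 1) = 0.5 (assumed)
    x1 = np.exp(z[:, 2])                        # lognormal: inverse CDF is exp(Phi^{-1}(u))
    u = _PHI(z[:, 3])                           # uniform scores for the Weibull margin
    x2 = weibull_scale * (-np.log1p(-u)) ** (1.0 / weibull_shape)   # Weibull inverse CDF
    return s, np.column_stack([x1, x2])
```

Because every margin is obtained by a monotone transform of a latent normal score, the rank-order correlation between the two continuous confidential variables matches its target regardless of which marginal distributions are chosen.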

Table 4 provides selected results of the experiment for all three correlation levels, two sample sizes (30 and 100), and one specification for the marginal distributions of the confidential variables (Gamma and Lognormal). The results in Table 4 show no systematic attenuation in the rank-order correlation: the difference between the correlation of the original and masked data is negative in some cases and positive in others. The results also indicate that both bias and standard error approach zero as the sample size increases. Table 4 also shows that, for a given sample size and correlation, the bias and SE are essentially the same regardless of the number of nonconfidential variables. The results of the other experiments also verified this conclusion. A comparison of the results across experiments using different specifications for the marginal distribution of the confidential variables also revealed no differences. This is not surprising, because we do not need information regarding the marginal distribution of the variables to perform data shuffling; we need only the ranks of the nonconfidential variables. As with the nonconfidential variables, the bias and SE were inversely related to sample size and independent of the marginal distribution of the confidential variables. Hence, we can conclude that, in terms of maintaining relationships among variables, data shuffling is not sensitive to the number of nonconfidential variables or to the underlying distribution of the confidential variables.

Table 4

Sensitivity of Data Shuffling in Maintaining Rank-Order Correlation

ρ = 0.0

k

Rank-order

correlation

between

n = 30

ρ = 0.2

n = 100

n = 30

ρ = 0.30

n = 100

n = 30

Bias

SE

Bias

SE

Bias

SE

Bias

SE

Bias

SE

Bias

SE

00041

00045

00039

00039

−00044

0.0420

0.0421

0.0424

0.0418

0.0629

00064

00065

00066

00060

−00003

0.0205

0.0204

0.0206

0.0202

0.0303

2

S1

S1

S2

S2

X1

& X1

& X2

& X1

& X2

& X2

−00003

−00001

00001

00005

−00002

0.0452

0.0452

0.0454

0.0453

0.0635

−00001

00001

−00001

00003

−00001

0.0222

0.0222

0.0226

0.0225

0.0312

00017

00019

00024

00028

−00041

0.0440

0.0439

0.0449

0.0442

0.0635

00038

00035

00031

00034

−00007

0.0215

0.0217

0.0215

0.0212

0.0316

4

S 1 & X1

S 1 & X2

S 2 & X1

S 2 & X2

S 3 & X1

S 3 & X2

S 4 & X1

S 4 & X2

X 1 & X2

00001

−00002

00000

00003

−00001

00000

00003

00007

−00005

0.0458

0.0454

0.0464

0.0458

0.0455

0.0454

0.0460

0.0456

0.0633

−00003

−00002

00002

00003

00000

−00004

00001

−00001

00000

0.0228

0.0224

0.0229

0.0227

0.0223

0.0226

0.0226

0.0226

0.0312

00035

00026

00017

00015

00031

00032

00022

00018

−00022

0.0440

0.0438

0.0438

0.0439

0.0433

0.0435

0.0432

0.0442

0.0629

00036

00037

00038

00038

00034

00036

00039

00038

−00001

0.0216

0.0218

0.0218

0.0217

0.0215

0.0217

0.0217

0.0219

0.0307

6

S1

S1

S2

S2

S3

S3

S4

S4

S5

S5

S6

S6

X1

00007

−00004

00002

00002

−00001

−00006

00003

00001

−00006

00003

−00004

00003

00007

0.0455

0.0456

0.0459

0.0458

0.0454

0.0456

0.0453

0.0454

0.0452

0.0453

0.0452

0.0454

0.0627

−00001

00000

−00001

−00001

00000

−00003

00000

−00001

00000

−00001

00001

00003

00000

0.0226

0.0223

0.0224

0.0222

0.0224

0.0222

0.0220

0.0227

0.0227

0.0224

0.0226

0.0224

0.0314

& X1

& X2

& X1

& X2

& X1

& X2

& X1

& X2

& X1

& X2

& X1

& X2

& X2

n = 100

7. Application in a Database Context

In this section, we illustrate the data shuffling procedure for a large data set, such as one stored in a data warehouse. The example consists of six variables (three nonconfidential and three confidential). The three nonconfidential variables represent Gender (Male = 0, Female = 1), Marital Status (Married = 0, Other = 1), and Age, an ordinal discrete variable with integer values between 1 and 6 (in increasing order of age). The confidential variables are three continuous variables representing Home Value (Lognormal), Mortgage Balance (Gamma), and Total Net Asset Value (Normal).

A data set with 50,000 observations was created using the procedure suggested by Clemen and Reilly (1999) for generating a multivariate data set with nonnormal marginal distributions.⁴ Data shuffling was performed using only the values of the nonconfidential variables and the rank-order correlations, without any knowledge of the underlying characteristics of the population from which the data were generated. No attempt was made to estimate the characteristics of any of the variables.

Table 5 provides the rank-order correlations between the variables before and after masking. The results clearly show that the shuffling procedure maintains rank-order correlations that are very close to the original values. We also know from the results in the previous section that the bias is likely to be close to zero and the standard error is likely to be very small. Because all rank-order correlations are maintained, analyses performed on these data should yield results that are very similar to those obtained using the original data. Users often issue ad hoc queries to organizational databases, and it would be impossible to envision every type of query that might be issued. However, the marginal characteristics of the variables remain unmodified, and the results of all experiments consistently show that data shuffling maintains the relationships among variables that existed before masking. Hence, we believe that responses to ad hoc queries using the masked data would be similar to those obtained using the original data.
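To put "very close" in numbers, the differences in Table 5 can be tabulated directly. The snippet below only transcribes the published original and shuffled rank-order correlations and does arithmetic on them; it does not rerun the shuffling.

```python
# Rank-order correlations transcribed from Table 5: (original, shuffled).
TABLE5 = {
    ("Home value", "Gender"): (-0.00373, -0.00889),
    ("Home value", "Marital status"): (-0.00187, -0.00025),
    ("Home value", "Age"): (0.57146, 0.58779),
    ("Home value", "Mortgage balance"): (0.58229, 0.58008),
    ("Home value", "Total net value of assets"): (0.68129, 0.67958),
    ("Mortgage balance", "Gender"): (-0.00409, -0.00881),
    ("Mortgage balance", "Marital status"): (-0.00093, 0.00210),
    ("Mortgage balance", "Age"): (0.28334, 0.28591),
    ("Mortgage balance", "Total net value of assets"): (0.78156, 0.78105),
    ("Total net value of assets", "Gender"): (-0.00510, -0.00554),
    ("Total net value of assets", "Marital status"): (0.07426, 0.08377),
    ("Total net value of assets", "Age"): (0.37367, 0.37829),
}

# Shuffled minus original for every pair, the largest absolute change, and the mean absolute change.
diffs = {pair: shuffled - original for pair, (original, shuffled) in TABLE5.items()}
worst = max(diffs, key=lambda pair: abs(diffs[pair]))
mean_abs = sum(abs(d) for d in diffs.values()) / len(diffs)
print(f"largest |difference|: {worst} -> {diffs[worst]:+.5f}")
print(f"mean |difference| over {len(diffs)} pairs: {mean_abs:.5f}")
```

The largest change is for Home value and Age (about 0.016), and the mean absolute change across the 12 pairs is below 0.005.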

8. Limitations and Future Research

The data shuffling approach is limited mainly by two factors that were discussed in detail in §2, namely, the assumption regarding the joint distribution of the variables and the performance of the procedure for small data sets. The results in this study show that when we consider data utility and disclosure risk simultaneously, the data shuffling procedure outperforms existing swapping procedures. In practice, however, the variables may have complex nonmonotonic relationships that data shuffling would not preserve. In addition, given the nature of data shuffling (or, for that matter, of all masking approaches), it will be difficult to implement the procedure on a constantly changing database. Hence, we envision implementing the procedure on historical data sets, such as those stored in data warehouses, rather than on "transactional" databases that involve frequent changes. For transactional databases, alternative approaches such as the confidentiality via camouflage procedure (Gopal et al. 2002) may be considered.

⁴ The entire data set can be found at http://gatton.uky.edu/faculty/muralidhar/MSShuffle.xls.

Table 5

Rank-Order Correlations for Original and Masked Data in Example Database

| Correlation between | | Original | Shuffled |
|---|---|---|---|
| Home value and | Gender | −0.00373 | −0.00889 |
| | Marital status | −0.00187 | −0.00025 |
| | Age | 0.57146 | 0.58779 |
| | Mortgage balance | 0.58229 | 0.58008 |
| | Total net value of assets | 0.68129 | 0.67958 |
| Mortgage balance and | Gender | −0.00409 | −0.00881 |
| | Marital status | −0.00093 | 0.00210 |
| | Age | 0.28334 | 0.28591 |
| | Total net value of assets | 0.78156 | 0.78105 |
| Total net value of assets and | Gender | −0.00510 | −0.00554 |
| | Marital status | 0.07426 | 0.08377 |
| | Age | 0.37367 | 0.37829 |

Another important issue worthy of further investigation is the added variability that results from the data shuffling procedure and its impact on statistical inference using the masked data. Three studies have directly addressed this issue (Rubin 1993, Fienberg et al. 1998a, Burridge 2003). The data shuffling approach proposed in this study could possibly be modified according to the procedures used in those studies.

An interesting related line of research is to formulate the entire data shuffling procedure as a mathematical programming problem. This problem is rather complex, involving a large number of 0–1 variables and nonlinear constraints. More important, this problem is also NP-complete (Reiss et al. 1982). However, for small data sets, the optimization approach may provide sufficient statistics and yield better solutions than the data shuffling procedure. For larger problems, the shuffled data may provide a good initial solution to the optimization problem. The problem also has some unique structural aspects that may benefit from a closer evaluation by optimization experts.

9. Conclusions

In this study, we have developed a new shuffling procedure for masking confidential, numerical data, a procedure that is based on the conditional distribution approach. The advantages of this approach can be summarized as follows:

(1) The released data consist of the original values of the confidential variables (i.e., the marginal distribution is maintained exactly).

(2) All pairwise monotonic relationships among the variables in the released data are the same as those in the original data.

(3) Providing access to the masked microdata does not increase the risk of disclosure.

Existing methods of data swapping do not provide these benefits. In addition, by using the conditional distribution in generating the masked values, data shuffling allows data swapping techniques to perform on par with perturbation techniques. The issue of the added variability and its impact on inferences obtained from the masked data remains unanswered and is worthy of further investigation.

Selecting the appropriate method of masking depends on the users' needs. If the user requires that the original values of the confidential variables not be modified (i.e., that the univariate characteristics of the masked and original data be identical), existing methods fail to provide the level of data utility and the disclosure risk characteristics that are available with perturbation methods. By combining the strengths of the most recent method of data perturbation (copula-based perturbation) and of data swapping (the C&S 2002 method), the data shuffling procedure described in this study fills this gap, providing data utility and disclosure risk comparable to those of perturbation methods.

Finally, as calls for preserving privacy and confidentiality increase, the need for masking techniques grows rapidly. Data swapping techniques appeal intuitively because they do not modify the original values. Data swapping is also easier to explain to the common user than other techniques. Potentially, these characteristics can make data swapping the masking technique of choice. Among data swapping methods, only data shuffling provides the same data utility and disclosure risk as other advanced masking techniques, particularly for large data sets, where the asymptotic requirements are likely to be satisfied. Using data shuffling in these situations would not only provide optimal results but would also foster greater user acceptance of masked data for analysis.

Acknowledgments

The authors thank the reviewers and editors for their valuable comments and suggestions. They also thank Dr. William Winkler of the U.S. Census Bureau for providing them with the record linkage software.


References

Adam, N. R., J. C. Wortmann. 1989. Security-control methods for

statistical databases: A comparative study. ACM Comput. Surveys 21 515–556.

Burridge, J. 2003. Information preserving statistical obfuscation.

Statist. Comput. 13 321–327.

Carlson, M., M. Salabasis. 2002. A data swapping technique for

generating synthetic samples: A method for disclosure control.

Res. Official Statist. 6 35–64.

Clemen, R. T., T. Reilly. 1999. Correlations and copulas for decision

and risk analysis. Management Sci. 45 208–224.

Dalenius, T. 1977. Towards a methodology for statistical disclosure

control. Statistisktidskrift 5 429–444.

Dalenius, T., S. P. Reiss. 1982. Data-swapping: A technique for disclosure control. J. Statist. Planning Inference 6 73–85.

Dandekar, R. A., M. Cohen, N. Kirkendall. 2002. Sensitive microdata protection using Latin hypercube sampling technique.

J. Domingo-Ferrer, ed. Inference Control in Statistical Databases.

Springer-Verlag, New York.

Duncan, G. T., D. Lambert. 1986. Disclosure-limited data dissemination. J. Amer. Statist. Assoc. 81 10–18.

Duncan, G. T., R. W. Pearson. 1991. Enhancing access to microdata while protecting confidentiality: Prospects for the future.

Statist. Sci. 6 219–239.

Duncan, G. T., S. A. Keller-McNulty, S. L. Stokes. 2001. Disclosure risk vs. data utility: The R-U confidentiality map. Technical report LA-UR-01-6428, Los Alamos National Laboratory,

Los Alamos, NM.

Fienberg, S. E. 2002. Comment on paper by Carlson and Salabasis:

“A data swapping technique for generating synthetic samples:

A method for disclosure control.” Res. Official Statist. 6 65–67.

Fienberg, S. E., J. McIntyre. 2005. Data swapping: Variations on a

theme by Dalenius and Reiss. J. Official Statist. 21 309–323.

Fienberg, S. E., U. E. Makov, A. P. Sanil. 1997. A Bayesian approach

to data disclosure: Optimal intruder behavior for continuous

data. J. Official Statist. 13 75–89.

Fienberg, S. E., U. E. Makov, A. P. Steele. 1998a. Disclosure limitation using perturbation and related methods for categorical

data. J. Official Statist. 14 485–502.

Fienberg, S. E., U. E. Makov, A. P. Steele. 1998b. Rejoinder. J. Official

Statist. 14 509–511.

Fuller, W. A. 1993. Masking procedures for microdata disclosure

limitation. J. Official Statist. 9 383–406.

Gopal, R., R. Garfinkel, P. Goes. 2002. Confidentiality via camouflage: The CVC approach to disclosure limitation when answering queries to databases. Oper. Res. 50 501–516.

Kooiman, P. 1998. Comment. J. Official Statist. 14 503–508.

Liew, C. K., U. J. Choi, C. J. Liew. 1985. A data distortion by probability distribution. ACM Trans. Database Systems 10 395–411.

Little, R. J. A. 1993. Statistical analysis of masked data. J. Official Statist. 9 407–426.

Moore, R. A. 1996. Controlled data swapping for masking public use microdata sets. Research report series no. RR96/04, U.S. Census Bureau, Statistical Research Division, Washington, D.C.

Muralidhar, K., R. Sarathy. 2003a. A theoretical basis for perturbation methods. Statist. Comput. 13 329–335.

Muralidhar, K., R. Sarathy. 2003b. A rejoinder to the comments by Polettini and Stander. Statist. Comput. 13 339–342.

Muralidhar, K., R. Parsa, R. Sarathy. 1999. A general additive data perturbation method for database security. Management Sci. 45 1399–1415.

Muralidhar, K., R. Sarathy, R. Parsa. 2001. An improved security requirement for data perturbation with implications for e-commerce. Decision Sci. 32 683–698.

Nelsen, R. B. 1999. An Introduction to Copulas. Springer, New York.

Raghunathan, T. E., J. P. Reiter, D. B. Rubin. 2003. Multiple imputation for statistical disclosure limitation. J. Official Statist. 19 1–6.

Reiss, S. P., M. J. Post, T. Dalenius. 1982. Non-reversible privacy transformations. Proc. ACM Sympos. Principles Database Systems, Los Angeles, CA, 139–146.

Rubin, D. B. 1993. Discussion of statistical disclosure limitation. J. Official Statist. 9 461–468.

Sarathy, R., K. Muralidhar, R. Parsa. 2002. Perturbing non-normal confidential variables: The copula approach. Management Sci. 48 1613–1627.

Wall Street Journal. 2001. Bureau blurs data to keep names confidential. (February 14) B1–B2.

Willenborg, L., T. de Waal. 2001. Elements of Statistical Disclosure Control. Springer, New York.

Winkler, W. E. 1995a. Advanced methods for record linkage. Proc. Survey Res. Methods Section, American Statistical Association, Alexandria, VA.

Winkler, W. E. 1995b. Matching and record linkage. B. G. Cox, ed. Business Survey Methods. John Wiley and Sons, New York, 355–384.

Winkler, W. E. 1998. Producing public-user microdata that are analytically valid and confidential. Statistical research report series no. RR98/02, U.S. Census Bureau, Statistical Research Division, Washington, D.C.