Aircraft Inspection Human Factors Study: Individual Differences

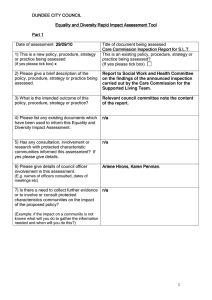

advertisement