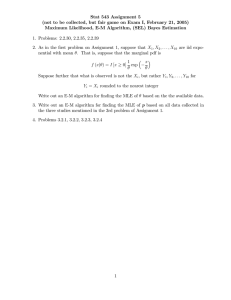

Tsinghua-Berkeley Shenzhen Institute

Learning from Data

Fall 2019

Written Assignment 1

Issued: Sunday 29th September, 2019

Due: Sunday 13th October, 2019

1.1. A data set consists of $m$ data pairs $(x^{(1)}, y^{(1)}), \ldots, (x^{(m)}, y^{(m)})$, where $x \in \mathbb{R}^n$ is the independent variable, and $y \in \{1, \ldots, k\}$ is the dependent variable. The conditional probability $P_{y|x}(y|x)$¹ estimated by the softmax regression is

$$P_{y|x}(l|x) = \frac{\exp(\theta_l^T x + b_l)}{\sum_{j=1}^{k} \exp(\theta_j^T x + b_j)}, \qquad l = 1, \ldots, k,$$

where $\theta_1, \ldots, \theta_k \in \mathbb{R}^n$ and $b_1, \ldots, b_k \in \mathbb{R}$ are the parameters of softmax regression. The term $b_i$ is called a bias term.

The log-likelihood of the softmax regression model is

$$\ell = \sum_{i=1}^{m} \log P_{y|x}(y^{(i)} | x^{(i)}).$$
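The two definitions above can be evaluated numerically. The following is a minimal sketch, not part of the assignment: the function names `softmax_probs` and `log_likelihood` and the toy data are assumptions made for illustration, and subtracting the maximum score is a standard numerical-stability step that leaves the probabilities unchanged.

```python
import math

def softmax_probs(x, thetas, biases):
    """Return [P(l | x) for l = 1..k] for one input vector x."""
    # Scores theta_l^T x + b_l, one per class.
    scores = [sum(t_j * x_j for t_j, x_j in zip(theta, x)) + b
              for theta, b in zip(thetas, biases)]
    # Subtracting the largest score does not change the ratios
    # and avoids overflow in exp().
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def log_likelihood(xs, ys, thetas, biases):
    """Sum over samples of log P(y_i | x_i); labels take values in 1..k."""
    return sum(math.log(softmax_probs(x, thetas, biases)[y - 1])
               for x, y in zip(xs, ys))

# Hypothetical toy data: n = 2 features, k = 3 classes, m = 3 samples.
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ys = [1, 2, 3]
thetas = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
biases = [0.0, 0.0, 0.0]

print(softmax_probs(xs[0], thetas, biases))
print(log_likelihood(xs, ys, thetas, biases))
```

With all parameters set to zero every class is equally likely, which makes the output easy to check by hand.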

(a) Evaluate $\dfrac{\partial \ell}{\partial b_l}$.

The data set can be described by its empirical distribution $\hat{P}_{x,y}(x, y)$ defined as

$$\hat{P}_{x,y}(x, y) = \frac{1}{m} \sum_{i=1}^{m} \mathbb{1}\{x^{(i)} = x,\ y^{(i)} = y\},$$

where $\mathbb{1}\{\cdot\}$ is the indicator function. Similarly, the empirical marginal distributions of this data set are

$$\hat{P}_{x}(x) = \frac{1}{m} \sum_{i=1}^{m} \mathbb{1}\{x^{(i)} = x\}, \qquad \hat{P}_{y}(y) = \frac{1}{m} \sum_{i=1}^{m} \mathbb{1}\{y^{(i)} = y\}.$$
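As an illustration of these definitions, the empirical distributions are just normalized counts. The sketch below assumes the samples are stored as hashable tuples; the helper names `empirical_joint` and `empirical_marginal` and the data are hypothetical.

```python
from collections import Counter

def empirical_joint(xs, ys):
    """Map each observed pair (x, y) to its relative frequency."""
    m = len(xs)
    counts = Counter((tuple(x), y) for x, y in zip(xs, ys))
    return {pair: c / m for pair, c in counts.items()}

def empirical_marginal(values):
    """Map each observed value to its relative frequency."""
    m = len(values)
    counts = Counter(values)
    return {v: c / m for v, c in counts.items()}

# Hypothetical data set with m = 4 samples.
xs = [(0.0, 1.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
ys = [1, 2, 1, 1]

p_xy = empirical_joint(xs, ys)
p_x = empirical_marginal(xs)
p_y = empirical_marginal(ys)
print(p_xy)
print(p_x)
print(p_y)
```

Summing the joint frequencies over all observed $x$ for a fixed label recovers the marginal frequency of that label.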

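(b) Suppose we have set the biases $(b_1, \ldots, b_k)$ to their optimal values. Prove that

$$\hat{P}_{y}(l) = \sum_{x \in \mathcal{X}} P_{y|x}(l|x)\, \hat{P}_{x}(x),$$

where $\mathcal{X} = \{x^{(i)} : i = 1, \ldots, m\}$ is the set of all samples of $x$.

Hint: The optimality implies

$$\frac{\partial \ell}{\partial b_1} = \frac{\partial \ell}{\partial b_2} = \cdots = \frac{\partial \ell}{\partial b_k} = 0.$$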

1.2. We have mentioned maximum likelihood estimation (MLE) in class.

(a) Now let’s do it on a very simple case.

¹ The notation $P_{y|x}(y|x)$ stands for $P(\mathsf{y} = y \mid \mathsf{x} = x)$, i.e., the conditional probability of $\mathsf{y} = y$ given $\mathsf{x} = x$.


Suppose we have $n$ samples $(x_1, x_2, \ldots, x_n)$ independently drawn from a normal distribution with KNOWN variance $\sigma^2$ and UNKNOWN mean $\mu$:

$$P(x_i | \mu) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x_i - \mu)^2}{2\sigma^2}\right).$$

Please find the MLE for $\mu$.

(b) Finding a satisfactory loss function is always a challenge, and MLE is not always the best choice. Put simply, MLE maximizes the probability of the observed events under the parameter you want to estimate. But the $n$ samples are already known, so why should we treat them as the events to be estimated rather than as known conditions? Based on Bayes' rule, we can derive another method called Maximum A Posteriori (MAP) estimation:

$$P(\mu | x_1, x_2, \ldots, x_n) = \frac{P(x_1, x_2, \ldots, x_n | \mu)\, P(\mu)}{P(x_1, x_2, \ldots, x_n)}.$$

MAP requires an assumption on the prior $P(\mu)$. If $\mu$ is purely random, i.e., the prior is uniform, MLE and MAP give the same estimate. Here, suppose

$$P(\mu) = \frac{1}{\sqrt{2\pi\theta^2}} \exp\left(-\frac{(\mu - \nu)^2}{2\theta^2}\right).$$

Do the calculation to find the MAP estimator for $\mu$. Also, compare the MLE and MAP estimators when $n$ is very large.
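One way to sanity-check a closed-form answer is to maximize the two objectives numerically. The sketch below is an assumption-laden illustration, not the intended derivation: it evaluates the log-likelihood and the unnormalized log-posterior (the evidence $P(x_1, \ldots, x_n)$ does not depend on $\mu$) on a grid, and the sample values, grid bounds, and function names are all hypothetical.

```python
import math

def log_gaussian(z, mean, std):
    """Log density of a normal distribution with the given mean and std."""
    return (-0.5 * math.log(2.0 * math.pi * std ** 2)
            - (z - mean) ** 2 / (2.0 * std ** 2))

def gaussian_log_likelihood(mu, xs, sigma):
    """log P(x_1, ..., x_n | mu) for independent samples."""
    return sum(log_gaussian(x, mu, sigma) for x in xs)

def log_posterior_unnormalized(mu, xs, sigma, nu, theta):
    """log P(x | mu) + log P(mu); the evidence term is constant in mu."""
    return gaussian_log_likelihood(mu, xs, sigma) + log_gaussian(mu, nu, theta)

def grid_argmax(f, lo, hi, steps=2001):
    """Return the grid point in [lo, hi] with the largest value of f."""
    grid = [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
    return max(grid, key=f)

# Hypothetical samples and hyperparameters.
xs = [2.1, 1.9, 2.4, 2.0]
sigma, nu, theta = 1.0, 0.0, 1.0

mu_mle = grid_argmax(lambda mu: gaussian_log_likelihood(mu, xs, sigma), -5.0, 5.0)
mu_map = grid_argmax(
    lambda mu: log_posterior_unnormalized(mu, xs, sigma, nu, theta), -5.0, 5.0)
print(mu_mle, mu_map)
```

Repeating the experiment with more samples gives a quick empirical view of how the two estimates relate as $n$ grows.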

1.3. We want to talk about multivariate least squares in this problem.

A data set consists of $m$ data pairs $(x^{(1)}, y^{(1)}), \ldots, (x^{(m)}, y^{(m)})$, where $x \in \mathbb{R}^n$ is the independent variable, and $y \in \mathbb{R}^l$ is the dependent variable. Denote the design matrix by $X \triangleq [x^{(1)}, \ldots, x^{(m)}]^T$, and let $Y \triangleq [y^{(1)}, \cdots, y^{(m)}]^T$. Please write down the square loss $J(\Theta)$, where $\Theta \in \mathbb{R}^{n \times l}$ is the parameter matrix you want to get, and then compute the solution.

Hint: Hopefully you can write down the square loss without confusion. Just in case, we will write it as

$$J(\Theta) = \frac{1}{2} \sum_{i=1}^{m} \sum_{j=1}^{l} \left( (\Theta^T x^{(i)})_j - y_j^{(i)} \right)^2$$
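To make the indexing in this double sum concrete, here is a minimal sketch that evaluates $J(\Theta)$ directly from the formula. The function name `square_loss` and the toy matrices are assumptions for illustration; matrices are stored as lists of rows, so `X` has $m$ rows of length $n$, `Y` has $m$ rows of length $l$, and `Theta` has $n$ rows of length $l$.

```python
def square_loss(X, Y, Theta):
    """J(Theta) = 1/2 * sum_i sum_j ((Theta^T x_i)_j - y_ij)^2."""
    total = 0.0
    for x, y in zip(X, Y):            # loop over the m samples
        for j in range(len(y)):       # loop over the l output coordinates
            # j-th coordinate of Theta^T x: sum_r Theta[r][j] * x[r]
            pred_j = sum(Theta[r][j] * x[r] for r in range(len(x)))
            total += (pred_j - y[j]) ** 2
    return 0.5 * total

# Hypothetical example with m = 3, n = 2, l = 1.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Y = [[1.0], [2.0], [4.0]]
Theta = [[1.0], [2.0]]
print(square_loss(X, Y, Theta))  # prints 0.5
```

Here the predictions are 1, 2, and 3, so only the last sample contributes a residual of $-1$, giving a loss of $0.5$.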

You can do the following things.

1.4. The multivariate normal distribution can be written as

$$P_y(y; \mu, \Sigma) = \frac{1}{(2\pi)^{\frac{n}{2}} |\Sigma|^{\frac{1}{2}}} \exp\left( -\frac{1}{2} (y - \mu)^T \Sigma^{-1} (y - \mu) \right),$$

where $\mu$ and $\Sigma$ are the parameters. Show that the family of multivariate normal distributions is an exponential family, and find the corresponding $\eta$, $b(y)$, $T(y)$, and $a(\eta)$.

Hints: The parameters η and T (y) are not limited to be vectors, but can also be

matrices. In this case, the Frobenius inner product can be used to define the inner

product between two matrices, which is represented as the trace of their products. The

properties of matrix trace might be useful.
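The trace representation mentioned in the hints can be checked numerically. This is a small sketch under stated assumptions: the helper names and the example matrices are hypothetical, and the second check uses a symmetric matrix `M` to illustrate that a quadratic form $y^T M y$ equals the Frobenius inner product of $M$ with $y y^T$.

```python
def transpose(A):
    return [list(row) for row in zip(*A)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def trace(A):
    return sum(A[i][i] for i in range(len(A)))

def frobenius_inner(A, B):
    """Sum of elementwise products of two matrices of equal shape."""
    return sum(a * b for row_a, row_b in zip(A, B)
               for a, b in zip(row_a, row_b))

def outer(u, v):
    return [[ui * vj for vj in v] for ui in u]

# <A, B>_F = tr(A^T B)
A = [[1.0, 2.0], [3.0, 4.0]]
B = [[0.5, -1.0], [2.0, 0.0]]
print(frobenius_inner(A, B), trace(matmul(transpose(A), B)))  # prints 4.5 4.5

# y^T M y = <M, y y^T>_F for a symmetric M
M = [[2.0, 1.0], [1.0, 3.0]]
y = [1.0, 2.0]
quad = sum(y[i] * M[i][j] * y[j] for i in range(2) for j in range(2))
print(quad, frobenius_inner(M, outer(y, y)))  # prints 18.0 18.0
```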