Uploaded by

Dominique Fablauty

Error Detection & Correction for Digital Data Lesson Notes

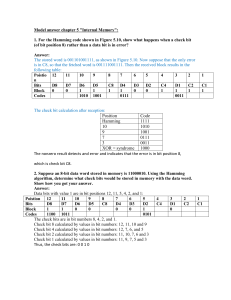

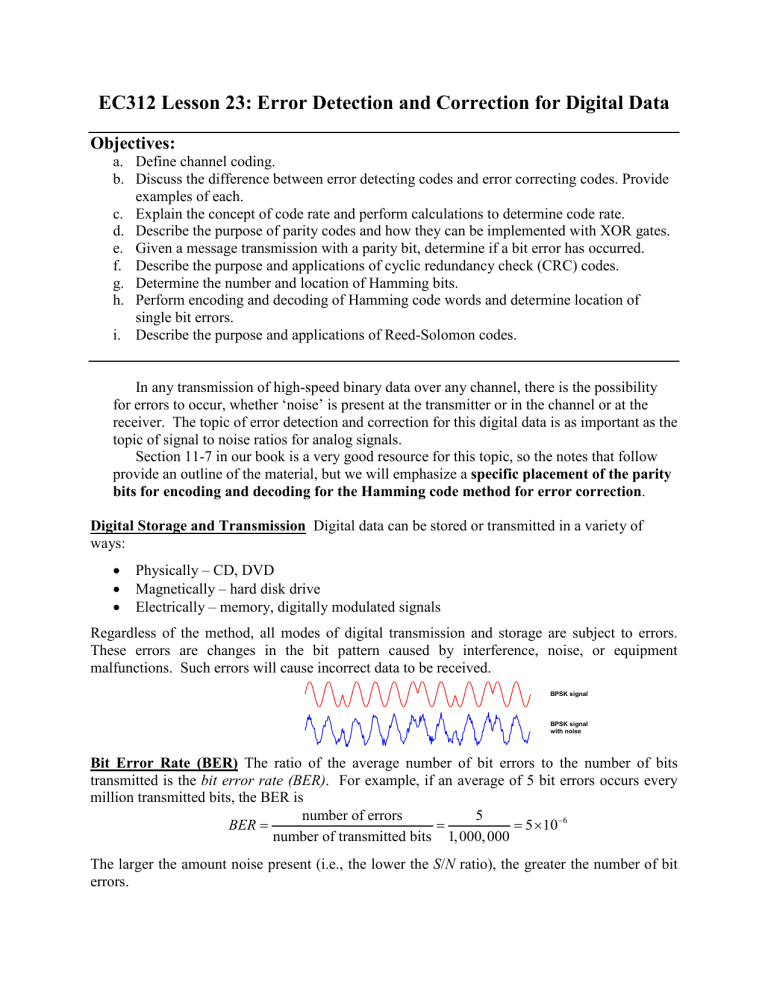

EC312 Lesson 23: Error Detection and Correction for Digital Data

Objectives:

a. Define channel coding.
b. Discuss the difference between error detecting codes and error correcting codes. Provide examples of each.
c. Explain the concept of code rate and perform calculations to determine code rate.
d. Describe the purpose of parity codes and how they can be implemented with XOR gates.
e. Given a message transmission with a parity bit, determine if a bit error has occurred.
f. Describe the purpose and applications of cyclic redundancy check (CRC) codes.
g. Determine the number and location of Hamming bits.
h. Perform encoding and decoding of Hamming code words and determine the location of single bit errors.
i. Describe the purpose and applications of Reed-Solomon codes.

In any transmission of high-speed binary data over any channel, errors can occur, whether the noise is introduced at the transmitter, in the channel, or at the receiver. Error detection and correction is as important for digital data as signal-to-noise ratio is for analog signals. Section 11-7 in our book is a very good resource for this topic, so the notes that follow only outline the material. We will, however, emphasize one specific placement of the parity bits when encoding and decoding with the Hamming code method for error correction.

Digital Storage and Transmission

Digital data can be stored or transmitted in a variety of ways:

• Physically – CD, DVD
• Magnetically – hard disk drive
• Electrically – memory, digitally modulated signals

Regardless of the method, all modes of digital transmission and storage are subject to errors. These errors are changes in the bit pattern caused by interference, noise, or equipment malfunctions. Such errors cause incorrect data to be received.

[Figure: BPSK signal, and the same BPSK signal with noise]

Bit Error Rate (BER)

The bit error rate (BER) is the ratio of the average number of bit errors to the number of bits transmitted.
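
The BER definition can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `bit_error_rate` and the two short bit strings are made up for the example and do not come from the book.

```python
def bit_error_rate(sent, received):
    """Fraction of bit positions in which two equal-length bit strings differ."""
    if len(sent) != len(received):
        raise ValueError("bit strings must have the same length")
    if not sent:
        raise ValueError("bit strings must not be empty")
    # Count the positions where the received bit differs from the sent bit.
    errors = sum(1 for s, r in zip(sent, received) if s != r)
    return errors / len(sent)


sent = "1011001110"
received = "1011011110"  # hypothetical reception with one flipped bit
print(bit_error_rate(sent, received))  # 0.1
```

A real BER measurement averages over a far longer bit stream than ten bits; the short strings are used only so the count can be checked by eye.
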
For example, if an average of 5 bit errors occurs in every million transmitted bits, the BER is

$$\text{BER} = \frac{\text{number of errors}}{\text{number of transmitted bits}} = \frac{5}{1{,}000{,}000} = 5 \times 10^{-6}$$

The larger the amount of noise present (i.e., the lower the S/N ratio), the greater the number of bit errors.

What is the effect of a single bit error in a binary number (e.g., your checking account balance), in a character's ASCII representation (say, in a text file), in a digital file that contains a speech recording, or in executable computer code? Sometimes it is enough to know that an error has occurred, but most of the time we would also like to correct it.

Error detection/correction

Error detection codes can only confirm that one or more bit errors have occurred; they cannot tell you which bit is in error.

• Parity codes
• Cyclic Redundancy Check (CRC)

Error correcting codes can correct bit errors without requiring a retransmission.

• Longitudinal Redundancy Check (LRC)
• Hamming codes
• Reed-Solomon codes

Channel Coding

To ensure reliable communications, techniques have been developed that allow bit errors to be detected and corrected. The process of error detection and correction involves adding extra redundant bits to the data to be transmitted. This process is generally referred to as channel coding.

Channel coding methods fall into two separate categories:

• Error detection codes can only confirm that one or more bit errors have occurred; they cannot tell you which bit is in error. To fix the error, the receiver must request a retransmission.
• Error correcting codes, or forward error correction (FEC) codes, can detect some bit errors and fix them without requiring a retransmission.

Block Codes

In block codes, the encoder takes in m information (message) bits and produces a codeword of length m+n. Since m+n > m, the quantity n represents the number of extra bits (redundancy) added.
This is referred to as an (m+n, m) linear code.

Example: Linear block code (7,4) with m = 4 and m+n = 7

| Message | Code word |
|---|---|
| (0 0 0 0) | (0 0 0 0 0 0 0) |
| (0 0 0 1) | (1 0 1 0 0 0 1) |
| (0 0 1 0) | (1 1 1 0 0 1 0) |
| (0 0 1 1) | (0 1 0 0 0 1 1) |
| (0 1 0 0) | (0 1 1 0 1 0 0) |
| (0 1 0 1) | (1 1 0 0 1 0 1) |
| (0 1 1 0) | (1 0 0 0 1 1 0) |
| (0 1 1 1) | (0 0 1 0 1 1 1) |
| (1 0 0 0) | (1 1 0 1 0 0 0) |
| (1 0 0 1) | (0 1 1 1 0 0 1) |
| (1 0 1 0) | (0 0 1 1 0 1 0) |
| (1 0 1 1) | (1 0 0 1 0 1 1) |
| (1 1 0 0) | (1 0 1 1 1 0 0) |
| (1 1 0 1) | (0 0 0 1 1 0 1) |
| (1 1 1 0) | (0 1 0 1 1 1 0) |
| (1 1 1 1) | (1 1 1 1 1 1 1) |

Because the code is linear, the code word for (1 0 0 1) is the bitwise XOR of the code words for (1 0 0 0) and (0 0 0 1), which is (0 1 1 1 0 0 1).

A key point about channel coding is that increased reliability comes at a cost. The extra n bits added by encoding result in:

• Larger file sizes for storage.
• Higher required transmission data rates.

This cost is represented by the code rate. The code rate Rc is the ratio of the number of information bits m to the number of bits in the codeword m+n:

$$R_c = \frac{m}{m+n}$$

Parity codes

The simplest kind of error-detection code is the parity code. To construct an even-parity code, add a parity bit such that the total number of 1's is even. 1-bit parity codes can detect single bit errors, but they do not detect 2-bit errors (or any other even number of bit errors). The parity bit can be generated using several XOR gates.

Cyclic Redundancy Check

A common, powerful method of error detection is the cyclic redundancy check (CRC). Cyclic codes are widely used because they can be implemented at high speeds using simple components (XOR gates and shift registers). 16- and 32-bit CRCs are widely used. The CRC technique can effectively detect 99.9% of transmission errors.

The concept of a cyclic redundancy check is this: the entire string of bits in a block of data is treated as one giant binary number, which is divided by some pre-selected constant. This division results in a quotient and a remainder. The transmitter sends its remainder along with the data bit stream. The receiver performs the same division on the received data and compares its own remainder with the transmitted one. If there is a difference, an error has occurred.
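
The divide-and-compare idea can be sketched in Python as follows. This is a minimal illustration, not a production CRC: it assumes the division is carried out with modulo-2 (XOR) arithmetic, which is what makes the XOR-gate and shift-register implementation possible, and it uses a toy 4-bit divisor `1011` chosen only for readability rather than one of the standard 16- or 32-bit constants. The function names are hypothetical.

```python
def crc_remainder(data_bits, generator_bits):
    """Remainder of modulo-2 long division of the data (with r zero bits
    appended) by the generator, where r = len(generator_bits) - 1."""
    r = len(generator_bits) - 1
    work = [int(b) for b in data_bits] + [0] * r
    gen = [int(b) for b in generator_bits]
    for i in range(len(data_bits)):
        if work[i] == 1:
            # "Subtract" the divisor at this alignment; in modulo-2 this is XOR.
            for j, g in enumerate(gen):
                work[i + j] ^= g
    return "".join(str(b) for b in work[-r:])


def frame_is_valid(frame_bits, generator_bits):
    """Receiver side: recompute the remainder and compare it with the one sent."""
    r = len(generator_bits) - 1
    data, sent_remainder = frame_bits[:-r], frame_bits[-r:]
    return crc_remainder(data, generator_bits) == sent_remainder


GENERATOR = "1011"                      # toy divisor, assumed for illustration
data = "11010011101100"
frame = data + crc_remainder(data, GENERATOR)
print(frame_is_valid(frame, GENERATOR))           # True

corrupted = frame[:4] + ("1" if frame[4] == "0" else "0") + frame[5:]
print(frame_is_valid(corrupted, GENERATOR))       # False
```

The check reports only that the remainders disagree; nothing in it identifies which bit was flipped.
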
Note again that the CRC is an error detection scheme. While the CRC will show that errors have occurred, it cannot determine which particular bits are in error. CRC codes are used in Internet packets.

Example Problem 1

Consider the (7,4) linear code tabulated in the Block Codes section above.

a. What is the code rate?
b. If a message consists of 128 bits, how many bits will be produced by the encoder?
c. Of the bits produced by the encoder, what percentage are added for redundancy?

Example Problem 2

The serial port on a computer is set to transmit a file using 8 data bits followed by 1 parity bit. The port is set for even parity. Another computer receives the following 9-bit strings. Which are valid and which contain an error?

a. 001011010
b. 111111111
c. 101010100

Hamming codes

Hamming codes are a popular example of an error correcting linear block code. In 1950, the mathematician Richard W. Hamming published a general method for constructing error-correcting codes with a minimum distance of 3.

• The Hamming code is capable of correcting only one bit error.

Hamming codes are commonly used in computer memory systems.

A Hamming code word consists of m message bits and n Hamming bits.

[Figure: an (m+n)-bit codeword made up of n Hamming bits h1, h2, h3, …, hn and m message bits d1, d2, d3, …, dm]

The required number of Hamming bits (n) is the smallest value of n ≥ 3 that satisfies the following expression:

$$2^n \ge m + n + 1$$

The Hamming bits should not be grouped together. They are parity bits, which cover groups of bits throughout the message.
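
The inequality for the number of Hamming bits is easy to evaluate by trial. The sketch below is only an illustration; the function name `hamming_bits_needed` is made up for this example, and the loop simply starts at n = 3 as the rule above requires.

```python
def hamming_bits_needed(m):
    """Smallest n >= 3 that satisfies 2**n >= m + n + 1 for m message bits."""
    n = 3
    while 2 ** n < m + n + 1:
        n += 1
    return n


for m in (4, 8, 12):
    n = hamming_bits_needed(m)
    # Code rate Rc = m / (m + n)
    print(f"m = {m}: n = {n}, codeword length = {m + n}, Rc = {m / (m + n):.3f}")
```

For m = 4 this gives n = 3, which matches the (7,4) block code used earlier in these notes.
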
The Hamming bits can be placed in different ways. The book has chosen one way, but we will stick with the specific placement described here.

Consider the following arrangement, in which the Hamming bits are located at bit positions that are integer powers of 2. (Start with 2^0 = 1, so h1 is placed at bit position 1; 2^1 = 2, so h2 is placed at bit position 2; 2^2 = 4, so h3 is placed at bit position 4; and so on.) For a 7-bit message, the 4 Hamming bits are placed in positions 1, 2, 4, and 8.

| Bit position | 11 | 10 | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Bit | d7 | d6 | d5 | h4 | d4 | d3 | d2 | h3 | d1 | h2 | h1 |

Hamming encoding

We now need to determine the value of the Hamming bits for a given binary message. Consider transmitting the 7-bit ASCII code corresponding to the letter "K":

"K" = 1 0 0 1 0 1 1

| Bit position | 11 | 10 | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Bit | 1 | 0 | 0 | h4 | 1 | 0 | 1 | h3 | 1 | h2 | h1 |

The following process is used to compute the Hamming bits:

• Express all the bit positions that contain a value of "1" as binary numbers.
  o In the case above, positions 11, 7, 5, and 3.
• XOR these binary numbers together.
• The resulting four-bit binary number gives the values of the Hamming bits.

For the example above (positions 11, 7, 5, 3):

| Bit position | Binary |
|---|---|
| 11 | 1011 |
| 7 | 0111 |
| XOR | 1100 |
| 5 | 0101 |
| XOR | 1001 |
| 3 | 0011 |
| XOR | 1010 |

The result, 1010, is the 4 bits of Hamming code. These bits are interleaved with the data in the specific positions we have chosen: the MSB is h4 and the LSB is h1, so h4 = 1, h3 = 0, h2 = 1, and h1 = 0. The transmitted codeword is therefore 1 0 0 1 1 0 1 0 1 1 0 (bit positions 11 down to 1).

Hamming decoding

Decoding follows a similar process:

• Express all the bit positions in the received codeword that contain a value of "1" as binary numbers (not including the Hamming bits).
  o In the case above, positions 11, 7, 5, and 3.
• XOR these binary numbers together with the 4-bit string formed by the Hamming bits (in this case 1010).
• If no error is present, the result will be all zeros.

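
The encoding and decoding steps above can be sketched in Python. This is a minimal illustration of the placement used in these notes (Hamming bits at positions 1, 2, 4, 8, …), not the book's implementation, and the function names are hypothetical. One shortcut is assumed: because each Hamming bit sits at a power-of-2 position, XORing the 4-bit Hamming string into the result is the same as XORing in the positions of the Hamming bits that are 1, so the decoder simply XORs the positions of every 1 in the codeword.

```python
from functools import reduce
from operator import xor


def is_power_of_two(p):
    return p & (p - 1) == 0


def hamming_encode(message):
    """Encode a bit string written d_m ... d_1 (left to right).
    Returns the codeword written from the highest bit position down to 1."""
    data = [int(b) for b in reversed(message)]   # data[0] is d1
    code = {}                                    # bit position -> bit value
    pos = 1
    for bit in data:
        while is_power_of_two(pos):              # 1, 2, 4, 8, ... are reserved
            pos += 1
        code[pos] = bit
        pos += 1
    total = pos - 1
    # XOR together the positions of all data bits equal to 1.
    check = reduce(xor, (p for p, bit in code.items() if bit), 0)
    k = 0
    while (1 << k) <= total:
        code[1 << k] = (check >> k) & 1          # h_(k+1) sits at position 2**k
        k += 1
    return "".join(str(code[p]) for p in range(total, 0, -1))


def hamming_syndrome(codeword):
    """XOR of the positions of every 1 bit; 0 means no error was detected."""
    total = len(codeword)
    ones = (total - i for i, b in enumerate(codeword) if b == "1")
    return reduce(xor, ones, 0)


def hamming_correct(codeword):
    """Flip the single bit named by the syndrome, if the syndrome is nonzero."""
    s = hamming_syndrome(codeword)
    if s == 0 or s > len(codeword):
        return codeword
    i = len(codeword) - s
    flipped = "1" if codeword[i] == "0" else "0"
    return codeword[:i] + flipped + codeword[i + 1:]


codeword = hamming_encode("1001011")     # 7-bit ASCII "K"
print(codeword)                          # 10011010110
print(hamming_syndrome(codeword))        # 0
print(hamming_syndrome("10010010110"))   # 7 (bit position 7 was flipped)
```

Only a single bit error is handled; with two or more errors the syndrome points at the wrong position.
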
Hamming decoding (no errors)

Using the previous example, the received codeword is 1 0 0 1 1 0 1 0 1 1 0, with a "1" in data positions 11, 7, 5, and 3 and Hamming bits 1010:

| Bit position | Binary |
|---|---|
| 11 | 1011 |
| 7 | 0111 |
| XOR | 1100 |
| 5 | 0101 |
| XOR | 1001 |
| 3 | 0011 |
| XOR | 1010 |
| Hamming bits | 1010 |
| XOR | 0000 |

Hamming decoding (with errors)

Let's consider the case in which a single bit error occurs. Say an error occurs in bit position 7, so that the codeword 1 0 0 1 0 0 1 0 1 1 0 is received. Apply the Hamming decoding process. A "1" is present in data bit positions 11, 5, and 3, and the Hamming bits are 1010.

| Bit position | Binary |
|---|---|
| 11 | 1011 |
| 5 | 0101 |
| 3 | 0011 |
| Hamming bits | 1010 |
| XOR of all rows | 0111 |

The resulting 4-bit number reveals the bit position of the error (0111 => bit position 7).

In the previous examples using an (11,7) Hamming code, the code rate is Rc = 7/11 ≈ 0.64 and the minimum Hamming distance between code words is 3.

• With a minimum distance of 3, this code can correct all single bit errors.

Reed-Solomon codes

Reed-Solomon codes were introduced in 1960 to help correct more errors. Like Hamming codes, they are forward error correcting codes. However, Reed-Solomon codes add parity to blocks of data, which lets them correct multiple errors, including bursts of errors.

A very popular Reed-Solomon code is RS(255,223). Each 255-byte block contains 223 data bytes and 32 parity bytes, and the code can correct up to 16 byte errors per block.

Uses include CDs, DVDs, barcodes, DSL modems, cellular phones, satellite communications, and digital television.

Example Problem 3

It is desired to use a Hamming code to encode a 7-bit ASCII character. How many Hamming bits are required? What is the resulting code rate Rc?

[Figure: an (n+7)-bit codeword made up of n Hamming bits h1, h2, …, hn and 7 message bits d1 through d7]

Example Problem 4

Use the previous Hamming encoding process to encode the 7-bit ASCII code corresponding to the letter "X".

| Bit position | 11 | 10 | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Bit | d7 | d6 | d5 | h4 | d4 | d3 | d2 | h3 | d1 | h2 | h1 |

Example Problem 5

The following codeword is received. Use the Hamming decoding process to determine whether an error exists. If an error exists, determine which bit is in error.