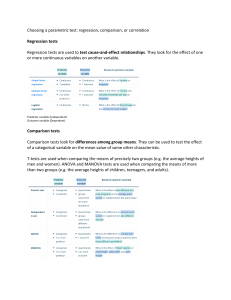

Correlation and Simple Linear Regression Analysis Lecture Number 12 March 7, 2017 Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 1 / 48 Outline of Lecture 12 1 Introduction 2 Scatter Plots for Two Quantitative Variables 3 Correlation Analysis 4 Calculating the Sample Correlation Coefficient r 5 Assumptions of Correlation Analysis 6 Simple Linear Regression Analysis 7 ANOVA for Simple Linear Regression Analysis 8 Inferences Concerning β1 , the Slope of the Line 9 Measuring the Strength of the Relationship: Coefficient of Determination R 2 Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 2 / 48 Introduction Biologists, and researchers in general, commonly record or observe more than one variable from each sampling or experimental unit. For example, a forester may measure tree height and dbh on each tree, an aquaculturist may measure the length and weight of each fish in an aquarium, a physiologist may record blood pressure and body weight from experimental animals, or an ecologist may record the abundance of a particular species of shrub and soil pH from a series of plots during vegetation sampling. When two variables are measured on a single experimental unit, the resulting data are called bivariate data and multivariate when we have more than two random variables recorded from each unit. The focus for this lecture will be bivariate quantitative data. Note that: ”Bi” means ”two”; and thus bivariate data generates pairs of measurements or observations. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 3 / 48 Introduction...cont’d With such kind of data (bivariate), we may ask the following questions: (1) (2) (3) (4) Are the two variables related? If so, what is the strength of the relationship? What type of relationship exists? What kind of predictions can be made from the relationship? The first two questions can be answered by carrying out a statistical technique called Correlation Analysis while the last two can be answered using Regression Analysis. Correlation is a statistical method used to determine whether a relationship between variables exists and the strength of the relationship. Regression is a statistical method used to describe the nature of the relationship between variables, that is, positive or negative, linear or nonlinear. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 4 / 48 Introduction...cont’d The relationship between variables may be simple or multiple. In a simple relationship, there are two variables - an independent variable, also called an explanatory variable or a predictor variable, and a dependent variable, also called a response variable. Simple relationships can also be positive or negative. A positive relationship exists when both variables increase or decrease at the same time. In a negative relationship , as one variable increases, the other variable decreases, and vice versa. Thus, in simple correlation and regression studies, the researcher collects data on two numerical or quantitative variables to see whether a relationship exists between the variables. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 5 / 48 Scatter Plots for Two Quantitative Variables Consider a random sample of n observations of the form (x1 , y1 ), (x2 , y2 ), ..., (xn , yn ), where x is the independent variable and y is the dependent variable, both being scalars. A preliminary descriptive technique for determining the form of relationship between x and y is the scatter diagram or scatter plot. Each pair of data values is plotted as a point on this two-dimensional graph (so that the graph takes the form of a plot on the (x, y ) axes), called a scatter plot. Scatter Plot A scatter plot is a graph of the ordered pairs (x, y ) of numbers or values consisting of the independent variable x and the dependent variable y . A scatter plot is the two dimensional extension of the dotplot we use to graph one quantitative variable. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 6 / 48 Scatter Plots for Two Quantitative Variables After the scatter plot is drawn, it should be analyzed to determine which type of relationship, if any, exists. You can describe the relationship between two variables, x and y , using the patterns shown in the scatterplot: (i) What type of pattern do you see?Is there a constant upward or downward trend that follows a straight-line pattern? Is there a curved pattern? Is there no pattern at all, but just a random scattering of points? (ii) How strong is the pattern? Do all of the points follow the pattern exactly, or is the relationship only weakly visible? (iii) Are there any unusual observations (outliers)? An outlier is a point that is far from the cluster of the remaining points. Do the points cluster into groups? If so, is there an explanation for the observed groupings? The next slide shows some examples of scatter plots and the pattern of relationship suggested by the data. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 7 / 48 Scatter Plots for Two Quantitative Variables Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 8 / 48 Correlation Analysis As earlier stated, correlation is used to determine if a relationship exists between two quantitative variables. A numerical measure used to determine whether two or more variables are related and to determine the strength of the relationship between or among the variables is called a correlation coefficient. There are several types of correlation coefficients but our focus will be on the Pearson product moment correlation coefficient (PPMC). The correlation coefficient computed from the sample data measures the strength and direction of a linear relationship between two variables. The symbol for the sample correlation coefficient is r while the symbol for the population correlation coefficient is ρ (Greek letter rho). The range of the correlation coefficient is from −1 to +1. If there is a strong positive linear relationship between the variables , the value of r will be close to +1. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 9 / 48 Correlation Analysis If there is a strong negative linear relationship between the variables , the value of r will be close to −1. When there is no linear relationship between the variables or only a weak relationship , the value of r will be close to 0. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 10 / 48 Calculating the Sample Correlation Coeffienct r Let (x1 , y1 ), (x2 , y2 ), ..., (xn , yn ) be a random sample (of size n) from a bivariate normal distribution. The unbiased estimator of ρ is the sample correlation coefficient defined by ρ̂ or r , Pn (xi − x̄)(yi − ȳ ) sxy = r = pPn i=1 P n 2 2 sx sy i=1 (xi − x̄) i=1 (yi − ȳ ) The quantities in the denominator, i.e. sx and sy are the standard deviations for the variables x and y , respectively, which can be found by using the statistics function on your calculator or the computing formulas earlier discussed. The new quantity, in the numerator, sxy is called the covariance between x and y and is defined as: P P Pn Pn ( ni=1 xi )( ni=1 yi ) x y − (x − x̄)(y − ȳ ) i i i i i=1 n sxy = i=1 = n−1 n−1 Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 11 / 48 Calculating the Sample Correlation Coeffienct r When r > 0, values of y increase as the values of x increase, and the data set (for the two variables) is said to be positively correlated. When r < 0, values of y decrease as the values of x increase, and the data set is said to be negatively correlated Example (1) Construct a scatter plot for the data obtained in a study on the number of absences and the final grades of seven randomly selected students from a Biometry class. (2) Compute the correlation coefficient for the data. The data are shown on the next slide. Note that r can easily be computed using the formula: P P P n ni=1 xi yi − ( ni=1 xi )( ni=1 yi ) r = q P P P P n ni=1 xi2 − ( ni=1 xi )2 n ni=1 yi2 − ( ni=1 yi )2 Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 12 / 48 Calculating the Sample Correlation Coeffienct r Student Number of absences x Final grade y (%) A B C D E F G 6 2 15 9 12 5 8 82 86 43 74 58 90 78 For the scatter plot, (1) Draw and label the x and y axes using an appropriate scale, e.g. for % you could use a 10% interval from 30% to 100%; while for number of absences, from 0 to 15, with a 1 unit interval. (2) Plot each point on the graph, Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 13 / 48 Calculating the Sample Correlation Coeffienct r Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 14 / 48 Calculating the Sample Correlation Coeffienct r For computations of r , proceed as follows: (1) Make a table comprising of at least 5 columns; for x, y , xy , x 2 and y 2 . (2) Compute the sum of values for x, y , xy , x 2 and y 2 and place these values in the corresponding columns of the table. Student x y xy x2 y2 A B C D E F G P 6 2 15 9 12 5 8 82 86 43 74 58 90 78 492 172 645 666 696 450 624 36 4 225 81 144 25 64 6,724 7,396 1,849 5,476 3,364 8,100 6,084 x = 57 y = 511 xy = 3, 745 x 2 = 579 y 2 = 38, 993 (3) Substitute in the formula and solve for r . Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 15 / 48 Calculating the Sample Correlation Coeffienct r r is calculated as: Pn P P xi yi − ( ni=1 xi )( ni=1 yi ) i=1 q P P P P n ni=1 xi2 − ( ni=1 xi )2 n ni=1 yi2 − ( ni=1 yi )2 n =p 7(3745) − (57)(511) [(7)(579) − (57)2 ] [(7)(38, 993) − (511)2 ] = −0.944 The value of r suggests a strong negative relationship between a student’s final grade and the number of absences a student has. That is, the more absences a student has, the lower is his or her grade. Note: We can conduct a test of hypothesis concerning the correlation coefficient. In that case: H0 : ρ = 0 and Ha : ρ 6= 0 We can also construct a confidence interval for ρ. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 16 / 48 Assumptions of Correlation Analysis The methods used to estimate and test a hypothesis on a population correlation are based on assumptions that: (1) the sample of data sets is a random sample from the population; and (2) the measurements or observations have a bivariate normal distribution in the population. A bivariate normal distribution is a bell-shaped probability distribution in two dimensions rather than one. A bivariate normal distribution has the following features: (1) the relationship between the two variables, say x and y , is linear; (2) the cloud of points in a scatter plot of x and y has an elliptical shape; and (3) the frequency distributions of x and y separately are normal. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 17 / 48 Calculating the Sample Correlation Coeffienct r Exercise Consider the set of bivariate data given below: x 1 2 3 4 5 6 y 5.6 4.6 4.5 3.7 3.2 2.7 (a) Draw a scatter plot to describe the data. (b) Does there appear to be a relationship between x and y ? If so, how do you describe it? (c) Calculate the correlation coefficient, r . Does the value of r confirm your conclusions in part (b)? Explain. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 18 / 48 Simple Linear Regression Analysis If one of the two variables can be classified as the dependent variable y and the other independent variable x, and if the data exhibit s straight line pattern (i.e. if the value of the correlation coefficient is significant), it is possible to describe the relationship relating y to x using a straight line given by the equation: y = a + bx The relationship can be shown as below: Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 19 / 48 Simple Linear Regression Analysis From the figure on previous slide, we see that a is where the line crosses or intersects the y -axis: a is called the y -intercept. We can also see that for every one-unit increase in x, y increases by an amount b. The quantity b determines whether the line is increasing (b > 0), decreasing (b < 0), or horizontal (b = 0) and is appropriately called the slope of the line. The scatter diagrams or plots we constructed showed that not all the data values (x, y ) fall on a straight line but they do show a trend that could be described as a linear pattern. We can describe this trend by fitting a line as best we can through the points. Thus, given a scatter plot, you must be able to draw the line of best fit. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 20 / 48 Simple Linear Regression Analysis Best fit means that the sum of the squares of the vertical distances from each point to the line is at a minimum. The reason you need a line of best fit is that the values of y will be predicted from the values of x; hence, the closer the points are to the line, the better the fit and the prediction will be. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 21 / 48 Simple Linear Regression Analysis The basic idea of simple linear regression is to use data to fit a prediction line that relates a dependent variable y and a single independent variable x. Assuming linearity, we would like to write y as a linear function of x: y = β0 + β1 x However, according to such an equation, y is an exact linear function of x; no room is left for the inevitable errors (deviation of actual y values from their predicted values). Therefore, corresponding to each y we introduce a random error term εi and assume the model to be: yi = β0 + β1 xi + εi ; i = 1, 2, ..., n. In the above model, we assume the random variable y to be made up of a predictable part (a linear function of x) and an unpredictable part (the random error εi ). Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 22 / 48 Simple Linear Regression Analysis The coefficients β0 and β1 are interpreted as the true, underlying intercept and slope respectively. The error term ε includes the effects of all other factors, known or unknown, i.e. the combined effects of unpredictable and ignored factors yield the random error terms ε. In regression studies, the values of the independent variable (the xi values) are usually taken as predetermined constants, so the only source of randomness is the εi terms. Thus, when we assume that the xi s are constants, the only random portion of the model for yi is the random error term εi . With these definitions, the formal assumptions of simple linear regression analysis are as given on the next slide. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 23 / 48 Simple Linear Regression Analysis Assumptions of Simple Linear Regression Analysis (1) The relation is, in fact, linear, so that the errors all have expected value or mean zero: E (εi ) = 0 for all i. (2) The errors all have the same variance: Var (εi ) = σ 2 for all i. (3) The errors are independent of each other; Var (εi , εj ) = 0 (4) The errors, εi , are all normally distributed. Because we have assumed that E (εi ) = 0, the expected value of y is given by: E (y ) = β0 + β1 x. The estimator of the E (y ), denoted by ŷ , can be obtained by using the estimators βˆ0 and βˆ1 of the parameters β0 and β1 , respectively. Then, the fitted regression line we are looking for is given by: ŷ = βˆ0 + βˆ1 x Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 24 / 48 Simple Linear Regression Analysis The assumptions about the random error ε are shown in the Figure below for three fixed values of x - say, x1 , x2 , and x3 . Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 25 / 48 Simple Linear Regression Analysis For observed values (xi , yi ), we obtain the estimated value of yi as: yˆi = βˆ0 + βˆ1 xi . The deviation of observed yi from its predicted value yˆi , called the ith residual, is defined by: h i εi = (yi − yˆi ) = yi − (βˆ0 + βˆ1 xi ) . The residuals, or errors εi , are the vertical distances between observed and predicted values of yi ’s The regression analysis problem is to find the best straight-line prediction. The most common criterion for ”best” is based on squared prediction error. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 26 / 48 Simple Linear Regression Analysis We find the equation of the prediction line - that is, the slope βˆ1 and intercept βˆ0 that minimize the total squared prediction error or total sum of squares for errors (SSE ) or sum of squares of the residuals for all of the n data points. The method that accomplishes this goal is called the least-squares method or Ordinary Least Squares (OLS) because it chooses βˆ0 and βˆ1 to minimize the SSE : SSE = n X i=1 εi = n X i=1 (yi − yˆi )2 = n h X yi − (βˆ0 + βˆ1 xi ) i2 i=1 The least-squares estimates of slope and intercept are obtained as follows: sxy βˆ1 = and βˆ0 = ȳ − βˆ1 x̄ sxx Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 27 / 48 Simple Linear Regression Analysis sxy P P n n X X ( ni=1 xi ) ( ni=1 yi ) = (xi − x̄)(yi − ȳ ) = xi yi − n i=1 sxx = i=1 n X i=1 2 (xi − x̄) = n X i=1 xi2 − ( Pn i=1 xi ) 2 n Thus, sxy is the sum of x deviations times y deviations and sxx is the sum of x deviations squared. Example Find the equation of the regression line for the data in Example on number of absences and final grades, and graph the line on the scatter plot. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 28 / 48 Simple Linear Regression Analysis The values equation are: Pn needed forPthe Pn Pn n 2 n = 7, i=1 xi = i=1 xi yi = 3, 745, Pn i=12xi = 57, i=1 yi 1=P511, n 1 579, i=1 xi = 7 ∗ 57 = 8.1429 and ȳ = i=1 yi = 38, 993, x̄ = n 1 Pn 1 i=1 yi = 7 ∗ 511 = 73 n Substituting in the formulas, we get: Pn Pn Pn n x y − ( x ) ( s 7(3, 745) − (57)(511) xy i i i i=1 i=1 i=1 yi ) = = βˆ1 = Pn P 2 n sxx 7(579) − (57)2 n i=1 xi2 − ( i=1 xi ) βˆ1 = −3.622 βˆ0 = ȳ − βˆ1 x̄ = 73 − (−3.622 ∗ 8.1429) = 102.494 Hence, the equation of the regression line ŷ = βˆ0 + βˆ1 x is: ŷ = 102.494 − 3.622x Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 29 / 48 Simple Linear Regression Analysis The sign of the correlation coefficient and the sign of the slope of the regression line will always be the same. That is, if r is positive, then βˆ1 will be positive; if r is negative, then βˆ1 will also be negative. The reason is that the numerators of the formulas are the same and determine the signs of r and βˆ1 , and the denominators are always positive! When you graph the regression line, always select x values between the smallest x data value and the largest x data value. The regression line will always pass through the point whose x coordinate is the mean of the x values and whose y coordinate is the mean of the y values, that is, (x̄, ȳ ). The regression line can be used to make predictions for the dependent variable. We can use the regression line to predict, for example, the final grade for a student with 7 absences. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 30 / 48 Simple Linear Regression Analysis To make such a prediction, we substitute 7 for x in the equation, i.e. ŷ = 102.494 − 3.622x = 102.494 − 3.622(7) = 77.14 Hence, a student who has 7 absences will have approximately 77.14% as the final grade. The magnitude of the change in one variable when the other variable changes exactly 1 unit is called a marginal change. The value of slope βˆ1 of the regression line equation represents the marginal change. For example, in our regression line constructed, the slope of the regression line is −3.622, which means for each increase in number of absences, the value of y (final grade) changes by −3.622 on the average. For valid predictions, the value of the correlation coefficient, r , must be significant. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 31 / 48 Simple Linear Regression Analysis When r is not significantly different from 0, the best predictor of y is the mean of the data values of y . Extrapolation, or making predictions beyond the bounds of the data, must be interpreted cautiously! Remember that when predictions are made, they are based on present conditions or on the premise that present trends will continue. This assumption may or may not prove true in the future! Note that: A scatter plot should be checked for outliers. An outlier is a point that seems out of place when compared with the other points. Some of these points can affect the equation of the regression line. When this happens, the points are called influential points or influential observations. Points that are outliers in the x direction tend to be influential points and judgement has to be made as to whether they should be included in the final analysis. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 32 / 48 ANOVA for Simple Linear Regression Analysis We can use ANOVA and test of hypothesis to make inferences about regression parameters for the simple regression model. In a regression analysis, the response y is related to the independent variable x. Hence, the total variation in the response variable y , given by: P n n X X ( ni=1 yi )2 2 2 SSTotal = syy = (yi − ȳ ) = yi − n i=1 i=1 SSTotal is divided into two portions: (1) SSR (sum of squares for regression) - measures the amount of variation explained by using the regression line with one independent variable x n SSR = X (sxy )2 = (ŷi − ȳ )2 sxx i=1 (2) SSE (sum of squares for error) - measures the ”residual” variation in the data that is not explained by the independent variable x. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 33 / 48 ANOVA for Simple Linear Regression Analysis Note that in the figure above, y 0 stands for the ŷ . Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 34 / 48 ANOVA for Simple Linear Regression Analysis Since SSTotal = SSR + SSE , we can complete the partition by calculating: (sxy )2 SSE = SSTotal − SSR = syy − sxx Remember from our previous discussions on CRD and RCBD that each of the various sources of variation, when divided by the appropriate degrees of freedom, provides an estimate for mean squares - MS = SS df . The ANOVA table for simple linear regression is as shown below: Source df Regression 1 Error Total n−2 n−1 Lecture Number 12 SS (sxy )2 sxx (s )2 syy − sxyxx MS MSR = MSE = F SSR (1) SSE (n−2) MSR MSE syy Correlation and Simple Linear Regression Analysis March 7, 2017 35 / 48 ANOVA for Simple Linear Regression Analysis For the data on number of absences and final grade, we can compute the quantities in the ANOVA table as follows: P n n X X ( ni=1 yi )2 2 2 SSTotal = syy = (yi − ȳ ) = yi − n i=1 = 38, 993 − i=1 (511)2 = 1, 690 7 (sxy )2 = βˆ1 ∗ sxy = −3.622 ∗ −416 = 1, 506.752 sxx SSE = SSTotal − SSR = 1, 690 − 1, 506.752 = 183.248 SSR = MSR = SSR SSE 183.248 = 1, 506.752 and MSE = = = 36.6496 1 n−2 5 MSR 1, 506.752 F = = = 41.1124 MSE 36.6496 Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 36 / 48 ANOVA for Simple Linear Regression Analysis The ANOVA table for our example is thus summarized as follows: Source df SS MS F Regression Error Total 1 5 6 1,506.752 183.248 1,690 1,506.752 36.6496 41.1124 The calculated F is then used to determine whether the regression model constructed is significant. The rejection region is; reject H0 if: F > Fα (1, n − 2). For our example, suppose we take α = 0.05, the critical F value is: F0.05 (1, 5) = 6.608 Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 37 / 48 Inferences Concerning β1 , the Slope of the Line In considering simple linear regression, we may ask two questions: (1) Is the independent variable x useful in predicting the response variable y? (2) If so, how well does it work? The first question is like asking: is the regression equation that uses information provided by x substantially better than the simple predictor ȳ that does not rely on x? If the independent variable x is not useful in the population model, y = β0 + β1 x + ε, then the value of y does not change for different values of x. The only way that this happens for all values of x is when the slope β1 of the line of means equals 0. This would indicate that the relationship between y and x is not linear, so that the initial question about the usefulness of the independent variable x can be restated as: Is there a linear relationship between x and y ? Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 38 / 48 Inferences Concerning β1 , the Slope of the Line You can answer this question by using either a test of hypothesis or a confidence interval for β1 . Both of these procedures are based on the sampling distribution of β̂1 , the sample estimator of the slope β1 . It can be shown that, if the assumptions about the random error ε are valid, then the estimator β̂1 has a normal distribution in repeated sampling with mean E (β̂1 ) = β1 , and standard error (SE) given by: s SEβˆ1 = σ2 sxx where, σ 2 is the variance of the random error ε. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 39 / 48 Inferences Concerning β1 , the Slope of the Line Since the value of σ 2 is estimated with s 2 = MSE , you can base inferences on the statistic given by β̂1 − β1 β̂1 − 0 t=p or t = p MSE /sxx MSE /sxx which has a t distribution with df = (n − 2), the degrees of freedom associated with MSE . A summary of statistical test for the slope β1 is outlined on the next slide. The F test (given by F = MSR MSE ) obtained in regression analysis ANOVA table can be used as an equivalent test statistic for testing the hypothesis: H0 : β1 = 0. The F test , F = MSR MSE , has df1 = 1 and df2 = n − 2. It is a more general test of the usefulness of the model and can be used when the model has more than one independent variable. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 40 / 48 Inferences Concerning β1 , the Slope of the Line Test of Hypothesis concerning the slope of the Line (1) H0 : β1 = 0 and Ha : β1 6= 0(two-tailed test), and Ha : β1 > 0 or Ha : β1 < 0 (both one-tailed tests). (2) Test statistic: t = √ β̂1 −0 MSE /sxx When the assumptions for simple linear regression are satisfied, the test statistic will have a Student’s t distribution with df = (n − 2). (3) Rejection region: reject H0 when: t > tα or t < −tα (when Ha : β1 < 0) for one-tailed tests and t > tα/2 or t < −tα/2 , for two-tailed test. Alternatively, reject H0 when p−value< α. The values of tα and tα/2 are found in t− distribution tables; use df = n − 2. Note: A 100(1 − α)% confidence interval for β1 is found as: p β̂1 ± tα/2 ∗ MSE /sxx Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 41 / 48 Inferences Concerning β1 , the Slope of the Line Example Using the data for the number of absences and final grades, (i) Test for the significance of the relationship between the two variables using α = 0.05. (ii) Construct a 95% confidence interval for the slope of the regression line. Solution For testing the significance of the relationship between the two variables, the test of hypothesis is as follows: (1) H0 : β1 = 0 and Ha : β1 6= 0 (2) α has been given as 0.05 and the test is two-tailed; the critical values for t are: t0.025 (5) = ±2.571. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 42 / 48 Inferences Concerning β1 , the Slope of the Line Solution...Cont’d (3) Rejection region: Reject H0 if t < −2.571 or t > 2.571. (4) Compute the test statistic −3.622 β̂1 − 0 =p = −16.965 t=p MSE /sxx 36.6496/804 (5) Make the decision. We reject H0 at α = 0.05 since −16.965 < −2.571. (6) Conclusion: there is sufficient evidence that there is a significant linear relationship between the final grades and number of absences for the Biometry class. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 43 / 48 Inferences Concerning β1 , the Slope of the Line Solution...Cont’d 100(1 − α)% confidence interval for β1 = β̂1 ± tα/2 ∗ p MSE /sxx = −3.622 ± 2.571 ∗ 0.214 = [−4.161, −3.083] The resulting 95% confidence interval does not contain 0; thus we would conclude that the true value of β1 is not 0, and thus would reject H0 : β1 = 0 in favour of Ha : β1 6= 0. This conclusion is the same as the one we arrived at when we conducted the test using the critical value approach. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 44 / 48 Measuring the Strength of the Relationship: Coefficient of Determination R 2 The strength of the relationship measures how well the regression model fits the data. We earlier on stated that the correlation coefficient r can be used to measure the strength of relationship between two variables. Closely related to the r is what is called the Coefficient of Determination R 2 - the coefficient of determination is simply the square of the correlation coefficient. The coefficient of determination is the ratio of the explained variation to the total variation and is denoted by either r 2 or R 2 : Explained Variation SSR SSTotal − SSE R2 = = = Total Variation SSTotal SSTotal Thus, R 2 is a measure of the variation of the dependent variable that is explained by the regression line and the independent variable. R 2 is usually expressed as a percentage (%). Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 45 / 48 Measuring the Strength of the Relationship: Coefficient of Determination R 2 For our example on number of absences and final grade, R2 = SSR 1, 506.752 = = 0.892; and as a % = 0.892∗100 = 89.2%. SST 1, 690 Thus, 89.2% of the variation in the final grades is explained by the variation in the number of absences. In other words, 89.2% of the variation in final grades for the Biometry class is explained by the linear relationship between final grade and number of absences. The rest of the variation, i.e. 0.108 or 10.8%, is unexplained variation; i.e., is due to other factors. Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 46 / 48 Exercise An experiment was designed to compare several different types of air pollution monitors.The monitor was set up, and then exposed to different concentrations of ozone, ranging between 15 and 230 parts per million (ppm) for periods of 8 - 72 hours. Filters on the monitor were then analyzed, and the amount (in micrograms) of sodium nitrate (NO3 ) recorded by the monitor was measured. The results for one type of monitor are given in the table below. Ozone(ppm/hr) NO3 (µg ) Lecture Number 12 0.8 2.44 1.3 5.21 1.7 6.07 2.2 8.98 Correlation and Simple Linear Regression Analysis 2.7 10.82 2.9 12.16 March 7, 2017 47 / 48 Exercise...cont’d (a) Calculate the correlation coefficient for the data and interpret. (b) Find the least-squares regression line relating the monitor’s response to the ozone concentration. (c) Using the estimated regression line, estimate the amount of NO3 recorded for ozone concentration of 2.0ppm/hr. (d) Construct an ANOVA table for simple linear regression for the data. (e) Do the data provide sufficient evidence to indicate that there is a linear relationship between the ozone concentration and the amount of sodium nitrate detected? Test at α = 0.05. (f) Construct a 95% confidence interval for the slope of the regression line. (g) Calculate R 2 . What does this value tell you about the effectiveness of the linear regression analysis? Lecture Number 12 Correlation and Simple Linear Regression Analysis March 7, 2017 48 / 48