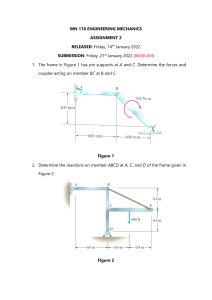

COE 292 Introduction to Artificial Intelligence
Machine Learning
Content on these slides was mostly developed by Dr. Akram F. Ahmed, COE Dept.
9/13/2022 INTRODUCTION TO AI

Outline
• Introduction
• AI and ML
• ML Strategies and Paradigms
• ML Training and Evaluation
• Supervised Learning
• Unsupervised Learning

Introduction
• Learning is one of the most important activities of human beings, and of living beings in general; it helps us adapt to the environment.
• Learning involves making changes in the learner that improve inference and knowledge acquisition.
• Most of the knowledge in the real world is not formalized and not available in textual form, which makes it difficult for computers to learn from it and infer with it.
• Machine learning is based on the principle of training computing machines and enabling them to teach themselves.

Machine Learning (ML)
• Machine learning aims to create theories and procedures (learning algorithms) that allow machines to learn.
• The computation undertaken by a learning system can be viewed as occurring at two distinct times: training time and consultation time.
• Training Time:
◦ the time prior to consultation time, during which data points are presented to train the machine learning algorithm. In other words, it is the time a new system spends training to get ready for consultation time.
• Consultation Time:
◦ the time between when a data point is presented to the system and when the inference is completed.

AI and Machine Learning

Learning Strategies
• In the learning process, the learner transforms the information provided by a teacher (or environment) into some new form that can be used in the future.
• The nature of this knowledge and of the transformation determines the type of learning strategy used.

Learning Strategies
• Rote Learning: the original knowledge is copied in the same form and stored in the knowledge base (KB); when it is needed in the future, it is retrieved exactly as it was stored, without change (memorization).
• Learning by Instruction: requires the knowledge to be transformed into an operational form before it can be integrated into the knowledge base.
◦ Example: students in a class where the teacher presents a number of facts. The basic transformations performed by a learner are selection and reformulation of the information provided by the teacher.

Fundamental Strategies of Learning
• Learning by Deduction: is carried out through a sequence of deductive inference steps using known facts.
◦ Example: if the KB contains the rule "a father's father is a grandfather", and we know that 𝑎 is the father of 𝑏 and 𝑏 is the father of 𝑐, then we can deduce that 𝑎 is the grandfather of 𝑐.
• Learning by Analogy: provides learning of new concepts through the use of similar known concepts or solutions.
◦ Example: solving a new exam question by adapting the solutions of similar problems we have already solved at home or in class.

Fundamental Strategies of Learning
• Learning by Induction (or Similarity): takes place after the learner experiences a number of instances or examples of the same problem and formulates a general concept from them.
◦ Example: learning that students who complete their homework by themselves and attend all classes on time get a higher Cumulative Percentile Index (CPI) is an example of inductive learning.
• Reinforcement Learning: is the study of decision making over time with consequences.
◦ Example: whenever a teacher praises a student's efforts by saying "very good" for asking an interesting question or answering a question, the student is undergoing reinforcement-based learning.

Machine Learning Paradigms
• Machine Learning can be divided into the following classes:
◦ Supervised
Learning is achieved while a teacher is present. Ground truth is available for the given training data. The algorithm learns the relationship between features and labels during training: it iteratively makes predictions on the training data and is corrected by the teacher.
Ground Truth: the real (true) label(s), value(s) or class(es) associated with the given data. Example: given Ahmed’s face picture, the ground truth label could be his name and/or ID#.

Machine Learning Paradigms
• Machine Learning can be divided into the following classes:
◦ Unsupervised
Ground truth is not available, and learning is achieved without a teacher. The algorithm learns patterns or groupings in the data during training. Example: clustering methods such as 𝑘-means.

Machine Learning Paradigms
◦ Reinforcement
“learning what to do—how to map situations to actions—so as to maximize a numerical reward signal.” [Sutton & Barto]
◦ Semi-Supervised
The ground truth data may be scarce or only partially available.
◦ Self-Supervised
Ground truth data is not available, but pseudo-labels are generated to conduct the learning process. Self-supervised learning “obtains supervisory signals from the data itself, often leveraging the underlying structure in the data” [Meta AI].

Machine Learning Paradigms
◦ Self-Supervised
Examples:
◦ “we can hide part of a sentence and predict the hidden words from the remaining words.”
◦ “We can also predict past or future frames in a video (hidden data) from current ones (observed data).”

Typical Problems for ML?
• Classification: predict a class label for an input.

Supervised vs. Unsupervised
• Supervised
◦ Example: distinguishing between two plant types
• Unsupervised
◦ Example: grouping data into classes

Supervised vs. Unsupervised – Examples
• You’re running a real-estate company, and you want to develop ML algorithms to address each of two problems:
◦ Problem 1: You have a large database of housing prices for houses of different sizes. You want to predict the prices of houses given their sizes.
◦ Problem 2: You’d like the software to examine specific houses in your database and put each house into one of two categories: highly desirable or undesirable.
• Should you treat these as classification or as regression problems?

Supervised vs. Unsupervised – Examples
• Of the following examples, which would you address using an unsupervised ML algorithm? (Check all that apply.)
◦ Given an email labeled as spam/not spam, learn a spam filter.
◦ Given a set of news articles found on the web, group them into sets of articles about the same story.
◦ Given a dataset of patients diagnosed as either having heart disease or not, learn to classify new patients as having heart disease or not.
◦ Given a database of university students, automatically discover sport skills and group students into different sport segments.

ML Training and Evaluation
• Cross Validation
• Underfitting and Overfitting

ML Training and Evaluation – Cross Validation
• In any (supervised) Machine Learning problem, we are given a set of data with labels that tell us what this data means to an expert in the field.
◦ Example: Let's assume that we have collected data about heart disease from a large sample that covers all possible causes of the disease. Furthermore, assume that we represent the entire collected data by the blue bar below, where each dot in the bar represents the data collected from one person.

ML Training and Evaluation – Cross Validation
• In Machine Learning we need to do two things with this data:
1. Estimate the parameters of the machine learning method, i.e., use the data to guess the shape of the curve that best fits it (for example, when a 2D estimator is used).
◦ In Machine Learning, parameter estimation is called training the algorithm.
2. Evaluate how well the learned parameters work, i.e., test how good a job the estimated curve does when we present it with data it has never seen before.
◦ In Machine Learning, evaluating a method is called testing the algorithm.

ML Training and Evaluation – Cross Validation
• Therefore, in Machine Learning:
◦ We need data to train the machine learning algorithm.
◦ We need to test the trained ML model on data it hasn’t seen in training, to make sure that the algorithm performs well.
• Question: where can we get this data?
◦ Using the same data for training and testing does not work, since it does not tell us how the algorithm performs on data it has not been trained on.
◦ Using all the data for training will not leave any data for testing.

ML Training and Evaluation – Cross Validation
• Answer: we use the labeled data provided by an expert. In the heart disease example, we divide the collected data into a training set and a testing set.
◦ A common practice in Machine Learning is to use 75% of the data for training and 25% of the data for testing.
◦ The question is: which 25% should we choose for testing, and which 75% for training?

ML Training and Evaluation – Cross Validation
• We use the cross validation method:
◦ divide the data into a number of equal subsets (folds);
◦ in each round, use a different subset for testing and the remaining subsets for training, so that every subset is tested exactly once.
• Example: in Four-Fold cross validation, the data is divided into FOUR equal sets. For the heart disease example, they are shown below.

ML Training and Evaluation – Cross Validation
• We then train the Machine Learning algorithm and test the result as follows:
◦ Sets 1, 2, 3 for training and Set 4 for testing
◦ Sets 1, 2, 4 for training and Set 3 for testing
◦ Sets 1, 3, 4 for training and Set 2 for testing
◦ Sets 2, 3, 4 for training and Set 1 for testing
• At the end, we average the testing errors over the four rounds. This average estimates how well the method performs on data it has not seen, and comparing it across methods (or parameter settings) tells us which one to choose as the final model.
• In practice, it is common to use 10-fold cross validation, where the data is divided into 10 sets and each set is used once for testing while the other 9 are used for training.

ML Training and Evaluation – Underfitting and Overfitting
• Suppose we have the dataset shown below:
◦ Data is labeled: red circles and blue circles.
• How can we train and obtain the best classifier?

ML Training and Evaluation – Underfitting and Overfitting
• Idea 1: Let us try a linear classifier represented by a straight line:
◦ As can be seen, many blue points above the line are misclassified.
◦ No matter how we rotate or shift the line, the misclassification rate remains high.
◦ This is known as Underfitting: the model oversimplifies the complexity in the data.

ML Training and Evaluation – Underfitting and Overfitting
• Idea 2:
◦ Let's use a curve that best separates the red class from the blue class.
◦ Let us divide our data into training and testing sets as shown below.
[Figure: the labeled data split into a Training set and a Testing set]

ML Training and Evaluation – Underfitting and Overfitting
• Idea 2:
◦ We can find the "wavy" curve that best fits all the points in the training set, as shown below. It fits the training data very well.

ML Training and Evaluation – Underfitting and Overfitting
• Idea 2:
◦ Now, if we apply the curve to the testing data, we get the following: it does not do well on the testing data.
◦ As can be seen, many test points are not classified correctly.
◦ This is what we call Overfitting.

ML Training and Evaluation – Underfitting and Overfitting
• Idea 3:
◦ If we allow for some misclassification, we can get a smoother curve:
◦ This curve neither overfits nor underfits.
◦ There are some misclassifications, but we can live with this error.

Supervised Learning - Classification
• Classification predicts the classes (categories) to which the given examples belong and then classifies the examples into those categories.
• It assumes the following:
◦ the existence of some teacher (environment),
◦ a fitness function to measure the fitness of an example for a class, and
◦ some external method of classifying the training instances.
• For example, in word sense disambiguation, a classifier typically learns from a training set in which each occurrence of a target word has been manually tagged with its sense from the sense inventory of some reference dictionary.

Supervised Learning - Classification
• An example of supervised learning for text classification is a classifier that picks one word at a time, performs Word Sense Disambiguation on it, and then performs a classification task to assign the appropriate sense to each instance of the word.
◦ Disadvantage: the learning process requires some intervention from the user, who must either pre-process and tag the examples in the training set, or tag examples online (dynamically) as they are needed during the learning process.

Supervised Learning - Classification
• Examples of supervised learning algorithms:
◦ 𝑘-Nearest Neighbor (𝑘-NN)
◦ Support Vector Machines (SVM)

K-Nearest Neighbor (k-NN)
• Uses the 𝑘 closest points (nearest neighbors) to perform classification.
• 𝑘-Nearest Neighbor algorithms classify a new example by comparing it to all previously seen examples.
• The classifications of the 𝑘 most similar previous cases are used to predict the classification of the current example.

K-Nearest Neighbor (k-NN)
• The training examples are used for:
◦ providing a library of sample cases
◦ re-scaling the similarity function to maximize performance

K-Nearest Neighbor Algorithm
1. Calculate the distance between a test point and every training instance.
2. Pick the 𝑘 closest (nearest) training examples and assign the test instance to the most common category among these nearest neighbors.
◦ Voting among multiple neighbors (𝑘 > 1) helps decrease susceptibility to noise.
◦ An odd value of 𝑘 is usually used to avoid ties in two-class problems.
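The two steps of the algorithm above can be sketched in Python as follows. This is a minimal from-scratch illustration under stated assumptions, not code taken from the course notebook: Euclidean distance is assumed, and the function name `knn_predict` and the toy points are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Hypothetical helper: classify x by majority vote of its k nearest training points."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training points")
    # Step 1: Euclidean distance from x to every training instance (linear scan)
    distances = [(math.dist(p, x), label) for p, label in zip(train_X, train_y)]
    # Step 2: keep the k nearest neighbors and let them vote
    nearest = sorted(distances, key=lambda d: d[0])[:k]
    votes = Counter(label for _, label in nearest)
    # On a tie, most_common keeps first-seen order, so the label with the closer neighbor wins
    return votes.most_common(1)[0][0]


# Toy (made-up) training data: two well-separated classes
train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
train_y = ["red", "red", "red", "blue", "blue", "blue"]

print(knn_predict(train_X, train_y, (2, 2), k=3))  # red
print(knn_predict(train_X, train_y, (6, 5), k=3))  # blue
```

Sorting every distance costs O(n log n) per query; `heapq.nsmallest(k, distances, key=...)` is one way to pick only the 𝑘 smallest, but either way each prediction still scans all training points.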

K-Nearest Neighbor (k-NN) – Examples
• Example: Given training data from two classes (shown as solid circles), a new point (hollow circle) has to be classified.
◦ It is assigned the most frequent label of its 𝑘 nearest neighbors, as shown below.
◦ Note that changing 𝑘 may lead to a different classification.

K-Nearest Neighbor: Distance Metrics
• 𝑘-NN methods assume a function for determining the similarity or distance between any two instances.
• Euclidean distance is the generic choice.
• Consider two patterns $x = (x_1, x_2, \dots, x_m)$ and $z = (z_1, z_2, \dots, z_m)$.
• The Euclidean distance between them is given by:
$$d(x, z) = \sqrt{\sum_{i=1}^{m} (x_i - z_i)^2}$$
where $m$ is the number of dimensions.

K-Nearest Neighbor: Distance Metrics
• Example: Find the distance between 𝑥 = (3, 5) and 𝑧 = (1, 2).
◦ The Euclidean distance in 2 dimensions is
$$d(x, z) = \sqrt{(3 - 1)^2 + (5 - 2)^2} = \sqrt{4 + 9} = \sqrt{13} \approx 3.61$$
• Euclidean distance in higher dimensions:
◦ Example: How do we find the Euclidean distance between two 784-dimensional vectors 𝑥 and 𝑧? The same formula applies, with 𝑚 = 784.

K-Nearest Neighbor: Distance Metrics
• For 1-NN, the space can be divided into regions, one around each training point: any new point that lies within a region is classified to that region's training point, i.e., its nearest neighbor.
• In the following example, the dots represent the training points used for classification, and the X is the new point that needs to be classified.
• Different distance metrics can change the decision surface, as shown on the right, where the metric used is given under each picture.

K-Nearest Neighbor: Examples
• Example: Given the following data on patients with a disease, predict the survivability of a new patient (shown in green) using:
◦ 1-NN
◦ 3-NN
◦ 48-NN
• As we can see, 1-NN classifies the new patient as "Did Not Survive", while 3-NN classifies them as "Survived".
• Selecting 48-NN takes the vote over a large part of the dataset, so the prediction approaches the overall majority (average) class, which leads to underfitting.
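The Euclidean distance example above (𝑥 = (3, 5), 𝑧 = (1, 2)) and the remark that the metric changes the decision surface can be checked with a few lines of Python. This sketch assumes Manhattan distance (the alternative named on the Pros slide) as the second metric; the query point and the two candidate neighbors are made up to show a case where the two metrics disagree.

```python
import math


def euclidean(x, z):
    """Square root of the sum of squared differences over all m dimensions."""
    return math.sqrt(sum((xi - zi) ** 2 for xi, zi in zip(x, z)))


def manhattan(x, z):
    """Sum of absolute differences over all m dimensions."""
    return sum(abs(xi - zi) for xi, zi in zip(x, z))


# Worked example from the slide: x = (3, 5), z = (1, 2)
print(euclidean((3, 5), (1, 2)))  # sqrt(13), about 3.606
print(manhattan((3, 5), (1, 2)))  # 2 + 3 = 5

# The same formula works unchanged for m = 784 dimensions
zeros, ones = [0] * 784, [1] * 784
print(euclidean(zeros, ones))  # sqrt(784) = 28.0

# Made-up points: the nearest neighbor of the query depends on the metric
query = (0, 0)
candidates = [(2, 2), (3, 0)]
print(min(candidates, key=lambda p: euclidean(query, p)))  # (2, 2): 2.83 < 3
print(min(candidates, key=lambda p: manhattan(query, p)))  # (3, 0): 3 < 4
```

Because 1-NN assigns each point the label of its nearest neighbor, a disagreement like the one in the last two lines is exactly what moves the decision surface when the metric is changed.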

K-Nearest Neighbor: Overfitting and Underfitting
• Depending on the dataset, the value of 𝑘 determines whether the classifier underfits or overfits.
• In this example:
◦ If 𝑘 = 1, the result may be considered overfitting: the prediction follows single points, so an outlier may misclassify new points, especially those close to it.
◦ If 𝑘 is set too large, you may have underfitting.

K-Nearest Neighbor: Overfitting and Underfitting
• When 𝑘 is equal to the number of samples in the data, 𝑘-NN becomes an average comparator: every new point is assigned the majority class of the whole dataset.
• Good values of 𝑘 may be 3, 4, 5, 6, or 7.

K-Nearest Neighbor: Overfitting and Underfitting
• Conclusion
◦ The selection of the value of 𝑘 is crucial to ensure that the classifier works correctly and as per our needs.

K-Nearest Neighbor: Efficiency
• Very efficient in training
◦ Only stores the training data
• Not so efficient in testing
◦ Computes the distance measure to every training example
◦ Much more expensive than, e.g., rule learning
• Simplest way of finding the nearest neighbor:
◦ Linear scan of the data
◦ Classification takes time proportional to the product of the number of instances in the training and test sets

K-Nearest Neighbor: Pros and Cons
• Pros
◦ It is extremely easy to implement.
◦ It requires no training prior to making real-time predictions.
◦ This makes 𝑘-NN much faster to set up than algorithms that require training, e.g., SVM, linear regression, etc.
◦ Since the algorithm requires no training before making predictions, new data can be added seamlessly.
◦ Only two parameters are required to implement 𝑘-NN: the value of 𝑘 and the distance function (e.g., Euclidean or Manhattan).
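Because the choice of 𝑘 is crucial, one common approach is to compare candidate values using the cross validation procedure described earlier in these slides. The sketch below is a minimal pure-Python version under stated assumptions: the data are synthetic 2-D points drawn from two overlapping Gaussian clusters, four folds are used as in the Four-Fold example, and the helper names (`knn_predict`, `k_fold_indices`, `cross_val_error`) are hypothetical.

```python
import math
import random
from collections import Counter


def knn_predict(train, x, k):
    """Majority vote among the k training (point, label) pairs closest to x."""
    nearest = sorted(train, key=lambda pl: math.dist(pl[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def k_fold_indices(n, folds, seed=0):
    """Shuffle indices 0..n-1 and split them into `folds` nearly equal subsets.

    A fixed seed gives the same folds on every call, so every k is
    evaluated on identical train/test splits.
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::folds] for i in range(folds)]


def cross_val_error(data, k, folds=4):
    """Average k-NN test error over `folds`-fold cross validation.

    Each subset is used exactly once for testing while the other subsets
    form the training set; the per-fold error rates are then averaged.
    """
    errors = []
    for test_idx in k_fold_indices(len(data), folds):
        held_out = set(test_idx)
        train = [data[i] for i in range(len(data)) if i not in held_out]
        wrong = sum(knn_predict(train, data[i][0], k) != data[i][1] for i in test_idx)
        errors.append(wrong / len(test_idx))
    return sum(errors) / len(errors)


# Synthetic, overlapping two-class data (made up for illustration)
rng = random.Random(42)
data = [((rng.gauss(0, 1.5), rng.gauss(0, 1.5)), "A") for _ in range(40)]
data += [((rng.gauss(2, 1.5), rng.gauss(2, 1.5)), "B") for _ in range(40)]

candidates = [1, 3, 5, 7, 9, 15]
scores = {k: cross_val_error(data, k) for k in candidates}
best_k = min(scores, key=scores.get)
for k in candidates:
    print(f"k = {k:2d}: average test error = {scores[k]:.3f}")
print("chosen k:", best_k)
```

On a small dataset the winning 𝑘 can change with the random split, so the printed errors are best read as a rough comparison rather than a definitive ranking.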

K-Nearest Neighbor: Pros and Cons
• Cons
◦ The 𝑘-NN algorithm doesn't work well with high-dimensional data: with a large number of dimensions, computing the distance across every dimension becomes expensive, and distances between points become less informative.
◦ The 𝑘-NN algorithm doesn't work well with categorical features, since it is difficult to define a distance between values of categorical features.

K-Nearest Neighbor: Implementation
• On Jupyter Notebook: kNN-Implementation.ipynb

K-Nearest Neighbor: Evaluating the Algorithm
• For evaluating an algorithm, the confusion matrix, precision, recall and F1 score are the most commonly used metrics.
◦ Confusion Matrix: a matrix in which the (m,n)th element is the number of examples of the mth class that were labeled, by the classifier, as belonging to the nth class.
◦ For the k-NN example we have (rows: correct class; columns: class assigned by the classifier):

| | Iris-setosa | Iris-versicolor | Iris-virginica |
|---|---|---|---|
| Iris-setosa | 12 | | |
| Iris-versicolor | | 11 | |
| Iris-virginica | | 1 | 6 |

K-Nearest Neighbor: Evaluating the Algorithm
• Accuracy: overall performance of the model
• Precision: how accurate the positive predictions are
• Recall: coverage of the actual positive samples

Confusion Matrix for a Binary Classifier
• Suppose that the correct label is either 0 or 1.
Then the confusion matrix is just 2x2.
• In this matrix (rows: correct label; columns: classified as), the box in row 1, column 0 holds the number of examples of class 1 that were misclassified as class 0.

False Positives & False Negatives
• TP (True Positives) = examples that were correctly labeled as “1”
• FN (False Negatives) = examples that should have been “1”, but were labeled as “0”
• FP (False Positives) = examples that should have been “0”, but were labeled as “1”
• TN (True Negatives) = examples that were correctly labeled as “0”

| Correct Label \ Classified As | 0 | 1 |
|---|---|---|
| 0 | TN | FP |
| 1 | FN | TP |
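The definitions of TP, FN, FP and TN above, together with the accuracy, precision and recall metrics from the earlier slides, can be computed as in the sketch below. It uses the standard formulas (accuracy = (TP + TN) / total, precision = TP / (TP + FP), recall = TP / (TP + FN)) and takes the F1 score as the harmonic mean of precision and recall. The labels are assumed to be 0/1, and the example predictions are made up.

```python
def confusion_matrix_2x2(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]]: rows = correct label, columns = classified as.

    Assumes every label is either 0 or 1.
    """
    m = [[0, 0], [0, 0]]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1  # row t (correct label), column p (classified as)
    return m


def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 score, treating class 1 as positive."""
    (tn, fp), (fn, tp) = confusion_matrix_2x2(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # how accurate the positive predictions are
    recall = tp / (tp + fn) if tp + fn else 0.0     # coverage of the actual positive samples
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


# Made-up labels: 4 actual positives, 6 actual negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(confusion_matrix_2x2(y_true, y_pred))  # [[5, 1], [1, 3]]
print(binary_metrics(y_true, y_pred))        # (0.8, 0.75, 0.75, 0.75)
```

Returning 0.0 when a denominator is zero (for example, when the classifier never predicts class 1) is one common convention; other tools may warn or report the value as undefined instead.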