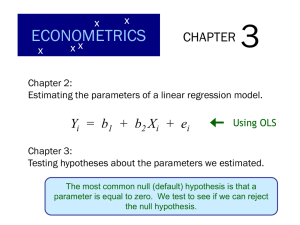

Chapter 3

Multiple Regression Analysis: Estimation


Where are we in the course?

Part 1— Introduction and basics of regression analysis

• Chapter 1— The nature of econometrics and economic data

• Chapter 2— The simple regression model

• Chapter 3— Multiple regression analysis: estimation

• Chapter 4— Multiple regression analysis: inference

• Chapter 5— Multiple regression analysis: OLS asymptotics

Part 2— Some advanced topics

• Chapter 8— Heteroskedasticity

• Chapter 10— Basic regression analysis with time series data

• Chapter 12— Serial correlation and heteroskedasticity in time series regressions

• Chapters 15 & 16— Instrumental variables estimation, two-stage least squares, and

simultaneous equations


Overview

• Motivation for multiple regression

• Mechanics and interpretation of ordinary least squares

• The expected value of the OLS estimators

• The variance of the OLS estimators

• Efficiency of OLS: the Gauss-Markov theorem


Motivation for multiple regression

Better suited for ceteris paribus analysis

• In a simple linear regression (SLR) model like

log(wage) = β0 + β1 educ + u

the zero conditional mean assumption can be controversial

• β̂1 is a biased/inconsistent estimator of β1 if E[u|educ] ≠ 0

• Multiple regression analysis allows us to control explicitly for other factors that simultaneously affect the dependent variable, as in

log(wage) = β0 + β1 educ + β2 exper + u

with E[u|educ, exper] = 0

Other motivation

• More flexible functional forms; e.g., Mincer’s (1974) regression

log(wage) = β0 + β1 educ + β2 exper + β3 exper² + u


Multiple (linear) regression model

y = β0 + β1 x1 + β2 x2 + · · · + βk xk + u

Analogous to SLR:

• Terminology for variables and parameters

• Linear in parameters

• Possibly nonlinear in variables; e.g.

log(wage) = β0 + β1 educ + β2 exper + β3 exper² + u

(How does this affect the interpretation of the “slope” parameters β2, β3?)

• The key assumption E[u|x1, x2, ..., xk] = 0 establishes the ceteris paribus interpretation of the slope parameters
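
One way to approach the question about β2 and β3 is a short worked derivation (standard calculus, not taken from the slides). In the quadratic specification, exper enters through two terms, so holding educ fixed the approximate effect of experience is

$$\frac{\partial \log(wage)}{\partial\, exper} = \beta_2 + 2\beta_3\, exper$$

Under this reading, neither β2 nor β3 alone is "the" effect of experience: the effect depends on the level of exper, and β2 is the marginal effect only at exper = 0.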


Mechanics and interpretation of ordinary least squares

Obtaining the OLS estimates

Suppose we have a random sample {(xi1, xi2, ..., xik, yi) : i = 1, ..., n} from the population, with

yi = β0 + β1 xi1 + β2 xi2 + · · · + βk xik + ui

• We want to estimate β0 , β1 , . . . , βk and obtain the sample regression function (SRF)

ŷ = β̂0 + β̂1 x1 + β̂2 x2 + · · · + β̂k xk

• Ordinary least squares (OLS) estimates β̂0, β̂1, ..., β̂k minimize the sum of squared residuals (SSR)

$$\sum_{i=1}^{n} \hat{u}_i^2 = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_{i1} - \hat{\beta}_2 x_{i2} - \cdots - \hat{\beta}_k x_{ik})^2$$

where ûi is the residual and ŷi the fitted value for observation i


OLS estimates satisfy the OLS first order conditions

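$$
\begin{aligned}
\sum_{i=1}^{n} \hat{u}_i &= \sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_{i1} - \hat{\beta}_2 x_{i2} - \cdots - \hat{\beta}_k x_{ik}) = 0 \\
\sum_{i=1}^{n} x_{i1} \hat{u}_i &= \sum_{i=1}^{n} x_{i1} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_{i1} - \hat{\beta}_2 x_{i2} - \cdots - \hat{\beta}_k x_{ik}) = 0 \\
&\;\;\vdots \\
\sum_{i=1}^{n} x_{ik} \hat{u}_i &= \sum_{i=1}^{n} x_{ik} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_{i1} - \hat{\beta}_2 x_{i2} - \cdots - \hat{\beta}_k x_{ik}) = 0
\end{aligned}
$$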

• These are sample analogs of E[u] = 0, E[x1 u] = 0, . . . , E[xk u] = 0

(Where do these population moment conditions come from?)
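
A minimal numerical sketch of the first order conditions, assuming simulated data (the sample size, seed and coefficient values are illustrative choices, not from the slides). Stacking a constant and the regressors in a matrix X, the k + 1 conditions read X'û = 0, which is equivalent to the linear system X'X β̂ = X'y. The sketch solves that system, checks the conditions, and confirms that a perturbed coefficient vector gives a larger SSR.

```python
import numpy as np

# Simulated data; all numbers below are hypothetical.
rng = np.random.default_rng(0)
n, k = 200, 3
x = rng.normal(size=(n, k))
X = np.column_stack([np.ones(n), x])          # constant plus k regressors
beta_true = np.array([1.0, 0.5, -0.3, 0.2])   # assumed population parameters
y = X @ beta_true + rng.normal(size=n)

# First order conditions in matrix form: X'X beta_hat = X'y
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
u_hat = y - X @ beta_hat

# Each entry is one sample moment condition: sum(u_hat), sum(x_ij * u_hat)
foc = X.T @ u_hat

# SSR at the OLS estimates versus at a slightly perturbed coefficient vector
ssr = u_hat @ u_hat
ssr_other = np.sum((y - X @ (beta_hat + 0.01)) ** 2)

print("beta_hat:", beta_hat)
print("largest |moment condition|:", np.max(np.abs(foc)))
print("SSR at OLS < SSR at perturbed point:", ssr < ssr_other)
```

Solving the linear system directly keeps the link to the first order conditions visible; in practice a least-squares routine such as `np.linalg.lstsq` is the numerically safer choice.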


Interpreting the OLS regression equation

Sample regression function

ŷ = β̂0 + β̂1 x1 + β̂2 x2 + · · · + β̂k xk

• The intercept β̂0 equals the predicted value of y when x1 = x2 = · · · = xk = 0

• The slopes β̂1, ..., β̂k have partial effect (ceteris paribus) interpretations:

∆ŷ = β̂1 ∆x1 + β̂2 ∆x2 + · · · + β̂k ∆xk

so that

∆ŷ = β̂1 ∆x1

when ∆x2 = · · · = ∆xk = 0

(Does this also work if e.g. x1 = exper and x2 = exper²?)

• Multiple regression mimics a controlled laboratory setting with nonexperimental data, by keeping other factors fixed


Examples

• Determinants of college GPA (GPA1.DTA)

$$\widehat{colGPA} = 1.29 + 0.453\, hsGPA + 0.0094\, ACT$$

• Hourly wage equation (WAGE1.DTA)

$$\widehat{\log(wage)} = 0.284 + 0.092\, educ + 0.0041\, exper + 0.022\, tenure$$


OLS fitted values and residuals

Recall that the fitted or predicted value of y for observation i is

ŷi = β̂0 + β̂1 xi1 + β̂2 xi2 + · · · + β̂k xik

and that the corresponding residual for observation i equals

ûi = yi − ŷi

The sample moment conditions (again) imply

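• $\sum_{i=1}^{n} \hat{u}_i = 0$ (⇒ the sample average of the OLS residuals is zero), so that $\bar{y} = \bar{\hat{y}}$

• $\sum_{i=1}^{n} x_{ij} \hat{u}_i = 0$ (⇒ the sample covariance between each regressor and the residuals is zero), so that (why?) $\sum_{i=1}^{n} \hat{y}_i \hat{u}_i = 0$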

• ȳ = β̂0 + β̂1 x̄1 + β̂2 x̄2 + · · · + β̂k x̄k (⇒ (x̄1 , . . . , x̄k , ȳ ) on regression line)
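
These algebraic properties hold in every sample, whatever the data look like. A short check is sketched below, assuming simulated data with two regressors (the variable names, coefficients and seed are illustrative, not from the slides).

```python
import numpy as np

# Simulated data; the numbers are hypothetical.
rng = np.random.default_rng(1)
n = 100
x1 = rng.normal(size=n)
x2 = 0.5 * x1 + rng.normal(size=n)
y = 2.0 + 1.0 * x1 - 1.0 * x2 + rng.normal(size=n)

X = np.column_stack([np.ones(n), x1, x2])
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ beta_hat          # fitted values
u_hat = y - y_hat             # residuals

# Property 1: residuals average to zero, so mean(y) equals mean(y_hat)
mean_resid = u_hat.mean()

# Property 2: zero sample covariance between each regressor and the residuals,
# hence the fitted values are also uncorrelated with the residuals
cov_x_resid = [np.mean((xj - xj.mean()) * u_hat) for xj in (x1, x2)]
fitted_times_resid = y_hat @ u_hat

# Property 3: the point of sample means lies on the fitted regression surface
y_at_means = beta_hat @ np.array([1.0, x1.mean(), x2.mean()])

print("mean residual:", mean_resid)
print("cov(x_j, u_hat):", cov_x_resid)
print("sum of y_hat * u_hat:", fitted_times_resid)
print("prediction at means vs mean of y:", y_at_means, y.mean())
```

The second property gives a hint for the "why?": ŷi is a linear combination of a constant and the xij, each of which is orthogonal to the residuals.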


Goodness-of-fit

Recall that SST = SSE + SSR, with

• $SST = \sum_{i=1}^{n} (y_i - \bar{y})^2$ the total sum of squares

• $SSE = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$ the explained sum of squares

• $SSR = \sum_{i=1}^{n} \hat{u}_i^2$ the residual sum of squares (or sum of squared residuals)

The R-squared (or coefficient of determination)

$$R^2 = \frac{SSE}{SST} = 1 - \frac{SSR}{SST} = \frac{\left[\sum_{i=1}^{n} (y_i - \bar{y})(\hat{y}_i - \bar{\hat{y}})\right]^2}{\left[\sum_{i=1}^{n} (y_i - \bar{y})^2\right]\left[\sum_{i=1}^{n} (\hat{y}_i - \bar{\hat{y}})^2\right]}$$

measures the fraction of the sample variation in y that is explained by ŷ

• 0 ≤ R² ≤ 1

• R² never decreases when a variable is added (so..?)

• Regressions with a low R² are common in the social sciences, but may still estimate the ceteris paribus relation well
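
The claim that R² never decreases can be illustrated with a small sketch, assuming simulated data in which the added regressor is pure noise (the helper `r_squared`, the coefficients and the seed are hypothetical, not from the slides). The sketch also checks that R² equals the squared sample correlation between y and ŷ.

```python
import numpy as np

def r_squared(X, y):
    """R-squared = 1 - SSR/SST for an OLS fit of y on the columns of X."""
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    u_hat = y - X @ beta_hat
    ssr = u_hat @ u_hat
    sst = np.sum((y - y.mean()) ** 2)
    return 1.0 - ssr / sst

# Simulated data; y depends on x1 only, `noise` is irrelevant by construction.
rng = np.random.default_rng(2)
n = 150
x1 = rng.normal(size=n)
noise = rng.normal(size=n)
y = 1.0 + 0.8 * x1 + rng.normal(size=n)

X_small = np.column_stack([np.ones(n), x1])
X_big = np.column_stack([X_small, noise])

r2_small = r_squared(X_small, y)
r2_big = r_squared(X_big, y)

# R-squared as the squared correlation between y and the fitted values
y_hat = X_small @ np.linalg.lstsq(X_small, y, rcond=None)[0]
r2_corr = np.corrcoef(y, y_hat)[0, 1] ** 2

print("R2 without noise regressor:", r2_small)
print("R2 with noise regressor:   ", r2_big)
print("squared corr(y, y_hat):    ", r2_corr)
```

Because the larger model can always set the extra coefficient to zero, its minimized SSR cannot exceed that of the smaller model, which is why R² is a poor guide for deciding whether a variable belongs in the model.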


The expected value of the OLS estimators

Statistical properties of OLS

Distribution of the OLS estimator(s) over random samples from the population

Assumptions

• MLR1 (Linear in parameters):

y = β0 + β1 x1 + β2 x2 + ... + βk xk + u

• MLR2 (Random sampling): We have a random sample

{(xi1 , xi2 , ..., xik , yi ) ; i = 1, ..., n} following MLR1’s population model

• MLR3 (No perfect collinearity): In the sample (and therefore in the population), none

of the independent variables is constant, and there are no exact linear relationships

among the independent variables

• MLR4 (Zero conditional mean): E(u|x1, x2, ..., xk) = 0 (explanatory variables are exogenous, as opposed to endogenous)


Theorem 3.1: Unbiasedness of OLS

Under Assumptions MLR1–MLR4, E(β̂j ) = βj , j = 0, 1, . . . , k

Including irrelevant variables

Suppose we overspecify the model by including an irrelevant variable x3 :

y = β0 + β1 x1 + β2 x2 + β3 x3 + u

Assumptions MLR1–MLR4 hold, but β3 = 0

• Theorem 3.1 implies that OLS estimators are unbiased

• In particular, E(β̂3 ) = 0

• Including x3 may nevertheless have undesirable effects on the variances of the OLS estimators


Omitted variable bias

Suppose we underspecify the model by excluding a relevant variable

• True population model y = β0 + β1 x1 + β2 x2 + u satisfies MLR1–MLR4, e.g.

wage = β0 + β1 educ + β2 abil + u

• Estimate β1 with the SLR estimator β̃1 of β1 in

wage = β0 + β1 educ + v

where v = β2 abil + u


It can be shown that

β̃1 = β̂1 + β̂2 δ̃1

where

• β̂1 , β̂2 are the slope estimators from the MLR of wage on educ, abil

• δ̃1 is the slope estimator from the SLR of abil on educ

so that (implicitly conditional on the independent variables)

• E(β̃1 ) = β1 + β2 δ̃1 (because MLR1–MLR4 hold)

• the omitted variable bias equals β2 δ̃1


Bias equals β2 δ̃1 , so no bias if

• ability does not affect wages (β2 = 0)

• ability and education are not correlated in the sample (δ̃1 = 0)

Bias is positive if ability has a positive effect on wages (β2 > 0) and more able people take

more education in the sample (δ̃1 > 0)
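
The decomposition β̃1 = β̂1 + β̂2 δ̃1 is an exact in-sample identity, which a short sketch can confirm. The data below are simulated under the assumption that ability raises both education and wages; the coefficients, the seed and the helper `ols` are hypothetical, not from the slides.

```python
import numpy as np

def ols(y, *regressors):
    """OLS coefficients (intercept first) of y on the given regressors."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Simulated data; abil would be unobserved in practice.
rng = np.random.default_rng(3)
n = 500
abil = rng.normal(size=n)
educ = 12.0 + 2.0 * abil + rng.normal(size=n)              # more able, more educ
wage = 5.0 + 1.0 * educ + 3.0 * abil + rng.normal(size=n)  # beta1 = 1, beta2 = 3

_, b1_hat, b2_hat = ols(wage, educ, abil)   # MLR of wage on educ, abil
_, b1_tilde = ols(wage, educ)               # SLR of wage on educ (abil omitted)
_, d1_tilde = ols(abil, educ)               # SLR of abil on educ

print("beta1_tilde (short regression):", b1_tilde)
print("beta1_hat + beta2_hat * delta1: ", b1_hat + b2_hat * d1_tilde)
print("estimated bias term:            ", b2_hat * d1_tilde)
```

With β2 > 0 and δ̃1 > 0 in this setup, the short regression overstates the return to education, matching the sign rule stated above.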


Omitted variable bias: some terminology

• Upward bias: E(β̃1 ) > β1

• Downward bias: E(β̃1 ) < β1

• Bias toward zero: E(β̃1 ) closer to 0 than β1

Omitted variable bias: general case

With two or more explanatory variables in the estimated (underspecified) model, typically all

estimators will be biased, even if only one explanatory variable is correlated with the omitted

variable


The variance of the OLS estimators

Additional assumption for establishing the variances and efficiency of OLS

• MLR5 (Homoskedasticity): var(u|x1, x2, ..., xk) = σ²

MLR1–MLR5 are the Gauss-Markov assumptions (for cross sections)

Theorem 3.2: Sampling variances of the OLS slope estimators

Under Assumptions MLR1–MLR5 (and, implicitly, conditional on the independent variables),

$$\mathrm{var}(\hat{\beta}_j) = \frac{\sigma^2}{SST_j \left(1 - R_j^2\right)}, \qquad j = 1, \dots, k,$$

where $SST_j = \sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2$ is the total sample variation in $x_j$ and $R_j^2$ is the R-squared from regressing $x_j$ on all other independent variables


Multicollinearity: a value of $R_j^2$ close to, but not equal to, one
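
To see how multicollinearity feeds into the variance formula of Theorem 3.2, the sketch below evaluates σ² / [SST_1 (1 − R_1²)] for a regressor x1 that is increasingly correlated with a second regressor x2. The data are simulated and σ² is set to 1 for illustration; the helper `r2_j`, the correlation values and the seed are hypothetical.

```python
import numpy as np

def r2_j(xj, others):
    """R-squared from regressing xj on a constant and the other regressors."""
    X = np.column_stack([np.ones(len(xj)), others])
    fitted = X @ np.linalg.lstsq(X, xj, rcond=None)[0]
    ssr = np.sum((xj - fitted) ** 2)
    sst = np.sum((xj - xj.mean()) ** 2)
    return 1.0 - ssr / sst

rng = np.random.default_rng(4)
n, sigma2 = 1000, 1.0            # assumed error variance
x2 = rng.normal(size=n)
e = rng.normal(size=n)

variances = {}
for rho in (0.0, 0.9, 0.99):
    # x1 has (population) variance 1 and correlation rho with x2
    x1 = rho * x2 + np.sqrt(1.0 - rho**2) * e
    sst_1 = np.sum((x1 - x1.mean()) ** 2)
    r2_1 = r2_j(x1, x2)
    variances[rho] = sigma2 / (sst_1 * (1.0 - r2_1))
    print(f"rho={rho:4.2f}  R_1^2={r2_1:.4f}  var(beta1_hat)={variances[rho]:.6f}")
```

SST_1 stays roughly constant across the three cases, so the growth in the variance comes almost entirely from the factor 1 / (1 − R_1²).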


Estimating the error variance

The error variance $\sigma^2$ can be estimated with

$$\hat{\sigma}^2 = \frac{1}{n-k-1} \sum_{i=1}^{n} \hat{u}_i^2 = \frac{SSR}{n-k-1}$$

Here, n − k − 1 is the degrees of freedom (df) for multiple regression: the number of observations (n) minus the number of parameters (k + 1)

Theorem 3.3: Unbiased estimation of $\sigma^2$

Under Assumptions MLR1–MLR5, $E(\hat{\sigma}^2) = \sigma^2$

Important remark

When n is large relative to k, multiplying by 1/n instead of 1/(n − k − 1) leads to virtually the same answer


Standard errors

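Substituting $\hat{\sigma}^2$ for $\sigma^2$ in the appropriate expressions gives

• the standard error of the regression, $\hat{\sigma} = \sqrt{\hat{\sigma}^2}$

• an estimate of the standard deviation of $\hat{\beta}_j$, $\mathrm{sd}(\hat{\beta}_j) = \sqrt{\mathrm{var}(\hat{\beta}_j)}$: the standard error of $\hat{\beta}_j$, $\mathrm{se}(\hat{\beta}_j) = \hat{\sigma} / \left[SST_j \left(1 - R_j^2\right)\right]^{1/2}$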

Example: hourly wage equation (WAGE1.DTA)

$$\widehat{\log(wage)} = \underset{(0.104)}{0.284} + \underset{(0.007)}{0.092}\, educ + \underset{(0.0017)}{0.0041}\, exper + \underset{(0.003)}{0.022}\, tenure$$
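
The standard errors in parentheses can be reproduced for any data set from σ̂ and the se(β̂j) formula. The sketch below does this on simulated data (WAGE1.DTA itself is not loaded; coefficients, sample size and seed are hypothetical). As a cross-check it also uses the matrix expression σ̂² (X'X)⁻¹, a standard textbook result that does not appear in the slides.

```python
import numpy as np

# Simulated data with k = 2 regressors; all numbers are hypothetical.
rng = np.random.default_rng(5)
n, k = 300, 2
x = rng.normal(size=(n, k))
X = np.column_stack([np.ones(n), x])
y = X @ np.array([0.3, 0.1, -0.2]) + rng.normal(scale=0.5, size=n)

beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
u_hat = y - X @ beta_hat
ssr = u_hat @ u_hat

sigma2_hat = ssr / (n - k - 1)    # unbiased estimator of the error variance
sigma_hat = np.sqrt(sigma2_hat)   # standard error of the regression

def se_slope(j):
    """se(beta_j) = sigma_hat / sqrt(SST_j * (1 - R_j^2)) for slope j."""
    xj = x[:, j]
    others = np.column_stack([np.ones(n), np.delete(x, j, axis=1)])
    resid = xj - others @ np.linalg.lstsq(others, xj, rcond=None)[0]
    sst_j = np.sum((xj - xj.mean()) ** 2)
    r2_j = 1.0 - (resid @ resid) / sst_j
    return sigma_hat / np.sqrt(sst_j * (1.0 - r2_j))

se_formula = np.array([se_slope(j) for j in range(k)])

# Cross-check (assumption: standard matrix form, not from the slides)
se_matrix = np.sqrt(sigma2_hat * np.diag(np.linalg.inv(X.T @ X)))

print("sigma_hat:", sigma_hat)
print("se via slide formula:", se_formula)
print("se via matrix form:  ", se_matrix[1:])
```

The two routes agree for the slope coefficients; the matrix form additionally delivers a standard error for the intercept.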

Heteroskedasticity

If MLR1–MLR4 hold, but MLR5 is violated, then

• β̂j remains unbiased, but

• the standard errors above are incorrect

See Chapter 8


Interpretation of standard errors

Standard error of β̂j gives an idea about the possible variation in the estimate

• recall that we are actually interested in (the population quantity) βj

• β̂j is a random variable with, see above,

• mean βj (it is unbiased)

• some standard deviation/standard error

• once we know that this random variable is (approximately) normally distributed (see Chapters 4 and 5), we can calculate a confidence interval

Confidence intervals versus t-tests

• confidence intervals and t-tests both give an idea about the uncertainty in estimates

• some researchers prefer confidence intervals as they are more easily interpreted

• nevertheless, you have to know both


Efficiency of OLS: the Gauss-Markov theorem

BLUE

Many unbiased estimators of βj exist, but the OLS estimator is the best linear unbiased

estimator

• Estimator: a rule that can be applied to any sample of data to produce an estimate

• Unbiased: the expectation of the estimator equals the population parameter

• Linear: the estimator is a linear function of the data on the dependent variable: $\tilde{\beta}_j = \sum_{i=1}^{n} w_{ij} y_i$, where each $w_{ij}$ can depend on the sample values of all independent variables (note: in this sense, $\hat{\beta}_j$ is linear)

• Best: here, an estimator is best if it has the smallest variance

Theorem 3.4: Gauss-Markov theorem

Under Assumptions MLR1–MLR5, β̂0 , β̂1 , . . . , β̂k are the BLUEs of β0 , β1 , . . . , βk
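
A small illustration of the theorem, under assumptions that are not in the slides: simulated regressors, σ² = 1, and as competing estimator OLS applied to the first half of the sample only. That competitor is linear in y (its weights are zero for the discarded observations) and unbiased, so Gauss-Markov predicts its conditional variance is at least that of full-sample OLS. For a linear estimator Wy, unbiasedness requires WX = I, and under MLR5 its conditional variance is σ² WW'.

```python
import numpy as np

rng = np.random.default_rng(6)
n, sigma2 = 60, 1.0                     # assumed sample size and error variance
x1 = rng.normal(size=n)
X = np.column_stack([np.ones(n), x1])

# OLS as a linear estimator: beta_hat = W_ols @ y with W_ols = (X'X)^{-1} X'
W_ols = np.linalg.solve(X.T @ X, X.T)

# Hypothetical competitor: OLS on the first half, zero weight on the rest
m = n // 2
W_alt = np.zeros_like(W_ols)
W_alt[:, :m] = np.linalg.solve(X[:m].T @ X[:m], X[:m].T)

# Unbiasedness check: W X = I implies E(W y | X) = beta under MLR1-MLR4
unbiased_ols = np.allclose(W_ols @ X, np.eye(2))
unbiased_alt = np.allclose(W_alt @ X, np.eye(2))

# Conditional variances under homoskedasticity: diagonal of sigma2 * W W'
var_ols = sigma2 * np.diag(W_ols @ W_ols.T)
var_alt = sigma2 * np.diag(W_alt @ W_alt.T)

print("both unbiased:", unbiased_ols and unbiased_alt)
print("var OLS (intercept, slope):      ", var_ols)
print("var half-sample (intercept, slope):", var_alt)
```

This compares OLS with a single competitor only; the theorem itself covers every linear unbiased estimator.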
