SLAC REPORT-355

STAN-LCS-106

UC-405

(Ml

INTERPRETABLE PROJECTION PURSUIT*

SALLY CLAIRE MORTON

Stanford Linear Accelerator Center

Stanford University

Stanford, California 94309

OCTOBER 1989

Prepared for the Department of Energy under contract number DE-AC03-76SF00515

Printed in the United States of America. Available from the National Technical Information Service, U.S. Department of Commerce, 5285 Port Royal Road, Springfield, Virginia 22161. Price: Printed Copy A06; Microfiche A01.

*Ph.D. Dissertation

Abstract

The goal of this thesis is to modify projection pursuit by trading accuracy for interpretability. The modification produces a more parsimonious and understandable model without sacrificing the structure which projection pursuit seeks. The method retains the nonlinear versatility of projection pursuit while clarifying the results.

Following an introduction which outlines the dissertation, the first and second chapters contain the technique as applied to exploratory projection pursuit and projection pursuit regression respectively. The interpretability of a description is measured as the simplicity of the coefficients which define its linear projections. Several interpretability indices for a set of vectors are defined based on the ideas of rotation in factor analysis and entropy. The two methods require slightly different indices due to their contrary goals.

A roughness penalty weighting approach is used to search for a more parsimonious description, with interpretability replacing smoothness. The computational algorithms for both interpretable exploratory projection pursuit and interpretable projection pursuit regression are described. In the former case, a rotationally invariant projection index is needed and defined. In the latter, alterations in the original algorithm are required. Examples of real data are considered in each situation.

The third chapter deals with the connections between the proposed modification and other ideas which seek to produce more interpretable models. The modification as applied to linear regression is shown to be analogous to a nonlinear continuous method of variable selection. It is compared with other variable selection techniques and is analyzed in a Bayesian context. Possible extensions to other data analysis methods are cited and avenues for future research are identified. The conclusion addresses the issue of sacrificing accuracy for parsimony in general. An example of calculating the tradeoff between accuracy and interpretability due to a common simplifying action, namely rounding the binwidth for a histogram, illustrates the applicability of the approach.

Acknowledgments

I am grateful

to my principal

I also thank

enthusiasm.

advisor Jerry Friedman

my secondary

Persi Diaconis,

Art Owen, Ani Adhikari,

Kirk Cameron,

David Draper,

Knowles,

Michael

Sheehy, David

Martin,

Siegmund,

advisors and examiners:

Pregibon,

Hal Stern;

and

Brad Efron,

Joe Oliger; my teachers and colleagues:

Tom DiCiccio,

Daryl

for his guidance

Jim Hodges, Iain Johnstone,

Mark

John Rolph,

Anne

and my friends:

Joe Romano,

Mark

Barnett,

Ginger

Brower, Renata Byl, Ray Cowan, Marty

Dart, Judi Davis, Glen Diener, Heather

Gordon,

Arla

Holly

Marincovich,

This

work

Haggerty,

Curt

Lasher,

LeCount,

Alice

Lundin,

Michele

Mike Strange and Joan Winters.

was supported in part by the Department of Energy, Grant DE-AC03-76F00515.

I dedicate this thesis to my parents, sister, and brothers, who inspire me by example.

Table of Contents

Abstract

Acknowledgments

Introduction

1. Interpretable Exploratory Projection Pursuit

    1.1 The Original Exploratory Projection Pursuit Technique

        1.1.1 Introduction

        1.1.2 The Algorithm

        1.1.3 The Legendre Projection Index

        1.1.4 The Automobile Example

    1.2 The Interpretable Exploratory Projection Pursuit Approach

        1.2.1 A Combinatorial Strategy

        1.2.2 A Numerical Optimization Strategy

    1.3 The Interpretability Index

        1.3.1 Factor Analysis Background

        1.3.2 The Varimax Index For a Single Vector

        1.3.3 The Entropy Index For a Single Vector

        1.3.4 The Distance Index For a Single Vector

        1.3.5 The Varimax Index For Two Vectors

    1.4 The Algorithm

        1.4.1 Rotational Invariance of the Projection Index

        1.4.2 The Fourier Projection Index

        1.4.3 Projection Axes Restriction

        1.4.4 Comparison With Factor Analysis

        1.4.5 The Optimization Procedure

    1.5 Examples

        1.5.1 An Easy Example

        1.5.2 The Automobile Example

2. Interpretable Projection Pursuit Regression

    2.1 The Original Projection Pursuit Regression Technique

        2.1.1 Introduction

        2.1.2 The Algorithm

        2.1.3 Model Selection Strategy

    2.2 The Interpretable Projection Pursuit Regression Approach

        2.2.1 The Interpretability Index

        2.2.2 Attempts to Include the Number of Terms

        2.2.3 The Optimization Procedure

    2.3 The Air Pollution Example

3. Connections and Conclusions

    3.1 Interpretable Linear Regression

    3.2 Comparison With Ridge Regression

    3.3 Interpretability as a Prior

    3.4 Future Work

        3.4.1 Further Connections

        3.4.2 Extensions

        3.4.3 Algorithmic Improvements

    3.5 A General Framework

        3.5.1 The Histogram Example

        3.5.2 Conclusion

Appendix A. Gradients

    A.1 Interpretable Exploratory Projection Pursuit Gradients

    A.2 Interpretable Projection Pursuit Regression Gradients

References

Figure Captions

[1.1] Most structured projection scatterplot of the automobile data according to the Legendre index.

[1.2] Varimax interpretability index for q = 1, p = 2.

[1.3] Varimax interpretability index for q = 1, p = 3.

[1.4] Varimax interpretability index contours for q = 1, p = 3.

[1.5] Simulated data with n = 200 and p = 2.

[1.6] Projection and interpretability indices versus λ for the simulated data.

[1.7] Projected simulated data histograms for various values of λ.

[1.8] Most structured projection scatterplot of the automobile data according to the Fourier index.

[1.9] Most structured projection scatterplot of the automobile data according to the Legendre index.

[1.10] Projection and interpretability indices versus λ for the automobile data.

[1.11] Projected automobile data scatterplots for various values of λ.

[1.12] Parameter trace plots for the automobile data.

[1.13] Country of origin projection scatterplot of the automobile data.

[2.1] Fraction of unexplained variance U versus number of terms m for the air pollution data.

[2.2] Model paths for the air pollution data for models with number of terms m = 1, ..., 6.

[2.3] Model paths for the air pollution data for models with number of terms m = 7, 8, 9.

[2.4] Draftsman's display for the air pollution data.

[3.1] Interpretable linear regression.

[3.2] Interpretability prior density for p = 2.

[3.3] Percent change in IMSE versus multiplying fraction e in the binwidth example.

List of Tables

[1.1] Most structured Legendre plane index values for the automobile data.

[1.2] Linear combinations for the automobile data.

[1.3] Abbreviated linear combinations for the automobile data.

Introduction

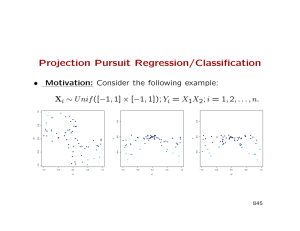

The goal of this thesis is to modify projection pursuit by trading accuracy for more interpretability in the results. The two techniques examined are exploratory projection pursuit (Friedman 1987) and projection pursuit regression (Friedman and Stuetzle 1981). The former is an exploratory data analysis method which produces a description of a group of variables. The latter is a formal modeling procedure which determines the relationship of a dependent variable to a set of predictors.

The common outcome of all projection pursuit methods is a collection of vectors which define the directions of the linear projections. The remaining component is nonlinear and is summarized pictorially rather than mathematically. For example, in exploratory projection pursuit the description contains the projection linear combinations and the histograms of the projected data points. In projection pursuit regression, the model contains the linear combinations and the smooths of the dependent variable versus the projected predictors.

The statistician is faced with a collection of vectors and a nonlinear graphic representation. Given a dataset of n observations of p variables each, suppose that q projections are made. The resulting direction matrix A is q × p, each row corresponding to a projection. The statistician must try to understand and explain these vectors, both singly and as a group, in the context of the original p variables and in relation to the nonlinear components. The purpose of this thesis is to illustrate a method for trading some of the accuracy in the description or model in return for more interpretability or parsimony in the matrix A. The object is to retain the versatility and flexibility of this promising technique while increasing the clarity of the results.

In this dissertation, interpretability is used in a similar yet broader sense than parsimony, which may be thought of as a special case. The principle of parsimony or simplicity is that as few parameters as possible should be used in a description or model. Tukey stated the concept in 1961 as ‘It may pay not to try to describe in the analysis the complexities that are really present in the situation.’ Several methods exist which choose more parsimonious models, such as Mallows’ (1973) Cp in linear regression which balances the number of parameters and prediction error. Another example is the work of Dawes (1979), who restricts the parameters in linear models based on standardized data to be 0 or ±1, calling the resulting descriptions ‘improper’ linear models. His conclusion is that these models predict almost as well as ordinary linear regressions and do better than clinical intuition based on experience. Throughout this thesis, accuracy is not measured in terms of the prediction of future observations but rather refers to the goodness-of-fit of the model or description to the particular data.

Parsimony, while considering solely the number of parameters in a model, shares the general philosophical goals of interpretability. These goals are to produce results which are

(i) easier to understand.

(ii) easier to compare.

(iii) easier to remember.

(iv) easier to explain.

The adjective ‘simple’ is used interchangeably with ‘interpretable’ throughout this thesis, as is ‘complex’ with ‘uninterpretable’. However, the new term interpretability is included in part to distinguish this notion from that of simplicity which receives extensive treatment in the philosophical and Bayesian literature.

The quantification of interpretability is a difficult problem. The concept is not easy to define or measure. As Sober (1975) comments, ‘the diversity of our intuitions about simplicity . . . presents a veritable chaos of opinion.’ Fortunately, some situations are easier than others. In particular, linear combinations as produced by projection pursuit and many other data analysis methods lend themselves readily to the development of an interpretability index. This mathematical index can serve as a ‘cognostic’ (Tukey 1983), or a diagnostic which can be interpreted by a computer rather than a human, in an automatic search for more interpretable results.

Exploratory projection pursuit is considered first in Chapter 1. A short review of the original method motivates the simplifying modification. The algorithmic approach chosen is supported versus alternative strategies. Various interpretability indices are developed. The modification to be employed requires changes to the original algorithm. An example of the resulting interpretable exploratory method is examined. Projection pursuit regression is considered in a similar manner in Chapter 2. The differing goals of this second procedure compel changes in the interpretability index. The chapter concludes with an example.

Chapter 3 connects the new approach with established techniques of trading accuracy for interpretability. Extensions to other data analysis methods that might benefit from this modification are proposed. The thesis closes with a general application of the tradeoff between accuracy and interpretability. This work is an example of an approach which simplifies the complex results of a novel statistical method. The hope is that the framework described within will be used by others in similar circumstances.

Chapter 1

Interpretable Exploratory Projection Pursuit

In this chapter, interpretable exploratory projection pursuit is demonstrated. Section 1.1 presents the basic concepts and goals of the original exploratory projection pursuit technique and outlines the algorithm. An example which provides the motivation for the new approach is included. The modification is described and support for the strategy chosen is given in the next section. The new method requires that a simplicity index be defined, which is discussed in Section 1.3. Section 1.4 details the algorithm, and its application to the example is described in the final section.

1.1 The Original Exploratory Projection Pursuit Technique

Exploratory projection pursuit (Friedman 1987) is an extension and improvement of the algorithm presented by Friedman and Tukey in 1974. It is a computer intensive data analysis tool for understanding high dimensional datasets. The method helps the statistician look for structure in the data without requiring any initial assumptions, while providing the basis for future formal modeling.

1.1.1 Introduction

Classical multivariate methods such as principal components analysis or discriminant analysis can be used successfully when the data is elliptical or normal in nature and well-described by its first few moments. Exploratory projection pursuit is designed to deal with the type of nonlinear structure these older techniques are ill-equipped to handle. The method linearly projects the data cloud onto a line (one dimensional exploratory projection pursuit) or onto a plane (two dimensional exploratory projection pursuit). By reducing the dimensionality of the problem while maintaining the same number of datapoints, the technique overcomes the ‘curse of dimensionality’ (Bellman 1961). This malady is due to the fact that a huge number of points is required before structure is revealed in high dimensional space.

A linear projection is chosen for two reasons (Friedman 1987). First, a linear projection is easier to understand as it consists of one or two linear combinations of the original variables. Second, a linear projection does not exaggerate structure in the data as it is a smoothed shadow of the actual datapoints.

The goal of exploratory projection pursuit is to find projections which exhibit structure. Initially, the idea was to let the statistician choose interesting views interactively by eye (McDonald 1982, Asimov 1985). Because the time required to perform an exhaustive search was prohibitive, the method was automated. A mathematical projection index which measures the structure of a view is defined and the space is searched via a computer numerical optimization. Structure is consequently no longer measured on a multidimensional human pattern recognition scale but rather on a univariate numerical one. This simplification means that numerous possible indices may be defined. Thus the scheme, while making the analysis feasible for large datasets, requires the careful choice of a projection index.

As Huber (1985) points out, many classical techniques are forms of exploratory projection pursuit for specific projection index choices. For example, consider principal components analysis. Let $Y$ be a random vector in $\mathbb{R}^p$. The definition of the $j$th principal component is the solution of the maximization problem

$$\max_{\beta_j} \ \mathrm{Var}[\beta_j^T Y] \quad \ni \quad \beta_j^T \beta_j = 1 \ \text{ and } \ \beta_j^T Y \ \text{is uncorrelated with all previous principal components.}$$

Thus, principal components analysis is an example of one dimensional exploratory projection pursuit with variance (Var) as the projection index. Variance is a global or general measure of how structured a view is. In contrast, the novelty and applicability of exploratory projection pursuit lies in its ability to recognize nonlinear or local structure. As remarked previously, many definitions of structure and corresponding projection index choices exist.

All present indices, however, depend on the same basic premise. The idea is that though ‘interesting’ is difficult to define or agree on, ‘uninteresting’ is clearly normality. Friedman (1987) and Huber (1985) provide theoretical support for this choice. Effectively, the normal distribution can be explored adequately using traditional methods which explain covariance structure. Exploratory projection pursuit is attempting to address situations for which these methods are not applicable.

The statistician must choose a method by which to measure distance from this normal origin that leads the algorithm to views she holds interesting. The distance metric choice is thus based on individual preference for the type of structure to be found. Desirable computing and invariance properties which have been neglected so far in this discussion also affect the decision. These considerations in the concrete context of a particular projection index are discussed as the original algorithm is detailed in the next subsection.
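The observation that principal components analysis is one dimensional projection pursuit with variance as the index can be checked numerically. The following is a minimal sketch, not part of the original algorithm: the simulated dataset, the function name `first_principal_direction`, and the brute-force scan over directions are all illustrative assumptions.

```python
import numpy as np

def first_principal_direction(data):
    """Unit vector beta maximizing the sample variance of data @ beta."""
    cov = np.cov(data, rowvar=False)                 # sample covariance (ddof=1)
    eigenvalues, eigenvectors = np.linalg.eigh(cov)  # eigenvalues in ascending order
    return eigenvectors[:, -1], eigenvalues[-1]

rng = np.random.default_rng(0)
# Hypothetical data: 500 observations of two correlated variables.
data = rng.normal(size=(500, 2)) @ np.array([[3.0, 0.0], [1.0, 0.5]])

beta, max_var = first_principal_direction(data)

# Treat variance as the projection index and scan unit vectors in the plane.
angles = np.linspace(0.0, np.pi, 721)
directions = np.column_stack([np.cos(angles), np.sin(angles)])
index_values = np.var(data @ directions.T, axis=0, ddof=1)
```

No direction in the scan attains a larger variance index than the leading eigenvector, which is the sense in which the first principal component solves the maximization problem for this particular index.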

1.1.2 The Algorithm

Two dimensional exploratory projection pursuit is more interesting and useful than one dimensional, so the former is discussed solely. The two dimensional situation also raises intriguing problems when interpretable exploratory projection pursuit is considered which need not be addressed in the one dimensional case. For the present, the goal is to find one structured plane. Actually, the data may have several interesting views or local projection index optima and the algorithm should find as many as possible. Section 1.5.3 addresses this point.

The original algorithm presented by Friedman (1987) is reviewed in the abstract version due to Huber (1985), thereby simplifying the notation. Thus, though the data consists of n observations of length p, consider first a random variable $Y \in \mathbb{R}^p$. The goal is to find linear combinations $(\beta_1, \beta_2)$ which

$$\max_{\beta_1, \beta_2} \ G(\beta_1^T Y, \beta_2^T Y) \quad \ni \quad \mathrm{Var}[\beta_1^T Y] = \mathrm{Var}[\beta_2^T Y] = 1 \ \text{ and } \ \mathrm{Cov}[\beta_1^T Y, \beta_2^T Y] = 0 \qquad [1.1]$$

where $G$ is the projection index which measures the structure of the projection density. The constraints on the linear combinations ensure that the structure seen in the plane is not due to covariance (Cov) effects which can be dealt with by classical methods.

Initially, the original data $Y$ is sphered (Tukey and Tukey 1981). The sphered variable $Z \in \mathbb{R}^p$ is defined as

$$Z \equiv D^{-1/2} U^T (Y - E[Y]) \qquad [1.2]$$

with $U$ and $D$ resulting from an eigensystem decomposition of the covariance matrix of $Y$. That is,

$$\Sigma = E[(Y - E[Y])(Y - E[Y])^T] = U D U^T \qquad [1.3]$$

with $U$ the orthonormal matrix of eigenvectors of $\Sigma$, and $D$ the diagonal matrix of associated eigenvalues. The axes in the sphered space are the principal component directions of $Y$. The previous optimization problem [1.1] involving linear combinations of $Y$ can be translated to one involving linear combinations $\alpha_1, \alpha_2$ and projections of $Z$:

$$\max_{\alpha_1, \alpha_2} \ G(\alpha_1^T Z, \alpha_2^T Z) \quad \ni \quad \alpha_1^T \alpha_1 = \alpha_2^T \alpha_2 = 1 \ \text{ and } \ \alpha_1^T \alpha_2 = 0 \qquad [1.4]$$

The fact that the standardization constraints imposed to exclude covariance structure, which the technique is not concerned with, are now geometric conditions reduces the computational work required (Friedman 1987). Thus, all numerical calculations are performed on the sphered data $Z$. In subsequent notation, the parameters of a projection index $G$ are $(\beta_1^T Y, \beta_2^T Y)$ though the value of the index is actually calculated for the sphered data [1.2]. The sphering, however, is merely a computational convenience and is invisible to the statistician. She associates the value of the index with the visual projection in the original data space.

After the maximizing sphered data plane is found, the vectors $\alpha_1$ and $\alpha_2$ are translated to the unsphered original variable space via

$$\beta_1 = U D^{-1/2} \alpha_1, \qquad \beta_2 = U D^{-1/2} \alpha_2 . \qquad [1.5]$$

Since only the direction of the vectors matter, these combinations are usually normed as a final step.

The variance and correlation constraints on $\beta_1$ and $\beta_2$ can be written as

$$\beta_1^T \Sigma \beta_1 = \beta_2^T \Sigma \beta_2 = 1, \qquad \beta_1^T \Sigma \beta_2 = 0 . \qquad [1.6]$$

Thus, $\beta_1$ and $\beta_2$ are orthogonal in the covariance metric while $\alpha_1$ and $\alpha_2$ are orthogonal geometrically.
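The sphering transformation and the translation back to the original variable space can be illustrated on sample data. The sketch below is a hedged illustration under stated assumptions: sample moments stand in for the expectations, the data are simulated, and the helper names `sphere` and `unsphere_direction` are hypothetical rather than taken from the original software.

```python
import numpy as np

def sphere(data):
    """Return sphered data Z plus the pieces needed to map directions back."""
    mean = data.mean(axis=0)
    cov = np.cov(data, rowvar=False)
    eigenvalues, U = np.linalg.eigh(cov)         # cov = U diag(eigenvalues) U^T
    D_inv_sqrt = np.diag(1.0 / np.sqrt(eigenvalues))
    # Row i of Z is (D^{-1/2} U^T (y_i - mean))^T.
    Z = (data - mean) @ U @ D_inv_sqrt
    return Z, U, D_inv_sqrt, cov, mean

def unsphere_direction(alpha, U, D_inv_sqrt):
    """Translate a sphered-space direction alpha to beta = U D^{-1/2} alpha."""
    return U @ D_inv_sqrt @ alpha

rng = np.random.default_rng(1)
# Hypothetical data: 300 observations of four correlated variables.
data = rng.normal(size=(300, 4)) @ rng.normal(size=(4, 4))

Z, U, D_inv_sqrt, cov, mean = sphere(data)

# Any geometrically orthonormal pair in the sphered space will do here.
alpha1 = np.array([1.0, 0.0, 0.0, 0.0])
alpha2 = np.array([0.0, 1.0, 0.0, 0.0])
beta1 = unsphere_direction(alpha1, U, D_inv_sqrt)
beta2 = unsphere_direction(alpha2, U, D_inv_sqrt)
```

In this sketch the sample covariance of `Z` is the identity, the pair `(beta1, beta2)` satisfies the covariance-metric constraints, and projecting the centered data on `beta1` gives the same coordinates as projecting `Z` on `alpha1`.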

The optimization method used to solve the maximization problem [1.4] is a coarse search followed by an application of steepest descent, a derivative-based optimization procedure. The initial survey of the index space via a coarse stepping approach helps the algorithm avoid deception by a small local maximum. The second stage employs the derivatives of the index to make an accurate search in the vicinity of a good starting point.

The numerical optimization procedure requires that the projection index possess certain computational properties. The index should be fast and stable to compute, and must be differentiable. These criteria surface again with respect to the interpretability index defined in Section 1.3.

1.1.3 The Legendre Projection Index

Friedman’s (1987) Legendre index exhibits these properties. He begins by transforming the sphered projections to a square with the definition

$$R_1 \equiv 2\Phi(\alpha_1^T Z) - 1, \qquad R_2 \equiv 2\Phi(\alpha_2^T Z) - 1$$

where $\Phi$ is the cumulative distribution function of a standard normal random variable. Under the null hypothesis that the projections are normal and uninteresting, the density $p(R_1, R_2)$ is uniform on the square $[-1,1] \times [-1,1]$. As a measure of nonnormality, he takes the integral of the squared distance from the uniform

$$G_L(\beta_1^T Y, \beta_2^T Y) \equiv \int_{-1}^{1} \int_{-1}^{1} \left[ p(R_1, R_2) - \tfrac{1}{4} \right]^2 dR_1 \, dR_2 . \qquad [1.7]$$

He expands the density $p(R_1, R_2)$ using Legendre polynomials, which are orthogonal on the square with respect to a uniform weight function. This action, along with subsequent integration taking advantage of the orthogonality relationships, yields an infinite sum involving expected values of the Legendre polynomials in $R_1$ and $R_2$, which are functions of the random variable $Y$. In application, the expansion is truncated and sample moments replace theoretical moments. Thus, instead of $E[f(Y)]$ for a function $f$, the sample mean over the n observations $y_1, y_2, \ldots, y_n$ is calculated.

This index has been shown to find structure in the center of the distribution, rather than in the tails. Thus, it identifies projections which exhibit clustering rather than heavy-tailed densities. $G_L$ also has the property of ‘affine invariance’ (Huber 1985), which means that it is invariant under scale changes and location shifts of $Y$. The index has this characteristic as it is based on the sphered variables $Z$. Since exploratory projection pursuit should not be drawn in by covariance structure, this property is desirable for any projection index. The Legendre index is also stable and fast to compute. Research continues in the area of projection indices but so far alternatives have proved less computationally feasible. Other indices use different sets of polynomials to take advantage of different weight functions or use alternate methods of measuring distance from normality as discussed in Section 1.4.2.

In the following section, an example of the original algorithm using $G_L$ is discussed in order to provide the motivation for the proposed modification. Consideration of how to modify the method in order to make the results more interpretable shows that the projection index needs a new theoretical property which $G_L$ does not have. Consequently, a new index is defined in Section 1.4.2.
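A truncated sample version of the Legendre index can be sketched from the description above. The code is an illustration under assumptions, not Friedman's implementation: the weights $(2j+1)(2k+1)/4$ follow from the orthogonality of the Legendre polynomials on $[-1,1]$, while the truncation rule $j + k \le$ `order`, the default `order=4`, the simulated inputs, and the function names are choices made here for the example.

```python
import math
import numpy as np

def legendre_polys(r, order):
    """Evaluate P_0, ..., P_order at r using the three-term recurrence."""
    polys = [np.ones_like(r), r]
    for j in range(1, order):
        polys.append(((2 * j + 1) * r * polys[j] - j * polys[j - 1]) / (j + 1))
    return polys[: order + 1]

def legendre_index(z1, z2, order=4):
    """Truncated sample estimate of the integral of [p(R1, R2) - 1/4]^2."""
    erf = np.vectorize(math.erf)
    r1 = erf(z1 / math.sqrt(2.0))   # equals 2 * Phi(z1) - 1
    r2 = erf(z2 / math.sqrt(2.0))   # equals 2 * Phi(z2) - 1
    p1 = legendre_polys(r1, order)
    p2 = legendre_polys(r2, order)
    total = 0.0
    for j in range(order + 1):
        for k in range(order + 1 - j):          # keep terms with j + k <= order
            if j == 0 and k == 0:
                continue                         # constant term is the uniform density
            moment = np.mean(p1[j] * p2[k])      # sample mean replaces expectation
            total += (2 * j + 1) * (2 * k + 1) / 4.0 * moment ** 2
    return total

rng = np.random.default_rng(2)
n = 2000
# Null case: both sphered projections are standard normal.
g_normal = legendre_index(rng.normal(size=n), rng.normal(size=n))
# Clustered case: first projection is a two-cluster mixture with roughly unit variance.
centers = rng.choice([-1.0, 1.0], size=n)
clustered = 0.95 * centers + 0.3 * rng.normal(size=n)
g_clustered = legendre_index(clustered, rng.normal(size=n))
```

Under these assumptions the index stays near zero for the normal sample and is clearly larger for the clustered one, in line with the remark that the index responds to structure in the center of the distribution.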

1.1.4 The Automobile Example

The automobile dataset (Friedman 1987) consists of ten variables collected on 392 car models and reported in ‘Consumer Reports’ from 1972 to 1982:

    Y1  : gallons per mile (fuel inefficiency)
    Y2  : number of cylinders in engine
    Y3  : size of engine (cubic inches)
    Y4  : engine power (horse power)
    Y5  : automobile weight
    Y6  : acceleration (time from 0 to 60 mph)
    Y7  : model year
    Y8  : American (0,1)
    Y9  : European (0,1)
    Y10 : Japanese (0,1)

The second variable has 5 possible values while the last three are binary, indicating the car’s country of origin. As Friedman suggests, these variables are gaussianized, which means the discrete values are replaced by normal scores after any repeated observations are randomly ordered. The object is to ensure that their discrete marginals do not overly bias the search for structure.

All the variables are standardized to have zero mean and unit variance before the analysis. In addition, the linear combinations which define the maximizing plane $(\beta_1, \beta_2)$ are normed to length one. As a result, the coefficient for a variable in a combination represents its relative importance.

The definition of the solution plane is

$$\beta_1 = (-0.21, -0.03, -0.91, 0.16, 0.30, -0.05, -0.01, 0.03, 0.00, -0.02)^T$$

$$\beta_2 = (-0.75, -0.13, 0.43, 0.45, -0.07, 0.04, -0.15, -0.03, 0.02, -0.01)^T$$

That is, the horizontal coordinate of each observation is -0.21 times fuel inefficiency (standardized), -0.03 times the number of cylinders (gaussianized and standardized) and so on. The scatterplot of the points is shown in Figure 1.1. In the projection scatterplot, the combinations $(\beta_1, \beta_2)$ are the orthogonal horizontal and vertical axes and each observation is plotted as $(\beta_1^T Y, \beta_2^T Y)$.
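The gaussianizing step applied to the discrete variables in this example can be sketched as follows. This is an illustrative assumption about the details rather than the procedure used in the original analysis: the plotting position (rank - 0.5)/n, the seed handling, the function name `gaussianize`, and the sample cylinder counts are all hypothetical.

```python
import random
from statistics import NormalDist

def gaussianize(values, seed=0):
    """Replace values by normal scores, ordering repeated values at random."""
    rng = random.Random(seed)
    n = len(values)
    # Sort by value; the random second key orders tied observations randomly.
    order = sorted(range(n), key=lambda i: (values[i], rng.random()))
    std_normal = NormalDist()
    scores = [0.0] * n
    for rank, i in enumerate(order, start=1):
        # Assumed plotting position; other conventions exist.
        scores[i] = std_normal.inv_cdf((rank - 0.5) / n)
    return scores

# Hypothetical cylinder counts for ten cars.
cylinders = [4, 4, 6, 8, 4, 6, 8, 8, 3, 5]
scores = gaussianize(cylinders)
```

Every observation receives a distinct score, so the discrete marginal is spread over the normal scale, while cars with more cylinders still receive larger scores than cars with fewer.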

These

1. Interpretable

Exploratory

Projection

Page 12

Pursuit

2

.

.

..

..

. * *.*.

.

.I .I .* :* .

: .

’c

. 7. ‘*.y

- .,* #.:.* .,.

,’ -: *

*‘>..i\..*

. . .*..

r.*,$s?:.. :..: .

**. : :$ ,I’

. . ., . - “.:“.

. ., :

. .

-1

8

I

I

I

I

I

I

I

Most structured projection scatterplot

according to the Legendre index.

vectors are orthogonal

1

I

I

I

1

0

-1

Fig. 1.1

.

1

of the automobile

in the covariance metric due to the constraint

ever, in the usual reference system, the orthogonal

data

[l.S].

How-

axes correspond to the vari-

ables. For example, the x axis is Yl, the y axis is Y2, the z axis is Y3, and so

on. The combinations

are not orthogonal in this reference frame. This departure

from common graphic convention

is discussed further

The first step is to look at the structure

of the projected

case, the points are clustered along the vertical

out to the upper right corner with a few outliers

cern is whether

fluctuation.

this structure

actually

in Section 1.4.3.

In this

axis at low values and straggle

to the left.

The obvious con-

exists in the data or is due to sampling

The value of the index GL for this particular

question is whether

points.

this value is significant.

view is 0.35 and the

Friedman (1987) approximates

the

answer to this question by generating values of the index for the same number

Page 13

1.1 The Original Exploratory Projection Pursuit Technique

of observations

Comparison

and dimensions

(n and p) under the null hypothesis

of the observed value with

of normality.

these generated ones gives an idea of

how unusual the former is. Sun (1989) d e1ves deeper into the problem,

an analytical

significance

approximation

level. The structure

Given the clustering

attempt

to interpret

Though by projecting

tion pursuit

for a critical

providing

value given the data size and chosen

found in this example is significant.

exhibited

along the vertical

the linear combinations

axis, the second stey)is to

which define the projection

the data from ten to two dimensions,

plane.

exploratory

projec-

has reduced the visual dimension of the problem, the linear projec-

tions must still be interpreted

in the original number of variables.

The structure

is represented by a set of points in two dimensions but understanding

points represent in terms of the original

data requires considering

what these

all ten vari-

ables .

An infinite number of pairs of vectors $(\beta_1, \beta_2)$ exist which satisfy the constraints [1.6] and define the most structured plane. In other words, the two vectors can be spun around the origin rigidly in the plane via an orthogonal rotation and they still satisfy the constraints and maintain the structure found. The orientation of the scatterplot is inconsequential to its visual representation of the structure.

These facts lead to the principle that a plane should be defined in the simplest or most interpretable way possible. Given that the data is standardized and the linear combinations have length one, the coefficients represent the individual contribution of each variable to the combination. Friedman (1987) attempts to find the simplest representation of the plane by spinning the vectors until the variance of the squared coefficients of the vertical coordinate is maximized. This action forces the coefficients of the second combination $\beta_2$ to differ as much as possible. As a consequence, variables are forced out of the combination and the vector has fewer variables in it. Such a more interpretable and parsimonious vector is easier to understand, compare, remember and explain.


The goal of the present work is to expand this approach. A precise definition of interpretability is discussed in Section 1.3. Criteria which involve both combinations are considered. More importantly, not only is rotation of the vectors in the plane allowed but also the solution plane may be rocked in $p$ dimensions slightly away from the most structured plane. The resulting loss in structure is acceptable only if the gain in interpretability is deemed sufficient.

1.2 The Interpretable Exploratory Projection Pursuit Approach

As shown in the preceding example, exploratory projection pursuit produces the scatterplot $(\beta_1^T Y, \beta_2^T Y)$, the value of the index $G_L(\beta_1^T Y, \beta_2^T Y)$, and the linear combinations $(\beta_1, \beta_2)$. The scatterplot's nonlinear structure may be visually assessed but attaching much meaning to the actual numerical value of the index is difficult. As remarked earlier, the linear combinations must still be interpreted in terms of the original number of variables. In fact, a mental attempt to understand these vectors may involve 'rounding them by eye' and dropping variables which have 'too small' coefficients.

The question to be considered is whether the linear combinations can be made more interpretable without losing too much observed structure in the projection. The idea of the present modification is to trade some of the structure found in the scatterplot in return for more comprehensibility or parsimony in $(\beta_1, \beta_2)$.

1.2.1 A Combinatorial Strategy

If initially interpretability is linked with parsimony, a combinatorial method of variable selection might be considered. The analogous approach in linear regression is all subsets regression. A similar idea, principal variables, has been applied to principal components (Krzanowski 1987, McCabe 1984). This method restricts the linear transformation matrix to a specific form, for example each row has two ones and all other entries zero. The result is a variable selection method with each component formed from two of the original variables.

Both variable selection methods, all subsets and principal variables, are discrete in nature and only consider whether a variable is in or out of the model. Applying a combinatorial strategy to exploratory projection pursuit results in the following number of solutions, each of which consists of a pair of variable subsets. Given $p$ variables and the fact that each variable is either in or out of a combination, $2^p$ possible single subsets of variables exist. The combinations are symmetric, meaning that the $(\beta_1, \beta_2)$ plane is the same as the $(\beta_2, \beta_1)$ plane. Thus, the number of pairs of subsets with unequal members is $\binom{2^p}{2}$. However, this count does not include the $2^p$ pairs with both subsets identical, which are permissible solutions. Together these counts include $2^p + p$ degenerate pairs in which one subset is empty, or both subsets consist of the same single variable. These degenerate pairs do not define planes. The total number of solutions is

$$\binom{2^p}{2} + 2^p - 2^p - p = 2^{2p-1} - 2^{p-1} - p .$$

Some planes counted may not be permissible due to the correlation constraint as discussed in Section 1.4.3, so the actual count may be slightly less. However, the principal term remains of the order of $2^{2p-1}$. The number of subsets grows exponentially with $p$ and for each subset the optimization must be completely redone. Unlike all subsets regression, no smart search methods exist for eliminating poor contenders which do not produce interesting projections. Due to the time required to produce a single exploratory projection pursuit solution, this combinatorial approach is not feasible.
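The count above can be checked by brute force for small $p$. The following is a minimal sketch, not part of the original method: the function names `count_candidate_planes` and `closed_form` are hypothetical, and the enumeration simply applies the rules stated in the text (unordered pairs, identical pairs allowed, pairs with an empty subset or the same single variable excluded).

```python
from itertools import combinations


def count_candidate_planes(p):
    """Count unordered pairs of variable subsets that can define a plane."""
    subsets = [frozenset(c) for r in range(p + 1)
               for c in combinations(range(p), r)]
    count = 0
    for i, first in enumerate(subsets):
        # subsets[i:] gives unordered pairs and includes identical pairs
        for second in subsets[i:]:
            if not first or not second:
                continue  # an empty subset defines no direction
            if first == second and len(first) == 1:
                continue  # the same single variable twice is not a plane
            count += 1
    return count


def closed_form(p):
    """Closed-form count 2^(2p-1) - 2^(p-1) - p from the text."""
    return 2 ** (2 * p - 1) - 2 ** (p - 1) - p


for p in range(1, 6):
    print(p, count_candidate_planes(p), closed_form(p))
```

For the ten-variable example, `closed_form(10)` gives 523766 candidate pairs, each of which would require a full optimization.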

1.2.2 A Numerical Optimization Strategy

Given that a numerical optimization is already being done, an approach is to consider whether a variable selection or interpretability criterion can be included in the optimization. The objective function used is

$$\max_{\beta_1, \beta_2} \; (1 - \lambda)\, \frac{G(\beta_1^T Y, \beta_2^T Y)}{\max G} + \lambda\, S(\beta_1, \beta_2) \qquad [1.9]$$

for $\lambda \in [0, 1]$. This function is the weighted sum of the projection index $G$ contribution and an interpretability index $S$ contribution, indexed by the interpretability or simplicity parameter $\lambda$. The interpretability index $S$ is defined to have values $\in [0, 1]$. Analogously, the value of the projection index is divided by its maximum possible value in order that its contribution is also $\in [0, 1]$.

The interpretable exploratory projection pursuit algorithm is applied in an iterative manner. First, find the best plane using the original exploratory projection pursuit algorithm. Set $\max G$ equal to the index value for this most structured plane. For a succession of values $(\lambda_1, \lambda_2, \ldots, \lambda_I)$, such as $(0.1, 0.2, \ldots, 1.0)$, solve [1.9] with $\lambda = \lambda_i$. In each case, use the previous $\lambda_{i-1}$ solution as a starting point.

One way to envision the procedure is to imagine beginning at the most structured solution and being equipped with an interpretability dial. As the dial is turned, the value of $\lambda$ increases as the weight on simplicity, or the cost of complexity, is increased. The plane rocks smoothly away from the initial solution. If the projection index is relatively flat near the maximum (Friedman 1987), the loss in structure is gradual. When to stop turning the dial is discussed in Section 1.5.3.

The additive functional form choice for the objective function [1.9] is made by drawing a parallel with roughness penalty methods for curve-fitting (Silverman 1984). In that context, the problem is a minimization. The first term is a goodness-of-fit criterion such as the squared distance between the observed and fitted values, and the second is a measure of the roughness of the curve such as the integrated square of the curve's second derivative. As the fit improves, the curve becomes more rough. The negative of roughness, or smoothness, is comparable to interpretability.
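The dial procedure can be illustrated with a deliberately small toy problem. The sketch below is an illustration under stated assumptions, not the dissertation's algorithm: a single direction $w = (\cos t, \sin t)$ in two dimensions replaces the plane, `structure` is a made-up projection index with its maximum at a hypothetical angle `T0`, `simplicity` is the varimax index for $p = 2$, and `local_maximize` is a simple hill climb standing in for the real optimizer. The only feature taken from the text is the outer loop: a weighted objective solved for increasing $\lambda$, each solve warm-started at the previous solution.

```python
import math

T0 = 0.6  # hypothetical angle of the most structured direction


def structure(t):
    """Toy projection index G: smooth and flat near its maximum at T0."""
    return math.exp(-(t - T0) ** 2)


def simplicity(t):
    """Varimax index of w = (cos t, sin t); for p = 2 it equals cos^2(2t)."""
    return math.cos(2.0 * t) ** 2


def local_maximize(f, start, step=0.1, tol=1e-9):
    """Derivative-free hill climb, standing in for the real optimizer."""
    t = start
    while step > tol:
        if f(t + step) > f(t):
            t += step
        elif f(t - step) > f(t):
            t -= step
        else:
            step /= 2.0
    return t


def interpretability_path(lambdas):
    """Solve the weighted objective for each lambda, warm-starting each solve."""
    max_g = structure(T0)  # index value of the most structured solution
    t = T0
    path = []
    for lam in lambdas:
        def objective(s, lam=lam):
            return (1.0 - lam) * structure(s) / max_g + lam * simplicity(s)
        t = local_maximize(objective, t)
        path.append((lam, t, structure(t), simplicity(t)))
    return path


path = interpretability_path([i / 10 for i in range(11)])
for lam, t, g, s in path:
    print(f"lambda={lam:.1f}  angle={t:.3f}  G={g:.3f}  S={s:.3f}")
```

In this toy setting the direction starts at the most structured angle and moves toward a coordinate axis as the weight on simplicity grows, giving up structure in exchange.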

An alternate idea is to think of solving a series of constrained maximization subproblems

$$\max_{\beta_1, \beta_2} G(\beta_1^T Y, \beta_2^T Y) \quad \text{subject to} \quad S(\beta_1, \beta_2) \ge c_i \qquad [1.10]$$

for values of $c_i$ such as $(0.0, 0.1, \ldots, 1.0)$. This problem may be rewritten as an unconstrained maximization using the method of Lagrangian multipliers. Reinsch (1967) describes the relationship between [1.9] and [1.10] and consequently the relationship between $c_i$ and $\lambda_i$.

The computational savings for this numerical method versus a combinatorial one can be substantial. The inner loop, namely finding an exploratory projection pursuit solution, is the same for either. The outer loop, however, is reduced from [1.8] to $I$. The amount of work required by the functional approach [1.9] is linear in $I$.

1.3 The Interpretability Index

The interpretability index $S$ measures the simplicity of the pair of vectors $(\beta_1, \beta_2)$. It has a minimum value of zero at the least simple pair and a maximum value of one at the most simple. Like the projection index $G$, it needs to be differentiable and fast to compute.

1.3.1 Factor Analysis Background

For two dimensional exploratory projection pursuit, the object is to simplify a $2 \times p$ matrix. Consider a general $q \times p$ matrix $\Omega$ with entries $\omega_{ij}$, corresponding to $q$ dimensional exploratory projection pursuit. What characteristics does an interpretable matrix have? When is one matrix more simple than another? Researchers have


considered such questions with respect to factor loading matrices. The goal in factor analysis is to explain the correlation structure in the variables via the factor model. The solution is not unique and the factor matrix often is rotated to make it more interpretable. Comments are made regarding the geometric difference between this rotation and the interpretable rocking of the most structured plane in Section 1.4.4. Though the two situations are different, the philosophical goal is the same and so factor analysis rotation research is used as a starting point in the development of a simplicity index $S$.

Intuitively, the interpretability of a matrix may be thought of in two ways. 'Local' interpretability measures how simple a combination or row is individually. In a general and discrete sense, the more zeros a vector has, the more interpretable it is as fewer variables are involved. 'Global' interpretability measures how simple a collection of vectors is. Given that the vectors are defining a plane and should not collapse on each other, a simple set of vectors is one in which each row clearly contains its own small set of variables and has zeros elsewhere.

Thurstone (1935) advocated 'simple structure' in the factor loading matrix, defined by a list of desirable properties which were discrete in nature. For example, each combination (row) should have at least one zero and for each pair of rows, only a few columns should have nonzero entries in both rows. In summary, his requirements correspond to a matrix which involves, or has nonzero entries for, only a subset of variables (columns). Combinations should not overlap too much or have nonzero coefficients for the same variables and those that do should clearly divide into subgroups.

These discrete notions of interpretability must be translated into a continuous measure which is tractable for computer optimization. Local simplicity for a single vector is discussed first and the results are extended to a set of two vectors.

1.3.2 The Varimax Index For a Single Vector

Consider a single vector $w = (w_1, w_2, \ldots, w_p)^T$. In a discrete sense, interpretability translates into as few variables in the combination or as many zero entries as possible. The goal is to smooth the discrete count interpretability index

$$D(w) \equiv \sum_{i=1}^{p} I\{w_i = 0\} \qquad [1.11]$$

where $I\{\cdot\}$ is the indicator function.

Since the exploratory projection pursuit linear combinations represent directions and are usually normed as a final step, the index should involve the normed coefficients. In addition, in order to smooth the notion that a variable is in or out of the vector, the general unevenness of the coefficients should be measured. The sign of the coefficients is inconsequential. In conclusion, the index should measure the relative mass of the coefficients irrespective of sign and thus should involve the normed squared quantities

$$\frac{w_i^2}{w^T w}, \qquad i = 1, \ldots, p .$$

In the 1950's, several factor analysis researchers arrived separately at the same criterion (Gorsuch 1983, Harman 1976) which is known as 'varimax' and is the variance of the normed squared coefficients. This is the criterion which Friedman (1987) used as discussed in Section 1.1.3. The corresponding interpretability index is denoted by $S_v$ and is defined as

$$S_v(w) \equiv \frac{p}{p-1} \sum_{i=1}^{p} \left( \frac{w_i^2}{w^T w} - \frac{1}{p} \right)^2 . \qquad [1.12]$$

The leading constant is added to make the index value be $\in [0, 1]$. Fig. 1.2 shows the value of the varimax index for a linear combination $w$ in two dimensions ($p = 2$) versus the angle of the direction $\arctan(w_2/w_1)$ of the linear combination $w$.

[Figure 1.2: plot of the index value versus the angle of the vector in radians]

Fig. 1.2 Varimax interpretability index for $q = 1$, $p = 2$. The value of the index for a linear combination $w$ in two dimensions is plotted versus the angle of the direction in radians over the range $[0, \pi]$.

Fig. 1.3 shows the varimax index for vectors $w$ of length one in three dimensions ($p = 3$). Only vectors with all positive components are plotted due to symmetry. Fig. 1.3 shows the value of the index as the vertical coordinate versus the values of $(w_1, w_2)$. The value of $w_3$ is known and does not need to be graphed. Fig. 1.4 shows contours for the surface in Fig. 1.3. These contours are just the curves of points $w$ which satisfy the equation formed when the left side of [1.12] is set equal to a constant value. As the value of the interpretability index is increased, the contours move away from $(\frac{1}{\sqrt{3}}, \frac{1}{\sqrt{3}}, \frac{1}{\sqrt{3}})$ toward the three points $e_1 = (1, 0, 0)$, $e_2 = (0, 1, 0)$ and $e_3 = (0, 0, 1)$. The centerpoint is the contour for $S_v(w) = 0.0$ and the next three joined curves are contours for $S_v(w) = 0.01, 0.05, 0.2$. The next three sets of lines going outward toward the $e_i$'s are contours corresponding to $S_v(w) = 0.3, 0.6, 0.8$.

[Figure 1.3: surface plot]

Fig. 1.3 Varimax interpretability index for $q = 1$, $p = 3$. The surface of the index is plotted as the vertical coordinate versus the first two coordinates $(w_1, w_2)$ of vectors of length one in the first quadrant.

[Figure 1.4: contour plot]

Fig. 1.4 Varimax interpretability index contours for $q = 1$, $p = 3$. The axes are the components $(w_1, w_2, w_3)$. The contours, from the center point outward, are those points which have varimax values $S_v(w) = (0.0, 0.01, 0.05, 0.2, 0.3, 0.6, 0.8)$.

Since $w$ is normed, the varimax criterion $S_v$ is equivalent to the 'quartimax' criterion, which derives its name from the fact it involves the sum of the fourth powers of the coefficients. $S_v$ is also the coefficient of variation squared of the squared vector components.
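The single-vector varimax index and its quartimax form can be computed directly. The following is a minimal sketch; the function names are hypothetical, and the quartimax expression is obtained by expanding the square in [1.12] and using the fact that the normed squared coefficients sum to one, which gives $S_v(w) = (p \sum_i v_i^2 - 1)/(p - 1)$ with $v_i = w_i^2 / w^T w$.

```python
import math


def normed_squares(w):
    """Return the normed squared coefficients w_i^2 / (w^T w)."""
    total = sum(x * x for x in w)
    return [x * x / total for x in w]


def varimax_index(w):
    """Single-vector varimax index: scaled variance of the normed squares."""
    p = len(w)
    v = normed_squares(w)
    return p / (p - 1) * sum((vi - 1.0 / p) ** 2 for vi in v)


def quartimax_form(w):
    """Same index written through the sum of fourth powers of the normed w."""
    p = len(w)
    v = normed_squares(w)
    return (p * sum(vi * vi for vi in v) - 1.0) / (p - 1)


print(varimax_index([0.0, 3.0, 0.0]))    # one variable only: most simple
print(varimax_index([1.0, -1.0, 1.0]))   # equal weights: least simple
print(varimax_index([0.8, 0.1, -0.2]), quartimax_form([0.8, 0.1, -0.2]))
```

The sign and overall scale of `w` do not affect the value, since only the normed squared coefficients enter.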

1.3.3 The Entropy Index For a Single Vector

The vector of normed squared coefficients has entries which sum to one and are all nonnegative, similar to a multinomial probability vector. The negative entropy of a set of probabilities measures how nonuniform the distribution is (Renyi 1961). If a vector is more simple, the more uneven or distinguishable its entries are from one another. Thus a second possible interpretability index is the negative entropy of the normed squared coefficients or

$$S_e(w) \equiv 1 + \frac{1}{\ln p} \sum_{i=1}^{p} \frac{w_i^2}{w^T w} \ln \frac{w_i^2}{w^T w} .$$

The usual entropy measure is slightly altered to have values $\in [0, 1]$. The two simplicity measures $S_v$ and $S_e$ share four common properties.

Property 1. Both are maximized when

$$w = \pm e_j, \qquad j = 1, \ldots, p$$

where the $e_j$, $j = 1, \ldots, p$ are the unit axis vectors. Thus, the maximum value occurs when only one variable is in the combination.

Property 2. Both are minimized when

$$\frac{w_i^2}{w^T w} = \frac{1}{p}, \qquad i = 1, \ldots, p$$

or when the projection is an equally weighted average. The argument could be made that an equally weighted average is in fact simple. However, in terms of deciding which variable most clearly affects the projection, it is the most difficult to interpret.

Property 3. Both are symmetric in the coefficients $w_i$. No variable counts more than any other.

Property 4. Both are strictly Schur-convex as defined below. The following explanation follows Marshall and Olkin (1979).

Definition. Let $\zeta = (\zeta_1, \zeta_2, \ldots, \zeta_p)$ and $\gamma = (\gamma_1, \gamma_2, \ldots, \gamma_p)$ be any two vectors $\in R^p$. Let $\zeta_{[1]} \ge \zeta_{[2]} \ge \cdots \ge \zeta_{[p]}$ and $\gamma_{[1]} \ge \gamma_{[2]} \ge \cdots \ge \gamma_{[p]}$ denote their components in decreasing order. The vector $\zeta$ majorizes $\gamma$ ($\zeta \succ \gamma$) if

$$\sum_{i=1}^{k} \zeta_{[i]} \ge \sum_{i=1}^{k} \gamma_{[i]}, \quad k = 1, \ldots, p-1, \qquad \sum_{i=1}^{p} \zeta_{[i]} = \sum_{i=1}^{p} \gamma_{[i]} .$$

The above definition holds if and only if $\gamma = \zeta P$ where $P$ is a doubly stochastic matrix, that is $P$ has nonnegative entries, and column and row sums of one. In other words, if $\gamma$ is a smoothed or averaged version of $\zeta$, it is majorized by $\zeta$. An example of a set of majorizing vectors is

$$(1, 0, \ldots, 0) \succ (\tfrac{1}{2}, \tfrac{1}{2}, 0, \ldots, 0) \succ \cdots \succ (\tfrac{1}{p-1}, \ldots, \tfrac{1}{p-1}, 0) \succ (\tfrac{1}{p}, \ldots, \tfrac{1}{p}) .$$

Definition. A function $f : R^p \mapsto R$ is strictly Schur-convex if

$$\zeta \succ \gamma \implies f(\zeta) \ge f(\gamma)$$

with strict inequality if $\gamma$ is not a permutation of $\zeta$.

This type of convexity is an extension of the usual idea of Jensen's Inequality. Basically, if a vector $\zeta$ is more spread out or uneven than $\gamma$, then $S(\zeta) > S(\gamma)$. This intuitive idea of interpretability now has an explicit mathematical meaning. The two indices $S_v$ and $S_e$ rank all majorizable vectors in the same order. Using the theory of Schur-convexity, a general class of simplicity indices could be defined.
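The ordering property can be seen numerically on the majorization chain given above. The sketch below is illustrative only; `majorizes`, `entropy_index` and `varimax_index` are hypothetical helper names, the convention $0 \ln 0 = 0$ is assumed for zero coefficients, and the chain is written for $p = 4$.

```python
import math


def normed_squares(w):
    """Return the normed squared coefficients w_i^2 / (w^T w)."""
    total = sum(x * x for x in w)
    return [x * x / total for x in w]


def varimax_index(w):
    p = len(w)
    return p / (p - 1) * sum((vi - 1.0 / p) ** 2 for vi in normed_squares(w))


def entropy_index(w):
    """Negative entropy of the normed squares, rescaled to [0, 1]."""
    p = len(w)
    v = normed_squares(w)
    # Terms with v_i = 0 are skipped, using the convention 0 * ln(0) = 0.
    return 1.0 + sum(vi * math.log(vi) for vi in v if vi > 0.0) / math.log(p)


def majorizes(zeta, gamma, tol=1e-12):
    """Check the partial-sum conditions for zeta majorizing gamma."""
    a = sorted(zeta, reverse=True)
    b = sorted(gamma, reverse=True)
    if abs(sum(a) - sum(b)) > tol:
        return False
    partial_a = partial_b = 0.0
    for x, y in zip(a[:-1], b[:-1]):
        partial_a += x
        partial_b += y
        if partial_a < partial_b - tol:
            return False
    return True


# Majorization chain of normed squared vectors for p = 4.
chain = [
    [1.0, 0.0, 0.0, 0.0],
    [1 / 2, 1 / 2, 0.0, 0.0],
    [1 / 3, 1 / 3, 1 / 3, 0.0],
    [1 / 4, 1 / 4, 1 / 4, 1 / 4],
]
for v in chain:
    w = [math.sqrt(x) for x in v]  # a coefficient vector with these normed squares
    print(v, round(varimax_index(w), 4), round(entropy_index(w), 4))
```

Both indices decrease strictly along the chain, from one at the single-variable vector to zero at the equally weighted vector.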

1.3.4 The Distance Index For a Single Vector

Besides the variance interpretation, $S_v$ measures the squared distance from the normed squared vector to the point $(\frac{1}{p}, \frac{1}{p}, \ldots, \frac{1}{p})$, which might thus be called the least simple or most complex point. Let the notation for the Euclidean norm of any vector $\delta$ be

$$\|\delta\| \equiv \left( \sum_{i=1}^{p} \delta_i^2 \right)^{1/2} .$$

If $\nu_w$ is defined to be the squared and normed version of $w$,

$$\nu_w \equiv \left( \frac{w_1^2}{w^T w}, \frac{w_2^2}{w^T w}, \ldots, \frac{w_p^2}{w^T w} \right),$$

and $\nu_c$ is the most complex point, then

$$S_v(w) = \frac{p}{p-1} \|\nu_w - \nu_c\|^2 . \qquad [1.13]$$

This index can be generalized. In contrast to having one most complex point, an alternate index can be defined by considering a set $V = \{\nu_1, \ldots, \nu_J\}$ of $J$ simple points. This set must have the properties

$$\nu_{ji} \ge 0, \qquad i = 1, \ldots, p, \quad j = 1, \ldots, J$$
$$\sum_{i=1}^{p} \nu_{ji} = 1, \qquad j = 1, \ldots, J . \qquad [1.14]$$

The $\nu_j$'s are the squares of vectors on the unit sphere in $R^p$, as are $\nu_w$ and $\nu_c$. An example is $V = \{e_j : j = 1, \ldots, p\}$, with $J = p$. In the event that this index is used with exploratory projection pursuit, the statistician could define her own set of simple points rather than be restricted to the choice of $\nu_c$ used in $S_v$.

This distance would be large when $w$ is not simple, so the interpretability index should involve the negative of it. An example is

$$S_d(w) \equiv 1 - c \min_{j = 1, \ldots, J} \|\nu_w - \nu_j\|^2 . \qquad [1.15]$$

The constant $c$ is calculated so that the values are $\in [0, 1]$. Any distance norm can be used and an average or total distance could replace the minimum.

If $V$ is chosen to be the $e_j$'s and the minimum Euclidean norm is used, the distance index becomes

$$S_d'(w) \equiv 1 - \frac{p}{p-1} \left[ \sum_{i=1}^{p} \left( \frac{w_i^2}{w^T w} \right)^2 - \frac{2 w_k^2}{w^T w} + 1 \right]$$

where $k$ corresponds to the maximum $|w_i|$, $i = 1, \ldots, p$. The minimization does not need to be done at each step, though the largest absolute coefficient in the vector must be found. Analogous results are obtainable for similar choices of $V$ such as all permutations of two $\frac{1}{2}$ entries and $p - 2$ zeros $((\frac{1}{2}, \frac{1}{2}, 0, \ldots, 0), (\frac{1}{2}, 0, \frac{1}{2}, 0, \ldots, 0), \ldots)$ corresponding to simple solutions of two variables each.

Since the $e_j$'s maximize $S_v$ and are the simple points associated with $S_d'$, the relationship between the two indices proves interesting. Algebra reveals

$$S_d'(w) = -S_v(w) + \frac{2}{p-1} \left( \frac{p\, w_k^2}{w^T w} - 1 \right) .$$

The minimum of the second term occurs when

$$\frac{w_k^2}{w^T w} = \frac{1}{p}$$

or all coefficients are equal and $S_d'(w) = S_v(w) = 0$. The maximum occurs when

$$\frac{w_k^2}{w^T w} = 1$$

and $S_d'(w) = S_v(w) = 1$. The relationship between the interim values varies.

The difficulty with the distance index $S_d'$ is that its derivatives are not smooth and present problems when a derivative-based optimization procedure is used. Given that the entropy index $S_e$ and the varimax index $S_v$ share common properties and the latter is easier to deal with computationally, the varimax approach is generalized to two dimensions.
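The algebraic relationship between the distance index and the varimax index can be verified numerically. The sketch below assumes the simple points are the unit axis vectors and that squared Euclidean distance with norming constant $p/(p-1)$ is used, as in the specialized index above; the function names are hypothetical.

```python
def normed_squares(w):
    """Return the normed squared coefficients w_i^2 / (w^T w)."""
    total = sum(x * x for x in w)
    return [x * x / total for x in w]


def varimax_index(w):
    p = len(w)
    return p / (p - 1) * sum((vi - 1.0 / p) ** 2 for vi in normed_squares(w))


def distance_index(w):
    """One minus the scaled squared distance to the nearest unit axis vector."""
    p = len(w)
    v = normed_squares(w)
    nearest = min(
        sum((vi - (1.0 if i == j else 0.0)) ** 2 for i, vi in enumerate(v))
        for j in range(p)
    )
    return 1.0 - p / (p - 1) * nearest


def distance_index_via_varimax(w):
    """Same index through -S_v plus the term in the largest normed square."""
    p = len(w)
    largest = max(normed_squares(w))
    return -varimax_index(w) + 2.0 / (p - 1) * (p * largest - 1.0)


for w in ([0.9, 0.3, -0.1, 0.2], [1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 2.0, 0.0]):
    print(w, distance_index(w), distance_index_via_varimax(w))
```

The explicit minimization over the axis vectors and the closed form through the largest coefficient agree, which is why the minimization need not be carried out at each step.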

1.3.5 The Varimax Index For Two Vectors

The varimax index $S_v$ can be extended to measure the simplicity of a set of $q$ vectors $w_j = (w_{j1}, w_{j2}, \ldots, w_{jp})$, $j = 1, \ldots, q$. In the following, the varimax index for one combination [1.12] is called $S_1$. In order to force orthogonality between the squared normed vectors, the variance is taken across the vectors and summed over the variables to produce

[1.16]

If the sums were reversed and the variance was taken across the variables and summed over the vectors, the unnormed index would equal

$$S_1(w_1) + S_1(w_2) + \cdots + S_1(w_q) \qquad [1.17]$$

with each element the one dimensional simplicity [1.12] of the corresponding vector. The previous approach [1.16] results in a cross-product term.

For two dimensional exploratory projection pursuit, $q = 2$ and the index, with appropriate norming, reduces to

$$S_v(w_1, w_2) = \frac{1}{2p} \left[ (p-1) S_1(w_1) + (p-1) S_1(w_2) + 2 \right] - \sum_{i=1}^{p} \frac{w_{1i}^2}{w_1^T w_1} \frac{w_{2i}^2}{w_2^T w_2} . \qquad [1.18]$$

The first term measures the local simplicities of the vectors while the second is a cross-product term measuring the orthogonality of the two normed squared vectors. This cross-product forces the vectors to 'squared orthogonality', so that different groups of variables appear in each vector. $S_v$ is maximized when the normed squared versions of $w_1$ and $w_2$ are $e_k$ and $e_l$, $k \ne l$ and minimized when both are equal to $(\frac{1}{p}, \frac{1}{p}, \ldots, \frac{1}{p})$.
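A short numerical check of the two-vector index may help. The sketch below uses the form [1.18] as reconstructed here, with hypothetical function names, and compares it with half the summed squared difference of the two normed squared vectors, which is what the variance across $q = 2$ vectors summed over the variables works out to.

```python
def normed_squares(w):
    """Return the normed squared coefficients w_i^2 / (w^T w)."""
    total = sum(x * x for x in w)
    return [x * x / total for x in w]


def varimax_index(w):
    """Single-vector varimax index S_1."""
    p = len(w)
    return p / (p - 1) * sum((vi - 1.0 / p) ** 2 for vi in normed_squares(w))


def varimax_pair(w1, w2):
    """Two-vector varimax index: local simplicities minus a cross-product."""
    p = len(w1)
    v1, v2 = normed_squares(w1), normed_squares(w2)
    local = ((p - 1) * varimax_index(w1) + (p - 1) * varimax_index(w2) + 2.0) / (2.0 * p)
    cross = sum(a * b for a, b in zip(v1, v2))
    return local - cross


def variance_across_vectors(w1, w2):
    """Sum over variables of the variation between the two normed squares."""
    v1, v2 = normed_squares(w1), normed_squares(w2)
    return 0.5 * sum((a - b) ** 2 for a, b in zip(v1, v2))


print(varimax_pair([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]))  # different single variables
print(varimax_pair([1.0, 1.0, 1.0], [1.0, 1.0, 1.0]))  # both equally weighted
print(varimax_pair([1.0, 0.0, 0.0], [1.0, 0.0, 0.0]))  # same single variable
```

The third case shows the effect of the cross-product term: two vectors that are each as simple as possible still score zero when they use the same variable.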

1.4 The Algorithm

In this section, the algorithm used to solve the interpretable exploratory projection pursuit problem [1.9] with interpretability index $S_v$ is discussed. The general approach of the original exploratory projection pursuit algorithm is followed but two changes are required, as described in the first three subsections.

1.4.1 Rotational Invariance of the Projection Index

As discussed in Section 1.1.4, the orientation of a scatterplot is immaterial and a particular observed structure should have the same value of the projection index $G$ no matter from which direction it is viewed. An alternative way of stating this is that the projection index should be a function of the plane, not of the way the plane is represented (Jones 1983). The interpretable projection pursuit algorithm should always describe a plane in the simplest way possible as measured by $S_v$. Given these two facts, any projection index used by the algorithm should have the property of rotational invariance.

Definition. A projection index $G$ is rotationally invariant if

$$G(\beta_1^T Y, \beta_2^T Y) = G(\eta_1^T Y, \eta_2^T Y)$$

where $(\beta_1, \beta_2)$ is $(\eta_1, \eta_2)$ rotated through $\theta$ or

$$(\beta_1 \;\; \beta_2) = (\eta_1 \;\; \eta_2)\, Q$$

with $Q$ the orthogonal rotation matrix associated with the angle $\theta$ or

$$Q = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} . \qquad [1.19]$$

Rotational invariance should not be confused with affine invariance. As remarked in Section 1.1.3, the latter property is a welcome byproduct of sphering.

Friedman's Legendre index $G_L$ [1.7] is not rotationally invariant. This property is not required for his algorithm. As described in Section 1.1.4, he simplifies the axes after finding the most structured plane by maximizing the single vector varimax index $S_1$ for the second combination. He does not allow the solution to rock away from the most structured plane in order to increase interpretability.

Recall that the first step in calculating $G_L$ is to transform the marginals of the projected sphered data to a square. Under the null hypothesis, the projection is $N(0, I)$, which means the projection scatterplot looks like a disk and the orientation of the axes is immaterial. Intuitively, however, if the null hypothesis is not true, the placement of the axes affects the marginals and thus the index value.

Empirically, this lack of rotational invariance is evident. In the automobile example from Section 1.1.4, the most structured plane was found to have $G_L(\beta_1^T Y, \beta_2^T Y) = 0.35$. The projection index values for the scatterplot as the axes are spun through a series of angles are shown in Table 1.1. A new index, which seeks to maintain the computational properties and to find the same structure as $G_L$, is developed in the next subsection.

θ                      0.0    π/20   π/10   3π/20  π/5    π/4    3π/10  7π/20  2π/5   9π/20  π/2
G_L(β1ᵀY, β2ᵀY)        0.35   0.36   0.34   0.32   0.30   0.29   0.29   0.29   0.30   0.32   0.35

Table 1.1 Most structured Legendre plane index values for the automobile data. Values of the Legendre index for different orientations of the axes in the most structured plane are given.
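The distinction between a plane and its representation can be made concrete. The sketch below is an illustration under simplifying assumptions: the two vectors are taken to be orthonormal in the ordinary Euclidean sense (the constraints of the actual algorithm involve the data covariance), and the function names are hypothetical. Spinning the pair within the plane leaves the projector onto the plane unchanged, while the simplicity of the individual vectors changes.

```python
import math


def rotate_pair(b1, b2, theta):
    """Spin two vectors rigidly within the plane they span."""
    c, s = math.cos(theta), math.sin(theta)
    r1 = [c * x + s * y for x, y in zip(b1, b2)]
    r2 = [-s * x + c * y for x, y in zip(b1, b2)]
    return r1, r2


def projector(b1, b2):
    """Projection matrix onto the span of an orthonormal pair."""
    p = len(b1)
    return [[b1[i] * b1[j] + b2[i] * b2[j] for j in range(p)] for i in range(p)]


def varimax_index(w):
    """Single-vector varimax index of the normed squared coefficients."""
    p = len(w)
    total = sum(x * x for x in w)
    return p / (p - 1) * sum((x * x / total - 1.0 / p) ** 2 for x in w)


b1, b2 = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
r1, r2 = rotate_pair(b1, b2, math.pi / 4)

same_plane = all(
    abs(projector(b1, b2)[i][j] - projector(r1, r2)[i][j]) < 1e-12
    for i in range(3) for j in range(3)
)
print(same_plane)                              # the plane itself is unchanged
print(varimax_index(b2), varimax_index(r2))    # the description of it is not
```

A rotationally invariant projection index would assign both representations the same structure value, leaving the interpretability index free to choose between them.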

1.4.2 The Fourier Projection Index

The Legendre index $G_L$ is based on the Cartesian coordinates of the projected sphered data $(\alpha_1^T Z, \alpha_2^T Z)$. The index is based on knowing the distribution of these coordinates under the null hypothesis of normality. Polar coordinates are the natural alternative given that rotational invariance is desired. The distribution of these coordinates is also known under the null hypothesis. The expansion of the density via orthogonal polynomials is done in a manner similar to that of $G_L$.

The polar coordinates of a projected sphered point $(\alpha_1^T Z, \alpha_2^T Z)$ are

$$R \equiv (\alpha_1^T Z)^2 + (\alpha_2^T Z)^2, \qquad \Theta \equiv \arctan\left( \frac{\alpha_2^T Z}{\alpha_1^T Z} \right) .$$

Actually, the usual polar coordinate definition involves the radius of the point rather than its square but the notation is easier given the above definition of $R$. Under the null hypothesis that the projection is $N(0, I)$, $R$ and $\Theta$ are independent and

$$R \sim \mathrm{Exp}(\tfrac{1}{2}), \qquad \Theta \sim \mathrm{Unif}[-\pi, \pi] .$$

The proposed index is the integral of the squared distance between the density of $(R, \Theta)$ and the null hypothesis density, which is the product of the exponential and uniform densities,

$$G(\beta_1^T Y, \beta_2^T Y) \equiv \int_{-\pi}^{\pi} \int_{0}^{\infty} \left[ p_{R,\Theta}(u, v) - f_R(u) f_\Theta(v) \right]^2 du\, dv . \qquad [1.20]$$

The density $p_{R,\Theta}$ is expanded as the tensor product of two sets of orthogonal polynomials chosen specifically for their weight functions and rotational properties. The weight functions must match the densities $f_R$ and $f_\Theta$ in order to utilize the orthogonality relationships and the polynomials chosen for the $\Theta$ portion of the expansion must result in rotational invariance. By definition, $R$ is not affected by rotation. Friedman (1987) uses Legendre polynomials for both Cartesian coordinates as his two density functions are identical (Unif$[-1, 1]$), the Legendre weight function is Lebesgue measure, and his algorithm does not require rotational invariance. Hall (1989) considered Hermite polynomials and developed a one dimensional index. The following discussion combines aspects of the two authors' approaches. Throughout the discussion, $i$, $j$, and $k$ are integers.

The set of polynomials for the $R$ portion is the Laguerre polynomials which are defined on the interval $[0, \infty)$ with weight function $W(u) = e^{-u}$. The polynomials are

$$L_0(u) = 1$$
$$L_1(u) = u - 1$$
$$L_i(u) = (u - 2i + 1) L_{i-1}(u) - (i - 1)^2 L_{i-2}(u) . \qquad [1.21]$$

The associated Laguerre functions are defined as

$$l_i(u) \equiv L_i(u) e^{-\frac{1}{2} u} .$$

The orthogonality relationships between the polynomials are

$$\int_{0}^{\infty} l_i(u) l_j(u)\, du = \delta_{ij}, \qquad i, j \ge 0$$

where $\delta_{ij}$ is the Kronecker delta function. Any piecewise smooth function $f : R \mapsto R$ may be expanded in terms of these polynomials as

$$f(u) = \sum_{i=0}^{\infty} a_i l_i(u)$$

where the $a_i$ are the Laguerre coefficients

$$a_i \equiv \int_{0}^{\infty} L_i(u) e^{-\frac{1}{2} u} f(u)\, du .$$

The smoothness property of $f$ means the function has piecewise continuous first derivatives and the Fourier series converges pointwise. If a random variable $W$ has density $f$, the coefficients can be written as

$$a_i = E_f[l_i(W)] .$$
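The recurrence and the orthogonality relationship can be checked by numerical integration. The sketch below is an assumption-laden illustration: the polynomials produced by the recurrence as written have leading coefficient one, so the corresponding functions $L_i(u) e^{-u/2}$ have norm $i!$, and the code divides by $i!$ to obtain unit norm. That scaling, the function names, the truncation of the integral at 60, and the use of Simpson's rule are all choices made for this example.

```python
import math


def laguerre_polynomials(u, m):
    """Values L_0(u), ..., L_m(u) from the three-term recurrence."""
    values = [1.0, u - 1.0]
    for i in range(2, m + 1):
        values.append((u - 2 * i + 1) * values[i - 1] - (i - 1) ** 2 * values[i - 2])
    return values[: m + 1]


def laguerre_functions(u, m):
    """Laguerre functions L_i(u) exp(-u/2), scaled by 1/i! to unit norm."""
    damp = math.exp(-u / 2.0)
    polys = laguerre_polynomials(u, m)
    return [polys[i] * damp / math.factorial(i) for i in range(m + 1)]


def gram_matrix(m, upper=60.0, intervals=6000):
    """Simpson's-rule approximation of the integrals of l_i * l_j on [0, upper]."""
    h = upper / intervals
    gram = [[0.0] * (m + 1) for _ in range(m + 1)]
    for n in range(intervals + 1):
        weight = 1 if n in (0, intervals) else (4 if n % 2 else 2)
        vals = laguerre_functions(n * h, m)
        for i in range(m + 1):
            for j in range(m + 1):
                gram[i][j] += weight * vals[i] * vals[j] * h / 3.0
    return gram


gram = gram_matrix(3)
for row in gram:
    print([round(x, 6) for x in row])
```

With the scaling applied, the printed matrix is numerically the identity, which is the property the expansion of the density relies on.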

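The $\Theta$ portion of the index is expanded in terms of sines and cosines. Any piecewise smooth function $f : R \mapsto R$ may be written as

$$f(v) = \frac{a_0}{2} + \sum_{k=1}^{\infty} \left[ a_k \cos(kv) + b_k \sin(kv) \right]$$

with pointwise convergence. The orthogonality relationships between these trigonometric functions are

$$\int_{-\pi}^{\pi} \cos^2(kv)\, dv = \int_{-\pi}^{\pi} \sin^2(kv)\, dv = \pi, \qquad k \ge 1$$
$$\int_{-\pi}^{\pi} \cos(kv) \sin(jv)\, dv = 0, \qquad k \ge 0 \text{ and } j \ge 1$$
$$\int_{-\pi}^{\pi} dv = 2\pi .$$

The $a_k$ and $b_k$ are the Fourier coefficients

$$a_k \equiv \frac{1}{\pi} \int_{-\pi}^{\pi} \cos(kv) f(v)\, dv = \frac{1}{\pi} E_f[\cos(kW)]$$
$$b_k \equiv \frac{1}{\pi} \int_{-\pi}^{\pi} \sin(kv) f(v)\, dv = \frac{1}{\pi} E_f[\sin(kW)] .$$

The density $p_{R,\Theta}$ can be expanded via a tensor product of the two sets of polynomials as

$$p_{R,\Theta}(u, v) = \sum_{i=0}^{\infty} l_i(u) \left( \frac{a_{i0}}{2} + \sum_{k=1}^{\infty} \left[ a_{ik} \cos(kv) + b_{ik} \sin(kv) \right] \right) .$$

The $a_{ik}$ and $b_{ik}$ are the coefficients defined as

$$a_{ik} \equiv \frac{1}{\pi} E_p[l_i(R) \cos(k\Theta)], \qquad i, k \ge 0$$
$$b_{ik} \equiv \frac{1}{\pi} E_p[l_i(R) \sin(k\Theta)], \qquad i \ge 0 \text{ and } k \ge 1 .$$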

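The null distribution, which is the product of the exponential density and the uniform density over $[-\pi, \pi]$ is

$$f_R(u) f_\Theta(v) = \left( \frac{1}{2} e^{-\frac{1}{2} u} \right) \left( \frac{1}{2\pi} \right) = \frac{1}{4\pi} l_0(u) .$$

The index [1.20] becomes

$$G(\beta_1^T Y, \beta_2^T Y) = \int_{-\pi}^{\pi} \int_{0}^{\infty} \left[ \sum_{i=0}^{\infty} l_i(u) \left( \frac{a_{i0}}{2} + \sum_{k=1}^{\infty} \left[ a_{ik} \cos(kv) + b_{ik} \sin(kv) \right] \right) - \frac{1}{4\pi} l_0(u) \right]^2 du\, dv .$$

The further condition that $p_{R,\Theta}$ is square-integrable and subsequent multiplication, integration, and use of the orthogonality relationships show that the index $G(\beta_1^T Y, \beta_2^T Y)$ equals

$$(2\pi) \sum_{i=0}^{\infty} \frac{a_{i0}^2}{4} + \pi \sum_{i=0}^{\infty} \sum_{k=1}^{\infty} (a_{ik}^2 + b_{ik}^2) - 2 (2\pi) \left( \frac{a_{00}}{2} \right) \left( \frac{1}{4\pi} \right) + (2\pi) \left( \frac{1}{4\pi} \right)^2 . \qquad [1.22]$$

Maximizing [1.22] is equivalent to maximizing the Fourier index defined as

$$G_F(\beta_1^T Y, \beta_2^T Y) \equiv \pi\, G(\beta_1^T Y, \beta_2^T Y) - \frac{1}{8} .$$

The definitions of the coefficients in $G_F$ yield

$$G_F(\beta_1^T Y, \beta_2^T Y) = \frac{1}{2} \sum_{i=0}^{\infty} E_p^2[l_i(R)] + \sum_{i=0}^{\infty} \sum_{k=1}^{\infty} \left( E_p^2[l_i(R) \cos(k\Theta)] + E_p^2[l_i(R) \sin(k\Theta)] \right) - \frac{1}{2} E_p[l_0(R)] . \qquad [1.23]$$

This index closely resembles the form of $G_L$ in Friedman (1987). The extra $i = 0$ term in the first sum and the last subtracted term appear since the weight function is the exponential instead of Lebesgue measure as it is for Legendre polynomials. In application, each sum is truncated at the same fixed value and the expected values are approximated by the sample moments taken over the data points. For example, $E_p[l_i(R) \cos(k\Theta)]$ is approximated by

$$\frac{1}{n} \sum_{j=1}^{n} l_i(r_j) \cos(k\theta_j)$$

where $r_j$ and $\theta_j$ are the radius squared and angle for the projected $j$th observation.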
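The truncated sample version of the index can be sketched in a few lines. This is a hedged illustration rather than the dissertation's implementation: the function names, the truncation order `m`, the made-up two-cluster sample, and the scaling of the Laguerre functions by $1/i!$ (so that they have unit norm) are all assumptions. The code follows the form of [1.23] with expectations replaced by sample means, and then checks empirically that spinning the projected points leaves the value unchanged.

```python
import math
import random


def laguerre_functions(u, m):
    """Laguerre functions L_i(u) exp(-u/2) / i! for i = 0, ..., m."""
    polys = [1.0, u - 1.0]
    for i in range(2, m + 1):
        polys.append((u - 2 * i + 1) * polys[i - 1] - (i - 1) ** 2 * polys[i - 2])
    damp = math.exp(-u / 2.0)
    return [polys[i] * damp / math.factorial(i) for i in range(m + 1)]


def fourier_index(points, m=3):
    """Sample Fourier index for projected points, sums truncated at order m."""
    n = len(points)
    radius_sq = [x * x + y * y for x, y in points]
    angle = [math.atan2(y, x) for x, y in points]
    lag = [laguerre_functions(r, m) for r in radius_sq]

    def sample_mean(term):
        return sum(term(j) for j in range(n)) / n

    mean_l = [sample_mean(lambda j, i=i: lag[j][i]) for i in range(m + 1)]
    total = 0.5 * sum(e * e for e in mean_l) - 0.5 * mean_l[0]
    for i in range(m + 1):
        for k in range(1, m + 1):
            c = sample_mean(lambda j, i=i, k=k: lag[j][i] * math.cos(k * angle[j]))
            s = sample_mean(lambda j, i=i, k=k: lag[j][i] * math.sin(k * angle[j]))
            total += c * c + s * s
    return total


def rotate(points, tau):
    """Spin every projected point about the origin by the angle tau."""
    c, s = math.cos(tau), math.sin(tau)
    return [(c * x - s * y, s * x + c * y) for x, y in points]


random.seed(0)
# Made-up clustered sample standing in for a projected, sphered data set.
points = [(random.gauss(1.0, 0.3), random.gauss(0.0, 0.3)) for _ in range(100)]
points += [(random.gauss(-1.0, 0.3), random.gauss(0.5, 0.3)) for _ in range(100)]

print(fourier_index(points))
print(fourier_index(rotate(points, 0.7)))
```

The two printed values agree to rounding error, since the radius squared is untouched by the rotation and each cosine and sine pair contributes through the sum of its squared means.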

The Fourier index is rotationally invariant. Suppose the projected points are spun by an angle of $\tau$. The radius squared $R$ is unaffected by the shift so the first sum and final term in [1.23] do not change. The sine and cosine of the new angle are

$$\cos(\Theta + \tau) = \cos\Theta \cos\tau - \sin\Theta \sin\tau$$
$$\sin(\Theta + \tau) = \sin\Theta \cos\tau + \cos\Theta \sin\tau .$$

Each component in the second term of [1.23] is

$E_p^2[l_i(R) \cos(k(\Theta + \tau))] + E_p^2[l_i(R) \sin(k(\Theta + \tau))] =$

$\cos^2(k\tau) E_p^2[l_i(R) \cos(k\Theta)] + \sin^2(k\tau) E_p^2[l_i(R) \sin(k\Theta)] - 2 \sin(k\tau) \cos(k\tau) E_p[l_i(R) \cos(k\Theta)] E_p[l_i(R) \sin(k\Theta)] +$

$\cos^2(k\tau) E_p^2[l_i(R) \sin(k\Theta)] + \sin^2(k\tau) E_p^2[l_i(R) \cos(k\Theta)] + 2 \sin(k\tau) \cos(k\tau) E_p[l_i(R) \cos(k\Theta)] E_p[l_i(R) \sin(k\Theta)]$   [1.24]

$= E_p^2[l_i(R) \cos(k\Theta)] +$