Linear Systems Theory

1 Notation

The $n$-dimensional vector space over the field of real numbers is denoted $\mathbb{R}^n$. For example,

$$\begin{bmatrix} 2 \\ 3 \\ 1 \end{bmatrix} \in \mathbb{R}^3$$

where $\in$ indicates set membership and is read “an element of.” Real-valued matrices with $n$ rows and $m$ columns are elements of $\mathbb{R}^{n \times m}$. For example,

$$\begin{bmatrix} 1 & 2 & 4 \\ 0 & 1 & 5 \end{bmatrix} \in \mathbb{R}^{2 \times 3}$$

The fact that $A \in \mathbb{R}^{n \times m}$ is a mapping from $\mathbb{R}^m$ to $\mathbb{R}^n$ is denoted

$$A : \mathbb{R}^m \to \mathbb{R}^n$$

Sets are also defined using standard notation. For example, let $\Omega$ be the set of all real numbers that are less than 2. Then we write

$$\Omega = \{x \in \mathbb{R} : x < 2\}$$

2 Vector Spaces

Definition 2.1 The vector space $V$ over the scalar field $F$ (the field of real or complex numbers in this course) is a set of objects (usually called vectors) that is closed under addition and closed under multiplication by elements of the scalar field. The elements of the vector space $V$ must satisfy the following: for all $x$, $y$, and $z$ in $V$, and all $\alpha$ and $\beta$ in the scalar field $F$,

1. $x + y = y + x$

2. $(x + y) + z = x + (y + z)$

3. There exists an additive identity $e$ such that $x + e = x$

4. $\alpha(x + y) = \alpha x + \alpha y$

5. $(\alpha + \beta)x = \alpha x + \beta x$

6. $(\alpha\beta)x = \alpha(\beta x)$

7. There exist elements 0 and 1 in $F$ such that $0x = e$ and $1x = x$

The formal definition of a vector space is not directly utilized in this course, except to underscore the properties of real-valued vectors with which students are already familiar.

- DRAFT -


2.1 Linear Independence and Dependence

Definition 2.2 The set of vectors $v_1, \ldots, v_n$ is linearly independent if the only scalars $\alpha_1, \ldots, \alpha_n$ for which

$$\alpha_1 v_1 + \alpha_2 v_2 + \cdots + \alpha_n v_n = 0$$

are $\alpha_1 = \alpha_2 = \cdots = \alpha_n = 0$.

Definition 2.3 The set of vectors $v_1, \ldots, v_n$ is linearly dependent if it is not linearly independent.

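Example 2.4 Determine whether each of the following sets of vectors is linearly dependent or independent.

1. $V_1 = \left\{ \begin{bmatrix} 1 \\ 2 \end{bmatrix}, \begin{bmatrix} 4 \\ 8 \end{bmatrix} \right\}$

2. $V_2 = \left\{ \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix}, \begin{bmatrix} 1 \\ 2 \\ 0 \end{bmatrix} \right\}$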

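Both problems can be addressed by inspection. Note that

$$-4 \begin{bmatrix} 1 \\ 2 \end{bmatrix} + \begin{bmatrix} 4 \\ 8 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$$

which implies that the vectors in $V_1$ are linearly dependent. For $V_2$, the third component of

$$\alpha_1 \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix} + \alpha_2 \begin{bmatrix} 1 \\ 2 \\ 0 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$

forces $3\alpha_1 = 0$, and the first component then forces $\alpha_2 = 0$. Since there are no scalars except $\alpha_1 = \alpha_2 = 0$ satisfying this equation, the vectors in $V_2$ are linearly independent.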
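The inspection arguments above can also be checked mechanically. The following Python sketch is an illustration, not part of the course material: it assumes vectors are given as plain lists of numbers, uses exact `fractions.Fraction` arithmetic to avoid round-off, and tests independence by comparing the rank of the matrix whose columns are the vectors (the number of linearly independent columns, defined formally in Section 3.2) with the number of vectors. The function names `rank` and `linearly_independent` are hypothetical.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix given as a list of rows, by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivots found so far
    for c in range(len(m[0])):
        # Look for a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate column c from every row below the pivot row.
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def linearly_independent(vectors):
    """True if the only combination of the vectors equal to zero is the trivial one."""
    # Place the vectors side by side as the columns of a matrix.
    matrix = [list(row) for row in zip(*vectors)]
    # Independent exactly when every column contributes a pivot.
    return rank(matrix) == len(vectors)


print(linearly_independent([[1, 2], [4, 8]]))        # V1: False
print(linearly_independent([[1, 2, 3], [1, 2, 0]]))  # V2: True
```

Exact fractions are used here because a floating-point elimination would need a tolerance to decide whether a pivot is "zero".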

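Definition 2.5 The span of vectors $v_1, v_2, \ldots, v_p$, denoted $\operatorname{span}\{v_1, v_2, \ldots, v_p\}$, is the subspace consisting of all linear combinations of the vectors $v_1, v_2, \ldots, v_p$.

For example, let

$$V_1 = \operatorname{span}\left\{ \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix}, \begin{bmatrix} 1 \\ 2 \\ 0 \end{bmatrix} \right\}$$

If $x \in V_1$, then there exist scalars $\alpha_1$ and $\alpha_2$ such that

$$x = \alpha_1 \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix} + \alpha_2 \begin{bmatrix} 1 \\ 2 \\ 0 \end{bmatrix}$$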


Example 2.6 Are the vectors

$$x_1 = \begin{bmatrix} 3 \\ 6 \\ 6 \end{bmatrix}, \quad x_2 = \begin{bmatrix} 1 \\ 3 \\ 0 \end{bmatrix}$$

in $V_1$?

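By inspection, we note that

$$x_1 = 2 \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix} + \begin{bmatrix} 1 \\ 2 \\ 0 \end{bmatrix}$$

Thus $x_1 \in V_1$. For $x_2$, no such scalars exist: the third component requires $3\alpha_1 = 0$, so $\alpha_1 = 0$; the first component then requires $\alpha_2 = 1$, but the second component requires $2\alpha_2 = 3$. Hence $x_2 \notin V_1$.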
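Membership in a span can also be tested by rank: $x \in \operatorname{span}\{v_1, \ldots, v_p\}$ exactly when appending $x$ to the list of vectors does not increase the rank. The plain-Python sketch below is illustrative only; it uses exact `fractions.Fraction` arithmetic, the helper names `rank` and `in_span` are hypothetical, and the vectors are stacked as rows, which is legitimate because row rank equals column rank (Section 3.2).

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix given as a list of rows, by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def in_span(x, vectors):
    """True if x is a linear combination of the given vectors."""
    # Appending x leaves the rank unchanged exactly when x adds no new direction.
    return rank(vectors) == rank(vectors + [x])


V1 = [[1, 2, 3], [1, 2, 0]]
print(in_span([3, 6, 6], V1))  # x1: True
print(in_span([1, 3, 0], V1))  # x2: False
```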

Definition 2.7 A linearly independent set of vectors $v_1, v_2, \ldots, v_p$ that spans the vector space $V$ is a basis for $V$.

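Bases are highly non-unique. For example, both

$$\left\{ \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \end{bmatrix} \right\} \quad \text{and} \quad \left\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 2 \end{bmatrix} \right\}$$

are bases for $\mathbb{R}^2$, since each consists of linearly independent vectors that span $\mathbb{R}^2$.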

3 Matrices

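The notation $A \in \mathbb{R}^{n \times m}$ denotes a real-valued $n \times m$ matrix. For example,

$$A = \begin{bmatrix} 1 & 2 & 3 & 0 \\ 0 & 1 & 2 & 2 \\ 0 & 0 & 0 & -1 \end{bmatrix}$$

is an element of $\mathbb{R}^{3 \times 4}$. To emphasize the columns of a matrix, we may write $A \in \mathbb{R}^{3 \times 4}$ as

$$A = \left[\, a_1 \mid a_2 \mid a_3 \mid a_4 \,\right]$$

where $a_1, a_2, a_3, a_4 \in \mathbb{R}^3$. The transpose of the matrix $A$, denoted $A^T$, is obtained by interchanging the rows and columns of $A$: the $(i, j)$ entry of $A^T$ is the $(j, i)$ entry of $A$. For example, if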

$$A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}$$


then

$$A^T = \begin{bmatrix} a_{11} & a_{21} & a_{31} \\ a_{12} & a_{22} & a_{32} \\ a_{13} & a_{23} & a_{33} \end{bmatrix}$$
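For a matrix stored as a list of rows, the transpose amounts to regrouping the entries column by column. The minimal plain-Python sketch below (the name `transpose` is hypothetical) reproduces the $3 \times 3$ pattern above, with the entries written as strings.

```python
def transpose(a):
    """Return A^T for a matrix stored as a list of rows: A^T[i][j] == A[j][i]."""
    # zip(*a) yields the columns of a, which become the rows of A^T.
    return [list(col) for col in zip(*a)]


A = [["a11", "a12", "a13"],
     ["a21", "a22", "a23"],
     ["a31", "a32", "a33"]]
for row in transpose(A):
    print(row)
```

The first printed row is `['a11', 'a21', 'a31']`, matching the first row of $A^T$ above.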

The $n \times n$ identity matrix, denoted $I_n$, consists of all zeros except for ones on the main diagonal. For example,

$$I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

The subscript is often omitted when the dimension of $I$ is clear from context. The inverse of a square matrix $A$, if it exists, is denoted $A^{-1}$ and satisfies $AA^{-1} = A^{-1}A = I$. It is extremely important to emphasize that not every matrix has an inverse. If a matrix has an inverse, then it is called invertible or nonsingular.

3.1 Range, Domain, and Null Space

The matrix $A \in \mathbb{R}^{n \times m}$ can be thought of as a linear transformation (mapping) from the vector space $\mathbb{R}^m$ to $\mathbb{R}^n$. This idea is made clearer by writing

$$y = Ax$$

from which it is seen that $A$ maps the vector $x \in \mathbb{R}^m$ to the vector $y \in \mathbb{R}^n$.

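Definition 3.1 The domain of the matrix $A \in \mathbb{R}^{n \times m}$ is the vector space $\mathbb{R}^m$.

Definition 3.2 The range (or image) of $A \in \mathbb{R}^{n \times m}$ is the vector space

$$\{y \in \mathbb{R}^n : y = Ax \text{ for some } x \in \mathbb{R}^m\}$$

and is denoted $\operatorname{Ra}(A)$ (or $\operatorname{Im} A$).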

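The range of a matrix is the span of its columns. Let

$$A = \begin{bmatrix} 1 & 2 & 3 & 0 \\ 0 & 1 & 2 & 2 \\ 0 & 0 & 0 & -1 \end{bmatrix}$$

Then if

$$x = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix}$$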


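the linear equation $y = Ax$ can be written

$$y = x_1 \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} + x_2 \begin{bmatrix} 2 \\ 1 \\ 0 \end{bmatrix} + x_3 \begin{bmatrix} 3 \\ 2 \\ 0 \end{bmatrix} + x_4 \begin{bmatrix} 0 \\ 2 \\ -1 \end{bmatrix}$$

Thus the range is equal to

$$\operatorname{span}\left\{ \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \begin{bmatrix} 2 \\ 1 \\ 0 \end{bmatrix}, \begin{bmatrix} 3 \\ 2 \\ 0 \end{bmatrix}, \begin{bmatrix} 0 \\ 2 \\ -1 \end{bmatrix} \right\}$$

In this case, $\operatorname{Im} A = \mathbb{R}^3$, because the first, second, and fourth columns are three linearly independent vectors in $\mathbb{R}^3$.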
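The column view of $y = Ax$ can be checked numerically. The plain-Python sketch below (function names hypothetical) computes $Ax$ twice for the matrix $A$ above and an arbitrarily chosen $x$: once row by row as inner products, and once as the combination $x_1 a_1 + x_2 a_2 + x_3 a_3 + x_4 a_4$ of the columns.

```python
def matvec_rows(a, x):
    """y = Ax computed row by row: y_i = sum_j a_ij x_j."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def matvec_columns(a, x):
    """y = Ax computed as x_1 a_1 + ... + x_m a_m, a combination of the columns of A."""
    y = [0] * len(a)
    for j, xj in enumerate(x):
        column_j = [row[j] for row in a]
        y = [yi + xj * aij for yi, aij in zip(y, column_j)]
    return y


A = [[1, 2, 3, 0],
     [0, 1, 2, 2],
     [0, 0, 0, -1]]
x = [1, 2, 3, 4]
print(matvec_rows(A, x))     # [14, 16, -4]
print(matvec_columns(A, x))  # [14, 16, -4]
```

Both computations agree, and the second makes explicit that every $y = Ax$ lies in the span of the columns.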

Definition 3.3 The null space (or kernel) of the matrix $A \in \mathbb{R}^{n \times m}$ is the vector space

$$\{x \in \mathbb{R}^m : Ax = 0\}$$

and is denoted $N(A)$ or $\ker A$.

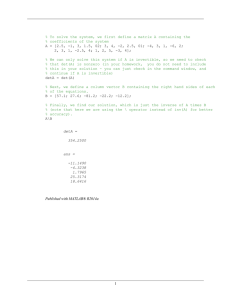

Example 3.4 Find the null space of

$$A_1 = \begin{bmatrix} 1 & 0 \\ 2 & 0 \end{bmatrix}, \quad A_2 = \begin{bmatrix} 1 & 0 \\ 0 & 2 \end{bmatrix}$$

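The null space of $A_1$ is

$$\operatorname{span}\left\{ \begin{bmatrix} 0 \\ 1 \end{bmatrix} \right\}$$

since $A_1 x = 0$ requires only $x_1 = 0$. The null space of $A_2$ consists of the zero vector alone,

$$\left\{ \begin{bmatrix} 0 \\ 0 \end{bmatrix} \right\}$$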
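A common way to compute a null space basis, shown here as a sketch rather than as the method of these notes, is to reduce $A$ to reduced row echelon form and set each free variable to 1 in turn. The plain-Python version below uses exact `fractions.Fraction` arithmetic; the name `null_space_basis` is hypothetical.

```python
from fractions import Fraction


def null_space_basis(a):
    """Basis of N(A) = {x : Ax = 0}, read off from the reduced row echelon form of A."""
    m = [[Fraction(v) for v in row] for row in a]
    n_rows, n_cols = len(m), len(m[0])
    pivot_cols = []
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot: x_c is a free variable
        m[r], m[pivot] = m[pivot], m[r]
        p = m[r][c]
        m[r] = [v / p for v in m[r]]  # scale so the pivot equals 1
        for i in range(n_rows):  # clear column c in every other row
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [v - f * w for v, w in zip(m[i], m[r])]
        pivot_cols.append(c)
        r += 1
    basis = []
    for free in [c for c in range(n_cols) if c not in pivot_cols]:
        # Set this free variable to 1 and the other free variables to 0 ...
        x = [Fraction(0)] * n_cols
        x[free] = Fraction(1)
        # ... then row k of the RREF fixes the k-th pivot variable.
        for k, pc in enumerate(pivot_cols):
            x[pc] = -m[k][free]
        basis.append(x)
    return basis
```

For example, `null_space_basis([[1, 0], [2, 0]])` returns a single basis vector equal to `[0, 1]`, and `null_space_basis([[1, 0], [0, 2]])` returns an empty list (only the zero vector), matching Example 3.4.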

3.2 Rank

Definition 3.5 The rank of the matrix $A \in \mathbb{R}^{n \times m}$ is the maximum number of linearly independent columns of $A$.

A maximal set of linearly independent columns may not be unique, but its cardinality (number of elements of the set) is unique. It is a remarkable fact that $\operatorname{rank} A = \operatorname{rank} A^T$, so that rank can be defined equivalently in terms of rows or columns. In other words, row rank equals column rank. From this observation, it is clear that the rank of a matrix can be no greater than the minimum of the number of columns and the number of rows. For example, a $2 \times 3$ matrix can have rank no greater than 2.
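The equality of row rank and column rank, and the bound by the smaller dimension, can be spot-checked on small examples. This is a plain-Python sketch with exact fractions; the helpers `rank` and `transpose` are hypothetical names, and the third matrix is an arbitrary rank-one example chosen for illustration.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix given as a list of rows, by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def transpose(a):
    """A^T for a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]


examples = [
    [[1, 2, 4], [0, 1, 5]],                       # the 2 x 3 matrix from Section 1
    [[1, 2, 3, 0], [0, 1, 2, 2], [0, 0, 0, -1]],  # the 3 x 4 matrix from Section 3
    [[1, 2], [2, 4], [3, 6]],                     # every row is a multiple of [1, 2]
]
for a in examples:
    # Row rank equals column rank, and neither exceeds min(#rows, #columns).
    print(rank(a), rank(transpose(a)), min(len(a), len(a[0])))
```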


3.3 Determinant

Definition 3.6 The cofactor $c_{ij}$ of the matrix $A \in \mathbb{R}^{n \times n}$ is $(-1)^{i+j}$ times the determinant of the $(n-1) \times (n-1)$ matrix that results from deleting the $i$th row and $j$th column of $A$.

Definition 3.7 Consider the matrix $A \in \mathbb{R}^{n \times n}$ with entries $a_{ij}$. The determinant of $A$ is

$$\det A = \sum_{j=1}^{n} a_{ij} c_{ij}$$

for any fixed $i$, $1 \le i \le n$. If $A \in \mathbb{R}$ ($A$ is a scalar), then $\det A$ is simply $A$.

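Example 3.8 Compute the determinant of

$$A = \begin{bmatrix} 1 & 2 & 3 \\ 4 & 1 & 2 \\ 1 & 2 & 1 \end{bmatrix}$$

Expanding along the first row ($i = 1$),

$$\begin{aligned} \det A &= 1 \det \begin{bmatrix} 1 & 2 \\ 2 & 1 \end{bmatrix} - 2 \det \begin{bmatrix} 4 & 2 \\ 1 & 1 \end{bmatrix} + 3 \det \begin{bmatrix} 4 & 1 \\ 1 & 2 \end{bmatrix} \\ &= (1)(-3) - (2)(2) + (3)(7) \\ &= 14 \end{aligned}$$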

Note that if $A, B \in \mathbb{R}^{n \times n}$, then $\det(AB) = \det(A)\det(B)$.
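Definition 3.7 translates directly into a recursive function. The plain-Python sketch below (hypothetical names; exponential cost, so suitable only for small matrices) expands along the first row, reproduces Example 3.8, and spot-checks $\det(AB) = \det(A)\det(B)$ with an arbitrarily chosen second matrix $B$.

```python
def minor(a, i, j):
    """Matrix obtained from a by deleting row i and column j (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]


def det(a):
    """Determinant by cofactor expansion along the first row (Definition 3.7 with i = 1)."""
    if len(a) == 1:
        return a[0][0]
    # With 0-based j, the sign (-1)^(1 + (j + 1)) equals (-1)^j.
    return sum((-1) ** j * a[0][j] * det(minor(a, 0, j)) for j in range(len(a)))


def matmul(a, b):
    """Matrix product AB for matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


A = [[1, 2, 3], [4, 1, 2], [1, 2, 1]]
B = [[2, 0, 1], [1, 1, 0], [0, 3, 1]]      # an arbitrary second matrix, det B = 5
print(det(A))                              # 14, as in Example 3.8
print(det(matmul(A, B)), det(A) * det(B))  # 70 70
```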

3.4 Trace

The trace of a matrix $A \in \mathbb{R}^{n \times n}$ with elements $a_{ij}$ is the sum of the diagonal elements

$$\operatorname{trace}(A) = \sum_{i=1}^{n} a_{ii}$$

3.5 Eigenvalues and Eigenvectors

Definition 3.9 For a given matrix $A \in \mathbb{R}^{n \times n}$, an eigenvalue $\lambda \in \mathbb{C}$ and eigenvector $v \in \mathbb{C}^n$ ($v \ne 0$) form a pair satisfying the relationship

$$Av = \lambda v \tag{1}$$

By rearranging (1),

$$(A - \lambda I)v = 0$$

Thus eigenvectors are in the null space of $A - \lambda I$. Each $n \times n$ matrix has $n$ eigenvalues, counted with multiplicity. The set of eigenvalues of the matrix $A$ is the spectrum of $A$, denoted $\sigma(A)$. The largest absolute value of the eigenvalues of the matrix $A$ is known as the spectral radius of $A$. The eigenvectors $v$ in (1) are sometimes called right eigenvectors. Each eigenvalue $\lambda$ also has a left eigenvector $w \in \mathbb{C}^n$, $w \ne 0$, that satisfies

$$w^T A = \lambda w^T$$

Note that the left and right eigenvectors are, in general, not the same.

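Definition 3.10 The characteristic polynomial of the matrix $A \in \mathbb{R}^{n \times n}$ is the polynomial

$$\det(\lambda I - A)$$

For example, the characteristic polynomial of

$$A = \begin{bmatrix} 1 & 2 \\ 0 & 4 \end{bmatrix}$$

is computed as

$$\det(\lambda I - A) = \det \begin{bmatrix} \lambda - 1 & -2 \\ 0 & \lambda - 4 \end{bmatrix} = (\lambda - 1)(\lambda - 4)$$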

The $n$ roots (solutions) of the characteristic equation $\det(\lambda I - A) = 0$ are precisely the $n$ eigenvalues of $A$. In the example above, the eigenvalues are 1 and 4.
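For matrices larger than $2 \times 2$, expanding $\det(\lambda I - A)$ by hand becomes tedious. One standard alternative, which is not part of these notes, is the Faddeev-LeVerrier recursion: with $M_0 = 0$ and $c_n = 1$, set $M_k = A M_{k-1} + c_{n-k+1} I$ and $c_{n-k} = -\tfrac{1}{k}\operatorname{trace}(A M_k)$ for $k = 1, \ldots, n$. The plain-Python sketch below implements it with exact fractions; the names `matmul` and `char_poly` are hypothetical.

```python
from fractions import Fraction


def matmul(a, b):
    """Matrix product AB for matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def char_poly(a):
    """Coefficients [1, c_{n-1}, ..., c_0] of det(lambda I - A), via Faddeev-LeVerrier."""
    n = len(a)
    a = [[Fraction(v) for v in row] for row in a]
    m = [[Fraction(0)] * n for _ in range(n)]  # M_0 = 0
    coeffs = [Fraction(1)]                     # c_n = 1
    for k in range(1, n + 1):
        am = matmul(a, m)
        # M_k = A M_{k-1} + c_{n-k+1} I, where c_{n-k+1} is the last coefficient found.
        m = [[am[i][j] + (coeffs[-1] if i == j else 0) for j in range(n)] for i in range(n)]
        am = matmul(a, m)
        coeffs.append(-sum(am[i][i] for i in range(n)) / k)  # c_{n-k}
    return coeffs


# Integer matrices have integer coefficients, so int() is exact here.
print([int(c) for c in char_poly([[1, 2], [0, 4]])])  # [1, -5, 4], i.e. (lambda - 1)(lambda - 4)
```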

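Lemma 3.11 For $A \in \mathbb{R}^{n \times n}$, $\det A = 0$ if and only if $\lambda = 0$ is an eigenvalue of $A$.

Proof: Since this is an “if and only if” statement, we must prove it in both directions. Note first that $\det(\lambda I - A)|_{\lambda = 0} = \det(-A) = (-1)^n \det A$. Suppose $\det A = 0$. Then $\det(\lambda I - A)|_{\lambda = 0} = 0$, which implies that $\lambda = 0$ is an eigenvalue of $A$. Conversely, suppose that $\lambda = 0$ is an eigenvalue of $A$. Then

$$0 = \det(\lambda I - A)|_{\lambda = 0} = (-1)^n \det A$$

so $\det A = 0$.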

Theorem 3.12 (Cayley-Hamilton) Suppose $p_A(\lambda) = \det(\lambda I - A)$. Then $p_A(A) = 0$.

In other words, the Cayley-Hamilton Theorem states that if

$$\det(\lambda I - A) = \lambda^n + \alpha_{n-1}\lambda^{n-1} + \cdots + \alpha_1 \lambda + \alpha_0$$

then

$$A^n + \alpha_{n-1}A^{n-1} + \cdots + \alpha_1 A + \alpha_0 I = 0$$

One use of the Cayley-Hamilton Theorem is that powers $A^k$ with $k \ge n$ can be expressed in terms of the lower-order powers $I, A, \ldots, A^{n-1}$. For example,

$$A^n = -\alpha_{n-1}A^{n-1} - \cdots - \alpha_1 A - \alpha_0 I$$
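As a concrete check, for the matrix $A$ of Example 3.8 one finds $\det(\lambda I - A) = \lambda^3 - 3\lambda^2 - 12\lambda - 14$ (the trace is 3, the principal $2 \times 2$ minors sum to $-12$, and the determinant is 14). The plain-Python sketch below, with hypothetical helper names, evaluates $p_A(A)$ by Horner's rule and confirms that it is the zero matrix, then uses the theorem to rewrite $A^3$ as $3A^2 + 12A + 14I$.

```python
def matmul(a, b):
    """Matrix product AB for matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def poly_of_matrix(coeffs, a):
    """Evaluate c_n A^n + ... + c_1 A + c_0 I by Horner's rule (coeffs highest degree first)."""
    n = len(a)
    result = [[0] * n for _ in range(n)]
    for c in coeffs:
        result = matmul(result, a)
        for i in range(n):
            result[i][i] += c  # add c * I
    return result


A = [[1, 2, 3], [4, 1, 2], [1, 2, 1]]
p_A = [1, -3, -12, -14]        # lambda^3 - 3 lambda^2 - 12 lambda - 14
print(poly_of_matrix(p_A, A))  # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]

# Cayley-Hamilton lets A^3 be rewritten as 3 A^2 + 12 A + 14 I.
A2 = matmul(A, A)
A3 = matmul(A2, A)
rhs = [[3 * A2[i][j] + 12 * A[i][j] + 14 * (i == j) for j in range(3)] for i in range(3)]
print(A3 == rhs)  # True
```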


3.6 Matrix Inverse

The inverse of the matrix $A \in \mathbb{R}^{n \times n}$, written $A^{-1}$, satisfies $AA^{-1} = I$. It is very important to note that not every matrix has an inverse. A formula for the matrix inverse that is useful for analysis is

$$A^{-1} = \frac{\operatorname{adj} A}{\det A}$$

where $\operatorname{adj} A$ is the adjugate of $A$, whose $ij$th entry is the cofactor $c_{ji}$ of $A$. From this formula, the following fact is immediate; the converse direction follows from $\det(A)\det(A^{-1}) = \det(I) = 1$:

Lemma 3.13 The matrix $A$ is invertible (nonsingular) if and only if $\det A \ne 0$.
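The adjugate formula can be implemented directly for small matrices. The plain-Python sketch below is an illustration with hypothetical names; it uses exact fractions, refuses singular input as Lemma 3.13 requires, and checks $AA^{-1} = I$ for the matrix of Example 3.8. Note the index swap: entry $(i, j)$ of $\operatorname{adj} A$ is the cofactor $c_{ji}$.

```python
from fractions import Fraction


def minor(a, i, j):
    """Matrix obtained from a by deleting row i and column j (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]


def det(a):
    """Cofactor expansion along the first row; the empty 0 x 0 matrix has determinant 1."""
    if not a:
        return 1
    return sum((-1) ** j * a[0][j] * det(minor(a, 0, j)) for j in range(len(a)))


def inverse(a):
    """A^{-1} = adj(A) / det(A), where the (i, j) entry of adj(A) is the cofactor c_ji."""
    d = det(a)
    if d == 0:
        raise ValueError("det A = 0, so A is singular (Lemma 3.13)")
    n = len(a)
    # Entry (i, j) uses the cofactor of a[j][i]: delete row j and column i.
    return [[Fraction((-1) ** (i + j) * det(minor(a, j, i)), d) for j in range(n)]
            for i in range(n)]


A = [[1, 2, 3], [4, 1, 2], [1, 2, 1]]
A_inv = inverse(A)
product = [[sum(A[i][k] * A_inv[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
print(product == [[1, 0, 0], [0, 1, 0], [0, 0, 1]])  # True
```

This formula is convenient for analysis but costly to evaluate; the recursion grows factorially with $n$.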

The following result is equivalent to Lemma 3.13.

Lemma 3.14 The matrix $A \in \mathbb{R}^{n \times n}$ is invertible (nonsingular) if and only if it has no eigenvalues at zero.

Proof: From Lemma 3.11, $\det A = 0$ if and only if $\lambda = 0$ is an eigenvalue of $A$. Since Lemma 3.13 states that $\det A = 0$ is equivalent to singularity of $A$, we obtain the desired result. That is, we have shown that $A \in \mathbb{R}^{n \times n}$ is singular if and only if $\lambda = 0$ is an eigenvalue of $A$. Equivalently, $A$ is invertible if and only if $A$ has no eigenvalues at zero.

From the review presented thus far, it should be fairly straightforward to show that the following are equivalent.

1. $A \in \mathbb{R}^{n \times n}$ is nonsingular.

2. $A^{-1}$ exists.

3. $0 \notin \sigma(A)$.

4. $\det(A) \ne 0$.

5. The rows of $A$ are linearly independent.

6. The columns of $A$ are linearly independent.

7. The only solution of $Ax = 0$ is $x = 0$ (trivial null space).

8. The range of $A$ is $\mathbb{R}^n$.

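Other properties of note are

1. $(AB)^{-1} = B^{-1}A^{-1}$ if the inverses exist.

2. $\operatorname{trace}(A) = \sum_{i=1}^{n} \lambda_i$

3. $\det(A) = \prod_{i=1}^{n} \lambda_i$

where $\lambda_i$, $i = 1, \ldots, n$, are the eigenvalues of $A$, counted with multiplicity.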
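For a $2 \times 2$ matrix the characteristic polynomial is $\lambda^2 - \operatorname{trace}(A)\lambda + \det(A)$, so properties 2 and 3 reduce to the familiar relations between the roots and coefficients of a quadratic. The plain-Python sketch below (hypothetical names) computes both eigenvalues with `cmath.sqrt`, so complex eigenvalues are handled, and checks the two relations for the matrix of Definition 3.10 and for a rotation matrix with eigenvalues $\pm i$.

```python
import cmath


def eigenvalues_2x2(a):
    """Both roots of lambda^2 - trace(A) lambda + det(A) = 0 for a 2 x 2 matrix A."""
    tr = a[0][0] + a[1][1]
    dt = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    disc = cmath.sqrt(tr * tr - 4 * dt)  # complex square root covers complex eigenvalues
    return (tr + disc) / 2, (tr - disc) / 2


for a in ([[1, 2], [0, 4]], [[0, -1], [1, 0]]):
    l1, l2 = eigenvalues_2x2(a)
    trace = a[0][0] + a[1][1]
    determinant = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    # Sum of eigenvalues equals the trace; product equals the determinant.
    print(l1, l2, cmath.isclose(l1 + l2, trace), cmath.isclose(l1 * l2, determinant))
```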


4 Symmetric and Sign-Definite Matrices

Definition 4.1 The matrix $Q \in \mathbb{R}^{n \times n}$ is symmetric if $Q = Q^T$.

Definition 4.2 The symmetric matrix $Q \in \mathbb{R}^{n \times n}$ is symmetric positive definite if $x^T Q x > 0$ for all $x \in \mathbb{R}^n$ such that $x \ne 0$. That $Q$ is positive definite is sometimes denoted $Q > 0$.

Definition 4.3 The symmetric matrix $Q \in \mathbb{R}^{n \times n}$ is symmetric positive semi-definite if $x^T Q x \ge 0$ for all $x \in \mathbb{R}^n$. That $Q$ is positive semi-definite is sometimes denoted $Q \ge 0$.

Definition 4.4 The symmetric matrix $Q \in \mathbb{R}^{n \times n}$ is symmetric negative definite (symmetric negative semi-definite) if $-Q > 0$ ($-Q \ge 0$). That $Q$ is negative definite (negative semi-definite) is sometimes denoted $Q < 0$ ($Q \le 0$).

Further, we say that symmetric matrices $Q$ and $P$ satisfy $Q > P$ if and only if $Q - P > 0$.
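Checking $x^T Q x > 0$ for every nonzero $x$ cannot be done by sampling, although a single $x$ with $x^T Q x \le 0$ does disprove positive definiteness. One standard finite test, not stated in these notes, is Sylvester's criterion: a symmetric $Q$ is positive definite if and only if all of its leading principal minors are positive. The plain-Python sketch below assumes small integer or rational matrices and uses hypothetical names. The analogous test with $\ge 0$ is not sufficient for positive semi-definiteness.

```python
def det(a):
    """Determinant by cofactor expansion along the first row (the 0 x 0 matrix has det 1)."""
    if not a:
        return 1
    return sum((-1) ** j * a[0][j] * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))


def is_positive_definite(q):
    """Sylvester's criterion for a symmetric matrix q given as a list of rows."""
    n = len(q)
    if any(q[i][j] != q[j][i] for i in range(n) for j in range(n)):
        raise ValueError("Q must be symmetric")
    # The k-th leading principal minor is the determinant of the top-left k x k block.
    return all(det([row[:k] for row in q[:k]]) > 0 for k in range(1, n + 1))


def quadratic_form(q, x):
    """x^T Q x."""
    n = len(x)
    return sum(x[i] * q[i][j] * x[j] for i in range(n) for j in range(n))


Q = [[2, -1], [-1, 2]]  # leading minors 2 and 3
P = [[1, 2], [2, 1]]    # leading minors 1 and -3
print(is_positive_definite(Q), is_positive_definite(P))  # True False
print(quadratic_form(P, [1, -1]))  # -2, a witness that P is not positive definite
```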

Lemma 4.5 The symmetric matrix $Q$ has real-valued eigenvalues.

Proof: Suppose $\lambda, v$ are an eigenvalue/eigenvector pair for $Q$ and that $\|v\| = 1$. Then

$$Qv = \lambda v$$

Let $v^*$ be the complex conjugate transpose of $v$ ($v^* = \bar{v}^T$). Then

$$v^* Q v = \lambda v^* v = \lambda$$

Taking the complex conjugate transpose of both sides yields

$$(v^* Q v)^* = \bar{\lambda}$$

Since $Q$ is real and symmetric, $Q^* = Q^T = Q$, so $(v^* Q v)^* = v^* Q^* v = v^* Q v$. Hence $\bar{\lambda} = \lambda$, which implies that $\lambda$ is real-valued.

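Lemma 4.6 Suppose $\lambda \in \sigma(Q)$ where $Q \in \mathbb{R}^{n \times n}$ is symmetric positive definite. Then $\lambda > 0$.

Proof: By Lemma 4.5, $\lambda$ is real, so $Q - \lambda I$ is a real singular matrix and its null space contains a nonzero real vector. Hence there exists $v \in \mathbb{R}^n$, $\|v\| = 1$, such that

$$Qv = \lambda v$$

Thus

$$v^T Q v = \lambda v^T v = \lambda$$

Since $Q$ is positive definite, $v^T Q v > 0$, which confirms that $\lambda > 0$.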

Similar results are available for Q ≥ 0, Q < 0 and Q ≤ 0. Suppose λ ∈ σ(Q). Then


1. λ > 0 if Q > 0.

2. λ ≥ 0 if Q ≥ 0.

3. λ < 0 if Q < 0.

4. λ ≤ 0 if Q ≤ 0.

All of these results are confirmed in a manner similar to the proof of Lemma 4.6.

Exercises

1. For the symmetric matrix $Q \in \mathbb{R}^{n \times n}$ and the scalar $\epsilon > 0$, suppose that $Q > \epsilon I$. Show that the smallest eigenvalue of $Q$ is greater than $\epsilon$.

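2. For the symmetric positive semi-definite matrix $Q \in \mathbb{R}^{n \times n}$, show that $\|Q\| = \lambda_{\max}(Q)$, where $\|Q\|$ is the induced 2-norm and $\lambda_{\max}(Q)$ is the largest eigenvalue of $Q$.

3. Suppose $Q \in \mathbb{R}^{n \times n}$ is such that $Q = Q^T > 0$. Show that $Q$ is invertible and that $Q^{-1} > 0$.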


5 Exercises

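1. Suppose that $T \in \mathbb{R}^{n \times n}$ is invertible and that $\lambda$ is an eigenvalue of $A \in \mathbb{R}^{n \times n}$. Prove that $\lambda$ is an eigenvalue of $T^{-1}AT$.

Solution: Since $\lambda$ is an eigenvalue of $A$, there exists $v \ne 0$ such that

$$Av = \lambda v$$

Define $y = T^{-1}v$; note that $y \ne 0$ because $T^{-1}$ is invertible and $v \ne 0$. Then

$$\begin{aligned} T^{-1}AT y &= T^{-1}ATT^{-1}v \\ &= T^{-1}Av \\ &= \lambda T^{-1}v \\ &= \lambda y \end{aligned}$$

Thus $\lambda$ is an eigenvalue of $T^{-1}AT$ with corresponding eigenvector $y$.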

2. Suppose that $A \in \mathbb{R}^{n \times n}$ has rank $n - 1$. Show that $A$ has a nontrivial null space. That is, show that there exists $y \in \mathbb{R}^n$ such that $y \ne 0$ and $Ay = 0$.

Solution: We first write $A$ in terms of its columns

$$A = \left[\, a_1 \mid a_2 \mid \cdots \mid a_n \,\right]$$

Since $A$ has rank $n - 1$, the $n$ columns of $A$ are not linearly independent, so at least one column, say $a_k$, can be written as a linear combination of the other columns:

$$a_k = \sum_{i \ne k} \alpha_i a_i$$

or equivalently

$$a_k - \sum_{i \ne k} \alpha_i a_i = 0$$

Define $y \in \mathbb{R}^n$ by $y_k = 1$ and $y_i = -\alpha_i$ for $i \ne k$. Then $y \ne 0$ and $Ay = 0$.

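3. Show that if $A \in \mathbb{R}^{n \times n}$ has rank $n - 1$, then $A$ is singular.

Solution: Since $\operatorname{rank} A = n - 1$, Exercise 2 shows that there exists $y \in \mathbb{R}^n$, $y \ne 0$, such that $Ay = 0$. This implies that $Ay = \lambda y$ where $\lambda = 0$. Thus $\lambda = 0$ is an eigenvalue of $A$, and by Lemma 3.14, $A$ is singular.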


4. Suppose $A \in \mathbb{R}^{n \times n}$ is invertible and $B \in \mathbb{R}^{n \times n}$ is not invertible. Show that $AB$ and $BA$ are not invertible.

Solution: Since $B$ is not invertible, $\det(B) = 0$ by Lemma 3.13. Thus $\det(AB) = \det(BA) = \det(A)\det(B) = 0$, so by Lemma 3.13 neither $AB$ nor $BA$ is invertible.
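Exercise 1 can be illustrated with a small numerical instance. With the hypothetical choices $A = \begin{bmatrix} 1 & 2 \\ 0 & 4 \end{bmatrix}$ (the matrix from Definition 3.10) and $T = \begin{bmatrix} 1 & 1 \\ 1 & 2 \end{bmatrix}$, the eigenvector $v = (2, 3)$ of $A$ for $\lambda = 4$ is mapped to $y = T^{-1}v$, which is an eigenvector of $T^{-1}AT$ for the same eigenvalue. The plain-Python sketch below uses exact fractions; the function names are hypothetical.

```python
from fractions import Fraction


def matmul(a, b):
    """Matrix product AB for matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(a, x):
    """Matrix-vector product Ax."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def inverse_2x2(t):
    """Inverse of an invertible 2 x 2 matrix via the adjugate formula."""
    d = Fraction(t[0][0] * t[1][1] - t[0][1] * t[1][0])
    if d == 0:
        raise ValueError("T is singular")
    return [[t[1][1] / d, -t[0][1] / d], [-t[1][0] / d, t[0][0] / d]]


A = [[1, 2], [0, 4]]
T = [[1, 1], [1, 2]]
T_inv = inverse_2x2(T)
B = matmul(T_inv, matmul(A, T))  # the similar matrix T^{-1} A T

v = [2, 3]            # Av = 4v
y = matvec(T_inv, v)  # y = T^{-1} v
print(matvec(A, v), [4 * vi for vi in v])    # [8, 12] [8, 12]
print(matvec(B, y) == [4 * yi for yi in y])  # True: y is an eigenvector of T^{-1} A T
```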
