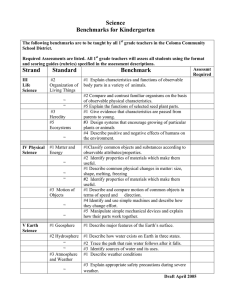

Artificial Intelligence
Course Code: ITT451
By Ms. C. B. Thaokar, Assistant Professor, Department of Information Technology, RCOEM, Nagpur

Syllabus

Unit I: Introduction: Introduction, What Is AI?, The Foundations of Artificial Intelligence, The History of Artificial Intelligence, The State of the Art. Intelligent Agents: Agents and Environments, Good Behavior: The Concept of Rationality, The Nature of Environments, The Structure of Agents.

Unit II: Problem-solving: Solving Problems by Searching, Problem-Solving Agents, Example Problems, Searching for Solutions, Uninformed Search Strategies, Informed (Heuristic) Search Strategies, Heuristic Functions. Beyond Classical Search: Local Search Algorithms and Optimization Problems: Hill-climbing search, Simulated annealing, Local beam search, Genetic algorithms, Local Search in Continuous Spaces, Searching with Non-deterministic Actions: AND-OR search trees, Searching with Partial Observations.

Unit III: Constraint Satisfaction Problems: Defining Constraint Satisfaction Problems, Constraint Propagation: Inference in CSPs, Backtracking Search for CSPs, Local Search for CSPs, The Structure of Problems. Game Playing: Adversarial Search, Games, Optimal Decisions in Games, The minimax algorithm, Optimal decisions in multiplayer games, Alpha–Beta Pruning.

Unit IV: Logic and Knowledge Representation: Knowledge-Based Agents, The Wumpus World, Logic, Propositional Logic: A Very Simple Logic, Propositional Theorem Proving, Effective Propositional Model Checking, Agents Based on Propositional Logic. First-Order Logic: Representation Revisited, Syntax and Semantics of First-Order Logic, Using First-Order Logic, Knowledge Engineering in First-Order Logic, Inference in First-Order Logic, Propositional vs. First-Order Inference, Unification and Lifting, Forward Chaining, Backward Chaining, Resolution.
Unit V: Natural Language Processing: Introduction, Syntactic Analysis, Semantic Analysis, Discourse and Pragmatic Processing. Introduction and Fundamentals of Artificial Neural Networks: Biological prototype, Artificial Neuron, Single-layer Artificial Neural Networks, Multilayer Artificial Neural Networks, Training of Artificial Neural Networks.

Unit VI: Machine Learning: Probability basics, Bayes' Rule and its Applications, Bayesian Networks, Exact and Approximate Inference in Bayesian Networks, Hidden Markov Models, Forms of Learning, Supervised Learning, Learning Decision Trees, Regression and Classification with Linear Models, Artificial Neural Networks, Nonparametric Models, Support Vector Machines, Statistical Learning, Learning with Complete Data, Learning with Hidden Variables, The EM Algorithm, Reinforcement Learning.

Text Books
1. Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach, Prentice-Hall.
2. David L. Poole and Alan K. Mackworth, Artificial Intelligence: Foundations of Computational Agents, Cambridge University Press, 2010.
3. U. S. Tiwary and Tanveer Siddiqui, Natural Language Processing and Information Retrieval, 1st edition, Oxford University Press.

Reference Books
1. Nils J. Nilsson, Artificial Intelligence: A New Synthesis, Morgan Kaufmann.
2. Ethem Alpaydin, Introduction to Machine Learning (Adaptive Computation and Machine Learning series), The MIT Press, second edition, 2009.
3. D. Patterson, Introduction to Artificial Intelligence & Expert Systems, 1st edition, PHI.
a) NPTEL Videos: Dr. Sudeshna Sarkar
b) NPTEL Videos: Dr. Deepak Khemani

Course Outcomes
1. Ability to formulate an efficient problem space for a problem expressed in natural language.
2. Select a search algorithm for a problem and estimate its time and space complexities.
3. Demonstrate knowledge representation using the appropriate technique for a given problem.
4. Possess the ability to apply AI techniques to solve problems in game playing and machine learning.
5. Understand the concepts of Natural Language Processing and apply different machine learning algorithms.

UNIT-1
• Introduction: What is AI?
• History
• Applications
• Intelligent agents
• Performance measures
• Rationality
• Structure of agents

Introduction
• In March 2016, AlphaGo, developed by DeepMind, defeated Lee Sedol, one of the strongest human Go players at that time.
• IBM's Deep Blue won its first game against world champion Garry Kasparov in game one of a six-game match on 10 February 1996.
• NLP engines (e.g., IBM Watson): in a 2011 Jeopardy! quiz show exhibition match, IBM's question-answering system Watson defeated the two greatest Jeopardy! champions, Brad Rutter and Ken Jennings, by a significant margin.
• Baidu Brain: the AI project of the Chinese company Baidu, Inc.
• Humanoid robot Sophia: Sophia is a social humanoid robot developed by the Hong Kong-based company Hanson Robotics. Sophia became the first robot to receive citizenship of a country.

Definitions
• AI is a branch of computer science concerned with the study and creation of computer systems that exhibit some form of intelligence:
– Systems that can learn new concepts and tasks.
– Systems that can understand a natural language or perceive and comprehend a visual scene.
– Systems that perform other feats that require human-like intelligence.

What AI is not?
• AI is not the study and creation of conventional computer systems.
• It is not the study of the mind, nor of the body, nor of languages.

Obvious question
• What is AI?
– Programs that behave externally like humans?
– Programs that operate internally as humans do?
– Computational systems that behave intelligently?
– Rational behaviour?

What is Intelligence?
Intelligence is a property of mind that encompasses many related mental abilities, such as the capabilities to
– reason
– plan
– solve problems
– think abstractly
– understand ideas and language
– learn

What's involved in Intelligence?
• Ability to interact with the real world
– to perceive, understand, and act
– e.g., speech recognition, understanding, and synthesis
– e.g., image understanding
– e.g., ability to take actions, have an effect
• Reasoning and Planning
– modeling the external world, given input
– solving new problems, planning, and making decisions
– ability to deal with unexpected problems, uncertainties
• Learning and Adaptation
– we are continuously learning and adapting
– our internal models are always being “updated”
– e.g., a baby learning to categorize and recognize animals

Turing Test (Alan Turing)
• Human beings are intelligent.
• To be called intelligent, a machine must produce responses that are indistinguishable from those of a human.
[Figure: Turing test setup with an experimenter, an AI system, and a human control]

Foundation of AI
• Philosophy
• Mathematics
• Economics
• Neuroscience
• Psychology
• Control Theory
• John McCarthy coined the term “Artificial Intelligence” in the 1950s.

History of AI
• 1943: early beginnings
– McCulloch & Pitts: Boolean circuit model of the brain
• 1950: Turing
– Turing's “Computing Machinery and Intelligence”
• 1956: birth of AI
– Dartmouth meeting: the name “Artificial Intelligence” adopted
• 1950s: initial promise
– Early AI programs, including Samuel's checkers program and Newell & Simon's Logic Theorist
• 1955-65: “great enthusiasm”
– Newell and Simon: GPS, the General Problem Solver
– Gelernter: Geometry Theorem Prover
– McCarthy: invention of LISP
• 1966-73: reality dawns
– Realization that many AI problems are intractable
– Limitations of existing neural network methods identified
– Neural network research almost disappears
• 1969-85: adding domain knowledge
– Development of knowledge-based systems
– Success of rule-based expert systems, e.g., DENDRAL, MYCIN
– But they were brittle and did not scale well in practice
• 1986 onward: rise of machine learning
– Neural networks return to popularity
– Major advances in machine learning algorithms and applications
• 1990 onward: role of uncertainty
– Bayesian networks as a knowledge representation framework
• 1995 onward: AI as science
– Integration of learning, reasoning, and knowledge representation
– AI methods used in vision, language, data mining, etc.

Categories of AI System
• Systems that think like humans
• Systems that act like humans
• Systems that think rationally
• Systems that act rationally

Categories of AI System
1. Systems that think like humans
Human thinking is largely a black box: most of the time we are not clear about our own thought process. To build such systems, one has to know how the brain functions and its mechanism for processing information. A neural network is a computing model that processes information in a way similar to the brain.
“The exciting new effort to make computers think . . . machines with minds, in the full and literal sense.” (Haugeland, 1985)
“The automation of activities that we associate with human thinking, activities such as decision-making, problem solving, learning . . .” (Bellman, 1978)

Categories of AI System
2. Systems that act like humans
The overall behavior of the system should be human-like. This can be judged by observing the system's behavior.
“The art of creating machines that perform functions that require intelligence when performed by people.” (Kurzweil, 1990)
“The study of how to make computers do things at which, at the moment, people are better.” (Rich and Knight, 1991)

Categories of AI System
3. Systems that think rationally
Such systems rely on logic, rather than on human behavior, to measure correctness. To think rationally (logically), logic formulas and theories are used for synthesizing outcomes.
“The study of mental faculties through the use of computational models.” (Charniak and McDermott, 1985)
“The study of the computations that make it possible to perceive, reason, and act.” (Winston, 1992)

Categories of AI System
4. Systems that act rationally
Rational behavior means doing the right thing. What is judged is the observed behavior: it must be rational even if the method that produces it does not follow strict logical inference.
“Computational Intelligence is the study of the design of intelligent agents.” (Poole et al., 1998)
“AI . . . is concerned with intelligent behavior in artifacts.” (Nilsson, 1998)

Intelligent Systems in Your Everyday Life
• Post Office
– automatic address recognition and sorting of mail
• Banks
– automatic check readers, signature verification systems
– automated loan application classification
• Customer Service
– automatic voice recognition
• The Web
– identifying your age, gender, and location from your Web surfing
– automated fraud detection
• Digital Cameras
– automated face detection and focusing
• Computer Games
– intelligent characters/agents

Does AI have applications?
• Autonomous planning and scheduling of tasks aboard a spacecraft
• Steering a driverless car
• Understanding language
• Robotic assistants in surgery
• Monitoring trade in the stock market to see if insider trading is going on
• Automated Reasoning and Theorem Proving
• Expert Systems
• Natural Language Understanding and Semantic Modelling
• Modelling Human Performance
• Planning and Robotics

Intelligent Agents

Agents
• An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.
• Human agent:
– eyes, ears, and other organs for sensors
– hands, legs, mouth, and other body parts for actuators
• Robotic agent:
– cameras and infrared range finders for sensors
– various motors for actuators

Agents and environments
• The agent function maps from percept histories to actions: f: P* → A
• The agent program runs on the physical architecture to produce f.
• agent = architecture + program

Ex-1: Vacuum-cleaner world
• Percepts: location and contents, e.g., [A, Dirty]
• Actions: Left, Right, Suck, NoOp

Ex-1: Vacuum-cleaner world
The program implements the agent function tabulated in Fig. 2.3:
function Reflex-Vacuum-Agent([location, status]) returns an action
    if status = Dirty then return Suck
    else if location = A then return Right
    else if location = B then return Left

Rational agents
• An agent should strive to "do the right thing", based on what it can perceive and the actions it can perform. The right action is the one that will cause the agent to be most successful.
• Performance measure: an objective criterion for success of an agent's behavior.
• E.g., the performance measure of a vacuum-cleaner agent could be the amount of dirt cleaned up, the amount of time taken, the amount of electricity consumed, the amount of noise generated, etc.

Concept of Rationality
• Rational agent: one that does the right thing, i.e., every entry in the table for the agent function is filled in correctly (rationally).
• What is correct?
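As a minimal sketch, the Reflex-Vacuum-Agent pseudocode from the vacuum-cleaner world can be written in Python and scored with a performance measure. The scoring rule used here (one point per clean square per time step) and the names `run`, `world`, and `steps` are assumptions made for illustration; the slides do not fix a particular measure.

```python
def reflex_vacuum_agent(percept):
    """Map the current percept (location, status) to an action."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    return "Left"  # location == "B"


def run(world, location, steps):
    """Simulate the two-square world and return the performance score.

    Assumed measure: one point per clean square at the end of each step.
    """
    score = 0
    for _ in range(steps):
        action = reflex_vacuum_agent((location, world[location]))
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
        score += sum(1 for status in world.values() if status == "Clean")
    return score


print(run({"A": "Dirty", "B": "Dirty"}, "A", steps=4))  # prints 6
```

With a different performance measure (for example, one that penalizes every movement), the same agent could score worse than an agent that stops once both squares are clean, which is why the measure has to be chosen before an action can be called correct.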
The actions that cause the agent to be most successful. So we need ways to measure success.

Rationality factors: PEAS
• PEAS: Performance measure, Environment, Actuators, Sensors
• Must first specify the setting for intelligent agent design:
– Performance measure
– Environment (prior knowledge)
– Actuators (sequence of actions)
– Sensors (percept sequence)

PEAS for Task environments
• Consider, e.g., the task of designing an automated taxi driver:
– Performance measure: safe, fast, legal, comfortable trip, maximize profits
– Environment: roads, other traffic, pedestrians, customers
– Actuators: steering wheel, accelerator, brake, signal, horn
– Sensors: cameras, sonar, speedometer, GPS, odometer, engine sensors, keyboard

Task environments
[Figure: a sketch of an automated taxi driver]

PEAS Agent: Medical diagnosis system
• Performance measure: healthy patient, minimize costs, lawsuits
• Environment: patient, hospital, staff
• Actuators: screen display (questions, tests, diagnoses, treatments, referrals)
• Sensors: keyboard (entry of symptoms, findings, patient's answers)

PEAS Agent: Part-picking robot
• Performance measure: percentage of parts in correct bins
• Environment: conveyor belt with parts, bins
• Actuators: jointed arm and hand
• Sensors: camera, joint angle sensors

PEAS Agent: Interactive English tutor
• Performance measure: maximize student's score on test
• Environment: set of students
• Actuators: screen display (exercises, suggestions, corrections)
• Sensors: keyboard

Properties of task environments
Fully observable vs. partially observable
• If an agent's sensors give it access to the complete state of the environment at each point in time, then the environment is fully observable.
• The environment is effectively fully observable if the sensors detect all aspects that are relevant to the choice of action.
• An environment might be partially observable because of noisy and inaccurate sensors or because parts of the state are simply missing from the sensor data.
• Example: a local dirt sensor of the vacuum cleaner cannot tell whether other squares are clean or not.

Properties of task environments
Deterministic vs. stochastic
• If the next state of the environment is completely determined by the current state and the actions executed by the agent, then the environment is deterministic.
• Stochastic means the next state has some uncertainty associated with it. The uncertainty could come from randomness, lack of a good environment model, or lack of complete sensor coverage, so the outcome cannot be determined in advance.
• E.g., taxi driving is stochastic because some aspects of the environment are unobservable.

Properties of task environments
Episodic vs. sequential
• Episodic: the agent's experience is divided into atomic episodes. In each episode the agent receives a percept and performs a single action.
– The quality of the agent's action does not depend on other episodes; every episode is independent of the others.
– An episodic environment is simpler: the agent does not need to think ahead.
• Sequential: the current action may affect all future decisions. E.g., taxi driving and chess.

Properties of task environments
Static vs. dynamic
• An environment that can change while the agent is deciding on an action is said to be dynamic. E.g., the number of people on the street.
• Other agents acting in an environment can make it dynamic.
• An environment whose state does not change while the agent is deliberating is called a static environment.
• In a static environment the agent need not keep looking at the world while it decides on an action. E.g., chess without a clock.
• Semidynamic: the environment itself does not change with the passage of time, but the agent's performance score does. E.g., chess with a clock.

Properties of task environments
Discrete vs. continuous
• Discrete: there are a limited number of distinct states and clearly defined percepts and actions. E.g., a chess game.
• Continuous: states, time, percepts, or actions range over continuous values. E.g., vision systems in drones, self-driving cars.

Properties of task environments
Single agent vs. multiagent
• Solving a crossword puzzle: single agent
• Chess playing: two agents
• Competitive multiagent environment: chess playing
• Cooperative multiagent environment: automated taxi driving (avoiding collisions benefits every driver)

Properties of task environments
Known vs. unknown
• This distinction refers not to the environment itself but to the agent's (or designer's) state of knowledge about the environment.
• In a known environment, the outcomes of all actions are given. Example: in solitaire card games the rules are known, but the environment is still partially observable.
• If the environment is unknown, the agent will have to learn how it works in order to make good decisions. Example: a new video game.

Environment Examples
Columns: fully observable vs. partially observable; deterministic vs. stochastic / strategic; episodic vs. sequential; static vs. dynamic; discrete vs. continuous; single agent vs. multiagent. (Strategic: deterministic except for the actions of other agents.)

Environment            Observable  Deterministic  Episodic    Static   Discrete    Agents
Chess with a clock     Fully       Strategic      Sequential  Semi     Discrete    Multi
Chess without a clock  Fully       Strategic      Sequential  Static   Discrete    Multi
Taxi driving           Partial     Stochastic     Sequential  Dynamic  Continuous  Multi
Medical diagnosis      Partial     Stochastic     Episodic    Static   Continuous  Single
Robot part picking     Fully       Deterministic  Episodic    Semi     Discrete    Single

Structure of agents
• Agent = architecture + program
• Architecture = some sort of computing device (sensors + actuators)
• (Agent) program = some function that implements the agent mapping = “?”
• Writing the agent program is the job of AI.

Agent programs
• Input for the agent program: only the current percept
• Input for the agent function: the entire percept sequence
– the agent must remember all of them
• One way to implement the agent program is as a lookup table (the agent function).

Agent programs
[Figure: skeleton design of an agent program]

Agent types / Agent programs
Four basic types in order of increasing generality:
• Simple reflex agents
• Model-based reflex agents
• Goal-based agents
• Utility-based agents
Any of these can be extended into a learning agent.

Simple reflex agents
• The agent selects an action on the basis of the current percept only and does not remember the percept history. E.g., the vacuum-cleaner agent (local sensing).
• It uses just condition-action rules of the form “if … then …”.
• It works well only if the environment is fully observable.
• If the environment is partially observable, infinite loops are often unavoidable. E.g., a vacuum cleaner without a location sensor.

Problems with simple reflex agents:
• Very limited intelligence.
• No knowledge of non-perceptual parts of the state.
• The set of condition-action rules is usually too big to generate and store.
• If any change occurs in the environment, the collection of rules needs to be updated.

Model-based Reflex Agents
• In a world that is partially observable, the agent has to keep track of an internal state that depends on the percept history.
• The current state is stored inside the agent, which maintains some kind of structure describing the part of the world that cannot be seen.
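A minimal sketch of a model-based reflex agent for the two-square vacuum world is given below. The class name `ModelBasedVacuumAgent` and the world model it uses (a square stays clean once cleaned, and sucking always cleans the current square) are assumptions made for illustration, not something the slides specify.

```python
class ModelBasedVacuumAgent:
    """Reflex agent with an internal state: the last known status of each square."""

    def __init__(self):
        # None means the square has not been observed yet.
        self.model = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        # Update the internal state from the current percept.
        self.model[location] = status
        if status == "Dirty":
            # Assumed effect of the agent's own action: Suck cleans the square.
            self.model[location] = "Clean"
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            # Assumed world model: squares do not get dirty again.
            return "NoOp"
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
percepts = [("A", "Dirty"), ("A", "Clean"), ("B", "Clean"), ("B", "Clean")]
print([agent(p) for p in percepts])  # prints ['Suck', 'Right', 'NoOp', 'NoOp']
```

Unlike the simple reflex agent, this agent can stop moving once its internal state says that both squares are clean, even though it can only sense the square it is currently in.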
• E.g., driving a car and changing lanes.
• Updating the internal state requires two types of knowledge:
– how the world evolves independently of the agent
– how the agent's actions affect the world
• The agent has memory.

Goal-based agents
• These agents take decisions based on how far they currently are from their goal (a description of desirable situations).
• Every action is intended to reduce the distance from the goal.
• The knowledge that supports their decisions is represented explicitly and can be modified, which makes these agents more flexible.
• They usually require search and planning.
• The behavior of a goal-based agent can easily be changed.

Utility-based agents
• Utility-based agents are built around the usefulness (utility) of the outcomes they can reach.
• When there are multiple possible alternatives, utility is used to decide which one is best.
• Achieving the desired goal is not enough: look for a quicker, safer, cheaper trip to reach a destination, i.e., the agent's "happiness" should be taken into consideration.
• Because of the uncertainty in the world, a utility-based agent chooses the action that maximizes the expected utility, which describes the degree of happiness.
• E.g., food prepared in the canteen is the outcome, but whether it is good or bad is a matter of utility.

Learning Agents
• After an agent is programmed, can it work immediately? No, it still needs teaching.
• In AI, once an agent is built:
– we teach it by giving it a set of examples
– we test it by using another set of examples
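The teach-then-test idea for learning agents can be sketched as follows. This is only an illustration under stated assumptions: the learner is a simple majority vote per percept, the example data are made up for the vacuum-cleaner world, and the names `train`, `accuracy`, `training_set`, and `test_set` are hypothetical.

```python
from collections import Counter, defaultdict


def train(examples):
    """Learn one condition-action rule per percept by majority vote."""
    votes = defaultdict(Counter)
    for percept, action in examples:
        votes[percept][action] += 1
    return {p: counts.most_common(1)[0][0] for p, counts in votes.items()}


def accuracy(rules, examples, default="NoOp"):
    """Fraction of examples on which the learned rules pick the labeled action."""
    correct = sum(1 for p, action in examples if rules.get(p, default) == action)
    return correct / len(examples)


# Teaching: one set of (percept, correct action) examples.
training_set = [
    (("A", "Dirty"), "Suck"),
    (("A", "Clean"), "Right"),
    (("B", "Dirty"), "Suck"),
    (("B", "Clean"), "Left"),
    (("A", "Dirty"), "Suck"),
]
# Testing: another set of examples, kept apart from the training set.
test_set = [
    (("B", "Clean"), "Left"),
    (("A", "Dirty"), "Suck"),
    (("A", "Clean"), "Right"),
]

rules = train(training_set)
print(accuracy(rules, test_set))  # prints 1.0
```

Keeping the test examples separate from the training examples is what makes the measured accuracy an estimate of how the agent would behave on situations it was not explicitly taught.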