Chapter

2

Vector Spaces

2.1 INTRODUCTION

In various practical and theoretical problems, we come across a set V whose elements may be

vectors in two or three dimensions or real-valued functions, which can be added and multiplied by a constant (number) in a natural way, the result being again an element of V . Such

concrete situations suggest the concept of a vector space. Vector spaces play an important role

in many branches of mathematics and physics. In analysis infinite dimensional vector spaces

(in fact, normed vector spaces) are more important than finite dimensional vector spaces while

in linear algebra finite dimensional vector spaces are used, because they are simple and linear

transformations on them can be represented by matrices. This chapter mainly deals with finite

dimensional vector spaces.

2.2 DEFINITION AND EXAMPLES

VECTOR SPACE. An algebraic structure (V, F, ⊕, ⊙) consisting of a non-void set V, a field F, a binary operation ⊕ on V and an external mapping ⊙ : F × V → V associating each a ∈ F, v ∈ V to a unique element a ⊙ v ∈ V is said to be a vector space over the field F, if the following axioms are satisfied:

V-1 (V, ⊕) is an abelian group.

V-2 For all u, v ∈ V and a, b ∈ F, we have

(i) a ⊙ (u ⊕ v) = a ⊙ u ⊕ a ⊙ v,

(ii) (a + b) ⊙ u = a ⊙ u ⊕ b ⊙ u,

(iii) (ab) ⊙ u = a ⊙ (b ⊙ u),

(iv) 1 ⊙ u = u.

The elements of V are called vectors and those of F are called scalars. The mapping ⊙ is called scalar multiplication and the binary operation ⊕ is termed vector addition.


116 • Theory and Problems of Linear Algebra

If there is no danger of confusion, we shall say V is a vector space over a field F whenever the algebraic structure (V, F, ⊕, ⊙) is a vector space. Thus, whenever we say that V is a vector space over a field F, it would always mean that (V, ⊕) is an abelian group and ⊙ : F × V → V is a mapping such that V-2 (i)–(iv) are satisfied.

V is called a real vector space if F = R (field of real numbers), and a complex vector space

if F = C (field of complex numbers).

REMARK-1

V is called a left or a right vector space according as the elements of a skew-field

F are multiplied on the left or right of vectors in V . But, in case of a field these two concepts

coincide.

REMARK-2

The symbol ‘+’ has been used to denote the addition in the field F. For the sake

of convenience, in future, we shall use the same symbol ‘+’ for vector addition ⊕ and addition

in the field F. But, the context would always make it clear as to which operation is meant.

Similarly, multiplication in the field F and scalar multiplication will be denoted by the same

symbol ‘·’.

REMARK-3 In this chapter and in future also, we will be dealing with two types of zeros. One

will be the zero of the additive abelian group V , which will be known as the vector zero and

other will be the zero element of the field F which will be known as scalar zero. We will use the

symbol 0V to denote the zero vector and 0 to denote the zero scalar.

REMARK-4

Since (V, ⊕) is an abelian group, for any u, v, w ∈ V the following properties hold:

(i) u ⊕ v = u ⊕ w ⇒ v = w (Cancellation laws)

(ii) v ⊕ u = w ⊕ u ⇒ v = w

(iii) u ⊕ v = 0V ⇒ u = −v and v = −u

(iv) −(−u) = u

(v) u ⊕ v = u ⇒ v = 0V

(vi) −(u ⊕ v) = (−u) ⊕ (−v)

(vii) 0V is unique

(viii) For each u ∈ V, −u ∈ V is unique.

REMARK-5

If V is a vector space over a field F, then we will write V (F).

ILLUSTRATIVE EXAMPLES

EXAMPLE-1

Every field is a vector space over any of its subfields.

SOLUTION Let F be a field, and let S be an arbitrary subfield of F. Since F is an additive

abelian group, therefore, V -1 holds.

Taking multiplication in F as scalar multiplication, the axioms V-2 (i) to V-2 (iii) are respectively the left distributive law, the right distributive law and the associative law of multiplication in F. Also, V-2 (iv) is the property of unity in F.


Hence, F is a vector space over S.

Since S is an arbitrary subfield of F, every field is a vector space over any of its subfields.

REMARK.

The converse of the above example is not true, i.e. a subfield is not necessarily a vector space over its overfield. For example, R is not a vector space over C under ordinary multiplication, because the product of a real number and a complex number is not necessarily a real number.

EXAMPLE-2

R is a vector space over Q, because Q is a subfield of R.

EXAMPLE-3

C is a vector space over R, because R is a subfield of C.

EXAMPLE-4

Every field is a vector space over itself.

SOLUTION Since every field is a subfield of itself, the result follows directly from Example 1.

REMARK.

In order to know whether a given non-void set V forms a vector space over a field

F, we must proceed as follows:

(i) Define a binary operation on V and call it vector addition.

(ii) Define scalar multiplication on V , which associates each scalar in F and each vector in

V to a unique vector in V .

(iii) Define equality of elements (vectors) in V .

(iv) Check whether V -1 and V -2 are satisfied relative to the vector addition and scalar

multiplication thus defined.
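The four steps above can be sketched in Python. This is only an illustrative check, not part of the text's method: it assumes the finite field Z_3 (integers modulo 3) and the set Z_3 × Z_3, so that every axiom can be tested exhaustively, and the names `failed_axioms`, `add`, `smul` and `bad_smul` are hypothetical helpers introduced here. Equality of vectors is Python's componentwise equality of tuples.

```python
from itertools import product

P = 3  # assumption: work over the finite field Z_3 so every check is exhaustive
SCALARS = range(P)
VECTORS = list(product(range(P), repeat=2))  # all of Z_3 x Z_3


def failed_axioms(add, smul, zero):
    """Return the set of axiom names that fail for the given operations."""
    failed = set()
    for u, v, w in product(VECTORS, repeat=3):
        if add(add(u, v), w) != add(u, add(v, w)):
            failed.add("associativity")
    for u, v in product(VECTORS, repeat=2):
        if add(u, v) != add(v, u):
            failed.add("commutativity")
    for u in VECTORS:
        if add(u, zero) != u:
            failed.add("identity")
        if not any(add(u, v) == zero for v in VECTORS):
            failed.add("inverse")
        if smul(1, u) != u:
            failed.add("V-2(iv)")
    for a, u, v in product(SCALARS, VECTORS, VECTORS):
        if smul(a, add(u, v)) != add(smul(a, u), smul(a, v)):
            failed.add("V-2(i)")
    for a, b, u in product(SCALARS, SCALARS, VECTORS):
        if smul((a + b) % P, u) != add(smul(a, u), smul(b, u)):
            failed.add("V-2(ii)")
        if smul((a * b) % P, u) != smul(a, smul(b, u)):
            failed.add("V-2(iii)")
    return failed


def add(u, v):
    # Step (i): componentwise vector addition modulo P.
    return ((u[0] + v[0]) % P, (u[1] + v[1]) % P)


def smul(a, u):
    # Step (ii): componentwise scalar multiplication modulo P.
    return ((a * u[0]) % P, (a * u[1]) % P)


def bad_smul(a, u):
    # Hypothetical broken scalar multiplication that discards the second entry.
    return ((a * u[0]) % P, 0)


print(failed_axioms(add, smul, (0, 0)))
print(failed_axioms(add, bad_smul, (0, 0)))
```

With the usual componentwise operations no axiom fails, whereas the deliberately broken scalar multiplication violates only V-2(iv). Over an infinite field such as R a finite check of this kind can expose a failing axiom but cannot replace a proof.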

EXAMPLE-5 The set $F^{m \times n}$ of all m × n matrices over a field F is a vector space over F with respect to the addition of matrices as vector addition and multiplication of a matrix by a scalar as scalar multiplication.

SOLUTION For any $A, B, C \in F^{m \times n}$, we have

(i) (A + B) + C = A + (B + C) (Associative law)

(ii) A + B = B + A (Commutative law)

(iii) A + O = O + A = A (Existence of identity)

(iv) A + (−A) = O = (−A) + A (Existence of inverse)

Hence, $F^{m \times n}$ is an abelian group under matrix addition.

If λ ∈ F and $A \in F^{m \times n}$, then $\lambda A \in F^{m \times n}$, and for all $A, B \in F^{m \times n}$ and for all λ, µ ∈ F, we have

(i) λ(A + B) = λA + λB

(ii) (λ + µ)A = λA + µA

(iii) λ(µA) = (λµ)A

(iv) 1A = A

Hence, $F^{m \times n}$ is a vector space over F.


EXAMPLE-6 The set $R^{m \times n}$ of all m × n matrices over the field R of real numbers is a vector space over the field R of real numbers with respect to the addition of matrices as vector addition and multiplication of a matrix by a scalar as scalar multiplication.

SOLUTION Proceed parallel to Example 5.

REMARK. The set $Q^{m \times n}$ of all m × n matrices over the field Q of rational numbers is not a vector space over the field R of real numbers, because the product of a matrix in $Q^{m \times n}$ and a real number need not be in $Q^{m \times n}$. For example, if $\sqrt{2} \in R$ and $A \in Q^{m \times n}$ is a non-zero matrix, then $\sqrt{2}A \notin Q^{m \times n}$, because the non-zero elements of the matrix $\sqrt{2}A$ are not rational numbers.

EXAMPLE-7 The set of all ordered n-tuples of the elements of any field F is a vector space over F.

SOLUTION Recall that if $a_1, a_2, \ldots, a_n \in F$, then $(a_1, a_2, \ldots, a_n)$ is called an ordered n-tuple of elements of F. Let $V = \{(a_1, a_2, \ldots, a_n) : a_i \in F \text{ for all } i = 1, 2, \ldots, n\}$ be the set of all ordered n-tuples of elements of F.

Now, to give a vector space structure to V over the field F, we define a binary operation on V, scalar multiplication on V and equality of any two elements of V as follows:

Vector addition (addition on V): For any $u = (a_1, a_2, \ldots, a_n)$, $v = (b_1, b_2, \ldots, b_n) \in V$, we define

$$u + v = (a_1 + b_1, a_2 + b_2, \ldots, a_n + b_n).$$

Since $a_i + b_i \in F$ for all $i = 1, 2, \ldots, n$, we have $u + v \in V$. Thus, addition is a binary operation on V.

Scalar multiplication on V: For any $u = (a_1, a_2, \ldots, a_n) \in V$ and $\lambda \in F$, we define

$$\lambda u = (\lambda a_1, \lambda a_2, \ldots, \lambda a_n).$$

Since $\lambda a_i \in F$ for all $i = 1, 2, \ldots, n$, we have $\lambda u \in V$. Thus, scalar multiplication is defined on V.

Equality of two elements of V: For any $u = (a_1, a_2, \ldots, a_n)$, $v = (b_1, b_2, \ldots, b_n) \in V$, we define

$$u = v \Leftrightarrow a_i = b_i \text{ for all } i = 1, 2, \ldots, n.$$

Having defined vector addition, scalar multiplication and equality on V, it remains to check whether V-1 and V-2(i) to V-2(iv) are satisfied.


V-1. V is an abelian group under vector addition.

Associativity: For any $u = (a_1, a_2, \ldots, a_n)$, $v = (b_1, b_2, \ldots, b_n)$, $w = (c_1, c_2, \ldots, c_n) \in V$, we have

$$\begin{aligned}
(u + v) + w &= (a_1 + b_1, \ldots, a_n + b_n) + (c_1, \ldots, c_n) \\
&= ((a_1 + b_1) + c_1, \ldots, (a_n + b_n) + c_n) \\
&= (a_1 + (b_1 + c_1), \ldots, a_n + (b_n + c_n)) && \text{[By associativity of addition on } F\text{]} \\
&= (a_1, \ldots, a_n) + (b_1 + c_1, \ldots, b_n + c_n) \\
&= u + (v + w)
\end{aligned}$$

So, vector addition is associative on V.

Commutativity: For any $u = (a_1, a_2, \ldots, a_n)$, $v = (b_1, \ldots, b_n) \in V$, we have

$$\begin{aligned}
u + v &= (a_1 + b_1, \ldots, a_n + b_n) \\
&= (b_1 + a_1, \ldots, b_n + a_n) && \text{[By commutativity of addition on } F\text{]} \\
&= v + u
\end{aligned}$$

So, vector addition is commutative on V.

Existence of identity (Vector zero): Since 0 ∈ F, we have $0_V = (0, 0, \ldots, 0) \in V$, and for all $u = (a_1, a_2, \ldots, a_n) \in V$

$$u + 0_V = (a_1 + 0, a_2 + 0, \ldots, a_n + 0) = (a_1, a_2, \ldots, a_n) = u.$$

Thus, $0_V = (0, 0, \ldots, 0)$ is the identity element for vector addition on V.

Existence of inverse: Let $u = (a_1, a_2, \ldots, a_n)$ be an arbitrary element in V. Then, $-u = (-a_1, -a_2, \ldots, -a_n) \in V$ is such that

$$u + (-u) = (a_1 + (-a_1), \ldots, a_n + (-a_n)) = (0, 0, \ldots, 0) = 0_V.$$

Thus, every element in V has its additive inverse in V.

Hence, V is an abelian group under vector addition.

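V-2. For any $u = (a_1, \ldots, a_n)$, $v = (b_1, \ldots, b_n) \in V$ and $\lambda, \mu \in F$, we have

(i)
$$\begin{aligned}
\lambda(u + v) &= \lambda(a_1 + b_1, \ldots, a_n + b_n) \\
&= (\lambda(a_1 + b_1), \ldots, \lambda(a_n + b_n)) \\
&= (\lambda a_1 + \lambda b_1, \ldots, \lambda a_n + \lambda b_n) && \text{[By left distributivity of multiplication over addition in } F\text{]} \\
&= (\lambda a_1, \ldots, \lambda a_n) + (\lambda b_1, \ldots, \lambda b_n) \\
&= \lambda u + \lambda v
\end{aligned}$$

(ii)
$$\begin{aligned}
(\lambda + \mu)u &= ((\lambda + \mu)a_1, \ldots, (\lambda + \mu)a_n) \\
&= (\lambda a_1 + \mu a_1, \ldots, \lambda a_n + \mu a_n) && \text{[By right distributivity of multiplication over addition in } F\text{]} \\
&= (\lambda a_1, \ldots, \lambda a_n) + (\mu a_1, \ldots, \mu a_n) \\
&= \lambda u + \mu u
\end{aligned}$$

(iii)
$$\begin{aligned}
(\lambda\mu)u &= ((\lambda\mu)a_1, \ldots, (\lambda\mu)a_n) \\
&= (\lambda(\mu a_1), \ldots, \lambda(\mu a_n)) && \text{[By associativity of multiplication in } F\text{]} \\
&= \lambda(\mu a_1, \ldots, \mu a_n) \\
&= \lambda(\mu u)
\end{aligned}$$

(iv)
$$1u = (1a_1, \ldots, 1a_n) = (a_1, \ldots, a_n) = u \qquad [\because 1 \text{ is unity in } F]$$

Hence, V is a vector space over F. This vector space is usually denoted by $F^n$.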
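The componentwise operations of this example can be tried out in Python. The sketch below assumes F = Q, represented exactly by `fractions.Fraction`; the function names `vec_add` and `scalar_mul` are illustrative and do not come from the text. The assertions test V-2(i) to V-2(iv) on one sample only, which is a spot check rather than a proof.

```python
from fractions import Fraction


def vec_add(u, v):
    """Componentwise sum of two n-tuples over the same field."""
    if len(u) != len(v):
        raise ValueError("tuples must have the same length n")
    return tuple(a + b for a, b in zip(u, v))


def scalar_mul(lam, u):
    """Multiply every component of u by the scalar lam."""
    return tuple(lam * a for a in u)


# Sample vectors in Q^3 and sample scalars in Q (arbitrary illustrative values).
u = (Fraction(1, 2), Fraction(-3), Fraction(0))
v = (Fraction(2, 3), Fraction(1), Fraction(5))
lam, mu = Fraction(3, 4), Fraction(-2)

# V-2(i) to V-2(iv) on this particular sample.
assert scalar_mul(lam, vec_add(u, v)) == vec_add(scalar_mul(lam, u), scalar_mul(lam, v))
assert scalar_mul(lam + mu, u) == vec_add(scalar_mul(lam, u), scalar_mul(mu, u))
assert scalar_mul(lam * mu, u) == scalar_mul(lam, scalar_mul(mu, u))
assert scalar_mul(Fraction(1), u) == u
```

Exact rational arithmetic is used instead of floating point so that the equalities hold exactly, as they do in a field.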

REMARK. If F = R, then $R^n$ is generally known as Euclidean space, and if F = C, then $C^n$ is called the complex Euclidean space.

EXAMPLE-8 Show that the set F[x] of all polynomials over a field F is a vector space over F.

SOLUTION In order to give a vector space structure to F[x], we define vector addition, scalar multiplication, and equality in F[x] as follows:

Vector addition on F[x]: If $f(x) = \sum_i a_i x^i$, $g(x) = \sum_i b_i x^i \in F[x]$, then we define

$$f(x) + g(x) = \sum_i (a_i + b_i) x^i.$$

Clearly, $f(x) + g(x) \in F[x]$, because $a_i + b_i \in F$ for all i.

Scalar multiplication on F[x]: For any $\lambda \in F$ and $f(x) = \sum_i a_i x^i \in F[x]$, $\lambda f(x)$ is defined as the polynomial $\sum_i (\lambda a_i) x^i$, i.e.

$$\lambda f(x) = \sum_i (\lambda a_i) x^i.$$

Obviously, $\lambda f(x) \in F[x]$, as $\lambda a_i \in F$ for all i.

Equality of two elements of F[x]: For any $f(x) = \sum_i a_i x^i$, $g(x) = \sum_i b_i x^i$, we define

$$f(x) = g(x) \Leftrightarrow a_i = b_i \text{ for all } i.$$

Now we shall check whether V-1 and V-2(i) to V-2(iv) are satisfied.


V-1 F[x] is an abelian group under vector addition.

Associativity: If $f(x) = \sum_i a_i x^i$, $g(x) = \sum_i b_i x^i$, $h(x) = \sum_i c_i x^i \in F[x]$, then

$$\begin{aligned}
[f(x) + g(x)] + h(x) &= \sum_i (a_i + b_i) x^i + \sum_i c_i x^i \\
&= \sum_i [(a_i + b_i) + c_i] x^i \\
&= \sum_i [a_i + (b_i + c_i)] x^i && \text{[By associativity of + on } F\text{]} \\
&= \sum_i a_i x^i + \sum_i (b_i + c_i) x^i \\
&= f(x) + [g(x) + h(x)]
\end{aligned}$$

So, vector addition is associative on F[x].

Commutativity: If $f(x) = \sum_i a_i x^i$, $g(x) = \sum_i b_i x^i \in F[x]$, then

$$\begin{aligned}
f(x) + g(x) &= \sum_i (a_i + b_i) x^i \\
&= \sum_i (b_i + a_i) x^i && \text{[By commutativity of + on } F\text{]} \\
&= \sum_i b_i x^i + \sum_i a_i x^i \\
&= g(x) + f(x)
\end{aligned}$$

So, vector addition is commutative on F[x].

Existence of zero vector: The polynomial $\hat{0}(x) = \sum_i 0 x^i \in F[x]$ is such that for all $f(x) = \sum_i a_i x^i \in F[x]$

$$\begin{aligned}
\hat{0}(x) + f(x) &= \sum_i 0 x^i + \sum_i a_i x^i \\
&= \sum_i (0 + a_i) x^i \\
&= \sum_i a_i x^i && [\because 0 \in F \text{ is the additive identity}] \\
&= f(x)
\end{aligned}$$

Thus, $\hat{0}(x) + f(x) = f(x) = f(x) + \hat{0}(x)$ for all $f(x) \in F[x]$.

So, $\hat{0}(x)$ is the zero vector (additive identity) in F[x].

Existence of additive inverse: Let $f(x) = \sum_i a_i x^i$ be an arbitrary polynomial in F[x]. Then, $-f(x) = \sum_i (-a_i) x^i \in F[x]$ is such that

$$f(x) + (-f(x)) = \sum_i a_i x^i + \sum_i (-a_i) x^i = \sum_i [a_i + (-a_i)] x^i = \sum_i 0 x^i = \hat{0}(x).$$

Thus, for each $f(x) \in F[x]$, there exists $-f(x) \in F[x]$ such that

$$f(x) + (-f(x)) = \hat{0}(x) = (-f(x)) + f(x).$$

So, each f(x) has its additive inverse in F[x].

Hence, F[x] is an abelian group under vector addition.


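V-2 For any $f(x) = \sum_i a_i x^i$, $g(x) = \sum_i b_i x^i \in F[x]$ and $\lambda, \mu \in F$, we have

(i)
$$\begin{aligned}
\lambda[f(x) + g(x)] &= \lambda \sum_i (a_i + b_i) x^i \\
&= \sum_i \lambda(a_i + b_i) x^i \\
&= \sum_i (\lambda a_i + \lambda b_i) x^i && \text{[By left distributivity of multiplication over addition]} \\
&= \sum_i (\lambda a_i) x^i + \sum_i (\lambda b_i) x^i \\
&= \lambda f(x) + \lambda g(x)
\end{aligned}$$

(ii)
$$\begin{aligned}
(\lambda + \mu) f(x) &= \sum_i (\lambda + \mu) a_i x^i \\
&= \sum_i (\lambda a_i + \mu a_i) x^i && \text{[By right distributivity of multiplication over addition]} \\
&= \sum_i (\lambda a_i) x^i + \sum_i (\mu a_i) x^i \\
&= \lambda f(x) + \mu f(x)
\end{aligned}$$

(iii)
$$\begin{aligned}
(\lambda\mu) f(x) &= \sum_i (\lambda\mu) a_i x^i \\
&= \sum_i \lambda(\mu a_i) x^i && \text{[By associativity of multiplication on } F\text{]} \\
&= \lambda \sum_i (\mu a_i) x^i \\
&= \lambda(\mu f(x))
\end{aligned}$$

(iv)
$$\begin{aligned}
1 f(x) &= \sum_i (1 a_i) x^i \\
&= \sum_i a_i x^i && [\because 1 \text{ is unity in } F] \\
&= f(x)
\end{aligned}$$

Hence, F[x] is a vector space over field F.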
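The operations on F[x] defined above can be sketched in Python by storing a polynomial as its list of coefficients $[a_0, a_1, \ldots]$. The sketch assumes F = Q (via `fractions.Fraction`); the helper names `trim`, `poly_add` and `poly_scale` are hypothetical. Trailing zero coefficients are removed so that list equality agrees with the coefficientwise equality used in the text, and the empty list plays the role of the zero polynomial.

```python
from fractions import Fraction


def trim(coeffs):
    """Drop trailing zero coefficients so equal polynomials compare equal."""
    coeffs = list(coeffs)
    while coeffs and coeffs[-1] == 0:
        coeffs.pop()
    return coeffs


def poly_add(f, g):
    """Add coefficient lists term by term, padding the shorter one with 0."""
    n = max(len(f), len(g))
    f = list(f) + [Fraction(0)] * (n - len(f))
    g = list(g) + [Fraction(0)] * (n - len(g))
    return trim(a + b for a, b in zip(f, g))


def poly_scale(lam, f):
    """Multiply every coefficient of f by the scalar lam."""
    return trim(lam * a for a in f)


f = [Fraction(1), Fraction(0), Fraction(2)]  # 1 + 2x^2
g = [Fraction(3), Fraction(-1)]              # 3 - x

print(poly_add(f, g))                        # coefficients of 4 - x + 2x^2
print(poly_add(f, poly_scale(Fraction(-1), f)))  # the zero polynomial, stored as []
```

Padding with zeros mirrors the convention that a polynomial has only finitely many non-zero coefficients, so two polynomials of different degree can still be added term by term.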

EXAMPLE-9 The set V of all real valued continuous (differentiable or integrable) functions

defined on the closed interval [a, b] is a real vector space with the vector addition and scalar

multiplication defined as follows:

( f + g)(x) = f (x) + g(x)

(λ f )(x) = λ f (x)

For all f , g ∈ V and λ ∈ R.


SOLUTION Since the sum of two continuous functions is a continuous function, vector addition is a binary operation on V. Also, if f is continuous and λ ∈ R, then λf is continuous, so scalar multiplication is defined on V. We know that for any f, g ∈ V

f = g ⇔ f(x) = g(x) for all x ∈ [a, b].

Thus, we have defined vector addition, scalar multiplication and equality in V.

It remains to verify V-1 and V-2(i) to V-2(iv).

V-1 V is an abelian group under vector addition.

Associativity: Let f, g, h be any three functions in V. Then, for all x ∈ [a, b],

[(f + g) + h](x) = (f + g)(x) + h(x) = [f(x) + g(x)] + h(x)

= f(x) + [g(x) + h(x)] [By associativity of addition on R]

= f(x) + (g + h)(x)

= [f + (g + h)](x)

So, (f + g) + h = f + (g + h).

Thus, vector addition is associative on V.

Commutativity: For any f, g ∈ V, we have

(f + g)(x) = f(x) + g(x) = g(x) + f(x) [By commutativity of + on R]

⇒ (f + g)(x) = (g + f)(x) for all x ∈ [a, b]

So, f + g = g + f.

Thus, vector addition is commutative on V.

Existence of additive identity: The function 0̂ defined by 0̂(x) = 0 for all x ∈ [a, b] is the additive identity, because for any f ∈ V

(f + 0̂)(x) = f(x) + 0̂(x) = f(x) + 0 = f(x) = (0̂ + f)(x) for all x ∈ [a, b].

Existence of additive inverse: Let f be an arbitrary function in V. Then, the function −f defined by (−f)(x) = −f(x) for all x ∈ [a, b] is continuous on [a, b], and

[f + (−f)](x) = f(x) + (−f)(x) = f(x) − f(x) = 0 = 0̂(x) for all x ∈ [a, b].

Thus, f + (−f) = 0̂ = (−f) + f.

So, each f ∈ V has its additive inverse −f ∈ V.

Hence, V is an abelian group under vector addition.


V-1. $V_1 \times V_2$ is an abelian group under vector addition:

Associativity: For any $(u_1, u_2), (v_1, v_2), (w_1, w_2) \in V_1 \times V_2$, we have

$$\begin{aligned}
[(u_1, u_2) + (v_1, v_2)] + (w_1, w_2) &= (u_1 + v_1, u_2 + v_2) + (w_1, w_2) \\
&= ((u_1 + v_1) + w_1, (u_2 + v_2) + w_2) \\
&= (u_1 + (v_1 + w_1), u_2 + (v_2 + w_2)) && \text{[By associativity of vector addition on } V_1 \text{ and } V_2\text{]} \\
&= (u_1, u_2) + (v_1 + w_1, v_2 + w_2) \\
&= (u_1, u_2) + [(v_1, v_2) + (w_1, w_2)]
\end{aligned}$$

So, vector addition is associative on $V_1 \times V_2$.

Commutativity: For any $(u_1, u_2), (v_1, v_2) \in V_1 \times V_2$, we have

$$\begin{aligned}
(u_1, u_2) + (v_1, v_2) &= (u_1 + v_1, u_2 + v_2) \\
&= (v_1 + u_1, v_2 + u_2) && \text{[By commutativity of vector addition on } V_1 \text{ and } V_2\text{]} \\
&= (v_1, v_2) + (u_1, u_2)
\end{aligned}$$

So, vector addition is commutative on $V_1 \times V_2$.

Existence of additive identity: If $0_{V_1}$ and $0_{V_2}$ are zero vectors in $V_1$ and $V_2$ respectively, then $(0_{V_1}, 0_{V_2})$ is the zero vector (additive identity) in $V_1 \times V_2$, because for any $(u_1, u_2) \in V_1 \times V_2$

$$\begin{aligned}
(u_1, u_2) + (0_{V_1}, 0_{V_2}) &= (u_1 + 0_{V_1}, u_2 + 0_{V_2}) \\
&= (u_1, u_2) = (0_{V_1}, 0_{V_2}) + (u_1, u_2) && \text{[By commutativity of vector addition]}
\end{aligned}$$

Existence of additive inverse: Let $(u_1, u_2) \in V_1 \times V_2$. Then, $(-u_1, -u_2)$ is its additive inverse, because

$$\begin{aligned}
(u_1, u_2) + (-u_1, -u_2) &= (u_1 + (-u_1), u_2 + (-u_2)) \\
&= (0_{V_1}, 0_{V_2}) = (-u_1, -u_2) + (u_1, u_2) && \text{[By commutativity of vector addition]}
\end{aligned}$$

Thus, each element of $V_1 \times V_2$ has its additive inverse in $V_1 \times V_2$.

Hence, $V_1 \times V_2$ is an abelian group under vector addition.

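V-2. For any $(u_1, u_2), (v_1, v_2) \in V_1 \times V_2$ and $\lambda, \mu \in F$, we have

(i)
$$\begin{aligned}
\lambda[(u_1, u_2) + (v_1, v_2)] &= \lambda(u_1 + v_1, u_2 + v_2) \\
&= (\lambda(u_1 + v_1), \lambda(u_2 + v_2)) \\
&= (\lambda u_1 + \lambda v_1, \lambda u_2 + \lambda v_2) && \text{[By V-2(i) in } V_1 \text{ and } V_2\text{]} \\
&= (\lambda u_1, \lambda u_2) + (\lambda v_1, \lambda v_2) \\
&= \lambda(u_1, u_2) + \lambda(v_1, v_2)
\end{aligned}$$

(ii)
$$\begin{aligned}
(\lambda + \mu)(u_1, u_2) &= ((\lambda + \mu)u_1, (\lambda + \mu)u_2) \\
&= (\lambda u_1 + \mu u_1, \lambda u_2 + \mu u_2) && \text{[By V-2(ii) in } V_1 \text{ and } V_2\text{]} \\
&= (\lambda u_1, \lambda u_2) + (\mu u_1, \mu u_2) \\
&= \lambda(u_1, u_2) + \mu(u_1, u_2)
\end{aligned}$$

(iii)
$$\begin{aligned}
(\lambda\mu)(u_1, u_2) &= ((\lambda\mu)u_1, (\lambda\mu)u_2) \\
&= (\lambda(\mu u_1), \lambda(\mu u_2)) && \text{[By V-2(iii) in } V_1 \text{ and } V_2\text{]} \\
&= \lambda(\mu u_1, \mu u_2) \\
&= \lambda\{\mu(u_1, u_2)\}
\end{aligned}$$

(iv)
$$\begin{aligned}
1(u_1, u_2) &= (1u_1, 1u_2) \\
&= (u_1, u_2) && \text{[By V-2(iv) in } V_1 \text{ and } V_2\text{]}
\end{aligned}$$

Hence, $V_1 \times V_2$ is a vector space over field F.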

EXAMPLE-11 Let V be a vector space over a field F. Then for any n ∈ N the function space $V^n = \{f \mid f : \{1, 2, \ldots, n\} \to V\}$ is a vector space over field F.

SOLUTION We define vector addition, scalar multiplication and equality in $V^n$ as follows:

Vector addition: Let $f, g \in V^n$, then the vector addition on $V^n$ is defined as

(f + g)(x) = f(x) + g(x) for all x ∈ {1, 2, …, n}.

Scalar multiplication: If λ ∈ F and $f \in V^n$, then λf is defined as

(λf)(x) = λ(f(x)) for all x ∈ {1, 2, …, n}.

Equality: $f, g \in V^n$ are defined as equal if f(x) = g(x) for all x ∈ {1, 2, …, n}.

Now we shall verify V-1 and V-2(i) to V-2(iv) for $V^n$.

V-1. $V^n$ is an abelian group under vector addition:

Associativity: For any $f, g, h \in V^n$, we have, for all x ∈ {1, 2, …, n},

$$\begin{aligned}
[(f + g) + h](x) &= (f + g)(x) + h(x) = [f(x) + g(x)] + h(x) \\
&= f(x) + [g(x) + h(x)] && \text{[By associativity of vector addition on } V\text{]} \\
&= f(x) + (g + h)(x) \\
&= [f + (g + h)](x)
\end{aligned}$$

∴ (f + g) + h = f + (g + h)

So, vector addition is associative on $V^n$.


Commutativity: For any $f, g \in V^n$, we have, for all x ∈ {1, 2, …, n},

$$\begin{aligned}
(f + g)(x) &= f(x) + g(x) \\
&= g(x) + f(x) && \text{[By commutativity of vector addition on } V\text{]} \\
&= (g + f)(x)
\end{aligned}$$

∴ f + g = g + f

So, vector addition is commutative on $V^n$.

Existence of additive identity (zero vector): The function $\hat{0} : \{1, 2, \ldots, n\} \to V$ defined by $\hat{0}(x) = 0_V$ for all x ∈ {1, 2, …, n} is the additive identity, because

$$(f + \hat{0})(x) = f(x) + \hat{0}(x) = f(x) + 0_V = f(x) \qquad [\because 0_V \text{ is zero vector in } V]$$

for all $f \in V^n$ and for all x ∈ {1, 2, …, n}.

Existence of additive inverse: Let f be an arbitrary function in $V^n$. Then $-f : \{1, 2, \ldots, n\} \to V$ defined by

(−f)(x) = −f(x) for all x ∈ {1, 2, …, n}

is the additive inverse of f, because

$$[f + (-f)](x) = f(x) + (-f)(x) = f(x) - f(x) = 0_V = \hat{0}(x)$$

for all x ∈ {1, 2, …, n}.

Hence, $V^n$ is an abelian group under vector addition.

V-2. For any $f, g \in V^n$ and $\lambda, \mu \in F$, we have, for all x ∈ {1, 2, …, n},

(i)
$$\begin{aligned}
[\lambda(f + g)](x) &= \lambda[(f + g)(x)] = \lambda[f(x) + g(x)] \\
&= \lambda f(x) + \lambda g(x) && \text{[By V-2(i) in } V\text{]} \\
&= (\lambda f + \lambda g)(x)
\end{aligned}$$

∴ λ(f + g) = λf + λg.

(ii)
$$\begin{aligned}
[(\lambda + \mu) f](x) &= (\lambda + \mu) f(x) \\
&= \lambda f(x) + \mu f(x) && \text{[By V-2(ii) in } V\text{]} \\
&= (\lambda f)(x) + (\mu f)(x) \\
&= (\lambda f + \mu f)(x)
\end{aligned}$$

∴ (λ + µ)f = λf + µf

(iii)
$$\begin{aligned}
[(\lambda\mu) f](x) &= (\lambda\mu) f(x) \\
&= \lambda(\mu f(x)) && \text{[By V-2(iii) in } V\text{]} \\
&= \lambda((\mu f)(x))
\end{aligned}$$


9. Let U and W be two vector spaces over a field F. Let V be the set of ordered pairs (u, w) where u ∈ U and w ∈ W. Show that V is a vector space over F with addition in V and scalar multiplication on V defined by

$(u_1, w_1) + (u_2, w_2) = (u_1 + u_2, w_1 + w_2)$

and

a(u, w) = (au, aw)

(This space is called the external direct product of U and W).

10. Let V be a vector space over a field F and n ∈ N. Let $V^n = \{(v_1, v_2, \ldots, v_n) : v_i \in V;\ i = 1, 2, \ldots, n\}$. Show that $V^n$ is a vector space over field F with addition and scalar multiplication on $V^n$ defined by

$(u_1, u_2, \ldots, u_n) + (v_1, v_2, \ldots, v_n) = (u_1 + v_1, u_2 + v_2, \ldots, u_n + v_n)$

and

$a(v_1, v_2, \ldots, v_n) = (av_1, av_2, \ldots, av_n)$

11. Let V = {(x, y) : x, y ∈ R}. Show that V is not a vector space over R under the addition

and scalar multiplication defined by

(a1 , b1 ) + (a2 , b2 ) = (3b1 + 3b2 , −a1 − a2 )

k(a1 , b1 ) = (3kb1 , −ka1 )

for all (a1 , b1 ), (a2 , b2 ) ∈ V and k ∈ R.

12. Let V = {(x, y) : x, y ∈ R}. For any $\alpha = (x_1, y_1)$, $\beta = (x_2, y_2) \in V$, c ∈ R, define

$\alpha \oplus \beta = (x_1 + x_2 + 1, y_1 + y_2 + 1)$

$c \odot \alpha = (cx_1, cy_1)$

(i) Prove that (V, ⊕) is an abelian group.

(ii) Verify that $c \odot (\alpha \oplus \beta) \neq c \odot \alpha \oplus c \odot \beta$ in general.

(iii) Prove that V is not a vector space over R under the above two operations.

13. For any $u = (x_1, x_2, x_3)$, $v = (y_1, y_2, y_3) \in R^3$ and a ∈ R, let us define

$u \oplus v = (x_1 + y_1 + 1, x_2 + y_2 + 1, x_3 + y_3 + 1)$

$a \odot u = (ax_1 + a - 1, ax_2 + a - 1, ax_3 + a - 1)$

Prove that $R^3$ is a vector space over R under these two operations.

14. Let V denote the set of all positive real numbers. For any u, v ∈ V and a ∈ R, define

$u \oplus v = uv$

$a \odot u = u^a$

Prove that V is a vector space over R under these two operations.

15. Prove that R3 is not a vector space over R under the vector addition and scalar multiplication defined as follows:

(x1 , x2 , x3 ) + (y1 , y2 , y3 ) = (x1 + y1 , x2 + y2 , x3 + y3 )

a(x1 , x2 , x3 ) = (ax1 , ax2 , ax3 )


16. Let V = {(x, 1) : x ∈ R}. For any u = (x, 1), v = (y, 1) ∈ V and a ∈ R, define

u ⊕ v = (x + y, 1)

a ⊙ u = (ax, 1)

Prove that V is a vector space over R under these two operations.

17. Let V be the set of ordered pairs (a, b) of real numbers. Let us define

(a, b) ⊕ (c, d) = (a + c, b + d)

and

k(a, b) = (ka, 0)

Show that V is not a vector space over R.

18. Let V be the set of ordered pairs (a, b) of real numbers. Show that V is not a vector space over R with addition and scalar multiplication defined by

(i) (a, b) + (c, d) = (a + d, b + c) and k(a, b) = (ka, kb)

(ii) (a, b) + (c, d) = (a + c, b + d) and k(a, b) = (a, b)

(iii) (a, b) + (c, d) = (0, 0) and k(a, b) = (ka, kb)

(iv) (a, b) + (c, d) = (ac, bd) and k(a, b) = (ka, kb)

19. The set V of all convergent real sequences is a vector space over the field R of all real

numbers.

20. Let p be a prime number. The set $Z_p = \{0, 1, 2, \ldots, (p - 1)\}$ is a field under addition and multiplication modulo p as binary operations. Let V be the vector space of polynomials of degree at most n over the field $Z_p$. Find the number of elements in V.

[Hint: Each polynomial in V is of the form $f(x) = a_0 + a_1 x + \cdots + a_n x^n$, where $a_0, a_1, a_2, \ldots, a_n \in Z_p$. Each $a_i$ can take any one of the p values 0, 1, 2, …, (p − 1). So, there are $p^{n+1}$ elements in V.]
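The count in the hint to Exercise 20 can be confirmed by brute force for small cases. The sketch below is only an illustration: it represents a polynomial of degree at most n over $Z_p$ by its coefficient tuple $(a_0, a_1, \ldots, a_n)$, and the function names are hypothetical. It does not check that p is prime, since the counting argument itself does not depend on that.

```python
from itertools import product


def polynomials_of_degree_at_most(n, p):
    """Yield each polynomial over Z_p of degree <= n as (a_0, a_1, ..., a_n)."""
    return product(range(p), repeat=n + 1)


def count_polynomials(n, p):
    """Count the coefficient tuples by enumerating all of them."""
    return sum(1 for _ in polynomials_of_degree_at_most(n, p))


for n, p in [(2, 3), (3, 2), (1, 5)]:
    # Each of the n + 1 coefficients has p choices, so the count is p ** (n + 1).
    assert count_polynomials(n, p) == p ** (n + 1)
```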

2.3 ELEMENTARY PROPERTIES OF VECTOR SPACES

THEOREM-1 Let V be a vector space over a field F. Then

(i) a · 0V = 0V for all a ∈ F

(ii) 0 · v = 0V for all v ∈ V

(iii) a · (−v) = −(a · v) = (−a) · v for all v ∈ V and for all a ∈ F

(iv) (−1) · v = −v for all v ∈ V

(v) a · v = 0V ⇒ either a = 0 or v = 0V


PROOF. (i) We have,

a · 0V = a · (0V + 0V) for all a ∈ F [∵ 0V + 0V = 0V]

⇒ a · 0V = a · 0V + a · 0V for all a ∈ F [By V-2(i)]

⇒ a · 0V + 0V = a · 0V + a · 0V for all a ∈ F [∵ 0V is additive identity in V]

∴ 0V = a · 0V for all a ∈ F [By left cancellation law for addition on V]

(ii) For any v ∈ V, we have

0 · v = (0 + 0) · v [∵ 0 is additive identity in F]

⇒ 0 · v = 0 · v + 0 · v [By V-2(ii)]

⇒ 0 · v + 0V = 0 · v + 0 · v [∵ 0V is additive identity in V]

∴ 0 · v = 0V [By left cancellation law for addition in V]

So, 0 · v = 0V for all v ∈ V.

(iii) For any v ∈ V and a ∈ F, we have

a · [v + (−v)] = a · v + a · (−v) [By V-2(i)]

⇒ a · 0V = a · v + a · (−v) [∵ v + (−v) = 0V for all v ∈ V]

⇒ 0V = a · v + a · (−v) [By (i)]

⇒ a · (−v) = −(a · v) [∵ V is an additive group]

Again,

[a + (−a)] · v = a · v + (−a) · v [By V-2(ii)]

⇒ 0 · v = a · v + (−a) · v [∵ a + (−a) = 0]

⇒ 0V = a · v + (−a) · v [By (ii)]

⇒ (−a) · v = −(a · v) [∵ V is an additive group]

Hence, a · (−v) = −(a · v) = (−a) · v for all a ∈ F and for all v ∈ V.

(iv) Taking a = 1 in (iii), we obtain

(−1) · v = −v for all v ∈ V.

(v) Let a · v = 0V, and let a ≠ 0. Then a⁻¹ ∈ F.

Now a · v = 0V

⇒ a⁻¹ · (a · v) = a⁻¹ · 0V

⇒ (a⁻¹a) · v = a⁻¹ · 0V [By V-2(iii)]

⇒ (a⁻¹a) · v = 0V [By (i)]

⇒ 1 · v = 0V [∵ a⁻¹a = 1]

⇒ v = 0V [By V-2(iv)]

Again let a · v = 0V and v ≠ 0V. Then to prove that a = 0, suppose a ≠ 0. Then a⁻¹ ∈ F.

Now a · v = 0V

⇒ a⁻¹ · (a · v) = a⁻¹ · 0V

⇒ (a⁻¹a) · v = a⁻¹ · 0V [By V-2(iii)]

⇒ 1 · v = 0V [By (i)]

⇒ v = 0V [By V-2(iv)], which is a contradiction.

Thus, a · v = 0V and v ≠ 0V ⇒ a = 0.

Hence, a · v = 0V ⇒ either a = 0 or v = 0V.

Q.E.D.

It follows from this theorem that multiplying the zero vector of V by any scalar in F, or multiplying any vector in V by the zero of F, always gives the zero vector of V.

Since it is convenient to write u − v instead of u + (−v), in what follows we shall write u − v for u + (−v).

REMARK.

For the sake of convenience, we drop the scalar multiplication ‘·’ and shall write av

in place of a · v.

THEOREM-2 Let V be a vector space over a field F. Then,

(i) If u, v ∈ V and 0 ≠ a ∈ F, then au = av ⇒ u = v

(ii) If a, b ∈ F and 0V ≠ u ∈ V, then au = bu ⇒ a = b

PROOF. (i) We have,

au = av

⇒ au + (−(av)) = av + (−(av))

⇒ au − av = 0V

⇒ a(u − v) = 0V

⇒ u − v = 0V [By Theorem 1(v), since a ≠ 0]

⇒ u = v

(ii) We have,

au = bu

⇒ au + (−(bu)) = bu + (−(bu))

⇒ au − bu = 0V

⇒ (a − b)u = 0V

⇒ a − b = 0 [By Theorem 1(v), since u ≠ 0V]

⇒ a = b

Q.E.D.


EXERCISE 2.2

1. Let V be a vector space over a field F. Then prove that

a(u − v) = au − av

for all a ∈ F and u, v ∈ V.

2. Let V be a vector space over field R and u, v ∈ V. Simplify each of the following:

(i) 4(5u − 6v) + 2(3u + v)

(ii) 6(3u + 2v) + 5u − 7v

(iii) 5(2u − 3v) + 4(7v + 8)

(iv) 3(5u + 2/v)

3. Show that the commutativity of vector addition in a vector space V can be derived from the other axioms in the definition of V.

4. Mark each of the following as true or false.

(i) Null vector in a vector space is unique.

(ii) Let V (F) be a vector space. Then scalar multiplication on V is a binary operation

on V .

(iii) Let V (F) be a vector space. Then au = av ⇒ u = v for all u, v ∈ V and for all

a ∈ F.

(iv) Let V (F) be a vector space. Then au = bu ⇒ a = b for all u ∈ V and for all

a, b ∈ F.

5. If V(F) is a vector space, then prove that for any integer n, λ ∈ F and v ∈ V,

n(λv) = λ(nv) = (nλ)v.

ANSWERS

2. (i) 26u − 22v

(ii) 23u + 5v

(iii) The sum 7v + 8 is not defined, so the given expression is not meaningful.

(iv) Division by v is not defined, so the given expression is not meaningful.

4. (i) T (ii) F (iii) F (iv) F

2.4 SUBSPACES

SUB-SPACE Let V be a vector space over a field F. A non-void subset S of V is said to be a subspace of V if S itself is a vector space over F under the operations on V restricted to S.

If V is a vector space over a field F, then the null (zero) space {0V} and the entire space V are subspaces of V. These two subspaces are called trivial (improper) subspaces of V and any other subspace of V is called a non-trivial (proper) subspace of V.

THEOREM-1 (Criterion for a non-void subset to be a subspace) Let V be a vector space over a field F. A non-void subset S of V is a subspace of V iff for all u, v ∈ S and for all a ∈ F

(i) u − v ∈ S and, (ii) au ∈ S.


PROOF. First suppose that S is a subspace of vector space V . Then S itself is a vector space

over field F under the operations on V restricted to S. Consequently, S is subgroup of the

additive abelian group V and is closed under scalar multiplication.

Hence, (i) and (ii) hold.

Conversely, suppose that S is a non-void subset of V such that (i) and (ii) hold. Then,

(i) ⇒ S is an additive subgroup of V and therefore S is an abelian group under vector

addition.

(ii) ⇒ S is closed under scalar multiplication.

Axioms V -2(i) to V -2(iv) hold for all elements in S as they hold for elements in V .

Hence, S is a subspace of V .

Q.E.D.

THEOREM-2 (Another criterion for a non-void subset to be a subspace) Let V be a vector

space over a field F. Then a non-void subset S of V is a subspace of V iff au + bv ∈ S for all

u, v ∈ S and for all a, b ∈ F.

PROOF. First let S be a subspace of V. Then,

au ∈ S, bv ∈ S for all u, v ∈ S and for all a, b ∈ F [By Theorem 1]

⇒ au + bv ∈ S for all u, v ∈ S and for all a, b ∈ F [∵ S is closed under vector addition]

Conversely, let S be a non-void subset of V such that au + bv ∈ S for all u, v ∈ S and for all a, b ∈ F.

Since 1, −1 ∈ F, therefore

1u + (−1)v ∈ S for all u, v ∈ S

⇒ u − v ∈ S for all u, v ∈ S. (i)

Again, since 0 ∈ F, therefore

au + 0v ∈ S for all u, v ∈ S and for all a ∈ F

⇒ au ∈ S for all u ∈ S and for all a ∈ F. (ii)

From (i) and (ii), we get

u − v ∈ S and au ∈ S for all u, v ∈ S and for all a ∈ F.

Hence, by Theorem 1, S is a subspace of vector space V.

Q.E.D.
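The criterion of Theorem 2 lends itself to a mechanical check when the field is finite. The following sketch is an illustration under stated assumptions: it works in $Z_3^3$ (triples over the integers modulo 3) rather than $R^3$, so that every combination au + bv can be enumerated, and the names `combo` and `is_subspace` are hypothetical helpers.

```python
from itertools import product

P = 3  # assumption: the finite field Z_3, so the test below is exhaustive
SPACE = list(product(range(P), repeat=3))  # all vectors of Z_3^3


def combo(a, u, b, v):
    """Return the linear combination a*u + b*v, computed componentwise mod P."""
    return tuple((a * x + b * y) % P for x, y in zip(u, v))


def is_subspace(subset):
    """Theorem 2: S is a subspace iff S is non-void and au + bv lies in S."""
    s = set(subset)
    if not s:
        return False
    return all(
        combo(a, u, b, v) in s
        for a, b in product(range(P), repeat=2)
        for u, v in product(s, repeat=2)
    )


plane = {w for w in SPACE if w[0] == 0}     # vectors of the form (0, b, c)
shifted = {w for w in SPACE if w[0] == 1}   # vectors of the form (1, b, c)
diagonal = {(x, x, x) for x in range(P)}    # vectors with equal components

print(is_subspace(plane), is_subspace(shifted), is_subspace(diagonal))
```

The set `shifted` fails because taking a = b = 0 produces the zero vector, which it does not contain; this matches the general fact that every subspace contains 0V.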

ILLUSTRATIVE EXAMPLES

EXAMPLE-1

Show that S = {(0, b, c) : b, c ∈ R} is a subspace of real vector space $R^3$.

SOLUTION Obviously, S is a non-void subset of $R^3$. Let $u = (0, b_1, c_1)$, $v = (0, b_2, c_2) \in S$ and $\lambda, \mu \in R$. Then,

$$\lambda u + \mu v = \lambda(0, b_1, c_1) + \mu(0, b_2, c_2)$$

$$\Rightarrow \lambda u + \mu v = (0, \lambda b_1 + \mu b_2, \lambda c_1 + \mu c_2) \in S.$$

Hence, S is a subspace of $R^3$.

REMARK. Geometrically, $R^3$ is three-dimensional Euclidean space and S is the yz-plane, which is itself a vector space. Hence, S is a subspace of $R^3$. Similarly, {(a, b, 0) : a, b ∈ R}, i.e. the xy-plane, and {(a, 0, c) : a, c ∈ R}, i.e. the xz-plane, are subspaces of $R^3$. In fact, every plane through the origin is a subspace of $R^3$. Also, the coordinate axes {(a, 0, 0) : a ∈ R}, i.e. x-axis, {(0, a, 0) : a ∈ R}, i.e. y-axis and {(0, 0, a) : a ∈ R}, i.e. z-axis are subspaces of $R^3$.

EXAMPLE-2 Let V denote the vector space $R^3$, i.e. V = {(x, y, z) : x, y, z ∈ R}, and let S consist of all vectors in $R^3$ whose components are equal, i.e. S = {(x, x, x) : x ∈ R}. Show that S is a subspace of V.

SOLUTION Clearly, S is a non-empty subset of $R^3$ as (0, 0, 0) ∈ S.

Let u = (x, x, x) and v = (y, y, y) ∈ S and a, b ∈ R. Then,

au + bv = a(x, x, x) + b(y, y, y)

⇒ au + bv = (ax + by, ax + by, ax + by) ∈ S.

Thus, au + bv ∈ S for all u, v ∈ S and a, b ∈ R.

Hence, S is a subspace of V.

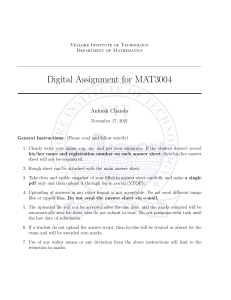

REMARK. Geometrically S, in the above example, represents the line passing through the

origin O and having direction ratios proportional to 1,1,1 as shown in the following figure.

[Figure: the line S through the origin O, shown with the coordinate axes X, Y and Z.]




EXAMPLE-3 Let a1, a2, a3 be fixed elements of a field F. Then the set S of all triples (x1, x2, x3) of elements of F such that a1x1 + a2x2 + a3x3 = 0 is a subspace of F³.

SOLUTION S is non-empty, since (0, 0, 0) ∈ S. Let u = (x1, x2, x3), v = (y1, y2, y3) be any two elements of S. Then x1, x2, x3, y1, y2, y3 ∈ F are such that

a1x1 + a2x2 + a3x3 = 0   (i)

and, a1y1 + a2y2 + a3y3 = 0   (ii)

Let a, b be any two elements of F. Then,

au + bv = a(x1, x2, x3) + b(y1, y2, y3) = (ax1 + by1, ax2 + by2, ax3 + by3).

Now,

a1(ax1 + by1) + a2(ax2 + by2) + a3(ax3 + by3)

= a(a1x1 + a2x2 + a3x3) + b(a1y1 + a2y2 + a3y3)

= a0 + b0 = 0   [From (i) and (ii)]

∴ au + bv = (ax1 + by1, ax2 + by2, ax3 + by3) ∈ S.

Thus, au + bv ∈ S for all u, v ∈ S and all a, b ∈ F. Hence, S is a subspace of F³.
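The closure computation in Example 3 can be spot-checked numerically. The following is only a sketch over the rationals: the coefficients (1, −2, 1), the sample vectors, and the helper names `in_S` and `lin_comb` are illustrative assumptions, not part of the text.

```python
from fractions import Fraction

def in_S(coeffs, x):
    """True when a1*x1 + a2*x2 + a3*x3 == 0, i.e. x lies in S."""
    return sum(a * xi for a, xi in zip(coeffs, x)) == 0

def lin_comb(a, u, b, v):
    """Return the vector a*u + b*v, computed componentwise."""
    return tuple(a * ui + b * vi for ui, vi in zip(u, v))

# Hypothetical fixed coefficients a1, a2, a3 and two members of S.
coeffs = (Fraction(1), Fraction(-2), Fraction(1))
u = (Fraction(2), Fraction(1), Fraction(0))   # 2 - 2 + 0 = 0
v = (Fraction(1), Fraction(1), Fraction(1))   # 1 - 2 + 1 = 0

# An arbitrary combination au + bv stays inside S.
w = lin_comb(Fraction(3), u, Fraction(-5, 2), v)
print(in_S(coeffs, u), in_S(coeffs, v), in_S(coeffs, w))
```

A single numerical check is of course no proof; it only illustrates the identity a(a1x1 + a2x2 + a3x3) + b(a1y1 + a2y2 + a3y3) = 0 used above.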

EXAMPLE-4 Show that the set S of all n × n symmetric matrices over a field F is a subspace of the vector space F^(n×n) of all n × n matrices over field F.

SOLUTION Note that a square matrix A is symmetric, if Aᵀ = A.

Obviously, S is a non-void subset of F^(n×n), because the null matrix 0n×n is symmetric.

Let A, B ∈ S and λ, µ ∈ F. Then,

(λA + µB)ᵀ = λAᵀ + µBᵀ

⇒ (λA + µB)ᵀ = λA + µB   [∵ A, B ∈ S ∴ Aᵀ = A, Bᵀ = B]

⇒ λA + µB ∈ S.

Thus, S is a non-void subset of F^(n×n) such that λA + µB ∈ S for all A, B ∈ S and for all λ, µ ∈ F.

Hence, S is a subspace of F^(n×n).
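As a small illustration of Example 4, the sketch below checks that one particular combination λA + µB of two symmetric 2 × 2 integer matrices is again symmetric. The matrices and the helper names (`transpose`, `is_symmetric`, `lin_comb`) are assumptions chosen for the illustration.

```python
def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*M)]

def is_symmetric(M):
    """A square matrix is symmetric when it equals its transpose."""
    return M == transpose(M)

def lin_comb(lam, A, mu, B):
    """Return lam*A + mu*B, computed entrywise."""
    return [[lam * a + mu * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

A = [[1, 2], [2, 5]]      # symmetric
B = [[0, -3], [-3, 4]]    # symmetric
C = lin_comb(2, A, -1, B)
print(C, is_symmetric(C))
```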

EXAMPLE-5 Let V be a vector space over a field F and let v ∈ V. Then Fv = {av : a ∈ F} is a subspace of V.

SOLUTION Since 1 ∈ F, therefore v = 1v ∈ Fv. Thus, Fv is a non-void subset of V.

Let α, β ∈ Fv. Then, α = a1v, β = a2v for some a1, a2 ∈ F.


Let λ, µ ∈ F. Then,

λα + µβ = λ(a1v) + µ(a2v)

⇒ λα + µβ = (λa1)v + (µa2)v   [By V-2(iii)]

⇒ λα + µβ = (λa1 + µa2)v   [By V-2(ii)]

⇒ λα + µβ ∈ Fv   [∵ λa1 + µa2 ∈ F]

Thus, Fv is a non-void subset of V such that λα + µβ ∈ Fv for all α, β ∈ Fv and for all λ, µ ∈ F.

Hence, Fv is a subspace of V.

EXAMPLE-6 Let AX = O be a homogeneous system of linear equations, where A is an n × n matrix over R. Let S be the solution set of this system of equations. Show that S is a subspace of Rⁿ.

SOLUTION Every solution U of AX = O may be viewed as a vector in Rⁿ. So, the solution set of AX = O is a subset of Rⁿ. Clearly, AO = O. So, the zero vector O ∈ S. Consequently, S is a non-empty subset of Rⁿ.

Let X1, X2 ∈ S. Then,

X1 and X2 are solutions of AX = O

⇒ AX1 = O and AX2 = O   (i)

Let a, b be any two scalars in R. Then,

A(aX1 + bX2) = a(AX1) + b(AX2) = aO + bO = O   [Using (i)]

⇒ aX1 + bX2 is a solution of AX = O

⇒ aX1 + bX2 ∈ S.

Thus, aX1 + bX2 ∈ S for all X1, X2 ∈ S and a, b ∈ R.

Hence, S is a subspace of Rⁿ.

REMARK. The solution set of a non-homogeneous system AX = B (B ≠ O) of linear equations in n unknowns is not a subspace of Rⁿ, because the zero vector O does not belong to its solution set.
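The argument of Example 6 and the remark that follows it can be illustrated with a concrete system. The matrix A, the solutions X1, X2 and the right-hand side B below are hypothetical values chosen for this sketch; `mat_vec` and `lin_comb` are illustrative helper names.

```python
def mat_vec(A, X):
    """Return the product AX for a matrix A (list of rows) and a vector X."""
    return [sum(a * x for a, x in zip(row, X)) for row in A]

def lin_comb(a, X1, b, X2):
    """Return the vector a*X1 + b*X2."""
    return [a * p + b * q for p, q in zip(X1, X2)]

# Hypothetical 3 x 3 system; every row is a multiple of x + y - 2z = 0.
A = [[1, 1, -2], [2, 2, -4], [0, 0, 0]]
X1 = [2, 0, 1]
X2 = [1, 1, 1]

# A combination of two solutions is again a solution of AX = O.
Y = lin_comb(3, X1, -4, X2)
print(mat_vec(A, X1), mat_vec(A, X2), mat_vec(A, Y))

# For a non-homogeneous system AX = B with B != O, the zero vector fails.
B = [1, 2, 0]
print(mat_vec(A, [0, 0, 0]) == B)
```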

EXAMPLE-7 Let V be the vector space of all real valued continuous functions over the field R of all real numbers. Show that the set S of solutions of the differential equation

$$2\frac{d^2y}{dx^2} - 9\frac{dy}{dx} + 2y = 0$$

is a subspace of V.

SOLUTION We have,

$$S = \left\{ y : 2\frac{d^2y}{dx^2} - 9\frac{dy}{dx} + 2y = 0 \right\},$$

where y = f(x).


Now,

(aa1 + ba2) + (ab1 + bb2) + 2(ac1 + bc2)

= a(a1 + b1 + 2c1) + b(a2 + b2 + 2c2)

= a × 0 + b × 0 = 0   [Using (i)]

∴ au + bv = (aa1 + ba2, ab1 + bb2, ac1 + bc2) ∈ S

Thus, au + bv ∈ S for all u, v ∈ S and a, b ∈ R.

Hence, S is a subspace of R³.

EXAMPLE-9 Let S be the set of all elements of the form (x + 2y, y, −x + 3y) in R³, where x, y ∈ R. Show that S is a subspace of R³.

SOLUTION We have, S = {(x + 2y, y, −x + 3y) : x, y ∈ R}

Let u, v be any two elements of S. Then,

u = (x1 + 2y1, y1, −x1 + 3y1), v = (x2 + 2y2, y2, −x2 + 3y2)

where x1, y1, x2, y2 ∈ R.

Let a, b be any two elements of R. In order to prove that S is a subspace of R³, we have to prove that au + bv ∈ S, for which we have to show that au + bv is expressible in the form (α + 2β, β, −α + 3β).

Now,

au + bv = a(x1 + 2y1, y1, −x1 + 3y1) + b(x2 + 2y2, y2, −x2 + 3y2)

⇒ au + bv = ((ax1 + bx2) + 2(ay1 + by2), ay1 + by2, −(ax1 + bx2) + 3(ay1 + by2))

⇒ au + bv = (α + 2β, β, −α + 3β), where α = ax1 + bx2 and β = ay1 + by2

⇒ au + bv ∈ S.

Thus, au + bv ∈ S for all u, v ∈ S and a, b ∈ R.

Hence, S is a subspace of R³.

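EXAMPLE-10 Let V be the vector space of all 2 × 2 matrices over the field R of all real numbers. Show that:

(i) the set S of all 2 × 2 singular matrices over R is not a subspace of V.

(ii) the set S of all 2 × 2 matrices A satisfying A² = A is not a subspace of V.

SOLUTION (i) We observe that

$$A = \begin{bmatrix} 0 & a \\ 0 & 0 \end{bmatrix}, \quad B = \begin{bmatrix} 0 & 0 \\ b & 0 \end{bmatrix}, \quad a \neq 0,\ b \neq 0$$

are elements of S. But

$$A + B = \begin{bmatrix} 0 & a \\ b & 0 \end{bmatrix}$$

is not an element of S as |A + B| = −ab ≠ 0.

So, S is not a subspace of V.

(ii) We observe that

$$I = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \in S \text{ as } I^2 = I. \quad \text{But, } 2I = \begin{bmatrix} 2 & 0 \\ 0 & 2 \end{bmatrix} \notin S, \text{ because}$$

$$(2I)^2 = \begin{bmatrix} 2 & 0 \\ 0 & 2 \end{bmatrix} \begin{bmatrix} 2 & 0 \\ 0 & 2 \end{bmatrix} = \begin{bmatrix} 4 & 0 \\ 0 & 4 \end{bmatrix} \neq 2I.$$

So, S is not a subspace of V.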
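Both counterexamples of Example 10 can be reproduced with a few lines of arithmetic. The sketch below takes a = 3 and b = 2 as sample non-zero values (an assumption; any non-zero values work) and uses illustrative helper names.

```python
def det2(M):
    """Determinant of a 2 x 2 matrix stored as a list of rows."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def add(A, B):
    """Entrywise sum of two 2 x 2 matrices."""
    return [[A[i][j] + B[i][j] for j in range(2)] for i in range(2)]

def mul(A, B):
    """Product of two 2 x 2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def scale(c, A):
    """Scalar multiple c*A."""
    return [[c * x for x in row] for row in A]

# (i) Two singular matrices whose sum is non-singular (a = 3, b = 2).
A = [[0, 3], [0, 0]]
B = [[0, 0], [2, 0]]
print(det2(A), det2(B), det2(add(A, B)))

# (ii) I is idempotent, but 2I is not.
I = [[1, 0], [0, 1]]
twoI = scale(2, I)
print(mul(I, I) == I, mul(twoI, twoI) == twoI)
```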

SMALLEST SUBSPACE CONTAINING A GIVEN SUBSET. Let V be a vector space over a field F, and let W be a subset of V. Then a subspace S of V is called the smallest subspace of V containing W, if

(i) W ⊂ S

and

(ii) S′ is a subspace of V such that W ⊂ S′ ⇒ S ⊂ S′.

The smallest subspace containing W is also called the subspace generated by W or subspace

spanned by W and is denoted by [W ]. If W is a finite set, then S is called a finitely generated

space.

In Example 5, Fv is a finitely generated subspace of V containing {v}.

EXERCISE 2.3

1. Show that the set of all upper (lower) triangular matrices over field C of all complex

numbers is a subspace of the vector space V of all n × n matrices over C.

2. Let V be the vector space of real-valued functions. Then, show that the set S of all

continuous functions and set T of all differentiable functions are subspaces of V .

3. Let V be the vector space of all polynomials in indeterminate x over a field F and S be

the set of all polynomials of degree at most n. Show that S is a subspace of V .

4. Let V be the vector space of all n × n square matrices over a field F. Show that:

(i) the set S of all symmetric matrices over F is a subspace of V .

(ii) the set S of all upper triangular matrices over F is a subspace of V .

(iii) the set S of all diagonal matrices over F is a subspace of V .

(iv) the set S of all scalar matrices over F is a subspace of V .

5. Let V be the vector space of all functions from the real field R into R. Show that S is a

subspace of V where S consists of all:

(i) bounded functions.

(ii) even functions.

(iii) odd functions.

6. Let V be the vector space R3 . Which of the following subsets of V are subspaces of V ?

(i) S1 = {(a, b, c) : a + b = 0}

(ii) S2 = {(a, b, c) : a = 2b + 1}


12. Which of the following sets of vectors α = (a1 , a2 , . . . , an ) in Rn are subspaces of Rn ?

(n ≥ 3).

(i) all α such that a1 ≥ 0

(ii) all α such that a1 + 3a2 = a3

(iii) all α such that a2 = a1²

(iv) all α such that a1 a2 = 0

(v) all α such that a2 is rational.

13. Let V be the vector space of all functions from R → R over the field R of all real numbers.

Show that each of the following subsets of V are subspaces of V .

(i) S1 = { f : f : R → R satisfies f (0) = f (1)}

(ii) S2 = { f : f : R → R is continuous }

(iii) S3 = { f : f : R → R satisfies f (−1) = 0}

14. Let V be the vector space of all functions from R to R over the field R of all real numbers.

Show that each of the following subsets of V is not a subspace of V .

(i) S1 = { f : f satisfies f(x²) = {f(x)}² for all x ∈ R }

(ii) S2 = { f : f satisfies f (3) − f (−5) = 1}

15. Let R^(n×n) be the vector space of all n × n real matrices. Prove that the set S consisting of all n × n real matrices which commute with a given matrix A in R^(n×n) forms a subspace of R^(n×n).

16. Let C be the field of all complex numbers and let n be a positive integer such that n ≥ 2.

Let V be the vector space of all n × n matrices over C. Show that the following subsets

of V are not subspaces of V .

(i) S = {A : A is invertible} (ii) S = {A : A is not invertible}

17. Prove that the set of vectors (x1, x2, . . . , xn) ∈ Rⁿ satisfying the m equations

a11x1 + a12x2 + · · · + a1nxn = 0

a21x1 + a22x2 + · · · + a2nxn = 0

⋮

am1x1 + am2x2 + · · · + amnxn = 0,

where the aij's are fixed reals, is a subspace of Rⁿ(R).


If S1 ⊂ S2 , then S1 ∪ S2 = S2 , which is a subspace of V . Again, if S2 ⊂ S1 , then S1 ∪ S2 = S1

which is also a subspace of V .

Hence, in either case S1 ∪ S2 is a subspace of V .

Conversely, suppose that S1 ∪ S2 is a subspace of V . Then we have to prove that either

S1 ⊂ S2 or S2 ⊂ S1 .

If possible, let S1 ⊄ S2 and S2 ⊄ S1. Then,

S1 ⊄ S2 ⇒ there exists u ∈ S1 such that u ∉ S2

and, S2 ⊄ S1 ⇒ there exists v ∈ S2 such that v ∉ S1.

Now,

u ∈ S1, v ∈ S2

⇒ u, v ∈ S1 ∪ S2

⇒ u + v ∈ S1 ∪ S2   [∵ S1 ∪ S2 is a subspace of V]

⇒ u + v ∈ S1 or, u + v ∈ S2.

If u + v ∈ S1

⇒ (u + v) − u ∈ S1   [∵ u ∈ S1 and S1 is a subspace of V]

⇒ v ∈ S1, which is a contradiction   [∵ v ∉ S1]

If u + v ∈ S2

⇒ (u + v) − v ∈ S2   [∵ v ∈ S2 and S2 is a subspace of V]

⇒ u ∈ S2, which is a contradiction   [∵ u ∉ S2]

The contradictions arise by assuming that S1 ⊄ S2 and S2 ⊄ S1.

Hence, either S1 ⊂ S2 or, S2 ⊂ S1.

Q.E.D.

REMARK. In general, the union of two subspaces of a vector space is not necessarily a subspace. For example, S1 = {(a, 0, 0) : a ∈ R} and S2 = {(0, b, 0) : b ∈ R} are subspaces of R³. But, their union S1 ∪ S2 is not a subspace of R³, because (2, 0, 0), (0, −1, 0) ∈ S1 ∪ S2, but (2, 0, 0) + (0, −1, 0) = (2, −1, 0) ∉ S1 ∪ S2.

As remarked above the union of two subspaces is not always a subspace. Therefore, unions

are not very useful in the study of vector spaces and we shall say no more about them.
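The counterexample in the remark above is easy to replay. In this sketch the membership tests `in_S1`, `in_S2` and `in_union` are illustrative names for the x-axis, the y-axis and their union in R³.

```python
def in_S1(p):
    """S1 = {(a, 0, 0)}: the x-axis."""
    return p[1] == 0 and p[2] == 0

def in_S2(p):
    """S2 = {(0, b, 0)}: the y-axis."""
    return p[0] == 0 and p[2] == 0

def in_union(p):
    """Membership in S1 ∪ S2."""
    return in_S1(p) or in_S2(p)

u = (2, 0, 0)
v = (0, -1, 0)
s = tuple(a + b for a, b in zip(u, v))   # u + v

# Both vectors lie in the union, but their sum does not.
print(in_union(u), in_union(v), s, in_union(s))
```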

THEOREM-4 The intersection of the family of all subspaces of V(F) containing a given subset S of V is the smallest subspace of V containing S, i.e. the subspace generated by S.

PROOF. Let {Si : i ∈ I} be the family of all subspaces of V(F) containing a subset S of V. Let $T = \bigcap_{i \in I} S_i$. Then by Theorem 2, T is a subspace of V. Since Si ⊃ S for all i, therefore

$$T = \bigcap_{i \in I} S_i \supset S.$$

Hence, T is a subspace of V containing S.

Now it remains to show that T is the smallest subspace of V containing S.

Let W be a subspace of V containing S. Then W is one of the members of the family {Si : i ∈ I}. Consequently, $W \supset \bigcap_{i \in I} S_i = T$.

Thus, every subspace of V that contains S also contains T. Hence, T is the smallest subspace of V containing S.

Q.E.D.

THEOREM-5 Let A = {v1, v2, . . . , vn} be a non-void finite subset of a vector space V(F). Then the set $S = \left\{ \sum_{i=1}^{n} a_i v_i : a_i \in F \right\}$ is the subspace of V generated by A, i.e. S = [A].

PROOF. In order to prove that S is the subspace of V generated by A, it is sufficient to show that S is the smallest subspace of V containing A.

We have, $v_i = 0 \cdot v_1 + 0 \cdot v_2 + \cdots + 0 \cdot v_{i-1} + 1 \cdot v_i + 0 \cdot v_{i+1} + \cdots + 0 \cdot v_n$.

So, vi ∈ S for all i = 1, 2, . . . , n

⇒ A ⊂ S.

Let $u = \sum_{i=1}^{n} a_i v_i$, $v = \sum_{i=1}^{n} b_i v_i \in S$, and let λ, µ ∈ F. Then,

$$\lambda u + \mu v = \lambda \sum_{i=1}^{n} a_i v_i + \mu \sum_{i=1}^{n} b_i v_i$$

$$\Rightarrow \lambda u + \mu v = \sum_{i=1}^{n} (\lambda a_i) v_i + \sum_{i=1}^{n} (\mu b_i) v_i \qquad [\text{By } V\text{-}2(i) \text{ and } V\text{-}2(iii)]$$

$$\Rightarrow \lambda u + \mu v = \sum_{i=1}^{n} (\lambda a_i + \mu b_i) v_i \in S \qquad [\because \lambda a_i + \mu b_i \in F \text{ for all } i = 1, 2, \ldots, n]$$

Thus, λu + µv ∈ S for all u, v ∈ S and for all λ, µ ∈ F.

So, S is a subspace of V containing A.

Now, let W be a subspace of V containing A. Then, for each i = 1, 2, . . . , n,

ai ∈ F, vi ∈ A

⇒ ai ∈ F, vi ∈ W   [∵ A ⊂ W]

⇒ ai vi ∈ W   [∵ W is a subspace of V]

⇒ $\sum_{i=1}^{n} a_i v_i \in W$

Thus, S ⊂ W.

Hence, S is the smallest subspace of V containing A.

Q.E.D.

REMARK. If ϕ is the void set, then the smallest subspace of V containing ϕ is the null space

{0V }. Therefore, the null space is generated by the void set.

SUM OF SUBSPACES. Let S and T be two subspaces of a vector space V (F). Then their sum

(linear sum) S + T is the set of all sums u + v such that u ∈ S, v ∈ T .

Thus, S + T = {u + v : u ∈ S, v ∈ T}.

THEOREM-6 Let S and T be two subspaces of a vector space V(F). Then,

(i) S + T is a subspace of V .

(ii) S + T is the smallest subspace of V containing S ∪ T , i.e. S + T = [S ∪ T ].

PROOF. (i) Since S and T are subspaces of V . Therefore,

0V ∈ S, 0V ∈ T ⇒ 0V = 0V + 0V ∈ S + T.

So, S + T is a non-void subset of V .

Let u = u1 + u2 , v = v1 + v2 ∈ S + T and let λ, µ ∈ F. Then, u1 , v1 ∈ S and u2 , v2 ∈ T .

Now,

λu + µv = λ(u1 + u2 ) + µ(v1 + v2 )

⇒

λu + µv = (λu1 + λu2 ) + (µv1 + µv2 )

[By V -2(i)]

⇒

λu + µv = (λu1 + µv1 ) + (λu2 + µv2 )

[Using comm. and assoc. of vector addition]

Since S and T are subspaces of V . Therefore,

u1 , v1 ∈ S and λ, µ ∈ F ⇒ λu1 + µv1 ∈ S

and,

u2 , v2 ∈ T and λ, µ ∈ F ⇒ λu2 + µv2 ∈ T.

Thus,

λu + µv = (λu1 + µv1 ) + (λu2 + µv2 ) ∈ S + T.

Hence, S + T is a non-void subset of V such that λu + µv ∈ S + T for all u, v ∈ S + T and

λ, µ ∈ F. Consequently, S + T is a subspace of V .

(ii) Let W be a subspace of V such that W ⊃ (S ∪ T).

Let u + v be an arbitrary element of S + T. Then u ∈ S and v ∈ T.

Now,

u ∈ S, v ∈ T ⇒ u ∈ S ∪ T, v ∈ S ∪ T ⇒ u, v ∈ W   [∵ W ⊃ S ∪ T]

⇒ u + v ∈ W   [∵ W is a subspace of V]

Thus, u + v ∈ S + T ⇒ u + v ∈ W

⇒ S + T ⊂ W.

Obviously, S ∪ T ⊂ S + T.

Thus, if W is a subspace of V containing S ∪ T, then it contains S + T. Hence, S + T is the smallest subspace of V containing S ∪ T.

Q.E.D.

DIRECT SUM OF SUBSPACES. A vector space V(F) is said to be the direct sum of its two subspaces S and T if every vector u in V is expressible in one and only one way as u = v + w, where v ∈ S and w ∈ T.

If V is the direct sum of S and T, then we write V = S ⊕ T.


EXAMPLE-4 Consider the vector space V = R³. Let S = {(x, y, 0) : x, y ∈ R} and T = {(x, 0, z) : x, z ∈ R}. Prove that S and T are subspaces of V such that V = S + T, but V is not the direct sum of S and T.

SOLUTION Clearly, S and T represent the xy-plane and the xz-plane respectively. It can be easily checked that S and T are subspaces of V.

Let v = (x, y, z) be an arbitrary point in V. Then,

v = (x, y, z) = (x, y, 0) + (0, 0, z)

⇒ v ∈ S + T   [∵ (x, y, 0) ∈ S and (0, 0, z) ∈ T]

∴ V ⊂ S + T

Also, S + T ⊂ V.

Hence, V = S + T, i.e. R³ is the sum of the xy-plane and the xz-plane.

Clearly, S ∩ T = {(x, 0, 0) : x ∈ R} is the x-axis.

∴ S ∩ T ≠ {(0, 0, 0)}.

So, V is not the direct sum of S and T.
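The failure of directness in this example can also be seen straight from the definition: some vector has two different decompositions. The sketch below exhibits two for the sample vector (1, 2, 3); the vector and the helper names are assumptions made for the illustration.

```python
def in_S(p):
    """S = {(x, y, 0)}: the xy-plane."""
    return p[2] == 0

def in_T(p):
    """T = {(x, 0, z)}: the xz-plane."""
    return p[1] == 0

def add(p, q):
    """Componentwise sum of two vectors in R^3."""
    return tuple(a + b for a, b in zip(p, q))

v = (1, 2, 3)
# Two different ways of writing v as s + t with s in S and t in T.
decompositions = [((1, 2, 0), (0, 0, 3)),
                  ((0, 2, 0), (1, 0, 3))]

for s, t in decompositions:
    print(s, t, in_S(s), in_T(t), add(s, t) == v)
```

The two decompositions differ by the vector (1, 0, 0), which lies in S ∩ T, the x-axis.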

COMPLEMENT OF A SUBSPACE. Let V (F) be a vector space and let S be a subspace of V . Then

a subspace T of V is said to be complement of S, if V = S ⊕ T.

INDEPENDENT SUBSPACES. Let S1, S2, . . . , Sn be subspaces of a vector space V. Then S1, S2, . . . , Sn are said to be independent, if

$$S_i \cap \sum_{\substack{j=1 \\ j \neq i}}^{n} S_j = \{0_V\} \quad \text{for all } i = 1, 2, \ldots, n.$$

THEOREM-8 Let S1, S2, . . . , Sn be subspaces of a vector space V(F) such that V = S1 + S2 + · · · + Sn. Then, the following are equivalent:

(i) V = S1 ⊕ S2 ⊕ · · · ⊕ Sn.

(ii) S1, S2, . . . , Sn are independent.

(iii) For each vi ∈ Si, $\sum_{i=1}^{n} v_i = 0_V$ ⇒ vi = 0V.

PROOF. Let us first prove that (i) implies (ii).

Let $u \in S_i \cap \sum_{j \neq i} S_j$. Then there exist v1 ∈ S1, v2 ∈ S2, . . . , vi ∈ Si, . . . , vn ∈ Sn such that

$$u = v_i = v_1 + v_2 + \cdots + v_{i-1} + v_{i+1} + \cdots + v_n$$

$$\Rightarrow u = v_1 + v_2 + \cdots + v_{i-1} + 0_V + v_{i+1} + \cdots + v_n = 0v_1 + 0v_2 + \cdots + 1v_i + \cdots + 0v_n$$

$$\Rightarrow v_1 = 0_V = v_2 = \cdots = v_i = \cdots = v_n \qquad [\because V = S_1 \oplus S_2 \oplus \cdots \oplus S_n]$$

$$\Rightarrow u = 0_V$$

Thus, $u \in S_i \cap \sum_{j \neq i} S_j \Rightarrow u = 0_V$.

Hence, $S_i \cap \sum_{j \neq i} S_j = \{0_V\}$. Consequently, S1, S2, . . . , Sn are independent.

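(ii) ⇒ (iii).

Let v1 + v2 + · · · + vn = 0V with vi ∈ Si, i = 1, 2, . . . , n. Then, for each i,

$$v_i = -(v_1 + v_2 + \cdots + v_{i-1} + v_{i+1} + \cdots + v_n)$$

$$\Rightarrow v_i = -\sum_{\substack{j=1 \\ j \neq i}}^{n} v_j.$$

Since $v_i \in S_i$ and $-\sum_{j \neq i} v_j \in \sum_{j \neq i} S_j$, therefore

$$v_i \in S_i \cap \sum_{\substack{j=1 \\ j \neq i}}^{n} S_j \quad \text{for all } i = 1, 2, \ldots, n$$

$$\Rightarrow v_i = 0_V \quad \text{for all } i = 1, 2, \ldots, n. \qquad [\because S_1, S_2, \ldots, S_n \text{ are independent}]$$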

(iii) ⇒ (i)

Since V = S1 + S2 + · · · + Sn is given, it is sufficient to show that each v ∈ V has a unique representation as a sum of vectors in S1, S2, . . . , Sn.

Let, if possible, v = v1 + · · · + vn and v = w1 + · · · + wn be two representations for v ∈ V. Then,

v1 + v2 + · · · + vn = w1 + w2 + · · · + wn

⇒ (v1 − w1) + · · · + (vn − wn) = 0V

⇒ v1 − w1 = 0V, . . . , vn − wn = 0V   [Using (iii)]

⇒ v1 = w1, . . . , vn = wn.

Thus, each v ∈ V has a unique representation. Hence, V = S1 ⊕ S2 ⊕ · · · ⊕ Sn.

Consequently, (iii) ⇒ (i).

Hence, (i) ⇒ (ii) ⇒ (iii) ⇒ (i), i.e. (i) ⇔ (ii) ⇔ (iii).

Q.E.D.

LINEAR VARIETY. Let S be a subspace of a vector space V(F), and let v ∈ V. Then,

v + S = {v + u : u ∈ S}

is called a linear variety of S by v or a translate of S by v or a parallel of S through v.

S is called the base space of the linear variety and v a leader.

THEOREM-9 Let S be a subspace of a vector space V(F) and let P = v + S be the parallel of S through v. Then,

(i) every vector in P can be taken as a leader of P, i.e. u + S = P for all u ∈ P.

(ii) two vectors v1, v2 ∈ V are in the same parallel of S iff v1 − v2 ∈ S.

PROOF. (i) Let u be an arbitrary vector in P = v + S. Then there exists v1 ∈ S such that

u = v + v1 ⇒ v = u − v1.

Let w be an arbitrary vector in P. Then,

w = v + v2 for some v2 ∈ S

⇒ w = (u − v1) + v2   [∵ v = u − v1]

⇒ w = u + (v2 − v1)

⇒ w ∈ u + S   [∵ v2 − v1 ∈ S]

Thus, P ⊂ u + S.

Now let w ∈ u + S. Then,

w = u + v3 for some v3 ∈ S

⇒ w = (v + v1) + v3   [∵ u = v + v1]

⇒ w = v + (v1 + v3)

⇒ w ∈ v + S   [∵ v1 + v3 ∈ S]

Thus, u + S ⊂ P. Hence, u + S = P.

Since u is an arbitrary vector in P, therefore u + S = P for all u ∈ P.

(ii) Let v1, v2 be in the same parallel of S, say w + S. Then there exist u1, u2 ∈ S such that

v1 = w + u1, v2 = w + u2

⇒ v1 − v2 = u1 − u2 ⇒ v1 − v2 ∈ S   [∵ u1, u2 ∈ S ⇒ u1 − u2 ∈ S]

Conversely, if v1 − v2 ∈ S, then there exists u ∈ S such that

v1 − v2 = u

⇒ v1 = v2 + u

⇒ v1 ∈ v2 + S.   [∵ u ∈ S]

Also, v2 = v2 + 0V where 0V ∈ S

⇒ v2 ∈ v2 + S.

Hence, v1, v2 are in the same parallel of S.

Q.E.D.
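Part (ii) of Theorem 9 gives a practical test for deciding whether two vectors lie in the same parallel of S. The sketch below applies it in R² with the hypothetical base space S = {(t, 2t) : t ∈ R}; the function names are illustrative.

```python
def in_S(p):
    """S = {(t, 2t)}: a line through the origin in R^2 (assumed base space)."""
    return p[1] == 2 * p[0]

def same_parallel(v1, v2):
    """Theorem 9(ii): v1 and v2 lie in the same parallel of S iff v1 - v2 is in S."""
    diff = (v1[0] - v2[0], v1[1] - v2[1])
    return in_S(diff)

v1 = (1, 5)
v2 = (3, 9)    # v1 - v2 = (-2, -4), which lies in S
v3 = (0, 0)    # v1 - v3 = (1, 5), which does not lie in S

print(same_parallel(v1, v2), same_parallel(v1, v3))
```

Here v1 and v2 both lie on the translate (0, 3) + S, while v3 lies on S itself.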


EXERCISE 2.4

1. Let V = R3 , S = {(0, y, z) : y, z ∈ R} and T = {(x, 0, z) : x, z ∈ R}

Show that S and T are subspaces of V such that V = S + T . But, V is not the direct sum

of S and T .

2. Let V (R) be the vector space of all 2× 2 matrices over R. Let S be the set of all symmetric

matrices in V and T be the set of all skew-symmetric matrices in V . Show that S and T

are subspaces of V such that V = S ⊕ T .

3. Let V = R3 , S = {(x, y, 0) : x, y ∈ R} and T = {(0, 0, z) : z ∈ R}.

Show that S and T are subspaces of V such that V = S ⊕ T .

4. Let S1 , S2 , S3 be the following subspaces of vector space V = R3 :

S1 = {(x, y, z) : x = z, x, y, z ∈ R}

S2 = {(x, y, z) : x + y + z = 0, x, y, z ∈ R}

S3 = {(0, 0, z) : z ∈ R}

Show that: (i) V = S1 + S2 (ii) V = S2 + S3 (iii) V = S1 + S3 When is the sum direct?

5. Let S and T be two-dimensional subspaces of R³. Show that S ∩ T ≠ {0}.

6. Let S, S1, S2 be the subspaces of a vector space V(F). Show that

(S ∩ S1) + (S ∩ S2) ⊆ S ∩ (S1 + S2)

Find the subspaces of R² for which equality does not hold.

7. Let V be the vector space of n × n square matrices. Let S be the subspace of upper triangular matrices, and let T be the subspace of lower triangular matrices. Find (i) S ∩ T (ii) S + T.

8. Let F be a subfield of the field C of complex numbers and let V be the vector space of all 2 × 2 matrices over F. Let

$$S = \left\{ \begin{bmatrix} x & y \\ z & 0 \end{bmatrix} : x, y, z \in F \right\} \quad \text{and} \quad T = \left\{ \begin{bmatrix} x & 0 \\ 0 & y \end{bmatrix} : x, y \in F \right\}.$$

Show that S and T are subspaces of V such that V = S + T but V ≠ S ⊕ T.

$$\text{Hint: } S \cap T = \left\{ \begin{bmatrix} x & 0 \\ 0 & 0 \end{bmatrix} : x \in F \right\} \quad \therefore \quad S \cap T \neq \left\{ \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} \right\}$$

9. Let S and T be subspaces of a vector space V(F) such that V = S + T and S ∩ T = {0V}. Prove that for each vector v in V there are unique vectors v1 in S and v2 in T such that v = v1 + v2.

10. Let U and W be vector spaces over a field F and let V = U × W = {(u, w) : u ∈ U and w ∈ W}. Then V is a vector space over field F with addition in V and scalar multiplication on V defined by

(u1, w1) + (u2, w2) = (u1 + u2, w1 + w2)

and, k(u, w) = (ku, kw).

Let S = {(u, 0) : u ∈ U} and T = {(0, w) : w ∈ W}. Show that


Since S is a subgroup of additive abelian group V . Therefore, for any u + S, v + S ∈ V /S

u+S = v+S ⇔ u−v ∈ S

Thus, we have defined vector addition, scalar multiplication on V /S and equality of any two

elements of V /S. Now we proceed to prove that V /S is a vector space over field F under the

above defined operations of addition and scalar multiplication.

THEOREM-1

Let V be a vector space over a field F and let S be a subspace of V . Then, the set

V /S = {u + S : u ∈ V }

is a vector space over field F for the vector addition and scalar multiplication on V /S defined

as follows:

(u + S) + (v + S) = (u + v) + S

and,

a(u + S) = au + S

for all u + S, v + S ∈ V /S and for all a ∈ F.

PROOF. First we shall show that the above rules for vector addition and scalar multiplication are well defined, i.e. are independent of the particular representative chosen to denote a coset.

Let u + S = u′ + S, where u, u′ ∈ V and, v + S = v′ + S, where v, v′ ∈ V.

Then,

u + S = u′ + S ⇒ u − u′ ∈ S and, v + S = v′ + S ⇒ v − v′ ∈ S.

Now,

u − u′ ∈ S, v − v′ ∈ S

⇒ (u − u′) + (v − v′) ∈ S   [∵ S is a subspace of V]

⇒ (u + v) − (u′ + v′) ∈ S

⇒ (u + v) + S = (u′ + v′) + S

⇒ (u + S) + (v + S) = (u′ + S) + (v′ + S)

Thus, u + S = u′ + S and v + S = v′ + S

⇒ (u + S) + (v + S) = (u′ + S) + (v′ + S) for all u, u′, v, v′ ∈ V.

Therefore, vector addition on V/S is well defined.

Again,

λ ∈ F, u − u′ ∈ S ⇒ λ(u − u′) ∈ S ⇒ λu − λu′ ∈ S ⇒ λu + S = λu′ + S


Therefore, scalar multiplication on V /S is well defined.

V − 1 : V /S is an abelian group under vector addition:

Associativity: For any u + S, v + S, w + S ∈ V /S, we have

[(u + S) + (v + S)] + (w + S) = ((u + v) + S) + (w + S)

= {(u + v) + w} + S

[By associativity of vector addition on V ]

= {u + (v + w)} + S

= (u + S) + ((v + w) + S)

= (u + S) + [(v + S) + (w + S)]

So, vector addition is associative on V /S.

Commutativity: For any u + S, v + S ∈ V /S, we have

(u + S) + (v + S) = (u + v) + S

= (v + u) + S

[By commutativity of vector addition on V ]

= (v + S) + (u + S)

So, vector addition is commutative on V /S.

Existence of additive identity: Since 0V ∈ V therefore, 0V + S = S ∈ V /S.

Now,

(u + S) + (0V + S) = (u + 0V ) + S = (u + S)

∴

for all u + S ∈ V /S

(u + S) + (0V + S) = (u + S) = (0V + S) + (u + S) for all u + S ∈ V /S.

So 0V + S = S is the identity element for vector addition on V /S.

Existence of additive inverse: Let u + S be an arbitrary element of V /S. Then,

u ∈ V ⇒ −u ∈ V ⇒ (−u) + S ∈ V /S

Thus, for each u + S ∈ V /S there exists (−u) + S ∈ V /S such that

(u + S) + ((−u) + S) = [u + (−u)] + S = 0V + S = S

⇒ (u + S) + ((−u) + S)= 0V + S = (−u) + S + (u + S) [By commutativity of addition on V /S]

So, each u + S ∈ V /S has its additive inverse (−u) + S ∈ V /S.

Hence, V /S is an abelian group under vector addition.


V-2: For any u + S, v + S ∈ V/S and λ, µ ∈ F, we have

(i) λ[(u + S) + (v + S)] = λ[(u + v) + S]

= [λ(u + v)] + S

= (λu + λv) + S   [By V-2(i) in V]

= (λu + S) + (λv + S)   [By definition of addition on V/S]

= λ(u + S) + λ(v + S)   [By def. of scalar multiplication on V/S]

(ii) (λ + µ)(u + S) = (λ + µ)u + S   [By def. of scalar multiplication on V/S]

= (λu + µu) + S   [By V-2(ii) in V]

= (λu + S) + (µu + S)   [By definition of addition on V/S]

= λ(u + S) + µ(u + S)

(iii) (λµ)(u + S) = (λµ)u + S

= λ(µu) + S   [By V-2(iii) in V]

= λ(µu + S)

= λ[µ(u + S)]

(iv) 1(u + S) = 1u + S = u + S   [By V-2(iv) in V]

Hence, V /S is a vector space over field F.

Q.E.D.

QUOTIENT SPACE.

Let V be a vector space over a field F and let S be a subspace of V . Then

the set V /S = {u + S : u ∈ V } is a vector space over field F for the vector addition and scalar

multiplication defined as

(u + S) + (v + S) = (u + v) + S

a(u + S) = au + S

For all u + S, v + S ∈ V /S and for all a ∈ F.

This vector space is called a quotient space of V by S.
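The point of the well-definedness argument is that the operations on V/S do not depend on which representative names a coset. A minimal sketch, assuming V = R² and the hypothetical subspace S = {(t, t) : t ∈ R}, with illustrative helper names:

```python
def in_S(p):
    """S = {(t, t)}: a line through the origin in R^2 (assumed subspace)."""
    return p[0] == p[1]

def add(p, q):
    return (p[0] + q[0], p[1] + q[1])

def sub(p, q):
    return (p[0] - q[0], p[1] - q[1])

def scale(c, p):
    return (c * p[0], c * p[1])

def same_coset(p, q):
    """p + S = q + S exactly when p - q lies in S."""
    return in_S(sub(p, q))

# Two representatives of each of two cosets.
u, u2 = (1, 4), (3, 6)      # u - u2 = (-2, -2) lies in S
v, v2 = (0, 2), (-5, -3)    # v - v2 = (5, 5) lies in S

# The coset of the sum and of a scalar multiple is the same
# whichever representatives are used.
print(same_coset(add(u, v), add(u2, v2)))
print(same_coset(scale(3, u), scale(3, u2)))
```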

EXERCISE 2.5

1. If S is a subspace of a vector space V (F), then show that there is one-one correspondence

between the subspaces of V containing S and subspaces of the quotient space V /S.

2. Define quotient space.


2.6 LINEAR COMBINATIONS

LINEAR COMBINATION. Let V be a vector space over a field F. Let v1, v2, . . . , vn be n vectors in V and let λ1, λ2, . . . , λn be n scalars in F. Then the vector λ1v1 + · · · + λnvn (or $\sum_{i=1}^{n} \lambda_i v_i$) is called a linear combination of v1, v2, . . . , vn. It is also called a linear combination of the set S = {v1, v2, . . . , vn}. Since there are a finite number of vectors in S, it is also called a finite linear combination of S.

If S is an infinite subset of V , then a linear combination of a finite subset of S is called a

finite linear combination of S.

ILLUSTRATIVE EXAMPLES

EXAMPLE-1 Express v = (−2, 3) in R²(R) as a linear combination of the vectors v1 = (1, 1) and v2 = (1, 2).

SOLUTION Let x, y be scalars such that

v = xv1 + yv2

⇒ (−2, 3) = x(1, 1) + y(1, 2)

⇒ (−2, 3) = (x + y, x + 2y)

⇒ x + y = −2 and x + 2y = 3

⇒ x = −7, y = 5

Hence, v = −7v1 + 5v2.

EXAMPLE-2 Express v = (−2, 5) in R²(R) as a linear combination of the vectors v1 = (−1, 1) and v2 = (2, −2).

SOLUTION Let x, y be scalars such that

v = xv1 + yv2

⇒ (−2, 5) = x(−1, 1) + y(2, −2)

⇒ (−2, 5) = (−x + 2y, x − 2y)

⇒ −x + 2y = −2 and x − 2y = 5

This is an inconsistent system of equations and so it has no solution.

Hence, v cannot be written as a linear combination of v1 and v2.
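The computations of Examples 1 and 2 can be sketched with Cramer's rule for a 2 × 2 system. Cramer's rule is swapped in here for the elimination used in the text, and the function name `solve_2x2` is an illustrative assumption.

```python
from fractions import Fraction

def solve_2x2(v1, v2, v):
    """Solve x*v1 + y*v2 = v in R^2 by Cramer's rule.

    Returns (x, y), or None when v1 and v2 are parallel (determinant 0),
    in which case there is no unique solution.
    """
    det = v1[0] * v2[1] - v2[0] * v1[1]
    if det == 0:
        return None
    x = Fraction(v[0] * v2[1] - v2[0] * v[1], det)
    y = Fraction(v1[0] * v[1] - v[0] * v1[1], det)
    return x, y

# Example 1: v = (-2, 3), v1 = (1, 1), v2 = (1, 2).
print(solve_2x2((1, 1), (1, 2), (-2, 3)))

# Example 2: v1 = (-1, 1) and v2 = (2, -2) are parallel, so the determinant is 0.
print(solve_2x2((-1, 1), (2, -2), (-2, 5)))
```

A zero determinant alone means either no solution or infinitely many. In Example 2 there is none: adding the equations −x + 2y = −2 and x − 2y = 5 gives 0 = 3.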

EXAMPLE-3

Express v = (1, −2, 5) in R3 as a linear combination of the following vectors:

v1 = (1, 1, 1), v2 = (1, 2, 3), v3 = (2, −1, 1)


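This is equivalent to

$$\begin{bmatrix} 1 & 2 & 1 \\ 0 & 2 & -2 \\ 0 & -5 & 5 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 2 \\ 1 \\ -1 \end{bmatrix} \qquad \text{Applying } R_2 \to R_2 + 3R_1,\ R_3 \to R_3 + (-2)R_1$$

or,

$$\begin{bmatrix} 1 & 2 & 1 \\ 0 & 2 & -2 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 2 \\ 1 \\ 3/2 \end{bmatrix} \qquad \text{Applying } R_3 \to R_3 + \tfrac{5}{2}R_2$$

This is an inconsistent system of equations and so has no solution. Hence, v cannot be written as a linear combination of v1, v2 and v3.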

EXAMPLE-5

Express the polynomial f(x) = x² + 4x − 3 in the vector space V of all polynomials over R as a linear combination of the polynomials g(x) = x² − 2x + 5, h(x) = 2x² − 3x and φ(x) = x + 3.

SOLUTION

Let u, v, w be scalars such that

f(x) = u g(x) + v h(x) + w φ(x) for all x ∈ R

⇒ x² + 4x − 3 = u(x² − 2x + 5) + v(2x² − 3x) + w(x + 3) for all x ∈ R

⇒ x² + 4x − 3 = (u + 2v)x² + (−2u − 3v + w)x + (5u + 3w) for all x ∈ R    (i)

⇒ u + 2v = 1, −2u − 3v + w = 4, 5u + 3w = −3    (ii)

The matrix form of this system of equations is

\[
\begin{bmatrix} 1 & 2 & 0 \\ -2 & -3 & 1 \\ 5 & 0 & 3 \end{bmatrix}
\begin{bmatrix} u \\ v \\ w \end{bmatrix}
=
\begin{bmatrix} 1 \\ 4 \\ -3 \end{bmatrix}
\]

This system of equations is equivalent to

\[
\begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & 1 \\ 0 & -10 & 3 \end{bmatrix}
\begin{bmatrix} u \\ v \\ w \end{bmatrix}
=
\begin{bmatrix} 1 \\ 6 \\ -8 \end{bmatrix}
\quad \text{Applying } R_2 \to R_2 + 2R_1,\ R_3 \to R_3 - 5R_1
\]

or,

\[
\begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & 1 \\ 0 & 0 & 13 \end{bmatrix}
\begin{bmatrix} u \\ v \\ w \end{bmatrix}
=
\begin{bmatrix} 1 \\ 6 \\ 52 \end{bmatrix}
\quad \text{Applying } R_3 \to R_3 + 10R_2
\]

Thus, the system of equations obtained in (ii) is consistent and is equivalent to

u + 2v = 1, v + w = 6 and 13w = 52 ⇒ u = −3, v = 2, w = 4

Hence, f(x) = −3g(x) + 2h(x) + 4φ(x).

REMARK. The equation (i) obtained in the above solution is an identity in x, that is, it holds for every value of x. So, the values of u, v and w can also be obtained by solving the three equations that result from giving any three distinct values to the variable x.
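The approach described in the Remark can be sketched as follows. The sample points x = 0, 1, −1 are an arbitrary choice of three distinct values, and the helper name `gauss_jordan` is illustrative; neither comes from the text.

```python
from fractions import Fraction

# Polynomials of Example 5
def f(x): return x**2 + 4*x - 3
def g(x): return x**2 - 2*x + 5
def h(x): return 2*x**2 - 3*x
def phi(x): return x + 3

def gauss_jordan(rows):
    """Reduce an augmented n x (n+1) matrix and return the solution column.

    Assumes the coefficient matrix is invertible.
    """
    n = len(rows)
    rows = [[Fraction(e) for e in row] for row in rows]
    for k in range(n):
        pivot = next(r for r in range(k, n) if rows[r][k] != 0)
        rows[k], rows[pivot] = rows[pivot], rows[k]
        rows[k] = [e / rows[k][k] for e in rows[k]]
        for r in range(n):
            if r != k:
                factor = rows[r][k]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[k])]
    return [row[n] for row in rows]

# Each sample point x gives one equation u*g(x) + v*h(x) + w*phi(x) = f(x).
sample_points = [0, 1, -1]
augmented = [[g(x), h(x), phi(x), f(x)] for x in sample_points]
u, v, w = gauss_jordan(augmented)
print(u, v, w)
```

With these sample points the three equations are 5u + 3w = −3, 4u − v + 4w = 2 and 8u + 5v + 2w = −6, and the sketch recovers u = −3, v = 2, w = 4, the same values found by comparing coefficients.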


SOLUTION

Let x, y be scalars such that

u = xv1 + yv2

⇒ (1, k, 4) = x(1, 2, 3) + y(2, 3, 1)

⇒ (1, k, 4) = (x + 2y, 2x + 3y, 3x + y)

⇒ x + 2y = 1, 2x + 3y = k and 3x + y = 4

Solving x + 2y = 1 and 3x + y = 4, we get x = 7/5 and y = −1/5.

Substituting these values in 2x + 3y = k, we get k = 11/5.

EXAMPLE-8

Consider the vectors v1 = (1, 2, 3) and v2 = (2, 3, 1) in R³(R). Find conditions on a, b, c so that u = (a, b, c) is a linear combination of v1 and v2.

SOLUTION

Let x, y be scalars in R such that

u = xv1 + yv2

⇒

(a, b, c) = x(1, 2, 3) + y(2, 3, 1)

⇒

(a, b, c) = (x + 2y, 2x + 3y, 3x + y)

⇒

x + 2y = a, 2x + 3y = b and 3x + y = c

Solving the first two equations, we get x = −3a + 2b and y = 2a − b.

Substituting these values in 3x + y = c, we get

3(−3a + 2b) + (2a − b) = c or, 7a − 5b + c = 0, which is the required condition.
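The condition 7a − 5b + c = 0 can be tried on concrete vectors. The following sketch is only an illustration; the function name `in_span_of_v1_v2` is hypothetical, and the test vectors are taken from the exercises and the preceding example of this section.

```python
from fractions import Fraction

def in_span_of_v1_v2(a, b, c):
    """True when (a, b, c) = x*(1, 2, 3) + y*(2, 3, 1) for some scalars x, y."""
    x = -3 * a + 2 * b       # solution of the first two equations
    y = 2 * a - b
    return 3 * x + y == c    # third equation, equivalent to 7a - 5b + c == 0

print(in_span_of_v1_v2(1, 3, 8))                 # u = (1, 3, 8)
print(in_span_of_v1_v2(2, 4, 5))                 # u = (2, 4, 5)
print(in_span_of_v1_v2(1, Fraction(11, 5), 4))   # u = (1, k, 4) with k = 11/5
```

The first and third vectors satisfy the condition, while (2, 4, 5) gives 7(2) − 5(4) + 5 = −1 and so is not a linear combination of v1 and v2.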

EXERCISE 2.6

1. Express v = (3, −2) in R²(R) as a linear combination of the vectors v1 = (−1, 1) and v2 = (2, 1).

2. Express v = (−2, 5) in R²(R) as a linear combination of the vectors v1 = (2, −1) and v2 = (−4, 2).

3. Express v = (3, 7, −4) in R³(R) as a linear combination of the vectors v1 = (1, 2, 3), v2 = (2, 3, 7) and v3 = (3, 5, 6).

4. Express v = (2, −1, 3) in R³(R) as a linear combination of the vectors v1 = (3, 0, 3), v2 = (−1, 2, −5) and v3 = (−2, −1, 0).

5. Let V be the vector space of all real polynomials over field R of all real numbers. Express the polynomial p(x) = 3x² + 5x − 5 as a linear combination of the polynomials f(x) = x² + 2x + 1, g(x) = 2x² + 5x + 4 and h(x) = x² + 3x + 6.

6. Let V = P2(t) be the vector space of all polynomials of degree less than or equal to 2 and t be the indeterminate. Write the polynomial f(t) = at² + bt + c as a linear combination of the polynomials p1(t) = (t − 1)², p2(t) = t − 1 and p3(t) = 1.

7. Write the vector u = (1, 3, 8) in R³(R) as a linear combination of the vectors v1 = (1, 2, 3) and v2 = (2, 3, 1).


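8. Write the vector u = (2, 4, 5) in R³(R) as a linear combination of the vectors v1 = (1, 2, 3) and v2 = (2, 3, 1).

9. Consider the vector space R^{2×2} of all 2 × 2 matrices over the field R of real numbers. Express the matrix

\[
M = \begin{bmatrix} 5 & -6 \\ 7 & 8 \end{bmatrix}
\]

as a linear combination of the matrices

\[
E_{11} = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix},\quad
E_{12} = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix},\quad
E_{21} = \begin{bmatrix} 0 & 0 \\ 1 & 0 \end{bmatrix}\quad \text{and} \quad
E_{22} = \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix}.
\]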

ANSWERS

1. v = −(7/3)v1 + (1/3)v2

2. v is not expressible as a linear combination of v1 and v2.

3. v = 2v1 − 4v2 + 3v3

4. v is not expressible as a linear combination of v1, v2, v3.

5. p(x) = 3f(x) + g(x) − 2h(x)

6. f(t) = ap1(t) + (2a + b)p2(t) + (a + b + c)p3(t)

7. u = 3v1 − v2

8. Not possible

9. M = 5E11 − 6E12 + 7E21 + 8E22

2.7 LINEAR SPANS

LINEAR SPAN OF A SET. Let V be a vector space over a field F and let S be a subset of V. Then the set of all finite linear combinations of S is called the linear span of S and is denoted by [S].

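Thus,

\[
[S] = \left\{ \sum_{i=1}^{n} \lambda_i v_i \;:\; \lambda_1, \lambda_2, \ldots, \lambda_n \in F,\ n \in \mathbb{N} \text{ and } v_1, v_2, \ldots, v_n \in S \right\}.
\]

If S is a finite set, say S = {v1, v2, . . . , vn}, then

\[
[S] = \left\{ \sum_{i=1}^{n} \lambda_i v_i \;:\; \lambda_i \in F \right\}.
\]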

In this case [S] is also written as [v1 , v2 , . . . , vn ].

If S = {v}, then [S] = Fv as shown in Example 5 on page 136.

Consider a subset S = {(1, 0, 0), (0, 1, 0)} of the real vector space R³. Any linear combination of a finite number of elements of S is of the form λ(1, 0, 0) + µ(0, 1, 0) = (λ, µ, 0). By definition, the set of all such linear combinations is [S]. Thus, [S] = {(λ, µ, 0) : λ, µ ∈ R}. Clearly, [S] is the xy-plane in three dimensional Euclidean space R³ and is a subspace of R³. In fact, the linear span of every non-void subset of a vector space is a subspace of that space. The following theorem proves this result.
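Membership in a linear span can also be tested computationally. The sketch below assumes vectors with rational coordinates and uses the fact that v lies in [S] exactly when adjoining v to S does not increase the rank; the function names `rank` and `in_span` are illustrative and not from the text.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix, given as a list of rows, by exact row reduction."""
    rows = [[Fraction(e) for e in row] for row in rows]
    found = 0                                  # number of pivots found so far
    n_cols = len(rows[0]) if rows else 0
    for col in range(n_cols):
        pivot = next((r for r in range(found, len(rows))
                      if rows[r][col] != 0), None)
        if pivot is None:
            continue                           # no pivot in this column
        rows[found], rows[pivot] = rows[pivot], rows[found]
        lead = rows[found]
        for r in range(found + 1, len(rows)):
            factor = rows[r][col] / lead[col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], lead)]
        found += 1
    return found

def in_span(S, v):
    """True when v is a linear combination of the vectors in the finite list S."""
    return rank(list(S)) == rank(list(S) + [v])

S = [(1, 0, 0), (0, 1, 0)]
print(in_span(S, (4, -7, 0)))   # third coordinate is zero
print(in_span(S, (4, -7, 1)))   # third coordinate is non-zero
```

For this S the test accepts exactly the vectors whose third coordinate is zero, in agreement with [S] = {(λ, µ, 0) : λ, µ ∈ R}.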

THEOREM-1

Let S be a non-void subset of a vector space V(F). Then [S], the linear span of S, is a subspace of V.

PROOF. Since S is non-void, 0V = 0v1 + 0v2 + · · · + 0vn for some vectors v1, v2, . . . , vn ∈ S and a positive integer n. Therefore, 0V is a finite linear combination of S and so it is in [S].

Thus, [S] is a non-void subset of V.


Let u and v be any two vectors in [S]. Then,

u = λ1 u1 + λ2 u2 + · · · + λn un for some scalars λi ∈ F, some ui ∈ S, and a positive integer n,

and, v = µ1 v1 + µ2 v2 + · · · + µm vm for some scalars µi ∈ F, some vi ∈ S, and a positive integer m.

If λ, µ are any two scalars in F, then