A Data Augmentation Approach for a Class of Statistical Inference Problems


Rodrigo Carvajal†, Rafael Orellana†, Dimitrios Katselis∗, Pedro Escárate‡ and Juan C. Agüero†

† Electronics Engineering Department, Universidad Técnica Federico Santa María, Chile. {rodrigo.carvajalg, juan.aguero}@usm.cl, rafael.orellana.prato@gmail.com

∗ Coordinated Science Laboratory, University of Illinois at Urbana-Champaign, IL, USA. katselis@illinois.edu

‡ Large Binocular Telescope Observatory, Steward Observatory, University of Arizona, Tucson, AZ, USA. pescarate@lbto.org

Abstract

We present an algorithm for a class of statistical inference problems. The main idea is to reformulate the

inference problem as an optimization procedure, based on the generation of surrogate (auxiliary) functions.

This approach is motivated by the MM algorithm, combined with the systematic and iterative structure of

the Expectation-Maximization algorithm. The resulting algorithm can deal with hidden variables in Maximum

Likelihood and Maximum a Posteriori estimation problems, Instrumental Variables, Regularized Optimization

and Constrained Optimization problems.

The advantage of the proposed algorithm is to provide a systematic procedure to build surrogate functions

for a class of problems where hidden variables are usually involved. Numerical examples show the benefits of

the proposed approach.

1 Introduction

Problems in statistics and system identification often involve variables for which measurements are not available.

Among others, real-life examples can be found in communication systems [5, 19] and systems with quantized

data [18, 50]. In Maximum Likelihood (ML) estimation problems, the likelihood function is in general difficult

to optimize by using closed-form expressions, and numerical approximations are usually cumbersome. These

difficulties are traditionally avoided by the utilization of the Expectation-Maximization (EM) algorithm [10], where

a surrogate (auxiliary) function is optimized instead of the main objective function. This surrogate function includes

the complete data, i.e. the measurements and the random variables for which there are no measurements. The

incorporation of such hidden data or latent variables is usually termed as data augmentation, where the main goal

is to obtain, in general, simple and fast algorithms [49].

On the other hand, the MM algorithm [27] (MM stands for Maximization-Minorization or Minimization-Majorization, depending on the optimization problem that needs to be solved) is generally employed for solving more general optimization problems, not only ML and Maximum a Posteriori (MAP) estimation problems. In general, the main motivation for using the MM algorithm is the lack of closed-form expressions for the solution of the optimization problem

or dealing with objective cost functions that are not convex. Applications where the MM algorithm has been

utilized include communication systems problems [38] and image processing [14]. For constrained optimization

problems, an elegant solution is presented by Marks and Wright [34], where the constraints are incorporated via

the formulation of surrogate functions. Surprisingly, Marks’ approach has not received the same attention from

the scientific community as the EM and the MM algorithms. In fact, these three

approaches are contemporary, but the EM algorithm has attracted most of the attention (out of the three methods),

and it has been used for solving linear and nonlinear statistical inference problems in biology and engineering, see

e.g. [1, 4, 21, 26, 42, 52], amongst others. On the other hand, as shown in [27], the MM algorithm has received much

less attention, while Marks’ approach is mostly known to a limited audience in the Communication Systems

community. These three approaches have important similarities: i) a surrogate function is defined and optimized in

place of the original optimization problem, and ii) the solution is obtained iteratively. In general, these algorithms

are “principles and recipes” [36] or a “philosophy” [27] for constructing solutions to a broad variety of optimization

problems.

In this paper we adopt the ideas behind [10, 27, 34] to develop an algorithm for a special class of functions. Our

approach generalizes the ones of [10, 27, 34] by reinterpreting the E-step in the EM algorithm and expressing the

cost function in terms of an infinite mixture or kernel. In particular cases, the kernel corresponds to a variance-mean Gaussian mixture (VMGM), see e.g. [39]. VMGMs, also referred to as normal variance-mean mixtures [3]

and normal scale mixtures [51], have been considered in the literature for formulating EM-based approaches to

solve ML [2] and MAP problems [6, 17, 39]. Our approach is applicable to a wide class of functions, which allows

for defining the likelihood function, the prior density function, and constraints as kernels, extending also the work

in [34]. Thus, our work encompasses the following contributions: i) a systematic approach to constructing surrogate

functions for a class of cost functions and constraints, ii) a class of kernels where the unknown quantities of the

algorithm can be easily computed, and iii) a generalization of [10, 27, 34] by including the cost function and the

constraints in one general expression. Our proposal is based, among other things, on a particular way to apply

Jensen’s inequality [12, pp. 24–25]. In addition, we provide the details on how to construct quadratic surrogate

functions for cost functions and constraints.

Our algorithm is tested on two examples. In the first one we consider the problem of estimating the rotational velocities of stars. The system model corresponds to the convolution of two probability density functions (pdf’s) and thus is an infinite mixture. We show that our reinterpretation of the EM algorithm allows for the direct application of our proposal for the correct estimation of the parameter of interest. In the second example we consider a system modelled by a linear regression. In this example the regression matrix is known and the parameter vector is unknown. The problem is solved considering a constrained optimization problem, where the (inequality) constraint is given by a multivariate $\ell_q$-norm of the parameter vector. In this example, we show that although the problem cannot be solved using the EM algorithm, the solution given by our proposal can iteratively converge to the global optimum.

2 Rudiments of the proposed approach

2.1 The EM algorithm

Let us consider an estimation problem and its corresponding log-likelihood function defined as $\ell(\theta) = \log p(y|\theta)$, where $p(y|\theta)$ is the likelihood function, $\theta \in \mathbb{R}^p$, and $y \in \mathbb{R}^N$. Denoting the complete data by $z \in \Omega(y)$, and using Bayes’ theorem, we can obtain:

$$\ell(\theta) = \log p(y|\theta) = \log p(z|\theta) - \log p(z|y,\theta). \tag{1}$$

Let us assume that at the $i$th iteration we have the estimate $\hat{\theta}^{(i)}$. By integrating both sides of (1) with respect to $p(z|y,\hat{\theta}^{(i)})$ we obtain $\ell(\theta) = Q(\theta,\hat{\theta}^{(i)}) - H(\theta,\hat{\theta}^{(i)})$, where

$$Q(\theta,\hat{\theta}^{(i)}) = \int_{\Omega(y)} \log p(z|\theta)\, p(z|y,\hat{\theta}^{(i)})\, dz, \tag{2}$$

$$H(\theta,\hat{\theta}^{(i)}) = \int_{\Omega(y)} \log p(z|y,\theta)\, p(z|y,\hat{\theta}^{(i)})\, dz. \tag{3}$$

Using Jensen’s inequality [12, pp. 24–25], it is possible to show that, for any value of $\theta$, $H(\theta,\hat{\theta}^{(i)}) \le H(\hat{\theta}^{(i)},\hat{\theta}^{(i)})$, i.e. the function $H$ cannot increase when moving away from $\hat{\theta}^{(i)}$. Hence, the optimization is only carried out on the auxiliary function $Q(\theta,\hat{\theta}^{(i)})$ because, by maximizing $Q(\theta,\hat{\theta}^{(i)})$, the new parameter $\hat{\theta}^{(i+1)}$ is such that the likelihood function increases (see e.g. [10, 35]).

In general, the EM method can be summarised as follows:

E-step: Compute the expected value of the joint likelihood function for the complete data (measurements and hidden variables) based on a given parameter estimate, $\hat{\theta}^{(i)}$. Thus, we have (see e.g. [10]):

$$Q(\theta,\hat{\theta}^{(i)}) = \mathrm{E}\left[\log p(z|\theta) \,\middle|\, y,\hat{\theta}^{(i)}\right]. \tag{4}$$

M-step: Maximize the function $Q(\theta,\hat{\theta}^{(i)})$ in (4) with respect to $\theta$:

$$\hat{\theta}^{(i+1)} = \arg\max_{\theta}\, Q(\theta,\hat{\theta}^{(i)}). \tag{5}$$

This succession of estimates converges to a stationary point of the log-likelihood function [48].

2.2

The MM algorithm

The idea behind the MM algorithm [27] is to construct a surrogate function $g(\theta,\hat{\theta}^{(i)})$ that majorizes (for minimization problems) or minorizes (for maximization problems) a given cost function $f(\theta)$ at $\hat{\theta}^{(i)}$ [27], such that

$$f(\theta) \le g(\theta,\hat{\theta}^{(i)}) \quad \text{for minimization problems, or}$$

$$f(\theta) \ge g(\theta,\hat{\theta}^{(i)}) \quad \text{for maximization problems, and}$$

$$f(\hat{\theta}^{(i)}) = g(\hat{\theta}^{(i)},\hat{\theta}^{(i)}),$$

where $\hat{\theta}^{(i)}$ is an estimate of $\theta$. Then, the surrogate function is iteratively optimized until convergence. Hence, for maximizing $f(\theta)$ we have [45]

$$\hat{\theta}^{(i+1)} = \arg\max_{\theta}\, g(\theta,\hat{\theta}^{(i)}). \tag{6}$$

For the construction of the surrogate function, popular techniques include the second-order Taylor approximation, the quadratic upper bound principle and Jensen’s inequality for convex functions, see, e.g., [45].

Remark 1 The iterative strategy utilized in the MM algorithm converges to a local optimum since

$$f(\hat{\theta}^{(i+1)}) \ge g(\hat{\theta}^{(i+1)},\hat{\theta}^{(i)}) \ge g(\hat{\theta}^{(i)},\hat{\theta}^{(i)}) = f(\hat{\theta}^{(i)}).$$
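As an illustration of the MM recipe in Section 2.2, the following minimal sketch minimizes $f(\theta) = \sum_k |\theta - y_k|$ (whose minimizer is a median of the data) by repeatedly minimizing a quadratic majorizer. The example, the function names and the guard `eps` are assumptions made here for illustration and are not taken from the paper; the bound $|r| \le r^2/(2|r_0|) + |r_0|/2$ is the standard quadratic upper bound for the absolute value.

```python
def l1_cost(theta, data):
    """Cost f(theta) = sum_k |theta - y_k|, minimized by a median of the data."""
    return sum(abs(theta - y) for y in data)


def mm_median(data, theta0, n_iter=30, eps=1e-12):
    """Minimize l1_cost by repeatedly minimizing a quadratic majorizer.

    Each |r| is majorized at r0 by r**2 / (2*|r0|) + |r0| / 2, so the surrogate
    g(theta, theta_i) is a weighted least-squares cost whose minimizer is a
    weighted mean of the data.
    """
    theta = theta0
    costs = [l1_cost(theta, data)]
    for _ in range(n_iter):
        # Weights 1/|r0|; eps is a hypothetical guard against division by zero.
        weights = [1.0 / max(abs(theta - y), eps) for y in data]
        theta = sum(w * y for w, y in zip(weights, data)) / sum(weights)
        costs.append(l1_cost(theta, data))
    return theta, costs


data = [1.0, 2.0, 4.0, 7.0, 11.0]
theta_hat, costs = mm_median(data, theta0=5.0)
print(theta_hat, costs[0], costs[-1])
```

The list `costs` records $f(\hat{\theta}^{(i)})$ at every iteration, so the descent property of Remark 1 (written here for a minimization problem) can be inspected directly.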

2.3 Data Augmentation in Inference Problems

Data augmentation algorithms are based on the construction of the augmented data and its many-to-one mapping

Ω(y). This augmented data is assumed to describe a model from which the observed data y is obtained via

marginalization [46]. That is, a system with a likelihood function p(y|θ) can be understood to arise from

$$p(y|\theta) = \int p(y, x|\theta)\, dx, \tag{7}$$

where the augmented data corresponds to (y, x) and x is the latent data [46, 49]. This idea has been utilized for

supervised learning [13] and the development of the Bayesian Lasso [37], to mention a few examples. In those

problems, the Laplace distribution is expressed as a two-level hierarchical-Bayes model. This equivalence is obtained

from the representation of the Laplace distribution as a VMGM:

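$$\frac{a}{2}\, e^{-a|\theta|} = \int_0^{\infty} \underbrace{\frac{1}{\sqrt{2\pi\lambda}}\, e^{-\theta^2/(2\lambda)}}_{p(\theta|\lambda)}\; \underbrace{\frac{a^2}{2}\, e^{-a^2\lambda/2}}_{p(\lambda)}\, d\lambda. \tag{8}$$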

In fact, there are several pdf’s that can be expressed as VMGMs, as shown in Table 1 [39], where $g(\theta)$ is the penalty term that can be expressed as a pdf. In addition, an early version of the methodology presented in this paper was developed in [6], exploring the estimation of a sparse parameter vector utilizing the $\ell_q$-(pseudo)norm, with $0 < q < 1$.
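The mixture representation in (8) can be checked numerically. The sketch below evaluates the right-hand side with a trapezoidal rule after the change of variable $\lambda = e^{u}$; the change of variable, the integration limits and the function names are choices made here for illustration, not part of the paper.

```python
import math


def laplace_pdf(theta, a):
    """Left-hand side of (8): (a/2) * exp(-a*|theta|)."""
    return 0.5 * a * math.exp(-a * abs(theta))


def laplace_as_mixture(theta, a, u_min=-30.0, u_max=10.0, n=4000):
    """Right-hand side of (8), integrated numerically over lambda = exp(u)."""
    h = (u_max - u_min) / n
    total = 0.0
    for j in range(n + 1):
        lam = math.exp(u_min + j * h)
        # Gaussian factor p(theta | lambda) with zero mean and variance lambda.
        gauss = math.exp(-theta**2 / (2.0 * lam)) / math.sqrt(2.0 * math.pi * lam)
        # Exponential mixing density p(lambda) with rate a**2 / 2.
        mixing = 0.5 * a**2 * math.exp(-0.5 * a**2 * lam)
        weight = 0.5 if j in (0, n) else 1.0  # trapezoidal rule end-point weights
        total += weight * gauss * mixing * lam  # d(lambda) = lambda * du
    return h * total


for theta, a in [(0.7, 2.0), (-1.5, 0.8), (0.05, 3.0)]:
    print(theta, a, laplace_pdf(theta, a), laplace_as_mixture(theta, a))
```

The two printed columns should agree closely for each pair $(\theta, a)$, which is what allows the Laplace prior to be treated as a Gaussian kernel with a latent variance $\lambda$.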

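Table 1: Selection of mean-variance mixture representations for penalty functions, $p(\theta_j) = \int_0^{\infty} \mathcal{N}_{\theta_j}(\mu_j + \lambda_j u_j,\, \tau^2 s_j^2 \lambda_j)\, p(\lambda_j)\, d\lambda_j$.

| Penalty function | $g(\theta_j)$ | $u_j$ | $\mu_j$ | $p(\lambda_j)$ |
|---|---|---|---|---|
| Ridge | $(\theta_j/\tau)^2$ | 0 | 0 | $\lambda_j = 1$ |
| Lasso | $\lvert\theta_j/\tau\rvert$ | 0 | 0 | Exponential |
| Bridge | $\lvert\theta_j/\tau\rvert^{\alpha}$ | 0 | 0 | Stable |
| Generalized Double-Pareto | $\frac{(1+\alpha)}{\tau}\log\left(1 + \frac{\lvert\theta_j\rvert}{\alpha\tau}\right)$ | 0 | 0 | Exp-Gamma |

3 Marks’ approach for constrained optimization

3.1 Constrained problems in Statistical Inference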

Statistical Inference and System Identification techniques include a variety of methods that can be used in order

to obtain a model of a system from data. Classical methods, such as Least Squares, ML, MAP [20], Prediction

Error Method, Instrumental Variables [43], and Stochastic Embedding [32] have been considered in the literature for

such tasks. However, the increasing complexity of modern system models has motivated researchers to revisit and

reconsider those techniques for some problems. This has resulted in the incorporation of constraints and penalties,

yielding an often more complicated optimization problem. For instance, it has been shown that the incorporation

of linear equality constraints may improve the accuracy of the parameter estimates, see e.g [33]. On the other

hand, the incorporation of regularization terms (or penalties) also improves the accuracy of the estimates, reducing

the effect of noise and eliminating spurious local minima [30]. Regularization can be mainly incorporated in two

ways: by adding regularizing constraints (a penalty function) or by including a probability density function (pdf)

as a prior distribution for the parameters, see e.g. [17]. Another way to improve the estimation is by incorporating

inequality constraints, where certain functions of the parameters may be required, for physical reasons amongst

others, to lie between certain bounds [25]. From this point of view, it is possible to consider the classical methods

with constraints or penalties, as in [25, 28, 30, 32].

Perhaps one of the most utilized approaches for penalized estimation (with complicated non-linear expressions)

is the MM algorithm – for details on the MM algorithm see Section 2.2. This technique allows for the utilization of

a surrogate function that is simple to handle, in terms of derivatives and optimization techniques, and that is, in

turn, iteratively solved. However, its inequality constraint counterpart, here referred to as Marks’ approach [34],

is not as well known as the MM algorithm. Moreover, there is no straightforward manner to obtain such a surrogate

function. In this paper we focus on a systematic way to obtain the corresponding surrogate function using Marks’

approach for a class of constraints.

3.2 Marks’ approach

The approach in [34] deals with inequality constraints by using a similar approach to the EM and MM algorithms.

The basic idea is, again, to generate a surrogate function that allows for an iterative procedure whose optimum

value is the optimum value of the original optimization problem.

Let us consider the following constrained optimization problem:

$$\theta^{*} = \arg\min_{\theta}\, f(\theta) \quad \text{s.t. } g(\theta) \le 0, \tag{9}$$

where f (θ) is the objective function and g(θ) encodes the constraint of the optimization problem. In particular,

let us focus on the case where g(θ) is not a convex function. This implies that the optimization problem cannot

be solved directly using standard techniques, such as quadratic programming or fractional programming. This

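difficulty can be overcome by utilizing a surrogate function $Q(\theta,\hat{\theta}^{(i)})$ at a given estimate $\hat{\theta}^{(i)}$, such that

$$g(\theta) \le Q(\theta,\hat{\theta}^{(i)}), \tag{10}$$

$$g(\hat{\theta}^{(i)}) = Q(\hat{\theta}^{(i)},\hat{\theta}^{(i)}), \tag{11}$$

$$\left.\frac{d}{d\theta}\, g(\theta)\right|_{\theta=\hat{\theta}^{(i)}} = \left.\frac{d}{d\theta}\, Q(\theta,\hat{\theta}^{(i)})\right|_{\theta=\hat{\theta}^{(i)}}. \tag{12}$$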

Provided the above properties are satisfied, the following approximation of (9):

$$\hat{\theta}^{(i+1)} = \arg\min_{\theta}\, f(\theta) \quad \text{s.t. } Q(\theta,\hat{\theta}^{(i)}) \le 0, \tag{13}$$

iteratively converges to the solution of the optimization problem (9). As shown in [34], the optimization problem in (13) is equivalent to the original problem (9), since the solution of (13) converges to a point that satisfies the Karush-Kuhn-Tucker conditions of the original optimization problem.
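To make the iteration in (13) concrete, consider a toy scalar problem (chosen here for illustration; it does not appear in the paper): minimize $(\theta - 3)^2$ subject to $g(\theta) = \sqrt{\theta} - 1 \le 0$ with $\theta > 0$. Since $g$ is concave, its tangent at the current iterate is an upper bound that satisfies (10)-(12), and each subproblem reduces to clipping the unconstrained minimizer. The function name and default arguments below are hypothetical.

```python
import math


def marks_iteration(theta0, target=3.0, n_iter=10):
    """Iterate (13) for: min (theta - target)**2  s.t.  sqrt(theta) - 1 <= 0.

    The concave constraint g(theta) = sqrt(theta) - 1 is replaced by its tangent
    at the current iterate t, Q(theta, t) = sqrt(t) + (theta - t)/(2*sqrt(t)) - 1,
    which satisfies g <= Q, g(t) = Q(t, t) and has the same derivative at t.
    """
    theta = theta0
    history = [theta]
    for _ in range(n_iter):
        # Q(theta, t) <= 0  is equivalent to  theta <= 2*sqrt(t) - t.
        upper = 2.0 * math.sqrt(theta) - theta
        # The scalar convex subproblem is solved by clipping the unconstrained minimizer.
        theta = min(target, upper)
        history.append(theta)
    return theta, history


theta_hat, history = marks_iteration(theta0=0.25)
print(history)
```

Starting from the feasible point 0.25, every iterate stays feasible for the original constraint and the sequence approaches the constrained minimizer $\theta^{*} = 1$.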

Remark 2 Marks’ approach can be considered as a generalization of the MM algorithm, since the latter can be derived (for a broad class of problems) from the former. Let us consider the following problem:

$$\theta^{*} = \arg\min_{\theta}\, f(\theta). \tag{14}$$

Using the epigraph representation of (14) [24], we obtain the equivalent problem

$$\theta^{*} = \arg\min_{\theta}\, t \quad \text{s.t. } f(\theta) \le t. \tag{15}$$

Using Marks’ approach (13), we can iteratively find a local optimum of (14) via

$$\hat{\theta}^{(i+1)} = \arg\min_{\theta}\, t \quad \text{s.t. } Q(\theta,\hat{\theta}^{(i)}) \le t, \tag{16}$$

where $Q(\theta,\hat{\theta}^{(i)})$ in (16) is a surrogate function for $f(\theta)$ in (14). From the epigraph representation we then obtain

$$\hat{\theta}^{(i+1)} = \arg\min_{\theta}\, Q(\theta,\hat{\theta}^{(i)}), \tag{17}$$

which is the definition of the MM algorithm (see Section 2.2 for more details).

4 A systematic approach to construct surrogate functions for a class of optimization problems

Here, we consider a general optimization cost defined as:

$$\mathcal{V}(\theta) = \int_{\Omega(y)} K(z,\theta)\, d\mu(z), \tag{18}$$

where $\theta$ is a parameter vector, $y$ is the given data (i.e. measurements), $z$ is the complete data (comprising the observed data $y$ and the hidden variables, i.e. the unobserved data), $\Omega(y)$ is a mapping from the sample space of $z$ to the sample space of $y$, $K(\cdot,\cdot)$ is a (positive) kernel function, and $\mu(\cdot)$ is a measure, see e.g. [12]. The definition in (18) is based on the definition of the auxiliary function $Q$ in the EM algorithm [10, eq. (1.1)], where it is assumed throughout the paper that there is a mapping that relates the unobserved data to the observed data, and that the complete data lies in $\Omega(y)$ [10]. Notice that in (18) the kernel function may not be a pdf. However, several functions can be expressed in terms of a pdf. The most common cases are Gaussian kernels (yielding VMGMs) [3] and Laplace kernels (yielding Laplace mixtures) [16].

Remark 3 Notice that, as explained in Section 1, once the hidden data has been selected, the data augmentation

procedure comes with the definition of V(θ) in (18). From here, we follow the systematic nature of the EM and

MM algorithms in terms of the iterative nature of the technique.


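Since we are considering the optimization of the function $\mathcal{V}(\theta)$, we can also consider the optimization of the function

$$\mathcal{J}(\theta) = \log \mathcal{V}(\theta). \tag{19}$$

Without modifying the cost function in (19), we can multiply and divide the argument of the logarithm by the kernel function, obtaining:

$$\mathcal{J}(\theta) = \log \mathcal{V}(\theta) = \log\left[\mathcal{V}(\theta)\,\frac{K(z,\theta)}{K(z,\theta)}\right] = \log K(z,\theta) - \log\frac{K(z,\theta)}{\mathcal{V}(\theta)}. \tag{20}$$

Let us assume that at the $i$th iteration we have the estimate $\hat{\theta}^{(i)}$. Then, we can multiply by $K(z,\hat{\theta}^{(i)})/\mathcal{V}(\hat{\theta}^{(i)})$ and integrate both sides of (20) with respect to $d\mu(z)$, obtaining:

$$\mathcal{J}(\theta) = \int_{\Omega(y)} \log \mathcal{V}(\theta)\, \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z) = \log \mathcal{V}(\theta) = Q(\theta,\hat{\theta}^{(i)}) - H(\theta,\hat{\theta}^{(i)}), \tag{21}$$

where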

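$$Q(\theta,\hat{\theta}^{(i)}) = \int_{\Omega(y)} \log[K(z,\theta)]\, \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z), \tag{22}$$

$$H(\theta,\hat{\theta}^{(i)}) = \int_{\Omega(y)} \log\left[\frac{K(z,\theta)}{\mathcal{V}(\theta)}\right] \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z), \tag{23}$$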

are auxiliary functions. As in the EM algorithm, for any θ, and using Jensen’s inequality [12, pp. 24–25], we have:

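$$\begin{aligned}
H(\theta,\hat{\theta}^{(i)}) - H(\hat{\theta}^{(i)},\hat{\theta}^{(i)}) &= \int_{\Omega(y)} \log\left[\frac{K(z,\theta)}{\mathcal{V}(\theta)}\right] \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z) - \int_{\Omega(y)} \log\left[\frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\right] \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z) \\
&= \int_{\Omega(y)} \log\left[\frac{K(z,\theta)\,\mathcal{V}(\hat{\theta}^{(i)})}{\mathcal{V}(\theta)\,K(z,\hat{\theta}^{(i)})}\right] \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z) \\
&\le \log \int_{\Omega(y)} \frac{K(z,\theta)}{\mathcal{V}(\theta)}\, d\mu(z) \\
&= 0.
\end{aligned} \tag{24}$$

Hence, for any value of $\theta$, $H(\theta,\hat{\theta}^{(i)}) \le H(\hat{\theta}^{(i)},\hat{\theta}^{(i)})$; that is, the function $H(\theta,\hat{\theta}^{(i)})$ in (23) cannot increase when moving away from $\hat{\theta}^{(i)}$.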

Remark 4 The kernel function K(z, θ) satisfies the standing assumption K(z, θ) > 0 since the proposed scheme

is built, among others, on the logarithm of the kernel function K(z, θ). The definition of the kernel function in

(18) allows for kernels that are not pdf ’s. On the other hand, some kernels may correspond to a scaled version of

a pdf. In that sense, for the cost function in (18) we can define a new kernel and a new measure as

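$$\bar{K}(z,\theta) = \frac{K(z,\theta)}{\int K(z,\theta)\, d\theta}, \qquad d\bar{\mu}(z) = \left[\int K(z,\theta)\, d\theta\right] d\mu(z) \quad \Rightarrow \quad \mathcal{V}(\theta) = \int_{\Omega(y)} \bar{K}(z,\theta)\, d\bar{\mu}(z).$$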

Remark 5 In the proposed methodology, it is possible to optimize the surrogate function defined by

$$\bar{Q}(\theta,\hat{\theta}^{(i)}) = \int_{\Omega(y)} \log[K(z,\theta)]\, K(z,\hat{\theta}^{(i)})\, d\mu(z), \tag{25}$$

since $\mathcal{V}(\hat{\theta}^{(i)})$ in (22) does not depend on the parameter $\theta$. Thus, the proposed method corresponds to a variation of the EM algorithm that is not limited to probability density functions (e.g. the likelihood function) for solving ML and MAP estimation problems. Instead, our version considers general measures ($\mu(z)$), where the mapping over the measurement data $\Omega(y)$ is a given set.

The idea behind using a surrogate function is to obtain a simpler algorithm for the optimization of the objective

function when compared to the original optimization problem. This can be achieved iteratively if the Fisher Identity

for the surrogate function and the objective function is satisfied. That is,

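$$\left.\frac{\partial}{\partial\theta}\, \mathcal{J}(\theta)\right|_{\theta=\hat{\theta}^{(i)}} = \left.\frac{\partial}{\partial\theta}\, Q(\theta,\hat{\theta}^{(i)})\right|_{\theta=\hat{\theta}^{(i)}}. \tag{26}$$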

Lemma 1 For the class of objective functions in (18), the surrogate function $Q(\theta,\hat{\theta}^{(i)})$ in (22) satisfies the Fisher identity defined in (26).

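Proof: From (21) we have:

$$\left.\frac{\partial}{\partial\theta}\, \mathcal{J}(\theta)\right|_{\theta=\hat{\theta}^{(i)}} = \left.\frac{\partial}{\partial\theta}\, Q(\theta,\hat{\theta}^{(i)})\right|_{\theta=\hat{\theta}^{(i)}} - \left.\frac{\partial}{\partial\theta}\, H(\theta,\hat{\theta}^{(i)})\right|_{\theta=\hat{\theta}^{(i)}}.$$

Next, let us consider the gradient of the auxiliary function $H(\theta,\hat{\theta}^{(i)})$:

$$\begin{aligned}
\left.\frac{\partial}{\partial\theta}\, H(\theta,\hat{\theta}^{(i)})\right|_{\theta=\hat{\theta}^{(i)}} &= \int_{\Omega(y)} \left.\left[\frac{K(z,\theta)}{\mathcal{V}(\theta)}\right]^{-1}\right|_{\theta=\hat{\theta}^{(i)}} \left.\frac{\partial}{\partial\theta}\left[\frac{K(z,\theta)}{\mathcal{V}(\theta)}\right]\right|_{\theta=\hat{\theta}^{(i)}} \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z) \\
&= \int_{\Omega(y)} \left.\frac{\partial}{\partial\theta}\left[\frac{K(z,\theta)}{\mathcal{V}(\theta)}\right]\right|_{\theta=\hat{\theta}^{(i)}} d\mu(z) \\
&= \left.\frac{\partial}{\partial\theta} \int_{\Omega(y)} \frac{K(z,\theta)}{\mathcal{V}(\theta)}\, d\mu(z)\right|_{\theta=\hat{\theta}^{(i)}} \\
&= 0.
\end{aligned}$$

Hence, (26) holds.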

Remark 6 Note that the Fisher identity in Lemma 1 is well known in the EM framework. However, we have specialized this result for the problem in this paper (i.e. when K(z, θ) is not necessarily a probability density function).

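Lemma 2 The surrogate function $Q(\theta,\hat{\theta}^{(i)})$ in (22) can be utilized to obtain an adequate surrogate function that satisfies the properties in Marks’ approach in (10)-(12).

Proof: Notice that in Marks’ approach the optimization problem corresponds to the minimization of the objective function. Hence, to maximize, we have $\mathcal{J}(\theta) = -Q(\theta,\hat{\theta}^{(i)}) + H(\theta,\hat{\theta}^{(i)})$. From (21) we can construct the surrogate functions $\tilde{Q}(\theta,\hat{\theta}^{(i)})$ and $\tilde{H}(\theta,\hat{\theta}^{(i)})$ since

$$\mathcal{J}(\theta) = -\underbrace{\left(Q(\theta,\hat{\theta}^{(i)}) - Q(\hat{\theta}^{(i)},\hat{\theta}^{(i)}) + \mathcal{J}(\hat{\theta}^{(i)})\right)}_{\tilde{Q}(\theta,\hat{\theta}^{(i)})} + \underbrace{\left(H(\theta,\hat{\theta}^{(i)}) - Q(\hat{\theta}^{(i)},\hat{\theta}^{(i)}) + \mathcal{J}(\hat{\theta}^{(i)})\right)}_{\tilde{H}(\theta,\hat{\theta}^{(i)})}. \tag{27}$$

The function $\tilde{H}(\theta,\hat{\theta}^{(i)})$ satisfies $\tilde{H}(\theta,\hat{\theta}^{(i)}) - \tilde{H}(\hat{\theta}^{(i)},\hat{\theta}^{(i)}) \ge 0$, which implies that $\mathcal{J}(\theta) \le \tilde{Q}(\theta,\hat{\theta}^{(i)})$, satisfying (10). From $\tilde{Q}(\theta,\hat{\theta}^{(i)}) = Q(\theta,\hat{\theta}^{(i)}) - Q(\hat{\theta}^{(i)},\hat{\theta}^{(i)}) + \mathcal{J}(\hat{\theta}^{(i)})$ we can obtain $\tilde{Q}(\hat{\theta}^{(i)},\hat{\theta}^{(i)}) = \mathcal{J}(\hat{\theta}^{(i)})$, satisfying (11). Finally, given that the auxiliary function $\tilde{H}(\theta,\hat{\theta}^{(i)})$ satisfies (26), $\tilde{Q}(\theta,\hat{\theta}^{(i)})$ satisfies (12).

Remark 7 Since $\frac{d}{d\theta} Q(\theta,\hat{\theta}^{(i)}) = \frac{d}{d\theta} \tilde{Q}(\theta,\hat{\theta}^{(i)})$, it is simpler to consider the function $Q(\theta,\hat{\theta}^{(i)})$ instead of $\tilde{Q}(\theta,\hat{\theta}^{(i)})$ in penalized (regularized) and MAP estimation problems, as shown in [6] and [17].

We summarize our proposed algorithm in Table 2.

Table 2: Proposed algorithm.

Step 1: Find a kernel that satisfies (18).

Step 2: i = 0.

Step 3: Obtain an initial guess $\hat{\theta}^{(i)}$.

Step 4: Compute $Q(\theta,\hat{\theta}^{(i)})$ as in (22).

Step 5: Compute $\tilde{Q}(\theta,\hat{\theta}^{(i)})$.

Step 6: Incorporate $\tilde{Q}(\theta,\hat{\theta}^{(i)})$ in the optimization problem and solve.

Step 7: i = i + 1 and back to Step 4 until convergence.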

5 A quadratic surrogate function for a class of kernels

In this paper we focus on a special class of the kernel functions K(z, θ). For this particular class, the following is satisfied:

$$\frac{\partial}{\partial\theta} \log[K(z,\theta)] = A(z)\theta + b, \tag{28}$$

where A(z) is a matrix and b is a vector, both of adequate dimensions. Then, we have that

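$$\begin{aligned}
\frac{\partial}{\partial\theta}\, Q(\theta,\hat{\theta}^{(i)}) &= \int_{\Omega(y)} [A(z)\theta + b]\, \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z) \\
&= \left[\int_{\Omega(y)} A(z)\, \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z)\right]\theta + b \int_{\Omega(y)} \frac{K(z,\hat{\theta}^{(i)})}{\mathcal{V}(\hat{\theta}^{(i)})}\, d\mu(z) \\
&= R\theta + b.
\end{aligned} \tag{29}$$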

Remark 8 Notice that the previous expression is linear with respect to $\theta$. This implies that the function $Q(\theta,\hat{\theta}^{(i)})$ is quadratic with respect to the parameter vector $\theta$.

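From the Fisher Identity in (26) we have that

$$\left.\frac{\partial}{\partial\theta}\, \mathcal{J}(\theta)\right|_{\theta=\hat{\theta}^{(i)}} = R\hat{\theta}^{(i)} + b, \tag{30}$$

from which we can solve for $R$ in some cases². In particular, if $A(z)$ is a diagonal matrix, then $R$ is also a diagonal matrix defined by $R = \mathrm{diag}[r_1, r_2, \ldots]$. Thus, we have

$$\left.\frac{\partial}{\partial\theta_k}\, \mathcal{J}(\theta)\right|_{\theta=\hat{\theta}^{(i)}} = r_k \hat{\theta}_k^{(i)} + b_k \quad \Rightarrow \quad r_k = \frac{\left.\frac{\partial}{\partial\theta_k}\, \mathcal{J}(\theta)\right|_{\theta=\hat{\theta}^{(i)}} - b_k}{\hat{\theta}_k^{(i)}},$$

where $\theta_k$ is the $k$th component of the parameter vector $\theta$, $\hat{\theta}_k^{(i)}$ is the $k$th component of the estimate $\hat{\theta}^{(i)}$, $r_k$ is the $k$th element of the diagonal of $R$, and $b_k$ is the $k$th element of the vector $b$. Hence, when optimizing the auxiliary function $Q(\theta,\hat{\theta}^{(i)})$ we obtain

$$\frac{\partial}{\partial\theta}\, Q(\theta,\hat{\theta}^{(i)}) = \mathrm{diag}[r_1, r_2, \ldots]\,\theta + b = 0 \quad \Rightarrow \quad \hat{\theta}_k^{(i+1)} = -\frac{b_k}{r_k}.$$

Equivalently,

$$\hat{\theta}^{(i+1)} = -R^{-1} b. \tag{31}$$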

This implies that in our approach, it is not necessary to obtain the auxiliary function Q and optimize it. Instead,

by computing R and b at every iteration, the new estimate can be obtained.
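The following minimal sketch shows how the Fisher identity (30) can supply $r_k$ without evaluating any integral, in the penalized setting mentioned in Remark 7. It assumes a scalar model $y = \theta + n$ with unit-variance Gaussian noise and a Laplace prior, so the objective is $-\frac{1}{2}(y-\theta)^2 - a|\theta|$. With the Gaussian kernel of (8), $\partial \log K/\partial\theta = -\theta/\lambda$, hence $b = 0$ and (30) gives $r = -a/|\hat{\theta}^{(i)}|$. The model, the function names and the handling of $\theta = 0$ are assumptions made here for illustration.

```python
def map_laplace_quadratic(y, a, theta0, n_iter=60):
    """Scalar MAP estimate for y = theta + noise with a Laplace prior (toy example).

    The log-prior J(theta) = -a*|theta| (up to a constant) has a quadratic
    surrogate with b = 0, so (30) gives r = J'(theta_i) / theta_i = -a / |theta_i|.
    """
    theta = theta0
    for _ in range(n_iter):
        if theta == 0.0:
            break  # r is undefined at zero; zero is kept as a fixed point here
        r = -a / abs(theta)
        # Stationarity of -0.5*(y - theta)**2 + 0.5*r*theta**2:
        # (y - theta) + r*theta = 0.
        theta = y / (1.0 - r)
    return theta


def soft_threshold(y, a):
    """Closed-form minimizer of 0.5*(y - theta)**2 + a*|theta|, used as a check."""
    return max(abs(y) - a, 0.0) * (1.0 if y >= 0 else -1.0)


for y in (3.0, -3.0, 0.5):
    print(y, map_laplace_quadratic(y, a=1.0, theta0=y), soft_threshold(y, a=1.0))
```

In this toy case the iterates approach the soft-thresholding solution, which is the known minimizer of the penalized cost, so the quadratic surrogate can be checked against a closed form.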

For the class of kernels here described, the proposed method for constructing surrogate functions can also be

understood as part of sequential quadratic programming (SQP) methods [15, ch. 12.4]. Indeed, the general case

of equality and inequality-constrained minimization problems is defined as [29, ch. 4]:

θ ∗ = arg min f (θ)

θ

s. t. h(θ) = 0 , g(θ) ≤ 0,

which is solved by iteratively defining quadratic functions that approximate the objective function and the inequality

(i)

constraint around a current iterate θ̂ . In the same way, our proposal generates an algorithm with quadratic

surrogate functions.

6 Numerical Examples

In this section, we illustrate our proposed algorithm with two numerical examples.

6.1 Example 1: Estimation of the Distribution of Stellar Rotational Velocities

One of the many problems that Astronomy deals with is the estimation of the rotational velocities of stars. This particular problem is of great importance, since it allows astronomers to describe and model star formation, the internal structure and evolution of stars, as well as how they interact with other stars, see e.g. [7–9].

Modern telescopes allow for the measurement of the rotational velocities from the telescope point of view, that

is, a projection of the true rotational velocity. This is modelled (spatially) as the convolution of the true rotational

velocity pdf and a uniform distribution over the sphere (for more details see e.g. [9]):

$$p(y|\sigma) = \int_{y}^{\infty} \frac{y}{x\sqrt{x^2 - y^2}}\; p(x|\sigma)\, dx, \tag{32}$$

where $p(y|\sigma)$ is the uniform projected rotational velocity pdf, $p(x|\sigma)$ is the true rotational velocity pdf to be estimated, and $\sigma$ is a hyperparameter.

² The matrix R can also be computed using Monte Carlo algorithms.

A common model for $p(x|\sigma)$ found in the Astronomy literature is the Maxwellian distribution (see e.g. [9, 11])

$$p(x|\sigma) = \sqrt{\frac{2}{\pi}}\, \frac{1}{\sigma^3}\, x^2 e^{-x^2/(2\sigma^2)}. \tag{33}$$

In practice, the measurements correspond to realizations of $p(y|\sigma)$ [9], from which the likelihood function can be defined as:

$$p(y|\sigma) = \prod_{k=1}^{N} p(y_k|\sigma), \tag{34}$$

where $y = [y_1, \ldots, y_N]^T$,

$$p(y_k|\sigma) = \int_{y_k}^{\infty} \frac{y_k}{x_k\sqrt{x_k^2 - y_k^2}}\; p(x_k|\sigma)\, dx_k,$$

$x_k$ is Maxwellian distributed, and $N$ is the number of measurement points. Hence, the log-likelihood function can be expressed as:

$$\ell(\sigma) = \sum_{k=1}^{N} \log\left[\int_{y_k}^{\infty} \frac{y_k}{x_k\sqrt{x_k^2 - y_k^2}}\; p(x_k|\sigma)\, dx_k\right]. \tag{35}$$

If we define the complete data $z = (x, y)$, the kernel function $K(\cdot,\cdot)$ and the measure $\mu(\cdot)$ in (18) can be defined as

$$K(x_k,\sigma) = p(x_k|\sigma) = \sqrt{\frac{2}{\pi}}\, \frac{x_k^2}{\sigma^3}\, e^{-x_k^2/(2\sigma^2)}, \tag{36}$$

and

$$d\mu(x_k, y_k) = \frac{y_k}{x_k\sqrt{x_k^2 - y_k^2}}\, dx_k. \tag{37}$$

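Then, the log-likelihood function in (35) can be written as:

$$\ell(\sigma) = \sum_{k=1}^{N} \log\left[\mathcal{V}_k(\sigma)\right], \tag{38}$$

with

$$\mathcal{V}_k(\sigma) = \int_{y_k}^{\infty} K(x_k,\sigma)\, d\mu(x_k, y_k). \tag{39}$$

Thus, the ML estimator is obtained from:

$$\hat{\sigma}_{\mathrm{ML}} = \arg\max_{\sigma} \sum_{k=1}^{N} \log \mathcal{V}_k(\sigma). \tag{40}$$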

Since the parameter that is needed to be estimated is part of the convolution in (32), the optimization problem in

(40) cannot be solved in a straightforward manner. Instead, we utilize the re-interpretation of the EM algorithm

that we propose for solving (40).

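First, notice that the surrogate function $Q(\sigma,\hat{\sigma}^{(i)})$ can be expressed as:

$$Q(\sigma,\hat{\sigma}^{(i)}) = \sum_{k=1}^{N} Q_k(\sigma,\hat{\sigma}^{(i)}), \tag{41}$$

with

$$Q_k(\sigma,\hat{\sigma}^{(i)}) = \int_{y_k}^{\infty} \log(K(x_k,\sigma))\, \frac{K(x_k,\hat{\sigma}^{(i)})}{\mathcal{V}_k(\hat{\sigma}^{(i)})}\, d\mu(x_k, y_k). \tag{42}$$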

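For convenience, we can differentiate the auxiliary function $Q(\sigma,\hat{\sigma}^{(i)})$ in (41) with respect to $1/\sigma$, obtaining:

$$\frac{\partial Q(\sigma,\hat{\sigma}^{(i)})}{\partial(1/\sigma)} = \sum_{k=1}^{N} \int_{y_k}^{\infty} \left[3\sigma - \frac{x_k^2}{\sigma}\right] \frac{K(x_k,\hat{\sigma}^{(i)})}{\mathcal{V}_k(\hat{\sigma}^{(i)})}\, d\mu(x_k, y_k). \tag{43}$$

Then, equating to zero and solving for $\sigma$ we finally obtain

$$\hat{\sigma}^{(i+1)} = \sqrt{\frac{S(y,\hat{\sigma}^{(i)})}{3N}}, \tag{44}$$

where

$$S(y,\hat{\sigma}^{(i)}) = \sum_{k=1}^{N} \int_{y_k}^{\infty} x_k^2\, \frac{K(x_k,\hat{\sigma}^{(i)})}{\mathcal{V}_k(\hat{\sigma}^{(i)})}\, d\mu(x_k, y_k). \tag{45}$$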

In Table 3 we summarize the specialisation of our proposed algorithm for this example.

Table 3: Proposed algorithm for Maxwellian distribution estimation in Example 1.

Step 1: i = 0.

Step 2: Obtain an initial guess σ̂ (i) .

Step 3: Compute the integral given by (45).

Step 4: Compute σ̂ (i+1) using (44).

Step 5: i = i + 1 and back to Step 3 until convergence.
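The steps in Table 3 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the integrals in (39) and (45) are evaluated with a composite Simpson rule after the substitution $x_k^2 = y_k^2 + s^2$, which turns $d\mu(x_k, y_k)$ into $y_k/(y_k^2 + s^2)\, ds$ and removes the singularity at $x_k = y_k$; the data are drawn as the length of the two-dimensional projection of an isotropic three-dimensional Gaussian vector, which is assumed here to be equivalent to sampling from (32) (the paper uses a Slice Sampler instead). All function names and the sample size are hypothetical.

```python
import math
import random


def conditional_second_moment(y, sigma, n=200, width=10.0):
    """Ratio of the k-th integral in (45) to V_k in (39), i.e. E[x^2 | y, sigma].

    Uses x^2 = y^2 + s^2 (an implementation choice, not from the paper) and a
    composite Simpson rule on s in [0, width * sigma]; n must be even.
    """
    h = width * sigma / n
    num = den = 0.0
    for j in range(n + 1):
        s = j * h
        # Kernel (36) times the transformed measure density, up to factors that
        # do not depend on s (they cancel in the ratio below).
        f = math.exp(-s * s / (2.0 * sigma * sigma))
        w = 1.0 if j in (0, n) else (4.0 if j % 2 else 2.0)  # Simpson weights
        num += w * (y * y + s * s) * f
        den += w * f
    return num / den


def estimate_sigma(data, sigma0, tol=1e-6, max_iter=100):
    """Iterate (44)-(45) until the relative change falls below tol."""
    sigma = sigma0
    for _ in range(max_iter):
        s_total = sum(conditional_second_moment(y, sigma) for y in data)
        sigma_new = math.sqrt(s_total / (3.0 * len(data)))
        if abs(sigma_new - sigma) / abs(sigma_new) < tol:
            return sigma_new
        sigma = sigma_new
    return sigma


random.seed(0)
sigma_true = 8.0
# Hypothetical data generator: 2-D projection of an isotropic 3-D Gaussian vector.
data = [math.hypot(random.gauss(0, sigma_true), random.gauss(0, sigma_true))
        for _ in range(200)]
sigma_hat = estimate_sigma(data, sigma0=1.0)
print(sigma_hat)
```

Under the sampling assumption above, the projected velocities are Rayleigh distributed, so $\sqrt{\sum_k y_k^2/(2N)}$ offers a convenient sanity check on the fixed point of the iteration.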

For the numerical simulation, we have considered the problem solved in [9], with the true dispersion parameter

σ0 = 8. The measurement data y = [y1 , ..., yN ] was generated using the Slice Sampler (see e.g. [40]) applied to

(32). The simulation setup is as follows:

• The data length is given by N = 10000.

• The number of Monte Carlo (MC) simulations is 50.

• The stopping criterion is given by $\|\hat{\sigma}^{(i)} - \hat{\sigma}^{(i-1)}\| / \|\hat{\sigma}^{(i)}\| < 10^{-6}$, or the maximum number of iterations (100) has been reached.

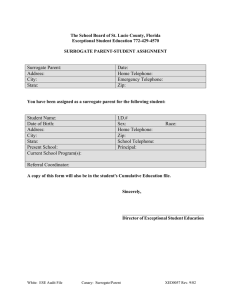

The results are shown in Fig. 1, where the estimated p(x|σ) for each MC simulation is shown. It is clear that the estimated Maxwellian distributions are very similar to the true density. The mean value of the estimated parameter was σ̂ = 7.9920. The estimate from each MC simulation is shown in Fig. 2. It can be clearly seen that the estimated parameter σ̂ is close to the true value.

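6.2 Example 2: Inequality Constrained ML Estimation

Let us consider the following inequality constrained ML estimation problem:

$$\theta^{*} = \arg\min_{\theta}\, \|y - A\theta\|_2^2 \quad \text{s.t. } f(\theta) = \sum_{m=1}^{M} \left\| \frac{\sqrt{\beta_m}}{\tau}\, \theta_m \right\|_2^{q} \le \gamma, \tag{46}$$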

where $\theta = [\theta_1^T, \ldots, \theta_M^T]^T$ is an unknown parameter vector, $A$ is the regressor matrix, and $y$ corresponds to the measurements, with $q = 0.4$, $\tau = 0.2$, and $\gamma = 7$. Notice that the constraint function belongs to a family of sparsity constraints found in sparse system identification and optimization, see e.g. [28] and the references therein. The function of the unknown parameter in the inequality constraint can be understood as its “group $\ell_q$-norm”, with $M$ groups and where $\theta_m$ is the $m$th group of length $\beta_m$³. Hence, the inequality constraint can be expressed as a Multivariate Power Exponential (MPE) distribution [44] of $\theta$. On the other hand, the MPE distributions can be expressed as VMGMs [31], which allows for utilizing the ideas presented in Section 4, where the corresponding kernel function is a multivariate Gaussian distribution. In this example, the elements of the matrix $A$ were generated using a Normal distribution ($\mathcal{N}(0, 1)$) and the measurement data was generated from

$$y = A\theta + n,$$

where $n \sim \mathcal{N}(0, 0.01 I)$ is the additive measurement noise. Here the number of measurements is 256 and $\theta = [0.54, 1.83, -2.26, 0, 0, 0, 0, 0, 0, 0.86, 0.32, -1.31]^T$, with $\beta_m = 3\ \forall m$, and thus $M = 4$. We have also considered 150 Monte Carlo simulations.

³ Notice that when m = 1 we obtain the standard $\ell_q$-norm, and when q = 1 we obtain the $\ell_1$-norm utilized in the Lasso.

Figure 1: Convergence of the proposed approach to the global optimum

Figure 2: Convergence of the proposed approach to the global optimum

Figure 3: Convergence of the proposed approach to the global optimum

For the attainment of the surrogate function we consider the following:

1. When expressed as an MPE distribution, the group $\ell_q$-norm is given by [44],

$$p(\theta_m) = \kappa_m(q,\tau)\, \exp\left(-\left\| \frac{\sqrt{\beta_m}}{\tau}\, \theta_m \right\|_2^{q}\right),$$

where $\kappa_m(q,\tau)$ is a constant that depends on $\tau$ and $q$, and

$$p(\theta) = \prod_{m=1}^{M} p(\theta_m).$$

2. When expressed as a VMGM, the group $\ell_q$-norm is given by

$$p(\theta_m) = \int \mathcal{N}_{\theta_m}(0, \Sigma(\lambda_m))\, p(\lambda_m)\, d\lambda_m.$$

3. With the previous expressions, and using the results in Section 5, we notice that

$$\frac{\partial Q(\theta,\hat{\theta}^{(i)})}{\partial \theta_m} = -k_m^{(i)}\, \theta_m,$$

where $\hat{\theta}_m^{(i)}$ is the estimate of the $m$th group at the $i$th iteration and $k_m^{(i)}$ is a constant that depends on $\hat{\theta}_m^{(i)}$.

4. Then we obtain $Q(\theta,\hat{\theta}^{(i)}) = -\sum_{m=1}^{M} \frac{k_m^{(i)}}{2}\, \theta_m^T \theta_m + c$, where $c$ is a constant.

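Then, the inequality constraint in (46) can be replaced with the following constraint (that includes the surrogate function $\tilde{Q}(\theta,\hat{\theta}^{(i)})$ as shown in (27))

$$\sum_{m=1}^{M} \left[ \frac{k_m^{(i)}}{2} \left( \theta_m^T \theta_m - (\hat{\theta}_m^{(i)})^T (\hat{\theta}_m^{(i)}) \right) + \left\| \frac{\sqrt{\beta_m}}{\tau}\, \hat{\theta}_m^{(i)} \right\|_2^{q} \right] \le \gamma. \tag{47}$$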
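The surrogate constraint in (47) can be sketched numerically as follows. The paper only states that $k_m^{(i)}$ is a constant depending on $\hat{\theta}_m^{(i)}$; the explicit form used below, $k_m^{(i)} = q\,(\sqrt{\beta_m}/\tau)^q\, \|\hat{\theta}_m^{(i)}\|_2^{q-2}$, is an assumption obtained here by matching gradients at $\hat{\theta}^{(i)}$ as in (12), and it requires every reference group to be non-zero. Function names and the reference point are hypothetical.

```python
import math
import random


def group_lq(theta_groups, q, tau):
    """Constraint function in (46): sum_m ||sqrt(beta_m)/tau * theta_m||_2 ** q."""
    total = 0.0
    for g in theta_groups:
        norm = math.sqrt(sum(v * v for v in g))
        total += (math.sqrt(len(g)) / tau * norm) ** q
    return total


def surrogate_lq(theta_groups, ref_groups, q, tau):
    """Left-hand side of (47), with k_m taken as the tangent slope (assumed form)."""
    total = 0.0
    for g, ref in zip(theta_groups, ref_groups):
        sq = sum(v * v for v in g)
        ref_sq = sum(v * v for v in ref)
        scale = (math.sqrt(len(ref)) / tau) ** q
        # Assumed constant: k_m = q * (sqrt(beta_m)/tau)**q * ||ref_m||**(q - 2).
        k = q * scale * ref_sq ** (0.5 * q - 1.0)
        total += 0.5 * k * (sq - ref_sq) + scale * ref_sq ** (0.5 * q)
    return total


q, tau = 0.4, 0.2
# Hypothetical reference point with four non-zero groups of length three.
ref = [[0.54, 1.83, -2.26], [0.1, -0.2, 0.05], [0.3, 0.1, 0.2], [0.86, 0.32, -1.31]]
random.seed(1)
trials = [[[random.uniform(-3, 3) for _ in range(3)] for _ in range(4)]
          for _ in range(200)]
gaps = [surrogate_lq(t, ref, q, tau) - group_lq(t, q, tau) for t in trials]
print(min(gaps), surrogate_lq(ref, ref, q, tau) - group_lq(ref, q, tau))
```

Because $t \mapsto t^{q/2}$ is concave for $q < 2$, the quadratic expression upper-bounds the group $\ell_q$ term and touches it at the reference point, which is the behaviour required by (10) and (11); any point satisfying (47) is therefore feasible for the original constraint.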

The result of the optimization problem for one realization is shown in Fig. 3, where the optimization problem with the surrogate function was solved using the optimization software CVX [22, 23] and the original non-convex problem was solved using the optimization software BARON [41, 47], which allowed us to obtain the global optimum. From Fig. 3 it can be seen that our approach reaches the global minimum in only a few iterations. We have also computed the mean square error (MSE) of the estimates and compared it with that of the unconstrained problem (least squares). The MSE obtained with our approach is 3.6 × 10^{−2}, whilst the least squares estimate yielded an MSE equal to 4.34 × 10^{−2}, so incorporating the constraint reduced the MSE by about 17% in this example.


Conclusions

In this paper we have presented a systematic approach for constructing surrogate functions in a wide range of

optimization problems. Our approach can be utilized for constructing surrogate functions for both the cost function

and the constraints, generalizing the popular EM and MM algorithms. Our approach is based on the utilization of

data augmentation and kernel functions, yielding simple optimization algorithms when the kernel can be expressed as a VMGM.

Acknowledgments

This work was partially supported by FONDECYT - Chile through grants No. 3140054 and 1181158. This work

was also partially supported by the Advanced Center for Electrical and Electronic Engineering (AC3E, Proyecto

Basal FB0008), Chile. The work of R. Orellana was partially supported by the PIIC program, scholarship 015/2018,

UTFSM.

References

[1] J. C. Agüero, W. Tang, J. I. Yuz, R. Delgado, and G. C. Goodwin. Dual time-frequency domain system

identification. Automatica, 48(12):3031–3041, 2012.

[2] N. Balakrishnan, V. Leiva, A. Sanhueza, and F. Vilca. Estimation in the Birnbaum-Saunders distribution

based on scale-mixture of normals and the EM algorithm. SORT, 33(2):171–192, 2009.

[3] O. Barndorff-Nielsen, J. Kent, and M. Sorensen. Normal variance-mean mixtures and z distributions. Int.

Stat. Review, 50(2):145–159, 1982.

[4] M. J. Beal and Z. Ghahramani. The variational Bayesian EM algorithm for incomplete data: with application to scoring graphical model structures. In Proc. of the 7th Valencia International Meeting, Valencia, Spain, 2003.

[5] R. Carvajal, J. C. Agüero, B. I. Godoy, and G. C. Goodwin. EM-based Maximum-Likelihood channel estimation in multicarrier systems with phase distortion. IEEE Trans. Vehicular Technol., 62(1):152–160, 2013.

[6] R. Carvajal, J. C. Agüero, B. I. Godoy, and D. Katselis. A MAP approach for ℓq-norm regularized sparse

parameter estimation using the EM algorithm. In Proc. of the 25th IEEE Int. Workshop on Mach. Learning

for Signal Process (MLSP 2015), Boston, USA, 2015.

[7] S. Chandrasekhar and G. Münch. On the Integral Equation Governing the Distribution of the True and the

Apparent Rotational Velocities of Stars. Astrophysical Journal, 111:142–156, January 1950.

[8] A. Christen, P. Escarate, M. Curé, D. F. Rial, and J. Cassetti. A method to deconvolve stellar rotational

velocities II. Astronomy & Astrophysics, 595(A50):1–8, 2016.

[9] M. Curé, D. F. Rial, A. Christen, and J. Cassetti. A method to deconvolve stellar rotational velocities.

Astronomy & Astrophysics, 565, May 2014.

[10] A. P. Dempster, N. M. Laird, and D. B. Rubin. Maximum likelihood from incomplete data via the EM

algorithm. Journal of the Royal Statistical Society, Series B, 39(1):1–38, 1977.


[11] A. J. Deutsch. Maxwellian distributions for stellar rotation. In Proc. of the IAU Colloquium on Stellar

Rotation, pages 207–218, Ohio, USA, September 1969.

[12] R. Durrett. Probability: Theory and examples. Cambridge University Press, 4th edition, 2010.

[13] M. A. T. Figueiredo. Adaptive sparseness for supervised learning. IEEE Trans. Pattern Anal. Mach. Intell.,

25(9):1150–1159, Sept 2003.

[14] M. A. T. Figueiredo, J. M. Bioucas-Dias, and R. D. Nowak. Majorization–Minimization algorithms for wavelet-based image restoration. IEEE Trans. Image Process., 16(12):2980–2991, 2007.

[15] R. Fletcher. Practical methods of optimization. John Wiley & Sons, Chichester, GB, 2nd edition, 1987.

[16] P. Garrigues and B. Olshausen. Group sparse coding with a Laplacian scale mixture prior. Advances in Neural

Information Processing Systems, 23:676–684, 2010.

[17] B. I. Godoy, J. C. Agüero, R. Carvajal, G. C. Goodwin, and J. I. Yuz. Identification of sparse FIR systems

using a general quantisation scheme. Int. J. Control, 87(4):874–886, 2014.

[18] B. I. Godoy, G. C. Goodwin, J. C. Agüero, D. Marelli, and T. Wigren. On identification of FIR systems

having quantized output data. Automatica, 47(9):1905–1915, 2011.

[19] A. Goldsmith. Wireless Communications. Cambridge University Press, New York, NY, USA, 2005.

[20] G. C. Goodwin and R. L. Payne. Dynamic system identification: experiment design and data analysis. Academic Press, New York, 1977.

[21] R. B. Gopaluni. A particle filter approach to identification of nonlinear processes under missing observations.

Can J. Chem. Eng., 86(6):1081–1092, 2008.

[22] M. Grant and S. Boyd. Graph implementations for nonsmooth convex programs. In V. Blondel, S. Boyd,

and H. Kimura, editors, Recent Advances in Learning and Control, Lecture Notes in Control and Information

Sciences, pages 95–110. Springer-Verlag Limited, 2008. http://stanford.edu/~boyd/graph_dcp.html.

[23] M. Grant and S. Boyd. CVX: Matlab software for disciplined convex programming, version 2.1. http://cvxr.com/cvx, March 2014.

[24] M. C. Grant and S. P. Boyd. Recent Advances in Learning and Control, chapter Graph Implementations for

Nonsmooth Convex Programs, pages 95–110. Springer, London, 2008.

[25] M. A. Hanson. Inequality constrained maximum likelihood estimation. Ann Inst Stat Math, 17(1):311–321,

1965.

[26] A. Hobolth and J. L. Jensen. Statistical inference in evolutionary models of DNA sequences via the EM

algorithm. Statistical Applications in Genetics and Molecular Biology, 4(1), 2005. Article 18.

[27] D.R. Hunter and K. Lange. A tutorial on MM algorithms. The American Statistician, 58(1):30–37, 2004.

[28] M.M. Hyder and K. Mahata. A robust algorithm for joint-sparse recovery. Signal Process. Letters, IEEE,

16(12):1091–1094, Dec 2009.


[29] A. F. Izmailov and M. V. Solodov. Newton-type methods for optimization and variational problems. Springer,

Cham, Switzerland, 2014.

[30] K. Lange, editor. Optimization. Springer, New York, USA, 2nd edition, 2013.

[31] K. Lange and J. S. Sinsheimer. Normal/independent distributions and their applications in robust regression.

J. Comput. Graphical Stat., 2(2):175–198, 1993.

[32] L. Ljung, G. C. Goodwin, and J. C. Agüero. Stochastic embedding revisited: A modern interpretation. In

Proc. of the 53rd IEEE Conf. Decision and Control, Los Angeles, CA, USA, 2014.

[33] K. Mahata and T. Söderström. Improved estimation performance using known linear constraints. Automatica, 40(8):1307–1318, 2004.

[34] B. R. Marks and G. P. Wright. A general inner approximation algorithm for nonconvex mathematical programs.

Operations Research, 26(4):681–683, 1978.

[35] G. J. McLachlan and T. Krishnan. The EM Algorithm and Extensions. Wiley, 1997.

[36] X. L. Meng. Thirty years of EM and much more. Statistica Sinica, 17(3):839–840, 2007.

[37] T. Park and G. Casella. The Bayesian Lasso. Journal of the American Statistical Association, 103(482):681–

686, 2008.

[38] E. Pollakis, R. L. G. Cavalcante, and S. Stańczak. Base station selection for energy efficient network operation

with the Majorization-Minimization algorithm. In Proc. 13th IEEE Int. Workshop on Signal Process. Adv.

Wireless Commun. (SPAWC 2012), Çeşme, Turkey, 2012.

[39] N. G. Polson and J. G. Scott. Data augmentation for non-Gaussian regression models using variance-mean

mixtures. Biometrika, 100:459–471, 2013.

[40] C.P. Robert and G. Casella. Monte Carlo statistical methods. Springer, New York, 1999.

[41] N. V. Sahinidis. BARON 14.3.1: Global Optimization of Mixed-Integer Nonlinear Programs, User’s Manual,

2014.

[42] T. B. Schön, A. Wills, and B. Ninness. System identification of nonlinear state-space models. Automatica,

47(1):39–49, 2011.

[43] T. Söderström and P. Stoica, editors. System Identification. Prentice-Hall, Inc., Upper Saddle River, NJ,

USA, 1988.

[44] N. Solaro. Random variate generation from multivariate exponential power distribution. Statistica & Applicazioni, 2(2):25–44, 2004.

[45] B. K. Sriperumbudur, D. A. Torres, and G. R. G. Lanckriet. A majorization-minimization approach to the

sparse generalized eigenvalue problem. Machine Learning, 85(1–2):3–39, 2011.

[46] M.A. Tanner. Tools for Statistical Inference: Observed Data and Data Augmentation Methods. Springer, 1991.

[47] M. Tawarmalani and N. V. Sahinidis. A polyhedral branch-and-cut approach to global optimization. Mathematical Programming, 103:225–249, 2005.


[48] F. Vaida. Parameter convergence for EM and MM algorithms. Statistica Sinica, 15(3):831–840, 2005.

[49] D. A. Van Dyk and X.-L. Meng. The art of data augmentation. Journal of Computational and Graphical

Statistics, 10(1):1–50, 2001.

[50] L. Wang, J. Zhang, and G. G. Yin. System identification using binary sensors. IEEE Trans. Autom. Control,

48(11):1892–1907, 2003.

[51] M. West. On scale mixtures of normal distributions. Biometrika, 74(3):646–648, 1987.

[52] S. Yang, J. K. Kim, and Z. Zhu. Parametric fractional imputation for mixed models with nonignorable missing

data. Statistics and Its Interface, 6(3):339–347, 2013.
