Econometrics Lecture Notes: Regression & Correlation Analysis


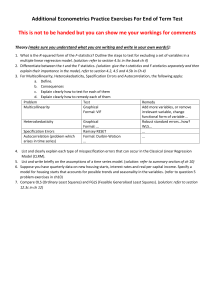

ECONOMETRICS MODULE 2

PROJECT REPORT STRUCTURE
- ABSTRACT: one paragraph that summarises what you did.
- INTRODUCTION: more detail on what you are planning to do and why you think it is important, i.e. exploring the relationship between CO2 and GDP in your country.
- CONCLUSION: summarise everything you have done.

CHAPTER 3
20/04/2022

REGRESSION
In regression we make a specific assumption about causality: we treat the dependent variable (y) and the independent variable(s) (the x's) very differently (asymmetrically). The y variable is assumed to be random or "stochastic" in some way, i.e. to have a probability distribution. The x variables are assumed to have fixed ("non-stochastic") values in repeated samples.

y (dependent variable) = α + βx (independent variable) + ε

y is the variable I want to explain (the regressand) and x (the regressor) is the variable I use to explain y. Example: more square metres, higher price.

CORRELATION
With correlation we make no specific assumption about causality. If we say y and x are correlated, we are treating y and x in a completely symmetrical way: I am not assuming a causal relationship. Correlation takes values between -1 and 1.

SCATTER DIAGRAM OF REGRESSION
The regression line is the line that best fits our observations. α and β describe the population, so their true values are impossible to find. I can only calculate them from the sample, which gives α̂ and β̂ (alpha hat and beta hat). These are estimates, so they carry some uncertainty, measured by the standard errors of α̂ and β̂. The lower the standard error, the more precise the estimate.

The error term is included because we cannot explain everything. The disturbance term can capture a number of features:
- We always leave out some determinants of y_t.
- There may be errors in the measurement of y_t that cannot be modelled.
- Random outside influences on y_t which we cannot model.
OLS minimises the (squared) vertical distances between the observations and the regression line.

PROJECT (steps in gretl)
1. TIME SERIES PLOT: highlight both variables → right-click → Time series plot → in separate small graphs.
2. CORRELATION: highlight both variables → right-click → correlation.
3. SCATTERPLOT: shows an inversion of the trend. At the beginning, to become richer you need to pollute more, but from the late 1900s getting richer means better technologies which emit less CO2.
4. File → Function packages → On server → colour XY plot package (colorx_y_ plot) → right-click → Install. Then copy the command and paste it in the gretl console.
5. RUN A REGRESSION: what is the relationship between pollution and wealth? Model → Ordinary Least Squares. Dependent variable: CO2. Independent variable: GDP.
   - Constant term: α̂, first column, first row. Next to it is the standard error of α̂ (it measures the uncertainty). α̂ / s.e.(α̂) = t-ratio.
   - Coefficient 1: β̂, first column, second row.
6. GRAPHS → Fitted, actual plot. In this graph the red points are y (all the observations we have), the blue line is ŷ, and y - ŷ = û (the residual).

With OLS you are trying to minimise the residuals û (more precisely, the sum of their squares) and so fit the best line. α̂ and β̂ come from the sample, a subset of the population. The standard errors tell me how precise the estimates of these parameters are. A standard error will be relatively low if all the points are very close to the regression line.

POPULATION REGRESSION FUNCTION (PRF): y = α + βx + u
SAMPLE REGRESSION FUNCTION (SRF): ŷ = α̂ + β̂x
Residual: û = y - ŷ
Lower s.e. means less uncertainty and better estimates.

LOGARITHMIC TRANSFORMATIONS
We usually employ OLS when the relationship is linear. One way of estimating linear models when things are not linear is to include logarithmic transformations.
1. Highlight the variables → Add → Logs of selected variables.
2. Highlight everything → right-click → Time series plot → separate graphs (4 graphs). This shows that the scale on the vertical axis is significantly reduced.
3. Scatter plot of the logs.
4. Compare model 1 (variables in levels) and model 2 (variables in logs).
5. R squared: in model 1, how much of the variation in annual CO2 emissions is explained by the variation in GDP per capita; in model 2, how much of the percentage variation in emissions is explained by the percentage variation in GDP per capita. From model 1 to model 2 R squared has increased a lot. When we take logs we are estimating a non-linear relationship in a linear way.

ESTIMATORS: the formulae used to calculate the coefficients.
ESTIMATES: the actual numerical values of the coefficients, as reported by gretl.

ASSUMPTIONS (and how to check them in gretl)
1. E(u) = 0 (the expected value of the error is zero). Tests → Normality of residual: I get the distribution of the residuals and their mean, which must be close to zero (two or three zeros after the decimal point are enough to call the number very close to zero).
2. The variance of the errors is constant: HOMOSKEDASTICITY.
3. The errors are statistically independent of one another: NO AUTOCORRELATION (yesterday's errors are not correlated with today's).
4. There is no relationship between the error and x: u is random and not related to any variable.
5. u follows a normal distribution.

If these assumptions hold, the OLS estimators are BLUE (Best Linear Unbiased Estimators). "Best" means minimum variance among linear unbiased estimators.
- Consistency: as the sample size increases, I get better estimates (they converge to the true values).
- Unbiasedness: on average my estimates are equal to the true value.
- Reliability and precision: given by the standard errors of α̂ and β̂, which should be as small as possible.

(2:17:23) Two graphs: a ball-shaped cloud of points on the left and points lying along a line on the right. In the left graph there are no data for low values of x, so α̂ could be problematic: I have no information there. Example: if x is square metres and y is the price of a flat, β is how much more I pay for an extra square metre and α measures the value of an apartment with zero square metres, which is impossible. When x equals zero, y equals α.

The larger the sample size T, the smaller the coefficient variances.
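The estimators and standard errors discussed above can be sketched in a few lines of Python. This is only an illustration, assuming a single regressor and the textbook formulae for the simple regression y = α + βx + u; the function name `ols_simple` and the sample numbers are made up for the example and do not come from gretl or from the project data.

```python
import math

def ols_simple(x, y):
    """OLS for y = alpha + beta * x + u with one regressor (illustrative sketch)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    # Estimators: the formulae that turn the sample into coefficient estimates.
    beta_hat = sxy / sxx
    alpha_hat = y_bar - beta_hat * x_bar

    # Residuals u_hat = y - y_hat.
    residuals = [yi - (alpha_hat + beta_hat * xi) for xi, yi in zip(x, y)]

    # Residual variance with n - 2 degrees of freedom (two coefficients estimated).
    s2 = sum(u ** 2 for u in residuals) / (n - 2)

    # Standard errors: both shrink as the sample grows and as x spreads out.
    se_beta = math.sqrt(s2 / sxx)
    se_alpha = math.sqrt(s2 * sum(xi ** 2 for xi in x) / (n * sxx))

    return {
        "alpha_hat": alpha_hat,
        "beta_hat": beta_hat,
        "se_alpha": se_alpha,
        "se_beta": se_beta,
        "t_alpha": alpha_hat / se_alpha,  # t-ratio for H0: alpha = 0
        "t_beta": beta_hat / se_beta,     # t-ratio for H0: beta = 0
        "residuals": residuals,
    }

# Hypothetical data, for illustration only.
result = ols_simple([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1])
print(result["alpha_hat"], result["beta_hat"], result["t_beta"])
```

The residuals always sum to zero here because the model includes a constant term, which is the same point made later for the assumption E(u) = 0.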
With a larger sample the estimates are more precise and the standard errors fall significantly.

T STATISTIC: α̂ / s.e.(α̂). The lower the standard error, the more precise the estimate.

We need hypothesis testing to check how reliable the estimates are.

TEST OF SIGNIFICANCE APPROACH
t-ratio: the higher it is in absolute terms, the more significant the variable. If the p-value is very low, near zero, I reject the null that zero is a plausible value for α. In other words, α is statistically significant.

CONFIDENCE INTERVALS APPROACH
To create confidence intervals in gretl go to Analysis → Confidence intervals for coefficients. You get the lower and upper bound for each coefficient, with the point estimate in the middle.

21/04/2022
Two approaches: the test of significance (test statistic and p-value) or, alternatively, confidence intervals.

TEST OF SIGNIFICANCE APPROACH
Test statistic: (β̂ - β) / SE(β̂), where β is the value under the null.
Level of significance: 1%, 5%, 10%.
H0: β = 0. This is an important statement because we are evaluating whether x is significant in explaining y. When a coefficient can plausibly take the value 0, the variable (x) attached to that coefficient is not helping me explain y.

NORMAL DISTRIBUTION
Bell shaped. The standard normal has mean 0 and variance 1.

T DISTRIBUTION AND NORMAL DISTRIBUTION
Both are symmetric and centred around 0, but the t distribution has another parameter, the degrees of freedom, and we employ it when we have a relatively small sample. The t distribution has fatter tails: extreme values are more frequent than under the normal distribution. There is an infinite number of t distributions, one for each value of the DEGREES OF FREEDOM = number of observations less the number of coefficients estimated (n - k). Critical values from the t distribution are always larger in absolute value than those from the normal.

THE CONFIDENCE INTERVAL
Contains the plausible values of a coefficient/parameter, i.e. the values we would not reject at the chosen level of significance. It is reported as a lower bound, an upper bound and the point estimate in the centre. Usually two sided.
How to build them: calculate α̂, β̂ and their standard errors, choose a level of significance and use the formula

(β̂ - t_crit × SE(β̂), β̂ + t_crit × SE(β̂))

where t_crit is the critical value read off the t distribution; its value depends on the degrees of freedom. (See 00:23.)

CONFIDENCE INTERVAL AND DIFFERENT TESTS
H0: β = 1 → reject. H0: β = 0 → do not reject, because 0 lies inside the confidence interval.
For the project, do both the test of significance and the confidence interval.

ERRORS IN HYPOTHESIS TESTS
1. Type I error: rejecting a null that is true.
2. Type II error: not rejecting a null that is false.
The best way to reduce both type I and type II errors is to increase the sample size. You need comparable data if some observations are missing.

T RATIO
Tells whether a coefficient is significant or not. The constant term can be insignificant when we have no data about what is happening around the intercept.
Example: if the t-ratio in absolute terms is greater than 1.96 we reject H0 (two-sided test at the 5% level, large sample). (Skip the other examples.)

THE EXACT SIGNIFICANCE LEVEL OR P-VALUE
The p-value gives us the plausibility of the null hypothesis: the lower the p-value, the less plausible the null.

CHAPTER 4

MULTIPLE REGRESSION
When we have more than one variable on the right-hand side. We can add more variables if we find something that helps us explain CO2 emissions (?).

F STATISTIC
Used when you want to test several hypotheses (several restrictions) simultaneously.

NON-LINEAR MODEL
May be needed because the relationship is non-linear. How to do this in gretl: highlight GDP per capita (no logs) → Add → Squares of selected variables → Model → Ordinary Least Squares, and put squared GDP among the regressors.

The relationship we are modelling is non-linear: as the country becomes richer and richer we see CO2 emissions declining. There are roughly 3 phases:
1. You need to pollute to become richer.
2. Pollution is constant.
3. You get richer and emissions decline.
This is the ENVIRONMENTAL KUZNETS CURVE. If your analysis is about a rich country you will probably see it, while for a poor country you see just phase 1 and maybe phase 2.

F test: tests whether the 2 coefficients (GDP per capita and sq_GDP_pc) are simultaneously zero.
Null: b1 and b2 are simultaneously zero. In gretl: Tests → Omit variables → highlight the 2 coefficients you want to test → choose Wald test or sequential testing → look at the p-value.

F DISTRIBUTION
Also depends on degrees of freedom. The F distribution is related to the t distribution (the square of a t with n - k degrees of freedom is an F(1, n - k)), while the chi-square distribution is related to the normal distribution (it is a sum of squared standard normals). Can I use an F test for a single hypothesis? Yes: any test that is done as a t-test can be done as an F-test too.

R^2
How well the model fits the data. It is the square of the correlation between y and ŷ, and equals the explained sum of squares divided by the total sum of squares. Problem: R^2 keeps going up when you increase the number of variables. This is why we use adjusted R^2 when there are extra variables. If we want to compare 2 models, we have to use adjusted R^2.
Compare the adjusted R^2 of the linear, logarithmic and non-linear models to see which model is better.

AKAIKE CRITERION
The smaller the value, the better the model.

DUMMY VARIABLE
Takes only the values 1 and 0. It gives additional explanatory power when something extreme, like a war, is happening. Then look at its t-ratio (?).

QUANTILE REGRESSION
Standard regression approaches effectively model the (conditional) mean of the dependent variable. We can calculate from the fitted regression line the value that y takes for any value of the explanatory variables, but this is essentially an extrapolation of the behaviour of the relationship between x and y at the mean to the remainder of the data. This is a suboptimal approach because OLS measures the average response. For example, OLS cannot help if you focus on what happens when y is relatively low or high.

There might also be a non-linear relationship between x and y. Estimating a standard linear regression model may then lead to seriously misleading estimates of this relationship, as it will 'average' the positive and negative effects. It would be possible to include non-linear (i.e. polynomial) terms in the regression model (for example squared, cubic, ... terms), but quantile regressions represent a more natural and flexible way to capture the complexities, by estimating models for the conditional quantile functions.
- Quantile regressions can be conducted in both time-series and cross-sectional contexts.
- It is usually assumed that the dependent variable, often called the response variable, is independently distributed and homoscedastic.
- Quantile regressions are more robust to outliers and non-normality than OLS regressions.

HOW TO IDENTIFY OUTLIERS IN THE PROJECT
Graphs → Residual plot → Against time: I can identify big drops or big jumps. How can I take outliers into account? I can create 2 dummy variables, one for each year: Add → Observation range dummy (one dummy for each year). Then Model → Ordinary Least Squares, include the 2 dummies as regressors, and the output shows whether or not they are statistically significant. If they are not (no asterisks), we did not identify the outliers correctly.

QUANTILE REGRESSION P.2
• Quantile regression is a non-parametric technique since no distributional assumptions are required to optimally estimate the parameters.
• The notation and approaches commonly used in quantile regression modelling are different from those that we are familiar with in financial econometrics.
• Increased interest in modelling the 'tail behaviour' of series has spurred applications of quantile regression in finance. In our case: what happens when CO2 emissions per capita are relatively low or relatively high.
• A common use of the technique is value-at-risk modelling. This seems natural given that the models are based on estimating the quantile of a distribution of possible losses.
• Quantiles, denoted τ, refer to the position where an observation falls within an ordered series for y, for example:
  - The median is the observation in the very middle.
  - The (lower) tenth percentile is the value that places 10% of observations below it (and therefore 90% of observations above).
• More precisely, we can define the τ-th quantile, Q(τ), of a random variable y having cumulative distribution F(y) as
  Q(τ) = inf { y : F(y) ≥ τ }
  where inf refers to the infimum, or the 'greatest lower bound', which is the smallest value of y satisfying the inequality.
• By definition, τ must lie between zero and one.
• Quantile regressions effectively model the entire conditional distribution of y given the explanatory variables.
• The OLS estimator finds the mean value by minimising the RSS, whereas minimising the sum of the absolute values of the residuals yields the median.
• The absolute value function is symmetrical, so the median always has the same number of data points above it as below it.
• If the absolute residuals are weighted differently depending on whether they are positive or negative, we can calculate the quantiles of the distribution.
• To estimate the τ-th quantile, we set the weight on positive residuals to τ and that on negative residuals to 1 - τ.
• We can select the quantiles of interest; common choices would be 0.05, 0.1, 0.25, 0.5, 0.75, 0.9, 0.95.
• The fit is not good for values of τ too close to its limits of 0 and 1.
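The weighting idea in the bullets above can be checked numerically. The following is a minimal Python sketch, assuming the simplest case with no explanatory variables (so we are estimating an unconditional quantile); the function names `check_loss` and `sample_quantile` and the data are hypothetical, chosen only for the illustration.

```python
def check_loss(q, ys, tau):
    """Sum of asymmetrically weighted absolute deviations of ys from q.

    Positive deviations (y above q) get weight tau,
    negative deviations (y below q) get weight 1 - tau.
    """
    total = 0.0
    for y in ys:
        u = y - q
        total += tau * u if u >= 0 else (tau - 1) * u
    return total

def sample_quantile(ys, tau):
    """Return the observation that minimises the check loss for a given tau."""
    candidates = sorted(ys)
    return min(candidates, key=lambda q: check_loss(q, ys, tau))

# Hypothetical observations, for illustration only.
ys = [3, 1, 4, 1, 5, 9, 2, 6, 5]
print(sample_quantile(ys, 0.5))  # the median of the sample
print(sample_quantile(ys, 0.9))  # a relatively high value of y
print(sample_quantile(ys, 0.1))  # a relatively low value of y
```

Every observation enters the loss for every τ; only the weights change. That is why quantile regression does not partition the data.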
HOW NOT TO DO IT
• As an alternative to quantile regression, it would be tempting to think of partitioning the data and running separate regressions on each part.
• However, this process, tantamount to truncating the dependent variable, would be wholly inappropriate.
• For example, dropping the top 90% of the observations on y and the corresponding data points for the xs, and running a regression on the remainder, could lead to potentially severe sample selection biases.
• In fact, quantile regression does not partition the data: all observations are used in the estimation of the parameters for every quantile.

(2:27) FOR THE PROJECT
Model → Robust estimation → Quantile regression. Get rid of the dummies; the dependent variable is CO2 emissions per capita. Desired quantiles: 0.05 / 0.25 / 0.50 / 0.75 / 0.95 (choose all). Tick "Compute confidence intervals": in this way we can see whether a coefficient is statistically significant or not. Click OK.

For GDP_pc I get 5 coefficients: the first one is the response when CO2 is relatively low, the last one when CO2 is relatively high, and the third of the five when CO2 is in the middle.

To see what happens, go to Graphs → tau sequence → GDP_pc. This graph compares OLS (blue; the dotted lines above and below are the confidence interval for OLS) with the coefficients from the quantile regression (black). Each gdp_pc coefficient corresponds to a point on the QR line of the graph.

OLS tells us what is happening in the middle: it gives the average response. Quantile regression tells us whether there is a significant asymmetry when CO2 emissions are relatively high or relatively low. For the UK the difference between QR and OLS is relatively limited. We are comparing OLS, which assumes an average, symmetric response, with QR, which looks at different segments of the distribution.
Since here the blue line is very close to the black line, the average response seems to be a good approximation of what is happening in the different segments of the distribution (i.e. when CO2 is relatively low or high). The blue line is the average response, which assumes symmetry, while the black line allows for asymmetry. QR says that the response of CO2 emissions to a change in GDP is not a single number, as OLS says, but a function of whether CO2 emissions are relatively low or relatively high (a different response in each case).

Worry about outliers when you are using OLS (and its R^2), not when using QR: because quantile regression looks at the different segments of the distribution, it can take outliers into account more efficiently. An outlier mostly affects what is happening in its own segment.

Number of quantiles: you get different responses depending on the number of quantiles you choose.

22/04/2022
PROJECT + PPP: COLOR-XY installed.

QUANTILE REGRESSION (RECAP)
- QR can examine the potential asymmetry in the relationship between 2 variables.
- We need quantile regression because it looks at the entire conditional distribution, while OLS focuses only on the mean (the average response). OLS does not tell us what happens when values are relatively low or high.
- OLS: average response, symmetry/linearity (one β).
- QR: one β for each quantile. It is asymmetric and looks at the entire distribution through the conditional quantile functions.
- The dependent variable is usually called the response variable, and we assume it is independently distributed and homoscedastic.
- The advantage is that QR is robust to outliers, so we do not need dummy variables.
- It is a non-parametric technique, and the notation is different.
- Tail behaviour = relatively high and relatively low values of the response variable (Y). QR is a very powerful tool in finance or environmental analysis.
- Quantiles are denoted by τ (tau): τ = 0.10 refers to the lowest 10% of observations (relatively low values) and τ = 0.90 refers to relatively high values. Remember: the observations (Y) are ordered, and τ lies between 0 and 1.
- Quantile regression can model the entire conditional distribution of Y given the explanatory variables (CO2 per capita given GDP).

USE FOR THE PROJECT (to justify why we are using QR): we need quantile regression in order to investigate whether there is a difference between relatively high and relatively low CO2 emissions.

WRONG ALTERNATIVE: running separate regressions for relatively high and relatively low values of y. Quantile regression gives different WEIGHTS to different observations, but it does not partition the data.

CASE STUDY (0:33, same graph as in the previous lesson)
They compared OLS with QR. If you bootstrap the standard errors you get more reliable results (for the project), and we can also build a table like the one above with different quantiles. Q(0.5) is the median, which you can compare with OLS.

MODELLING IN GRETL (00:37; 0:43 important)
Model 1: quantile regression. Regressors: const, GDP_pc. Tick "Robust standard errors/intervals" and "Compute confidence intervals", with 5 quantiles. Then Graphs → tau sequence → GDP_pc.
Model 2: the same quantile regression but in logs, so the dependent variable is the log of annual CO2 and the regressors are const and the log of GDP. Tick confidence intervals and robust standard errors, then Graphs → tau sequence → gdp_pc. Check for differences with model 1.

EXPLANATION
OLS results (log model): the dependent variable is annual CO2 and the independent variable is GDP per capita. If GDP per capita increases by 1%, CO2 increases by 0.49%. This is the AVERAGE RESPONSE.
QR results: a 0.41% increase in CO2 for a 1% increase in GDP.
When you are relatively rich and CO2 emissions are consequently relatively high, we have an asymmetric response: CO2 emissions increase less when you are rich and more when you are poor (I can see this as the quantiles increase). ASYMMETRIC RESPONSE. To compare OLS and QR I look at the median response.

PROJECT (1:07): PREPARING THE DATA IN EXCEL
Download the data, filter, use underscores in the names so that gretl can read them, delete all data except 3 columns with year, CO2 and GDP, then open the file in gretl.

CHAPTER 5

VIOLATION OF THE ASSUMPTIONS OF THE CLRM
(*CLRM: tests you need to do for all your models.)

Tests for violations:
1. E(u) = 0 (the average value of u is zero): normality of residuals test
2. Homoskedasticity: White test
3. No autocorrelation: autocorrelation test
4. X matrix is non-stochastic (no relationship between u and x)
5. u follows a normal distribution: normality of residuals test

Consequences of violations: in general, we could encounter any combination of 3 problems:
- the coefficient estimates are wrong
- the associated standard errors are wrong
- the distribution that we assumed for the test statistics will be inappropriate

Solutions:
- change the model so that the assumptions are no longer violated
- work around the problem, using alternative techniques which are still valid

STATISTICAL DISTRIBUTIONS FOR DIAGNOSTIC TESTS
• Often, an F-version and a χ²-version of the test are available.
• The F-test version involves estimating a restricted and an unrestricted version of a test regression and comparing the RSS. (F needs degrees of freedom.)
• The χ²-version is sometimes called an "LM" test, and has only one degrees-of-freedom parameter: the number of restrictions being tested, m.
• Asymptotically, the 2 tests are equivalent since the χ² is a special case of the F-distribution: χ²(m)/m → F(m, T - k) as T - k → ∞.
• For small samples, the F-version is preferable.
Degrees of freedom = number of observations - number of estimated coefficients. Chi-square (LM test, one degrees-of-freedom parameter): for relatively big samples. F test (uses the degrees of freedom): focus on this one because we have a relatively small sample.

ASSUMPTION 1: the mean of the disturbances is zero.
The mean of the residuals will always be zero provided that there is a constant term in the regression, so the assumption is satisfied when we include a constant.
DIAGNOSTIC TEST NUMBER 1: normality of residuals. If the p-value is (close to) zero, the residuals are not normally distributed.

ASSUMPTION 2: HOMOSKEDASTICITY (the variance of the errors is constant).
To test this we use the White test: we start with a linear model, take the residuals, run the auxiliary regression, obtain R^2 from it and use T × R^2 as the test statistic, which is compared with a chi-square critical value.
DIAGNOSTIC TEST NUMBER 2: White test, from the logarithmic model or from the model in levels (Edit, then add the logarithmic variables). (1:29)

If I have heteroskedasticity, the variance of the errors is not constant. The standard errors are inappropriate, so I cannot rely on t-tests, p-values, etc., and I need to update the standard errors to take heteroskedasticity into account. We can deal with heteroskedasticity by:
1. Taking logs.
2. Using standard errors that allow for heteroskedasticity: Edit → Modify model → tick Robust. You will see that the standard errors increase. If I run the White test again the result will be the same, because heteroskedasticity affects only the standard errors and not the coefficients. We get wider standard errors and therefore wider CONFIDENCE INTERVALS, so we are MORE CONSERVATIVE.

CREATE A LAGGED VARIABLE: Y(t-1). ΔY_t = Y_t - Y_(t-1) is usually the growth rate of the variable.

ASSUMPTION 3: NO AUTOCORRELATION
Your model is good if there are no patterns in the residuals. Negative or positive autocorrelation shows up as a pattern; no autocorrelation = everything is random = your model is good.
To test for autocorrelation we use the DURBIN-WATSON TEST (first-order autocorrelation only, so just one lag: t and t-1). If DW is near 2 there is little evidence of autocorrelation; near 0 indicates positive autocorrelation; near 4 indicates negative autocorrelation. There are also regions where the test is INCONCLUSIVE.
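To see why values near 0, 2 and 4 are read this way, the Durbin-Watson statistic can be computed by hand. The sketch below assumes the standard definition (sum of squared changes in the residuals divided by the sum of squared residuals); the function name `durbin_watson` and the residual series are invented for the illustration.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for first-order autocorrelation.

    DW = sum_{t=2..T} (u_t - u_{t-1})^2 / sum_{t=1..T} u_t^2,
    which is approximately 2 * (1 - rho), where rho is the first-order
    autocorrelation of the residuals.
    """
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(u ** 2 for u in residuals)
    return numerator / denominator

# Hypothetical residuals: long runs of the same sign (positive autocorrelation).
persistent = [1, 1, 1, -1, -1, -1]
# Hypothetical residuals: the sign flips every period (negative autocorrelation).
alternating = [1, -1, 1, -1, 1, -1]

print(durbin_watson(persistent))   # well below 2
print(durbin_watson(alternating))  # well above 2
```

The statistic alone does not give a decision in the inconclusive regions, which is why the notes go on to use the p-value reported by gretl.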
The DW test statistic is reported directly in the OLS output (you have to check it for all your models), but we also need the DW p-value: Tests → Durbin-Watson p-value shows the p-values against negative and against positive autocorrelation. H0: there is no autocorrelation. (1:48)
In the professor's example: against the first alternative (H1.1, positive autocorrelation) I reject the null; against the second (H1.2, negative autocorrelation) I do not reject the null. Conclusion: we have evidence of positive autocorrelation.

Alternatively: the BREUSCH-GODFREY TEST, which is a test for higher-order autocorrelation. In gretl: Tests → Autocorrelation, 2 lags. If the p-value is near zero we reject the null of no autocorrelation.

CONSEQUENCES OF IGNORING AUTOCORRELATION IF IT IS PRESENT
• The coefficient estimates derived using OLS are still unbiased, but they are inefficient, i.e. they are not BLUE, even in large samples.
• Thus, if the standard error estimates are inappropriate, there exists the possibility that we could make the wrong inferences.
• R^2 is likely to be inflated relative to its "correct" value for positively correlated residuals.

REMEDIES
• If the form of the autocorrelation is known, we could use a GLS procedure, i.e. an approach that allows for autocorrelated residuals, e.g. Cochrane-Orcutt.
• But such procedures that "correct" for autocorrelation require assumptions about the form of the autocorrelation.
• If these assumptions are invalid, the cure would be more dangerous than the disease! See Hendry and Mizon (1978).
• However, it is unlikely that the form of the autocorrelation is known, and a more "modern" view is that residual autocorrelation presents an opportunity to modify the regression: we include Y_(t-1) (the lagged value of the dependent variable Y) in the model.

In gretl: Edit → Modify model → Lags → 1 lag of the dependent variable → OK. Use robust standard errors if the White test showed heteroskedasticity. Rerun the model with the lagged value.
Redo the tests for normality, heteroskedasticity and autocorrelation; all the results appear under the model output. If autocorrelation is still present, I have to modify the model again, for example by inserting the dummies. In the professor's case the 2 dummies solve the problem. Dummies make all your diagnostics behave better and increase your adjusted R^2 significantly.

Explanation of the coefficient of a DUMMY: for example, in 1921 the coefficient is -0.4013. So only in that year we have a significant reduction in annual CO2 emissions of 0.4013 because of war.

GRAPHS AND ANALYSIS
- Analysis → Influential observations: you can see if some observations are more influential than others.
- (2:30) BOOTSTRAP: Analysis → Bootstrap → choose a coefficient, and this bootstraps its standard error. I go with the wild bootstrap, which gives me the empirical distribution of the coefficient.

WHY DO WE WANT TO INCLUDE LAGS?
- To get rid of autocorrelation
- Inertia of the dependent variable
- Over-reaction
- Measuring time series as overlapping moving averages

However, other problems with the regression could cause the null hypothesis of no autocorrelation to be rejected:
- Omission of relevant variables, which are themselves autocorrelated.
- A "misspecification" error from using an inappropriate functional form.
- Autocorrelation resulting from unparameterised seasonality.

(We use the dummies only if nothing we tried before corrects the autocorrelation. I can see the candidates from the graph, 1 dummy per point; you can check under Analysis and residuals, and the dummy shows what happened in each year.)

The next step is to estimate the model in FIRST DIFFERENCES.

PROJECT REMINDERS
For each model (linear, non-linear, log) we need the full interpretation: statistical significance (t-test, p-value), outliers, time series plots, etc.
In the introduction you can discuss why you expect CO2 and GDP to be related and what you know from theory about their relationship.
26/04/2022

REMEDIES FOR AUTOCORRELATION
Autocorrelation: correlation between 2 subsequent points in time. It creates problems for the standard errors (and hence for inference). It is also an opportunity to MODIFY THE REGRESSION: add lags to the right-hand side of the equation. Sometimes this change is enough, sometimes not.

Sequence:
1. Test for heteroskedasticity (White test); no need to test again.
2. Test for autocorrelation (Breusch-Godfrey test).
3. Update the standard errors.
4. Add the lagged variable of Y.
5. Retest for autocorrelation, to see whether it has been taken into account.
(Listen to this part of the recording again, because the sequence above does not quite add up.)

DYNAMIC MODELS
Dynamic because we have the lagged value of the dependent variable on the right-hand side.
- Y_t: dependent variable, CO2 emissions per capita
- X_t: independent variable, GDP per capita
- Y_(t-1): lagged value of CO2 emissions per capita
Why do we have the lagged value of the dependent variable? Because we introduce it to remedy autocorrelation. If you cannot resolve the autocorrelation this way, use dummies for the outliers and check whether the coefficients on the dummies are statistically significant. You can see the outliers from the time series plot.

WHY INTRODUCE LAGS?
Lags measure inertia, i.e. how much the past (past observations) influences the present (current observations). b2 (the coefficient of the lagged variable) measures this persistence.

Possible reasons why we may have autocorrelation:
- omission of relevant variables
- misspecification from using an inappropriate functional form (we use logs of the variables for this)
- unparameterised seasonality (remove it by deseasonalising the data)
- outliers

THEODOR'S EXAMPLE (rewatch at about 16:28, see the outliers)
He also added 2 lags to the model: in the OLS dialog he clicked on lags. Autocorrelation improved. In his case the Breusch-Godfrey test was fine even with second-order lags (they were significant).
So you need to look at the outliers and add the lags of the outliers (t-2).

MODELS IN FIRST DIFFERENCE FORM
From LEVELS (Y_t, X_t) to FIRST DIFFERENCES (ΔY_t, ΔX_t), where ΔY_t = Y_t - Y_(t-1). If Y and X are in LOGS, then ΔY and ΔX measure the GROWTH RATES of Y and X. So a model in FIRST DIFFERENCES is a model which tries to explain the GROWTH RATE of CO2 emissions (for example).

HOW TO DO THAT IN GRETL (00:28)
1. Select the two variables → Add → Log differences of selected variables, OR select the log variables → Add → First differences of selected variables. Same result.
2. Select the 4 variables (annual CO2 emissions and GDP per capita in levels, plus their log differences which I just created) → Time series plot → in separate small graphs. You can see the levels of the variables, the growth rate of GDP per capita and the growth rate of CO2.
3. Starting again from the 4 selected variables (Y, X, ΔY, ΔX): View → Summary statistics → show full statistics.
4. Select just the growth rate variables (first differences) → right-click → XY scatterplot. You can see the relation between the growth rates and identify the outliers. (Note that the outliers identified so far in levels are not necessarily the same as the outliers of the first-difference model.)
5. Run an OLS regression with robust standard errors of the growth rate of CO2 emissions on the growth rate of GDP (= first differences). From that you can see whether the growth rate of GDP is statistically significant.
6. File → Function packages → On server → Confidence band plot. Then go back to the model above: Graphs → Confidence band plot. It plots the regression line together with the confidence interval; you need to define the confidence level (he uses 0.95).
7. Test for normality.
8. Test for autocorrelation.
9. The Durbin-Watson p-value tells me there is first-order autocorrelation.
10. Test for heteroskedasticity.

PROBLEM: MODELS IN FIRST DIFFERENCES DO NOT HAVE A LONG-RUN SOLUTION
ΔY_t = Y_t - Y_(t-1), and usually all the variables are in logs. In the long run Y_t will equal Y_(t-1): Y is the equilibrium value, so ΔY_t will be equal to zero. So, for the long run (we no longer write time subscripts, just X and Y):
1. ΔY = 0
2. ΔX = 0
3. X_2(t-1) = X_2
4. Y_(t-1) = Y
5. u_t = 0
6. Result, for a model that also contains the lagged levels: 0 = b1 + b3 X_2 + b4 Y
FIRST DIFFERENCES tell how the model behaves in the SHORT RUN. LEVELS tell how the model behaves in the LONG RUN.

PROBLEMS WITH ADDING LAGGED REGRESSORS TO "CURE" AUTOCORRELATION
• Inclusion of lagged values of the dependent variable violates the assumption that the RHS variables are non-stochastic. (This is the price we need to pay.)
• What does an equation with a large number of lags actually mean? A model with lots of lags is difficult to interpret.
• Note that if there is still autocorrelation in the residuals of a model including lags, then the OLS estimators will not even be consistent.

MULTICOLLINEARITY
A problem that occurs when the explanatory variables are very highly correlated with each other. If 2 right-hand-side variables are correlated, we have multicollinearity. In our case all we have on the right-hand side are dummies and lagged variables, but if we add other variables that could explain CO2 emissions we may get multicollinearity. For example, we could find data on industrial production, which could also increase CO2 emissions: industrial production and GDP are correlated, so there we should check for multicollinearity. There is no formal test for multicollinearity, so we just look at the correlation matrix of the right-hand-side variables. If 2 or more variables are very highly correlated, we have to drop one of them or, even better, TRANSFORM the model.
• Perfect multicollinearity: cannot estimate all the coefficients
  - e.g. suppose $x_3 = 2x_2$ and the model is $y_t = \beta_1 + \beta_2 x_{2t} + \beta_3 x_{3t} + \beta_4 x_{4t} + u_t$
• Problems if Near Multicollinearity is Present but Ignored
  - $R^2$ will be high but the individual coefficients will have high standard errors.
  - The regression becomes very sensitive to small changes in the specification.
  - Thus confidence intervals for the parameters will be very wide because there are high standard errors, and significance tests might therefore give inappropriate conclusions.
We identify the problem by looking at the correlation matrix, and we solve it by dropping a variable or transforming the model.
• “Traditional” approaches, such as ridge regression or principal components. But these usually bring more problems than they solve.
• Some econometricians argue that if the model is otherwise OK, just ignore it.
• The easiest ways to “cure” the problems are
  - drop one of the collinear variables
  - transform the highly correlated variables into a ratio
  - go out and collect more data, e.g. a longer run of data, or switch to a higher frequency

(1:19) ADOPTING THE WRONG FUNCTIONAL FORM - RAMSEY’S RESET TEST
• We have previously assumed that the appropriate functional form is linear.
• This may not always be true.
• We can formally test this using Ramsey’s RESET test, which is a general test for mis-specification of functional form.
We have seen 4 functional forms so far: linear, logarithmic, first difference and non-linear. Which of these is the most realistic for our data? We run the Ramsey RESET test on each one to see which are rejected.
Essentially the method works by adding higher order terms of the fitted values (e.g. $\hat{y}_t^2$, $\hat{y}_t^3$ etc.) into an auxiliary regression. Regress $\hat{u}_t$ on powers of the fitted values:
$\hat{u}_t = \beta_0 + \beta_1 \hat{y}_t^2 + \beta_2 \hat{y}_t^3 + \dots + \beta_{p-1} \hat{y}_t^p + v_t$
So we have the residuals on the left-hand side, and on the right-hand side y hat squared, y hat to the power 3 and so on. Obtain $R^2$ from this regression. The test statistic is given by $TR^2$ and is distributed as a $\chi^2(p-1)$.
• So if the value of the test statistic is greater than the $\chi^2(p-1)$ critical value (chi-squared), then reject the null hypothesis that the functional form was correct.

ON GRETL: OLS with CO2 emissions on the left and GDP on the right. I am going to test the linear OLS model for functional form misspecification using Ramsey's RESET: Tests → Ramsey's RESET → keep the default option (squares and cubes) → OK. The output also shows the auxiliary regression for the RESET test, with (y hat ^2) and (y hat ^3). In this case the p-value of the Ramsey test is almost zero. The null hypothesis is that the functional form is correct, so we reject it: this is not a good model. There is no guarantee that I will be able to find a good model, but we try each one.
(1:30) Auxiliary regression output: it includes (y hat ^2) and (y hat ^3); the p-value is highlighted in yellow.
• The RESET test gives us no guide as to what a better specification might be, so we have to experiment with different specifications.
• One possible cause of rejection of the test is if the true model is non-linear:
$y_t = \beta_1 + \beta_2 x_{2t} + \beta_3 x_{2t}^2 + \beta_4 x_{2t}^3 + u_t$
In this case the remedy is obvious.
• Another possibility is to transform the data into logarithms. This will linearise many previously multiplicative models into additive ones:
$y_t = A x_t^{\beta} e^{u_t} \iff \ln y_t = \alpha + \beta \ln x_t + u_t$, with $\alpha = \ln A$

TESTING FOR NORMALITY
• Why did we need to assume normality for hypothesis testing?
Testing for Departures from Normality
• The Bera-Jarque test statistic follows a chi-squared distribution with 2 degrees of freedom under the null of normality.
• A normal distribution is not skewed and is defined to have a coefficient of kurtosis of 3.
• The kurtosis of the normal distribution is 3, so its excess kurtosis (b2 - 3) is zero.
• Skewness and kurtosis are the (standardised) third and fourth moments of a distribution. The first moment of a distribution is the expectation and the second moment is the variance.
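The skewness and kurtosis described above can be combined into the Bera-Jarque statistic by hand. The sketch below assumes the usual textbook form W = T[b1^2/6 + (b2 - 3)^2/24], which is not written out in these notes, and uses a made-up list of residuals; gretl's own normality test may report a different statistic.

```python
import math

def jarque_bera(residuals):
    """Return (skewness, kurtosis, JB statistic, p-value) for a list of residuals."""
    n = len(residuals)
    mean = sum(residuals) / n
    # Central moments, dividing by n
    m2 = sum((r - mean) ** 2 for r in residuals) / n
    m3 = sum((r - mean) ** 3 for r in residuals) / n
    m4 = sum((r - mean) ** 4 for r in residuals) / n
    skew = m3 / m2 ** 1.5          # standardised third moment (b1)
    kurt = m4 / m2 ** 2            # standardised fourth moment (b2), 3 for a normal
    jb = n * (skew ** 2 / 6 + (kurt - 3) ** 2 / 24)
    # For a chi-squared with 2 degrees of freedom, P(X > x) = exp(-x / 2)
    p_value = math.exp(-jb / 2)
    return skew, kurt, jb, p_value

# Hypothetical residuals, for illustration only
residuals = [-2.0, -1.0, 0.0, 1.0, 2.0]
skew, kurt, jb, p_value = jarque_bera(residuals)
print(skew, kurt, jb, p_value)
```

A small p-value rejects the null that the errors are normally distributed. With very few observations, as in this toy list, the chi-squared approximation is poor, so the number is only illustrative.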
NORMAL DISTRIBUTION: symmetric VS SKEWED DISTRIBUTION: asymmetric
LEPTOKURTIC: more peaked at the mean (higher than a Normal), with fatter tails

TEST OF NORMALITY IN GRETL
Tests → Normality of residuals → see the p-value. H0: THE ERROR IS NORMALLY DISTRIBUTED. IF YOU REJECT THE NULL: NON-NORMALITY IS NOT A BIG PROBLEM, AND DUMMIES CAN IMPROVE THE MODEL EVEN HERE.

WHAT DO WE DO WITH NON-NORMALITY?
• It is not obvious what we should do!
• We could use a method which does not assume normality, but this is difficult, and what are its properties?
• It is often the case that one or two very extreme residuals cause us to reject the normality assumption.
• An alternative is to use dummy variables, e.g. say we estimate a monthly model of asset returns from 1980-1990, plot the residuals, and find a particularly large outlier for October 1987.
OF COURSE WE NEED A THEORETICAL REASON FOR ADDING A DUMMY VARIABLE.

Omission of an Important Variable
• Consequence: the estimated coefficients on all the other variables will be biased and inconsistent unless the excluded variable is uncorrelated with all the included variables.
• Even if this condition is satisfied, the estimate of the coefficient on the constant term will be biased.
• The standard errors will also be biased.
Inclusion of an Irrelevant Variable
• Coefficient estimates will still be consistent and unbiased, but the estimators will be inefficient.

PARAMETER STABILITY TEST
• So far, we have estimated regressions such as $y_t = \beta_1 + \beta_2 x_{2t} + \beta_3 x_{3t} + u_t$
• We have implicitly assumed that the parameters ($\beta_1$, $\beta_2$ and $\beta_3$) are constant for the entire sample period. For what reason? There is no reason to assume that this is the case, so we can test this implicit assumption using parameter stability tests.
The idea is essentially to split the data into sub-periods, estimate up to three models (one for each of the sub-parts and one for all the data), and then “compare” the RSS (residual sum of squares) of the models.
For example, if you have data from 1800-2020, you estimate over 3 periods because some events changed things:
1. 1800-1939
2. 1940-2020
3. 1800-2020 (one for the entire period)
• There are two types of test we can look at:
  - Chow test (analysis of variance test)
  - Predictive failure tests

THE CHOW TEST
The steps involved are:
1. Split the data into two sub-periods. Estimate the regression over the whole period and then for the two sub-periods separately (3 regressions). Obtain the RSS for each regression.
2. The restricted regression is now the regression for the whole period, while the “unrestricted regression” comes in two parts: one for each of the sub-samples. We can thus form an F-test based on the difference between the RSS’s. The statistic is:
$\text{test statistic} = \frac{RSS - (RSS_1 + RSS_2)}{RSS_1 + RSS_2} \times \frac{T - 2k}{k}$
where:
RSS = RSS for the whole sample
RSS1 = RSS for sub-sample 1
RSS2 = RSS for sub-sample 2
T = number of observations
2k = number of regressors in the “unrestricted” regression (since it comes in two parts)
k = number of regressors in (each part of the) “unrestricted” regression
3. Perform the test. If the value of the test statistic is greater than the critical value from the F-distribution, which is an F(k, T-2k), then reject the null hypothesis that the parameters are stable over time.
NULL HYPOTHESIS: the parameters are stable over time (no structural break). The alternative is that the 2 sub-samples are different.

ON GRETL: Tests → Chow test → observation (year) at which to split the sample (DEPENDS ON THEORY AND ON THE COUNTRY).
IF WE REJECT, WE SHOULD REDEFINE THE BREAKS AND ESTIMATE ONE MODEL BEFORE A CERTAIN DATE AND A DIFFERENT MODEL AFTER IT, OR WE CAN FIND A MODEL THAT TAKES INTO ACCOUNT THAT THE PARAMETERS ARE CHANGING OVER TIME!

CUSUM TEST: indication of the stability of the model.
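Going back to the Chow test steps above, the three regressions and the F-statistic can be sketched by hand. This is a minimal illustration assuming a single regressor plus a constant (so k = 2) and made-up data with a break after the fifth observation; the function names are hypothetical and this is not how gretl computes the test internally.

```python
def rss_simple_ols(x, y):
    """Residual sum of squares from an OLS regression of y on a constant and x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    beta = sxy / sxx
    alpha = mean_y - beta * mean_x
    return sum((yi - alpha - beta * xi) ** 2 for xi, yi in zip(x, y))

def chow_statistic(x, y, split, k=2):
    """Chow statistic for a break after the first `split` observations."""
    rss = rss_simple_ols(x, y)                    # restricted: whole sample
    rss1 = rss_simple_ols(x[:split], y[:split])   # unrestricted, sub-sample 1
    rss2 = rss_simple_ols(x[split:], y[split:])   # unrestricted, sub-sample 2
    t = len(x)
    return ((rss - (rss1 + rss2)) / (rss1 + rss2)) * (t - 2 * k) / k

# Hypothetical data: the intercept and slope change after observation 5
x = [float(v) for v in range(1, 11)]
noise = [0.1, -0.1, 0.2, -0.2, 0.0, -0.1, 0.1, 0.0, 0.2, -0.2]
y = [(1.0 + 2.0 * xi if xi <= 5 else 10.0 + 0.5 * xi) + e
     for xi, e in zip(x, noise)]

f_stat = chow_statistic(x, y, split=5)
print(f_stat)  # compare with the F(k, T-2k) critical value
```

A statistic far above the F(k, T-2k) critical value rejects the null of stable parameters, which is what this artificial break is built to produce.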
ANOTHER WAY TO TEST THE STABILITY OF THE PARAMETERS IS THE CUSUM TEST: WE USE IT TO SEE HOW THE COEFFICIENTS CHANGE OVER TIME. IF FROM SOME POINT IN TIME THE COEFFICIENTS ARE CHANGING, IT'S ANOTHER INDICATION THAT THE STABILITY OF THE MODEL IS NOT SUPPORTED BY THE DATA.

QUESTIONS:
1. MULTICOLLINEARITY. In our case we have just one independent variable, so I do not have a multicollinearity problem. BUT if I add variables, I can check for multicollinearity.

CHOW TEST EXAMPLE (2:10)

CUSUM TEST
The null is parameter stability. If you want to see which year is a potential point to split the sample, you can use the CUSUM test to see if and when there is a break in the sample.

QLR TEST
You can also RUN THE QLR TEST: it performs the test sequentially for all possible observations, so it's like running a CHOW test for all possible years. (2:14) We don't know when this break is taking place (in which year). The only thing we set is the trimming of the sample: we can leave out some observations (like the first 15%). If the p-value = 0, it means there's no stability, and a potential year for a break is 1896 (GIVEN BY THE LIKELIHOOD RATIO TEST, similar to CHOW).

HOW TO DECIDE WHICH SUB-PARTS TO USE
• As a rule of thumb, we could use all or some of the following:
1. Plot the dependent variable over time and split the data according to any obvious structural changes in the series.
2. Split the data according to any known important historical events (e.g. stock market crash, new government elected).
3. Use all but the last few observations and do a predictive failure test on those.

MEASUREMENT ERROR
MAYBE WE NEED ALTERNATIVE CO2 MEASURES.
If there is measurement error in one or more of the explanatory variables, this will violate the assumption that the explanatory variables are non-stochastic. Sometimes this is also known as the errors-in-variables problem. Measurement errors can occur in a variety of circumstances, e.g.
o Macroeconomic variables are almost always estimated quantities (GDP, inflation, and so on), as is most information contained in company accounts.
o Sometimes we cannot observe or obtain data on a variable we require and so we need to use a proxy variable. For instance, many models include expected quantities (e.g. expected inflation) but we cannot typically measure expectations.
From this slide on, the rest of the slides were skipped until GENERAL TO SPECIFIC APPROACH.

PROJECT SECTION 3
1. LINEAR MODEL AND DIAGNOSTICS (AUTOCORRELATION, WHITE, RAMSEY, DURBIN-WATSON, CHOW TEST…)
2. NON-LINEAR AND SAME DIAGNOSTICS
3. LOGARITHMIC
4. FIRST DIFFERENCE
5. QR

QUESTIONS (END OF LESSON)
REMOVE ALL THE YEARS FOR WHICH WE DO NOT HAVE OBSERVATIONS FOR BOTH VARIABLES.
TO CHECK THE DECIMALS (PROBLEM OF THE DECIMAL POINT): GO TO THE EXCEL FILE AGAIN, FILTER THE DATA, CHECK THAT IT IS RIGHT, PASTE IT CAREFULLY AND JUST USE THE YEARS WHEN YOU HAVE OBSERVATIONS FOR BOTH VARIABLES. ONCE YOU HAVE A CLEAN EXCEL FILE, YOU HAVE TO INSERT ALL YOUR ROWS IN EXCEL AND TELL GRETL THAT THE FIRST ROW ARE THE YEARS (TIME DIMENSION).
FOR QR, DO WE HAVE TO DO DIAGNOSTIC TESTS ON IT OR JUST COMPARE IT WITH THE LINEAR MODEL? THE ONLY TEST YOU CAN DO IS FOR NORMALITY OF RESIDUALS (plus the statistical significance of the coefficients).
EXTRA VARIABLES ARE OPTIONAL.

27/04/2022
COLOR XY graph.
To identify OUTLIERS use a BOX PLOT: insert annual CO2 in levels, logs and first differences.
CORRELATION MATRIX: do it if you want to check the correlation between two variables (in case you added another variable).
NON-LINEAR MODEL, question about autocorrelation (0:43): I can keep increasing the number of lags. If it doesn't work, you say that we cannot solve the autocorrelation problem even after trying with log values and first differences.

CHAPTER 6
VARIABLES THAT ARE OPERATING IN THE TIME SERIES DIMENSION
TIME SERIES MODEL: model in which the lagged values of the dependent variable help us explain and forecast the variable itself.
WEAKLY STATIONARY PROCESS: if a series satisfies the next three equations, it is said to be weakly or covariance stationary:
1. $E(y_t) = \mu$, $t = 1, 2, \dots, \infty$ (constant mean)
2. $E(y_t - \mu)(y_t - \mu) = \sigma^2 < \infty$ (constant variance)
3. $E(y_{t_1} - \mu)(y_{t_2} - \mu) = \gamma_{t_2 - t_1}$ for all $t_1, t_2$ (constant covariance)
However, the values of the autocovariances depend on the units of measurement of yt. That's why we usually EMPLOY autocorrelations. Autocorrelations are normalised autocovariances: WE divide the autocovariance at each lag by the variance to obtain the correlation (the TAUs).
Can I compare the autocovariances of two variables? NO, because the covariances depend on the units of measurement of Y. Can I COMPARE autocorrelations? YES. If we take the autocorrelations and plot them against the lag, this is called the autocorrelation function or the correlogram.

CORRELOGRAM: measures how strong the memory of a process is, i.e. HOW IMPORTANT LAGGED VALUES (PAST OBSERVATIONS) ARE FOR A PROCESS. (1:12)
ON GRETL: right click on the variable and find the correlogram; you can leave the default options of GRETL.
AUTOCOVARIANCES (which depend on the units of measurement) ARE NOT COMPARABLE, BUT AUTOCORRELATIONS (which take values between -1 and 1 and measure the dependence between observations a given number of lags apart) ARE. The latter are obtained by dividing the autocovariance by the variance.

WHITE NOISE PROCESS
- Random, with no structure
- Zero autocovariances: what happened in the past does not affect what is happening now.
We need the white noise process because it gives us the confidence interval within which an autocorrelation is not significantly different from zero. THE NULL HYPOTHESIS IS THAT THE TRUE VALUE OF THE AUTOCORRELATION IS ZERO. The blue lines around zero are the confidence intervals given by the white noise process, SO EVERYTHING THAT IS INSIDE THE BLUE INTERVAL IS NOT SIGNIFICANT AND EVERYTHING THAT IS OUTSIDE IS SIGNIFICANT.
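To make the correlogram concrete, the sketch below computes sample autocorrelations as autocovariance divided by variance and compares them with a white-noise band. The band of plus or minus 1.96 divided by the square root of T is the common approximate 95% interval and is an assumption here, since the notes do not state how gretl draws the blue lines; the series is artificial.

```python
import math

def sample_acf(series, max_lag):
    """Sample autocorrelations tau_1..tau_max_lag (autocovariance / variance)."""
    n = len(series)
    mean = sum(series) / n
    gamma0 = sum((v - mean) ** 2 for v in series) / n   # variance (lag 0)
    acf = []
    for k in range(1, max_lag + 1):
        gamma_k = sum((series[t] - mean) * (series[t - k] - mean)
                      for t in range(k, n)) / n          # autocovariance at lag k
        acf.append(gamma_k / gamma0)
    return acf

def white_noise_band(n, z=1.96):
    """Approximate 95% band for the autocorrelations of a white noise process."""
    return z / math.sqrt(n)

# Artificial series that flips sign every period (strong negative memory)
series = [1.0 if t % 2 == 0 else -1.0 for t in range(20)]
acf = sample_acf(series, max_lag=3)
band = white_noise_band(len(series))

for lag, tau in enumerate(acf, start=1):
    verdict = "significant" if abs(tau) > band else "not significant"
    print(lag, round(tau, 3), verdict)
```

Because each autocovariance is divided by the variance, the result is unit-free and always lies between -1 and 1, which is why autocorrelations can be compared across variables while autocovariances cannot.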
(WE ARE JUST LOOKING AT THE FIRST GRAPH RIGHT NOW, THE ACF.)
Now I GO TO FIRST DIFFERENCES (just of annual CO2) AND I CREATE A CORRELOGRAM THERE. IF THE AUTOCORRELATIONS ARE OUTSIDE THE WHITE NOISE BAND, THEN THEY ARE SIGNIFICANT.

JOINT HYPOTHESIS TEST FOR THE AUTOCORRELATION FUNCTION
We can do that with the Q statistic (Box-Pierce) or Q* (Ljung-Box): the difference is that Q* is used when we have a small sample. In GRETL the Q statistic is given in the correlogram output, with the p-value right next to it.
EXAMPLE: IN THIS CASE ONLY THE FIRST OF THE AUTOCORRELATIONS IS SIGNIFICANT AND THE OTHERS AREN'T, GIVEN THAT CONFIDENCE INTERVAL. THERE IS SIGNIFICANT CORRELATION BETWEEN TWO SUBSEQUENT POINTS IN TIME. WE CAN ALSO DO A JOINT TEST WITH Q AND Q*, AND WE ARE GOING TO FIND THAT THE COEFFICIENTS ARE JOINTLY NOT SIGNIFICANT.

MOVING AVERAGE PROCESS (MA)
$y_t = \mu + \theta_1 u_{t-1} + u_t$: constant term, plus a coefficient (that I want to estimate) times the lagged value of the error, plus the error term. So from this equation I have to estimate mu hat and theta1 hat.
SO WHAT IS AN MA PROCESS TELLING US: that the variable we are trying to explain depends on lagged values of the random error.
ON GRETL: FROM THE LOG DIFFERENCES (which should be the first differences) → MODEL → UNIVARIATE TIME SERIES → ARIMA → AR: 0 because we haven't done the autoregressive model yet, and 2 for MA. You can add dummies under regressors or leave them out; the procedure is the same. I USE MY Y VARIABLE, WHICH IS THE GROWTH RATE OF CO2 EMISSIONS PER CAPITA.
THEN IN THE OUTPUT I HAVE THETA ONE AND THETA TWO, AS IN THE PREVIOUS SLIDE. THE CONSTANT TERM IS NOT STATISTICALLY SIGNIFICANT, THETA ONE IS SIGNIFICANT, THETA TWO IS NOT. THE MA MODEL TELLS ME WHETHER OR NOT THE LAGGED ERRORS HELP ME EXPLAIN AND FORECAST THE GROWTH RATE OF CO2 EMISSIONS.
FROM THE MA MODEL → ANALYSIS → FORECAST, and I CAN FORECAST CO2 emissions. I drop one observation: I remove one year by going to SAMPLE (in the variables window) and lowering the end of the range by one year, so that I can forecast that observation and see how good my forecast is.
I NEED TO COMPARE MY FORECAST WITH SOMETHING (THE REAL VALUE) SO THAT I KNOW HOW GOOD MY FORECAST IS. SO I DROP THE LAST YEAR AND THEN I RE-ESTIMATE THE MODEL. THEN I USE THIS NEW MODEL TO FORECAST WHAT HAPPENS IN 2020: ANALYSIS → FORECAST FOR THAT MISSING YEAR. (1:36) IF THE RESULT LOOKS BAD, TRY SELECTING DYNAMIC FORECAST AT THE START. You compare the moving average with the autoregressive model. (1:41)

AUTOREGRESSIVE PROCESS (AR): process in which the lagged values of the variable itself are used to explain Y.
$y_t = \mu + \phi_1 y_{t-1} + u_t$
Yt is the variable that I am trying to explain
mu is the constant term
Yt-1 is the lagged value of Y (WE CAN ALSO HAVE 2 LAGS, so another variable which is Yt-2)
Ut is the error
THE DIFFERENCE BETWEEN THE MA(1) AND THE AR(1) is that in the first case we concentrate on lagged values of the random error, while in the AR process we concentrate on lagged values of the variable itself (the dependent one). (2:04)

AR AND MA ON GRETL
MOVING AVERAGE: select the log difference of annual CO2 → model → univariate time series → ARIMA
AUTOREGRESSIVE: same as before: model → univariate time series → ARIMA → in the table on the right (orders): 1 for AR, 0 for MA
MA (1, left) VS AR (2, right). DO IN THE PROJECT: the constant term in the AR is NOT statistically significant, PHI 1 is significant. Same for the constant term and the regressor of the MA.

STATIONARITY CONDITION FOR AN AR MODEL
You can use AR and MA models only when the variable is stationary, so in our case we should use these 2 models only for the growth rate of CO2 emissions.
Skipping until

PARTIAL AUTOCORRELATION FUNCTION (PACF)
• Measures the correlation between an observation k periods ago and the current observation, after controlling for observations at intermediate lags (i.e. all lags < k).
That is (in the last example) the effect of t-5 once you have removed the effect of everything that happens in between (so t-1, t-2, t-3, t-4).
ON GRETL: log difference of annual CO2 → right click → correlogram → it proposes 23 lags, go with the default → the output gives the PACF as numbers and as a graph.

ARMA(1,1)
Combination of AR and MA. GRETL: model → univariate time series → ARIMA, and then put 1 and 1.

COMPARING AR, MA, ARMA
- estimate AR, MA, ARMA
- to compare them you need the INFORMATION CRITERIA, which allow me to compare the models: the best model is the one that minimises the information criteria. AIC is the AKAIKE CRITERION (look for the lowest value, i.e. the most negative) AND SBIC is the SCHWARZ CRITERION.

28/04/2022
QUESTIONS: add lags → re-estimate the model → watch for outliers (0:19)
DIAGNOSTICS FOR MA, AR, ARIMA: the main test you can do is for autocorrelation.

CHAPTER 8
STATIONARITY: definition by three properties
1. Constant mean
2. Constant variance
3. Constant covariance
The stationarity or otherwise of a series can strongly influence its behaviour and its properties (for example, in a non-stationary process the persistence of shocks will be infinite, so shocks will affect the series forever).

SPURIOUS REGRESSION: when you regress one non-stationary variable on another non-stationary variable, this is called a spurious regression. Typical case: two variables trending over time (non-stationarity). A regression of non-stationary variables on each other will produce a high R^2 WITH NO MEANING. The t-ratios are not going to follow a t distribution and HYPOTHESIS TESTING IS NOT VALID. So: when 2 variables are not correlated, I should GET AN R^2 CLOSE TO ZERO. BUT in this case, because of the non-stationarity of the 2 variables, I will get an R^2 that is well above zero, as if there were a causal relationship, but there is NOT.

TWO TYPES OF NON-STATIONARITY
1. RANDOM WALK MODEL:
2.
DETERMINISTIC TREND PROCESS:

GENERAL CASE OF THE RANDOM WALK MODEL (slide 6)
MODEL WHERE PHI1 CAN TAKE ANY VALUE. WE ARE GOING TO CONCENTRATE ON:
- PHI > 1: explosive case (growth rate that keeps going up), NON-STATIONARY
- PHI = 1: NON-STATIONARY, IT'S A RANDOM WALK
- PHI < 1: the variable is STATIONARY
CASE 1: PHI < 1, the variable is STATIONARY. THIS MEANS SHOCKS TO THE SYSTEM WILL GRADUALLY DIE AWAY. The HALF-LIFE depends on PHI. If PHI is 0.95 you are forgetting very slowly. If PHI is 0.1 you are forgetting very fast (the closer to zero, the faster). Financial variables forget shocks very easily, and within 2 weeks the shock can be absorbed by the system. Totally the opposite with economic variables.
CASE 2: PHI = 1, the shock will persist forever: RANDOM WALK.
CASE 3: PHI > 1, explosive case.

HOW DO WE MAKE A VARIABLE STATIONARY IF IT'S NOT?
If it is a RANDOM WALK (non-stationary), we make it stationary BY TAKING FIRST DIFFERENCES (we assume that the variable is in logs).
ON GRETL: GDP_PC IS NON-STATIONARY. FOR EXAMPLE, I TAKE THE LOGARITHMIC FIRST DIFFERENCE and obtain the LOG DIFFERENCE OF GDP_PC, which is STATIONARY.
A white noise process is:
- random
- with no structure
- stationary
HOW TO TURN SOMETHING NON-STATIONARY INTO STATIONARY: TAKE FIRST DIFFERENCES OF THE LOGS.

UNIT ROOT
AN I(0) SERIES IS A STATIONARY SERIES: it can be used in the regression.
AN I(1) SERIES CONTAINS ONE UNIT ROOT: it is non-stationary and I cannot use it in the regression. To make it stationary I take first differences of the logs.
GDP is an I(1) variable, delta GDP is I(0)
CO2 is I(1), delta CO2 is I(0)
I(2): to make the series stationary you need to take differences twice.

HOW DO WE TEST FOR A UNIT ROOT?
(SLIDE 19) (1:16) We have an AR model (theoretical DF).
H0: THE SERIES CONTAINS A UNIT ROOT, PHI = 1, THE VARIABLE IS NOT STATIONARY
H1: THE SERIES IS STATIONARY, PHI < 1
Estimated as it stands, the AR model can itself be SPURIOUS, and if it is a spurious regression I cannot do standard hypothesis testing. We solve this issue by estimating the EMPIRICAL Dickey-Fuller regression (1:19).
DF TEST STATISTIC: sometimes the test regression suffers from autocorrelation; you solve it by adding lags (1:21).

ON GRETL: UNIT ROOT TEST (CHECK FOR STATIONARITY)
GDP in levels → Variable → unit root test → Dickey-Fuller (ADF).
HOW MANY LAGS? MAX 14, AND YOU CAN CHOOSE EITHER THE AKAIKE CRITERION OR SCHWARZ. HOW MANY LAGS MINIMISE THE AKAIKE INFORMATION CRITERION? WHICH IS THE LOWEST AKAIKE? Check it. (1:28)
UNIT ROOT TEST FOR THE GROWTH RATE OF GDP PC: I EXPECT THE VARIABLE TO BE STATIONARY. I DO THE SAME AS BEFORE (14 LAGS, AKAIKE CRITERION). WHICH LAG MINIMISES THE AKAIKE IC? THE 6TH ONE, SO WE HAVE A MODEL WITH 6 LAGS → THE P-VALUE OF THE DF TEST is about zero, so the null hypothesis (non-stationarity) is rejected. SO THE VARIABLE IS STATIONARY.
WE HAVE TO CHECK THE STATIONARITY OF BOTH CO2 AND GDP IN LEVELS: if both of them are non-stationary, then the first OLS regression we ran is a spurious regression. IF I FIND THAT THE MODEL IS SPURIOUS, I HAVE TO SAY THAT THE MOST APPROPRIATE MODEL IS THE ONE THAT USES ONLY STATIONARY VARIABLES, SO THE FIRST DIFFERENCE ONE! I CAN CHECK THAT THE FIRST DIFFERENCES OF CO2 AND GDP ARE STATIONARY (MAYBE INCLUDE THIS TOO). For example, in the professor's example CO2 emissions are not stationary but GDP is stationary. SO TEST EVERYTHING FOR STATIONARITY. Remember that we are adding lags to correct for autocorrelation (slide 23).
FIGURES: time series plot, scatter plot, and from ADD the color XY plot. (2:11) Plot everything together?
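The Dickey-Fuller idea described above can be illustrated by hand on simulated data. The sketch assumes the simplest form of the test regression, delta y(t) = psi * y(t-1) + u(t) with psi = phi - 1, without a constant, trend, or the extra lags that the ADF option in gretl adds; the series are simulated with a fixed seed, and all function names are hypothetical.

```python
import math
import random

def dickey_fuller_stat(y):
    """OLS of dy_t on y_{t-1} (no constant, no lags).
    Returns (psi_hat, test statistic psi_hat / SE(psi_hat))."""
    lagged = y[:-1]
    diffs = [y[t] - y[t - 1] for t in range(1, len(y))]
    sum_sq = sum(v * v for v in lagged)
    psi_hat = sum(l * d for l, d in zip(lagged, diffs)) / sum_sq
    residuals = [d - psi_hat * l for l, d in zip(lagged, diffs)]
    n = len(diffs)
    sigma2 = sum(e * e for e in residuals) / (n - 1)   # one estimated parameter
    se = math.sqrt(sigma2 / sum_sq)
    return psi_hat, psi_hat / se

def simulate_ar1(phi, n, seed):
    """Simulate y_t = phi * y_{t-1} + u_t with standard normal shocks."""
    rng = random.Random(seed)
    y = [0.0]
    for _ in range(n - 1):
        y.append(phi * y[-1] + rng.gauss(0.0, 1.0))
    return y

random_walk = simulate_ar1(phi=1.0, n=500, seed=1)   # unit root: phi = 1
stationary = simulate_ar1(phi=0.5, n=500, seed=1)    # stationary: phi < 1

psi_rw, stat_rw = dickey_fuller_stat(random_walk)
psi_st, stat_st = dickey_fuller_stat(stationary)
print("random walk:", psi_rw, stat_rw)
print("stationary :", psi_st, stat_st)
```

The statistic has to be compared with the tabulated Dickey-Fuller critical values (roughly -1.95 at the 5% level for this no-constant form), not with the usual t tables. A strongly negative statistic, as expected for the stationary series, rejects the null of a unit root.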
The residual plot should go in the analysis.

TESTING FOR HIGHER ORDER INTEGRATION
WHEN A VARIABLE COULD BE I(2), you have to take first differences again and do the DF test again.

THE PHILLIPS-PERRON TEST (unit root test)
The correction for autocorrelation is different FROM DF: PP uses a non-parametric correction for autocorrelation, while ADF (DICKEY-FULLER) ADDS LAGS TO CORRECT FOR AUTOCORRELATION. (2:15)
ON GRETL: first difference of GDP → Variable → unit root tests → PHILLIPS-PERRON TEST. IF IT'S NOT AVAILABLE, GO TO FILE → FUNCTION PACKAGES → ON SERVER.

06/05/2022
THEO AND EDO PRESENTATION
- DO THE COLOR XY PLOT FOR EVERYTHING.
- In the project, PUT AUTOCORRELATION AS THE FIRST DIAGNOSTIC TEST BECAUSE IT'S THE MOST IMPORTANT.
- ARE THE BREAKS FROM KPSS SIMILAR TO THE OUTLIERS OR NOT? In their case completely different. (AROUND 00:15)
- Cointegration gives long-run findings.
- FILE → FUNCTION PACKAGES → ON SERVER → KETVALS
- The environmental cost of being rich is decreasing over time.
- The unit root test for stationarity should really be done at the beginning, to assess the correlation between the 2 variables, because it's more correct, but the professor wants to do it in this order.

BEA AND MARCUS (00:30)
- Hamilton filter for graphs
- LINEAR MODEL + OUTPUT + CONFIDENCE INTERVAL
- NON-LINEAR MODEL + OUTPUT + CONFIDENCE INTERVALS
- KETVALS

CHIARA AND LISA
- Do the actual vs fitted plot of GDP pc against time

ISA AND SABRI
- Hodrick-Prescott filter
- Time series plot
- XY scatter plot
- Color XY
- YOU HAVE TO PUT CO2 ON THE VERTICAL AXIS
- Chow test: just do it for structural breaks and special years
- COMPARISON LIKE THIS IS NICE

NADARAYA-WATSON
LOG OF ANNUAL CO2, WITH CONST AND LOG OF GDP AS REGRESSORS. LOGARITHMIC MODEL: model → ROBUST ESTIMATION → NADARAYA-WATSON (NON-PARAMETRIC MODEL). It produces a fit that we can see if you click on the graph.
File → function packages → on server → KETVALS → install. Then File → function packages → on local machine → right click → execute → create this, then this. Click on graph in the output and then get the time-varying coefficients; you have 2 graphs and