Ref. Ares(2017)529646 - 31/01/2017

Technical note D4.3

Preliminary description of vision-based navigation and guidance approaches

31st December, 2016 (M10)

Authors: Yoko Watanabe, Balint Vanek, Akos Zarandy, Antal Hiba, Shinji Suzuki, Ryota Mori

Publish date: 31/01/2017


EU-H2020 GA-690811

NEDO GA-062800

VISION: Validation of Integrated Safety-enhanced Intelligent flight cONtrol (2016-2019)

Document Information Sheet

General information

| Item | Details |
| --- | --- |
| Deliverable no. | 4.3 |
| Document title | Preliminary description of vision-based navigation and guidance approaches |
| Dissemination level | PU (Public) |
| Project name | Validation of Integrated Safety-enhanced Intelligent flight cONtrol |
| Grant no. | EU: EU-H2020 GA-690811; Japan: NEDO GA-062800 |
| Project officers | EU: Hugues Felix (EC); Japan: Hiroyuki Hirabayashi (NEDO) |
| Coordinators | EU: Yoko Watanabe (ONERA); Japan: Shinji Suzuki (the University of Tokyo) |
| Lead beneficiary | ONERA |
| WP no. | 4.2 |
| Due date | 31/12/2016 (M10) |
| Delivered date | 31/01/2017 |
| Revision | 8 |
| Revised date | 27/01/2017 |

Approvals

| Item | Details |
| --- | --- |
| Authors | EU: Yoko Watanabe (ONERA), Balint Vanek (SZTAKI), Akos Zarandy (SZTAKI), Antal Hiba (SZTAKI); Japan: Shinji Suzuki (UTOKYO), Ryota Mori (ENRI) |
| Task leader | ONERA |
| WP leader | UTOKYO |


History

| Revision | Date | Modifications | Authors |
| --- | --- | --- | --- |
| 1 | 13/12/2016 | Creation | Yoko Watanabe |
| 2 | 21/12/2016 | SZTAKI 1st input | Antal Hiba |
| 3 | 22/12/2016 | 1st update on the vision-based navigation approach (ONERA) | Yoko Watanabe |
| 4 | 09/01/2017 | 2nd update on the vision-based navigation approach (ONERA); integration of UTOKYO’s input on the trajectory optimization | Yoko Watanabe |
| 5 | 16/01/2017 | SZTAKI 2nd input | Antal Hiba |
| 6 | 24/01/2017 | 3rd update on the vision-based navigation approach (ONERA) | Yoko Watanabe |
| 7 | 26/01/2017 | Update by UTOKYO, RICOH and ENRI | Ryota Mori |
| 8 | 27/01/2017 | 4th update on the vision-based navigation approach (ONERA) | Yoko Watanabe |


Table of Contents

Introduction ............................................................................................................................... 6

1. VISION project overview ............................................................................................... 6

2. WP4.2 overview............................................................................................................. 7

3. Objective of the document............................................................................................ 8

Preliminary design of vision-based navigation ........................................................................ 9

1. Sensor failure scenarios................................................................................................. 9

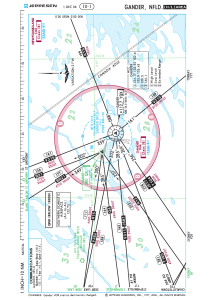

a. GNSS-based approach .................................................................................................... 9

b. ILS-based approach ...................................................................................................... 10

2. Reference coordinate frames and transformations................................................... 11

a. Inertial frame: FI........................................................................................................... 11

b. ECEF coordinate frame: FECEF ..................................................................................... 11

c. Geodetic coordinate frame: FGEO ............................................................................... 11

d. Local NED and ENU frames: FNED, FENU..................................................................... 12

e. Runway fixed frame: FRWY .......................................................................................... 13

f. Vehicle carried frame: FV ............................................................................................. 13

g. Aircraft body frame: FB ................................................................................................ 13

h. Camera frame and pixel-coordinates on an image: FC, Fi ........................................... 14

3. The aircraft kinematic model ...................................................................................... 15

4. Navigation sensors ...................................................................................................... 16

a. AHRS (IMU + Magnetometer) ...................................................................................... 16

b. GNSS/SBAS ................................................................................................................... 16

c. Baro-altimeter .............................................................................................................. 17

d. Inclinometer ................................................................................................................. 17

e. Vision systems .............................................................................................................. 17

f. ILS ................................................................................................................................. 19

a. Navigation performance criteria .................................................................................. 20

b. Integrity monitoring ..................................................................................................... 21

6. Preliminary design of vision-aided navigation system .............................................. 27

a. Estimator design........................................................................................................... 27

b. Fault detection and estimation (FDE) .......................................................................... 29

7. Validation plan............................................................................................................. 30

a. Validation of the image processing algorithms............................................................ 30

b. Validation of the estimation algorithms ...................................................................... 30

c. Plan for 1st flight test .................................................................................................... 30

d. Preliminary plan for further flight tests ....................................................................... 31

8. Others........................................................................................................................... 31

Preliminary design of vision-based guidance ......................................................................... 32

1. Trajectory optimization for small deviation from the nominal glide path ............... 32

a. Trajectory optimization................................................................................................ 32

b. Decision making ........................................................................................................... 32

c. Validation plan ............................................................................................................. 32

2. Image-based obstacle avoidance approach ............................................................... 33

a. Obstacle detection and possible avoidance maneuvers.............................................. 33

b. Detection and tracking ................................................................................................. 33

4

EU-H2020 GA-690811

NEDO GA-062800

VISION: Validation of Integrated Safety-enhanced Intelligent flight cONtrol (2016-2019)

c. Decision based on tracks.............................................................................................. 33

Terminology ............................................................................................................................. 35

References................................................................................................................................ 36


Introduction

1. VISION project overview

To contribute to the global goal of reducing the aircraft accident rate, the EU-Japan collaborative research project VISION (Validation of Integrated Safety-enhanced Intelligent flight cONtrol) aims to investigate, develop and, above all, validate advanced aircraft Guidance, Navigation and Control (GN&C) solutions that can automatically detect and overcome certain critical flight situations. The project focuses on the final approach phase, in which more than half of the fatal accidents of the last decade occurred. The VISION project tackles the following two types of fault scenarios, which together cover a dominant share of the causal factors of fatal aircraft accidents worldwide.

• Flight control performance recovery from

o Actuator faults/failures (jamming, authority deterioration, etc.)

o Sensor failures (lack of airspeed etc.)

• Navigation and guidance performance recovery from

o Sensor failures (lack of SBAS, lack of ILS, etc.)

o Proximity of unexpected obstacles

These two fault types differ completely in nature and therefore require different approaches. The first set of recovery scenarios will be addressed by applying fault detection and fault-tolerant control techniques, while the second will be addressed by proposing precision navigation and approach guidance systems that use new onboard sensing technologies. Flight validations of the developed techniques and methods will be performed on JAXA’s MuPAL-alpha experimental aircraft in Japan for the first scenario and on the USOL K-50 UAV platform in Europe for the second scenario.

Figure 1 illustrates how the European and Japanese partners from academia, national research institutes and industry will make synergetic and complementary efforts to achieve these ambitious scientific objectives within the VISION project, in order to improve global aviation safety.

Figure 1 EU-Japan mutual efforts to the VISION project


2. WP4.2 overview

WP4 of the VISION project is dedicated to the second type of failure scenario, “Navigation and Guidance performance recovery.” WP4.2 aims to develop navigation and guidance algorithms that use vision information, provided by the systems developed in WP4.1, to maintain flight safety and navigability in the fault scenarios defined in WP2.2. The objectives of this work-package are the following:

• To specify the vision information and its performance criteria to be fed back into the aircraft navigation and guidance system for each fault scenario,

• To develop vision-based navigation algorithms which estimate the aircraft flight path relative to a runway, from the specified vision information, in the navigation data degradation/failure scenario,

• To develop vision-based guidance algorithms which modify the aircraft flight path in order to ensure flight safety upon the detection of unexpected obstacles on the current planned path, and

• To adapt and improve the performance of the developed systems by analysing the results of simulations and intermediate flight tests.

Figure 2 shows the relationship of WP4.2 with other sub-WPs.

Figure 2 The VISION work-packages


3. Objective of the document

WP4.2 has been active since M4 (06/2016) and continues until M30 (08/2018). The first six months of this work-package were devoted to

• Investigation of existing approaches for vision-based navigation and guidance for aircraft final approach and landing,

• Study of navigation performance criteria and integrity monitoring algorithms, especially for the GNSS-based approach, and

• Preliminary design of vision-based navigation and guidance for the specific fault scenarios defined in WP2.2.

This document provides the current results of the tasks listed above. It focuses on the preliminary design of the vision-based navigation system (in case of sensor failure) and of the vision-based guidance system (in case of obstacle detection), both of which are to be evaluated with data recorded during the first flight test campaign.


Preliminary design of vision-based navigation

1. Sensor failure scenarios

This section recalls the navigation sensor failure scenarios defined in WP2.2 and provided in D2.1 “Fault scenario descriptions,” for which we propose a vision-based navigation system to mitigate a critical situation. Two approach procedures are considered in the VISION project: the GNSS-based LPV approach (APV-I or SBAS CAT-I) and the classical ILS approach. In both cases, the benefit of onboard vision after a failure is that it allows the pilot to continue the approach down to a decision height corresponding to APV-I or SBAS CAT-I, instead of triggering a go-around (missed approach) procedure. The VISION system addresses only the final approach segment, assuming that an accurate position relative to the runway is available on the initial segment to initialize the visual tracker.

a. GNSS-based approach

In the first set of sensor failure scenarios, the aircraft is assumed to fly the GNSS-based LPV approach procedure. In this procedure, horizontal and vertical approach guidance relies on GNSS positioning based on GPS signals and SBAS augmentation [dgac-2011].

The following four GNSS failures were listed in D2.1.

1) Degradation of GPS signals due to a reduced number of visible satellites, with SBAS augmentation

2) Lack of SBAS signals, with GPS signals fully available

3) Degradation of GPS signals due to ionospheric interference, with SBAS augmentation

4) Lack of both SBAS and GPS signals due to jamming

We consider that GNSS/SBAS functions correctly at the beginning of the final approach phase and that the failure occurs before the aircraft reaches the DA. GNSS-based positioning is subject to errors from various sources, such as ionospheric interference, lower-atmosphere effects, multipath and the satellite constellation geometry. The SBAS provides augmentation information that improves the positioning accuracy.

Figure 3 Vision-aided navigation system with degraded GNSS positioning


During the last WP4 technical meeting (held in Madrid in 10/2016, in conjunction with PM1), the WP4 partners agreed to prioritize scenario 2 (lack of SBAS, with GPS) and then scenario 4 (lack of SBAS and GPS) over the other two. The meeting also reconfirmed the need for a supervision loop that continuously provides Horizontal and Vertical Protection Levels (HPL/VPL), corresponding to an estimated position error with a given probability of missed detection.

Figure 3 illustrates the vision-aided navigation system proposed in this project for degraded GNSS positioning.

b. ILS-based approach

The second set of sensor failure scenarios assumes the classical ILS approach procedure. In this procedure, the ILS provides precise lateral and vertical deviations from a 3-degree final glide path.

In the VISION project, we consider the following two ILS failures.

5) Lack of ILS information during the ILS approach

6) Misleading ILS information due to an ILS secondary lobe

As in the GNSS-based navigation scenario, we suppose that the ILS functions at the beginning of the final approach phase and that the failure occurs before the aircraft reaches the DA. During flight validation, as the ONERA flight test site is not equipped with ILS, the ILS sensor will be emulated onboard by using real-time data and a-priori knowledge of the environment (runway location, elevation and size).

The WP4 partners agreed that scenario 5, which coincides with scenario 2 in terms of the available sensor set, is prioritized over scenario 6.

Figure 4 shows a vision-aided ILS-like approach guidance system in case of ILS failure.

Figure 4 Vision-aided ILS-like approach guidance system


2. Reference coordinate frames and transformations

Before introducing the navigation system design, this section reviews the reference coordinate frames used in the document.

a. Inertial frame: FI

An inertial reference frame is a fixed frame, in which Newton’s second law is valid.

b. ECEF coordinate frame: FECEF

The ECEF (Earth-Centered Earth-Fixed) coordinate frame is a Cartesian frame whose origin is at the center of the earth, with its X-axis pointing to the intersection of the equator and the prime meridian (latitude ϕ = longitude λ = 0°), its Z-axis pointing to the North pole, and its Y-axis completing the right-handed frame. The ECEF frame is fixed to the earth, which rotates about the ECEF Z-axis with a rotational speed ωE with respect to the inertial frame. We denote the position of a point represented in FECEF as pECEF = (XECEF, YECEF, ZECEF).

c. Geodetic coordinate frame: FGEO

The geodetic coordinate frame, widely used in GNSS-based navigation and measurements, is an Earth-fixed frame which represents a point near the earth surface in terms of latitude ϕ, longitude λ, and height h. The latitude is the angle in the meridian plane from the equatorial plane to the ellipsoid normal. The longitude is the angle in the equatorial plane from the prime meridian to the projection of the point onto the equatorial plane. The height is the distance from the earth ellipsoid surface to the point along the ellipsoid normal. In this document the earth ellipsoid surface is taken to coincide with the mean sea level (MSL), so the height h of the geodetic coordinate frame is treated as an altitude above MSL.

The relation between the position in FECEF and the coordinates (ϕ, λ, h) in FGEO is given as follows.

(1)

where Ra = 6,378,137.0 m is the length of the Earth semi-major axis and e = 0.08181919 is the Earth eccentricity (values according to WGS84 [WGS-1984]).

From (1), the longitude λ is obtained directly by

(2)

Finding (ϕ, h) from the position in FECEF is less direct. Bowring’s method [Bowring-1976], which uses the center of curvature of the earth surface point corresponding to the point of interest (h = 0 in equation (1)), is widely used to obtain a good approximate solution without iteration. This method calculates the latitude ϕ by

(3)

where the reduced latitude is approximated by


Then, the MSL altitude can be obtained by using the resulting latitude ϕ.

(4)

The scenarios of the VISION project consider the final approach phase of an aircraft, which is a near-ground operation. Hence, the altitude h remains small and so does the approximation error.
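The conversions described in this subsection can be sketched in a few lines of Python. This is a minimal illustration, not the project's implementation: the function names are hypothetical, the forward conversion is the standard ellipsoidal formula built from Ra and e, and the inverse uses a common single-step form of Bowring's formula that is assumed (not confirmed) to match equations (2) to (4).

```python
import math

# WGS84 constants quoted in the text
RA = 6378137.0        # semi-major axis [m]
ECC = 0.08181919      # first eccentricity [-]


def prime_vertical_radius(lat):
    """Radius of curvature in the prime vertical at latitude lat [m]."""
    return RA / math.sqrt(1.0 - (ECC * math.sin(lat)) ** 2)


def geodetic_to_ecef(lat, lon, h):
    """Geodetic (lat, lon in rad, h in m) to ECEF (X, Y, Z) in m."""
    n = prime_vertical_radius(lat)
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - ECC ** 2) + h) * math.sin(lat)
    return x, y, z


def ecef_to_geodetic(x, y, z):
    """Non-iterative ECEF to geodetic conversion (Bowring-type formula)."""
    e2 = ECC ** 2
    rb = RA * math.sqrt(1.0 - e2)          # semi-minor axis [m]
    ep2 = e2 / (1.0 - e2)                  # second eccentricity squared
    p = math.hypot(x, y)                   # distance from the Z-axis
    lon = math.atan2(y, x)
    beta = math.atan2(RA * z, rb * p)      # approximate reduced latitude
    lat = math.atan2(z + ep2 * rb * math.sin(beta) ** 3,
                     p - e2 * RA * math.cos(beta) ** 3)
    # Height from the resulting latitude (this form is not usable at the poles)
    h = p / math.cos(lat) - prime_vertical_radius(lat)
    return lat, lon, h
```

A round trip such as `ecef_to_geodetic(*geodetic_to_ecef(lat, lon, h))` for a low-altitude point should return the inputs to well below a millimetre in height, which is the property the text relies on for near-ground operation.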

d. Local NED and ENU frames: FNED, FENU

The NED (North-East-Down) or ENU (East-North-Up) frame is a reference frame locally fixed to a point on the earth surface, with its X-Y-Z axes pointing North-East-Down (NED frame) or East-North-Up (ENU frame). The Z-axis is aligned with the earth ellipsoid normal direction. These frames are suited to local navigation and local measurements, which is the case for our final approach scenarios.

Let pECEF0 be the origin of the NED (or ENU) frame expressed in FECEF, and (ϕ0, λ0, h0) be its geodetic coordinates. Then, a point given by pECEF in FECEF can be expressed in the NED (or ENU) frame as follows.

(5)

where Ri represents a rotation matrix about the i-axis.
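As an illustration of the ECEF to NED transformation, the sketch below writes the rotation directly in terms of the North, East and Down unit vectors at the origin, which is the standard result and is assumed here to be equivalent to the product of elementary rotations in (5). The function names are hypothetical.

```python
import math


def ecef_to_ned(p_ecef, p_ecef0, lat0, lon0):
    """Express an ECEF point in the local NED frame anchored at p_ecef0.

    lat0, lon0: geodetic latitude and longitude of the origin [rad].
    """
    dx = [a - b for a, b in zip(p_ecef, p_ecef0)]
    sl, cl = math.sin(lat0), math.cos(lat0)
    so, co = math.sin(lon0), math.cos(lon0)
    # Rows are the North, East and Down unit vectors expressed in ECEF.
    r_ned = [
        [-sl * co, -sl * so, cl],
        [-so, co, 0.0],
        [-cl * co, -cl * so, -sl],
    ]
    return [sum(r * d for r, d in zip(row, dx)) for row in r_ned]


def ned_to_enu(p_ned):
    """Swap North/East and flip Down to obtain ENU coordinates."""
    n, e, d = p_ned
    return [e, n, -d]
```

For example, a point located 10 m above the origin along the ellipsoid normal maps to approximately (0, 0, -10) in NED, since the Down axis points along the inward normal.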

Figure 5 shows the three Earth-fixed coordinate frames: FECEF, FGEO and FNED. These frames are fixed to the rotating earth and hence are not inertial frames (i.e., Newton’s second law does not strictly apply). However, in many airplane dynamics problems, the Earth’s rotation ωE relative to FI can be neglected and the Earth-fixed frames can be used as inertial frames [Etkin-1972]. In our navigation system design, the NED frame is used as the inertial reference.

Figure 5 Earth-fixed coordinate frames (ECEF, GEO, and NED) [Cai-2011]


e. Runway fixed frame: FRWY

In our application of local relative navigation for the aircraft final approach, we define a frame locally fixed to the runway which the aircraft is approaching. Let pECEFthd be a runway reference point, located at the intersection of the runway threshold and the center line, expressed in the ECEF frame. We set this point as the origin of the runway fixed frame. Assume a flat runway with an approach direction ψRWY from the North and a slight slope θRWY from the horizontal plane. Then, as shown in Figure 6, we define the runway frame orientation so that the rotation matrix from FNED to FRWY becomes RY(θRWY)RZ(ψRWY).

Hereinafter, we define the NED frame at pECEFthd. The transformation from FNED to FRWY is then simply given by the rotation.

(5)

Figure 6 Runway Frame
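The rotation RY(θRWY)RZ(ψRWY) can be sketched as follows. The sketch assumes the usual aerospace convention in which each elementary matrix is a frame (passive) rotation; the document does not state the convention explicitly, so this is an assumption, and the function name is hypothetical.

```python
import math


def ned_to_runway(p_ned, psi_rwy, theta_rwy):
    """Rotate a NED vector into the runway frame: R_Y(theta) R_Z(psi) p."""
    cp, sp = math.cos(psi_rwy), math.sin(psi_rwy)
    ct, st = math.cos(theta_rwy), math.sin(theta_rwy)
    n, e, d = p_ned
    # R_Z(psi): rotation about the Down axis by the approach direction
    x1 = cp * n + sp * e
    y1 = -sp * n + cp * e
    z1 = d
    # R_Y(theta): rotation about the intermediate Y-axis by the runway slope
    x2 = ct * x1 - st * z1
    y2 = y1
    z2 = st * x1 + ct * z1
    return [x2, y2, z2]
```

With the NED frame defined at the threshold, an aircraft on the extended center line before the threshold has a negative X coordinate in the runway frame under this convention.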

f. Vehicle carried frame: FV

The vehicle carried frame is defined as the NED frame with its origin at the aircraft center-of-mass.

g. Aircraft body frame: FB

The aircraft body frame is a frame fixed to the aircraft, with its origin at the aircraft center-of-mass. The plane of symmetry of the aircraft body defines the X-Z plane, with the X- and Z-axes pointing forward and downward respectively. The Y-axis then completes the right-handed frame and points rightward (see Figure 7). In general, the aircraft attitude is expressed by the Euler angles (φ, θ, ψ) which rotate the vehicle carried frame to the aircraft body frame. For a local navigation problem, we can neglect the earth curvature, so the vehicle carried frame has the same 3D orientation as a NED frame defined somewhere on the earth in the vicinity of the aircraft. Let pNEDa be the aircraft center-of-mass position expressed in FNED. Then, the transformation from FNED to FB is given by

(6)

In the navigation filter design, the quaternion q is often used instead of the Euler angles to represent the attitude, in order to avoid the singularity occurring at ±90° pitch angle. The quaternion q = [q0 q1 q2 q3] is determined by the Euler angles (φ, θ, ψ), and the rotation matrix RB can be written as a function of q as follows.

(7)
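The relation between the Euler angles, the quaternion and the rotation matrix RB can be illustrated with the sketch below. It assumes the common aerospace yaw-pitch-roll (3-2-1) sequence and a scalar-first quaternion that rotates NED coordinates into body coordinates; these conventions are assumptions and may differ in detail from equation (7). The function names are hypothetical.

```python
import math


def euler_to_quaternion(roll, pitch, yaw):
    """Scalar-first quaternion for the 3-2-1 (yaw, pitch, roll) sequence."""
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    return [
        cr * cp * cy + sr * sp * sy,
        sr * cp * cy - cr * sp * sy,
        cr * sp * cy + sr * cp * sy,
        cr * cp * sy - sr * sp * cy,
    ]


def quaternion_to_rotation(q):
    """Rotation matrix mapping NED coordinates to body coordinates."""
    q0, q1, q2, q3 = q
    return [
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 + q0*q3), 2*(q1*q3 - q0*q2)],
        [2*(q1*q2 - q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 + q0*q1)],
        [2*(q1*q3 + q0*q2), 2*(q2*q3 - q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ]


def rotate(r, v):
    """Apply a 3x3 matrix (list of rows) to a 3-vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in r]
```

With a heading of 90° and level wings, the East unit vector of the NED frame maps onto the body X-axis, which is a quick sanity check of the sign conventions.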


h. Camera frame and pixel-coordinates on an image: FC, Fi

A camera coordinate frame is fixed to a camera with its origin at the center-of-projection (COP) of the camera, its Z-axis aligned with the camera optical axis (which is perpendicular to the image plane), and its X- and Y-axes aligned with the horizontal (rightward in image width) and vertical (downward in image height) axes of the image plane.

Let pNEDc be the camera’s COP and (φc, θc, ψc) be the Euler angles representing the camera attitude with respect to FNED. Then, the position of a point in FC is obtained from its position in FNED by subtracting pNEDc and applying the rotation matrix RC defined by these Euler angles.

Let pBc and RCB be the position and orientation (represented by a rotation matrix from FB to FC) of the camera expressed in the aircraft body frame. Then, pC and RC can be written as

(8)

In the VISION project, we consider cameras rigidly fixed to the aircraft body. Then pBc and RCB are known constants, and so pC and RC are determined by pB and RB, i.e. the aircraft position and attitude, as seen in equation (8). For a stereo-vision system, the left camera’s frame is usually used as the reference frame.

Figure 7 Different coordinate frames (NED, Body, Camera and Pixel-coordinate frames)

2D pixel-coordinates are defined by a frame fixed on an image with its origin at the top-left corner of the image, its x-axis pointing to the right (in the image width direction) and its y-axis pointing down (in the image height direction), as seen in Figure 7. Assuming a pin-hole camera model, the transformation from the 3D camera frame to 2D pixel-coordinates is determined by the camera’s intrinsic parameters: axis skew s, focal lengths (fx, fy) and offset of the image center (principal point) (x0, y0).

Let pi be the 2D pixel coordinates of the point pC expressed in the camera frame. Then, pi is given by

(9)


where KC is called the camera intrinsic matrix, which is determined by a camera calibration process, for example by using the Matlab calibration toolbox from Caltech (see footnote 1).

ZC is the image depth and αC is its inverse. αC is called the inverse depth and is widely used in computer vision in order to exploit the linearity of the measurement equation (9) [Matthies-1989][Civera-2008]. The same concept of inverse range is also introduced in bearing-only target tracking problems [Aidala-1983].
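The pin-hole projection with the intrinsic parameters (fx, fy, s, x0, y0) and the inverse depth αC can be sketched as below. The layout of the intrinsic matrix follows the common convention KC = [[fx, s, x0], [0, fy, y0], [0, 0, 1]], which is assumed here; the function name and the numerical values in the usage note are illustrative only.

```python
def project_to_pixels(p_c, fx, fy, x0, y0, skew=0.0):
    """Pin-hole projection of a camera-frame point (X, Y, Z) to pixels.

    Returns the pixel coordinates (x, y) and the inverse depth alpha = 1/Z.
    """
    xc, yc, zc = p_c
    if zc <= 0.0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    alpha = 1.0 / zc                          # inverse depth
    x = alpha * (fx * xc + skew * yc) + x0    # first row of K applied to p_c / Z
    y = alpha * (fy * yc) + y0                # second row of K applied to p_c / Z
    return (x, y), alpha
```

A point on the optical axis, such as (0, 0, 5), projects onto the principal point (x0, y0) whatever the focal lengths, and the pixel coordinates are linear in the products αC·X and αC·Y, which is the linearity property mentioned above.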

3. The aircraft kinematic model

The state of the aircraft is represented by the following.

• Position expressed in the ECEF frame: pECEF = (XECEF, YECEF, ZECEF)

• Translational velocity expressed in the body frame: vB = (u, v, w)

• Quaternion rotating from the vehicle carried frame to the body frame: qB = (q0, q1, q2, q3)

• Angular velocity expressed in the body frame: ωB = (p, q, r)

The VISION project treats the aircraft local navigation problem during the final approach. Neglecting the earth’s rotation and curvature, the aircraft kinematic equations are given by

(10)

where the rotation matrices RNED and RB are given by (5) and (7) respectively, Ω is a 4x4 matrix built from the components of the body angular velocity ωB, and aB is the aircraft translational acceleration expressed in the body frame.
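The attitude part of the kinematic model can be illustrated with a short sketch. It assumes the standard form in which the quaternion rate equals one half of Ω(ωB) times qB, with a scalar-first quaternion; the exact layout of Ω in the original equation is not reproduced in this text, so the matrix below is an assumption. The explicit-Euler integration step is only for illustration and is not the project's estimator.

```python
import math


def omega_matrix(p, q, r):
    """4x4 skew-type matrix built from the body rates (p, q, r)."""
    return [
        [0.0, -p, -q, -r],
        [p, 0.0, r, -q],
        [q, -r, 0.0, p],
        [r, q, -p, 0.0],
    ]


def quaternion_rate(quat, omega_b):
    """Time derivative of the quaternion: 0.5 * Omega(omega_b) * quat."""
    om = omega_matrix(*omega_b)
    return [0.5 * sum(m * x for m, x in zip(row, quat)) for row in om]


def propagate_quaternion(quat, omega_b, dt):
    """One explicit-Euler step followed by re-normalisation."""
    rate = quaternion_rate(quat, omega_b)
    new = [x + dt * dx for x, dx in zip(quat, rate)]
    norm = math.sqrt(sum(x * x for x in new))
    return [x / norm for x in new]
```

Integrating a constant yaw rate of π/2 rad/s for one second from the identity quaternion should give approximately (cos 45°, 0, 0, sin 45°), i.e. a 90° heading change.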

1. https://www.vision.caltech.edu/bouguetj/calib_doc/


4. Navigation sensors

This section summarizes navigation sensors available onboard an aircraft, and their measurement

models.

a. AHRS (IMU + Magnetometer)

An AHRS (Attitude and Heading Reference System) combines 3-axis gyroscopes, accelerometers and magnetometers to provide attitude information. It outputs measurements of the non-gravitational acceleration, angular velocity and attitude of the sensor at high frequency. The IMU accelerometer and gyroscope measurements include a bias. The AHRS uses the earth magnetic field and the gravity vector to compensate for the angular velocity bias and to estimate the attitude.

We suppose that the AHRS sensor is fixed at the aircraft center-of-mass with its three axes aligned with the aircraft body frame axes. The AHRS measurements are then modelled as follows.

(11)

(12)

(13)

where b denotes the measurement bias and ν the measurement noise, modelled as zero-mean Gaussian noise. g = [0 0 g] is the earth gravity vector defined in the NED frame. As we consider near-ground operation, the normal gravity magnitude g at latitude ϕ can be approximated by its value on the earth ellipsoid surface, where e is the Earth eccentricity, and ge = 9.7803253359 m/s² and gp = 9.8321849378 m/s² are the normal gravity at the equator and at the pole, respectively [WGS-1984]. For further simplicity, we can even ignore the variation of gravity with latitude and approximate it by the constant g = g(ϕ0) at the origin of the local reference frame.
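The normal gravity on the ellipsoid surface can be evaluated with the closed-form Somigliana formula, which is the usual WGS84 expression in terms of ge, gp and e. The sketch below assumes that this is the formula intended in the text; the function name is hypothetical.

```python
import math

ECC = 0.08181919            # WGS84 first eccentricity [-]
G_EQUATOR = 9.7803253359    # normal gravity at the equator [m/s^2]
G_POLE = 9.8321849378       # normal gravity at the pole [m/s^2]


def normal_gravity(lat):
    """Normal gravity on the ellipsoid at geodetic latitude lat [rad]."""
    e2 = ECC ** 2
    b_over_a = math.sqrt(1.0 - e2)                  # semi-minor / semi-major axis
    k = b_over_a * G_POLE / G_EQUATOR - 1.0         # Somigliana constant
    s2 = math.sin(lat) ** 2
    return G_EQUATOR * (1.0 + k * s2) / math.sqrt(1.0 - e2 * s2)
```

The function returns ge at the equator and gp at the pole by construction, and roughly 9.8062 m/s² at 45° latitude. The total variation over all latitudes is about 0.05 m/s², which is why a constant g(ϕ0) is an acceptable simplification for a local approach scenario.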

b. GNSS/SBAS

GNSS (Global Navigation Satellite System) provides pseudo-range measurements from visible satellites to a receiver. The pseudo-range is obtained by multiplying the signal travel time from the satellite to the receiver by the speed of light c. As the satellite clock and the receiver clock are not synchronized, the pseudo-range measurement includes a bias due to their offset. The pseudo-range measurement of the i-th visible satellite is then modelled by

(14)

where pECEFsati and pECEFrec are the positions of the i-th satellite and of the receiver in FECEF, and τi and τ are the clock biases of the i-th satellite and of the receiver, respectively. The receiver position in FECEF can be obtained from the aircraft position pECEF and the fixed receiver position in FB. Here we use an estimate of the satellite clock bias, and its estimation error is included in the noise (hence the hat on τi). νρi is a zero-mean Gaussian measurement noise which includes errors from different sources such as the satellite clock and ephemeris errors, compensation errors in ionospheric and tropospheric signal delays, multipath effects, etc. [Faurie-2011]. The standard deviation of this measurement noise, denoted by σUERE (UERE stands for User Equivalent Range Error), is calculated and provided by the receiver. The receiver clock bias can be modelled as a second-order random process, such as


(15)

SBAS (Satellite-Based Augmentation System) is a GNSS augmentation system based on geostationary satellites which broadcast correction information on the satellite clock and ephemeris, and on the ionospheric delay. This correction information is collected by a network of ground stations receiving and monitoring signals from GNSS satellites. The SBAS correction significantly reduces the errors in the pseudo-range measurement (i.e. σUERE).
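The pseudo-range measurement model of this subsection can be sketched as the geometric range plus the clock terms scaled by the speed of light. The sign convention of the clock biases (receiver bias added, estimated satellite bias subtracted) is a common choice and is an assumption here, as are the function and parameter names.

```python
import math

SPEED_OF_LIGHT = 299792458.0  # [m/s]


def pseudorange(p_sat, p_rec, tau_rec, tau_sat_hat=0.0, noise=0.0):
    """Modelled pseudo-range between one satellite and the receiver [m].

    p_sat, p_rec : ECEF positions of the satellite and the receiver [m]
    tau_rec      : receiver clock bias [s]
    tau_sat_hat  : estimated satellite clock bias [s]
    noise        : one sample of the measurement noise [m]
    """
    geometric_range = math.dist(p_sat, p_rec)
    return geometric_range + SPEED_OF_LIGHT * (tau_rec - tau_sat_hat) + noise
```

A receiver clock bias of one microsecond shifts every pseudo-range by about 300 m, which shows why the receiver clock bias has to be estimated together with the position.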

c. Baro-altimeter

A barometric altimeter measures air pressure to obtain altitude information. The relation between the air pressure p_air and the MSL altitude h is given by the standard-atmosphere barometric formula, where g is the gravity acceleration, R = 287.058 J·kg−1·K−1 is the specific gas constant of air, L = 0.0065 K/m is the rate of decrease of the atmospheric temperature with height, and T0 = 15°C = 288.15 K is the standard sea-level temperature [icao-1993]. This gives a = 2.2557x10−5 m−1 and b = 5.2557. P0 is the pressure adjusted to the standard atmosphere at sea level, and this information is given as the QNH pressure. Using it, the barometer pressure measurement can be converted to an altitude measurement, which is modelled as

(16)

with a non-zero Gaussian measurement noise.
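The pressure to altitude conversion can be sketched as follows, assuming the usual standard-atmosphere form p = P0 (1 − a h)^b with a = L/T0 and b = g/(R L). This form reproduces the digits of a and b quoted in the text, but it is still an assumption about the original equation. The constant and function names are hypothetical, and the standard value 9.80665 m/s² is used for g.

```python
G0 = 9.80665       # standard gravity acceleration [m/s^2] (assumed value)
R_AIR = 287.058    # specific gas constant of air [J/(kg K)]
LAPSE = 0.0065     # temperature lapse rate [K/m]
T0 = 288.15        # standard sea-level temperature [K]

A = LAPSE / T0               # about 2.2558e-5 [1/m]
B = G0 / (R_AIR * LAPSE)     # about 5.2558 [-]


def altitude_to_pressure(h, qnh):
    """Air pressure at MSL altitude h [m] for sea-level pressure qnh."""
    return qnh * (1.0 - A * h) ** B


def pressure_to_altitude(p_air, qnh):
    """MSL altitude [m] from measured pressure and QNH (same pressure unit)."""
    return (1.0 - (p_air / qnh) ** (1.0 / B)) / A
```

With a QNH of 1013.25 hPa, an altitude of 1000 m corresponds to a pressure of roughly 898.7 hPa, and `pressure_to_altitude` inverts `altitude_to_pressure` exactly up to rounding.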

d. Inclinometer

Two inclinometers are used to measure roll (φ) and pitch (θ) angles of the aircraft.

e. Vision systems

In the VISION project, two types of vision systems are proposed in order to increase the accuracy and integrity of the aircraft navigation relative to the runway in case of sensor failure. A detailed description of the preliminary designs of these two vision systems is given in D2.1 “Preliminary description of vision sensor for navigation and guidance recovery scenario”. Vision system 1 provides the relative position and orientation of the cameras with respect to the runway, while Vision system 2 also provides the relative motion (linear and angular velocity).

Relative position and orientation

By using stereo vision, Vision systems 1 and 2 will both provide the relative position and orientation of the camera with respect to the runway. In other words, they find the transformation between the camera frame FC and the runway frame FRWY, which is represented by a rotation matrix RRWY/C and the threshold position in the camera frame pCthd.

Vision systems 1 and 2 will be designed to output the 3D relative position Δp of the camera to the threshold point, expressed in the runway frame FRWY, and the relative orientation angles (Δφ, Δθ, Δψ) of the camera (FC) to the runway (FRWY), which give the rotation matrix RRWY/C.


The error model of Dp is obtained from the error model of pCthd, which is in turn obtained from the pixel-coordinate measurement pi and the scaling factor measurement αC in equation (9). Assuming an unbiased Gaussian error model for pi and αC, the inverse transformation of (9) gives the error model of Dp. The error of the relative attitude (Dφ, Dθ, Dψ) can be modelled as zero-mean Gaussian with varying standard deviations. The details will be provided in the deliverable D4.2 “Preliminary description of vision-based system for navigation and guidance recovery scenario” to be submitted in M11.

These measurements can be expressed as a function of the aircraft pose (pECEF, qB), the known camera pose parameters (pBc, RCB) in the aircraft body frame, and the runway pose parameters (pECEFthd, θRWY, ψRWY) as follows.

Relative motion (linear and angular velocity)

In addition to the relative pose (position and orientation) information, Vision system 2 also provides the relative motion information, i.e., the linear and angular velocity of the camera with respect to the runway, expressed in the camera frame FC. This can be done either by differencing the camera pose estimates of two successive images or by calculating the velocities directly with a form of visual odometry (estimation of the camera displacement).

Or the following.

Based on simulation results, the output error can be modelled with an experimentally determined set of Gaussian distributions, whose standard deviation is a function of Dp. Again, the details will be provided in the deliverable D4.2 “Preliminary description of vision-based system for navigation and guidance recovery scenario” to be submitted in M11.

f. ILS

ILS is composed of the lateral localizer (LOC) and the vertical glide slope (GS), which provide final approach guidance information, that is, the deviations of the aircraft flight path from the desired glide course (normally a 3° glide path). Each of the LOC and GS antennas emits two lobes of signals modulated at 90 Hz and 150 Hz, centered on the desired glide path in the horizontal and vertical plane respectively (Figure 8). The deviations from the desired glide path can be measured by taking the difference in amplitude of the two signals, called DDM (Difference in Depth of Modulation).

DDM = DM90 − DM150

where DM is the percentage modulation depth of the 90 Hz / 150 Hz signals. DDM becomes zero when the aircraft is on the desired glide course.

Figure 8 ILS LOC and GS signal emissions

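DDM is linear with respect to the deviation angle (denoted ∆θLOC and ∆θGS in Figure 8) from the front course line (DDM = 0):

$\mathrm{DDM}_{LOC} = K_{LOC}\,\Delta\theta_{LOC}, \qquad \mathrm{DDM}_{GS} = K_{GS}\,\Delta\theta_{GS}$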

where KLOC and KGS are called the angular displacement sensitivities. For the localizer, the nominal displacement sensitivity is defined in terms of distance rather than angle: it is 0.00145 DDM/m at the ILS reference datum (= runway threshold) within the half course sector. For the glide slope, KGS = 0.0875 is defined [icao-2006].
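The DDM relations above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the modulation depths are assumed to be given as fractions (0.20 for 20 %), the sign convention (which side of the course gives a positive DDM) is left open, and the linear model DDM = K × deviation is assumed to hold over the whole range considered.

```python
K_LOC_PER_M = 0.00145  # nominal localizer displacement sensitivity [DDM/m], from the text


def ddm(dm90, dm150):
    """Difference in depth of modulation, DDM = DM90 - DM150 (depths as fractions)."""
    return dm90 - dm150


def deviation_from_ddm(ddm_value, sensitivity):
    """Invert the assumed linear model DDM = K * deviation for a caller-supplied K."""
    if sensitivity <= 0.0:
        raise ValueError("sensitivity must be positive")
    return ddm_value / sensitivity


def localizer_offset_m(ddm_value):
    """Lateral offset at the ILS reference datum, using the nominal 0.00145 DDM/m."""
    return deviation_from_ddm(ddm_value, K_LOC_PER_M)


if __name__ == "__main__":
    measured = ddm(0.2145, 0.2000)   # illustrative modulation depths
    print(localizer_offset_m(measured))
```

The sensitivity is passed explicitly to `deviation_from_ddm` so that the same function can serve the glide slope once the unit of KGS is fixed.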

5. Navigation performance requirement and Integrity monitoring

This section summarizes the current specifications, defined by ICAO, for using GNSS and ILS in the aircraft final approach procedure.

a. Navigation performance criteria

GNSS

According to ICAO’s GNSS manual [icao-2005], the aircraft navigation system should be evaluated

against the following four criteria:

• Accuracy: The difference between the estimated and actual aircraft position.

• Integrity: The ability of the navigation system to alert the user within the prescribed time

period (time-to-alert), when it should not be used for the intended flight phase.

• Continuity: The capability of the navigation system to perform its function without

interruptions during the intended flight operation.

• Availability: The portion of time during which the navigation system is delivering the

required accuracy, integrity and continuity.

GNSS alone cannot meet the stringent aviation requirements on these four criteria; therefore augmentation systems such as SBAS and ABAS, which use other information sources, have been proposed.

The navigation performance required for the GNSS-based approach procedure is given in [icao-2006]

and summarized in the table below (Table 1).

Table 1 Navigation performance requirement for GNSS-based approach [icao-2006]

| GNSS | Accuracy: horizontal (95%) | Accuracy: vertical (95%) | Integrity: integrity risk | Integrity: time-to-alert | Integrity: HAL | Integrity: VAL | Continuity: continuity risk | Availability |
|---|---|---|---|---|---|---|---|---|
| NPA | 220 m (720 ft) | N/A | 1×10^-7 /h | 10 s | 556 m (0.3 NM) | N/A | 1×10^-4 to 1×10^-8 /h | 0.99 to 0.99999 |
| APV I | 16 m (52 ft) | 20 m (66 ft) | 2×10^-7 /approach | 10 s | 40 m (130 ft) | 50 m (164 ft) | 8×10^-6 /15 s | 0.99 to 0.99999 |
| APV II | 16 m (52 ft) | 8 m (26 ft) | 2×10^-7 /approach | 6 s | 40 m (130 ft) | 20 m (66 ft) | 8×10^-6 /15 s | 0.99 to 0.99999 |
| CAT I | 16 m (52 ft) | 6 to 4 m (20-13 ft) | 2×10^-7 /approach | 6 s | 40 m (130 ft) | 35 to 10 m (115-33 ft) | 8×10^-6 /15 s | 0.99 to 0.99999 |

(NPA: Non-Precision Approach, APV: Approach with Vertical Guidance, HAL/VAL: Horizontal/Vertical Alert Limit)

ILS

The navigation performance required of ILS is also given in [icao-2006] and summarized in Table 2.

Table 2 Navigation performance requirement for ILS-based approach [icao-2006]

| ILS LOC | Accuracy: DDM (95%) | Accuracy: modulation tolerance | Integrity: alert limit of course alignment error | Integrity: alert limit of displacement sensitivity | Continuity: max duration of signal absence | Continuity: continuity risk | Availability: false signal risk |
|---|---|---|---|---|---|---|---|
| CAT III | 0.031 to 0.005 | ±1.0% | 3 m (10 ft) | ±10% | 2 s | 2×10^-6 /30 s | 0.5×10^-9 /approach |
| CAT II | 0.031 to 0.005 | ±1.5% | 7.5 m (25 ft) | ±17% | 5 s | 2×10^-6 /15 s | 0.5×10^-9 /approach |
| CAT I | 0.031 to 0.015 | ±2.5% | 10.5 m (35 ft), 0.015 DDM | ±17% | 10 s | 4×10^-6 /15 s | 1.0×10^-7 /approach |

| ILS GS | Accuracy: DDM (95%) | Accuracy: modulation tolerance | Accuracy: course alignment | Integrity: alert limit of course alignment error | Integrity: alert limit of displacement sensitivity error | Integrity: alert limit of DDM = 0.0875 displacement | Continuity: max duration of signal absence | Continuity: continuity risk | Availability: false signal risk |
|---|---|---|---|---|---|---|---|---|---|
| CAT III | 0.035 to 0.023 | ±1.0% | +0.04θ | -0.075θ, +0.10θ | ±25% | 0.7475θ | 2 s | 2×10^-6 /15 s | 0.5×10^-9 /approach |
| CAT II | 0.035 to 0.023 | ±1.5% | +0.075θ | -0.075θ, +0.10θ | ±25% | 0.7475θ | 2 s | 2×10^-6 /15 s | 0.5×10^-9 /approach |
| CAT I | 0.035 | ±2.5% | +0.075θ | -0.075θ, +0.10θ | ±25% | 0.7475θ | 6 s | 4×10^-6 /15 s | 1.0×10^-7 /approach |

b. Integrity monitoring

An integrity monitoring function is a must for the aircraft navigation system: by providing a warning, it prevents the pilot (or autopilot) from continuing to use erroneous information in guidance and control.

GNSS Integrity Monitoring

ABAS (Aircraft-Based Augmentation System) applies Receiver Autonomous Integrity Monitoring (RAIM) algorithms to redundant satellite information to perform fault detection, identification and exclusion [Brown-1992] [Lee-1996]. The RAIM algorithms are based on a statistical test on the residual between the measured pseudo-range and its predicted value obtained from the least-squares solution. RAIM requires measurements from at least five satellites for fault detection, and six satellites for fault exclusion. If an altitude input (e.g. baro-altimeter) is provided, these minimum numbers of satellites can be reduced by one.

• LS-RAIM

Let ρ = [ρ1 ρ2 … ρN]T be a GNSS measurement vector consisting of a set of pseudo-range

measurements of N visible satellites. Then, it can be written as a nonlinear function of the receiver

position pECEFrec and its clock bias τ as given in (14):

(17)

Let

be a predicted value of the receiver position and clock bias before taking the

measurements. Usually, it coincides with the estimate at the previous time step. Then the GNSS

measurement vector (17) can be linearized about this prediction as follows.

(18)

Then the linearized GNSS measurement equation is formulated as

(19)

A new estimate for the receiver position and clock bias can be obtained as a least-square solution.

(20)

The associated estimation error covariance will be $P_{GNSS} = G_\rho R_\rho G_\rho^T$, where $R_\rho$ is the covariance matrix of the measurement noise $\nu_\rho$.

The LS-RAIM (Least Square-error RAIM) algorithm uses a residual:

(21)

Let us consider the following two hypotheses.

• H0: No failure on any of the N visible satellites.

• H1: Failure on one of the N visible satellites, and no failure on the others.

Note that the probability of having simultaneous failures on multiple satellites is quite low and hence

it is usually neglected in the RAIM algorithm.

As a failure introduces a bias in the pseudo-range measurement on the i-th satellite, the residual (21)

becomes

(22)

The sum of the squares of the residual (error) is defined as $SSE = \Delta y_\rho^T R_\rho^{-1} \Delta y_\rho$, and the statistical test is applied to the following value.

(23)

Under the hypothesis H0, SSE follows a Chi-squared distribution with N-4 degrees of freedom, $SSE \sim \chi^2_{N-4}$. For fault detection, the decision test value Td is derived from the maximum allowable false detection probability pfd such that the following equation is satisfied (see also Figure 9).

(24)

The failure case H1 is declared when T > Td; otherwise the no-failure case H0 is assumed.
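The derivation of the decision value from pfd can be sketched as follows. This is a minimal illustration, not the algorithm of this document: it assumes that the test is applied directly to the SSE value, so that the threshold is the (1 − pfd) quantile of the central Chi-squared distribution with N − 4 degrees of freedom, and it uses only the Python standard library (a series expansion of the incomplete gamma function plus bisection). All function names are hypothetical.

```python
import math


def chi2_cdf(x, dof):
    """CDF of the central Chi-squared distribution, computed with the series
    of the regularized lower incomplete gamma function P(dof/2, x/2)."""
    if x <= 0.0:
        return 0.0
    a, z = 0.5 * dof, 0.5 * x
    term = 1.0 / a
    total = term
    n = 0
    while abs(term) > 1e-16 * abs(total):
        n += 1
        term *= z / (a + n)
        total += term
    return total * math.exp(-z + a * math.log(z) - math.lgamma(a))


def detection_threshold(pfd, n_sat):
    """Threshold Td such that P(SSE > Td | H0) = pfd, with N - 4 degrees of freedom."""
    dof = n_sat - 4
    if dof < 1 or not 0.0 < pfd < 1.0:
        raise ValueError("need at least 5 satellites and 0 < pfd < 1")
    lo, hi = 0.0, 1.0
    while 1.0 - chi2_cdf(hi, dof) > pfd:   # bracket the quantile
        hi *= 2.0
    for _ in range(100):                   # bisection on the tail probability
        mid = 0.5 * (lo + hi)
        if 1.0 - chi2_cdf(mid, dof) > pfd:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def fault_detected(sse, pfd, n_sat):
    """Declare H1 when the test value exceeds the threshold."""
    return sse > detection_threshold(pfd, n_sat)
```

If the test value T of the document is a normalized version of SSE, the threshold returned here has to be scaled in the same way before the comparison.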

Figure 9 Chi-squared distribution and Decision test value

• Protection levels

The protection levels are defined by the smallest position error that can be detected by the test T > Td while satisfying the missed detection requirement. Consider the case of a failure on the i-th satellite (but not on any other satellite). Then the measurement residual ∆yρ in (22) incorporates a bias b on its i-th element, and the SSE value now follows the non-central Chi-squared distribution with N-4 degrees of freedom, denoted as $\chi^2_{N-4}(\lambda)$, where λ is the noncentrality parameter (Figure 9). The minimum value of λ which satisfies the missed detection probability (pmd) can be identified by

(25)

for a given pmd and the decision value Td defined in (24). From the relation between the noncentrality parameter λ and the pseudo-range measurement bias b, the smallest detectable bias of the i-th satellite measurement ρi can be obtained by

(26)

where σρi is the standard deviation of the measurement noise νρi, and Dρii is the i-th diagonal

element of the matrix Dρ defined in (24). Finally, the position (as well as the receiver clock bias)

estimation bias induced by this pseudo-range bias is derived from the equation (20).

where Gρ(:,i) is the i-th column of the matrix Gρ defined in (20). From this, the horizontal and vertical

protection levels (HPL, VPL) are defined by

(27)

These correspond to the smallest detectable horizontal/vertical position error which satisfies the missed detection probability requirement. In other words, they are the largest horizontal/vertical position error biases that can occur without the failure being detected.
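The protection levels are typically used by comparing them with the alert limits of the intended operation. The sketch below assumes this usual convention (the navigation solution is declared usable only while HPL ≤ HAL and VPL ≤ VAL), which is not stated explicitly in this document; the alert limits are transcribed from Table 1, CAT I is left out because its VAL is given as a range, and the function name is hypothetical.

```python
# Alert limits in metres (HAL, VAL), transcribed from Table 1 [icao-2006].
# None means "not applicable".
ALERT_LIMITS_M = {
    "NPA": (556.0, None),
    "APV I": (40.0, 50.0),
    "APV II": (40.0, 20.0),
}


def navigation_available(operation, hpl_m, vpl_m=None):
    """Return True when the protection levels stay within the alert limits."""
    hal, val = ALERT_LIMITS_M[operation]
    if hpl_m > hal:
        return False
    if val is not None:
        if vpl_m is None:
            raise ValueError("a VPL is required for this operation")
        if vpl_m > val:
            return False
    return True


if __name__ == "__main__":
    for op in ALERT_LIMITS_M:
        print(op, navigation_available(op, hpl_m=35.0, vpl_m=45.0))
```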

• Fault exclusion

Once the failure is detected by means of T > Td, the next step is to identify the error source (satellite)

and exclude it. In order to do so, a bank of N estimators {Ei | i=1,2,…N}, each of which uses (N-1)

pseudo-range measurements excluding one from the i-th satellite, is used. The estimated receiver

position and clock bias as well as the test value Ti are calculated in the same manner as in (20) and

(23) for each estimator Ei with a different set of (N-1) pseudo-range measurements. Under the

assumption H1, only one estimator out of N does not use the erroneous measurement and will

provide a correct estimation result. If the failure occurs on the i-th satellite measurement, the

estimator Ei is such an estimator. We define the decision value for the data exclusion Te in the same

manner as in (24) but with the requirement of the maximum false decision of exclusion pfde instead

of pfd. Then, if only one estimator, say Ej, out of the N estimators {Ei | i=1,2,…N} gives T ≤ Te, the

failure can be identified on the j-th satellite and its pseudo-range measurement will be excluded.
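The exclusion rule described above can be sketched as a small decision function. It assumes that the test values Ti of the N subset estimators and the exclusion threshold Te have already been computed; the function name and the 0-based indexing are choices made for this illustration only.

```python
def identify_faulty_satellite(subset_test_values, te):
    """Return the index j of the satellite to exclude, or None.

    subset_test_values[i] is the test value T_i of the estimator E_i, which
    leaves out the pseudo-range of satellite i (0-based here). Exclusion is
    only decided when exactly one subset estimator passes the test T_i <= Te.
    """
    consistent = [i for i, t in enumerate(subset_test_values) if t <= te]
    if len(consistent) == 1:
        return consistent[0]
    return None


if __name__ == "__main__":
    # Illustrative values: only the subset without satellite 1 is consistent.
    print(identify_faulty_satellite([12.0, 0.8, 15.3, 9.9, 11.2, 14.0], te=3.0))
```

Returning None covers both ambiguous cases: no subset passes the test, or several subsets pass it, so that the faulty measurement cannot be isolated.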

• AAIM (Aircraft Autonomous Integrity Monitoring)

Unlike RAIM which is self-contained on the GNSS receiver, AAIM algorithms use other sensors (INS,

baro-altimeter, etc.) to improve the FDE (Fault Detection and Exclusion) capability. In AAIM, the

GNSS pseudo-range measurements ρ = [ρ1 ρ2 … ρN]T are fused with the measurements from other

available sensors to estimate the receiver state (position, velocity, etc.) and the sensor bias including

the GNSS receiver clock bias: x = [pT … ]T. Then the MSS (Multiple Solution Separation) method

[Brenner-1998] is applied directly in the position (but not the pseudo-range) domain in order to

perform the FDE. Usually, the AAIM algorithm is designed to detect a failure on one of the N GNSS measurements, assuming that the other sensors used are failure-free.

Like in RAIM, consider the full-set estimator E0 which uses all the N pseudo-range measurements,

and a bank of N subset estimators {Ei | i=1,2,…N}, each of which uses (N-1) pseudo-range

measurements excluding one from the i-th satellite. Then the separation ∆xi is defined for each

subset estimator Ei as a difference in the estimated states between this estimator Ei and the full-set

one E0. The separation ∆xi and its covariance matrix ∆Pi are given by

(28)

(29)

where Pi is the error covariance of the estimation state of Ei , and hρiT is the Jacobian matrix (a row

vector) of the measurement ρi with respect to the estimation state x. The horizontal and vertical

positions and their associated covariance matrices are given by

(30)

Eigenvalue decomposition can be applied to the horizontal separation covariance matrix ∆PHi, and the separation ∆pHi can be projected onto the eigenvectors:

(31)

Then the test value of the horizontal separations is defined and is often approximated as follows.

(32)

In case of no failure, these test values follow a zero-mean Gaussian distribution with a standard deviation of ∆σΗi1. In the presence of a failure on one of the pseudo-range measurements used in Ei, they follow a non-zero-mean Gaussian distribution. Based on the maximum allowable false detection probability criterion pfd, the decision value TdHi is calculated as follows.

(33)

where erfc() is the complementary error function. The failure is detected when THi > TdHi. The same statistical test is also performed on the vertical separation, using TVi = |∆pVi| and ∆σVi = √∆PVi. These two tests on the horizontal and vertical separations are performed independently for each of the N subset estimators. That is why the maximum allowable false detection probability pfd is divided by 2N, the total number of statistical tests.
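The allocation of pfd over the 2N tests can be illustrated with the Python standard library. The sketch assumes that each threshold is chosen so that a zero-mean Gaussian test value with standard deviation σ exceeds it in magnitude with probability pfd/(2N), i.e. erfc(Td / (σ√2)) = pfd/(2N); this is an assumption made for the illustration, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def per_test_probability(pfd, n_subsets):
    """False detection budget of one test: pfd shared over 2N tests."""
    return pfd / (2 * n_subsets)


def separation_threshold(sigma, pfd, n_subsets):
    """Threshold Td with P(|x| > Td) = pfd / (2N) for x ~ N(0, sigma^2)."""
    p_test = per_test_probability(pfd, n_subsets)
    # Two-sided test: each tail carries p_test / 2.
    return -sigma * NormalDist().inv_cdf(0.5 * p_test)


def exceedance_probability(threshold, sigma):
    """P(|x| > threshold) for x ~ N(0, sigma^2), written with erfc."""
    return math.erfc(threshold / (sigma * math.sqrt(2.0)))


if __name__ == "__main__":
    td = separation_threshold(sigma=1.5, pfd=1e-5, n_subsets=8)
    print(td, exceedance_probability(td, 1.5))
```

`exceedance_probability` is only a consistency check: evaluated at the returned threshold it gives back the per-test budget.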

As in the RAIM algorithms, the horizontal and vertical protection levels (HPL and VPL) are defined based on the missed detection probability criterion (pmd). HPL is defined as the maximum position error possible without detecting any failure while satisfying this criterion:

(34)

By the triangle inequality, the HPL can be approximated by the following in a conservative manner.

(35)

Consider the case of failure of the j-th satellite, where the j-th subset estimator Ej functions correctly

and its estimation error follows a zero-mean Gaussian distribution with a known covariance matrix

PHi. Then, the HPL for this case of the j-th satellite failure, denoted as HPLj, is obtained by solving

(36)

Through an operation similar to (31), using eigenvalue decomposition,

(37)

where σΗi1 is the largest standard deviation of the covariance PHi. HPLj can be obtained for all

j=1,2,…N. Then the HPL is defined as its maximum value.

(38)

In the same way, the vertical protection level can be obtained as follows.

(39)

ILS Integrity Monitoring

Transmission of the ILS signals is continuously monitored, and an installation is automatically switched off (cessation of radiation, or removal of the navigation component from the carrier) when an anomaly is detected. An anomaly is declared when at least one of the alert limits listed in Table 2 is exceeded.

6. Preliminary design of vision-aided navigation system

This section describes the preliminary design of the vision-aided navigation systems for the SBAS or ILS failure scenarios defined in D2.1 and recalled in Section 1. Figure 10 illustrates an overview of the aircraft navigation system, which is composed of the estimator and the integrity monitoring system for fault detection and exclusion (FDE).

Figure 10 Overview of Aircraft Navigation System

a. Estimator design

In this preliminary design, we suppose that the aircraft attitude qB and angular velocity ωB are estimated separately from the position and linear velocity, by fusing AHRS and inclinometer measurements. This attitude estimator is assumed to give unbiased estimates of the attitude and angular velocity, as well as their error covariance, at the INS frequency.

The objective of the estimator described here is then to reconstruct the aircraft position and translational velocity from the available onboard sensors. As shown in Figure 10, the estimator is based on INS integration, whose drift is corrected by the other sensors (GNSS, vision, barometer) through linear/non-linear Kalman filtering techniques. The estimator runs at the INS (AHRS) frequency; after each INS integration, each newly arrived sensor measurement is checked for validity and, if valid, used for the estimation correction.

Estimation state vector

The estimation state vector is defined as follows.

(40)

where pNED and vNED are the aircraft position and linear velocity expressed in the local NED frame fixed at the known runway threshold position pECEFthd, (τ, vτ) are the GNSS receiver clock bias and drift, and ba is the accelerometer bias.

INS integration

By taking the time derivative of the estimation state vector (40), the process model is given by

(41)

By discretizing this process model, the INS integration is given by

(42)

The error covariance matrix of this predicted state becomes

(43)

where the last term is due to the attitude estimation error and
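To make the INS integration step more concrete, the following sketch shows one prediction step for the state (pNED, vNED, τ, vτ, ba). It is a generic strapdown model written under explicit assumptions, not the process model of this document: the accelerometer is assumed to measure specific force in the body frame, gravity is taken as constant in the NED frame, the clock drift and the accelerometer bias are assumed constant over the step, and a simple Euler discretization is used. The function and variable names are hypothetical.

```python
G_NED = (0.0, 0.0, 9.80665)  # gravity in the NED frame [m/s^2], assumed constant


def ins_predict(p, v, tau, v_tau, b_a, f_meas, r_nb, dt):
    """One Euler prediction step of the assumed process model.

    p, v   : position and velocity in the local NED frame
    tau    : receiver clock bias, v_tau: its drift
    b_a    : accelerometer bias (body frame)
    f_meas : measured specific force (body frame)
    r_nb   : 3x3 rotation matrix from body to NED (from the attitude estimator)
    """
    f = [f_meas[i] - b_a[i] for i in range(3)]               # bias-corrected
    a = [sum(r_nb[i][j] * f[j] for j in range(3)) + G_NED[i]  # NED acceleration
         for i in range(3)]
    p_new = [p[i] + v[i] * dt + 0.5 * a[i] * dt * dt for i in range(3)]
    v_new = [v[i] + a[i] * dt for i in range(3)]
    tau_new = tau + v_tau * dt                                # clock bias drifts
    return p_new, v_new, tau_new, v_tau, list(b_a)


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # Level, unaccelerated flight: the accelerometer reads -g on the down axis.
    print(ins_predict([0.0, 0.0, -100.0], [10.0, 0.0, 0.0], 1e-3, 1e-6,
                      [0.0, 0.0, 0.0], [0.0, 0.0, -9.80665], identity, 0.1))
```

The covariance propagation, including the contribution of the attitude estimation error, is deliberately left out of this sketch.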

Measurement update

At each time step, newly arrived measurements are used to correct the predicted state (42). The sensor set includes GNSS, baro-altimeter, vision systems and ILS. The integrity monitoring algorithm, presented in the next section, is applied first so that erroneous information sources are removed. Then a Kalman filter-like update (EKF, UKF, etc.) is applied using the sensor models of only the selected sensor measurements (those not excluded by the FDE function). The estimation errors on the attitude and the angular velocity are also considered when propagating the error covariance.

The GNSS pseudo-range measurements (instead of the resulting receiver position and clock bias) are used directly in the estimation filter, which results in a tight fusion of GNSS with INS and the other sensors. For the purpose of integrity monitoring, the sensor measurement sets should be independent of each other, and so it is preferable to use raw sensor data (especially for the vision system).

b. Fault detection and exclusion (FDE)

As with GNSS, it is very important for the aircraft navigation system to provide an integrity monitoring function. It is proposed here to apply a process similar to the AAIM algorithms (presented in Section 5.b) to perform FDE on the fused data from the different sensors. Note that, in this work, the INS (AHRS) is assumed to always function correctly and never fail.

GNSS-RAIM

As stated before, the GNSS sensor normally provides a set of redundant measurements, while the other sensors do not. Therefore, for GNSS, the RAIM algorithm introduced in Section 5.b can be applied before the sensor fusion. In the RAIM, the initial receiver position can be obtained from the state predicted by the INS integration (42). An erroneous pseudo-range measurement is thus supposed to have been removed before the measurement update.

FDE for sensor fusion

Consider that measurements from m different sensors have newly arrived at time step k+1. Two approaches can be proposed for the FDE. The first is to apply a statistical test on the innovation term of each of the m sensors independently. It is a test similar to the one used in RAIM, equation (23), and a decision threshold is used to detect the sensor failure. The second approach is to apply a process similar to the AAIM algorithm. In this approach, a bank of m subset estimation filters {Ei | i=1,2,…m} is used, each of which uses the measurements from the (m-1) sensors other than the i-th sensor. The updated estimation state of each Ei is compared to that of the full-set estimator E0, which uses the available measurements from all m sensors. Then the MSS (Multiple Solution Separation) method can be applied in the same way as in the AAIM algorithm (Section 5.b) to detect the sensor failure. The horizontal and vertical protection levels can also be derived in the same way as in (38, 39), based on the estimation error covariance matrix.
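The first approach (a test on the innovation of each sensor) can be sketched for a scalar measurement such as the baro-altitude. The sketch assumes that, without failure, the innovation is zero-mean Gaussian with a known variance S, so that the normalized innovation squared follows a Chi-squared distribution with one degree of freedom; the function names and the scalar restriction are choices made for this illustration only.

```python
from statistics import NormalDist


def innovation_threshold(pfd):
    """Threshold on the normalized innovation squared (1 degree of freedom).

    For a scalar Gaussian innovation, P(nu^2 / S > z^2) = pfd when z is the
    (1 - pfd/2) quantile of the standard normal distribution.
    """
    z = -NormalDist().inv_cdf(0.5 * pfd)
    return z * z


def innovation_test(z_meas, z_pred, s_var, pfd):
    """Return True when the sensor measurement is flagged as faulty."""
    nu = z_meas - z_pred          # innovation
    nis = nu * nu / s_var         # normalized innovation squared
    return nis > innovation_threshold(pfd)


if __name__ == "__main__":
    # Illustrative values: predicted altitude 120 m, innovation variance 4 m^2.
    print(innovation_test(121.0, 120.0, 4.0, 1e-3))
    print(innovation_test(135.0, 120.0, 4.0, 1e-3))
```

A vector-valued sensor would need the full innovation covariance matrix and a Chi-squared threshold with as many degrees of freedom as measurement components.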

In the preliminary design, the failure is assumed to occur at the current time step and not before. An extension assuming that the failure occurred at some time in the past could be considered later in the project, by keeping the bank of estimation filters from previous time steps for the FDE.

7. Validation plan

This section overviews the validation plan of the vision-based navigation systems for the sensor

failure scenarios.

a. Validation of the image processing algorithms

Validation will be made in three phases:

• Own simple environment: Synthetic projections of the runway from a known camera position and orientation. The input error is caused only by the numeric representation (no pixelization), and the image processing algorithm itself contributes zero error (the features are known). Goal: Validation of the position and orientation extraction from known image features. Status: Goal already reached.

• FlightGear-based environment: Realistic computer graphics provide the input for image processing. The image is pixelized and includes realistic distance-based blur. Goal: Testing and preliminary validation of the image processing algorithms. The achieved accuracy is compared to the navigation performance requirements. Status: In progress.

• Offline/Online image-based navigation on real data: All necessary data is recorded during flight tests for offline repeatability. Goal 1: Best possible accuracy without real-time constraints. Final Goal: Real-time online operation in closed-loop flight test. Status: Preparation for the first capture-only flight experiment.

b. Validation of the estimation algorithms

Validation will be made in three phases:

• Numerical simulation: The estimator designs (including the FDE functions) will firstly be

tested in numerical simulation environment with simulated sensors. ENRI provides the GNSS

and ILS sensor models (nominal and failure), which will be integrated in Matlab simulations

for the estimator validation. The image processor outputs will be also simulated based on the

models given in Section 4.e.

• Offline test on real flight (ground) test data: All necessary data (image + sensor data) is recorded during flight tests for offline repeatability. The GPS-RTK/AHRS navigation filter on the flight avionics provides the reference trajectory (ground truth). The developed estimation algorithms will be tested on these real data, with real image processing results obtained offline/online.

• Online test on the platform: After the above simulation validations, the estimation algorithms will be implemented in the onboard software architecture along with the image processor, and will be tested online onboard the K-50 platform during flight. They will be tested first in an open-loop manner (i.e., the estimation results will not be fed back into the flight guidance and control module), then in a closed-loop manner.

c. Plan for 1st flight test

The primary goal of the first flight test is to ensure vision sensor operation on the K-50 platform and to record video data synchronized with the other sensor data.

Operations on Ground before first flight:

• Installation of vision sensors on the K-50 airframe: Mounting the camera modules, mounting the image processing payload computers, power supply, cabling between the camera modules and the image processing computers, cabling between the ONERA payload computer and the image processing computers.

• Testing operation of modules: Testing the operation of the vision sensor after mounting, while the K-50’s engine is running.

• Calibration of vision sensor: Measure the position and orientation of cameras after

mounting. Tune anti-vibration equipment.

• Testing communication of vision sensor: RS232 communication test, UDP test.

Flight experiments:

• Recording: Record video data with other sensor data during final approach and landing. Different approach paths (and orientations) are needed for better evaluation of the image processing algorithms.

• Reliability test: The system has to operate during the experiments.

d. Preliminary plan for further flight tests

• Pseudo closed-loop test: The vision sensor calculates and sends its output to the ONERA payload computer, but it is not used by the control loop.

• Closed-loop test: The vision sensor’s output is integrated into the control loop.

8. Others

Different modules use different frames (local NED/ENU, body, runway fixed, camera, etc.) introduced

in Section 2. These differences require special attention during the project.

Preliminary design of vision-based guidance

1. Trajectory optimization for small deviation from the nominal glide path

a. Trajectory optimization

UTOKYO works on online trajectory optimization. The scenario is defined as follows: when an aircraft is approaching the runway, a flying obstacle is detected on the glide path, or a moving obstacle is detected on the runway. The optimal flight path that avoids the obstacle is computed based on the detected obstacle’s position. Research on potential trajectory optimization algorithms is ongoing.

The optimal trajectory should avoid the obstacle with sufficient reliability based on the image sensor information and the onboard information (aircraft position and attitude). The image sensor can detect the relative distance to the obstacle, but it is difficult to determine the exact position of the obstacle due to the presence of noise in the data. Besides, the possible detection range and the camera angle are limited, and the optimal trajectory should satisfy the constraints associated with the aircraft flight envelope and ATC. Therefore, the position of the obstacle should be estimated first, and then the optimal trajectory can be generated to avoid the obstacle. The constraints mentioned above are considered in the optimization, and the objective function will consist of the distance from the obstacle, the aircraft acceleration, etc.

b. Decision making

In the optimization, a fundamental decision must be made: whether the landing approach is continued or a go-around is carried out. Decision making algorithms are investigated for the different situations. Present operations and aeronautical laws are checked at the same time.

c. Validation plan

Since it is difficult to conduct a flight experiment of the whole scenario for safety reasons, it will be validated in simulation with the required data obtained offline. The offline data will be obtained in advance with two drones. One drone (drone A) will act as an approaching aircraft with an image sensor and GPS/INS installed, while another drone (drone B) will act as a flying obstacle with GPS. Drone A will fly straight on a nominal path, and drone B will cross the nominal path at a certain time. Drone A will detect drone B with its image sensor, and the relative distance to drone B will be obtained. Since drone A will have a GPS/INS installed, its own position and attitude can also be obtained. Drone B has a GPS, so the accuracy of the measured relative distance between drone A and drone B can also be investigated. This data acquisition experiment is expected to be conducted several times to cover various cases. Finally, using the obtained data (image sensor data and aircraft position/attitude data), the whole scenario including trajectory optimization will be numerically simulated. In the numerical simulations, a fixed-wing UAV model will be considered.

2. Image-based obstacle avoidance approach

Our approach is based on recent image-based obstacle avoidance results achieved at SZTAKI [Bauer-2016, Hiba-2016, Zsedrovits-2016]. We have reached proof-of-concept level for real-time on-board avoidance in the case of sky-background detections. There are many interesting contributions on this topic [Fasano-2008, Fasano-2015, Ramasamy-2014]; most of them utilize RADAR/LIDAR and IR sensors beyond visible-light video. IR is very useful in bad weather conditions and in the detection of jet engines. Our results with visible-light images can be applied to small and micro UAVs and could enhance the reliability of sensor fusion approaches that lead to obstacle avoidance products for mid- and large-sized UAVs and aircraft.

a. Obstacle detection and possible avoidance maneuvers

SZTAKI works on image-based flying obstacle avoidance. The task is to detect and track flying objects of unknown size in the camera image, and to alert the piloting system if an object is moving towards our aircraft. The system assumes straight flight paths and chooses the most appropriate of the predefined avoidance maneuvers. RICOH also works on obstacle detection on the runway, with the help of a stereo vision sensor. In the presence of an obstacle on the runway, the aircraft starts a missed approach procedure (go-around).

b. Detection and tracking

Image-based detection of obstacles or intruder aircraft is often divided into two scenarios: detection against a sky background and detection against a non-sky background. We will use different methods for the two scenarios. The basic steps of detection are sky-ground separation, blob detection, and filtering of false objects. After filtering, the remaining blobs are added to the tracker. We prefer tracking false objects to losing a real object. False objects can be eliminated based on multiple observations; however, they can corrupt the tracks of real objects.
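The three detection steps can be illustrated with a minimal sketch. It is not the SZTAKI implementation. It assumes a grayscale image given as a list of rows, a horizon row that is already known (the sky-ground separation result), and an intruder that appears darker than the sky. The function name `detect_blobs` and the parameters `dark_thresh`, `min_area` and `max_area` are hypothetical.

```python
from collections import deque


def detect_blobs(gray, horizon_row, dark_thresh=80, min_area=2, max_area=200):
    """Return candidate blobs (centroid and size) found in the sky region.

    gray        : list of rows with intensities in 0..255
    horizon_row : rows above this index are treated as sky (assumed known)
    """
    # Step 1: sky-ground separation, reduced here to a known horizon row.
    height = min(horizon_row, len(gray))
    width = len(gray[0]) if gray else 0
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for r0 in range(height):
        for c0 in range(width):
            if seen[r0][c0] or gray[r0][c0] >= dark_thresh:
                continue
            # Step 2: blob detection by flood fill over 4-connected dark pixels.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    if (0 <= nr < height and 0 <= nc < width
                            and not seen[nr][nc]
                            and gray[nr][nc] < dark_thresh):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Step 3: filter false objects by area (a deliberately loose filter).
            if min_area <= len(pixels) <= max_area:
                rows = [p[0] for p in pixels]
                cols = [p[1] for p in pixels]
                blobs.append({
                    "x": sum(cols) / len(cols),        # centroid column
                    "y": sum(rows) / len(rows),        # centroid row
                    "Sx": max(cols) - min(cols) + 1,   # width in pixels
                    "Sy": max(rows) - min(rows) + 1,   # height in pixels
                })
    return blobs


# Synthetic 6x8 image: bright sky, one dark 2x3 object, one noise pixel,
# and dark ground below the horizon (rows 4 and 5).
image = [[200] * 8 for _ in range(6)]
for r in (1, 2):
    for c in (2, 3, 4):
        image[r][c] = 30
image[0][7] = 30
image[4] = [40] * 8
image[5] = [40] * 8

blobs = detect_blobs(image, horizon_row=4)
print(blobs)
```

The area filter is kept loose on purpose, in line with the preference above for tracking a false object over losing a real one. Remaining false objects are left to the tracker to eliminate over multiple observations.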

c. Decision based on tracks

The tracks consist of centroid (x,y) and size (Sx,Sy) information about the intruder aircraft. Monocular vision-based techniques investigate the trends of the centroid and size. A trivial example is to initiate an avoidance maneuver if the size of the object on the image plane increases.
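The trivial size-based rule can be sketched as follows. This is only an illustration, assuming that a track provides time stamps in seconds and one size value in pixels per frame. The least-squares slope and the threshold `min_rate` are illustrative choices and are not taken from the cited work.

```python
def size_growth_rate(times, sizes):
    """Least-squares slope of the image size (pixels) over time (seconds)."""
    n = len(times)
    if n < 2 or n != len(sizes):
        raise ValueError("need at least two (time, size) samples of equal length")
    t_mean = sum(times) / n
    s_mean = sum(sizes) / n
    denom = sum((t - t_mean) ** 2 for t in times)
    if denom == 0:
        raise ValueError("time stamps must not all be identical")
    num = sum((t - t_mean) * (s - s_mean) for t, s in zip(times, sizes))
    return num / denom


def should_avoid_on_growth(times, sizes, min_rate=0.5):
    """Trigger when the fitted size grows faster than min_rate pixels/second."""
    return size_growth_rate(times, sizes) > min_rate


# A track whose size grows by one pixel per second, and a constant-size track.
growing = should_avoid_on_growth([0, 1, 2, 3], [4, 5, 6, 7])
steady = should_avoid_on_growth([0, 1, 2, 3], [5, 5, 5, 5])
print(growing, steady)
```

Fitting a slope over several frames, rather than comparing two consecutive sizes, makes the rule less sensitive to single-frame measurement noise.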

Our decision-making approach assumes that the intruder and our own aircraft follow straight flight paths with constant speed. We model the intruder as a disk with radius r in the horizontal plane. With these assumptions we can estimate the closest point of approach (CPA) as a multiple of 2r (the absolute size of the intruder is not known), and also the time to closest point of approach (TTCPA). Thresholds on CPA and TTCPA are then straightforward to set. For instance, we initiate an avoidance maneuver if the intruder will approach us closer than 10 times its size, and we want to make this decision 20 seconds before the CPA is reached. For details see [Bauer-2016].
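To make the two quantities concrete, the sketch below computes CPA and TTCPA from a known relative position and velocity in the horizontal plane, as would be available in a simulation, and then applies the example thresholds (10 times the intruder size, 20 seconds). It is not the monocular estimator of [Bauer-2016], which obtains the CPA relative to 2r from image measurements without knowing r. The function names and the radius value used here are assumptions for illustration.

```python
import math


def cpa_and_ttcpa(rel_pos, rel_vel):
    """CPA distance (m) and TTCPA (s) for constant-velocity straight paths.

    rel_pos, rel_vel: intruder position (m) and velocity (m/s) relative to
    the own aircraft, in the horizontal plane.
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        # No relative motion: the distance never changes.
        return math.hypot(px, py), 0.0
    # Time at which the relative distance is minimal, clamped to "now"
    # if the closest point has already been passed.
    ttcpa = max(0.0, -(px * vx + py * vy) / speed_sq)
    cpa = math.hypot(px + vx * ttcpa, py + vy * ttcpa)
    return cpa, ttcpa


def should_avoid(cpa_m, ttcpa_s, radius_m, cpa_limit=10.0, ttcpa_limit_s=20.0):
    """Apply the example thresholds: CPA below 10 x (2r) and TTCPA within 20 s."""
    relative_cpa = cpa_m / (2.0 * radius_m)  # CPA as a multiple of the size 2r
    return relative_cpa < cpa_limit and ttcpa_s <= ttcpa_limit_s


# Intruder 1000 m ahead, offset 50 m sideways, closing at 50 m/s, radius 5 m.
cpa, ttcpa = cpa_and_ttcpa((1000.0, 50.0), (-50.0, 0.0))
decision = should_avoid(cpa, ttcpa, radius_m=5.0)
print(cpa, ttcpa, decision)
```

In this example the intruder passes at 50 m, which is 5 times its size of 10 m, in 20 s, so the maneuver is initiated. With a lateral offset of 500 m instead, the relative CPA would be 50 and no maneuver would be triggered.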

Figure 11 Basic properties of detected aircraft on the image plane

Figure 12 Disk model of intruder object. P is the image plane

Terminology

AAIM : Aircraft Autonomous Integrity Monitoring

ABAS : Aircraft-Based Augmentation System

AGL : Above Ground Level

AHRS : Attitude and Heading Reference System

ATC : Air Traffic Control

CAT : CATegory

COP : Center of Projection

CPA : Closest Point of Approach

DA(H) : Decision Altitude (Height)

DDM : Difference in Depth of Modulation

DGAC : Direction Générale de l’Aviation Civile (French Civil Aviation Authority)

DoF : Degrees of Freedom

ECEF : Earth-Centered Earth-Fixed

EKF : Extended Kalman Filter

ENU : East-North-Up

FAA : Federal Aviation Administration (U.S.A.)

FDE : Fault Detection and Exclusion

GCS : Ground Control Station

GN&C : Guidance, Navigation and Control

GNSS : Global Navigation Satellite System

GPS : Global Positioning System

GS : Glide Slope

HAL : Horizontal Alert Level

HITL : Hardware-In-The-Loop

HPL : Horizontal Protection Level

ICAO : International Civil Aviation Organization

ILS : Instrument Landing System

IMU : Inertial Measurement Unit

INS : Inertial Navigation System

LOC : LOCalizer

LPV : Localizer Performance with Vertical guidance

NED : North-East-Down

PL : Payload

RAIM : Receiver Autonomous Integrity Monitoring

RNAV : aRea NAVigation

RTK : Real-Time Kinematic

SBAS : Satellite-Based Augmentation System

SSE : Sum of Squared Errors

TCH : Threshold Crossing Height

TTCPA : Time to Closest Point of Approach

UAV : Unmanned Aerial Vehicle

UKF : Unscented Kalman Filter

VAL : Vertical Alert Level

VPL : Vertical Protection Level

WP : Work Package

References

[ec-2012] “RNAV Approaches”, Eurocontrol, 2012.

[dgac-2011] “Technical Guidelines O1 – PBN Guidelines for RNP APCH operations also known as RNAV(GNSS) Edition No.2”, DGAC, 2011.

[faa-2015] “Aeronautical Information Manual – Official Guide to Basic Flight Information and ATC Procedures”, US Department of Transportation FAA, 2015.

[casa] “Go-arounds”, Australian Government Civil Aviation Safety Authority, https://www.casa.gov.au/standard-page/go-arounds

[WGS-1984] “World Geodetic System 1984 – Its Definition and Relationships with Local Geodetic Systems”, National Imagery and Mapping Agency, 1984.

[Etkin-1972] B. Etkin, “Dynamics of Atmospheric Flight”, John Wiley & Sons, 1972.

[Bowring-1976] B.R. Bowring, “Transformation from Spatial to Geographical Coordinates”, Survey Review 23, 1976.

[Cai-2011] G. Cai, B.M. Chen and T.H. Lee, “Unmanned Rotorcraft Systems”, Chapter: Coordinate Systems and Transformations, Springer, 2011.

[Matthies-1989] L. Matthies and T. Kanade, “Kalman Filter-based Algorithms for Estimating Depth from Image Sequences”, International Journal of Computer Vision, 1989.

[Civera-2008] J. Civera, A.J. Davison and J.M. Martinez Montiel, “Inverse Depth Parametrization for Monocular SLAM”, IEEE Transactions on Robotics, vol.24, no.5, 2008.

[Aidala-1983] V.J. Aidala and S.E. Hammel, “Utilization of Modified Polar Coordinates for Bearings-Only Tracking”, IEEE Transactions on Automatic Control, vol.28, no.3, 1983.

[Faurie-2011] F. Faurie, “Algorithmes de contrôle d’intégrité pour la navigation hybride GNSS et systèmes de navigation inertielle en présence de multiples mesures satellitaires défaillantes”, Ph.D. thesis, University of Bordeaux, 2011.

[icao-1993] “Manual of the ICAO Standard Atmosphere”, ICAO Doc 7488/3, 1993.

[icao-2005] “Global Navigation Satellite System (GNSS) Manual”, ICAO Doc 9849/457, 2005.

[icao-2006] “Aeronautical Telecommunications: Volume 1 Radio Navigation Aid”, Annex 10 to the Convention on International Civil Aviation, ICAO, 2006.

[Brown-1992] R. Grover Brown, “A Baseline GPS RAIM Scheme and a Note on the Equivalence of Three RAIM Methods”, Journal of the Institute of Navigation, vol.39, no.3, 1992.

[Lee-1996] Y. Lee, K. Van Dyke, B. Decleene, J. Studenny and M. Beckmann, “Summary of RTCA SC-159 GPS Integrity Working Group Activities”, Journal of the Institute of Navigation, vol.43, no.3, 1996.

[Brenner-1998] M.A. Brenner, “Navigation system with solution separation apparatus for detecting accuracy failures”, US Patent 5760737 A, 1998.

[Bauer-2016] P. Bauer, A. Hiba, B. Vanek, A. Zarandy and J. Bokor, “Monocular image-based time to collision and closest point of approach estimation”, Mediterranean Conference on Control and Automation, 2016.

[Hiba-2016] A. Hiba, T. Zsedrovits, P. Bauer and A. Zarandy, “Fast horizon detection for airborne visual systems”, International Conference on Unmanned Aircraft Systems, 2016.

[Zsedrovits-2016] T. Zsedrovits, P. Bauer, B. J. M. Pencz, A. Hiba, I. Gozse, M. Kisantal, M. Nemeth, Z. Nagy, B. Vanek, A. Zarandy and J. Bokor, “Onboard visual sense and avoid system for small aircraft”, IEEE Aerospace and Electronic Systems Magazine, 2016.

[Fasano-2008] G. Fasano, D. Accardo, A. Moccia, C. Carbone, U. Ciniglio, F. Corraro and S. Luongo, “Multi-sensor-based fully autonomous non-cooperative collision avoidance system for unmanned air vehicles”, Journal of Aerospace Computing, Information, and Communication, vol.5, no.10, pages 338-360, 2008.

[Fasano-2015] G. Fasano, D. Accardo, A. Elena Tirri, A. Moccia and E. De Lellis, “Sky region obstacle detection and tracking for vision-based UAS sense and avoid”, Journal of Intelligent & Robotic Systems, pages 1-24, 2015.

[Ramasamy-2014] S. Ramasamy, R. Sabatini and A. Gardi, “Avionics sensor fusion for small size unmanned aircraft sense-and-avoid”, IEEE Metrology for Aerospace (MetroAeroSpace), pages 271-276, 2014.
