Information Theory: Final Exam on 21 June 2019

1. (a) (6%) What are the three axioms raised by Shannon for the measurement of information?

(b) (6%) Define the divergence typical set as

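$$
\mathcal{A}_n(\delta) := \left\{ x^n \in \mathcal{X}^n : \left| \frac{1}{n} \log_2 \frac{P_{X^n}(x^n)}{P_{\hat{X}^n}(x^n)} - D(P_X \| P_{\hat{X}}) \right| < \delta \right\}.
$$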

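It can be shown that for any sequence $x^n$ in $\mathcal{A}_n(\delta)$,

$$
P_{X^n}(x^n)\, 2^{-n(D(P_X \| P_{\hat{X}}) - \delta)} > P_{\hat{X}^n}(x^n) > P_{X^n}(x^n)\, 2^{-n(D(P_X \| P_{\hat{X}}) + \delta)}.
$$

Prove that

$$
P_{\hat{X}^n}(\mathcal{A}_n(\delta)) \le 2^{-n(D(P_X \| P_{\hat{X}}) - \delta)}\, P_{X^n}(\mathcal{A}_n(\delta)).
$$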

Solution.

(a)

i) Monotonicity in event probability

ii) Additivity for independent events

iii) Continuity in event probability

(b)

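$$
\begin{aligned}
P_{\hat{X}^n}(\mathcal{A}_n(\delta)) &= \sum_{x^n \in \mathcal{A}_n(\delta)} P_{\hat{X}^n}(x^n) \\
&\le \sum_{x^n \in \mathcal{A}_n(\delta)} P_{X^n}(x^n)\, 2^{-n(D(P_X \| P_{\hat{X}}) - \delta)} \\
&= 2^{-n(D(P_X \| P_{\hat{X}}) - \delta)} \sum_{x^n \in \mathcal{A}_n(\delta)} P_{X^n}(x^n) \\
&= 2^{-n(D(P_X \| P_{\hat{X}}) - \delta)}\, P_{X^n}(\mathcal{A}_n(\delta)).
\end{aligned}
$$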
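The bound in (b) can be checked numerically. The sketch below assumes an i.i.d. binary source with $P_X = \text{Bernoulli}(0.3)$ and $P_{\hat{X}} = \text{Bernoulli}(0.6)$; these parameters, the block length, and the function name `typical_set_masses` are illustrative choices, not part of the exam. Sequences are grouped by their number of ones, since all sequences with the same count share one probability.

```python
import math

def typical_set_masses(p, q, n, delta):
    """Return (P_X^n(A_n), P_Xhat^n(A_n), D) for Bernoulli(p) versus Bernoulli(q)."""
    # Divergence D(P_X || P_Xhat) in bits.
    D = p * math.log2(p / q) + (1 - p) * math.log2((1 - p) / (1 - q))
    mass_p = mass_q = 0.0
    for k in range(n + 1):  # k = number of ones in x^n
        px = p ** k * (1 - p) ** (n - k)
        qx = q ** k * (1 - q) ** (n - k)
        # Membership test of the divergence typical set.
        if abs(math.log2(px / qx) / n - D) < delta:
            count = math.comb(n, k)  # number of sequences with k ones
            mass_p += count * px
            mass_q += count * qx
    return mass_p, mass_q, D

n, delta = 50, 0.1
mass_p, mass_q, D = typical_set_masses(0.3, 0.6, n, delta)
bound = 2 ** (-n * (D - delta)) * mass_p
print(mass_q <= bound)
```

Any other pair of distributions with full support could be substituted; the inequality should hold for each of them.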

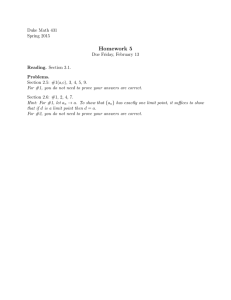

2. (6%) For the basic transformation of polar code shown below, answer the following questions.

    U1 = 0 --->(+)---> X1 ---> BEC(ε) ---> Y1
                ^
                |
    U2 ---------+----> X2 ---> BEC(ε) ---> Y2

(That is, X1 = U1 ⊕ U2 and X2 = U2.)

Note that here we assume the two channels are independent binary erasure channels.

Determine the erasure probability for U2, given U1 = 0 (i.e., frozen as zero).

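Solution. From

$$
Q^+:\quad U_2 = \begin{cases} Y_1 \oplus U_1 = Y_1, & \text{if } Y_1 \in \{0, 1\} \\ Y_2, & \text{if } Y_2 \in \{0, 1\} \\ ?, & \text{if } Y_1 = Y_2 = E \end{cases}
$$

$U_2$ is erased iff both $Y_1$ and $Y_2$ equal erasure, which occurs with probability $\varepsilon^2$.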
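The $\varepsilon^2$ answer can be sanity-checked with a small Monte Carlo simulation. This is a sketch under stated assumptions: the function name `simulate_u2_erasure`, the trial count, the seed, and the choice $\varepsilon = 0.3$ are all illustrative, and an erasure is represented by `None`.

```python
import random

def simulate_u2_erasure(eps, trials=100_000, seed=0):
    """Estimate the probability that U2 cannot be recovered when U1 = 0 is known."""
    rng = random.Random(seed)
    erased = 0
    for _ in range(trials):
        u1, u2 = 0, rng.randint(0, 1)
        x1, x2 = u1 ^ u2, u2                     # basic polar transform
        y1 = None if rng.random() < eps else x1  # first BEC(eps)
        y2 = None if rng.random() < eps else x2  # second BEC(eps), independent
        if y1 is not None:
            u2_hat = y1 ^ u1                     # recover U2 from Y1 and the frozen U1
        elif y2 is not None:
            u2_hat = y2                          # recover U2 directly from Y2
        else:
            u2_hat = None                        # both outputs erased
        if u2_hat is None:
            erased += 1
        else:
            assert u2_hat == u2                  # a non-erased decision is always correct
    return erased / trials

eps = 0.3
estimate = simulate_u2_erasure(eps)
print(estimate, eps ** 2)
```

The estimate should be close to $\varepsilon^2$, up to Monte Carlo fluctuation.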

3. (a) (6%) Let $X$ be a random variable with pdf $f_X(x)$ and support $\mathcal{X}$. Prove that for any function $q(\cdot)$ and positive $C$,

$$
C \cdot E[q(X)] - h(X) \ge \ln(D),
$$

where $h(X)$ is the differential entropy of $X$ and

$$
D = \frac{1}{\int_{\mathcal{X}} e^{-C \cdot q(x)}\, dx}.
$$

Hint: Reformulate

$$
C \cdot E[q(X)] - h(X) = C \cdot \int_{-\infty}^{\infty} f_X(x) q(x)\, dx - \int_{-\infty}^{\infty} f_X(x) \ln \frac{1}{f_X(x)}\, dx
$$

and use

$$
D(X \| Y) = \int_{\mathcal{X}} f_X(x) \ln \frac{f_X(x)}{f_Y(x)}\, dx \ge 0
$$

for any continuous random variable $Y$ that admits a pdf $f_Y(x)$ over support $\mathcal{X}$.

(b) (6%) When does equality hold in (a) such that

$$
h(X) = C \cdot E[q(X)] - \ln(D)?
$$

(c) (6%) Use (a) and (b) to find the random variable that maximizes the differential

entropy among all variables with finite support [a, b].

Hint: Choose q(x) = 1 over [a, b] and use (b).

Solution.

(a)

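$$
\begin{aligned}
C \cdot E[q(X)] - h(X) &= C \cdot \int_{-\infty}^{\infty} f_X(x) q(x)\, dx - \int_{-\infty}^{\infty} f_X(x) \ln \frac{1}{f_X(x)}\, dx \\
&= \int_{-\infty}^{\infty} f_X(x) \ln \frac{f_X(x)}{e^{-C \cdot q(x)}}\, dx \\
&= \int_{-\infty}^{\infty} f_X(x) \ln \frac{f_X(x)}{D e^{-C \cdot q(x)}}\, dx + \ln(D) \\
&= \int_{-\infty}^{\infty} f_X(x) \ln \frac{f_X(x)}{f_Y(x)}\, dx + \ln(D) \\
&\ge \ln(D),
\end{aligned}
$$

where $f_Y(x) = D e^{-C \cdot q(x)}$ is a pdf defined over $x \in \mathcal{X}$.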

(b) From the derivation in (a), equality holds iff $f_X(x) = f_Y(x)$, i.e., $f_X(x) = D e^{-C \cdot q(x)}$ for $x \in \mathcal{X}$.

(c) From (a), we know that

h(X) ≤ C · E[q(X)] − ln(D).

Thus, the differential entropy is maximized if equality holds in the above inequality.

Set $q(x) = 1$. Then, equality holds if

$$
f_X(x) = \frac{e^{-C}}{\int_a^b e^{-C}\, dx} = \frac{1}{b - a}.
$$

Consequently, the random variable that maximizes the differential entropy among

all variables with finite support [a, b] has a uniform distribution.
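As a numerical illustration of (c), the sketch below compares the differential entropy (in nats) of the uniform density on $[a, b]$ with that of a symmetric triangular density on the same support. The interval $[0, 2]$, the triangular comparison density, the midpoint-rule integrator, and the name `differential_entropy` are illustrative assumptions, not part of the exam.

```python
import math

def differential_entropy(pdf, a, b, steps=100_000):
    """Midpoint-rule estimate of h = -integral of f ln f over [a, b], in nats."""
    dx = (b - a) / steps
    total = 0.0
    for k in range(steps):
        f = pdf(a + (k + 0.5) * dx)
        if f > 0:  # the integrand f ln f is taken as 0 where f = 0
            total -= f * math.log(f) * dx
    return total

a, b = 0.0, 2.0

def uniform(x):
    return 1.0 / (b - a)

def triangular(x):
    # Symmetric triangular density on [a, b] with its peak at the midpoint.
    return (4.0 / (b - a) ** 2) * min(x - a, b - x)

h_uniform = differential_entropy(uniform, a, b)
h_triangular = differential_entropy(triangular, a, b)
print(h_uniform, h_triangular)
```

The uniform value should match $\ln(b - a)$, and the triangular value should be strictly smaller, consistent with the conclusion above.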

4. (8%) Suppose the capacity of $k$ parallel channels, where the $i$th channel uses power $P_i$, follows a logarithm law, i.e.,

$$
C(P_1, \ldots, P_k) = \sum_{i=1}^{k} \frac{1}{2} \log_2 \left( 1 + \frac{P_i}{\sigma_i^2} \right),
$$

where $\sigma_i^2$ is the noise variance of channel $i$. Determine the optimal $\{P_i^*\}_{i=1}^{k}$ that maximize $C(P_1, \ldots, P_k)$ subject to $\sum_{i=1}^{k} P_i = P$.

Hint: By using the Lagrange multipliers technique and verifying the KKT condition, the maximizer $(P_1, \ldots, P_k)$ of

$$
\max \left\{ \sum_{i=1}^{k} \frac{1}{2} \log_2 \left( 1 + \frac{P_i}{\sigma_i^2} \right) + \sum_{i=1}^{k} \lambda_i P_i - \nu \left( \sum_{i=1}^{k} P_i - P \right) \right\}
$$

can be found by taking the derivative of the above equation (with respect to $P_i$) and setting it to zero.

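Solution. By using the Lagrange multipliers technique and verifying the KKT condition, the maximizer $(P_1, \ldots, P_k)$ of

$$
\max \left\{ \sum_{i=1}^{k} \frac{1}{2} \log_2 \left( 1 + \frac{P_i}{\sigma_i^2} \right) + \sum_{i=1}^{k} \lambda_i P_i - \nu \left( \sum_{i=1}^{k} P_i - P \right) \right\}
$$

can be found by taking the derivative of the above equation (with respect to $P_i$) and setting it to zero, which yields

$$
\lambda_i = \begin{cases} -\dfrac{1}{2 \ln(2)} \dfrac{1}{P_i + \sigma_i^2} + \nu = 0, & \text{if } P_i > 0; \\[2ex] -\dfrac{1}{2 \ln(2)} \dfrac{1}{P_i + \sigma_i^2} + \nu \ge 0, & \text{if } P_i = 0. \end{cases}
$$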

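Hence,

$$
\begin{cases} P_i = \theta - \sigma_i^2, & \text{if } P_i > 0; \\ P_i \ge \theta - \sigma_i^2, & \text{if } P_i = 0, \end{cases} \qquad (\text{equivalently, } P_i = \max\{0, \theta - \sigma_i^2\}),
$$

where $\theta := \log_2(e)/(2\nu)$ is chosen to satisfy $\sum_{i=1}^{k} P_i = P$.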
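The water level $\theta$ has no closed form in general, but it can be found numerically. The sketch below locates $\theta$ by bisection on the total allocated power, which is nondecreasing in $\theta$. The function names `water_filling` and `capacity`, the iteration count, and the example noise variances are illustrative assumptions, not part of the exam.

```python
import math

def water_filling(noise_vars, total_power, iters=100):
    """Return (powers, theta) with powers[i] = max(0, theta - noise_vars[i])."""
    # At theta = lo no power is used; at theta = hi at least total_power is used.
    lo, hi = min(noise_vars), max(noise_vars) + total_power
    for _ in range(iters):
        theta = (lo + hi) / 2
        used = sum(max(0.0, theta - s) for s in noise_vars)
        if used > total_power:
            hi = theta
        else:
            lo = theta
    theta = (lo + hi) / 2
    return [max(0.0, theta - s) for s in noise_vars], theta

def capacity(powers, noise_vars):
    """Sum of (1/2) log2(1 + P_i / sigma_i^2) over the parallel channels."""
    return sum(0.5 * math.log2(1 + p / s) for p, s in zip(powers, noise_vars))

noise_vars = [1.0, 2.0, 5.0]
powers, theta = water_filling(noise_vars, total_power=3.0)
print(powers, theta, capacity(powers, noise_vars))
```

In this example the noisiest channel lies above the water level and receives no power, while the other two are filled up to a common level $\theta$.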

5. (a) (6%) Prove that the number of $x^n$'s satisfying $P_{X^n}(x^n) \ge \frac{1}{N}$ is at most $N$.

(b) (6%) Let $C_n^*$ be the set that maximizes $\Pr[X^n \in C_n^*]$ among all sets of the same size $M_n$. Prove that

$$
\Pr[X^n \notin C_n^*] \le \Pr\left[ \frac{1}{n} h_{X^n}(X^n) > \frac{1}{n} \log M_n \right],
$$

where $h_{X^n}(X^n) := \log \frac{1}{P_{X^n}(X^n)}$.

Hint: Use (a).

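(c) (8%) Let $C_n$ be a subset of $\mathcal{X}^n$ satisfying $|C_n| = M_n$. Prove that for every $\gamma > 0$,

$$
\Pr[X^n \notin C_n] \ge \Pr\left[ \frac{1}{n} h_{X^n}(X^n) > \frac{1}{n} \log M_n + \gamma \right] - \exp\{-n\gamma\}.
$$

Hint: It suffices to prove that

$$
\begin{aligned}
\Pr[X^n \in C_n] &\le \Pr\left[ \frac{1}{n} h_{X^n}(X^n) \le \frac{1}{n} \log M_n + \gamma \right] + \exp\{-n\gamma\} \\
&= \Pr\left[ \frac{1}{n} \log \frac{1}{P_{X^n}(X^n)} \le \frac{1}{n} \log M_n + \gamma \right] + \exp\{-n\gamma\} \\
&= \Pr\left[ P_{X^n}(X^n) \ge \frac{1}{M_n} e^{-n\gamma} \right] + \exp\{-n\gamma\}.
\end{aligned}
$$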

Solution.

(a) This can be proved by contradiction. Suppose there are $N + 1$ $x^n$'s satisfying $P_{X^n}(x^n) \ge \frac{1}{N}$. Then, $1 = \sum_{x^n \in \mathcal{X}^n} P_{X^n}(x^n) \ge \frac{N+1}{N}$, which is a contradiction. Hence, the number of $x^n$'s satisfying $P_{X^n}(x^n) \ge \frac{1}{N}$ is at most $N$.

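(b) From (a), the number of $x^n$'s satisfying $P_{X^n}(x^n) \ge \frac{1}{M_n}$ is at most $M_n$. Since $C_n^*$ should consist of the $M_n$ words with the largest probabilities, we have

$$
\begin{aligned}
\Pr[X^n \in C_n^*] &\ge \Pr\left[ P_{X^n}(X^n) \ge \frac{1}{M_n} \right] \\
&= \Pr\left[ \frac{1}{n} \log \frac{1}{P_{X^n}(X^n)} \le \frac{1}{n} \log M_n \right] \\
&= \Pr\left[ \frac{1}{n} h_{X^n}(X^n) \le \frac{1}{n} \log M_n \right],
\end{aligned}
$$

which implies

$$
\Pr[X^n \notin C_n^*] \le \Pr\left[ \frac{1}{n} h_{X^n}(X^n) > \frac{1}{n} \log M_n \right].
$$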

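(c) We derive

$$
\begin{aligned}
\Pr[X^n \in C_n] &= \Pr\left[ X^n \in C_n \text{ and } P_{X^n}(X^n) \ge \frac{1}{M_n} e^{-n\gamma} \right] + \Pr\left[ X^n \in C_n \text{ and } P_{X^n}(X^n) < \frac{1}{M_n} e^{-n\gamma} \right] \\
&\le \Pr\left[ P_{X^n}(X^n) \ge \frac{1}{M_n} e^{-n\gamma} \right] + \Pr\left[ X^n \in C_n \text{ and } P_{X^n}(X^n) < \frac{1}{M_n} e^{-n\gamma} \right] \\
&= \Pr\left[ P_{X^n}(X^n) \ge \frac{1}{M_n} e^{-n\gamma} \right] + \sum_{x^n \in C_n} P_{X^n}(x^n) \cdot \mathbf{1}\left\{ P_{X^n}(x^n) < \frac{1}{M_n} e^{-n\gamma} \right\} \\
&\le \Pr\left[ P_{X^n}(X^n) \ge \frac{1}{M_n} e^{-n\gamma} \right] + \sum_{x^n \in C_n} \frac{1}{M_n} e^{-n\gamma} \cdot \mathbf{1}\left\{ P_{X^n}(x^n) < \frac{1}{M_n} e^{-n\gamma} \right\} \\
&\le \Pr\left[ P_{X^n}(X^n) \ge \frac{1}{M_n} e^{-n\gamma} \right] + |C_n| \frac{1}{M_n} e^{-n\gamma} \\
&= \Pr\left[ P_{X^n}(X^n) \ge \frac{1}{M_n} e^{-n\gamma} \right] + e^{-n\gamma}.
\end{aligned}
$$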
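The inequality proved in (c) holds for every set $C_n$ of size $M_n$, so it can be spot-checked by brute force on a small source. The sketch below assumes an i.i.d. Bernoulli source and draws $C_n$ at random; the function name `bound_sides` and all parameter values are illustrative assumptions. Natural logarithms are used, matching the $\exp\{-n\gamma\}$ term.

```python
import itertools
import math
import random

def bound_sides(p, n, M, gamma, seed=0):
    """Return (Pr[X^n in C_n], Pr[P(X^n) >= e^{-n gamma}/M] + e^{-n gamma})."""
    seqs = list(itertools.product([0, 1], repeat=n))
    # Probability of each sequence under an i.i.d. Bernoulli(p) source.
    prob = {x: p ** sum(x) * (1 - p) ** (n - sum(x)) for x in seqs}
    # An arbitrary subset C_n of size M, drawn at random.
    code = random.Random(seed).sample(seqs, M)
    lhs = sum(prob[x] for x in code)
    threshold = math.exp(-n * gamma) / M
    rhs = sum(q for q in prob.values() if q >= threshold) + math.exp(-n * gamma)
    return lhs, rhs

lhs, rhs = bound_sides(p=0.2, n=8, M=16, gamma=0.1)
print(lhs <= rhs)
```

Changing the seed changes $C_n$; the comparison should hold for each draw.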

6. (a) (6%) Find the average number of random bits executed per output symbol in the program below.

For i = 1 to i = n do the following
{
    Flip-a-fair-coin;                  \\ one random bit
    If “Head”, then output 0;
    else
    {
        Flip-a-fair-coin;              \\ one random bit
        If “Head”, then output −1;
        else output 1;
    }
}

(b) (6%) Find the probabilities of the random output sequence X1 , X2 , . . ., Xn of the

above algorithm. What is the entropy rate of this random output sequence? Is

the above algorithm asymptotically optimal in the sense of minimizing the average

number of random bits executed per output symbol among all algorithms that

generate the random outputs of the same statistics?

(c) (6%) What is the resolution rate of X1 , X2 , . . ., Xn in (b)?

Solution.

(a) On average, 1.5 random bits are executed per output symbol.

(b) For each i, PXi (−1) = 1/4, PXi (0) = 1/2, and PXi (1) = 1/4, and X1 , X2 , . . .,

Xn are i.i.d. Its entropy rate is 1.5 bits. Since entropy rate is equal to the average

number of random bits executed per output symbol, the algorithm is asymptotically

optimal.

(c) Each $X_i$ is 4-type. Hence, its resolution rate is $\frac{1}{n} R(X^n) = \log_2(4) = 2$ bits.
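The answers to (a) and (b) can be checked by running the algorithm and counting coin flips. The sketch below is one way to do this; the function name `generate`, the seed, and the sample size are illustrative assumptions, and "Head" is modelled as a uniform draw falling below 0.5.

```python
import math
import random

def generate(n, rng):
    """Run the exam's algorithm; return (outputs, number of random bits used)."""
    outputs, bits = [], 0
    for _ in range(n):
        bits += 1                      # first fair coin flip
        if rng.random() < 0.5:         # "Head"
            outputs.append(0)
        else:
            bits += 1                  # second fair coin flip
            outputs.append(-1 if rng.random() < 0.5 else 1)
    return outputs, bits

# Per-symbol distribution stated in the solution to (b).
pmf = {-1: 0.25, 0: 0.5, 1: 0.25}
entropy = -sum(p * math.log2(p) for p in pmf.values())

n = 100_000
outputs, bits = generate(n, random.Random(1))
avg_bits = bits / n
print(entropy, avg_bits)
```

The empirical average number of bits per symbol should be close to the entropy of 1.5 bits, which is the sense in which the algorithm is asymptotically optimal.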

7. Complete the proof of Feinstein’s lemma (indicated in three boxes below).

Lemma (Feinstein’s Lemma) Fix a positive $n$. For every $\gamma > 0$ and input distribution $P_{X^n}$ on $\mathcal{X}^n$, there exists an $(n, M)$ block code for the transition probability $P_{W^n} = P_{Y^n|X^n}$ whose average error probability $P_e(C_n)$ satisfies

$$
P_e(C_n) < \Pr\left[ \frac{1}{n} i_{X^n W^n}(X^n; Y^n) < \frac{1}{n} \log M + \gamma \right] + e^{-n\gamma}.
$$

Proof:

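Step 1: Notations. Define

$$
\mathcal{G} := \left\{ (x^n, y^n) \in \mathcal{X}^n \times \mathcal{Y}^n : \frac{1}{n} i_{X^n W^n}(x^n; y^n) \ge \frac{1}{n} \log M + \gamma \right\}.
$$

Let $\nu := e^{-n\gamma} + P_{X^n W^n}(\mathcal{G}^c)$.

Feinstein’s Lemma obviously holds if $\nu \ge 1$, because then

$$
P_e(C_n) \le 1 \le \nu = \Pr\left[ \frac{1}{n} i_{X^n W^n}(X^n; Y^n) < \frac{1}{n} \log M + \gamma \right] + e^{-n\gamma}.
$$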

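So we assume $\nu < 1$, which immediately results in

$$
P_{X^n W^n}(\mathcal{G}^c) < \nu < 1,
$$

or equivalently,

$$
P_{X^n W^n}(\mathcal{G}) > 1 - \nu > 0. \tag{1}
$$

Therefore, denoting

$$
\mathcal{A} := \{ x^n \in \mathcal{X}^n : P_{Y^n|X^n}(\mathcal{G}_{x^n} | x^n) > 1 - \nu \}
$$

with $\mathcal{G}_{x^n} := \{ y^n \in \mathcal{Y}^n : (x^n, y^n) \in \mathcal{G} \}$, we have

$$
P_{X^n}(\mathcal{A}) > 0,
$$

because if $P_{X^n}(\mathcal{A}) = 0$,

$$
(\forall\, x^n \text{ with } P_{X^n}(x^n) > 0)\ \ P_{Y^n|X^n}(\mathcal{G}_{x^n} | x^n) \le 1 - \nu
\ \Rightarrow\ \sum_{x^n \in \mathcal{X}^n} P_{X^n}(x^n) P_{Y^n|X^n}(\mathcal{G}_{x^n} | x^n) = P_{X^n W^n}(\mathcal{G}) \le 1 - \nu,
$$

and a contradiction to (1) is obtained.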

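Step 2: Encoder. Choose an $x_1^n$ in $\mathcal{A}$. (Recall that $P_{X^n}(\mathcal{A}) > 0$.) Define $\Gamma_1 := \mathcal{G}_{x_1^n}$. (Then $P_{Y^n|X^n}(\Gamma_1 | x_1^n) > 1 - \nu$.)

Next choose, if possible, a point $x_2^n \in \mathcal{X}^n$ without replacement (i.e., $x_2^n$ cannot be identical to $x_1^n$) for which

$$
P_{Y^n|X^n}\left( \mathcal{G}_{x_2^n} - \Gamma_1 \,\middle|\, x_2^n \right) > 1 - \nu,
$$

and define $\Gamma_2 := \mathcal{G}_{x_2^n} - \Gamma_1$.

Continue in the following way as for codeword $i$: choose $x_i^n$ to satisfy

$$
P_{Y^n|X^n}\left( \mathcal{G}_{x_i^n} - \bigcup_{j=1}^{i-1} \Gamma_j \,\middle|\, x_i^n \right) > 1 - \nu,
$$

and define $\Gamma_i := \mathcal{G}_{x_i^n} - \bigcup_{j=1}^{i-1} \Gamma_j$.

Repeat the above codeword selecting procedure until either $M$ codewords are selected or all the points in $\mathcal{A}$ are exhausted.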

Step 3: Decoder. Define the decoding rule as

$$
\phi(y^n) = \begin{cases} i, & \text{if } y^n \in \Gamma_i \\ \text{arbitrary}, & \text{otherwise.} \end{cases}
$$

Step 4: Probability of error. For all selected codewords, the error probability given codeword $i$ is transmitted, $\lambda_{e|i}$, satisfies

$$
\lambda_{e|i} \le P_{Y^n|X^n}(\Gamma_i^c | x_i^n) < \nu.
$$

(Note that $(\forall\, i)$ $P_{Y^n|X^n}(\Gamma_i | x_i^n) > 1 - \nu$ by Step 2.) Therefore, if we can show that the above codeword selecting procedure will not terminate before $M$, then

$$
P_e(C_n) = \frac{1}{M} \sum_{i=1}^{M} \lambda_{e|i} < \nu.
$$
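Steps 1 to 4 can be traced on a toy example. The sketch below assumes a binary symmetric channel with crossover probability $p$ used $n$ times and a uniform input distribution, so that the output distribution is also uniform; the channel, the parameter values, and all identifiers (`W`, `info_density`, `regions`, and so on) are illustrative assumptions rather than part of the lemma. Information density is measured in nats.

```python
import itertools
import math

n, M, gamma, p = 6, 4, 0.2, 0.05       # illustrative parameters (assumptions)
words = list(itertools.product([0, 1], repeat=n))
P_X = 1.0 / len(words)                 # uniform input distribution
P_Y = 1.0 / len(words)                 # BSC with uniform input gives uniform output

def W(y, x):
    """Transition probability P_{Y^n|X^n}(y|x) of the memoryless BSC(p)."""
    d = sum(a != b for a, b in zip(x, y))
    return p ** d * (1 - p) ** (n - d)

def info_density(x, y):
    return math.log(W(y, x) / P_Y)

# Step 1: the sections G_x of the set G, and nu = e^{-n gamma} + P(G^c).
threshold = math.log(M) + n * gamma    # (1/n) i >= (1/n) log M + gamma, times n
G = {x: {y for y in words if info_density(x, y) >= threshold} for x in words}
P_Gc = sum(P_X * W(y, x) for x in words for y in words if y not in G[x])
nu = math.exp(-n * gamma) + P_Gc

# Step 2: greedy codeword selection with disjoint decoding regions Gamma_i.
code, regions, covered = [], [], set()
for x in words:
    if len(code) == M:
        break
    region = G[x] - covered
    if sum(W(y, x) for y in region) > 1 - nu:
        code.append(x)
        regions.append(region)
        covered |= region

# Step 4: upper bound on the conditional error, P(Gamma_i^c | x_i), per codeword.
errors = [1 - sum(W(y, x) for y in r) for x, r in zip(code, regions)]
print(nu, len(code), max(errors))
```

When $\nu < 1$, the lemma predicts that the procedure selects all $M$ codewords and that every conditional error stays below $\nu$.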

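Step 5: Claim. The codeword selecting procedure in Step 2 will not terminate before $M$.

Proof: We will prove it by contradiction.

Suppose the above procedure terminates before $M$, say at $N < M$. Define the set

$$
\mathcal{F} := \bigcup_{i=1}^{N} \Gamma_i \subseteq \mathcal{Y}^n.
$$

Consider the probability

$$
P_{X^n W^n}(\mathcal{G}) = P_{X^n W^n}[\mathcal{G} \cap (\mathcal{X}^n \times \mathcal{F})] + P_{X^n W^n}[\mathcal{G} \cap (\mathcal{X}^n \times \mathcal{F}^c)]. \tag{2}
$$

Since for any $y^n \in \mathcal{G}_{x_i^n}$,

$$
P_{Y^n}(y^n) \le \frac{P_{Y^n|X^n}(y^n | x_i^n)}{M \cdot e^{n\gamma}},
$$

we have

$$
\begin{aligned}
P_{Y^n}(\Gamma_i) &\le P_{Y^n}(\mathcal{G}_{x_i^n}) \\
&\le \frac{1}{M} e^{-n\gamma} P_{Y^n|X^n}(\mathcal{G}_{x_i^n} | x_i^n) \\
&\le \frac{1}{M} e^{-n\gamma}.
\end{aligned} \tag{3}
$$

(a) (6%) Show that

$$
P_{X^n W^n}[\mathcal{G} \cap (\mathcal{X}^n \times \mathcal{F})] \le \frac{N}{M} e^{-n\gamma}.
$$

Hint: Use (3).

(b) (6%) Show that

$$
P_{X^n W^n}[\mathcal{G} \cap (\mathcal{X}^n \times \mathcal{F}^c)] \le 1 - \nu.
$$

Hint: For all $x^n \in \mathcal{X}^n$,

$$
P_{Y^n|X^n}\left( \mathcal{G}_{x^n} - \bigcup_{i=1}^{N} \Gamma_i \,\middle|\, x^n \right) \le 1 - \nu.
$$

(c) (6%) Prove that (a) and (b) jointly imply $N \ge M$, resulting in a contradiction.

Hint: By definition of $\mathcal{G}$, $P_{X^n W^n}(\mathcal{G}) = 1 - \nu + e^{-n\gamma}$.

Solution. See the note or slides.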