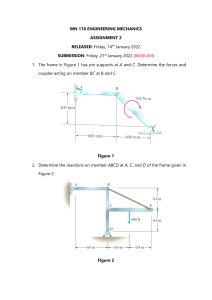

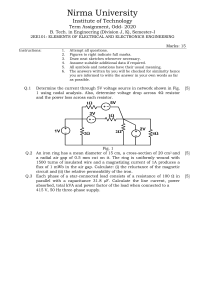

ISSCC 2022 / SESSION 2 / PROCESSORS / OVERVIEW

Session 2 Overview: Processors
DIGITAL ARCHITECTURES AND SYSTEMS SUBCOMMITTEE

Session Chair: Hugh Mair, MediaTek, Austin, TX
Session Co-Chair: Shidhartha Das, Arm, Cambridge, United Kingdom

Mainstream high-performance processors take center stage in this year’s conference, with next-generation architectures being detailed for x86 and Power™ processors by Intel, AMD, and IBM. In addition to mainstream compute, the session features groundbreaking work in parallel/array compute, leading off with the massive performance and integration of Intel’s Ponte Vecchio, while a multi-die approach to reconfigurable compute from researchers at UCLA features an ultra-high-density die-to-die interface. Mobile processing also marks a milestone this year with the introduction of the ARMv9 ISA into flagship smartphones.

2.1 8:30 AM
Ponte Vecchio: A Multi-Tile 3D Stacked Processor for Exascale Computing
Wilfred Gomes, Intel, Portland, OR
In Paper 2.1, Intel details the Ponte Vecchio platform for next-generation data center processing, integrating 47 tiles from 5 different process nodes into a single package, including 16 5nm compute tiles. 45TFLOPS of sustained FP32 vector processing is demonstrated alongside 5TB/s of memory fabric bandwidth and >2TB/s of aggregate memory and scale-out bandwidth.

2.2 8:40 AM
Sapphire Rapids: The Next-Generation Intel Xeon Scalable Processor
Nevine Nassif, Intel, Hudson, MA
In Paper 2.2, Intel’s next-generation Xeon Scalable processor, which uses a quasi-monolithic approach to integration in 7nm, is presented. The 2×2 die array features an ultra-high-bandwidth multi-die fabric IO with 10TB/s total die-to-die bandwidth across the 20 interfaces, while maintaining a low 0.5pJ/b energy consumption.

2.3 8:50 AM
IBM Telum: A 16-Core 5+ GHz DCM
Ofer Geva, IBM Systems and Technology, Poughkeepsie, NY
In Paper 2.3, IBM’s Z series processor advances into 7nm technology, featuring many architectural improvements to best leverage this class of CMOS technology. The processor leverages a large 32MB L2 cache, creating large virtual L3 and L4 caches, and a fully synchronous interface, operating at half the CPU clock, that connects two co-packaged 530mm² die.

40 • 2022 IEEE International Solid-State Circuits Conference 978-1-6654-2800-2/22/$31.00 ©2022 IEEE
ISSCC 2022 / February 21, 2022 / 8:30 AM

2.4 9:00 AM
POWER10™: A 16-Core SMT8 Server Processor with 2TB/s Off-Chip Bandwidth in 7nm Technology
Rahul M. Rao, IBM, Bengaluru, India
In Paper 2.4, IBM describes a 7nm 16-core Power10™ processor, featuring a series of architectural, design and implementation improvements to ensure continued performance gains. The 602mm² die features an impressive 2TB/s bandwidth aggregated across chip-to-chip, DRAM and PCIe interfaces.

2.5 9:10 AM
A 5nm 3.4GHz Tri-Gear ARMv9 CPU Subsystem in a Fully Integrated 5G Flagship Mobile SoC
Ashish Nayak, MediaTek, Austin, TX
In Paper 2.5, MediaTek unveils their first ARMv9 CPUs for flagship mobile applications, featuring a 3.4GHz maximum clock rate and a tri-gear CPU subsystem with out-of-order CPUs for both mid and high-performance gears. Manufactured in 5nm, resource scaling and implementation methodologies of the high-performance gear achieve a 27% performance uplift vs. the mid-gear.

2.6 9:20 AM
A 16nm 785GMACs/J 784-Core Digital Signal Processor Array with a Multilayer Switch Box Interconnect, Assembled as a 2×2 Dielet with 10µm-Pitch Inter-Dielet I/O for Runtime Multi-Program Reconfiguration
Uneeb Rathore, University of California, Los Angeles, CA
In Paper 2.6, UCLA demonstrates a 2×2 multi-die reconfigurable processor with a multi-layer switch-box interconnect and ultra-high-density multi-die interfacing.
Fabricated in 16nm and utilizing a silicon interposer, the die-to-die IO features a 10μm bump pitch with each IO circuit occupying 137μm² and consuming 0.38pJ/b of energy.

2.7 9:30 AM
Zen3: The AMD 2nd-Generation 7nm x86-64 Microprocessor Core
Thomas Burd, AMD, Santa Clara, CA
In Paper 2.7, AMD discusses the micro-architectural features of “Zen 3”, providing a unique 7nm-to-7nm (same-node) power and performance comparison to the prior generation. The 68mm² 8-Core Complex with 32MB L3 cache achieves a 19% core IPC improvement through architectural enhancements, coupled with a 6% frequency improvement, yielding an increase in power efficiency by up to 20%.

DIGEST OF TECHNICAL PAPERS • 41

2.1 Ponte Vecchio: A Multi-Tile 3D Stacked Processor for Exascale Computing

Wilfred Gomes1, Altug Koker2, Pat Stover3, Doug Ingerly1, Scott Siers2, Srikrishnan Venkataraman4, Chris Pelto1, Tejas Shah5, Amreesh Rao2, Frank O’Mahony1, Eric Karl1, Lance Cheney2, Iqbal Rajwani2, Hemant Jain4, Ryan Cortez2, Arun Chandrasekhar4, Basavaraj Kanthi4, Raja Koduri6

1Intel, Portland, OR; 2Intel, Folsom, CA; 3Intel, Chandler, AZ; 4Intel, Bengaluru, India; 5Intel, Austin, TX; 6Intel, Santa Clara, CA

Ponte Vecchio (PVC) is a heterogeneous petaop 3D processor comprising 47 functional tiles on five process nodes. The tiles are connected with Foveros [1] and EMIB [2] to operate as a single monolithic implementation enabling a scalable class of Exascale supercomputers. The PVC design contains >100B transistors and is composed of sixteen TSMC N5 compute tiles and eight Intel 7 random access bandwidth-optimized SRAM (RAMBO) memory tiles, 3D stacked on two Intel 7 Foveros base dies. Eight HBM2E memory tiles and two TSMC N7 SerDes connectivity tiles are connected to the base dies with 11 dense embedded interconnect bridges (EMIB). SerDes connectivity provides a high-speed coherent unified fabric for scale-out connectivity between PVC SoCs.
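
The count of 47 functional tiles can be cross-checked against the inventory in the paragraph above. The short Python sketch below simply tallies the tile types the text lists. Counting each of the 11 EMIB bridges as a tile is an assumption made here to reach 47; the text does not state it explicitly, and the dictionary keys are illustrative labels only.

```python
# Tile inventory as listed in the text. Treating each EMIB bridge as a
# "tile" is an assumption of this sketch, made so the sum can be compared
# with the quoted total of 47.
PVC_TILES = {
    "compute (TSMC N5)": 16,
    "RAMBO memory (Intel 7)": 8,
    "Foveros base (Intel 7)": 2,
    "HBM2E": 8,
    "SerDes connectivity (TSMC N7)": 2,
    "EMIB bridge": 11,
}


def total_tiles(inventory):
    """Sum the per-type tile counts."""
    return sum(inventory.values())


if __name__ == "__main__":
    for name, count in PVC_TILES.items():
        print(f"{name:32s}{count:3d}")
    print(f"{'total':32s}{total_tiles(PVC_TILES):3d}")
```
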
Each SerDes tile includes an 8-port switch enabling up to an 8-way fully connected configuration supporting 90G SerDes links. The SerDes tile supports load/store, bulk data transfers and synchronization semantics that are critical for scale-up in HPC and AI applications.

A 24-layer (11-2-11) substrate package houses the 3D stacked Foveros dies and EMIBs. To handle warpage, low-temperature solder (LTS) was used for the Flip Chip Ball Grid Array (FCBGA) design for these die and package sizes.

The foundational processing units of PVC are the compute tiles. The tiles are organized as two clusters of 8 high-performance cores with distributed caches. Each core contains 8 vector engines processing 512b floating-point/integer operands and 8 matrix engines with an 8-deep systolic array executing 4096b vector operations/engine/clock. The compute datapath is fed by a wide load/store unit that fetches 512B/clock from a 512KB L1 data cache that is software configurable as a scratchpad memory. Each vector engine achieves throughput of 512/256/256 operations/clock for FP16/FP32/FP64 data formats, while the matrix engine delivers 2048/4096/4096/8192 ops/clock for TF32/FP16/BF16/INT8 operands as shown in Fig. 2.1.1.

The two 646mm² base dies provide a communication network for the stacked tiles and include SoC infrastructure modules such as memory controllers, fully integrated voltage regulators (FIVR) [3], power management and 16 PCIe Gen5/CXL host interface lanes. The base dies are fabricated on a 17-metal Intel 7 [4] process technology, enhanced for Foveros, that includes through-silicon vias (TSV) in a high-resistivity substrate. Compute and memory tiles are stacked face-to-face on top of the base dies using a dense array of 36μm-pitch micro bumps. This dense pitch provides high assembly yield, high power bump density and current capacity, and ~2× higher signal density compared to the 50μm bump pitch used in Lakefield [6].
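
The ~2× density figure is consistent with simple geometry: on a regular square grid, the number of bumps per unit area scales with the inverse square of the pitch. The sketch below assumes such a grid (the actual bump layout is not described here), and the helper name is hypothetical.

```python
def density_gain(old_pitch_um, new_pitch_um):
    """Areal bump-density ratio for a square grid: (old pitch / new pitch)^2."""
    return (old_pitch_um / new_pitch_um) ** 2


if __name__ == "__main__":
    # 50 um pitch (Lakefield) versus 36 um pitch (PVC), as quoted in the text.
    gain = density_gain(50.0, 36.0)
    print(f"bump density gain: {gain:.2f}x")
```

Under this assumption the ratio comes out slightly below 2, which matches the approximate "~2×" wording.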
Power TSVs through the base die are built as 1×2, 2×1, 2×2, 2×3 and 2×4 arrays within a single C4 bump shadow. Die-to-die routing and power delivery uses two top-level copper metals with 1μm and 4μm pitch thick metal layers [6]. Each base die connects to four HBM2E tiles and a SerDes tile using a 55μm pitch EMIB. The cross-sectional details of the 3D stacked base die and EMIB are shown in Fig. 2.1.2.

The base tile also contains a 144MB L3 cache, called the Memory Fabric (MF), with a complex geometric topology operating at 4096B/cycle to support the distributed caches located under the shadow of the compute tile cores. The L3 cache is a large store that backs the various L1 caches inside the core. It is organized as multiple independent banks, each of which can perform one 64B read/write operation/clock. To maximize the cache storage that can be supported on PVC, the distributed cache architecture splits the total cache between the base die and RAMBO tiles. A modular L3 bank design allows the data array to be placed at arbitrary distances with a FIFO interface. This allows the pipeline to tolerate large propagation latencies. With this organization, the data array can be placed on a separate tile above the bank logic that resides on the base die. Each RAMBO tile contains 4 full banks of 3.75MB, providing 15MB per tile, with 60MB distributed among four RAMBO tiles per base tile (Fig. 2.1.3).

The base tile connects the compute tiles and RAMBO tiles using a 3D stacked die-to-die link called Foveros Die Interconnect (FDI). Transmitter (Tx) and receiver (Rx) circuits of this interface are powered by the compute tile rail. Level-shifters on the base tile convert to the supply voltage within the asynchronous interface. The CMOS link has a 3:2 bus-width compression and can run at a variable ratio of 1.0-1.5× relative to the base clock to balance speed and energy efficiency.
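
One way to read the 3:2 compression together with the 1.0-1.5× clock range is that running the narrower link at 1.5× the base clock recovers the throughput of the full-width bus. The sketch below works this out under two assumptions that the text does not confirm: that 3:2 means three logical bits share two physical lanes, and that throughput scales linearly with the link clock. The names are illustrative.

```python
from fractions import Fraction

# Assumption: "3:2 compression" means 3 logical bits are carried on 2 lanes,
# so the physical link is 2/3 as wide as the logical bus.
WIDTH_FRACTION = Fraction(2, 3)


def relative_throughput(clock_ratio):
    """Link throughput relative to an uncompressed bus at the base clock."""
    return WIDTH_FRACTION * Fraction(clock_ratio)


if __name__ == "__main__":
    for ratio in (Fraction(1), Fraction(5, 4), Fraction(3, 2)):
        rel = relative_throughput(ratio)
        print(f"link clock {float(ratio):.2f}x -> {float(rel):.3f} of full-width throughput")
```
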
After traversing the FDI link, signals are decompressed back to full width in the destination Rx domain. The FDI link is organized as eight groups, with each group consisting of 800 lanes per compute tile. Each group uses common clocking with phase compensation on the base die to correct for variation between base and compute tiles. This necessitates a base-to-compute tile clock and a return clock going back to the base die to enable clock compensation. FDI cells are clumped together to limit lead way routes to <300μm. 33% of micro bumps are reserved for robust power delivery to tile circuitry located under the micro bump field. Data lanes are bundled to minimize the clock power overhead. The die-to-die channel includes capacitance from the micro-bump and relatively small ESD diodes. The coupling capacitance from the six adjacent micro bumps is <5% of the total interconnect capacitance. The energy efficiency of FDI is 0.2pJ/b, and the entire link can run up to 2.8GT/s. A multiple shorted spine structure enables a 20% reduction in skew. The clocking scheme for the die-to-die interface is shown in Fig. 2.1.4.

The capability of independent voltage planes along with frequency separation between the compute tiles and base die allows independent voltage islands to target each compute die and the base die for the optimal frequency. Power delivery is implemented with 3D-stacked Fully Integrated Voltage Regulators (FIVRs) located on the base die. 3D-stacked FIVRs enable high-bandwidth fine-grained control over multiple voltage domains and reduce input current by ~60% and I²R losses by 85%. The power density challenges of 3D stacking, along with degraded inductor Q factor caused by scaled core footprints, required a new in-package substrate inductor technology called Coaxial Magnetic Integrated Inductor (CoaxMIL) [5] that provides high-Q inductors with reduced footprint (Fig. 2.1.5).
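
The ~60% input-current reduction and the 85% I²R-loss reduction quoted above are roughly what a quadratic loss model predicts. The sketch below assumes the resistance of the input delivery path stays the same while only the current changes; that is a simplification introduced here, not a statement from the paper.

```python
def i2r_loss_reduction(current_reduction):
    """Fractional drop in I^2*R loss for a given fractional drop in current.

    Assumes the path resistance R is unchanged, so loss scales with I^2.
    """
    remaining_current = 1.0 - current_reduction
    return 1.0 - remaining_current ** 2


if __name__ == "__main__":
    reduction = i2r_loss_reduction(0.60)
    print(f"I^2R loss reduction for a 60% current cut: {reduction:.0%}")
```

With a 60% current cut, the model gives a loss reduction in the mid-80% range, in line with the quoted figure.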
CoaxMIL improves regulator efficiency by 3% relative to air core inductors. High-density Metal-Insulator-Metal (MIM) capacitors on the base die reduce supply first droop and augment power delivery to the top dies. FIVR on the base die delivers up to 300W per base die into a 0.7V supply. The effective input resistance of 0.15mΩ for both base dies minimizes I²R loss. Efficiency of this 3D-stacked FIVR implementation compares favorably to a monolithic solution, with a gap of <1% (Fig. 2.1.5). The top die shadows (TDS) need to be managed for both IR drop and current return, and the process and the power grid were co-optimized to enable the power delivery for this product (Fig. 2.1.5).

There are two external-facing high-speed interfaces included in the Ponte Vecchio SoC: a 16-lane PCIe Gen5/CXL 32GT/s interface on the base die and an 8×4-lane SerDes connectivity tile. The PCIe Gen5 interface is used to connect PVC as a host interface to the CPU. The high-speed signals for this interface are connected to the package through the base die TSVs, and the impact of the TSVs on the channel insertion and return loss is included as part of the link budgeting. The TSVs in this Intel 7 Foveros base die technology minimize high-frequency loss for high-speed I/Os as described in [6]. The TSVs contribute ~0.3dB to the channel insertion loss at 16GHz.

Thermal management poses significant challenges in a 3D-stacked design. Several strategies were used to meet the 600W target for high-end platforms (Fig. 2.1.6). Thick interconnect layers in the base and compute tiles act as lateral heat spreaders. High micro-bump density is maintained over potential hotspots to compensate for reduced thermal spreading in a thin-die stack. High array density of power TSVs is used to reduce C4 bump temperature. Compute tile thickness is increased to 160μm to improve thermal mass for turbo performance.
In addition to the 47 functional tiles, there are 16 additional thermal shield dies stacked over the exposed base die area to conduct heat. Backside metallization (BSM) with solder thermal interface material (TIM) is applied on all the top dies. The TIM eliminates air gaps caused by different die stack heights to reduce thermal resistance. We also add BSM to the HBM and SerDes dies and enable a solder TIM. The thermal solution and the results are shown in Fig. 2.1.6.

As the die-to-die FDI micro-bump pitch (36μm) is denser than the available high-volume wafer probe pitch, the probed micro-bumps were snapped to a more relaxed pitch. Comprehensive testing of each tile precedes the assembly of each design to ensure high yield before packaging the parts and attaching the HBM die.

Initial PVC silicon provides 45TFLOPS of sustained vector FP32 performance, 5TB/s of sustained memory fabric bandwidth and >2TB/s of aggregate memory and scale-out bandwidth. PVC achieves ResNet-50 inference throughput of >43K images/s, with training throughput reaching 3400 images/s.

References:
[1] D. Ingerly et al., “Foveros: 3D Integration and the use of Face-to-Face stacking for Logic Devices,” IEDM, pp. 19.6.1-19.6.4, 2019.
[2] R. Mahajan et al., “Embedded Multi-die Interconnect Bridge (EMIB) - A High Density, High Bandwidth Packaging Interconnect,” IEEE Trans. on Components, Packaging and Manufacturing Tech., vol. 9, no. 10, pp. 1952-1962, 2019.
[3] E. A. Burton et al., “Fully integrated voltage regulators on 4th generation Intel® Core™ SoCs,” IEEE Applied Power Electronics Conf., pp. 432-439, 2014.
[4] C. Auth et al., “A 10nm High Performance and Low-Power CMOS Technology,” IEDM, pp. 29.1.1-29.1.4, 2017.
[5] K. Bharath et al., “Integrated Voltage Regulator Efficiency Improvement using Coaxial Magnetic Composite Core Inductors,” IEEE Elec. Components and Tech. Conf., pp. 1286-1292, 2021.
[6] W.
Gomes et al., “Lakefield and Mobility Compute: A 3D Stacked 10nm and 22FFL Hybrid Processor System in 12×12mm2, 1mm Package-on-Package,” ISSCC, pp. 144-145, 2020.

Figure 2.1.1: 3D and 2D system partitioning with Foveros and EMIB on PVC.
Figure 2.1.2: Process details for Foveros and EMIB.
Figure 2.1.3: Base die cache design, RAMBO and die-to-die connectivity.
Figure 2.1.4: PVC clocking, die-to-die IO and comparisons.
Figure 2.1.5: Power delivery, voltage drop for compute and base tiles.
Figure 2.1.6: Thermal solutions for PVC.

ISSCC 2022 PAPER CONTINUATIONS
Figure 2.1.7: Ponte Vecchio chip photographs and key attributes.

2.2 Sapphire Rapids: The Next-Generation Intel Xeon Scalable Processor

Nevine Nassif1, Ashley O. Munch1, Carleton L. Molnar1, Gerald Pasdast2, Sitaraman V. Iyer2, Zibing Yang1, Oscar Mendoza1, Mark Huddart1, Srikrishnan Venkataraman3, Sireesha Kandula1, Rafi Marom4, Alexandra M. Kern1, Bill Bowhill1, David R. Mulvihill5, Srikanth Nimmagadda3, Varma Kalidindi1, Jonathan Krause1, Mohammad M. Haq1, Roopali Sharma1, Kevin Duda5

1Intel, Hudson, MA; 2Intel, Santa Clara, CA; 3Intel, Bangalore, India; 4Intel, Haifa, Israel; 5Intel, Fort Collins, CO

Sapphire Rapids (SPR) is the next-generation Xeon® Processor with increased core count, greater than 100MB shared L3 cache, 8 DDR5 channels, 32GT/s PCIe/CXL lanes, 16GT/s UPI lanes and integrated accelerators supporting cryptography, compression and data streaming. The processor is made up of 4 die (Fig.
2.2.7) manufactured on Intel 7 process technology, which features dual-poly-pitch SuperFin (SF) transistors with performance enhancements beyond 10SF, >25% additional MIM density over SuperMIM and a metal stack with a 400nm pitch routing layer optimized for global interconnects. This layer achieves ~30% delay reduction at the same signal density and is key for achieving the required latency. The core provides better performance via a programmable power management controller. New technologies include Intel Advanced Matrix Extensions (AMX), a matrix multiplication capability for acceleration of AI workloads, and new virtualization technologies to address new and emerging workloads.

Server usages benefit from high core counts and an increasing number of IO lanes to deliver the performance demanded by customers but are limited by die size. Yield constraints favor smaller die. The concept of a quasi-monolithic SoC is introduced: 4 interconnected die with aggregate area beyond the reticle limit implementing an equivalent monolithic die (Fig. 2.2.1). Each die is built as a 6×4 array, where each slot is populated by one modular component. The on-die coherent fabric (CF) is used to provide low-latency, high-bandwidth (BW) communication. Each modular component (core/LLC, memory controller, IO, or accelerator complex) contains an agent which provides access to the CF. On-die voltage regulators (FIVR) [1] are arranged in horizontal strips. The SoC requires 2 die, mirrors of each other, arranged in a 2×2 matrix connected by 10 Embedded Multi-Die Interconnect Bridges (EMIB) [2].

Multi-Die Fabric IO (MDFIO) is introduced as a new ultra-high BW, low-latency, low-power (0.5pJ/b) Die-2-Die (D2D) interconnect aiming to provide an extension of the CF across multiple die. This requires handling on-die dynamic voltage frequency scaling (DVFS) (from 800MHz to 2.5GHz) and carrying the full fabric BW (500GB/s per crossing) across 20 crossings, equal to over 10TB/s aggregate D2D BW.
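
The aggregate figure follows directly from the per-crossing bandwidth, and combining it with the 0.5pJ/b energy gives a rough upper bound on interface power. The sketch below assumes decimal units (1TB = 1000GB) and every crossing running continuously at full bandwidth; the power number is therefore an illustrative bound, not a figure reported in the text.

```python
CROSSINGS = 20               # D2D crossings, from the text
BW_PER_CROSSING_GB_S = 500   # GB/s per crossing, from the text
ENERGY_PJ_PER_BIT = 0.5      # pJ/b, from the text


def aggregate_bw_tb_s(crossings=CROSSINGS, per_crossing_gb_s=BW_PER_CROSSING_GB_S):
    """Aggregate D2D bandwidth in TB/s, assuming 1 TB = 1000 GB."""
    return crossings * per_crossing_gb_s / 1000


def link_power_w(bw_tb_s, pj_per_bit=ENERGY_PJ_PER_BIT):
    """Power in watts if the whole bandwidth were used continuously."""
    bits_per_second = bw_tb_s * 1e12 * 8
    return bits_per_second * pj_per_bit * 1e-12


if __name__ == "__main__":
    bw = aggregate_bw_tb_s()
    print(f"aggregate D2D bandwidth: {bw:.1f} TB/s")
    print(f"power at full utilization: {link_power_w(bw):.1f} W")
```
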
The raw-wire BER spec was set to 1e-27 to achieve a FIT of <1 and avoid the need for in-line correction or replay, allowing <10ns round-trip latency. A highly parallel 1.6-to-5.0GT/s signaling scheme was chosen to meet all requirements.

The MDFIO PHY architecture is shown in Fig. 2.2.3. It uses source-synchronous clocking with a DLL and PIs for training on the Rx side, a low-swing double-data-rate N/N transmitter for reduced power with AC-DBI for power supply noise reduction, and a strong-arm latch receiver with VREF training and offset cancellation directly connected to the Rx bump. The PHY is trained at several operating frequency points, and the resulting DLL and PI trained values are stored locally to handle fast transitions during DVFS events. Most of the traffic across this interface is source synchronous and retimed with an asynchronous FIFO, so it does not need to be validated by timing tools. However, there are various debug and test-related fabrics which utilize asynchronous buffers for both data and clock. These interfaces are validated by one of 2 models: a cross-die timing model, or a single-die loopback model (Fig. 2.2.2). These models comprehend the Tx/Rx analog circuit delays, EMIB electrical parasitics and cross-die variation.

While disaggregation helps with overall yield, SPR also employs extensive block repair/recovery methods to further increase good die per wafer. SPR extends techniques used in past products [3] for core and cache recovery to uncore and IO blocks. Repair techniques use redundant circuits to enable a block with a defect to be returned to full functionality. MDFIO implements lane repair to recover defects pre and post assembly. Block-level recovery takes advantage of the modular die, as well as redundant placements in the socket. Unused PCIe blocks in the south die enable IO recovery through positional defeaturing. In total, 74% of the die is recoverable.
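
Returning to the raw-wire BER target quoted at the start of this passage, a back-of-the-envelope estimate shows why 1e-27 is compatible with a FIT below 1 (FIT being failures per 10^9 device-hours). The sketch assumes that every raw bit error counts as a failure and that the link carries the full 10TB/s aggregate bandwidth continuously. The authors' actual derivation is not given, so this is only one plausible reading.

```python
def fit_rate(bit_error_rate, bits_per_second):
    """Failures per 1e9 hours, treating every raw bit error as a failure."""
    errors_per_hour = bit_error_rate * bits_per_second * 3600
    return errors_per_hour * 1e9


if __name__ == "__main__":
    aggregate_bits_per_second = 10e12 * 8  # 10 TB/s aggregate D2D bandwidth
    fit = fit_rate(1e-27, aggregate_bits_per_second)
    print(f"estimated FIT: {fit:.3f}")
```
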
Test and debug solutions provide a way to test and debug die individually or assembled, with reuse of test patterns and debug hooks. A combination of muxes and IO signals allows the test and debug fabrics to operate within a die or utilize the EMIB to enable a D2D data-path on assembled parts. A parallel test interface is accessible via DDR channels, and a single die provides scan data, via packetized test data, to access IPs on an assembled 4-die part. Parallel test of re-instantiated IPs on all four die is supported with a single set of patterns in tester memory along with test failure information to support recovery. A parallel trace interface is available via dedicated GPIO to allow access to debug data from any of the 4 individual dies. JTAG is implemented as a single controller for system view, but as four individual controllers for high-volume manufacturing access.

SPR uses a combination of FIVRs and motherboard VRs (MBVRs) optimized for power management. The choice of voltage regulator type was determined by the max-current and physical spread of the domain and the fastest switching events. To decouple the challenges of power delivery with large switching current impacting sensitive IO, high-power noisy digital logic FIVRs and sensitive low-power analog IO FIVRs were sourced by separate MBVRs. FIVR was also used to deliver two quiet analog power rails to MDFIO to enable double pumping and reduce die perimeter. To combat the challenge of a shrinking core footprint, coaxial magnetic integrated inductors [4] are used, resulting in high-Q inductors with smaller footprint and increased FIVR efficiency.

Power optimization techniques are employed to achieve high performance and reliability. For high-speed interconnect, distributed inline datapath layout is implemented, including optimized driver placement/sizing with customized full-metal-stack routing and low-resistive via laddering.
In conjunction, recombinant multi-source clock distribution is used across the high-speed uncore clock domains to achieve low insertion delay and skew. Multiple approaches including optimized multiple poly-pitch libraries, low-leakage device maximization, vector sequential optimization, automated clock gating, multi-power domain partitioning, and selective power gating are employed. Protection of vulnerable architecture states is increased via ECC coverage, end-to-end parity and soft-error-resilient sequentials.

SPR supports DDR5 memory technology, with eight channels, each supporting two DIMMs per channel, allowing SPR to deliver twice the bandwidth of the prior generation. The DDR PHY achieves 50% higher maximum data rate by adopting a new IO architecture and advanced signal integrity enablers. Half-rate analog and digital clocking are introduced, along with pseudo-differential wide-range DLLs for optimal power and performance. The DDR5 receiver (Rx) has unmatched data (DQ) and strobe (DQs) paths, and its new 4-tap Decision Feedback Equalizer (DFE) enables better channel ISI compensation (Fig. 2.2.4). On-die local voltage regulators provide quiet supplies to the clocking circuits, minimizing the power-noise jitter for READ and WRITE operations. In addition, voltage-mode linear equalization with two post-cursor taps is implemented in the full-rate command transmitters. Periodic DQ-DQs retraining helps mitigate the jitter due to the different temperature drift on the unmatched DQ and DQs paths. The 8-channel interface is split into four independent and identical IP instances, each with two channels. Every channel has two 40b sub-channels on the north and south sections of the IP, with command, control, clock signals and PVT compensation circuitry located in the middle.

Each die contains 2.5-to-32Gb/s NRZ transceiver lanes used for PCIe and UPI® links. In the transceiver, the transmitter clock path is sourced by two LCPLLs.
Meeting the LCPLL reference clock jitter requirements was challenging given board and package crosstalk, EMI, and skew. A differential reference clock distribution using specialized clock buffers allows all transceivers within the die to share a reference clock pin, enabling optimum placement of the reference clock pin away from serial IO and DDR aggressors within the package, socket and board (Fig. 2.2.5). Transmitters include a 3-tap FIR equalizer, a power-efficient voltage-mode driver with T-coils to cancel ESD capacitance, and a low-latency serialization pipeline. The receiver front-end (Fig. 2.2.6) consists of an input pi-coil network to optimize insertion and return loss, followed by a passive low-frequency zero-pole pair. Gain and boost are provided by a 3-stage active Continuous Time Linear Equalizer (CTLE) with inductive shunt-peaking. Two-way interleaved data and edge summers feed into respective data and edge double-tail samplers with combined offset and reference voltage cancellation differential pairs. 12-tap data and 4-tap edge DFE currents also feed into the respective summing nodes. A four-stage differential ring oscillator with active-inductor load generates 8-to-16GHz clocks used by the samplers after appropriate dividers. The oscillator employs RC filtering to suppress bias and power-supply noise, eliminating the need for a dedicated voltage regulator. The design features extensive digital calibrations to cancel device mismatch. Digital synthesized logic and firmware running on a micro-controller are used to adapt and optimize the receiver gain, equalization, offset and DFE across channels with up to 37dB of loss at 16GHz. Eight data lanes and a shared common lane consume 6.48pJ/b running at 32Gb/s and occupy 2.27mm².

Acknowledgement: The authors thank all the teams that have worked relentlessly on this product.

References:
[1] E. A. Burton et al., “FIVR – Fully integrated voltage regulators on 4th generation Intel Core SoCs,” IEEE APEC, pp. 432-439, 2014.
[2] https://www.intel.in/content/www/in/en/silicon-innovations/6-pillars/emib.html.
[3] S. M. Tam et al., “SkyLake-SP: A 14nm 28-Core Xeon® Processor,” ISSCC, pp. 34-35, 2018.
[4] K. Bharath et al., “Integrated Voltage Regulator Efficiency Improvement using Coaxial Magnetic Composite Core Inductors,” IEEE Elec. Components and Tech. Conf., pp. 1286-1292, 2021.

Figure 2.2.1: Die floorplan in 2×2 quasi-monolithic configuration. EMIB highlighted at die-to-die interfaces.
Figure 2.2.2: A cross-die timing model, or a single-die loopback model.
Figure 2.2.3: MDFIO PHY architecture.
Figure 2.2.4: SPR DDR5 DQ Rx and 4-tap DFE.
Figure 2.2.5: A differential reference clock distribution allows all transceivers within the die to share a reference clock pin.
Figure 2.2.6: SPR PCIe Gen5 receiver front end.
Figure 2.2.7: Die photo of left and right die arranged in 2×2 quasi-monolithic configuration. EMIB placement highlighted in blue.

2.3 IBM Telum: A 16-Core 5+ GHz DCM

Ofer Geva1, Chris Berry1, Robert Sonnelitter1, David Wolpert1, Adam Collura1, Thomas Strach2, Di Phan1, Cedric Lichtenau2, Alper Buyuktosunoglu3, Hubert Harrer2, Jeffrey Zitz1, Chad Marquart1, Douglas Malone1, Tobias Webel2, Adam Jatkowski1, John Isakson4, Dina Hamid1, Mark Cichanowski4, Michael Romain1, Faisal Hasan4, Kevin Williams1, Jesse Surprise1, Chris Cavitt1, Mark Cohen1

1IBM Systems and Technology, Poughkeepsie, NY; 2IBM Systems and Technology, Boeblingen, Germany; 3IBM Research, Yorktown Heights, NY; 4IBM Systems and Technology, Austin, TX

IBM “Telum”, the latest microprocessor for the next-generation IBM Z system, has been designed to improve performance, system capacity and security over the previous enterprise system [1]. The system topology has changed from a two-design strategy featuring one central system-controller chip (SC) and four central-processor (CP) chips per drawer into a one-design strategy, featuring distributed cache management across four Dual-Chip Modules (DCM) per drawer, each consisting of two processor chips for both core function and system control. The CP die size is 530mm² in 7nm bulk technology [5] [6]. The system contains up to 32 CPs in a four-drawer configuration (Fig. 2.3.1). Each CP (shown in the Fig. 2.3.2 die photo) contains 22B transistors, operates at over 5GHz and comprises 8 cores, each with a 128KB L1 instruction cache, a 128KB L1 data cache and a 32MB L2 cache. Chip interfaces include 2 PCIe Gen4 interfaces, an M-Bus interface to the other CP on the same DCM and 6 X-Bus interfaces connecting to other CP chips on the drawer. Six of the 8 CPs in each drawer have an A-Bus connection to the other drawers in the system.

The transition from the previous system 14nm SOI process [2] to the 7nm bulk process drove significant changes to the physical design capabilities and technology enablement tooling. Key electrical scaling challenges were reduced maximum operating voltage, increased BEOL RC delay and power grid IR loss. Additional significant changes were the loss of deep-trench memory cells (EDRAM) and decoupling capacitors (DTCAP), conversion from radial BITIEs to per-row substrate/NW contacts and new antenna and latch-up avoidance infrastructure. New challenges in EUV and design rule constraints drove new shape-based and cell-based fill tooling to support cross-hierarchical and multilayer density challenges. Many of these updates further complicated concurrent hierarchical design capabilities, prompting new design methodologies as well as additional design constraints and methodology-checking infrastructure.

Migrating to a bulk 7nm process and losing the EDRAM technology leveraged by previous designs drove a new cache microarchitecture and a single chip design. The new structure of the CP chip consists of eight processor cores, each with a 32MB private L2 cache, which are connected by a dual on-chip ring that provides >320GB/s of bandwidth (Fig. 2.3.3). A fully populated drawer has a total of 64 physical processor cores and L2 caches. The 64 independent physical caches work together to act as a multi-level shared victim cache that provides 256MB of on-chip virtual L3 and 2GB of virtual L4 across up to 8 chips. The 8 chips are fully connected, which provides substantially more aggregate drawer bandwidth than predecessor designs. It also reduces the average L1 miss latency compared to the shared, inclusive cache hierarchy on the previous Z15 system, and when combined with the larger L2 and more effective drawer cache utilization enables “Telum” to significantly improve overall system performance.
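
The virtual cache sizes quoted above follow from the per-core L2 capacity. The sketch below reproduces that arithmetic, assuming the virtual L3 and L4 are simply the pooled L2 capacity of one chip and of one fully populated drawer respectively, with 1GB taken as 1024MB; the function names are illustrative.

```python
L2_MB_PER_CORE = 32     # private L2 per core, from the text
CORES_PER_CHIP = 8      # cores per CP chip, from the text
CHIPS_PER_DRAWER = 8    # four DCMs of two chips each, from the text


def virtual_l3_mb():
    """Pooled L2 capacity of one chip, in MB."""
    return L2_MB_PER_CORE * CORES_PER_CHIP


def virtual_l4_gb():
    """Pooled L2 capacity of one drawer, in GB (1 GB = 1024 MB)."""
    return virtual_l3_mb() * CHIPS_PER_DRAWER / 1024


if __name__ == "__main__":
    print(f"cores per drawer: {CORES_PER_CHIP * CHIPS_PER_DRAWER}")
    print(f"virtual L3 per chip: {virtual_l3_mb()} MB")
    print(f"virtual L4 per drawer: {virtual_l4_gb():.0f} GB")
```
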

“Telum” features an on-chip AI accelerator that was designed to support the low-latency, high-volume in-transaction AI inferencing requirements of enterprise customers [3]. Instead of spreading the AI compute power with generic wide-vector arithmetic operations in each core, a single, on-chip AI accelerator is accessible by all the cores on the chip. It consists of high-density multiply-accumulate systolic arrays for matrix multiplication and convolution computation, as well as specialized activation blocks to efficiently handle operations like “sigmoid” or “soft max”. It provides in total 6TFLOPS of FP16 compute performance and is connected to the on-chip ring, reaching up to 200GB/s read/write bandwidth. Internal data movers and formatters feed the compute engines with a bandwidth exceeding 600GB/s.

Workloads that run on IBM Z generally have large instruction cache footprints [1]; as a result, they benefit from massive capacity in the branch prediction logic tables. Prior-generation predictors used EDRAM technology to help store the large number of branches. The new branch prediction logic was completely redesigned utilizing an approach that allowed the branch predictor to dynamically reconfigure itself to adapt to the number of branches within a line of a given workload while maximizing capacity and minimizing latency to the downstream core pipeline. This design was partitioned into four identical quadrants with a semi-inclusive level 1 Branch Target Buffer (BTB1) and level 2 (BTB2) hierarchy surrounding a central logic complex that predicts up to 24 branches per prediction bundle. A single quadrant’s BTB2 contained 24 dense SRAM arrays with a capacity of 4× the full-custom SRAMs used in the BTB1, providing a BTB2 capacity of over 3.6Mb. The total capacity of the entire branch predictor’s BTB1 and BTB2 was over 15Mb, which was used to store up to 272,000 branches.
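
The branch predictor capacities above can be related to each other with a little arithmetic. The sketch below treats the "over 3.6Mb" and "over 15Mb" figures as lower bounds, takes 1Mb as 10^6 bits, and reads the 3.6Mb as a per-quadrant BTB2 capacity. All three are assumptions of this example, so the results are rough estimates rather than reported values.

```python
QUADRANTS = 4
BTB2_ARRAYS_PER_QUADRANT = 24
BTB2_MBIT_PER_QUADRANT = 3.6   # "over 3.6Mb", used as a lower bound
TOTAL_MBIT = 15.0              # "over 15Mb", used as a lower bound
MAX_BRANCHES = 272_000


def btb2_total_mbit():
    """Lower-bound BTB2 capacity summed over all quadrants, in Mb."""
    return QUADRANTS * BTB2_MBIT_PER_QUADRANT


def kbit_per_btb2_array():
    """Approximate capacity of one dense BTB2 SRAM array, in Kb."""
    return BTB2_MBIT_PER_QUADRANT * 1000 / BTB2_ARRAYS_PER_QUADRANT


def bits_per_branch():
    """Average predictor storage per tracked branch, in bits."""
    return TOTAL_MBIT * 1e6 / MAX_BRANCHES


if __name__ == "__main__":
    print(f"BTB2 total: at least {btb2_total_mbit():.1f} Mb")
    print(f"per BTB2 array: about {kbit_per_btb2_array():.0f} Kb")
    print(f"storage per branch: about {bits_per_branch():.0f} bits")
```
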
The loss of deep-trench EDRAM technology that drove the nest topology change also impacted the amount of on-die decap. Deep Trench Capacitors (DTCAP) were formerly used to achieve a total chip capacitance of more than 30μF to deal with the total chip leakage and transient currents, both expected to increase in many areas due to the denser design. To effectively manage chip power noise, an on-chip decoupling scheme was engineered to replace the previous deep-trench distribution method. The decoupling capacitors distribution was strategically implemented across the different areas of the chip, while maintaining the necessary VDD/VIO decoupling ratio in the IO areas. The design’s overall on-chip decoupling capacitance increased by ~25% vs. the default decap distribution (Fig. 2.3.4). The decrease in overall on-chip decoupling capacitance (vs. previous technologies that used DTCAPs) caused voltage droop times to be much shorter in very large delta-I events. Noise simulations were used to improve reaction time of the on-chip throttling logic [4]. A global Performance Throttle Mechanism (PTM) is augmented with local PTM for faster reaction to droop events. The local PTM is within the core-centric Digital Droop Sensor (DDS) and is physically close to the areas where throttling can be induced, acting more quickly than the global PTM. The controllability and data accuracy were improved by increasing the throttling level and number of throttle patterns from 16 to 32 and increasing the resolution of the DDS from 12b to 24b. The DCM (71×79mm2) packages both dies with a 500μm edge-to-edge spacing, connected by a high-speed M-Bus (3.6088Tb/s or 451.1GB/s for a processor clock running up to 5.2GHz) interface through the top layers of the laminate, enabling synchronous operation and effectively allowing the microarchitecture to treat the DCM as a single chip with 16 physical cores per socket (Fig. 2.3.5). 
The M-Bus tight-length interconnect constraints required innovation of a high-performance, low-latency, power-efficient interface. The interface is fully synchronous, operating at half the processor clock rate without the use of any serialization and deserialization to achieve minimal latency. The interface is implemented as an extremely wide bus consisting of 1388 single-ended data lanes to compensate for the lack of serialization. Test drivers and receivers are utilized, as well as redundant lanes to repair the bus if defective lanes are detected.
Thermal and mechanical stress/strain management (Fig. 2.3.6) is critical to package performance with two closely spaced die. The concentrated heat load is managed by IBM-developed Thermal Interface Materials (TIMs), a single custom copper lid optimized for heat and mechanical load spreading, and a liquid-cooled cold plate integral to the socket-level mechanical loading system. Core, I/O and cache temperatures are kept below critical limits at all projected workloads and further protected from excursions with active system-level temperature monitoring and core throttling.
Both processors are connected to each other on the board by a 2×18 differential pair bus running synchronously at four times the processor speed. The board routing encroached underneath the DCM to satisfy the tight signal integrity requirements due to the plane openings of the land grid array connector with 4753 IOs (Fig. 2.3.7).
To support both CPs acting in unison, a new PLL clock signal distribution and associated circuits were employed to minimize clocking uncertainty for the M-Bus interface. A single PLL from one processor chip sources both chips’ clock grid distributions, eliminating the effects of long-term drift error associated with multiple PLLs. Each chip is equipped with specialized circuits that allow for the sampling of each die’s clock grid signal and de-skewing and aligning the phases for both clock signals.
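The M-Bus bandwidth quoted earlier (3.6088Tb/s or 451.1GB/s at 5.2GHz) can be reproduced from the lane count and the half-rate bus clock. The sketch below assumes one bit per data lane per bus clock cycle, which is consistent with the absence of serialization but is our reading rather than a stated fact; all names are hypothetical.

```python
# Values from the text; names are illustrative only.
MBUS_DATA_LANES = 1388          # single-ended data lanes
CPU_CLOCK_GHZ = 5.2             # maximum processor clock
BUS_TO_CPU_CLOCK_RATIO = 0.5    # bus runs at half the processor clock


def mbus_bandwidth(lanes=MBUS_DATA_LANES,
                   cpu_clock_ghz=CPU_CLOCK_GHZ,
                   ratio=BUS_TO_CPU_CLOCK_RATIO):
    """Return (Tb/s, GB/s), assuming one bit per lane per bus clock."""
    lane_rate_gbps = cpu_clock_ghz * ratio   # Gb/s carried by each lane
    total_gbps = lanes * lane_rate_gbps      # aggregate Gb/s
    return total_gbps / 1000.0, total_gbps / 8.0


tbps, gbytes_per_s = mbus_bandwidth()
print(f"{tbps:.4f} Tb/s, {gbytes_per_s:.1f} GB/s")
```

Under this assumption the wide, unserialized bus reaches the quoted figure with each lane toggling at only 2.6Gb/s.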
Acknowledgement: The authors would like to thank the entire IBM Z team, the IBM EDA team, the IBM Enterprise Systems Product Engineering team, the IBM Research team, and the Samsung fabrication team for all their hard work and contributions to the success of this project.
References:
[1] C. Berry et al., “IBM z15: A 12-Core 5.2GHz Microprocessor,” ISSCC, pp. 54-56, 2020.
[2] D. Wolpert et al., “IBM’s Second Generation 14-nm Product, z15,” IEEE JSSC, vol. 56, no. 1, pp. 98-111, 2021.
[3] C. Jacobi, “IBM Z Telum Mainframe Processor,” IEEE Hot Chips Symp., 33, 2021.
[4] T. Webel et al., “Proactive Power Management in IBM z15,” IBM J. of Res. and Dev., pp. 15:1-15:12, Sept.-Nov. 2020.
[5] Samsung 7nm Logic Technology.
[6] W. C. Jeong et al., “True 7nm Platform Technology featuring Smallest FinFET and Smallest SRAM cell by EUV, Special Constructs and 3rd Generation Single Diffusion Break,” IEEE Symp. VLSI Tech., pp. 59-60, 2018.
Figure 2.3.1: 32 CPs on a 4 drawer configuration.
Figure 2.3.2: CP Die photo (courtesy of Samsung).
Figure 2.3.3: CP structure and RING.
Figure 2.3.4: VDD Gate Decoupling Capacitance density (pF/mm2).
Figure 2.3.5: Physical view of the high speed M-BUS interconnect between processor die.
Figure 2.3.6: Chip carrier mechanical strain variation showing peak levels concentrated between dies (mm/mm).
Figure 2.3.7: Single wiring layer board routing example.
2.4 POWER10™: A 16-Core SMT8 Server Processor with 2TB/s Off-Chip Bandwidth in 7nm Technology
Rahul M. Rao1, Christopher Gonzalez2, Eric Fluhr3, Abraham Mathews3, Andrew Bianchi3, Daniel Dreps3, David Wolpert4, Eric Lai3, Gerald Strevig3, Glen Wiedemeier3, Philipp Salz5, Ryan Kruse3
1IBM, Bengaluru, India; 2IBM, Yorktown Heights, NY; 3IBM, Austin, TX; 4IBM, Poughkeepsie, NY; 5IBM, Böblingen, Germany
The POWER10™ processor designed for enterprise workloads contains 16 synchronous SMT8 cores (Fig. 2.4.1) coupled through a bi-directional high-bandwidth race-track [1][2]. An SMT8 core with its associated cache is called a core chiplet, and a pair of core chiplets forms a 39.4mm2 design tile. Designed in a 7nm bulk technology, the 602mm2 chip (0.85× of POWER9™ [3]) has nearly 18B transistors, 110B vias and 20 miles of on-chip interconnect distributed across 18 layers of metal: 8 narrow-width layers for short range routes, 8 medium-width layers for high performance signals and two 2160nm ultra-thick metal (UTM) layers dedicated for power and global clock distribution. There are 10 input voltages as shown in Fig. 2.4.1: core/cache logic (Vdd), cache arrays (Vcs), nest logic (Vdn), two PHY voltages (Vio, Vpci), stand-by logic (Vsb), a high-precision reference voltage (Vref), DPLL voltage (VDPLL), analog circuitry voltage (VAVDD), and an interface voltage (V3P3). The C4 array contains 24477 total connections (1.25× of [3]) with 10867 power, 11879 ground and 1731 signal connections. A core and its associated L2 cache are power-gated together, while the L3 cache is power-gated independently.
Scaling from 14nm SOI to 7nm bulk technology forced numerous design innovations. Power grid robustness is bolstered with 19μF (0.4× of [3]) of on-chip capacitance along with additional sensors for droop detection. The change from SOI’s sparse buried insulator ties to bulk’s denser nwell/substrate contacts resulted in 32× as many placed cells consuming 14× as much area as the previous design, driving new algorithms for cell insertion around large latch clusters and voltage regions.
A new hierarchical antenna infrastructure was created, aware of macro dimensions, pin locations, and multi-sink nets, with path-aware insertion of wire jumpers and diodes to enable concurrent hierarchical design. Increased wire parasitics and 1× metal layer rule constraints drove extensive use of via meshes, particularly on wide-wire timing-critical nets. Place and route innovations coupled with a 9 tracks-per-bit library cell image improved silicon utilization and routability without sacrificing performance. SER-resilient latches with redundant state-saving nodes were used, trading 2.5× latch size in <0.5% of architecturally critical instances. As shown in Fig. 2.4.2, the clock infrastructure uses a pair of redundant reference clocks with dynamic switch-over capability for RAS. 34 PLL/DPLLs control 90 independent meshes across the chip. A complex network of 20 differential multiplexers allow a multitude of reference clocks with varying spread-spectrum and jitter capability into the PLL/DPLLs. A single DPLL generates synchronous clocks for the 8 design tiles, each of which contains four 1:1 resonant meshes (tuned to 2.8GHz), four 2:1 non-resonant meshes, and a chip-wide nest / fabric mesh. Within each design tile, 4 skew-sensors, 4 skew-adjusts, and 4 programmable delays continuously align all running meshes to the chip-wide nest mesh within 15ps across the entire voltage range. Another DPLL generates groups of synchronous Power Accelerator Unit (PAU) clocks, where each PAU portion can be independently mesh-gated. The clock design can also choose between an on-chip-generated PCIe reference clock or two off-chip PCIe reference clocks to further improve its spread-spectrum and jitter. Similar to [3], the programmability of clock drive strengths, pulse widths, and resonance mode reduces clock power by 18% over traditional designs. Four variants of customized SRAM cells constitute over 200MB of on-die memory. 
A performance-optimized lower threshold voltage (VT) 0.032μm2 6 transistor (6T) SRAM cell with single- and dual-port versions is used in 7 high-speed core arrays and single-port compilable SRAMs. The dense L2/L3 caches use a leakage-optimized 6T 0.032μm2 cell with dual supply, while a 0.054μm2 8 transistor (8T) SRAM cell is used in two-port compilable arrays. A larger menu (1.5× of [3]) of different ground rule clean cells is used in 10 custom plus several compilable multi-port register files, 3 content-addressable memories (CAM) and synthesized memories. SRAM arrays have optional write-assist circuitry applying negative bitline boost or local voltage collapse for banked 6T designs to support operation down to 0.45V. Most of the array peripheral logic (decoder, latches, IO and test) is synthesized, with structured placement enabling in-context optimization, logic and latch sharing and simplified custom components [4].
The SMT8 core was optimized for both single thread and overall throughput performance on enterprise-scale workloads. Core microarchitecture enhancements [5] include 1.5× instruction cache, 4× L2 cache, and 4× TLB, all with constant or improved latency, larger branch predictors and significantly increased vector SIMD throughput compared to [3]. The core additionally includes four 512b matrix-multiply assist (MMA) units. The MMA unit shares the core clock mesh to reduce overall instruction latency, yet has a separate power domain that is dynamically power-gated off when not in use to optimize energy efficiency. The core physical design consists of fully abutted physical hierarchies, with the instruction and execution control units flanking the load store and the arithmetic units, placed below the MMA (Fig. 2.4.1). Traditional logical unit boundaries were dissolved, and content was redistributed into large floorplanned blocks. Each resulting block utilized all metal levels except the UTM layers.
This improved methodology removed 2 levels of physical hierarchy, enabled efficient area and metal usage in the core, and resulted in 13 synthesized blocks (0.1× of [3]) with an average of 800k nets, 750k cells and a total of 404 hard array instances.
Each core chiplet includes 6 digital thermal sensors (DTS), 2 digital droop sensors (DDS) with associated controllers for thermal and voltage management, along with 4 process-sensitive ring oscillators (PSRO). The DTS and DDS are located at high thermal and voltage stress locations in the design as shown in Fig. 2.4.3, superimposed on a thermal map of a 10-core enabled processor running a core-heavy workload. Additionally, 14 DTS, 8 high-precision analog thermal diodes, 16 PSROs (distributed across two voltage rails), 12 skitters for clock jitter monitoring and a composite array of process monitors are distributed across the rest of the chip.
The high-bandwidth performance-critical race track is structured through the design tiles on metal10 and above, enabling improved silicon efficiency and reduced latency. The synchronous portion of the nest also includes power management, 2 memory management units, interrupt handling, a compression unit, test infrastructure and system configuration and control logic. Additionally, 6 accelerator functions, 4 memory controllers, 2 PCIe host bridge units, data and transaction link logic, all on the Vdn rail, operate asynchronous to the core, while tied to the I/O speeds. These components, 12 of which can be selectively power gated (Fig. 2.4.4), are built in a modular fashion (identical across the east / west regions), primarily at metal8 ceiling to prioritize racetrack wiring.
POWER10™ features 144 lanes of high-speed serial I/O capable of running 25-to-32.5Gb/s at 5pJ/b supporting the OpenCAPI protocol and SMP interconnect providing 585GB/s of bandwidth in each direction as shown in Fig. 2.4.4.
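The 585GB/s per-direction figure is consistent with all 144 lanes running at the top 32.5Gb/s rate. The sketch below shows that arithmetic for both ends of the quoted speed range; it assumes raw line rate with no encoding or protocol overhead, and the names are illustrative rather than taken from the design.

```python
# Lane count and rates from the text; raw line rate is assumed (no overhead).
SMP_LANES = 144
MIN_LANE_GBPS = 25.0
MAX_LANE_GBPS = 32.5


def aggregate_gbytes_per_s(lanes, lane_gbps):
    """Aggregate one-direction bandwidth in GB/s for identical serial lanes."""
    return lanes * lane_gbps / 8.0


smp_low = aggregate_gbytes_per_s(SMP_LANES, MIN_LANE_GBPS)
smp_high = aggregate_gbytes_per_s(SMP_LANES, MAX_LANE_GBPS)
print(f"SMP/OpenCAPI: {smp_low:.0f} to {smp_high:.0f} GB/s per direction")
```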
To support up to 30dB of channel loss, an Rx architecture using a 3-tap decision feedback equalizer (DFE) with continuous-time linear equalization (CTLE) and LTE was used, as well as a series-source terminated style Tx utilizing 2 taps of feed-forward equalization and duty-cycle correction circuitry. A dual-bank architecture on the receiver enables complete recalibration of analog coefficients during runtime. Sixteen OpenCAPI Memory Interface (OMI)/DDIMM 8-lane buses capable of running at 21-to-32Gb/s, designed with the same PHY architecture as the SMP interconnect, provide 409.6GB/s of bandwidth. Thirty-two lanes of industry-standard 32Gb/s PCIe Gen5-compatible PHYs are implemented with vendor IP; 16 of the 32 lanes are limited to Gen4, providing a total bandwidth of 96GB/s.
Energy efficiency was significantly improved through micro-architectural and design changes including improved clock gating and branch prediction, instruction fusion and reduced memory access [5]. Power consumption of functional areas and components is illustrated in Fig. 2.4.5. Splitting the VIO domain into multiple islands (Fig. 2.4.1) allows for system-specific power-supply enablement of only used interfaces. System-specific supply voltage modulation enables nest power to be maintained at less than 5%. Leakage power is maintained at less than 20% via aggressive multi-corner design optimization and intelligent usage of three different threshold voltage (VT) logic devices, with less than 3% usage of the fastest device type. A 25% increase in latches connected to a local clock buffer through improved library design, placement algorithms, and less than 3% usage of high-power latches enables power of sequential components of a core chiplet to be less than ~20%. The clock network consumes ~10% of the total power. These enhancements enable 65% of the power to be allocated to the core chiplets.
Frequency vs. voltage shmoo is shown in Fig. 2.4.6 for cores within a chip, and across process splits.
The shallower slope at higher voltage and faster process can be attributed to wire-dominated lowest VT paths being limiters. Frequency is boosted based on workload [6] up to an all-core product maximum of 4.15GHz. A POWER10™ processor can be packaged in a single-chip, as well as dual-chip module, enabling up to a maximum of 256 threads per socket.
References:
[1] Samsung 7nm Technology.
[2] W. C. Jeong et al., “True 7nm Platform Technology featuring Smallest FinFET and Smallest SRAM cell by EUV, Special Constructs and 3rd Generation Single Diffusion Break,” IEEE Symp. VLSI Tech., pp. 59-60, 2018.
[3] C. Gonzalez et al., “POWER9™: A Processor Family Optimized for Cognitive Computing with 25Gb/s Accelerator Links and 16Gb/s PCIe Gen4,” ISSCC, pp. 50-51, 2017.
[4] P. Salz et al., “A System of Array Families and Synthesized Soft Arrays for the POWER9™ Processor in 14nm SOI FinFET technology,” ESSCIRC, pp. 303-307, 2017.
[5] W. Starke, B. Thompto, “IBM’s POWER10™ Processor,” IEEE HotChips Symp., 2020.
[6] B. Vanderpool et al., “Deterministic Frequency and Voltage Enhancements on the POWER10™ Processor,” ISSCC, 2022.
Figure 2.4.1: POWER10 floorplan and voltage representation.
Figure 2.4.2: POWER10 clock distribution.
Figure 2.4.3: POWER10 sensors on 10 core thermal map for a core sensitive workload.
Figure 2.4.4: POWER10 interfaces and power gating.
Figure 2.4.5: Chip power components.
Figure 2.4.6: Frequency sensitivity across voltage and process.
Figure 2.4.7: POWER10 die micrograph (courtesy of Samsung).
2.5 A 5nm 3.4GHz Tri-Gear ARMv9 CPU Subsystem in a Fully Integrated 5G Flagship Mobile SoC
Ashish Nayak1, HsinChen Chen1, Hugh Mair1, Rolf Lagerquist1, Tao Chen1, Anand Rajagopalan1, Gordon Gammie1, Ramu Madhavaram1, Madhur Jagota1, CJ Chung1, Jenny Wiedemeier1, Bala Meera1, Chao-Yang Yeh2, Maverick Lin2, Curtis Lin2, Vincent Lin2, Jiun Lin2, YS Chen2, Barry Chen2, Cheng-Yuh Wu2, Ryan ChangChien2, Ray Tzeng2, Kelvin Yang2, Achuta Thippana1, Ericbill Wang2, SA Hwang2
1MediaTek, Austin, TX; 2MediaTek, Hsinchu, Taiwan
This paper presents a tri-gear ARMv9 CPU subsystem incorporated in a 5G flagship mobile SoC. Implemented in a 5nm technology node, a 3.4GHz High-Performance (HP) core is introduced along with circuit and implementation techniques to achieve CPU PPA targets. A die photograph is shown in Fig. 2.5.7. The SoC integrates a 5G modem supporting NR sub-6GHz with downlink and uplink speed up to 7.01Gb/s and 2.5Gb/s, respectively, an ARMv9 CPU subsystem, an ARM Mali G710 GPU for 3D graphics, an in-house Vision Processing Unit (VPU), and a Deep-Learning Accelerator (DLA) for high-performance and power-efficient AI processing. The integrated display engine can provide portrait panel resolution up to QHD+ 21:9 (1600×3360) and frame rates up to 144Hz. Multimedia and imaging subsystems decode 8K video at 30fps, while encoding 4K video at 60fps; camera resolutions up to 320MPixels are supported. LPDDR5-6400/LPDDR5X-7500 memory interfaces facilitate up to 24GB of external SDRAM over four 16b channels for a peak transfer rate of 0.46Tb/s.
All processor cores in the CPU subsystem incorporate the ARMv9 instruction set with key architectural advances. Memory Tagging Extension (MTE) enables greater security by locking data in the memory using a tag which can only be accessed by the correct key held by the pointer accessing the memory location, as shown in Fig. 2.5.1. Further, a Scalable Vector Extension 2 (SVE2) allows a scalable vector length in multiples of 128b, up to 2048b, enabling increased DSP and ML vector-processing capabilities, as shown in Fig. 2.5.1.
The heterogeneous CPU complex, shown in Fig. 2.5.2, is organized into 3 gears. The 1st gear is a single HP core which utilizes the ARMv9 Cortex-X2 microarchitecture with 64KB L1 instruction cache, 64KB L1 data cache, and a 1MB private L2 cache. The 2nd gear consists of three Balanced Performance (BP) cores utilizing the ARMv9 Cortex-A710 architecture, each with a 64KB L1 instruction cache, a 64KB L1 data cache, and a 512KB private L2 cache. The 3rd gear features four High Efficiency (HE) ARMv9 Cortex-A510 cores [1], with each core using a 64KB L1 instruction cache and a 64KB L1 data cache. Further, the HE CPU cores are implemented in pairs to facilitate the sharing of a 512KB L2 cache, floating-point and vector hardware between two CPU cores, improving area and power efficiency and maintaining full v9 compatibility, without sacrificing performance of key workloads. Finally, an 8MB L3 cache is shared across all the cores of the CPU complex.
The HP core runs up to 3.4GHz clock speed to meet high-speed compute demands, while the HE cores are optimized to operate efficiently at ultra-low voltage. The BP cores provide a balance of power and performance for average workloads. Depending on the dynamic computing needs, workloads can be seamlessly switched and assigned across different gears of the CPU subsystem enabling maximum power efficiency. Dynamic voltage and frequency scaling (DVFS) is employed along with adaptive voltage scaling to adjust operating voltage and frequency. Figure 2.5.1 demonstrates the power efficiency of the CPU subsystem achieving 27% improvement in single thread performance of the HP core over the BP core.
To achieve higher performance, several microarchitectural changes, listed in Fig. 2.5.3, were adopted across multiple pipeline stages in the HP / Cortex-X2. These microarchitectural advancements have led to an increase in instance count and silicon area of the X2 CPU, as shown in Fig. 2.5.3. Due to the increase in instance count, the implementation adopted a hierarchical approach to achieve an acceptable implementation turn-around time. The implementation followed the hierarchical strategy shown in Fig. 2.5.3, with two main sub-blocks integrated into the top level. The sub-block shape, pin assignments, and timing budgets were pushed down from top-level implementation. The sub-blocks were then implemented stand-alone meeting these requirements. The top-level implementation utilized abstracted timing and physical models, enabling a concurrent approach to sub-block and top-level implementation. While implementation was hierarchical, final timing sign-off remained flat, ensuring the interface timing between all blocks was met and eliminating the need for any additional timing margin. A similar flat approach was adopted for verifying power-grid integrity, layout versus schematic, and design rule checks.
All cores in the CPU subsystem are implemented using a 210nm tall standard cell library in TSMC 5nm. The HP core targets ULVT (ultra-low threshold voltage) devices with minimal use of ELVT (extra-low threshold voltage) devices on critical timing paths to meet the frequency target. On the other hand, the BP core targets low-leakage ULVT devices, avoiding any lower-VT devices, thereby reducing leakage power and extending the useable range of the BP core to lower voltage and frequency. Figure 2.5.4 shows the silicon measurement of Vmin vs. yield data collected from >1000 dies for the HP core demonstrating robust yield at 3.4GHz.
To enhance the capability of the on-die power supply monitoring, a high-bandwidth peak low detection capability is introduced which measures the lowest voltage of rapid supply voltage droops. Conventional data converters need to sample and convert at a multi-GHz rate to measure meaningful values, resulting in a power-hungry and area-consuming design. Prior solutions [2] utilized a single-bit converter with a predetermined voltage threshold. This solution, however, relies on under-sampling and the repetitive nature of the supply droop.
To address deficiencies of prior works, a hybrid peak low detector is developed with a high-speed peak low detection channel 0 and two low-speed conversion channels, shown in Fig. 2.5.5. In channel 0, one of the sampling capacitors (C0p or C0m) holds the previous peak low, while the other capacitor is sampling the current VDD. If the newly sampled VDD is lower than the previous peak low, the newly sampled voltage will be held. Cp and Cm are synchronized with C0p and C0m, respectively, therefore sampling and holding the same voltage as C0p and C0m. An 8b R2R DAC is used as the reference voltage for channel P and channel N. Once C0p (or C0m) holds a new peak low voltage, channel P (or channel M) will start a SAR conversion at a low clock rate to obtain the output code D. Since low-speed A2D conversion is only triggered with a new peak low event, the power consumption is significantly reduced. Die-level supply measurements utilizing this newly introduced circuit are shown in Fig. 2.5.6 where the waveform is a continuous single-pass capture.
To further improve the power efficiency of the CPU subsystem, this work proposes an adaptive voltage scaling (AVS) architecture by combining frequency-locked loop (FLL) and variation-resistant CPU speed binning technologies. The FLL architecture proposed in [3] addresses the challenge of limited power supply bandwidth, but is unable to cover for long-term (>ms) DC variations, such as supply load regulation or temperature variations. To achieve full-bandwidth protection and voltage margin reduction, a digitally controlled Ring Oscillator (ROSC) frequency-limiting mechanism is added to the FLL, to provide an operating condition reference. This is achieved by applying a minimum fine code (minFC) that the ROSC is allowed to operate on.
When the operating condition degrades, such as increased IR-drop, the FLL clock frequency will be limited to guarantee safe CPU operation. A voltage increase request, sent to the PMIC, is generated by comparing the FLL output frequency to the PLL input frequency. Conversely, when operating conditions improve and extra voltage margin is no longer needed, the ROSC will oscillate at PLL frequency with a fine code higher than minFC. A voltage decrease request will be sent to the PMIC to reduce the supply voltage until the FLL is frequency locked while using minFC for the ROSC. The minFC is derived through a post-silicon binning process using automated test equipment patterns. By enhancing the same concept of voltage and frequency relationship adjustment described in [4], this work proposes deriving the optimal ROSC configuration by sweeping the ROSC code from high (slower) to low (faster) while various workloads are executed, gradually increasing ROSC frequency until the test pattern fails. The minFC is then recorded as the optimal ROSC code that can still pass the silicon test. This utilizes the benefit of both ROSC and CPU being subjected to the same supply and temperature variations, hence reducing a large portion of the fixed voltage margin of the conventional approach.
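The binning sweep described above can be sketched as a simple search from slow to fast ROSC codes. This is a minimal illustration, not the authors' test flow: the function names, the 6b code range, and the pass/fail callbacks are all hypothetical, and combining workloads by taking the slowest per-workload result is our assumption.

```python
def find_min_fc(codes_high_to_low, passes):
    """Sweep ROSC fine codes from high (slower) to low (faster).

    `passes(code)` is a hypothetical callback that runs a test pattern with
    the ROSC limited to `code` and returns True if the part still passes.
    Returns the last passing code, or None if even the slowest code fails.
    """
    min_fc = None
    for code in codes_high_to_low:
        if not passes(code):
            break            # first failure ends the sweep
        min_fc = code        # fastest code seen so far that still passes
    return min_fc


def bin_min_fc(codes_high_to_low, workload_tests):
    """Assumed combination rule: keep the slowest (highest) per-workload code."""
    per_workload = [find_min_fc(codes_high_to_low, t) for t in workload_tests]
    if any(code is None for code in per_workload):
        return None
    return max(per_workload)


# Hypothetical part: three workloads that start failing below codes 37, 41, 33.
codes = range(63, -1, -1)
tests = [lambda c, limit=limit: c >= limit for limit in (37, 41, 33)]
print(bin_min_fc(codes, tests))
```

Because the sweep stops at the first failure, the recorded code always lies on the passing side of the part's limit for every workload exercised.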
Figure 2.5.6 shows the adaptive voltage scaling design, as well as the silicon result. This AVS technology is able to provide 4% supply voltage reduction based on different CPU workloads, while tracking closely with the target frequency at 100%.
In summary, a tri-gear ARMv9 CPU subsystem for a flagship 5G smartphone SoC is introduced with a high-performance core achieving 3.4GHz at robust yield and delivering up to 27% higher peak performance through microarchitectural and implementation advancements. Further, circuit innovation to enable continuous monitoring of on-die power supply is shown. Finally, a new variation-aware AVS technology is introduced to further improve CPU power efficiency.
References:
[1] “First Generation Armv9 High-Efficiency “LITTLE” Cortex CPU based on Arm DynamIQ Technology,” <https://www.arm.com/products/silicon-ip-cpu/cortex-a/cortexa510>.
[2] H. Mair et al., “A 10nm FinFET 2.8GHz Tri-Gear Deca-Core CPU Complex with Optimized Power-Delivery Network for Mobile SoC Performance,” ISSCC, pp. 56-57, 2017.
[3] H. Chen et al., “A 7nm 5G Mobile SoC Featuring a 3.0GHz Tri-Gear Application Processor Subsystem,” ISSCC, pp. 54-56, 2021.
[4] F. Atallah et al., “A 7nm All-Digital Unified Voltage and Frequency Regulator Based on a High-Bandwidth 2-Phase Buck Converter with Package Inductors,” ISSCC, pp. 316-318, 2019.
Figure 2.5.1: ARMv9 memory tagging, SVE2 performance chart, CPU power efficiency.
Figure 2.5.2: ARMv9 CPU cluster.
Figure 2.5.3: HP core microarchitecture, instance count and hierarchical implementation.
Figure 2.5.4: HP core silicon measurement data.
Figure 2.5.5: Peak low detector circuit and timing diagram.
Figure 2.5.6: Adaptive Voltage Scaling (AVS) using FLL and minimum ROSC code.
Figure 2.5.7: Die micrograph.
2.6 A 16nm 785GMACs/J 784-Core Digital Signal Processor Array with a Multilayer Switch Box Interconnect, Assembled as a 2×2 Dielet with 10µm-Pitch Inter-Dielet I/O for Runtime Multi-Program Reconfiguration
Uneeb Rathore*, Sumeet Singh Nagi*, Subramanian Iyer, Dejan Marković
University of California, Los Angeles, CA
*Equally Credited Authors (ECAs)
The increasing amount of dark silicon area in power-limited SoCs makes it attractive to consider reconfigurable architectures that could intelligently repurpose dark silicon. FPGAs are more efficient than CPUs, but lack the temporal dynamics of CPUs and the efficiency and throughput of accelerators. Coarse-grain reconfigurable arrays (CGRAs) can achieve higher throughput, but with a substantial energy-efficiency gap relative to accelerators and limited multi-program dynamics. This paper introduces a domain-specific and energy-efficient (within 2-10× of accelerators) multi-program runtime-reconfigurable 784-core processor array in 16nm CMOS. Our design maximizes generality for signal processing and linear algebra with minimal area and energy penalty. The main innovation is a statistics-driven multi-layer network which minimizes network delays, and a switch box that maximizes connectivity per hardware cost. The layered network is O(N) with the number of processing elements N, which allows monolithic or multi-dielet scaling. The network features deterministic routing and timing for fast program compile and hardware-resource reallocation, suitable for data-driven attentive processing, including program trace uncertainties and application dynamics. Further, this work demonstrates multiple functional dielets that have been integrated at 10μm bump pitch to build a monolithic-like scalable design.
The multi-dielet (2×2) scaling is enabled by energy-efficient high-bandwidth inter-dielet communication channels that seamlessly extend the intra-die routing network across dielet boundaries, quadrupling the number of compute resources.
The reconfigurable compute fabric (Fig. 2.6.1) consists of 2×2 Universal Digital Signal Processor (UDSP) dielets on a 10μm bump pitch Silicon Interconnect Fabric (Si-IF). Each dielet has 196 compute cores (14×14 array), a 3-layer interconnect network, 28 boundary Streaming Near Range 10μm (SNR-10) inter-dielet 64b I/O channels, a PLL for high-speed clock generation and a control interface. The control module supports run-time (RT) reconfiguration, including holding pre-written programs in soft reset, concurrent writing and execution of multiple programs, and collecting finished programs to make space or for reuse at a later time. The control module includes a program counter and associated memory for each of the cores, which is used to support algorithm dynamics.
The internal architecture of the core (Fig. 2.6.2, some connections omitted for clarity) contains two 16b adders and two 16b multipliers, four 16b inputs and four 16b outputs, 2-9 variable delay lines, a 256b data memory and a 384b instruction memory per program counter. In order to balance compute efficiency and flexibility, the core configuration is designed to support signal processing and basic linear algebra kernels such as FIR/IIR direct- and lattice-form filters, matrix multiplication, CORDIC, mixed-radix FFT, etc.
The internal architecture of SNR-10 (Fig. 2.6.2) uses standard-cell-based high-current buffers to drive short-distance (~100μm), low-impedance Si-IF inter-die communication channels. The SNR-10 channels can be configured for synchronous or asynchronous operation.
The built-in self-test allows the SNR-10 channel to heal hardware faults at chip start-up by routing around the faults using redundant pins and communicating the correction to its connected neighboring dielet through repair/control pads. Each SNR-10 channel houses 32 Tx and 32 Rx bits and an Rx FIFO to adjust for clock skew. The channel occupies 237×40μm², as dictated by the physical dimensions of Si-IF bumps. There is sufficient space to accommodate Duty-Cycle Correction (DCC) and Double Data Rate (DDR) features in future iterations. Figure 2.6.3 shows the layered interconnect network and the tile-able core, called Vertical Stack (VS). We analyze the Data-Flow Graphs (DFGs) of common DSP and linear algebra functions by clustering them into cores and deriving cluster statistics, such as node degree distributions. We generate DSP-like Bernoulli random graphs with similar statistics and sort them on a 2D Manhattan grid by minimizing the wire length cost $\sum_{\text{all wires}} (L_x + L_y)^2$. The distribution of obtained wire lengths is shown as a 2D PDF and CDF in Fig. 2.6.3. Based on the CDF, a distance-2 1-hop network can cover over 90% of the connectivity requirements of the domain. The VS consists of a compute core connected to three-layer switch boxes (SBs). The layer-1 SB interfaces with the compute core and provides distance-1 communication, the layer-2 SB provides distance-√2 communication, and the layer-3 SB provides distance-2 communication with adjacent VSs. Data can be registered only inside the compute core, and communication between SBs adds no pipeline registers, allowing single-clock-cycle communication between VSs at 1.1GHz in 16nm technology. The internal design and optimizations of SBs (Fig. 2.6.4) aim to reduce hardware cost while maximizing connectivity. We start with a multilayer SB design, where the number of layers and nodes per layer are treated as optimization parameters. 
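The wire-length cost used above to sort the random graphs on the Manhattan grid, and the idea of 1-hop coverage by the three SB layers, can be illustrated with a minimal Python sketch. The function names, the example placement, and the geometric reading of the layers (layer 1 reaches the four orthogonal neighbors, layer 2 the four diagonal neighbors, layer 3 the cores two steps away along a row or column) are assumptions made for illustration; the paper does not spell out the exact offsets.

```python
def wire_length_cost(placement, wires):
    """Sum of (Lx + Ly)^2 over all wires, where Lx and Ly are the
    horizontal and vertical spans of a wire on the 2D grid."""
    cost = 0
    for src, dst in wires:
        (x0, y0), (x1, y1) = placement[src], placement[dst]
        cost += (abs(x1 - x0) + abs(y1 - y0)) ** 2
    return cost


def covering_layer(dx, dy):
    """Return the switch-box layer (1, 2 or 3) that reaches the grid offset
    (dx, dy) in one hop, or None if more than one hop is needed.

    ASSUMPTION (not stated in the paper): distance-1 means orthogonal
    neighbors, distance-sqrt(2) means diagonal neighbors, and distance-2
    means two steps along a single row or column.
    """
    span = sorted((abs(dx), abs(dy)))
    if span == [0, 1]:
        return 1
    if span == [1, 1]:
        return 2
    if span == [0, 2]:
        return 3
    return None


def one_hop_coverage(placement, wires):
    """Fraction of wires that a single hop through some layer can serve."""
    covered = 0
    for src, dst in wires:
        (x0, y0), (x1, y1) = placement[src], placement[dst]
        if covering_layer(x1 - x0, y1 - y0) is not None:
            covered += 1
    return covered / len(wires)


# Hypothetical 4-cluster placement and wire list, for illustration only.
placement = {"a": (0, 0), "b": (1, 0), "c": (1, 1), "d": (3, 0)}
wires = [("a", "b"), ("a", "c"), ("a", "d")]

print(wire_length_cost(placement, wires))   # 1 + 4 + 9
print(one_hop_coverage(placement, wires))   # a-d spans 3 columns, so it is not 1-hop
```

A placement optimizer would call `wire_length_cost` as its objective; the squared term penalizes long wires more heavily than many short ones, which pushes the wire-length distribution toward the short distances that the three layers cover.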
The connections between the layers are converted to a hyper-matrix representation where each entry in the hyper-matrix represents a complete path through the SB. The connections are pruned by minimizing hyper-row cross-correlations of the matrix. At every prune step, the SB design is tested for Mean Connections Before Failure (MCBF), the number of successfully mapped random input-output pairs before a routing conflict, against the Hardware Cost (HwC) in terms of 2-input MUXes. The selected switch box architecture maximizes the ratio of MCBF to HwC. The setup for UDSP testing (Fig. 2.6.5) includes a compiler flow supporting RT reconfiguration. The user input to the compiler is a DFG. A library of commonly used blocks such as FFT or vector MAC can be designed to simplify and speed up programming. The compiler retimes and clusters the DFG, then maps I/O to the SNR-10 channels and arithmetic units to cores, maximizing core utilization. The clustered DFG is assigned to the array grid and placement of the cores is optimized using simulated annealing, with timing-aware masks. The SBs inside the VSs are configured nearly independently and, because of the deterministic nature of the SB, the routing process can quickly inspect nearly all of the SB routes in parallel. If the routing fails, the compiler optimizes the program placement, core mappings and clustering iteratively to achieve a successful compilation. At the end, the compiler generates metadata pertaining to the bounding boxes of the program and I/O port map to help the run-time scheduler achieve a fast run-time placement of programs. The modularity and symmetry of the array allow the scheduler to use the program metadata to quickly perform program relocation based on runtime dynamics and current hardware resource utilization of other programs on the array, and also to use quick SB routing to route the I/Os of the program to the external I/O ports. The 2×2 multi-dielet UDSP assembly (Figs. 
2.6.1 and 2.6.7) features 784 cores on an Si-IF with 10μm inter-dielet I/O links [1]. The VSs on the die edge communicate seamlessly over the Si-IF interposer using the multilayer SBs and SNR-10 channels. The intra-dielet SB interconnect naturally extends across dielet boundaries for a multi-dielet configuration. The clock is sourced from the PLL of one of the dielets and distributed as a balanced tree on the interposer. The plot of power and frequency vs. supply voltage (Fig. 2.6.6) shows a maximum frequency of 1.1GHz at 0.8V for high-throughput applications. A peak energy efficiency of 785GMACs/J is achieved at 0.42V and 315MHz, for energy-sensitive applications. DSP algorithms including beamforming, FIR filters, and matrix-multiply show algorithm-independent, line-rate throughput as well as >90% utilization of the allocated cores. The FFT achieves 53% utilization due to its intrinsic multiplier-to-adder ratio of ~0.6 (for large FFT sizes), as compared to the ratio of 1 inside the core. The energy efficiency is still high, at 4.9pJ per complex radix-2, by keeping the unused elements in soft-reset. The SNR-10 I/O links connecting the 4 dielets are stress-tested for efficiency and operating frequency. With a data rate of 1.1Gbps/pin at 0.8V, the channel achieves a 70.4Gbps bandwidth with a shoreline density of 297Gbps/mm. This is achieved with a 2-layer Si-IF and 10μm bump pitch. While state-of-the-art multi-chip modules (MCMs) use lower swing and/or lower frequency [2,3] to increase energy efficiency, the SNR-10 link achieves a better energy efficiency of 0.38pJ/b, a figure that accounts for the total power draw of the Tx and Rx channels and the wire links. The gain comes from the smaller capacitance of shorter-reach wires, smaller pads (due to the fine pitch), smaller ESD, and simpler unidirectional drivers. 
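The headline numbers for the array and the SNR-10 link follow from figures quoted in the text, and a short Python sketch makes the arithmetic explicit. The constant names are illustrative. Treating the 237μm side of the channel as the side that lies along the die edge is an assumption; it is used here because it reproduces the quoted 297Gbps/mm shoreline density.

```python
# Figures quoted in the text.
DIELETS = 2 * 2                 # 2x2 assembly
CORES_PER_DIELET = 14 * 14      # 196 cores per dielet
CHANNELS_PER_DIELET = 28        # SNR-10 channels per dielet
BITS_PER_CHANNEL = 32 + 32      # 32 Tx + 32 Rx bits per channel
PIN_RATE_GBPS = 1.1             # per-pin rate at 0.8V
PEAK_GMACS_PER_JOULE = 785      # peak efficiency at 0.42V, 315MHz

# ASSUMPTION: the 237um side of the 237x40um^2 channel faces the die edge.
CHANNEL_SHORELINE_MM = 0.237

total_cores = DIELETS * CORES_PER_DIELET
total_channels = DIELETS * CHANNELS_PER_DIELET
channel_bandwidth_gbps = BITS_PER_CHANNEL * PIN_RATE_GBPS
shoreline_density_gbps_per_mm = channel_bandwidth_gbps / CHANNEL_SHORELINE_MM

# Energy per MAC is the reciprocal of MACs per joule (1 J = 1e12 pJ).
energy_per_mac_pj = 1e12 / (PEAK_GMACS_PER_JOULE * 1e9)

print(f"cores: {total_cores}, channels: {total_channels}")
print(f"channel bandwidth: {channel_bandwidth_gbps:.1f} Gbps")
print(f"shoreline density: {shoreline_density_gbps_per_mm:.0f} Gbps/mm")
print(f"energy per MAC at peak efficiency: {energy_per_mac_pj:.2f} pJ")
```

The core and channel totals match the 784 cores and 112 channels in the caption of Fig. 2.6.1, and the per-channel bandwidth matches the quoted 70.4Gbps.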
Acknowledgement: The authors thank SivaChandra Jangam and Krutikesh Sahoo for help with Si-IF packaging and assembly, Sina Basir-Kazeruni for help with layout, DARPA CHIPS and DRBE programs for funding support.

References:
[1] S. Jangam et al., “Demonstration of a Low Latency (<20 ps) Fine-pitch (≤10 μm) Assembly on the Silicon Interconnect Fabric,” IEEE Elec. Components and Tech. Conf., pp. 1801-1805, 2020.
[2] C. Liu, J. Botimer, and Z. Zhang, “A 256Gb/s/mm-shoreline AIB-Compatible 16nm FinFET CMOS Chiplet for 2.5D Integration with Stratix 10 FPGA on EMIB and Tiling on Silicon Interposer,” IEEE CICC, 2021.
[3] M. Lin et al., “A 7-nm 4-GHz Arm¹-Core-Based CoWoS¹ Chiplet Design for High-Performance Computing,” IEEE JSSC, vol. 55, no. 4, pp. 956-966, 2020.
[4] J. M. Wilson et al., “A 1.17pJ/b 25Gb/s/pin Ground-Referenced Single-Ended Serial Link For Off- and On-Package Communication in 16nm CMOS Using a Process- and Temperature-Adaptive Voltage Regulator,” ISSCC, pp. 276-278, 2018.

ISSCC 2022 / February 21, 2022 / 9:30 AM

Figure 2.6.1: Top-level architecture, multi-chip reconfigurable fabric with 4 UDSPs, 784 cores and 112 channels.
Figure 2.6.2: UDSP core and SNR-10 channel design.
Figure 2.6.3: Analysis of algorithm statistics and design of a delay-less 3D multi-layer interconnect.
Figure 2.6.4: Switchbox design space exploration and optimal switchbox architecture.
Figure 2.6.5: Automated compiler, run-time scheduler and measurement setup.
Figure 2.6.6: Chip performance, power and efficiency measurements, and inter-dielet communication protocol comparison.
Figure 2.6.7: UDSP chip summary and micrograph. 
2.7 Zen3: The AMD 2nd-Generation 7nm x86-64 Microprocessor Core

Thomas Burd¹, Wilson Li¹, James Pistole¹, Srividhya Venkataraman¹, Michael McCabe¹, Timothy Johnson¹, James Vinh¹, Thomas Yiu¹, Mark Wasio¹, Hon-Hin Wong¹, Daryl Lieu¹, Jonathan White², Benjamin Munger², Joshua Lindner², Javin Olson², Steven Bakke², Jeshuah Sniderman², Carson Henrion³, Russell Schreiber⁴, Eric Busta³, Brett Johnson³, Tim Jackson³, Aron Miller³, Ryan Miller³, Matthew Pickett³, Aaron Horiuchi³, Josef Dvorak³, Sabeesh Balagangadharan⁵, Sajeesh Ammikkallingal⁵, Pankaj Kumar⁵
¹AMD, Santa Clara, CA
²AMD, Boxborough, MA
³AMD, Fort Collins, CO
⁴AMD, Austin, TX
⁵AMD, Bangalore, India

“Zen 3” is the first major microarchitectural redesign in the AMD Zen family of microprocessors. Given the same 7nm process technology as the prior-generation “Zen 2” core [1], as well as the same platform infrastructure, the primary “Zen 3” design goals were to provide: 1) a significant instruction-per-cycle (IPC) uplift, 2) a substantial frequency uplift, and 3) continued improvement in power efficiency. The core complex unit (CCX) consists of 8 “Zen 3” cores, each with a 0.5MB private L2 cache, and a 32MB shared L3 cache. Increasing this from 4 cores and 16MB L3 in the prior generation provides additional performance uplift, in addition to the IPC and frequency improvements. The “Zen 3” CCX shown in Fig. 2.7.1 contains 4.08B transistors in 68mm², and is used across a broad array of client, server, and embedded market segments. The “Zen 3” design has several significant microarchitectural improvements. A high-level block diagram is shown in Fig. 2.7.2. 
The front-end had the largest number of changes, including a 2× larger L1 Branch-Target-Buffer (BTB) at 1024 entries, improved branch-predictor bandwidth from removing the pipeline bubble on predicted branches, faster recovery from mispredicted branches, and faster sequencing of op-cache fetches. In the execution core, the integer-unit issue width was increased from 7 to 10, including dedicated branch and store pipes, and the reorder buffer was increased by 32 entries to 256; in the floating-point unit, the issue width was increased from 4 to 6 and the FMAC latency was reduced from 5 to 4 cycles. In the load-store unit, the maximum load and store bandwidths were each increased by one, to 3 and 2, respectively, and the translation look-aside buffer (TLB) was enhanced with 4 additional table walkers for a total of 6. Overall, the “Zen 3” core delivers +19% average IPC uplift over “Zen 2” across a range of 25 single-threaded industry benchmarks and gaming applications, with some games showing greater than +30% [2]. To support the change to an 8-core CCX for “Zen 3,” and the corresponding increase in L3 cache slices from 4 to 8, the cache communication network was converted to a new bi-directional ring bus with 32B in each direction, providing both high bandwidth and low latency. The 8-core CCX with 32MB L3 gives each core access to 2× the local L3 cache of “Zen 2,” providing significant uplift on many lightly-threaded applications. The L3 cache also contains the through-silicon vias (TSVs) to support AMD V-Cache, allowing an additional 64MB AMD 3D V-Cache to attach to the base die via direct copper-to-copper bond, which can triple the L3 capacity per CCX to 96MB. Another key change from “Zen 2” was converting the L3 cache from the high-current (HC) bitcell to the high-density (HD) bitcell, yielding an area improvement of 14%, and a leakage reduction of 24% of the cache array, all while matching the higher core clock frequencies. 
The critical physical design challenge of the new “Zen 3” cache was the implementation of the ring bus and the interface to the AMD V-Cache. As can be seen in Fig. 2.7.3, two columns of TSVs run down the left and right halves of the cache, providing connectivity to the stacked AMD V-Cache that effectively triples the memory capacity of each slice. The TSV interface supports >2Tb/s per slice, for an aggregate bandwidth between the two dies of >2TB/s. The ring bus has a cross-sectional bandwidth of >2Tb/s to match the peak core-L3 bandwidth. Architecturally, the L3 cache supports up to 64 outstanding misses from each core to the L3, and 192 outstanding misses from L3 to external memory. “Zen 3” uses the same 13-metal telescoping stack as “Zen 2,” optimized for density at the lower layers and for speed on the upper layers [1]. A key goal of “Zen 3” was to increase frequency at fixed voltage by 4% across the full range of voltage, with a 6% increase at high voltage to drive a corresponding improvement in single-thread performance. Median silicon measurements of frequency vs. voltage are shown in Fig. 2.7.4, demonstrating success in meeting the goal, and more importantly, the ability to achieve superior single-thread operation at 4.9GHz. Structured logic placement, judicious cell selection, targeted use of low VTs, and wire engineering were used to drive the large frequency increase at high voltage, and the tiles of the core were kept small to enable multiple design iterations per week. To deliver the average IPC uplift of 19% and frequency increase of 6%, the CCX effective switched capacitance (Cac) increased by 15%. Maintaining a ratio of ΔCac/ΔIPC at less than one produces a more power-efficient core. A breakdown of the “Zen 3” Cac is shown in Fig. 2.7.5. 
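Two of the relationships quoted for the “Zen 3” CCX can be checked with a few lines of Python: the per-slice TSV bandwidth against the aggregate die-to-die bandwidth, and the ΔCac/ΔIPC ratio. The variable names are illustrative, and the quoted ">2Tb/s" figure is treated as a lower bound of exactly 2Tb/s, so the result is itself a lower bound.

```python
# Figures quoted in the text.
L3_SLICES = 8                    # one L3 slice per core in the 8-core CCX
TSV_TBITS_PER_S_PER_SLICE = 2.0  # ">2Tb/s per slice", used as a lower bound
BASE_L3_MB = 32                  # shared L3 on the base die
VCACHE_MB = 64                   # stacked AMD 3D V-Cache
DELTA_CAC = 0.15                 # +15% effective switched capacitance
DELTA_IPC = 0.19                 # +19% average IPC

# Aggregate die-to-die bandwidth: slices x per-slice rate, bits -> bytes.
aggregate_tbits_per_s = L3_SLICES * TSV_TBITS_PER_S_PER_SLICE
aggregate_tbytes_per_s = aggregate_tbits_per_s / 8

# Stacked capacity relative to the base die.
total_l3_mb = BASE_L3_MB + VCACHE_MB
capacity_multiple = total_l3_mb / BASE_L3_MB

# The text's efficiency criterion: keep delta-Cac / delta-IPC below one.
cac_to_ipc_ratio = DELTA_CAC / DELTA_IPC

print(f"aggregate TSV bandwidth: >{aggregate_tbytes_per_s:.0f} TB/s")
print(f"L3 with V-Cache: {total_l3_mb} MB ({capacity_multiple:.0f}x base)")
print(f"dCac/dIPC: {cac_to_ipc_ratio:.2f}")
```

Eight slices at 2Tb/s each give 16Tb/s, or 2TB/s, which is consistent with the quoted aggregate figure. A ratio of about 0.79 means switched capacitance grew more slowly than IPC.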
The fraction of Cac consumed by clock gaters has increased slightly over the previous generation and the fraction consumed by flops and combinational logic has decreased slightly due to the additional effort to improve the clock gating efficiency of “Zen 3.” A modest increase in leakage power was outweighed by the frequency increase to further improve power efficiency. While the Cac and leakage increases lead to higher power at fixed voltage, at iso-performance the “Zen 3” core delivers up to 20% higher performance/W, as shown in Fig. 2.7.6. The “Zen 3” core IP was simultaneously used in two distinct tape-outs. The first was the “Zen 3” core complex die (CCD) chiplet, comprising the CCX, a system management unit (SMU), and an Infinity Fabric On-Package (IFOP) SerDes link to connect to a separate IO die (IOD). The primary upgrade from the prior “Zen 2” CCD [3] was replacing the two 4-core “Zen 2” CCXs with a single 8-core “Zen 3” CCX, which allowed for a more streamlined on-die Infinity Fabric to connect only one CCX and leveraged the remaining IP from the prior-generation CCD. The size of this CCD is 81mm², containing 4.15B transistors. Similar to the prior generation, the CCD was combined with a low-cost 12nm IOD to provide very cost-effective performance, and the modularity of chiplets enabled product configurations to service the entire breadth of the server and desktop PC markets. AMD “Milan” server products combine the server IOD with 2-to-8 “Zen 3” chiplets to deliver a cost-optimized product stack from 16-to-64 cores. AMD “Vermeer” client products combine the much smaller client IOD with 1-to-2 “Zen 3” chiplets to cover the spectrum from top-end 16-core performance desktop products to mainstream 8-core value products. The second tape-out was AMD “Cezanne”, a monolithic APU, which upgraded the prior generation APU [4] from two “Zen 2” CCXs to a single “Zen 3” CCX, having the same core as in the CCD and a cut-down 16MB L3 Cache. 
This die, measuring 180mm² with 10.7B transistors, contains an 8-compute-unit Vega graphics engine, a multimedia engine, a display engine, an audio engine, two DDR4/LPDDR4 memory channels, as well as PCIe, USB and SATA ports. Combining both the IPC and frequency gains of “Zen 3” over “Zen 2” yields significant performance gains in both single- and multi-thread performance across the entire spectrum of product segments. Figure 2.7.7 shows a 17% increase in the single-thread (1T) Cinebench R20 score on the “Vermeer” desktop part: 13% from IPC and 4% from increased frequency. The multi-threaded Cinebench R20 score on the “Cezanne” mobile APU improves by 14%. Despite being constrained to the same process technology as the prior generation, the “Zen 3” family of products delivers a substantial boost in performance over the prior generation through the combination of a ground-up redesign of the underlying Zen microarchitecture and the innovative work of the physical design team to achieve up to 6% higher frequency at the same voltage.

References:
[1] T. Singh et al., “Zen 2: The AMD 7nm Energy-Efficient High-Performance x86-64 Microprocessor Core,” ISSCC, pp. 42-43, 2020.
[2] M. Evers et al., “Next Generation ‘Zen 3’ Core,” IEEE Hot Chips Symp., 2021.
[3] S. Naffziger et al., “AMD Chiplet Architecture for High-Performance Server and Desktop Products,” ISSCC, pp. 44-45, 2020.
[4] S. Arora et al., “AMD Next Generation 7nm Ryzen 4000 APU ‘Renoir’,” IEEE Hot Chips Symp., 2020.

ISSCC 2022 / February 21, 2022 / 9:40 AM

Figure 2.7.1: Die photo of “Zen 3” core complex unit (CCX).
Figure 2.7.2: “Zen 3” architecture.
Figure 2.7.3: L3 ring bus design.
Figure 2.7.4: Frequency improvement.
Figure 2.7.5: Cac breakdown.
Figure 2.7.6: Performance (average) versus power for one 8C32M “Zen 3” CCX versus two 4C16M “Zen 2” CCXs. 
Figure 2.7.7: Cinebench R20 performance improvement.