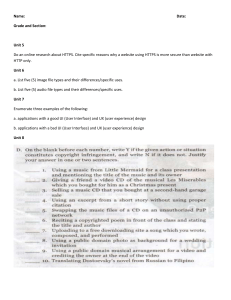

18MAB204T-U1-Common-Lecture Notes


18MAB204T-Probability and Queueing Theory

Prepared by


Dr. S. ATHITHAN


Assistant Professor


Department of Mathematics

Faculty of Engineering and Technology


SRM INSTITUTE OF SCIENCE AND TECHNOLOGY

Kattankulathur-603203, Chengalpattu District.


Contents

1 Probability (Review)
2 Discrete and Continuous Distributions
3 Mathematical Expectation
4 Moments and Moment Generating Function
5 Examples
6 Function of a Random Variable
7 Exercise/Practice/Assignment Problems


https://sites.google.com/site/lecturenotesofathithans/home

1 Probability (Review)

1.1 Definition of Probability and Basic Theorems

1.1.1 Random Experiment

An experiment whose outcome or result can be predicted with certainty is called a deterministic experiment.

An experiment in which all possible outcomes may be known in advance, but the outcome of a particular performance of the experiment cannot be predicted owing to a number of unknown causes, is called a random experiment.

1.1.2 The Approaches to Define the Probability

Definition 1.1.1 (Sample Space). Set of all possible outcomes which are assumed to be equally

likely is called the sample space and is usually denoted by S. Any subset A of S containing

favorable outcomes is called an event.

Definition 1.1.2 (Mathematical or Apriori Definition of Probability). Let S be a sample space and A be an event associated with a random experiment. Let n(S) and n(A) be the number of elements of S and A. Then the probability of event A occurring, denoted by P(A), is defined as

$$P(A) = \frac{n(A)}{n(S)} = \frac{\text{Number of cases favorable to } A}{\text{Exhaustive number of cases in } S \text{ (set of all possible cases)}}$$

Definition 1.1.3 (Statistical or Aposteriori Definition of Probability). Let a random experiment be repeated n times and let an event A occur $n_1$ times out of n trials. The ratio $\frac{n_1}{n}$ is called the relative frequency of the event A. As n increases, $\frac{n_1}{n}$ shows a tendency to stabilize and to approach a constant value, which is denoted by P(A) and is called the probability of the event A, i.e.,

$$P(A) = \lim_{n \to \infty} \frac{n_1}{n}$$

Definition 1.1.4 (Axiomatic Approach/Definition of Probability). Let S be a sample space and

A be an event associated with a random experiment. Then the probability of the event A is

denoted by P(A) is defined as a real number satisfying the following conditions/axioms:

(i) 0 ≤ P (A) ≤ 1

(ii) P(S)=1

(iii) If A and B are mutually exclusive events, then P (A ∪ B) = P (A) + P (B)

Axiom (iii) can be extended to an arbitrary number of events, i.e., if $A_1, A_2, \dots, A_n, \dots$ are mutually exclusive, then $P(A_1 \cup A_2 \cup A_3 \cup \dots \cup A_n \cup \dots) = P(A_1) + P(A_2) + P(A_3) + \dots + P(A_n) + \dots$

Theorem 1.1. The probability of the impossible event is zero. i.e., if ϕ is a subset of S containing

no event, then P (ϕ) = 0.


Theorem 1.2. If $\bar{A}$ is the complementary event of A, then $P(\bar{A}) = 1 - P(A) \le 1$.

Theorem 1.3 (Addition Theorem of Probability). If A and B be any two events, then P (A ∪

B) = P (A) + P (B) − P (A ∩ B) ≤ P (A) + P (B).


The above Theorem can be extended for 3 events which is given by P (A ∪ B ∪ C) =

P (A) + P (B) + P (C) − P (A ∩ B) − P (B ∩ C) − P (C ∩ A) + P (A ∩ B ∩ C)


Theorem 1.4. If B ⊂ A, then P (B) ≤ P (A).

1.2 Conditional Probability and Independent Events

The conditional probability of an event B, assuming that the event A has happened, is denoted by P(B/A) and is defined as

$$P(B/A) = \frac{P(A \cap B)}{P(A)}, \quad \text{provided } P(A) \neq 0$$

Theorem 1.5 (Product Theorem of Probability). P (A ∩ B) = P (A) · P (B/A)


The product theorem can be extended to three events such as


P (A ∩ B ∩ C) = P (A) · P (B/A) · P (C/A and B)

There are a few further properties of conditional probability. Please go through them in the textbook.

When two events A and B are independent, we have P(B/A) = P(B) and the Product Theorem takes the form $P(A \cap B) = P(A) \cdot P(B)$. The converse of this statement is also true, i.e., if $P(A \cap B) = P(A) \cdot P(B)$, then the events A and B are said to be independent.

This result can be extended to any number of events. i.e., If A1 , A2 , . . . , An be n no. of

events, then

P (A1 ∩ A2 ∩ A3 ∩ · · · ∩ An ) = P (A1 ) · P (A2 ) · P (A3 ) · · · · P (An )

Theorem 1.6. If the events A and B are independent, then the events $\bar{A}$ and B (and similarly A and $\bar{B}$, $\bar{A}$ and $\bar{B}$) are also independent.

1.3 Total Probability

Theorem 1.7 (Theorem of Total Probability). If $B_1, B_2, \dots, B_n$ is a set of exhaustive and mutually exclusive events and A is another event associated with (or caused by) $B_i$, then

$$P(A) = \sum_{i=1}^{n} P(B_i)\, P(A/B_i)$$
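The theorem of total probability can be illustrated with a short numerical sketch. The data below are hypothetical (three sources $B_1, B_2, B_3$ with made-up shares and conditional probabilities; they do not come from these notes), and the function name `total_probability` is only an illustrative choice.

```python
from fractions import Fraction

def total_probability(priors, likelihoods):
    """Return P(A) = sum_i P(B_i) * P(A/B_i) for exhaustive, mutually exclusive B_i."""
    if sum(priors) != 1:
        raise ValueError("the P(B_i) must sum to 1 (exhaustive, mutually exclusive events)")
    return sum(p * l for p, l in zip(priors, likelihoods))

# Hypothetical values, assumed only for illustration
priors = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]               # P(B_1), P(B_2), P(B_3)
likelihoods = [Fraction(1, 100), Fraction(2, 100), Fraction(3, 100)]     # P(A/B_i)

p_a = total_probability(priors, likelihoods)
print(p_a)  # 17/1000
```

Exact fractions are used so that the check that the $P(B_i)$ sum to 1 is not affected by floating-point rounding.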

2 Discrete and Continuous Distributions

Definition 2.0.1. A Random Variable (abbreviated as RV) is a function that assigns a real

number X(s) to every element s ∈ S, where S is the sample space corresponding to a

random experiment E.

Discrete Random Variable


If X is a random variable (RV) which can take a finite number or countably infinite number

of values, X is called a discrete RV. When the RV is discrete, the possible values of X may

be assumed as x1 , x2 , . . . , xn , . . . . In the finite case, the list of values terminates and in the

countably infinite case, the list goes up to infinity.

For example, the number shown when a die is thrown and the number of alpha particles emitted

by a radioactive source are discrete RVs.


Probability Mass Function


If X is a discrete RV which can take the values x1 , x2 , x3 , . . . such that P (X = xi ) = pi ,

then pi is called the PROBABILITY FUNCTION or PROBABILITY MASS FUNCTION or POINT

PROBABILITY FUNCTION , provided pi (i = 1, 2, 3, . . . ) satisfy the following conditions:

(a) $p_i \ge 0$, for all i, and

(b) $\sum_i p_i = 1$

The collection of pairs {xi , pi }, i = 1, 2, 3, . . . is called the probability distribution of the

RV X, which is sometimes displayed in the form of a table as given below:

| $X = x_i$ | $x_1$ | $x_2$ | $x_3$ | ... | $x_n$ | ... |
|---|---|---|---|---|---|---|
| $P(X = x_i)$ | $p_1$ | $p_2$ | $p_3$ | ... | $p_n$ | ... |

Continuous Random Variable


If X is a RV which can take all values (i.e., infinite number of values) in an interval, then X is

called a continuous RV.


For example, the length of time during which a vacuum tube installed in a circuit functions is a

continuous RV.

Probability Density Function

If X is a continuous RV such that

$$P\left(x - \tfrac{1}{2}dx \le X \le x + \tfrac{1}{2}dx\right) = f(x)\,dx$$

then f(x) is called the PROBABILITY DENSITY FUNCTION (PDF) of X, provided f(x) satisfies the following conditions:

(a) $f(x) \ge 0$, for all $x \in R_X$ (range space of X), and

(b) $\int_{R_X} f(x)\,dx = 1$

Moreover, $P(a \le X \le b)$ or $P(a < X < b)$ is defined as

$$P(a \le X \le b) = \int_a^b f(x)\,dx.$$

The curve y = f (x) is called the probability curve of the RV X.

Note: When X is a continuous RV, $P(X = a) = P(a \le X \le a) = \int_a^a f(x)\,dx = 0$.

This means that it is almost impossible that a continuous RV assumes a specific value. Hence $P(a \le X \le b) = P(a \le X < b) = P(a < X \le b) = P(a < X < b)$.

Cumulative Distribution Function (CDF)

If X is an RV, discrete or continuous, then $P(X \le x)$ is called the CUMULATIVE DISTRIBUTION FUNCTION of X or DISTRIBUTION FUNCTION of X and denoted as F(x). If X is discrete,

$$F(x) = \sum_{j:\, x_j \le x} p_j$$

If X is continuous,

$$F(x) = P(-\infty < X \le x) = \int_{-\infty}^{x} f(x)\,dx$$

Properties of the cdf F(x)

(i) F(x) is a non-decreasing function of x, i.e., if $x_1 < x_2$, then $F(x_1) \le F(x_2)$.

(ii) $F(-\infty) = 0$ and $F(\infty) = 1$.

(iii) If X is a discrete RV taking values $x_1, x_2, x_3, \dots$ where $x_1 < x_2 < x_3 < \dots < x_{i-1} < x_i < \dots$, then $P(X = x_i) = F(x_i) - F(x_{i-1})$.

(iv) If X is a continuous RV, then $\frac{d}{dx}F(x) = f(x)$ at all points where F(x) is differentiable.

Note : Although we may talk of probability distribution of a continuous RV, it cannot be represented by a table as in the case of a discrete RV. The probability distribution of a continuous

RV is said to be known, if either its pdf or cdf is given.

For the problems on Discrete and Continuous Random Variables (RV’s) we have to note down

the following points from the table for understanding.

| | Discrete RV♯ (Probability Distribution Function) | Continuous RV♯ (Probability Density Function) |
|---|---|---|
| Operator | $\sum$ | $\int$ |
| Takes values of the form (e.g.) | $1, 2, 3, \dots$ | $0 < x < \infty$ |
| Standard notation | $P(X = x)$ | $f(x)$ |
| CDF* | $F(X = x) = P(X \le x)$ | $F(X = x) = P(X \le x)$ |
| Total probability | $\sum_{x=-\infty}^{\infty} P(X = x) = 1$ | $\int_{-\infty}^{\infty} f(x)\,dx = 1$ |

♯RV: Random Variable. *CDF: Cumulative Distribution Function or Distribution Function.

3 Mathematical Expectation

Definition 3.0.1 (Expectation). An average of a probability distribution of a random variable

X is called the expectation or the expected value or mathematical expectation of X and is

denoted by E(X).

Definition 3.0.2 (Expectation for Discrete Random Variable). Let X be a discrete random variable taking values $x_1, x_2, x_3, \dots$ with probabilities $P(X = x_1) = p(x_1), P(X = x_2) = p(x_2), P(X = x_3) = p(x_3), \dots$. The expected value of X is defined as

$$E(X) = \sum_{i=1}^{\infty} x_i\, p(x_i),$$

if the right hand side sum exists.

Definition 3.0.3 (Expectation for Continuous Random Variable). Let X be a continuous random variable with pdf f(x) defined in $(-\infty, \infty)$; then the expected value of X is defined as

$$E(X) = \int_{-\infty}^{\infty} x f(x)\,dx.$$

Note: E(X) is called the mean of the distribution or mean of X and is denoted by $\bar{X}$ or µ.

3.1 Properties of Expectation

1. If c is any constant, then E(c) = c.

2. If a, b are constants, then E(aX + b) = aE(X) + b.

3. If (X, Y ) is two dimensional random variable, then E(X + Y ) = E(X) + E(Y ).

4. If (X, Y) is a two dimensional random variable, then E(XY) = E(X)E(Y) if X and Y are independent random variables.

3.2 Variance and its properties

Definition 3.2.1. Let X be a random variable with mean $\mu = E(X)$; then the variance of X is defined as $\sigma^2 = E\{(X - \mu)^2\}$ and it is denoted by $Var(X)$ or $\sigma_X^2$.

Note: $Var(X) = E(X^2) - [E(X)]^2$.

Properties of Variance

1. $Var(a) = 0$, where a is any constant.

2. $Var(aX) = a^2\, Var(X)$

3. $Var(aX \pm b) = a^2\, Var(X)$

4. $Var(aX + bY) = a^2\, Var(X) + b^2\, Var(Y)$ if X and Y are independent random variables.

4 Moments and Moment Generating Function

4.1 Moments

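Definition 4.1.1 (Moments about origin). The $r^{th}$ moment of a random variable X about the origin is defined as $E(X^r)$ and is denoted by $\mu'_r$. Moments about origin are known as raw moments.

$$\mu'_r = E(X^r) = \begin{cases} \sum_{x} x^r\, P(X = x) & \text{if X is discrete} \\ \int_{-\infty}^{\infty} x^r f(x)\,dx & \text{if X is continuous} \end{cases}$$

Here the sum and the integral run over all values that X can take (for a non-negative RV they start at x = 0).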

Note: By moments we mean the moments about origin or raw moments.

The first four moments about the origin are given by

1. $\mu'_1 = E(X)$ = Mean

2. $\mu'_2 = E(X^2)$

3. $\mu'_3 = E(X^3)$

4. $\mu'_4 = E(X^4)$

Note: $Var(X) = E(X^2) - [E(X)]^2 = \mu'_2 - (\mu'_1)^2$ = Second moment - square of the first moment. Standard Deviation $SD = \sigma_X = \sqrt{Var(X)}$.

Definition 4.1.2 (Moments about mean or Central moments). The $r^{th}$ moment of a random variable X about the mean µ is defined as $E[(X - \mu)^r]$ and is denoted by $\mu_r$.

The first four moments about the mean are given by

1. $\mu_1 = E(X - \mu) = E(X) - E(\mu) = \mu - \mu = 0$

2. $\mu_2 = E[(X - \mu)^2] = Var(X)$

3. $\mu_3 = E[(X - \mu)^3]$

4. $\mu_4 = E[(X - \mu)^4]$

Definition 4.1.3 (Moments about any point a). The $r^{th}$ moment of a random variable X about any point a is defined as $E[(X - a)^r]$ and we denote it by $m'_r$.

The first four moments about a point ‘a’ are given by

1. $m'_1 = E(X - a) = E(X) - a = \mu - a$

2. $m'_2 = E[(X - a)^2]$

3. $m'_3 = E[(X - a)^3]$

4. $m'_4 = E[(X - a)^4]$

Relation between moments about the mean and moments about any arbitrary point a

Let $\mu_r$ be the $r^{th}$ moment about the mean and $m'_r$ be the $r^{th}$ moment about any point a. Let µ be the mean of X.

$$\begin{aligned}
\therefore \mu_r &= E[(X - \mu)^r] \\
&= E[\{(X - a) - (\mu - a)\}^r] \\
&= E[\{(X - a) - m'_1\}^r] \\
&= E\left[(X - a)^r - {}^rC_1 (X - a)^{r-1} m'_1 + {}^rC_2 (X - a)^{r-2} (m'_1)^2 - \dots + (-1)^r (m'_1)^r\right] \\
&= E(X - a)^r - {}^rC_1\, E(X - a)^{r-1} m'_1 + {}^rC_2\, E(X - a)^{r-2} (m'_1)^2 \\
&\quad - {}^rC_3\, E(X - a)^{r-3} (m'_1)^3 + {}^rC_4\, E(X - a)^{r-4} (m'_1)^4 - \dots + (-1)^r (m'_1)^r \\
&= m'_r - {}^rC_1\, m'_{r-1} m'_1 + {}^rC_2\, m'_{r-2} (m'_1)^2 - {}^rC_3\, m'_{r-3} (m'_1)^3 + {}^rC_4\, m'_{r-4} (m'_1)^4 - \dots + (-1)^r (m'_1)^r
\end{aligned}$$

We define (fix) $m'_0 = 1$; then we have

$$\begin{aligned}
\mu_1 &= m'_1 - m'_0\, m'_1 = 0 \\
\mu_2 &= m'_2 - {}^2C_1\, m'_1 \cdot m'_1 + (m'_1)^2 = m'_2 - (m'_1)^2 \\
\mu_3 &= m'_3 - {}^3C_1\, m'_2 \cdot m'_1 + {}^3C_2\, m'_1 \cdot (m'_1)^2 - (m'_1)^3 = m'_3 - 3 m'_2 \cdot m'_1 + 2 (m'_1)^3 \\
\mu_4 &= m'_4 - {}^4C_1\, m'_3 \cdot m'_1 + {}^4C_2\, m'_2 \cdot (m'_1)^2 - {}^4C_3\, m'_1 \cdot (m'_1)^3 + (m'_1)^4 = m'_4 - 4 m'_3 \cdot m'_1 + 6 m'_2 \cdot (m'_1)^2 - 3 (m'_1)^4
\end{aligned}$$
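The binomial relation between central moments and moments about a point a can be sketched in a few lines of Python. This is only an illustration of the general formula with $m'_0 = 1$; the function name `central_from_moments_about_a` is a hypothetical choice, and the two sample inputs are the moment values used in the solutions of Examples 5.14 and 5.15.

```python
from math import comb

def central_from_moments_about_a(m):
    """m = [m'_1, ..., m'_n], the moments about a point a.

    Returns [mu_1, ..., mu_n] using
    mu_r = sum_{k=0}^{r} (-1)^k * rCk * m'_{r-k} * (m'_1)^k, with m'_0 = 1.
    """
    m = [1] + list(m)            # prepend m'_0 = 1
    m1 = m[1]
    central = []
    for r in range(1, len(m)):
        mu_r = sum((-1) ** k * comb(r, k) * m[r - k] * m1 ** k for k in range(r + 1))
        central.append(mu_r)
    return central

print(central_from_moments_about_a([1, 5, 12, 48]))   # [0, 4, -1, 27]
print(central_from_moments_about_a([1, 4, 10, 45]))   # [0, 3, 0, 26]
```

The first central moment always comes out as 0, which is a quick sanity check on the input.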

4.2 Moment Generating Function (MGF)

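Definition 4.2.1 (Moment Generating Function (MGF)). The moment generating function of a random variable X is defined as $E(e^{tX})$ for all $t \in (-\infty, \infty)$. It is denoted by M(t) or $M_X(t)$.

$$M_X(t) = E(e^{tX}) = \begin{cases} \int_{-\infty}^{\infty} e^{tx} f(x)\,dx & \text{if X is continuous} \\ \sum_{x} e^{tx}\, P(X = x) & \text{if X is discrete} \end{cases}$$

Here the integral and the sum run over all values that X can take (for a non-negative RV they start at x = 0).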

If X is a random variable, its MGF $M_X(t)$ is given by

$$\begin{aligned}
M_X(t) = E(e^{tX}) &= E\left[1 + \frac{tX}{1!} + \frac{(tX)^2}{2!} + \frac{(tX)^3}{3!} + \dots + \frac{(tX)^r}{r!} + \dots\right] \\
&= 1 + \frac{t}{1!} E(X) + \frac{t^2}{2!} E(X^2) + \frac{t^3}{3!} E(X^3) + \dots + \frac{t^r}{r!} E(X^r) + \dots \\
&= 1 + \frac{t}{1!} \mu'_1 + \frac{t^2}{2!} \mu'_2 + \frac{t^3}{3!} \mu'_3 + \dots + \frac{t^r}{r!} \mu'_r + \dots
\end{aligned}$$

i.e.

$\mu'_1$ = Coefft. of $t$ in the expansion of $M_X(t)$

$\mu'_2$ = Coefft. of $\frac{t^2}{2!}$ in the expansion of $M_X(t)$

⋮

$\mu'_r$ = Coefft. of $\frac{t^r}{r!}$ in the expansion of $M_X(t)$

⋮

∴ MX (t) generates all the moments about the origin. (That is why we call it as Moment

Generating Function (MGF)).

Another result which we often use to find the moments is given below:

$$\mu'_r = M_X^{(r)}(0)$$

We have

$$M_X(t) = E(e^{tX}) = 1 + \frac{t}{1!} \mu'_1 + \frac{t^2}{2!} \mu'_2 + \frac{t^3}{3!} \mu'_3 + \dots + \frac{t^r}{r!} \mu'_r + \dots$$

Differentiating with respect to t, we get

$$\begin{aligned}
M'_X(t) &= \mu'_1 + t \mu'_2 + \frac{t^2}{2!} \mu'_3 + \dots + \frac{t^{r-1}}{(r-1)!} \mu'_r + \dots \\
M''_X(t) &= \mu'_2 + t \mu'_3 + \frac{t^2}{2!} \mu'_4 + \dots + \frac{t^{r-2}}{(r-2)!} \mu'_r + \dots \\
&\ \ \vdots \\
M_X^{(r)}(t) &= \mu'_r + \text{terms of higher powers of } t \\
&\ \ \vdots
\end{aligned}$$

Putting t = 0, we get

$$M'_X(0) = \mu'_1, \quad M''_X(0) = \mu'_2, \quad \dots, \quad M_X^{(r)}(0) = \mu'_r, \quad \dots$$

The Maclaurin's series expansion given below will give all the moments.

$$M_X(t) = M_X(0) + \frac{t}{1!} M'_X(0) + \frac{t^2}{2!} M''_X(0) + \dots$$

The MGF of X about its mean µ is $M_{X-\mu}(t) = E\left[e^{t(X-\mu)}\right]$.

Similarly, the MGF of X about any point a is $M_{X-a}(t) = E\left[e^{t(X-a)}\right]$.

Properties of MGF

1. $M_{cX}(t) = M_X(ct)$

2. $M_{X+c}(t) = e^{ct} M_X(t)$

3. $M_{aX+b}(t) = e^{bt} M_X(at)$

4. If X and Y are independent RV’s then, $M_{X+Y}(t) = M_X(t) \cdot M_Y(t)$.
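The results $M'_X(0) = \mu'_1$, $M''_X(0) = \mu'_2$ and property 3 can be checked numerically. The sketch below is an illustration only: it uses the discrete distribution of Example 5.8 as input, approximates the derivatives at t = 0 by central finite differences (an assumption made here for simplicity, not a method used in these notes), and the names `mgf` and `raw_moments_from_mgf` are hypothetical.

```python
from math import exp

def mgf(pmf, t):
    """M_X(t) = sum over x of e^{tx} P(X = x) for a discrete RV given as {x: p}."""
    return sum(p * exp(t * x) for x, p in pmf.items())

def raw_moments_from_mgf(pmf, h=1e-4):
    """Approximate M'(0) and M''(0) by central finite differences with step h."""
    m0 = mgf(pmf, 0.0)
    m_plus, m_minus = mgf(pmf, h), mgf(pmf, -h)
    first = (m_plus - m_minus) / (2 * h)            # approximates mu'_1 = E(X)
    second = (m_plus - 2 * m0 + m_minus) / h ** 2   # approximates mu'_2 = E(X^2)
    return first, second

pmf = {-1: 0.3, 0: 0.1, 1: 0.4, 2: 0.2}   # distribution of Example 5.8
first, second = raw_moments_from_mgf(pmf)
print(round(first, 4), round(second, 4))

# Property 3: M_{aX+b}(t) = e^{bt} M_X(at), checked at one arbitrary point
a, b, t = 2, 1, 0.3
shifted = {a * x + b: p for x, p in pmf.items()}   # distribution of aX + b
lhs = mgf(shifted, t)
rhs = exp(b * t) * mgf(pmf, a * t)
print(abs(lhs - rhs) < 1e-12)
```

The two approximated values should agree with E(X) = 0.5 and E(X²) = 1.5 for this distribution, up to the finite-difference error.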

5 Examples

Example: 5.1. From 6 positive and 8 negative numbers, 4 are chosen at random(without replacement) and multiplied. What is the probability that the product is negative?

Solution: The product of the 4 chosen numbers is negative if exactly one of them is negative or exactly three of them are negative.

No. of ways of choosing 1 negative no. (and 3 positive nos.) = ${}^6C_3 \cdot {}^8C_1 = 20 \cdot 8 = 160$.

No. of ways of choosing 3 negative nos. (and 1 positive no.) = ${}^6C_1 \cdot {}^8C_3 = 6 \cdot 56 = 336$.

Total no. of ways of choosing 4 nos. out of 14 nos. = ${}^{14}C_4 = 1001$.

$$\therefore P(\text{the product is -ve}) = \frac{160 + 336}{1001} = \frac{496}{1001}.$$
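The count in Example 5.1 can be cross-checked by brute force. The sketch below enumerates every 4-subset of 14 numbers (only the signs matter, so +1 and -1 are used as stand-ins, which is an assumption of this illustration) and compares the result with the combinatorial count.

```python
from fractions import Fraction
from itertools import combinations
from math import comb

numbers = [+1] * 6 + [-1] * 8   # signs only: 6 positive, 8 negative

negative = 0
total = 0
for chosen in combinations(range(len(numbers)), 4):   # choose 4 without replacement
    total += 1
    product = 1
    for i in chosen:
        product *= numbers[i]
    if product < 0:
        negative += 1

# Counting argument: (1 negative, 3 positive) or (3 negative, 1 positive)
by_counting = comb(6, 3) * comb(8, 1) + comb(6, 1) * comb(8, 3)
probability = Fraction(negative, total)
print(negative, total, probability)   # 496 1001 496/1001
```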

Example: 5.2. A box contains 6 bad and 4 good apples. Two are drawn out from the box at a time. One of them is tested and found to be good. What is the probability that the other one is also good?

Solution: Let A be the event that the first drawn apple is good and let B be the event that the other one is also good.

∴ The required probability is

$$P(B/A) = \frac{P(A \cap B)}{P(A)} = \frac{{}^4C_2 / {}^{10}C_2}{4/10} = \frac{6/45}{4/10} = \frac{1}{3}.$$

Aliter: Probability that the first drawn apple is good = $\frac{4}{10}$.

Once the first apple is drawn, the box contains only 9 apples in total and 3 good apples.

∴ Probability that the second drawn apple is also good = $\frac{3}{9} = \frac{1}{3}$.
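The value in Example 5.2 can be reproduced by listing all ordered draws of two apples. This sketch follows the interpretation used in the solution (A = the first drawn apple is good, B = the other one is also good); the labels "good" and "bad" are just placeholders.

```python
from fractions import Fraction
from itertools import permutations

apples = ["good"] * 4 + ["bad"] * 6

first_good = 0   # ordered draws in which the first apple is good (event A)
both_good = 0    # ordered draws in which both apples are good (event A and B)
for i, j in permutations(range(len(apples)), 2):   # ordered draws without replacement
    if apples[i] == "good":
        first_good += 1
        if apples[j] == "good":
            both_good += 1

p_b_given_a = Fraction(both_good, first_good)
print(p_b_given_a)   # 1/3
```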

Example: 5.3. A random variable X has the following distribution

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| P[X=x] | 0 | $k$ | $2k$ | $2k$ | $3k$ | $k^2$ | $2k^2$ | $7k^2 + k$ |

Find (i) the value of k, (ii) the Cumulative Distribution Function (CDF), (iii) $P(1.5 < X < 4.5 / X > 2)$ and (iv) the smallest value of α for which $P(X \le \alpha) > \frac{1}{2}$.

Solution:

(i) We know that $\sum_i P[X = x_i] = 1$. Here,

$$\begin{aligned}
P[X = 0] + P[X = 1] + P[X = 2] + P[X = 3] + P[X = 4] + P[X = 5] + P[X = 6] + P[X = 7] &= 1 \\
\text{i.e. } 0 + k + 2k + 2k + 3k + k^2 + 2k^2 + 7k^2 + k &= 1 \\
\text{i.e. } 10k^2 + 9k - 1 &= 0 \\
\text{i.e. } (10k - 1)(k + 1) &= 0 \\
\implies k = \frac{1}{10} \text{ or } k &= -1
\end{aligned}$$

Since the value of the probability is not negative, we take the value $k = \frac{1}{10}$.

∴ The probability distribution is given by

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| P[X = x] | 0 | $k$ | $2k$ | $2k$ | $3k$ | $k^2$ | $2k^2$ | $7k^2 + k$ |
| P[X = x] | 0 | 1/10 | 2/10 | 2/10 | 3/10 | 1/100 | 2/100 | 17/100 |

(ii) The Cumulative Distribution Function (CDF) $F(x) = P(X \le x)$

$F(0) = P(X \le 0) = P(X = 0) = 0$

$F(1) = P(X \le 1) = P(X = 0) + P(X = 1) = 0 + \frac{1}{10} = \frac{1}{10}$

$F(2) = P(X \le 2) = P(X = 0) + P(X = 1) + P(X = 2) = 0 + \frac{1}{10} + \frac{2}{10} = \frac{3}{10}$

⋮

$F(7) = P(X \le 7) = P(X = 0) + P(X = 1) + P(X = 2) + P(X = 3) + P(X = 4) + P(X = 5) + P(X = 6) + P(X = 7) = \frac{100}{100} = 1$

The detailed CDF F(X = x) is given in the table.

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| P[X = x] | 0 | $k$ | $2k$ | $2k$ | $3k$ | $k^2$ | $2k^2$ | $7k^2 + k$ |
| P[X = x] | 0 | 1/10 | 2/10 | 2/10 | 3/10 | 1/100 | 2/100 | 17/100 |
| F[X = x] | 0 | 1/10 | 3/10 | 5/10 | 8/10 | 81/100 | 83/100 | 100/100 = 1 |

(iii)

$$P(1.5 < X < 4.5 / X > 2) = \frac{P[(1.5 < X < 4.5) \cap (X > 2)]}{P(X > 2)}$$

$P[(1.5 < X < 4.5) \cap (X > 2)] = P(X = 3) + P(X = 4) = \frac{5}{10}$

$P(X > 2) = 1 - P(X \le 2) = 1 - \{P(X = 0) + P(X = 1) + P(X = 2)\} = 1 - \frac{3}{10} = \frac{7}{10}$

$$P(1.5 < X < 4.5 / X > 2) = \frac{5/10}{7/10} = \frac{5}{7}$$

(iv) The smallest value of α for which $P(X \le \alpha) > \frac{1}{2} = 0.5$.

From the CDF table, we find that $P(X \le 3) = \frac{5}{10} = 0.5$. But we need the probability to be more than 0.5, and for this we have $P(X \le 4) = \frac{8}{10} = 0.8 > 0.5$. ∴ α = 4 satisfies the given condition.
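The four parts of Example 5.3 can be re-computed with exact fractions. The sketch below is only a cross-check of the hand calculation; the helper `prob` and the variable names are illustrative choices.

```python
from fractions import Fraction
from itertools import accumulate

k = Fraction(1, 10)
assert 10 * k**2 + 9 * k - 1 == 0        # k satisfies 10k^2 + 9k - 1 = 0

pmf = {0: Fraction(0), 1: k, 2: 2 * k, 3: 2 * k, 4: 3 * k,
       5: k**2, 6: 2 * k**2, 7: 7 * k**2 + k}
assert sum(pmf.values()) == 1            # total probability is 1

xs = sorted(pmf)
cdf = dict(zip(xs, accumulate(pmf[x] for x in xs)))   # F(x) = P(X <= x)

def prob(event):
    """P(event), where event is a predicate on the values of X."""
    return sum(p for x, p in pmf.items() if event(x))

# (iii) conditional probability P(1.5 < X < 4.5 / X > 2)
conditional = prob(lambda x: 1.5 < x < 4.5 and x > 2) / prob(lambda x: x > 2)

# (iv) smallest alpha with P(X <= alpha) > 1/2
alpha = min(x for x in xs if cdf[x] > Fraction(1, 2))

print(cdf[4], conditional, alpha)   # 4/5 5/7 4
```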

Example: 5.4. A random variable X has the following distribution

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| P[X=x] | $k$ | $3k$ | $5k$ | $7k$ | $9k$ | $11k$ | $13k$ | $15k$ | $17k$ |

Find (i) the value of k, (ii) the Distribution Function (CDF), (iii) $P(0 < X < 3 / X > 2)$ and (iv) the smallest value of α for which $P(X \le \alpha) > \frac{1}{2}$.

Solution:

(i) Since the probabilities add up to 1, $k + 3k + 5k + \dots + 17k = 81k = 1$. The value of $k = \frac{1}{81}$.

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| P[X = x] | $k$ | $3k$ | $5k$ | $7k$ | $9k$ | $11k$ | $13k$ | $15k$ | $17k$ |
| P[X = x] | 1/81 | 3/81 | 5/81 | 7/81 | 9/81 | 11/81 | 13/81 | 15/81 | 17/81 |

(ii) The Distribution Function (CDF)

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| P[X = x] | $k$ | $3k$ | $5k$ | $7k$ | $9k$ | $11k$ | $13k$ | $15k$ | $17k$ |
| P[X = x] | 1/81 | 3/81 | 5/81 | 7/81 | 9/81 | 11/81 | 13/81 | 15/81 | 17/81 |
| F[X = x] | 1/81 | 4/81 | 9/81 | 16/81 | 25/81 | 36/81 | 49/81 | 64/81 | 81/81 = 1 |

(iii)

$$P(0 < X < 3 / X > 2) = \frac{P[(0 < X < 3) \cap (X > 2)]}{P(X > 2)} = 0 \quad (\because \text{there is no common element})$$

(iv) The smallest value of α for which $P(X \le \alpha) > \frac{1}{2} = 0.5$.

From the CDF table, we find that $P(X \le 5) = \frac{36}{81} < 0.5$. But we need the probability to be more than 0.5, and for this we have $P(X \le 6) = \frac{49}{81} > 0.5$. ∴ α = 6 satisfies the given condition.

Example: 5.5. Find the value of k for the pdf

$$f(x) = \begin{cases} kx, & \text{when } 0 \le x \le 1 \\ k, & \text{when } 1 \le x \le 2 \\ 3k - kx, & \text{when } 2 \le x \le 3 \\ 0, & \text{otherwise} \end{cases}$$

Also find (a) the CDF of X (b) $P(1.5 < X < 3.2 / 0.5 < X < 1.8)$

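Solution:

We know that $\int_{-\infty}^{\infty} f(x)\,dx = 1$

$$\begin{aligned}
\text{i.e. } \int_{-\infty}^{0} f(x)\,dx + \int_{0}^{1} f(x)\,dx + \int_{1}^{2} f(x)\,dx + \int_{2}^{3} f(x)\,dx + \int_{3}^{\infty} f(x)\,dx &= 1 \\
\text{i.e. } \int_{-\infty}^{0} 0 \cdot dx + \int_{0}^{1} kx \cdot dx + \int_{1}^{2} k \cdot dx + \int_{2}^{3} (3k - kx)\,dx + \int_{3}^{\infty} 0 \cdot dx &= 1 \\
\text{i.e. } 0 + k \left[\frac{x^2}{2}\right]_0^1 + k\, [x]_1^2 + \left[3k \cdot x - k \cdot \frac{x^2}{2}\right]_2^3 + 0 &= 1 \\
\text{i.e. } 2k &= 1 \\
\implies k &= \frac{1}{2}
\end{aligned}$$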

(a) Since $k = \frac{1}{2}$,

$$f(x) = \begin{cases} \frac{x}{2}, & \text{when } 0 \le x \le 1 \\ \frac{1}{2}, & \text{when } 1 \le x \le 2 \\ \frac{1}{2}(3 - x), & \text{when } 2 \le x \le 3 \\ 0, & \text{otherwise} \end{cases}$$

The CDF of X is $F(x) = P(X \le x) = \int_{-\infty}^{x} f(x)\,dx$

When $x < 0$, $F(x) = 0$, since $f(x) = 0$ for $x < 0$.

When $0 \le x < 1$,

$$F(x) = \int_{-\infty}^{x} f(x)\,dx = \int_{0}^{x} f(x)\,dx = \int_{0}^{x} \frac{x}{2}\,dx = \frac{x^2}{4}$$

When $1 \le x < 2$,

$$F(x) = \int_{0}^{x} f(x)\,dx = \int_{0}^{1} \frac{x}{2}\,dx + \int_{1}^{x} \frac{1}{2}\,dx = \frac{1}{4}(2x - 1)$$

When $2 \le x < 3$,

$$F(x) = \int_{0}^{x} f(x)\,dx = \int_{0}^{1} \frac{x}{2}\,dx + \int_{1}^{2} \frac{1}{2}\,dx + \int_{2}^{x} \frac{1}{2}(3 - x)\,dx = \frac{1}{4}(-x^2 + 6x - 5)$$

When $x \ge 3$, $F(x) = 1$

$$\therefore F(x) = \begin{cases} 0, & \text{when } x < 0 \\ \frac{x^2}{4}, & \text{when } 0 \le x \le 1 \\ \frac{1}{4}(2x - 1), & \text{when } 1 \le x \le 2 \\ \frac{1}{4}(-x^2 + 6x - 5), & \text{when } 2 \le x \le 3 \\ 1, & \text{when } x \ge 3 \end{cases}$$

(b)

$$P(1.5 < X < 3.2 / 0.5 < X < 1.8) = \frac{P[(1.5 < X < 3.2) \cap (0.5 < X < 1.8)]}{P(0.5 < X < 1.8)}$$

$$P[(1.5 < X < 3.2) \cap (0.5 < X < 1.8)] = P(1.5 < X < 1.8) = \int_{1.5}^{1.8} \frac{1}{2}\,dx = \frac{3}{20}$$

$$P(0.5 < X < 1.8) = \int_{0.5}^{1} \frac{x}{2}\,dx + \int_{1}^{1.8} \frac{1}{2}\,dx = \frac{3}{16} + \frac{2}{5} = \frac{47}{80}$$

$$P(1.5 < X < 3.2 / 0.5 < X < 1.8) = \frac{3/20}{47/80} = \frac{12}{47}$$
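Part (b) of Example 5.5 can be cross-checked from the CDF alone, since $P(a < X < b) = F(b) - F(a)$ for a continuous RV. The sketch below codes the piecewise CDF for $k = \frac{1}{2}$ with exact fractions; decimal end points are passed as strings so that they are converted exactly (a choice made for this illustration).

```python
from fractions import Fraction

def F(x):
    """Piecewise CDF of Example 5.5 with k = 1/2."""
    x = Fraction(x)
    if x < 0:
        return Fraction(0)
    if x < 1:
        return x**2 / 4
    if x < 2:
        return (2 * x - 1) / 4
    if x < 3:
        return (-x**2 + 6 * x - 5) / 4
    return Fraction(1)

# (1.5, 3.2) intersected with (0.5, 1.8) is (1.5, 1.8)
numerator = F("1.8") - F("1.5")      # P(1.5 < X < 1.8)
denominator = F("1.8") - F("0.5")    # P(0.5 < X < 1.8)
conditional = numerator / denominator
print(numerator, denominator, conditional)   # 3/20 47/80 12/47
```

The pieces of the CDF agree at x = 1, 2 and 3 (values 1/4, 3/4 and 1), which is a useful continuity check on the hand integration.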

Example: 5.6. Experience has shown that while walking in a certain park, the time X (in minutes) between seeing two people smoking has a density function

$$f(x) = \begin{cases} kxe^{-x}, & \text{when } x > 0 \\ 0, & \text{otherwise} \end{cases}$$

Find (a) the value of k, (b) the distribution function of X, and (c) the probability that a person who has just seen a person smoking will see another person smoking in 2 to 5 minutes, and in at least 7 minutes.

Solution: Given the pdf of X is

$$f(x) = \begin{cases} kxe^{-x}, & \text{when } x > 0 \\ 0, & \text{otherwise} \end{cases}$$

Since $f(x) \ge 0\ \forall\ x \implies k > 0$.

(a) We know that $\int_{-\infty}^{\infty} f(x)\,dx = 1$

$$\begin{aligned}
\text{i.e. } \int_{0}^{\infty} kxe^{-x}\,dx &= 1 \\
\text{i.e. } k \left[x \left(\frac{e^{-x}}{-1}\right) - \left(\frac{e^{-x}}{(-1)^2}\right)\right]_0^{\infty} &= 1 \\
\implies k &= 1
\end{aligned}$$

$$\therefore f(x) = \begin{cases} xe^{-x}, & \text{when } x > 0 \\ 0, & \text{otherwise} \end{cases}$$

(b) The distribution function (CDF) is given by

$$F(x) = P(X \le x) = \int_{-\infty}^{x} f(x)\,dx$$

Here, for $x > 0$, we have

$$F(x) = \int_{0}^{x} xe^{-x}\,dx = 1 - (1 + x)e^{-x}, \quad x > 0$$

(c)

$$\begin{aligned}
P(2 < X < 5) &= \int_{2}^{5} f(x)\,dx = \int_{2}^{5} xe^{-x}\,dx \\
&= -6e^{-5} + 3e^{-2} \\
&= -0.04 + 0.406 = 0.366
\end{aligned}$$

and

$$\begin{aligned}
P(X \ge 7) &= \int_{7}^{\infty} f(x)\,dx = \int_{7}^{\infty} xe^{-x}\,dx \\
&= 8e^{-7} \\
&= 0.007
\end{aligned}$$
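The two probabilities of Example 5.6 follow directly from the CDF $F(x) = 1 - (1 + x)e^{-x}$. A minimal numerical sketch (standard library only; the variable names are illustrative):

```python
from math import exp

def F(x):
    """CDF of Example 5.6 (pdf x e^{-x} for x > 0, i.e. k = 1)."""
    return 1 - (1 + x) * exp(-x) if x > 0 else 0.0

p_2_to_5 = F(5) - F(2)        # P(2 < X < 5) = 3e^{-2} - 6e^{-5}
p_at_least_7 = 1 - F(7)       # P(X >= 7) = 8e^{-7}

print(round(p_2_to_5, 3), round(p_at_least_7, 3))   # 0.366 0.007
```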

Example: 5.7. The sales of a convenience store on a randomly selected day are X thousand dollars, where X is a random variable with a distribution function

$$F(x) = \begin{cases} 0, & \text{when } x < 0 \\ \frac{x^2}{2}, & \text{when } 0 \le x < 1 \\ k(4x - x^2) - 1, & \text{when } 1 \le x < 2 \\ 1, & \text{when } x \ge 2 \end{cases}$$

Suppose that this convenience store’s total sales on any given day are less than 2000 units (Dollars or Pounds or Rupees). (a) Find the value of k. (b) Let A and B be the events that tomorrow the store’s total sales are between 500 and 1500 units and over 1000 units, respectively. Find P(A) and P(B). (c) Are A and B independent events?

Solution: The pdf of X is given by

$$f(x) = F'(x) = \frac{d}{dx} F(x) = \begin{cases} 0, & \text{when } x < 0 \\ x, & \text{when } 0 \le x < 1 \\ k(4 - 2x), & \text{when } 1 \le x < 2 \\ 0, & \text{when } x \ge 2 \end{cases}$$

Since f(x) is a pdf, we have $\int_{-\infty}^{\infty} f(x)\,dx = 1$

$$\begin{aligned}
\text{i.e. } \int_{-\infty}^{0} f(x)\,dx + \int_{0}^{1} f(x)\,dx + \int_{1}^{2} f(x)\,dx + \int_{2}^{\infty} f(x)\,dx &= 1 \\
\text{i.e. } \int_{-\infty}^{0} 0 \cdot dx + \int_{0}^{1} x\,dx + \int_{1}^{2} k(4 - 2x)\,dx + \int_{2}^{\infty} 0 \cdot dx &= 1 \\
\text{i.e. } 0 + \left[\frac{x^2}{2}\right]_0^1 + k \left[4x - x^2\right]_1^2 + 0 &= 1 \\
\implies k &= \frac{1}{2}
\end{aligned}$$

$$\therefore f(x) = \begin{cases} 0, & \text{when } x < 0 \\ x, & \text{when } 0 \le x < 1 \\ (2 - x), & \text{when } 1 \le x < 2 \\ 0, & \text{when } x \ge 2 \end{cases}$$

Since the total sales X is in thousands of units, the sales between 500 and 1500 units is the event A which stands for $\frac{1}{2} = 0.5 < X < \frac{3}{2} = 1.5$ and the sales over 1000 units is the event B which stands for $X > 1$. $\implies A \cap B = 1 < X < 1.5$

Now

$$P(A) = P(0.5 < X < 1.5) = \int_{0.5}^{1.5} f(x)\,dx = \int_{0.5}^{1} x\,dx + \int_{1}^{1.5} (2 - x)\,dx = \frac{3}{4}$$

$$P(B) = P(X > 1) = \int_{1}^{2} f(x)\,dx = \int_{1}^{2} (2 - x)\,dx = \frac{1}{2}$$

$$P(A \cap B) = P(1 < X < 1.5) = \int_{1}^{1.5} f(x)\,dx = \int_{1}^{1.5} (2 - x)\,dx = \frac{3}{8}$$

The condition for independent events: $P(A) \cdot P(B) = P(A \cap B)$

Here, $P(A) \cdot P(B) = \frac{3}{4} \cdot \frac{1}{2} = \frac{3}{8} = P(A \cap B)$

∴ A and B are independent events.
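The probabilities in Example 5.7 can be read off the given distribution function once $k = \frac{1}{2}$ is known. The sketch below does this with exact fractions and then tests the independence condition; the names `p_a`, `p_b` and `p_ab` are illustrative.

```python
from fractions import Fraction

K = Fraction(1, 2)   # value of k found in part (a)

def F(x):
    """Distribution function of Example 5.7 with k = 1/2 (x in thousands of units)."""
    x = Fraction(x)
    if x < 0:
        return Fraction(0)
    if x < 1:
        return x**2 / 2
    if x < 2:
        return K * (4 * x - x**2) - 1
    return Fraction(1)

p_a = F("1.5") - F("0.5")    # P(A)  = P(0.5 < X < 1.5)
p_b = 1 - F(1)               # P(B)  = P(X > 1)
p_ab = F("1.5") - F(1)       # P(A and B) = P(1 < X < 1.5)

print(p_a, p_b, p_ab, p_a * p_b == p_ab)   # 3/4 1/2 3/8 True
```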

Example: 5.8. If a random variable X has the following probability distribution, find E(X), E(X²), Var(X), E(2X + 1), Var(2X + 1).

| x | −1 | 0 | 1 | 2 |
|---|---|---|---|---|
| p(x) | 0.3 | 0.1 | 0.4 | 0.2 |

Solution: Here X is a discrete RV. ∴

$$\begin{aligned}
E(X) &= \sum_i x_i\, p(x_i) \\
&= (-1) \times 0.3 + 0 \times 0.1 + 1 \times 0.4 + 2 \times 0.2 \\
&= -0.3 + 0 + 0.4 + 0.4 = 0.5
\end{aligned}$$

$$\begin{aligned}
E(X^2) &= \sum_i x_i^2\, p(x_i) \\
&= (-1)^2 \times 0.3 + 0^2 \times 0.1 + 1^2 \times 0.4 + 2^2 \times 0.2 \\
&= 0.3 + 0 + 0.4 + 0.8 = 1.5
\end{aligned}$$

$$\begin{aligned}
Var(X) &= E(X^2) - [E(X)]^2 \\
&= (1.5) - (0.5)^2 \\
&= 1.5 - 0.25 = 1.25
\end{aligned}$$

$$\begin{aligned}
E(2X + 1) &= 2E(X) + 1 \\
&= 2 \times (0.5) + 1 \\
&= 1 + 1 = 2
\end{aligned}$$

$$\begin{aligned}
Var(2X + 1) &= 2^2\, Var(X) \\
&= 4 \times (1.25) = 5
\end{aligned}$$

Example: 5.9. If a random variable X has the following probability distribution, find E(X), E(X²), Var(X), E(3X − 4), Var(3X − 4).

| x | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| p(x) | 1/25 | 3/25 | 5/25 | 7/25 | 9/25 |

Solution: Here X is a discrete RV. ∴

$$E(X) = \sum_i x_i\, p(x_i) = \frac{14}{5}$$

$$E(X^2) = \sum_i x_i^2\, p(x_i) = \frac{46}{5}$$

$$Var(X) = E(X^2) - [E(X)]^2 = \frac{46}{5} - \left(\frac{14}{5}\right)^2 = \frac{34}{25}$$

$$E(3X - 4) = 3E(X) - 4 = \frac{22}{5}$$

$$Var(3X - 4) = 3^2\, Var(X) = \frac{306}{25}$$
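The computations of Examples 5.8 and 5.9 can be cross-checked by working directly with the distribution of aX + b and comparing with the properties E(aX + b) = aE(X) + b and Var(aX + b) = a²Var(X). The sketch below is illustrative only; `mean_and_variance` and `linear_transform` are hypothetical helper names.

```python
from fractions import Fraction

def mean_and_variance(pmf):
    """Return (E(X), Var(X)) for a discrete distribution given as {x: p}."""
    mean = sum(x * p for x, p in pmf.items())
    second = sum(x**2 * p for x, p in pmf.items())
    return mean, second - mean**2

def linear_transform(pmf, a, b):
    """Distribution of aX + b."""
    out = {}
    for x, p in pmf.items():
        out[a * x + b] = out.get(a * x + b, 0) + p
    return out

ex58 = {-1: Fraction(3, 10), 0: Fraction(1, 10), 1: Fraction(4, 10), 2: Fraction(2, 10)}
ex59 = {x: Fraction(2 * x + 1, 25) for x in range(5)}   # 1/25, 3/25, 5/25, 7/25, 9/25

print(mean_and_variance(ex58))                              # mean 1/2, variance 5/4
print(mean_and_variance(linear_transform(ex58, 2, 1)))      # mean 2, variance 5
print(mean_and_variance(ex59))                              # mean 14/5, variance 34/25
print(mean_and_variance(linear_transform(ex59, 3, -4)))     # mean 22/5, variance 306/25
```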

Example: 5.10. A test engineer found that the lifetime X (in years) of an equipment follows a distribution function which is given by

$$F(x) = \begin{cases} 0, & \text{when } x < 0 \\ 1 - e^{-\frac{x}{5}}, & \text{when } x \ge 0 \end{cases}$$

Find the pdf, mean and variance of X.

Solution: The pdf of X is given by

$$f(x) = F'(x) = \frac{d}{dx} F = \begin{cases} 0, & \text{when } x < 0 \\ \frac{1}{5} e^{-\frac{x}{5}}, & \text{when } x \ge 0 \end{cases}$$

$$\begin{aligned}
\text{The Mean } E(X) &= \int_{-\infty}^{\infty} x f(x)\,dx \\
&= \int_{0}^{\infty} x\, \frac{1}{5} e^{-\frac{x}{5}}\,dx \\
&= -\frac{1}{5} \left[(5x + 25) e^{-\frac{x}{5}}\right]_0^{\infty} \\
&= 5
\end{aligned}$$

$Var(X) = E(X^2) - [E(X)]^2$

$$\begin{aligned}
\text{Now } E(X^2) &= \int_{-\infty}^{\infty} x^2 f(x)\,dx \\
&= \int_{0}^{\infty} x^2\, \frac{1}{5} e^{-\frac{x}{5}}\,dx \\
&= -\frac{1}{5} \left[(5x^2 + 50x + 250) e^{-\frac{x}{5}}\right]_0^{\infty} = 50
\end{aligned}$$

$$\therefore Var(X) = 50 - [5]^2 = 25$$
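The mean and variance in Example 5.10 can be confirmed by numerical integration. The sketch below uses a simple midpoint rule written out by hand and truncates the infinite range at x = 400 (both are assumptions of this illustration; the neglected tail is of the order of $e^{-80}$).

```python
from math import exp

def midpoint_integral(f, a, b, n=200_000):
    """Approximate the integral of f over [a, b] with the midpoint rule on n strips."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def pdf(x):
    """pdf of Example 5.10 for x >= 0."""
    return exp(-x / 5) / 5

UPPER = 400.0   # truncation point for the infinite upper limit (assumed)

mean = midpoint_integral(lambda x: x * pdf(x), 0.0, UPPER)
second = midpoint_integral(lambda x: x * x * pdf(x), 0.0, UPPER)
variance = second - mean ** 2

print(round(mean, 4), round(variance, 4))
```

The printed values should be close to the exact results E(X) = 5 and Var(X) = 25 obtained by integration by parts.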

Example: 5.11. Find the mean and standard deviation of the distribution

$$f(x) = \begin{cases} kx(2 - x), & \text{when } 0 \le x \le 2 \\ 0, & \text{otherwise} \end{cases}$$

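Solution: Given that X is a continuous RV whose pdf is given by

$$f(x) = \begin{cases} kx(2 - x), & \text{when } 0 \le x \le 2 \\ 0, & \text{otherwise} \end{cases}$$

Since f(x) is a pdf, we have $\int_{-\infty}^{\infty} f(x)\,dx = 1$

$$\begin{aligned}
\text{i.e. } \int_{-\infty}^{0} f(x)\,dx + \int_{0}^{2} f(x)\,dx + \int_{2}^{\infty} f(x)\,dx &= 1 \\
\text{i.e. } \int_{-\infty}^{0} 0 \cdot dx + \int_{0}^{2} kx(2 - x)\,dx + \int_{2}^{\infty} 0 \cdot dx &= 1 \\
\text{i.e. } 0 + k \left[x^2 - \frac{x^3}{3}\right]_0^2 + 0 &= 1 \\
\implies k &= \frac{3}{4}
\end{aligned}$$

$$\begin{aligned}
\text{The Mean } E(X) &= \int_{-\infty}^{\infty} x f(x)\,dx \\
&= \int_{0}^{2} x\, \frac{3}{4}\, x(2 - x)\,dx \\
&= \frac{3}{4} \left[\frac{2x^3}{3} - \frac{x^4}{4}\right]_0^2 = 1
\end{aligned}$$

$Var(X) = E(X^2) - [E(X)]^2$

$$\begin{aligned}
\text{Now } E(X^2) &= \int_{-\infty}^{\infty} x^2 f(x)\,dx \\
&= \int_{0}^{2} x^2\, \frac{3}{4}\, x(2 - x)\,dx \\
&= \frac{3}{4} \left[\frac{x^4}{2} - \frac{x^5}{5}\right]_0^2 = \frac{6}{5}
\end{aligned}$$

$$\therefore Var(X) = \frac{6}{5} - [1]^2 = \frac{1}{5} \implies S.D. = \sqrt{\frac{1}{5}}$$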
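Because the density in Example 5.11 is a polynomial, every integral can be evaluated exactly. The sketch below represents a polynomial by its list of coefficients (lowest power first) and integrates term by term; `integrate_poly` is a hypothetical helper written for this illustration.

```python
from fractions import Fraction
from math import sqrt

def integrate_poly(coeffs, a, b):
    """Exact integral over [a, b] of sum(coeffs[i] * x**i)."""
    a, b = Fraction(a), Fraction(b)
    return sum(c * (b ** (i + 1) - a ** (i + 1)) / (i + 1) for i, c in enumerate(coeffs))

shape = [0, 2, -1]                          # x(2 - x) = 2x - x^2
k = 1 / integrate_poly(shape, 0, 2)         # normalising constant
pdf = [k * c for c in shape]

mean = integrate_poly([0] + pdf, 0, 2)          # multiplying by x shifts the coefficients
second = integrate_poly([0, 0] + pdf, 0, 2)     # multiplying by x^2 shifts them twice
variance = second - mean ** 2
sd = sqrt(variance)

print(k, mean, second, variance, round(sd, 4))   # 3/4 1 6/5 1/5 0.4472
```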

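Example: 5.12. If X has the distribution function

$$F(x) = \begin{cases} 0, & \text{when } x < 1 \\ \frac{1}{3}, & \text{when } 1 \le x < 4 \\ \frac{1}{2}, & \text{when } 4 \le x < 6 \\ \frac{5}{6}, & \text{when } 6 \le x < 10 \\ 1, & \text{when } x \ge 10 \end{cases}$$

Find (a) the probability distribution of X (b) the mean and variance of X.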

Solution: Given that the CDF of X is

$$F(x) = \begin{cases} 0, & \text{when } x < 1 \\ \frac{1}{3}, & \text{when } 1 \le x < 4 \\ \frac{1}{2}, & \text{when } 4 \le x < 6 \\ \frac{5}{6}, & \text{when } 6 \le x < 10 \\ 1, & \text{when } x \ge 10 \end{cases}$$

We know that $P(X = x_i) = F(x_i) - F(x_{i-1})$, $i = 1, 2, 3, \dots$, where F is constant in $x_{i-1} \le x < x_i$.

The CDF changes its values at x = 1, 4, 6, 10. ∴ The probability distribution takes its values as follows:

$P(X = 1) = F(1) - F(0) = \frac{1}{3} - 0 = \frac{1}{3}$

$P(X = 4) = F(4) - F(1) = \frac{1}{2} - \frac{1}{3} = \frac{1}{6}$

$P(X = 6) = F(6) - F(4) = \frac{5}{6} - \frac{1}{2} = \frac{1}{3}$

$P(X = 10) = F(10) - F(6) = 1 - \frac{5}{6} = \frac{1}{6}$

∴ The probability distribution is given by

| x | 1 | 4 | 6 | 10 |
|---|---|---|---|---|
| p(x) | 1/3 | 1/6 | 1/3 | 1/6 |

Mean $= E(X) = \sum x\, p(x) = \frac{14}{3}$.

$E(X^2) = \sum x^2 p(x) = \frac{95}{3}$.

$Var(X) = E(X^2) - [E(X)]^2 = \frac{285}{9} - \frac{196}{9} = \frac{89}{9}$.

Example: 5.13. If X has the distribution function

$$F(x) = \begin{cases} 0, & \text{when } x < 0 \\ \frac{1}{6}, & \text{when } 0 \le x < 2 \\ \frac{1}{2}, & \text{when } 2 \le x < 4 \\ \frac{5}{8}, & \text{when } 4 \le x < 6 \\ 1, & \text{when } x \ge 6 \end{cases}$$

Find (a) the probability distribution of X (b) the mean and variance of X.

Solution: Given that the CDF of X is

$$F(x) = \begin{cases} 0, & \text{when } x < 0 \\ \frac{1}{6}, & \text{when } 0 \le x < 2 \\ \frac{1}{2}, & \text{when } 2 \le x < 4 \\ \frac{5}{8}, & \text{when } 4 \le x < 6 \\ 1, & \text{when } x \ge 6 \end{cases}$$

We know that $P(X = x_i) = F(x_i) - F(x_{i-1})$, $i = 1, 2, 3, \dots$, where F is constant in $x_{i-1} \le x < x_i$.

The CDF changes its values at x = 0, 2, 4, 6. ∴ The probability distribution takes its values as follows:

$P(X = 0) = \frac{1}{6}$

$P(X = 2) = F(2) - F(0) = \frac{1}{2} - \frac{1}{6} = \frac{1}{3}$

$P(X = 4) = F(4) - F(2) = \frac{5}{8} - \frac{1}{2} = \frac{1}{8}$

$P(X = 6) = F(6) - F(4) = 1 - \frac{5}{8} = \frac{3}{8}$

∴ The probability distribution is given by

| x | 0 | 2 | 4 | 6 |
|---|---|---|---|---|
| p(x) | 1/6 | 1/3 | 1/8 | 3/8 |

Mean $= E(X) = \sum x\, p(x) = \frac{2}{3} + \frac{1}{2} + \frac{9}{4} = \frac{41}{12}$.

$E(X^2) = \sum x^2 p(x) = \frac{101}{6}$.

$Var(X) = E(X^2) - [E(X)]^2 = \frac{101}{6} - \left(\frac{41}{12}\right)^2 = 16.83 - 11.67 = 5.16$.

Example: 5.14. The first four moments about X = 5 are 1, 5, 12 and 48. Find the first four central moments.

Solution: Let $m'_1, m'_2, m'_3, m'_4$ be the first four moments about X = 5. Given $m'_1 = 1$, $m'_2 = 5$, $m'_3 = 12$, $m'_4 = 48$.

\[ m'_1 = E(X - 5) = 1 \implies E(X) - 5 = 1 \implies E(X) = \mu'_1 = 6 \]
The first central moment is $\mu_1 = 0$, and
\[ \begin{aligned}
\mu_2 &= m'_2 - (m'_1)^2 = 5 - 1 = 4 \\
\mu_3 &= m'_3 - 3m'_2 \cdot m'_1 + 2(m'_1)^3 \\
      &= 12 - 3 \times 5 \times 1 + 2(1) = -1 \\
\mu_4 &= m'_4 - 4m'_3 \cdot m'_1 + 6m'_2 \cdot (m'_1)^2 - 3(m'_1)^4 \\
      &= 48 - 4(12)(1) + 6(5)(1) - 3(1) = 27
\end{aligned} \]

Example: 5.15. The first four moments about x = 4 are 1, 4, 10 and 45. Find the first four moments about the mean.

Solution: Let $m'_1, m'_2, m'_3, m'_4$ be the first four moments about X = 4. Given $m'_1 = 1$, $m'_2 = 4$, $m'_3 = 10$, $m'_4 = 45$.

\[ m'_1 = E(X - 4) = 1 \implies E(X) - 4 = 1 \implies E(X) = \mu'_1 = 5 \]
The first moment about the mean is $\mu_1 = 0$, and
\[ \begin{aligned}
\mu_2 &= m'_2 - (m'_1)^2 = 4 - 1 = 3 \\
\mu_3 &= m'_3 - 3m'_2 \cdot m'_1 + 2(m'_1)^3 \\
      &= 10 - 3 \times 4 \times 1 + 2(1) = 0 \\
\mu_4 &= m'_4 - 4m'_3 \cdot m'_1 + 6m'_2 \cdot (m'_1)^2 - 3(m'_1)^4 \\
      &= 45 - 4(10)(1) + 6(4)(1) - 3(1) = 26
\end{aligned} \]
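The conversion from moments about an arbitrary point to central moments, as used in Examples 5.14 and 5.15, can be written as one small Python function. This is only a sketch; the name `central_moments` is hypothetical, and the inputs are the values used in the two solutions above.

```python
def central_moments(m1, m2, m3, m4):
    """Convert the first four moments about any point A into the
    first four central moments (moments about the mean)."""
    mu1 = 0                                   # first central moment is always 0
    mu2 = m2 - m1 ** 2
    mu3 = m3 - 3 * m2 * m1 + 2 * m1 ** 3
    mu4 = m4 - 4 * m3 * m1 + 6 * m2 * m1 ** 2 - 3 * m1 ** 4
    return mu1, mu2, mu3, mu4

def mean_from_first_moment(point, m1):
    """E(X) = A + m1, since m1 = E(X - A)."""
    return point + m1

print(mean_from_first_moment(5, 1), central_moments(1, 5, 12, 48))
print(mean_from_first_moment(4, 1), central_moments(1, 4, 10, 45))
```

Because the formulas depend only on the moments and not on the point A itself, the point is needed only to recover the mean.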


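Example: 5.16. A random variable X has the pdf $f(x) = kx^2 e^{-x}$, $x \ge 0$. Find the $r$th moment and hence find the first four moments.

Solution: We know that
\[ \int_{-\infty}^{\infty} f(x)\,dx = 1 \]
\[ \text{i.e., } \int_{0}^{\infty} kx^2 e^{-x}\,dx = 1 \]
\[ \text{i.e., } k\int_{0}^{\infty} e^{-x} x^{3-1}\,dx = 1 \]
\[ \text{i.e., } k\,\Gamma(3) = 1 \qquad \left[\because\ \Gamma(n) = \int_{0}^{\infty} e^{-x} x^{n-1}\,dx\right] \]
\[ \text{i.e., } k \cdot 2! = 1 \implies k = \frac{1}{2} \qquad [\because\ \Gamma(n) = (n-1)!] \]

Now, the $r$th moment is given by
\[ \begin{aligned}
\mu'_r = E(X^r) &= \int_{-\infty}^{\infty} x^r f(x)\,dx \\
&= \int_{0}^{\infty} x^r\, kx^2 e^{-x}\,dx \\
&= k\int_{0}^{\infty} e^{-x} x^{r+3-1}\,dx \\
&= \frac{1}{2}\Gamma(r+3) = \frac{1}{2}(r+2)!
\end{aligned} \]

Now,
\[ \begin{aligned}
\text{First Moment } \mu'_1 &= \tfrac{1}{2}(3)! = 3 \\
\text{Second Moment } \mu'_2 &= \tfrac{1}{2}(4)! = 12 \\
\text{Third Moment } \mu'_3 &= \tfrac{1}{2}(5)! = 60 \\
\text{Fourth Moment } \mu'_4 &= \tfrac{1}{2}(6)! = 360
\end{aligned} \]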
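The closed form $\mu'_r = \frac{1}{2}\Gamma(r+3)$ from Example 5.16 can be cross-checked numerically. The sketch below is illustrative only: the function names are made up for this note, and the numerical integral truncates the range at an assumed upper limit of 60, where the integrand is negligible.

```python
import math

def raw_moment(r):
    """mu'_r = k * Gamma(r + 3) with k = 1 / Gamma(3) = 1/2."""
    k = 1.0 / math.gamma(3)
    return k * math.gamma(r + 3)

def raw_moment_numeric(r, upper=60.0, n=100_000):
    """Midpoint-rule approximation of the integral of x^r * (1/2) x^2 e^(-x)
    over (0, upper); 'upper' and 'n' are assumed tuning values."""
    h = upper / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        total += x ** r * 0.5 * x ** 2 * math.exp(-x)
    return total * h

for r in range(1, 5):
    print(r, raw_moment(r), raw_moment_numeric(r))
```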


Example: 5.17. A random variable X has the pdf $f(x) = \frac{1}{2} e^{-x/2}$, $x \ge 0$. Find the MGF (Moment Generating Function) and hence find its mean and variance.

Solution: The MGF of X is given by
\[ \begin{aligned}
M_X(t) = E(e^{tX}) &= \int_{-\infty}^{\infty} e^{tx} f(x)\,dx \\
&= \frac{1}{2}\int_{0}^{\infty} e^{tx} e^{-x/2}\,dx \\
&= \frac{1}{2}\int_{0}^{\infty} e^{-\frac{1}{2}(1-2t)x}\,dx \\
&= \frac{1}{2}\left[\frac{e^{-\frac{1}{2}(1-2t)x}}{-\frac{1}{2}(1-2t)}\right]_0^{\infty}
\end{aligned} \]
\[ \therefore\ M_X(t) = \frac{1}{1-2t}, \quad \text{if } t < \frac{1}{2}. \]
\[ M_X(t) = (1-2t)^{-1} = 1 + 2t + 4t^2 + 8t^3 + \dots \]

Now, differentiating w.r.to t, we get
\[ \begin{aligned}
M'_X(t) &= 2 + 8t + 24t^2 + \dots \\
M''_X(t) &= 8 + 48t + \dots
\end{aligned} \]
\[ \begin{aligned}
\text{Now, First Moment} = \text{Mean} = E(X) = \mu'_1 &= M'_X(0) = 2 \\
\text{Second Moment} = E(X^2) = \mu'_2 &= M''_X(0) = 8 \\
\therefore\ Var(X) &= E(X^2) - [E(X)]^2 = 8 - 4 = 4.
\end{aligned} \]
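Reading moments off the power series of an MGF, as in Example 5.17, amounts to multiplying the coefficient of $t^r$ by $r!$. A minimal Python sketch follows; the helper name `moments_from_series` is an assumption made for illustration.

```python
from math import factorial

def moments_from_series(coeffs):
    """If M_X(t) = sum_r c_r t^r, then mu'_r = r! * c_r.
    coeffs[r] is the coefficient c_r of t^r."""
    return [factorial(r) * c for r, c in enumerate(coeffs)]

# Example 5.17: M_X(t) = (1 - 2t)^(-1) = 1 + 2t + 4t^2 + 8t^3 + ...
series_517 = [2 ** r for r in range(5)]
moments_517 = moments_from_series(series_517)
mean_517 = moments_517[1]
var_517 = moments_517[2] - mean_517 ** 2
print(moments_517, mean_517, var_517)
```

This gives the same values as differentiating the series term by term and setting t = 0.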

Example: 5.18. A random variable X has the pmf $p(x) = \frac{1}{2^x}$, $x = 1, 2, 3, \dots$. Find the MGF (Moment Generating Function) and hence find its mean and variance.


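Solution: The MGF of X is given by
\[ \begin{aligned}
M_X(t) = E(e^{tX}) &= \sum_{x=-\infty}^{\infty} e^{tx} p(x) \\
&= \sum_{x=1}^{\infty} e^{tx}\,\frac{1}{2^x} = \sum_{x=1}^{\infty} \left(\frac{e^t}{2}\right)^x \\
&= \frac{e^t}{2}\left[1 + \frac{e^t}{2} + \left(\frac{e^t}{2}\right)^2 + \dots\right] \\
&= \frac{e^t}{2}\left(1 - \frac{e^t}{2}\right)^{-1}
\end{aligned} \]
\[ \therefore\ M_X(t) = \frac{e^t}{2 - e^t}, \quad \text{if } e^t < 2. \]

Now, differentiating w.r.to t, we get
\[ M'_X(t) = \frac{2e^t}{(2 - e^t)^2} \]
\[ M''_X(t) = \frac{2[(2 - e^t)e^t + 2e^{2t}]}{(2 - e^t)^3} \]
\[ \begin{aligned}
\text{Now, First Moment} = \text{Mean} = E(X) = \mu'_1 &= M'_X(0) = 2 \\
\text{Second Moment} = E(X^2) = \mu'_2 &= M''_X(0) = 6 \\
\therefore\ Var(X) &= E(X^2) - [E(X)]^2 = 6 - 4 = 2.
\end{aligned} \]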
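The results of Example 5.18 can be checked without the MGF by summing the series for $E(X)$ and $E(X^2)$ directly. The following Python sketch uses partial sums; the function names and the cut-off of 200 terms are assumptions chosen so that the neglected tail is far below floating-point precision.

```python
import math

def partial_moments(terms=200):
    """Partial sums of E(X) and E(X^2) for p(x) = 1/2^x, x = 1, 2, 3, ..."""
    mean = sum(x / 2 ** x for x in range(1, terms + 1))
    second = sum(x * x / 2 ** x for x in range(1, terms + 1))
    return mean, second, second - mean ** 2

def mgf_partial(t, terms=200):
    """Partial sum of sum_x e^(t x) / 2^x; converges only when e^t < 2."""
    return sum((math.exp(t) / 2) ** x for x in range(1, terms + 1))

def mgf_closed(t):
    """Closed form e^t / (2 - e^t) derived in the solution."""
    return math.exp(t) / (2 - math.exp(t))

print(partial_moments())
print(mgf_partial(0.3), mgf_closed(0.3))
```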

Example: 5.19. A random variable X has the $r$th moment of the form $\mu'_r = (r+1)!\,2^r$. Find the MGF (Moment Generating Function) and hence find its mean and variance.


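Solution: Given $\mu'_r = (r+1)!\,2^r$,
\[ \therefore\ \mu'_1 = 2!\,2, \quad \mu'_2 = 3!\,2^2, \quad \mu'_3 = 4!\,2^3, \quad \dots \]

The MGF of X is given by
\[ \begin{aligned}
M_X(t) = E(e^{tX}) &= 1 + \frac{t}{1!}\mu'_1 + \frac{t^2}{2!}\mu'_2 + \frac{t^3}{3!}\mu'_3 + \dots + \frac{t^r}{r!}\mu'_r + \dots \\
&= 1 + \frac{t}{1!}\,2!\,2 + \frac{t^2}{2!}\,3!\,2^2 + \frac{t^3}{3!}\,4!\,2^3 + \dots \\
&= 1 + 2(2t) + 3(2t)^2 + 4(2t)^3 + \dots
\end{aligned} \]
\[ \therefore\ M_X(t) = (1 - 2t)^{-2}. \]

Now, differentiating w.r.to t, we get
\[ \begin{aligned}
M'_X(t) &= -2(1 - 2t)^{-3}(-2) \\
M''_X(t) &= 6(1 - 2t)^{-4}(-2)^2
\end{aligned} \]
\[ \begin{aligned}
\text{Now, First Moment} = \text{Mean} = E(X) = \mu'_1 &= M'_X(0) = 4 \\
\text{Second Moment} = E(X^2) = \mu'_2 &= M''_X(0) = 24 \\
\therefore\ Var(X) &= E(X^2) - [E(X)]^2 = 24 - 16 = 8.
\end{aligned} \]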
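For Example 5.19, the step from the moment series to the closed form $(1-2t)^{-2}$ can be sanity-checked numerically. The Python sketch below sums $\sum_r \frac{t^r}{r!}\mu'_r$ for a small value of t; the function names and the choice of 60 terms are assumptions for illustration, and the series is only expected to converge for $|t| < \frac{1}{2}$.

```python
from math import factorial

def raw_moment_519(r):
    """mu'_r = (r + 1)! * 2^r, as given in Example 5.19."""
    return factorial(r + 1) * 2 ** r

def mgf_from_moments(t, terms=60):
    """Partial sum of sum_r t^r / r! * mu'_r (intended for |t| < 1/2)."""
    return sum(t ** r / factorial(r) * raw_moment_519(r) for r in range(terms))

def mgf_closed(t):
    """Closed form (1 - 2t)^(-2) obtained in the solution."""
    return (1 - 2 * t) ** -2

print(mgf_from_moments(0.1), mgf_closed(0.1))
print(raw_moment_519(1), raw_moment_519(2) - raw_moment_519(1) ** 2)
```

The second print statement reproduces the mean and variance directly from the given moments, without differentiating the MGF.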

Example: 5.20. A random variable X takes the values -1, 0, 1 with probabilities $\frac{1}{8}, \frac{3}{4}, \frac{1}{8}$, respectively. Evaluate $P\{|X - \mu| \ge 2\sigma\}$ and compare it with the upper bound given by Tchebycheff's (Chebychev's) inequality.


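Solution:
\[ \begin{aligned}
E(X) &= (-1)\tfrac{1}{8} + (0)\tfrac{3}{4} + (1)\tfrac{1}{8} = 0 \\
E(X^2) &= \tfrac{1}{8} + 0 + \tfrac{1}{8} = \tfrac{1}{4} \\
Var(X) &= \tfrac{1}{4}, \quad \text{so } \sigma = \tfrac{1}{2}
\end{aligned} \]
\[ \therefore\ P\{|X - \mu| \ge 2\sigma\} = P\{|X| \ge 1\} = P\{X = -1 \text{ or } X = 1\} = \frac{1}{4} \]

By Chebychev's inequality,
\[ P\{|X - \mu| \ge c\} \le \frac{\sigma^2}{c^2} \]
Choosing $c = 2\sigma$,
\[ P\{|X - \mu| \ge 2\sigma\} \le \frac{1}{4} \]

In this problem both the values coincide.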
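The comparison in Example 5.20 between the exact tail probability and the Chebychev bound can be reproduced with exact fractions. This is a minimal sketch; `chebyshev_compare` is a hypothetical helper name, and the event $|X - \mu| \ge k\sigma$ is tested in the squared form $(x - \mu)^2 \ge k^2\sigma^2$ to avoid square roots.

```python
from fractions import Fraction as Fr

def chebyshev_compare(pmf, k):
    """Return (exact, bound) where exact = P(|X - mu| >= k*sigma)
    and bound = 1/k^2 is the Chebychev upper bound."""
    mean = sum(x * p for x, p in pmf.items())
    var = sum((x - mean) ** 2 * p for x, p in pmf.items())
    exact = sum(p for x, p in pmf.items() if (x - mean) ** 2 >= k * k * var)
    bound = Fr(1, k * k)
    return exact, bound

pmf_520 = {-1: Fr(1, 8), 0: Fr(3, 4), 1: Fr(1, 8)}
exact_520, bound_520 = chebyshev_compare(pmf_520, 2)
print(exact_520, bound_520)
```

For most distributions the exact probability is strictly smaller than the bound; this example is a case where the bound is attained.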

Note: Alternative form of Chebychev's inequality: If we put $c = k\sigma$, where $k > 0$, then it takes the form
\[ P\{|X - \mu| \ge k\sigma\} \le \frac{1}{k^2} \quad \text{or} \quad P\{|X - \mu| < k\sigma\} \ge 1 - \frac{1}{k^2} \]

Example: 5.21. A fair die is tossed 720 times. Use Tchebycheff's (Chebychev's) inequality to find a lower bound for the probability of getting 100 to 140 sixes.

Solution:
\[ p = P\{\text{getting `6' in a single toss}\} = \frac{1}{6}, \quad q = 1 - \frac{1}{6} = \frac{5}{6} \quad \text{and} \quad n = 720 \]
Let X be the number of sixes. X follows the binomial distribution with mean $np = 120$ and variance $npq = 100$, i.e. $\mu = 120$ and $\sigma = 10$.


By the alternative form of Chebychev's inequality,
\[ \begin{aligned}
P\{|X - \mu| \le k\sigma\} &\ge 1 - \frac{1}{k^2} \\
P\{|X - 120| \le 10k\} &\ge 1 - \frac{1}{k^2} \\
P\{120 - 10k \le X \le 120 + 10k\} &\ge 1 - \frac{1}{k^2}
\end{aligned} \]
Choosing k = 2, we get
\[ P\{100 \le X \le 140\} \ge \frac{3}{4} \]
∴ Required lower bound is $\frac{3}{4}$.

6 Function of a Random Variable

In the analysis of electrical systems, we are often interested in finding the properties of

a signal after it has been subjected to certain processing operations by the system, such as

integration, weighted averaging, etc. These signal processing operations may be viewed as

transformations of a set of input variables to a set of output variables. If the input is a set of

random variables (RVs), then the output will also be a set of RVs. In this chapter, we deal with

techniques for obtaining the probability law (distribution) for the set of output RVs when the

probability law for the set of input RVs and the nature of transformation are known.

Finding $f_Y(y)$, when $f_X(x)$ is given or known

Let us now derive a procedure to find $f_Y(y)$, the pdf of Y, when $Y = g(X)$, where X is a continuous RV with pdf $f_X(x)$ and $g(x)$ is a strictly monotonic function of x.

Case (i): $g(x)$ is a strictly increasing function of x.
\[ \begin{aligned}
F_Y(y) &= P[Y \le y], \quad \text{where } F_Y(y) \text{ is the cdf of } Y \\
&= P[g(X) \le y] \\
&= P[X \le g^{-1}(y)] \\
&= F_X[g^{-1}(y)]
\end{aligned} \]
Differentiating both sides with respect to y,
\[ f_Y(y) = f_X(x)\,\frac{dx}{dy}, \quad \text{where } x = g^{-1}(y) \tag{6.1} \]


Case (ii): $g(x)$ is a strictly decreasing function of x.
\[ \begin{aligned}
F_Y(y) &= P[Y \le y], \quad \text{where } F_Y(y) \text{ is the cdf of } Y \\
&= P[g(X) \le y] \\
&= P[X \ge g^{-1}(y)] \\
&= 1 - P[X \le g^{-1}(y)] \\
&= 1 - F_X[g^{-1}(y)]
\end{aligned} \]
Differentiating both sides with respect to y,
\[ f_Y(y) = -f_X(x)\,\frac{dx}{dy}, \quad \text{where } x = g^{-1}(y) \tag{6.2} \]

Combining (6.1) and (6.2), we get
\[ f_Y(y) = f_X(x)\left|\frac{dx}{dy}\right| \quad \text{or} \quad f_Y(y) = \frac{f_X(x)}{|g'(x)|} \]

Note: The above formula for $f_Y(y)$ can be used only when $x = g^{-1}(y)$ is single valued.

When $x = g^{-1}(y)$ takes finitely many values $x_1, x_2, x_3, \dots, x_n$, i.e., when the roots of the equation $y = g(x)$ are $x_1, x_2, x_3, \dots, x_n$, the following extended formula should be used to find $f_Y(y)$:

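\[ f_Y(y) = f_X(x_1)\left|\frac{dx_1}{dy}\right| + f_X(x_2)\left|\frac{dx_2}{dy}\right| + \dots + f_X(x_n)\left|\frac{dx_n}{dy}\right| \]
or
\[ f_Y(y) = \frac{f_X(x_1)}{|g'(x_1)|} + \frac{f_X(x_2)}{|g'(x_2)|} + \dots + \frac{f_X(x_n)}{|g'(x_n)|} \]

Example: 6.1. Let X be a random variable with pdf $f(x) = \frac{2}{9}(x + 1)$, $-1 < x < 2$. Find the pdf of the random variable $Y = X^2$.

Solution: Divide the interval into two parts (-1, 1) and (1, 2). Here $x = \pm\sqrt{y}$ and $\left|\frac{dx}{dy}\right| = \frac{1}{2\sqrt{y}}$, so
\[ f_Y(y) = \sum \left|\frac{dx}{dy}\right| f_X(x) \]
When $-1 < x < 1$, both roots contribute:
\[ f_Y(y) = \frac{1}{2\sqrt{y}} \cdot \frac{2}{9}\left[(1 + \sqrt{y}) + (1 - \sqrt{y})\right] = \frac{2}{9\sqrt{y}}, \quad 0 < y < 1. \]
When $1 < x < 2$, only the root $x = \sqrt{y}$ contributes:
\[ f_Y(y) = \frac{1}{2\sqrt{y}} \cdot \frac{2}{9}(1 + \sqrt{y}), \quad 1 < y < 4. \]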
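The extended formula can be tried out on Example 6.1 with a few lines of Python. The sketch below sums $f_X(x_i)/|g'(x_i)|$ over the two roots $x = \pm\sqrt{y}$ and lets the support of $f_X$ decide which roots count; the function names are illustrative, and the total-probability check uses the substitution $y = u^2$ to avoid the $1/\sqrt{y}$ singularity at 0.

```python
import math

def f_x(x):
    """pdf of X in Example 6.1: (2/9)(x + 1) on (-1, 2), zero elsewhere."""
    return 2.0 * (x + 1.0) / 9.0 if -1.0 < x < 2.0 else 0.0

def f_y(y):
    """pdf of Y = X^2: sum of f_X(x_i) / |g'(x_i)| over the roots x_i = +/- sqrt(y),
    where g'(x) = 2x."""
    if y <= 0.0:
        return 0.0
    root = math.sqrt(y)
    return sum(f_x(x) / abs(2.0 * x) for x in (root, -root))

def total_probability(n=2000):
    """Midpoint-rule integral of f_Y over (0, 4), written in the variable u
    with y = u^2 and dy = 2u du, so the integrand stays bounded."""
    h = 2.0 / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        total += f_y(u * u) * 2.0 * u
    return total * h

print(f_y(0.25), f_y(2.25), total_probability())
```

Roots that fall outside (-1, 2) contribute zero automatically, which is why the single function covers both ranges of y.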


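Example: 6.2. Let X be a random variable with pdf $f(x) = \frac{x}{12}$, $1 < x < 5$. Find the pdf of the random variable $Y = 2X - 3$.

Solution: Here $x = \frac{y + 3}{2}$, so $\frac{dx}{dy} = \frac{1}{2}$.
\[ f_Y(y) = \left|\frac{dx}{dy}\right| f_X(x) = \frac{1}{2} \cdot \frac{x}{12} \]
When $1 < x < 5$,
\[ f_Y(y) = \frac{y + 3}{48}, \quad -1 < y < 7. \]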

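Example: 6.3. If X is uniformly distributed in $(-\pi/2, \pi/2)$, find the pdf of $Y = \tan X$.

Solution: Given that X is uniformly distributed,
\[ \therefore\ f_X(x) = \frac{1}{\pi/2 - (-\pi/2)} = \frac{1}{\pi} \]
Since $x = \tan^{-1} y$, we have $\frac{dx}{dy} = \frac{1}{1 + y^2}$.
\[ \text{Now, } f_Y(y) = \left|\frac{dx}{dy}\right| f_X(x) = \frac{1}{(1 + y^2)} \cdot \frac{1}{\pi} \]
When $x \in (-\pi/2, \pi/2)$,
\[ f_Y(y) = \frac{1}{\pi(1 + y^2)}, \quad -\infty < y < \infty. \]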
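For strictly monotonic transformations such as those in Examples 6.2 and 6.3, the formula $f_Y(y) = f_X(x)\left|\frac{dx}{dy}\right|$ with $x = g^{-1}(y)$ can be wrapped in a small helper. The Python sketch below is illustrative only: `pdf_of_transform` is a made-up name, and the caller is assumed to supply the inverse function and its derivative.

```python
import math

def pdf_of_transform(f_x, g_inverse, dx_dy):
    """Return f_Y for Y = g(X), g strictly monotonic:
    f_Y(y) = f_X(g^{-1}(y)) * |dx/dy|."""
    def f_y(y):
        return f_x(g_inverse(y)) * abs(dx_dy(y))
    return f_y

# Example 6.2: f_X(x) = x/12 on (1, 5), Y = 2X - 3, so x = (y + 3)/2
f_y_62 = pdf_of_transform(
    lambda x: x / 12.0 if 1.0 < x < 5.0 else 0.0,
    lambda y: (y + 3.0) / 2.0,
    lambda y: 0.5,
)

# Example 6.3: X uniform on (-pi/2, pi/2), Y = tan X, so x = atan(y)
f_y_63 = pdf_of_transform(
    lambda x: 1.0 / math.pi if -math.pi / 2 < x < math.pi / 2 else 0.0,
    math.atan,
    lambda y: 1.0 / (1.0 + y * y),
)

print(f_y_62(3.0), f_y_63(1.0))
```

Values of y outside the range of g map to points outside the support of $f_X$, so the returned density is zero there.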


7 Exercise/Practice/Assignment Problems

1. Find the probability of drawing two red balls in succession from a bag containing 3 red

and 6 black balls when (i) the ball that is drawn first is replaced, (ii) it is not replaced.

2. A bag contains 3 red and 4 white balls. Two draws are made without replacement; what

is the probability that both the balls are red.


3. A random variable X has the following distribution

X         -2    -1    0     1     2     3
P[X=x]    0.1   k     0.2   2k    0.3   3k

Find (i) the value of k, (ii) the Distribution Function (CDF), (iii) $P(0 < X < 3 / X < 2)$ and (iv) the smallest value of $\alpha$ for which $P(X \le \alpha) > \frac{1}{2}$. Ans: $k = \frac{1}{15}$

4. A random variable X has the following distribution

X         0     1     2     3     4
P[X=x]    k     2k    5k    7k    9k

Find (i) the value of k, (ii) the Distribution Function (CDF), (iii) $P(0 < X < 3 / X < 2)$ and (iv) the smallest value of $\alpha$ for which $P(X \le \alpha) > \frac{1}{3}$. Ans: $k = \frac{1}{24}$

5. A random variable X has a pdf
\[ f(x) = \begin{cases} 3x^2, & \text{when } 0 \le x \le 1 \\ 0, & \text{otherwise} \end{cases} \]
Find (i) a, b such that $P(X \le a) = P(X > a)$ and $P(X > b) = 0.05$, (ii) the Distribution Function (CDF), (iii) $P(0 < X < 0.5 / X < 0.8)$. Ans: $a = \sqrt[3]{\frac{1}{2}}$, $b = \sqrt[3]{\frac{19}{20}}$

6. The amount of bread (in hundred kgs) that a certain bakery is to sell in a day is a random variable X with a pdf
\[ f(x) = \begin{cases} Ax, & \text{when } 0 \le x < 5 \\ A(10 - x), & \text{when } 5 \le x < 10 \\ 0, & \text{otherwise} \end{cases} \]
Find (i) the value of A, (ii) the Distribution Function (CDF), (iii) $P(X > 5 / X < 5)$, $P(X > 5 / 2.5 < X < 7.5)$, (iv) the probability that in a day the sales is (a) more than 500 kgs (b) less than 500 kgs (c) between 250 and 750 kgs. Ans: $A = \frac{1}{25}$

7. The Cumulative Distribution Function (CDF) of a random variable X is given by
\[ F(x) = \begin{cases} 1 - \frac{4}{x^2}, & \text{when } x > 2 \\ 0, & \text{otherwise} \end{cases} \]
Find (i) the pdf of X, (ii) $P(X > 5 / X < 5)$, $P(X > 5 / 2.5 < X < 7.5)$, (iii) $P(X < 3)$, $P(3 < X < 5)$.

8. A coin is tossed until a head appears. What is the expected value of the number of tosses? Also find its variance.

9. The pdf of a random variable X is given by
\[ f(x) = \begin{cases} a + bx, & \text{when } 0 \le x \le 1 \\ 0, & \text{otherwise} \end{cases} \]
Find (i) the value of a, b if the mean is 1/2, (ii) the variance of X, (iii) $P(X > 0.5 / X < 0.5)$

10. The first three moments about the origin are 5,26,78. Find the first three moments about

the value x=3. Ans: 2,5,-48

11. The first two moments about x=3 are 1 and 8. Find the mean and variance. Ans: 4,7

12. The pdf of a random variable X is given by
\[ f(x) = \begin{cases} k(1 - x), & \text{when } 0 \le x \le 1 \\ 0, & \text{otherwise} \end{cases} \]
Find (i) the value of k, (ii) the $r$th moment about origin, (iii) mean and variance. Ans: k = 2

13. An unbiased coin is tossed three times. If X denotes the number of heads that appear, find the MGF of X and hence find the mean and variance.

14. Find the MGF of the distribution whose pdf is $f(x) = ke^{-x}$, $x > 0$ and hence find its mean and variance.

15. The pdf of a random variable X is given by
\[ f(x) = \begin{cases} x, & \text{when } 0 \le x \le 1 \\ 2 - x, & \text{when } 1 < x \le 2 \\ 0, & \text{otherwise} \end{cases} \]
For this find the MGF and prove that mean and variance cannot be found using this MGF and then find its mean and variance using expectation.

16. The pdf of a random variable X is given by $f(x) = ke^{-|x|}$, $-\infty < x < \infty$. Find (i) the value of k, (ii) the $r$th moment about origin, (iii) the MGF and hence mean and variance. Ans: $k = \frac{1}{2}$

17. Find the MGF of the RV whose moments are given by µ′r = (2r)!. Find also its mean

and variance.

18. Use Tchebycheff’s(Chebychev’s) inequality to find how many times a fair coin must be

tossed in order to the probability that the ratio of the number of heads to the number of

tosses will lie between 0.45 and 0.55.


19. If X denotes the sum of the numbers obtained when 2 dice are thrown, obtain an upper

bound for P {|X − 7| ≥ 4} (Use Chebychev’s inequality). Compare with the exact

probability.

20. A RV X has mean $\mu = 12$ and variance $\sigma^2 = 9$ and unknown probability distribution. Find $P[6 < X < 8]$.

21. If a RV X is uniformly distributed over $(-\sqrt{3}, \sqrt{3})$, compute $P\left\{|X - \mu| \ge \frac{3\sigma}{2}\right\}$.

22. A RV X is exponentially distributed with parameter 1. Use Chebychev's inequality to show that $P(-1 < X < 3) \ge 3/4$. Find the actual probability to compare.

23. Given a RV X with density function
\[ f(x) = \begin{cases} 2x, & \text{when } 0 \le x \le 1 \\ 0, & \text{otherwise} \end{cases} \]
find the pdf of $Y = 8X^3$.

24. If X has an exponential distribution with parameter a, find the pdf of $Y = \log X$.

25. If X has an exponential distribution with parameter 1, find the pdf of $Y = \sqrt{X}$.

26. If X is uniformly distributed in (1, 2), find the pdf of $Y = 1/X$.

27. If X is uniformly distributed in $(-2\pi, 2\pi)$, find the pdf of (i) $Y = X^3$ and (ii) $Y = X^4$.

SOLVE/PRACTICE MORE PROBLEMS FROM THE TEXTBOOK AND REFERENCE BOOKS

Figure 7.1: Values of $e^{-\lambda}$.

Contact: (+91) 979 111 666 3 (or) athithansingaram@gmail.com

Visit: https://sites.google.com/site/lecturenotesofathithans/home
