Vehicular Edge Computing: Data Stream Classification Algorithms

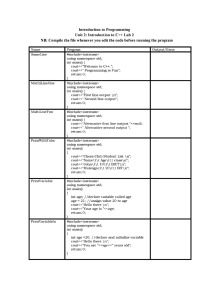
