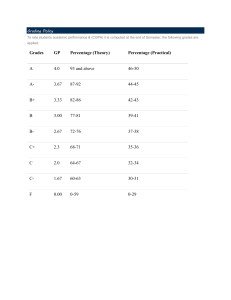

Grade Inflation: Academic Standards in Higher Education
