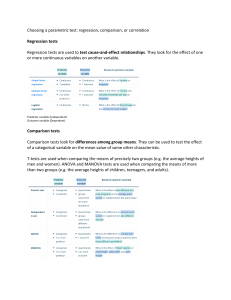

The T-TEST, ANOVA, CORRELATION, CHI-SQUARE, REGRESSION
Human Behavior
Submitted by: Salwa Buriro
Roll No: 2k19/HBBAE/19
Assigned by: Syed Safdar Ali Shah
BBA (Evening)

The T-TEST

T-tests offer a way to compare two groups on scores, such as differences between boys and girls or between children in different school grades. A t-test is a type of inferential statistic: an analysis that goes beyond describing the numbers in a sample and seeks to draw conclusions about the populations the sample comes from. The t-test is one of many tests used for hypothesis testing in statistics.

Calculating a t-test requires three key data values: the difference between the mean values of the two data sets (called the mean difference), the standard deviation of each group, and the number of data values in each group. The t-test analyzes the difference between the two means derived from the different group scores and tells the researcher whether that difference is larger than would be expected by chance.

There are three versions of the t-test:
1. Independent samples t-test (compares the means of two groups)
2. Paired samples t-test (compares means from the same group at different times)
3. One sample t-test (tests the mean of a single group against a known mean)

Dependent samples t-test (also called repeated measures t-test or paired samples t-test)
T-tests are used when we want to compare two groups of scores and their means. Sometimes, however, the participants in one group are meaningfully related to the participants in the other group. A common example of such a relation is a pre-test/post-test research design: because the participants at the pre-test are the same participants at the post-test, the scores between pre- and post-test are meaningfully related. In such cases the dependent samples t-test is used instead of the independent samples version.
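The three versions of the t-test differ mainly in how the standard error of the mean difference is formed. The sketch below computes only the t statistic for each version; converting it to a p-value needs the t distribution (for example from a statistics library) and is left out. The function names are hypothetical, and using Welch's unpooled standard error for the independent case is an assumption chosen because it needs exactly the three key values named above (means, standard deviations, group sizes).

```python
import math
from statistics import mean, stdev


def welch_t(mean1, mean2, sd1, sd2, n1, n2):
    """Independent samples t statistic (Welch's form) from summary values."""
    # Standard error of the mean difference: each group's variance divided by its size
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return (mean1 - mean2) / se


def paired_t(before, after):
    """Dependent (paired) samples t statistic, e.g. pre-test vs post-test scores."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    # Each participant contributes one difference score, so the pairs are not independent
    diffs = [a - b for b, a in zip(before, after)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


def one_sample_t(sample, known_mean):
    """One sample t statistic: a single group's mean against a known mean."""
    return (mean(sample) - known_mean) / (stdev(sample) / math.sqrt(len(sample)))


pre = [10, 12, 9, 11, 13]    # hypothetical pre-test scores
post = [12, 14, 10, 13, 15]  # hypothetical post-test scores, same participants
print(paired_t(pre, post))   # approximately 9.0
```

A large absolute t means the mean difference is large relative to its standard error, which is what "larger than would be expected by chance" refers to.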
Independent samples t-test
The independent samples t-test is used to compare two groups whose means are not dependent on one another, that is, when the participants in each group are independent of each other and form two separate groups of individuals with no links to particular members of the other group.

One sample t-test
The one sample t-test compares the mean of a single group against a known mean.

Analysis of Variance
Analysis of variance (ANOVA) is a test of hypothesis that is appropriate for comparing the means of a continuous variable in two or more independent comparison groups. The fundamental strategy of ANOVA is to systematically examine the variability within the groups being compared and the variability among those groups.

ANOVA can be carried out using the five-step approach:
Step 1. Set up hypotheses and determine the level of significance.
Step 2. Select the appropriate test statistic.
Step 3. Set up the decision rule.
Step 4. Compute the test statistic.
Step 5. Draw a conclusion.

There are two types of ANOVA:
1. One Way ANOVA
2. Two Way ANOVA

One Way ANOVA: A one-way ANOVA compares the means of two or more independent (unrelated) groups using the F-distribution. The null hypothesis is that all the group means are equal, so a significant result means that at least two of the means differ. A one-way ANOVA will tell you that at least two groups differ from each other, but it won't tell you which groups they are.

Two Way ANOVA: A two-way ANOVA is an extension of the one-way ANOVA. In a one-way ANOVA, one independent variable affects a dependent variable; in a two-way ANOVA, there are two independent variables. Use a two-way ANOVA when you have one measurement variable (i.e., a quantitative variable) and two nominal variables.
In other words, if your experiment has a quantitative outcome and two categorical explanatory variables, a two-way ANOVA is appropriate.

CHI-SQUARE
A chi-squared test, also written as χ² test, is any statistical hypothesis test in which the sampling distribution of the test statistic is a chi-squared distribution when the null hypothesis is true. Without other qualification, "chi-squared test" is often used as shorthand for Pearson's chi-squared test. The chi-squared test is used to determine whether there is a significant difference between the expected frequencies and the observed frequencies in one or more categories.

In the standard applications of this test, the observations are classified into mutually exclusive classes, and some theory, or null hypothesis, gives the probability that an observation falls into each class. The purpose of the test is to evaluate how likely the observed data would be if the null hypothesis were true.

In cryptanalysis, the chi-squared test is used to compare the distribution of plaintext and (possibly) decrypted ciphertext. A low value of the test statistic means that the decryption was successful with high probability. This method can be generalized for solving modern cryptographic problems.

There are two types of chi-square tests. Both use the chi-square statistic and distribution, but for different purposes:
1. Chi-square goodness of fit test
2. Chi-square test for independence

Chi-square goodness of fit test
A chi-square goodness of fit test determines whether sample data match a hypothesized population distribution. Karl Pearson, after whom the test is named, proposed a family of continuous probability distributions (the Pearson distributions), which includes the normal distribution and many skewed distributions. He also proposed a method of statistical analysis that uses a Pearson distribution to model the observations and then performs a test of goodness of fit to determine how well the model and the observations really fit.
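The goodness of fit test compares observed counts with the counts expected under the null hypothesis through Pearson's statistic, the sum of (O − E)² / E over all categories. The sketch below applies it to a coin tossed 100 times; the counts are hypothetical, and the 5% critical value 3.841 for one degree of freedom is hard-coded as an assumption instead of being computed from a chi-square distribution library.

```python
def chi_square_statistic(observed, expected):
    """Pearson's chi-square statistic: sum of (O - E)^2 / E over all categories."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same number of categories")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical data: 100 coin tosses with 58 heads and 42 tails
observed = [58, 42]
expected = [50, 50]        # counts expected from a fair coin under the null hypothesis
stat = chi_square_statistic(observed, expected)
df = len(observed) - 1     # degrees of freedom: number of categories minus one
CRITICAL_1DF_5PCT = 3.841  # 5% critical value of the chi-square distribution, df = 1
print(f"chi-square = {stat:.2f}, df = {df}, reject H0: {stat > CRITICAL_1DF_5PCT}")
# prints: chi-square = 2.56, df = 1, reject H0: False
```

Here the statistic (2.56) is below the critical value, so a 58/42 split in 100 tosses is not strong evidence against a fair coin at the 5% level.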
The goodness-of-fit test asks something like "If a coin is tossed 100 times, will it come up heads 50 times and tails 50 times?"

Chi-square test for independence
A chi-square test for independence compares two variables in a contingency table to see whether they are related. In a more general sense, it tests whether the distributions of categorical variables differ from one another. A very small chi-square test statistic means that the observed data fit the data expected under independence extremely well; in other words, there is no evidence of a relationship. A very large chi-square test statistic means that the data do not fit the independence model well; in other words, there is evidence of a relationship. The test of independence asks a question about a relationship, such as "Is there a relationship between gender and SAT scores?"

Correlation
Correlation is a statistical measure that indicates the extent to which two or more variables fluctuate together. A positive correlation indicates the extent to which those variables increase or decrease in parallel; a negative correlation indicates the extent to which one variable increases as the other decreases. A correlation coefficient is a statistical measure of the degree to which changes in the value of one variable predict changes in the value of another. When the fluctuation of one variable reliably predicts a similar fluctuation in another variable, there is often a tendency to think that the change in one causes the change in the other.

In finance, correlation can measure how a stock moves relative to a benchmark index; beta, a related measure, scales this correlation by the ratio of the stock's volatility to the index's volatility. Correlation measures association, but it does not tell you whether x causes y or vice versa, or whether the association is caused by some third (perhaps unseen) factor.
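The Pearson correlation coefficient r divides how much two variables vary together around their means by the product of how much each varies on its own, which keeps r between −1 and +1. The minimal sketch below (the function name and data are hypothetical) computes it directly from that definition; in Python 3.10 and later, `statistics.correlation` computes the same Pearson coefficient.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 values each")
    mx, my = sum(x) / n, sum(y) / n
    # Joint variation of x and y around their means (the numerator of the covariance)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    # Variation of each variable on its own
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))  # 1.0: both increase in parallel
print(pearson_r([1, 2, 3, 4], [8, 6, 4, 2]))  # -1.0: one increases as the other decreases
```

Note that r only quantifies association; a value near ±1 says nothing about which variable, if either, causes the other.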
Investment managers, traders and analysts consider correlation very important, because the risk reduction benefits of diversification rely on this statistic.

There are four common types of correlation:
1. Pearson correlation
2. Kendall rank correlation
3. Spearman rank correlation
4. Point-biserial correlation

Pearson correlation: Pearson correlation is the most widely used correlation statistic for measuring the degree of the relationship between linearly related variables.

Kendall rank correlation: Kendall rank correlation is a non-parametric test that measures the strength of dependence between two variables.

Spearman rank correlation: Spearman rank correlation is a non-parametric test used to measure the degree of association between two variables. It does not carry any assumptions about the distribution of the data and is the appropriate correlation analysis when the variables are measured on a scale that is at least ordinal.

Point-biserial correlation: The point-biserial correlation is computed with the Pearson correlation formula, except that one of the variables is dichotomous.

Regression
Regression analysis is a powerful statistical method that allows you to examine the relationship between two or more variables of interest. While there are many types of regression analysis, at their core they all examine the influence of one or more independent variables on a dependent variable. Regression analysis provides detailed insight that can be applied to improve products and services, and it is a reliable method of identifying which variables have an impact on a topic of interest.
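As an illustration of the simplest case, one independent variable influencing one dependent variable, the sketch below fits a straight line by ordinary least squares. The text does not prescribe a fitting method; least squares is assumed here because it is the standard estimator for linear regression, and the function names and data are hypothetical.

```python
def fit_simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x for one predictor."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 values each")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("the independent variable must not be constant")
    slope = sxy / sxx               # change in y per one-unit change in x
    intercept = my - slope * mx     # the fitted line passes through (mean x, mean y)
    return intercept, slope


def predict(intercept, slope, x_new):
    """Predicted value of the dependent variable for a new x."""
    return intercept + slope * x_new


x = [1, 2, 3, 4, 5]     # hypothetical independent variable (e.g. advertising spend)
y = [3, 5, 7, 9, 11]    # hypothetical dependent variable (e.g. sales)
b0, b1 = fit_simple_linear_regression(x, y)
print(b0, b1, predict(b0, b1, 6))  # 1.0 2.0 13.0
```

The slope is the estimated influence of the independent variable on the dependent variable; with several independent variables the same idea extends to multiple regression.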
There are seven common types of regression:
1. Linear Regression
2. Logistic Regression
3. Polynomial Regression
4. Stepwise Regression
5. Ridge Regression
6. Lasso Regression
7. Elastic Net Regression

Linear Regression: In this technique, the dependent variable is continuous, the independent variable(s) can be continuous or discrete, and the regression line is linear.

Logistic Regression: Logistic regression is used to find the probability of an event's success versus its failure. It should be used when the dependent variable is binary (0/1, True/False, Yes/No).

Polynomial Regression: A regression equation is a polynomial regression equation if the power of the independent variable is greater than 1.

Stepwise Regression: This form of regression is used when we deal with multiple independent variables. The independent variables are selected by an automatic process that involves no human intervention.

Ridge Regression: Ridge regression is a technique used when the data suffer from multicollinearity (the independent variables are highly correlated).

Lasso Regression: Lasso (Least Absolute Shrinkage and Selection Operator) also penalizes the size of the regression coefficients, but where ridge regression penalizes their squares, lasso penalizes their absolute values, which can shrink some coefficients exactly to zero. It is capable of reducing variability and improving the accuracy of linear regression models.

Elastic Net Regression: Elastic net is a hybrid of the lasso and ridge regression techniques. It is trained with both L1 and L2 priors as regularizers. Elastic net is useful when there are multiple features that are correlated with one another: lasso is likely to pick one of them at random, while elastic net is likely to pick both.
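The difference between the ridge (L2), lasso (L1) and elastic net penalties can be seen in the smallest possible case: one predictor, with x and y already centered so there is no intercept. Minimizing ½·Σ(y − b·x)² + l1·|b| + ½·l2·b² then has the closed form b = S(Σxy, l1) / (Σx² + l2), where S is the soft-thresholding function. This is a hypothetical sketch under those assumptions, not any library's implementation; libraries scale the penalty terms differently, so l1 and l2 here are not directly comparable to a particular package's parameters. With a single predictor it shows shrinkage and zeroing, but not elastic net's behaviour with several correlated features.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t, returning exactly 0.0 when |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def penalized_slope(x, y, l1=0.0, l2=0.0):
    """Minimize 0.5*sum((y - b*x)^2) + l1*|b| + 0.5*l2*b^2 over b (one predictor).

    Assumes x and y are centered (no intercept). l1 = l2 = 0 gives ordinary least
    squares, l1 = 0 gives ridge, l2 = 0 gives lasso, both > 0 give elastic net.
    """
    sxy = sum(a * b for a, b in zip(x, y))
    sxx = sum(a * a for a in x)
    if sxx + l2 == 0:
        raise ValueError("x must not be all zeros when l2 = 0")
    return soft_threshold(sxy, l1) / (sxx + l2)


x = [-2, -1, 0, 1, 2]   # hypothetical centered predictor
y = [-4, -2, 0, 2, 4]   # hypothetical centered response
print(penalized_slope(x, y))                # 2.0  (least squares)
print(penalized_slope(x, y, l2=10))         # 1.0  (ridge: shrunk, never exactly 0)
print(penalized_slope(x, y, l1=10))         # 1.0  (lasso: shrunk)
print(penalized_slope(x, y, l1=25))         # 0.0  (lasso: set exactly to zero)
print(penalized_slope(x, y, l1=10, l2=10))  # 0.5  (elastic net: both penalties)
```

Increasing l2 alone only divides the estimate by a larger number, whereas l1 subtracts a fixed amount and can reach zero, which is why lasso and elastic net can drop variables from a model while ridge keeps them all.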