Philip E. Tetlock - Superforecasting: The Art and Science of Prediction

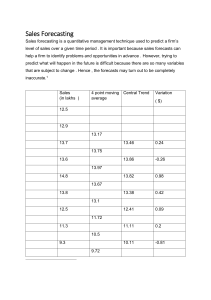