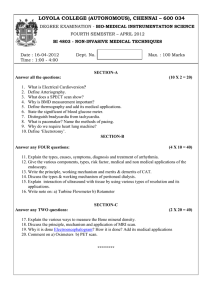

Expert Systems with Applications 159 (2020) 113547 Contents lists available at ScienceDirect Expert Systems with Applications journal homepage: www.elsevier.com/locate/eswa Simultaneous localization and mapping using swarm intelligence based methods Nadia Nedjah a,⇑, Luiza Macedo Mourelle de b, Pedro Jorge Albuquerque de Oliveira a a b Department of Electronics Engineering and Telecommunication, Engineering Faculty, State University of Rio de Janeiro, RJ, Brazil Department of System Engineering and Computation, Engineering Faculty, State University of Rio de Janeiro, RJ, Brazil a r t i c l e i n f o Article history: Received 9 September 2019 Revised 8 May 2020 Accepted 9 May 2020 Available online 13 May 2020 Keywords: SLAM Scan matching PSO FA ABC a b s t r a c t The problem known as simultaneous localization and mapping is of fundamental importance both in its own right and because of its potential applications in the development of autonomous robots. While many solutions exist that are based on classical Newton-like optimization techniques regarding scan matching, relatively no work has been done with respect to the application of derivative free bioinspired techniques to this particular area of robotics. That being said, we propose a novel approach to the scan-matching step within the Simultaneous Localization And Mapping (SLAM) problem based on the exploitation of swarm intelligence. For this purpose, we have chosen three swarm intelligence optimization methods to be the subjects of our research investigation, namely particle swarm optimization, artificial bee colony and the firefly algorithm. Aiming at reducing further the translational and rotational scan alignment errors, the proposed scan matching proceeds in two main steps, namely scan-to-scan and scan-to-map matching. Furthermore, we have made use of a pose graph based approach as a means of maintaining the consistency of our estimates across the mapping process. Systems designed to perform simultaneous localization and mapping using pose graphs are currently the state of the art and it is our belief that a robust scan-matching system is currently of the utmost importance to further the field. We tested the proposed solution in many scenarios. We conclude that the artificial bee colony strategy is efficient in a large range of circumstances. This affirmation is backed by the fact that in the best case scenarios, we have obtained good and some times better accuracy gains, regarding the translational and rotational estimates of the robot’s trajectory, by using the this meta-heuristic, when compared to state of the art SLAM systems. The firefly algorithm, while not as accurate as the artificial bee colony technique, was faster on 7 out of the 8 public domain datasets. The firefly algorithm consumed, on average less time spent per scan than by the artificial bee colony optimization technique. Particle swarm optimization has shown an inferior accuracy when compared to the artificial bee colony optimization technique and an intermediate processing time when compared to the other two optimization methods. So, firefly based meta-heuristic can be considered as the technique that provides a good trade-off between the high accuracy offered by the artificial bee colony and the high execution speed of the particle swarm optimization. Ó 2020 Elsevier Ltd. All rights reserved. 1. Introduction The Simultaneous Localization and Mapping (SLAM) problem deals with the intertwined tasks of estimating the trajectory of a moving agent and a map of the environment in which it takes place. This area of research has received a great deal of attention from the robotics research community in particular and the expert and intelligent systems research community in general, over the ⇑ Corresponding author. E-mail addresses: nadia@eng.uerj.br (N. Nedjah), ldmm@eng.uerj.b (L. Macedo Mourelle de), pedro.jorge@uerj.br (P.J. Albuquerque de Oliveira). https://doi.org/10.1016/j.eswa.2020.113547 0957-4174/Ó 2020 Elsevier Ltd. All rights reserved. past few decades due to its fundamental role in the construction of truly autonomous robots (Thrun, Burgard, and Fox, 2005). The problem itself has been cast into many different forms. The abundance of formulations is due in great part to apply specific techniques aiming at maximizing the performance of each solution in its particular application niche or perhaps in an exploratory fashion, thus enabling the analysis of the behavior of specific methods. In this work we present a system capable of solving the SLAM problem by making use of scan-matching procedure based on swarm intelligence optimization meta-heuristics. In the case of SLAM a map takes the role of world model, and its estimation is conjugated to the inference of the robot’s trajectory 2 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 therein. The map may take many different forms such as feature maps, occupancy grid maps, and point clouds. Independently of its form, the construction of a coherent world model, however it may present itself, begets the need to obtain information from various locations in the environment one seeks to model. For the purpose of analyzing the relations between the data available, the data may be regarded as belonging to data frames, where each data frame could be, for example, a set of laser range scan data, a set of photographs taken from the same vantage point, a group of sonar readings or whatever other form of data the sensors may provide. The acquisition of these data frames leads to another sort of challenge because the measurements gathered are always expressed in a local reference frame of an entity whose position is unknown and whose very task requires motion. In the case of laser scan data, as was used in the present work, the sensor returns a set of distances to objects that lie within a specific angular sector around the robot, and it is the role of the SLAM back end to take that information and in as efficient and accurate a manner possible return a data frame that may be utilized to update the global model, wherefore a procedure capable of performing the alignment of data frames (scan matching) must be employed (LLu and Miliosu and Milios, 1997). Scan matching and the closely related image registration problem are a fundamental part of many methods developed to address the problems of simultaneous localization and mapping, structure from motion, object recognition, image fusion and 3D modeling. In most cases, the goal is to find a transformation that leads to maximum overlap between two sets of measurements taken from different viewpoints, or perhaps, from a single vantage point at two different instants in time or even between a set of measurements and a global model being constructed. In the context of SLAM, such a procedure is usually employed either to recover, or to enhance a gross estimate of the relative motion observed between two data frames. In the specific case of laser range data, the problem of aligning measurements is often called scan alignment or scan matching. There are many different formulations of the scanmatching problem, as touching the form taken by the data and the pattern to be aligned. The most commonly encountered such variations are: scan-to-scan; scan-to-line; line-to-line; scan-tomap; map-to-map. The distinction between these formulations, and especially their reason for being, is but one of type, and level of complexity, of the pre-processing performed on the sensed data. It is thus sufficient to note that each one tries to exploit a particular characteristic of the data in order to get a better result under a specific set of conditions. For instance, the scan-to-line formulation is expected to have a better performance than the simple alignment of raw scan data, if the data in question has a strong linear trend, but may perform poorly if the entries of the covariance matrix of the scan data are of similar magnitude. The map-tomap approach tries to exploit all of the available data and is usually seen in the form of correlative matching (Thrun et al., 2005). Nonetheless, for all its advantages, like less sensitivity to outliers and more effective usage of past information, the large number of floating point operations it requires, makes it computationally expensive to perform, in some cases prohibitively so. This is said, it is of utmost importance to emphasize that the performance of the implementation of the scan-matching step is the main bottleneck for any SLAM solution. Hence, it is safe to establish that any improvement in terms of accuracy and/or execution speed regarding scan matching is most welcome in any SLAM system. In this paper we present a solution to the SLAM problem that is based on scan matching through bio-inspired derivative free optimization methods. The advantage of such an approach is apparent from the well-known multi-modality of the scan-matching problem. As far as we are concerned, this approach has not been investigated before. Furthermore, we have employed a graph-based back-end to allow this system to be consistent in exploration sessions that are either too long or cover too large an area. In both cases, a solution based exclusively on scan matching is known to fail due to the incremental nature of the estimation error. In addition, graph-based SLAM systems are currently considered state of the art due to their accuracy, capacity to recover from association errors and the speed of execution, permitted by recent advances in computational methods used in sparse linear algebra. This work provides contributions both in the form of a comparison of the methods employed, which opens new exploration paths within this relatively unexplored sub-field of the SLAM research, and in the system developed itself that, as will become apparent, has demonstrated a comparable performance to well-known approaches to the SLAM problem. Indeed, our proposal has, in the scenarios we tested, either matched the accuracy of other systems within this particular field or exceeded it. Moreover, in all cases over the course of the experiments conducted we have attained a more consistent response, as demonstrated by the fact that the coefficient of variation of the error metric employed has been smaller than all other systems. This substantiates our claim that a potential application of the method presented herein would be to treat SLAM where consistency of the responses, and the ability to correctly predict the performance of the system, is more important than raw speed or accuracy. The rest of this paper is organized in sections. First, in Section 2, we make the tour of relevant existing works related to solving the SLAM problem. Then, in Section 3, we give a general overview of the slam problem and related challenges, including localization and mapping. After that, in Section 4, we describe the main steps of the optimization techniques used, which are based on swarm intelligence. Subsequently, in Section 5, we explain the proposed method to perform robot SLAM efficiently yet accurately using computational swarm intelligence. There follows, in Section 6, the performance results and their analysis, discussion and comparison with existing state-of-the-art solutions. Finally, in Section 7, we draw some conclusions of the described works and point out some promising future directions to improve the proposed methodology. 2. Related works In robotics, the SLAM problem was introduced through a series of papers by Cheeseman et Al., in which the extended Kalman filter (EKF) SLAM algorithm was first described (SSmith and Cheesemanmith and Cheeseman, 1986) and revisited in Smith, Self, and Cheeseman (1990). The first mention of relative graph-like constraints in the SLAM literature date back to the publications by Cheeseman and Smith in 1986, SSmith and Cheesemanmith and Cheeseman (1986). These approaches, however, lacked any form of global relaxation or optimization in their approach to solve the problem. Historically, the representation of the SLAM prior as a set of links between robot poses was first presented in a seminal paper by LLu and Miliosu and Milios (1997). It is, indeed, in LLu and Miliosu and Milios (1997) that, for the first time, the SLAM problem was cast in the form of a global optimization problem and where the first algorithm to derive a map from such a set of constraints was first presented. Unique to their approach was the usage of the robot’s pose variables as the frame of reference, which greatly diverges from the point of view of standard EKF based solutions where the poses are integrated out. In the rest of this section, we give a chronological retrospective of the main related works of the state-of-the-art. In BBesl and McKayesl and McKay (1992), the most frequently cited reference for the Iterative Closest Point (ICP) algorithm, the authors present numerous formulae for calculating the distance N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 between different representations of the geometric data under consideration and proceed to show in what manner those distance measures can be utilized within the framework of the ICP algorithm. In addition, the authors also propose an Accelerated ICP and perform an analysis of the convergence properties of both algorithms (Luo, 2017). In LLu and Miliosu and Milios (1997), the authors present a comprehensive body of work on the subject of consistent laser scan alignment and registration employing the concept of pose graphs, and thus utilizing Pose Graph Optimization (PGO). The authors do affirm that by maintaining a complete record of all the data frames acquired while a mapping agent moves through an environment it is possible to utilize the information there contained to construct a network of relations between those sets of sensed data and, by combining odometric information and scan matching based constraints, a map of said environment. The map construction procedure is made possible by assuming that the trajectory most likely to be correct is the one that minimizes what they call the ‘‘network energy”. In BBiber and Strasseriber and Strasser (2003), the authors propose an alternative representation for a range scan, which termed the Normal Distributions Transform (NDT), which can be considered as a point-cloud matching method. This representation is similar to an occupancy grid as it subdivides the 2D plane into cells, and associates to each entry a normal distribution that locally models the probability of measuring a point. This results into a piecewise continuous and differentiable probability density that can be used to match a given scan using Newton’s algorithm. So, no explicit correspondences have to be established. In Nieto, Bailey, and Nebot (2007), the authors describe an approach to SLAM based on the joint application of extended Kalman filters and scan matching. The method, called Scan-SLAM, was devised with the intent of generalizing EKF-SLAM’s applicability to environments with markers that could be defined by simple geometrical models. The authors also proposed that the markers could be defined by templates comprised of unprocessed measurements. They defend the idea that templates, thus defined, may exploit most of the information contained within scans to, for example, improve the information contained in the templates along the operation of the system. Moreover, given the way the authors defined these templates, it is possible to envision the application of data fusion to create higher dimensional templates, an example of which could be the fusion of camera image and metric data from laser range finders. In Grisetti, Stachniss, and Burgard (2007), a system called GMapping based on Rao-Blackwell particle filters (RBPF) is proposed. However, it is noteworthy to point out that an RBPF has been exploited earlier in the FastSLAM algorithm (Montemerlo, Thrun, Koller, and Wegbreit, 2002), wherein landmark maps are used instead of grid maps. In general, Rao-Blackwell particle filters based algorithms break down the state space into a analytical part and a non-analytical part, which are interconnected to form a filter bank. It is a well-known fact that several implementations of RaoBlackwell particle filters require a high memory consumption, considering that each particle must store an environment map with it. It is therefore of great interest to use the smallest number of particles necessary for the effective operation of the SLAM system. The system proposed in Grisetti et al. (2007) uses a occupancy grid map environment representation. The fundamental notion used to reduce the number of particles needed is the Rao-Blackwell theorem, which claims that it is possible to initially estimate the robot’s trajectory and then use it to generate the map, which is strongly dependent on it. In effect, this factoring makes it possible to obtain the map efficiently, since both the pose and the observations are known. A significant improvement offered by GMapping is its proposed distribution for sampling. In general, systems based on par- 3 ticle filters use only information from the odometry-based motion model. However, the proposed system in Grisetti et al. (2007) uses in addition to these data, the last measurement performed, thus focusing on sampling in the most likely regions. In DDiosi and Kleemaniosi and Kleeman (2007), the authors propose an algorithm, which they denote as polar scan matching (PSM). In this algorithm, the measurements obtained by means of a laser sensor are aligned as polar coordinates. As this kind of sensor measures distances to the nearest obstacles in a scan along a predetermined angular space, the use of the proposed method makes the repeated transformation of the measured values for a Cartesian system unnecessary. Furthermore, it was possible to take advantage of the fact that in a polar system, displacements along the angular coordinate correspond to rotations in a Cartesian system. This fact that can be used to estimate orientation in a SLAM system that applies this technique. In Censi (2008), the authors describe an algorithm, called pointto-line ICP (PLICP), for scan matching based on the usage of a point to line metric which, they claim, allows them to better gauge the distance between the point and the surface whereof the scan was obtained. The authors proceed to describe a closed form solution to the minimization of this metric and affirm the algorithm that employs it converges quadratically in the number of iterations, unlike the classical ICP that converges linearly in the number of iterations (BBesl and McKayesl and McKay, 1992). In Segal, Haehnel, and Thrun (2009), the authors combine the Iterative Closest Point ICP (BBesl and McKayesl and McKay, 1992) and point-to-plane ICP (CChen and Medionihen and Medioni, 1992) algorithms into a single probabilistic framework, called Generalized ICP (GICP). Then, they use this framework to model locally the planar surface structure obtained from both scans instead of just the model scan as is typically done with the point-to-plane method (CChen and Medionihen and Medioni, 1992). This approach can be thought of as plane-to-plane scan matching. This approach proved to be more robust to inaccurate associations, and thus allowed a better tunning regarding the maximum match distance parameter present in most variants of ICP. In Olson (2009), the computational power available to modern robots is taken advantage of as it motivates the investigation of correlative scan-matching solutions that examine quality vs. complexity trade-offs. The authors propose a probabilistic-based scanmatching algorithm that produces higher quality and more robust results at the cost of additional computation time. The approach casts the problem in a probabilistic, attempting to find the correct transformation that maximizes the probability of having the observed data. They describe several novel implementations of the proposed approach that achieve real-time performance on modern hardware, including a multi-resolution implementation for conventional CPUs and a parallel implementation for graphics processing units (GPUs). In CChatterjee and Matsunohatterjee and Matsuno (2010), the authors exploit a proposed modified Particle Swarm Optimization, called Geese PSO algorithm, to tune a fuzzy supervisor for an adaptive EKF based approach to solve the SLAM problems for mobile robots. They demonstrate that the proposed approach provides better estimation and map-building performance in comparison with those fuzzy supervisors for the adaptive EKF algorithm, where the free parameters of the fuzzy systems are tuned using basic PSO based algorithm. The utility of the proposed approach is aptly demonstrated by employing it for several benchmark environment situations with various numbers of waypoints and landmarks, where the Geese PSO algorithm could tune the fuzzy supervisor better than the canonical PSO based algorithm. In Chatterjee, Ray, Chatterjee, and Rakshit (2011), the authors demonstrates a successful real implementation of an EKF based SLAM algorithm for indoor environments, utilizing two web-cam 4 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 based stereo-vision sensing mechanism. The vision-sensing mechanism used was a successful development of a real algorithm for image feature identification in frames grabbed from continuously running videos on 2 cameras, tracking of the identified features in input frames and incorporation of these landmarks in the yielded map. They utilize a 3D distance computation method. The system was test-run in laboratory environments wherein the robot is commanded to navigate through a given specified trajectory defined by waypoints and create a map of its surrounding environment. In Mingas, Tsardoulias, and Petrou (2012) the authors propose a solution to the SLAM problem via scan matching performed by means of a Genetic Algorithm (GA). In their work, the authors applied a raw scan to global occupancy grid map matching procedure. Furthermore, a Field Programmable Gate Array (FPGA) based implementation is proposed and compared to a pure software version running on a general-purpose computer. The results presented were promising, but after analysis, the authors claimed that the method weaknesses regarding rotational error accumulation of and the difficulty to correctly determine rigid transformations in environments with little visual changes may be verified in spite of large motions. In Mutz et al. (2016), the authors present an end-to-end framework for precise large-scale mapping with applications in autonomous car driving. In special, they study the problem of mapping complex environments, with features changing from tree-lined streets to urban areas with dense traffic. The used robotic car is equipped with an odometry sensor, a 3D light detection and ranging device (LiDAR) and a low cost global positioning system (GPS). The proposed approach integrates the data generated by these sensors into a pose-based GraphSLAM estimator. They contributed a new strategy for identification and correction of odometry data using evolutionary algorithms is presented. This new strategy makes odometry data significantly more consistent with GPS. Loop closures are detected using GPS data, and Generalized ICP (GICP), a 3D point cloud scan-matching registration algorithm to estimate the displacement between the different travels over the same region (Segal et al., 2009). After path estimation, the 3D LiDAR data is used to build an occupancy grid mapping of the environment. The proposed framework is tested in real-world environments with different characteristics, whereby the results were satisfactory regarding precision in loop closures even when the vehicle traveled long distances. In Akai, Morales, Takeuchi, Yoshihara, and Ninomiya (2017), NDT (BBiber and Strasseriber and Strasser, 2003) is extended to 3D NDT, wherein it is proposed to estimate the error of NDT scan matching beforehand (i.e. off-line), and while the vehicle navigates in the environment, the appropriate uncertainty is assigned to the scan matching. The off-line estimate is combined with a roadmarker matching approach using a particle-filtering algorithm. It further improved the localization estimates in the final SLAM. In Jeong, Yoon, and Park (2018), the authors propose a new approach to address the semantic 3D mapping, which is mainly composed of 3D reconstruction and semantic segmentation. They use 2D convolutional neural network for semantic segmentation. To build a semantic 3D map, they integrate the 3D map with semantic information, using coordinate transformation and Bayes’ update scheme, which is improved via a 3D refinement process to correct inaccurate segmented voxels and remove traces of moving vehicles in the 3D map. Through experiments on challenging sequences, they demonstrate that the proposed method outperforms state-of-the-art methods in terms of accuracy and intersection over union (IoU). In Zhang, Wang, and hai Chen (2019), the authors address the self-localization that allows a mobile robot to identify and keep track of its own position and orientation as the robot moves through the environment. They propose a hybrid localization approach based on the particle filter and particle swarm optimization algorithm. Their methodology is focused on the localization tasks when an a priori environment map is available. The work yielded an accurate and robust particle filter based localization algorithm that is able to work in symmetrical environments. The performance of the proposed approach is efficient in indoor robot localization. 3. The SLAM problem The SLAM problem consists of the concurrent estimation of the robot trajectory and a map of the environment in which a robotic entity moves in the course of its operation. A solution to this problem is required in situations in which a robot has no access to its trajectory and/or to a map of the environment wherein it moves. Any SLAM system needs to provide a solution to the trajectory estimation, i.e. solve the localization problem, and to the approximate environment map building, i.e. solve the mapping problem. In Section 3.2, we introduce the required concepts to tackle the localization problem. Subsequently, in Section 3.3, define the necessary elements to approach the mapping problem. It is noteworthy to point out that the purpose of this section is completeness. It points to the main techniques that have been applied later to form the basis concepts for understanding the proposed SLAM implementation, described in Section 5. 3.1. Coordinate systems SLAM aims at describing some region’s map and the robot’s trajectory during the mapping operation. To this end, it is essential that we are able to adequately express the full range of movements and coordinate changes necessary both by the view change and restrictions due to the used sensors. The task establishes a dichotomy between the robot’s local coordinate system and the one in which the map (the global system) is expressed. The analysis of the relationship between these two systems is of fundamental importance, not only because it is essential to new data integration, as obtained during robot movement, but also because the relationship between two sets of measurements containing overlap determines, at least approximately, the relationship between the coordinate systems in which both are obtained. Determining a transformation that maximizes the overlap between two data sets allows for a rotational translation that approximates the movement performed by the robot (or its reverse). Generally speaking, the set of isometries that represent rigid movements in n dimensions has nðn1Þ degrees of freedom. In the case of SLAM, 2 this means that the complete determination of a pose in n dimenrotational coorsions requires n translational coordinates and nðn1Þ 2 dinates (Woods, 2005). In this work, we exploit the homogeneous coordinates used in projective geometry, with wide application in the field of computer graphics and computer vision (Zhang, 1994). 3.2. Localization This section deals with the theoretical rationale underlying the use of projective coordinate systems to define the pose of the robot, which represents the effective robot pose at a given time instant, as the graph of poses, which registers the pose history and allows the map construction. In Section 3.2.1, we provide the formal definition and characteristics of a robot pose and the usual operations that apply. Subsequently, in Section 3.2.2, we describe and define the pose graph, as used in this work and how it can be optimized. 5 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 3.2.1. Pose The concept of pose refers to a set of coordinates that are sufficient to determine the location and orientation of the robot in relation to the environment. In the case of confined systems moving in a two-dimensional R3 subspace, the pose must contain three coordinates. If a Cartesian system is considered to be R2 , the pose can be completely determined by two translational coordinates and an angle specifying the orientation of the system. However, if the robot moves in a three-dimensional subspace of R3 , it is necessary to specify six coordinates so that the position and orientation of the robot is completely determined. In this case, the complete determination is given by three translational coordinates and three rotational coordinates. Let V b ¼ ðxb ; yb ; hb Þ be a two-dimensional pose. If we consider a pose D ¼ ðx; y; hÞ, which represents a variation of pose V b that transforms it into pose V a ¼ ðxa ; ya ; ha Þ, we would have the composition of V b with D to be denoted by V a ¼ V b D, and defined as in Eq. 1: 3 2 3 2 3 2 3 2 3 xb xb x xa x cos hb y sin hb 6 7 6 7 6 7 6 7 6 7 4 ya 5 ¼ 4 yb 5 4 y 5 ¼ 4 yb 5 þ 4 x sin hb þ y cos hb 5: h ha hb hb h 2 ð1Þ Considering that an absolute pose defines a coordinate system, and that a relative pose defines a change in the coordinate system, composed of a translation followed by a rotation, the pose composition operation determines the new coordinate system, obtained after the transformation. Similarly, it is possible to define the inverse operation of pose composition. This operation requires two poses and returns the relative pose. Such an operation is denoted by D ¼ V a V b , and its effect is defined by Eq. 2: 3 2 3 2 3 2 3 2 x xb xa ðxa xb Þ cosðhb Þ þ ðya yb Þ sinðhb Þ 7 6 7 6 7 6 7 6 4 y 5 ¼ 4 ya 5 4 yb 5 ¼ 4 ðxa xb Þ sinðhb Þ þ ðya yb Þ cosðhb Þ 5; ha h mp ðha hb Þ hb ð2Þ wherein function mp ðdÞ is required because the orientation of the resulted pose need to be normalized to ½p; pÞ. It produces the smallest angle needed to rotate ha into hb , respecting the periodic interpretation of angles, and is defined as in Eq. 3 (Hertzberg, Wagner, Frese, and Schroder, 2013): mp ðdÞ ¼ d 2p dþp : 2p ð3Þ With the pose difference operation, it is also possible to define for a relative pose Dab ¼ V a V b its inverse relative pose Dba using the definition of Eq. 4: Dba ¼ Dab ¼ ð0; 0; 0Þ Dab ; ð4Þ wherein the notation Dab is taken directly from LLu and Miliosu and Milios (1997) and, even though it may be considered a notation abuse, has been retained for historical reasons. We need also to define the composition between a pose, say Va ¼ ðxa ; ya ; ha Þ and a point, say u ¼ ðx; yÞ, denoted as u0 ¼ ðx0; y0Þ ¼ V a u, which results in the same point expressed in the coordinate system. The transformation will be analogous to that given in Eq. 1. The composition operation between a pose and a point in two dimensions is defined by Eq. 5: x0 y0 ¼ xa ya x y ¼ xa ya þ x cos ha y sin ha x sin ha þ y cos ha : ð5Þ Having in mind possible transformations in a projective space, it is interesting to note that a pose can be specified as a rigid body transformation. Likewise, operations between poses can be specified as transformation compositions. For instance, if we have pose V ¼ ðx; y; hÞ, it can be specified in an equivalent manner by the transformation matrix displayed in Eq. 6: 2 cosðhÞ sinðhÞ x 6 M ¼ 4 sinðhÞ 0 3 cosðhÞ 7 y 5: 0 1 ð6Þ So, given the definition in Eq. 6, it is possible to express all operations between poses by matrix operations. 3.2.2. Pose graph The methods used to solve the SLAM problem that are take advantage of a pose graph are based on a formulation of the SLAM problem in terms of a graph of relationships between various frames in which the robot has met over time. These methods address the problem of state estimation as an optimization problem (LLu and Miliosu and Milios, 1997; Kaess, Ranganathan, and Dellaert, 2008; Grisetti, Kummerle, Stachniss, and Burgard, 2010; Kümmerle, Grisetti, Strasdat, Konolige, and Burgard, 2011). Generally, these kind of solutions deal with the full SLAM problem, where the objective is to estimate the complete trajectory of the robot that is performing the mapping task in addition to determining the current pose and the environment map. These techniques tend to produce more consistent estimations than those based on statistical filtering techniques. This is because techniques such as Kalman filters (Jiang, Li, and Yu, 2016), particle filters (Grisetti et al., 2007), information filters (Walter, Eustice, and Leonard, 2007), and their counterparts are inherently subject to inconsistencies arising from linearization errors caused by inaccurate, or even erroneous, estimates of previous poses. The key point is that filtering techniques perpetuate these inaccuracies or errors, which is intrinsic to the way they operate. In contrast, graph based techniques, sometimes called smoothers, are able, by virtue of their solution to the full SLAM problem, to revise estimates for the entire trajectory based on new evidence, a hence producing results of higher accuracy and consistency. It is worth mentioning here, as an addendum, that information filters can also make this kind of correction by operating off-line and over the entire dataset (Thrun et al., 2005). Nevertheless, this may take a reasonably long time because of the combinatorial nature of the problem. Most graph-based SLAM algorithms are structured into two main and complementary components: a front-end, which is also called the scan alignment system; and a back-end, which is also called graph manipulation and optimization system. The scan alignment system is able to extract constraints between problem variables, such as poses and landmarks, from the sensory information by detecting points of interest and aligning the corresponding scans. The graph manipulation and optimization system is responsible for optimizing the set of constraints, which is actually finding the configuration of the problem variables that occasion the smallest possible error. In effect, the system that optimizes the set of constraints is the one that performs the optimization of the pose graph system optimization. Constraints in the context of graphbased SLAM algorithms are sets of isometries that relate poses or observations. Regarding the relations between poses, there are both odometry-derived constraints, which can either be enhanced or not by the scan matching, and constraints imposed by observations. Restrictions imposed by observations are created by observing the same location from two different poses. In fact, if we adopt the convention that poses are expressed as transformations in a projective space, and assume that pose X i corresponds to the i-th pose, then an odometer constraint between poses X i and X j ¼ X iþ1 would take the form X 1 X iþ1 . In the case of constraints i issued from observations, we would have the constraint defined X j , given two poses X i and X j that are related by one obseras X 1 i vation. We also take advantage of the graph-based SLAM method 6 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 contributed in Grisetti et al. (2010). It works iteratively to optimize the most likely node configuration. The algorithm requires the initial node pose estimates, the constraints, and their respective covariance matrices (uncertainties). It returns the updated set of poses x and the information matrix H . The procedure iterates the given steps until convergence occurs. At each iteration, an information matrix and an information vector are computed. At each iteration, it starts with the null matrix H and a null vector b. Then, for each constraint, vector b and matrix H are updated. The linear system HDx ¼ b is solved, which allows to yield the value of the upgrade step for node configuration Dx. At the end of each iteration, we have H ¼ H and x ¼ x. Once there is convergence the process is terminated and x and H are returned. This kind of technique usually produces topological maps, i.e. maps that are focused on the representation of the relationship between places of interest rather than their metrical representation. Although it is possible to work the later to obtain other types of spatial representations such as described in Section 3.3.2. 3.3. Mapping This section deals with the theoretical rationale underlying the use the current pose as well as the pose graph assembled so far to map the environment where the robot is inserted. This includes the scan alignment, the map internal representation and update, and detection of previously visited locations to achieve loop closure properly. First, in Section 3.3.1, we discuss the required concepts related to the scan-matching problem. After that, in Section 3.3.2, we explain how maps can be represented and analyze pros and cons. Then, in Section 3.3.3, we briefly describe the search for nearest neighbors problem. 3.3.1. Scan matching Because scan matching is at the heart of this work, we dedicate this section to explaining the idea behind such a process and its functionality as well as the different methodologies taken to approach it. It is noteworthy to point out that here we use a Light Detection And Ranging device (LiDAR). The scan-matching process aims to determine the best transformation between two point clouds obtained by some sensing instrument. Scan-matching usage, as a way of estimating the movement between two poses, from which measurements were taken, is effective as long as we can rely on the accuracy of both measurements and on the convergence of the underlying optimization process, within a reasonable time. There are several effective scan-matching algorithms. These algorithms employ heuristics in order to quickly to reach a matching result. In Cox (1991) points are used as primitives and matched to lines that are included in the model. In BBesl and McKayesl and McKay (1992), the Iterative Closest Point (ICP) algorithm is proposed. Therein, the authors present several ways for calculating the distance between different representations of the geometric data under consideration. In CChen and Medionihen and Medioni (1992) considered the more specific problem of aligning range data for object modeling. The proposed approach therein takes advantage of the tendency of most range data to be locally planar. This algorithm is considered to be the point-to-plane variant of ICP. In GGutmann and Schlegelutmann and Schlegel (1996), the lines are extracted from the scans. This work was later revisited, wherein the lines extracted from a scan are matched to those in the model (Gutmann, Weigel, and Nebel, 1999). In LLu and Miliosu and Milios (1997), a general approach, which matches points to points, is introduced. This is essentially a variant of the ICP (Iterated Closest Point) algorithm (BBesl and McKayesl and McKay, 1992) applied to laser scan matching. In BBiber and Strasseriber and Strasser (2003), the NDT algorithm is proposed. It models the distribution of all reconstructed 2D points of one laser scan by a collection of local normal distributions. In Olson (2009), a probabilisticallymotivated scan-matching algorithm that produces higher quality and more robust results at the cost of additional computation time is presented. In Akai et al. (2017), the accuracy of the NDT algorithm is improved allowing an off-line estimation of the matching error, which is corrected later in the scan-matching process. In Segal et al. (2009), the Generalized-ICP is based on attaching a probabilistic model to the last minimization step used in ICP, keeping the rest of the algorithm unchanged to reduce complexity and maintain computation speed. So it only deals with the iterative computation of the transformation to improve accuracy. In DDiosi and Kleemaniosi and Kleeman (2007), the scan matching PSM algorithm aligns the measurements obtained by a laser sensor with polar coordinates, making the repeated transformation of the measured values for a Cartesian system unnecessary. In Censi (2008), the scan matching algorithm PLICP, which is based on the usage of a point-to-line metric, allows for a more accurate distance evaluation between the point and the surface whereof the scan was obtained. Existing scan-matching algorithms, although reasonably efficient, they are very sensitive to initialization. In BBesl and McKayesl and McKay (1992), it is ensured that the ICP process always converges to a local maximum. In addition, it is noteworthy to point out that an inadequate initialization can cause the alignment process to fail or to be inefficient. One way to mitigate this problem, and to prevent these algorithms from converging to optimal locations, is to initialize the algorithm with an estimate that is believed to be close to the overall optimal alignment. Another way is to use a more robust alignment search method rather than simply applying the method described in BBesl and McKayesl and McKay (1992). Given the advantages of applying techniques that are not only able to fully exploit the search space but also to exploit the solution space independently of function derivatives, and taking into account the fact that different heuristics present characteristics that make them better suited to solving particular types of problems, we decided that it would be advantageous and positively contributive for our work to investigate swarm optimization techniques to implement the scan-matching problem. In line with the objective of comparing the performance of swarm optimization meta-heuristics, we selected the part of the system, which is responsible for scan alignment to be implemented in such a way in order to allow the interchangeable application of any selected techniques. In this work, a map representation is used as an occupancy grid, and the scans are represented as point clouds. This option was taken because it allows the possibility to align scans (with each other) with less effort than necessary to construct two local maps and later align them. Moreover, this type of representation allows the advantages of both representations to be used. Given that the system preserves all information regarding the trajectory, it is always possible to perform the conversion between a points cloud in an occupancy map. The disadvantage, however, is that it is necessary to perform frequent searches for correspondence between clouds that can entail a high computational cost. The computational burden of searching for closer neighbors has been reduced by using KD-tree of posses instead of a simple graph (Friedman, Bentley, and Finkel, 1977; BBentley and Friedmanentley and Friedman, 1979; Bentley, 1980). We use the so-called Cauchy loss function, which is presented, in terms of the quadratic error. 3.3.2. Occupancy maps Occupancy maps are a form of spatial representation based on space discretization i.e. associates one cell to each space region, N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 each of which can be classified either as full or empty. This type of spatial representation is based on the hypotheses of world staticity, independence between random variables that represent the map, and the fact that a cell can be completely full or completely empty. In other words, the presence of an obstacle in a part of a given space region makes one consider the region as entirely filled and what is estimated is the probability that this fact is true. The hypothesis of independence between the various cells is based on the probability distribution of the map given by the product of the probabilities of all its cells (Thrun et al., 2005). In general, the problem of estimating an occupancy map is divided into smaller sub-problems, such as the estimation related to the state of individual cells (Thrun et al., 2005). In this work, we will adopt the convention used in Thrun et al. (2005), wherein the algorithm that allows to perform cells mapping in this kinds of representation can be found. 3.3.3. Nearest neighbor search A fundamental part of several of the steps of this work consists of searching for the nearest neighbors to a given point in a set of points. IN order to give a formal definition of the problem, let P ¼ fp1 ; p2 ; ; pN g a set of points in R2 , and a point q. The problem of searching for the nearest neighbor of point q in P is to find point p 2 P, such that p is the closest to q. The simplest way to accomplish such a task would be to perform an exhaustive search computing the distance between any point of P to q and choosing the one that occasion the smallest distance. However, this approach is the least efficient and it is evident when the cardinality of P is large. In the proposed SLAM system, we are led to perform multiple searches for nearest neighbors, each with neighbors of entire point sets in a counterpart set, we proposed to store P in a structure of data that facilitates a fast search. One of the possible data structures we use in the proposed method is the KD-tree, which improves the search process (Friedman et al., 1977). Pose bookkeeping in a KD-tree requires the pose set to be divided. One of the most common forms of division makes use of the median of the coordinates of the poses to be stored. The usage of KD-trees reduces significantly the time required to find a closer set of neighbors (BBesl and McKayesl and McKay, 1992). One of the most traditional methods for approach the aforementioned task is called Iterative Closest Point (ICP) (BBesl and McKayesl and McKay, 1992). The main advantages of ICP-based methods are their simplicity of implementation and agility when implemented using KD-Trees (Friedman et al., 1977; BBentley and Friedmanentley and Friedman, 1979; Bentley, 1980; Zhang, 1994). However, ICP assumes that the forms whose correspondence is sought can be completely and perfectly superimposed. Of course, it is more common for the samples under analysis to have only partial overlap, such as when they are obtained with different sampling rates or from different points of view. It is interesting to note, however, that in order to improve the robustness of the technique, in the proposed implementation, several changes have been proposed which range from suggestions of point association heuristics to the implementation of hybrid methods that employ global optimization techniques to provide ICP with best initial estimate. The algorithm for ICP classical method can be found in BBesl and McKayesl and McKay (1992). In general terms, the underlying operation can be described as an iterated application of the following steps: 1. Matching: Associate to each pose in the source dataset its nearest neighbor in the destination set. 7 2. Minimization: Minimize the error measure according to the cost function used. 3. Transformation: Transform the poses in the source dataset using the result of the minimization process. 4. Swarm intelligence optimization Swarm intelligence is a field within artificial intelligence that draws inspiration from collective behavior. The most traditional optimization meta-heuristics that belong to this category are based on the interactions of creatures, such as ants, bees, worms, birds, fishes, among others. From the coordination, or apparent coordination, of the individual behavior of entities, which is of little sophistication in their modus operandi, a complex pattern usually emerges. The search process, employed by all swarm intelligence based techniques consists of an interaction and a dichotomy between two, well known, processes: exploration and exploitation (Kennedy, 2006). Exploration consists of the discovery of new potential solutions within the search space, and typically leads to a growth of the known part of the search space. Exploitation is the process of intensifying the search within the neighborhood of known solutions, thus making use of the information gathered by the swarm up to the present moment to try and improve the quality of the known solutions. In this work we use three swarm intelligence techniques: Particle Swarm Optimization (PSO), which we describe in Section 4.1; Artificial Bee Colony Optimization (ABC), which is described in 4.2; Firefly behavior-based Optimization Algorithm (FA), which is described in Section 4.3. 4.1. Particle swarm based optimization The PSO is an algorithm that keeps a set of entities known as particles, each particle position represents a codified solution to the underlying optimization problem. The key idea of all variants of this algorithm is that the interplay between each particle individual experience along the search process and the collective experience of the swarm is what guides the search through the solution space. The search procedure occurs in an iterative fashion and each particle, along its trajectory through the search space, keeps in its memory the best solution it has thus far attained. The interaction between each individual particle, and the swarm to which it belongs, is controlled by the modulation of the velocity of the members of the swarm. Each particle velocity is defined as the composition of three distinct components: social, cognitive and inertial. The social component is responsible for guiding each particle in a direction that approaches the best solution found by the swarm, or by a subset of the swarm depending on the topology that is employed. The cognitive component purpose is to guide every particle towards the best solution they have found themselves. Finally, the inertial component has a role that, much like the corresponding force in Newtonian mechanics, opposes any change in the tendency of motion. As such, the inertia effectively limits the particles ability to change its path through the search space in a drastic manner. Let xi ðtÞ be the position of the particle i at the instant t; v i ðtÞ the component of the velocity of the particle i at instant t; w the coefficient of inertia, c1 the cognitive coefficient, c2 the social coefficient, n the swarm size and f the objective function. The basic algorithm for the PSO with global best communication strategy can then be stated in Algorithm 1 (KKennedy and Eberhartennedy and Eberhart, 1995; Kennedy, 2006). 8 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 Algorithm 1 PSO Require f , n, w, c1 and c2 1: Initialize the swarm 2: repeat 3: for i :¼ 1; 2; . . . ; n do 4: if f ðparticlei Þ < f ðpbesti Þ then 5: pbesti :¼ particlei 6: end if 7: if f ðpbest i Þ < f ðgbestÞ then 8: gbest :¼ pbest i 9: end if 10: end for 11: for i :¼ 1; 2; . . . ; 3 do 12: Update velocity according to Eq. 7. 13: Update position according to Eq. 8 14: end for 15: until Stopping criteria are met 16: return gbest The operation of Algorithm 1 can be briefly explained as the succession of the following steps: starting and iterating a swarm moving process in the search of good quality solutions. Swarm initialization consists of random generation of particles (coded solutions) that are spread throughout the search space. As long as no stopping conditions have been met, the swarm movement process is repeated. The swarm movement process depends on the quality of the swarm members and the past experiences of each particle. In each iteration, the quality of the solution, as coded by each particle, is evaluates. Whenever this is the best solution found by the particle, its position is used to update pbest. Each time the particle quality is evaluated, the particle’s pbest is compared to the best position found so far by swarm as a whole. Whenever the quality of the particle’s pbest is found to be better than of the global best, gbest is then updated using pbest. During the process, each particle has its velocity and position updated. At the end of the optimization process gbest is returned as the solution to the problem. In Algorithm 1, the particle velocity is computed following Eq. 7: v i ðt þ 1Þ ¼ w v i ðtÞ þ c1 r1 ðpbesti ðtÞ xi ðtÞÞ þ c2 r2 ðgbestðtÞ xi ðtÞÞ; ð7Þ wherein pbesti ðtÞ represents the best position in the history of the particle until instant t; gbestðtÞ is the best global to moment t and r 1 and r 2 are pseudo-random numbers sampled using a uniform distribution in the range ½0; 1. Regarding random numbers r 1 and r 2 , we further state that for each dimension of the problem the update step is to generate one of these numbers independently. The position update of each particle is performed according to Eq. 8: xi ðt þ 1Þ ¼ xi ðtÞ þ v i ðt þ 1Þ: ð8Þ In Algorithm 1, we did not specify which stopping conditions to satisfy, although this is fundamental for any approximate optimization technique. Defining an appropriate stopping condition can be done in several ways, the most common of which are: specifying a maximum number of iterations, setting a tolerated error for the objective function value regarding to the found best solution, setting a value change threshold of the objective function and the definition of a variation threshold of the objective function argument. Cases where thresholds of variation are specified are actually measures of stagnation, and these thresholds are typically associated with a maximum number of iterations so that the limited amounts they violate before the whole optimization process is completed. With these definitions established, we can then try to quantify the importance of each of the parameters mentioned for the convergence and efficiency of the process. Several studies have been performed on parameter selection for an efficient optimization process using PSO. The parameters denoted c1 and c2 are also known as acceleration coefficients. As this name implies, low values of c1 and c2 lead to smoother trajectories, and allow particles to explore the search space longer, temporarily moving away from good regions before being attracted to a balance condition. Conversely, high values of these two parameters can lead to steeper trajectories and cause particles to converge faster for good solutions, but they can also bounce them beyond good regions of the search space. In Engelbrecht (2006), one can find reference to simplified canonical PSO models, wherein certain components of the velocity formula are suppressed. Indeed, the suppression of certain components that influence the particle trajectory in the search space allows a better notion of their contribution to the quality of the results obtained. To these simplified models the author of Engelbrecht (2006) names Cognition-Only Model, Social-Only Model and Selfless Model (altruistic model). These simplified models are instructive in that they unequivocally illustrate to what extent the alteration of the three parameters mentioned above, namely: the coefficient of inertia, the cognitive coefficient and the social coefficient affects swarm behavior and overall algorithm performance. According to KKennedy and Eberhartennedy and Eberhart (1995), the purely cognitive model is more susceptible to failure than the complete model. Also according to KKennedy and Eberhartennedy and Eberhart (1995), this type of velocity model leads to an intense search behavior close to the initially populated regions, degenerating the PSO into a case of parallel random search. According to Kennedy (1997), Engelbrecht (2006) the purely cognitive model still has a slower rate of convergence, as confirmed in CCarlisle and Dozierarlisle and Dozier (2000). The so-called purely social model has been lauded in KKennedy and Eberhartennedy and Eberhart (1995), CCarlisle and Dozierarlisle and Dozier (2000), Engelbrecht (2006) for being faster and more efficient than the full model and the purely cognitive model. This model represents the tendency of particles to mimic their most successful neighbors. Finally, Kennedy mentions the altruistic model. The latter is nothing but the social model under the restriction that the best particle in a neighborhood cannot be the particle itself. That is, when a member of a neighborhood seeks out the most successful particle in the vicinity, it abstains of the competition. This model has been described as being faster than purely social for some problems in Kennedy (1997), although unsatisfactory results have been reported for the optimization case in CCarlisle and Dozierarlisle and Dozier (2000). 4.2. Artificial bee colony based optimization The ABC algorithm was first proposed by Karaboga (2005) and draws its inspiration from the foraging behavior of honey bees. In the model used by the algorithm the hive is divided into three classes of bees: worker, onlookers and scouts. The fundamental notion of the algorithm is that each food source represents a potential solution and the amount of nectar in the food source represents its quality, as measured by the objective function. It should be noted that the number of employed bees is always equal to the number of potential solutions in the swarm, denoted food sources within the meta-heuristic. The main step of the ABC optimization 9 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 strategy are given in Algorithm 2. It is essential to make it clear that, in this algorithm, each food source represents a possible solution to the optimization problem and the nectar quantity represents the quality of the solution, which is computed according to the objective function. Algorithm 2 ABC require f , n, N and l 1: Send scout bees to initial food sources, as chosen using Eq. 9. 2: repeat 3: Send worker bees to food sources to assess nectar quantity. 4: Select neighboring sources for each worker bee using Eq. 9. 5: Compute preference probability of food source by onlooker bees using Eq. 10. 6: Send onlooker bees to food sources to assess nectar quantity. 7: Stop exploring food sources when these have been completely exploited. 8: Move randomly scouts bees using Eq. 9. 9: Update s the best food source as discovered so far. 10: until Stopping criteria are met 11: return s Every cycle of the ABC search procedure consists of three steps: dispatching the employed bees to the food sources and the subsequent evaluation of the nectar collected by each of them, the selection of new promising food sources based on the information that was shared by the returning employed bees and the selection, by the onlookers, of promising food sources to where they are sent. The food source selection process, by the bees, is controlled by a parameter denominated limit. This limit is a predefined number of attempts to improve the quality of a food source, after which it is abandoned and the employed bee that was in charge of the aforementioned food source becomes a scout bee. These details show that the exploration stage of the search is carried out by the scouts while the exploitation stage is performed by the employed bees and the onlookers. The ABC algorithm uses as its control parameters, such as the swarm size, the limit of attempts to improve a food source, the number of onlookers and the number of scouts. Usually, the employed bees compose half of the swarm. In Algorithm 2, the initialization of food sources can be done as defined by Eq. 9: xij ¼ lj þ r1 ðuj lj Þ; ð9Þ wherein xij is the coordinate j of food source i; r 1 is a uniformly distributed pseudo-random number within the range ½0; 1; lj ¼ l is the lower bound along the j direction of the subset of the objective function regarding the considered space search and uj is the upper bound of the aforementioned set. Upon their return to the hive, the bees share their discovery about the food sources they explored with the other bees in the hive dance region. After the sharing of information regarding the quality of the discovered food sources is completed, worker bees return to the food sources they visited in the previous iteration, given the fact that such locality is recorded in the worker bee’s memory. Then the bee choses a new food source in the vicinity of the previous one. Subsequently, each onlooker bee chooses a food source based on the worker bee dance. Importantly, the probability of selecting a food source increases with the increase in the amount of nectar detected over there. Indeed, the probability of selecting a particular source pi is set according to Eq. 10: f ðxi Þ pi ¼ PN ; k¼1 f ðxk Þ ð10Þ wherein f ðxi Þ denotes the value of the objective function of food source i and N represents the number of food sources. It is important to note that each worker or onlooker bee alters the food source in its memory with a given probability. This modification is kept as long as the new food source is more fruitful than the original. The selection algorithm can therefore be characterized as greedy. The change of the food source is determined as in Eq. 11: v ij ¼ xij þ rij ðxij xkj Þ; ð11Þ wherein r ij is a uniformly distributed pseudo-random number such that rij 2 ½1; 1 R; k 2 f1; ; Ng N; i 2 f1; ; Ng N; j 2 f1; ; Ng N; xij is coordinate j of food source i, and xkj represents coordinate j of food source that is randomly selected. 4.3. Firefly based optimization The FA is swarm intelligence method (Fister, Yang, and Brest, 2013). In essence it is a bio-inspired stochastic metaheuristic. The inspiration of FA comes from the bioluminescence of fireflies. In fact, it is precisely the periodic flickering of lights, a striking feature of these insects, which, together with the physical characteristic of the decay of light intensity, was used to elaborate this optimization strategy. In Fister et al. (2013), it is shown that the decay of the luminous intensity I follows a relation of the type I / r12 , where r indicates the distance to the source of luminescence. The communication between social insects has been successfully used several times as an inspiration for the development of optimization techniques, such as in the cases of ants and bees (Dorigo, Caro, and Gambardella, 1999; Karaboga, 2005). It is important to emphasize that in swarm algorithms, the decision-making processes of each entity typically occur in a decentralized way, and for this reason it is essential that there is communication between the members of the swarm in order to have a cohesive behavior of the whole. The parameters of the FA are b0 ; c and n. The coefficient b0 is the attractiveness of a firefly when the distance to it is zero. The constant c determines the decay rate of the attractiveness of a firefly in terms of the distance to it. In the Algorithm 3, each firefly was denoted by s and the objective function by f. Algorithm 3 FA Require b0 ,c,n 1: Initialize the swarm 2: repeat 3: aðtÞ ¼ newAlfaðÞ 4: for i :¼ 1; 2; . . . ; n do 5: Compute f ðsi Þ 6: end for 7: Sort the fireflies s of the swarm according to their quality f ðsÞ. 8: Assign the fittest firefly to s . 9: Move the fireflies according to Eq. 12. 10: Until Stopping criteria are met 11: return s 10 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 The essence of FA operation is the combination of random motion with the attraction of each firefly by the other brighter members of the swarm. In fact, firefly sj is attracted to the firefly si and its motion is defined by Eq. 12: stþ1 ¼ sti þ b0 expðc r2ij Þ ðsj si Þ þ a i ; i ð12Þ wherein c represents the light absorption factor, that is how light diffuses through the medium in which it propagates between one firefly and another. The parameter a 2 ½0; 1 is responsible for the random term a i of Eq. 12, and is sampled from a uniform distribution in that interval. The value i is sampled from a Gaussian distribution. The value of r ij is the distance between the fireflies si and sj , as defined by the two-dimensional Euclidean metric of Eq. 13: rffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffi X2 r ij ¼ ksj si k ¼ ðs sik Þ2 : k¼1 jk ð13Þ Algorithm 4 Proposed SLAM Solution 1: G :¼ £; lv :¼ £ 2: lk :¼ 0; rk :¼ 0 ;k :¼ 0 3: DP :¼ ½ 0 0 0 T 4: lv ð0Þ :¼ Acquire_ScanðÞ 5: t :¼ 1 6: repeat 7: lv ðtÞ :¼ Acquire_ScanðÞ 8: DP :¼ Align_Scansðlv ðtÞ; lv ðt 1ÞÞ 9: G :¼ Update_GraphðG; DP; t 1; tÞ 10: G :¼ Seek_Loop_ClosureðG; lv Þ 11: G :¼ Optimize_GraphðGÞ 12: t :¼ t þ 1 13: until Halt command is received. 14: M :¼ Generate_Global_Map ðG; lv ÞÞ 15; return M It is important to note that in Algorithm 3, index k in the sum of the Eq. 13 always varies in the range of 1 to the maximum number of dimensions of the problem. Function newAlphaðÞ is used to allow an adaptive variation of a, in order to reduce the degree of randomness of the movement of the fireflies as the iterations pass. In FA, the swarm of fireflies is initialized randomly, and the search process is guided by the luminous intensity of the swarm’s fireflies, which is proportional to the quality of the solution as perceived by their peers. This quality, as seen by the other fireflies, it is called attractiveness (Fister et al., 2013). An interesting phenomenon, regarding the comparison between FA and other optimization algorithms, is that FA presents two remarkable asymptotic behaviors, according to the selected value of parameter c. The first one is c ! 0, since the attractiveness becomes constant and equal to b0 throughout the search space. So, the algorithm behaves as a particular case of PSO. The second notable asymptotic behavior occurs when we have c ! 1, which causes the FA to behave as a parallel case of Simulated Annealing (Fister et al., 2013). As a conclusion, any AF based process has an intermediate behavior between these two techniques. In the FA described in Gao, Luo, Teng, He, and Jiang (2013), several stopping criteria are mentioned, such as the quality of the worst solution in the swarm, the quality of the best solution in the swarm and the number of evaluations of the fitness function. Regarding the first and second conditions, it noteworthy to point out that when a threshold such as this is imposed, it is expected that there will be convergence of the algorithm with a smaller number of iterations, and consequently with a smaller number of evaluations of the function goal. However, this convergence comes coupled with a loss of precision, i.e. there may be premature convergence in the presence of a local maximum. The proposed solution has an incremental character, therefore in each iteration of Algorithm 4 a single scan is processed. Every iteration begins by the acquisition of a scan by means of an invocation of the method called Acquire_ Scan. The scan thus obtained is then stored in a list called lv that contains all the scans heretofore collected in chronological order. Then, the scan acquired at the present instant lv ðtÞ is matched against the scan obtained in the instant that immediately preceded it lv ðt 1Þ, hence an estimate of the motion that took place in between both measurements is attained in the form of a relative pose DP (sequential pose difference). After that, the pose graph G is updated by the inclusion of a new node, which is connected to the last created node by an edge that encodes the sequential pose difference DP. Immediately following this update, the resulting graph is optimized. Once the optimization of G is concluded, the method Seek_Loop_Closure is called, receiving as arguments G and lv , whereby new edges are added if any pair of nodes is determined to have, in its corresponding scan, represented the same region of the space being explored. Afterwards, the yielded graph pose G is once more optimized thus ending the processing of scan lv ðtÞ, and concluding the current iteration. This sequence of steps is repeated until a Halt order is issued by an external agent, in which case the global map M is generated from G and lv ðtÞ by a call to Generate_Global_Map and the whole procedure is finished. The first iteration constitutes a special case since no previous scan exists upon which a reference may be based. Therefore, it is pre-determined that the reference frame of the first pose, and its corresponding scan, will be used as a basis for all succeeding measurements and poses. In fact, the first scan acquisition induces the system to introduce the first node in the graph. 5. Proposed solution 5.1. The scan matcher The proposed solution to the SLAM problem consists of four parts: (i) a scan matcher; (ii) a pose-graph updating system; (iii) a pose-graph optimization system; and (iv) a loop closure detection system. Algorithm 4 shows the macro-architecture of the proposed method, wherein, the scan matcher is implemented by function Align_Scans; the pose-graph updating system by function Update_Graph; the pose-graph optimization system by function Optimize_Graph; and the loop closure detection system by function Seek_Loop_Closure. The scan matcher role, in our system, is to determine the relationship that exists between pairs of LiDAR scans. While odometric relationships could be derived for the case of subsequent scans, no reliable correspondence could be established between nonsequential pairs of scans without a proper scan-matching procedure. Therefore, the impact of such a fundamental part of our SLAM solution cannot be understated, and its accuracy and overall performance is bound to deeply affect the overall system’s performance. 11 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 The scan matcher, whose main steps are given in Algorithm 5, is designed to operate on two different, and complementary, modes. The first mode of operation is a scan-to-scan alignment based on a point-to-point distance metric. Its operation is dependent on the minimization of the used distance metric, and it operates on a pair of scans by first determining a set of correspondences between them, based on a nearest neighbor criteria. The second mode is a scan-to-map alignment and consists of minimizing the distance between the pairs of corresponding points. The result of the aforementioned procedure is a transformation that leads to a maximal alignment between the input scans. So, the scan matcher works in two modes in an attempt to reduce uncertainties of scan matching. Hence, when the scan-to-scan mode fails to achieve an acceptable alignment (i.e. alignment error is above a threshold), the scanto-map is executed, attempting to fine tune the relative position found by the scan-to-scan alignment in order to hopefully reduce the alignment error. Algorithm 5 Align_Scansðv ; v r Þ Require t, lk , rk ; 1: lc :¼ Seek_Correspondencesðv ; v r Þ 2: ½ DP k T :¼ Minimize Eq. 14 2: if k > then 4: m :¼ Renderize_Local_Map ðv r Þ ½ DP k T :¼ Minimize Eq. 16 with arguments ðv ; mÞ k :¼ Calculate the new k using Eq. 14 with DP as its argument. 7: end if 8: lk :¼ 1t k þ ðt 1Þlk h i1 r2k þ ðk lk Þ2 2 9: rk :¼ t1 t 5: 6: 10: :¼ lk þ rk 11: return DP The scan alignment of Algorithm 5 receives two scans: the scan under investigation v and the reference scan v r . Its operation begins by seeking correspondences between the scans v and v r , which procedure is denoted Seek_Correspondences. It returns a bijection between v and v r that associates to each point of v the closest point in v r , following thus a nearest neighbor association criterion. Given the list of correspondences between the points of each scan, the scan alignment task is, thus, reduced to the determination of a minimum point via function f defined in Eq. 14, called the objective function of the minimization matching process that ensures the scan alignment during the point-to-point mode. The optimization is performed by the application of the selected swarm intelligence technique, which were previously defined: ABC, FA and PSO. This process returns two results: a relative pose, called DP, and the value of the aforementioned objective function, denoted k. If k is below the error threshold , the alignment is deemed to be satisfactory and the relative pose DP is accepted. Otherwise, and in order to improve the so-far achieved scan alignment, we attempt to reduce the scan-matching error by doing a local fine search around the found relative position. This will be further explained in the sequel. In this purpose, the reference scan v r is rendered as a local occupancy grid map m and a scan alignment is attempted in the scan-to-map mode of operation. This alignment is also performed via a minimization process using the selected swarm-based optimization meta-heuristic. Nevertheless, in this case, the objective function is different from the one used during the scan-to-scan alignment. So, during this procedure, the objective function employed takes the form in function g, defined by Eq. 16. The result of the optimization procedure is, as in the first case, a pair containing DP and k. It is noteworthy to point out, however, that the value of k thus obtained is not comparable with the previous given that it is computed using a different objective function. Hence, a new value of k is computed using Eq. 14. This new value is used to update the dynamic error threshold . The rejection threshold determines whether the point-topoint alignment produced a pose that is an outlier. In this positive case, the scan-to-map mode would be employed to correct the pose estimation, which is especially recommended in the case of loop closures. There are several strategies for rejection threshold determination (Pomerleau, Colas, Ferland, and Michaud, 2009). These were first used to determine outliers when using ICP and its variants (Druon, Aldon, and Crosnier, 2006). Among these strategies, one can find: Fix strategy, wherein the threshold is a fixed pre-defined value; Zhang’s strategy, wherein the threshold is based on statistical moments of the data distribution. It is set to either l þ 3r when the pairing is assumed to be quite good, l þ 2r when the pairing is assumed to be still good, l þ r when the pairing is assumed to be not so bad or the median of the distribution when the pairing is assumed to really bad, depending on a user-defined parameter (Zhang, 1994); Mean strategy, wherein the threshold is set l þ r (Druon et al., 2006). We use the latter rejection strategy i.e. the mean strategy to decide whether to apply the scan-to-map to correct the pose obtained by the point-to-point based alignment. So, threshold is defined as the sum of the mean and the standard deviation of variable k obtained along the current SLAM session. At the end of each, and every, call to Algorithm 5 there is an update to lk and rk , followed by an update to , so that its value is consistent with the new running mean and standard deviation of the objective function values. Note that values of lk and rk for the current scan alignment are computed incrementally based on their values in the previous scan, taking into account the total number of scans done so far, denoted by t, which is initialized as 1 and incremented at the end of each at iteration of the SLAM process, as shown in line 5 and line 12 of Algorithm 4, respectively. Evidently, and regardless of what mode of operation is responsible for its computation, Algorithm 5 DP is always returned. The objective function used for the scan-to-scan alignment, which evaluates the quadratic distance between the points of scan v and those of scan v r in the coordinate system centered in pose DP, is specified by Eq. 14: f ðDPÞ ¼ log 1 þ XN i¼1 DP vx vy i i v rx v ry 2! i ; ð14Þ i where DP is a relative pose and is the operation of composition between a pose and a point, defined in Eq. 1. This composition allows us to express the points in v in the same coordinate system as the points in v r centered at new pose DP. Note that the log function is computed in order to limit the wide variation of the used comparison function f. So, we impose the logð1 þ xÞ re-scale transformation (Baeck, Fogel, and Michalewicz, 2000). Constant 1 is added to avoid the non-definition of the logarithm function on 0. The scan-to-map alignment allows us to correct the robot pose estimation exploiting three facts: (i) all beams of a scan must end at obstacles; (ii) the robot estimated position must be empty in the current occupancy map; and (iii) robot must be able to reach that position in the map and continue its mapping from thereon. The objective function for this second mode of scan-to-map matching is inspired by these two facts. The minimization of the proposed objective function attempts to find the best matching that mini- 12 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 mizes any breach of these two facts. Moreover, the scan-to-map alignment is performed in the manner previously described via a minimization process using the swarm-based optimization metaheuristic. In this case, the objective function takes on the form described in Eq. 15 (Oliveira, Nedjah, and Mourelle, 2017). Namely, let v xi and v yi be the Cartesian coordinates of the obstacle detected by the i-th beam of scan v and let DP be a relative pose. The result of the composition operation between the point v xi ; v yi and the relative pose DP, in the coordinates of the local occupancy grid map, is denoted ðni ; fi Þ and defined by Eq. 15: ni fi ¼ DP vx vy i : ð15Þ i The process of scan-to-map alignment is then reduced to the minimization of the objective function g defined in Eq. 16: gðni ; fi Þ ¼ n X 255 mðni ; fi Þ 255 i¼1 þ .1 X .1 X mðxr þ k; yr þ ‘Þ ; 255 ð. þ 2Þ k¼1. ‘¼1. 1 ð16Þ 2 wherein n is the number of beams within the current scan, xr and yr represent the position of the robot in the local map. Variable m represents the local occupancy grid map as a matrix of unsigned eight bit integer numbers that codify the occupancy probabilities from 0 to 100% by mapping them linearly to the range from 255 to 0, respectively. So, it is established that value 0 indicates an entry in the map of 100% probability of being occupied while value 255 denotes an entry of 100% probability of being empty. Variable . is defined in terms of the robot’s diameter d and the map cell size c as in Eq. 17: .¼ 8 > < dd=ce þ 1 if dd=ceiseven > : otherwise: ð17Þ dd=ce It is noteworthy to emphasize that the first summation term in Eq. 16 is with respect to all n beams within the scan one wishes to localize and thus evaluates the veracity of the first fact (i.e. scan beams must end at obstacles), and the second summation term is employed to penalize the presence of occupied cells in the ð. þ 2Þ2 adjacent cells of the robot location, taking into account the robot’s size and map cell size, and thus verifying the veracity of the second fact (i.e. robot estimate position must be empty in the map), augmented by the extra cells around the robot location, thus guaranteeing the veracity of the third fact (i.e. robot must be able to reach the position and continue its trajectory from it). By aiming at an empty position yet with neighboring position also empty, the robot can be away from walls and corners and any other obstacles in a such a way that it can map the wall and other obstacles and still maneuver to continue its journey, mapping the so-far unknown environment. For instance, with a usual cell size of 0.05 m and a robot with a diameter of 0.15 m, Eq. 16 would penalize the 25 (i.e. 9 cells where the robot is located and 16 cells around that location) adjacent cells, around the robot estimated position (cell mðxr ; yr Þ) in the map. In both modes of operation three halting conditions have been imposed upon the optimization procedure: a limit of 1000 maximum iterations performed by each technique, a stagnation limit of 50 iterations, wherein the difference between the best and the worst solution within the swarm does not vary by more than 106 , and a tolerance threshold, whereby a solution whose corresponding objective function value is inferior to 10 accepted. 6 is immediately 5.2. Pose graph manipulation Pose graphs are the backbone of our solution by providing us with a means of attaining, and maintaining, global consistency within the maps generated and the trajectory estimated therein. We have adopted the methods described in detail within (Grisetti et al., 2010) to perform the optimization of the pose graphs generated by our proposed solution. 5.2.1. Graph update The steps required to update the pose graph order to incorporate the effects of a restriction in a given edge labeled by the obtained pose DP are specified in Algorithm 6. So, besides inserting an edge between nodes i and j, procedure Insert_Edge_RestrictionðG; i; j; DPÞ also computes the restriction heij ; Xij i associated with this edge. Every time a relative pose DP is determined by the scan matching, it is passed to this procedure, which evaluates whether the new pose represents a translation of more than 0:5 m or a rotation of more than 0:5 rad. In the positive case, a new node, called j, is inserted into the pose graph. In effect, if node j is already in the graph i.e. in case of some loop closure, a new edge is inserted in between i and j, otherwise a new node j is inserted and it is linked to node i by an edge corresponding to the restriction implied by DP. It should be noted that the existence of node i in the graph set of nodes GN is always true. Recall that pose DP is determined by the scan matcher with an associated standard deviation of rlambda for the objective function value k, defined in Eq. 14. Algorithm 6 Update_Graph(G, DP, i, j) Require X qffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffi 1: if DP 2x þ DP2y > 0:5morjDPh j > 0:5rad then 2: if j R GN then 3: G :¼ Insert_NodeðG, jÞ 4: end if 5: G :¼ Insert_Edge_RestrictionðG, i, j, DPÞ 6: end if 7: Return G Let eij and Xij be the error and the information matrix associated with an newly inserted edge between node i in reference to pose pi , and node j in reference to pose pj , given the relative pose DP obtained by the scan matching of Algorithm 5. Error eij is defined as in Eq. 18 (Carlone, Aragues, Castellanos, and Bona, 2011; Grisetti et al., 2010): " eij ¼ RTDP RTi ðt j t i Þ tDP hj hi hDP # ; ð18Þ wherein transformation is defined as in Eq. 2, and Ri is the rotation matrix that has as orientation angle that of pose pi and RDP is a rotation matrix whose angle coincides with that of a rotation that leads to the maximum overlap of observation made from pose pi to that made from pose pj . This is simply orientation hDP of pose DP. Matrix Ri is defined in Eq. 19: 2 6 Ri ¼ 4 cosðhi Þ sinðhi Þ sinðhi Þ cosðhi Þ 3 2 7 5; 6 RD P ¼ 4 cosðhDP Þ sinðhDP Þ sinðhDP Þ cosðhDP Þ 3 7 5: ð19Þ T T Vectors t i and t j are defined as v xi v yi and v xj v yj respectively, while vector tDP is the translational component whose value N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 allows the maximum overlap of the observation made from poses pi to that made from pose pj . This is simply the translational component of pose DP defined as ½ v DP v DP . The information matrix Xij regarding the inserted edge between nodes i and j, given the resulting pose DP yielded by the scan matching step with standard deviation rk is defined as in Eq. 20: 13 ðH Dx ¼ bÞ we implemented the QR factorization, using the library available of Guennebaud et al. (2010). T Xij ¼ 1 r2k I; ð20Þ wherein I is the identity matrix. 5.2.2. Pose graph optimization As previously stated, the method employed to optimize pose graphs within the present work uses the exact same algorithm for this purpose described in the graph-based SLAM Tutorial (Grisetti et al., 2010). This algorithm allows to optimize the information matrix and the information vector for all cases. However, it must not be ignored that while in function Optimize GraphðGÞ the only input argument is the pose graph itself, it should be understood that from such an argument that the list of poses (nodes) GN ¼ x¼ x1:T and the list of constraints (edges) N C can be extracted. Moreover, the graph optimization function seeks the most likely configuration of nodes by means of a Gauss–Newton type of optimization procedure. Furthermore, it should be self-evident that this function alters the considered pose graph by updating the poses to the ones deemed more likely to be the case by this procedure. To give a brief idea on the graph optimization process, let eij be the value of the difference between the expected observation zij and the actual observation ^zij . This process is termed as optimization because it minimizes the odd-logarithmic probability of a given node configuration, i.e. given a node configuration and a set of restrictions between them, and assuming that the node position is approximately normally distributed, there is a probability density function of the form g expð0:5 eTij Xij eij Þ for each pair of nodes corresponding to poses i and j between which there is a measurement zij , wherein g is a normalization constant and Xij is the information matrix of a given virtual measurement between the nodes i and j. From this fact, it follows that, if it is assumed that the positions of the nodes are independently distributed, the probability of a given configuration of nodes can be expressed as a product of all the exponential terms presented. This conclusion follows immediately from the premise of the independence of variables and the law of total probability (KKokoska and Zwillingerokoska and Zwillinger, 1999). The problem of maximizing the probability of a configuration is formulated in a computationally more efficient way, adopting the log-likelihood. Using the odd-logarithmic probability, the task of maximizing the probability of a node configuration is then posed as a problem of minimizing a sum rather than maximizing a product. The explicit form of the minimized nonlinear objective function to find the optimal node configuration is defined by Eq. 21: Fðx1:t Þ ¼ X eTij Xij eij ; ð21Þ hi;ji2GC wherein GC is the constraints set regarding all edges of G and x1:t denotes the sequence of all poses collected from time 1 to time t. It is noteworthy to point out that the linearization of Eq. 21 was used in order to apply the Gauss–Newton method. The derivation process of the linear form is quite usual and can be found at TThrun and Montemerlohrun and Montemerlo (2006), Grisetti et al. (2010), Kümmerle et al. (2011). In order to solve the system 5.2.3. Loop closure search and detection In Algorithm 7, we describe the method whereby the proposed solution deals with the problems of finding adequate extemporaneous constraints and loop closures. This particular subsystem is periodically invoked through the entire duration of the SLAM task in order to maintain the global consistency of the map and the trajectory under construction. In a nutshell, we dealt with the problem of seeking and detecting loop closures by means of a successive, and methodical, elimination of potential candidates. Algorithm 7 Seek_Loop_ClosureðG, lv Þ T :¼ Select_Candidates(G) 1: lc l0v 0 T 0 2: lc l00v :¼ Eliminate_Outliers(G, lc , lv ) 3: fori ¼ 1; ; N c do 4: 5: 6: 7: 8: 9: 10: 11: 12: 13: 14: ½ DP k T :¼ Align_Scans(v , v r ) Ebefore :¼ Calculate_Error(G) 0 G :¼ Insert_EdgeðG, t, lc , DPÞ G :¼ Optimize_Graph(G) Eafter :¼ Calculate_Error(G) if Ebefore < Eafter then 0 G :¼ Remove_EdgeðG, t, lc , DPÞ G :¼ Optimize_Graph(G) end if end for return G The process of selecting candidates suitable for pairing with the current pose to form a loop closure edge are selected by searching the graph for the nearest poses that have been traversed. This search is conducted with the aid of an auxiliary KD-Tree (Friedman et al., 1977; BBentley and Friedmanentley and Friedman, 1979; Bentley, 1980), containing the translational components of the poses. The Eliminate_Outliers procedure receives as its arguments a list 0 of the potential candidates lc , and the list lv that contains the scans taken from each of the poses in lc . Then all the nodes whose corresponding poses lie outside of the covariance ellipse, that demarks the threshold around the mean wherein 95% of the mass of the probability distribution density function of current pose is concentrated, are removed from lc . Of course, each time a pose within the candidate list is eliminated from lc the corresponding scan is also 0 removed from lv . Afterwards, each of the poses within the list is tested by the alignment of the scan obtained from each pose to the one corresponding to the current pose. Each alignment, produces a relative pose and an error value, as was specified in Algorithm 5. In order to validate a possible correspondence, we assumed the criterion that any constraint that is valid must not make the error of the graph optimization procedure grow, since this would indicate that the node configuration that ensues is less likely than it was before the introduction of the aforementioned constraint. This final testing stage, therefore, proceeds by, one at a time, introducing an edge between the current pose and each of the remaining candidate poses. The restriction is generated based on the relative pose returned by the scan matcher. Every time a constraint is added, the graph is optimized and the resulting error is compared to the one that was obtained before the introduction of the new constraint, if it is larger the constraint is removed from the graph and the candidate is eliminated from 14 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 the candidate list. The search continues in such a fashion until no more candidates remain in the list. It should be kept in mind that by the end of the search the graph will contain all the valid loop closures the system could detect, however many there may be, and be optimized using those constraints. Algorithm 8 computes the error incurred by the current configuration of nodes in the graph. It does so by sweeping all edges in the graph and accumulating the value eij corresponding to the error between every node pair (i; j) that is connected by an edge in the graph. The value Xij is the block matrix Hij matrix, i.e. the block of the information matrix encompassing nodes i and j Algorithm 8: Calculate_ErrorðGÞ 1: E :¼ 0 2: fori ¼ 1, , t do 3: forj ¼ 1, , t do 4: if an edge between nodes i and j exists then 5: E :¼ E þ eTij Xij eij 6: end if 7: end for 8: end for 9: return E 6. Performance results The system thus far described has been implemented in C++ and the general framework for graph optimization G2O (Kümmerle et al., 2011). Moreover, we have also employed the linear algebra library Eigen version 3:2:9 through the system, the nanoflann library version 1:2:2 was used for implementing the nearest neighbor search with KD-Trees and the OpenCV library version 3:2:0 was used to produce the image files corresponding to the occupancy grid maps that were generated internally. All the tests that were performed on public domain datasets used a PC with an Intel Core i7 870 2:93 GHz processor, 8 GB RAM running Debian Linux 8:6. It is noteworthy mentioning that the performance analysis performed in this section is twofold. first, in Section 6.4, we assess and compare the performance of the three used meta-heuristics, then, in Section 6.5, we compare the whereby achieved performance to that achieved by some notable SLAM published works. The presented analysis is based on the most used error metrics for SLAM methods, as defined in Section 6.1, a set of SLAM benchmarks, as introduced in Section 6.2, and on a parameter settings for the three exploited meta-heuristics, as set in Section 6.3. 6.1. Error metrics We have performed tests to evaluate our system based on its accuracy and the overall speed of execution. About the accuracy, it should be noted that we have employed the method described in Kümmerle et al. (2009). The accuracy evaluation, by this method, is based on the application of Eqs. 22 and 23. The absolute translational error and is defined by Eq. 22: eT ¼ N 1X jTðdi;j di;j Þj; N i;j ð22Þ where N is the number of relations considered for the evaluation, di;j is the difference between poses i and j; di;j is the reference relation and the operator TðÞ allows the separation of the translational components of a relative pose. The absolute rotational error is analogously defined by Eq. 23: eR ¼ N 1X jRðdi;j di;j Þj; N i;j ð23Þ wherein RðÞ is the operator that separates the angular component (orientation) of a relative pose and is completely analogous to TðÞ. Recall that the operation used to compute pose difference is given in Eq. 2. 6.2. Benchmark datasets To validate our SLAM technique, and enable its comparison to other systems currently in existence we have selected eight public domain datasets. The datasets were selected based on two criteria: diversity and notoriety. By diversity we mean that the datasets were selected with the intent of presenting various distinctive sets of characteristics. By notoriety we simply bring attention to the widespread usage of the aforementioned datasets within the SLAM research community, as can be concluded by analyzing the sheer number of works that employ them such as (Grisetti et al., 2007; Kaess et al., 2008; Olson, 2008; Kümmerle et al., 2009; Grisetti et al., 2010; Kümmerle et al., 2011; Hess, Kohler, Rapp, and Andor, 2016). All the datasets we have used can be seen in Fig. 1, and are available at HHoward and Royoward and Roy (2003), Carlone (2014), including reference measurement data that are used to compute the reference relation. First on the top left corner is a reference map of the INTEL Research Lab (INTEL), followed to its right by a reference map of the third floor of the ACES building in the Texas University in Austin (ACES3), then to its right is the reference map of the Freiburg building 79 (FR079), and followed at to the right the reference map of the University of Washington in Seattle (SEATTLE). Below these four maps, we have first the reference map of a site in Orebro University in Sweden (OREBRO), followed to the right by a reference map of the Computer Science Artificial Intelligence Laboratory at MIT (CSAIL) map, followed to the right by a reference map of the MIT Killian Court (KILLIAN). In the remainder of the last row, we show a reference map of the Acapulco Convention Center in Mexico (MEXICO). It is noteworthy to point out that the maps were generated using the MatlabTM function buildMap and are down scaled approximately as indicated in each map of shown in Figure fig:compositeMaps. 6.3. Parameter settings The parameters of the meta-heuristics were adjusted empirically based on 30 repeated alignment tests per scenario regarding each of the used meta-heuristics. Each case was constructed based on a scan of one of the data sets that we selected for random experimentation (a random number generator was used so that the selection could take place without any human bias). We selected 10 scans and applied 3 transformation types to each scan: a pure rotation, a pure translation and a roto-translation. From the resulting 30 scenarios, two variants were generated: one without noise and one with zero mean Gaussian noise and a unit variance. The tests that were carried out led to the choice of parameters specified in Table 1. It is worth mentioning that the time taken to execute the optimization process as well as the achieved accuracy were taken into account. 6.4. Comparison of meta-heuristics’ performance Table 2 shows the absolute translational and rotational errors for each of the 8 used benchmarks of Fig. 1, wherein the lowest error and time values are highlighted. It allows a fair comparison of how each meta-heuristic performed with respect to its accuracy 15 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 Fig. 1. Reference benchmark maps: INTEL [1:4,200], ACES3 [1:4,500], FR079 [1:5,000] and SEATTLE [1:5,000]; OREBRO [1:1,700], CSAIL [1:5,000] and KILLIAN [1:5,000]; MEXICO [1:3,200]. Table 1 Configuration of the meta-heuristic’s parameters. PSO Parameter Swarm (n) Inertia (w) Cognitive (c1 ) Social (c2 ) ABC Value 50 0:7 1:49 1:49 Parameter Colony (n) Food source (N) Threshold (l) FA Value 20 10 10 Parameter Fireflies (n) Randomization (a) Attractiveness (b0 ) Absorption (c) Value 20 0:75 0:2 1:0 16 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 Table 2 Absolute translational errors in meters and rotational errors in radian, regarding each of the 8 used benchmark together with the required execution time per scan matching in milliseconds. Benchmark Metric ABC FA PSO INTEL eT eR ACES3 eT eR 0:0366 0:015 3:33 0:98 243:86 32:18 0:043 0:1 1:277 0:075 365:49 41:33 0:092 0:007 0:234 0:337 1; 479:90 90:33 0:113 0:036 0:619 0:039 849:69 30:27 0:255 0:032 2:28 1:904 56:48 12:78 0:481 0:006 0:381 0:068 270:95 14:99 0:083 0:005 0:617 0:353 100:69 6:81 0:358 0:003 0:128 0:012 63:77 8:78 0:086 0:029 2:05 0:321 185:66 32:69 0:021 0:012 0:253 0:059 112:97 17:78 0:085 0:020 0:985 1:039 1; 588:70 53:75 0:269 0:202 0:043 0:052 655:96 51:99 0:015 0:135 593:90 0:028 0:181 208:01 0:104 0:200 56:63 0:232 0:045 231:02 0:253 0:253 362:68 0:169 0:411 191:68 Time Time FR079 eT eR Time SEATTLE eT eR Time OREBRO eT eR Time CSAIL eT eR KILLIAN eT eR MEXICO eT eR Time Time Time 0:012 0:075 35:57 0:007 0:052 30:51 1:451 0:0170 1:68 0:109 8891:31 472:47 0:6136 0:07 0:759 0:0688 287:16 36:07 in each of the aforementioned eight datasets. The smallest errors are highlighted. Indeed, it is easy to verify that ABC attained a higher degree of accuracy than the other optimization procedures in the estimation of the translational component of the robot’s trajectory in 4 of the 8 datasets, namely: INTEL, OREBRO, CSAIL and KILLIAN. Still regarding the translational estimation, the FA algorithm achieved a higher accuracy than the other two techniques in 3 out of the 8 cases: FR079, KILLIAN and MEXICO. We also remark that the PSO algorithm only obtained a more accurate result in the estimation of the translational components of the trajectory in the ACES3 dataset. In the case of the estimation of the orientation of the robot along its path, unlike what was observed in the estimation of the translational components, the behavior of all meta-heuristics was more balanced. The ABC algorithm only exhibited a performance superior to the other two techniques in 2 of the 8 cases: ACES3 and OREBRO. The PSO algorithm showed a higher accuracy in 4 out of the 8 cases: INTEL, SEATTLE, CSAIL and MEXICO. In this case, the FA algorithm exhibited a performance in between the ones shown by the other two techniques. Moreover, the FA algorithm has presented the smallest absolute rotational error in 2 of the 8 of the test cases: FR079 and KILLIAN. In Fig. 2, the average processing time per scan matching, i.e. the average duration of a cycle of toggling between the scan alignment that sequentially estimates the displacement every time a new scan is acquired and the whole process of updating the pose graph to reflect the new information. It is noteworthy to point out that, even though the choice of optimization technique definitely affects the speed of the whole system, the environmental characteristics seem to be one of the main factors that determine the time consumed processing each scan. On that regard, the fewer distinctive characteristics the environment, and consequently the scans obtained, has, the harder it would be to confidently determine correspondences between measurements acquired from different poses. This leads to a cascade effect, given that low quality correspondences force the pose graph manipulation and optimization subsystems to operate with bigger uncertainty bounds and, consequently, with potentially erroneous loop closure constraints. Bearing that in mind, we may remark that the FA algorithm was the 0:006 0:068 7:41 0:008 0:077 23:39 0:8019 0:1863 2:79 1:266 72:55 5:55 0:0517 0:01 0:614 0:279 79:30 4:45 0:001 0:059 72:98 0:008 0:007 31:55 1:3329 0:1842 1:451 0:557 341:61 39:09 0:689 0:159 0:917 0:0802 381:09 42:62 fastest of the three we tested, outperforming both the other techniques in 6 of the 8 cases, i.e. excluding FR079 and CSAIL. The PSO algorithm was the second fastest, outperforming ABC in 7 out of the 8 test scenarios, i.e. excluding KILLIAN. The ABC algorithm was the slowest of the three meta-heuristics, in spite of having been faster than PSO in the KILLIAN dataset. 6.5. Comparative analysis We shall, henceforth, compare the results of our proposed solution to other existing SLAM systems. In particular, we shall apply our system, with each of the swarm intelligence meta-heuristics, to each of the datasets that were tested in Kümmerle et al. (2009) using the results reported therein, thus allowing a direct comparison of the results we obtained regarding the accuracy of our approach. We remark that in the following results: the application scan matching (only) was denoted SM, the application of a Rao-Blackwellized particle filter has been denoted RBPF, the application of what the authors of Kümmerle et al. (2009) called Graph Mapping was denoted GM and the results denoted Cartographer are but a direct application of the system proposed in Hess et al. (2016). The scan matching employed in Kümmerle et al. (2009) consists of an incremental and sequential alignment of scans as described within LLu and Miliosu and Milios (1997), Censi, Iocchi, and Grisetti (2005). The RBPF based solution used was based on the system described in Grisetti et al. (2007), and used 50 particles. The Graph Mapping approach estimates a map by means of a pose graph optimization procedure described in detail in Grisetti et al. (2010). The Cartographer system is a recent approach to SLAM and was described in some detail in Hess et al. (2016). Cartographer is quite similar to our solution in many aspects since both use scan matching as the core mechanism for the estimation of constraints for the pose graph, both rely on pose graph optimization to recover the map and trajectory estimates and both operate online. Our implementation, however, employs swarm intelligence based optimization to perform scan matching, as opposed to Newton-like methods, and uses no pre-computed grids, unlike Cartographer. It is worthy mentioning that all standard deviations have been omitted from the bar charts in Figs. 3 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 17 Fig. 2. Average processing time per scan matching in milliseconds in logarithmic scale for the proposed approach. and 4 due to the magnitude difference between the error values of some other systems and ours, which had a much smaller spread as will be further demonstrated. Note that only 5 out of the 8 maps are used in this comparison. This is simply due to the nonavailability of the performance metrics regarding the SEATTLE, OREBRO and MEXICO datasets. Amongst the meta-heuristics we have tested, ABC is the one that obtained the lower absolute translational error in the majority Fig. 3. Comparative chart of the absolute translational error in meters, regarding 5 of the 8 public domain benchmarking datasets utilized. Fig. 4. Comparative chart of the absolute rotational error in degrees, regarding 5 out of the 8 public domain benchmarking datasets utilized. of the cases. In that regard, when we compare the results achieved by the proposed solution, using the ABC meta-heuristic, with Cartographer, for example, we shall find that in two of the 5 datasets, for which we have the results of Cartographer, namely INTEL and CSAIL datasets our system had, respectively, 22% and 44% lower absolute translational errors. Furthermore, in the particular case of the CSAIL dataset the absolute rotational error was 50:95% inferior to that of Cartographer. Nonetheless, in the ACES3 dataset, our implementation obtained an absolute rotational error that was 242:36% superior to the one reported for Cartographer. A comparison to the RBPF based solution was even more encouraging, and our approach has obtained results that are in the order of or superior to the particle filter approach in 60% of the cases analyzed. Further still, it is also verifiable that in 40% of the considered cases our implementation has shown results that were either within the same bounds or superior to the ones reported for Graph Mapping. In fact, in ACES3 dataset our approach managed to get a translational error that was 87:86% inferior to what is reported Graph Mapping. As was expected, our approach was shown to be more effective than the exclusive application of scan matching in 80% of the scenarios contemplated within this particular benchmark. The Firefly Algorithm did not achieve results as accurate as those of ABC, even though it was the fastest of the 3 metaheuristics we have tested in 87:5% of the cases and consuming, on average, only 23% of the time spent per scan by ABC. The FA meta-heuristic has shown to be faster in the following datasets: INTEL, FR079, SEATTLE, OREBRO, KILLIAN and MEXICO. It is noteworthy to observe that FA is the absolute winner in the case of the MEXICO dataset as it achieved the lowest translational err, the lowest rotational error and this in a record minimal execution time. None of the other techniques has reached such a outstanding performance. The particle swarm optimization technique has shown an accuracy that was inferior to that achieved when applying ABC, but its average processing time per scan was in between the one presented by the other two meta-heuristics. The poor accuracy shown in 62:5% of the cases, however, leaves little room for its usage as a compromise solution in the form of trade of speed for accuracy, at least in the form known as global best PSO, which we employed. Furthermore, we strongly think that the proposed SLAM system would profit a great deal in terms of accuracy if it embeds the likelihood field (Thrun et al., 2005), which takes into account measurement uncertainty, into the proposed methodology. An example is the likelihood analysis used by GMapping (Grisetti et al., 2007). 18 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 Because the performance of our proposed approach is highly dependent on that of the scan matching, we have also complemented this analysis by comparing the accuracy obtained by some existing different scan-matching algorithms, namely: standard ICP algorithm (LLu and Miliosu and Milios, 1997), PLICP (Censi, 2008) and PSM (DDiosi and Kleemaniosi and Kleeman, 2007). The dataset used is the INTEL Lab and the MIT KILLIAN. These two benchmarks are used due to the availability of performance measures in Li, Zhong, Hu, and Ai (2016), which are computed as in the pointto-point metrics defined in Eq. 24:a ex ¼ N 1X jv x v xi j; N i¼1 i eh ¼ N 1X jv h v hi j; N i¼1 i ey ¼ N 1X jv y v yi j; N i¼1 i ð24Þ wherein x and y are the ground truth regarding translation and h is that regarding orientation, and N denotes the number of scan pairs used. Table 3 shows the translational and rotational errors achieved by the compared scan-matching algorithms, wherein the lowest error values are highlighted. In this experiment, we use 50 pairs of scans, randomly selected from those available in the data of the used benchmarks (HHoward and Royoward and Roy, 2003). It is important to emphasize that the same scans are used for all compared matching techniques, and that the performance ICP, PLICP and PSM are taken from Li et al. (2016). The comparison of these performances is illustrated via the bar diagram of Fig. 5. Based on the results of these two case studies, i.e. INTEL and CSAIL, it is safe to conclude, the proposed approach achieves better accuracy than those obtained by the ICP and PLICP both in terms of translational and rotational movements. Furthermore, the swarmbased technique achieved better accuracy than PSM regarding translational movements. For the four instances (i.e. either ex and/or ey for both benchmarks), the proposed technique provides alignments with lower errors than PSM. Nonetheless, the latter provides somehow better, but similar performance regarding the rotational component. For both benchmarks, PSM occasioned the lowest rotational error. It also safe to conclude that the proposed scan matching based on ABC produces better alignments both in terms of translational and rotational adjustments. However, it noticeable that the proposed scan-matching solutions based on FA and PSO introduce higher errors regarding rotational movements, with PSO being the worst case. It is noteworthy to emphasize that generally speaking, PSM and the proposed scan-matching solutions based on ABC, FA and PSO all perform much better than ICP and PLICP both regarding the translational and rotational errors, with PLICP achieving better accuracy than standard ICP. However, it is worth mentioning that without initial guess of ICP, i.e. the initial guess was set to zero. Finally, it is important to point out that, because the execution times for public benchmarks were not provided for the compared SLAM methods nor for the scan matching techniques, we are not able to situate the proposed approach in terms of execution speed, except regarding the ICP scan matching, as it was also implemented locally. For 100 iterations, it achieved in average a slightly lower execution time. However, given the fact that the ABC, FA and PSO are computationally intensive due to the repeated evaluation of the objective functions during the scan-matching step, we suspect that our approach would require more time than PLICP and PSM. So, this may limit the application of the proposed SLAM in a system, wherein a real-time response is required. Nonetheless, a parallel GPU-based implementation of the proposed approach can certainly mitigate this limitation, and it might even outperform existing approaches as the meta-heuristics are embarrassingly parallel workloads. Table 3 Translational errors in meters and rotational errors in radian, regarding the scan matching step only for the INTEL and CSAIL datasets for different scan-matching algorithms. Datasets INTEL Metrics ex ey eh ex ey eh 0.44 0.17 0.06 0.0298 0.2811 0.1239 0.36 0.22 0.22 0.0215 0.03593 0.0799 0.41 0.39 0.08 0.1211 0.1015 0.1399 0.58 0.53 0.28 0.0178 0.0751 0.1510 0.27 0.29 0.09 0.0185 0.0503 0.0293 0.25 0.26 0.13 0.1659 0.2012 0.2528 Algorithms Proposed ICP PLICP PSM ABC FA PSO CSAIL Fig. 5. Comparative chart of the point-to-point translational errors in meters, and rotational errors in radian, regarding the INTEL and CSAIL datasets for different scanmatching algorithms. N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 7. Conclusions In this paper, we investigated the development of a solution to the simultaneous location and mapping problem using swarm intelligence techniques, namely ABC, FA and PSO. It is noteworthy to emphasize that swarm-intelligence-based optimization is only applied to scan matching, but not to pose graph optimization. The SLAM proposed approach is based on two pillars: the scan alignment, through the exploitation of the swarm intelligence techniques, based on the principle of maximum likelihood; and the application of a pose graph optimization system as a way to allow the system to maintain consistent estimates over extensive mapping sessions. The proposed scan-matching step proceeds into two main steps, aiming at further reducing the alignment error: The scan-to-scan alignment and the scan-to-map alignment. Both steps are achieved using the same swarm-based optimization meta-heuristics. The proposed solution has been tested on eight public domain datasets and its performance was evaluated, to the extent possible, using methodologies employed by other works in the field to allow a meaningful comparison and analysis of the different results. The experimentation we have done suggests that the ABC algorithm may be better suited to the scan-matching problem, in the SLAM context, as far as accuracy is concerned. On the other hand, the FA algorithm has presented itself as a better compromise solution compared to ABC due to its similar, but slightly inferior, precision and higher average execution speed. Indeed, in our tests the FA algorithm showed a performance in between that of ABC and PSO, overcoming the accuracy of the former in a few cases and being in most cases faster than the latter, and at least as accurate. As an overall conclusion, we can safely establish that while ABC offered accuracy and PSO offered speed, FA offered a trade-off between both metrics. Furthermore, we compared the performance of the proposed approach to three existing SLAM implementations. So, considering the used benchmarks and regarding the translational and rotational absolute errors, the proposed approach achieved similar performance in most cases (3 out of 5) and showed a better performance for some of the evaluated benchmarks (1 out 5). One of the meta-heuristics, namely ABC, obtained better translational and rotational absolute error than all compared existing implementation. Nonetheless, it exhibited a lower performance in one of the studied cases (1 out 5). We have also observed that while the accuracy provided by the proposed scan-matching approach is desirable, such characteristic comes at a price. The trade-off between speed and accuracy in the scan-matching procedure has to be carefully analyzed, since the higher the error in this stage of the process, the more difficult it becomes to find the optimal (most likely) configuration of nodes in the pose graph. Indeed, in many cases where the time of a single alignment for one of the three techniques was exceptionally lower than the time required by the other two, the time consumed while seeking loop closures grew up, as did the time required to optimize the pose-graph. In fact, this increase is often more than both the time taken seeking adequate loop closures and optimizing the pose-graph altogether. This happens especially in scenarios that involve high alignment errors, and higher uncertainty, not only compensated the time required per alignment but actually outgrew it. Here is a classical scenario, where the ‘‘There is no such a thing as free lunch” applies. So, it would be interesting to further investigate the application of the ABC to improve its execution time to take advantage of the achieved high accuracy. Also, the same strategy could be explored with FA to improve the scanmatching translational and rotational accuracy without impacting too much the overall execution time. Both improvements can be 19 viewed as a tuning the configuration of the parameters of the meta-heuristics. One possible approach for the efficient parameter setting is an adaptive configuration during the SLAM actual operation. Moreover, we intend to provide a parallel GPU-based implementation in order to reduce the execution time during scan alignments. Another alternative for future work consists of extending the proposed solution to 3D SLAM. Such an extension would only entail expanding the coordinate of the basic agent position. Furthermore, we would like also to run the proposed implementation on a real robotic platform and apply it to solve a real-world SLAM-based application. Declaration of Competing Interest The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper. Acknowledgement First and foremost, we are eternally grateful to the reviewers of this paper. The implementation of their recommendations improved a great deal the contents of the paper. We grateful to the Research Support Foundation of the State of Rio de Janeiro— FAPERJ ( http://www.faperj.br) for funding this research. We also would like to thank the National Council of Scientific and Technological Development in Brazil—CNPq ( http://www.cnpq.br) for the continuous financial support. References Akai, N., Morales, Y., Takeuchi, E., Yoshihara, Y., & Ninomiya, Y. (2017). Robust localization using 3D NDT scan matching with experimentally determined uncertainty and road marker matching. In Proceedings of the IEEE intelligent vehicles symposium (pp. 1356–1363). IEEE Press. Baeck, T., Fogel, D., & Michalewicz, Z. (2000). Evolutionary computation 1: Basic algorithms and operators (Chapter 23). Oxon, OX: Taylor & Francis. Bentley, J. L. (1980). Multidimensional divide-and-conquer. Communications of the ACM, 23(4), 214–229. Bentley, J. L., & Friedman, J. H. (1979). Data structures for range searching. ACM Computing Surveys, 11(4), 397–409. Besl, P., & McKay, N. (1992). A method for registration of 3-d shapes. IEEE Transactions on Pattern Analysis and Machine Intelligence, 14(2), 239–256. Biber, P., & Strasser, W. (2003). The normal distributions transform: A new approach to laser scan matching (3, pp. 2743–2748). IEEE Press. Carlisle, A. & Dozier, G. (2000). Adapting particle swarm optimization to dynamic environments. In International conference on artificial intelligence (Vol. 1, pp. 429–434). Las Vegas, Nevada, USA: IEEE Press.. Carlone, L. (2014). Robotics 2d-laser datasets. http://www.ipb.uni-bonn. de/datasets/.. Carlone, L., Aragues, R., Castellanos, J., & Bona, B. (2011). A linear approximation for graph-based simultaneous localization and mapping. In Robotics: Science and Systems VII. Censi, A. (2008). An ICP variant using a point-to-line metric. In 2008 IEEE international conference on robotics and automation, number 4543181 (pp. 19– 25). Pasadena, USA: IEEE.. Censi, A., Iocchi, L. & Grisetti, G. (2005). Scan matching in the hough domain. In Proceedings – IEEE international conference on robotics and automation (pp. 2739– 2744). Barcelona, Spain: IEEE.. Chatterjee, A., & Matsuno, F. (2010). A geese pso tuned fuzzy supervisor for EKF based solutions of simultaneous localization and mapping (SLAM) problems in mobile robots. Expert Systems with Applications, 37(8), 5542–5548. Chatterjee, A., Ray, O., Chatterjee, A., & Rakshit, A. (2011). Development of a real-life EKF based SLAM system for mobile robots employing vision sensing. Expert Systems with Applications, 38(7), 8266–8274. Chen, Y., & Medioni, G. (1992). Object modeling by registration of multiple range images. Image and Vision Computing, 10, 145–155. Cox, I. J. (1991). Blanche—an experimental in guidance and navigation of an autonomous robot vehicle. IEEE Transactions on Robotics and Automation, 7(2), 193–204. Diosi, A., & Kleeman, L. (2007). Fast laser scan matching using polar coordinates. International Journal of Robotic Research, 26, 1125–1153. Dorigo, M., Caro, G. D., & Gambardella, L. M. (1999). Ant algorithms for discrete optimization. Artificial Life, 5, 137–172. 20 N. Nedjah et al. / Expert Systems with Applications 159 (2020) 113547 Druon, S., Aldon, M.-J., & Crosnier, A. (2006). Color constrained ICP for registration of large unstructured 3d/color data sets. IEEE International Conference on Information Acquisition, 62, 249–255. Engelbrecht, A. P. (2006). Fundamentals of computational swarm intelligence (Chapters 12 and 13). John Wiley & Sons, Chichester, West Sussex, England, 1 edition.. Fister, I., Yang, X. S., & Brest, J. (2013). A comprehensive review of firefly algorithms. Swarm and Evolutionary Computation, 13, 34–46. Friedman, J. H., Bentley, J. L., & Finkel, R. A. (1977). An algorithm for finding best matches in logarithmic expected time. ACM Transactions on Mathematical Software, 3(3), 209–226. Gao, M.-L., Luo, D.-S., Teng, Q.-Z., He, X.-H., & Jiang, J. (2013). Object tracking using firefly algorithm. IET Computer Vision, 7(4), 227–237. Grisetti, G., Kummerle, R., Stachniss, C., & Burgard, W. (2010). A tutorial on graphbased SLAM. IEEE Intelligent Transportation Systems Magazine, 2(4), 31–43. Grisetti, G., Stachniss, C., & Burgard, W. (2007). Improved techniques for grid mapping with rao-blackwellized particle filters. IEEE Transactions on Robotics, 23, 34–46. Guennebaud, G., Jacob, B. & et al. (2010). Eigen v3. http://eigen.tuxfamily.org.. Gutmann, S., & Schlegel, C. (1996). Amos: Comparison of scan matching approaches for self-localizationin indoor environments. In Proceedings of the First Euromicro Workshop on Advanced Mobile Robots (pp. 61–67). IEEE Press. Gutmann, S., Weigel, T., & Nebel, B. (1999). Fast, accurate, and robust self-localization in polygonal environments. Robocup workshop at IJCAI-99 (Vol. 3. IEEE Press. Hertzberg, C., Wagner, R., Frese, U., & Schroder, L. (2013). Integrating generic sensor fusion algorithms with sound state representations through encapsulation of manifolds. Information Fusion, 14, 57–77. Hess, W., Kohler, D., Rapp, H., & Andor, D. (2016). Real-time loop closure in 2d lidar SLAM. 2016 IEEE international conference on robotics and automation (ICRA) (pp. 1271–1278). Stockholm, Sweden: IEEE. number 7487258. Howard, A. & Roy, N. (2003). The robotics data set repository (radish). http:// radish.sourceforge.net/.. Jeong, J., Yoon, T. S., & Park, J. B. (2018). Multimodal sensor-based semantic 3d mapping for a large-scale environment. Expert Systems with Applications, 105, 1–10. Jiang, X., Li, T., & Yu, Y. (2016). A novel SLAM algorithm with adaptive kalman filter. In 2016 International conference on advanced robotics and mechatronics (ICARM) (pp. 107–111). Macau, China: IEEE. Kaess, M., Ranganathan, A. & Dellaert, F. (2008). iSAM: Incremental smoothing and mapping. 24:1365–1378.. Karaboga, D. (2005). An idea based on honey bee swarm for numerical optimization. Kayseri, Turkey: Technical report. Kennedy, J. (1997). The particle swarm: social adaptation of knowledge. In IEEE international conference on evolutionary computation (pp. 303–308). Indianapolis, USA: IEEE.. Kennedy, J. (2006). Swarm intelligence. In Handbook of nature-inspired and innovative computing (pp. 187–219). New York, USA: Springer. Kennedy, J. & Eberhart, R. C. (1995). Particle swarm optimization. In Proceedings of the 1995 IEEE international conference on neural networks (pp. 1942–1948). Perth, Australia, IEEE Service Center, Piscataway, NJ: IEEE.. Kokoska, S., & Zwillinger, D. (1999). CRC standard probability and statistics tables and formulae (Chapter 3). Boca Raton, Florida, EUA: CRC Press. Kümmerle, R., Grisetti, G., Strasdat, H., Konolige, K., & Burgard, W. (2011). G2O: A general framework for graph optimization. In Proceedings – IEEE international conference on robotics and automation (pp. 3607–3613). Shanghai, China: IEEE. Kümmerle, R., Steder, B., Dornhege, C., Ruhnke, M., Grisetti, G., Stachniss, C., & Kleiner, A. (2009). On measuring the accuracy of SLAM algorithms. Autonomous Robots, 27(4), 387. Li, J., Zhong, R., Hu, Q., & Ai, M. (2016). Feature-based laser scan matching and its application for indoor mapping. Sensors, 16(1265). Lu, F., & Milios, E. (1997). Globally consistent range scan alignment for environment mapping. Autonomous Robots, 4(4), 333–349. Lu, F., & Milios, E. (1997). Robot pose estimation in unknown environments by matching 2d range scans. Journal of Intelligent and Robotic Systems, 18(3), 249–275. Luo, F.-Y. (2017). Accelerated ICP based on linear extrapolation. Mingas, G., Tsardoulias, E., & Petrou, L. (2012). An FPGA implementation of the SMG-SLAM algorithm. Microprocessor and Microsystems, 36(3), 190–204. Montemerlo, M., Thrun, S., Koller, D. & Wegbreit, B. (2002). Fastslam: A factored solution to the simultaneous localization and mapping problem. In Proceedings of 8th National Conference on artificial intelligence/14th conference on innovative applications of artificial intelligence (68(2), pp. 593–598).. Mutz, F., Veronese, L. P., Oliveira-Santos, T., de Aguiar, E., Cheein, F. A. A., & Souza, A. F. D. (2016). Large-scale mapping in complex field scenarios using an autonomous car. Expert Systems with Applications, 46, 439–462. Nieto, J., Bailey, T., & Nebot, E. (2007). Recursive scan-matching SLAM. Robotics and Autonomous Systems, 55(1), 39–49. Oliveira, P. J. A., Nedjah, N. & Mourelle, L. M. (2017). SLAM baseado em scanmatching com otimização por enxame de partículas. In Proceedings of the XIII Brazilian Congress on Computational Intelligence, Curitiba, Brazil, DOI: 10.21528/ CBIC2017-96.. Olson, E. (2009). Real-time correlative scan matching. In IEEE international conference on robotics and automation (ICRA’09) (pp. 4387–4393). IEEE Press.. Olson, E. B. (2008). Robust and efficient robotic mapping. PhD thesis, Cambridge, MA, USA. AAI0821013.. Pomerleau, F., Colas, F., Ferland, F. & Michaud, F. (2009). Relative motion threshold for rejection in ICP registration. In Results of the 7th international conference on field and service robotics (Vol. 62, pp. 229–238).. Segal, A., Haehnel, D., & Thrun, S. (2009). Generalized-ICP. Robotics: Science and systems V (volume 5, pp. 1–8). Cambridge, Massachusetts: MIT Press. Smith, R., Self, M., & Cheeseman, P. (1990). Estimating uncertain spatial relationships in robotics. Autonomous Robot Vehicles, 4(April), 167–193. Smith, R. C., & Cheeseman, P. (1986). On the representation and estimation of spatial uncertainty. The International Journal of Robotics Research, 5(4), 56–68. Thrun, S., Burgard, W., & Fox, D. (2005). Probabilistic robotics (Chapters 6 and 10) (1, p. edition.). Cambridge, Mass, USA: The MIT Press. Thrun, S., & Montemerlo, M. (2006). The GraphSLAM algorithm with applications to large-scale mapping of urban structures. International Journal on Robotics Research, 25(5), 403–430. Walter, M. R., Eustice, R. M., & Leonard, J. J. (2007). Exactly sparse extended information filters for feature-based SLAM. The International Journal of Robotics Research, 26(4), 335–359. Woods, F. S. (2005). An introduction to advanced methods in analytic geometry (Chapter 14). New York, USA: Ginn and Company. Zhang, Q.-B., Wang, P., & hai Chen, Z. (2019). An improved particle filter for mobile robot localization based on particle swarm optimization. Expert Systems with Applications, 135, 181–193. Zhang, Z. (1994). Iterative point matching for registration of free-form curves. International Journal of Computer Vision, 13(2), 119–152.