ALGORITHM ANALYSIS

ANALYSIS OF ALGORITHMS

• Analysis of Algorithms is the area of computer science that provides tools to analyze the efficiency of different solution methods.
• How do we compare the time efficiency of two algorithms that solve the same problem?

Running Time

• Most algorithms transform input objects into output objects.
• The running time of an algorithm typically grows with the input size.
• Average-case time is often difficult to determine.
• We focus on the worst-case running time.
– Easier to analyze
– Crucial to applications such as games, finance and robotics

[Figure: running time (0 to 120) versus input size (1000 to 4000), showing best case, average case and worst case]

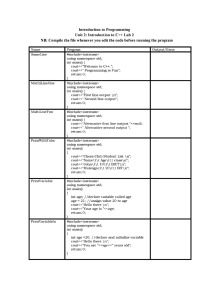

Experimental Approach

• Write a program implementing the algorithm
• Run the program with inputs of varying size and composition
• Use a method like clock() to get an accurate measure of the actual running time
• Plot the results

[Figure: measured running time, Time (ms) from 0 to 9000, versus Input Size from 0 to 100]
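
The experimental steps can be sketched in a few lines. This is only an illustration under stated assumptions: it uses Python's time.perf_counter() in place of clock(), times the built-in sorted() as a stand-in for "the algorithm", and the helper names time_once and run_experiment are made up for this example. Plotting is left out; the (size, time) pairs it returns are what would be plotted.

```python
import random
import time


def time_once(func, data):
    """Wall-clock time in milliseconds for a single call of func(data)."""
    start = time.perf_counter()
    func(data)
    return (time.perf_counter() - start) * 1000.0


def run_experiment(func, sizes, repeats=3, seed=0):
    """Return (size, best time in ms) pairs for random inputs of each size."""
    rng = random.Random(seed)  # fixed seed so the inputs are reproducible
    results = []
    for n in sizes:
        data = [rng.randint(0, 10**6) for _ in range(n)]
        # Take the best of a few runs to reduce noise from other processes.
        best = min(time_once(func, data) for _ in range(repeats))
        results.append((n, best))
    return results


if __name__ == "__main__":
    # sorted() is only a stand-in for the algorithm being measured.
    for n, ms in run_experiment(sorted, [1000, 2000, 4000, 8000]):
        print(f"n={n:5d}  time={ms:.3f} ms")
```

The measured times depend on the machine, the interpreter and the chosen inputs, which is exactly the limitation discussed next.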

Limitations of Experiments

– How are the algorithms coded?
• Comparing running times means comparing the implementations.
• It is necessary to implement the algorithm, which may be difficult.
• Implementations are sensitive to programming style, which may cloud the issue of which algorithm is inherently more efficient.
– What computer should we use?
• We should compare the efficiency of the algorithms independently of a particular computer.

Limitations of Experiments (cont.)

– What data should the program use?
• Results may not be indicative of the running time on inputs not included in the experiment.
• Any analysis must be independent of specific data.
• As noted above, experimental analysis is valuable, but it has its limitations. If we wish to analyze a particular algorithm without performing experiments on its running time, we can perform an analysis directly on the high-level pseudocode instead.

Pseudocode

• High-level description of an algorithm
• More structured than English prose
• Less detailed than a program
• Preferred notation for describing algorithms
• Hides program design issues

Example: find max element of an array

Algorithm arrayMax(A, n)
    Input array A of n integers
    Output maximum element of A
    currentMax ← A[0]
    for i ← 1 to n − 1 do
        if A[i] > currentMax then
            currentMax ← A[i]
    return currentMax
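
For comparison, here is one way the arrayMax pseudocode might look as a program. It is a direct transcription into Python, assuming a non-empty list; the name array_max is chosen for this sketch.

```python
def array_max(a, n):
    """Return the maximum of the first n elements of a (n >= 1)."""
    current_max = a[0]
    for i in range(1, n):          # i runs from 1 to n - 1
        if a[i] > current_max:
            current_max = a[i]
    return current_max


if __name__ == "__main__":
    print(array_max([3, 9, 2, 7], 4))
```

The program has to commit to details the pseudocode leaves open, such as the container type and what happens for an empty array.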

Theoretical Analysis

• Uses a high-level description of the algorithm instead of an implementation
• Characterizes running time as a function of the input size, n
• Takes into account all possible inputs
• Allows us to evaluate the speed of an algorithm independent of the hardware/software environment

Theoretical analysis of time efficiency

Time efficiency is analyzed by determining the number of repetitions of the primitive (basic) operation as a function of input size.
• Basic operation: the operation that contributes most towards the running time of the algorithm

Primitive operations

Primitive operations are:
• Basic computations performed by an algorithm
• Identifiable in pseudocode
• Largely independent of the programming language
• Not in need of an exact definition
• Assumed to take a constant amount of time in the RAM (random-access machine) model

Primitive operations (cont.)

Examples:

• Assigning a value to a variable

• Calling a function

• Performing an arithmetic operation (for example, adding two numbers)

• Comparing two numbers

• Indexing into an array

• Following an object reference

• Returning from a function

Primitive operations (cont.)

• When we analyze algorithms, we should employ mathematical techniques that analyze algorithms independently of specific implementations, computers, or data.
• To analyze algorithms:
– First, we count the number of significant operations (primitive operations) in a particular solution to assess its efficiency.
– Then, we express the efficiency of algorithms using growth functions.

The Execution Time of Algorithms

• Each operation in an algorithm (or a program) has a cost: each operation takes a certain amount of time.

    count = count + 1;        takes a certain amount of time, but it is constant

A sequence of operations:

    count = count + 1;        T1
    sum = sum + count;        T2

Total Time = T1 + T2

Counting Primitive Operations

• By inspecting the pseudocode, we can determine the maximum number of primitive operations executed by an algorithm, as a function of the input size.

Algorithm arrayMax(A, n)                  Times
    currentMax ← A[0]                     2
    for i ← 1 to n − 1 do                 2n
        if A[i] > currentMax then         2(n − 1)
            currentMax ← A[i]             2(n − 1)
        { increment counter i }           2(n − 1)
    return currentMax                     1
                                  Total   8n − 3
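
The count can be checked by instrumenting the algorithm. The sketch below is one possible accounting, not the only one: it assumes each loop test is charged 2 operations and is evaluated n times, and that the comparison, the assignment and the increment each cost 2, matching the table. The name array_max_counted is hypothetical.

```python
def array_max_counted(a):
    """Return (maximum, primitive-operation count) for a non-empty list."""
    n = len(a)
    ops = 2                          # currentMax <- A[0]: index + assign
    current_max = a[0]
    i = 1
    while True:
        ops += 2                     # loop test, evaluated n times in total
        if not (i <= n - 1):
            break
        ops += 2                     # A[i] > currentMax: index + compare
        if a[i] > current_max:
            ops += 2                 # currentMax <- A[i]: index + assign
            current_max = a[i]
        ops += 2                     # increment counter i
        i += 1
    ops += 1                         # return currentMax
    return current_max, ops


if __name__ == "__main__":
    worst = list(range(1, 6))        # increasing: the assignment always runs
    best = [5, 1, 2, 3, 4]           # maximum first: it never runs
    print(array_max_counted(worst))  # count equals 8n - 3 for n = 5
    print(array_max_counted(best))   # count equals 6n - 1 for n = 5
```

An increasing array triggers the assignment on every iteration and reaches the maximum of 8n − 3; when the largest element comes first, the assignment never runs and the count drops to 6n − 1.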

Counting Primitive Operations

Example: Simple Loop

                        Times
i = 1;                  1
sum = 0;                1
while (i <= n) {        n+1
    i = i + 1;          2n
    sum = sum + i;      2n
}

Total Time = 1 + 1 + (n+1) + 2n + 2n = 5n + 3

The time required for this algorithm is proportional to n.
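
The total can be reproduced by counting while the loop runs. The sketch below assumes the same charges as the table (1 per initialization, 1 per loop test, 2 per statement in the body); simple_loop_ops is a name invented for this example.

```python
def simple_loop_ops(n):
    """Count primitive operations of the simple loop for a given n."""
    ops = 0
    i = 1
    ops += 1                 # i = 1
    total = 0
    ops += 1                 # sum = 0
    while True:
        ops += 1             # loop test, evaluated n + 1 times
        if not (i <= n):
            break
        i = i + 1
        ops += 2             # add + assign
        total = total + i
        ops += 2             # add + assign
    return ops


if __name__ == "__main__":
    for n in (0, 1, 10, 100):
        print(n, simple_loop_ops(n), 5 * n + 3)
```

The two printed counts agree for every n, which is what "proportional to n" means here: the dominant term is 5n.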

Algorithm Growth Rates

• We measure an algorithm’s time requirement as a function of the problem size.
– Problem size depends on the application: e.g. the number of elements in a list for a sorting algorithm, or the number of disks.
• So, for instance, we say that (if the problem size is n)
– Algorithm A requires 5*n² time units to solve a problem of size n.
– Algorithm B requires 7*n time units to solve a problem of size n.
• The most important thing to learn is how quickly the algorithm’s time requirement grows as a function of the problem size.
– Algorithm A requires time proportional to n².
– Algorithm B requires time proportional to n.
• The change in the behavior of an algorithm as the input size increases is known as its growth rate.
• We can compare the efficiency of two algorithms by comparing their growth rates.
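
To make the difference concrete, the two cost functions from this slide can be tabulated. This is a small illustrative sketch; cost_a, cost_b and doubling_ratio are names made up for it.

```python
def cost_a(n):
    """Time units for Algorithm A: 5 * n^2."""
    return 5 * n * n


def cost_b(n):
    """Time units for Algorithm B: 7 * n."""
    return 7 * n


def doubling_ratio(cost, n):
    """How much the cost grows when the problem size doubles from n to 2n."""
    return cost(2 * n) / cost(n)


if __name__ == "__main__":
    for n in (1, 2, 10, 100, 1000):
        print(f"n={n:5d}  A={cost_a(n):9d}  B={cost_b(n):6d}")
    print(doubling_ratio(cost_a, 100), doubling_ratio(cost_b, 100))
```

Doubling n multiplies A's cost by 4 and B's by 2, regardless of the constants 5 and 7. A is cheaper only at n = 1; from n = 2 onward B wins, and the gap keeps widening.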

Algorithm Growth Rates (cont.)

[Figure: time requirements as a function of the problem size n]

Common Growth Rates

Function    Growth Rate Name
c           Constant
log N       Logarithmic
log² N      Log-squared
N           Linear
N²          Quadratic
N³          Cubic
2ᴺ          Exponential

Figure 6.1: Running times for small inputs

Asymptotic order of growth

A way of comparing functions that ignores constant factors and small input sizes
• Big-O, O(g(n)): class of functions f(n) that grow no faster than g(n)
• Big-theta, Θ(g(n)): class of functions f(n) that grow at the same rate as g(n)
• Big-omega, Ω(g(n)): class of functions f(n) that grow at least as fast as g(n)

Order-of-Magnitude Analysis and Big O Notation

• If Algorithm A requires time proportional to g(n), Algorithm A is said to be order g(n), and it is denoted as O(g(n)).
• The function g(n) is called the algorithm’s growth-rate function.
• Since the capital O is used in the notation, this notation is called the Big O notation.
• If Algorithm A requires time proportional to n², it is O(n²).
• If Algorithm A requires time proportional to n, it is O(n).

Definition of the Order of an Algorithm

Definition:

We say that the function f(n) is O(g(n)) if there is a real constant c > 0 and an integer constant n₀ ≥ 1 such that:

    f(n) ≤ c*g(n) for all n ≥ n₀.

• The requirement of n ≥ n₀ in the definition formalizes the notion of sufficiently large problems.
– In general, many values of c and n₀ can satisfy this definition.
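
A candidate pair (c, n₀) can be sanity-checked numerically. The sketch below only tests a finite range of n, so it can refute a bad pair but cannot prove the bound for all n; bound_holds and the example functions are hypothetical and chosen for illustration.

```python
def bound_holds(f, g, c, n0, n_max=1000):
    """Check f(n) <= c * g(n) for every integer n in [n0, n_max]."""
    return all(f(n) <= c * g(n) for n in range(n0, n_max + 1))


if __name__ == "__main__":
    f = lambda n: 2 * n + 10     # illustrative running-time function
    g = lambda n: n              # candidate growth-rate function

    print(bound_holds(f, g, c=3, n0=10))   # 2n + 10 <= 3n once n >= 10
    print(bound_holds(f, g, c=3, n0=1))    # fails for small n
    print(bound_holds(f, g, c=12, n0=1))   # a larger c allows a smaller n0
```

The last two calls show why many pairs satisfy the definition: a larger c can compensate for a smaller n₀, and the other way around.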

Order of an Algorithm

• Suppose an algorithm requires n²−3*n+10 seconds to solve a problem of size n. If constants c and n₀ exist such that

    c*n² > n²−3*n+10 for all n ≥ n₀,

the algorithm is order n². In fact, c = 3 and n₀ = 2 work:

    3*n² > n²−3*n+10 for all n ≥ 2.

Thus, the algorithm requires no more than c*n² time units for n ≥ n₀, so it is O(n²).

Big-Oh Examples

• 7n − 2
    7n − 2 is O(n)
    need c > 0 and n₀ ≥ 1 such that 7n − 2 ≤ c•n for n ≥ n₀
    this is true for c = 7 and n₀ = 1

• 3n³ + 20n² + 5
    3n³ + 20n² + 5 is O(n³)
    need c > 0 and n₀ ≥ 1 such that 3n³ + 20n² + 5 ≤ c•n³ for n ≥ n₀
    this is true for c = 4 and n₀ = 21
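
The thresholds in these examples can be recovered by a brute-force scan. The sketch below assumes that the inequality, once it holds, keeps holding up to the scanned limit n_max, so its answer is evidence rather than proof; smallest_n0 is a name invented for this example.

```python
def smallest_n0(f, g, c, n_max=10_000):
    """Smallest n0 >= 1 with f(n) <= c * g(n) for all n in [n0, n_max].

    Returns None if the bound still fails at n_max.
    """
    n0 = 1
    for n in range(1, n_max + 1):
        if f(n) > c * g(n):
            n0 = n + 1           # the bound fails at n, so n0 must be larger
    return n0 if n0 <= n_max else None


if __name__ == "__main__":
    print(smallest_n0(lambda n: 7 * n - 2, lambda n: n, c=7))
    print(smallest_n0(lambda n: 3 * n**3 + 20 * n**2 + 5, lambda n: n**3, c=4))
```

With c = 4, the cubic example needs n³ ≥ 20n² + 5, which first holds at n = 21; with c = 7, the linear example holds from n = 1.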

Order of an Algorithm (cont.)

A Comparison of Growth-Rate Functions

Growth-Rate Functions

• If an algorithm takes 1 second to run with the problem size 8, what is the time requirement (approximately) for that algorithm with the problem size 16?
• If its order is:

O(1)           T(n) = 1 second
O(log₂n)       T(n) = (1*log₂16) / log₂8 = 4/3 seconds
O(n)           T(n) = (1*16) / 8 = 2 seconds
O(n*log₂n)     T(n) = (1*16*log₂16) / (8*log₂8) = 8/3 seconds
O(n²)          T(n) = (1*16²) / 8² = 4 seconds
O(n³)          T(n) = (1*16³) / 8³ = 8 seconds
O(2ⁿ)          T(n) = (1*2¹⁶) / 2⁸ = 2⁸ seconds = 256 seconds
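
The same estimates follow from one scaling rule: if the time is proportional to g(n), then T(n_new) ≈ T(n_old) * g(n_new) / g(n_old). A minimal sketch, with scaled_time and GROWTH_FUNCTIONS as illustrative names:

```python
import math

# Growth-rate functions from the table, keyed by their usual notation.
GROWTH_FUNCTIONS = {
    "O(1)": lambda n: 1,
    "O(log2 n)": lambda n: math.log2(n),
    "O(n)": lambda n: n,
    "O(n*log2 n)": lambda n: n * math.log2(n),
    "O(n^2)": lambda n: n ** 2,
    "O(n^3)": lambda n: n ** 3,
    "O(2^n)": lambda n: 2 ** n,
}


def scaled_time(g, n_old, n_new, t_old=1.0):
    """Estimate the time at n_new from a measured time t_old at n_old."""
    return t_old * g(n_new) / g(n_old)


if __name__ == "__main__":
    for name, g in GROWTH_FUNCTIONS.items():
        print(f"{name:12s} {scaled_time(g, 8, 16):8.3f} seconds")
```

The estimate ignores lower-order terms and constant overheads, so it is only approximate for real programs.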

Properties of Growth-Rate Functions

1. We can ignore low-order terms in an algorithm’s growth-rate function.
– If an algorithm is O(n³+4n²+3n), it is also O(n³).
– We only use the highest-order term as the algorithm’s growth-rate function.

2. We can ignore a multiplicative constant in the highest-order term of an algorithm’s growth-rate function.
– If an algorithm is O(5n³), it is also O(n³).

3. O(f(n)) + O(g(n)) = O(f(n)+g(n))
– We can combine growth-rate functions.
– If an algorithm is O(n³) + O(4n²), it is also O(n³+4n²). So, it is O(n³).
– Similar rules hold for multiplication.
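
A quick numeric look suggests why the low-order terms can be dropped: the ratio of the full expression to its highest-order term approaches 1 as n grows. This is an illustration, not a proof; the function names are made up for this sketch.

```python
def full_cost(n):
    """n^3 + 4n^2 + 3n, the growth-rate function from property 1."""
    return n**3 + 4 * n**2 + 3 * n


def leading_term(n):
    """Only the highest-order term, n^3."""
    return n**3


def ratio(n):
    """How much larger the full expression is than its leading term."""
    return full_cost(n) / leading_term(n)


if __name__ == "__main__":
    for n in (10, 100, 1000, 10_000):
        print(f"n={n:6d}  ratio={ratio(n):.6f}")
```

Because the ratio stays below a fixed constant (it is at most 8, reached at n = 1), n³+4n²+3n ≤ 8*n³ for all n ≥ 1, which is exactly the form the Big O definition asks for.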

What to Analyze

• An algorithm can require different times to solve different problems of the same size.
– E.g. searching for an item in a list of n elements using sequential search. Cost: 1, 2, ..., n
• Worst-Case Analysis – The maximum amount of time that an algorithm requires to solve a problem of size n.
– This gives an upper bound for the time complexity of an algorithm.
– Normally, we try to find the worst-case behavior of an algorithm.
• Best-Case Analysis – The minimum amount of time that an algorithm requires to solve a problem of size n.
– The best-case behavior of an algorithm is NOT so useful.
• Average-Case Analysis – The average amount of time that an algorithm requires to solve a problem of size n.
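
The sequential search example can be made concrete by counting comparisons. The sketch below assumes the cost of a search is the number of element comparisons and, for the average, that every item in the list is equally likely to be the target; sequential_search is written for this illustration.

```python
def sequential_search(items, target):
    """Return (index, comparisons); index is -1 when the target is absent."""
    comparisons = 0
    for index, item in enumerate(items):
        comparisons += 1
        if item == target:
            return index, comparisons
    return -1, comparisons


if __name__ == "__main__":
    items = [4, 8, 15, 16, 23, 42]
    n = len(items)

    best = sequential_search(items, items[0])[1]      # target is first
    worst = sequential_search(items, items[-1])[1]    # target is last
    average = sum(sequential_search(items, x)[1] for x in items) / n

    print(best, worst, average)                       # 1, n and (n + 1) / 2
```

An unsuccessful search also costs n comparisons, so the worst case is n whether or not the item is present.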

What is Important?

• An array-based list retrieve operation is O(1); a linked-list-based list retrieve operation is O(n).
• But insert and delete operations are much easier on a linked-list-based list implementation.
• When selecting the implementation of an Abstract Data Type (ADT), we have to consider how frequently particular ADT operations occur in a given application.
• If the problem size is always small, we can probably ignore the algorithm’s efficiency.
– In this case, we should choose the simplest algorithm.
