Chapter-VI

6.1 INTRODUCTION

This chapter provides the necessary background for edge detection, which is a sub-process of image segmentation. Image segmentation is the process of dividing an image into regions or segments that differ in certain aspects or features such as colour, texture or gray level. Edge detection is an important task in image processing: it is a segmentation technique in which the edges in an image are detected.

Edges are introduced as a set of connected points lying on the boundary

between two regions. The edges represent object boundaries and therefore can be

used in image segmentation to subdivide an image into its basic regions or objects.

The techniques discussed in this chapter provide a general application oriented

framework in which both spatial and frequency domains are analyzed to achieve

proper edge detection. Fundamentally, an edge is a local concept whereas a region

boundary is a more global idea due to its definition. A reasonable definition of edge

requires the ability to measure gray-level transitions in a meaningful way.

Edge pixels are pixels at which the intensity of an image function varies abruptly, and edges are sets of connected edge pixels. Edge detectors are local image processing methods designed to detect edges. In a continuous function, singularities can be characterized easily as discontinuities where the gradient approaches infinity. However, image data is discrete, so edges in an image are often defined as the local maxima of the gradient [Gonzalez & Woods, 2008].

6.2 GOAL OF EDGE DETECTION

The goal of edge detection is to produce a line drawing of a scene from an image of that scene. Some important features that can be extracted from the edges of an image are lines, curves and corners. These features are used by higher level computer vision algorithms.


6.2.1 Causes of intensity changes in images

The various factors that contribute to intensity level changes are:

Geometric events

• Object boundary (discontinuity in depth and/or surface color and texture)

• Surface boundary (discontinuity in surface orientation and/or surface color and texture)

Non-geometric events

• Specularity (direct reflection of light, such as a mirror)

• Shadows (from other objects or from the same object)

• Inter-reflections between objects

6.3 MODELS OF EDGES

There are several ways to model edges. The edge models discussed here are classified according to their intensity profiles.


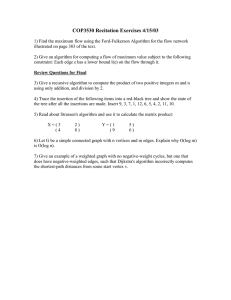

Fig. 6.1. (a) Model of an ideal edge (b) model of a ramp edge


Figure 6.1 (a) shows a step edge, which involves a transition between two intensity levels occurring ideally over a distance of 1 pixel. Step edges occur in images generated by a computer for use in areas such as solid modelling and animation. Figure 6.1 (b) shows a model with a ramp intensity profile, where the slope of the ramp is inversely proportional to the degree of blurring in the edge. In practice, digital images have edges that are blurred and noisy, with the degree of blurring determined principally by the electronic components of the imaging system [Gonzalez & Woods, 2008].

6.4 OVERVIEW OF EDGE DETECTION

An edge is a boundary between two homogeneous regions. The gray level properties of the two regions on either side of an edge are distinct, and each region exhibits some local uniformity or homogeneity. Typical analysis and detection of edges can be done using derivatives, through their magnitude and direction.

• Magnitude of the derivative: measure of the strength/contrast of the edge

• Direction of the derivative vector: edge orientation

An edge is typically extracted by computing the derivative of the image intensity function. Derivatives of a digital function are defined in terms of differences. These differences can be used to approximate both the first order and the second order derivative.

Fig. 6.2 Image of variable intensity and their horizontal intensity profile with its

first and second order derivative.


Figure 6.2 shows an image of variable intensity and the details near an edge: the horizontal intensity profile together with its first and second order derivatives.

6.4.1 First order derivative

The approximation of the first order derivative at a point $x$ of a one-dimensional function $f(x)$ is obtained by expanding the function $f(x + \Delta x)$ into a Taylor series about $x$, letting $\Delta x = 1$, and keeping only the linear terms, from which the digital difference follows. The first order derivative of the function $f(x)$ can be expressed as $\frac{\partial f}{\partial x}$ or as $f'(x)$, and it is the slope of the tangent line to the function at the point $x$.

Computing the first order derivative: the finite difference of a 1D function $f(x)$ is given by

$$\frac{\partial f}{\partial x} = f'(x) = f(x+1) - f(x) \tag{6.1}$$

Computing the first order derivative: the finite differences of a 2D function $f(x,y)$ are given by

$$\frac{\partial f}{\partial x} = f(x+1,y) - f(x,y), \qquad \frac{\partial f}{\partial y} = f(x,y+1) - f(x,y) \tag{6.2}$$

When computing the first order derivative at a location $x$, the value of the function at that point is subtracted from the value at the next point.

6.4.2 Second order derivative

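The second derivative of a function is the derivative of the derivative of that function. Just as the first derivative decides whether the function $f(x)$ is increasing or decreasing, the second order derivative decides whether the first derivative $f'(x)$ is increasing or decreasing.

Computing the second order derivative: the finite difference of a 1D function $f(x)$ is given by

$$\frac{\partial^2 f}{\partial x^2} = f''(x) = f(x+1) + f(x-1) - 2f(x) \tag{6.3}$$

Computing the second order derivative: the finite differences of a 2D function $f(x,y)$ along the $x$ and $y$ directions are given by

$$\frac{\partial^2 f}{\partial x^2} = f(x+1,y) + f(x-1,y) - 2f(x,y) \tag{6.4}$$

$$\frac{\partial^2 f}{\partial y^2} = f(x,y+1) + f(x,y-1) - 2f(x,y) \tag{6.5}$$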
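The finite differences above can be illustrated on a small 1D intensity profile. The following is a minimal sketch in plain Python; the function names and the sample profile are illustrative assumptions, not part of the original work.

```python
def first_diff(f):
    """Forward difference f(x+1) - f(x) for every x that has a successor."""
    return [f[i + 1] - f[i] for i in range(len(f) - 1)]


def second_diff(f):
    """Second difference f(x+1) + f(x-1) - 2 f(x) for every interior x."""
    return [f[i + 1] + f[i - 1] - 2 * f[i] for i in range(1, len(f) - 1)]


# Hypothetical ramp edge between two flat regions.
profile = [5, 5, 5, 4, 3, 2, 1, 1, 1]

d1 = first_diff(profile)    # non-zero along the whole ramp
d2 = second_diff(profile)   # non-zero only at the onset and end of the ramp
print(d1)
print(d2)
```

The first difference stays non-zero along the whole ramp, whereas the second difference responds only where the ramp starts and ends, with opposite signs.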

6.4.3 Analysis of first and second order derivatives

First order derivatives produce thicker edges, whereas second order derivatives have a stronger response to fine details such as thin lines, isolated points and noise, and produce a double edge response. In addition, the second order derivative provides a zero crossing (a transition from positive to negative or vice versa) and is therefore considered a good edge detector.

The second order derivative has three additional properties around an edge:

• It produces two values for every edge in an image.

• Its zero crossing can be used for locating the centers of thick edges.

• It is more sensitive to noise.

Computation of the second order derivative requires both the previous and the successive points of the function, and it is capable of detecting discontinuities such as points, lines and edges. The segmentation of an image along the discontinuities is achieved by running a mask through the image. The generalized $3 \times 3$ mask in the spatial domain is given in Table 6.1.

Table 6.1. Mask.

$$\begin{array}{|c|c|c|} \hline W_1 & W_2 & W_3 \\ \hline W_4 & W_5 & W_6 \\ \hline W_7 & W_8 & W_9 \\ \hline \end{array}$$

The response of the mask is given by

$$R = W_1 z_1 + W_2 z_2 + \dots + W_9 z_9 = \sum_{k=1}^{9} W_k z_k \tag{6.6}$$

where $z_k$ is the intensity of the pixel whose spatial location corresponds to the location of the $k$-th coefficient in the mask.
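The mask response can be sketched as a sum of products over a 3 x 3 neighbourhood. The example below is a minimal illustration in plain Python; the isolated-point mask and the test image are assumptions chosen for the demonstration and are not taken from the original text.

```python
def mask_response(image, mask, row, col):
    """Sum of mask coefficient times pixel intensity over the 3x3
    neighbourhood centred on (row, col)."""
    total = 0
    for i in range(3):
        for j in range(3):
            total += mask[i][j] * image[row + i - 1][col + j - 1]
    return total


# Illustrative isolated-point mask (coefficients sum to zero).
point_mask = [[-1, -1, -1],
              [-1,  8, -1],
              [-1, -1, -1]]

# Flat background with one bright pixel at (1, 1).
image = [[1, 1, 1, 1],
         [1, 9, 1, 1],
         [1, 1, 1, 1]]

r_point = mask_response(image, point_mask, 1, 1)  # centred on the bright pixel
r_next = mask_response(image, point_mask, 1, 2)   # centred on its right neighbour
print(r_point, r_next)
```

Because the coefficients sum to zero, the response vanishes in uniform regions and is large only where the neighbourhood contains a discontinuity.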

6.5 TECHNIQUES OF EDGE DETECTION

Edges characterize object boundaries and are therefore useful for segmentation, registration and object identification in image analysis. Edge detection techniques fall into two categories:

(i) Spatial domain techniques

(ii) Transform domain techniques

Each of these techniques is covered in detail in the following two subsections.

6.5.1 Spatial Domain Techniques of Edge Detection

This section focuses on spatial domain techniques of edge detection, where the magnitude of the gradient determines the edges. Due to the wealth of information associated with edges, edge detection is an important task for many applications related to computer vision and pattern recognition. The features of edge detection are edge strength and edge orientation. Edge strength is the gradient magnitude at the edge pixel, and edge orientation provides the angle of the gradient. The magnitude of the gradient is zero in uniform regions of an image and takes a considerable value where the intensity level varies.

The edge detectors in the spatial domain are categorized into gradient based detectors, which determine edges based on the first order derivative, and Laplacian based detectors, which locate edges based on the second order derivative. The gradient is defined as a two dimensional column vector, and the magnitude of the gradient vector is often referred to simply as the gradient. The strength of the response of a derivative operator is proportional to the degree of discontinuity of an image at the point at which the operator is applied. Thus, image differentiation enhances edges and other discontinuities such as noise and deemphasizes areas with slowly varying gray-level values. It is observed that the first- and second-order derivatives respond to noise, isolated points and lines as well as to the edges of an object. For an image $f(x,y)$ the gradient and its magnitude are given by

$$\nabla f = \begin{bmatrix} G_x \\ G_y \end{bmatrix} = \begin{bmatrix} \frac{\partial f}{\partial x} \\ \frac{\partial f}{\partial y} \end{bmatrix} \tag{6.7}$$

$$mag(\nabla f) = \left[ G_x^2 + G_y^2 \right]^{1/2} \tag{6.8}$$

$$mag(\nabla f) = \left[ \left( \frac{\partial f}{\partial x} \right)^2 + \left( \frac{\partial f}{\partial y} \right)^2 \right]^{1/2} \tag{6.9}$$

By approximating the squares and square root by absolute values, $mag(\nabla f) \approx |G_x| + |G_y|$. The direction of the gradient vector is given by $\alpha(x,y) = \tan^{-1}\left[ G_y / G_x \right]$. The direction of an edge at $(x,y)$ is perpendicular to the direction of the gradient vector at the point. The first order derivatives produce thick edges and enhance prominent details. The edges are obtained by applying an appropriate filter mask to the $3 \times 3$ neighbourhood of an image, which is given by

$$\begin{bmatrix} z_1 & z_2 & z_3 \\ z_4 & z_5 & z_6 \\ z_7 & z_8 & z_9 \end{bmatrix}$$

The center point $z_5$ denotes $f(x,y)$, $z_1$ indicates $f(x-1,y-1)$ and so on. The gradient operators are represented by masks $H_1$ and $H_2$, which measure the gradient of an image $f(x,y)$ in two orthogonal directions. The bidirectional gradients of an image are represented by inner products of the image with the masks $H_1$ and $H_2$.

6.5.1.1 Roberts Operator

This operator was one of the earliest edge detectors and was proposed by Roberts. As a differential operator, the idea behind the Roberts cross operator is to approximate the gradient of an image through discrete differentiation, which is obtained by computing the sum of the squares of the differences between diagonally adjacent pixels. To perform edge detection through the Roberts operator, the original image is convolved with the following two kernels:

$$\begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix} \quad \& \quad \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}$$
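The Roberts cross computation can be sketched directly as differences between diagonally adjacent pixels. This is a minimal illustration in plain Python; it applies the kernels [[1, 0], [0, -1]] and [[0, 1], [-1, 0]] as sliding sums of products (without kernel flipping, which affects only the sign of the responses and not the magnitude), and the function name and test image are assumptions.

```python
import math


def roberts(image):
    """Roberts cross gradient magnitude.

    The output has one row and one column fewer than the input because
    each value is computed from a 2x2 block of pixels.
    """
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows - 1):
        line = []
        for c in range(cols - 1):
            gx = image[r][c] - image[r + 1][c + 1]   # kernel [[1, 0], [0, -1]]
            gy = image[r][c + 1] - image[r + 1][c]   # kernel [[0, 1], [-1, 0]]
            line.append(math.sqrt(gx * gx + gy * gy))
        out.append(line)
    return out


# Hypothetical vertical step edge between columns 1 and 2.
step = [[0, 0, 10, 10],
        [0, 0, 10, 10],
        [0, 0, 10, 10]]

edges = roberts(step)
for line in edges:
    print([round(v, 2) for v in line])
```

Only the 2 x 2 blocks that straddle the step produce a non-zero response.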

6.5.1.2 Sobel Operator

At each point in an image, the result of the Sobel operator is either the corresponding gradient vector or the norm of this vector. The Sobel operator is based on convolving the image with an integer valued filter in the horizontal and vertical directions and is inexpensive in terms of computations. This operator performs a 2-D spatial gradient measurement on an image and so emphasizes regions of high spatial frequency that correspond to edges. Typically it is used to find the approximate absolute gradient magnitude at each point in an input gray scale image. The magnitude of the Sobel gradient operator is given by $|G| = \sqrt{G_x^2 + G_y^2}$, where

$$G_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix} \quad \& \quad G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix}$$

The magnitude is represented by

$$|G| = \left[ G_x^2 + G_y^2 \right]^{1/2} \tag{6.10}$$

By approximation

$$|G| \approx |G_x| + |G_y| \tag{6.11}$$
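The Sobel response at a single pixel, together with the exact magnitude, its absolute-value approximation and the gradient direction, can be sketched as follows. This is a minimal illustration in plain Python; the helper names and the test image are assumptions, and the masks are applied as sums of products without kernel flipping.

```python
import math

SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def apply_mask(image, mask, r, c):
    """Sum of products of a 3x3 mask with the neighbourhood of (r, c)."""
    return sum(mask[i][j] * image[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))


def sobel_at(image, r, c):
    """Return (gx, gy, exact magnitude, |gx| + |gy|, direction in degrees)."""
    gx = apply_mask(image, SOBEL_X, r, c)
    gy = apply_mask(image, SOBEL_Y, r, c)
    magnitude = math.sqrt(gx * gx + gy * gy)
    approx = abs(gx) + abs(gy)
    direction = math.degrees(math.atan2(gy, gx))
    return gx, gy, magnitude, approx, direction


# Hypothetical vertical step edge between columns 1 and 2.
step = [[0, 0, 10, 10],
        [0, 0, 10, 10],
        [0, 0, 10, 10]]

gx, gy, magnitude, approx, direction = sobel_at(step, 1, 1)
print(gx, gy, magnitude, approx, direction)
```

For a purely horizontal or vertical gradient the approximation equals the exact magnitude; for oblique gradients it overestimates it by at most a factor of $\sqrt{2}$.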

6.5.1.3 Prewitt Operator

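The Prewitt operator is based on convolving the image with a small integer valued filter in the horizontal and vertical directions. Thus, the gradient approximation which it produces is relatively crude, in particular for high frequency variations in the image.

The magnitude of the Prewitt gradient operator is given by

$$|G| = \sqrt{G_x^2 + G_y^2} \tag{6.12}$$

where

$$G_x = \begin{bmatrix} -1 & 0 & 1 \\ -1 & 0 & 1 \\ -1 & 0 & 1 \end{bmatrix} \quad \& \quad G_y = \begin{bmatrix} -1 & -1 & -1 \\ 0 & 0 & 0 \\ 1 & 1 & 1 \end{bmatrix}$$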

6.5.1.4 Canny Operator

The drawback of the above methods is that a fixed operator cannot be used to obtain an optimal result. Canny developed a computational approach in which the optimal detector is approximated by the first order derivative of a Gaussian [Canny, 1986]. His analysis is based on step edges corrupted by additive Gaussian noise, and the image is smoothed by Gaussian convolution. Canny proved that the first order derivative of the Gaussian closely approximates the operator that optimizes the product of signal-to-noise ratio and localization. This edge detection technique produces edges from two aspects, edge gradient direction and strength, with good SNR and edge localization performance [Jun Li & Sheng Ding, 2011]. Thus the gradient components $f_x$ and $f_y$ of an image $f(x,y)$ are computed using a Gaussian function $G(x,y)$ with standard deviation $\sigma$:

$$f_x = G_x * f \tag{6.13}$$

$$f_y = G_y * f \tag{6.14}$$

$G_x$ is the derivative of $G$ with respect to $x$: $G_x = -\frac{x}{\sigma^2}\, G(x,y)$

$G_y$ is the derivative of $G$ with respect to $y$: $G_y = -\frac{y}{\sigma^2}\, G(x,y)$

The magnitude of the gradient is computed by $M(x,y) = \sqrt{f_x^2 + f_y^2}$, and this is followed by non-maxima suppression and hysteresis thresholding. Due to multiple responses, the edge magnitude $M(x,y)$ may contain wide ridges around the local maxima; non-maxima suppression removes the non-maxima pixels while preserving the connectivity of the contours. The algorithm combines the derivative and smoothing properties of the Gaussian function in an optimal way to obtain good edges. Hysteresis thresholding receives the output of non-maxima suppression and identifies weak, strong and moderate pixels based on thresholding. The performance of the Canny algorithm relies on parameters such as the standard deviation of the Gaussian filter and its threshold values.

The Canny algorithm uses an optimal edge detector based on a set of criteria: finding the most edges by minimizing the error rate, marking edges as closely as possible to the actual edges to maximize localization, and marking edges only once when a single edge exists, for minimal response. According to Canny, the optimal filter that meets all three criteria can be efficiently approximated using the first derivative of a Gaussian function.

1) The first stage involves smoothing the image by convolving it with a Gaussian filter.

2) This is followed by finding the gradient of the image by convolving the smoothed image with the derivative of the Gaussian in both the vertical and horizontal directions. Both the Gaussian mask and its derivative are separable, allowing the 2-D convolution operation to be simplified.

3) The non-maximal suppression stage finds the local maxima in the direction of the gradient and suppresses all others, minimizing false edges. The local maxima are found by comparing each pixel with its neighbours along the direction of the gradient. This helps to maintain single-pixel-thin edges before the final thresholding stage [Shirvakshan & Chandrasekar, 2012].

4) Instead of using a single static threshold value for the entire image, the Canny algorithm introduced hysteresis thresholding, which has some adaptivity to the local content of the image. There are two threshold levels, $T_{high}$ and $T_{low}$, where $T_{high} > T_{low}$. Pixels with values above $T_{high}$ are immediately classified as edges. By tracing the edge contour, neighboring pixels with gradient magnitude values less than $T_{high}$ can still be marked as edges as long as they are above $T_{low}$. This process alleviates problems associated with edge discontinuities by identifying strong edges and preserving the relevant weak edges, in addition to maintaining some level of noise suppression. While the results are desirable, the hysteresis stage slows the overall algorithm down considerably.
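Stages 3 and 4 above can be sketched as follows. This is a simplified illustration in plain Python and not a complete Canny implementation: it assumes the gradient components and magnitude have already been computed, quantizes the gradient direction into four sectors, and uses 8-connectivity for contour tracing. All function names and test arrays are assumptions.

```python
import math


def non_max_suppression(mag, gx, gy):
    """Keep a pixel only if it is a local maximum along the gradient direction."""
    rows, cols = len(mag), len(mag[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            angle = math.degrees(math.atan2(gy[r][c], gx[r][c])) % 180
            if angle < 22.5 or angle >= 157.5:   # gradient roughly horizontal
                dr, dc = 0, 1
            elif angle < 67.5:                   # gradient roughly at 45 degrees
                dr, dc = 1, 1
            elif angle < 112.5:                  # gradient roughly vertical
                dr, dc = 1, 0
            else:                                # gradient roughly at 135 degrees
                dr, dc = 1, -1
            m = mag[r][c]
            if m >= mag[r + dr][c + dc] and m >= mag[r - dr][c - dc]:
                out[r][c] = m
    return out


def hysteresis(nms, t_low, t_high):
    """Mark pixels above t_high, then grow along neighbours above t_low."""
    rows, cols = len(nms), len(nms[0])
    edges = [[False] * cols for _ in range(rows)]
    stack = [(r, c) for r in range(rows) for c in range(cols)
             if nms[r][c] > t_high]
    for r, c in stack:
        edges[r][c] = True
    while stack:
        r, c = stack.pop()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                if (0 <= rr < rows and 0 <= cc < cols
                        and not edges[rr][cc] and nms[rr][cc] > t_low):
                    edges[rr][cc] = True
                    stack.append((rr, cc))
    return edges


# A wide ridge in the magnitude with a horizontal gradient everywhere.
mag = [[1, 3, 5, 3, 1] for _ in range(3)]
gx = [[1] * 5 for _ in range(3)]
gy = [[0] * 5 for _ in range(3)]
thin = non_max_suppression(mag, gx, gy)

# One strong pixel, two connected weak pixels and one isolated weak pixel.
nms = [[0, 0, 0, 0, 0],
       [9, 5, 4, 0, 4],
       [0, 0, 0, 0, 0]]
edges = hysteresis(nms, t_low=3, t_high=8)
print(thin[1])
print(edges[1])
```

In the second example the weak pixels connected to the strong pixel are kept, while the isolated weak pixel is discarded, which is the behaviour that limits streaking.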

6.5.1.5 Laplacian Operator

The Laplacian method searches for zero crossings in the second derivative of the image to find edges. An edge has the one-dimensional shape of a ramp, and calculating the derivative of an image can highlight its location [Raman Maini & Himanshu Aggarwal, 2010]. The Laplacian based edge detection for an image $f(x,y)$ is based on second order derivatives and is given by

$$\nabla^2 f = \frac{\partial^2 f}{\partial x^2} + \frac{\partial^2 f}{\partial y^2} \tag{6.15}$$

The second order derivative is more aggressive than first order derivative in

enhancing sharp changes and has a stronger response to fine details. This operator

enhances fine details and is unacceptably sensitive to noise; the magnitude of the

Laplacian produces double edges but fails to detect edge direction. Edges are formed

from pixels with derivative values that exceed a preset threshold. The strength of the

response of a derivative operator is proportional to the degree of discontinuity of an

image at the point at which the operator is applied. Thus, image differentiation

enhances edges and other discontinuities such as noise and deemphasizes areas with

slowly varying gray-level values.
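The double response and the zero crossing of the Laplacian can be seen on a small step edge. The sketch below is a minimal illustration in plain Python that uses the 4-neighbour discrete Laplacian obtained by adding the second differences along the two axes; border pixels are left at zero, and the function names and test image are assumptions.

```python
def laplacian(image):
    """4-neighbour discrete Laplacian; border pixels are left at zero."""
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            out[r][c] = (image[r + 1][c] + image[r - 1][c]
                         + image[r][c + 1] + image[r][c - 1]
                         - 4 * image[r][c])
    return out


def zero_crossings(values):
    """Indices i where the sign changes between values[i] and values[i + 1]."""
    return [i for i in range(len(values) - 1)
            if values[i] * values[i + 1] < 0]


# Hypothetical vertical step edge between columns 2 and 3.
step = [[0, 0, 0, 10, 10, 10],
        [0, 0, 0, 10, 10, 10],
        [0, 0, 0, 10, 10, 10]]

lap = laplacian(step)
crossings = zero_crossings(lap[1])
print(lap[1])
print(crossings)
```

The Laplacian is positive on the dark side of the step and negative on the bright side, and the edge is located at the sign change between the two responses.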

6.5.1.6 Merits and Demerits of classical operators

In summary, comparing the responses of first and second-order derivatives, the following conclusions are arrived at: (1) First-order derivatives generally produce thicker edges in an image and have a stronger response to a gray-level step. (2) Second-order derivatives have a stronger response to fine detail, such as thin lines and isolated points, and produce a double response at step changes in gray level. In most applications, the second order derivative is better suited than the first order derivative for image enhancement because of its ability to enhance fine detail due to zero-crossing. The strategy is to utilize the Laplacian to highlight fine detail, and the gradient to enhance prominent edges. The performance of these partial derivative operators is shown in Figure 6.3, which presents the results for an image of a narrowed artery nerve (a defect in the heart).

6.5.2 Transform domain techniques of edge detection

Transform domain techniques are promising because edge information is preserved, and they help in shape and contour tracking problems. The transform domain techniques of edge detection were developed to work for noisy images. Although the maxima of the modulus of the DWT are a good approximation of the edges in an image, even in the absence of noise there are many false edges. Therefore a criterion must be determined to separate the real edges from the false edges. A threshold on the intensity of the modulus maxima is a good criterion and is described in the following section. The streaking effect is the breaking up of an edge contour caused by the operator output fluctuating above and below the threshold along the length of the contour.

6.5.2.1 Discrete Wavelet Transform approach

It is difficult to extract meaningful boundaries directly from gray level image data under noisy conditions when the shapes are complex. Better results have been achieved by first transforming the image into the frequency domain, detecting discontinuities in intensity levels, and then grouping these edges to obtain more elaborate boundaries. Though the Fourier Transform (FT) was the pioneer in the transform domain, the drawbacks of a purely frequency domain representation led to the development of the wavelet, a powerful tool for simultaneous time-frequency representation. Wavelet theory is therefore suitable for local analysis and for singularity and edge detection, and it has been proved that the maxima of the wavelet transform modulus can detect the location of irregular structures. [Mallat & Hwang, 1992] refined the wavelet edge detector so that the scale of the wavelet can be adapted to the scale of the image and to its noise level, which minimizes the effect of noise on edge detection. The edges of greater significance are kept intact by the wavelet transform, and insignificant edges introduced by noise are removed. A wavelet based edge detection method can detect edges at a series of integer scales in an image. This can be useful when the image is noisy, or when edges of certain detail or texture are to be neglected.


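The wavelet version of the edge detection is implemented by smoothing the surface with a convolution kernel, denoted as $\theta(x)$, that is dilated. This is computed with two wavelets that are partial derivatives of $\theta$:

$$\psi^1 = -\frac{\partial \theta}{\partial x_1}, \qquad \psi^2 = -\frac{\partial \theta}{\partial x_2}$$

To limit overhead, the scale varies along the dyadic sequence $\{2^j\}$ for $j \in \mathbb{Z}$. For $k = 1, 2$,

$$\psi^k_{2^j}(x_1, x_2) = \frac{1}{2^j}\, \psi^k\!\left( \frac{x_1}{2^j}, \frac{x_2}{2^j} \right) \tag{6.16}$$

Let us denote for convenience $\bar{\psi}^k_{2^j}(x) = \psi^k_{2^j}(-x)$ and $\bar{\theta}_{2^j}(x) = \theta_{2^j}(-x)$, with $\theta_{2^j}(x) = 2^{-j}\, \theta(2^{-j} x)$. The dyadic wavelet transform of $f(x_1, x_2)$ at $u = (u_1, u_2)$ is represented as

$$W^k f(u, 2^j) = \langle f(x), \psi^k_{2^j}(x - u) \rangle = f \star \bar{\psi}^k_{2^j}(u)$$

The Discrete Wavelet Transform components are proportional to the coordinates of the gradient vector of $f$ smoothed by $\bar{\theta}_{2^j}$:

$$\begin{pmatrix} W^1 f(u, 2^j) \\ W^2 f(u, 2^j) \end{pmatrix} = 2^j\, \vec{\nabla} \left( f \star \bar{\theta}_{2^j} \right)(u)$$

The modulus of the gradient is proportional to the wavelet transform modulus

$$M f(u, 2^j) = \sqrt{ |W^1 f(u, 2^j)|^2 + |W^2 f(u, 2^j)|^2 } \tag{6.17}$$

The magnitude of the wavelet transform modulus at the corresponding locations indicates the strength of the edges caused by sharp transitions. The angle of the gradient is given by

$$A f(u, 2^j) = \tan^{-1}\!\left( \frac{W^2 f(u, 2^j)}{W^1 f(u, 2^j)} \right) \tag{6.18}$$

An edge point at scale $2^j$ is a point $v$ such that $M f(u, 2^j)$ is locally maximum at $u = v$, and these points are called Wavelet Transform Modulus Maxima (WTMM). Thus the scale space support of these modulus maxima corresponds to multiscale edge points [Mallat, 2009].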
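The behaviour of the modulus maxima across dyadic scales can be sketched in one dimension. The example below is a simplified illustration in plain Python and not the implementation used for the results in this chapter: it assumes a Gaussian smoothing kernel whose standard deviation equals the scale, approximates the derivative by a forward difference, and replicates border samples. The test signal (a step edge plus a one-sample spike standing in for noise), the threshold and all names are assumptions.

```python
import math


def gaussian_kernel(sigma):
    """Normalized, truncated Gaussian weights with radius int(3 * sigma) + 1."""
    radius = int(3 * sigma) + 1
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(signal, sigma):
    """Smooth a 1D signal with the Gaussian kernel, replicating the borders."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def wavelet_modulus(signal, scale):
    """scale * |forward difference of the smoothed signal|, as a 1D stand-in
    for the wavelet transform modulus at that scale."""
    s = smooth(signal, scale)
    return [scale * abs(s[i + 1] - s[i]) for i in range(len(s) - 1)] + [0.0]


def modulus_maxima(modulus, threshold):
    """Positions of local maxima of the modulus that exceed the threshold."""
    return [i for i in range(1, len(modulus) - 1)
            if modulus[i] > threshold
            and modulus[i] >= modulus[i - 1]
            and modulus[i] > modulus[i + 1]]


# Step edge of height 10 starting at sample 32, plus a spike of height 3.
signal = [0.0] * 32 + [10.0] * 32
signal[10] = 3.0

maxima = {scale: modulus_maxima(wavelet_modulus(signal, scale), 0.5)
          for scale in (1, 2, 4)}
print(maxima)
```

In this sketch the maximum produced by the step keeps roughly the same amplitude at every scale, whereas the maxima produced by the spike decay as the scale grows and fall below the threshold at the coarsest scale.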


Edges of higher significance are more likely to be kept by the wavelet transform across scales. Edges of lower significance are more likely to disappear when the scale increases. A wavelet filter of large scale is more effective for removing noise, but at the same time increases the uncertainty of the location of edges. Wavelet filters of small scale preserve the exact location of edges, but cannot distinguish between noise and real edges. It is therefore necessary to use a larger scale wavelet at positions where the wavelet transform decreases rapidly across scales, to remove the effect of noise, and a smaller scale wavelet at positions where the wavelet transform decreases slowly across scales, to preserve the precise position of the edges. Although the maxima of the modulus of the DWT are a good approximation of the edges in an image, even in the absence of noise there are many false edges, as can be seen in Figure 4.2 (b). Therefore, a threshold on the intensity of the maximum modulus serves as a criterion to separate the real edges from the false edges, and the edges that are retained preserve their characteristics better than in the spatial domain.

6.5.2.2 Fractional Wavelet Transform approach

Unser. M & Blu. T introduced a new family of wavelets based on B-Splines. Singularities and irregular structures carry useful information in two dimensional signals, and the conventional edge detection techniques using gradient operators work well for truly smooth images but not for noisy images. FrWT has all the features of WT; it represents the signal in the fractional domain and projects the data onto the time-fractional-frequency plane.

The FrWT of a 2D function is given by equation (6.19) (the expression is not legible in the source), where the parameters in equation (6.19) are the dilation and translation parameters along the $x$ and $y$ directions.

It has been recognized by several researchers that there exists a strong connection between wavelets and differential operators [Jun Li, 2003]. Transient features such as discontinuities are characterized by the wavelet coefficients in their neighborhood, in the same way as a derivative acts locally. One of the primary reasons for the success of FrWT in edge detection applications is that the wavelets can be differentiated simply by taking finite differences. The Wavelet Transform Modulus Maxima (WTMM) developed by Mallat carry the properties of sharp signal transitions and singularities, whereas FrWT acts as a multiscale differential operator. The derivative-like behavior of FrWT provides promising results when compared to the DWT approach.

The new family of the WT is constructed using linear combinations of the integer shifts of the one-sided power functions:

$$\beta_+^{\alpha}(x) = \frac{1}{\Gamma(\alpha+1)} \sum_{k=0}^{\infty} (-1)^k \binom{\alpha+1}{k} (x-k)_+^{\alpha} \tag{6.20}$$

where $\alpha$ is the fractional degree and $\Gamma$ is the gamma function. One of the primary reasons for the success of FrWT is that the wavelets can be differentiated at fractional order by taking finite differences. This is in contrast with the classical wavelets, whose differentiation order is constrained to be an integer. This property is well suited for image edge detection.

The fractional derivative of order $s$ can be defined in the Fourier domain as

$$\widehat{D^{s} f}(\omega) = (j\omega)^{s}\, \hat{f}(\omega) \tag{6.21}$$

where $\hat{f}(\omega) = \int f(x)\, e^{-j\omega x}\, dx$ denotes the Fourier Transform of $f(x)$ [Unser. M & Blu. T, 2003]. Singularity detection can be carried out by finding the local maxima of the FrWT, which is clearly evident in Figure 6.5 (c). The magnitude of the wavelet transform modulus at the corresponding locations indicates the strength of the edges caused by sharp transitions.

6.5.2.3 Steerable Wavelet Transform approach

An appropriate edge detection technique, one that captures the fine edges of an image without detecting false edges, is necessary for biomedical imagery. Machine vision and many image processing applications require oriented filters. It is often required to apply the same set of filters rotated to different angles and orientations under adaptive control. [Freeman, 1991] addressed the need to design a filter whose response is a function of orientation and named it steerable. The term 'steerable' (rotated) is used to describe a separate category of filters in which a filter of arbitrary orientation is synthesized as a linear combination of a set of basis kernels. These filters provide components at each scale and orientation separately, and their non-aliased subbands are good for texture and feature analysis.

The steerable transform of a two dimensional function is written as a linear sum of rotated versions of itself and is given by the expression

$$f^{\theta}(x,y) = \sum_{j=1}^{n} k_j(\theta)\, f^{\theta_j}(x,y) \tag{6.22}$$

where $k_j(\theta)$ represents the interpolation functions and $n$ is the number of terms required for the summation. Any two filters are said to be in quadrature if they possess the same frequency response and differ in phase by 90° (Hilbert Transform). The design of a quadrature pair of steerable filters is given by the frequency response of the second order derivative of a Gaussian function, $G_2$, and its Hilbert Transform pair, $H_2$. These pairs pave the way for analyzing the spectral strength of signals independent of phase. We design a steerable basis set for the second derivative of a Gaussian, $f(x,y) = G_2^{0°} = (4x^2 - 2)\, e^{-(x^2+y^2)}$. This is the product of an even parity polynomial and a radially symmetric Gaussian function.

For edge detection the quadrature pair $G_2$ and $H_2$ is utilized. The squared magnitude of the quadrature pair filter response steered everywhere in the direction of dominant orientation $\theta_d$ is given by

$$E_2(\theta_d) = \left[ G_2^{\theta_d} \right]^2 + \left[ H_2^{\theta_d} \right]^2 \tag{6.23}$$

A given point $(x,y)$ is an edge point if $E_2(\theta_d)$ is at a local maximum in the direction perpendicular to the local orientation $\theta_d$. Steering $G_2$ and $H_2$ along the dominant orientation gives the phase $\varphi$ of edge points:

$$\varphi = \arg\left[ G_2^{\theta_d}, H_2^{\theta_d} \right] \tag{6.24}$$
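The steering property can be checked numerically for the second derivative of a Gaussian. The sketch below, in plain Python, is an illustration under stated assumptions rather than the filter design used in this chapter: instead of rotated copies of $G_2$, it takes the three second partial derivatives of $e^{-(x^2+y^2)}$ as the basis, for which the interpolation functions are $\cos^2\theta$, $2\cos\theta\sin\theta$ and $\sin^2\theta$. All function names are assumptions.

```python
import math


def g2_basis(x, y):
    """Second partial derivatives (g_xx, g_xy, g_yy) of exp(-(x^2 + y^2))."""
    g = math.exp(-(x * x + y * y))
    return (4 * x * x - 2) * g, 4 * x * y * g, (4 * y * y - 2) * g


def g2_steered(x, y, theta):
    """G2 at orientation theta, synthesized as a linear combination of the
    three fixed basis kernels."""
    c, s = math.cos(theta), math.sin(theta)
    gxx, gxy, gyy = g2_basis(x, y)
    return c * c * gxx + 2 * c * s * gxy + s * s * gyy


def g2_rotated(x, y, theta):
    """G2 at orientation theta, obtained by evaluating (4x^2 - 2) exp(-(x^2 + y^2))
    in coordinates rotated by theta."""
    c, s = math.cos(theta), math.sin(theta)
    xr = c * x + s * y
    yr = -s * x + c * y
    return (4 * xr * xr - 2) * math.exp(-(xr * xr + yr * yr))


theta = math.radians(30)
max_error = max(abs(g2_steered(x / 4, y / 4, theta) - g2_rotated(x / 4, y / 4, theta))
                for x in range(-8, 9) for y in range(-8, 9))
print(max_error)
```

The two evaluations agree up to rounding error, which means that the response of the filter at any orientation can be obtained from the responses of a fixed set of basis filters without re-filtering the image.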

In the steerable pyramid, four band pass filters form a steerable basis kernel set at each level of the pyramid, and these basis functions are derived by dilation, translation and rotation of a single function. The basis filters are oriented at 0°, 45°, 90° and 135°, and the coefficients of a filter at any orientation can be obtained as a linear combination of these basis filters. The original image can be obtained with perfect reconstruction when these basis filters are applied again at each level and the pyramid is collapsed to the original version [Douglas Shy & Pietro Perona, 1994].

The architecture consists of permanent, dedicated basis filters, which convolve the image as it comes in; their outputs are multiplied by gain masks with appropriate interpolation functions at each time and position, and the final summation produces the adaptively steered filter. Steerable filters are useful in various tasks: shape from shading, orientation and phase analysis, edge detection and angularly adaptive filtering [Freeman et. al., 1991]. The focus of this work is to provide an edge detection technique with good visual perception, and steerable filters provide it by avoiding spurious edges caused by noise while detecting real edges. Simulation results demonstrating the edge detection of images are shown in Figure 6.5 (e,f,g), and visual comparison shows that the Steerable Wavelet Transform competes with the other transforms.

6.6 INFLUENCE OF NOISE ON EDGE DETECTION

The principal sources of noise in digital images arise during image

acquisition and/or transmission. The performance of imaging sensors is affected by a

variety of factors, such as environmental conditions during image acquisition and by

the quality of the sensing elements themselves. For instance, in acquiring the images

with a CCD camera, light levels and sensor temperature are major factors affecting

the amount of noise in the resulting image.

Edges in images are susceptible to noise because edge detection algorithms are designed to respond to sharp changes, which can also be caused by noisy pixels. Noise may occur in digital images for a number of reasons. The most commonly studied noises are white noise, salt & pepper noise and speckle noise. To reduce the effects of noise, preprocessing of the image is required. The preprocessing can be performed in two ways: filtering the image with a Gaussian function, or using another smoothing function. The problem with these approaches is that the optimal result may not be obtained by using a fixed operator. Simulations were carried out to study the influence of noise on edge detection, and the performance of the edge detectors is discussed in the following sections.
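The effect of smoothing before differentiation can be illustrated on a 1D profile that contains an impulse (standing in for a salt noise pixel) and a genuine step edge. This is a minimal sketch in plain Python; the three-tap kernel [1, 2, 1] / 4 is an illustrative small approximation of a Gaussian, and the signal and function names are assumptions.

```python
def smooth_121(signal):
    """Smooth with the kernel [1, 2, 1] / 4, replicating the border samples."""
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append((left + 2 * signal[i] + right) / 4.0)
    return out


def max_abs_diff(signal, start, stop):
    """Largest |f(x+1) - f(x)| for x in [start, stop)."""
    return max(abs(signal[i + 1] - signal[i]) for i in range(start, stop))


# Impulse of height 8 at index 3, step edge of height 10 at index 7.
noisy = [0, 0, 0, 8, 0, 0, 0, 10, 10, 10, 10]
smoothed = smooth_121(noisy)

raw_noise = max_abs_diff(noisy, 0, 5)         # response around the impulse
raw_edge = max_abs_diff(noisy, 5, 10)         # response around the step
smooth_noise = max_abs_diff(smoothed, 0, 5)
smooth_edge = max_abs_diff(smoothed, 5, 10)
print(raw_noise, raw_edge, smooth_noise, smooth_edge)
```

Smoothing lowers both responses, but the response to the impulse drops by a larger factor than the response to the step, so a threshold can separate them more easily. The price is a wider and therefore less precisely localized edge response.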


6.7 SIMULATION RESULTS

In a 2-D image signal, intensity is often proportional to scene radiance, so physical edges corresponding to significant variations in reflectance, illumination, orientation and depth of scene surfaces are represented in the image by changes in the intensity function. Edges result from various phenomena, for example when one object hides another, or when there is a shadow on a surface. Noise is unwanted contamination introduced during image acquisition by several factors, such as poor illumination settings and sensor faults. The detection of edges is a challenging task in noisy images. This is demonstrated through numerical results in this section.

6.7.1 Numerical Results of edge detection using spatial domain techniques

The performance of various edge detectors in the spatial domain is validated on biomedical images: a narrowed artery nerve of a human heart and an iris image with cancer tissues, shown in Figure 6.3 and Figure 6.4 respectively. These operators are widely used for their simplicity.

Fig. 6.3: Performance of edge detectors for an image of narrowed artery nerve in spatial domain. Panels: (a) Narrowed artery nerve of a human heart, (b) Prewitt image, (c) Canny image, (d) Laplacian image, (e) Sobel image, (f) Roberts image.


Fig. 6.4: Performance of edge detectors for an iris image with cancer tissues in spatial domain. Panels: (a) Iris image with cancer, (b) Prewitt image, (c) Canny image, (d) Laplacian image, (e) Sobel image, (f) Roberts image.

The Roberts operator exhibits poor performance and the Prewitt and Sobel operators perform moderately, whereas Canny's edge detection algorithm provides better performance but requires more overhead than the Sobel, Prewitt and Roberts operators. With the Canny operator, spurious edges can be avoided by hysteresis thresholding; it detects the edges of an image but fails to detect lines. The advantages of the zero crossing operators (Laplacian) are that they detect edges and their simple orientations, owing to the approximation of the gradient magnitude, and that they have fixed characteristics in all directions. The disadvantage of these operators is their sensitivity to noise: when detecting the edges and orientations of a noisy image, the magnitude of the edges is eventually degraded.

6.7.2 Numerical Results of edge detection using spatial domain techniques with noise

The influences of noise on biomedical images are discussed by taking into

consideration salt and pepper noise.


Fig. 6.5: Performance of gradient edge detectors for image of narrowed artery nerve with noise. Panels: (a) Noisy image, (b) Prewitt image, (c) Canny image, (d) Laplacian image, (e) Sobel image, (f) Roberts image.

Fig. 6.6: Performance of gradient edge detectors of an iris image with cancer tissues influenced by noise. Panels: (a) Noisy image, (b) Prewitt image, (c) Canny image, (d) Laplacian image, (e) Sobel image, (f) Roberts image.


The primary advantage of the classical operators is simplicity, but most of these partial derivative operators are sensitive to noise. Use of these masks results in thick edges or boundaries, in addition to spurious edge pixels due to noise. Increasing noise in an image eventually degrades the magnitude of the edges. A streaking effect, caused by the breaking up of edge contours, can be observed with noise. The major disadvantage is inaccuracy: as the gradient magnitude of the edges decreases, accuracy also decreases, as shown in Figures 6.5 and 6.6. The second disadvantage is that the operation gets diffracted by some of the existing edges in the noisy image. These gradient-based operators produce false edges in and around salt and pepper noise. The Prewitt and Roberts operators fail completely for noisy images. Laplacian and Sobel show moderate performance, and Canny gives much better results.
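The appearance of false edges around impulse noise can be reproduced on a toy example. The sketch below applies the standard 3x3 Sobel masks to a flat region containing a single salt pixel. The function name `sobel_magnitude`, the 7x7 test image and the use of |gx| + |gy| as an approximation of the gradient magnitude are assumptions made for illustration.

```python
def sobel_magnitude(image):
    """Approximate gradient magnitude |gx| + |gy| at interior pixels (borders left at 0)."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    p = image[r + i - 1][c + j - 1]
                    gx += kx[i][j] * p
                    gy += ky[i][j] * p
            out[r][c] = abs(gx) + abs(gy)
    return out

# Flat 7x7 region (gray level 100) with a single "salt" pixel at the centre.
flat = [[100] * 7 for _ in range(7)]
flat[3][3] = 255
response = sobel_magnitude(flat)
false_edges = sum(v > 0 for row in response for v in row)
```

Although the region contains no true edge, the single corrupted pixel produces a nonzero response at each of its eight neighbours, so a fixed threshold on the gradient magnitude would mark a ring of false edge pixels around every impulse.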

6.7.3 Numerical Results of edge detection using transform domain techniques

Fig. 6.7: Performance of gradient edge detectors for an image of narrowed artery nerve in transform domain. Panels: (a) Narrowed artery nerve of a human heart; (b) DWT image; (c) FrWT image; (d) Steerable wavelets; (e) Steerable wavelets with refined features; (f) Adaptively oriented steered filtering.


The tumor cells in the eye do not only supply blood to the photoreceptors (rods and cones) of the retina but also enable the ophthalmologist to treat patients with large tumors. The edge detection techniques help to diagnose whether the cells are benign or malignant; such tumors are generally treated with enucleation, with or without preoperative radiation.

Fig. 6.8: Performance of gradient edge detectors of an iris image with cancer tissues in transform domain. Panels: (a) Cancer affected iris image; (b) DWT image; (c) FrWT image; (d) Steerable wavelets; (e) Steerable wavelets with refined features; (f) Adaptively oriented steered filtering.

As most of the gradient operators fail to detect the edges of a noisy image, the transform domain techniques DWT, FrWT and StWT are considered here. Compared to spatial domain techniques, these transforms extract salient features of the scene with no loss of edge information and do not produce false edges, as observed in Figures 6.7 and 6.8. As natural images contain spots, lines and edges, it is desirable to find an edge detector which responds well to images with noise. FrWT and StWT, which possess a local energy measure, provide peak responses at points of constant phase as a function of spatial frequency, and these correspond to edge points where human observers localize contours [Simina Emerich, 2008]. It is further observed that in the DWT domain edges are well covered visually but textures and fine structures are removed. In FrWT and StWT operated images the textures and fine structures are retained, which clearly shows the superiority of the transform domain. By use of angularly adaptive filtering, the denoising and enhancement of oriented structures can be done simultaneously.
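How wavelet detail coefficients localize an edge from fine to coarse scale can be illustrated with a one-dimensional sketch. The code below uses an unnormalised Haar transform (pairwise averages and differences) as a stand-in; the wavelet, the function names `haar_step` and `haar_details`, the sample row and the threshold are all assumptions for illustration and are not the DWT configuration used for the figures.

```python
def haar_step(signal):
    """One level of an unnormalised Haar transform: pairwise averages and differences."""
    approx = [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]
    detail = [(signal[i] - signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]
    return approx, detail

def haar_details(signal, levels):
    """Detail coefficients ordered from fine (level 1) to coarse."""
    details = []
    approx = list(signal)
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)
    return details

# Assumed image row containing one step edge between samples 2 and 3.
row = [10, 10, 10, 50, 50, 50, 50, 50]
fine, coarse = haar_details(row, levels=2)

# Keep only detail coefficients whose magnitude exceeds a threshold (assumed value).
threshold = 5
edge_pairs = [i for i, d in enumerate(fine) if abs(d) > threshold]
```

Only the detail coefficient of the sample pair that straddles the step is large at the fine level, and the same edge reappears with a smaller coefficient at the coarser level. In this decimated form, a step that falls exactly between two pairs gives no fine-level response and shows up only at a coarser level.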

6.7.4 Numerical results of edge detection using transform domain techniques with noise

The transform domain techniques outperform the gradient operators in a noisy environment, as is evident in Figure 6.9.

Fig. 6.9: Performance of gradient edge detectors for an image of narrowed artery nerve with noise. Panels: (a) Narrowed artery nerve with Salt & Pepper noise; (b) DWT image; (c) FrWT image; (d) Steerable wavelets; (e) Steerable wavelets with refined features; (f) Adaptively oriented steered filtering.


Fig. 6.10: Performance of gradient edge detectors of an iris image with cancer tissues influenced by noise in transform domain. Panels: (a) Cancer affected iris image with Salt & Pepper noise; (b) DWT image; (c) FrWT image; (d) Steerable wavelets; (e) Steerable wavelets with refined features; (f) Adaptively oriented steered filtering.

In the transform domain the edges are not affected by the streaking effect due to noise, which is essential in biomedical applications. The steerable wavelet based edge detector, which is adaptive in nature, is used to eliminate streaking of edge contours, as is evident in Fig. 6.10. The physician can vary the scale based on the level of detail required, view the delicate details of the artery nerve and of the cancer tissues in the eye, and arrive at a suitable course for subsequent treatment. This simplifies decision making and makes it easier for the physician to diagnose the most sensitive issues related to heart and eye diseases.
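The orientation adaptivity of a steerable detector rests on the fact that the response at an arbitrary angle can be synthesised from a small set of basis filter responses. The sketch below assumes first-derivative-of-Gaussian basis filters, for which the response at angle theta is cos(theta) times the horizontal response plus sin(theta) times the vertical response. The basis choice, the function names `steer` and `dominant_orientation`, and the sample responses are assumptions for illustration, not the filters used in this work.

```python
import math

def steer(gx, gy, theta):
    """Response of a first-derivative filter rotated to angle theta (radians)."""
    return math.cos(theta) * gx + math.sin(theta) * gy

def dominant_orientation(gx, gy):
    """Angle at which the steered response is largest, and that largest response."""
    return math.atan2(gy, gx), math.hypot(gx, gy)

# Hypothetical basis-filter responses at one pixel
# (horizontal and vertical derivative outputs).
gx, gy = 3.0, 4.0
theta, strength = dominant_orientation(gx, gy)

# Steering to the dominant orientation recovers the full edge strength,
# while the perpendicular direction gives a response near zero.
along = steer(gx, gy, theta)
across = steer(gx, gy, theta + math.pi / 2)
```

Because only the two basis responses need to be computed per pixel, the filter can be re-oriented at each location without further convolutions, which is what makes adaptively oriented filtering practical.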


6.8 SUMMARY

Various edge detection techniques are compared and their performance under noisy conditions is evaluated. The classical operators are simple and detect edges and their orientations, but they are sensitive to noise and inaccurate. In this chapter, different approaches to edge detection, namely gradient-based, Laplacian-based and transform domain based techniques, are presented. The techniques are compared through case studies of identifying a narrowed artery nerve of a human heart and an iris image with cancer cells. The former provides the physician with information for deciding whether to perform Coronary Artery Block Surgery or stent placement; the latter assists in categorizing whether benign or malignant tissues are present and in deciding whether to treat with enucleation (removal of the eye), with or without preoperative radiation.

Gradient-based algorithms have a major drawback in that they are sensitive to noise. The dimension of the kernel filter in the spatial domain and its coefficients are static and cannot be adapted to a given image. It has been observed that spatial domain operators such as Canny, Laplacian, Sobel, Prewitt and Roberts exhibit poor performance with noise. Though Laplacian performs better for some features, it suffers from mismapping of edges. The edges are not continuous, and this so-called streaking effect is severe in noisy images.

The performance of edge detection techniques based on DWT, FrWT and StWT was discussed. Fine to coarse information related to edge detection can be obtained through DWT based edge detection, which provides a band-passed representation with threshold limits. Computational tasks in FrWT such as differentiation, integration and search for extrema are quite simple on the transformed coefficients. The noise components are optimally separated and hence FrWT provides perfect localization. The estimated derivatives are helpful in edge detection and the edge points are well localized. Thus FrWT is less sensitive to noise and provides robust edge detection with an adjustable scale parameter, which helps the physician to diagnose the severity of the disease with ease.


The steerable filters can measure local orientation, direction, strength and phase at any orientation. They provide sophisticated results because the filters are oriented and their non-aliased subbands yield elegant edge detection; they can also be used for texture and feature analysis of the image. It is further concluded that FrWT and StWT are convenient and promising tools for edge detection analysis in biomedical problems. The streaking effect was examined in the spatial domain and was found to be predominantly suppressed in the FrWT and StWT domains.
