Distributed Deep Reinforcement Learning with Wideband Sensing for Dynamic Spectrum Access

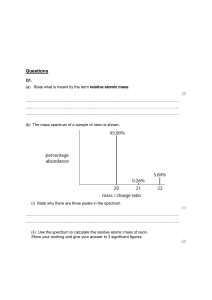

Umuralp Kaytaz∗, Seyhan Ucar‡, Baris Akgun† and Sinem Coleri∗

Department of Electrical and Electronics Engineering, Koc University, Istanbul, Turkey∗

Department of Computer Engineering, Koc University, Istanbul, Turkey†

InfoTech Labs, Toyota Motor North America R&D, Mountain View, CA‡

{ukaytaz@ku.edu.tr, seyhan.ucar@toyota.com, baakgun@ku.edu.tr, scoleri@ku.edu.tr}

Abstract—Dynamic Spectrum Access (DSA) improves spectrum utilization by allowing secondary users (SUs) to opportunistically access temporary idle periods in the primary user (PU) channels. Previous studies on utility-maximizing spectrum access strategies mostly require complete network state information and therefore may not be practical. Model-free reinforcement learning (RL) based methods, such as Q-learning, on the other hand, are promising adaptive solutions that do not require complete network information. In this paper, we address this challenge and propose deep Q-learning originated spectrum access (DQLS) based decentralized and centralized channel selection methods for network utility maximization, namely DEcentralized Spectrum Allocation (DESA) and Centralized Spectrum Allocation (CSA), respectively. CSA uses actions generated by a centralized deep Q-network (DQN), whereas DESA adopts a non-cooperative approach to spectrum decisions. We use extensive simulations to investigate the spectrum utilization of our proposed methods for varying primary and secondary network sizes. Our findings demonstrate that the proposed methods significantly outperform traditional methods, including slotted-Aloha and random assignment, while achieving up to 88% of optimal channel access.

Index Terms—Cognitive radio, dynamic spectrum access, deep reinforcement learning, medium access control (MAC).

I. INTRODUCTION

Cognitive Radio (CR) is a promising technology for emerging wireless communication systems due to its efficient utilization of the frequency bands. CR enhances temporal and spatial efficiency by exploiting temporary idle periods in frequency usage, also known as spectrum holes. Dynamic Spectrum Access (DSA) plays a central role in CR networks by allowing secondary users (SUs) to opportunistically access spectrum holes in the primary user (PU) channels. Network utility maximization during DSA requires efficient frequency selection and minimal interference. Previous studies on DSA-related channel selection strategies mostly require complete network state information and therefore may not be practical [1]–[3]. Model-free reinforcement learning (RL) based methods, such as Q-learning, on the other hand, are promising solutions that do not require complete network information [4].

Deep reinforcement learning (DRL) adopts deep neural network (DNN) architectures to approximate the objective values during learning [5]. High-dimensional spectrum assignment problems, which involve large state and action spaces, can be solved via DRL without any prior knowledge of the network state or environment.

Several works using RL and/or DRL have been proposed for interference avoidance and/or utility maximization in CR networks. DRL-based frequency allocation has been investigated for maximizing the number of successful transmissions in [6]. A similar approach has been proposed in [7] to maximize network utility during spectrum access via a deep Q-network (DQN). Deep Q-learning (DQL) with a narrowband sensing policy has been examined for heterogeneous networks that operate different MAC protocols [8]. However, the narrowband sensing adopted in these studies limits the use of multiple spectral opportunities. The work in [9] examines multi-agent RL with wideband sensing for learning sensing policies in a distributed manner; in contrast to that architecture, we focus on spectrum assignment and primary system frequency allocations. Wideband sensing with a DQN-based access policy has been investigated for utility maximization of a single SU [10]. However, that work does not consider multiple cognitive agents in different primary network settings or the effect of centralized policy learning.

In this paper, we propose DQL and wideband sensing based utility maximization for multiple SUs for the first time in the literature. The original contribution of this study is threefold. First, we develop a Markov Decision Process (MDP) formulation for utility maximization during secondary system decision making based on wideband sensing. Second, we propose novel decentralized and centralized spectrum selection methods based on wideband sensing, namely DEcentralized Spectrum Allocation (DESA) and Centralized Spectrum Allocation (CSA), respectively. Third, we investigate the spectrum utilization of the proposed spectrum allocation methods for varying primary and secondary network sizes.

The remainder of the paper is organized as follows. Section II describes the system model. Section III presents the MDP formulation and RL-based learning methods. The DQN architecture and DQL-based spectrum allocation algorithms are provided in Section IV. Section V details the simulation setup and analyzes the experimental outcomes. Finally, Section VI presents our concluding remarks.

II. SYSTEM MODEL

Fig. 1 shows the network architecture adopted from the IEEE 802.22 Wireless Regional Area Network (WRAN) standard.

PUs and SUs are assumed to be WRAN Base Station (BS)

$$q_\pi(s,a) = \mathbb{E}_\pi\left[\sum_{k=0}^{\infty} \gamma^k r_{t+k+1} \,\middle|\, s_t = s,\ a_t = a\right] \tag{2}$$

There exists an optimal deterministic stationary policy for each MDP model [11]. This policy maximizes the expected return from every state of the MDP model, whose transition probabilities are unknown in our setting. For all $s \in S$ and $a \in A$, the optimal state-value function $v_*$ and the optimal action-value function $q_*$ are defined as the maxima over all policies π:

$$v_*(s) = \max_\pi v_\pi(s) \tag{3}$$

$$q_*(s,a) = \max_\pi q_\pi(s,a) \tag{4}$$

C. Q-Learning

Q-learning is a widely used control algorithm that approximates the optimal action-value function $q_*$ based on the Bellman optimality equations, independently of the policy being followed. In the absence of the state transition probabilities P, computing the optimal policy for utility maximization requires approximating the optimal value functions $q_*$ and $v_*$. The Bellman optimality equations provide policy-independent, recursive expressions for the optimal value functions [4]:

$$v_*(s) = \max_a \mathbb{E}\left[ r_{t+1} + \gamma\, v_*(s_{t+1}) \,\middle|\, s_t = s,\ a_t = a \right] \tag{5}$$

$$q_*(s,a) = \mathbb{E}\left[ r_{t+1} + \gamma \max_{a_{t+1}} q_*(s_{t+1}, a_{t+1}) \,\middle|\, s_t = s,\ a_t = a \right] \tag{6}$$

The learning algorithm recursively approximates the $q_*$ values using multiple experiences and a one-step look-ahead, where an experience is a sample taken from the MDP model. The experience at time slot t is denoted by $e_t = \langle s_t, a_t, r_t, s_{t+1} \rangle$. Q-learning solves the MDP formulation and approximates the $q_*$ values with the update rule

$$q(s,a) \leftarrow q(s,a) + \Delta q(s,a) \tag{7}$$

where, with learning rate α, $s = s_t$ and $a = a_t$, the increment is

$$\Delta q(s,a) = \alpha \left[ r_t + \gamma \max_{a'} q(s_{t+1}, a') - q(s_t, a_t) \right] \tag{8}$$

Table I: Reinforcement Learning Notation

| Notation | Description |
|---|---|
| $\gamma$ | Discount factor |
| $\pi$ | Policy |
| $r_t$ | Reward observed at time t |
| $s_t$ | State at time t |
| $a_t$ | Action taken at time t |
| $v_\pi(s)$ | State-value function under policy π |
| $q_\pi(s,a)$ | Action-value function under policy π |
| $v_*(s)$ | Optimal state-value function |
| $q_*(s,a)$ | Optimal action-value function |
| $e_t = \langle s_t, a_t, r_t, s_{t+1} \rangle$ | Experience at time t |

IV. APPROACH

Next, we describe our DQN architecture and algorithms for DQL-based spectrum allocation. First, we explain our DQN architecture, detailing the hyperparameters selected for the learning procedure. We then present the wideband sensing based DQL algorithm for single-SU frequency selection. Finally, we elaborate on our proposed centralized and decentralized DRL architectures for multi-user utility maximization during secondary system spectrum decisions.

A. Deep Q-Network

Q-learning requires storing and representing a Q-value for each state-action pair used in the expected reward calculation. DQN is a deep reinforcement learning method that uses a deep neural network to store these values and approximate the expected utility during the Q-learning procedure. Using a DQN allows a complex mapping from high-dimensional state-space representations to action values [6], [7]. Our DQN training procedure uses the experience replay technique, which samples past experiences for each batch so that the DQN is trained on decorrelated examples; this promotes convergent and stable training [12].

Table II: Hyperparameters for DQN

| Hyperparameter | Value |
|---|---|
| Number of episodes | 200 |
| Number of time slots | 10000 |
| Number of layers | 5 |
| Batch size B | 10 |
| Memory size | 2000 |
| Learning rate α | 0.001 |
| Discount factor γ | 0.95 |
| Exploration rate ε | 1.0 → 0.01 |
| Epsilon decay | 0.997 |
| Loss function | Huber (c = 1.0) |
| Optimizer | Adam |

The feed-forward network consists of 5 fully connected layers whose input is the state $s_i$. The input layer has size 1 × N, and the output layer has one unit per action in the action set A. The hidden layers have sizes 30, 20 and 10 and use the ReLU activation function $f(x) = \max(x, 0)$. The last layer of the network uses the softmax activation function $\sigma(x_j) = e^{x_j} / \sum_i e^{x_i}$ to predict the Q-value of each action. We chose the Adam gradient descent optimization algorithm for updating the weights during DQN training [13]. The Huber loss is chosen as the loss function for backpropagation due to its robustness against outliers [14]. At each iteration i, the Adam algorithm updates the weights $\theta_i$ of the DQN to minimize the loss function

$$L_i(\theta_i) = \begin{cases} \frac{1}{2}(y_i - Q_i)^2 & \text{for } |y_i - Q_i| \le c \\ c\,|y_i - Q_i| - \frac{1}{2}c^2 & \text{otherwise} \end{cases} \tag{9}$$

where $y_i = \mathbb{E}[ r_{i+1} + \gamma \max_{a'} q_*(s_{i+1}, a') ]$ is calculated with the DQN using the weights $\theta_{i-1}$ from the previous iteration, $Q_i = q(s_i, a \mid \theta_i)$, and the Huber threshold is c = 1.0.
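To make the one-step update in (7)–(8) and the Huber loss in (9) concrete, the following Python sketch applies them to a small tabular Q-function. It is only an illustration under stated assumptions: the dictionary-based Q-table, the helper names `q_learning_update` and `huber_loss`, and the toy states and actions are hypothetical, whereas the paper approximates Q-values with a DQN trained by Adam.

```python
from collections import defaultdict


def q_learning_update(q, s, a, r, s_next, actions, alpha=0.001, gamma=0.95):
    """One-step Q-learning update, eqs. (7)-(8), on a hypothetical tabular Q-function.

    q maps (state, action) -> value; alpha and gamma default to the Table II values.
    """
    best_next = max(q[(s_next, a_next)] for a_next in actions)  # max_a' q(s_{t+1}, a')
    delta = alpha * (r + gamma * best_next - q[(s, a)])         # eq. (8)
    q[(s, a)] += delta                                          # eq. (7)
    return delta


def huber_loss(y, q_value, c=1.0):
    """Huber loss of eq. (9): quadratic for errors up to c, linear beyond c."""
    err = abs(y - q_value)
    if err <= c:
        return 0.5 * err ** 2
    return c * err - 0.5 * c ** 2


if __name__ == "__main__":
    q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
    # Toy transition: state 0, action 1, reward 1.0, next state 1.
    q_learning_update(q, s=0, a=1, r=1.0, s_next=1, actions=[0, 1], alpha=0.5, gamma=0.9)
    print(q[(0, 1)])             # 0.5
    print(huber_loss(1.0, 0.5))  # 0.125 (quadratic region)
    print(huber_loss(3.0, 0.5))  # 2.0 (linear region)
```

The toy call uses a larger α than Table II only so that the single update is visible; with c = 1.0 the two branches of the Huber loss meet at an error of exactly 1.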

Algorithm 1: DQL originated Spectrum Access (DQLS)
Input: S ← primary system channel occupation
Output: DQN_target
Data: Mem: memory, b: minibatch
1  Initialize DQN_train, DQN_target ← DQN(S, A)
2  for each episode E_t do
3    for each state s_t ∈ E_t do
4      Compute a_t = DQN_train.get_action(s_t)
5      a_t ← exploration with prob. ε
6      Compute r_t = DQN_train.get_reward(s_t, a_t)
7      Get next state s_{t+1}
8      Store Mem.store(e_t = ⟨s_t, a_t, r_t, s_{t+1}⟩)
9      Update s_t ← s_{t+1}
10     if |Mem| > B then
11       Compute b = Mem.sample(B)
12       for each e_i in b do
13         Compute y_i = E[r_{i+1} + γ max_{a_{i+1}} q∗(s_{i+1}, a_{i+1})]
14         Compute DQN_train.train(s_i, y_i)
15   Update DQN_target ← DQN_train (copy weights)

B. DQL originated Spectrum Access Algorithm (DQLS)

Algorithm 1 is executed to learn spectrum decision policies based on the detected PU channel allocations. Learning is triggered upon receiving primary system channel information from the environment via wideband sensing. First, two DQN instances (DQN_train, DQN_target) are initialized for the experience replay procedure using the state space S and action space A (Line 1). Training lasts for a predetermined number of episodes (Lines 2-15). In each episode, randomly generated PU spectrum decisions are detected using wideband sensing (Lines 3-14). Given the current PU channel occupations s_t, DQN_train returns the action a_t with the highest expected utility (Line 4). The training procedure uses an ε-greedy policy, which, with probability ε, chooses a random action instead of the action with the highest expected utility (Line 5). Exploration is necessary to find better action selections that result in higher reward signals. For a balanced exploration-exploitation trade-off, we decrease the exploration rate ε from 1.0 to 0.01 using an epsilon decay factor of 0.997. The immediate reward obtained by the selected action is recorded for the rest of the training procedure (Line 6).
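The ε-greedy selection of Line 5 and the decay of ε described above can be sketched as follows. This is a minimal Python illustration, not the authors' code: the paper does not state the exact decay schedule, so the multiplicative update ε ← max(0.01, 0.997·ε) and the helper names `epsilon_greedy` and `decay_epsilon` are assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return a random action index with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


def decay_epsilon(epsilon, decay=0.997, eps_min=0.01):
    """Assumed multiplicative decay toward the floor eps_min (Table II values)."""
    return max(eps_min, epsilon * decay)


if __name__ == "__main__":
    eps, steps = 1.0, 0
    while eps > 0.01:
        eps = decay_epsilon(eps)
        steps += 1
    print(steps)  # number of decay steps until epsilon reaches its floor
    print(epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0))  # 1 (always greedy)
```

Under this assumed schedule, ε reaches its floor after roughly 1,500 decay steps; whether the decay is applied per time slot or per episode changes how much of training is spent exploring.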

After the channel occupation in the subsequent time slot is obtained, the current state is updated and an experience e_t is stored in the memory Mem using the action a_t, the states s_t and s_{t+1}, and the immediate reward r_t (Lines 7-9). Once the experience count surpasses the predetermined batch size B, experiences are randomly sampled from the memory to form a minibatch so that training uses decorrelated experiences (Lines 10-11). Based on the information contained in each experience, the target Q-value of that state-action pair is calculated and DQN_train is trained (Lines 12-14). After each episode, the weights learned by the training model DQN_train are used to update the target model DQN_target (Line 15).
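A minimal Python sketch of the replay memory used in Lines 8-11 and of the per-episode target update in Line 15 follows. The class name `ReplayMemory`, first-in-first-out eviction once the memory size of 2000 from Table II is reached, and representing network weights as plain nested lists are assumptions made for illustration.

```python
import copy
import random
from collections import deque


class ReplayMemory:
    """Bounded experience memory Mem holding e_t = (s_t, a_t, r_t, s_{t+1})."""

    def __init__(self, capacity=2000):
        self.buffer = deque(maxlen=capacity)  # oldest experiences are evicted first

    def store(self, s, a, r, s_next):
        self.buffer.append((s, a, r, s_next))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the temporal
        # correlation between consecutive experiences.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def sync_target(train_weights):
    """Line 15: copy the training network's weights into the target network."""
    return copy.deepcopy(train_weights)


if __name__ == "__main__":
    mem = ReplayMemory(capacity=5)
    for t in range(8):
        mem.store(s=t, a=t % 2, r=1.0, s_next=t + 1)
    batch_size = 3
    if len(mem) > batch_size:           # Line 10: |Mem| > B
        minibatch = mem.sample(batch_size)
        print(len(minibatch))           # 3
    print([e[0] for e in mem.buffer])   # [3, 4, 5, 6, 7]
```

A deep copy keeps the target weights frozen while the training network continues to change during the next episode.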

Algorithm 2: Centralized Spectrum Allocation (CSA)
Input: K ← number of SUs, N ← number of PUs
Output: Set_action: secondary system actions
Data: s_i ← state during i-th slot
1  Compute |A| ← (N + 1)^K
2  Initialize Set_action = {}, assigned_ch = 0
3  Train DQN_target, DQN_train ← DQLS
4  Compute a = DQN_target.get_action(s_i)
5  while assigned_ch ≠ K do
6    Update |A| ← (N + 1)^(K − assigned_ch − 1)
7    Compute PU_index = ⌊a / |A|⌋
8    Update Set_action.append(PU_index)
9    Update a = a mod |A|
10   Update assigned_ch += 1

C. Centralized Spectrum Allocation Algorithm (CSA)

The centralized DQL architecture uses the Centralized Spectrum Allocation (CSA) algorithm to learn spectrum decision policies. After the primary system channel occupation patterns are obtained using distributed sensing [9], Algorithm 2 is run to determine the secondary system spectrum decisions. First, the number of possible actions, i.e., the action space size $|A| = (N+1)^K$, is calculated, considering that each of the K SUs has N + 1 actions for N detected PU channels (Line 1). An empty action set Set_action is created, together with a variable assigned_ch that counts the number of assigned SU actions (Line 2). The centralized DQN is trained with the single-DQN DQLS algorithm, and an action code that encodes the individual actions of all K SUs is computed (Lines 3-4). The action code a, generated by the central DQN for a given state s_i, is decoded into separate action numbers until every SU has an assigned action (Lines 5-10). For each individual SU action, this procedure treats the actions of the remaining SUs as a subset and divides the action code by the number of possible remaining joint actions, $(N+1)^{K - assigned_{ch} - 1}$ (Lines 6-7). The action code, which then represents the joint actions of the remaining SUs, and the number of assigned SU actions are updated (Lines 9-10). After the channel assigned to the SU is calculated, the immediate reward signal is obtained from the environment and the total utility is updated (Lines 12-13).
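The decoding loop of Algorithm 2 is a mixed-radix (base N + 1) decomposition of the central action code. The Python sketch below mirrors Lines 1 and 5-10; the range check and `encode_joint_action`, a hypothetical inverse added only to check the round trip, are not part of the paper, and the per-SU indices are treated as opaque values from 0 to N because the listing does not state which index corresponds to which channel decision.

```python
def decode_joint_action(a, num_sus, num_pus):
    """Split a central action code a into one action index per SU (Algorithm 2)."""
    base = num_pus + 1                                    # each SU has N + 1 actions
    if not 0 <= a < base ** num_sus:                      # Line 1: |A| = (N + 1)^K
        raise ValueError("action code outside the joint action space")
    set_action, assigned_ch = [], 0                       # Line 2
    while assigned_ch != num_sus:                         # Line 5
        remaining = base ** (num_sus - assigned_ch - 1)   # Line 6
        set_action.append(a // remaining)                 # Lines 7-8
        a = a % remaining                                 # Line 9
        assigned_ch += 1                                  # Line 10
    return set_action


def encode_joint_action(actions, num_pus):
    """Hypothetical inverse: pack per-SU indices into one code (first SU most significant)."""
    code = 0
    for idx in actions:
        code = code * (num_pus + 1) + idx
    return code


if __name__ == "__main__":
    N, K = 20, 3
    print((N + 1) ** K)                          # 9261 joint actions
    print(decode_joint_action(2225, K, N))       # [5, 0, 20]
    print(encode_joint_action([5, 0, 20], N))    # 2225
```

Even with only 3 SUs and 20 PUs, the central DQN must score 9261 joint actions, which shows how quickly the CSA action space grows with K.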

D. Decentralized Spectrum Allocation Algorithm (DESA)

The decentralized policy learning architecture consists of non-cooperative secondary BSs that perform independent wideband sensing and policy learning during DSA. Initially, each secondary BS (SU) performs wideband sensing individually and determines the primary system channel allocations. Based on this spectrum occupancy information, each SU is trained using the proposed DQLS algorithm (Lines 1-2). Upon completion of the training procedure, each secondary BS chooses the action, i.e., the primary channel index, that provides the highest expected utility given the state s_j at the current time slot j (Lines 3-4).
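In DESA each SU queries only its own trained network, so the decision step in Lines 3-4 reduces to an independent argmax per SU. The Python sketch below illustrates this under assumptions: `desa_decisions` and `output_sizes` are hypothetical helpers, each trained DQN_SU^target is stood in for by a plain function returning one Q-value per action, and each SU is assumed to have N + 1 actions as in the CSA formulation.

```python
from typing import Callable, List, Sequence, Tuple

QFunction = Callable[[Sequence[int]], Sequence[float]]


def desa_decisions(per_su_q: List[QFunction], states: List[Sequence[int]]) -> List[int]:
    """Each SU independently picks the action index with the highest Q-value."""
    decisions = []
    for q_fn, s_j in zip(per_su_q, states):  # no information is shared between SUs
        q_values = q_fn(s_j)
        decisions.append(max(range(len(q_values)), key=lambda a: q_values[a]))
    return decisions


def output_sizes(num_pus: int, num_sus: int) -> Tuple[int, int]:
    """Output-layer size of the single CSA network vs. each (assumed) DESA network."""
    return (num_pus + 1) ** num_sus, num_pus + 1


if __name__ == "__main__":
    # Two hypothetical SUs with hand-written Q-functions over a 3-channel state.
    su1 = lambda s: [0.1, 0.7, 0.2, 0.0]
    su2 = lambda s: [0.0, 0.2, 0.1, 0.9]
    state = [1, 0, 1]  # example channel occupancy (1 = occupied, 0 = vacant)
    print(desa_decisions([su1, su2], [state, state]))  # [1, 3]
    print(output_sizes(20, 4))                         # (194481, 21)
```

Under this assumption, with 20 PUs and 4 SUs each DESA network scores 21 actions, whereas a single CSA network would have to score 194481 joint actions.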

Algorithm 3: Decentralized Spectrum Allocation (DESA)
Input: S ← primary system channel occupation
Output: a_j: PU channel at j-th slot
Data: s_j ← state at j-th slot
1  for each SU ∈ secondary system do
2    Train DQN_SU^target, DQN_SU^train ← DQLS
3  for each SU ∈ secondary system do
4    Compute a_j = DQN_SU^target.get_action(s_j)

V. SIMULATIONS

A. Simulation Setup

Simulations of the CSA and DESA algorithms have been implemented for the centralized and decentralized spectrum decision scenarios using the open-source neural network library Keras [15] and the numerical computation library TensorFlow [16]. CSA uses a single DQN to generate secondary system actions during DSA. The central DQN in CSA is aware of the primary system channels, which are detected by the SUs using wideband sensing. In DESA, each SU is modeled as a learning cognitive agent capable of performing sensing and policy learning independently. We model PU channels as independent two-state Markov chains, each of which is either in state 1 (occupied) or state 0 (vacant). Similar to previous work [10], namely the DQN-based access policy (DQNP), we implement DSA for N = 20 channels, each assigned to a different PU with a randomly generated transition probability matrix, $\{P_i\}_{i=1}^{20}$.

Network utility under CSA and DESA is compared with the classical slotted-Aloha protocol and with the optimal policy performance. The expected channel throughput under slotted-Aloha at a given time slot i is $n_i p_i (1 - p_i)^{n_i - 1}$, where $p_i$ is the transmission probability and $n_i$ is the number of SUs in the CR environment [7]. For comparison with the optimal policy, we implement the fixed-pattern channel switching based optimal policy (OP) algorithm proposed in [6]. Each simulation is carried out for T = 10,000 time slots and E = 200 episodes. As the performance evaluation metric, we use the average SU utility per episode, which represents the average cumulative reward gained by the SUs in each episode.

B. Simulation Results

We first present simulation results for the spectrum decisions of a single SU coexisting with multiple PUs in the CR environment. Fig. 2 shows the average SU utility per episode obtained by the spectrum decisions of a single SU under DQLS. Note that the network scenario with 1 SU and 20 PUs corresponds to the architecture proposed for DQNP. We have two observations from Fig. 2. First, the average SU throughput increases as the number of detected PU channels decreases. A smaller action space enables the SU to learn a better channel selection policy; therefore, a higher cumulative utility is obtained at the end of each episode as the number of PUs per SU decreases. Second, convergence of the DQN-based access policy takes longer as the number of detected PU channels increases. Increasing complexity in the CR network results in higher-dimensional action and state space representations, so policy learning from channel occupancy observations slows down.

Figure 2: DQLS versus optimal channel access policy (average SU utility per episode versus episodes; DQLS and OP with 1 SU and 15, 20 (DQNP) or 25 PUs).

Fig. 3 shows the average spectrum selection performance of the SUs under CSA. In these simulations, the number of PU channels is set to 20, while the number of base stations in the secondary system varies among 2, 3 and 4. Similar to the results presented in Fig. 2, SUs perform better with the DQN-based channel selection strategy as the number of available PU channels per SU decreases. Unlike slotted-Aloha based medium access, the average utility under CSA increases with the number of SUs. Furthermore, the CSA-based spectrum policy outperforms random access and slotted-Aloha based spectrum decisions as the number of SUs increases to 4. On the other hand, CSA achieves only 52% of the optimal policy performance.

Figure 3: Spectrum policy performance under CSA (average SU utility per episode versus episodes; OP, CSA and slotted Aloha with 20 PUs and 2 or 4 SUs, and the random access policy).

Fig. 4 depicts the performance of non-cooperative SUs under DESA. Similar to the results depicted in Fig. 2 and Fig. 3, the DESA-based channel access results show that increasing the number of SUs increases the average performance of the DQN-based spectrum policy. DESA-based spectrum assignment outperforms the other approaches, and this performance gap increases drastically as the number of SUs reaches 4. Compared to the results obtained by centralized spectrum assignment under CSA, we observe that independent policy learning in the decentralized scenario results in higher average network utility. Furthermore, 88% of the optimal policy performance is achieved when 20 PUs and 4 SUs are present in the CR environment.

Figure 4: Spectrum policy performance under DESA (average SU utility per episode versus episodes; OP, DESA and slotted Aloha with 20 PUs and 2 or 4 SUs, and the random access policy).

VI. CONCLUSION AND FUTURE WORK

In this paper, we present multi-agent deep reinforcement learning based spectrum selection with wideband sensing capability for multi-user utility maximization during DSA. First, DQN-based spectrum decisions of a single SU coexisting with multiple PUs have been derived. We then propose novel algorithms for DQL and wideband sensing based centralized and decentralized spectrum selection, namely CSA and DESA, respectively. Through simulations, we demonstrate that the performance of DQN-based frequency selection increases as the number of available PU channels per SU decreases. Moreover, we observe that independent, non-cooperative policy learning under DESA results in more effective spectrum decisions than centralized spectrum assignment under CSA. Overall, our proposed methods improve spectrum utilization compared to traditional spectrum assignment methods, with 88% and 52% of optimal channel access achieved by DESA and CSA, respectively.

Going forward, our future work will focus on extending both the system model and the proposed algorithms. For the system model, we will concentrate on efficient utilization of OFDMA resource blocks and analyze the aggregated interference at primary receivers during secondary system transmissions. Considering the great potential of DRL-based technologies for DSA, we also plan to create an open-source dataset and implement other DRL approaches, such as policy gradient methods.

REFERENCES

[1] K. Wang and L. Chen, “On optimality of myopic policy for restless multi-armed bandit problem: An axiomatic approach,” IEEE Transactions on Signal Processing, vol. 60, no. 1, pp. 300–309, Jan. 2012.

[2] S. H. A. Ahmad, M. Liu et al., “Optimality of myopic sensing in multichannel opportunistic access,” IEEE Transactions on Information Theory, vol. 55, no. 9, pp. 4040–4050, Sep. 2009.

[3] Q. Zhao, L. Tong et al., “Decentralized cognitive MAC for opportunistic spectrum access in ad hoc networks: A POMDP framework,” IEEE Journal on Selected Areas in Communications, vol. 25, pp. 589–600, May 2007.

[4] R. S. Sutton and A. G. Barto, Reinforcement Learning: An Introduction, 2nd ed. The MIT Press, 2018. [Online]. Available: http://incompleteideas.net/book/the-book-2nd.html

[5] V. Mnih, K. Kavukcuoglu et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529–533, Feb. 2015. [Online]. Available: http://dx.doi.org/10.1038/nature14236

[6] S. Wang, H. Liu et al., “Deep reinforcement learning for dynamic multichannel access in wireless networks,” IEEE Transactions on Cognitive Communications and Networking, vol. 4, no. 2, pp. 257–265, June 2018.

[7] O. Naparstek and K. Cohen, “Deep multi-user reinforcement learning for distributed dynamic spectrum access,” IEEE Transactions on Wireless Communications, vol. 18, no. 1, pp. 310–323, Jan. 2019.

[8] Y. Yu, T. Wang, and S. C. Liew, “Deep-reinforcement learning multiple access for heterogeneous wireless networks,” in IEEE International Conference on Communications (ICC), May 2018, pp. 1–7.

[9] J. Lunden, S. R. Kulkarni et al., “Multiagent reinforcement learning based spectrum sensing policies for cognitive radio networks,” IEEE Journal of Selected Topics in Signal Processing, vol. 7, no. 5, pp. 858–868, Oct. 2013.

[10] H. Q. Nguyen, B. T. Nguyen et al., “Deep Q-learning with multiband sensing for dynamic spectrum access,” in 2018 IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Oct. 2018, pp. 1–5.

[11] R. Bellman, “The theory of dynamic programming,” Bull. Amer. Math. Soc., vol. 60, no. 6, pp. 503–515, Nov. 1954. [Online]. Available: https://projecteuclid.org:443/euclid.bams/1183519147

[12] M. Andrychowicz, F. Wolski et al., “Hindsight experience replay,” in Advances in Neural Information Processing Systems 30, I. Guyon, U. V. Luxburg et al., Eds. Curran Associates, Inc., 2017, pp. 5048–5058. [Online]. Available: http://papers.nips.cc/paper/7090-hindsight-experience-replay.pdf

[13] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” CoRR, vol. abs/1412.6980, 2015.

[14] P. J. Huber, “Robust estimation of a location parameter,” Ann. Math. Statist., vol. 35, no. 1, pp. 73–101, Mar. 1964. [Online]. Available: https://doi.org/10.1214/aoms/1177703732

[15] F. Chollet et al., “Keras,” https://github.com/fchollet/keras, 2015.

[16] M. Abadi, P. Barham et al., “TensorFlow: A system for large-scale machine learning,” in 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), 2016, pp. 265–283. [Online]. Available: https://www.usenix.org/system/files/conference/osdi16/osdi16-abadi.pdf